1. Introduction

Pneumonia is a leading inflammatory lung disease and a major cause of morbidity and mortality among children under 5 years of age, especially in countries with limited health resources. With epidemiological data showing over 146 million cases and 4 million deaths annually, the disease represents a serious public health problem [1]. Significant progress in the control and prevention of childhood pneumonia has been achieved through the introduction of vaccines against Haemophilus influenzae type b and pneumococcal infections, which have substantially reduced morbidity and complications [2]. However, pneumonia remains widespread, especially among children in low-income areas and those with limited access to health services.

Pneumonia is a multifactorial disease caused by viruses, bacteria, and occasionally fungal or parasitic organisms. In children under the age of five, viral infections are the leading cause; respiratory syncytial virus (RSV) and various bacteria are among the most common pathogens. Symptoms vary with age but typically include fever, cough, shortness of breath, fatigue, and loss of appetite [3]. In younger children, nonspecific signs such as abdominal pain, stiff neck, or even apnea may also be observed. Radiographic examinations are essential for the diagnosis and follow-up of pneumonia in children, because radiography can visualize specific changes in the lung parenchyma that support the clinical diagnosis. Viral pneumonias are often characterized by bilateral interstitial infiltrates and peribronchial thickening. Bacterial pneumonias caused by Streptococcus pneumoniae or Staphylococcus aureus, on the other hand, can lead to lobar involvement, pleural effusion, empyema, and even necrotizing pneumonia [4]. In severe cases, radiographic findings may include pneumatoceles or lung abscesses, requiring urgent treatment.

Early and accurate diagnosis is critical for the successful treatment of pneumonia and minimizing complications. According to the World Health Organization (WHO), a child with pneumonia should undergo radiography in the presence of “unexplained” fever, respiratory distress, or the suspicion of bacterial infection. X-rays not only help identify the pathology but also provide a basis for monitoring the effectiveness of treatment.

Complications of pneumonia in children include respiratory distress, apnea, bronchial hyperresponsiveness, and, in severe cases, death. Long-term sequelae such as bronchiectasis, bronchiolitis obliterans, and pneumosclerosis may also develop in chronic or poorly treated cases [5]. Radiography is an indispensable tool for detecting these complications, making it a key component in disease management.

To train, test, and validate the models on real clinical data, we use a freely available database, V2 [6], together with the findings reported for it [7]. The dataset consists of chest X-ray images selected from retrospective cohorts of pediatric patients aged one to five years from the Guangzhou Women and Children’s Medical Center, Guangzhou [6]. All chest X-ray examinations were conducted as part of routine clinical care. For the purposes of analysis, all radiographs underwent quality control, during which low-quality or unreadable images were excluded. The dataset comprises 6060 images divided into three directories. The testing directory contains one subdirectory with X-rays of “non-pneumonia” (healthy) children (234 files) and a separate one for pneumonia cases. The training set contains 1342 images of healthy children and 3876 images of children with pneumonia. The validation set includes 193 healthy and 181 pneumonia images. All files have a resolution of 1873 × 1478 pixels.

In this study, we utilize the publicly available COVIDx CXR-2 dataset, which contains pediatric chest X-ray images from children diagnosed with pneumonia as well as those without radiographic signs of disease. The latter are labeled with the term “No Finding”, which is widely accepted in clinical and radiological practice and denotes the absence of pathological findings on the image. Depending on the institution or clinical team responsible for annotation, terms such as “normal” or “non-pneumonia” may also be used to describe this category. In the present manuscript, we have standardized the terminology by using “No Finding” consistently throughout, ensuring alignment with international conventions in medical image labeling. This clarification has been included to enhance interpretability and maintain scientific rigor in the presentation of results.

Literature Review

Recent advancements in artificial intelligence, particularly in deep learning, have significantly transformed the field of medical image diagnostics. In the context of pediatric pneumonia, numerous studies propose CNN-based and hybrid models aimed at enhancing the accuracy, robustness, and generalization capability of diagnostic systems. This review summarizes five recent studies (2023–2025) that introduce innovative methods for detecting pneumonia in children using chest X-rays, with a focus on hybrid architectures and optimization strategies.

Mustapha et al. proposed an innovative hybrid deep learning framework that combines convolutional neural networks (CNNs) with modified Swin Transformer blocks. The model leverages the strengths of CNNs for localized feature extraction and transformer blocks for capturing global dependencies. The achieved accuracy was high, and the model demonstrated strong applicability in clinical environments, including in regions with limited resources [8].

Radočaj examined the impact of various activation functions on CNN performance in pneumonia classification. The study compared the ReLU, Swish, and Mish functions, with Mish delivering the best results when used with the InceptionResNetV2 architecture [9].

Lei et al. (2025) addressed the classification of thoracic diseases, including pneumonia, using deep learning models such as AlexNet, ResNet, and InceptionNet. To mitigate class imbalance, they applied focal loss, and Grad-CAM visualization was used to improve interpretability. Their InceptionV3 model achieved a 28% improvement in AUC and a 15% boost in F1-score, demonstrating the effectiveness of fine-tuning architectural components [10].

Kumar et al. (2025) introduced the Enhanced Multi-Model Deep Learning (EMDL) framework, which combines five pre-trained CNN models with image enhancement and feature optimization techniques such as PCA, BPSO, and BGWO. The model was evaluated on two independent datasets and showed excellent accuracy in classifying pneumonia, tuberculosis, and influenza [11].

Thilagavathi et al. (2025) developed a hybrid classification system for COVID-19 and pulmonary infections using a combination of feature extraction and selection techniques along with an attention-based deep learning architecture (MhA-Bi-GRU with DSAN). Although focused on COVID-19, the approach is applicable to pneumonia detection and reflects the trend toward increasingly complex hybrid architectures [12].

Bhatt et al. (2021) present a comprehensive overview of the development and classification of CNN architectures, dividing them into eight main categories, from spatial exploitation strategies to attention-based models. The authors examine the wide-ranging applications of these architectures and highlight key challenges and future research directions [13]. Gupta et al. (2022) develop a hybrid CNN-LSTM model for non-invasive blood pressure estimation from photoplethysmographic signals, achieving high accuracy in classifying different blood pressure levels, which highlights the potential of deep learning in medical diagnostics [14]. Xie et al. (2021) introduce the CoTr model, which combines CNNs with a deformable transformer (DeTrans) to improve 3D medical image segmentation. The model captures long-range dependencies and achieves significantly better results than traditional CNNs and standalone transformer-based approaches [15]. Pandian et al. (2022) apply CNNs and the pre-trained GoogLeNet architecture to the detection and classification of lung cancer in CT images. Their results show high accuracy in identifying malignant tumors, reaffirming the role of artificial intelligence in early cancer diagnosis [16]. Deep learning (DL) has significantly advanced pneumonia detection from chest X-ray images. Sharma and Guleria (2024) classify DL approaches into CNNs, transfer learning, and hybrid models, highlighting issues such as data imbalance and the need for standardized metrics [17]. Siddiqi and Javaid (2024) review methods from 2020–2023, including Vision Transformers (ViTs) [18], which excel at capturing global features. Both reviews emphasize the importance of model interpretability and the cautious integration of DL into clinical practice. In short, while DL shows strong potential, its safe and effective use in healthcare requires addressing ethical and technical challenges.

In summary, these studies affirm that hybridization—through transformer modules, ensemble learning, optimization, or activation functions—plays a crucial role in improving the effectiveness and generalizability of models. Future work should focus on explainable AI, real-world clinical validation, and computational efficiency to support practical implementation.

2. Methodology

The proposed methodology combines three complementary technologies—modular convolutional neural networks (CNNs), intuitionistic fuzzy estimators (IFEs), and a hybrid decision integration strategy—to enhance the accuracy and robustness of pneumonia detection in pediatric chest X-rays. The modular CNN architecture consists of three parallel submodels, each specialized in analyzing specific intensity regions of the X-ray image (e.g., background, soft tissue, and bone structures). This separation allows each module to extract relevant features independently, improving the overall diagnostic sensitivity by addressing the heterogeneity of pediatric radiographs.

Each module outputs a probability score indicating the presence or absence of pneumonia. These outputs are then passed to the intuitionistic fuzzy integration module, which aggregates the results based on membership, non-membership, and hesitation degrees. This fuzzy logic layer enables the system to manage uncertainty and ambiguity, especially in cases with overlapping visual characteristics. The final decision is derived using a weighted fusion mechanism, where each module’s influence is proportional to its classification accuracy, allowing more reliable modules to contribute more strongly to the final outcome.
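The accuracy-proportional weighting described above can be illustrated with a short Python sketch. It is not the authors' MATLAB implementation: the function name `fuse_probabilities`, the example probabilities, and the 0.5 decision threshold are assumptions made for illustration, while the module accuracies are those reported in the Discussion (85.00%, 80.00%, 93.33%).

```python
def fuse_probabilities(probs, accuracies):
    """Accuracy-weighted average of per-module pneumonia probabilities.

    probs: pneumonia probability output by each module, each in [0, 1].
    accuracies: classification accuracy of each module, each in [0, 1].
    Each module's weight is its accuracy divided by the sum of all accuracies,
    so more accurate modules contribute more to the fused score.
    """
    if not probs or len(probs) != len(accuracies):
        raise ValueError("need exactly one accuracy per module probability")
    total = sum(accuracies)
    if total <= 0:
        raise ValueError("at least one module must have positive accuracy")
    return sum(a * p for a, p in zip(accuracies, probs)) / total


if __name__ == "__main__":
    # Module accuracies as reported in the Discussion; probabilities are made up.
    accuracies = [0.8500, 0.8000, 0.9333]
    probs = [0.70, 0.40, 0.90]
    score = fuse_probabilities(probs, accuracies)
    label = "Pneumonia" if score >= 0.5 else "No Finding"  # assumed threshold
    print(f"fused score = {score:.3f} -> {label}")
```

Normalizing the weights by their sum keeps the fused score in [0, 1] whenever every module score lies in [0, 1].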

By unifying deep learning with fuzzy reasoning, the proposed approach not only improves interpretability but also increases resilience against noise and variability in medical images, leading to more precise clinical decision support. The method was implemented in MATLAB 2022.

2.1. Intuitionistic Fuzzy Sets

Intuitionistic fuzzy sets (IFSs) are sets whose elements have degrees of membership and non-membership. They were introduced by Krassimir Atanassov in 1983 [19,20,21] as an extension of Lotfi A. Zadeh's fuzzy sets. In classical set theory, an element either belongs or does not belong to a set. Zadeh defines the degree of membership as a number in the interval [0, 1]. The theory of intuitionistic fuzzy sets extends this concept by assigning to each element two real numbers in [0, 1], a degree of membership and a degree of non-membership, whose sum must also belong to [0, 1].

Let E be the universe and let A be a subset of E. We construct the set

$$A^{*} = \{\langle x, \mu_A(x), \nu_A(x)\rangle \mid x \in E\}, \qquad 0 \le \mu_A(x) + \nu_A(x) \le 1,$$

and call A* an IFS. The functions μA: E → [0, 1] and νA: E → [0, 1] give the degree of membership and the degree of non-membership, respectively. The function πA: E → [0, 1], defined by

$$\pi_A(x) = 1 - \mu_A(x) - \nu_A(x),$$

gives the degree of uncertainty (hesitation).
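As a small illustration of these definitions, the Python sketch below (the class name `IFPair` is hypothetical) stores one pair 〈μA(x), νA(x)〉, rejects pairs that violate 0 ≤ μA(x) + νA(x) ≤ 1, and derives πA(x) on demand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IFPair:
    """Intuitionistic fuzzy pair <mu_A(x), nu_A(x)> for one element x of E."""

    mu: float  # degree of membership
    nu: float  # degree of non-membership

    def __post_init__(self):
        # Both degrees must lie in [0, 1] and their sum must not exceed 1.
        if not (0.0 <= self.mu <= 1.0 and 0.0 <= self.nu <= 1.0):
            raise ValueError("mu and nu must lie in [0, 1]")
        if self.mu + self.nu > 1.0 + 1e-12:
            raise ValueError("mu + nu must not exceed 1")

    @property
    def pi(self) -> float:
        """Degree of uncertainty: pi_A(x) = 1 - mu_A(x) - nu_A(x)."""
        return 1.0 - self.mu - self.nu


if __name__ == "__main__":
    x = IFPair(mu=0.6, nu=0.3)  # illustrative values
    print(f"mu = {x.mu}, nu = {x.nu}, pi = {x.pi:.2f}")
```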

In addition to constructing the model, we define an assessment of the training that consists of the training accuracy degree µ ∈ [0, 1] (membership), the training inaccuracy degree ν ∈ [0, 1] (non-membership), which reflects the degree of recognition inaccuracy, and the degree of uncertainty π, which reflects the discrepancy between these two measures. These degrees are computed from the following quantities: ai, the current value of accuracy; B, the maximum accuracy; bi, the current value of validation loss; and A, the maximum validation loss.

At the beginning, statistics of the values used for training the neural network are obtained. Initially, when no information has yet been obtained, all estimations are given the initial value 〈0, 0〉. For k ≥ 0, the current (k + 1)-st estimation is calculated from the previous estimations according to the recurrence relation

$$\langle \mu_{k+1}, \nu_{k+1} \rangle = \left\langle \frac{k\,\mu_k + m}{k+1},\ \frac{k\,\nu_k + n}{k+1} \right\rangle,$$

where 〈μk, νk〉 is the previous estimation and 〈m, n〉 is the estimation of the latest measurement, with m, n ∈ [0, 1] and m + n ≤ 1.
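Assuming the standard form of this recurrence, 〈μk+1, νk+1〉 = 〈(kμk + m)/(k + 1), (kνk + n)/(k + 1)〉, each update is a running mean of the measurement pairs, which is why the constraint μ + ν ≤ 1 is preserved at every step. A minimal Python sketch follows; the function names are illustrative, and measurements are assumed to arrive in order.

```python
def update_estimation(mu_k, nu_k, k, m, n):
    """Return the (k+1)-st estimation <mu_{k+1}, nu_{k+1}>.

    <mu_k, nu_k> is the estimation after k measurements and <m, n> is the
    estimation of the latest measurement (m, n in [0, 1], m + n <= 1).
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    if not (0.0 <= m <= 1.0 and 0.0 <= n <= 1.0 and m + n <= 1.0 + 1e-12):
        raise ValueError("invalid measurement <m, n>")
    mu_next = (k * mu_k + m) / (k + 1)
    nu_next = (k * nu_k + n) / (k + 1)
    return mu_next, nu_next


def estimate(measurements):
    """Fold a sequence of <m, n> measurements, starting from <0, 0>."""
    mu, nu = 0.0, 0.0
    for k, (m, n) in enumerate(measurements):
        mu, nu = update_estimation(mu, nu, k, m, n)
    return mu, nu


if __name__ == "__main__":
    # Illustrative per-epoch measurement pairs.
    print(estimate([(0.80, 0.10), (0.60, 0.20), (0.90, 0.05)]))
```

Starting from 〈0, 0〉 with k = 0, the first update simply returns the first measurement, and later measurements are weighted equally with all earlier ones.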

2.2. Modular Neural Network (MNN)

In recent years, MNNs have established themselves as a powerful tool for analysis and diagnosis in medicine. Unlike traditional “monolithic” architectures, MNNs divide complex tasks into smaller and independent submodules that can be trained in parallel. This modularity provides several key advantages, especially in disease recognition. This type of neural network has the following advantages. The use of modular architectures for medical imaging allows achieving high accuracy in tasks such as tumor detection and anomaly classification. According to Dao and Ly [

22], modular systems combining convolutional networks and transformers provide a better extraction of global and local features from images, making them extremely suitable for diagnosing diseases such as cancer and brain tumors [

22]. Modular networks can process different data sources—such as computed tomography (CT) and magnetic resonance imaging (MRI)—with high efficiency. Ref. [

23] emphasizes that combining different modules in neural networks improves the recognition of the spatiotemporal features of images, which is especially useful in neurological diseases such as Alzheimer’s [

23]. An advantage of the modular architecture is the ability to train specialized modules for different subtasks. Guebsi and Chokmani [

24] demonstrated how networks can be applied in the precise recognition of heart and lung diseases by dividing the diagnostic process into separate components [

24]. MNNs allow for parallel training and the optimization of submodules, which significantly reduces computational complexity. Saleem, A. et al. (2024) demonstrated this in building models for cardiovascular disease recognition, where the modular approach reduces data processing time by up to 40% [

25]. Another advantage of MNNs is the easier interpretation of the models. Ashraf et al. [

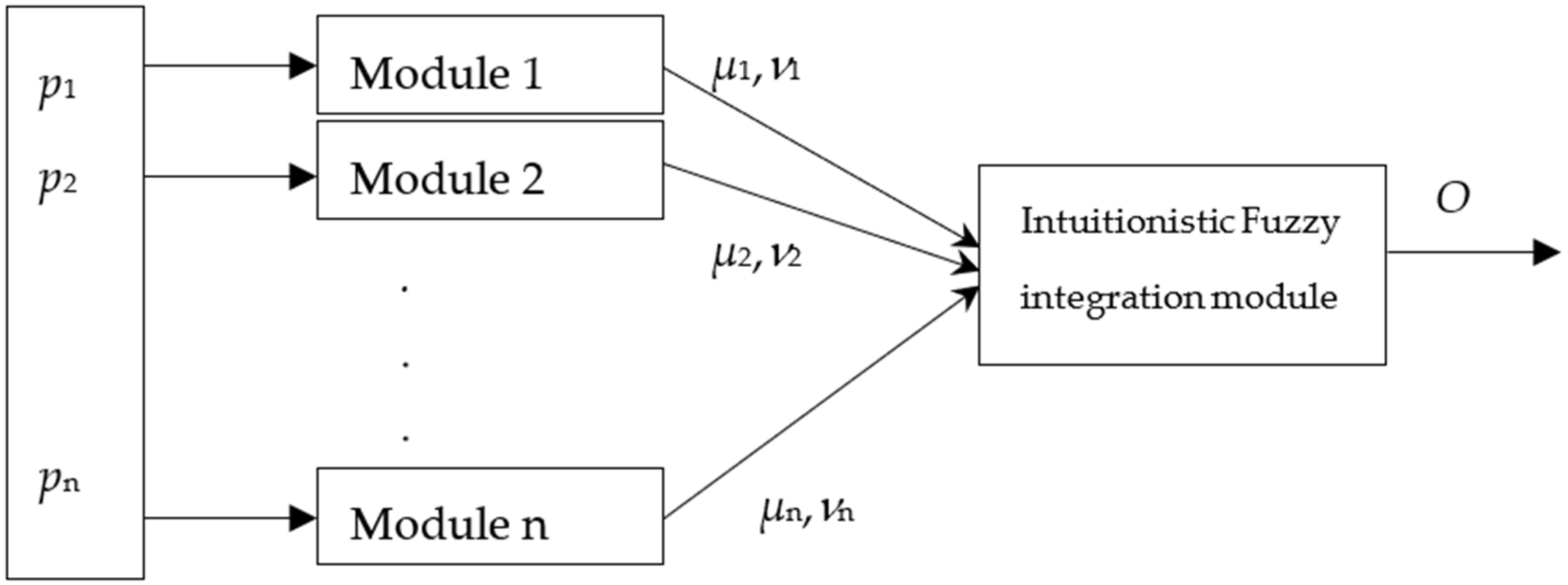

26] emphasize that modular structures provide clear dependencies between input data and predictions, which is crucial for clinical applications. The suggested structure of an MNN is presented in

Figure 1.

The output O is calculated according to the equation given in [27], which performs a weighted integration of the module outputs: the output of each module j ∈ {1, 2, …, m} is weighted by kj, the coefficient of existence of module j. This coefficient indicates whether the respective module is present or absent, depending on whether the module is needed in the particular case.

In our case, m = 3, one module for each of the three intensity groups of the image.

This article is a continuation of three other articles [28,29,30].

They form the conceptual and methodological foundation for the current study and clearly demonstrate the evolution of the authors’ work toward increasingly precise, adaptive, and interpretable models.

In [28], the authors introduce a modular convolutional neural network for the detection of pneumonia in children using chest X-ray images. The model is structured to analyze different anatomical regions separately, with each module processing specific image features. This design allows for better handling of the heterogeneity found in pediatric chest radiographs and represents an initial step toward the current research.

In [29], the authors expand the architectural approach by integrating intuitionistic fuzzy estimations (IFEs) into a CNN model for detecting kidney damage in patients with diabetes. The use of IFEs enables the system to account for uncertainty and hesitation in diagnostic decision making, a concept that is further developed and refined in the present study focused on pneumonia.

Ref. [30] presents a modular deep convolutional neural network designed to improve accuracy in prostate biopsy analysis. Here, the focus extends beyond modularity to include interpretability, by combining module outputs weighted according to their respective accuracies, a mechanism that is directly applied and adapted in the current research through the IFE module.

The present article continues and enhances these three studies, combining the modular CNN structure from [28] with the IFE approach from [29] and implementing an improved architecture with enhanced accuracy and reliability, as in [30]. The novel contribution lies in integrating all these components into a unified system aimed at the early and precise detection of pneumonia in children, validated through direct comparison with established CNN models and offering greater interpretability of results.

The output integration module (output O) incorporates an intuitionistic fuzzy estimator (IFE). The rationale is to exploit the refined classification provided by the three degrees: membership, non-membership, and hesitation. As a result, the output of the intuitionistic fuzzy integration module is influenced by all inputs, with each input contributing in proportion to a coefficient that reflects the accuracy of the respective module; that is, the influence coefficient of each module is directly proportional to its classification accuracy. When generating the final output, the positive contribution of each module is extracted based on its degree of membership μ.
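One possible reading of this integration step is sketched in Python below, under explicit assumptions: each module j is summarized by an intuitionistic fuzzy pair 〈μj, νj〉, the pairs are averaged with weights proportional to module accuracy, and the final label is “Pneumonia” when the integrated μ exceeds ν. The accuracy weighting follows the text; the decision rule, the function name `aggregate_if`, and the example pairs are hypothetical.

```python
def aggregate_if(estimates, accuracies):
    """Accuracy-weighted aggregation of per-module IF estimates.

    estimates: one (mu_j, nu_j) pair per module, with mu_j + nu_j <= 1.
    accuracies: classification accuracy of each module, used as weights.
    Returns (mu, nu, pi) for the integrated output O.
    """
    if not estimates or len(estimates) != len(accuracies):
        raise ValueError("need exactly one accuracy per module estimate")
    total = sum(accuracies)
    if total <= 0:
        raise ValueError("at least one module must have positive accuracy")
    mu = sum(a * m for a, (m, _) in zip(accuracies, estimates)) / total
    nu = sum(a * n for a, (_, n) in zip(accuracies, estimates)) / total
    # A convex combination of valid IF pairs is again valid, so pi >= 0
    # up to floating-point rounding, which max() absorbs.
    pi = max(0.0, 1.0 - mu - nu)
    return mu, nu, pi


if __name__ == "__main__":
    module_estimates = [(0.70, 0.20), (0.50, 0.30), (0.85, 0.05)]  # made up
    accuracies = [0.8500, 0.8000, 0.9333]  # reported module accuracies
    mu, nu, pi = aggregate_if(module_estimates, accuracies)
    label = "Pneumonia" if mu > nu else "No Finding"  # hypothetical rule
    print(f"mu = {mu:.3f}, nu = {nu:.3f}, pi = {pi:.3f} -> {label}")
```

Because the weights are non-negative and sum to one, the integrated pair remains a valid intuitionistic fuzzy pair, and the hesitation π carries over the uncertainty left by the individual modules.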

3. Implementation

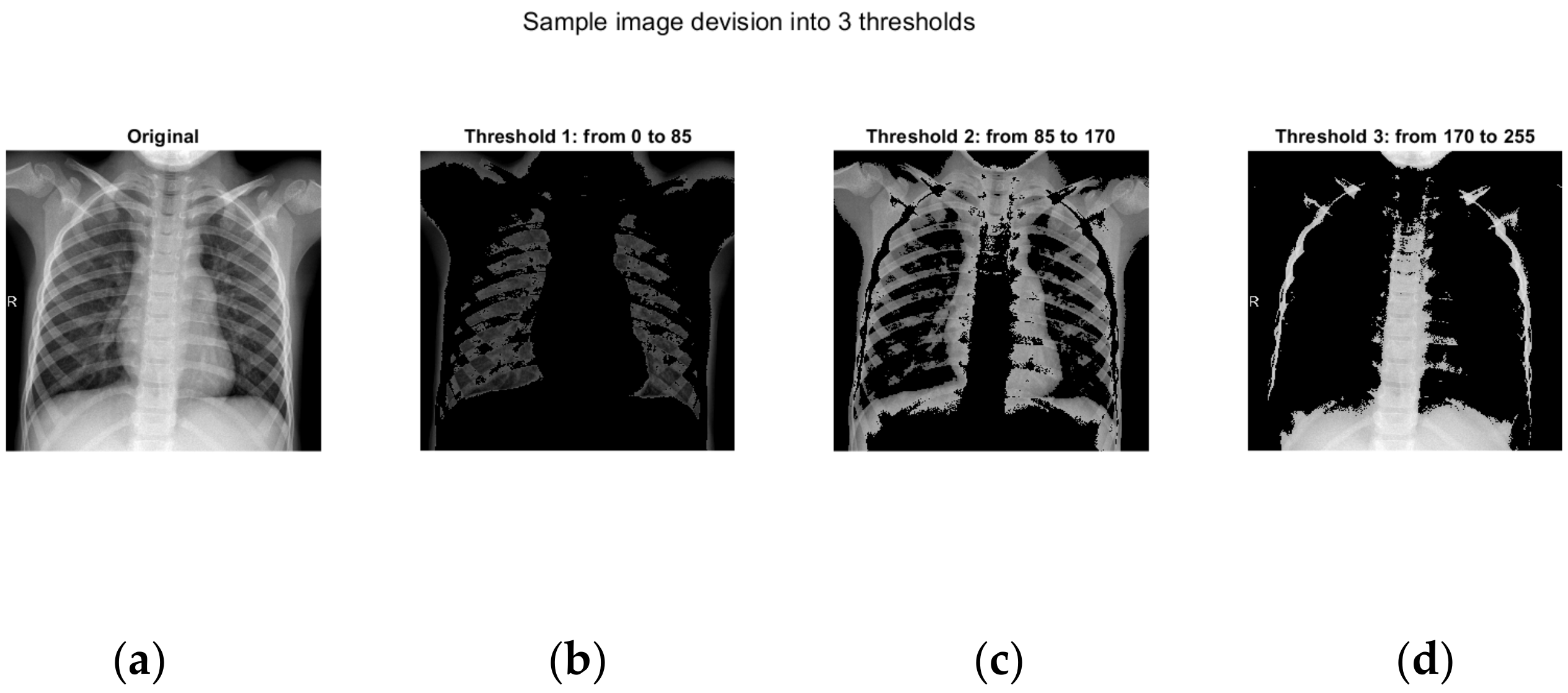

By convention, we chose three separate modules whose inputs receive the pixels with intensities in the intervals 0–1/3·256, 1/3·256–2/3·256, and 2/3·256–256, respectively.

The main idea of this article is to take the positive results from each of the modules and combine them.

The first module receives the part of the image in which black predominates, i.e., regions where no bone structure is present or only the patient's soft tissues are visible on the X-ray. This is the least important part of the image. The second module receives the middle intensity range of the image, which carries the most information about the lungs and possible diseases.

The third module receives the brightest part of the X-ray image, which usually corresponds to the bone structures.
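The band split can be sketched in Python as follows. This is an illustrative sketch that assumes 8-bit grayscale input and assumes that pixels outside a module's band are set to 0 (the text does not say how excluded pixels are represented); the function name `split_into_bands` is hypothetical. It uses the integer bounds given in the Results (0–86, 87–167, 168–255), which differ slightly from exact thirds of 256.

```python
# Intensity bands per module, using the integer bounds stated in the Results.
BANDS = [(0, 86), (87, 167), (168, 255)]


def split_into_bands(image, bands=BANDS, fill=0):
    """Return one masked copy of a grayscale image per intensity band.

    image: 2-D list of 8-bit intensities (0-255).
    Pixels outside a band are replaced by `fill` (an assumption).
    """
    return [[[p if low <= p <= high else fill for p in row] for row in image]
            for low, high in bands]


if __name__ == "__main__":
    img = [[0, 90, 200],
           [86, 167, 255]]
    for j, band in enumerate(split_into_bands(img), start=1):
        print(f"Module {j} input: {band}")
```

Since the bands are disjoint and cover 0–255, every pixel reaches exactly one module, and with `fill=0` the three masked images add up to the original.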

Data preprocessing: X-ray images are collected from publicly accessible databases and are reviewed by medical professionals. They often vary in resolution, orientation, and file format. To ensure compatibility and standardization for convolutional neural network processing, all images are first converted into a unified format and resized to a standard dimension (in our case, 299 × 299 pixels). This step is critical for batch processing and facilitates the use of pre-trained models.
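The resizing step can be sketched in dependency-free Python as below. Nearest-neighbour sampling is used only to keep the example self-contained; this is an assumption, since the text does not state which interpolation method was used, and practical pipelines typically prefer bilinear or bicubic interpolation. The function name `resize_nearest` is hypothetical.

```python
def resize_nearest(image, out_h=299, out_w=299):
    """Resize a 2-D grayscale image (list of rows) by nearest-neighbour sampling.

    Output pixel (r, c) copies input pixel (r * in_h // out_h, c * in_w // out_w),
    so every output index maps to a valid input index.
    """
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


if __name__ == "__main__":
    # A tiny stand-in image; real radiographs are far larger.
    small = [[0, 64], [128, 255]]
    resized = resize_nearest(small)
    print(len(resized), len(resized[0]))
```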

To increase diversity in the training dataset and reduce the risk of overfitting, various data augmentation techniques are applied. These include geometric transformations such as random rotations, horizontal and vertical flipping, scaling (zooming in or out), and affine transformations. Additionally, adjustments to brightness, contrast, and gamma correction are performed to simulate variations in exposure and imaging conditions, thereby enhancing the model’s robustness to real-world data variability.
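A minimal sketch of the flipping and photometric augmentations follows, written in plain Python on images stored as lists of rows. The probabilities and parameter ranges (flip probability 0.5, gamma in [0.8, 1.2], brightness offset in [−20, 20]) are illustrative assumptions, not values from the study, and rotations, scaling, and affine warps are omitted for brevity.

```python
import random


def hflip(img):
    """Mirror a 2-D image (list of rows) left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror a 2-D image top to bottom."""
    return [row[:] for row in img[::-1]]


def adjust_gamma(img, gamma, max_val=255):
    """Gamma correction: out = max_val * (in / max_val) ** gamma."""
    return [[round(max_val * (p / max_val) ** gamma) for p in row] for row in img]


def adjust_brightness(img, delta, max_val=255):
    """Add a constant offset and clip the result to [0, max_val]."""
    return [[min(max_val, max(0, p + delta)) for p in row] for row in img]


def augment(img, rng):
    """Randomly flip, then apply a random gamma and brightness change.

    The probabilities and parameter ranges are illustrative choices.
    """
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    img = adjust_gamma(img, rng.uniform(0.8, 1.2))
    return adjust_brightness(img, rng.randint(-20, 20))


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for reproducibility
    sample = [[10, 120], [200, 250]]
    print(augment(sample, rng))
```

Applying the transforms on the fly with a seeded generator yields a different variant of each image per epoch while keeping runs reproducible.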

Pixel intensities are normalized either by scaling to the [0, 1] range or by standardization to zero mean and unit variance. This normalization accelerates model convergence and ensures numerical stability during training. If required, supplementary techniques such as histogram equalization or contrast enhancement are applied to improve the visualization of subtle anatomical structures and pathological changes.
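Both normalization options can be written in a few lines of Python. This sketch assumes 8-bit intensities flattened into a list; the function names are hypothetical, and the population (not sample) standard deviation is used for standardization.

```python
import math


def scale_to_unit(pixels, max_val=255):
    """Map 8-bit intensities to [0, 1] by dividing by the maximum value."""
    return [p / max_val for p in pixels]


def standardize(pixels):
    """Shift to zero mean and scale to unit (population) variance."""
    n = len(pixels)
    mean = sum(pixels) / n
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)
    if std == 0:  # constant image: nothing to scale
        return [0.0] * n
    return [(p - mean) / std for p in pixels]


if __name__ == "__main__":
    flat = [0, 64, 128, 255]  # illustrative flattened pixels
    print(scale_to_unit(flat))
    print(standardize(flat))
```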

The preprocessing pipeline also includes dataset curation—removing duplicates or low-quality images—and verifying correct labeling. Each image is associated with a diagnostic label (e.g., “normal,” “pneumonia,” “other pathology”), which is later used in supervised learning. Finally, the data are divided into training, validation, and test subsets using stratified sampling to preserve class distribution across all partitions.

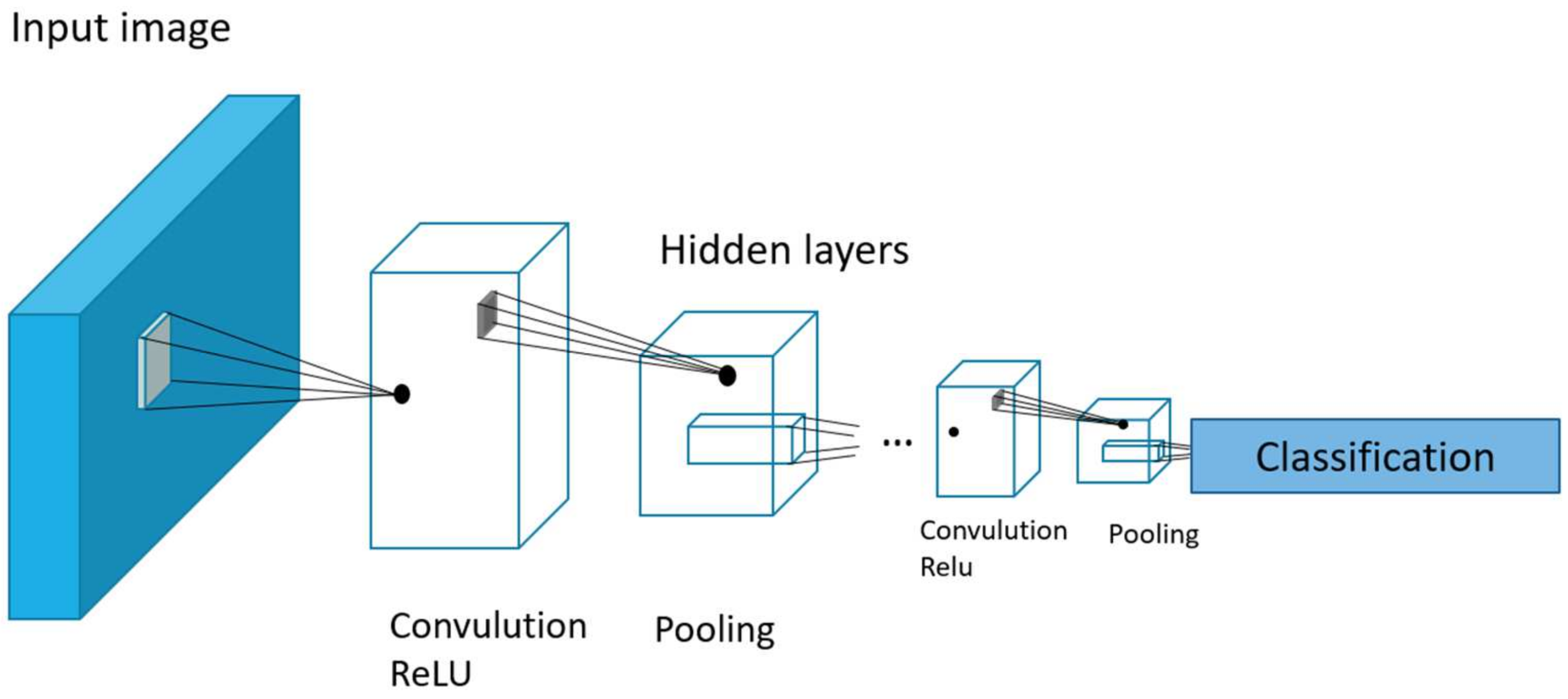

In this article, we use Inception v3 (Figure 2), a neural network architecture designed specifically for image processing. It was developed by Google as part of the Inception series of models. It has 33 layers and offers a reasonable compromise between accuracy and processing speed.

4. Results

For image processing, a database of 3000 images of children up to 5 years of age was created from various sources, mainly Internet databases. To process the images, the entire database of 3000 X-ray images is first converted into three copies, each restricted to one of the intensity intervals 0–1/3·256, 1/3·256–2/3·256, and 2/3·256–256, and each copy is fed to one of the three modules: pixel values in the interval 0–86 are fed to Module 1, those in the interval 87–167 to Module 2, and those in the interval 168–255 to Module 3.

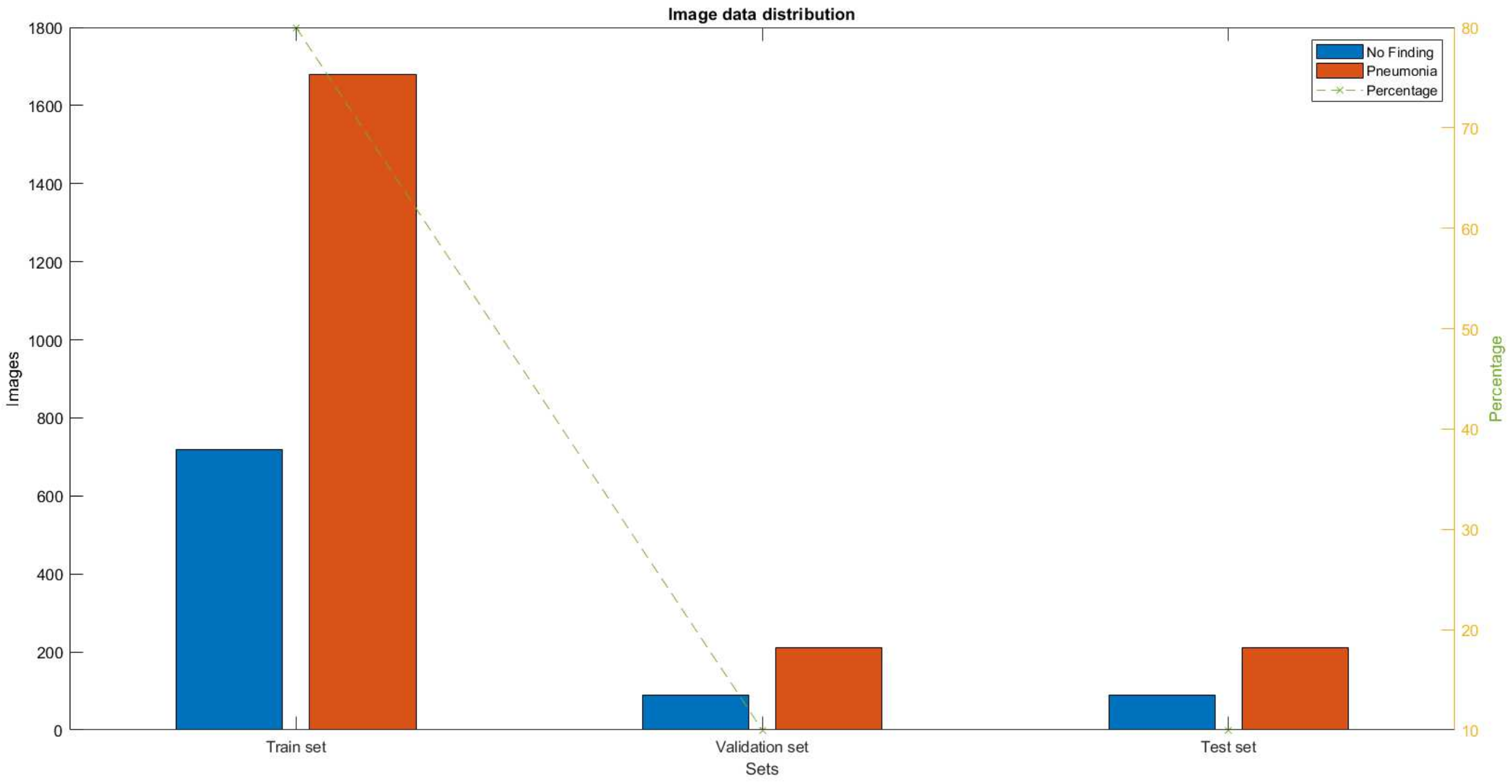

Each module is thus fed all 3000 X-ray images, split into 80% for training, 10% for testing, and 10% for verification.
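The stratified 80/10/10 split can be sketched as follows. The function name, the fixed seed, and the class counts in the test example (2000 pneumonia and 1000 No Finding images) are hypothetical; only the total of 3000 images and the 80:10:10 ratio come from the text. Splitting each class separately preserves the class proportions in all three subsets up to rounding.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split image indices into training/testing/verification sets per class.

    labels: class label of each image (e.g. "Pneumonia", "No Finding").
    fractions: share of each class assigned to training and testing; the
    remainder of each class goes to verification.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, test, verify = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_train = round(fractions[0] * len(idxs))
        n_test = round(fractions[1] * len(idxs))
        train += idxs[:n_train]
        test += idxs[n_train:n_train + n_test]
        verify += idxs[n_train + n_test:]
    return train, test, verify


if __name__ == "__main__":
    # Hypothetical class counts summing to 3000 images.
    labels = ["Pneumonia"] * 2000 + ["No Finding"] * 1000
    tr, te, ve = stratified_split(labels)
    print(len(tr), len(te), len(ve))
```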

When the X-ray images are submitted, the distribution shown in Figure 3 is obtained. It shows the number of X-ray images labeled with the presence and with the absence of pneumonia for the three groups (training, testing, and verification).

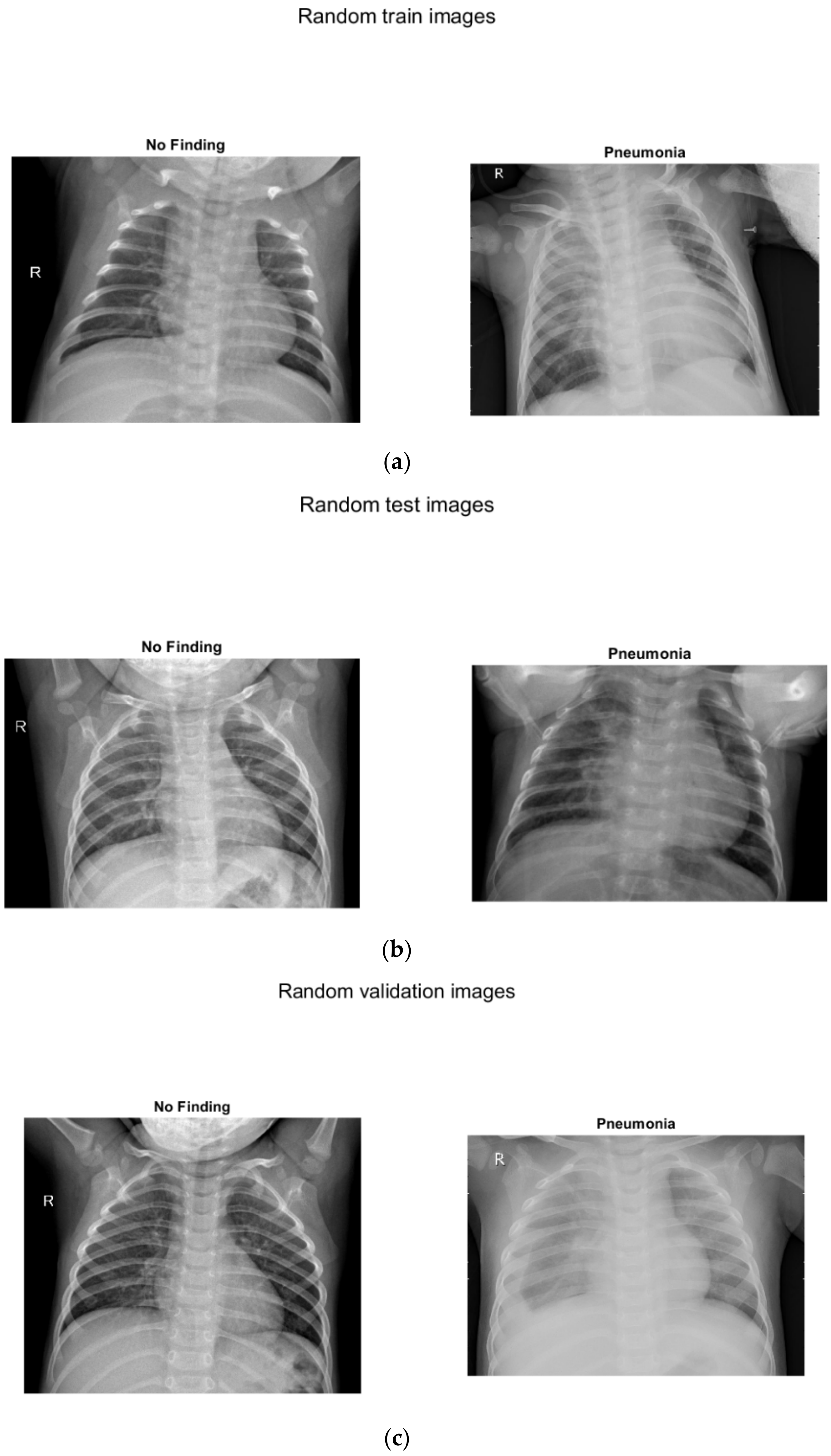

Figure 4 shows sample images of children up to 5 years old with and without pneumonia: (a) for training, (b) for testing, (c) for verification.

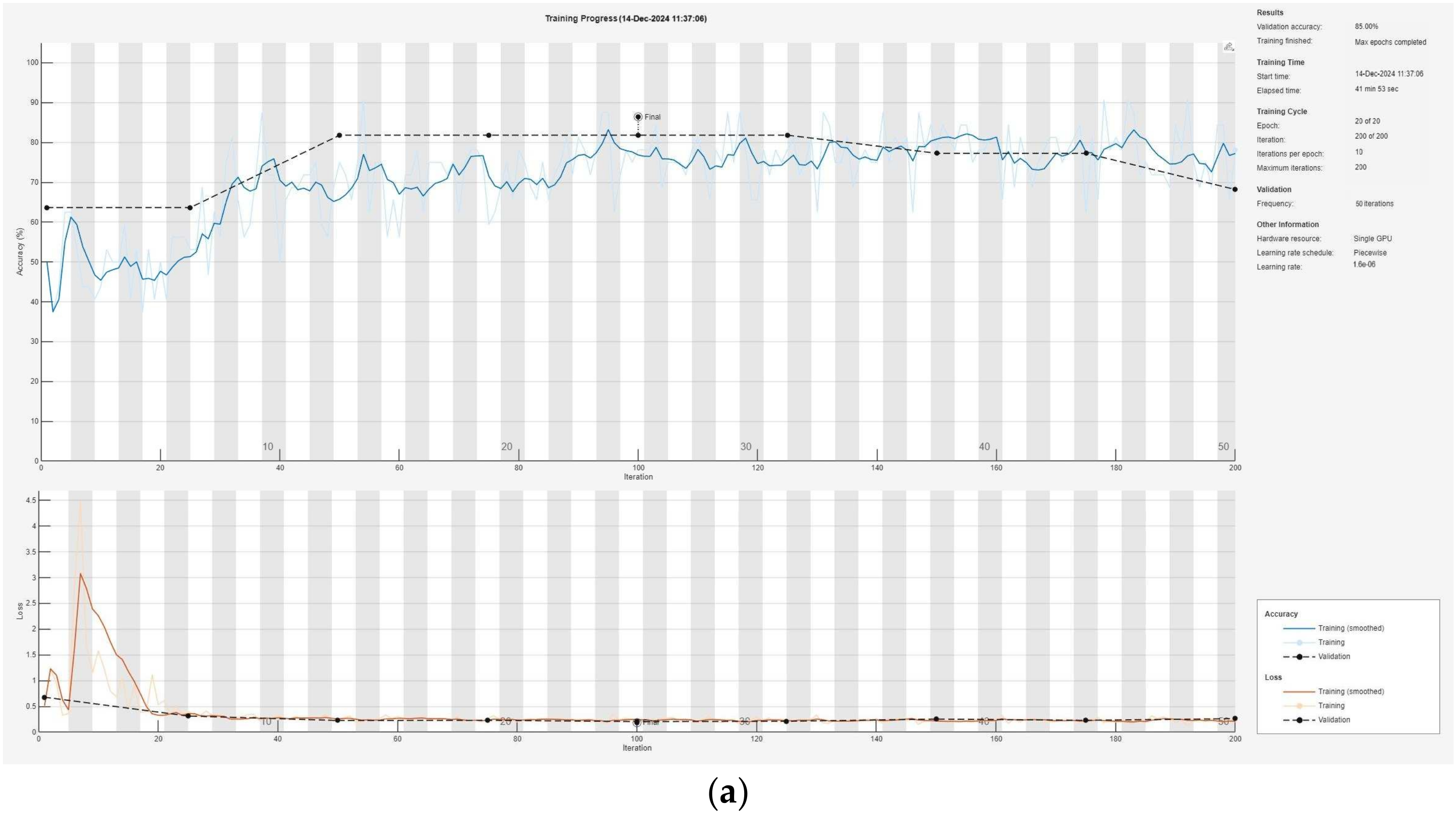

Each module is trained separately on the corresponding X-ray images. The training of each module (1, 2, and 3) is illustrated in Figure 5: (a) for Module 1, (b) for Module 2, (c) for Module 3.

In Figure 5, three different chest X-rays are presented, distributed according to the indicated thresholds. In each case, the first image is the original chest X-ray, and the next three images show the same image confined to the specified intervals.

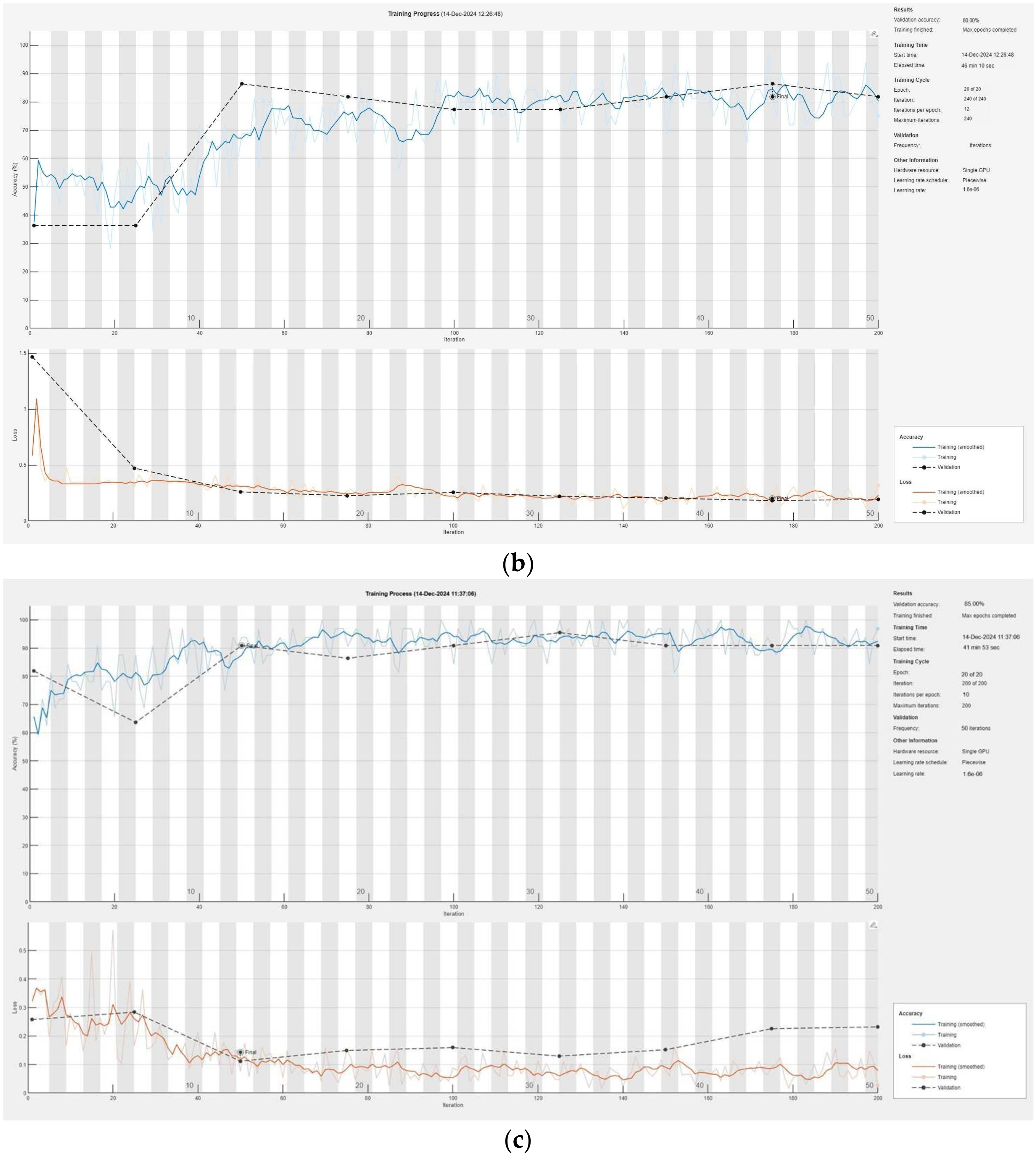

Table 1 contains the results of the three modules (1, 2, and 3) and the result after using the intuitionistic fuzzy integration module, AlexNet, and GoogLeNet.

Figure 6 illustrates the training process of the convolutional neural network, presented modularly: (a) for Module 1, (b) for Module 2, and (c) for Module 3, highlighting the progressive learning structure and specialization within each module.

To evaluate the achieved result, we compare it with three other neural networks. The first is a single CNN fed the same image data. Its training data are given in Table 1, and its training process (including the number of iterations, the number of epochs, the obtained accuracy, and the validation loss) is shown in Figure 7a. In exactly the same way, we compare with GoogLeNet [31,32], another neural network designed for image recognition, trained on the same images used for all previous networks. Its training data are given in Table 1, and its training process is shown in Figure 7b. Similarly, we use AlexNet [33,34,35], a further image recognition network; its data are given in Table 1 and Figure 7c.

5. Discussion

The presented hybrid convolutional neural network (CNN) combined with intuitionistic fuzzy estimators (IFEs) [36,37,38] shows significant progress in the detection of pneumonia in children. The system uses three modules to process different parts of the X-ray images (background, lung tissue, and bone structure), ensuring maximum information extraction. The individual modules achieved accuracies of 85.00% (Module 1), 80.00% (Module 2), and 93.33% (Module 3). After their outputs were integrated by the intuitionistic fuzzy logic module, the overall accuracy of the system reached 94.93%, higher than that of any single module and making the system more accurate than the traditional approaches. The validation losses were also low: 0.0043 for Module 1, 0.0053 for Module 2, 0.0032 for Module 3, and only 0.0017 for the entire system, indicating good model optimization.

The network uses the 33-layer Inception v3 architecture, specifically tailored for image processing, which helps in identifying complex features such as interstitial infiltrates, lobar involvement, and pleural effusions. The model was trained and tested on a dataset of 3000 X-ray images, split into a ratio of 80:10:10 for training, testing, and validation. The data distribution and modular learning ensure the robustness of the system when working with noisy and variable data. A key strength of the approach lies in the use of intuitionistic fuzzy logic, which successfully manages uncertainty and reduces the risk of misclassification.

These results have broad clinical applicability, providing a reliable tool for the early diagnosis of pneumonia in children. The developed model can be extended with additional data and adapted to detect other respiratory diseases, improving health outcomes, especially in resource-limited settings. Future steps include testing larger and more diverse datasets and comparing them with other hybrid architectures to validate the flexibility and efficiency of the approach.

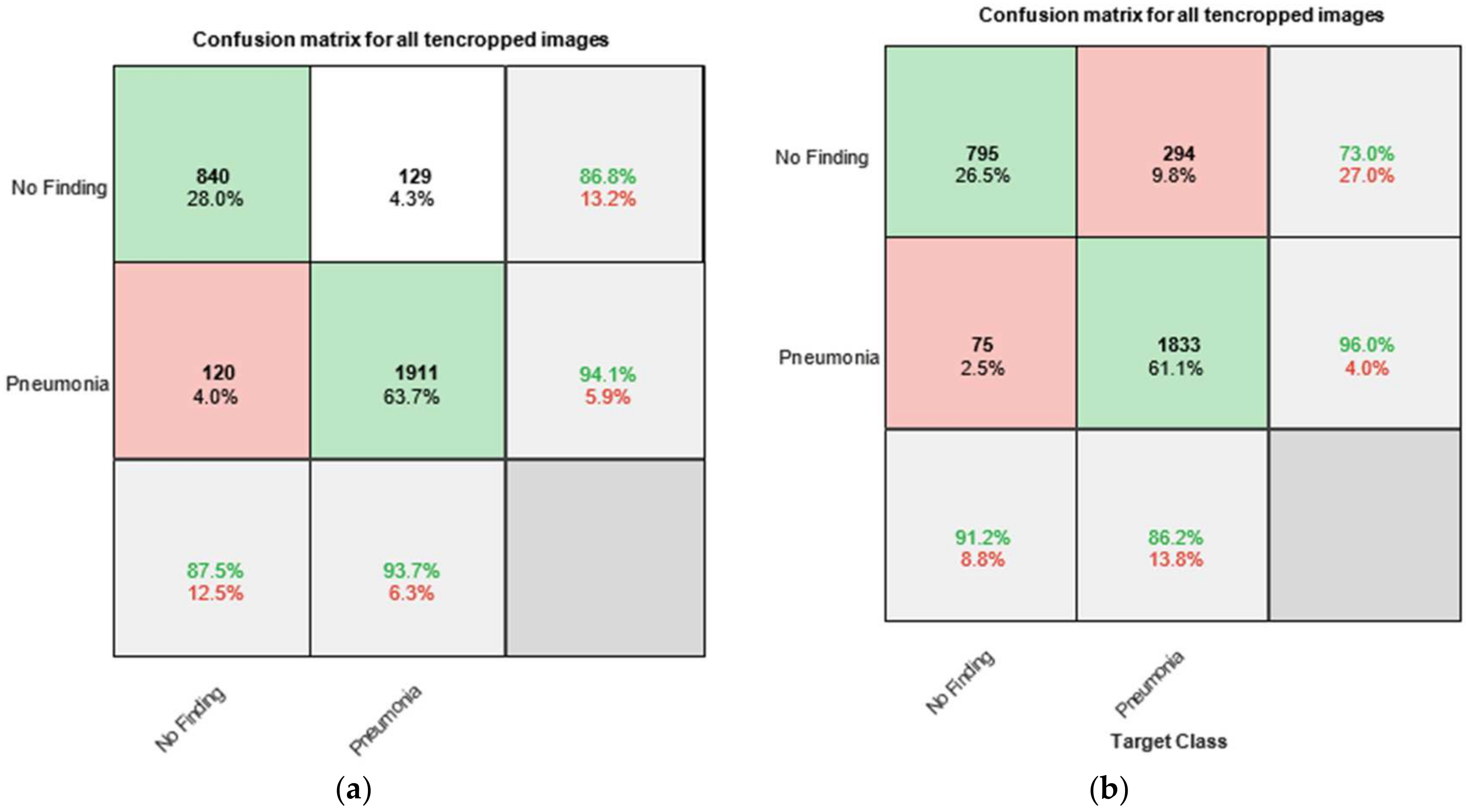

Throughout the following, “No Finding” denotes the absence of pneumonia.

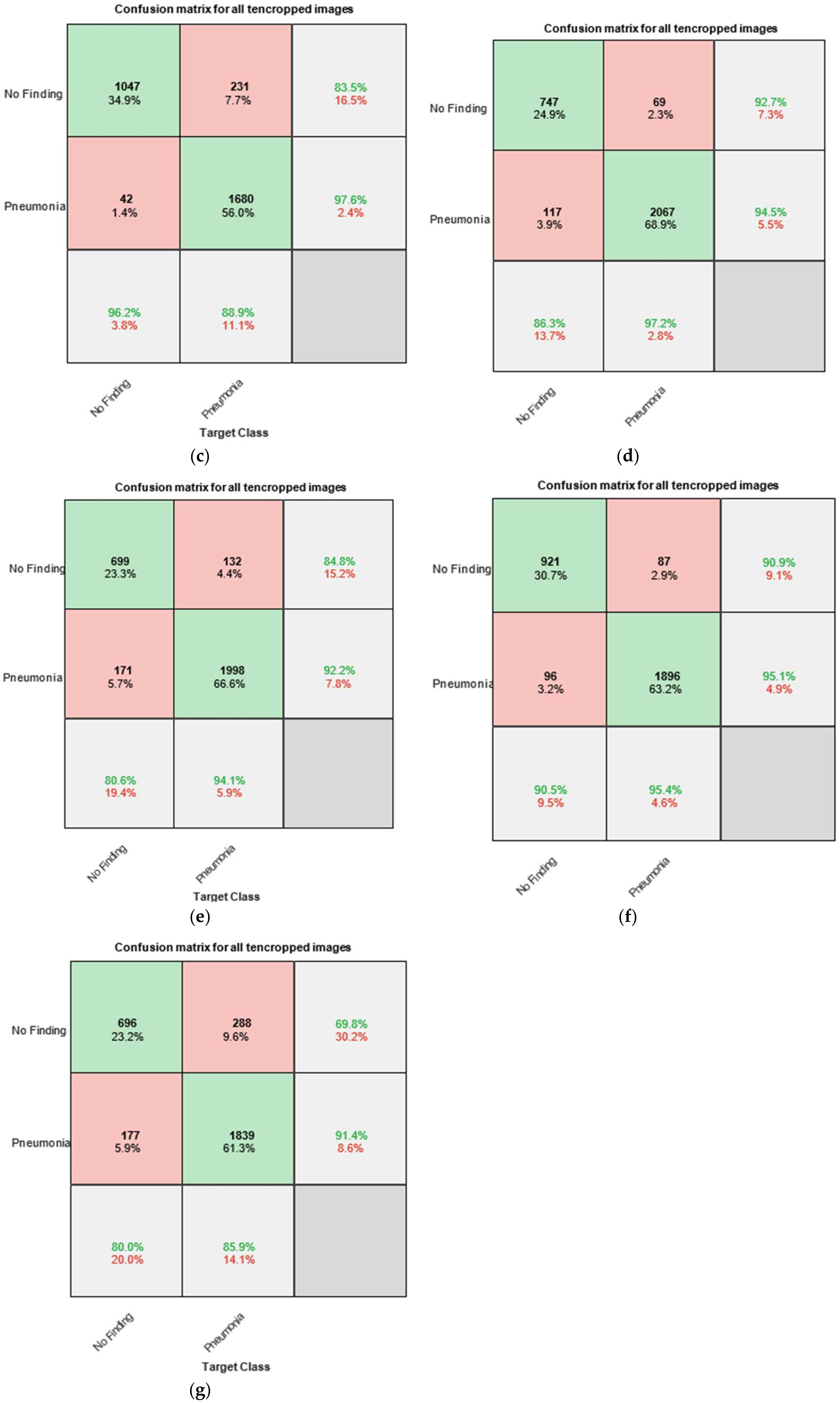

Figure 8 presents the confusion matrices, also known as error matrices, obtained by testing the trained networks with the test dataset. The chart is divided into several sections. The upper-left part consists of four cells labeled as follows: the first row contains the label “No Finding”, while the second row contains the label “Pneumonia”. The columns are labeled in the same manner—“No Finding” in the first column and “Pneumonia” in the second column.

In the confusion matrix charts, the rows correspond to the predicted class (output class), while the columns correspond to the actual class (target class). The diagonal cells represent correctly classified observations, whereas the off-diagonal cells indicate misclassified observations.

For instance, in Figure 8a, the cell in the second row and second column shows the X-ray images with pneumonia that were correctly classified, out of the total of 3000 images. The reported value is 1911 (63.7%): when the entire set of 3000 X-ray images was tested, 1911 images labeled “Pneumonia” were correctly classified by the system as such. This corresponds to 63.7% of the total dataset (3000 × 63.7% = 1911).

The other diagonal cell in the matrix (first row and first column) represents the correct classification of X-ray images with no finding of pneumonia. This corresponds to 840 images or 28.0% of the total dataset (i.e., 3000 × 28.0% = 840).

Similarly, the two off-diagonal cells represent incorrect classifications of X-ray images:

The cell in the first row and second column indicates the number (and the corresponding percentage of the total 3000 images) of X-ray images annotated as “Pneumonia” but misclassified by the system as “No Finding”. The reported value is 129 images (4.3% of the total dataset).

The cell in the second row and first column represents the number of X-ray images annotated as “No Finding” but misclassified by the system as “Pneumonia”. The reported value is 120 images (4.0% of the total dataset).

The rightmost column of the chart displays the percentages of all instances assigned to a given class, whether correctly or incorrectly classified. These values are expressed as percentages, with correct classifications shown in green and incorrect classifications in red.

For example, the second row, third column reports that 94.1% of all X-ray images classified by the system as “Pneumonia” were actually annotated as “Pneumonia”, while 5.9% were in fact annotated as “No Finding”. These indicators are commonly referred to as the precision (or positive predictive value) and the false discovery rate, respectively.

Similarly, the bottom row of the chart presents the percentages of all instances that belong to a given class, whether correctly or incorrectly classified. These indicators are commonly referred to as the sensitivity (also known as recall or true positive rate) and false negative rate.
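The indicators described above can be recomputed from the four cells of Figure 8a. Under the stated convention (rows = predicted class, columns = actual class), the 94.1% in the second row equals 1911 / (1911 + 120), so the 120 images are predicted “Pneumonia” but annotated “No Finding” (false positives), and the 129 images are predicted “No Finding” but annotated “Pneumonia” (false negatives). The Python sketch below (function name hypothetical) derives the row and column percentages from those counts.

```python
def binary_metrics(tp, fn, fp, tn):
    """Metrics for the positive class ("Pneumonia") of a 2x2 confusion matrix."""
    total = tp + fn + fp + tn
    precision = tp / (tp + fp)  # right-hand column, "Pneumonia" row
    recall = tp / (tp + fn)     # bottom row, "Pneumonia" column (sensitivity)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "false_discovery_rate": 1 - precision,
        "recall": recall,
        "false_negative_rate": 1 - recall,
        "specificity": tn / (tn + fp),
    }


if __name__ == "__main__":
    # Figure 8a counts: TP = 1911, TN = 840, FN = 129, FP = 120.
    metrics = binary_metrics(tp=1911, fn=129, fp=120, tn=840)
    for name, value in metrics.items():
        print(f"{name:>22}: {100 * value:.1f}%")
```

With these counts the overall accuracy is (1911 + 840) / 3000 = 91.7%, the precision 94.1%, and the sensitivity 93.7%.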

Figure 8a–g shows the individual graphs for Module 1, Module 2, Module 3, Output O, Only CNN, GoogLeNet, and AlexNet. To complement the comparison with existing CNN models, we also include a support vector machine (SVM). SVMs are powerful supervised learning models that perform image recognition by finding the optimal hyperplane separating the data into distinct classes. In image classification tasks, SVMs are particularly effective in high-dimensional spaces and with smaller datasets. Feature extraction techniques such as the histogram of oriented gradients (HOG), principal component analysis (PCA), or deep CNN embeddings are typically used to convert images into vectors, which are then fed into the SVM for training. By employing kernel functions (linear, or non-linear ones such as polynomial or RBF kernels), SVMs can also handle non-linear separations, making them versatile and accurate for image recognition [39,40].

Table 2 presents a comparative analysis of correctly and incorrectly classified images for seven different neural networks and an SVM.

5.1. Analysis of Classification Performance Across Different Models

Table 2 presents a comparative study of the accuracy in classifying chest X-ray images as “normal” (non-pneumonia) or “pneumonia” using seven neural network models and a classical support vector machine (SVM). The models are evaluated based on the percentages of correctly and incorrectly classified images in the two main categories.

5.1.1. Classification of Normal Cases (Non-Pneumonia)

Among all models, Output O demonstrates the highest accuracy for normal images, with 96.2% correct classifications and only 3.8% errors, which makes it the leading model for reliably recognizing healthy lung structures. Only CNN (91.2%), SVM (90.6%, with 9.4% errors), and GoogLeNet (90.5%) also exceed 90% accuracy; SVM in particular remains competitive for clearly distinguishable cases despite its classical nature and lack of deep learning. In contrast, AlexNet (80.0%) and Module 2 (80.6%) underperform, with errors exceeding 19%, suggesting a weaker ability to identify normal cases.

5.1.2. Classification of Pneumonia Cases

Module 3 achieves the best performance in pneumonia classification, with 97.2% accuracy and only 2.8% errors. This confirms its high sensitivity to pathological findings, including interstitial infiltrates, lobar opacities, or pleural effusions. GoogLeNet also demonstrates excellent efficacy (95.4% accuracy), along with Module 2 (94.1%). These results highlight the importance of deeper architectures and complex structures for successfully detecting clinical abnormalities. At the lower end of the spectrum, Only CNN, AlexNet, and SVM show significantly reduced sensitivity—approximately 86% accuracy for pneumonia classification, accompanied by 13–14% errors. This could be critical in real-world clinical settings, where false negatives may have serious consequences. Output O, despite its outstanding performance on healthy cases, correctly classifies only 88.9% of pneumonia cases, with 11.1% errors—indicating an asymmetric sensitivity.

5.1.3. Comparative Balance Between Normal and Pneumonia Cases

The balance between correctly classifying healthy and pneumonia cases is critical for clinical applicability, since a model must reliably recognize both normal and pathological structures. Module 3 illustrates the trade-off: it achieves the highest pneumonia accuracy (97.2%) but only 86.3% for healthy cases, a gap of 10.9 percentage points.
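One simple way to quantify class balance, offered here as an assumption rather than as the metric used in the study, is the absolute gap between the two per-class accuracies. The sketch applies it to the models for which both values are stated exactly in this section; `class_gap` is a hypothetical helper.

```python
# Per-class accuracies (%) from Section 5.1: (normal, pneumonia).
# Only models whose two values are stated exactly are included.
per_class = {
    "Output O": (96.2, 88.9),
    "GoogLeNet": (90.5, 95.4),
    "Module 2": (80.6, 94.1),
    "Module 3": (86.3, 97.2),
}


def class_gap(normal_acc, pneumonia_acc):
    """Absolute difference between the two class accuracies, in percentage points."""
    return round(abs(normal_acc - pneumonia_acc), 1)


gaps = {name: class_gap(*acc) for name, acc in per_class.items()}

# A smaller gap means more even performance across the two classes
for name, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    print(f"{name}: gap = {gap:.1f} pp")
```

The gap ignores the overall accuracy level, so it complements rather than replaces the average accuracy of each model.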

5.1.4. Conclusions and Implications

Output O is the most reliable model overall and emerges as the preferred choice for automated pneumonia diagnosis: it achieves the highest accuracy on normal cases (96.2%) while maintaining 88.9% accuracy on pneumonia cases. GoogLeNet and Module 2 also deliver strong results, particularly in pneumonia classification. SVM, while limited on pneumonia cases, remains effective on normal images and could be valuable in hybrid systems. AlexNet, which is weak in both categories, and the Only CNN model, whose pneumonia accuracy lags well behind its accuracy on normal images, require further optimization or integration with modern architectures.

Because the results were derived from more than 300 training and testing iterations per model and proved stable and reproducible, they can be regarded as comparable in reliability to a 5-fold cross-validation, although they do not constitute a formal cross-validation. The reported average accuracies and standard deviations offer a realistic estimate of each algorithm’s performance under clinically relevant conditions. In this context, Output O achieved an average accuracy of 92.55% with a standard deviation of 5.16%, making it the most suitable model for automated diagnosis. Although GoogLeNet had a slightly higher average accuracy (92.95%), its lower accuracy on normal cases (90.5%, compared with 96.2% for Output O) limits its overall effectiveness. The high variability observed in Module 3 (Std. Dev. = 7.71%) also raises concerns about its consistency. These results highlight model stability as a critical factor in medical applications.
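The reported averages and standard deviations appear to be consistent with taking the mean and the sample standard deviation (denominator n − 1) of each model's two per-class accuracies. This reading is an inference from the numbers rather than a procedure stated in the text; the sketch below, using Python's standard `statistics` module, reproduces the stated values for Output O, GoogLeNet (mean only is stated), and Module 3.

```python
from statistics import mean, stdev

# Per-class accuracies (%) reported in Section 5.1: (normal, pneumonia)
per_class = {
    "Output O": (96.2, 88.9),
    "GoogLeNet": (90.5, 95.4),
    "Module 3": (86.3, 97.2),
}


def summarize(pair):
    """Mean and sample standard deviation (n - 1 denominator) of the class accuracies."""
    return round(mean(pair), 2), round(stdev(pair), 2)


summary = {name: summarize(acc) for name, acc in per_class.items()}
for name, (m, s) in summary.items():
    print(f"{name}: mean = {m:.2f}%, std = {s:.2f}%")
```

For two values the sample standard deviation reduces to |a − b|/√2, so under this reading the statistic measures the same class imbalance as the absolute difference between the two class accuracies.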

6. Conclusions

The proposed hybrid convolutional neural network (CNN) with intuitionistic fuzzy estimation (IFE) provides an accurate and robust approach to pneumonia detection in children. By using a modular neural architecture that separates each X-ray image into distinct segments (background, lung tissue, and bone structure) and integrates the results through intuitionistic fuzzy logic, the system achieves an overall accuracy of 94.93%. This outperforms traditional CNN models and addresses key challenges such as uncertainty and noise in medical images. The model’s robust performance, reflected in low validation loss values, indicates its potential for clinical application in the early and accurate diagnosis of pneumonia. Future research should focus on expanding the datasets, investigating the model’s applicability to other diseases, and validating it in real clinical settings to improve its generalizability and impact. This approach can help integrate artificial intelligence more effectively into pediatric diagnostics, especially in resource-limited settings.
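The section does not specify how the intuitionistic fuzzy estimation combines the module outputs. As a hedged sketch only, the example below uses the intuitionistic fuzzy weighted averaging (IFWA) operator, a standard aggregation operator for intuitionistic fuzzy values that is swapped in here and is not necessarily the authors' method. Each module's output is assumed to be a pair (μ, ν) of membership and non-membership degrees for “pneumonia”, with the hesitation degree π = 1 − μ − ν capturing uncertainty. The module outputs, the weights, and the score-based decision rule are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class IFValue:
    """Intuitionistic fuzzy pair for the class "pneumonia" (hypothetical module output)."""
    mu: float  # membership degree (evidence for pneumonia)
    nu: float  # non-membership degree (evidence against pneumonia)

    def __post_init__(self):
        # Intuitionistic fuzzy constraint: mu, nu >= 0 and mu + nu <= 1
        if self.mu < 0 or self.nu < 0 or self.mu + self.nu > 1 + 1e-9:
            raise ValueError("invalid intuitionistic fuzzy pair")

    @property
    def hesitation(self) -> float:
        """Hesitation (uncertainty) degree pi = 1 - mu - nu."""
        return 1.0 - self.mu - self.nu


def ifwa(values, weights):
    """IFWA operator: mu = 1 - prod((1 - mu_i)^w_i), nu = prod(nu_i^w_i)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    mu = 1.0 - math.prod((1.0 - v.mu) ** w for v, w in zip(values, weights))
    nu = math.prod(v.nu ** w for v, w in zip(values, weights))
    return IFValue(mu, nu)


def decide(agg: IFValue) -> str:
    """Hypothetical decision rule: score S = mu - nu; S > 0 means "pneumonia"."""
    return "pneumonia" if agg.mu - agg.nu > 0 else "normal"


# Hypothetical outputs of the three segment modules and hypothetical weights
modules = [IFValue(0.2, 0.6),   # background
           IFValue(0.8, 0.1),   # lung tissue
           IFValue(0.5, 0.3)]   # bone structure
weights = [0.2, 0.6, 0.2]

agg = ifwa(modules, weights)
print(f"mu={agg.mu:.3f}, nu={agg.nu:.3f}, pi={agg.hesitation:.3f} -> {decide(agg)}")
```

Giving the lung-tissue module the largest weight is an illustrative design choice; in practice such weights would have to be tuned or learned on validation data.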

Potential Clinical Implementation Challenges

Artificial intelligence (AI), in particular deep learning with convolutional neural networks (CNNs), has shown significant potential in medical diagnostics, including the automatic recognition of pneumonia on X-ray images. These technologies can speed up the diagnostic process, reduce the workload of medical professionals, and provide a primary assessment in regions with limited access to doctors. However, a wide range of challenges hinders their implementation in clinical practice. One of the main problems concerns the quality of training data: many publicly available databases contain imbalanced classes or inaccurately labeled diagnoses, often extracted automatically from free-text descriptions. In addition, trained models often adapt poorly to new hospital environments, imaging devices, or patient populations, which reduces their accuracy outside the laboratory. Regulatory requirements for approving such systems are strict, and liability in the event of a diagnostic error is not yet clearly defined. Ethical questions also arise regarding the patient’s right to know that a diagnosis was produced by an algorithm. A further challenge is integration into the real clinical environment, where systems must be compatible with existing hospital information platforms and staff must be trained to use them. Nevertheless, AI has the potential to be a valuable tool for supporting medical diagnostics, provided that high data quality, clinical validity, and ethical implementation are ensured.