Toward Real-Time Posture Classification: Reality Check

Abstract

1. Introduction

2. Methods

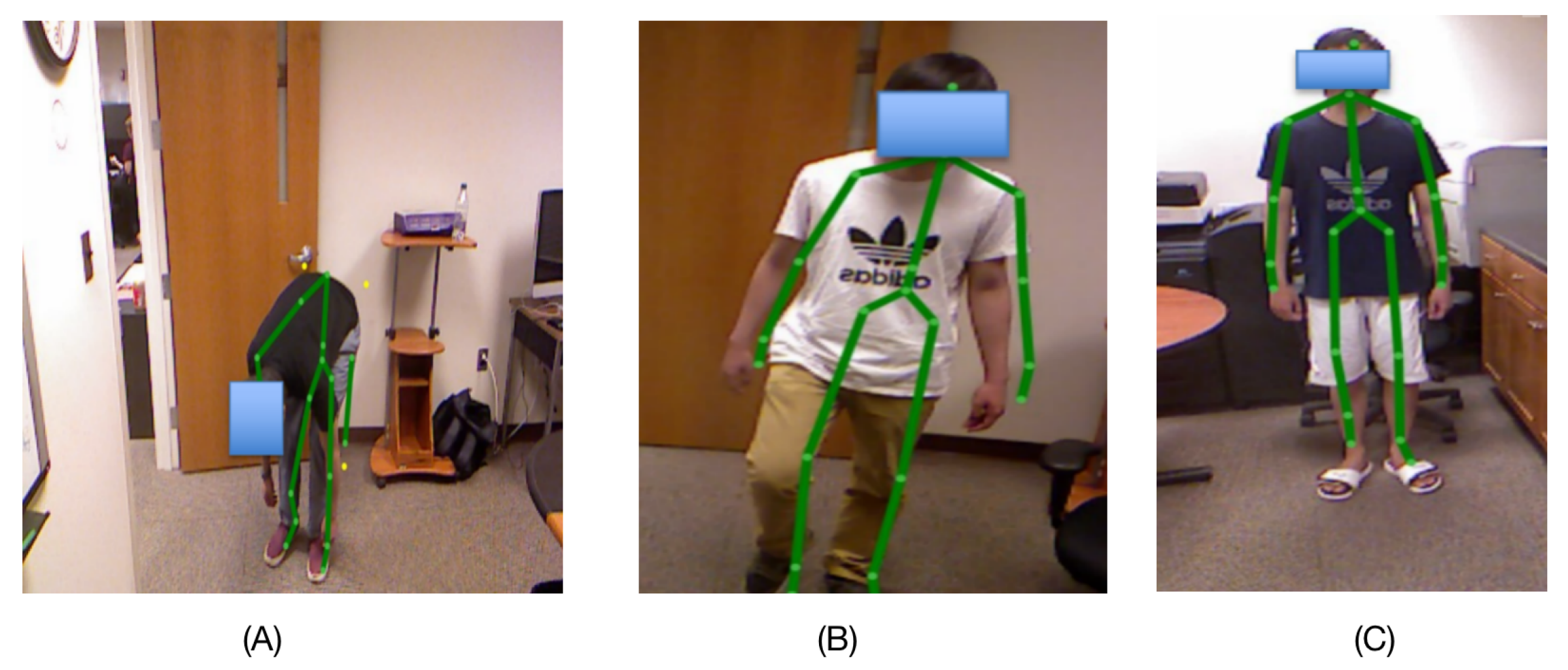

2.1. Data Collection Setup

2.2. Data Collection Procedure

2.3. Data Labeling

2.4. Training

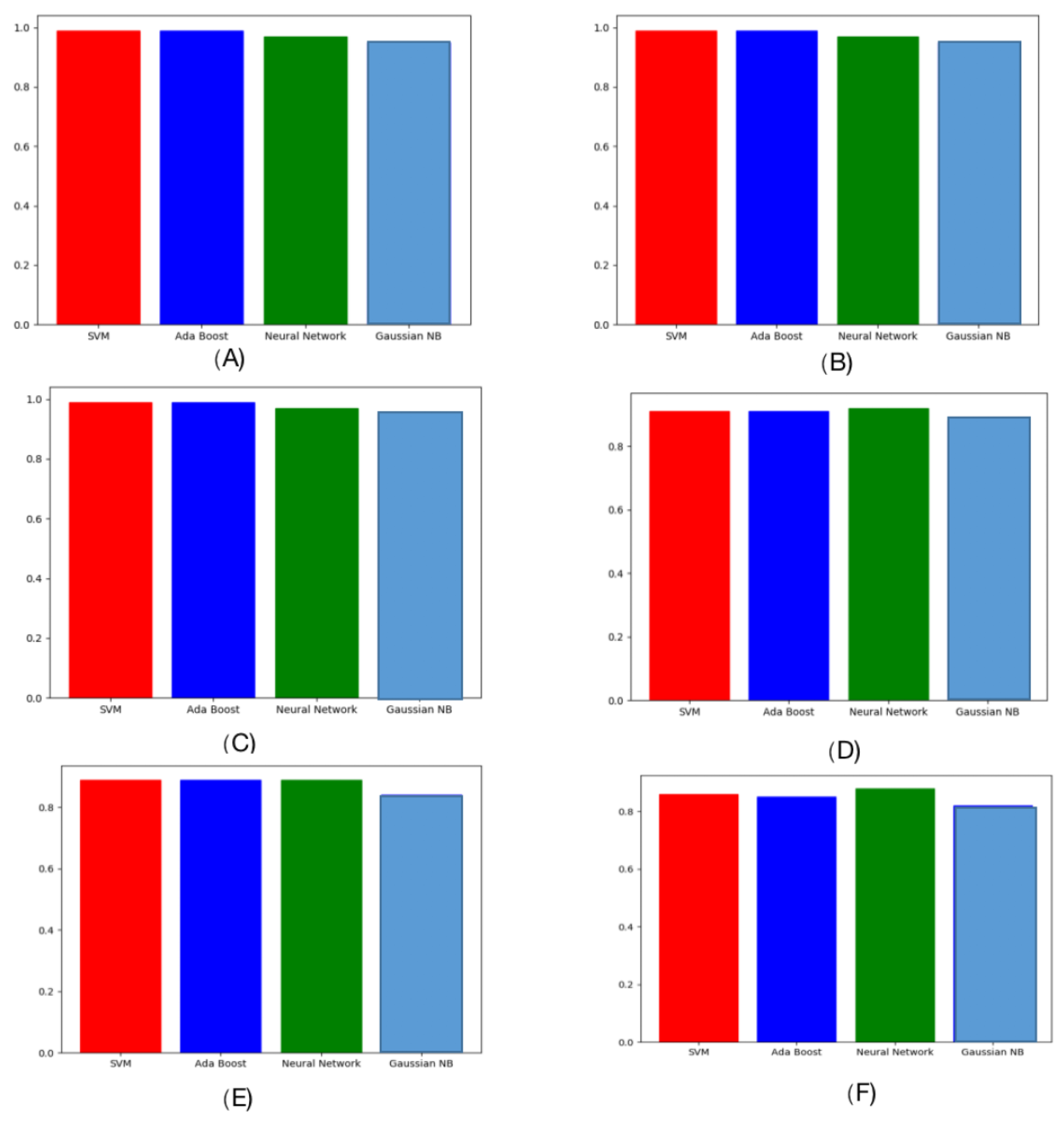

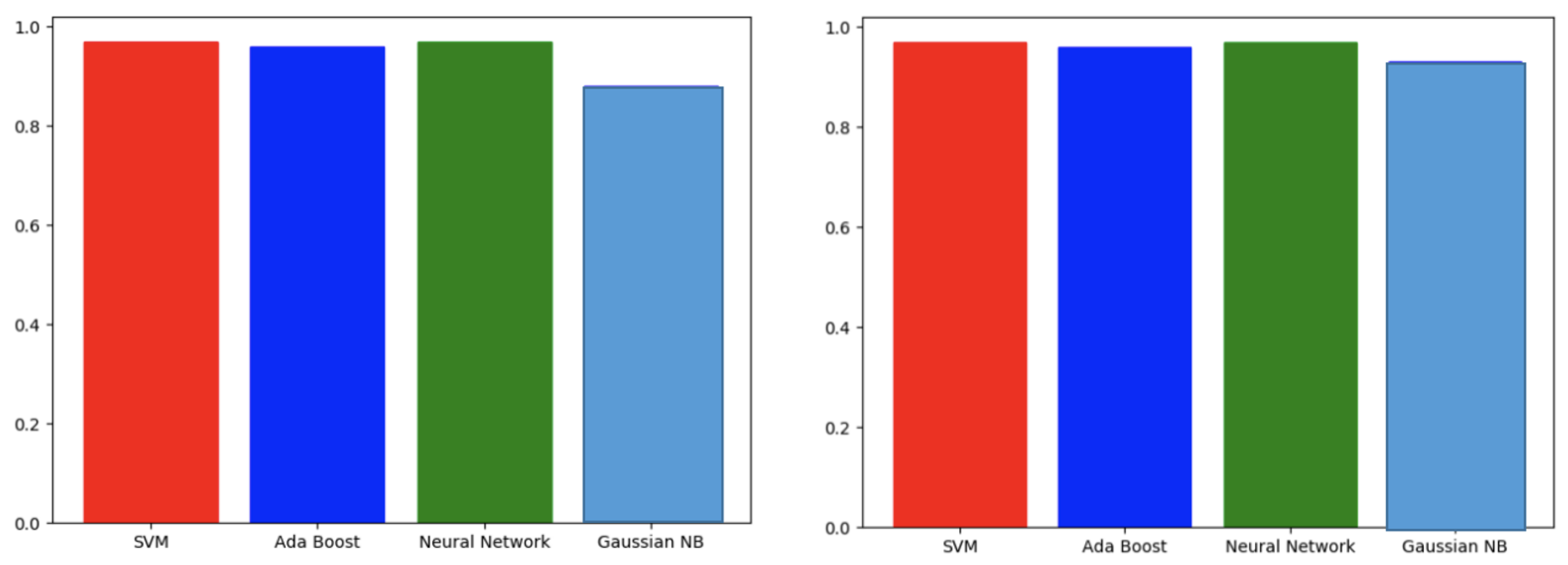

2.4.1. Aggregate Impact of Noise and Missing Joints

2.4.2. Gradual Impact of Noise and Missing Joints

- Hip, knee, ankle, and foot joints;

- Knee, ankle, and foot joints;

- Ankle and foot joints;

- Foot joints.
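
The four scenarios listed above are nested. Each one removes lower-body joints progressively, from hip-to-foot down to the foot alone. The sketch below generates these variants from a single skeleton frame. It rests on several assumptions that the surviving text does not confirm. Each frame is taken to be a flat list of (x, y, z) coordinates for a hypothetical ten-joint skeleton. A "missing" joint is simulated by overwriting its coordinates with 0.0. The names `JOINTS`, `mask_joints` and `missing_joint_variants` are illustrative only.

```python
"""Sketch: build the four nested missing-joint scenarios.

Assumptions (not from the paper): each frame is a flat list of (x, y, z)
coordinates for a hypothetical ten-joint skeleton, and a "missing" joint
is simulated by overwriting its coordinates with 0.0.
"""

# Hypothetical joint order; the study's actual skeleton layout may differ.
JOINTS = ["head", "spine", "shoulder", "elbow", "wrist",
          "hand", "hip", "knee", "ankle", "foot"]
COORDS_PER_JOINT = 3  # assumed (x, y, z) per joint

# Nested lower-body subsets, from largest to smallest.
MISSING_SCENARIOS = [
    ("hip", "knee", "ankle", "foot"),
    ("knee", "ankle", "foot"),
    ("ankle", "foot"),
    ("foot",),
]


def mask_joints(frame, missing, fill=0.0):
    """Return a copy of `frame` with every coordinate of the `missing` joints set to `fill`."""
    expected = len(JOINTS) * COORDS_PER_JOINT
    if len(frame) != expected:
        raise ValueError(f"expected {expected} values, got {len(frame)}")
    masked = list(frame)  # leave the caller's frame untouched
    for name in missing:
        start = JOINTS.index(name) * COORDS_PER_JOINT
        masked[start:start + COORDS_PER_JOINT] = [fill] * COORDS_PER_JOINT
    return masked


def missing_joint_variants(frame):
    """Map a label such as 'ankle+foot' to the frame with those joints masked."""
    return {"+".join(s): mask_joints(frame, s) for s in MISSING_SCENARIOS}


if __name__ == "__main__":
    frame = [float(i) for i in range(1, 31)]  # 10 joints x 3 coordinates, all nonzero
    for label, masked in missing_joint_variants(frame).items():
        print(label, sum(v == 0.0 for v in masked), "coordinates masked")
```

Zeroing the coordinates keeps the feature length fixed, so one trained model can be scored on every scenario. Dropping the columns instead would require a separately trained model for each scenario.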

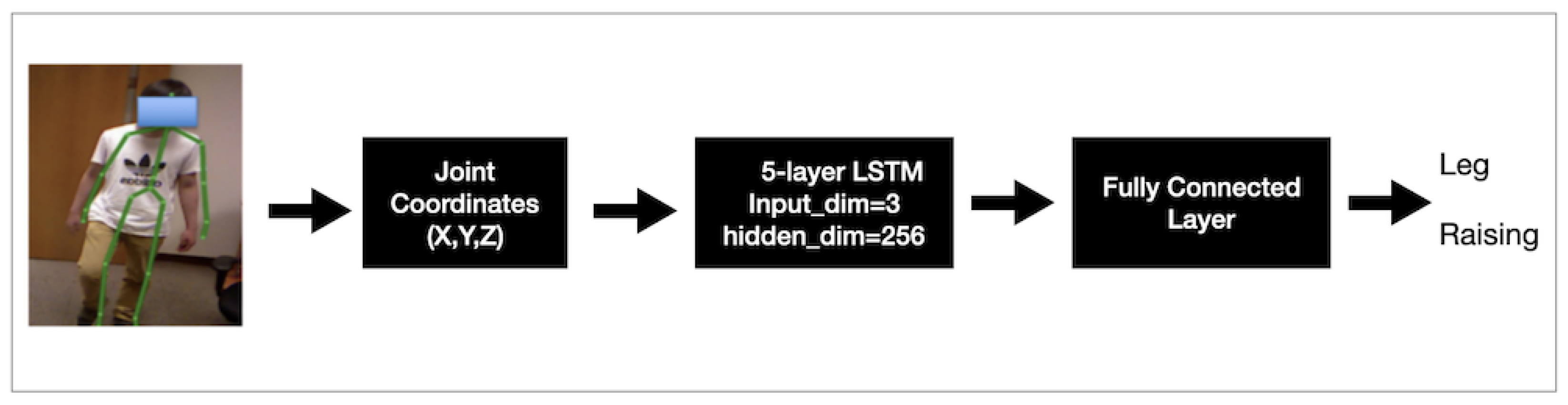

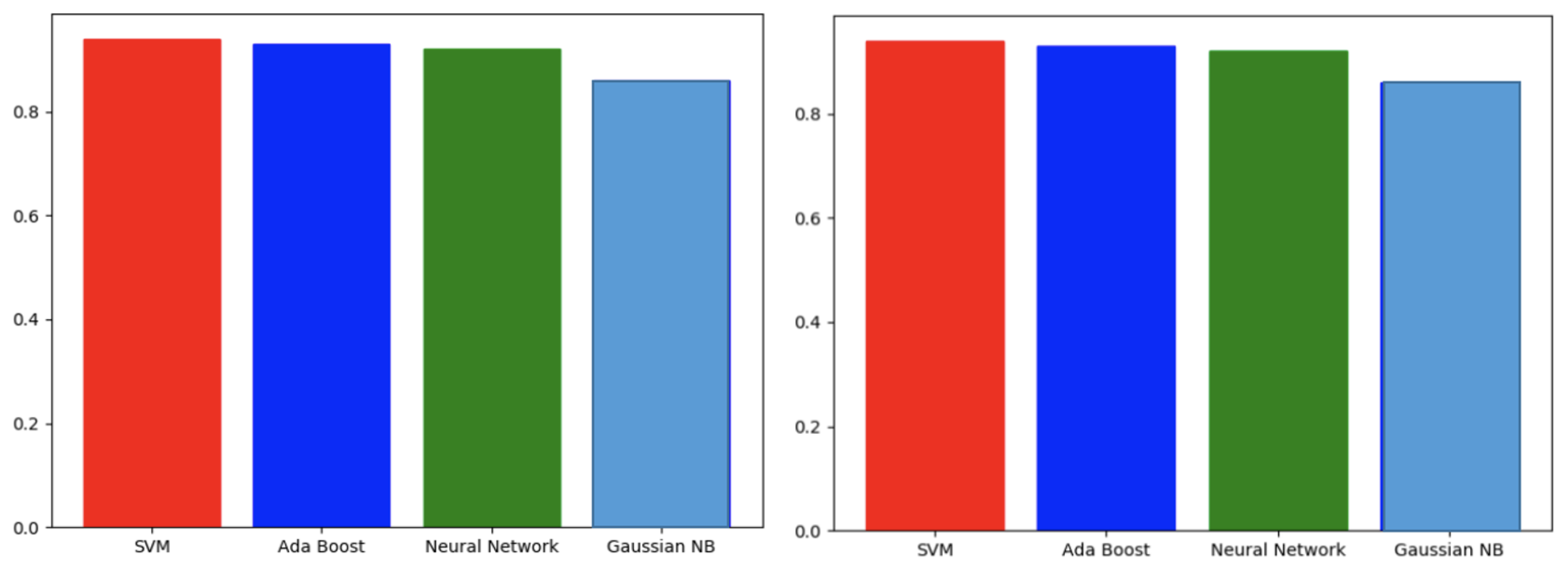

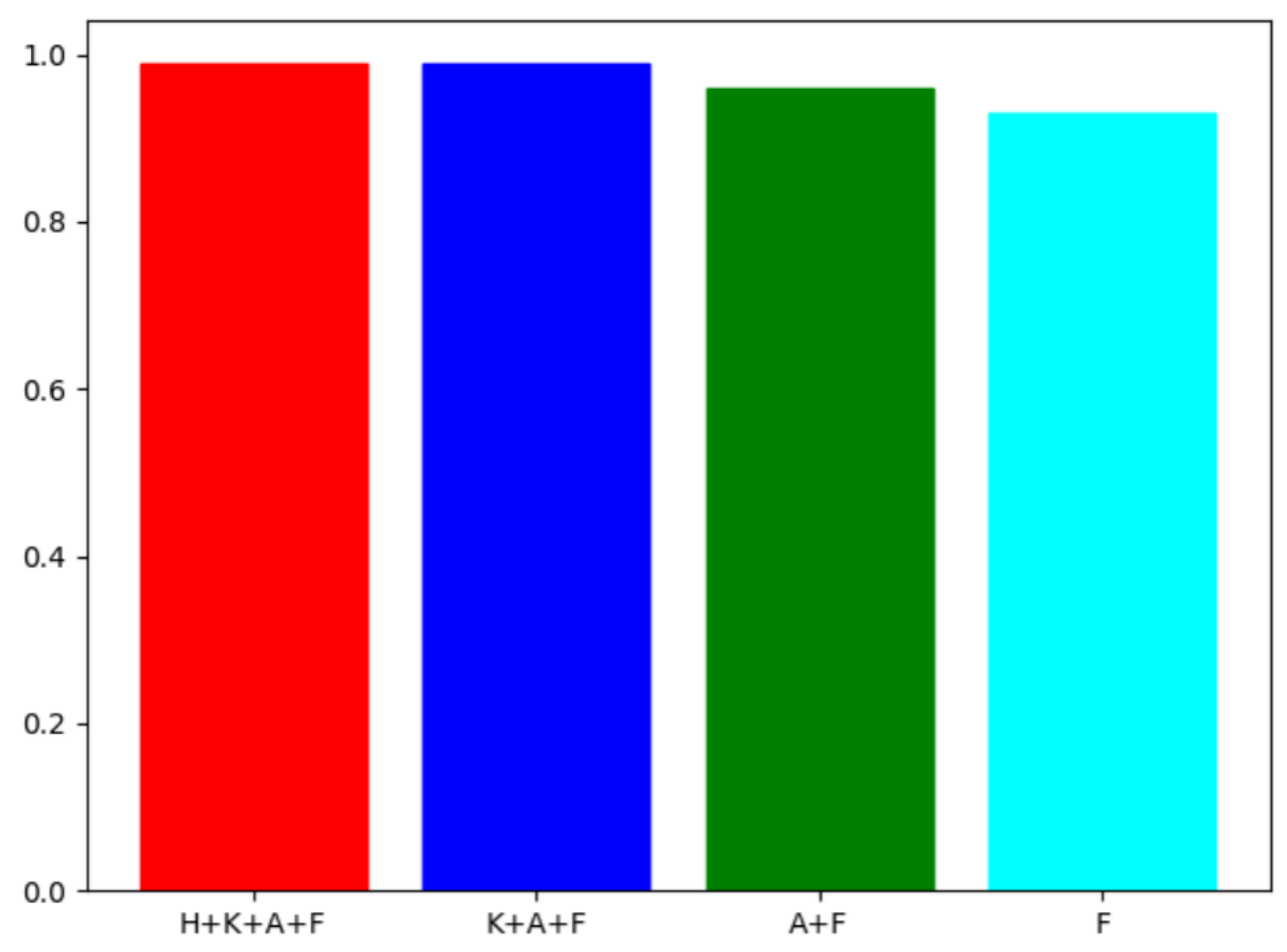

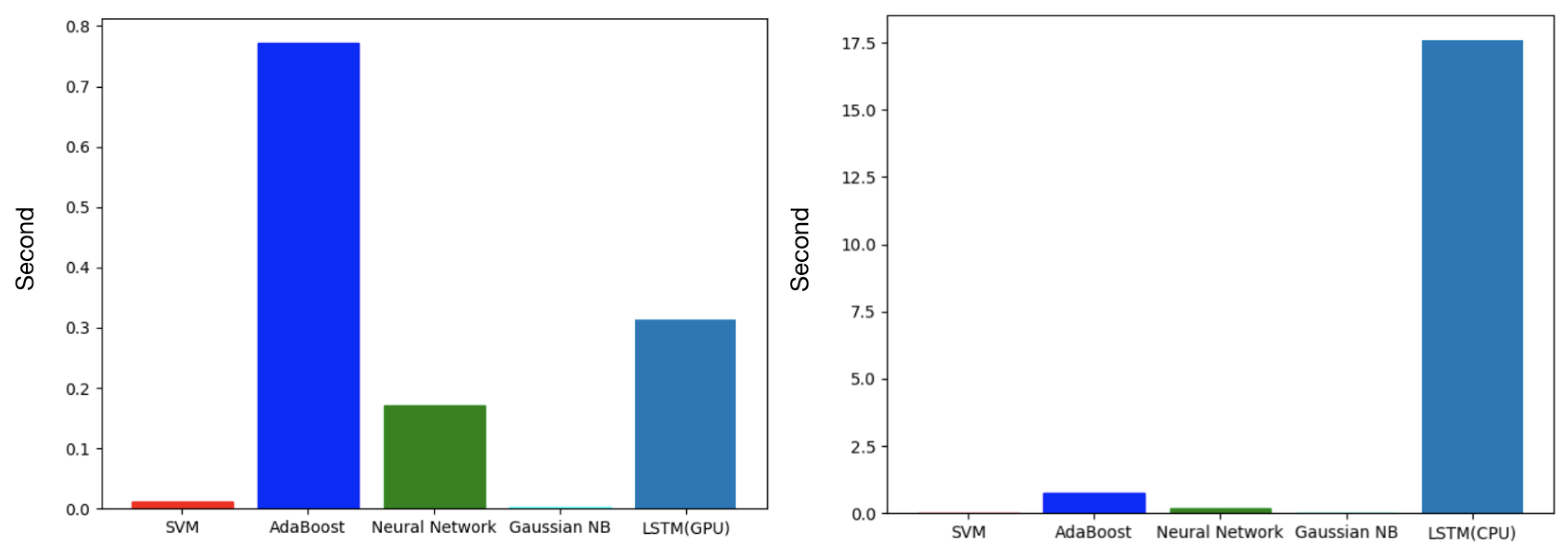

2.4.3. Comparison of Classical Machine Learning and Deep Learning

2.4.4. Deep Learning Classifier Details

3. Results

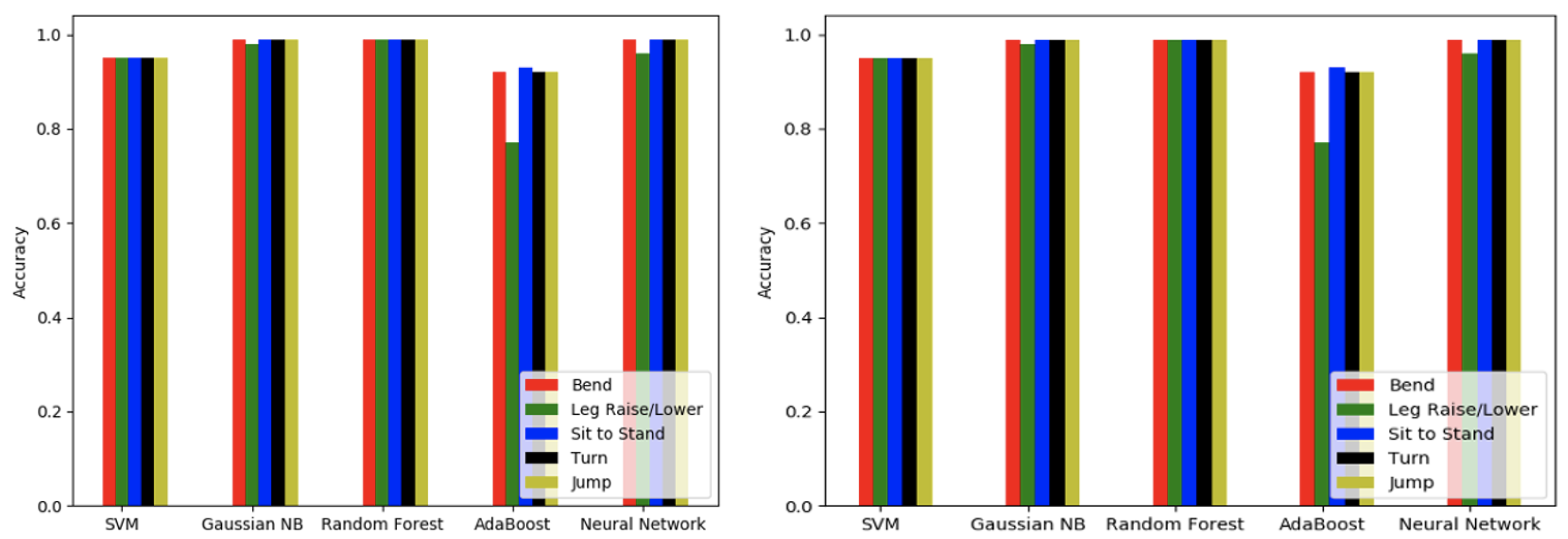

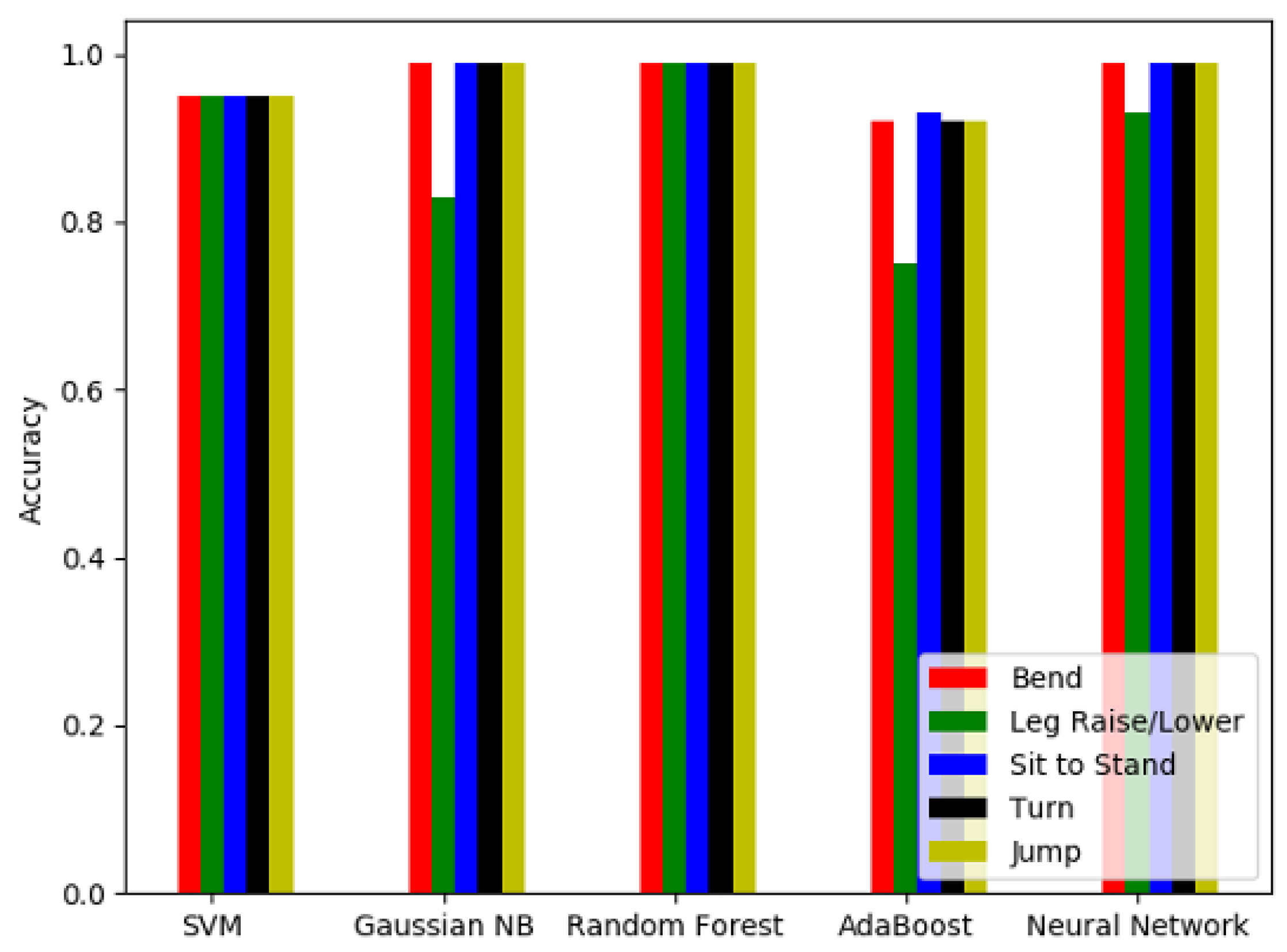

3.1. Aggregate Impact of Noise and Missing Joints

Gradual Impact of Noise and Missing Joints

3.2. Comparison of Classical Machine Learning and Deep Learning

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Posture | Body Motion | Description |
|---|---|---|
| Posture 1 | Whole-Body Motion: Jumping Posture | Participants performed intermittent jumps in the frontal plane. One image was taken as participants jumped off the ground, and another during the landing. |
| Posture 2 | Lower-Body Motion: Leg Raising/Lowering Posture | Participants intermittently raised their left or right leg in the sagittal plane. One image was taken when participants raised their left or right leg, and another when they lowered it. |
| Posture 3 | Body Transition Motion: Sit to Stand Posture | Participants transitioned between sitting and standing postures in the sagittal plane. One image was taken while participants were in the sitting posture, and another in the standing posture. |
| Posture 4 | Upper-Body Motion: Bending Posture | Participants bent forward intermittently in the sagittal plane. One image was taken while participants held an upright posture, and another while they bent forward in the sagittal plane. |
| Posture 5 | Upper-Body Motion: Turning Posture | Participants turned their body intermittently in the transverse plane. One image was taken while the body was turned left in the transverse plane, with both arms held horizontally in front of the chest, and another while it was turned right. |

| Method Name | Hyper-Parameters |
|---|---|
| Support Vector Machine (SVM) | Linear kernel |
| Gaussian Naive Bayes (NB) | Largest variance of the features = |
| Random Forest | Number of trees in the forest = 10; minimum samples split = 2 |
| AdaBoost | Maximum number of estimators at which boosting is terminated = 50; learning rate = 1 |
| Neural Network | 9 hidden units (3 layers in total) |
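
The Gaussian NB entry in the table above refers to the largest variance of the features. This appears to match scikit-learn's `GaussianNB` parameter `var_smoothing`, which adds a fraction of the largest per-feature variance to every class-conditional variance for numerical stability. The minimal, stdlib-only sketch below assumes that convention, which is not confirmed by the surviving text. The class name `GaussianNBSketch` is hypothetical. The default of 1e-9 is scikit-learn's default and is illustrative only, because the value used in the study is missing from the table.

```python
import math
from collections import defaultdict


class GaussianNBSketch:
    """Minimal Gaussian naive Bayes (hypothetical helper, not the study's code)."""

    def __init__(self, var_smoothing=1e-9):
        # Illustrative default (scikit-learn's); the study's value is not given.
        self.var_smoothing = var_smoothing

    @staticmethod
    def _variance(values):
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values) / len(values)

    def fit(self, X, y):
        n_features = len(X[0])
        # Smoothing term: a fraction of the largest variance of any feature.
        largest = max(self._variance([row[j] for row in X]) for j in range(n_features))
        epsilon = self.var_smoothing * largest

        by_class = defaultdict(list)
        for row, label in zip(X, y):
            by_class[label].append(row)

        # Per class: log-prior, per-feature means, smoothed per-feature variances.
        self.params = {}
        for label, rows in by_class.items():
            columns = [[r[j] for r in rows] for j in range(n_features)]
            means = [sum(col) / len(col) for col in columns]
            variances = [self._variance(col) + epsilon for col in columns]
            self.params[label] = (math.log(len(rows) / len(X)), means, variances)
        return self

    def _log_joint(self, row, label):
        log_prior, means, variances = self.params[label]
        # Class log-prior plus the sum of per-feature Gaussian log-densities.
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)
            for x, mu, var in zip(row, means, variances)
        )

    def predict(self, X):
        # Pick the class with the highest log-joint probability for each row.
        return [max(self.params, key=lambda c: self._log_joint(row, c)) for row in X]
```

Prediction reduces to a per-class sum of closed-form log-densities, with no iterative optimization. That is one plausible reason Gaussian NB had the fastest inference in the results summary.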

| Body Motion Styles | Significant Motion Joint Centers |
|---|---|
| Whole-Body Motion: Jumping Posture | Foot, Knee, Hip, Spine, Head, Shoulder, Elbow, Wrist, Hand |
| Lower-Body Motion: Leg Raising/Lowering Posture | Foot, Ankle, Knee |
| Body Transition Motion: Sit to Stand Posture | Knee, Hip, Elbow, Wrist, Hand |
| Upper-Body Motion: Bending Posture | Spine, Hip, Head, Shoulder, Elbow, Wrist, Hand |
| Upper-Body Motion: Turning Posture | Knee, Hip, Shoulder, Elbow, Wrist, Hand |

| Metric | Superior Method(s) |
|---|---|
| Highest Accuracy | SVM, Gaussian NB, random forest, neural network, and LSTM. All of these methods achieved 99% accuracy, in different scenarios. |
| Most Resistant to Noise | SVM and LSTM. Accuracy decreased from 99% to 86% when 30% of the labels contained noise. |
| Fastest Inference Speed | Gaussian NB. Inference on 40% of the data took 0.0024 s. |
| Slowest Inference Speed | |
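
The noise-resistance and inference-speed rows above describe two evaluation conditions. In the first, 30% of the labels contain noise. In the second, inference is run on 40% of the data. The stdlib sketch below covers both, under assumptions that the surviving text does not confirm. It assumes that noisy labels are produced by reassigning a random subset of labels to a different class chosen uniformly at random. It also assumes that the remaining 60% of the samples is used for training. All function names are hypothetical, and the seeded generators exist only to make runs repeatable.

```python
import random
import time


def split_60_40(X, y, seed=0):
    """Shuffle sample indices; keep 60% for training and hold out 40% for inference."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    cut = int(round(0.6 * len(idx)))
    train, test = idx[:cut], idx[cut:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])


def add_label_noise(labels, fraction, classes, seed=0):
    """Reassign `fraction` of the labels to a different, randomly chosen class."""
    rng = random.Random(seed)
    noisy = list(labels)
    n_noisy = int(round(fraction * len(labels)))
    for i in rng.sample(range(len(labels)), n_noisy):
        # Choosing only among other classes guarantees every picked label changes.
        noisy[i] = rng.choice([c for c in classes if c != labels[i]])
    return noisy


def time_inference(predict, X, repeats=5):
    """Return the best wall-clock time in seconds over `repeats` calls of predict(X)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        predict(X)
        best = min(best, time.perf_counter() - start)
    return best
```

Taking the best of several repeats damps scheduler jitter, which matters for sub-millisecond to millisecond timings such as the 0.0024 s figure above. Absolute times still depend on the hardware and the implementation.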

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, H.; Gračanin, D.; Zhou, W.; Dudash, D.; Rushton, G. Toward Real-Time Posture Classification: Reality Check. Electronics 2025, 14, 1876. https://doi.org/10.3390/electronics14091876