A Dynamic Spatiotemporal Deep Learning Solution for Cloud–Edge Collaborative Industrial Control System Distributed Denial of Service Attack Detection

Abstract

1. Introduction

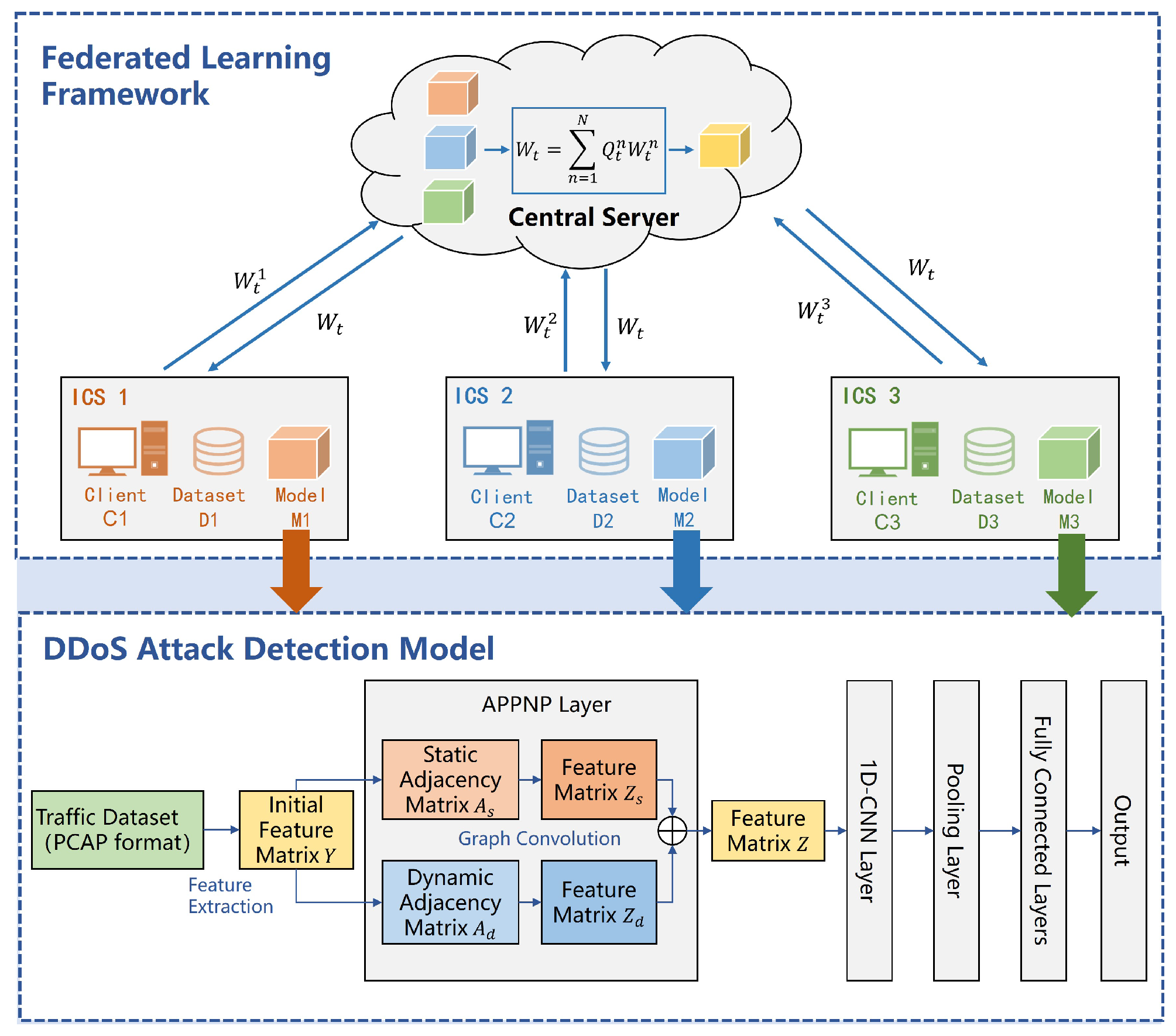

- A federated learning framework suitable for cloud–edge collaborative ICSs is proposed. By assigning a dynamic weight to each industrial client, the framework optimizes the training of the global model and improves its overall detection performance.

- A DDoS attack detection model is introduced that constructs static and dynamic adjacency matrices to account for the differences between long-term and short-term traffic data. This approach extracts relationships between industrial traffic features across different time scales, allowing the model to better capture the characteristics of DDoS attacks in industrial scenarios.

- The proposed model was tested on the CICDDoS2019 and Edge-IIoTset datasets and benchmarked against multiple federated and deep learning-based DDoS detection approaches. The results confirmed its effectiveness, demonstrating clear performance gains over existing methods.
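The second contribution depends on building two feature graphs from traffic observed over different time spans. The sketch below shows one way this could look. It assumes (this is not taken from the paper) that each traffic feature is a graph node and that edge weights are absolute Pearson correlations. Under that assumption, the static matrix is computed over the full long-term history and the dynamic matrix over only the most recent `window` samples. All function names and the `window` and `threshold` parameters are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if std_x == 0.0 or std_y == 0.0:
        return 0.0
    return cov / (std_x * std_y)


def correlation_adjacency(samples, threshold=0.0):
    """Build an F x F feature graph from rows of F feature values.

    Assumption: nodes are features and edge weights are |Pearson r|;
    weights below `threshold` are dropped and self-loops are set to 1.
    """
    columns = list(zip(*samples))
    f = len(columns)
    adj = [[0.0] * f for _ in range(f)]
    for i in range(f):
        adj[i][i] = 1.0
        for j in range(i + 1, f):
            r = abs(pearson(columns[i], columns[j]))
            adj[i][j] = adj[j][i] = r if r >= threshold else 0.0
    return adj


def static_and_dynamic_adjacency(history, window):
    """Hypothetical helper: static graph from the whole long-term history,
    dynamic graph from only the most recent `window` samples."""
    static_adj = correlation_adjacency(history)
    dynamic_adj = correlation_adjacency(history[-window:])
    return static_adj, dynamic_adj
```

Thresholding is disabled by default. It is one common way to sparsify correlation graphs, and the paper's actual construction may differ.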

2. Related Work

3. Methodology

3.1. Model Architecture

3.2. Federated Learning Algorithm Based on Dynamic Weights

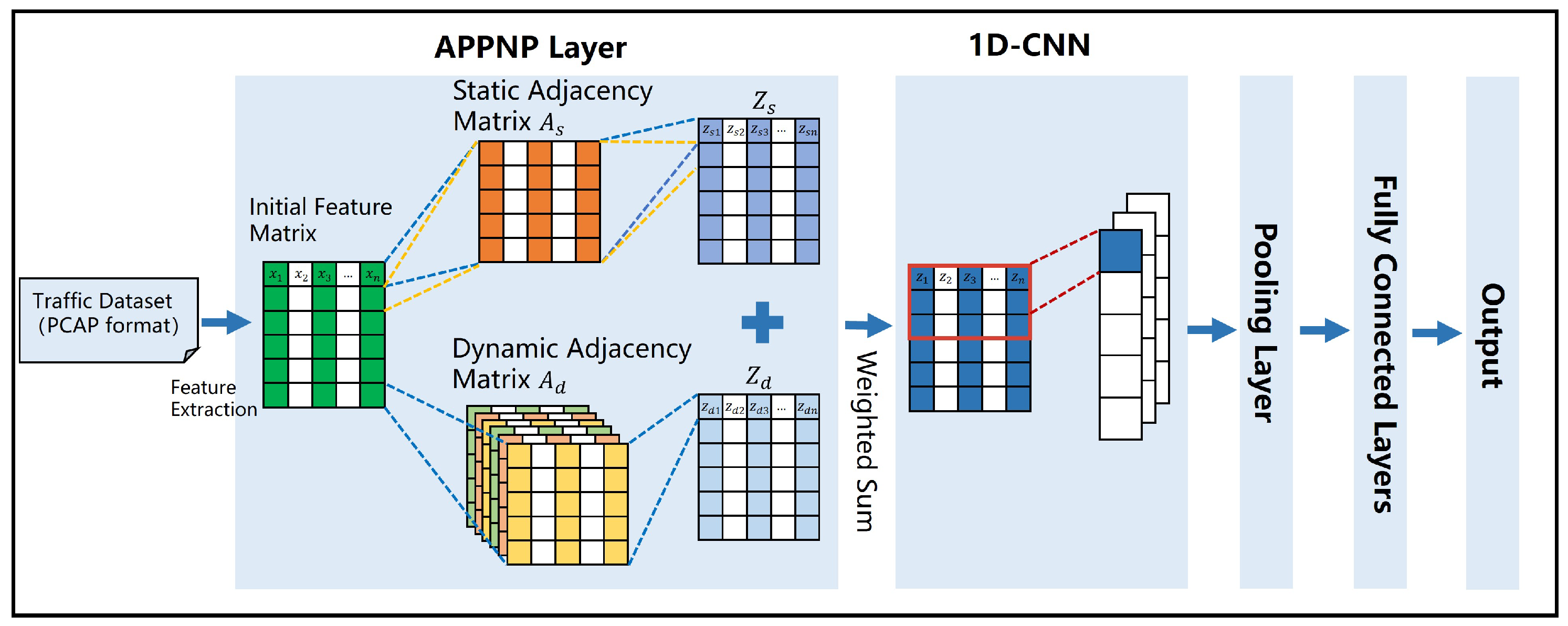

3.3. DDoS Attack Detection Model

3.3.1. APPNP Graph Convolution Layer

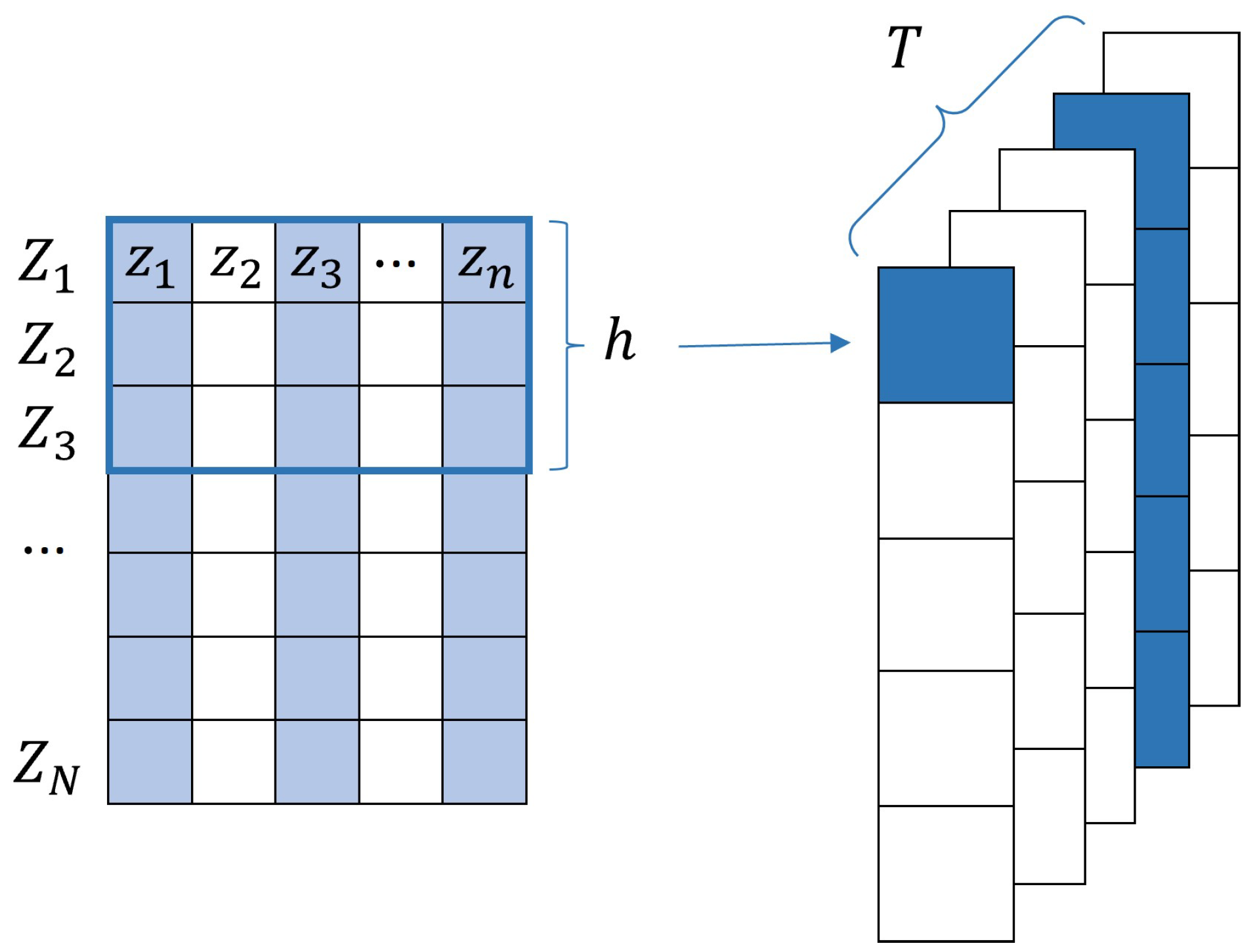

3.3.2. CNN Layer

3.3.3. Pooling Layer

4. Experiment Settings and Results Analysis

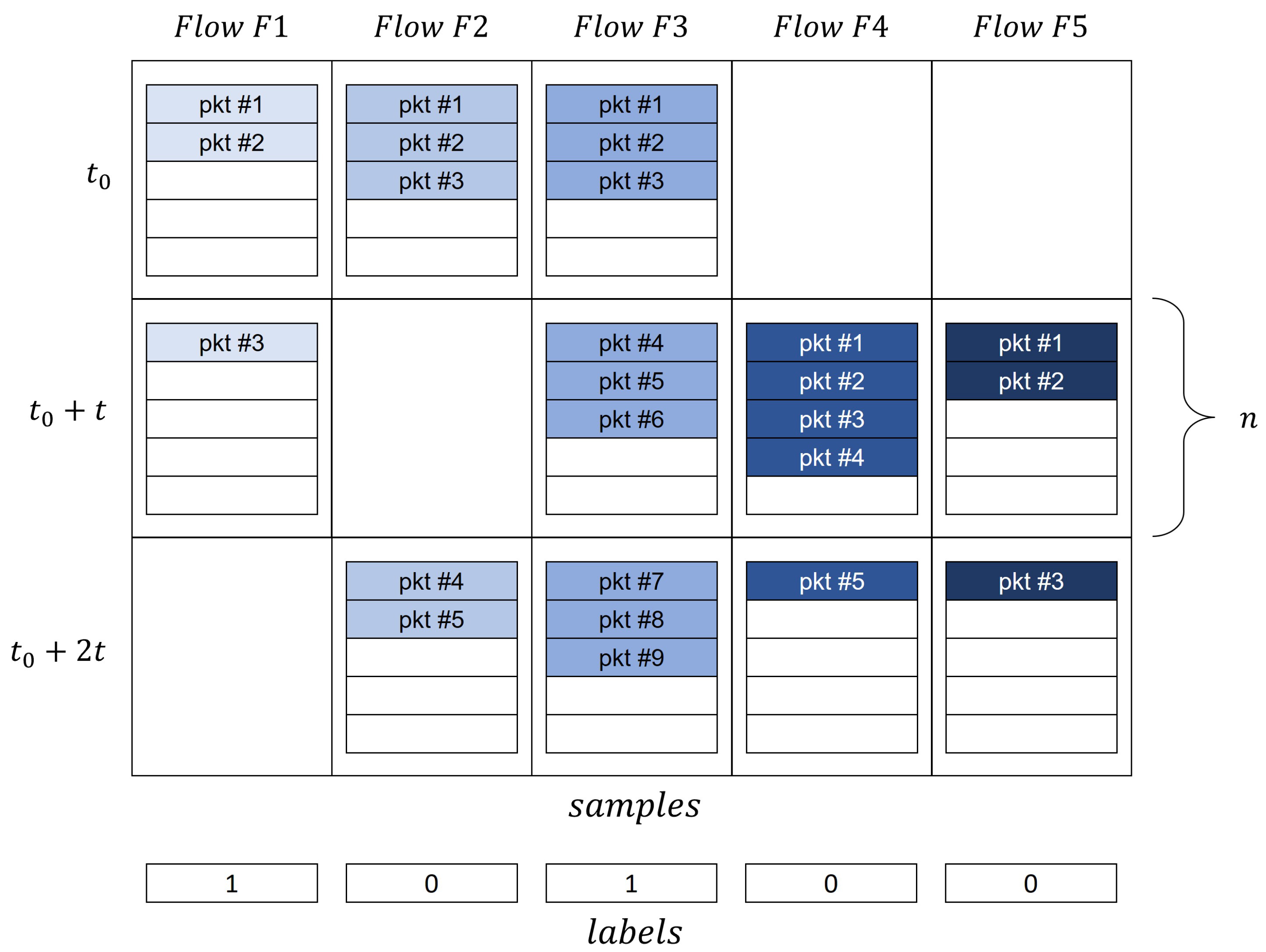

4.1. Datasets and Preparation

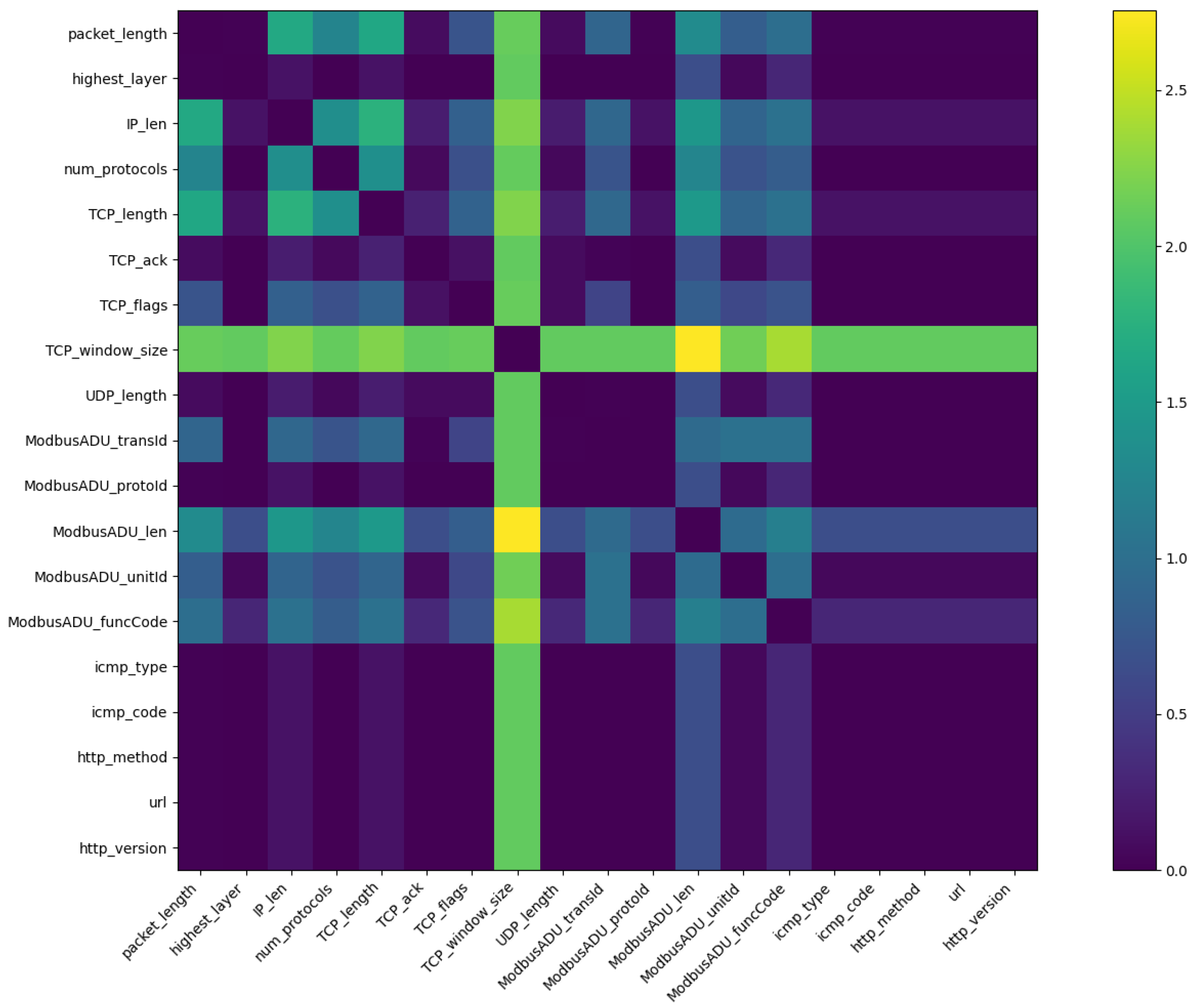

4.1.1. Dataset Preprocessing

4.1.2. Dataset Partitioning

4.2. Evaluation Methodology

4.2.1. Evaluation Metrics

- Accuracy: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$;

- Precision: $\mathrm{Precision} = \frac{TP}{TP + FP}$;

- Recall: $\mathrm{Recall} = \frac{TP}{TP + FN}$;

- F1-score: $F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$;

- AUC: the area under the ROC curve, reflecting the trade-off between the true positive rate and the false positive rate.

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. These metrics were selected to provide a comprehensive assessment of model performance. Beyond overall accuracy, they capture the balance between detecting attacks (recall) and avoiding false alarms (precision), which is critical for the continuous operation of ICSs.
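The threshold-based metrics above follow directly from confusion-matrix counts. The minimal Python sketch below computes them and estimates AUC with the rank (Mann–Whitney) formulation: the probability that a randomly chosen attack sample receives a higher score than a randomly chosen normal sample, with ties counted as one half. The function names are illustrative, and the pairwise loop is quadratic, so it is intended for small examples only.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(labels, scores):
    """AUC via the rank formulation: P(score of a random positive > score of a
    random negative), counting ties as 1/2. Labels are 1 (attack) or 0 (normal)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

For example, with TP = 90, TN = 85, FP = 15, and FN = 10, accuracy is 175/200 = 0.875, recall is 0.9, and F1 equals 2TP/(2TP + FP + FN) = 180/205 ≈ 0.878.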

4.2.2. Comparison Algorithms

- FLAD: This algorithm, detailed in [41], is an adaptive federated learning algorithm for DDoS attack detection. The study in [41] evaluated two detection models, FLAD + CNN and FLAD + MLP, and both are included in our comparison.

- FedDDoS: This model, presented in [32], uses Pearson correlation coefficient-based feature selection to improve detection performance while reducing model complexity. Its detection model is a CNN + MLP, trained with the FedAvg federated learning algorithm.

- FedAvg: This classic federated learning algorithm, introduced in [42], serves as a baseline and an important starting point for subsequent research. Under this algorithm, we trained both a CNN model and our proposed DDoS attack detection model to compare overall detection performance. These models are denoted FedAvg + CNN and FedAvg + Ours, respectively.
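For reference, server-side aggregation in FedAvg [42] averages the parameters returned by a random subset of clients and weights each client by its number of training samples. The sketch below shows one round, using the client selection ratio C from the parameter settings. Parameters are flattened into plain Python lists. `local_update` is a hypothetical stand-in for E epochs of local training, and rounding C·K down to an integer is an assumption. The dynamic client weights of the proposed framework are not reproduced here; in this sketch, they would replace the sample-count weights passed to `weighted_average`.

```python
import random


def select_clients(num_clients, fraction, rng):
    """Randomly pick max(1, floor(fraction * num_clients)) distinct client ids."""
    m = max(1, int(fraction * num_clients))
    return sorted(rng.sample(range(num_clients), m))


def weighted_average(client_params, weights):
    """Coordinate-wise weighted mean of flattened parameter vectors."""
    total = float(sum(weights))
    dim = len(client_params[0])
    return [
        sum(w * params[i] for params, w in zip(client_params, weights)) / total
        for i in range(dim)
    ]


def fedavg_round(global_params, client_sizes, local_update, fraction, rng):
    """One FedAvg round: sample clients, train locally, average by sample count.

    `local_update(client_id, params)` is a hypothetical callback standing in
    for E local epochs; it must return a new flattened parameter list.
    """
    selected = select_clients(len(client_sizes), fraction, rng)
    updates = [local_update(k, list(global_params)) for k in selected]
    # FedAvg weights each selected client by its number of training samples.
    weights = [client_sizes[k] for k in selected]
    return weighted_average(updates, weights), selected
```

Under this flooring convention, 13 clients with C = 0.5 would yield six sampled clients per round.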

4.3. Comparative Experiments

4.3.1. Parameter Settings

- APPNP layer:
  - Input dimension: 20;
  - Output dimension: 20;
  - Number of layers: 3;
  - : 0.3;
  - Weight for the dynamic adjacency matrix: 0.3.

- CNN layer:
  - Input channels: 20;
  - Output channels: 64;
  - Kernel size: 3.

- Loss function:
  - Weight of the mutual information maximization loss (unsupervised loss): 0.1.

- Final classification layer:
  - Two fully connected layers per node with a hidden size of 64;
  - Output dimension: 1;
  - Dropout rate: 0.5.

- Federated learning: in each round, half of the clients were randomly selected for aggregation (client selection ratio C = 0.5):
  - Local training epochs E = 5;
  - Communication rounds r = 20;
  - Batch size for the attack detection model: 100;
  - Learning rate: 0.005;
  - Number of epochs: 5;
  - Optimizer: Adam.

The model that performed best on the validation set was retained as the final trained model.
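The APPNP layer configured above propagates node representations using personalized PageRank [35]. Starting from the input representation H, each of K steps computes Z ← (1 − α)ÂZ + αH, where Â is the symmetrically normalized adjacency matrix with self-loops and α is the teleport probability. The pure-Python sketch below implements this propagation rule. Two choices are assumptions made only for illustration: treating the listed "Number of layers" as K, and fusing the static-graph and dynamic-graph outputs linearly with the listed dynamic-matrix weight (`mix_outputs` is hypothetical). The sketch takes α as an explicit argument rather than inferring it.

```python
import math


def normalize_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a non-negative weighted adjacency A."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]  # >= 1 because of the added self-loops
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Plain-Python product of an n x m matrix and an m x p matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def appnp_propagate(adj, h, k_steps, alpha):
    """APPNP propagation: Z_0 = H, Z_{k+1} = (1 - alpha) * A_hat Z_k + alpha * H."""
    a_hat = normalize_adjacency(adj)
    z = [row[:] for row in h]
    for _ in range(k_steps):
        az = matmul(a_hat, z)
        z = [[(1.0 - alpha) * az[i][j] + alpha * h[i][j] for j in range(len(h[0]))]
             for i in range(len(h))]
    return z


def mix_outputs(z_static, z_dynamic, dynamic_weight):
    """Hypothetical fusion: convex combination of the two propagated outputs."""
    return [[(1.0 - dynamic_weight) * s + dynamic_weight * d for s, d in zip(rs, rd)]
            for rs, rd in zip(z_static, z_dynamic)]
```

Because α·H is re-injected at every step, each node keeps part of its own representation no matter how many steps are run. This property motivates personalized PageRank propagation in [35].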

4.3.2. Results

(1) Results on the CICDDoS2019 Dataset

(2) Results on the Edge-IIoTset Dataset

4.3.3. Analysis

4.4. Ablation Study

- FedDynST model: the full proposed model. It uses both static and dynamic adjacency matrices to extract relationships between traffic features across different time scales. It also employs an unsupervised mutual information maximization loss to maximize the correlation between the outputs of the graph convolutions that use the two types of adjacency matrices. Finally, it improves on the standard FedAvg algorithm with a dynamic weight-based federated parameter update method.

- FedDynST-APP model: this variant removes the graph convolution layer and retains only the 1D-CNN layer. It measures how much the static and dynamic adjacency matrices contribute by extracting relationships between traffic features.

- FedDynST-Loss model: this variant removes the unsupervised loss function. It measures the effect of enforcing correlation between the outputs of the graph convolutions that use the different adjacency matrices.
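The mutual information term used by FedDynST (and removed in FedDynST-Loss) can be estimated with MINE [36]. MINE maximizes the Donsker–Varadhan lower bound I(X; Z) ≥ E_joint[T] − log E_marginal[e^T] for a learned critic T. In the sketch below, `joint_scores` are critic outputs on matched pairs (the static-graph and dynamic-graph outputs of the same sample), and `marginal_scores` are critic outputs on shuffled pairs. The sketch does not show how the critic is built. The total loss is assumed to be binary cross-entropy minus the MI estimate, scaled by the unsupervised-loss weight from the parameter settings (0.1); this is an assumption about the exact form of the paper's objective.

```python
import math


def dv_mutual_information(joint_scores, marginal_scores):
    """Donsker-Varadhan lower bound used by MINE:
    mean(T on joint pairs) - log(mean(exp(T on shuffled pairs)))."""
    mean_joint = sum(joint_scores) / len(joint_scores)
    shift = max(marginal_scores)  # log-mean-exp shift for numerical stability
    mean_exp = sum(math.exp(s - shift) for s in marginal_scores) / len(marginal_scores)
    return mean_joint - (shift + math.log(mean_exp))


def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean BCE for 0/1 labels and predicted attack probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def total_loss(labels, probs, joint_scores, marginal_scores, mi_weight=0.1):
    """Assumed objective: supervised BCE minus the weighted MI estimate,
    so minimizing the loss maximizes the mutual information."""
    mi = dv_mutual_information(joint_scores, marginal_scores)
    return binary_cross_entropy(labels, probs) - mi_weight * mi
```

Setting `mi_weight=0` reduces this objective to plain cross-entropy, which corresponds to the FedDynST-Loss variant.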

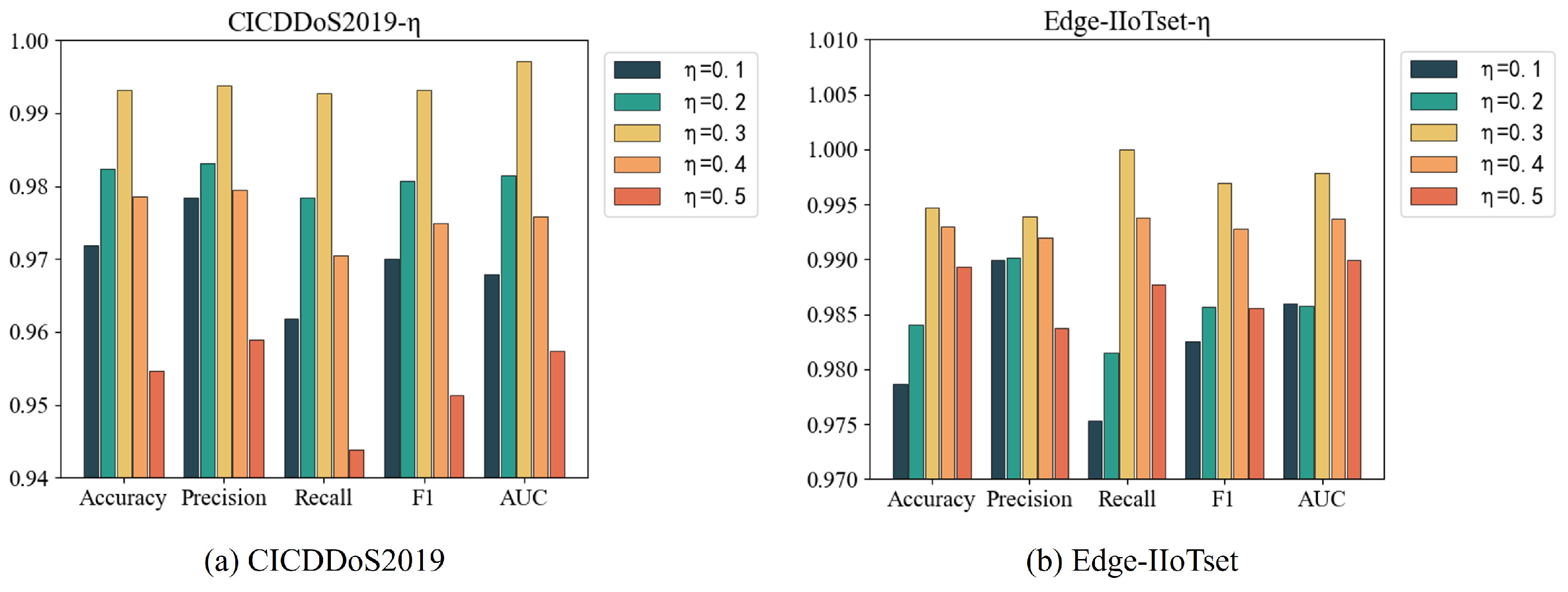

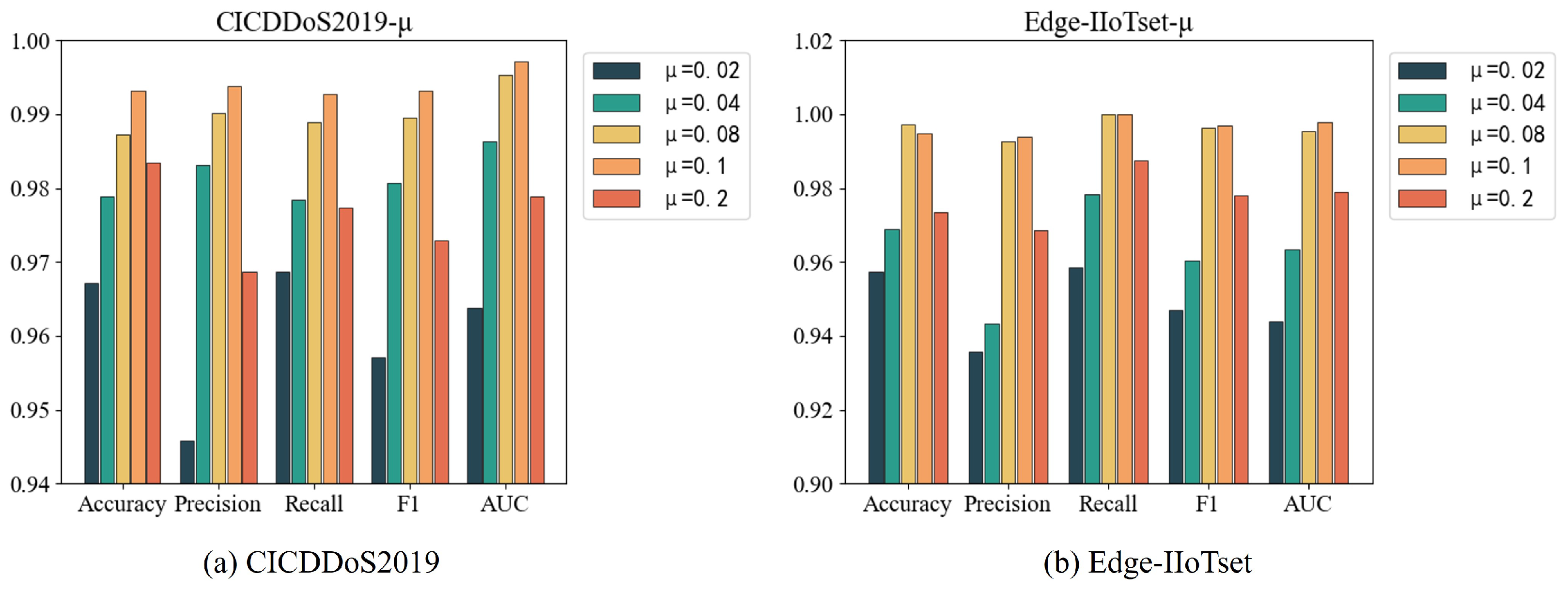

4.5. Parameter Study

4.5.1. The Weight Proportion of the Dynamic Adjacency Matrix

4.5.2. The Weight of Unsupervised Loss

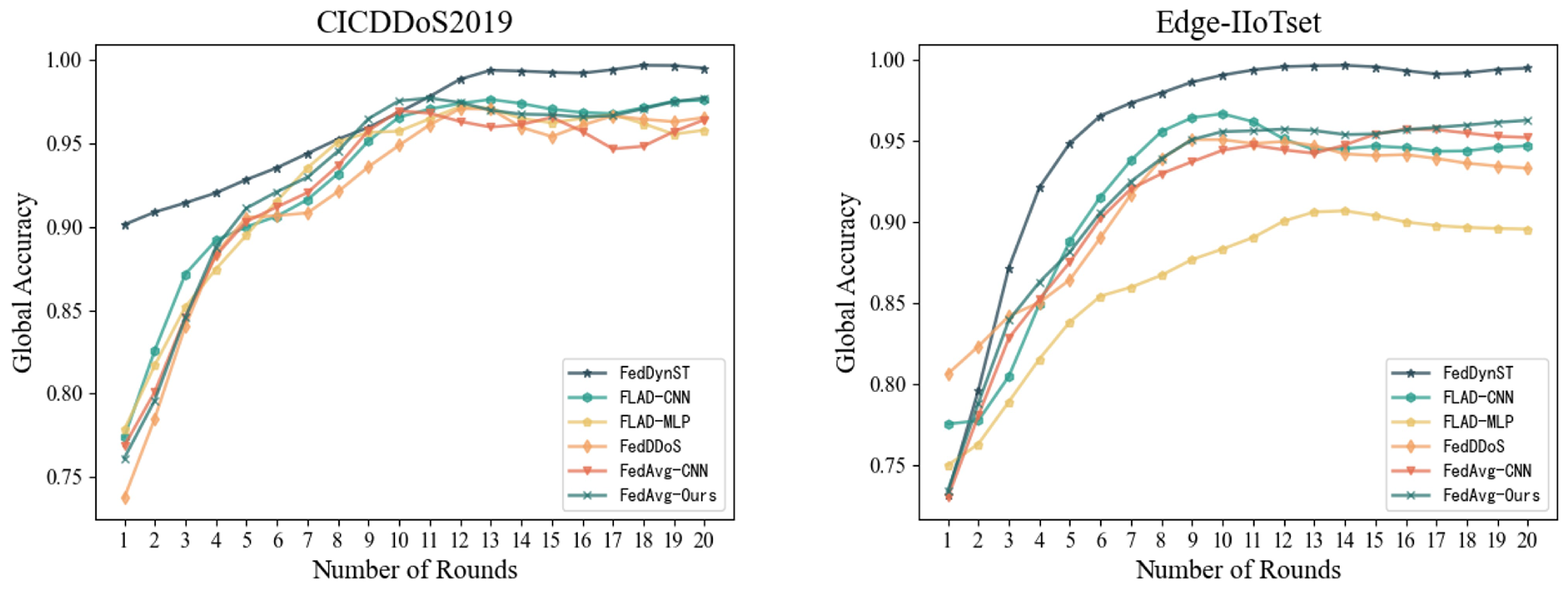

4.6. Convergence Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Fan, X.; Fan, K.; Wang, Y.; Zhou, R. Overview of cyber-security of industrial control system. In Proceedings of the 2015 International Conference on Cyber Security of Smart Cities, Industrial Control System and Communications (SSIC), Shanghai, China, 5–7 August 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–7.
2. Zhao, L.; Li, B.; Yuan, H. Cloud Edge Integrated Security Architecture of New Cloud Manufacturing System. J. Syst. Eng. Electron. 2024, 35, 1177–1189.
3. Cao, K.; Hu, S.; Shi, Y.; Colombo, A.W.; Karnouskos, S.; Li, X. A survey on edge and edge-cloud computing assisted cyber-physical systems. IEEE Trans. Ind. Inform. 2021, 17, 7806–7819.
4. McLaughlin, S.; Konstantinou, C.; Wang, X.; Davi, L.; Sadeghi, A.R.; Maniatakos, M.; Karri, R. The cybersecurity landscape in industrial control systems. Proc. IEEE 2016, 104, 1039–1057.
5. Aslam, M.M.; Tufail, A.; Apong, R.A.A.H.M.; De Silva, L.C.; Raza, M.T. Scrutinizing security in industrial control systems: An architectural vulnerabilities and communication network perspective. IEEE Access 2024, 12, 67537–67573.
6. Kaur, A.; Krishna, C.R.; Patil, N.V. A comprehensive review on Software-Defined Networking (SDN) and DDoS attacks: Ecosystem, taxonomy, traffic engineering, challenges and research directions. Comput. Sci. Rev. 2025, 55, 100692.
7. Aljohani, T.; Almutairi, A. Modeling time-varying wide-scale distributed denial of service attacks on electric vehicle charging stations. Ain Shams Eng. J. 2024, 15, 102860.
8. Somani, G.; Gaur, M.S.; Sanghi, D.; Conti, M.; Buyya, R. DDoS attacks in cloud computing: Issues, taxonomy, and future directions. Comput. Commun. 2017, 107, 30–48.
9. Kumar, S.; Dwivedi, M.; Kumar, M.; Gill, S.S. A comprehensive review of vulnerabilities and AI-enabled defense against DDoS attacks for securing cloud services. Comput. Sci. Rev. 2024, 53, 100661.
10. Senarak, C. Port cyberattacks from 2011 to 2023: A literature review and discussion of selected cases. Marit. Econ. Logist. 2024, 26, 105–130.
11. Slowik, J. Evolution of ICS Attacks and the Prospects for Future Disruptive Events; Threat Intelligence Centre Dragos Inc.: Hanover, MD, USA, 2019.
12. Stouffer, K.; Falco, J.; Scarfone, K. Guide to industrial control systems (ICS) security. NIST Spec. Publ. 2011, 800, 16.
13. Lin, C.T.; Wu, S.L.; Lee, M.L. Cyber attack and defense on industry control systems. In Proceedings of the 2017 IEEE Conference on Dependable and Secure Computing, Taipei, Taiwan, 7–10 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 524–526.
14. Praseed, A.; Thilagam, P.S. HTTP request pattern based signatures for early application layer DDoS detection: A firewall agnostic approach. J. Inf. Secur. Appl. 2022, 65, 103090.
15. Alhaidari, F.A.; Al-Dahasi, E.M. New approach to determine DDoS attack patterns on SCADA system using machine learning. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.
16. Koay, A.M.; Ko, R.K.L.; Hettema, H.; Radke, K. Machine learning in industrial control system (ICS) security: Current landscape, opportunities and challenges. J. Intell. Inf. Syst. 2023, 60, 377–405.
17. Li, B.; Wu, Y.; Song, J.; Lu, R.; Li, T.; Zhao, L. DeepFed: Federated deep learning for intrusion detection in industrial cyber–physical systems. IEEE Trans. Ind. Inform. 2020, 17, 5615–5624.
18. Mittal, M.; Kumar, K.; Behal, S. Deep learning approaches for detecting DDoS attacks: A systematic review. Soft Comput. 2023, 27, 13039–13075.
19. Cai, J.; Wei, Z.; Luo, J. ICS anomaly detection based on sensor patterns and actuator rules in spatiotemporal dependency. IEEE Trans. Ind. Inform. 2024, 20, 10647–10656.
20. Wang, Y.; Fang, L.; Hu, B.; Ge, G.; Zhou, X.; Zhang, W. Overview of Research on Cloud-Edge-End Collaboration Technology of Industrial Control System. In Proceedings of the 2023 International Conference on Electronics, Computers and Communication Technology, Guilin, China, 17–19 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 169–174.
21. Huong, T.T.; Bac, T.P.; Long, D.M.; Luong, T.D.; Dan, N.M.; Quang, L.A.; Cong, L.T.; Thang, B.D.; Tran, K.P. Detecting cyberattacks using anomaly detection in industrial control systems: A federated learning approach. Comput. Ind. 2021, 132, 103509.
22. Haider, S.; Akhunzada, A.; Mustafa, I.; Patel, T.B.; Fernandez, A.; Choo, K.K.R.; Iqbal, J. A deep CNN ensemble framework for efficient DDoS attack detection in software defined networks. IEEE Access 2020, 8, 53972–53983.
23. Kim, J.; Kim, J.; Kim, H.; Shim, M.; Choi, E. CNN-based network intrusion detection against denial-of-service attacks. Electronics 2020, 9, 916.
24. Ortega-Fernandez, I.; Sestelo, M.; Burguillo, J.C.; Piñón-Blanco, C. Network intrusion detection system for DDoS attacks in ICS using deep autoencoders. Wirel. Netw. 2024, 30, 5059–5075.
25. Dev, A.; Lal, A.; Yadav, N.; Kumar, M. Enhancing Intrusion Detection Systems through Federated Learning and Gated Recurrent Units. In Proceedings of the 2024 IEEE Conference on Engineering Informatics (ICEI), Melbourne, Australia, 20–28 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–8.
26. Shen, C.; Xiao, G.; Yao, S.; Zhou, B.; Pan, Z.; Zhang, H. An LSTM based malicious traffic attack detection in industrial internet. In Proceedings of the 2021 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), Chengdu, China, 18–20 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 60–65.
27. Yazdinejad, A.; Kazemi, M.; Parizi, R.M.; Dehghantanha, A.; Karimipour, H. An ensemble deep learning model for cyber threat hunting in industrial internet of things. Digit. Commun. Netw. 2023, 9, 101–110.
28. Zainudin, A.; Ahakonye, L.A.C.; Akter, R.; Kim, D.S.; Lee, J.M. An efficient hybrid-DNN for DDoS detection and classification in software-defined IIoT networks. IEEE Internet Things J. 2022, 10, 8491–8504.
29. Diaba, S.Y.; Elmusrati, M. Proposed algorithm for smart grid DDoS detection based on deep learning. Neural Netw. 2023, 159, 175–184.
30. Söğüt, E.; Erdem, O.A. A multi-model proposal for classification and detection of DDoS attacks on SCADA systems. Appl. Sci. 2023, 13, 5993.
31. Li, J.; Lyu, L.; Liu, X.; Zhang, X.; Lyu, X. FLEAM: A federated learning empowered architecture to mitigate DDoS in industrial IoT. IEEE Trans. Ind. Inform. 2021, 18, 4059–4068.
32. Zainudin, A.; Akter, R.; Kim, D.S.; Lee, J.M. FedDDoS: An efficient federated learning-based DDoS attacks classification in SDN-enabled IIoT networks. In Proceedings of the 2022 13th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 19–21 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1279–1283.
33. Shao, J.M.; Zeng, G.Q.; Lu, K.D.; Geng, G.G.; Weng, J. Automated federated learning for intrusion detection of industrial control systems based on evolutionary neural architecture search. Comput. Secur. 2024, 143, 103910.
34. Bao, G.; Guo, P. Federated learning in cloud-edge collaborative architecture: Key technologies, applications and challenges. J. Cloud Comput. 2022, 11, 94.
35. Gasteiger, J.; Bojchevski, A.; Günnemann, S. Predict then propagate: Graph neural networks meet personalized pagerank. arXiv 2018, arXiv:1810.05997.
36. Belghazi, M.I.; Baratin, A.; Rajeswar, S.; Ozair, S.; Bengio, Y.; Courville, A.; Hjelm, R.D. MINE: Mutual information neural estimation. arXiv 2018, arXiv:1801.04062.
37. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398.
38. Sharafaldin, I.; Lashkari, A.H.; Hakak, S.; Ghorbani, A.A. Developing realistic distributed denial of service (DDoS) attack dataset and taxonomy. In Proceedings of the 2019 International Carnahan Conference on Security Technology (ICCST), Chennai, India, 1–3 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8.
39. Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A new comprehensive realistic cyber security dataset of IoT and IIoT applications for centralized and federated learning. IEEE Access 2022, 10, 40281–40306.
40. Doriguzzi-Corin, R.; Millar, S.; Scott-Hayward, S.; Martinez-del Rincon, J.; Siracusa, D. LUCID: A practical, lightweight deep learning solution for DDoS attack detection. IEEE Trans. Netw. Serv. Manag. 2020, 17, 876–889.
41. Doriguzzi-Corin, R.; Siracusa, D. FLAD: Adaptive federated learning for DDoS attack detection. Comput. Secur. 2024, 137, 103597.
42. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; PMLR: Birmingham, UK, 2017; pp. 1273–1282.

| Clients | Types of Attack Traffic | Number of Attack Traffic Samples | Number of Normal Traffic Samples |
|---|---|---|---|
| 1 | DNS, MSSQL | 3824 | 3824 |
| 2 | LDAP, NETBIOS | 5522 | 5522 |
| 3 | MSSQL | 143,510 | 143,510 |
| 4 | NETBIOS | 143,325 | 143,325 |
| 5 | NTP, SSDP | 872 | 872 |
| 6 | All types | 4730 | 4730 |
| 7 | SNMP | 10,998 | 10,998 |
| 8 | SSDP | 20,481 | 20,481 |
| 9 | SYN | 18,032 | 18,032 |
| 10 | TFTP | 33,266 | 33,266 |
| 11 | UDP | 24,044 | 24,044 |
| 12 | UDPFLAG, MSSQL, TFTP, UDP | 5065 | 5065 |
| 13 | WebDDoS, MSSQL, TFTP, UDP | 8705 | 8705 |

| Clients | Types of Attack Traffic | Number of Attack Traffic Samples | Number of Normal Traffic Samples |
|---|---|---|---|
| 1 | HTTP, ICMP, TCP, UDP | 2740 | 2740 |
| 2 | ICMP, TCP | 5697 | 5697 |
| 3 | TCP | 2499 | 2499 |
| 4 | UDP | 4896 | 4896 |

| Clients | FedDynST (Ours) | FLAD + CNN | FLAD + MLP | FedDDoS | FedAvg + CNN | FedAvg + Ours |
|---|---|---|---|---|---|---|
| 1 | 0.9989 | 0.9870 | 0.9286 | 0.9385 | 0.9377 | 0.9658 |
| 2 | 0.9937 | 1.0 | 0.9288 | 0.9584 | 0.9354 | 0.9841 |
| 3 | 0.9972 | 0.9757 | 0.9667 | 0.9584 | 0.9535 | 0.9756 |
| 4 | 0.9959 | 0.9758 | 0.9515 | 0.9635 | 0.9617 | 0.9836 |
| 5 | 0.9978 | 0.9611 | 0.9772 | 0.9658 | 0.9541 | 0.9684 |
| 6 | 0.9948 | 0.9742 | 0.9375 | 0.9737 | 0.9647 | 0.9785 |
| 7 | 1.0 | 0.9748 | 0.9683 | 0.9417 | 0.9541 | 0.9914 |
| 8 | 1.0 | 0.9720 | 0.9402 | 0.9614 | 0.9587 | 0.9781 |
| 9 | 0.9986 | 0.9455 | 0.8961 | 0.9356 | 0.9344 | 0.9654 |
| 10 | 0.9984 | 0.9677 | 0.9662 | 0.9258 | 0.9543 | 0.9874 |
| 11 | 1.0 | 0.9443 | 0.9471 | 0.9325 | 0.9148 | 0.9647 |
| 12 | 0.9803 | 0.9578 | 0.9412 | 0.9412 | 0.9458 | 0.9682 |
| 13 | 0.9629 | 0.9486 | 0.9343 | 0.9428 | 0.9215 | 0.9874 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedAvg + Ours | 0.9769 | 0.9547 | 0.9617 | 0.9582 | 0.9736 |
| FedAvg + CNN | 0.9454 | 0.9389 | 0.9568 | 0.9478 | 0.9554 |
| FedDDoS | 0.9492 | 0.9498 | 0.9602 | 0.9550 | 0.9675 |
| FLAD + MLP | 0.9449 | 0.9538 | 0.9606 | 0.9572 | 0.9435 |
| FLAD + CNN | 0.9680 | 0.9758 | 0.9738 | 0.9748 | 0.9678 |
| FedDynST | 0.9937 | 0.9835 | 0.9897 | 0.9866 | 0.9957 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedAvg + Ours | 0.9874 | 0.9698 | 0.9654 | 0.9676 | 0.9745 |
| FedAvg + CNN | 0.9687 | 0.9725 | 0.9635 | 0.9680 | 0.9674 |
| FedDDoS | 0.9698 | 0.9654 | 0.9683 | 0.9668 | 0.9699 |
| FLAD + MLP | 0.9667 | 0.9883 | 0.9649 | 0.9765 | 0.9687 |
| FLAD + CNN | 0.9751 | 0.9835 | 0.9759 | 0.9797 | 0.9785 |
| FedDynST | 0.9932 | 0.9938 | 0.9927 | 0.9932 | 0.9972 |

| Clients | FedDynST (Ours) | FLAD + CNN | FLAD + MLP | FedDDoS | FedAvg + CNN | FedAvg + Ours |
|---|---|---|---|---|---|---|
| 1 | 0.9989 | 0.9725 | 0.9821 | 0.9628 | 0.9838 | 0.9738 |
| 2 | 0.9578 | 0.9328 | 0.8000 | 0.9125 | 0.9725 | 0.9417 |
| 3 | 0.9500 | 0.9148 | 0.7700 | 0.9243 | 0.9135 | 0.9384 |
| 4 | 0.9798 | 0.9594 | 0.8202 | 0.9248 | 0.9438 | 0.9489 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedAvg + Ours | 0.9507 | 0.9785 | 0.9343 | 0.9559 | 0.9695 |
| FedAvg + CNN | 0.9534 | 0.9587 | 0.9334 | 0.9459 | 0.9547 |
| FedDDoS | 0.9311 | 0.9415 | 0.9210 | 0.9311 | 0.9458 |
| FLAD + MLP | 0.8431 | 0.8604 | 0.7875 | 0.7787 | 0.8916 |
| FLAD + CNN | 0.9449 | 0.9243 | 0.9374 | 0.9308 | 0.9818 |
| FedDynST | 0.9694 | 0.9903 | 0.9489 | 0.9681 | 1.0 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedAvg + Ours | 0.9678 | 0.9689 | 0.9752 | 0.9720 | 0.9614 |
| FedAvg + CNN | 0.9518 | 0.9484 | 0.9918 | 0.9731 | 0.9718 |
| FedDDoS | 0.9318 | 0.9358 | 0.9812 | 0.9580 | 0.9574 |
| FLAD + MLP | 0.8953 | 0.8537 | 0.8642 | 0.8589 | 0.6104 |
| FLAD + CNN | 0.9468 | 0.9418 | 1.0 | 0.9701 | 0.9608 |
| FedDynST | 0.9947 | 0.9939 | 1.0 | 0.9969 | 0.9979 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedDynST-Loss | 0.9768 | 0.9638 | 0.9725 | 0.9681 | 0.9528 |
| FedDynST-APP | 0.9602 | 0.9534 | 0.9720 | 0.9626 | 0.9625 |
| FedDynST | 0.9937 | 0.9835 | 0.9897 | 0.9866 | 0.9957 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedDynST-Loss | 0.9685 | 0.9583 | 0.9789 | 0.9685 | 0.9832 |
| FedDynST-APP | 0.9874 | 0.9589 | 0.9687 | 0.9648 | 0.9568 |
| FedDynST | 0.9932 | 0.9938 | 0.9927 | 0.9932 | 0.9972 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedDynST-Loss | 0.9588 | 0.9687 | 0.9326 | 0.9503 | 0.9778 |
| FedDynST-APP | 0.9429 | 0.9714 | 0.9289 | 0.9497 | 0.9874 |
| FedDynST | 0.9694 | 0.9903 | 0.9489 | 0.9681 | 1.0 |

| Model | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| FedDynST-Loss | 0.9785 | 0.9712 | 0.9841 | 0.9776 | 0.9678 |
| FedDynST-APP | 0.9834 | 0.9759 | 0.9878 | 0.9818 | 0.9752 |
| FedDynST | 0.9947 | 0.9939 | 1.0 | 0.9969 | 0.9979 |

| Model | Accuracy | Precision | Recall | F1 | AUC | Time (s) |
|---|---|---|---|---|---|---|
| FedAvg + Ours | 0.9378 | 0.9389 | 0.9338 | 0.9388 | 0.9409 | 449.56 |
| FedAvg + CNN | 0.9245 | 0.9374 | 0.9412 | 0.9393 | 0.9285 | 420.27 |
| FedDDoS | 0.9201 | 0.9118 | 0.9287 | 0.9202 | 0.8978 | 445.82 |
| FLAD + MLP | 0.9288 | 0.9217 | 0.9324 | 0.9270 | 0.9125 | 388.87 |
| FLAD + CNN | 0.9330 | 0.9414 | 0.9442 | 0.9428 | 0.9325 | 415.34 |
| FedDynST | 0.9628 | 0.9714 | 0.9541 | 0.9629 | 0.9518 | 405.98 |

| Model | Accuracy | Precision | Recall | F1 | AUC | Time (s) |
|---|---|---|---|---|---|---|
| FedAvg + Ours | 0.9365 | 0.9579 | 0.9011 | 0.9286 | 0.9487 | 25.21 |
| FedAvg + CNN | 0.9324 | 0.9613 | 0.8974 | 0.9284 | 0.9512 | 18.93 |
| FedDDoS | 0.9284 | 0.9584 | 0.8741 | 0.9178 | 0.9416 | 19.86 |
| FLAD + MLP | 0.9587 | 0.9614 | 0.9014 | 0.9304 | 0.9657 | 41.89 |
| FLAD + CNN | 0.9478 | 0.9636 | 0.8947 | 0.9279 | 0.9587 | 53.38 |
| FedDynST | 0.9728 | 0.9714 | 0.9128 | 0.9412 | 0.9762 | 21.83 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, Z.; Liu, B.; Gao, D.; Zhou, D.; Han, X.; Cao, J. A Dynamic Spatiotemporal Deep Learning Solution for Cloud–Edge Collaborative Industrial Control System Distributed Denial of Service Attack Detection. Electronics 2025, 14, 1843. https://doi.org/10.3390/electronics14091843