1. Introduction

The rapid technological advancements over the past decade, particularly in internet-based financial services such as banking, insurance, and bill payments, have led to a significant increase in the number of users requiring fast and secure transactions [1,2]. Ensuring the security of user data during these transactions is paramount. Fraudulent activities and data breaches, exemplified by the notable password leaks in 2013 and 2017 [3], underscore the limitations of traditional knowledge-based authentication methods (e.g., text passwords, PINs, and graphical passwords). Consequently, there is a growing need for more robust, automated systems that are capable of accurately verifying user identities.

Biometric authentication, specifically fingerprint recognition, has emerged as a promising alternative due to the inherent uniqueness of fingerprints. Deep learning has been successful in many applications [4,5,6,7,8,9,10] and has significantly advanced biometric recognition, yielding impressive results in distinguishing genuine users from impostors [11,12,13,14,15]. However, despite these advances, most research to date has focused on full fingerprint recognition. The increasing use of compact devices (such as smartphones, tablets, and finger scanners) has necessitated the development of methods that can accurately match partial fingerprint images, that is, images that capture only a small portion of the fingerprint due to the limited sensing area of the scanner [16].

A major challenge in partial fingerprint recognition is the reduction in distinguishable features; partial images contain far fewer minutiae points than full fingerprints. This limitation complicates the matching process and compromises accuracy. Traditional approaches often rely on extracting local features, but recent work, drawing inspiration from theories of holistic perception, suggests the importance of considering global structural information [17]. In line with this, topological data analysis (TDA) offers a framework for extracting such global topological features from data sources [17]. For instance, a recent study introduced a topological set network (TSNet) to extract and vectorize topological information from images and social networks, demonstrating improved performance by leveraging these holistic features [17].

To address the challenge of limited features in partial fingerprint recognition, our research proposes a novel approach that combines deep learning techniques with handcrafted feature descriptors to enhance the matching evaluation. This paper describes in detail our partial fingerprint recognition system, first presented in [18]. By integrating a Siamese neural network based on a CNN architecture [11] with feature descriptors such as SIFT [19,20], our method aims to improve the evaluation of matching scores, leveraging both global and local features to achieve lower Equal Error Rates (EERs) and False Rejection Rates (FRRs) at high security thresholds.

In addition to overcoming the challenges associated with partial fingerprint images, our study also addresses the broader issues of system efficiency and security. We evaluate our proposed method on datasets from FVC2002 [21] (DB1, DB2, and DB3), focusing on performance metrics including EER, FRR@FAR 1/50,000, and the ROC curve. The experimental results demonstrate that combining deep learning with handcrafted approaches significantly enhances the performance of partial fingerprint recognition [22,23]. Moreover, our work builds on previous research employing Siamese networks for various biometric and image recognition tasks [24,25,26,27,28,29] and contributes a novel combined matching evaluation method that has not previously been explored for partial fingerprint recognition.

Overall, this paper presents a comprehensive investigation into a combined deep learning and feature descriptor approach for partial fingerprint recognition. Our contributions include the development of a robust system that improves the matching accuracy on partial fingerprints, providing valuable insights and directions for future research in biometric authentication.

2. Related Work

Fingerprint recognition has been widely studied in biometric authentication systems, with extensive research focusing on both minutiae-based and machine learning-based approaches. In this section, we review key advancements in fingerprint recognition, emphasizing partial fingerprint matching and hybrid methodologies that integrate deep learning and handcrafted feature descriptors.

2.1. Minutiae-Based Fingerprint Recognition

Traditional fingerprint recognition relies primarily on minutiae-based techniques, which extract ridge endings and bifurcations as key discriminative features [1,2]. These methods have been widely used in full fingerprint matching due to their robustness and interpretability. However, their effectiveness significantly decreases when applied to partial fingerprint recognition, as fewer minutiae points are available for comparison [3]. Various strategies have been proposed to address this limitation, such as global orientation modeling [11] and adaptive feature selection [12], but their accuracy remains constrained when dealing with highly degraded or incomplete fingerprint regions.

2.2. Deep Learning for Fingerprint Recognition

In recent years, deep learning has revolutionized biometric authentication by enabling automatic feature extraction and robust classification [13,14,30,31,32,33]. Convolutional Neural Networks (CNNs) have been particularly successful in full fingerprint recognition, achieving high accuracy across various benchmark datasets. The Siamese Network, a specialized deep learning model designed for pairwise similarity learning, has demonstrated effectiveness in biometric verification tasks [16,34,35,36]. Notably, studies have leveraged CNN-based architectures for minutiae extraction [37], as well as for end-to-end fingerprint recognition [38]. However, deep learning models that are trained on full fingerprint datasets often struggle with partial fingerprint images due to the limited availability of discriminative features.

2.3. Hybrid Approaches: Combining Deep Learning with Feature Descriptors

To mitigate the challenges associated with both minutiae-based and deep learning methods, researchers have explored hybrid approaches that integrate traditional feature descriptors with machine learning models. One notable strategy involves incorporating Scale-Invariant Feature Transform (SIFT) descriptors to enhance local feature matching [18,39]. SIFT-based fingerprint recognition has demonstrated robustness against variations in scale, rotation, and illumination [40]. Moreover, hybrid models that combine CNN-extracted features with handcrafted descriptors have been proposed to improve the matching accuracy [41,42]. These methods leverage the hierarchical representation capabilities of deep learning while retaining the precision of handcrafted feature extraction.

2.4. Limitations and Research Gaps

Despite significant progress, several research gaps remain in the field of partial fingerprint recognition. Minutiae-based methods suffer from feature loss when dealing with partial fingerprints, while deep learning models require large-scale annotated datasets for effective generalization. Hybrid approaches offer a promising solution; however, optimal feature fusion strategies and real-time performance improvements are still open research challenges [43]. This study addresses these gaps by proposing a novel weighted score fusion framework that combines deep learning and feature descriptor-based matching for improved partial fingerprint recognition accuracy.

3. Methodology

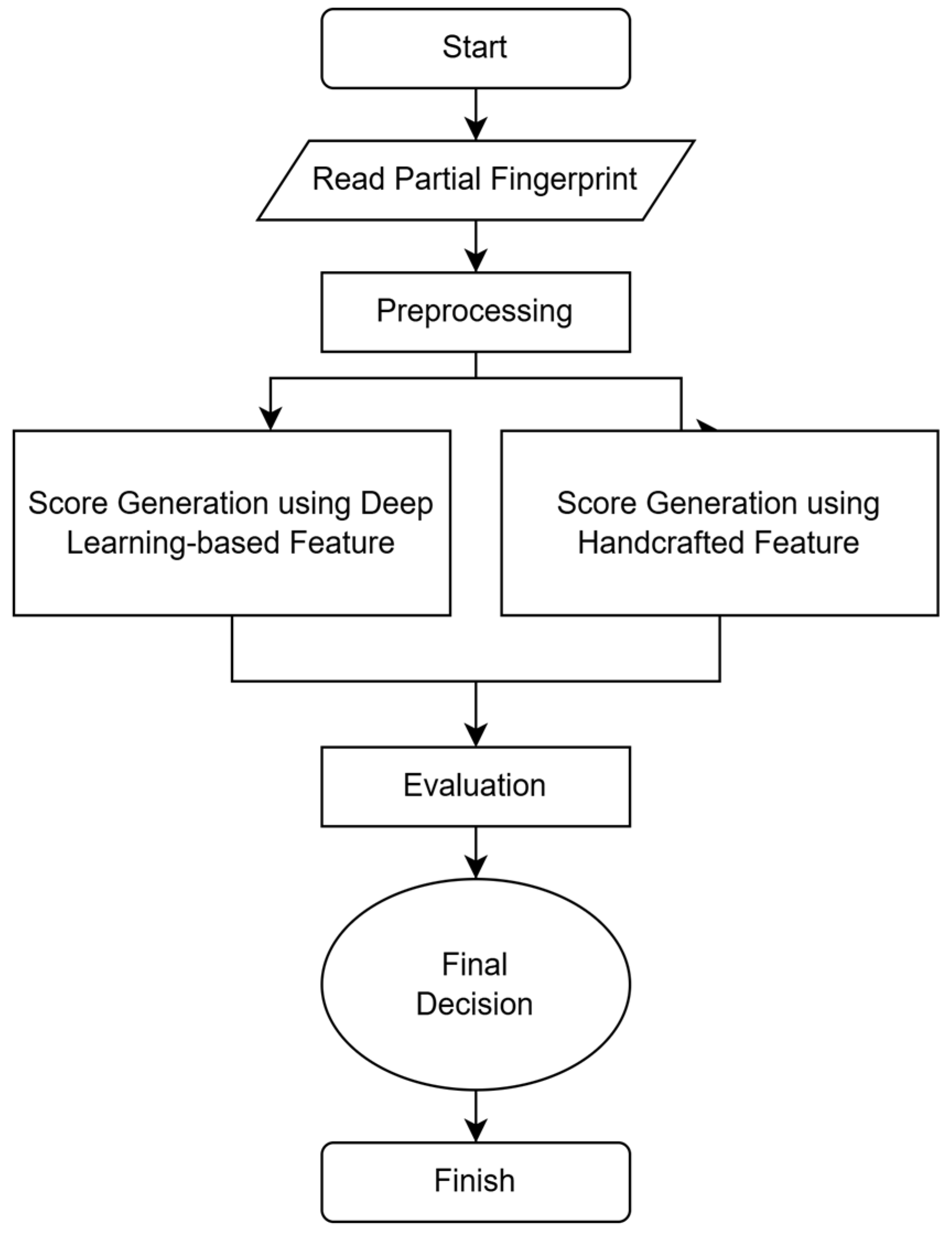

This section presents a novel hybrid technique for identifying partial fingerprints that merges a deep learning strategy with traditional Scale-Invariant Feature Transform (SIFT) descriptors to improve accuracy, as depicted in Figure 1. The process starts by loading datasets of fingerprint templates and queries. After a preprocessing phase that prepares the images for feature extraction, the pretrained Siamese CNN produces a similarity score. In parallel, SIFT keypoints are extracted and compared using the K-Nearest Neighbors (k-NN) algorithm to yield a separate similarity score. These two independent scores are then combined through a weighting formula to yield a final similarity value, which determines the matching decision.

3.1. Region of Interest (ROI) Extraction

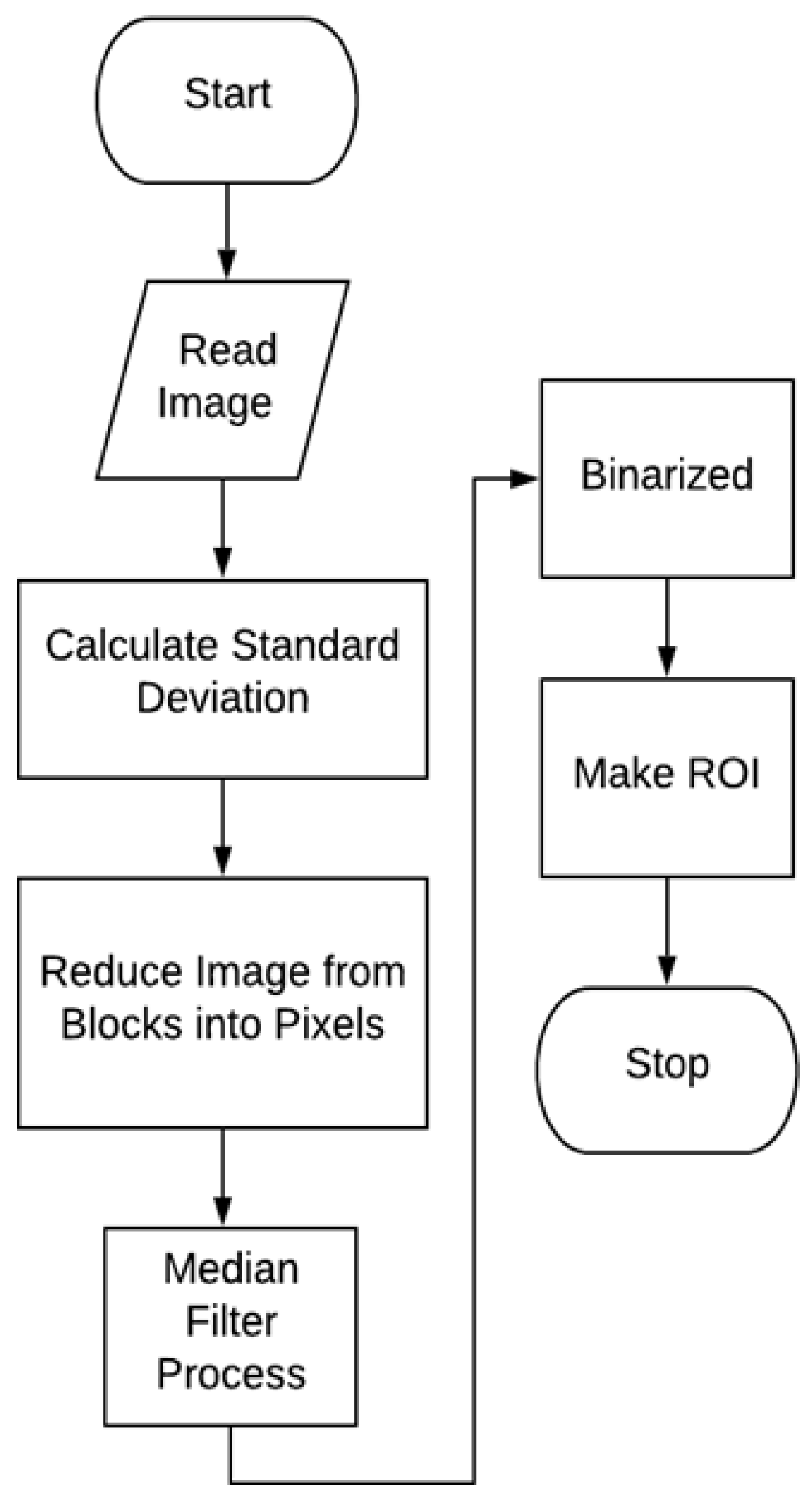

Before the cropping procedure can be executed, ROI extraction must be performed. This step is essential, since the FVC2002 dataset consists of full fingerprint images. Partial fingerprints are subsequently obtained by extracting the relevant region from the full image. A flowchart illustrating the ROI extraction process is presented in Figure 2.

The initial phase of ROI extraction computes the gray-level standard deviation of the image, which is divided into blocks so that gray-level variation can be assessed block by block. The block-level representation is then mapped back to pixel-level granularity. Next, a median filter is applied to homogenize the result. Finally, a binary conversion is performed to separate the fingerprint from the background.
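The ROI steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the block size (16), the standard-deviation threshold (10.0), and the 5 × 5 median window are hypothetical values, and `extract_roi_mask` is a hypothetical helper name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_roi_mask(image, block=16, std_threshold=10.0, median_size=5):
    """Return a boolean foreground mask for a grayscale fingerprint image.

    block, std_threshold and median_size are hypothetical illustration values.
    """
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    hb, wb = h // block, w // block

    # 1. Gray-level standard deviation of each block (ridges give high variation).
    blocks = img[:hb * block, :wb * block].reshape(hb, block, wb, block)
    block_std = blocks.std(axis=(1, 3))

    # 2. Expand the block-level map back to pixel-level granularity.
    std_map = np.kron(block_std, np.ones((block, block)))
    std_map = np.pad(std_map, ((0, h - std_map.shape[0]), (0, w - std_map.shape[1])),
                     mode="edge")

    # 3. Median filter to homogenize the map (edge-padded so the size is kept).
    r = median_size // 2
    windows = sliding_window_view(np.pad(std_map, r, mode="edge"),
                                  (median_size, median_size))
    smoothed = np.median(windows, axis=(-2, -1))

    # 4. Binarize: foreground where the local gray-level variation is high.
    return smoothed > std_threshold
```

The resulting mask can be used to locate the fingerprint region before cropping partial samples from it.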

3.2. Preprocessing for Feature Extraction

To enhance the image quality and standardize input formats before feature extraction, several preprocessing steps are conducted:

Region of Interest (ROI) Extraction: Since partial fingerprint images contain extraneous background information, segmentation techniques are employed to isolate the fingerprint region.

Normalization: To mitigate variations in image contrast and brightness, pixel intensity normalization is applied.

Resizing: To ensure uniformity across images, all samples are resized to a standard dimension of 184 × 184 pixels (160 × 160 and 152 × 152 pixels are also evaluated in Section 4.5), facilitating consistency in feature extraction.
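A minimal sketch of these preprocessing steps is given below. It assumes zero-mean, unit-variance intensity normalization and nearest-neighbour resizing (the text does not specify either choice) and crops to the bounding box of a foreground mask such as the one produced by ROI extraction. All function names are hypothetical.

```python
import numpy as np


def normalize(image, eps=1e-8):
    """Zero-mean, unit-variance intensity normalization (assumed variant)."""
    img = np.asarray(image, dtype=np.float64)
    return (img - img.mean()) / (img.std() + eps)


def resize_nearest(image, size=184):
    """Nearest-neighbour resize of a 2-D array to size x size pixels."""
    img = np.asarray(image)
    h, w = img.shape
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return img[rows[:, None], cols[None, :]]


def preprocess(image, roi_mask=None, size=184):
    """Crop to the ROI bounding box (if a mask is given), normalize, and resize."""
    img = np.asarray(image, dtype=np.float64)
    if roi_mask is not None:
        ys, xs = np.nonzero(roi_mask)
        img = img[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    return resize_nearest(normalize(img), size)
```

For the lower-resolution experiments, the same call would be made with `size=160` or `size=152`.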

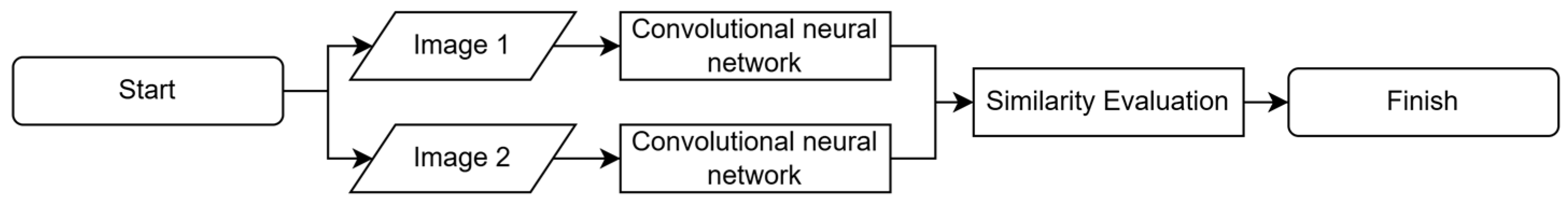

3.3. Siamese Convolutional Neural Network (CNN)

In our approach, we leverage a Siamese Convolutional Neural Network (CNN) to perform comparative analysis between pairs of fingerprint images. The framework of the Siamese CNN, depicted in Figure 3, is central to our architecture and comprises parallel branches that share identical network parameters. Each branch processes one of the input images and extracts its corresponding feature representation.

The input to the Siamese CNN consists of two images of the same resolution; the resolution is varied across experiments (184 × 184, 160 × 160, and 152 × 152 pixels). These image pairs are processed through the convolutional layers, which extract feature representations. The convolution outputs for the two images are then compared by computing their difference, which is fed into the fully connected layer.
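To make the weight-sharing idea concrete, the following NumPy sketch scores an image pair with one shared branch. It is deliberately tiny and untrained: the single 3 × 3 convolution with 32 filters, global average pooling, absolute feature difference, and single sigmoid output are simplifying assumptions rather than the architecture of Table 1, and the random parameters are placeholders for learned weights.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

_rng = np.random.default_rng(0)
# Placeholder (untrained) parameters: 32 shared 3x3 filters and one dense output unit.
CONV_W = _rng.normal(0.0, 0.1, size=(32, 3, 3))
DENSE_W = _rng.normal(0.0, 0.1, size=32)
DENSE_B = 0.0


def embed(image):
    """Shared branch: 3x3 convolution (stride 1, no padding; cross-correlation as in
    CNN libraries), ReLU, then global average pooling to a 32-D feature vector."""
    windows = sliding_window_view(np.asarray(image, dtype=np.float64), (3, 3))
    fmap = np.einsum("hwij,kij->khw", windows, CONV_W)   # (32, H-2, W-2)
    return np.maximum(fmap, 0.0).mean(axis=(1, 2))


def siamese_score(img_a, img_b):
    """Pass both images through the SAME embed() (shared weights), take the
    absolute feature difference, and map it to [0, 1] with a sigmoid unit."""
    diff = np.abs(embed(img_a) - embed(img_b))
    logit = diff @ DENSE_W + DENSE_B
    return float(1.0 / (1.0 + np.exp(-logit)))
```

Because both images pass through the same `embed` function, identical inputs always give a zero difference vector and the score is symmetric in its two arguments; training would adjust the shared weights so that genuine and impostor pairs fall on opposite sides of the decision threshold.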

The CNN model employed in this study operates through two main processes: convolutional feature extraction and convolutional model processing. The partial fingerprint image dataset used for experimentation consists of images with dimensions of 184 × 184, 160 × 160, and 152 × 152 pixels. For an input image of 184 × 184 pixels, the CNN processing details are outlined in Table 1. The fingerprint images undergo convolutional feature extraction using a 3 × 3 kernel with a stride of 1, followed by batch normalization and activation via the ReLU function.

This process produces 32 feature maps (one per filter). Subsequently, the convolutional feature extraction process is executed with two additional convolutional layers, each followed by batch normalization and ReLU activation. The feature extraction pipeline consists of four layers in total. Within each convolutional layer, the model processing incorporates additional convolutional operations using a 3 × 3 kernel with a stride of 2, followed by batch normalization. The resulting feature map undergoes pooling operations in the preceding layer, ensuring that the learned filters in the model processing stage remain adaptable. This iterative process continues until the output of the final convolutional operation is passed into a dense layer. In the final model operation, the convolution uses a stride of 1. The last output, with dimensions of 14 × 14 pixels, is subsequently processed through dense layers with ReLU and sigmoid activations.
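Spatial sizes through such a stack follow the standard formula ⌊(n + 2p − k)/s⌋ + 1 for an n × n input, kernel size k, stride s, and padding p. The helper below traces sizes through a list of layers. The example stack (a padded 3 × 3 convolution with stride 1, a padded 3 × 3 convolution with stride 2, then 2 × 2 pooling with stride 2) and its padding values are hypothetical, so it does not attempt to reproduce the 14 × 14 output reported for Table 1.

```python
def conv_output_size(n, kernel, stride, padding=0):
    """Output width/height of a convolution or pooling layer on an n x n input."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_sizes(n, layers):
    """Apply conv_output_size through a list of (kernel, stride, padding) layers."""
    sizes = [n]
    for kernel, stride, padding in layers:
        n = conv_output_size(n, kernel, stride, padding)
        sizes.append(n)
    return sizes


# Hypothetical stack: 3x3/s1/p1 conv, 3x3/s2/p1 conv, 2x2/s2 pooling.
example = [(3, 1, 1), (3, 2, 1), (2, 2, 0)]
for side in (184, 160, 152):
    print(side, trace_sizes(side, example))
# 184 [184, 184, 92, 46]
# 160 [160, 160, 80, 40]
# 152 [152, 152, 76, 38]
```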

Based on Table 1, the CNN architecture, which integrates both feature extraction and model processing, is illustrated in Figure 4 as a reconstruction of the CNN. The CNN architecture is designed to process partial fingerprint images of different resolutions (184 × 184, 160 × 160, and 152 × 152 pixels). Each image size yields its own set of trained parameters while following the same CNN structure. The training process starts with an image being input into the ConvF1 layer, where batch normalization is applied before the output passes through the pooling layer. The image is then processed through ConvMod, which includes a batch normalization step before re-entering the subsequent ConvF layer. After the initial feature convolution operation, the following convolution steps involve two sequential convolutional layers, each configured similarly to ConvF1, incorporating batch normalization and ReLU activation before transitioning to the pooling layer. This ConvMod process is repeated after each pooling layer operation.

3.4. Scale-Invariant Feature Transform (SIFT)

The Scale-Invariant Feature Transform (SIFT) is a widely used algorithm for detecting and describing local features within an image. The features extracted through SIFT, referred to as keypoints, contain information regarding their spatial coordinates, orientation, and descriptors. SIFT features are invariant to image scale and rotation and are partially robust to changes in illumination and three-dimensional viewpoint [44].

3.4.1. Detection of Keypoint Candidates

The initial step in keypoint identification involves detecting potential keypoints within an image. To ensure that the extracted features remain invariant to scale variations, feature extraction must be performed at multiple scales. This process begins with the construction of a Gaussian image, denoted as L(x, y, σ), which is obtained by convolving the input image I(x, y) with a Gaussian function G(x, y, σ), as defined in Equations (1) and (2) [44]:

$$L(x, y, \sigma) = G(x, y, \sigma) \ast I(x, y) \qquad (1)$$

$$G(x, y, \sigma) = \frac{1}{2\pi\sigma^{2}} \, e^{-(x^{2} + y^{2})/(2\sigma^{2})} \qquad (2)$$

where

x and y represent the spatial coordinates within the image;

σ represents the scale parameter of the Gaussian function;

L(x, y, σ) represents the Gaussian-smoothed image;

G(x, y, σ) denotes the two-dimensional Gaussian filter;

I(x, y) is the original input image.

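Following the computation of L(x, y, σ), the Difference of Gaussian (DoG) image, denoted as D(x, y, σ), is generated. This is achieved by subtracting two Gaussian images at nearby scales separated by a constant factor K, as expressed in Equation (3) [44]:

$$D(x, y, \sigma) = \big(G(x, y, K\sigma) - G(x, y, \sigma)\big) \ast I(x, y) = L(x, y, K\sigma) - L(x, y, \sigma) \qquad (3)$$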

where

D(x, y, σ) represents the DoG image;

L(x, y, σ) denotes the Gaussian image;

K is a constant scale factor.
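Equations (1) to (3) can be sketched for a single octave in NumPy as below. This is an illustration, not the implementation used in the study: the base scale σ = 1.6, the factor K = √2, the number of levels, and the truncation of the kernel at 3σ are assumptions, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Sampled 1-D Gaussian, truncated at 3*sigma and normalized to sum to 1.
    The 2-D kernel G(x, y, sigma) of Equation (2) is the outer product of two
    of these, so the 2-D convolution can be done one axis at a time."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    g = np.exp(-x**2 / (2 * sigma**2))
    return g / g.sum()


def gaussian_blur(image, sigma):
    """L(x, y, sigma) = G(x, y, sigma) * I(x, y)  (Equation (1)), edge-padded."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    img = np.pad(np.asarray(image, dtype=np.float64), r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, img, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def dog_stack(image, sigma0=1.6, K=2 ** 0.5, levels=5):
    """Gaussian images at sigma0 * K**i and their differences
    D = L(x, y, K*sigma) - L(x, y, sigma)  (Equation (3)); shape (levels-1, H, W)."""
    gauss = [gaussian_blur(image, sigma0 * K**i) for i in range(levels)]
    return np.stack([hi - lo for lo, hi in zip(gauss[:-1], gauss[1:])])
```

Using a separable kernel keeps the cost per pixel proportional to the kernel width rather than its square, which matters because the kernel grows with σ across the octave.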

3.4.2. Keypoint Selection

Once keypoint candidates have been identified, those with low contrast are discarded, as they are highly susceptible to noise [44]. Keypoint selection therefore eliminates keypoints whose contrast values fall below a predetermined threshold. Additionally, unstable keypoints that are poorly localized along edges are removed, since they are also sensitive to noise. This selection process is conducted based on the condition expressed in Equation (3) [45], ensuring that only robust keypoints are retained.
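A minimal sketch of candidate detection and selection on a DoG stack of shape (scales, rows, cols) follows. A candidate is a sample that is the maximum or minimum of its 3 × 3 × 3 neighbourhood across adjacent scales; low-contrast candidates are discarded with a threshold, and edge-like candidates are rejected with a ratio test on the 2 × 2 spatial Hessian, as in Lowe's SIFT. The threshold 0.03 (for intensities scaled to [0, 1]) and the ratio 10 are assumed values, sub-pixel refinement is omitted, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def detect_candidates(dog, contrast_threshold=0.03):
    """(scale, row, col) of samples that are extrema of their 3x3x3 neighbourhood
    in a DoG stack of shape (S, H, W) and whose |D| exceeds the contrast threshold."""
    cubes = sliding_window_view(dog, (3, 3, 3))          # (S-2, H-2, W-2, 3, 3, 3)
    center = dog[1:-1, 1:-1, 1:-1]
    is_max = center >= cubes.max(axis=(-3, -2, -1))
    is_min = center <= cubes.min(axis=(-3, -2, -1))
    strong = np.abs(center) > contrast_threshold          # drop low-contrast points
    s, r, c = np.nonzero((is_max | is_min) & strong)
    return np.stack([s + 1, r + 1, c + 1], axis=1)        # indices into dog


def is_edge_like(dog, s, r, c, ratio=10.0):
    """Reject points whose principal curvatures differ too much (edge responses),
    using the trace and determinant of the 2x2 spatial Hessian of D."""
    d = dog[s]
    dxx = d[r, c + 1] + d[r, c - 1] - 2 * d[r, c]
    dyy = d[r + 1, c] + d[r - 1, c] - 2 * d[r, c]
    dxy = (d[r + 1, c + 1] - d[r + 1, c - 1] - d[r - 1, c + 1] + d[r - 1, c - 1]) / 4
    trace, det = dxx + dyy, dxx * dyy - dxy ** 2
    return bool(det <= 0 or trace ** 2 / det >= (ratio + 1) ** 2 / ratio)


def select_keypoints(dog, contrast_threshold=0.03, ratio=10.0):
    """Candidates that pass both the contrast test and the edge test."""
    return [tuple(int(v) for v in p) for p in detect_candidates(dog, contrast_threshold)
            if not is_edge_like(dog, *p, ratio=ratio)]
```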

3.4.3. Orientation Assignment to Keypoints

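To achieve rotational invariance, an orientation is assigned to each keypoint. This is accomplished by computing the gradient magnitude and orientation direction from pixel differences in the Gaussian image, using Equations (4) and (5):

$$m(x, y) = \sqrt{\big(L(x+1, y) - L(x-1, y)\big)^{2} + \big(L(x, y+1) - L(x, y-1)\big)^{2}} \qquad (4)$$

$$\theta(x, y) = \tan^{-1}\!\left(\frac{L(x, y+1) - L(x, y-1)}{L(x+1, y) - L(x-1, y)}\right) \qquad (5)$$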

where

x and y represent the spatial coordinates within the image;

m(x, y) represents the gradient magnitude;

L(x, y) denotes the Gaussian image;

θ(x, y) specifies the orientation direction.
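Equations (4) and (5) translate directly into central differences on the Gaussian image. In this sketch (an illustration with a hypothetical function name), rows index y and columns index x, and `np.arctan2` is used instead of a plain arctangent so that the orientation covers the full 0 to 360 degree range needed by a 36-bin histogram.

```python
import numpy as np


def gradient_magnitude_orientation(L):
    """m(x, y) and theta(x, y) from Equations (4) and (5) using central differences.
    Rows index y and columns index x; the one-pixel border is left at zero."""
    L = np.asarray(L, dtype=np.float64)
    dx = np.zeros_like(L)
    dy = np.zeros_like(L)
    dx[:, 1:-1] = L[:, 2:] - L[:, :-2]      # L(x+1, y) - L(x-1, y)
    dy[1:-1, :] = L[2:, :] - L[:-2, :]      # L(x, y+1) - L(x, y-1)
    m = np.hypot(dx, dy)
    theta = np.degrees(np.arctan2(dy, dx)) % 360.0   # quadrant-aware, in [0, 360)
    return m, theta
```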

3.4.4. Keypoint Descriptor Computation

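Each keypoint is described in vectorized form using a keypoint descriptor. Before the descriptor is generated, the gradient magnitudes and orientations around the keypoint are used to construct an orientation histogram. This histogram consists of 36 bins covering 360 degrees of orientation, and each sample's contribution to its bin is weighted by its gradient magnitude. The bin with the highest value represents the dominant direction of the keypoint, and bins with values exceeding 80% of the highest value are also considered valid orientations for the keypoint [44]. The descriptor is then computed relative to this orientation; in standard SIFT, it concatenates 8-bin orientation histograms from a 4 × 4 grid of subregions around the keypoint, giving a 128-dimensional vector.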
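The orientation histogram can be sketched as follows, assuming gradient magnitudes and orientations (in degrees) have already been computed for the samples around a keypoint. Standard SIFT additionally applies a Gaussian weighting window and interpolates peak positions; both are omitted here for brevity, and the function name is hypothetical.

```python
import numpy as np


def dominant_orientations(magnitude, orientation_deg, num_bins=36, peak_ratio=0.8):
    """Build a 36-bin (10 degrees per bin) orientation histogram in which each
    sample is weighted by its gradient magnitude, and return the bin centres
    (degrees) of the highest bin and of every bin reaching 80% of it."""
    width = 360.0 / num_bins
    bins = (np.asarray(orientation_deg, dtype=np.float64) % 360.0 // width).astype(int)
    hist = np.bincount(bins.ravel(), weights=np.asarray(magnitude, dtype=np.float64).ravel(),
                       minlength=num_bins)
    peaks = np.nonzero(hist >= peak_ratio * hist.max())[0]
    return (peaks + 0.5) * width, hist
```

A keypoint with two qualifying peaks is typically duplicated, one copy per orientation, so that its descriptor can be computed relative to each.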

3.4.5. Keypoint Matching

Keypoint matching in this study is performed using the K-Nearest Neighbor (KNN) algorithm, a choice motivated by its simplicity, ease of implementation, and effectiveness when dealing with keypoint descriptors of moderate dimensionality.

Figure 5 illustrates the steps involved in extracting these feature descriptors.

In our approach, a database image typically contains multiple clusters of SIFT features, automatically formed using KNN with a KD-tree indexing structure to accelerate the search process. Feature matching between a query image and database images is then conducted through a KNN search, which identifies the database clusters whose descriptor vectors exhibit the smallest distances to those of the query image. Once a promising cluster is identified, the nearest descriptor vector within that cluster is determined, and a match is established if a descriptor vector in the database closely corresponds to one in the query image. This process leverages the non-parametric nature of KNN, which makes no assumptions about the underlying data distribution, offering flexibility when working with diverse descriptor types.

While KNN can be computationally intensive for very large datasets or high-dimensional descriptors, its straightforward nature makes it a common and often effective approach for keypoint matching. The number of matching keypoints directly influences the reliability of feature point detection: a higher number of matches indicates a more robust feature detection process.
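The following sketch shows k-NN descriptor matching with k = 2 using brute-force Euclidean distances instead of a KD-tree; an exact KD-tree search (for example, `scipy.spatial.cKDTree`) returns the same neighbours faster on large descriptor sets. Two elements are assumptions not stated in the text: Lowe's ratio test with threshold 0.75 for accepting a match, and the normalization of the match count into a score in [0, 1] in the hypothetical `sift_similarity` helper.

```python
import numpy as np


def knn_match(query_desc, db_desc, ratio=0.75):
    """Match each query descriptor to its 2 nearest database descriptors
    (Euclidean distance; db_desc needs at least two rows) and keep the match only
    if the nearest one is clearly closer than the second (ratio test).
    Returns a list of (query_index, db_index) pairs."""
    q = np.asarray(query_desc, dtype=np.float64)
    d = np.asarray(db_desc, dtype=np.float64)
    dists = np.linalg.norm(q[:, None, :] - d[None, :, :], axis=2)   # (Nq, Nd)
    nearest_two = np.argsort(dists, axis=1)[:, :2]
    return [(i, int(j1)) for i, (j1, j2) in enumerate(nearest_two)
            if dists[i, j1] < ratio * dists[i, j2]]


def sift_similarity(query_desc, db_desc, ratio=0.75):
    """Hypothetical score in [0, 1]: fraction of keypoints with an accepted match."""
    n = min(len(query_desc), len(db_desc))
    return len(knn_match(query_desc, db_desc, ratio)) / n if n else 0.0
```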

3.5. Weighted Score Fusion

The final recognition decision is obtained by fusing the matching scores from the Siamese CNN and the SIFT-based descriptor matching into a single weighted similarity score. Let $S_{\mathrm{SIFT}}$ and $S_{\mathrm{CNN}}$ denote the similarity scores obtained from the SIFT descriptor and the Siamese CNN, respectively, and let $w$ be the weight factor applied to $S_{\mathrm{SIFT}}$; $w$ was determined empirically and set to 0.02. This small value reflects the greater emphasis placed on the Siamese CNN's output. This decision was motivated by several factors. First, the Siamese CNN model demonstrated a higher degree of reliability in addressing the inherent challenges of partial fingerprint matching, such as limited feature availability and image distortions. Second, the feature descriptor, while providing useful information, was observed to be less robust in these challenging scenarios. Consequently, minimizing its weight in the final score calculation optimized the overall performance of the combined approach.
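The sketch below assumes the weighted fusion is a convex combination in which w = 0.02 multiplies the SIFT score and 1 − w multiplies the CNN score, with both scores in [0, 1]. The acceptance threshold is a free parameter that would be chosen from the FAR/FRR trade-off. Function names are hypothetical.

```python
def fuse_scores(s_sift, s_cnn, w=0.02):
    """Assumed fusion rule: w * S_SIFT + (1 - w) * S_CNN, both scores in [0, 1]."""
    return w * s_sift + (1.0 - w) * s_cnn


def is_match(s_sift, s_cnn, threshold, w=0.02):
    """Accept the pair when the fused similarity reaches the decision threshold."""
    return fuse_scores(s_sift, s_cnn, w) >= threshold


# With w = 0.02, a perfect SIFT score alone moves the fused score by only 0.02,
# so the decision is driven almost entirely by the Siamese CNN.
print(fuse_scores(1.0, 0.0))  # 0.02
```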

4. Experimental Results

This section presents the experimental setup, evaluation metrics, and results of the proposed hybrid fingerprint recognition approach. The effectiveness of our method is assessed using the FVC2002 [21] benchmark dataset under various experimental conditions.

4.1. Dataset and Experimental Setup

The experiments are conducted using the FVC2002 [21] fingerprint dataset, which contains full fingerprint impressions from multiple individuals. To simulate partial fingerprint recognition, we extract 4000 partial fingerprint images from each database (DB1, DB2, and DB3), corresponding to 100 unique fingers with 40 impressions each. The differences between DB1, DB2, and DB3 are presented in Table 2. The images are cropped to include only the central region, ensuring a realistic partial fingerprint scenario.

All experiments are conducted on a high-performance computing server equipped with the following:

Processor: Intel i7-6800K CPU @ 3.40 GHz (Intel Corporation, Santa Clara, CA, USA);

Memory: 128 GB RAM;

Graphics Card: NVIDIA GTX 1080 (NVIDIA Corporation, Santa Clara, CA, USA);

Software: Python 3.12.7, PyTorch 2.5.1, OpenCV 4.10.

4.2. Evaluation Metrics

The recognition performance is evaluated using standard biometric system metrics:

Equal Error Rate (EER): The error rate at which the False Acceptance Rate (FAR) equals the False Rejection Rate (FRR).

False Rejection Rate (FRR) @ FAR 1/50,000: The FRR measured at the decision threshold at which the FAR is 1/50,000, i.e., how often genuine users are rejected when the system is tuned to accept almost no impostors.

Receiver Operating Characteristic (ROC) Curve: Illustrates the trade-off between the FAR and FRR.

Area Under the Curve (AUC): Quantifies the overall system performance.
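The metrics above can be computed from lists of genuine and impostor similarity scores as sketched below (a higher score means more similar). This is a minimal illustration with hypothetical function names: the EER is approximated at the threshold where FAR and FRR are closest, FRR@FAR is the lowest FRR among thresholds whose FAR does not exceed 1/50,000, and the AUC is the trapezoidal area under the ROC curve of genuine acceptance rate (1 − FRR) against FAR. Estimating a FAR of 1/50,000 reliably requires at least tens of thousands of impostor comparisons.

```python
import numpy as np


def far_frr(genuine, impostor, thresholds):
    """FAR(t) = share of impostor scores >= t; FRR(t) = share of genuine scores < t."""
    g = np.asarray(genuine, dtype=np.float64)[:, None]
    i = np.asarray(impostor, dtype=np.float64)[:, None]
    return (i >= thresholds).mean(axis=0), (g < thresholds).mean(axis=0)


def evaluate(genuine, impostor, target_far=1 / 50_000):
    # Every observed score is a candidate threshold; +inf guarantees FAR = 0 exists.
    thresholds = np.unique(np.concatenate([genuine, impostor, [np.inf]]))
    far, frr = far_frr(genuine, impostor, thresholds)

    k = np.argmin(np.abs(far - frr))
    eer = (far[k] + frr[k]) / 2                  # EER at the closest FAR/FRR crossing
    frr_at_far = frr[far <= target_far].min()    # best FRR with FAR <= target

    # ROC: genuine acceptance rate (1 - FRR) against FAR, FAR in increasing order.
    x, y = far[::-1], (1.0 - frr)[::-1]
    auc = float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2))
    return {"eer": float(eer), "frr_at_far": float(frr_at_far), "auc": auc}
```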

4.3. Impact of Epochs on Recognition Performance

To examine the effect of training epochs on the model's performance, we train the Siamese CNN using three different epoch settings: 500, 1000, and 2500. The results on DB1 with an image size of 184 × 184 pixels are summarized in Table 3.

Table 3 shows that increasing the number of training epochs substantially improves performance. The model achieves its best results at 2500 epochs, yielding an EER of 3.90% and an FRR of 6.36% at FAR 1/50,000.

4.4. Comparison Across Different Datasets

We further evaluate our method on different datasets (DB1, DB2, and DB3) at an image resolution of 184 × 184 pixels using the best-performing number of training epochs (2500). The results are presented in Table 4.

Specifically, DB1 and DB3 yielded an EER of approximately 4%, while DB2 exhibited a slightly higher EER of around 5%. Furthermore, the FRR@FAR 1/50,000 evaluation indicates that DB1 and DB2 achieved favorable results, with FRR values of 6.36% and 8.11%, respectively. However, the FRR@FAR 1/50,000 for DB3 was 14.02%, notably higher than for DB1 and DB2. This discrepancy can be attributed to the distinct characteristics of each dataset (Table 2).

Consequently, despite utilizing the same model and methodological approach, the evaluation outcomes vary across datasets due to their unique properties.

4.5. Effect of Image Resolution

To analyze the impact of image resolution, we evaluate different fingerprint sizes (152 × 152, 160 × 160, and 184 × 184 pixels) on DB1. The results are summarized in Table 5.

The results suggest that higher image resolutions lead to better recognition performance, as larger images retain more fingerprint details. Notably, reducing the image size to 152 × 152 results in a significant performance drop, with the FRR increasing to 75.97%.

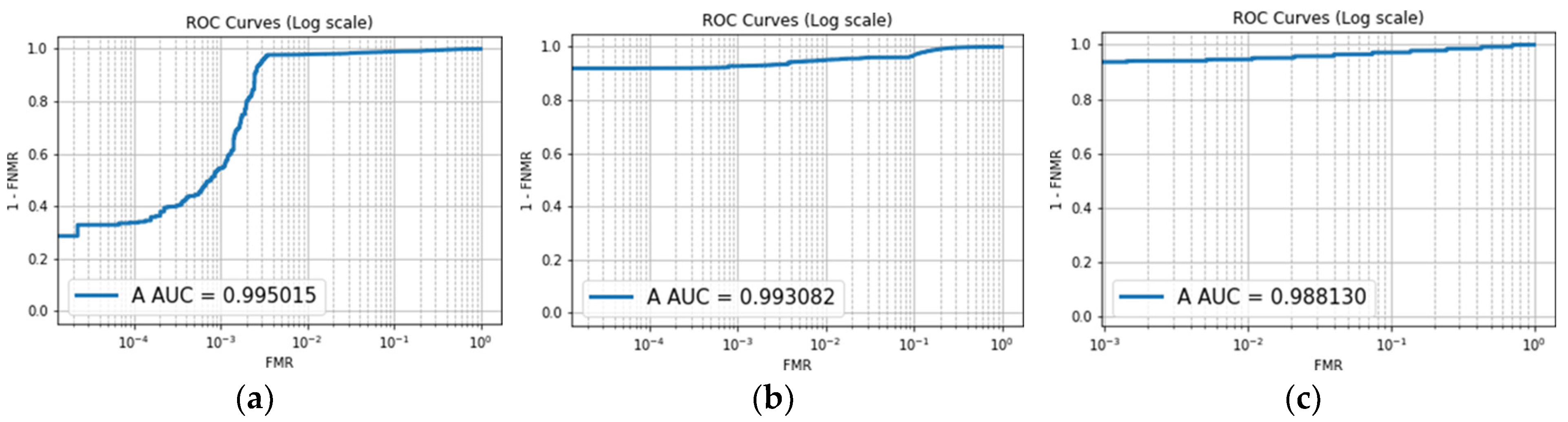

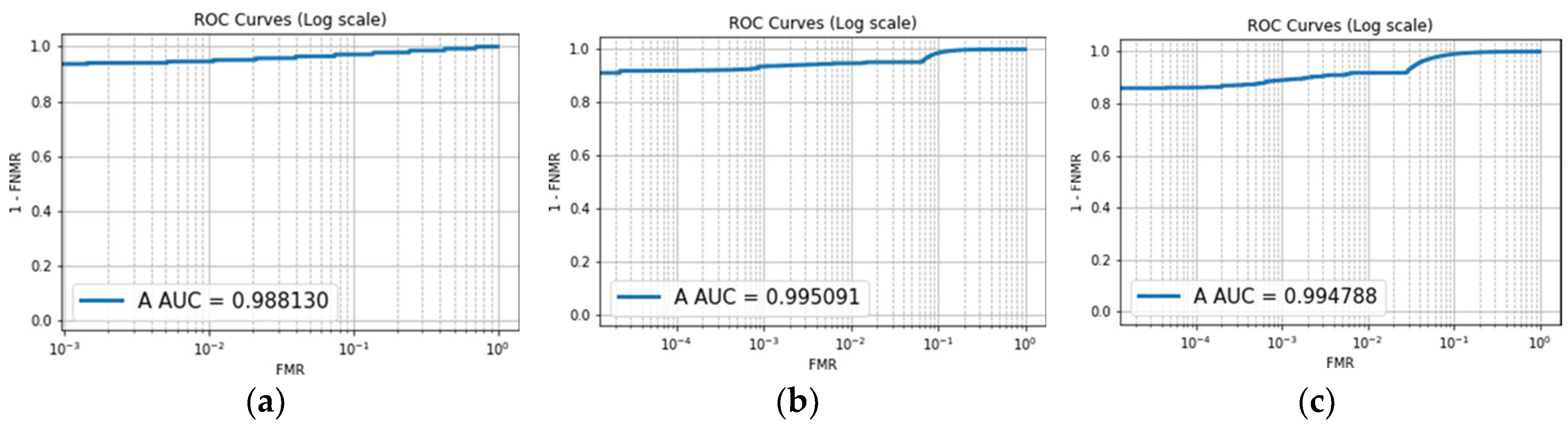

4.6. Receiver Operating Characteristic (ROC) Curves

The Receiver Operating Characteristic (ROC) curves presented in Figure 6 allow an analysis of the degree of separation achieved with different numbers of epochs. The Area Under the Curve (AUC) quantifies how effectively the method distinguishes between genuine and impostor pairs. The experimental results indicate that the models trained for 500, 1000, and 2500 epochs all perform well across a range of decision thresholds. Furthermore, the AUC scores presented in the figure consistently exceed 90%, reinforcing the efficacy of the method.

Similarly, the ROC curves illustrated in Figure 7 enable an evaluation of the effect of an appropriate number of epochs for an image size of 184 × 184 pixels. The resulting ROC curves exhibit an almost linear trend, with AUC values exceeding 90%. This suggests that the classifier separates genuine and impostor pairs well, keeping both the False Acceptance Rate (FAR) and the False Rejection Rate (FRR) low over a wide range of decision thresholds.

Additionally, a comparison between the ROC curves depicted in Figure 7a–c and those in Figure 6a reveals notable variations. These differences indicate that the selection of epochs influences the ROC curves obtained from the three distinct datasets, highlighting the importance of using an appropriate number of epochs for optimal classification performance.

4.7. Discussion

The experimental findings confirm the effectiveness of our hybrid approach for partial fingerprint recognition. Our method achieves state-of-the-art results on the FVC2002 dataset, demonstrating superior robustness compared to traditional minutiae-based techniques. The key observations from our study are as follows:

Training for 2500 epochs yields optimal results, significantly reducing the EER and FRR.

Higher image resolutions improve the recognition accuracy by preserving fingerprint details.

The dataset’s characteristics influence the performance, with DB3 showing a higher FRR due to increased intra-class variations.

Overall, these results validate the efficacy of combining a Siamese CNN and SIFT descriptors in partial fingerprint recognition. Future work may focus on optimizing feature fusion strategies and exploring additional datasets for further validation.

5. Conclusions and Future Work

This study introduced a hybrid approach for partial fingerprint recognition by integrating deep learning-based feature extraction with handcrafted descriptors. The proposed method leverages a Siamese Convolutional Neural Network (CNN) for high-level feature representation and Scale-Invariant Feature Transform (SIFT) for robust local feature matching, employing a weighted score fusion strategy to enhance the recognition performance.

Extensive experiments on the FVC2002 dataset demonstrate the effectiveness of our approach, achieving an Equal Error Rate (EER) of approximately 4% on DB1 and DB3, alongside a notable reduction in the False Rejection Rate (FRR) at stringent security thresholds. Key findings highlight the importance of the training duration, image resolution, and dataset characteristics in influencing the recognition accuracy. These results confirm that combining deep learning with traditional feature descriptors mitigates the limitations of minutiae-based and CNN-only methods, leading to improved robustness and reliability in partial fingerprint recognition.

Future research can extend this work by exploring high-resolution fingerprint datasets for better scalability, developing adaptive feature fusion techniques, and optimizing computational efficiency through model pruning and knowledge distillation. Additionally, advancements in deep learning architectures, such as transformer-based models, and the integration of multimodal biometric systems can further enhance recognition accuracy and security.

By addressing these challenges, future studies can build upon our findings to drive innovation in biometric authentication, improving the reliability and applicability of partial fingerprint recognition systems in real-world scenarios.

6. Limitations

Despite its effectiveness, our study has certain limitations:

The method is tested on FVC2002, and its generalization to other datasets, such as high-resolution fingerprint datasets, requires further validation.

The computational complexity increases when the CNN and SIFT are integrated due to extensive processing at different stages. SIFT adds overhead with keypoint detection, descriptor computation, and matching, while its high-dimensional descriptors increase the memory usage and processing time. Feature matching and deep matrix computations place further strains on resources. Although this combination enhances the robustness of features, optimization is necessary for real-time applications.

The weighted fusion parameter is empirically determined, and an adaptive weighting mechanism could further enhance the performance.

While effective, our method requires further validation on diverse datasets, optimization for real-time use, and an adaptive weighting mechanism for improved performance.

Author Contributions

Conceptualization, J.-C.W.; Methodology, C. and F.H.R.; Writing – original draft preparation, C.; Writing – review and editing, Z.-S.C., S.-K.C., C.-I.H., K.-C.L., S.-L.C., and Y.-H.L.; Supervision, J.-C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Barkadehi, M.H.; Nilashi, M.; Ibrahim, O.; Zakeri Fardi, A.; Samad, S. Authentication systems: A literature review and classification. Telemat. Inform. 2018, 35, 1491–1511. [Google Scholar] [CrossRef]

- Velásquez, I.; Caro, A.; Rodríguez, A. Authentication schemes and methods: A systematic literature review. Inf. Softw. Technol. 2018, 94, 30–37. [Google Scholar] [CrossRef]

- Delahaye, J.-P. The Mathematics of (Hacking) Passwords. Sci. Am. 2019. Available online: https://www.scientificamerican.com/article/the-mathematics-of-hacking-passwords/ (accessed on 7 March 2025).

- Pranata, Y.D.; Wang, K.-C.; Wang, J.-C.; Idram, I.; Lai, J.-Y.; Liu, J.-W.; Hsieh, I.-H. Deep Learning and SURF for Automated Classification and Detection of Calcaneus Fractures in CT Images. Comput. Methods Programs Biomed. 2019, 171, 27–37. [Google Scholar] [CrossRef]

- Thi Le, P.; Pham, T.; Hsu, Y.-C.; Wang, J.-C. Convolutional Blur Attention Network for Cell Nuclei Segmentation. Sensors 2022, 22, 1586. [Google Scholar] [CrossRef]

- Huang, C.D.; Wang, C.Y.; Wang, J.-C. Human Action Recognition System for Elderly and Children Care Using Three Stream ConvNet. In Proceedings of the 2015 International Conference on Orange Technologies (ICOT), Hong Kong, China, 19–22 December 2015; pp. 5–9. [Google Scholar]

- Wang, C.-Y.; Chang, P.-C.; Ding, J.-J.; Tai, T.-C.; Santoso, A.; Liu, Y.-T.; Wang, J.-C. Spectral–Temporal Receptive Field-Based Descriptors and Hierarchical Cascade Deep Belief Network for Guitar Playing Technique Classification. IEEE Trans. Cybern. 2022, 52, 3684–3695. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Tai, T.-C.; Wang, J.-C.; Santoso, A.; Mathulaprangsan, S.; Chiang, C.-C.; Wu, C.-H. Sound Events Recognition and Retrieval Using Multi-Convolutional-Channel Sparse Coding Convolutional Neural Networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 1875–1887. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Wang, J.-C.; Santoso, A.; Chiang, C.-C.; Wu, C.-H. Sound Event Recognition Using Auditory-Receptive-Field Binary Pattern and Hierarchical-Diving Deep Belief Network. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1336–1348. [Google Scholar] [CrossRef]

- Lee, Y.S.; Wang, C.Y.; Wang, S.F.; Wang, J.C.; Wu, C.H. Fully Complex Deep Neural Network for Phase-Incorporating Monaural Source Separation. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017. [Google Scholar]

- Minaee, S.; Abdolrashidi, A.; Su, H.; Bennamoun, M.; Zhang, D. Biometric Recognition Using Deep Learning: A Survey. arXiv 2019, arXiv:1912.00271. [Google Scholar] [CrossRef]

- Le, H.H.; Nguyen, N.H.; Nguyen, T.-T. Automatic Detection of Singular Points in Fingerprint Images Using Convolution Neural Networks. In Intelligent Information and Database Systems; Springer: Cham, Switzerland, 2017; pp. 207–216. [Google Scholar]

- Wani, M.; Bhat, F.; Afzal, S.; Khan, A. Supervised Deep Learning in Fingerprint Recognition; Springer: Singapore, 2020; pp. 111–132. [Google Scholar]

- Anand, V.; Kanhangad, V. PoreNet: CNN-Based Pore Descriptor for High-Resolution Fingerprint Recognition. IEEE Sens. J. 2020, 20, 9305–9313. [Google Scholar] [CrossRef]

- Chen, L.; Xie, B.; Liu, T. Query2Set: Single-to-Multiple Partial Fingerprint Recognition Based on Attention Mechanism. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1243–1253. [Google Scholar] [CrossRef]

- Lee, W.; Cho, S.; Choi, H.; Kim, J. Partial fingerprint matching using minutiae and ridge shape features for small fingerprint scanners. Expert Syst. Appl. 2017, 87, 183–198.

- Wang, C.; Cao, R.; Wang, R. Learning discriminative topological structure information representation for 2D shape and social network classification via persistent homology. Knowl.-Based Syst. 2025, 311, 113125.

- Chrisantonius; Priyambodo, T.K.; Raswa, F.H.; Wang, J.-C. Partial Fingerprint on Combined Evaluation Using Deep Learning and Feature Descriptor. In Proceedings of the 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Tokyo, Japan, 14–17 December 2021; pp. 1611–1614.

- Mathur, S.; Vjay, A.; Shah, J.; Das, S.; Malla, A. Methodology for partial fingerprint enrollment and authentication on mobile devices. In Proceedings of the 2016 International Conference on Biometrics (ICB), Halmstad, Sweden, 13–16 June 2016; pp. 1–8.

- Aravindan, A.; Anzar, S.M. Robust partial fingerprint recognition using wavelet SIFT descriptors. Pattern Anal. Appl. 2017, 20, 963–979.

- Maio, D.; Maltoni, D.; Cappelli, R.; Wayman, J.L.; Jain, A.K. FVC2002: Second Fingerprint Verification Competition. In Proceedings of the International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; pp. 811–814.

- Zeng, F.; Hu, S.; Xiao, K. Research on partial fingerprint recognition algorithm based on deep learning. Neural Comput. Appl. 2019, 31, 4789–4798.

- Soni, U.; Mahesh, G. A Survey on State of the Art Methods of Fingerprint Recognition; Technoscience Academy: Gujarat, India, 2018.

- Tolosana, R.; Vera-Rodriguez, R.; Fierrez, J.; Ortega-Garcia, J. Exploring Recurrent Neural Networks for On-Line Handwritten Signature Biometrics. IEEE Access 2018, 6, 5128–5138.

- Zhang, C.; Liu, W.; Ma, H.; Fu, H. Siamese neural network based gait recognition for human identification. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2832–2836.

- Zhong, D.; Yang, Y.; Du, X. Palmprint Recognition Using Siamese Network; Springer: Cham, Switzerland, 2018.

- Lin, C.; Kumar, A. Multi-Siamese networks to accurately match contactless to contact-based fingerprint images. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 277–285.

- Lin, C.; Kumar, A. Contactless and partial 3D fingerprint recognition using multi-view deep representation. Pattern Recognit. 2018, 83, 314–327.

- Maheshwary, S.; Misra, H. Matching Resumes to Jobs via Deep Siamese Network. In Companion Proceedings of The Web Conference 2018, Lyon, France, 23–27 April 2018; pp. 87–88.

- Yu, J.; Niu, L.; Gao, C.; Cao, Z.; Zhao, H. Partial Fingerprint Matching via Feature Similarity and Pre-training. In Proceedings of the International Joint Conference on Biometrics (IJCB), Buffalo, NY, USA, 15–18 September 2024; pp. 1–9.

- Zhang, Y.; Liu, H.; Wang, J. Robust Partial Fingerprint Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Vancouver, BC, Canada, 17–24 June 2023.

- Sun, Y.; Wang, H.; Li, Z. A Neural Network-Based Partial Fingerprint Image Identification Method for Crime Scenes. Appl. Sci. 2023, 13, 1188.

- He, X.; Zhou, M.; Yang, F. PFVNet: A Partial Fingerprint Verification Network Learned from Large Fingerprint Matching. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3706–3719.

- Nguyen, T.T.; Tahir, H.; Abdelrazek, M.; Babar, A. Deep Learning Methods for Credit Card Fraud Detection. arXiv 2020, arXiv:2012.03754.

- Guan, X.; Pan, Z.; Feng, J.; Zhou, J. Joint Identity Verification and Pose Alignment for Partial Fingerprints. IEEE Trans. Inf. Forensics Secur. 2025, 20, 249–263.

- Deshpande, A.; Kumar, R.; Singh, R. CNNAI: A Convolution Neural Network-Based Latent Fingerprint Matching Using the Combination of Nearest Neighbor Arrangement Indexing. Front. Robot. AI 2020, 7, 113.

- Wang, M.; Deng, W. Deep Face Recognition: A Survey. arXiv 2018, arXiv:1804.06655.

- Alam, M.; Samad, M.D.; Vidyaratne, L.; Glandon, A.; Iftekharuddin, K.M. Survey on Deep Neural Networks in Speech and Vision Systems. arXiv 2019, arXiv:1908.07656.

- Min, S.; Lee, B.; Yoon, S. Deep Learning in Bioinformatics. arXiv 2016, arXiv:1603.06430.

- Deng, J.; Guo, J.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. arXiv 2018, arXiv:1801.07698.

- Darlow, L.N.; Rosman, B. Fingerprint minutiae extraction using deep learning. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 22–30.

- Stojanović, B.; Marques, O.; Nešković, A.; Puzović, S. Fingerprint ROI segmentation based on deep learning. In Proceedings of the 2016 24th Telecommunications Forum (TELFOR), Belgrade, Serbia, 22–23 November 2016; pp. 1–4.

- Alaslani, M.; Elrefaei, L. Convolutional Neural Network Based Feature Extraction for IRIS Recognition. Int. J. Comput. Sci. Inf. Technol. 2018, 10, 65–78.

- Lowe, D. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Zhu, D. SIFT algorithm analysis and optimization. In Proceedings of the 2010 International Conference on Image Analysis and Signal Processing, Zhejiang, China, 9–11 April 2010; pp. 415–419.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).