1. Introduction

As technology increasingly permeates nearly every aspect of modern life, understanding how individuals trust systems has become critical in Human–Computer Interaction (HCI). Despite the growing importance of trust in technology, there has been limited attention toward validating trust assessment tools, as the rapid pace of technological change often conflicts with the time-intensive process of instrument development and validation. However, it is crucial to ensure that these tools function consistently across different demographic groups to enable meaningful cross-cultural analyses.

This study seeks to address this issue by advancing the Human–Computer Trust Scale (HCTS), a trust assessment instrument [1], by critically examining its applicability across Brazil, Singapore, Malaysia, Estonia, and Mongolia. We use Measurement Invariance of Composite Models (MICOM), a statistical procedure within Partial Least Squares Structural Equation Modeling (PLS-SEM), to assess whether the HCTS demonstrates consistent psychometric properties across the five countries [2,3,4]. Our guiding research question is whether measurement invariance can be established for the HCTS across the five countries with distinct cultural dimensions [5].

Additionally, despite a growing body of research on trust in technology [6,7], limited attention has been paid to how one’s national culture shapes their propensity to trust technological systems. This article builds on the evidence that national culture moderates trust in technology [8] and focuses on providing a more rigorous assessment of the HCTS’s psychometric properties. By exploring these dynamics, we provide insights into measuring propensity to trust and offer methodological guidance for applying MICOM in HCI research. Thus, our main research question is complemented by our secondary goal, which is to explore if and how MICOM analysis can help identify differences in trust in technology between the countries.

This paper begins with an overview of trust in technology, the conceptual grounding of the HCTS, and the impact of national culture on trust. We then outline our data collection and analysis procedures, followed by a detailed account of the MICOM procedure and results. In the discussion, we integrate our findings to address the research questions and provide practical insights for conducting trust assessments across countries. By addressing these questions, our study enhances the validity of the HCTS across cultures and presents a methodological approach for future HCI research.

3. Methodology

Our study follows the steps recommended for the MICOM procedure in the literature [2,48,49] to explore the influence of individuals’ national culture on the HCTS. While the MICOM is at the core of our article, we first evaluated the measurement model to ensure its validity in the countries included and later analyzed the path coefficients to further explore the effect of national culture on the model’s behavior. All procedures are detailed in this section.

The five studies were conducted independently but followed the same protocol in each country between 2023 and 2024. This study presented a stimulus and relied on participants’ perceptions regarding the hypothetical implementation of such a system in their country. Facial Recognition Systems (FRSs) were chosen for this study because they have not yet been widely implemented anywhere. This allowed participants to form impressions of the technology in a more abstract manner, making it easier to compare their predispositions. In contrast, using an existing system could trigger past experiences that are context-specific and may vary significantly between countries, complicating the comparison of results.

The stimulus consisted of a 2 min long video featuring excerpts of FRSs implemented in China (Skynet) and England (Metropolitan Police of London). Each excerpt showcased real applications of the technology with slightly different approaches: Skynet focuses on how the system can enhance safety, while the Metropolitan Police emphasizes the importance of maintaining citizens’ privacy. The objective of this stimulus was to help participants understand the potential benefits and risks associated with FRSs without being limited to a specific example.

The questionnaire included items on socio-demographic factors, including age, gender, and education level; items related to technology usage and access; and the HCTS. The HCTS was tailored to reflect the focus on FRSs, following the most recent version of the instrument, containing 11 items measured on a 5-point Likert scale [25], and three items measuring Trust for the PLS-SEM validation [1].

In all cases, the questionnaire was also made available in English, its originally validated version. Additionally, translated versions were provided by native speakers in the local languages: Portuguese in Brazil; Chinese, Tamil, and Malay in Singapore; Chinese and Malay in Malaysia; Estonian in Estonia; and Mongolian and Chinese in Mongolia. Participants were recruited through convenience sampling, with assistance from local institutions and the researchers’ networks in each country. Data collection occurred online via LimeSurvey (https://www.limesurvey.org/, accessed 11 November 2024).

Samples

Participants were recruited using a convenience sampling strategy, which leveraged the authors’ network while also striving to include a diverse sample. We chose convenience sampling because of resource limitations that made probability sampling impractical. Since this is a preliminary investigation aimed at validating the measurement instrument and identifying patterns, a convenience sample is appropriate. However, we emphasize that further studies should focus on generalizability, as our sample may not be fully balanced or representative of the broader populations.

The sample size (N) for each country considered in this study is as follows: Brazil = 133, Singapore = 109, Malaysia = 107, Estonia = 117, and Mongolia = 120, resulting in a total sample size of N = 586. A detailed description of the sample breakdown by gender and age range can be seen in Table 1.

4. Analysis

4.1. Measurement Model Evaluation

Before conducting the MICOM analysis, we assessed the measurement model for each sample. Although the HCTS has already been validated, this assessment is advisable before the MICOM to ensure that the properties of the constructs hold in the different contexts investigated [50].

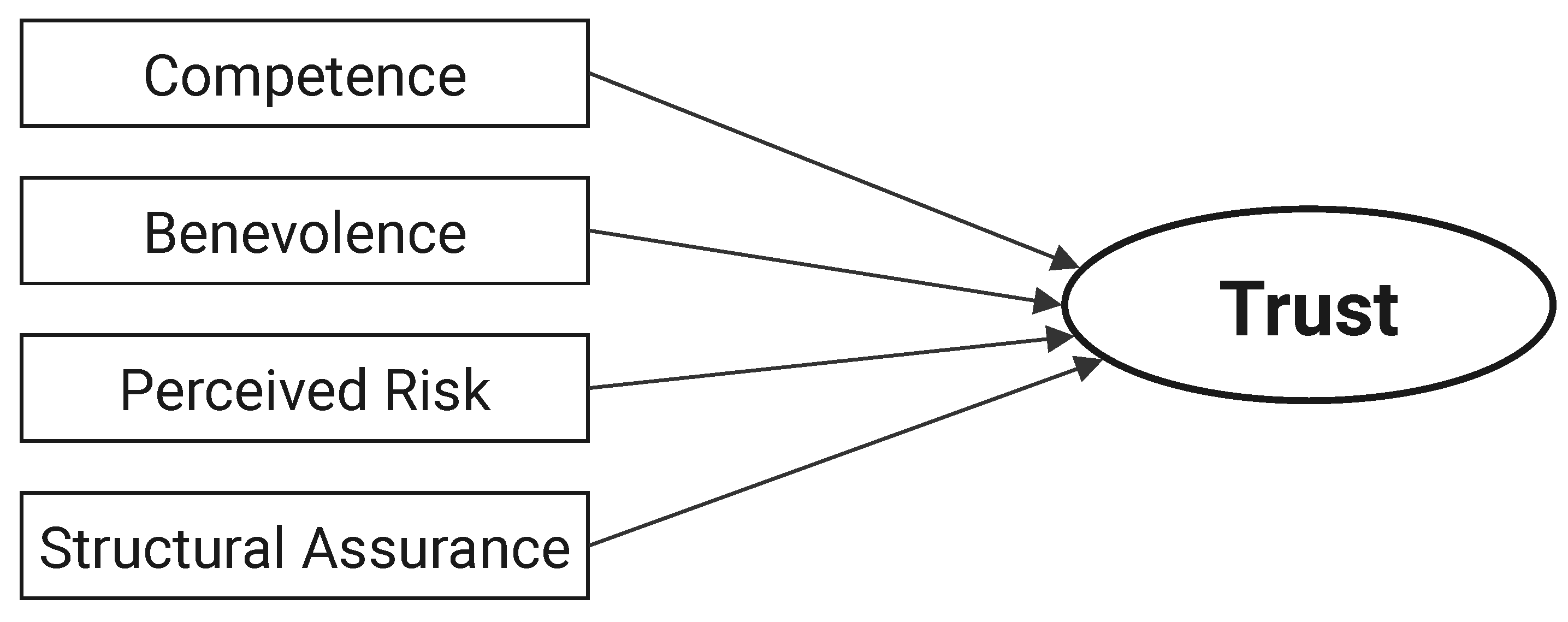

Table 2 presents the HCTS questionnaire adopted for the assessment. It comprises the constructs Competence (COM), Benevolence (BEN), Perceived Risk (PR) (reversed), and Structural Assurance (SA). The content within brackets refers to the specific technology assessed.

Our assessment of the measurement models focused on the measures’ internal consistency, reliability, convergent validity, and discriminant validity. The results showed considerable variability in the measures’ reliability and validity.

Indicator reliability refers to how well an indicator reflects the latent construct. Values above 0.5 are considered adequate [3]. Every sample presented at least one problematic item, in all cases within the construct COM or PR. The most critical issues were with item COM3 for the samples of Singapore and Estonia, and item PR2 for Mongolia and Malaysia.

Next, we looked at the average variance extracted (AVE), which represents how well the indicators explain the constructs. Following the problems with the items’ reliability, PR was below the 0.5 threshold for Mongolia. Finally, we considered composite reliability (CR), which assesses the internal consistency of the indicators. The problems remained, with values for PR below the necessary threshold of 0.7 for Malaysia and Mongolia. To address these issues, we removed the items with the lowest loadings: COM3 and PR2.
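The three criteria above can be illustrated with a minimal sketch that derives them from standardized outer loadings, using the formulas commonly applied in PLS-SEM. The loadings below are hypothetical and are not taken from our data; the function names are likewise illustrative.

```python
import numpy as np


def indicator_reliability(loadings):
    """Squared standardized loading of each indicator (adequate above 0.5)."""
    return np.asarray(loadings, dtype=float) ** 2


def average_variance_extracted(loadings):
    """AVE: mean of the squared loadings of a construct (adequate above 0.5)."""
    return float(np.mean(indicator_reliability(loadings)))


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 over itself plus the summed error variances."""
    lam = np.asarray(loadings, dtype=float)
    explained = lam.sum() ** 2
    error = np.sum(1.0 - lam ** 2)
    return float(explained / (explained + error))


# Hypothetical three-item construct with one weak item (illustration only).
full_construct = [0.82, 0.78, 0.45]
# The same construct after dropping the weakest item.
reduced_construct = [0.82, 0.78]

for name, lam in [("full", full_construct), ("reduced", reduced_construct)]:
    print(name,
          "AVE =", round(average_variance_extracted(lam), 3),
          "CR =", round(composite_reliability(lam), 3))
```

In this illustrative case, dropping the weak item moves the AVE from below to above the 0.5 threshold, which mirrors the logic of removing COM3 and PR2.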

The model without these items yielded better results, with only one loading below the 0.7 threshold for the Malaysia sample (PR3). However, it did not affect the AVE and CR, which were adequate for this version of the model. The results can be seen in Table 3. The results for the original model are available in Appendix A (Table A1).

Next, we assessed the discriminant validity (DV) for the refined model using the Fornell–Larcker criterion. This criterion compares the square root of each construct’s AVE with the construct’s correlations with the other constructs in the model. In all samples, the highest value for each construct was its own, demonstrating that there are no issues with DV. The table with the DV results is available in Appendix B (Table A2). The highest values per construct per country are highlighted to facilitate interpretation. Finally, we looked at R², representing the explained variance of the endogenous construct (Trust) by the exogenous constructs (COM, BEN, PR, and SA). The values were as follows: Brazil = 0.625, Singapore = 0.589, Malaysia = 0.510, Estonia = 0.682, and Mongolia = 0.736; all were considered adequate in our domain of study.
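The Fornell–Larcker check can be sketched as follows, assuming the criterion in its usual form: the square root of a construct's AVE should exceed its absolute correlations with every other construct. The AVE values and the correlation matrix below are hypothetical, chosen so that one construct fails the check.

```python
import numpy as np


def fornell_larcker_ok(ave, construct_corr):
    """Return one boolean per construct: True if sqrt(AVE) exceeds all of
    its absolute correlations with the other constructs."""
    ave = np.asarray(ave, dtype=float)
    off_diagonal = np.abs(np.asarray(construct_corr, dtype=float))
    np.fill_diagonal(off_diagonal, 0.0)  # ignore each construct's self-correlation
    return np.sqrt(ave) > off_diagonal.max(axis=1)


# Hypothetical values for three constructs (illustration only).
ave = [0.64, 0.49, 0.81]
corr = [[1.00, 0.75, 0.30],
        [0.75, 1.00, 0.40],
        [0.30, 0.40, 1.00]]

print(fornell_larcker_ok(ave, corr))
```

Here the second construct would be flagged, because its square-root AVE (0.70) is lower than its correlation with the first construct (0.75).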

After removing two items, all the samples met most of the assessment criteria for reliability, convergent validity, and discriminant validity. Thus, we proceeded with the MICOM using the adjusted model with nine items. The problems encountered in the measurement model evaluation are further addressed in Section 5.

4.2. Measurement of Invariance Assessment (MICOM)

Next, we proceeded with the MICOM. The procedure comprises three steps, each of which should be performed only if the criteria of the previous step have been met: (1) configural invariance, (2) compositional invariance, and (3) equal mean values and variances [48].
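The sequential logic of the three steps can be summarized in a short sketch. It encodes the mapping used in this article: configural and compositional invariance together yield partial measurement invariance, and equal means and variances additionally yield full measurement invariance. The names `Invariance` and `micom_outcome` are illustrative and do not belong to any PLS-SEM package.

```python
from enum import Enum


class Invariance(Enum):
    NONE = "no measurement invariance"
    PARTIAL = "partial measurement invariance"
    FULL = "full measurement invariance"


def micom_outcome(configural: bool, compositional: bool,
                  equal_means: bool, equal_variances: bool) -> Invariance:
    """Map the results of the three MICOM steps to an overall outcome."""
    # Steps 1 and 2 are prerequisites: failing either one stops the procedure.
    if not (configural and compositional):
        return Invariance.NONE
    # Step 3: equal means AND equal variances upgrade partial to full invariance.
    if equal_means and equal_variances:
        return Invariance.FULL
    return Invariance.PARTIAL


# Example: steps 1 and 2 pass, variances are equal, but means differ.
print(micom_outcome(True, True, False, True).value)
```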

4.2.1. Configural Invariance

The first step refers to the assessment of the conceptual model structure, requiring (1) identical indicators per measurement model, (2) identical data treatment, and (3) identical algorithm settings [4].

Following the studies’ design, the scale was implemented with the same items and under the same protocol in all the countries. The scale was administered in English and the local languages, following a back-translation process by native speakers. Our group-specific model estimations draw on identical algorithm settings, and due to the measurement model evaluation and adjustments in the previous step of the analysis, we can also consider that the PLS path model setups are equal across the five countries. Thus, configural invariance is established.

4.2.2. Compositional Invariance

Compositional invariance assesses if the relationships between indicators and the composite constructs are similar across groups. This step is required to ensure that the constructs are formed in the same way across the countries, which is necessary for comparing results between them [4].

This procedure was performed in paired comparisons. Since we had five countries, we had a total of 10 comparisons. We ran the permutation procedure with 1000 permutations and a 5% significance level for each paired comparison.
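The number of paired comparisons follows from n(n − 1)/2 with n = 5 groups. A minimal sketch enumerating the pairs (the variable names are illustrative):

```python
from itertools import combinations

countries = ["Brazil", "Singapore", "Malaysia", "Estonia", "Mongolia"]

# Every unordered pair of countries is one permutation test.
pairs = list(combinations(countries, 2))

for first, second in pairs:
    print(f"{first} vs. {second}")
print("Number of paired comparisons:", len(pairs))
```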

To assess compositional invariance, we compared the original correlations of composite scores (C) with those generated from the permutation test (Cu). If C is higher than the 5% threshold of Cu, also reflected in non-significant p-values (p > 0.05), compositional invariance is established.

A permutation p-value > 0.05 means that the construct’s composition does not differ significantly between the two groups, so their results can be compared. Table 4 presents the p-values of the comparisons to facilitate the overview of the results.
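The decision rule for a single construct in a single paired comparison can be sketched as below. The sketch assumes that the original correlation C and the permuted correlations Cu have already been produced by the PLS-SEM software; it only applies the 5% quantile rule and derives the corresponding permutation p-value. The function name and the example values are hypothetical.

```python
import numpy as np


def compositional_invariance(c_original, c_permuted, alpha=0.05):
    """Compare the original correlation C with the permutation distribution Cu."""
    c_permuted = np.asarray(c_permuted, dtype=float)
    # Lower alpha-quantile of the permuted correlations (the "5% threshold").
    threshold = float(np.quantile(c_permuted, alpha))
    # Share of permuted correlations that are at most as large as C.
    p_value = float(np.mean(c_permuted <= c_original))
    return {
        "threshold": threshold,
        "p_value": p_value,
        "invariant": bool(c_original >= threshold),
    }


# Hypothetical permutation distribution of 1000 correlations (illustration only).
c_u = np.linspace(0.990, 1.000, 1000)

print(compositional_invariance(0.9980, c_u))  # C above the threshold
print(compositional_invariance(0.9901, c_u))  # C below the threshold
```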

Only three pairs from our samples achieved full compositional invariance: Singapore vs. Brazil, Singapore vs. Estonia, and Mongolia vs. Malaysia. All other paired comparisons had between one and three constructs violating compositional invariance. The violation most commonly happened for the endogenous construct (Trust). The values in bold represent the cases in which compositional invariance was achieved, indicating that they can be compared. For some comparisons, the difference was significant only for Trust but close to the 0.05 threshold. Similarly, some comparisons had only one significantly different construct.

Although full compositional invariance was not achieved in most cases, we proceeded with further analyses to understand the differences between groups in more depth. This decision is based on the fact that the HCTS has already been validated and variations in invariance have been observed across the samples. Furthermore, it aligns with the exploratory nature of the MICOM, allowing us to further examine whether these problems are reflected in the analysis of equal means and variances.

4.3. Equal Mean Values and Variances

The final step of MICOM evaluates whether the mean values and variances of the constructs are equal across different groups [4]. Equal means indicate that the groups have similar tendencies for each construct, and equal variances imply similar dispersion. If the means and variances are considered equal, full measurement invariance is achieved, and the data from different groups can be pooled. It also means that any differences in path coefficients can be interpreted confidently rather than attributed to measurement variability.

Results are calculated similarly to compositional invariance, with p-values > 0.05 indicating that the differences are not significant and that the results can be compared across the groups. Within our samples, no paired comparison presented equal composite means, and the similarities varied considerably between the pairs. Regarding variance, Brazil vs. Estonia, Singapore vs. Malaysia, and Singapore vs. Estonia had equal variances for all constructs. The number of constructs achieving measurement invariance in other paired comparisons varied considerably. Table 5 and Table 6 present overviews of the results, with values in bold representing the cases in which the conditions were satisfied.
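The logic of this step can be sketched as a permutation test on the composite scores of one construct in two groups. This is a simplified illustration rather than the routine implemented in PLS-SEM software: it assumes the composite scores are already available, uses the difference in means and the logarithm of the variance ratio as test statistics, and reports two-sided permutation p-values. The function name, the seed, and the default of 1000 permutations are assumptions for the example.

```python
import numpy as np


def equal_means_and_variances(scores_a, scores_b, n_permutations=1000,
                              alpha=0.05, seed=0):
    """Permutation test for equal composite means and variances in two groups."""
    rng = np.random.default_rng(seed)
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    pooled = np.concatenate([a, b])

    def statistics(x, y):
        mean_difference = x.mean() - y.mean()
        log_variance_ratio = np.log(x.var(ddof=1) / y.var(ddof=1))
        return np.array([mean_difference, log_variance_ratio])

    observed = statistics(a, b)
    permuted = np.empty((n_permutations, 2))
    for i in range(n_permutations):
        # Randomly reassign observations to the two groups, keeping group sizes.
        shuffled = rng.permutation(pooled)
        permuted[i] = statistics(shuffled[:a.size], shuffled[a.size:])

    # Two-sided p-values: share of permuted statistics at least as extreme.
    p_mean, p_variance = (np.abs(permuted) >= np.abs(observed)).mean(axis=0)
    return {
        "p_mean": float(p_mean),
        "p_variance": float(p_variance),
        "equal_means": bool(p_mean > alpha),
        "equal_variances": bool(p_variance > alpha),
    }


# Hypothetical composite scores: same spread, clearly different means.
rng = np.random.default_rng(42)
group_a = rng.normal(loc=0.0, scale=1.0, size=120)
group_b = rng.normal(loc=1.0, scale=1.0, size=120)
print(equal_means_and_variances(group_a, group_b))
```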

To answer our research question, measurement invariance was not achieved for the HCTS in most cases. Partial measurement invariance was achieved for the pairs Singapore vs. Brazil, Singapore vs. Estonia, and Mongolia vs. Malaysia, indicating that only in these cases can the HCTS results be compared, but not pooled.

If measurement invariance is not achieved, comparing path coefficients can be problematic because differences might be due to the measurement varying across groups rather than genuine differences in the relationships between constructs. Nevertheless, based on our exploratory intentions, we analyze the path coefficients. This analysis focuses on cautious relative comparisons, taking into account the results of the MICOM.

4.4. Path Coefficients

We proceed with the path coefficients analysis, considering that our comparisons reached mostly partial compositional invariance. Table 7 presents the path coefficients per country. There, it can be observed that certain countries have higher similarities. For instance, based on the weights of the constructs BEN and COM, it is possible to identify two groups: Brazil, Malaysia, and Mongolia, with a higher weight of BEN over COM, and Singapore and Estonia, with an inverse proportion of these constructs’ weights. Additionally, Figure 2 is included to facilitate the visualization of the results.

To evaluate the significance of the differences, we ran a bootstrapping analysis with 5000 subsamples at a 0.05 significance level. The analysis revealed that the difference was only significant in a few comparisons and on more than two constructs per case. The bootstrapping table with complete results is available in Appendix C (Table A3).
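The idea behind the bootstrapped group comparison can be sketched as follows. As a stand-in for the PLS-SEM path coefficients, the sketch uses standardized regression coefficients estimated on already computed composite scores, and it tests the between-group difference with a percentile bootstrap interval. Both choices are simplifying assumptions for illustration and do not reproduce the multigroup routine of PLS-SEM software; the data are simulated.

```python
import numpy as np


def standardized_slopes(X, y):
    """Standardized regression coefficients of y on the columns of X."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return beta


def bootstrap_difference(Xa, ya, Xb, yb, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the difference in coefficients."""
    rng = np.random.default_rng(seed)
    differences = np.empty((n_boot, Xa.shape[1]))
    for i in range(n_boot):
        # Resample respondents with replacement within each group.
        ia = rng.integers(0, len(ya), len(ya))
        ib = rng.integers(0, len(yb), len(yb))
        differences[i] = (standardized_slopes(Xa[ia], ya[ia])
                          - standardized_slopes(Xb[ib], yb[ib]))
    lower, upper = np.quantile(differences, [alpha / 2, 1 - alpha / 2], axis=0)
    # A difference is flagged when the interval excludes zero.
    significant = (lower > 0) | (upper < 0)
    return lower, upper, significant


# Simulated composite scores: two predictors of Trust in two groups.
rng = np.random.default_rng(1)
Xa = rng.normal(size=(100, 2))
ya = 2 * Xa[:, 0] + 0.1 * rng.normal(size=100)  # group A driven by predictor 1
Xb = rng.normal(size=(100, 2))
yb = 2 * Xb[:, 1] + 0.1 * rng.normal(size=100)  # group B driven by predictor 2

lower, upper, significant = bootstrap_difference(Xa, ya, Xb, yb, n_boot=500)
print(significant)
```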

Therefore, although we identified differences between the groups, the lack of statistical significance indicates that the findings must be interpreted cautiously.

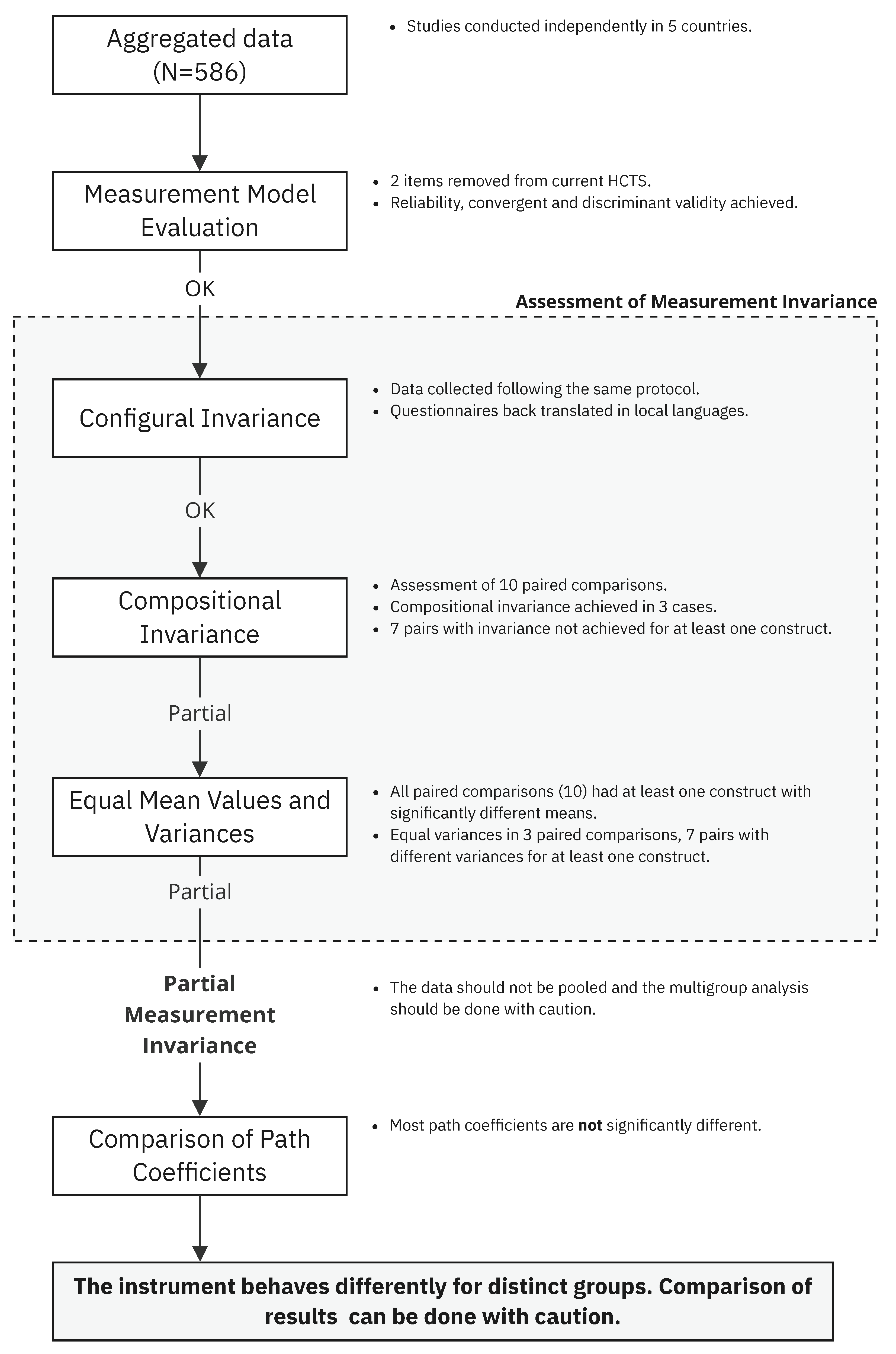

Figure 3 presents the summary of the procedure adopted, highlighting the outcomes of each step. The next section provides a complete reflection of our findings.

5. Discussion

The HCTS is an instrument for assessing trust in technology that has been validated and subsequently applied in various contexts with distinct groups of participants. Although researchers have been careful with the application of the scale, limited attention has been paid to the effects of the context of the evaluation on the instrument’s functioning. The MICOM results demonstrated the procedure’s potential for a deeper understanding of the instrument and for exploring the groups investigated. In this section, we discuss the implications of our findings, aiming to assist other researchers and practitioners in HCI in implementing the procedure.

First, the measurement model evaluation, a prerequisite for the MICOM, revealed that some constructs were problematic in particular samples, pointing towards a review of the scale [25] to ensure higher adequacy in different contexts (in this case, countries). The items with loading inadequacies varied according to the samples, providing initial indications that the scale behaves differently in each country, and not necessarily that there are structural problems with the scale [50], which we further explored with the MICOM. After the two items with persistent issues, COM3 and PR2, were removed, the scale’s reliability and convergent validity were improved, and adequate DV and R² were achieved for all samples, fulfilling the prerequisites for running the MICOM. Furthermore, it supported the assumption that there were problems with these items. As both constructs (COM and PR) remained with two items each, the removal improved the scale’s measurement quality without compromising the constructs’ conceptual meaning.

Thus, the first contribution of this study is the advancement of the HCTS [1], building on the revised version [8]. The revised scale is available in Appendix D (Table A4), and can more consistently be used across different national groups. Insights into the behavior of the HCTS and of the countries investigated are described next.

5.1. Measurement of Invariance Assessment (MICOM)

The first step of the MICOM analysis, configural invariance, assessed the conceptual model structure. This condition was met because the data were collected following the same protocol and analyzed using the same procedures.

The next step, the analysis of compositional invariance, is a requisite for confidently comparing the results between groups. Our empirical research included five samples, so we ran ten paired comparisons. This analysis revealed that 7 out of 10 pairs have significantly different compositions of at least one construct. As shown in Table 4, the invariance was most commonly not achieved for the endogenous construct (Trust). This finding suggests that the differences observed primarily relate to how the constructs affect trust rather than how the items compose the exogenous constructs. From a broader perspective, this result also points to variations in trust formation between the groups compared [8], rather than distinct interpretations of single items.

This result has more serious implications for the HCTS, as the lack of compositional invariance indicates that the constructs are formed differently across groups, and thus, their comparison can be misleading [4], as the differences may be a result of differences in the measurement model.

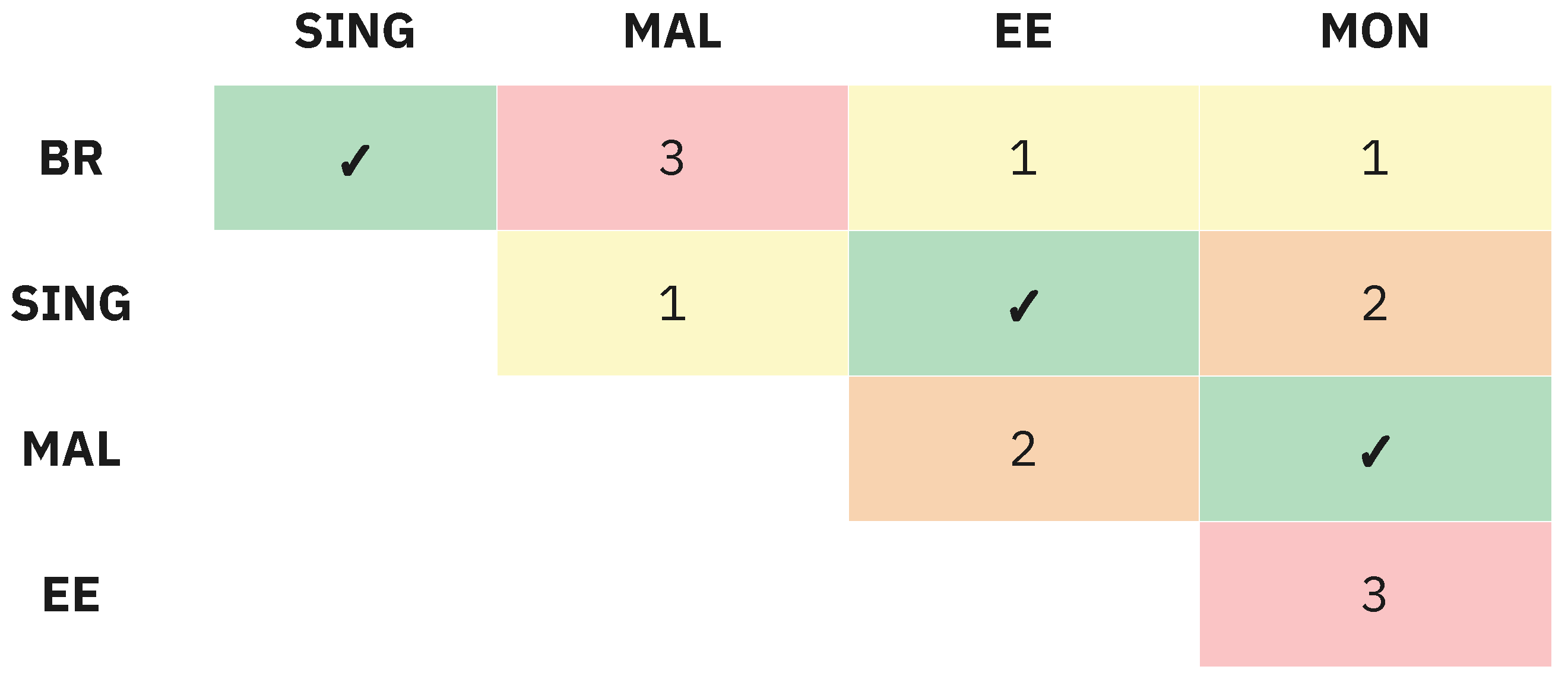

In practical terms, this implies that comparisons between countries can only be confidently conducted between the pairs in which compositional invariance was achieved. Figure 4 summarizes the paired comparisons. The pairs with check marks (✓) achieved compositional invariance. The others have partial compositional invariance, and the numbers in the cells reflect the number of constructs that do not satisfy the condition. For the pairs with partial compositional invariance, only the constructs that satisfy the condition should be compared, as per Table 4.

According to MICOM’s guidelines, the third step of the analysis should only be performed if compositional invariance is achieved [4], which was not the case in our study. Most pairs did not reach compositional invariance, as shown in Table 4. However, three pairs missed the criteria only because of a single borderline value for Trust, while two others showed a violation for a single exogenous construct each. Considering that these results are not so far from meeting the criteria in most cases, we chose to proceed with the analysis. Nevertheless, we stress that this decision deviates from a rigorous MICOM procedure and is justified by our aim to provide further exploratory insights into our work.

In the third and final MICOM step, the assessment of equal means and variances, no pair had equal composite mean values, but three pairs had equal variances. The fact that none of the group pairs had equal mean values indicates that the average scores on the constructs differ between the groups. This suggests that individuals from different countries likely perceive trust in technology differently. For the three pairs that exhibited equal variances (Brazil vs. Mongolia, Singapore vs. Malaysia, and Singapore vs. Estonia), the consistency or spread of responses within those groups is similar. More specifically, the only pair that satisfied the conditions for compositional invariance and equal variances was Singapore vs. Estonia. These results imply that while significant differences exist between the countries, some comparisons remain valid, particularly regarding the relationships between constructs.
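The way the two conditions combine can be made explicit with a small sketch that intersects the pairs listed in this section: those with full compositional invariance and those with equal variances as named in the paragraph above. The variable names are illustrative.

```python
def as_pairs(pair_list):
    """Store each pair as an unordered set so that 'A vs. B' equals 'B vs. A'."""
    return {frozenset(pair) for pair in pair_list}


# Pairs reported with full compositional invariance (MICOM step 2).
compositional = as_pairs([
    ("Singapore", "Brazil"),
    ("Singapore", "Estonia"),
    ("Mongolia", "Malaysia"),
])

# Pairs reported with equal variances for all constructs (MICOM step 3).
equal_variances = as_pairs([
    ("Brazil", "Mongolia"),
    ("Singapore", "Malaysia"),
    ("Singapore", "Estonia"),
])

# Pairs satisfying both conditions.
both = compositional & equal_variances
print([" vs. ".join(sorted(pair)) for pair in both])
```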

Thus, we can assume that the scale has partial compositional invariance across the countries investigated, with the invariance varying between the countries compared. This means that the data cannot be pooled, and comparing the results between countries is feasible with caution [4], considering which specific indicators or constructs differ across the analyzed groups.

Although our results are limited to five countries, the findings provide evidence that it is necessary to further evaluate the behavior of the psychometric instrument (HCTS or others) before making cross-country comparisons, as the differences between the populations can affect the relationship between endogenous and exogenous constructs. In practice, it means that the comparison of results between groups might embed the differences in their interpretation of the constructs.

Interestingly, the study outcomes also enabled us to explore these differences further. The results helped us identify areas where the instrument behaves consistently or inconsistently, serving as a basis for understanding the disparities between groups and improving the accuracy of our trust assessment results. Building on this, we examined the results for each country.

5.2. Effects of National Culture on the HCTS

The path coefficients (Table 7 and Figure 2) point towards the existence of two main groups among the countries. The first includes Brazil, Malaysia, and Mongolia, in which BEN has a considerably higher weight than COM in shaping Trust. Additionally, Malaysia and Mongolia have similar proportions of PR and SA.

Another group can be identified between Singapore and Estonia, where the weights of all constructs follow similar proportions. Most notably, BEN and COM have inverse weights compared to the other group. The bootstrapping results revealed that the differences are not statistically significant in most cases, but this might have been caused by the lack of full measurement invariance, which can affect the validity of the estimations [3].

If analyzed with the MICOM results, we can see that the paired comparisons between Malaysia and Mongolia, and Singapore and Estonia, reached compositional invariance, and Brazil and Mongolia had a borderline result; and Singapore and Estonia have additionally equal variances. These findings suggest that the outcomes of the HCTS for these specific pairs can be compared with greater confidence. Notably, this interpretation prioritizes the triangulation of the methods over the strictness of the thresholds to explore how the procedures applied can be used to explore further differences between the groups, which can lead to practical insights. However, we emphasize that these findings are speculative and should be used to guide further investigations and not generalizations.

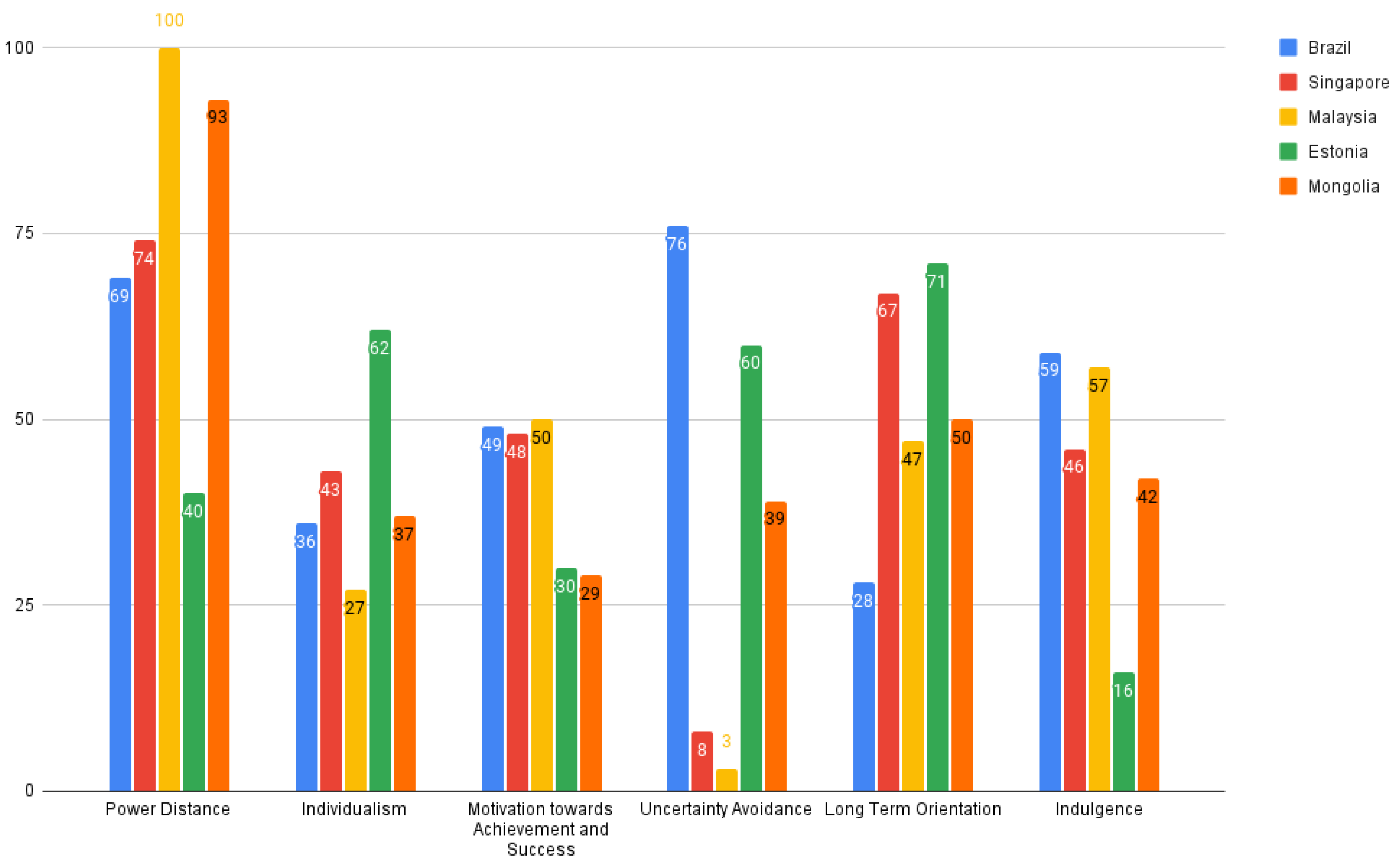

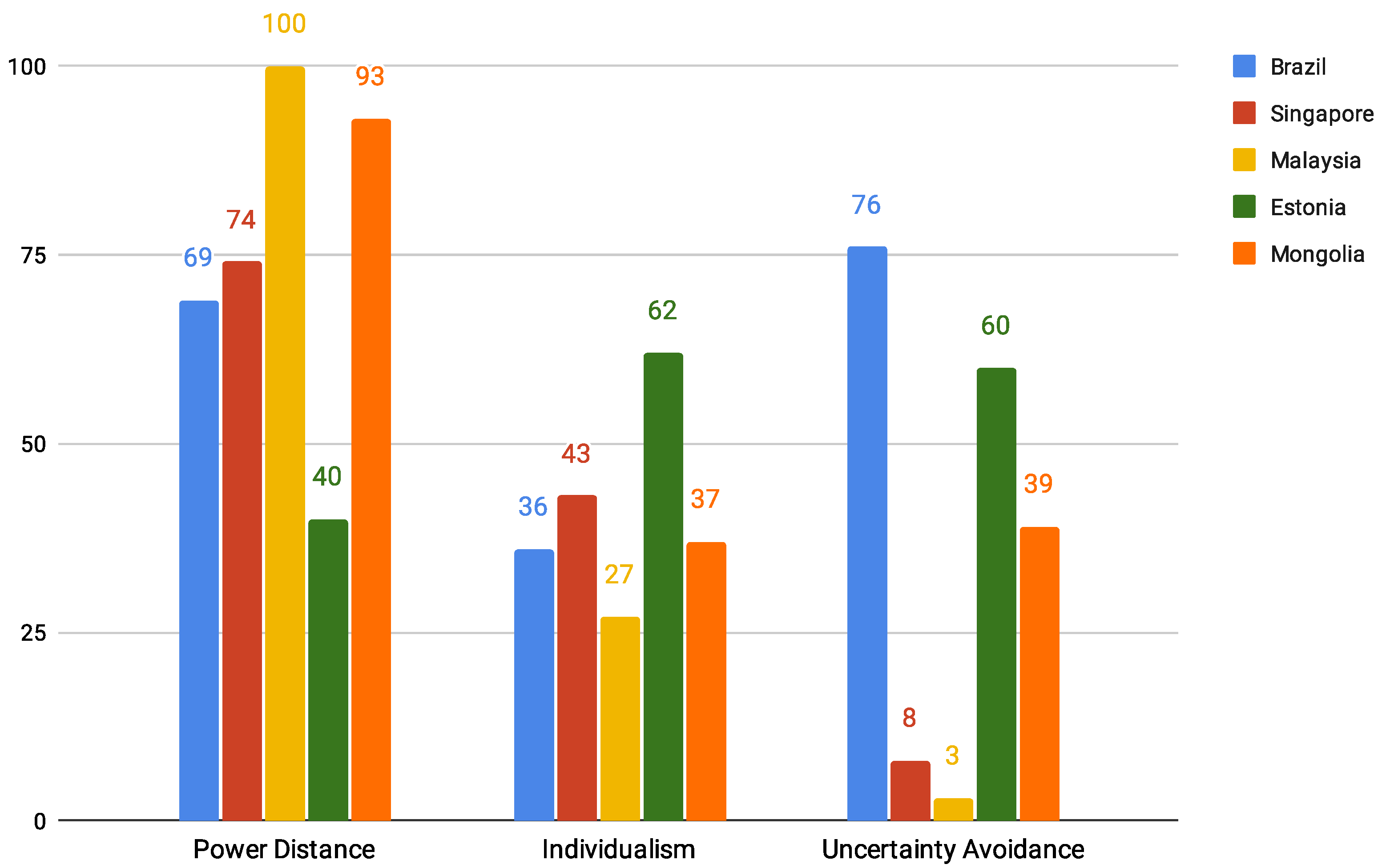

Returning to Hofstede’s cultural dimensions model [37], we observe that it provides limited insight into the groupings. As illustrated in Figure 5, Singapore and Estonia, which show the highest similarities in how the model functions, have notably different scores for Power Distance (Estonia = 40, Singapore = 74) and Uncertainty Avoidance (Estonia = 60, Singapore = 8). While they also differ in Individualism (Estonia = 62, Singapore = 43), both nations have the highest scores among the analyzed countries. One hypothesis is that their higher levels of Individualism foster similar views on autonomy and privacy, which, in turn, may shape their understanding of trust in technology.

Malaysia and Mongolia exhibit close values for Power Distance (Malaysia = 100, Mongolia = 93). This dimension might also be related to these populations’ similar interpretations of trust in technology stemming from analogous views on authority and their acceptance of it. In both cases, the technological object under discussion, FRSs, may have intensified these relationships. However, we stress that these interpretations are speculative, and further studies are needed to investigate these hypotheses, taking into account the unique characteristics of each country as defined by their cultural dimensions or by considering alternative models.

5.3. Implications

Through the implementation of the MICOM, this study demonstrates that the HCTS, an instrument to measure the propensity to trust technology, can behave differently across countries. As such, the main implication of our study is that the comparison of such assessment results between countries may be misleading if these differences are not accounted for. The most rigorous solution to this issue is to follow the MICOM procedure before making such comparisons. However, as this may not always be feasible, other strategies may be adopted to mitigate this issue, such as investigating the differences between the countries through qualitatively oriented approaches.

In addition, the results indicate that the understanding of trust in technology can vary significantly from one country to another, shaped by cultural perceptions and values. This is crucial for HCI because understanding these differences is essential for designing technologies that better align with users’ expectations in various regions.

By highlighting how trust formation varies across cultures, this study also demonstrates how multilayered this topic is. While the focus of our study is to move towards more accurate assessments using the HCTS, the analysis also led to insights about the differences in the meaning of trust in technology for the groups. It is also noteworthy that we approached culture from a single perspective, from the national lens, which is one among numerous ways to investigate culture. Our results underscore the necessity for further cross-cultural investigations, following other approaches.

If we consider emerging discussions about reaching the optimal, not the highest, levels of trust [29], these findings imply that trust calibration mechanisms must be tailored, considering the varying expectations of system performance and reliability. For instance, in Brazil, Malaysia, and Mongolia, mechanisms focused on Benevolence [14], such as providing adequate support or fostering community involvement, could more strongly influence trust. Conversely, Competence plays a greater role in Estonia and Singapore, so demonstrating that the system meets high technical standards, has precision, and is reliable [26] would have more meaningful effects on trust.

Finally, our outcomes demonstrate the complex and contextual nature of trust in technology. While the insights are useful for designing systems that address different concerns, ethical considerations must be prioritized. Knowledge about differences in trust can improve interactions and empower individuals, but it can similarly be used to deceive them and exacerbate existing disparities. This is even more crucial when considering our object of study, FRSs, as this technology has strong social implications. This discussion is beyond the scope of our research, as here, FRSs were used merely as a prompt, and questions regarding their actual implementation require a much more detailed account. Nevertheless, we highlight that researchers and practitioners investigating FRSs, and more generally, trust in technology, must follow ethical practices and commit to respecting fundamental rights.

6. Conclusions

This study contributes to the field of trust in technology by advancing the cross-country validation of the HCTS. By employing the MICOM procedure, we assessed the psychometric properties of the HCTS across five culturally diverse countries: Brazil, Singapore, Malaysia, Estonia, and Mongolia. Our findings revealed partial measurement invariance, indicating that while the instrument shows potential for cross-cultural applications, the results should be interpreted with caution because there are differences in how trust is understood and formed across these groups.

Our results underscore the importance of rigorous validation processes when applying psychometric instruments in cross-cultural contexts. Without such assessments, researchers may draw inaccurate conclusions about trust differences based on measurement variances rather than genuine disparities. The exploratory nature of our approach highlights the challenges of establishing full invariance and demonstrates the method’s potential in identifying patterns among the groups.

Additionally, our outcomes provide evidence of the interplay between national culture and trust in technology, emphasizing the need for future research to move beyond national boundaries to explore other dimensions of culture. While we focused on a single case (facial recognition systems for law enforcement), the findings are also useful for reflecting on the interaction with other applications.

Overall, this study takes a step toward enhancing the robustness and applicability of the HCTS for cross-cultural research and understanding differences in trust in technology across countries. We expect our findings to guide further culturally sensitive research on trust in technology.