Abstract

Intelligent generation technology has been widely applied in the field of design and has become an essential tool for many designers. This study focuses on the paper-cut patterns of Qin Naishiqing, an inheritor of Dong paper-cutting intangible cultural heritage, and explores the AI-assisted generation of Dong paper-cut patterns under designer–AI collaborative control. It proposes a new role for designers in human–AI collaborative design: the “designer-in-the-loop” model. From the perspective of dataset annotation, designers conduct visual feature analysis, shape factor extraction, and semantic factor extraction of paper-cut patterns. They actively participate in dataset construction and annotation, in collaborative control of the generation process (using localized LoRA for detail enhancement and creating controllable collaborative modes through contour lines and structural lines), in the evaluation of generated results, and in iterative optimization. The experimental results demonstrate that the intelligent generation approach under the “designer-in-the-loop” model, combined with designer–AI controllable collaboration, effectively enhances the generation of specific-style Dong paper-cut patterns with limited sample data. This study provides new insights and practical methodologies for the intelligent generation of other stylistic patterns.

1. Introduction

Dong paper-cutting originated in the Dong ethnic communities of Guizhou, Hunan, and Guangxi provinces in China, particularly in areas such as Tongdao County (Hunan), Liping County (Guizhou), and Sanjiang Dong Autonomous County (Guangxi). Dong paper-cutting is not only a folk art form but also a symbol of ethnic memory that has been passed down through generations. It has strong roots in Dong embroidery, serving as an important foundation for the design of Dong embroidery patterns and providing a unique visual representation. In Sanjiang, Dong embroidery is also known as “paper-cut embroidery”, which reflects the central role of paper-cutting in pattern conception and technique.

Qin Naishiqing, born in Sanjiang Dong Autonomous County, is a representative inheritor of Dong embroidery. Her paper-cutting works exhibit highly personal styles, with unique forms, vivid individuality, and graceful smooth lines. Her works show a strong artistic identity that rivals that of outstanding graphic designers. Qin’s paper-cut motifs are primarily inspired by the totemic and nature-based beliefs of the Dong people, deeply rooted in life yet transcending its materials. She presents her love for plants, birds, and other animals through a symbolic lens, creating designs with a spiritual quality. During the paper-cutting process, she incorporates her understanding, emotions, and experiences to form an art style that mixes Dong culture, beliefs, and personal intentions into a symbolic language. This visual presentation has contributed to Qin’s unique artistic charm and emotional appeal.

Qin passed away in 2022. Due to the high demands of time and skills required for learning Dong paper-cutting, fewer artisans are willing to learn the craft, which has led to the risk of Dong paper-cutting being lost. In recent years, with the development of AIGC (AI-generated content) technology, generative design has attracted increasing attention and application from designers. Intelligent generation has been widely integrated into various fields of artistic design, bringing new possibilities for creative exploration, design innovation, and efficiency [1,2,3,4].

Currently, many scholars are researching intelligent generation tools, with most studies focusing on computer experts analyzing different generation models from an algorithmic perspective [5]. Others examine the use and impact of generative tools in various stages of the design process from the designer’s perspective, focusing on the evolving roles of designers in new workflows [6]. AIGC technology is revolutionary, but the point-to-point generation from input to output often fails to align with the creative exploration and cognitive processes inherent in design. Design creation is an iterative process of problem discovery and solution development, representing a co-evolutionary dialogue between designers and intelligent models [7]. Intelligent generation is not a replacement for design work; rather, designers and machines can complement each other through collaboration, enhancing output quality [8]. When designers intervene in the generated output through editable parameters, they are more likely to accept and trust the machine’s output [9,10].

In the collaboration between designers and AIGC, designers are transitioning from traditional creators to creators with management and training skills, presenting new learning opportunities [9]. The selection and aesthetic evaluation of generated results require designers to have excellent taste and decision-making abilities. In the field of graphic intelligent generation, much research has focused on generating full images, emphasizing deep analysis of image content and the semantic interpretation of image elements, both of which are crucial for generation quality [11,12]. However, regarding the generation of small-sample patterns with specific, distinct styles, several questions remain to be explored:

- How should designers participate in dataset construction and annotation?

- How can designers intervene in the model to improve generation quality?

- How should designers evaluate and iterate on generated results?

These issues deserve further exploration and research.

This paper analyzes the visual features and cultural semantics of Dong paper-cutting patterns from the perspective of dataset annotation, proposing a dataset pattern analysis and semantic analysis method led by designers. It primarily explores the “designer-in-the-loop” full-process participation model in the era of generative AI, constructs a human–AI collaborative intelligent generation framework, and investigates the collaborative control methods between designers and intelligent models to generate paper-cutting patterns with both traditional stylistic features and innovative expression. The specific contributions can be summarized as follows:

- The new role of “designer-in-the-loop” in the generative AI era, where designers participate in the entire process of dataset construction, collaborative control of the generation process, evaluation of generated results, and continuous iteration.

- A visual analysis of Dong paper-cutting patterns from the perspective of dataset annotation, proposing methods for designers to analyze and semantically interpret paper-cutting datasets.

- Verification of the intelligent generation model through experiments, exploring methods of collaborative control between designers and AI to generate Dong paper-cutting patterns, including using localized LoRA for detail enhancement and creating controllable collaborative modes through contour lines and structural lines.

2. Related Work

2.1. Dataset Construction and Annotation for Traditional Patterns

In the intelligent generation of traditional patterns, dataset annotation is crucial for both model training and the quality of generation results. A high-quality dataset must not only include a diverse range of pattern samples but also contain precise annotation information that captures the cultural semantics and stylistic features of the patterns. Currently, most datasets are annotated by machine labeling followed by human correction: automatic annotations are first generated by tools such as DeepBooru or WD1.4 Tagger and then manually refined [13,14]. Many researchers have developed intelligent automatic annotation systems for different datasets. For example, some researchers have used multi-class dictionary learning methods for semantic annotation and analysis of ethnic cultural patterns, achieving automatic classification and annotation of the patterns (e.g., “Semantic Annotation of Ethnic Cultural Patterns Based on Dictionary Learning”, Zhao Haiying [15]).
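The machine-labeling-then-human-correction workflow described above can be sketched as a small data transformation. The following is a minimal illustration only: the `Correction` schema, the function name `refine_tags`, and the example tags are hypothetical and are not taken from DeepBooru, WD1.4 Tagger, or any cited work.

```python
from dataclasses import dataclass, field


@dataclass
class Correction:
    """A reviewer's edits to one image's machine-generated tags (hypothetical schema)."""
    remove: set[str] = field(default_factory=set)  # tags judged wrong or unhelpful
    add: list[str] = field(default_factory=list)   # tags the automatic tagger missed


def refine_tags(auto_tags: list[str], correction: Correction) -> list[str]:
    """Apply human corrections to machine tags, keeping order and dropping duplicates."""
    kept = [t for t in auto_tags if t not in correction.remove]
    merged = kept + correction.add
    seen: set[str] = set()
    result: list[str] = []
    for tag in merged:
        if tag not in seen:
            seen.add(tag)
            result.append(tag)
    return result


# Illustrative tags only; real tagger output will differ.
auto = ["monochrome", "animal", "no humans", "flower"]
fix = Correction(remove={"no humans"}, add=["paper-cut style", "symmetrical", "flower"])
print(refine_tags(auto, fix))
# ['monochrome', 'animal', 'flower', 'paper-cut style', 'symmetrical']
```

Keeping the correction as a separate record, rather than overwriting the machine tags, makes it possible to audit what the human reviewer changed for each image.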

Chen Jing from the School of Arts at Nanjing University, in collaboration with major universities, initiated the Traditionow Lab, which has collected and integrated a vast amount of multimedia data on Chinese intangible cultural heritage. They have constructed a knowledge graph based on professional terminology and its network, deeply deconstructing, reproducing, and reorganizing intangible cultural heritage knowledge from the dimensions of craftsmanship, color, and pattern, and established the “Chinese Intangible Cultural Heritage Gene Database”, providing an important foundation for training generative AI models. Similarly, David G. Stork [16] pointed out some challenges in AI semantic extraction methods through research on automatically computing metaphorical meanings of artworks and mentioned that using semantic segmentation methods to annotate the features of artworks can significantly enhance the detail expression capabilities of generative models.

The multidimensional features of cultural relics (including shape, material, decoration, and cultural background) serve as a basis for constructing metaphorical knowledge graphs. By systematically analyzing the relationships between these features, a classification system for cultural relics knowledge can be established, enabling the identification of deeper metaphorical knowledge such as symbolic meaning and cultural connotations. This knowledge graph-based approach provides theoretical support for annotating traditional pattern datasets, facilitating the precise identification and classification of cultural semantics in the patterns, thereby promoting the digital preservation and research of traditional patterns [17].

2.2. Application of Generative AI in Traditional Pattern Design

The application of generative AI technology in traditional pattern design has made significant progress in recent years, offering new possibilities for the digital preservation and innovation of traditional art. Among the mainstream technologies, Generative Adversarial Networks (GANs) have been widely used in the generation research of traditional patterns, such as embroidery, ceramics, and paper cutting. For example, Yongho Kim et al. [18] used CycleGAN to perform style transfer on real images, generating images that resemble the styles used in historical books and successfully integrating tradition with reality through style transfer. Yan, Han, et al. [19] applied style transfer techniques to integrate traditional ceramic patterns into modern decorative designs, demonstrating the potential of generative AI in cross-era design. Additionally, Variational Autoencoders (VAEs) and Diffusion Models have also demonstrated unique advantages in pattern generation [20,21]. VAEs excel in feature extraction and data compression [22], while Diffusion Models are highly effective in generating high-quality images [23]. However, existing research is mainly focused on technological implementation, with limited attention given to collaboration between designers and intelligent generative models. Furthermore, challenges remain in understanding cultural semantics and expressing fine details. For instance, Jiangfeng Liu et al. [24] noted that generative AI struggles to accurately capture the cultural connotations behind traditional patterns, leading to generation outcomes that lack cultural accuracy. To address these limitations, this paper proposes a controllable collaborative method between designers and intelligent generative models, specifically targeting small-sample Dong paper-cut patterns. This approach aims to explore methods for improving cultural semantic understanding and detail expression in generative AI, offering new insights for the digital preservation and innovation of traditional pattern design.

3. Study Methods

3.1. Dataset Visual Feature Analysis and Annotation from the Designer’s Perspective

In the intelligent generation of traditional patterns, the involvement of designers is crucial, especially during the dataset annotation phase, which aligns with the “designer-in-the-loop” paradigm. In this paradigm, designers approach the task by focusing on the overall visual features of the dataset, conducting systematic and comprehensive analyses, and completing annotations based on the significance of these features. The sample size of Qin’s Dong paper-cut pattern dataset is relatively limited, making it especially important to ensure the quality of annotation to extract effective features from this limited sample [25]. Consequently, this study investigates the visual features of paper-cut patterns from a design perspective and optimizes the machine-generated annotation. This dual-stage processing approach enhances annotation quality while maintaining efficiency, providing reliable data support for subsequent research.

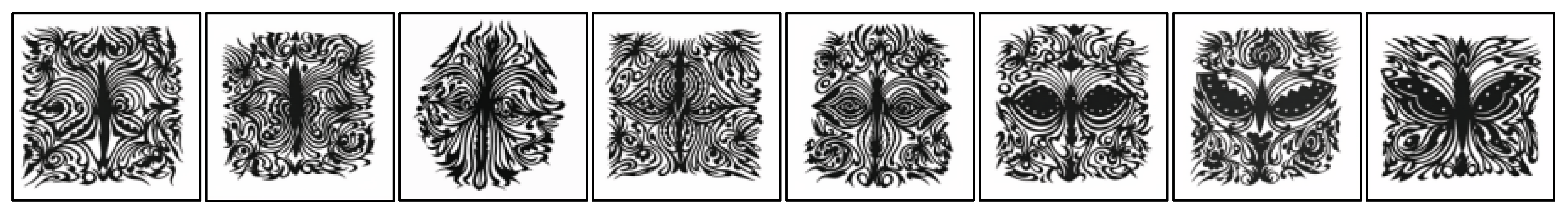

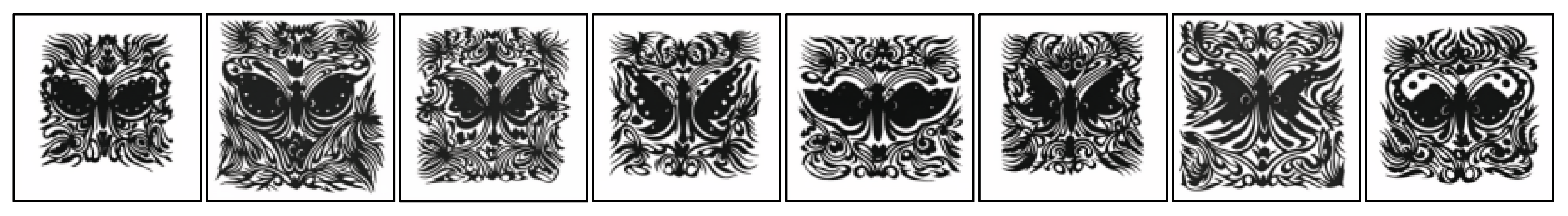

Qin’s Dong paper-cut patterns mainly include animal patterns, plant patterns, and totem patterns. Animal patterns feature motifs such as fish, birds, butterflies, roosters, and horses; plant patterns include banyan trees, gourds, and lotus flowers; and totem patterns incorporate sunflowers, phoenixes, and spider flower patterns. These patterns reflect the Dong people’s wishes for peace, happiness, and a better life, serving as artistic interpretations of life, nature, and belief. When analyzing the visual features from a design perspective, the focus is on “form” and “meaning”. By interpreting the “form”, one can understand the “meaning” and “significance” embedded in the patterns. Before generating paper-cut patterns, it is necessary to analyze the visual features of the patterns and extract meaningful graphical shapes and structures, and the symbolic meanings they convey, including form, style, metaphor, symbolism, etc. [18]. This process guides and supports dataset annotation and evaluation during the intelligent generation process, as illustrated in Figure 1.

Figure 1.

Visual feature design analysis map based on dataset annotation.

3.1.1. Shape Factor Extraction for Qin Naishiqing’s Paper-Cut Patterns Based on Data Annotation

The shapes and lines of paper-cut patterns are the core of the design. The arrangement, size, and proportional relationships of these elements directly affect the aesthetics, balance, and style of the patterns [26]. In Qin Naishiqing’s 130 paper-cut works, we focused on analyzing the shape elements and lines, extracting and summarizing the most recognizable features. These features are primarily reflected in the pattern’s shape factors, which can be considered the reusable “language” of the pattern [11]. The use of reusable language helps create consistent styles. These lines and shape elements can be combined and applied in various ways across different contexts, resulting in diverse works that maintain consistent stylistic characteristics.

During the process of analyzing Qin’s paper-cut patterns (lines), we selected 25 representative works for analysis. The patterns were categorized by their subject matter: 5 were plant-based, namely, 4 floral patterns and 1 gourd pattern; 20 were animal-based, with 7 dragon patterns, 4 phoenix patterns, 3 butterfly patterns, 3 shrimp patterns, 3 bird patterns, and 1 lion pattern. As shown in Figure 2 and Table 1, from these 25 patterns, 43 single-line structure patterns, 20 double-line structure patterns, and 20 multi-line structure patterns were extracted.

Figure 2.

Schematic diagram of pattern structure splitting and extraction.

Table 1.

Structure line extraction and examples.

A single-line structure refers to the most basic pattern involving one or several lines, with relatively simple arrangements. A double-line structure consists of two or more interwoven lines, creating additional layers and details. A multi-line structure involves a higher number of lines and greater complexity, with combinations of multiple sets of lines. By extracting single, double, and multi-line structures, we aim to ensure that, in the later generation process, single- and double-line structures appear more diversely within the same generated pattern, rather than merely repeating the single structure patterns. Additionally, we decomposed the structure of animal patterns, extracting 6 head patterns, 17 body patterns, 2 foot patterns, and 9 tail patterns. This decomposition aims to better control the generated shapes through dynamic poses in the intelligent generation process. This is detailed in Table 2.

Table 2.

Trunk pattern extraction and examples.

3.1.2. Extraction of Semantic Factors in Qin Naishiqing’s Paper-Cut Patterns Based on Data Annotation

The extraction of semantic factors aims to identify the hidden meanings, symbols, and cultural connotations embedded within the patterns, serving as implicit features to interpret their style and symbolic significance [16,27]. In the context of Dong culture, certain symbols carry specific meanings. Through discussions with Qin, we explored her interpretations and understanding of pattern semantics, as well as how she infused specific meanings and emotions into her designs during the creative process.

Based on this understanding, our research team—consisting of eight designers and three Dong artisans—classified and summarized the semantic factors from multiple perspectives, including visual symbolism, regional culture, and totemic worship.

For the extraction of semantic factors in paper-cut patterns, we conducted an analysis of three primary categories: animals, plants, and religious symbols (as shown in Table 3). From this analysis, we distilled various symbolic meanings associated with the patterns, including auspiciousness, peace, happiness, love, freedom, fertility, power, strength, hope, harmony, health, and life. These insights help ensure that during interpretation, preservation, and AI generation, the cultural connotations and symbolic significance of these patterns are accurately conveyed [28]. Furthermore, this process contributes to the generation of high-quality paper-cut patterns that retain meaningful semantic factors.

Table 3.

Symbolic meaning of patterns.

Table 4.

Experimental researcher information.

Table 5.

Results generated in the pre-experiments.

3.2. Intelligent Generation Model for Dong Paper-Cut Patterns

In graphic intelligent generation models, Generative Adversarial Networks (GANs) are currently the mainstream approach [26,29,30]. These models learn the artistic expression methods of sample patterns through adversarial training, enabling the generation of entirely new patterns based on the extracted features. However, this method requires substantial training data [31]. For Dong paper-cut patterns, which exhibit specific stylistic characteristics, the dataset is limited in size, varies in dimensions, and lacks strong regularity. Although data augmentation techniques can be applied to expand the dataset, excessive repetition often leads to generating patterns that lack meaningful novelty [32].

Stable Diffusion, a diffusion-based image generation model, can produce high-quality, high-resolution images by simulating a diffusion process that gradually transforms a noise image into a target image [33]. This model is characterized by its high stability and controllability, making it a valuable tool for designers [34]. It enables the generation of diverse and high-quality images, the restoration of damaged images, the enhancement of image resolution, and the application of specific segmentation techniques, thereby facilitating advanced creative visual expression [35]. Given the importance of preserving traditional Dong paper-cut art and maintaining the distinctive stylistic features of Qin’s patterns, this study adopts the Stable Diffusion model for pattern generation.
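To make the diffusion process mentioned above more concrete, the following sketch shows the forward (noising) direction used by standard diffusion models: a clean image is mixed with Gaussian noise according to a schedule, and the generative model is trained to reverse this process. It is a simplified illustration in pixel space; Stable Diffusion itself operates on latent representations, and the linear schedule values and array sizes here are assumptions chosen for illustration.

```python
import numpy as np


def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar[t]) * x0 + sqrt(1 - alpha_bar[t]) * eps."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps


# Linear noise schedule (illustrative values, not taken from the paper).
betas = np.linspace(1e-4, 0.02, 1000)
alpha_bar = np.cumprod(1.0 - betas)  # cumulative signal retention per step

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a normalized image

for t in (0, 499, 999):
    x_t, _ = forward_diffuse(x0, t, alpha_bar, rng)
    # The signal coefficient shrinks toward zero as t grows, so x_t approaches pure noise.
    print(t, float(np.sqrt(alpha_bar[t])))
```

Generation runs this process in reverse: starting from noise, a trained network removes a small amount of predicted noise at each step until an image emerges.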

Due to the limited dataset of Dong paper-cut patterns—comprising just over a hundred images—it is insufficient to support the retraining of foundational models such as Stable Diffusion or contrastive text–image pretraining models like CLIP. Direct fine-tuning of the base model presents two key challenges: (1) Fine-tuning such a large-scale model requires significant computational resources, including time and memory, posing a major challenge for researchers and institutions [36]; and (2) with an extremely small dataset, fine-tuning can lead to overfitting, which may degrade the pre-trained model’s generalization capability and disrupt its prior knowledge [14].

To address these challenges, this study utilizes a lightweight Low-Rank Adaptation (LoRA) model to learn the specific style of Dong paper-cut patterns within a limited dataset. LoRA offers several advantages, including low parameter requirements, minimal training data needs, and reduced hardware demands, making it feasible to train on consumer-grade GPUs. Importantly, LoRA does not alter the parameters of the base model, thereby preserving the original generative capabilities of Stable Diffusion.
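The low parameter requirement can be illustrated with simple arithmetic. For a single linear layer with a d × k weight matrix, full fine-tuning updates d·k values, whereas a rank-r LoRA update trains only r·(d + k) values. The layer size and rank below are hypothetical and are not the settings used in this study.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full, lora) trainable parameter counts for one d x k linear layer."""
    full = d * k        # updating the weight matrix directly
    lora = r * (d + k)  # one d x r matrix plus one r x k matrix
    return full, lora


# Hypothetical layer size and rank, chosen only for illustration.
full, lora = lora_param_counts(d=768, k=768, r=8)
print(full, lora, f"{lora / full:.2%}")  # 589824 12288 2.08%
```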

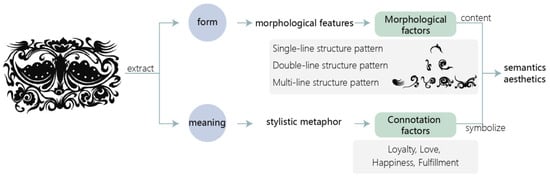

In our experiments, we fine-tuned an open-source Stable Diffusion model using LoRA. The structure of the LoRA model [37,38] is illustrated in Figure 3.

Figure 3.

LoRA model structure.

The experiments were conducted on a desktop computer equipped with a 13th Gen Intel(R) Core(TM) i5-13490F CPU, an NVIDIA GeForce RTX 4070 GPU (NVIDIA Corporation, Santa Clara, CA, USA), and 16 GB of RAM. Figure 3 shows the overall structure and working principle of the model.

The image on the left is the overall flowchart of the model. The model takes text or images as conditional inputs, and after feature extraction through the conditional encoder, these inputs are fed into the model. The blue box represents the pre-trained Stable Diffusion model, whose parameters remain fixed during fine-tuning. The red box represents the LoRA model, whose parameters are learned during fine-tuning. By adjusting the output of the pre-trained model, the LoRA model adapts it to the task of generating Dong paper-cut designs.

The image on the right illustrates the principle of embedding the LoRA model into the pre-trained Stable Diffusion model. The LoRA model is loaded onto the linear layer of the pre-trained model ($W_0 \in \mathbb{R}^{d \times k}$) by introducing low-rank matrices $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ (where $r$ is the rank, the weights of $A$ are initialized as $\mathcal{N}(0, \sigma^2)$, and $B$ is initialized to zero). This process decomposes and adjusts the original weight matrix. After modulation by LoRA, the input feature $x$ generates the output feature $h$, thus enabling dynamic adjustments to the output of the pre-trained model.

The fine-tuning process is represented by Formula (1):

$$h = W_0 x + BAx \qquad (1)$$

where $x$ is the input feature, $h$ represents the final model output after fine-tuning with the LoRA model, $W_0 x$ is the output of the initial base model, and $BAx$ is the output of the LoRA model, with $BA$ being the low-rank update to the weight matrix. Intuitively, the fine-tuning process adds the LoRA model’s output to the base model’s original output. During training, the base model parameters $W_0$ are frozen, and only the low-rank matrices $B$ and $A$, which contain a minimal number of parameters, are trained. This approach preserves the generative capabilities of the base model while enabling the learning of Dong paper-cut styles at a lower computational cost.
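The standard LoRA update, h = W0·x + B·A·x, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than the training code used in the experiments: the class name `LoRALinear`, the layer dimensions, and the initialization scale `sigma` are hypothetical. Because B starts at zero, the adapted layer initially reproduces the frozen base layer exactly.

```python
import numpy as np


class LoRALinear:
    """A frozen linear layer W0 with a trainable low-rank update B @ A."""

    def __init__(self, w0, r, rng, sigma=0.01):
        d, k = w0.shape
        self.w0 = w0                                  # frozen base weights (d x k)
        self.a = rng.normal(0.0, sigma, size=(r, k))  # trainable, Gaussian init (sigma assumed)
        self.b = np.zeros((d, r))                     # trainable, zero init

    def __call__(self, x):
        # h = W0 x + B (A x); computing A x first avoids forming the d x k product.
        return self.w0 @ x + self.b @ (self.a @ x)


rng = np.random.default_rng(0)
w0 = rng.standard_normal((4, 6))  # hypothetical 4 x 6 base layer
x = rng.standard_normal(6)

layer = LoRALinear(w0, r=2, rng=rng)
print(np.allclose(layer(x), w0 @ x))  # True: B = 0, so the output equals the base output

layer.b[:] = 0.5                      # stand-in for a training update to B
print(np.allclose(layer(x), w0 @ x))  # False in general: the low-rank term now contributes
```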

Additionally, to further enhance control over the generated results, we integrate ControlNet to impose additional constraints during the generation process. By specifying key points, structural frameworks, or silhouettes, ControlNet enables finer control over the output, thereby improving artistic quality and stylistic accuracy [39].
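Silhouette- or contour-based control requires a line image to condition on. As a rough illustration of how such a control image might be derived, the sketch below extracts the outline of a binary silhouette with NumPy. It assumes the paper-cut has already been binarized into a foreground mask; the function name `contour_map` is hypothetical, and this is not necessarily the preprocessing used in this study (edge detectors such as Canny are a common alternative).

```python
import numpy as np


def contour_map(mask: np.ndarray) -> np.ndarray:
    """Mark foreground pixels that touch the background (4-neighbourhood)."""
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, constant_values=False)  # treat outside the image as background
    # A pixel is interior when its up, down, left, and right neighbours are all foreground.
    interior = (
        padded[:-2, 1:-1] & padded[2:, 1:-1] & padded[1:-1, :-2] & padded[1:-1, 2:]
    )
    return mask & ~interior


# A 3 x 3 filled square inside a 5 x 5 canvas as a toy silhouette.
silhouette = np.zeros((5, 5), dtype=bool)
silhouette[1:4, 1:4] = True
print(contour_map(silhouette).astype(int))
```

In the toy example, the eight border pixels of the square are kept and the single interior pixel is dropped, leaving only the outline.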

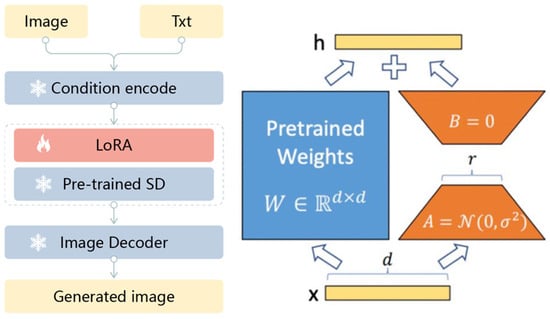

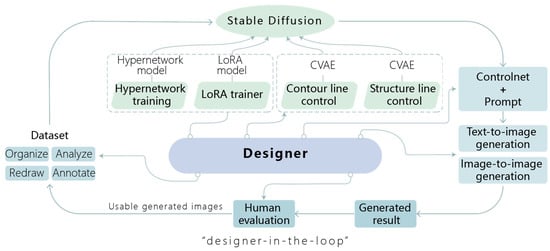

3.3. Designer Participation in the Dong Paper-Cutting Intelligent Generation Workflow

During the research on the intelligent generation of Qin Naishiqing’s paper-cutting patterns, the research team was actively involved in the construction and annotation of the dataset in the initial stages. By systematically analyzing the visual characteristics of the patterns, the team fine-tuned a pre-trained generative model using the LoRA approach to learn and capture the unique style of the paper-cut patterns. Simultaneously, the team explored interactive control mechanisms using contour lines and structural lines as key visual elements to refine the generative output. This approach further enhanced the artistic expression and stylistic consistency of the generated patterns.

Additionally, the research team actively participated in evaluating generated patterns. Through comprehensive assessments of the outputs, the team iteratively optimized the annotated dataset, interactive control parameters, and text prompts, leading to continuous improvements in the generation results. Figure 4 illustrates the collaborative workflow between designers and the intelligent generation model for Dong paper-cutting. It provides a detailed breakdown of the designer’s role in the pattern generation process and their interaction with the AI system.

Figure 4.

Flowchart of designer participation in the intelligent generation of Dong paper-cutting.

3.4. Experiment

3.4.1. Participants

As shown in Table 4, the research team comprised eight members, including four PhD holders and four graduate students, with an equal gender distribution of four males and four females. The team members represented diverse academic backgrounds spanning visual design, industrial design, and computer science. Specifically, the team included three visual designers, three industrial designers, and two software engineers. The researchers with design backgrounds had over seven years of experience in artistic design, while the engineers with computing backgrounds had more than eight years of relevant professional experience.

The research team was actively involved in key stages of the study, including dataset analysis, experimental design, and generation evaluation. Furthermore, the pre-experiment result analysis and finalization of the experimental plan were collaboratively conducted by all eight team members, ensuring the rigor of the research process and the reliability of the results.

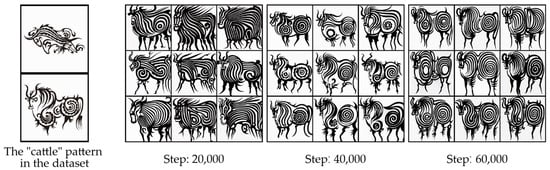

3.4.2. Pre-Experiment Process and Analysis

The research team collected a total of 130 paper-cut artworks by Qin Naishiqing. Prior to conducting the pre-experiment, the team performed model training experiments using the original dataset, testing training steps at 20,000, 40,000, and 60,000 iterations to determine the optimal number of training steps.

Through a comparative analysis of the generated results (Figure 5), the team observed that at 40,000 training steps, the generated patterns exhibited significantly better shape integrity and line clarity than those trained with other step counts. In contrast, the model trained with 60,000 steps showed evident overfitting. Consequently, the subsequent pre-experiments (1–4) and the formal experiment were all conducted using 40,000 training steps. This choice ensured that the model maintained high artistic expressiveness in generating patterns while mitigating the adverse effects of overfitting.

Figure 5.

Generation results at different numbers of training steps.

Data Organization and Annotation

The experiment commenced with the systematic organization of the collected Qin Naishiqing paper-cut patterns, resulting in a dataset comprising 130 raw images. The research team utilized tools such as DeepBooru and WD1.4 Tagger to automatically annotate the images, ensuring consistency and accuracy in the data annotation process. To facilitate a comprehensive comparative analysis of the experimental results, the study was based on the Stable Diffusion (SD) model and conducted experiments using both text-to-image and image-to-image modules. The theme “cattle” was chosen for the experiment, with a unified guiding prompt applied for generating the patterns.

Pre-experiment Design and Dataset Optimization

The study conducted four rounds of pre-experiments, progressively optimizing the dataset and annotation strategies to enhance the quality of AI-generated paper-cut patterns:

Pre-experiment 1: The 130 raw images collected were used directly without any analysis or processing. Automatic annotation tools were employed to generate labels, and the experiment was conducted based on these annotations.

Pre-experiment 2: From the original dataset, 27 paper-cut patterns related to animal themes were selected, and the animal attributes of each image were explicitly labeled during the annotation process. For example, patterns featuring “cattle” were labeled with the tag “Tag: cattle”.

Pre-experiment 3: In the annotation of the 27 Zodiac animal patterns, the animal attribute words were removed and replaced with stylistic descriptors to explore the impact of stylized annotations on the generation results.

Pre-experiment 4: A subset of 100 high-quality patterns was selected from the original dataset and the generation results of pre-experiments 1 to 3 to create a new sample dataset. To further diversify the data, the research team performed horizontal flipping on these 100 patterns, resulting in an augmented dataset of 200 patterns. Additionally, all attribute content words were removed from the annotations, retaining only stylistic descriptors. The generation results from the four pre-experiments are presented in Table 5, illustrating the optimization effects achieved at each stage.
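The dataset preparation in Pre-experiment 4 (horizontal flipping and removal of content words from the annotations) can be sketched as follows. This is a minimal illustration: the `CONTENT_TAGS` vocabulary, the function names, and the dummy images are hypothetical, and the actual tag lists used by the research team are not reproduced here.

```python
import numpy as np

# Hypothetical vocabulary of content (subject) tags to strip from annotations.
CONTENT_TAGS = {"cattle", "fish", "bird", "butterfly", "dragon"}


def style_only(tags: list[str]) -> list[str]:
    """Keep stylistic descriptors and drop content words."""
    return [t for t in tags if t not in CONTENT_TAGS]


def augment_with_flips(images, captions):
    """Return each image plus its horizontal mirror, with content tags removed."""
    out_images, out_captions = [], []
    for img, tags in zip(images, captions):
        cleaned = style_only(tags)
        out_images.extend([img, np.fliplr(img)])       # original and mirrored copy
        out_captions.extend([cleaned, list(cleaned)])  # both share the style-only tags
    return out_images, out_captions


# 100 dummy grayscale images standing in for the selected patterns.
images = [np.zeros((64, 64), dtype=np.uint8) for _ in range(100)]
captions = [["cattle", "paper-cut style", "symmetrical"] for _ in range(100)]

aug_images, aug_captions = augment_with_flips(images, captions)
print(len(aug_images), aug_captions[0])  # 200 ['paper-cut style', 'symmetrical']
```

Horizontal flipping doubles the sample count without introducing new shapes, which is consistent with the later observation that generated images remained similar to the fixed shapes in the dataset.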

Evaluation and Optimization

The experimental results were evaluated through expert interviews to assess their artistic expressiveness and cultural alignment. Based on the feedback, adjustments were made to the dataset, labels, and prompt configurations.

In Pre-experiment 2, the text-to-image generation was significantly influenced by the labeled tags. Specifically, when the dataset included samples tagged with “cattle”, the AI tended to focus on these keywords, leading to overfitting in terms of the generated shape and pattern details, which resulted in overly uniform and repetitive designs. These findings indicate that the label-based approach may constrain diversity in generated results.

In Pre-experiment 3, although the experiment focused on animal patterns, some of the animal motifs were not the most representative of traditional paper-cut styles, which limited the model’s ability to recognize distinctive pattern features. Additionally, the small sample size further restricted the model’s ability to generalize and generate diverse outputs that reflect the full range of traditional design motifs.

In Pre-experiment 4, the use of a larger and more refined dataset led to significant improvements. The text-to-image generation produced more varied shapes and intricate patterns, with a more distinct style. However, the model remained influenced by the fixed shapes within the dataset, leading to high similarity among generated images. While the richness of the patterns improved compared to the previous experiments, some repetition remained in the generated results.

Through four rounds of iterative optimization in pre-experiments, the research team finalized the formal experiment’s dataset, label system, and prompt configurations, establishing a robust foundation for subsequent research. These findings further indicate that iteratively expanding the dataset with high-quality patterns selected from generated results could enhance output diversity, helping the model to produce more culturally accurate and artistically rich patterns.

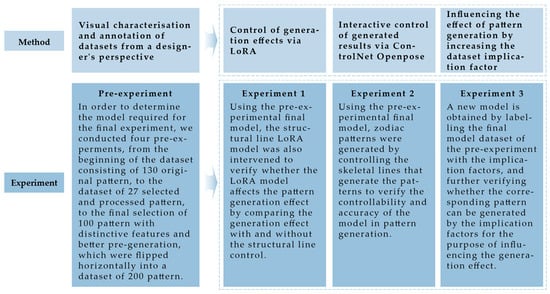

3.4.3. Experimental Methodology and Framework

Based on the results of the pre-experiments, the research team finalized the optimized dataset and labeling strategy. To further enhance the controllability and artistic richness of the generated results, the team integrated the LoRA model with contour and structural lines for interactive control, enabling fine-tuned adjustments throughout the generation process.

Additionally, leveraging semantic analysis from the previous data annotation phase, the team introduced semantic factors to guide the generation process. This ensured that the generated patterns not only adhered to the required visual style but also embodied deeper cultural meanings. The generated results were systematically analyzed to evaluate their artistic expressiveness and cultural coherence. The corresponding experimental framework is illustrated in Figure 6, which outlines the entire workflow, from data preprocessing to the final evaluation of the generated results.

Figure 6.

Experimental design for collaborative generation of patterns between designers and AI.

To investigate the controllable generation of Qin’s Dong paper-cutting patterns, the study followed a structured experimental framework encompassing dataset construction, model training, and controlled generation strategies. The full experimental workflow is illustrated in Figure 6.

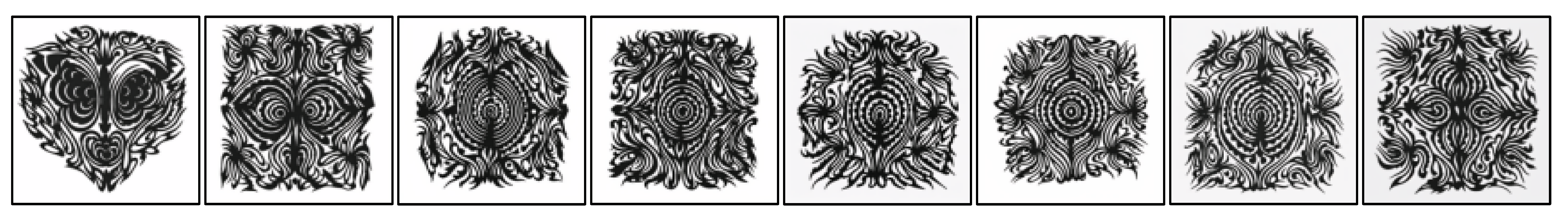

LoRA Integration for Detail Enhancement

A comparative analysis of the pre-experiment results and representative samples from the original dataset revealed deficiencies in the richness of generated pattern details. Taking the “cattle”-themed paper-cut pattern as an example, the original dataset exhibited a high degree of diversity and complexity in the use of single-structure, double-structure, and multi-structure lines. A systematic analysis of the dataset’s visual characteristics revealed that pattern diversity and stylization were primarily determined by structural factors. By extracting and summarizing shape elements and line structures from 25 of the most representative paper-cut patterns by Qin Naishiqing, the research team identified 43 single-structure lines, 20 double-structure lines, and 20 multi-structure lines. To further examine the impact of structure lines on the generation process, three independent LoRA models were trained, one each for the single-line, double-line, and multi-line structures. These LoRA sub-models were subsequently applied to the base model, enabling controlled generation and systematic exploration of how different structural lines affect the final output.

Contour and Skeletal Line-Based Control

In the generation of the “cattle” pattern, designers often require precise control over key structural characteristics, such as the orientation of the cattle’s head, body posture, and limb movements. To accommodate this requirement, the research team conducted experiments to examine the influence of skeletal lines on pattern generation. The twelve zodiac animals were categorized into two groups: (1) animals with a complete skeletal structure, including a head and four limbs and (2) animals without a complete skeletal structure, such as those with no limbs or only two limbs.

For zodiac animals with a full skeletal structure, the research team applied a consistent skeletal framework to generate diverse patterns, ensuring structural uniformity across different animals. In contrast, for zodiac animals without a complete skeletal structure, such as the dragon, the team experimented with multiple skeletal lines spliced into curved forms to explore alternative generative possibilities.

Semantic Factor Integration and Prompt Design

In the visual analysis of Dong ethnic paper-cut patterns, the research team examined not only morphological factors but also semantic factors, systematically exploring their significance. From the perspective of pattern generation, incorporating meaning-driven prompts to produce paper-cut designs that convey specific cultural connotations holds both artistic and practical value. Building upon the pre-experiment dataset, the research team assigned semantic factor labels to each pattern, constructing an enriched dataset for further model training. Using this enhanced model, the team tested and compared the generation effects of single-word semantic prompts versus composite semantic prompts. For example, in the case of the “butterfly” motif, 40 images were generated for each prompt type, from which 8 representative images were selected for comparative analysis.
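The comparison between single-word and composite semantic prompts can be sketched as follows. This is an illustrative assumption about how such prompts might be assembled: the function names, the style phrase, the seed scheme, and the semantic terms ("blessing", "harmony", "abundance") are placeholders and are not taken from the study. Only the motif and the count of 40 images per prompt type come from the text.

```python
def build_prompt(motif, semantic_terms, style="Dong paper-cut pattern"):
    """Join the style phrase, the motif, and any semantic terms into one prompt."""
    return ", ".join([style, motif, *semantic_terms])


def generation_jobs(prompt, n_images=40, base_seed=0):
    """Pair the prompt with n_images distinct seeds so each run is reproducible."""
    return [{"prompt": prompt, "seed": base_seed + i} for i in range(n_images)]


# Placeholder semantic terms for illustration only; not taken from the study.
single = build_prompt("butterfly", ["blessing"])
composite = build_prompt("butterfly", ["blessing", "harmony", "abundance"])

jobs = generation_jobs(single) + generation_jobs(composite)
print(single)
print(composite)
print(len(jobs))  # 80 jobs: 40 per prompt type
```

Fixing the seeds for both prompt types keeps the comparison focused on the prompt wording rather than on sampling noise.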

4. Results

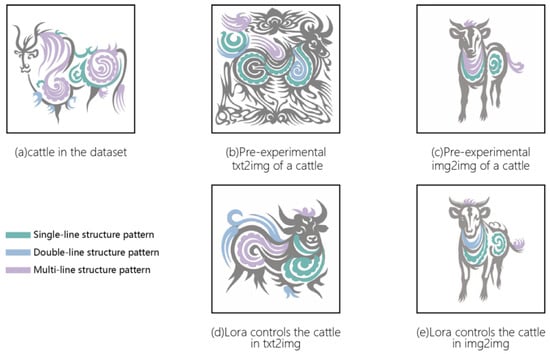

4.1. Enhancing Generation Quality Through LoRA Control

As illustrated in Figure 7, the original “cattle” patterns achieved a layered and visually engaging effect through the interweaving and overlapping of multi-structure lines. However, in the generated patterns, the application of structural lines remained simplistic, failing to fully capture the delicacy and refined craftsmanship characteristic of traditional paper-cut art.

Figure 7.

Structural line resolution of cattle in the original dataset and in AI generation.

Table 6 presents a comparative analysis of the generated results with and without LoRA model control. The findings indicate a significant difference between the two approaches: when the LoRA-controlled structure lines were incorporated, the artistic expressiveness of the generated patterns was substantially enhanced. The optimal results, in terms of detail fidelity and overall composition, were achieved when the three LoRA models were applied with an equal weight of 0.2 during generation. This finding provides crucial insights into parameter optimization for future experiments while also validating the effectiveness of LoRA models in controlling pattern generation and enhancing artistic quality.

Table 6.

Experiment 1—LoRA control experiment.
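To make the equal-weight setting concrete, the sketch below merges three adapters into a base weight matrix at a weight of 0.2 each. It assumes the standard LoRA formulation, in which each adapter adds a low-rank update B·A to a pretrained weight. The 2×2 matrices are toy values rather than the study's parameters, and the additional alpha/rank scaling applied by real tools is omitted for brevity.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_loras(base, adapters):
    """Return base + sum(weight * (B @ A)) over all adapters.

    `adapters` is a list of (weight, B, A) tuples, where B is (out x r)
    and A is (r x in), so that B @ A has the same shape as `base`.
    """
    merged = [row[:] for row in base]
    for weight, b, a in adapters:
        delta = matmul(b, a)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += weight * value
    return merged


base = [[1.0, 0.0], [0.0, 1.0]]                # toy 2x2 "pretrained" weight
single = (0.2, [[1.0], [0.0]], [[1.0, 0.0]])   # toy rank-1 adapter: single-line
double = (0.2, [[0.0], [1.0]], [[0.0, 1.0]])   # toy rank-1 adapter: double-line
multi = (0.2, [[1.0], [1.0]], [[1.0, 1.0]])    # toy rank-1 adapter: multi-line

merged = merge_loras(base, [single, double, multi])
print(merged)
```

Because the updates are additive, raising one adapter's weight shifts the output toward that line structure, which is why the per-adapter weight is a natural tuning parameter.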

4.2. Generation Control Based on Skeletal Lines

Table 7 presents the generation results for the twelve zodiac animals. The experimental findings demonstrate that incorporating skeletal lines enabled the generation of new patterns that aligned with the stylistic characteristics of Qin Naishiqing’s paper-cut art. The resulting forms and movements closely adhered to the constraints set by the skeletal lines, confirming that skeletal structures can effectively guide the generation process in large models, enabling precise control over the final outputs. Additionally, the experiment demonstrated the potential of skeletal-line control in pattern generation, offering designers greater creative flexibility and enhanced control over the artistic outcome.

Table 7.

Experiment 2—Skeleton line control experiment.

4.3. Incorporating Semantic Factors for Enhanced Generation

The detailed results are presented in Table 8. The experimental results indicate that integrating semantic factors effectively enhances the cultural expressiveness of generated patterns. Moreover, composite semantic prompts significantly outperform single-word prompts in terms of diversity and artistic expression. This discovery introduces a novel approach for cultural-semantic control in pattern generation, while also validating the critical role of semantic factors in the digital preservation and transmission of traditional motifs.

Table 8.

Experiment 3—Connotation factor control experiment.

4.4. Evaluation and Analysis of Generated Results

The Dong ethnic paper-cut patterns by Qin Naishiqing exhibit distinct artistic characteristics, making the preservation of stylistic continuity, detailed expression, and innovative presentation the core focus of this study. In the process of intelligent generation of paper-cut patterns, a key challenge lies in achieving creative expression while maintaining the original artistic style.

To assess the artistic quality of the generated results, this study adopts the expert evaluation method. The evaluation panel consists of eight experts, including scholars and visual designers with extensive research experience on Qin’s paper-cutting style. These experts possess in-depth knowledge of the stylistic traits, structural language, and decorative elements of Qin’s work.

The evaluation process integrates semi-structured interviews with discussion-based analysis, allowing experts to examine the generated images through in-depth dialogue. The assessment covers images generated across multiple experimental stages, from which the eight patterns that best demonstrate artistic expression were selected. These selected images are then systematically analyzed based on the following five key dimensions:

- Stylistic Consistency: Evaluates whether the generated patterns accurately replicate the overall style of Qin’s paper-cuts, including composition methods, line characteristics, and visual expressiveness. The consensus among all eight experts indicated that the generated patterns generally maintained Qin’s stylistic legacy, with the pre-identified visual stylistic factors (e.g., symmetrical topology, symbolic zoomorphic motifs) being consistently preserved. As noted by E3: “The style and morphology of the generated zodiac patterns align precisely with Qin’s signature aesthetic”. However, critical feedback emerged regarding the fluidity of curvilinear forms. Expert E6 emphasized: “The transitions at curve inflection points lack the rhythmic cadence inherent to manual paper-cutting, appearing overly smoothed and mechanically rigid”. This highlights a key challenge in emulating the organic tension between scissors, paper, and artisan gestures through computational generation.

- Detail Expression: Evaluates whether the generated patterns effectively depict intricate details and successfully incorporate common decorative elements found in Dong paper-cutting, such as floral motifs and geometric patterns. Regarding the dimension of detail expression, five of the eight experts agreed that the generated patterns from Experiment 1, after incorporating LoRA training, exhibited a significantly higher level of detail richness compared to the outputs from the preliminary experiment. However, several experts also raised concerns. Expert E2 noted: “Although the details are rich, their distribution is overly uniform and lacks the rhythmic structure found in the original dataset”. Expert E4 suggested that “more openwork (cut-out) areas could be introduced”, while Expert E8 commented that “some detailed segments lack the structural linkage typically found in traditional paper-cutting”. These insights highlight the importance of not only increasing detail density but also enhancing the spatial rhythm and structural coherence of those details. Future work will focus on refining prompt design and LoRA fine-tuning to improve the rhythmic placement of detailed elements, as well as exploring new annotation strategies to better capture the linkage logic of traditional paper-cut motifs.

- Form Integrity: Assesses whether the structure of the zodiac motifs is clear and proportionally balanced, ensuring alignment with the visual norms and aesthetic standards of paper-cut art. All eight experts affirmed that the generated representations of the twelve zodiac animals exhibited coherent proportions and avoided visual distortions or awkward anatomical configurations. The overall compositions were considered visually harmonious, successfully reflecting the aesthetic sensibilities inherent in Dong paper-cutting traditions. However, Expert E8 raised a specific concern regarding anatomical precision, stating that “the depiction of animal limbs lacks structural accuracy, sometimes resulting in the presence of redundant or excessive lines”. This observation points to occasional inconsistencies in the model’s interpretation of limb articulation, which may detract from the overall visual integrity of the pattern. These findings suggest that while the general form and composition of the motifs are effectively captured, further refinement is needed in the depiction of specific structural elements—particularly limb outlines. In future iterations, targeted annotation of limb structures and the incorporation of refined skeletal line controls could help reduce unnecessary linework and enhance anatomical clarity, thereby strengthening the structural fidelity of the generated motifs.

- Innovation: Examines whether the generated patterns, while retaining traditional stylistic elements, introduce new artistic expressions, demonstrating advancements in shape, structure, or detail design. Overall, the evaluation revealed that the AI-generated patterns succeeded in maintaining the core visual language of traditional Dong paper-cutting while simultaneously integrating new visual elements. The motifs appeared more enriched, with enhanced decorative layers and a more dynamic expression in background line compositions. Expert D2 specifically noted that “the patterns, while preserving traditional stylistic elements, introduce new visual effects, resulting in more elaborate motif compositions and background linework that conveys a stronger sense of expressive tension”. This suggests that the model, especially after LoRA-based fine-tuning, is capable of facilitating stylistic innovation within the boundaries of cultural continuity.

- Cultural Appropriateness: Determines whether the generated results accurately reflect the cultural essence of Dong paper-cutting, adhering to its traditional aesthetics and artistic conventions. All eight experts agreed that the generated patterns effectively capture the cultural spirit embodied in Qin’s unique paper-cutting style. The intricate motif details, the depiction of zodiac animals, and the overall composition approach were found to align closely with the stylistic characteristics of Qin’s work. This strong stylistic alignment can be attributed in part to the early-stage implementation of the controllable collaborative interaction model between designers and AI-generated systems. By involving designers directly in the loop, particularly in data labeling, prompt optimization, and structural guidance, the model successfully embedded Qin’s artistic style into the generative process.

Each iteration of the evaluation and analysis process provides valuable insights for refining intelligent generation strategies. Moreover, it substantiates the effectiveness of LoRA training models and dataset optimization in controlling style and fostering innovation. The findings highlight that a well-structured style control strategy can preserve the core essence of traditional paper-cut art while enabling more creative visual expressions. This research contributes to the digital innovation of paper-cutting art.

5. Discussion

5.1. The New Positioning of Designers in the AIGC Era: “Designer-in-the-Loop”

In the AIGC era, the role of the designer has fundamentally changed. The designer is deeply involved throughout dataset iteration, control of the generation process, and the evaluation and optimization of the results, and is therefore no longer a creator in the traditional sense but rather a key decision maker integrated into the whole generation process. This model can be defined as “Designer-in-the-loop”, i.e., designers play the central role of guidance, intervention, and optimization in the closed-loop system of AI generation. This shift not only answers the question of the position of designers in the era of generative AI but also signals a change in the skill set that AIGC requires of designers. Instead of relying only on manual creation, designers need composite capabilities such as data thinking, model regulation, algorithm understanding, and intelligent generation and optimization. This change prompts us to rethink the training mode of designers in the era of AIGC and explore how to build the core competence of designers in the context of the deep integration of AI into design.

5.2. Dataset Labeling Strategy

In the process of using Stable Diffusion to generate specific styles of paper-cut patterns, dataset quality is a critical factor in ensuring optimal results. This is particularly crucial when constructing small-sample datasets, where high-quality data not only enhance the model’s learning capability but also directly impact stylistic consistency and detail richness in the generated patterns. Therefore, in Qin’s generative paper-cutting experiments, the dataset labeling approach is a key determinant in shaping the final output, ensuring that the model effectively captures and reproduces essential artistic elements.

During the label assignment process, multiple aspects must be carefully considered, including stylistic characteristics, semantic expression, and visual presentation. A precise and descriptive approach, aligned with the artistic traits of paper-cutting, is essential to ensure meaningful classification and optimal model training [40]. Experimental results indicate that avoiding direct labeling of content attributes and instead adopting stylistic descriptors significantly enhances the diversity of generated patterns. For instance, explicitly labeling an image with “cattle” or another specific animal attribute often causes the model to rigidly adhere to the predefined shape characteristics, thereby restricting the creative variation of the generated paper-cut patterns. Conversely, employing style-based descriptors (such as “symmetrical”, “hollowed-out”, and “streamlined”) allows the model to learn the abstract visual features of paper-cut art more freely, resulting in greater diversity and stronger stylization in the generated patterns.

Furthermore, incorporating visual analysis dimensions and introducing semantic elements in the labeling process can further enhance the symbolic significance of the generated paper-cut patterns. This approach not only facilitates the creation of culturally meaningful and symbolically rich paper-cut designs but also expands the potential applications of AI-generated paper-cutting in diverse artistic and cultural contexts.
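The style-descriptor labeling strategy discussed in this subsection can be illustrated with a small caption filter. This is a sketch under stated assumptions: the `CONTENT_TAGS` vocabulary and the `qin_papercut` trigger word are hypothetical, and the study's actual annotation tooling is not described in the text. Only the example tags ("cattle", "symmetrical", "hollowed-out", "streamlined") come from the discussion above.

```python
CONTENT_TAGS = {"cattle", "butterfly", "dragon"}  # hypothetical content vocabulary


def style_only_caption(tags, content_tags=CONTENT_TAGS, trigger="qin_papercut"):
    """Drop content tags and keep style descriptors, prefixed by a trigger word."""
    kept = [t.strip().lower() for t in tags]
    kept = [t for t in kept if t and t not in content_tags]
    # Preserve the original order while removing duplicates.
    unique = list(dict.fromkeys(kept))
    return ", ".join([trigger] + unique)


caption = style_only_caption(["cattle", "symmetrical", "hollowed-out", "streamlined"])
print(caption)  # qin_papercut, symmetrical, hollowed-out, streamlined
```

Keeping the content vocabulary as an explicit set makes it easy for a designer to review which tags are withheld from training captions and to adjust that list between iterations.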

5.3. Controllable Collaborative Interaction Model Between Designers and AI-Generated Systems

In the intelligent generation of paper-cut patterns, enhancing the Stable Diffusion model’s ability to control fine details remains a key challenge in AI-assisted design. This study adopts LoRA technology to enhance the detailed expression of generated patterns, thereby improving the model’s controllability and artistic expressiveness.

To enrich the detail quality of paper-cut designs, the research team conducted a preliminary visual analysis and identified three structural categories in Qin’s paper-cut patterns: single-line structures, double-line structures, and multi-line structures. Independent LoRA models were trained for each category to strengthen the expressive quality of the generated results. Through continuous parameter tuning and iterative optimizations, the research team successfully improved the final outputs, making the line details more layered and refined in alignment with the aesthetic logic of traditional paper-cut art.

In Experiment 2, the study further introduced contour lines and skeletal lines as control variables to examine their controllability and applicability in the generation process. The results indicated that contour line control significantly impacts the morphology of the final paper-cut patterns, contributing to greater stylistic stability. By adjusting the proportion of structural lines in LoRA training samples, diverse stylistic characteristics can be achieved in the generated designs. In human figures and symmetrical patterns, skeletal lines exhibited strong controllability, facilitating the construction of compositional frameworks.

However, in the zodiac-themed paper-cut generation experiments, skeletal line control presented certain limitations. For example, some zodiac animals, such as snakes, lack a standard skeletal structure comparable to that of humans. When subjected to skeletal constraints, these patterns failed to conform to ideal curvilinear forms, ultimately compromising the quality of the generated patterns.

By integrating contour lines and skeletal lines into a controllable collaborative model, designers can actively intervene in and guide the AI-generated output, ensuring that the final paper-cut designs align more closely with their creative intentions. This approach not only enhances the controllability of the Stable Diffusion model but also strengthens the designer’s role in shaping the generative results, fostering a deeper synergy between human creativity and AI.

Moreover, developing controllable collaboration models enables designers to participate more consistently and reliably in the intelligent generation process. By embedding designers in critical stages such as semantic prompt engineering, structural input control (e.g., contour and skeletal lines), and iterative evaluation, the generative process becomes more transparent and interpretable. This transparency not only helps demystify the black-box nature of deep generative models but also empowers designers to actively shape and steer the outcomes according to their creative vision and cultural expertise. However, while these advancements enhance control, their potential impact on design thinking and creative exploration remains an open question for future research.

6. Limitations

During dataset labeling, semantic factors extracted from the initial visual analysis were incorporated as metadata in the dataset. However, throughout the training process, it was observed that Stable Diffusion, influenced by its pretrained large-scale model, sometimes struggled to generate patterns with specific symbolic meanings efficiently. This issue may arise from the quantity and organization of dataset labels. A key challenge for future research is to improve the description of symbolic meanings in dataset labels and to integrate symbolic terms into prompt engineering to generate patterns with richer semantic content. These findings provide insights into structuring symbolic labels and guiding phrases to enhance meaningful pattern generation.

Additionally, in the collaborative process between designers and the intelligent generation of Dong paper-cut patterns, further advancements are needed to enhance the designer’s control over the generation model. Future research may explore the development of a ControlNet model specifically designed to regulate animal skeletal structures or apply skeletal constraints using curved lines. Such advancements could potentially improve the controllability and precision of generated patterns.

7. Conclusions

This study focuses on an intelligent generation method for Dong paper-cut patterns within an AI-assisted design framework, exploring data-driven optimization strategies from the designer’s perspective. A novel dataset annotation approach that integrates both visual and semantic factors is proposed, enhancing the model’s ability to learn the stylistic features of traditional paper-cut designs.

In the context of human-AI collaborative generation, the study introduces the concept of “Designer-in-the-loop”, a new role for designers that emphasizes deep involvement throughout the entire process—from dataset annotation and model controllability optimization to results evaluation and iterative refinement. This approach aims to achieve more precise and culturally meaningful intelligent generation.

Using Stable Diffusion as a case study, the research systematically presents the iterative process of generating Dong paper-cut patterns and explores the collaborative control model between designers and AI. Techniques such as LoRA-based detail enhancement, contour line control, and symbolic prompt engineering are investigated to improve the quality and stylistic expressiveness of generated results. These methods not only increase AI’s adaptability in paper-cut art generation but also enhance designer control and trust in AI-assisted creative workflows.

The findings of this study hold significant cultural value, expanding the application boundaries of AI-generated content (AIGC) in the digital preservation of traditional craftsmanship. Additionally, this research contributes to the field of intangible cultural heritage digitization by proposing a novel human-AI collaboration framework. The insights gained provide valuable references for future stylized pattern generation and lay the groundwork for further advancements in AIGC applications, including heritage conservation, digital art creation, and intelligence-assisted design.

Furthermore, significant progress has been made in advancing the interactive collaboration between designers and AI. The implementation of a controllable collaborative interaction model, alongside the designer-in-the-loop framework, not only empowered designers with more nuanced control and real-time feedback mechanisms but also demonstrated how semantic and structural inputs can form an effective interactive language. These advancements enhance both the transparency and trust in AI-assisted creation, offering practical pathways for the future development of intelligent co-creation systems in cultural and artistic domains.

Nevertheless, the relatively limited size of the Dong paper-cut dataset presents challenges for model generalization. To address this limitation, future research may explore more diversified data augmentation strategies—such as rotation, cropping, and noise injection—to increase data variability. Additionally, applying transfer learning techniques, such as pretraining on other traditional motif datasets and fine-tuning for the Dong style, could help mitigate overfitting and further improve the quality and cultural fidelity of generated patterns.
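The augmentation strategies named above (rotation, cropping, and noise injection) can be sketched on a toy binary grid, where 1 stands for paper and 0 for a cut-out. This is a minimal illustration using only the standard library. The grid, the 5% flip probability, and the function names are assumptions, and a real pipeline would operate on image files with an imaging library.

```python
import random


def rotate90(image):
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Return the height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def add_noise(image, flip_prob, rng):
    """Flip each binary pixel (0 = cut-out, 1 = paper) with probability flip_prob."""
    return [[1 - p if rng.random() < flip_prob else p for p in row] for row in image]


def augment(image, rng):
    """Produce rotated, cropped, and noisy variants of one pattern."""
    h, w = len(image), len(image[0])
    return {
        "rotated": rotate90(image),
        "cropped": crop(image, 0, 0, h - 1, w - 1),
        "noisy": add_noise(image, 0.05, rng),
    }


pattern = [
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [1, 0, 1, 1],
    [1, 1, 1, 1],
]
variants = augment(pattern, random.Random(0))  # fixed seed for reproducibility
for name, grid in variants.items():
    print(name, grid)
```

Since orientation and symmetry can carry meaning in paper-cut motifs, a designer would likely need to review which transformations preserve the intended style before adding the variants to the training set.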

Author Contributions

Conceptualization, Y.X. and X.L.; Methodology, Y.X. and X.L.; Software, J.Q. and B.M.; Validation, X.L., J.Q. and B.M.; Formal analysis, X.L.; Investigation, X.L.; Resources, T.J.; Data curation, J.Q.; Writing—original draft, X.L.; Writing—review & editing, X.L.; Visualization, H.G.; Supervision, Y.X.; Project administration, T.J.; Funding acquisition, Y.X. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Education Humanities and Social Sciences Research Project (Grant No. 22YJC760049), the Hunan Provincial Department of Education Scientific Research Fund (Grant No. 23B0206), Natural Science Foundation of Hunan Province (Grant No. 2025JJ50400), and Hunan Lushan Lab Research Funding (Grant No. Z202333452590).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SD | Stable Diffusion |
| AIGC | Artificial Intelligence-Generated Content |
| LoRA | Low-Rank Adaptation of Large Language Models |

References

- Meron, Y. Graphic Design and Artificial Intelligence: Interdisciplinary Challenges for Designers in the Search for Research Collaboration. In Proceedings of the DRS2022, Bilbao, Spain, 25 June–3 July 2022. [Google Scholar]

- Shi, Y.; Shang, M.; Qi, Z. Intelligent layout generation based on deep generative models: A comprehensive survey. Inf. Fusion 2023, 100, 101940. [Google Scholar] [CrossRef]

- Xu, Z.; Zhao, W.; Zong, J.; Li, X. Research on Generating Naked-Eye 3D Display Content Based on AIGC. Electronics 2025, 14, 744. [Google Scholar] [CrossRef]

- Kim, M.; Kim, T.; Lee, K.-T. 3D Digital Human Generation from a Single Image Using Generative AI with Real-Time Motion Synchronization. Electronics 2025, 14, 777. [Google Scholar] [CrossRef]

- Yue, X.; Li, H.; Fujikawa, Y.; Meng, L. Dynamic Dataset Augmentation for Deep Learning-Based Oracle Bone Inscriptions Recognition. J. Comput. Cult. Herit. 2022, 15, 1–20. [Google Scholar] [CrossRef]

- Chong, L.; Kotovsky, K.; Cagan, J. Human Designers’ Dynamic Confidence and Decision-Making When Working with More Than One Artificial Intelligence. J. Mech. Des. 2024, 146, 081402. [Google Scholar] [CrossRef]

- Lawton, T.; Grace, K.; Ibarrola, F.J. When Is a Tool a Tool? User Perceptions of System Agency in Human–AI Co-Creative Drawing. In Proceedings of the 2023 ACM Designing Interactive Systems Conference, Pittsburgh, PA, USA, 10–14 July 2023; ACM: New York, NY, USA, 2023; pp. 1978–1996. [Google Scholar]

- Jin, X.; Dong, H.; Evans, M.; Yao, A. Inspirational Stimuli to Support Creative Ideation for the Design of Artificial Intelligence-Powered Products. J. Mech. Des. 2024, 146, 121402. [Google Scholar] [CrossRef]

- Figoli, F.; Rampino, L.; Mattioli, F. AI in Design Idea Development: A Workshop on Creativity and Human-AI Collaboration. In Proceedings of the DRS2022, Bilbao, Spain, 25 June–3 July 2022. [Google Scholar]

- Chong, L.; Raina, A.; Goucher-Lambert, K.; Kotovsky, K.; Cagan, J. The Evolution and Impact of Human Confidence in Artificial Intelligence and in Themselves on AI-Assisted Decision-Making in Design. J. Mech. Des. 2023, 145, 031401. [Google Scholar] [CrossRef]

- Murray-Rust, D.; Nicenboim, I.; Lockton, D. Metaphors for Designers Working with AI. In Proceedings of the DRS2022, Bilbao, Spain, 25 June–3 July 2022. [Google Scholar]

- Van der Burg, V.; Akdag Salah, A.; Chandrasegaran, S.; Lloyd, P. Ceci n’est pas une chaise: Emerging practices in designer-AI collaboration. In Proceedings of the DRS2022, Bilbao, Spain, 25 June–3 July 2022. [Google Scholar]

- Vinayavekhin, P.; Khomkham, B.; Suppakitpaisarn, V.; Codognet, P.; Terada, T.; Miura, A. Identifying Relationships and Classifying Western-Style Paintings: Machine Learning Approaches for Artworks by Western Artists and Meiji-Era Japanese Artists. J. Comput. Cult. Herit. 2024, 17, 1–18. [Google Scholar] [CrossRef]

- Hanh, L.D.; Bao, D.N.T. Autonomous Lemon Grading System by Using Machine Learning and Traditional Image Processing. Int. J. Interact. Des. Manuf. 2023, 17, 445–452. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, H.; Jia, G.; Zheng, Q.; Wang, S. Semantic Annotation of National Cultural Patterns Based on Dictionary Learning. Sci. Sin. Informationis 2019, 49, 172–187. [Google Scholar] [CrossRef]

- Stork, D.G. Automatic Computation of Meaning in Authored Images Such as Artworks: A Grand Challenge for AI. J. Comput. Cult. Herit. 2022, 15, 1–11. [Google Scholar] [CrossRef]

- Li, J.; He, M.; Yang, Z.; Siu, K.W.M. Anthropological Insights into Emotion Semantics in Intangible Cultural Heritage Museums: A Case Study of Eastern Sichuan, China. Electronics 2025, 14, 891. [Google Scholar] [CrossRef]

- Kim, Y.; Im, C.; Mandl, T. Object Detection in Historical Images: Transfer Learning and Pseudo Labelling. J. Comput. Cult. Herit. 2024, 17, 1–15. [Google Scholar] [CrossRef]

- Yan, H.; Zhang, H.; Liu, L.; Zhou, D.; Xu, X.; Zhang, Z.; Yan, S. Toward Intelligent Design: An AI-Based Fashion Designer Using Generative Adversarial Networks Aided by Sketch and Rendering Generators. IEEE Trans. Multimed. 2023, 25, 2323–2338. [Google Scholar] [CrossRef]

- Yang, H.; Yang, H.; Min, K. Artfusion: A Diffusion Model-Based Style Synthesis Framework for Portraits. Electronics 2024, 13, 509. [Google Scholar] [CrossRef]

- Bao, Q.; Zhao, J.; Liu, Z.; Liang, N. AI-Assisted Inheritance of Qinghua Porcelain Cultural Genes and Sustainable Design Using Low-Rank Adaptation and Stable Diffusion. Electronics 2025, 14, 725. [Google Scholar] [CrossRef]

- Zhou, Z.; Liu, X.; Shang, J.; Huang, J.; Li, Z.; Jia, H. Inpainting Digital Dunhuang Murals with Structure-Guided Deep Network. J. Comput. Cult. Herit. 2022, 15, 1–25. [Google Scholar] [CrossRef]

- Liu, C.; Wang, D.; Zhao, Z.; Hu, D.; Wu, M.; Lin, L.; Liu, J.; Zhang, H.; Shen, S.; Li, B.; et al. SikuGPT: A Generative Pre-Trained Model for Intelligent Information Processing of Ancient Texts from the Perspective of Digital Humanities. J. Comput. Cult. Herit. 2024, 17, 1–17. [Google Scholar] [CrossRef]

- Liu, J.; Ma, X.; Wang, L.; Pei, L. How Can Generative Artificial Intelligence Techniques Facilitate Intelligent Research into Ancient Books? J. Comput. Cult. Herit. 2024, 17, 1–20. [Google Scholar] [CrossRef]

- Jung, S.; Heo, Y.S. Temporal Adaptive Attention Map Guidance for Text-to-Image Diffusion Models. Electronics 2025, 14, 412. [Google Scholar] [CrossRef]

- Wu, X.; Li, L. An Application of Generative AI for Knitted Textile Design in Fashion. Des. J. 2024, 27, 270–290.

- Cui, J.; Tang, M.-X. Integrating Shape Grammars into a Generative System for Zhuang Ethnic Embroidery Design Exploration. Comput.-Aided Des. 2013, 45, 591–604.

- Yin, Y.; Ding, S.; Zhang, X.; Wang, C.; Li, X.; Cai, R.; Shou, Y.; Qiu, Y.; Chai, C. Cultural Product Design Concept Generation with Symbolic Semantic Information Expression Using GPT. In Proceedings of the DRS2024, Boston, MA, USA, 23–28 June 2024.

- Cheng, K.; Neisch, P.; Cui, T. From Concept to Space: A New Perspective on AIGC-Involved Attribute Translation. Digit. Creat. 2023, 34, 211–229.

- Carrasco, M.; González-Martín, C.; Navajas-Torrente, S.; Dastres, R. Level of Agreement between Emotions Generated by Artificial Intelligence and Human Evaluation: A Methodological Proposal. Electronics 2024, 13, 4014.

- Subbaraman, B.; Shim, S.; Peek, N. Forking a Sketch: How the OpenProcessing Community Uses Remixing to Collect, Annotate, Tune, and Extend Creative Code. In Proceedings of the 2023 ACM Designing Interactive Systems Conference, Pittsburgh, PA, USA, 10–14 July 2023; ACM: New York, NY, USA, 2023; pp. 326–342.

- Liu, L.; Tong, D.; Shao, W.; Zeng, Z. AmazingFT: A Transformer and GAN-Based Framework for Realistic Face Swapping. Electronics 2024, 13, 3589.

- Goyal, M.; Mahmoud, Q.H. A Systematic Review of Synthetic Data Generation Techniques Using Generative AI. Electronics 2024, 13, 3509.

- Li, Y.; Li, Y.; Yan, W.; Yang, F.; Ding, X. Advancing Design with Generative AI: A Case of Automotive Design Process Transformation. In Proceedings of the DRS2024, Boston, MA, USA, 23–28 June 2024.

- Lu, Y.; Wu, J.; Wang, M.; Fu, J.; Xie, W.; Wang, P.; Zhao, P. Design Transformation Pathways for AI-Generated Images in Chinese Traditional Architecture. Electronics 2025, 14, 282.

- Sbai, O.; Elhoseiny, M.; Bordes, A.; LeCun, Y.; Couprie, C. DesIGN: Design Inspiration from Generative Networks. In Computer Vision—ECCV 2018 Workshops; Leal-Taixé, L., Roth, S., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11131, pp. 37–44. ISBN 978-3-030-11014-7.

- Van Der Burg, V.; De Boer, G.; Akdag Salah, A.A.; Chandrasegaran, S.; Lloyd, P. Objective Portrait: A Practice-Based Inquiry to Explore AI as a Reflective Design Partner. In Proceedings of the 2023 ACM Designing Interactive Systems Conference, Pittsburgh, PA, USA, 10–14 July 2023; ACM: New York, NY, USA, 2023; pp. 387–400.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685v1. Available online: https://openreview.net/pdf?id=nZeVKeeFYf9 (accessed on 14 March 2025).

- Muller, M.; Chilton, L.B.; Kantosalo, A.; Martin, C.P.; Walsh, G. GenAICHI: Generative AI and HCI. In Proceedings of the CHI Conference on Human Factors in Computing Systems Extended Abstracts, New Orleans, LA, USA, 27 April 2022; ACM: New York, NY, USA, 2022; pp. 1–7.

- Wang, L.; Hu, Y.; Xia, Z.; Chen, E.; Jiao, M.; Zhang, M.; Wang, J. Video Description Generation Method Based on Contrastive Language–Image Pre-Training Combined Retrieval-Augmented and Multi-Scale Semantic Guidance. Electronics 2025, 14, 299.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).