1. Introduction

The image captioning task integrates computer vision and natural language processing. It aims to enable a computer to recognize the content of a given image and automatically generate natural language text that describes it, thereby converting information from the visual modality to the linguistic one. The task is highly challenging because it requires not only the accurate identification of objects, their actions, and their attributes, but also an understanding of the relationships between objects and the generation of grammatically correct descriptions. Image captioning has broad application prospects in human–computer interaction, image retrieval, and navigation for the visually impaired, which makes it a research topic with significant practical value [1].

Currently, most of the image captioning methods are based on the encoder–decoder framework. Early studies typically employed a Convolutional Neural Network (CNN) as the encoder to extract visual features from the image and used a Recurrent Neural Network (RNN) as the decoder to generate the description statement. However, these methods have shortcomings in capturing long-distance dependencies. In recent years, the Transformer architecture has become the mainstream approach for image captioning due to its strong ability to model long-distance dependencies and associate contextual information [

2,

3,

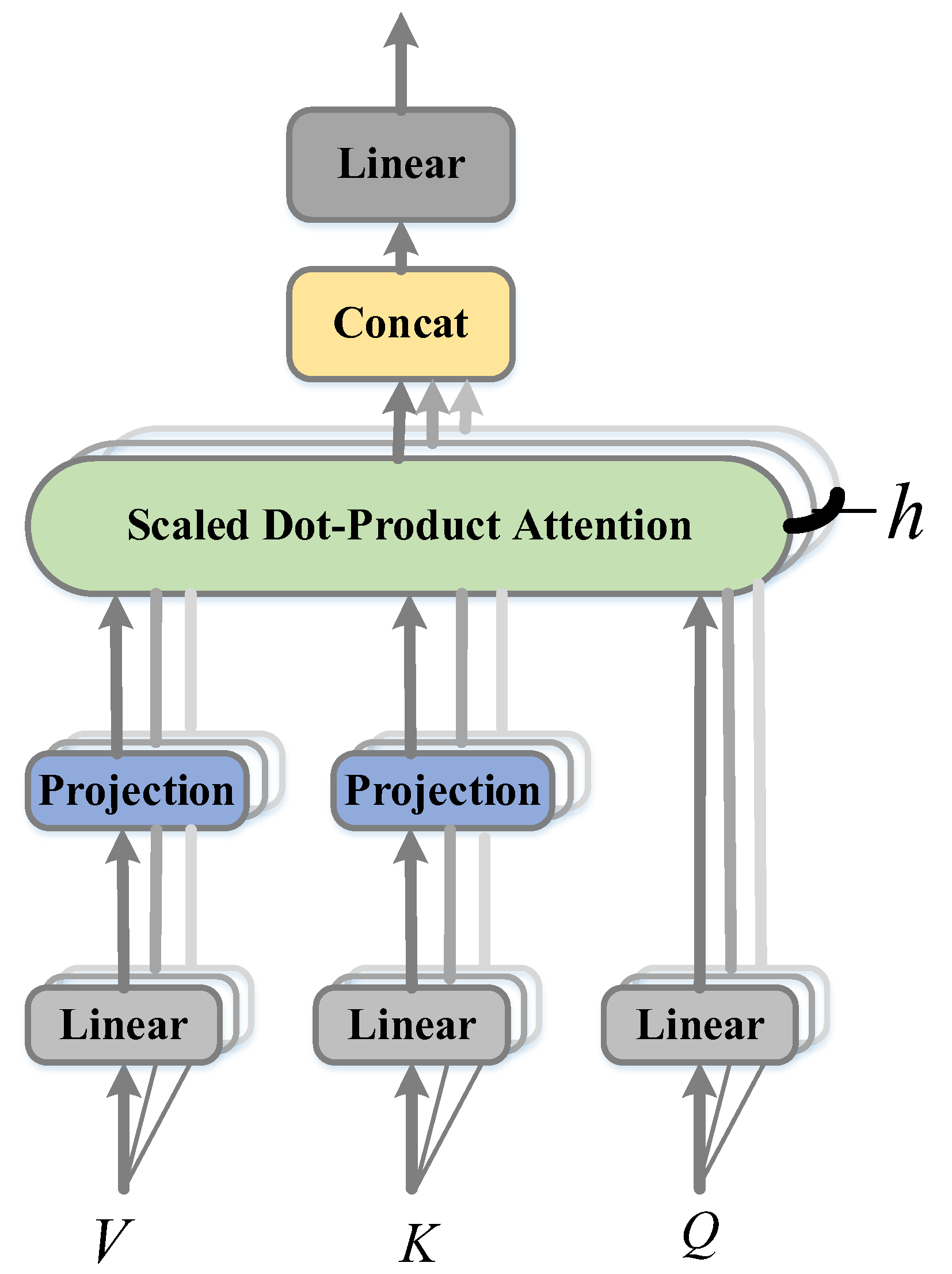

4]. The core component of the Transformer is the Multi-Head Self-Attention (MHA) module, which has a time and space complexity of

. Recent studies have proposed a new module called Multi-Head Linear Self-Attention (MHLA), which has a complexity of

and has comparable performance to traditional MHA [

5,

6]. The linear Transformer built upon MHLA has shown significant advantages in processing large-scale image captioning tasks. However, the application of Linformer in the field of image captioning is still limited, and its potential has not been fully explored. Therefore, we attempt to build an image captioning model using Linformer to leverage its efficiency and scalability.
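The complexity gap can be made concrete by comparing score-matrix shapes. The following NumPy sketch contrasts standard scaled dot-product attention with a Linformer-style variant that projects keys and values along the sequence axis from length $n$ to a fixed length $k$. The projection matrices `E` and `F`, the sizes, and the random initialization are illustrative assumptions, not the configuration used in this paper.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def full_attention(Q, K, V):
    # The score matrix is (n, n): quadratic in the sequence length n.
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    return softmax(scores) @ V, scores.shape

def linformer_attention(Q, K, V, E, F):
    # E, F: (k, n) projections compress keys/values along the sequence axis,
    # so the score matrix is (n, k): linear in n for a fixed k.
    K_proj, V_proj = E @ K, F @ V
    scores = Q @ K_proj.T / np.sqrt(Q.shape[-1])
    return softmax(scores) @ V_proj, scores.shape

rng = np.random.default_rng(0)
n, k, d = 50, 8, 64  # hypothetical sizes: 50 regions, projected length 8
Q, K, V = (rng.standard_normal((n, d)) for _ in range(3))
E, F = (rng.standard_normal((k, n)) / np.sqrt(n) for _ in range(2))

out_full, shape_full = full_attention(Q, K, V)
out_lin, shape_lin = linformer_attention(Q, K, V, E, F)
print(shape_full, shape_lin)  # (50, 50) (50, 8)
```

Because `k` stays fixed, the score matrix of the projected variant grows linearly with the number of regions `n`, whereas the standard score matrix grows quadratically.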

In encoder–decoder-based image captioning methods, the encoder models the contextual features of the image and passes the refined visual signals to the decoder, which generates a fluent textual description. Designing efficient encoders and decoders is therefore crucial for the image captioning task. Although recent methods have greatly advanced the field [5,6,7,8,9], the following shortcomings remain: (1) Each region in an image does not exist in isolation but has complex spatial and semantic correlations with other regions, and these spatial relationships are crucial for understanding the overall semantics of the image. However, existing methods are insufficient at capturing the spatial correlations between the internal features of image regions and lack prior knowledge of the relationships between regional features. As a result, the generated descriptions often fail to accurately describe the spatial structure of the image and struggle to associate contextual visual information. (2) Image captioning is essentially an interactive process between image and text information. However, existing methods handle the interaction between image features and text features inadequately and cannot fully explore their semantic associations, which causes information loss and reduces the accuracy and richness of the descriptions.

To address these problems, Wang et al. [10] extended the absolute position encoding in the Transformer architecture from one dimension to two dimensions to enhance the spatial perception of the model. However, this simple extension often performs poorly on complex and dense image content. Meanwhile, Kuo et al. [11] introduced external knowledge to assist the cross-modal semantic interaction between images and text. Although this approach is innovative, it inevitably incurs higher computational and time costs, which may hinder subsequent related research. In contrast, multi-level spatial perception and cross-modal modeling show potential for solving these problems. Multi-level spatial perception helps the model understand the image content in depth and more effectively captures the complex spatial relationships and semantic correlations between image regions. Cross-modal modeling, by integrating the semantic information of images and text, explores the semantic associations between them more comprehensively. Together, they improve the accuracy of the descriptions and enhance the model’s ability to perceive contextual information.

To this end, we propose a novel image captioning method, the Dense Memory Linformer for Image Captioning (DMFormer), based on Linformer. By reducing time and space complexity, Linformer enables the construction of an efficient encoder–decoder structure. We design a low-complexity multi-layer cross encoder–decoder structure that includes two core components: the Relation Memory Augmented Encoder (RMAE) and the Dense Memory Augmented Decoder (DMAD). Specifically, in the RMAE, we introduce Relation Memory Augmented Attention (RMAA). This module encodes the complex spatial relationships between image regions using geometric information in an explicit spatially aware manner. Meanwhile, it learns the contextual information of relationships between image region features through memory units in an implicit spatially aware manner. The combination of explicit and implicit spatial perception enhances the model’s ability to capture image features rich in spatial information. In the DMAD, we propose Dense Memory Augmented Cross Attention (DMACA). This module adopts a multi-layer structure with dense connections to fully utilize both low-level and high-level features from the RMAE. It employs an adaptive gating mechanism to learn cross-modal associations between visual and language features. Additionally, memory units are constructed to store prior knowledge of images and text, capturing the intrinsic semantic information and global dependencies of objects and reducing information loss.

Our major contributions are summarized as follows:

We propose the RMAE. On the one hand, it encodes the complex spatial relationships between image regions using geometric information in an explicit spatially aware manner. On the other hand, it introduces memory units to design the Relation Memory Augmented Attention (RMAA), which learns the contextual information of relationships between image region features in an implicit spatially aware manner. The collaborative operation of the two spatial perception methods is conducive to capturing image features containing rich spatial information.

We propose the DMAD to fully utilize both low-level and high-level features from the RMAE through dense connections. We design the DMACA to associate visual and linguistic information across different layers, thereby capturing deeper correlations between image features and text descriptions.

We build a novel DMFormer model by integrating the above two modules based on Linformer. This model effectively handles the interaction between image and text features while capturing the spatial semantic correlations between image regions. Experimental results on the MS-COCO dataset show that compared to existing mainstream methods, our proposed method generates richer and more accurate sentences, with significant improvements in all evaluation metrics.

3. Proposed Method

We propose a novel low-complexity multi-layer cross encoder–decoder structure based on Linformer, namely the DMFormer. The DMFormer mainly consists of the RMAE encoder, which extracts visual features from the images, and the DMAD decoder, which generates sentences. Specifically, in the RMAE, we introduce RMAA. On the one hand, it encodes the complex spatial relationships between image regions using geometric information, explicitly perceiving spatial signals in the image. On the other hand, it constructs a memory unit matrix to implicitly learn the contextual information of the image region features. The combination of these two spatial perception methods deepens the model’s understanding of image content. In the DMAD, we propose DMACA. To fully explore the semantic associations between image and text features, DMACA adopts a multi-layer structure with dense connections to better utilize both the low-level and high-level features generated by the RMAE. In addition, it employs an adaptive gating mechanism to learn cross-modal associations between visual and language features and constructs memory units to store prior knowledge of images and text. This approach captures the intrinsic semantic information and global dependencies of objects, reducing information loss. The specific model structure is shown in Figure 2.

3.1. Positional Encoding

Since the model uses neither recurrence nor convolution, it cannot directly capture the order of the sequence. To let the model exploit the order of the input sequence, the relative or absolute positional information of the sequence tokens must be injected. In this paper, we apply positional encoding to the input before feeding it into the encoder and decoder. Most existing methods model the positional relationships of regions only in a relative manner. However, to better leverage the spatial geometric information between different types of image features, we integrate both absolute and relative positional information in an explicit spatially aware manner. This approach aims to model the complex visual and positional relationships between input features. Specifically, we employ the following two types of positional encoding:

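Firstly, we use sine and cosine functions to obtain the absolute positional encodings. For each position $pos$, the absolute positional encoding $PE$ is defined as follows:

$$PE_{(pos,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

where $pos$ is the position index, $i$ is the dimension index, and $d_{model}$ is the embedding dimension.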
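A minimal NumPy sketch of the sinusoidal encoding, assuming the standard Transformer formulation in which even dimensions $2i$ use $\sin(pos/10000^{2i/d_{model}})$ and odd dimensions $2i+1$ use the matching cosine. The function name and the example sizes (20 positions, $d_{model} = 512$) are illustrative.

```python
import numpy as np

def sinusoidal_encoding(num_positions: int, d_model: int) -> np.ndarray:
    """Return a (num_positions, d_model) matrix of sine/cosine encodings.

    Even dimensions 2i use sin(pos / 10000^(2i/d_model));
    odd dimensions 2i+1 use the matching cosine term.
    """
    assert d_model % 2 == 0, "d_model must be even"
    pos = np.arange(num_positions)[:, None]          # (P, 1)
    i = np.arange(d_model // 2)[None, :]             # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

pe = sinusoidal_encoding(num_positions=20, d_model=512)
print(pe.shape)  # (20, 512)
```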

Secondly, to better integrate the positional information of the visual features, we add relative positional information based on the geometric structure of the bounding boxes. Assume the bounding box of a region is represented as $(x, y, w, h)$, where $(x, y)$ denotes the center coordinates, and $w$ and $h$ represent the width and height of the box, respectively. The relative positional encoding is defined as follows:

where $i$ and $j$ denote the indices of two regions, and the resulting encoding represents the position of region $i$ relative to region $j$.
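The exact relative-encoding formula is not reproduced here. As an illustration only, the sketch below computes one widely used choice of pairwise box geometry, the four log-scaled displacement and size-ratio features of the Object Relation Transformer (ORT [38]); whether DMFormer uses exactly these features is an assumption. The clipping constant `eps` follows common practice and is also an assumption.

```python
import numpy as np

def relative_geometry(boxes: np.ndarray, eps: float = 1e-3) -> np.ndarray:
    """Pairwise geometry features for boxes given as (x, y, w, h) rows,
    with (x, y) the box center. Returns an (n, n, 4) array whose [i, j]
    entry describes box j relative to box i (ORT-style features)."""
    x, y, w, h = boxes.T
    dx = np.abs(x[None, :] - x[:, None]) / w[:, None]
    dy = np.abs(y[None, :] - y[:, None]) / h[:, None]
    # Clip before the log so that identical centers (e.g. i == j) stay finite.
    return np.stack([
        np.log(np.maximum(dx, eps)),
        np.log(np.maximum(dy, eps)),
        np.log(w[None, :] / w[:, None]),
        np.log(h[None, :] / h[:, None]),
    ], axis=-1)

boxes = np.array([[50.0, 40.0, 20.0, 10.0],
                  [90.0, 40.0, 40.0, 10.0]])
g = relative_geometry(boxes)
print(g.shape)  # (2, 2, 4)
```

In ORT-style models, these four features are typically embedded further and reduced to one scalar per region pair (and per head) before they enter the attention computation.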

By combining absolute and relative positional encodings, our method can more comprehensively capture the spatial geometric relationships between image regions, thereby generating more accurate and richer descriptions.

3.2. Relation Memory Augmented Encoder

In the image captioning task, the encoder refines the visual representation of the image, so its ability to capture visual semantic information is crucial. Each region in the image does not exist in isolation but has complex spatial and semantic correlations with other regions, and these spatial relationships are vital for understanding the overall semantics of the image. However, existing methods fall short in capturing the spatial position correlations between the internal features of image regions and lack prior knowledge of the relationships between regional features. As a result, the generated descriptions often cannot accurately depict the spatial structure of the image and struggle to associate contextual visual information. To address this problem, we design the RMAE encoder. Combining explicit and implicit spatial perception, we propose RMAA, which embeds geometric information into an attention module equipped with memory units. This approach models the complex spatial relationships between regional features and captures the overall semantic structure of the image, allowing the model to generate richer semantic information.

The RMAE consists of three identical stacked encoder layers. Each encoder layer contains two sub-layers. The first sub-layer is Multi-Head Relation Memory Augmented Attention, which captures correlation coefficients between features across multiple dimensions; by combining explicit and implicit spatial perception, it enhances the model’s understanding of the complex spatial relationships between image regions. The second sub-layer is the Position-wise Feed-Forward Network (FFN), which performs a dimension transformation and enhances the expressive ability of the model through two fully connected linear layers. Each sub-layer is wrapped with a residual connection and layer normalization, which accelerates convergence and mitigates the vanishing gradient problem during training. The architecture of the encoder layer is shown in Figure 3 and is defined as follows:

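$$\hat{X}^{l} = \mathrm{LayerNorm}\left(X^{l-1} + \mathrm{MHRMAA}(X^{l-1})\right)$$
$$X^{l} = \mathrm{LayerNorm}\left(\hat{X}^{l} + \mathrm{GELU}(\hat{X}^{l}W_1 + b_1)W_2 + b_2\right)$$

where $X^{l-1}$ and $X^{l}$ are the input and output of the $l$-th encoder layer, $\mathrm{MHRMAA}(\cdot)$ denotes the Multi-Head Relation Memory Augmented Attention module, $\mathrm{LayerNorm}(\cdot)$ denotes layer normalization, $W_1$ and $W_2$ are trainable weight matrices, $b_1$ and $b_2$ are bias terms, and $\mathrm{GELU}(\cdot)$ denotes the Gaussian error linear unit.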

By stacking $N$ ($N = 3$) such encoder layers and using the output of the $(l-1)$-th layer as the input to the $l$-th layer, we obtain a multi-level encoding of the relationships between image regions. Shallow encodings tend to model the associations between individual visual elements, while deeper encodings focus on the semantic relationships among combinations of multiple visual elements. Because the encodings of different layers are complementary, we concatenate them, denoted as $\mathcal{X} = [X^{1}; X^{2}; \ldots; X^{N}]$, and feed them into the decoder for cross-modal modeling.
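The encoder layer and its stacking can be sketched in NumPy as follows. This is a sketch under stated assumptions: post-layer normalization without learned gain and bias, the tanh approximation of GELU, an FFN width of 2048, and a hypothetical `zero_attn` placeholder standing in for the Multi-Head Relation Memory Augmented Attention sub-layer. The function `encode` keeps the output of every layer so that all levels can be passed to the decoder.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each row to zero mean and unit variance (learned gain/bias omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    # Tanh approximation of the Gaussian error linear unit.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def encoder_layer(x, attn, W1, b1, W2, b2):
    # Sub-layer 1: attention, residual connection, post-LayerNorm.
    h = layer_norm(x + attn(x))
    # Sub-layer 2: two-layer position-wise FFN with GELU, residual, LayerNorm.
    return layer_norm(h + gelu(h @ W1 + b1) @ W2 + b2)

def encode(x, layers):
    # Apply the N layers in sequence and keep every layer's output.
    outputs = []
    for params in layers:
        x = encoder_layer(x, *params)
        outputs.append(x)
    return outputs

def zero_attn(x):
    # Hypothetical placeholder for the MHRMAA sub-layer.
    return np.zeros_like(x)

rng = np.random.default_rng(0)
n, d, d_ff, N = 10, 512, 2048, 3  # d_ff = 2048 is an assumed FFN width
layers = [(zero_attn,
           rng.standard_normal((d, d_ff)) * 0.02, np.zeros(d_ff),
           rng.standard_normal((d_ff, d)) * 0.02, np.zeros(d))
          for _ in range(N)]
outs = encode(rng.standard_normal((n, d)), layers)
multi_level = np.stack(outs)  # (N, n, d): all encoder levels for the decoder
print(multi_level.shape)  # (3, 10, 512)
```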

Relation Memory Augmented Attention

To more accurately model the spatial and semantic relationships between image regions, thereby generating descriptions with explicit spatial structures, we propose RMAA. RMAA explicitly leverages geometric information to model the geometric relationships between image regions, and implicitly constructs a memory unit matrix to store the contextual information of image region features. The combination of these two structures enhances the model’s understanding of image content, enabling it to capture rich image features that contain deep spatial relationships. It not only strengthens the understanding of image spatial structure, but also provides a solid foundation for generating more detailed and accurate descriptions.

Firstly, to enhance the relative position representation of image features, we incorporate explicit, spatially aware region geometry into the computation of the self-attention module. Specifically, we add the computed position representation to the scaled dot-product attention scores. This design allows the model to directly leverage geometric information to enhance its understanding of the spatial relationships between image regions. It is defined as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}} + \Omega\right)V$$

where $Q$, $K$, and $V$ are the query, key, and value matrices, $d_k$ is the key dimension, and $\Omega$ is the position encoding used to enhance the position representation of image features. Introducing $\Omega$ enables the model to capture the positional information between image regions and thereby model the spatial structure of the image more accurately.
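A minimal sketch of geometry-biased attention, assuming that the position encoding has already been reduced to one scalar per region pair (a matrix `omega` of shape $(n, n)$) and is added to the scaled dot-product logits before the softmax. How the box geometry is mapped to `omega` is not specified here, so the example simply sets one entry to a large negative value to show that the bias can suppress a region pair.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def geometry_biased_attention(Q, K, V, omega):
    """Scaled dot-product attention with an additive (n, n) geometry bias.

    omega[i, j] is a scalar score for the spatial relation between
    regions i and j; it is added to the logits before the softmax."""
    logits = Q @ K.T / np.sqrt(Q.shape[-1]) + omega
    weights = softmax(logits, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(1)
n, d = 4, 16
Q, K, V = (rng.standard_normal((n, d)) for _ in range(3))
omega = np.zeros((n, n))
omega[0, 1] = -1e9  # e.g. suppress attention from region 0 to region 1
out, w = geometry_biased_attention(Q, K, V, omega)
print(np.round(w[0, 1], 6))  # 0.0
```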

Secondly, to further enhance the model’s understanding of the contextual relationships between image regions, RMAA introduces implicit spatial perception. Specifically, additional memory unit matrices $M_k$ and $M_v$ are appended to the keys and values. These matrices are learned automatically through Stochastic Gradient Descent (SGD), allowing the model to capture prior knowledge that cannot be expressed by the input features. This is defined as follows:

$$\mathcal{M}(X) = \mathrm{Attention}\left(XW_q,\ [XW_k; M_k],\ [XW_v; M_v]\right)$$

where $X$ denotes the input region features, $W_q$, $W_k$, and $W_v$ are projection matrices, $[\cdot\,;\cdot]$ denotes concatenation along the sequence (row) dimension, and $M_k$ and $M_v$ are learnable matrices with $N_m$ rows that store the contextual information between image region features. By introducing these memory unit matrices, the model captures the semantic associations between image regions more effectively, thereby enhancing its understanding of the image content.
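The memory extension can be sketched as extra rows appended to the keys and values. This NumPy sketch assumes row-wise concatenation of $M_k$ and $M_v$ after the key and value projections; the sizes follow Section 4.2 (40 encoder memory slots, zero-mean normal initialization with standard deviation 0.01), while the projection matrices and the input are random placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def memory_attention(X, Wq, Wk, Wv, Mk, Mv):
    """Self-attention whose keys/values are extended with learnable memory slots.

    X: (n, d) region features; Mk, Mv: (m, d) memory matrices with m rows.
    Queries come only from X, so the output keeps n rows, but each query can
    also attend to the m input-independent memory slots."""
    Q = X @ Wq
    K = np.concatenate([X @ Wk, Mk], axis=0)  # (n + m, d)
    V = np.concatenate([X @ Wv, Mv], axis=0)  # (n + m, d)
    weights = softmax(Q @ K.T / np.sqrt(Q.shape[-1]), axis=-1)  # (n, n + m)
    return weights @ V, weights

rng = np.random.default_rng(2)
n, d, m = 10, 64, 40  # 40 encoder memory slots, as reported in Section 4.2
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
Mk, Mv = (rng.normal(0.0, 0.01, size=(m, d)) for _ in range(2))
out, w = memory_attention(rng.standard_normal((n, d)), Wq, Wk, Wv, Mk, Mv)
print(out.shape, w.shape)  # (10, 64) (10, 50)
```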

Finally, to fully leverage the advantages of the multi-head attention mechanism, RMAA employs multiple heads. The outputs of the different heads are concatenated and then projected through a linear layer. This design enables the model to capture complex relationships between image features from multiple perspectives and to integrate this information via linear projection, thereby generating more accurate descriptions. It is defined as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^{O}, \qquad \mathrm{head}_i = \mathrm{RMAA}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$

where $h$ is the number of heads, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the weight matrices for the $i$-th head, and $W^{O}$ is the linear projection matrix for the output. The multi-head mechanism allows the model to capture relationships between features in multiple subspaces, while the linear projection integrates this information into a unified representation.
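The head splitting, concatenation, and output projection can be sketched as follows. For brevity, each head here uses plain scaled dot-product attention; in RMAA each head would additionally apply the geometry bias and the memory slots. Slicing a single $(d, d)$ projection into $h$ column blocks of width $d/h$ is an implementation assumption that is equivalent to using separate per-head matrices $W_i^Q$, $W_i^K$, $W_i^V$.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, Wq, Wk, Wv, Wo, h):
    """Project X, split into h heads, attend per head, concatenate, project.

    Wq, Wk, Wv, Wo have shape (d, d); column block i of width d // h plays
    the role of the per-head matrices W_i^Q, W_i^K, W_i^V."""
    n, d = X.shape
    assert d % h == 0, "d must be divisible by the number of heads"
    dh = d // h

    def split(M):
        # (n, d) -> (h, n, dh)
        return M.reshape(n, h, dh).transpose(1, 0, 2)

    Q, K, V = split(X @ Wq), split(X @ Wk), split(X @ Wv)
    weights = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(dh), axis=-1)  # (h, n, n)
    heads = weights @ V                                                  # (h, n, dh)
    concat = heads.transpose(1, 0, 2).reshape(n, d)  # Concat(head_1, ..., head_h)
    return concat @ Wo

rng = np.random.default_rng(7)
n, d, h = 10, 512, 8  # d_model = 512 and 8 heads, as in Section 4.2
X = rng.standard_normal((n, d))
Wq, Wk, Wv, Wo = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))
out = multi_head_attention(X, Wq, Wk, Wv, Wo, h)
print(out.shape)  # (10, 512)
```

With $h = 1$ and $W^O = I$, the function reduces to single-head attention, which is a convenient sanity check.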

Through the joint modeling of explicit and implicit spatial perception, RMAA not only leverages explicit spatial information to enhance the model’s understanding of image region locations, but also captures more complex contextual relationships via implicit spatial perception. This integrated approach enables the model to more comprehensively grasp the overall semantic structure of the image, thereby generating richer semantic information.

3.3. Dense Memory Augmented Decoder

The role of the decoder is to transform the visual features extracted by the encoder into a text sequence that describes the image content. This process is essentially an interaction between image and text information. However, existing methods have limitations in the interaction between image features and text features, failing to fully explore the semantic associations between them. This leads to information loss, which affects the accuracy and richness of the generated description. To address this problem, we propose the DMAD. The DMAD employs a multi-layer structure that densely connects all multi-layer feature representations from the encoder. This design aims to reduce the loss of feature information extracted from the RMAE during sentence generation, ensuring that both low-level and high-level features are fully utilized. Furthermore, the DMAD introduces DMACA. DMACA incorporates memory units that collect current image information as persistent memory, which is continuously updated during training. These memory units serve as additional semantic information sources to help the model capture the semantic relevance in the input sequence. They further capture the intrinsic semantic information and global dependencies of the object, thereby reducing information loss. In this way, the model can better utilize information from previous positions to guide the generation of descriptions at the current position. This enhances the context-aware ability of the model and improves the quality and consistency of the generated descriptions. Additionally, DMACA employs an adaptive gating mechanism to learn cross-modal associations between visual and language features, further enhancing the model’s ability to capture semantic associations.

The DMAD consists of three stacked decoder layers with identical structures. Each decoder layer contains three sub-layers. The first is Masked Multi-Head Linear Attention, which ensures that the output at each position depends only on the current and preceding positions and cannot attend to subsequent ones. The second sub-layer is Multi-Head Dense Memory Augmented Cross Attention, which captures correlation coefficients between image features and words across multiple dimensions. The third sub-layer is the FFN, which enhances the expressive ability of the model through two fully connected linear layers for dimension transformation. Each sub-layer employs a residual connection and layer normalization, which accelerates convergence and mitigates the vanishing gradient problem during training. The architecture of the decoder layer is shown in Figure 4 and is defined as follows:

$$\hat{Y} = \mathrm{LayerNorm}\left(Y + \mathrm{MMLA}(Y)\right)$$
$$\tilde{Y} = \mathrm{LayerNorm}\left(\hat{Y} + \mathrm{MHDMACA}(\hat{Y}, \mathcal{X})\right)$$
$$Y' = \mathrm{LayerNorm}\left(\tilde{Y} + \mathrm{FFN}(\tilde{Y})\right)$$

where $Y$ represents the input features, $\mathcal{X}$ denotes the outputs of all encoder layers, $\mathrm{MMLA}(\cdot)$ denotes the Masked Multi-Head Linear Attention module, $\mathrm{MHDMACA}(\cdot)$ denotes the Multi-Head Dense Memory Augmented Cross Attention module, $\mathrm{FFN}(\cdot)$ is the position-wise feed-forward network, and $\mathrm{LayerNorm}(\cdot)$ represents the layer normalization operation.
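The masking behaviour of the first decoder sub-layer can be sketched with ordinary softmax attention. How the linear projection of Linformer is combined with the causal mask in DMAD is not specified here, so this sketch deliberately omits the projection and uses identity query, key, and value maps. The example checks that changing a later token leaves earlier outputs unchanged.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def causal_self_attention(Y):
    """Masked self-attention with identity projections (Q = K = V = Y)."""
    T, d = Y.shape
    logits = Y @ Y.T / np.sqrt(d)
    future = np.triu(np.ones((T, T), dtype=bool), k=1)  # True where j > t
    logits = np.where(future, -np.inf, logits)          # block future positions
    return softmax(logits, axis=-1) @ Y

rng = np.random.default_rng(5)
Y = rng.standard_normal((5, 16))
Y_changed = Y.copy()
Y_changed[4] += 10.0  # alter only the last (future) token
out, out_changed = causal_self_attention(Y), causal_self_attention(Y_changed)
print(np.allclose(out[:4], out_changed[:4]))  # True
```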

Dense Memory Augmented Cross Attention

To fully explore the semantic associations between visual and textual features, we propose DMACA. DMACA integrates these associations by introducing additional memory unit matrices, cross-attention over multi-level features, and an adaptive gating mechanism. Firstly, DMACA introduces additional memory unit matrices $M_k$ and $M_v$ to store prior knowledge of image region features. These matrices are updated automatically through backpropagation during training and can capture prior knowledge that cannot be expressed by the input features, enabling the model to leverage richer semantic information to understand image content. Secondly, to fully utilize the multi-level features produced by the encoder, DMACA performs cross-attention between the decoder input $Y$ and the outputs of all encoder layers. In this way, the model captures feature information at different levels and understands the image content more comprehensively: the cross-attention mechanism enables the decoder to dynamically attend to multiple layers of image features when generating textual descriptions. Finally, to further integrate the cross-attention information from different encoder layers, DMACA introduces an adaptive gating mechanism. The mechanism dynamically adjusts the importance of each layer’s features by learning the contribution weights of the different encoder layers. This adaptive adjustment enables the model to use multi-level feature information more flexibly, thereby better capturing the semantic associations between visual and textual features. Specifically, this is defined as follows:

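$$\mathrm{DMACA}(\mathcal{X}, Y) = \sum_{l=1}^{N} \alpha_{l} \odot \mathcal{C}(X^{l}, Y)$$

where $\mathcal{C}(X^{l}, Y)$ is the memory-augmented cross-attention between the decoder input $Y$ and the output $X^{l}$ of the $l$-th encoder layer, $\odot$ denotes element-wise multiplication, and $\alpha_{l}$ is a weight matrix that represents the contribution of the $l$-th encoder layer and thus the relative importance of the different encoder layers. It is calculated from the correlation between the cross-attention result of each encoder layer and the input query, as follows:

$$\alpha_{l} = \sigma\left(\left[Y; \mathcal{C}(X^{l}, Y)\right]W_{l} + b_{l}\right)$$

where $[\cdot\,;\cdot]$ denotes concatenation along the feature dimension, $W_{l}$ is the weight matrix of $\alpha_{l}$, $b_{l}$ is a learnable bias vector, and $\sigma(\cdot)$ is the sigmoid function.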
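A minimal sketch of gated multi-level cross-attention, assuming a sigmoid gate computed from the concatenation of the decoder queries and each encoder level's cross-attention result, in the style of the meshed-memory M2-Transformer [29]. The memory slots and the learned query, key, and value projections are omitted for brevity, and all parameters are random placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cross_attention(Y, X):
    # Decoder queries Y attend to one encoder layer's output X
    # (identity projections, memory slots omitted for brevity).
    return softmax(Y @ X.T / np.sqrt(Y.shape[-1]), axis=-1) @ X

def gated_multilevel_cross_attention(Y, enc_outputs, W, b):
    """Y: (T, d); enc_outputs: N arrays of shape (n, d);
    W: (N, 2d, d) and b: (N, d) gate parameters, one set per encoder layer."""
    out = np.zeros_like(Y)
    gates = []
    for l, X_l in enumerate(enc_outputs):
        C_l = cross_attention(Y, X_l)                                     # (T, d)
        alpha = sigmoid(np.concatenate([Y, C_l], axis=-1) @ W[l] + b[l])  # (T, d)
        out += alpha * C_l  # element-wise weighting of this level's contribution
        gates.append(alpha)
    return out, gates

rng = np.random.default_rng(6)
T, n, d, N = 5, 10, 32, 3
Y = rng.standard_normal((T, d))
enc_outputs = [rng.standard_normal((n, d)) for _ in range(N)]
W = rng.standard_normal((N, 2 * d, d)) * 0.1
b = np.zeros((N, d))
out, gates = gated_multilevel_cross_attention(Y, enc_outputs, W, b)
print(out.shape, len(gates))  # (5, 32) 3
```

With all gate weights set to zero, every gate equals 0.5 and the output reduces to half the sum of the per-level cross-attention results, which is a convenient check.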

Through the above design, DMACA is capable of effectively integrating multi-level feature information, thereby enhancing the model’s understanding of the relationships between image regions. This design not only makes full use of the detailed information of low-level features, but also incorporates the semantic information from high-level features, so as to more comprehensively mine the semantic association between image features and text features. Ultimately, DMACA is able to generate more accurate and richer descriptions, thereby improving the overall performance of the model.

3.4. Training Strategy

We adopt a two-stage training strategy. First, we pre-train the model with the cross-entropy loss [30]; then, we fine-tune it with a reinforcement learning-based method [31]. The specific process is as follows:

Firstly, we employ the cross-entropy loss for pre-training. Given a ground-truth word sequence $y^{*}_{1:T}$ from the annotated dataset, the optimization objective is to minimize the following cross-entropy loss:

$$L_{XE}(\theta) = -\sum_{t=1}^{T} \log p_{\theta}\left(y^{*}_{t} \mid y^{*}_{1:t-1}\right)$$

where $\theta$ denotes the model parameters and $T$ denotes the length of the word sequence.
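The teacher-forced cross-entropy objective amounts to summing the negative log-probabilities of the ground-truth words. In this sketch, `log_probs` stands in for the model's per-step log-softmax outputs; the vocabulary size of 10,000 follows Section 4.1, while the caption length and the uniform predictor are illustrative. A uniform predictor gives a loss of exactly $T \log V$.

```python
import numpy as np

def caption_cross_entropy(log_probs, targets):
    """log_probs: (T, V) log p(y_t | y*_{1:t-1}) at each step (teacher forcing);
    targets: (T,) indices of the ground-truth words y*_1, ..., y*_T.
    Returns -sum_t log p(y*_t | y*_{1:t-1})."""
    T = len(targets)
    return -log_probs[np.arange(T), targets].sum()

V, T = 10000, 12
uniform = np.full((T, V), -np.log(V))  # every word gets probability 1 / V
targets = np.random.default_rng(3).integers(0, V, size=T)
print(caption_cross_entropy(uniform, targets))  # equals T * log(V), about 110.52
```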

Then, we fine-tune the model with reinforcement learning to optimize the CIDEr metric. The training objective is to minimize the negative expected reward:

$$L_{RL}(\theta) = -\mathbb{E}_{w^{s} \sim p_{\theta}}\left[r(w^{s})\right]$$

where $w^{s}$ denotes a sentence sampled from the model by Monte Carlo sampling, and $r(\cdot)$ denotes the CIDEr score. The gradient of this objective is estimated with the policy-gradient (REINFORCE) method as follows:

$$\nabla_{\theta} L_{RL}(\theta) \approx -r(w^{s})\,\nabla_{\theta} \log p_{\theta}(w^{s})$$

Finally, to mitigate training instability and the high variance of the gradient estimates, we use the reward of the sentence that the current model generates at test-time inference as a baseline. Specifically, we generate sentences with beam search and use their CIDEr scores as the baseline rewards, which stabilizes training and reduces the variance of the gradient updates:

$$\nabla_{\theta} L_{RL}(\theta) \approx -\left(r(w^{s}) - b\right)\nabla_{\theta} \log p_{\theta}(w^{s})$$

where $b$ is the baseline reward.
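The self-critical update can be illustrated as a loss whose gradient matches the baseline-corrected policy gradient. This NumPy sketch only computes the loss value; in an autograd framework, `sample_log_probs` would carry gradients and the advantage $r(w^s) - b$ would be treated as a constant. Averaging over the batch is an assumption.

```python
import numpy as np

def scst_loss(sample_log_probs, sample_rewards, baseline_rewards):
    """Self-critical policy-gradient loss over a batch of B sampled captions.

    sample_log_probs: (B,) log p_theta(w^s), summed over the words of each sample;
    sample_rewards:   (B,) CIDEr-D scores r(w^s) of the sampled captions;
    baseline_rewards: (B,) CIDEr-D scores b of the beam-search captions.
    Minimizing this loss with autograd yields the gradient
    -(r(w^s) - b) * grad log p_theta(w^s), averaged over the batch."""
    advantage = sample_rewards - baseline_rewards  # treated as a constant
    return -(advantage * sample_log_probs).mean()

log_p = np.array([-5.0, -8.0])
rewards = np.array([1.2, 0.9])
baseline = np.array([1.0, 1.0])
print(scst_loss(log_p, rewards, baseline))
```

The first sample beats its beam-search baseline, so minimizing the loss raises its log-probability; the second falls short, so its log-probability is pushed down.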

4. Experiments

4.1. Dataset and Evaluation Metrics

Dataset: To validate the effectiveness of the proposed DMFormer, we conducted extensive experiments on the MS-COCO dataset, the most widely used and one of the largest benchmark datasets for image captioning. The dataset contains 82,783 training images, 40,504 validation images, and 40,775 testing images, and each image is annotated with at least five captions. For a fair comparison, we follow the Karpathy split, which redistributes the 123,287 training and validation images and their captions into 113,287 images for training, 5000 for validation, and 5000 for testing.

Caption Preprocessing and Tokenization: (1) Text Cleaning: Captions are converted to lowercase and stripped of special characters and digits to ensure uniformity. (2) Tokenization: We use the word_tokenize function from the NLTK library to split captions into individual words, and any words not included in the 10k-token vocabulary are treated as unknown tokens. (3) Sequence Padding: Sequences are padded to a maximum length of 20 so that all sequences have a uniform length for batch processing during training.
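The preprocessing steps can be sketched in plain Python. To keep the example self-contained, a whitespace split replaces NLTK's `word_tokenize`, which is a reasonable stand-in only because the cleaning step has already removed punctuation and digits. The special-token names `<pad>` and `<unk>`, and counting them within the 10k vocabulary, are assumptions.

```python
import re
from collections import Counter

PAD, UNK = "<pad>", "<unk>"  # hypothetical special-token names

def clean(caption: str) -> str:
    # Lowercase, then replace everything except letters and whitespace
    # (punctuation, other special characters, digits) with spaces.
    return re.sub(r"[^a-z\s]", " ", caption.lower())

def tokenize(caption: str) -> list[str]:
    # Whitespace split stands in for nltk.word_tokenize on already-cleaned text.
    return clean(caption).split()

def build_vocab(captions, max_size=10000):
    # Keep the most frequent words; PAD and UNK take the first two indices.
    counts = Counter(tok for c in captions for tok in tokenize(c))
    words = [w for w, _ in counts.most_common(max_size - 2)]
    return {w: i for i, w in enumerate([PAD, UNK] + words)}

def encode_caption(caption, vocab, max_len=20):
    # Map out-of-vocabulary words to UNK, truncate, then pad to max_len.
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokenize(caption)][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))

captions = ["A man riding a horse.", "Two dogs play in the park!"]
vocab = build_vocab(captions)
print(tokenize("A man, 2 horses!"))  # ['a', 'man', 'horses']
print(encode_caption("A zebra runs", vocab)[:5])  # [2, 1, 1, 0, 0]
```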

Evaluation metrics: To evaluate the quality of the generated captions, we employed a variety of automatic evaluation metrics based on the similarity between ground-truth and generated captions. Following the standard evaluation protocol, we used the full set of captioning metrics, including BLEU [32], METEOR [33], ROUGE [34], CIDEr [35], and SPICE [36].

4.2. Implementation Details

To extract visual features, we used a pre-trained Faster R-CNN with a ResNet-101 backbone, fine-tuned on the Visual Genome dataset. We extracted 2048-dimensional features from the first FC layer of the detection head. These features correspond to the outputs of the last convolutional layer of ResNet-101, with a spatial resolution of 7 × 7 × 2048. In our implementation, we set the output dimension $d_{model}$ to 512 and the number of attention heads to 8. The numbers of visual and textual memory vectors were set to 40 and 20, respectively. Both the encoder and decoder consisted of 3 layers. We applied dropout with a keep probability of 0.9 (i.e., a dropout rate of 0.1) after each attention and feed-forward layer. We trained the model with the Adam optimizer, with a batch size of 50 and a beam size of 5 for beam search. In the first stage, pre-training with the cross-entropy loss, we adopted a standard learning rate strategy: the baseline learning rate was set to $1 \times 10^{-4}$, and the number of training epochs was set to 28. In the second stage, CIDEr-D optimization, we adjusted the learning rate in three phases according to the training epoch: (1) before epoch 28, we used a fixed baseline learning rate of $5 \times 10^{-6}$; (2) between epochs 28 and 50, we reduced the baseline learning rate to $5 \times 10^{-7}$; (3) after epoch 50, we applied a composite decay strategy to adjust the learning rate dynamically. The learning rate in the reinforcement learning stage is computed as follows:

$$lr = lr_{base} \cdot d_{model}^{-0.5} \cdot \min\left(n^{-0.5},\ n \cdot w^{-1.5}\right)$$

where $lr_{base}$ is the baseline learning rate; $w$ is the warm-up period of the learning rate schedule, set to 20,000; $n$ is the current training step; $\min(\cdot)$ is the minimum function; and $d_{model}$ is the input and output dimension of each layer of the model.
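The warm-up-then-decay behaviour can be checked numerically. This sketch assumes the schedule takes the standard Transformer form $lr_{base} \cdot d_{model}^{-0.5} \cdot \min(n^{-0.5}, n \cdot w^{-1.5})$ with warm-up $w = 20{,}000$ and $d_{model} = 512$, and that $n$ counts optimization steps; `base_lr = 1.0` is used only for illustration.

```python
def warmup_lr(step: int, base_lr: float, d_model: int = 512, warmup: int = 20000) -> float:
    """Linear warm-up for `warmup` steps, then decay proportional to step ** -0.5."""
    step = max(step, 1)  # avoid evaluating 0 ** -0.5
    return base_lr * d_model ** -0.5 * min(step ** -0.5, step * warmup ** -1.5)

for s in (1000, 20000, 100000):
    print(s, warmup_lr(s, base_lr=1.0))
```

The two terms inside the minimum are equal at $n = w$, so the learning rate rises linearly until the end of warm-up and then decays in proportion to $n^{-0.5}$.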

In our work, the memory units $M_k$ and $M_v$ are designed as learnable parameters optimized via stochastic gradient descent (SGD). The key technical details are as follows:

(1) Initialization: $M_k$ and $M_v$ are initialized by sampling from a zero-mean normal distribution with a small standard deviation ($\sigma = 0.01$). This low-variance initialization prevents unstable attention weights in the early stages of training, in line with standard practice in Transformer-based models. It keeps the initial memory entries diverse yet small in magnitude, avoiding premature convergence to suboptimal patterns.

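(2) Update Mechanism: During training, $M_k$ and $M_v$ are updated via SGD with momentum ($\beta = 0.9$), where the gradients are computed through backpropagation. Specifically, the updates are performed as follows:

$$v_k \leftarrow \beta v_k + \nabla_{M_k}\mathcal{L}, \qquad M_k \leftarrow M_k - \eta\, v_k$$
$$v_v \leftarrow \beta v_v + \nabla_{M_v}\mathcal{L}, \qquad M_v \leftarrow M_v - \eta\, v_v$$

where $\eta$ is the learning rate, $\nabla_{M_k}\mathcal{L}$ and $\nabla_{M_v}\mathcal{L}$ represent the gradients of the loss $\mathcal{L}$ with respect to $M_k$ and $M_v$, $\beta$ controls the momentum term, and $v_k$ and $v_v$ store the accumulated gradients from previous updates. Momentum accelerates convergence and smooths out noisy gradients, which is particularly beneficial for high-dimensional parameters such as $M_k$ and $M_v$.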

This design ensures that the memory units are optimized in a stable and efficient manner, while allowing them to adapt dynamically during training.
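The momentum update for the memory units can be sketched in NumPy. This sketch assumes the convention $v \leftarrow \beta v + \nabla\mathcal{L}$, $M \leftarrow M - \eta v$ (the form used by PyTorch's `torch.optim.SGD` without dampening); the constant dummy gradient and learning rate are illustrative only. With a constant gradient $g$, the second step moves the parameters by $\eta(1 + \beta)g$.

```python
import numpy as np

def momentum_step(M, v, grad, lr, beta=0.9):
    """One SGD-with-momentum update for a memory matrix M.

    v accumulates past gradients: v <- beta * v + grad; then M <- M - lr * v."""
    v = beta * v + grad
    return M - lr * v, v

rng = np.random.default_rng(4)
m, d = 40, 512
M_k = rng.normal(0.0, 0.01, size=(m, d))  # zero-mean init, std 0.01 (Section 4.2)
v_k = np.zeros_like(M_k)                  # no accumulated gradient yet
g = np.ones_like(M_k)                     # constant dummy gradient, illustration only
M1, v1 = momentum_step(M_k, v_k, g, lr=0.1)
M2, v2 = momentum_step(M1, v1, g, lr=0.1)
print(np.allclose(M_k - M2, 0.1 * (1 + 1.9)))  # True
```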

4.3. Experimental Results and Analysis

4.3.1. Analysis of Module Ablation Experiments

To verify the effectiveness of each module proposed in the DMFormer, we conducted a series of ablation experiments to investigate the contribution of each module. First, we use an image captioning model based on the original Linformer as the baseline model. Second, we replace the encoder of the baseline model with the RMAE. Third, we replace the decoder of the baseline model with the DMAD. Finally, we combine the RMAE and DMAD to construct the full DMFormer model. All experiments are conducted on the standard MSCOCO dataset, and the results are shown in Table 1.

As Table 1 shows, each module of the DMFormer contributes significantly to the performance of the model. Specifically: (1) With the RMAE, the model extracts spatial relationships and semantic information from image regions more effectively, increasing the CIDEr score from 121.8 to 127.1. (2) Replacing the decoder of the baseline model with the DMAD allows the model to dynamically attend to the bidirectional relationships between visual and textual features, providing richer reference information for text generation and raising the CIDEr score from 121.8 to 130.5. (3) When the RMAE and DMAD are used as the encoder and decoder, respectively, the DMFormer achieves significant improvements across all evaluation metrics. This shows that by integrating these two modules, the DMFormer not only performs well in semantic information extraction and feature interaction but also optimizes the overall architecture, reducing information loss and further enhancing the accuracy and richness of the generated descriptions.

4.3.2. Analysis of Memory Vectors Ablation Experiments

To validate our choice of the number of memory vectors, we conducted ablation studies comparing different numbers of memory vectors in both the encoder and decoder. The results, summarized in Table 2 and Table 3, show that 40 memory vectors for the encoder and 20 for the decoder yield the best performance across multiple evaluation metrics among the tested settings.

4.3.3. Analysis of RMAA Variants in Ablation Experiments

To validate the design of RMAA, we conducted ablation studies across different configurations: explicit-only, implicit-only, and combined. The results, summarized in Table 4, consistently show that the combined approach outperforms the individual variants on multiple metrics, providing empirical evidence for the effectiveness of our design choice.

4.3.4. Comparison and Analysis with Advanced Baseline Models

To verify that the performance improvement of the DMFormer does not depend on the regional visual features extracted by the Faster R-CNN object detector, we conducted comparative experiments on the MSCOCO dataset. All models were trained on the same regional visual features extracted by Faster R-CNN to keep the visual input consistent. In addition, we set the input and output dimensions of the encoder and decoder layers of all models to 512 and fixed the number of training epochs to 50. The experimental results are shown in Table 5.

The experimental results demonstrate that the DMFormer can achieve significantly better performance than other state-of-the-art methods under the same visual features and architecture configurations. This indicates that the performance advantage of the DMFormer does not merely stem from high-quality visual features, but is primarily attributed to its distinctive module design and architectural optimization. These innovations allow the DMFormer to more effectively explore the semantic connections between visual and textual information, thereby producing more accurate and richer image captions.

4.3.5. Comparative Analysis with Advanced Models

We validate the state-of-the-art performance of the DMFormer by comparing it with existing mainstream models on the Karpathy test split of MSCOCO. The compared methods include SCST [31], RFNet [40], Up-Down [41], GCN-LSTM [42], SGAE [37], ORT [38], AoANet [39], M2-Transformer [29], X-Transformer [24], RSTNet [43], DGET [44], GAT [10], ViTCAP [17], CATNet [45], MAENet [46], D2 Transformer [20], VaT [47], AS-Transformer [48], and LATGeO [19]. As can be seen from Table 6, the DMFormer performs strongly, especially on CIDEr, the metric most emphasized in image captioning, where it achieves a score of 133.2. This indicates that the DMFormer generates captions that closely match human references. The DMFormer also achieves strong results on the other key metrics, including BLEU-1, BLEU-4, METEOR, ROUGE-L, and SPICE, highlighting its ability to generate accurate, high-quality captions. We attribute this performance to its module design and architecture: the RMAE module enhances the extraction of spatial relationships and semantic information from image regions, while the DMAD module improves the interaction between visual and textual features. Together, these modules enable the DMFormer to generate more accurate and contextually rich captions than the other state-of-the-art models.

4.3.6. Computational Efficiency Analysis

To assess the DMFormer’s trade-off among computational cost (FLOPs), parameter count (Params), and inference speed (Inference Time), we compare it with other state-of-the-art models; the results are shown in Table 7. The DMFormer achieves the best trade-off among these three factors: it delivers significant improvements in captioning performance with only moderate increases in computation and parameter count, while maintaining faster inference than the other compared models. This makes the DMFormer a balanced and efficient choice for image captioning.
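Much of the FLOPs difference between Transformer variants comes from attention. As a rough illustration of why a Linformer backbone helps, the sketch below counts FLOPs (2 per multiply-accumulate) for the two matrix products of standard attention versus Linformer attention, where keys and values are first projected from length n to a fixed length k. The Q/K/V and output projections, softmax, and multiple heads are ignored, and the values of n, d, and k are illustrative assumptions rather than the DMFormer’s configuration.

```python
def standard_attention_flops(n: int, d: int) -> int:
    """FLOPs of Q @ K^T (n x n scores) plus the weighted sum over values."""
    macs = n * n * d + n * n * d
    return 2 * macs


def linformer_attention_flops(n: int, d: int, k: int) -> int:
    """FLOPs when K and V are projected from length n to length k first."""
    projection_macs = 2 * k * n * d         # E @ K and F @ V, each (k x n)(n x d)
    attention_macs = n * k * d + n * k * d  # Q @ K'^T and softmax(...) @ V'
    return 2 * (projection_macs + attention_macs)


d, k = 512, 64
for n in (128, 512, 2048):
    std = standard_attention_flops(n, d)
    lin = linformer_attention_flops(n, d, k)
    print(f"n={n:5d}: standard {std / 1e6:9.1f} MFLOPs, linformer {lin / 1e6:8.1f} MFLOPs")
```

Under this count standard attention costs 4n²d FLOPs and Linformer attention 8nkd, so the two break even at n = 2k and the linear term wins for longer sequences.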

4.3.7. Qualitative Analysis

To illustrate the improvements of the DMFormer intuitively, Figure 5 compares human-annotated reference captions with captions generated by the baseline model and by the DMFormer. For the first image, our method generates a more informative and accurate caption than the baseline by capturing both the spatial arrangement (e.g., “in front of a clock tower”) and the event context (e.g., “festival type”), demonstrating the model’s ability to incorporate spatial and contextual information effectively. For the second image, our method generates a more precise caption by identifying specific details (e.g., “bananas and oranges”) and adding contextual information (e.g., “next to plates”), highlighting its capability to capture finer details and relationships between objects. For the third image, although the DMFormer adds contextual details (“blue lights on the side of the road”), it fails to mention other relevant elements such as pedestrians or additional objects in the background, indicating that the model may overlook less prominent details in complex scenes. For the fourth image, the DMFormer identifies key elements such as a wooden table, chairs, and a couch, but it does not accurately describe their color or style, suggesting that the model may struggle with finer aesthetic details. For the fifth image, our method captures the scene vividly with details such as “adorable cat,” “big eyes,” and “luggage left open,” making the description more engaging and precise. For the sixth image, our method describes the scene more precisely with details such as “fenced off field,” showing its ability to generate detailed and contextually rich captions.

4.3.8. Significance Analysis

Comparisons under the standard evaluation criteria show that the DMFormer outperforms several existing methods. To verify its performance more comprehensively, we also perform a statistical significance analysis. First, we take the 5000 images of the Karpathy test split as samples and generate their captions with the DMFormer and with the existing methods. We then use the CIDEr score, the most widely emphasized metric in image captioning, to measure the quality of each generated caption. Finally, we apply paired two-tailed t-tests to assess whether the differences are significant; the results are shown in Table 8.

The t-test results indicate that the improvements are statistically significant at the 0.05 level. This provides evidence that the DMFormer’s gains over the compared methods are unlikely to be due to chance.
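The paired test above compares two systems’ per-image CIDEr scores on the same images. The sketch below computes the paired t statistic from the per-image differences with the standard library; for the p-value it uses the normal approximation to Student’s t, which is accurate at n = 5000 but not for small samples (SciPy’s `scipy.stats.ttest_rel` gives the exact two-sided p-value). The per-image scores in the example are synthetic placeholders, not results from the paper.

```python
import math
import random
import statistics


def paired_t_test(scores_a: list[float], scores_b: list[float]) -> tuple[float, float]:
    """Paired two-tailed t-test on per-image scores.

    Returns (t statistic, approximate p-value). The p-value uses the normal
    approximation to Student's t, suitable for large samples only.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length score lists with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0.0:
        raise ValueError("differences have zero variance; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    p_value = math.erfc(abs(t_stat) / math.sqrt(2.0))  # two-tailed, normal approx.
    return t_stat, p_value


# Synthetic per-image CIDEr scores for 5000 images (illustrative only).
rng = random.Random(42)
baseline = [rng.gauss(1.25, 0.6) for _ in range(5000)]
ours = [b + rng.gauss(0.05, 0.3) for b in baseline]
t, p = paired_t_test(ours, baseline)
print(f"t = {t:.2f}, p = {p:.2g}, significant at 0.05: {p < 0.05}")
```

Pairing matters here: because both systems caption the same images, the test works on per-image differences, which removes image-to-image difficulty from the variance.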

5. Limitations and Future Work

5.1. Limitations

Inference Speed: While the DMFormer achieves competitive performance, its inference speed may not be sufficient for real-time applications requiring faster response times. Future work could explore model optimization techniques to further reduce inference time.

Memory Usage: The DMFormer requires approximately 4.5 GB of GPU memory during inference, which may be a limitation in environments with constrained computational resources. We plan to investigate more memory-efficient architectures and optimization strategies.

Robustness: The robustness of the DMFormer in real-world scenarios with diverse and complex imagery remains to be tested. We anticipate that extreme cases, such as highly ambiguous images or those with severe occlusions, may pose challenges. Future work will include testing on more diverse datasets.

Scalability: Deploying the DMFormer in real-world applications with massive and dynamic datasets will require further evaluation. We plan to explore distributed computing and incremental learning approaches to enhance scalability.

5.2. Future Work

Model Optimization: We plan to explore techniques such as quantization, pruning, and knowledge distillation to create more efficient versions of the DMFormer without significantly compromising performance.

Real-World Testing: We aim to conduct extensive testing in real-world scenarios to better understand and address the model’s limitations.

Hardware Acceleration: Investigating hardware acceleration options, such as TPUs and specialized GPUs, could further enhance inference speed and memory efficiency.

Enhanced Robustness: We will incorporate advanced data augmentation techniques and robustness training methods to improve the model’s performance on challenging and diverse datasets.

Multi-Modal Applications: Extending the DMFormer to multi-modal applications, such as video captioning and visual question answering, is another promising direction for future research.

6. Conclusions

We introduce a novel image captioning method, the DMFormer, which addresses two limitations of existing methods: inadequate modeling of spatial and semantic relationships between image regions, and insufficient interaction between visual and textual features. The DMFormer is built on the Linformer architecture, inheriting its low complexity, and uses a multi-layer RMAE-DMAD structure to handle the image captioning task more efficiently. The RMAE introduces a new attention module, RMAA, that combines explicit and implicit spatial perception. RMAA embeds geometric information into an attention mechanism augmented with memory units, effectively modeling the complex spatial relationships between image region features and capturing the overall semantic structure of the image. This design enables the model to extract richer and more accurate semantic information that better reflects the spatial structure and context of the image. The DMAD adopts a multi-layer structure that is densely connected to the feature representations of all encoder layers. It introduces DMACA, which constructs memory units to store prior knowledge of both visual and textual features and uses an adaptive gating mechanism to learn cross-modal associations between visual and language features. This design further exploits the semantic associations between visual and textual features, reduces information loss, and produces more accurate and richer descriptions. Experiments on the MSCOCO dataset demonstrate that the DMFormer generates more accurate and richer descriptions, with significant improvements across evaluation metrics over mainstream methods.
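The DMAD is described as densely connected to all encoder layers, with DMACA fusing information through an adaptive gate. One common way to realize such a gate is the meshed connectivity of the M2-Transformer [29]: the decoder computes cross-attention against each encoder layer’s output C_i, and an element-wise sigmoid gate α_i = σ(W_i [y; C_i] + b_i) weights each layer’s contribution before summation. The sketch below implements only that gated fusion step for one decoder token, with hypothetical names and fixed gate parameters; it is an assumption about how such a gate can work, not DMACA’s published definition.

```python
import math

Vector = list[float]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gated_layer_fusion(y: Vector, cross_outputs: list[Vector],
                       gate_weights: list[list[Vector]],
                       gate_biases: list[Vector]) -> Vector:
    """Fuse per-encoder-layer cross-attention outputs for one decoder token.

    y             : decoder state, length d
    cross_outputs : C_i, cross-attention result against encoder layer i (length d)
    gate_weights  : W_i, a d x 2d matrix per layer
    gate_biases   : b_i, length d per layer
    Returns sum_i alpha_i * C_i with alpha_i = sigmoid(W_i [y; C_i] + b_i).
    """
    d = len(y)
    fused = [0.0] * d
    for c_i, w_i, b_i in zip(cross_outputs, gate_weights, gate_biases):
        concat = y + c_i  # [y; C_i], length 2d
        for r in range(d):
            alpha = sigmoid(sum(w * x for w, x in zip(w_i[r], concat)) + b_i[r])
            fused[r] += alpha * c_i[r]
    return fused


d, n_layers = 2, 3
y = [0.3, -0.7]                               # hypothetical decoder state
cross = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # C_1..C_3 from three encoder layers
zero_w = [[[0.0] * (2 * d) for _ in range(d)] for _ in range(n_layers)]
zero_b = [[0.0] * d for _ in range(n_layers)]
print(gated_layer_fusion(y, cross, zero_w, zero_b))  # every gate is 0.5 -> [4.5, 6.0]
```

With all gate parameters at zero every α_i equals 0.5, so the output is half the sum of the layer outputs; learning W_i and b_i lets the decoder emphasize lower- or higher-level encoder layers token by token.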