Abstract

The rapid growth of IoT devices has led to a rise in security attacks, posing a serious challenge to IoT security. Federated-Learning-based detection methods have been widely used to detect malicious attacks in the IoT by analyzing network traffic; because raw data remain on local devices in Federated Learning, these methods protect user privacy and reduce bandwidth consumption. However, existing malicious traffic detection models are often complex and require significant computational resources for training. In addition, high-dimensional input features often contain redundant information, which further increases computational overhead. To mitigate this, many model lightweighting techniques and non-end-to-end dimensionality reduction methods have been employed; however, the lightweighting methods still struggle to meet the computational demands, and the feature reduction methods tend to compromise the model’s generalizability and accuracy. Moreover, existing methods cannot dynamically select long-term dependencies when extracting traffic time-series features, which limits model performance on long time series. To address these challenges, this paper proposes a lightweight malicious traffic detection model based on Federated Learning, named the lightweight gated bidirectional temporal convolutional network (LG-BiTCN). First, we use global average pooling (GAP) and a pointwise convolutional layer as the classification module, significantly reducing the model’s parameter count. We also propose an end-to-end adaptive PCA dimension adjustment algorithm that automatically reduces the input dimensionality, lowering computational complexity and enhancing model generalizability. Second, we incorporate gated convolution into the LG-BiTCN architecture, allowing the model to dynamically select long-term dependencies and improving detection accuracy while maintaining computational efficiency.
We evaluated the LG-BiTCN by comparing it with three advanced baseline models on three public datasets. The results show that the LG-BiTCN achieves over 99.6% accuracy with the lowest computational complexity. Additionally, in a Federated Learning setup, it requires only two communication rounds to reach 96.75% accuracy.

1. Introduction

With the rapid development of wireless communication and intelligent sensing technologies, Internet of Things (IoT) devices have become integral to various sectors [1], such as energy [2,3,4], industry [5,6,7], transportation [8,9], healthcare [10,11], and smart homes [12,13]. Although the pervasiveness of IoT devices has greatly improved convenience, inadequate security design and insufficient testing leave many of them with exploitable vulnerabilities that attackers can use to launch attacks. According to Nokia’s 2023 Threat Intelligence Report, the number of IoT devices involved in distributed denial-of-service (DDoS) attacks surged from 200,000 to 1 million, causing an estimated global financial loss of USD 2.5 billion. Therefore, detecting attack behaviors in IoT networks has become crucial for ensuring IoT security.

Common attack detection models detect attack behaviors by classifying network traffic as benign or malicious. Such malicious traffic detection methods have been validated as effective for maintaining the security of IoT systems [14]. Initially, centralized training was widely adopted for developing IoT malicious traffic detection models [15,16]. In this paradigm, raw traffic data are transferred to the cloud [15] for model training, leveraging its extensive computational resources. However, as the emphasis on privacy protection has grown, such approaches have faced increasing scrutiny over potential privacy breaches [17], especially in regions with strict data protection regulations. For instance, the European Union’s General Data Protection Regulation (GDPR) [18] imposes severe restrictions on cross-border data transfers, prompting a search for alternative solutions.

Subsequently, with advances in chip technology and edge computing, distributed training methods such as Federated Learning have emerged as a promising alternative [17,19,20,21]. Federated Learning trains models directly on local edge devices and transmits only model parameter updates to the cloud. Because raw data never leave the devices, this reduces privacy risks and bandwidth consumption [22].

Despite this promise, existing Federated-Learning-based approaches to IoT malicious traffic detection face two key challenges.

First, training complex model structures on redundant high-dimensional input features incurs significant computational overhead, which is especially problematic for resource-constrained edge devices. To address this problem, a growing number of researchers are exploring lightweight neural networks and feature reduction techniques for malicious traffic detection [14,23,24,25]. For instance, to develop lightweight models for IoT malicious traffic detection in Federated Learning environments, MobileNet-Tiny [14] and LwResnet [24] reduce model complexity by decreasing the number of convolutional layers and kernels. Despite these achievements, both neglect lightweighting in the classification phase: a fully connected layer is still used to classify the extracted features, resulting in substantial parameter and computation overhead. To handle redundant high-dimensional input features efficiently, works [26,27,28] use autoencoders to reduce feature dimensionality. Although effective in reducing computational overhead, these methods require a separate training process for the feature reduction module. This extra step introduces complexity and constrains the overall performance of the model. To address this issue, Wang et al. [29] and Rey et al. [30] adopted a semi-supervised training approach, in which the autoencoder extracts features from a large amount of unlabeled data and the classifier uses these features to classify the labeled data. This allows the autoencoder to be trained simultaneously with the classifier. Furthermore, to eliminate the need to train autoencoders, works [31,32] use a mayfly optimization algorithm and PCA, respectively, to reduce feature dimensionality. However, these methods still require manual tuning to determine the optimal output dimension and are therefore not truly end-to-end.
Such onerous tuning must be repeated for every dataset, including the local data of each Federated Learning participant, whose traffic is generated by heterogeneous IoT devices operating in varying network environments; this hinders the model’s performance and adaptability.

Second, to improve the accuracy of lightweight IoT malicious traffic detection models in Federated Learning environments, current methods typically focus on extracting time-series features from network traffic but neglect the importance of dynamically selecting valid long-term dependencies. For instance, Jin et al. [33] use a 1D CNN that treats network traffic as a one-dimensional time series and applies one-dimensional convolutions for classification. Yang et al. [26] extend this approach with the dilated convolutions of a TCN, which expand the receptive field so that each time step can access information further in the past, thereby capturing longer-range dependencies in traffic time series. However, because these models lack a mechanism for selecting important information after extracting long-term dependencies from the input series, they remain vulnerable to noise during classification, which ultimately compromises model performance.

To address these two issues, we propose a lightweight gated bidirectional temporal convolutional network (LG-BiTCN) framework that detects IoT malicious traffic in a lightweight and efficient manner. The contributions of this paper are as follows:

- (1) To reduce the model parameter count, we improve the BiTCN’s classification module with GAP and pointwise convolutional layers, and we propose an end-to-end adaptive principal component analysis (PCA) dimensionality reduction algorithm that automatically reduces the feature dimension to its optimal value. GAP effectively preserves the spatial structure of the input data while significantly reducing feature dimensionality; meanwhile, the adaptive PCA algorithm automatically compares the accuracy obtained with different feature dimensions on a dataset to determine the optimal input dimension, thereby eliminating manual intervention and improving the generalizability and detection efficiency of the model.

- (2) We integrate a gated convolution mechanism into the LG-BiTCN to address the BiTCN’s inability to dynamically select long-term dependencies. This mechanism acts as an information selector, allowing the model to retain only the most essential long-term dependencies by dynamically controlling the flow of information.

- (3) We compare the LG-BiTCN against three state-of-the-art models on three datasets. The experiments demonstrate our method’s advantages in both accuracy and computational overhead. On the Edge-IIoTset dataset (280,000 selected samples) and the ToN-IoT dataset (191,789 selected samples), our model achieves the highest accuracy with the lowest FLOPs and parameter count. On the CIC-IoT2023 dataset (180,000 selected samples), our model’s accuracy is only 0.07% lower than that of the top performer, while using just 6.69% of the FLOPs. Thus, it achieves outstanding detection performance with minimal computational overhead.

2. Related Work

As a key component of cybersecurity, Federated-Learning-based malicious traffic detection for the IoT plays an important role in identifying malicious attacks and preventing leakage of private data. Current research in this area focuses on three aspects. First, given the resource constraints of IoT edge devices, lightweight detection models have received increasing attention; these models aim to achieve efficient malicious traffic detection with limited computational and storage resources. Second, the diversity and complexity of IoT devices produce high-dimensional network traffic data. To address the resulting ‘curse of dimensionality’ [34], researchers are exploring feature dimensionality reduction techniques for IoT scenarios. These techniques not only reduce computational overhead but also preserve key information in the data, thus improving detection efficiency and accuracy. Finally, given the inherent time-series nature of IoT network traffic, methods that treat it as time-series data have been shown to significantly improve detection accuracy. Therefore, this section reviews existing Federated-Learning-based methods for IoT malicious traffic detection from these three perspectives.

2.1. Research on Model Lightweighting Techniques

Because IoT devices have far less computational capacity than the cloud, model complexity and training overhead must be managed carefully. To mitigate this burden, Qin et al. [35] proposed split model training, assigning the fully connected layer to IoT devices while edge devices handle the remainder. Nonetheless, many IoT devices still lack the capability for independent model training. LwResnet [24] uses residual units containing multiple one-dimensional convolutional layers with a reduced number of filters, so that its structure has fewer layers and convolution kernels than the residual networks used for image processing tasks [36]. MobileNet-Tiny [14] achieves lightweighting by removing three specific bottleneck layers from the original MobileNet V3; the authors argue that the streamlined structure is better suited to IoT traffic, improving training stability and model reliability. Shen et al. [37] noted that the GRU performs similarly to LSTM but with lower computational overhead; to further improve the GRU’s computational efficiency, they stacked two GRUs at the hidden layer to form a stacked GRU layer. However, all of these models still rely on large, complex fully connected networks for classification.

2.2. Research on Feature Dimensionality Reduction Techniques and End-to-End Improvements

For high-dimensional IoT traffic, feature dimensionality reduction techniques not only reduce the computational overhead but also reduce multicollinearity among features. Given these advantages, feature dimensionality reduction plays a crucial role in Federated-Learning-based IoT malicious traffic detection. For example, Yang et al. [26] first trained a stacked sparse autoencoder (SSAE) to extract more general and efficient features and then used a TCN to classify them; their results indicated that this reduced training time by more than 50%. Wang et al. [29] proposed a semi-supervised Federated Learning framework that uses an autoencoder based on a multilayer 1D CNN to reconstruct unlabeled traffic data, followed by a CNN that classifies the labeled data. This approach achieved over 96% accuracy on the ISCX VPN2016 dataset [38]. However, these methods perform feature reduction and classification as separate stages, so the overall detection process is not end-to-end; this tends to yield locally rather than globally optimal solutions and increases the computational overhead of model optimization.

To solve these problems, researchers have begun to explore end-to-end malicious traffic detection. Xia et al. [31] and Zhang et al. [32] feed features selected by the mayfly optimization algorithm and PCA directly into depthwise separable convolutional neural networks and graph convolutional networks, respectively. These approaches improve training efficiency by selecting the most representative features. Nevertheless, their dimensionality reduction schemes still require manual fine-tuning to obtain optimal parameters, so they fall short of a truly end-to-end approach.

2.3. Research on Feature Extraction Techniques for Traffic Time Series

Owing to the widespread use of dynamic ports and encryption, traditional traffic classification methods have become less effective. Consequently, methods that extract traffic features and classify them with deep learning have become the prevailing solution. Given the inherent temporal characteristics of IoT network traffic, methods that treat it as time-series data have been shown to significantly enhance detection accuracy. He et al. [39] employed long short-term memory (LSTM) networks to classify IoT traffic based on extracted time-series features, achieving a classification accuracy of 88.2% on the dataset published by Meidian et al. [40]. Shen et al. [37] used gated recurrent units (GRUs) for IoT malicious traffic time-series detection. Compared with LSTM, GRUs have fewer parameters, lower computational overhead, and faster convergence. Their approach attained over 90% detection accuracy on the N-BaIoT dataset [41]. However, these RNN variants all process sequences serially, which makes model training and updating relatively time-consuming.

One-dimensional CNNs and TCNs support parallel computation over time series, making them more suitable choices. Yang et al. [26] used a TCN model to detect IoT malicious attacks, achieving 98.62% accuracy on the CIC-IDS-2017 dataset with a shorter training time than the GRU. Jin et al. [33] used a 1D CNN for malicious traffic detection, achieving 95.62% accuracy on the ISCX-VPN-2016 dataset [38]. However, these models still cannot select long-term dependencies dynamically, which may lead to the loss of valid long-term dependencies or the retention of class-irrelevant information.

In conclusion, balancing model accuracy against complexity, while ensuring that end-to-end models do not lose valid long-term dependencies, remains a critical challenge in Federated Learning and IoT security, and further exploration is needed to meet these practical constraints.

3. Preliminaries

This section presents background on our proposed method, namely the structure of the BiTCN model.

The bidirectional temporal convolutional network (BiTCN) is a predictive architecture that uses two temporal convolutional networks (TCNs): the first processes future covariates of the traffic time series, while the second processes past observations and covariates. This approach preserves the temporal structure of the traffic time series and is computationally more efficient than traditional RNN methods such as LSTM and GRUs. Compared with Transformer-based models, the BiTCN has significantly lower space complexity and requires far fewer parameters.

To process long time series effectively, the BiTCN employs causal dilated convolution to extract global information from extended traffic time series. The input traffic time series is padded before convolution, which guarantees two properties: first, the output at any time step depends only on elements from the current and previous time steps, preserving temporal causality; second, the output sequence has exactly the same length as the input sequence, preserving temporal dimensionality. Dilated convolution inserts fixed gaps between the taps of the convolution kernel, which significantly expands the receptive field of each convolution without requiring deeper networks. This structure enables the BiTCN to handle traffic time series effectively at relatively low computational overhead, offering a more efficient alternative to traditional recurrent architectures.

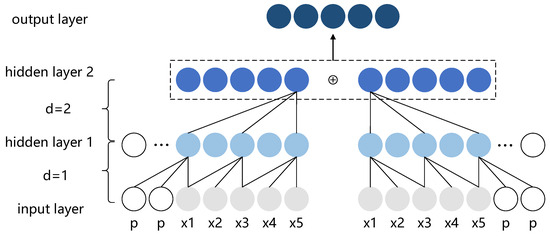

Consider the forward TCN, where the one-dimensional network traffic time series obtained from past time steps is denoted as

$$\mathbf{x} = (x_1, x_2, \ldots, x_T) \in \mathbb{R}^{T},$$

with T representing the length of the time series. Let

$$\mathbf{f} = (f_0, f_1, \ldots, f_{k-1}) \in \mathbb{R}^{k}$$

represent the convolutional kernel. The dilated convolution operation at timestamp t can be expressed as follows:

$$h_t = (\mathbf{x} *_d \mathbf{f})(t) = \sum_{i=0}^{k-1} f_i \, x_{t - d \cdot i},$$

where $h_t$ is the feature of the forward network traffic at time step t, d represents the dilation rate (when d = 1, the dilated convolution reduces to a standard convolution), k is the size of the convolution kernel, and $x_{t - d \cdot i}$ represents the information from past time steps (positions before the start of the series are zero-padded). As illustrated in Figure 1, we input a traffic time series with five time steps into the forward TCN, which captures global features in the future time direction. Meanwhile, we reverse the traffic time series along the time dimension and input it into the backward TCN, which captures global features in the past time direction. By fusing the outputs of the forward and backward TCNs, the network learns the characteristics of the traffic time series from both future and past perspectives. After obtaining the fused features, the BiTCN applies a fully connected layer to integrate all the features extracted by the previous layers and map them to the output space to produce the final output.
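The causal dilated convolution $h_t = \sum_{i=0}^{k-1} f_i \, x_{t - d \cdot i}$ and the forward/backward processing described above can be sketched in plain Python as follows. This is a single-channel illustration, not the authors’ implementation: it assumes zero left-padding, and because the text only says that the two directions are fused, the element-wise summation used here is an assumption. The function names are hypothetical.

```python
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float], f: Sequence[float], d: int) -> List[float]:
    """Single-channel causal dilated convolution.

    Computes h[t] = sum_{i=0}^{k-1} f[i] * x[t - d*i], treating x at negative
    indices as 0 (left zero-padding of length d*(k-1)). The output has the same
    length as x, and h[t] depends only on x[0..t].
    """
    k = len(f)
    h: List[float] = []
    for t in range(len(x)):
        acc = 0.0
        for i in range(k):
            j = t - d * i
            if j >= 0:  # indices before the series start act as zero padding
                acc += f[i] * x[j]
        h.append(acc)
    return h


def bidirectional_features(
    x: Sequence[float], f_fwd: Sequence[float], f_bwd: Sequence[float], d: int
) -> List[float]:
    """Forward TCN on x, backward TCN on reversed x, fused by element-wise sum.

    The backward output is flipped back so that both outputs are aligned in the
    original time order. Summation as the fusion rule is an assumption.
    """
    h_fwd = causal_dilated_conv1d(x, f_fwd, d)
    h_bwd = causal_dilated_conv1d(list(reversed(x)), f_bwd, d)[::-1]
    return [a + b for a, b in zip(h_fwd, h_bwd)]


x = [1.0, 2.0, 3.0, 4.0, 5.0]  # five time steps, as in Figure 1
print(causal_dilated_conv1d(x, [1.0, 1.0, 1.0], d=2))  # [1.0, 2.0, 4.0, 6.0, 9.0]
print(bidirectional_features(x, [1.0, 1.0, 1.0], [1.0, 1.0, 1.0], d=2))  # [10.0, 8.0, 12.0, 10.0, 14.0]
```

With d = 2 and k = 3, the output at the last step combines x at steps 4, 2, and 0, while the first outputs see only the zero-padded history, which is exactly the causality property required above.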

Figure 1.

Architectural elements in a BiTCN. A dilated causal convolution with dilation rate d = 1, 2, and convolution kernel size k = 3; p stands for padding, x represents an element in the input sequence.
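The benefit of dilation can be quantified. Assuming one convolution per layer, a stack of causal convolutions with kernel size k and dilation rates $d_1, \ldots, d_m$ sees $R = 1 + (k - 1)\sum_{l} d_l$ time steps. The helper below (a hypothetical name) checks this for the configuration in Figure 1 and for an assumed doubling schedule over eight layers; the actual dilation schedule of the LG-BiTCN is not specified here.

```python
from typing import Sequence


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field of stacked causal dilated convolutions, one conv per layer.

    A layer with dilation d extends the visible history by (kernel_size - 1) * d steps.
    """
    return 1 + (kernel_size - 1) * sum(dilations)


print(receptive_field(3, [1, 2]))                      # 7   (Figure 1: k = 3, d = 1, 2)
print(receptive_field(3, [2 ** i for i in range(8)]))  # 511 (assumed doubling schedule)
```

The receptive field grows exponentially with depth under a doubling schedule but only linearly with undilated kernels, which is why dilation avoids the need for much deeper networks.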

Although the BiTCN has shown promise in previous traffic detection tasks, it requires substantial improvements to meet the stringent computational and accuracy demands of Federated Learning environments. First, the fully connected layer in the classification module, which contains the most parameters, imposes a significant computational burden on IoT edge devices; optimizing this component is essential for feasibility in resource-constrained settings. Second, the BiTCN has no information selection module that avoids introducing additional parameters, which limits its classification performance, particularly once the fully connected layer has been removed for lightweighting. In that case, the model becomes more vulnerable to noise, because common replacements such as global average pooling aggregate informative and irrelevant features alike, diluting the quality of the final representation.

4. Methodology

In this section, we first introduce the implementation of an LG-BiTCN IoT malicious traffic detection model under a Federated Learning framework. Subsequently, we introduce the specific structure of the model.

4.1. The Process of Implementing LG-BiTCN

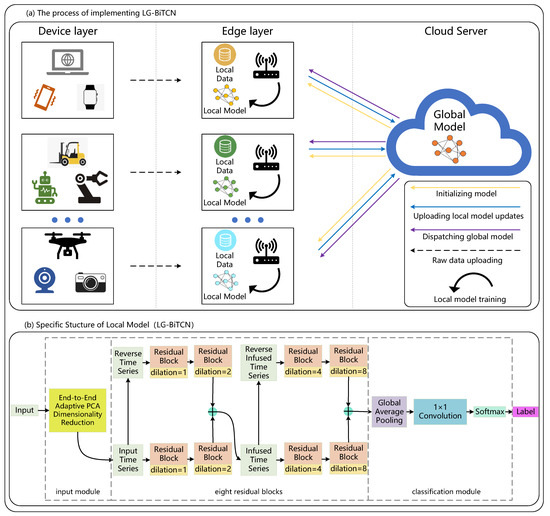

The proposed LG-BiTCN IoT malicious traffic detection framework operates in five key stages, as illustrated in Figure 2. First, the cloud server issues an initialized global model to the edge layer. Second, the edge layer collects raw traffic time-series data from the device layer. Third, the edge layer trains the model locally on these data. Fourth, the resulting model updates are transmitted to the cloud server and aggregated into a global model. Fifth, the updated global model is dispatched back to the edge layer. This process is repeated for several rounds, after which the final global model is deployed on the edge devices for malicious traffic detection.
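The round structure above can be sketched as a short loop. The text does not say how the cloud aggregates the updates, so this sketch assumes sample-weighted parameter averaging in the style of FedAvg; parameters are represented as plain lists of floats, and `local_train` is a hypothetical per-client callback standing in for local data collection and training.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, List[float]]
# Hypothetical client callback: takes the global model, returns (local model, sample count).
LocalTrain = Callable[[Params], Tuple[Params, int]]


def federated_average(updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its number of samples."""
    total = sum(n for _, n in updates)
    first_params = updates[0][0]
    return {
        key: [
            sum(params[key][i] * n for params, n in updates) / total
            for i in range(len(first_params[key]))
        ]
        for key in first_params
    }


def run_rounds(global_params: Params, clients: List[LocalTrain], rounds: int) -> Params:
    for _ in range(rounds):
        # Stages 1 and 5: every client starts from the current global model.
        # Stages 2 and 3: each client collects its traffic and trains locally.
        updates = [local_train(global_params) for local_train in clients]
        # Stage 4: the cloud aggregates the uploaded updates into a new global model.
        global_params = federated_average(updates)
    return global_params


# Two clients: one with 1 sample, one with 3, so the second dominates the average.
print(federated_average([({"w": [1.0, 2.0]}, 1), ({"w": [3.0, 4.0]}, 3)]))  # {'w': [2.5, 3.5]}
```

Only parameters cross the network in this loop; the traffic data stay inside each `local_train` call, which is the privacy property motivating the framework.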

Figure 2.

The process implementing LG-BiTCN.

4.2. The Specific Structure of LG-BiTCN

The LG-BiTCN is the lightweight IoT malicious traffic detection model proposed in this paper; its structure is shown in Figure 2. It consists of three main parts: (1) an end-to-end adaptive PCA dimensionality reduction algorithm, (2) eight residual blocks, and (3) a classification module. First, to address the redundancy in raw traffic features, the end-to-end adaptive PCA dimensionality reduction algorithm allows LG-BiTCNs in different network regions to automatically adjust the input feature dimension to its optimal value. Next, the reduced low-dimensional traffic time series are fed into the eight residual blocks for feature extraction; to dynamically select long-term dependencies in the time series, we incorporate gated convolution into these residual blocks. Finally, the classification module consists of global average pooling, pointwise (1 × 1) convolution, and a softmax layer, which significantly reduces the parameter count of the classification module and improves detection efficiency.

4.2.1. End-to-End Adaptive PCA Dimensionality Adjustment Algorithm

In this section, we explain how the end-to-end adaptive PCA dimension adjustment algorithm automates the feature reduction process.

The end-to-end adaptive PCA dimensionality adjustment algorithm automates the search for the optimal dimensionality by systematically reducing the original high-dimensional features to candidate dimensions, comparing the performance of the model at each dimension, and recording the dimensions associated with the best-performing models.

Users in Federated Learning often operate in different network regions, where various IoT devices generate diverse traffic patterns. As a result, the input dimension of the model would typically have to be tuned manually for each user to achieve optimal detection accuracy. By fully automating the search for the optimal input dimension, the algorithm enhances input data sparsity and removes the need for manual adjustment, yielding higher generalizability and detection accuracy.

We present the proposed procedure in Algorithm 1. First, the input dataset D, the total feature dimension $d_{\max}$, the step size s, the fine step size $s_f$, and the minimum dimension $d_{\min}$ are provided (lines 1–3). Next, starting from the maximum feature dimension $d_{\max}$ and decreasing by the step size s at each iteration, the algorithm evaluates the model’s accuracy on the dataset after PCA dimensionality reduction (lines 4–7). If the accuracy at a dimension is a local maximum, that dimension is added to the list L (lines 8–10). The algorithm then initializes the variables $d^{*}$ and $\mathrm{acc}^{*}$ (line 12). For each local-maximum dimension $d_i$ stored in L, the algorithm performs a finer search around $d_i$ using the fine step size $s_f$ (lines 13–14). For each refined dimension d, PCA is applied again to reduce the dimensionality, the model is retrained, and the accuracy is re-evaluated (lines 15–18). If the current accuracy exceeds the best accuracy $\mathrm{acc}^{*}$, the algorithm updates $d^{*}$ and $\mathrm{acc}^{*}$ (lines 19–21). Finally, the algorithm returns the optimal dimension $d^{*}$ and its corresponding best accuracy $\mathrm{acc}^{*}$ (lines 23–24).
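The two-stage search can be sketched as follows. Several details are assumptions, since only the prose description of Algorithm 1 is available: `evaluate(d)` is a hypothetical callback that applies PCA with d components, trains the model, and returns its accuracy; a coarse dimension counts as a local maximum if its accuracy is at least that of its grid neighbours; and the fine search covers `d_i - s` to `d_i + s` with step `s_f`. Scores are cached so that no dimension is retrained twice, which is an efficiency choice of this sketch.

```python
from typing import Callable, Dict, List, Tuple


def adaptive_pca_dimension(
    evaluate: Callable[[int], float], d_max: int, s: int, s_f: int, d_min: int
) -> Tuple[int, float]:
    """Coarse-to-fine search for the PCA output dimension (sketch of Algorithm 1)."""
    scores: Dict[int, float] = {}

    def score(d: int) -> float:
        # Cache results so a dimension is never retrained twice.
        if d not in scores:
            scores[d] = evaluate(d)
        return scores[d]

    # Coarse pass: d_max, d_max - s, ..., down to d_min.
    grid = list(range(d_max, d_min - 1, -s))
    coarse = [score(d) for d in grid]

    # Keep grid points whose accuracy is not lower than either neighbour.
    local_max: List[int] = []
    for idx, d in enumerate(grid):
        left = coarse[idx - 1] if idx > 0 else float("-inf")
        right = coarse[idx + 1] if idx + 1 < len(grid) else float("-inf")
        if coarse[idx] >= left and coarse[idx] >= right:
            local_max.append(d)

    # Fine pass: step s_f within +/- s of each local maximum.
    best_d, best_acc = -1, float("-inf")
    for d_i in local_max:
        for d in range(max(d_min, d_i - s), min(d_max, d_i + s) + 1, s_f):
            acc = score(d)
            if acc > best_acc:  # strict improvement, as in the text
                best_d, best_acc = d, acc
    return best_d, best_acc


# Toy accuracy curve peaking at 23 dimensions (illustrative, not real results).
print(adaptive_pca_dimension(lambda d: 1.0 - (d - 23) ** 2 / 1000, 61, 10, 1, 1))  # (23, 1.0)
```

Here the coarse pass over 61, 51, ..., 1 flags 21 as the only local maximum, and the fine pass over 11 to 31 then recovers 23. A real `evaluate` would be far more expensive, which is why the coarse pass keeps the number of retrainings small.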

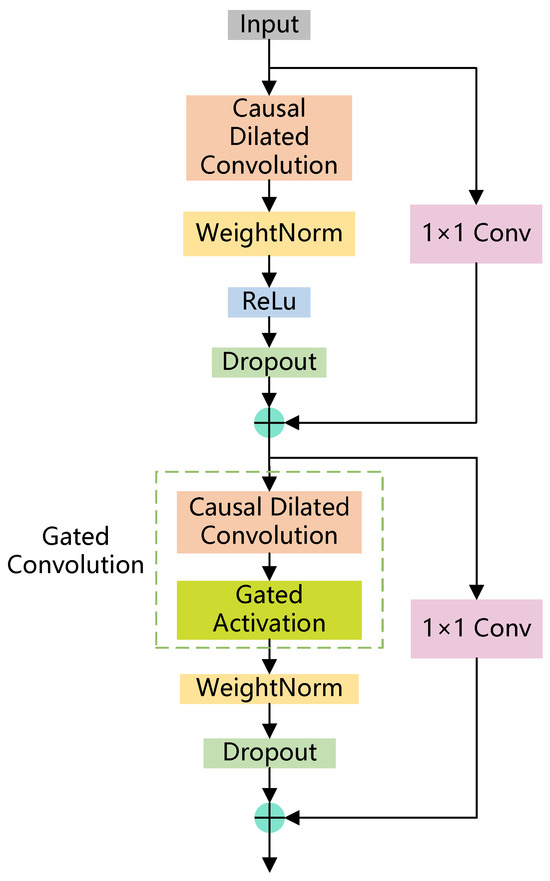

4.2.2. Residual Block of LG-BiTCN (For Dynamic Selection of Long-Term Dependencies)

The structure of the LG-BiTCN’s residual block is illustrated in Figure 3. On entering the residual block, the input time series is processed in parallel along two pathways: the primary branch and a residual connection. In the primary branch, causal dilated convolution efficiently captures extended temporal dependencies in the input time series, and its output then undergoes standard processing steps: weight normalization, ReLU activation, and dropout. Concurrently, the residual branch applies a 1 × 1 convolution so that its channel dimension matches that of the primary branch’s output. The final output is obtained by concatenating the time series from both branches. This combined output then undergoes a similar process, with the key difference that the conventional causal dilated convolution is replaced by a gated convolution mechanism inspired by the LSTM architecture. This substitution enables the residual blocks to dynamically identify and preserve the most informative long-term dependencies within the expanded receptive field provided by the causal dilated convolution.

Algorithm 1.

Adaptive PCA dimensionality reduction.

Figure 3.

Structure of LG-BiTCN’s residual block.

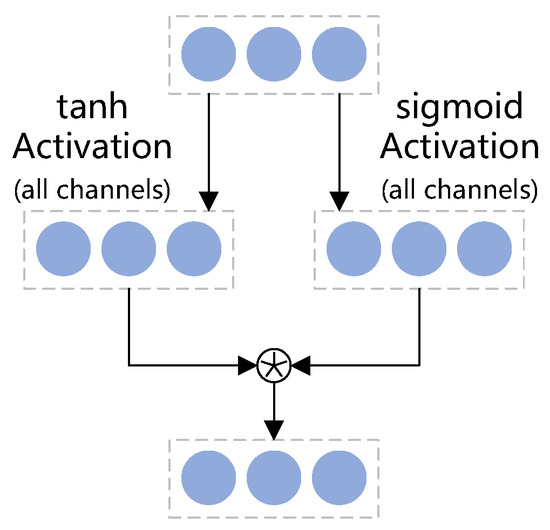

Gating mechanisms control the flow of information through neural networks and have proven indispensable in recurrent architectures [42]. In LSTM, the input and forget gates allow information to flow unimpeded across many time steps, mitigating the vanishing gradient problem, while the output gate controls which long-term dependencies are propagated to subsequent layers. Because convolutional networks are inherently less susceptible to the vanishing gradient problem that the LSTM input and forget gates address [43], we introduce only the output gate structure into the residual blocks, which allows the network to selectively control which long-term dependencies pass through its layers. As shown in Figure 1, gated convolution combines causal dilated convolution with a gated activation mechanism. Causal dilated convolution was introduced in Section 3; the gated activation shown in Figure 4 is defined as follows:

$$\mathbf{H} = \tanh\left(\mathbf{X} * \mathbf{W}_f + \mathbf{b}_f\right) \odot \sigma\left(\mathbf{X} * \mathbf{W}_g + \mathbf{b}_g\right)$$

Figure 4.

Gated activation.

Here, c and n represent the number of input and output feature maps, respectively, k is the size of the patch (convolution kernel), $\mathbf{X}$ is the input to the gated convolution, * denotes causal dilated convolution, $\mathbf{W}_f, \mathbf{W}_g \in \mathbb{R}^{k \times c \times n}$ and $\mathbf{b}_f, \mathbf{b}_g \in \mathbb{R}^{n}$ are the learnable parameters, tanh is the hyperbolic tangent activation function, σ is the sigmoid activation function, and ⊙ denotes element-wise multiplication.
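The gated activation can be illustrated with a single-channel sketch (c = n = 1, so each kernel is a list of k taps and each bias is a scalar), computing tanh(X * W_f + b_f) ⊙ σ(X * W_g + b_g). The weight and bias names are chosen for this sketch, the convolution is zero left-padded as in Section 3, and the code illustrates the formula rather than reproducing the authors’ implementation.

```python
import math
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float], f: Sequence[float], d: int) -> List[float]:
    """h[t] = sum_i f[i] * x[t - d*i], with zero padding before the series start."""
    return [
        sum(f[i] * x[t - d * i] for i in range(len(f)) if t - d * i >= 0)
        for t in range(len(x))
    ]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_conv1d(
    x: Sequence[float],
    w_f: Sequence[float], b_f: float,  # filter path (tanh)
    w_g: Sequence[float], b_g: float,  # gate path (sigmoid)
    d: int,
) -> List[float]:
    """Single-channel gated convolution: tanh(filter) * sigmoid(gate), element-wise."""
    filt = causal_dilated_conv1d(x, w_f, d)
    gate = causal_dilated_conv1d(x, w_g, d)
    return [math.tanh(a + b_f) * sigmoid(g + b_g) for a, g in zip(filt, gate)]


x = [1.0, 2.0, 3.0]
open_gate = gated_conv1d(x, [0.5], 0.0, [0.0], 50.0, d=1)     # gate ~ 1: passes tanh(filter)
closed_gate = gated_conv1d(x, [0.5], 0.0, [0.0], -50.0, d=1)  # gate ~ 0: suppresses everything
```

Because the sigmoid lies in (0, 1), each time step’s filter response is scaled rather than replaced, so the gate can pass, attenuate, or effectively discard a dependency depending on the learned gate weights.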

Although the sigmoid function is commonly used as an activation function in neural networks, it plays a different role in our architecture: it serves as a gate. The key property we exploit is that its output is bounded between 0 and 1, so it can act as a dynamic selection factor on the information flow produced by the causal dilated convolutions, retaining valid long-term dependencies and suppressing invalid ones.

Complementing the sigmoid gate, we use the tanh activation function on the main information path. Because the tanh output is centered on zero, it helps normalize the activations and keeps positive and negative values balanced, which can accelerate convergence during training.

Gated convolution therefore provides an efficient mechanism for managing long-term dependencies within the network: causal dilated convolution expands the temporal receptive field so that the network can see longer temporal dependencies, while the gated activation dynamically selects the most informative among them. This combination enhances the model’s ability to dynamically select long-term dependencies, leading to improved performance and faster convergence.

4.2.3. The Lightweight Classification Module

In this study, we modify the structure of the classification layer of the traditional BiTCN. The feature maps produced by multiple layers of causal dilated convolution often contain dense, complex information. Traditional approaches typically feed these feature-rich representations directly into a fully connected layer. While straightforward, this substantially increases the parameter count, which can lead to overfitting and reduced generalization.

To address these issues, we replace the fully connected layers with a combination of GAP and a 1 × 1 convolutional layer. This design not only reduces the parameter count but also enhances the model’s ability to process the flow-like data structures inherent in IoT traffic. For a traffic time series $\mathbf{X} \in \mathbb{R}^{L \times C}$, where L is the length of the time series and C is the number of channels, let $x_{i,c}$ denote the value at position i of the c-th channel. The output of global average pooling, $\mathbf{z} = (z_1, \ldots, z_C)$, is given by

$$z_c = \frac{1}{L} \sum_{i=1}^{L} x_{i,c}, \quad c = 1, \ldots, C.$$

Here, $\mathbf{z}$ is a vector of size C, each element of which is the average value of the corresponding channel in the original time series. This operation compresses the length of each channel from L to 1 while retaining information from all C channels. After GAP, we apply 1 × 1 convolutional layers to adjust the feature dimension and a softmax layer to produce the class-specific outputs.
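A minimal sketch of this classification head follows, assuming a single 1 × 1 convolution that maps the C pooled channels directly to the class scores (the text uses a hidden 1 × 1 layer as well; one layer keeps the sketch short). On a length-1 pooled sequence, a 1 × 1 convolution is simply a per-channel linear map. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def global_average_pool(x: Sequence[Sequence[float]]) -> List[float]:
    """x has shape L x C (time steps x channels); z[c] = (1/L) * sum_i x[i][c]."""
    length = len(x)
    return [sum(row[c] for row in x) / length for c in range(len(x[0]))]


def pointwise_conv(
    z: Sequence[float], weight: Sequence[Sequence[float]], bias: Sequence[float]
) -> List[float]:
    """1 x 1 convolution on the pooled length-1 sequence: out[j] = sum_c weight[j][c] * z[c] + bias[j]."""
    return [sum(w * v for w, v in zip(w_row, z)) + b for w_row, b in zip(weight, bias)]


def softmax(v: Sequence[float]) -> List[float]:
    m = max(v)  # subtract the max for numerical stability
    e = [math.exp(a - m) for a in v]
    total = sum(e)
    return [a / total for a in e]


def classify(
    x: Sequence[Sequence[float]], weight: Sequence[Sequence[float]], bias: Sequence[float]
) -> List[float]:
    """GAP -> single 1 x 1 conv (C -> K channels) -> softmax class probabilities."""
    return softmax(pointwise_conv(global_average_pool(x), weight, bias))


x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # L = 3 time steps, C = 2 channels
print(global_average_pool(x))              # [3.0, 4.0]
```

Note that `weight` has shape K x C regardless of L, so the head’s size does not depend on the length of the time series.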

For instance, in the original TCN, the parameter count of the classification layer (taking two fully connected layers as an example) is

$$P_{\text{FC}} = (C L + 1) N + (N + 1) K,$$

where C is the number of input channels, L is the length of the input time series, N is the number of neurons in the hidden fully connected layer, K is the number of output classes, and the +1 terms account for the biases.

The parameter count of our configuration (taking two 1 × 1 convolutional layers after GAP as an example) is

$$P_{\text{GAP}} = (C + 1) n + (n + 1) K,$$

where n is the number of neurons (output channels) in the hidden 1 × 1 convolutional layer and K is the number of output classes; GAP itself has no learnable parameters. Because $P_{\text{FC}}$ grows linearly with L while $P_{\text{GAP}}$ is independent of L, the longer the input time series, the more parameters are saved by using 1 × 1 convolutional layers after GAP, which is particularly beneficial for time-series processing that considers long-term dependencies in traffic flows.

In summary, the proposed LG-BiTCN model substantially extends the capabilities of the original BiTCN architecture while mitigating its limitations. Our contributions are threefold. First, we build an end-to-end network around our adaptive PCA dimensionality adjustment algorithm, which eliminates the need for complex model tuning and makes the model more accessible to diverse users. Second, we incorporate gated convolution, which substantially improves the model’s capacity to process intricate traffic time series by retaining only relevant information. Third, we optimize both the input feature dimensionality and the model structure to dramatically reduce unnecessary and redundant computation. Consequently, the LG-BiTCN offers a more precise and efficient solution for network traffic analysis. This is particularly important in resource-constrained environments, where it strikes a favorable balance between computational efficiency and analytical accuracy.
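The gating idea can be illustrated with the gated linear unit of Dauphin et al. [43], $h = (X * W + b) \otimes \sigma(X * V + c)$, applied here to a single-channel dilated causal convolution. This is a minimal sketch under stated assumptions: the exact gating form, channel counts, and placement inside the LG-BiTCN residual block are not specified in this section, and the function names are hypothetical. The sigmoid gate scales each output between 0 and 1, which is how such a layer can suppress uninformative time steps.

```python
import math
from typing import List


def causal_dilated_conv(x: List[float], kernel: List[float], dilation: int, bias: float = 0.0) -> List[float]:
    """Single-channel dilated causal convolution with implicit left zero-padding.

    Output t depends only on x[t], x[t - d], ..., x[t - (k - 1) * d], never on future steps.
    """
    k = len(kernel)
    out = []
    for t in range(len(x)):
        acc = bias
        for j in range(k):
            idx = t - (k - 1 - j) * dilation  # kernel[-1] is aligned with the current step
            if idx >= 0:
                acc += kernel[j] * x[idx]
        out.append(acc)
    return out


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def gated_causal_conv(x: List[float], w_kernel: List[float], v_kernel: List[float],
                      dilation: int, b: float = 0.0, c: float = 0.0) -> List[float]:
    """GLU-style gating: (x * W + b) multiplied elementwise by sigmoid(x * V + c)."""
    linear = causal_dilated_conv(x, w_kernel, dilation, b)
    gate = causal_dilated_conv(x, v_kernel, dilation, c)
    return [a * sigmoid(g) for a, g in zip(linear, gate)]


print(gated_causal_conv([1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 1.0], [0.0, 0.0, 0.0], dilation=1))
```

With an all-zero gate kernel the gate is σ(0) = 0.5 everywhere, so the output is half of the ungated convolution, [0.5, 1.5, 3.0, 4.5] for the example input; changing the last input value leaves the earlier outputs unchanged, which reflects causality.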

5. Experiments

5.1. Description of Datasets

In our study, we focus on three datasets: Edge-IIoT [44], the CIC IoT dataset 2023 [45], and ToN-IoT [46,47,48,49]. The Edge-IIoT dataset was released in 2022 and was generated on a purpose-built testbed. Its IoT data come from more than 10 types of IoT devices, such as ultrasonic sensors and heart rate sensors. The dataset includes 13 attack types related to IoT and IIoT connectivity protocols, plus benign traffic. The training set contains 211,131 traffic samples and the test set 90,350; each sample has 61 recorded features and 1 label.

The CIC IoT dataset 2023, released in 2023, is a novel and extensive IoT attack dataset designed for security analytics in real-world IoT deployments. It covers 33 attacks collected from 105 IoT devices, grouped into 7 main categories of traffic. We selected 7 representative attack categories for our study, such as DNS spoofing and OS scanning. The training set contains 160,000 traffic samples and the test set 40,000; each sample has 46 recorded features and 1 label.

The ToN-IoT dataset, released in 2021, was collected from a realistic network environment designed at the IoT Lab of the University of New South Wales. It contains normal traffic and nine attack classes, such as MITM, XSS, and ransomware. The training set contains 151,439 traffic samples and the test set 40,350; each sample has 44 recorded features and 1 label.

We list all the categories associated with each task for all datasets in Table 1.

Table 1.

Dataset summary.

5.2. Experimental Environment and Hyperparameters

We conducted our experiments on Windows 10 with an Intel Xeon(R) Gold 5120 CPU (2.20 GHz, 14 cores, 32 GB RAM) (Intel Corporation, Santa Clara, CA, USA), Python 3.8, and the PyTorch 2.2.2 deep learning framework. The hyperparameters are as follows: cross-entropy loss, the Adam optimizer, a learning rate of 0.001, a batch size of 64, and 10 epochs. The model contains 8 residual blocks, each consisting of one dilated causal convolution layer and one gated convolution layer; the dilation rates are (1, 2, 4, 8), and the kernel size is 3.
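The reported hyperparameters can be collected in a small configuration object. The sketch below also computes the receptive field of stacked dilated causal convolutions, 1 + Σ(k − 1)d, for kernel size k = 3 and the stated dilation rates. How the 8 residual blocks map onto the four dilation rates is not specified, so both a single pass (one convolution per rate) and a repeated pass are shown as assumptions; the class name `LGBiTCNConfig` is hypothetical, and the gated convolutions are not counted.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class LGBiTCNConfig:
    """Hyperparameters as reported in Section 5.2 (container name is hypothetical)."""
    learning_rate: float = 1e-3
    batch_size: int = 64
    epochs: int = 10
    num_residual_blocks: int = 8
    dilations: Tuple[int, ...] = (1, 2, 4, 8)
    kernel_size: int = 3


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field, in time steps, of stacked dilated causal convolutions.

    Each convolution with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    return 1 + sum((kernel_size - 1) * d for d in dilations)


cfg = LGBiTCNConfig()
# One pass through the dilation pattern, one dilated convolution per rate.
print(receptive_field(cfg.kernel_size, cfg.dilations))      # 31
# Assumption: the 8 residual blocks cycle through (1, 2, 4, 8) twice.
print(receptive_field(cfg.kernel_size, cfg.dilations * 2))  # 61
```

Under these assumptions, each output can see 31 past time steps for one pass over (1, 2, 4, 8) and 61 if the pattern repeats.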

5.3. Selection of Baselines

To evaluate the performance of the LG-BiTCN model, we compared it with the following baseline models.

- The bidirectional temporal convolutional network (BiTCN) [50] uses dilated causal convolution to extract local patterns in time series and a bidirectional processing mechanism to efficiently capture the dynamic properties of time-series data.

- The bidirectional gated recurrent unit (BiGRU) [51] builds on the GRU, which simplifies the gating structure to an update gate and a reset gate. The GRU has a simpler structure and fewer parameters than earlier gated units such as the LSTM, yet still retains and transfers information effectively. The BiGRU is commonly used for traffic time-series prediction and can capture long-term dependencies in time series.

- Adaptive clustering intrusion detection (ACID) [52] is an architecture optimized for deployment at the network edge, built around a novel adaptive clustering method that improves the representation of the feature space. Its authors report perfect accuracy and F1-score (100%) with zero false alarms on four distinct datasets spanning two decades, significantly outperforming conventional clustering-based network intrusion detection approaches.

5.4. Evaluation Metrics

To evaluate the performance of LG-BiTCN in detecting malicious traffic, we use four metrics: accuracy, precision, recall, and F1-measure. Accuracy is the proportion of correctly classified samples among all test samples and measures the overall performance of the model. Precision is the proportion of true positives among all samples classified as positive. Recall is the proportion of actual positive samples that are correctly classified as positive. The F1-measure is the harmonic mean of precision and recall and summarizes both in a single score. The four metrics are calculated as follows:

$$
\begin{aligned}
\mathrm{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\mathrm{Precision} &= \frac{TP}{TP + FP}, \\
\mathrm{Recall} &= \frac{TP}{TP + FN}, \\
F1 &= \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},
\end{aligned}
$$

where TP denotes the number of positive samples correctly classified as positive, TN denotes the number of negative samples correctly classified as negative, FP denotes the number of negative samples classified as positive, and FN denotes the number of positive samples classified as negative.
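These formulas translate directly into code. The sketch below computes the binary metrics from confusion-matrix counts and, for the multi-class tasks, averages one-vs-rest precision, recall, and F1 over classes. Macro averaging is an assumption here, since the averaging scheme is not stated; the function names are hypothetical.

```python
from typing import Dict, Sequence


def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def macro_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Overall accuracy plus one-vs-rest precision/recall/F1, macro-averaged over classes."""
    labels = sorted(set(y_true) | set(y_pred))
    per_class = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        tn = len(y_true) - tp - fp - fn
        per_class.append(binary_metrics(tp, tn, fp, fn))
    result = {k: sum(m[k] for m in per_class) / len(per_class) for k in ("precision", "recall", "f1")}
    result["accuracy"] = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return result


print(binary_metrics(tp=90, tn=85, fp=10, fn=15))
print(macro_metrics([0, 0, 1, 1, 2], [0, 1, 1, 1, 2]))
```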

In addition, we use the parameter count, floating point operations (FLOPs), and model size to measure the operational performance of the method. The parameter count depends on the kernel sizes and the numbers of input and output channels of the model’s layers, and reflects the memory and other computational resources used during training and detection. FLOPs count the multiplication and addition operations performed by the model and measure its computational overhead. The model size reflects more directly the resources used in the detection phase and indicates how readily the method can be deployed on edge computing devices.
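As a rough guide to how these quantities are usually obtained for a convolutional layer, the sketch below applies common counting conventions: weights plus biases for parameters, two FLOPs per multiply-accumulate, and 4 bytes per float32 parameter for model size. These conventions are assumptions; profiling tools differ (some report multiply-accumulates rather than FLOPs), so absolute numbers from this sketch are not directly comparable with Table 3. The layer sizes are hypothetical.

```python
def conv1d_params(c_in: int, c_out: int, kernel_size: int, bias: bool = True) -> int:
    """Weights C_in * C_out * k, plus C_out biases if enabled."""
    return c_in * c_out * kernel_size + (c_out if bias else 0)


def conv1d_flops(c_in: int, c_out: int, kernel_size: int, out_length: int) -> int:
    """Counts each multiply-accumulate as 2 FLOPs (one multiply, one add); biases ignored."""
    return 2 * c_in * kernel_size * c_out * out_length


def model_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Storage for float32 weights (4 bytes each), in MiB."""
    return num_params * bytes_per_param / 1024 ** 2


# Hypothetical layer: 64 -> 64 channels, kernel size 3, output length 100.
print(conv1d_params(64, 64, 3))             # 12352
print(conv1d_flops(64, 64, 3, 100))         # 2457600
print(round(model_size_mb(1_000_000), 2))   # 3.81
```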

5.5. Ablation Experiment

In this ablation study, we examine how each designed component of the LG-BiTCN contributes to overall performance. We conducted ablation experiments on the 14-class task of the Edge-IIoTset dataset. Table 2 shows the results, where (0) replaces the gated convolutions in the LG-BiTCN with ordinary dilated causal convolutions; (1) replaces the LG-BiTCN classification module, which applies 1 × 1 convolutional layers with a 60-neuron hidden layer after GAP, with a conventional fully connected classifier with a 600-neuron hidden layer; (2) removes the end-to-end adaptive PCA dimensionality reduction module; and (3) is the full LG-BiTCN proposed in this paper.

Table 2.

Results of ablation experiments.

The results show that our structural design for the LG-BiTCN substantially reduces model complexity while improving detection performance. When the end-to-end adaptive PCA dimensionality reduction module is removed, the FLOPs, parameter count, and model size increase by 43.91%, 41.05%, and 37.7%, respectively, while the accuracy and F1-measure decrease. When the lightweight classification module is replaced, the parameter count grows to more than ten times its previous value, the model size to more than five times its previous size, and the FLOPs rise by 37.25%; at the same time, detection performance declines. These results show that both modules serve as effective lightweighting components without sacrificing accuracy, which supports the model’s suitability for real-world IoT malicious traffic detection. When the gated convolution is replaced, the FLOPs and parameter count fall by 33.2% and 30.7%, respectively, but the accuracy and F1-measure are both 0.15% lower than those of the full LG-BiTCN. This indicates that gated convolution improves detection performance at an acceptable computational cost.

In summary, the architectural design of the LG-BiTCN successfully reconciles the competing demands of computational efficiency and detection accuracy, exhibiting superior performance metrics while maintaining reduced computational overhead.

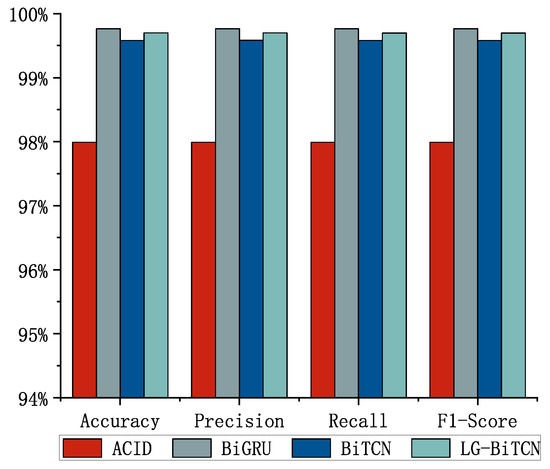

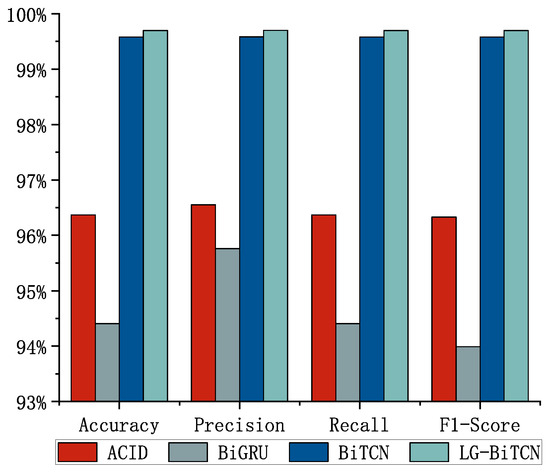

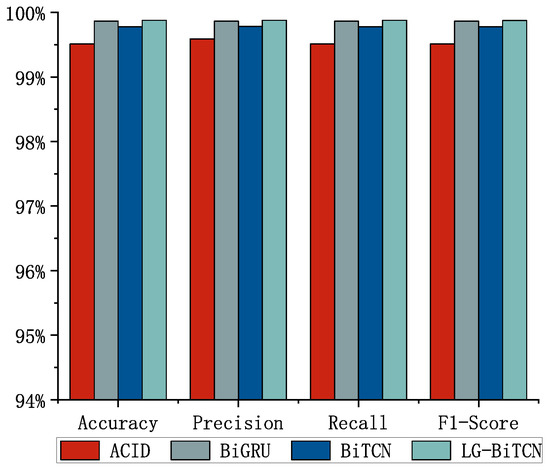

5.6. Comparison of Detection Results

We compared the LG-BiTCN with the three baseline models on the three datasets. Figure 5, Figure 6, and Figure 7 show the average detection results over 10 runs, and Table 3 compares the computational overhead of the different models. On the 14-class task of the Edge-IIoT dataset and the 10-class task of the ToN-IoT dataset, the LG-BiTCN outperforms the other models in accuracy, precision, recall, and F1-measure, reaching 99.69% and 99.88%, respectively, while exhibiting significantly lower model complexity.

Figure 5.

Edge-IIoT dataset (14-class).

Figure 6.

CIC IoT dataset 2023 (8-class).

Figure 7.

ToN-IoT dataset (10-class).

Table 3.

Computational overhead comparison.

The best-performing model on the CIC IoT dataset 2023 is the BiGRU, which achieves 99.76% accuracy, precision, recall, and F1-score. The LG-BiTCN follows, with each of these four metrics 0.07% lower than those of the BiGRU. However, the FLOPs, parameter count, and model size of the LG-BiTCN are only 6.69%, 7.82%, and 16.67% of those of the BiGRU, respectively. The LG-BiTCN therefore remains the most suitable of the compared models for deployment on edge devices in cloud–edge cooperative training.

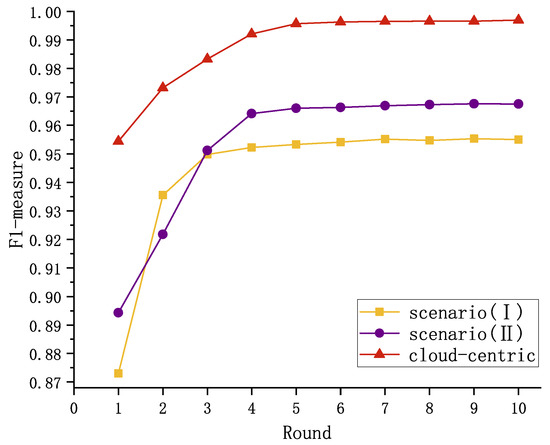

5.7. Effectiveness of Federated-Learning-Based Malicious Traffic Detection

The preceding experiments validate the efficacy of the LG-BiTCN in resource-constrained edge environments. To further evaluate its detection capability within a Federated Learning framework, we implemented a cloud–edge architecture based on the canonical FedAvg [53] algorithm. In each communication round, the edge devices start from the model parameters received from the cloud, train locally, and send the updated parameters back to the cloud for global aggregation; this process is repeated to obtain the final global model. The evaluation uses the Edge-IIoT dataset. We simulated 10 participants (edge devices) by dividing the training set into 10 parts, and set the number of local training rounds to 10 and the number of communication rounds between the cloud server and the edge devices to 10.
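The FedAvg aggregation step can be sketched as follows: each edge device trains from the current global parameters, and the cloud forms a weighted average in which device k has weight $n_k / n$, its share of the training samples. This is a minimal pure-Python illustration, not the authors’ code; parameters are stored as lists of floats keyed by name, and `local_train` is a hypothetical user-supplied function standing in for the local training rounds.

```python
from typing import Callable, Dict, List, Sequence

Params = Dict[str, List[float]]


def fedavg(client_params: Sequence[Params], client_sizes: Sequence[int]) -> Params:
    """Average client parameters, weighting client k by n_k / n (as in FedAvg)."""
    total = sum(client_sizes)
    return {
        key: [
            sum(p[key][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(len(client_params[0][key]))
        ]
        for key in client_params[0]
    }


def run_federated_training(
    init_params: Params,
    client_datasets: Sequence[list],
    local_train: Callable[[Params, list], Params],
    rounds: int = 10,
) -> Params:
    """Cloud-edge loop: broadcast global params, train on every client, aggregate."""
    params = init_params
    for _ in range(rounds):
        updates = [local_train(params, data) for data in client_datasets]
        params = fedavg(updates, [len(data) for data in client_datasets])
    return params


def toy_local_train(params: Params, data: list) -> Params:
    """Hypothetical stand-in for local training: returns the mean of the client's data."""
    return {"w": [sum(data) / len(data)]}


print(fedavg([{"w": [0.0, 2.0]}, {"w": [3.0, 5.0]}], [1, 2]))  # {'w': [2.0, 4.0]}
print(run_federated_training({"w": [0.0]}, [[1.0, 1.0], [4.0]], toy_local_train, rounds=2))
```

Weighting by sample count means a device holding more training data pulls the global model proportionally harder, which matters when the partitions are uneven.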

We evaluate efficiency and effectiveness by comparing, for the cloud-centric and Federated Learning approaches, the F1-score of the final model and the number of rounds required for convergence. In Federated Learning, this is the number of communication rounds between the cloud and the edge devices; in the cloud-centric approach, it is the total number of training epochs.

Because an individual edge device captures only a limited subset of IoT malicious traffic types, a detection model trained solely on its local data cannot identify the full spectrum of potential IoT cyberattacks. By sharing intelligence among participants, however, detection models can learn to recognize attacks that individual participants have never observed. We denote the 10 edge devices as E0, E1, E2, E3, E4, E5, E6, E7, E8, and E9, and our experiments cover two main scenarios:

Scenario (i): all 10 edge devices are given training samples of all malicious traffic categories.

Scenario (ii): the training samples of each edge device lack exactly one traffic category.

Table 4 and Table 5 list the local training sample distributions for the two scenarios, and Table 6 shows the distribution of the test samples used to evaluate the global model. In scenario (ii), the samples held by E0, E1, E2, E3, E4, E5, E6, E7, E8, and E9 exclude Ransomware, XSS, PortScanning, Backdoor, Uploading, DDoSTCP, VulnerabilityScan, Password, SQLinjection, and Normal, respectively. We also consider a cloud-centric scenario in which a cloud center trains the model on a complete dataset covering all categories of network traffic.
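One way to build the scenario (ii) split is sketched below: every sample is assigned to a device whose excluded category differs from the sample’s label, so each device lacks exactly one category. The random assignment and the helper name `partition_missing_one` are hypothetical; the actual per-device sample counts are those reported in Table 5.

```python
import random
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[list, str]  # (feature vector, label)

# Category each device lacks in scenario (ii), as listed in the text.
MISSING: Dict[str, str] = {
    "E0": "Ransomware", "E1": "XSS", "E2": "PortScanning", "E3": "Backdoor",
    "E4": "Uploading", "E5": "DDoSTCP", "E6": "VulnerabilityScan",
    "E7": "Password", "E8": "SQLinjection", "E9": "Normal",
}


def partition_missing_one(
    samples: Sequence[Sample], missing: Dict[str, str] = MISSING, seed: int = 0
) -> Dict[str, List[Sample]]:
    """Give each sample to a random device whose excluded category differs from its label."""
    rng = random.Random(seed)
    devices = sorted(missing)
    shards: Dict[str, List[Sample]] = {d: [] for d in devices}
    for sample in samples:
        eligible = [d for d in devices if missing[d] != sample[1]]
        shards[rng.choice(eligible)].append(sample)
    return shards


labels = ["Normal", "XSS", "Ransomware", "Backdoor", "Password"] * 20
shards = partition_missing_one([([0.0], y) for y in labels])
for device, data in shards.items():
    print(device, len(data))
```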

Table 4.

Training data distribution for scenario (i).

Table 5.

Training data distribution for scenario (ii).

Table 6.

Test data distribution for Federated Learning.

The models obtained in each scenario were tested on a test set containing all traffic types; Figure 8 compares the models across the experimental scenarios. The LG-BiTCN achieves strong detection results and converges quickly while being aggregated into the final global model. In scenario (i), although Federated Learning affects both the detection performance and the convergence speed of the global model, the LG-BiTCN still achieves a classification accuracy of 96.75% after just six rounds of cloud–edge training, and from the fourth round onward its F1-measure is only 3% lower than in the cloud-centric scenario. In scenario (ii), the final F1-measure and convergence speed are lower than in the other two scenarios, but the model still reaches and stabilizes above 95.22% after only four rounds of training. Given that this setting lets edge devices share attack intelligence and thereby detect attacks absent from their local data, we consider this marginal performance trade-off acceptable for distributed threat detection. These results indicate that the LG-BiTCN is a viable Federated-Learning-based intrusion detection solution for IoT environments: federated training enables edge devices to detect attack patterns they have not seen locally, improving the overall security posture of distributed IoT systems.

Figure 8.

Comparison of the performance of federated-based and cloud-centric models.

6. Conclusions

In this paper, we propose LG-BiTCN, an end-to-end lightweight deep learning model based on Federated Learning for IoT malicious traffic detection, to achieve robust detection performance under strict user privacy protection. We propose an end-to-end adaptive PCA dimensionality reduction algorithm, introduce gated convolution into the BiTCN, and optimize the BiTCN classification layer with global average pooling and pointwise convolution; together, these preserve model accuracy while reducing the parameter count, the computational resources required, and the model size. The experimental results on the Edge-IIoT dataset, CIC IoT dataset 2023, and ToN-IoT show that the LG-BiTCN not only has lower computational overhead and a smaller model size but also achieves excellent detection accuracy and F1-score. In the Federated Learning experiments, the LG-BiTCN converges within very few communication rounds and achieves detection results above 96.75%.

In real-world IoT networks, only a limited proportion of network traffic is labeled. Our future work will explore the integration of active and semi-supervised learning with resource-efficient models to enhance practical malicious traffic detection, addressing both the computational constraints of IoT devices and the challenge of detecting unknown malicious variants.

Author Contributions

Conceptualization, Y.H.; methodology, J.C. and W.L.; software, J.C.; validation, Y.G.; formal analysis, J.C. and W.L.; investigation, Y.H.; resources, Y.H.; data curation, Y.H.; writing—original draft preparation, J.C. and W.L.; writing—review and editing, J.S.; visualization, J.C.; supervision, Y.H.; project administration, Y.H.; funding acquisition, Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Fundamental Research Funds for the Central Universities under Grant 2024JCCXAQ03, and also supported by the Hillstone Network Security Project of China University Industry–University–Research Innovation Fund under Grant 2022HS061.

Data Availability Statement

The original data presented in this study are openly available in the CICIoT2023 at (http://cicresearch.ca/IOTDataset/CIC_IOT_Dataset2023, accessed on 8 March 2025), the Edge-IIoT dataset at (https://www.kaggle.com/datasets/mohamedamineferrag/edgeiiotset-cyber-security-dataset-of-iot-iiot, accessed on 8 March 2025), and the TON-IoT datasets at (https://research.unsw.edu.au/projects/toniot-datasets, accessed on 8 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| IoT | Internet of Things |
| TCN | Temporal Convolutional Network |
| BiTCN | Bidirectional Temporal Convolutional Network |
| LG-BiTCN | Lightweight Gated Bidirectional Temporal Convolutional Network |
| GDPR | General Data Protection Regulation |
| DoS | Denial of Service |
| DDoS | Distributed Denial of Service |
| BiGRU | Bidirectional Gated Recurrent Unit |
| ACID | Adaptive Clustering Intrusion Detection |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |
| GAP | Global Average Pooling |
| PCA | Principal Component Analysis |
| FLOPs | Floating Point Operations |
| SSAE | Stacked Sparse Autoencoder |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |

References

- Al-Turjman, F.; Lemayian, J.P. Intelligence, security, and vehicular sensor networks in internet of things (IoT)-enabled smart-cities: An overview. Comput. Electr. Eng. 2020, 87, 106776.

- Charef, N.; Mnaouer, A.B.; Aloqaily, M.; Bouachir, O.; Guizani, M. Artificial intelligence implication on energy sustainability in Internet of Things: A survey. Inf. Process. Manag. 2023, 60, 103212.

- Silva, J.A.A.; López, J.C.; Guzman, C.P.; Arias, N.B.; Rider, M.J.; da Silva, L.C. An IoT-based energy management system for AC microgrids with grid and security constraints. Appl. Energy 2023, 337, 120904.

- Kavitha, C.; Varalatchoumy, M.; Mithuna, H.; Bharathi, K.; Geethalakshmi, N.; Boopathi, S. Energy Monitoring and Control in the Smart Grid: Integrated Intelligent IoT and ANFIS. In Applications of Synthetic Biology in Health, Energy, and Environment; IGI Global: Hershey, PA, USA, 2023; pp. 290–316.

- Jayalaxmi, P.; Saha, R.; Kumar, G.; Kim, T.H. Machine and deep learning amalgamation for feature extraction in Industrial Internet-of-Things. Comput. Electr. Eng. 2022, 97, 107610.

- Khang, A.; Rath, K.C.; Satapathy, S.K.; Kumar, A.; Das, S.R.; Panda, M.R. Enabling the future of manufacturing: Integration of robotics and IoT to smart factory infrastructure in industry 4.0. In Handbook of Research on AI-Based Technologies and Applications in the Era of the Metaverse; IGI Global: Hershey, PA, USA, 2023; pp. 25–50.

- Javeed, D.; Gao, T.; Saeed, M.S.; Khan, M.T. FOG-Empowered Augmented-Intelligence-Based Proactive Defensive Mechanism for IoT-Enabled Smart Industries. IEEE Internet Things J. 2023, 10, 18599–18608.

- Chen, Q.; Xie, L.; Zeng, L.; Jiang, S.; Ding, W.; Huang, X.; Wang, H. Neighborhood rough residual network–based outlier detection method in IoT-enabled maritime transportation systems. IEEE Trans. Intell. Transp. Syst. 2023, 24, 11800–11811.

- Dahooie, J.H.; Mohammadian, A.; Qorbani, A.R.; Daim, T. A portfolio selection of internet of things (IoTs) applications for the sustainable urban transportation: A novel hybrid multi criteria decision making approach. Technol. Soc. 2023, 75, 102366.

- Rejeb, A.; Rejeb, K.; Treiblmaier, H.; Appolloni, A.; Alghamdi, S.; Alhasawi, Y.; Iranmanesh, M. The Internet of Things (IoT) in healthcare: Taking stock and moving forward. Internet Things 2023, 22, 100721.

- Alshammari, H.H. The internet of things healthcare monitoring system based on MQTT protocol. Alex. Eng. J. 2023, 69, 275–287.

- Perez, A.J.; Siddiqui, F.; Zeadally, S.; Lane, D. A review of IoT systems to enable independence for the elderly and disabled individuals. Internet Things 2023, 21, 100653.

- Philip, S.J.; Luu, T.J.; Carte, T. There’s No place like home: Understanding users’ intentions toward securing internet-of-things (IoT) smart home networks. Comput. Hum. Behav. 2023, 139, 107551.

- Huang, K.; Xian, R.; Xian, M.; Wang, H.; Ni, L. A comprehensive intrusion detection method for the internet of vehicles based on federated learning architecture. Comput. Secur. 2024, 147, 104067.

- Bansal, M.; Chana, I.; Clarke, S. A survey on iot big data: Current status, 13 v’s challenges, and future directions. ACM Comput. Surv. (CSUR) 2020, 53, 1–59.

- Huo, Y.; Liang, W.; Chen, J.; Zhuang, S.; Sun, J. LightGuard: A Lightweight Malicious Traffic Detection Method for Internet of Things. IEEE Internet Things J. 2024, 11, 28566–28577.

- de Souza, C.A.; Westphall, C.B.; Machado, R.B.; Loffi, L.; Westphall, C.M.; Geronimo, G.A. Intrusion detection and prevention in fog based IoT environments: A systematic literature review. Comput. Netw. 2022, 214, 109154.

- General Data Protection Regulation (GDPR). Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC; European Union: Brussels, Belgium, 2016.

- Lavaur, L.; Pahl, M.O.; Busnel, Y.; Autrel, F. The evolution of federated learning-based intrusion detection and mitigation: A survey. IEEE Trans. Netw. Serv. Manag. 2022, 19, 2309–2332.

- Hua, H.; Li, Y.; Wang, T.; Dong, N.; Li, W.; Cao, J. Edge computing with artificial intelligence: A machine learning perspective. ACM Comput. Surv. 2023, 55, 1–35.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated learning for internet of things: A comprehensive survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

- Bhardwaj, K.; Miranda, J.C.; Gavrilovska, A. Towards IoT-DDoS Prevention Using Edge Computing. In Proceedings of the USENIX Workshop on Hot Topics in Edge Computing (HotEdge 18), Boston, MA, USA, 10 July 2018.

- Wang, Y.; Zhang, Q.; Wei, T.; Cong, L.; Yu, P.; Guo, S.; Qiu, X. Lightweight Federated Learning Driven Traffic Prediction for Heterogeneous IoT Networks. IEEE Internet Things J. 2024, 11, 40656–40669.

- Tian, Q.; Guang, C.; Wenchao, C.; Si, W. A lightweight residual networks framework for DDoS attack classification based on federated learning. In Proceedings of the IEEE INFOCOM 2021–IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Vancouver, BC, Canada, 10–13 May 2021; pp. 1–6.

- Abdel-Basset, M.; Hawash, H.; Sallam, K.M.; Elgendi, I.; Munasinghe, K.; Jamalipour, A. Efficient and lightweight convolutional networks for IoT malware detection: A federated learning approach. IEEE Internet Things J. 2022, 10, 7164–7173.

- Yang, R.; He, H.; Xu, Y.; Xin, B.; Wang, Y.; Qu, Y.; Zhang, W. Efficient intrusion detection toward IoT networks using cloud–Edge collaboration. Comput. Netw. 2023, 228, 109724.

- Xin, Q.; Xu, Z.; Guo, L.; Zhao, F.; Wu, B. IoT traffic classification and anomaly detection method based on deep autoencoders. Appl. Comput. Eng. 2024, 69, 64–70.

- Zixu, T.; Liyanage, K.S.K.; Gurusamy, M. Generative adversarial network and auto encoder based anomaly detection in distributed IoT networks. In Proceedings of the GLOBECOM 2020–2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–7.

- Wang, Z.; Li, Z.; Fu, M.; Ye, Y.; Wang, P. Network traffic classification based on federated semi-supervised learning. J. Syst. Archit. 2024, 149, 103091.

- Rey, V.; Sánchez, P.M.S.; Celdrán, A.H.; Bovet, G. Federated learning for malware detection in IoT devices. Comput. Netw. 2022, 204, 108693.

- Xia, Q.; Dong, S.; Peng, T. An abnormal traffic detection method for IoT devices based on federated learning and depthwise separable convolutional neural networks. In Proceedings of the 2022 IEEE International Performance, Computing, and Communications Conference (IPCCC), Austin, TX, USA, 11–13 November 2022; pp. 352–359.

- Zhang, C.; Cui, L.; Yu, S.; James, J. A communication-efficient federated learning scheme for iot-based traffic forecasting. IEEE Internet Things J. 2021, 9, 11918–11931.

- Jin, Z.; Duan, K.; Chen, C.; He, M.; Jiang, S.; Xue, H. FedETC: Encrypted traffic classification based on federated learning. Heliyon 2024, 10, e35962.

- Wojtowytsch, S.; Weinan, E. Can shallow neural networks beat the curse of dimensionality? A mean field training perspective. IEEE Trans. Artif. Intell. 2020, 1, 121–129.

- Qin, T.; Cheng, G.; Wei, Y.; Yao, Z. Hier-SFL: Client-edge-cloud collaborative traffic classification framework based on hierarchical federated split learning. Future Gener. Comput. Syst. 2023, 149, 12–24.

- Xue, X.; Wang, Y.; Li, J.; Jiao, Z.; Ren, Z.; Gao, X. Progressive sub-band residual-learning network for MR image super resolution. IEEE J. Biomed. Health Inform. 2019, 24, 377–386.

- Shen, Y.; Zhang, Y.; Li, Y.; Ding, W.; Hu, M.; Li, Y.; Huang, C.; Wang, J. IoT Malicious Traffic Detection Based on Federated Learning. In Proceedings of the International Conference on Digital Forensics and Cyber Crime, New York, NY, USA, 30 November 2023; Springer: Cham, Switzerland, 2023; pp. 249–263.

- Gil, G.D.; Lashkari, A.H.; Mamun, M.; Ghorbani, A.A. Characterization of encrypted and VPN traffic using time-related features. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy (ICISSP 2016), Rome, Italy, 19–21 February 2016; SciTePress: Setúbal, Portugal, 2016; pp. 407–414.

- He, Z.; Yin, J.; Wang, Y.; Gui, G.; Adebisi, B.; Ohtsuki, T.; Gacanin, H.; Sari, H. Edge device identification based on federated learning and network traffic feature engineering. IEEE Trans. Cogn. Commun. Netw. 2021, 8, 1898–1909.

- Meidan, Y.; Bohadana, M.; Shabtai, A.; Guarnizo, J.D.; Ochoa, M.; Tippenhauer, N.O.; Elovici, Y. ProfilIoT: A machine learning approach for IoT device identification based on network traffic analysis. In Proceedings of the Symposium on Applied Computing, Marrakech, Morocco, 3–7 April 2017; pp. 506–509.

- Meidan, Y.; Bohadana, M.; Mathov, Y.; Mirsky, Y.; Shabtai, A.; Breitenbacher, D.; Elovici, Y. N-baiot—Network-based detection of iot botnet attacks using deep autoencoders. IEEE Pervasive Comput. 2018, 17, 12–22.

- Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 933–941.

- Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A new comprehensive realistic cyber security dataset of IoT and IIoT applications for centralized and federated learning. IEEE Access 2022, 10, 40281–40306.

- Neto, E.C.P.; Dadkhah, S.; Ferreira, R.; Zohourian, A.; Lu, R.; Ghorbani, A.A. CICIoT2023: A real-time dataset and benchmark for large-scale attacks in IoT environment. Sensors 2023, 23, 5941.

- Booij, T.M.; Chiscop, I.; Meeuwissen, E.; Moustafa, N.; Den Hartog, F.T. ToN_IoT: The role of heterogeneity and the need for standardization of features and attack types in IoT network intrusion data sets. IEEE Internet Things J. 2021, 9, 485–496.

- Moustafa, N. A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets. Sustain. Cities Soc. 2021, 72, 102994.

- Alsaedi, A.; Moustafa, N.; Tari, Z.; Mahmood, A.; Anwar, A. TON_IoT telemetry dataset: A new generation dataset of IoT and IIoT for data-driven intrusion detection systems. IEEE Access 2020, 8, 165130–165150.

- Moustafa, N.; Keshky, M.; Debiez, E.; Janicke, H. Federated TON_IoT Windows datasets for evaluating AI-based security applications. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 848–855.

- Chen, J.; Lv, T.; Cai, S.; Song, L.; Yin, S. A novel detection model for abnormal network traffic based on bidirectional temporal convolutional network. Inf. Softw. Technol. 2023, 157, 107166.

- Cho, K. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

- Diallo, A.F.; Patras, P. Adaptive clustering-based malicious traffic classification at the network edge. In Proceedings of the IEEE INFOCOM 2021–IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–10.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).