Trustworthiness Optimisation Process: A Methodology for Assessing and Enhancing Trust in AI Systems

Abstract

1. Introduction

2. Related Work

2.1. Procedural Methodologies for TAI

2.2. TAI Documentation

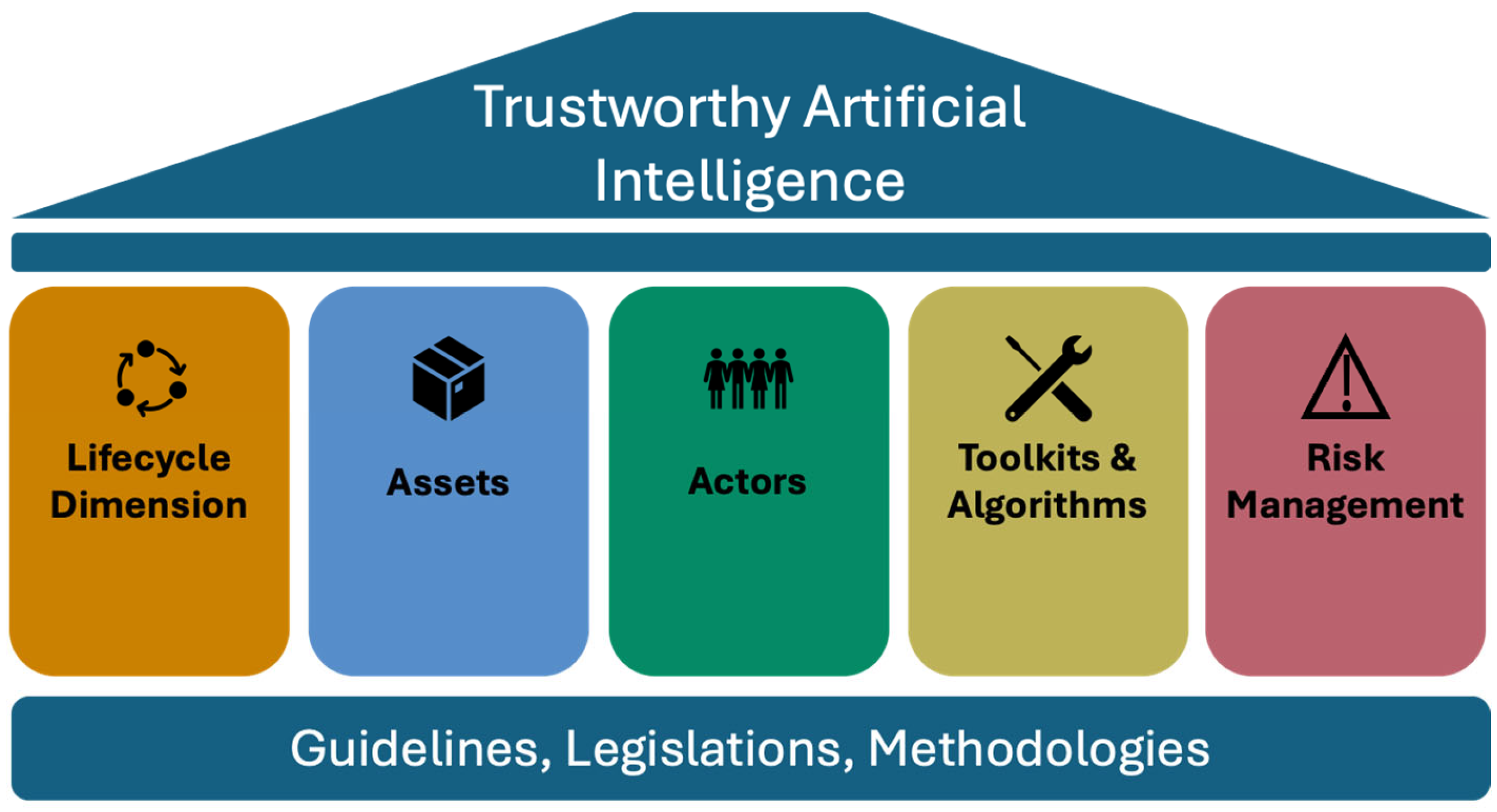

2.3. Trustworthy AI Pillars

2.4. Challenges

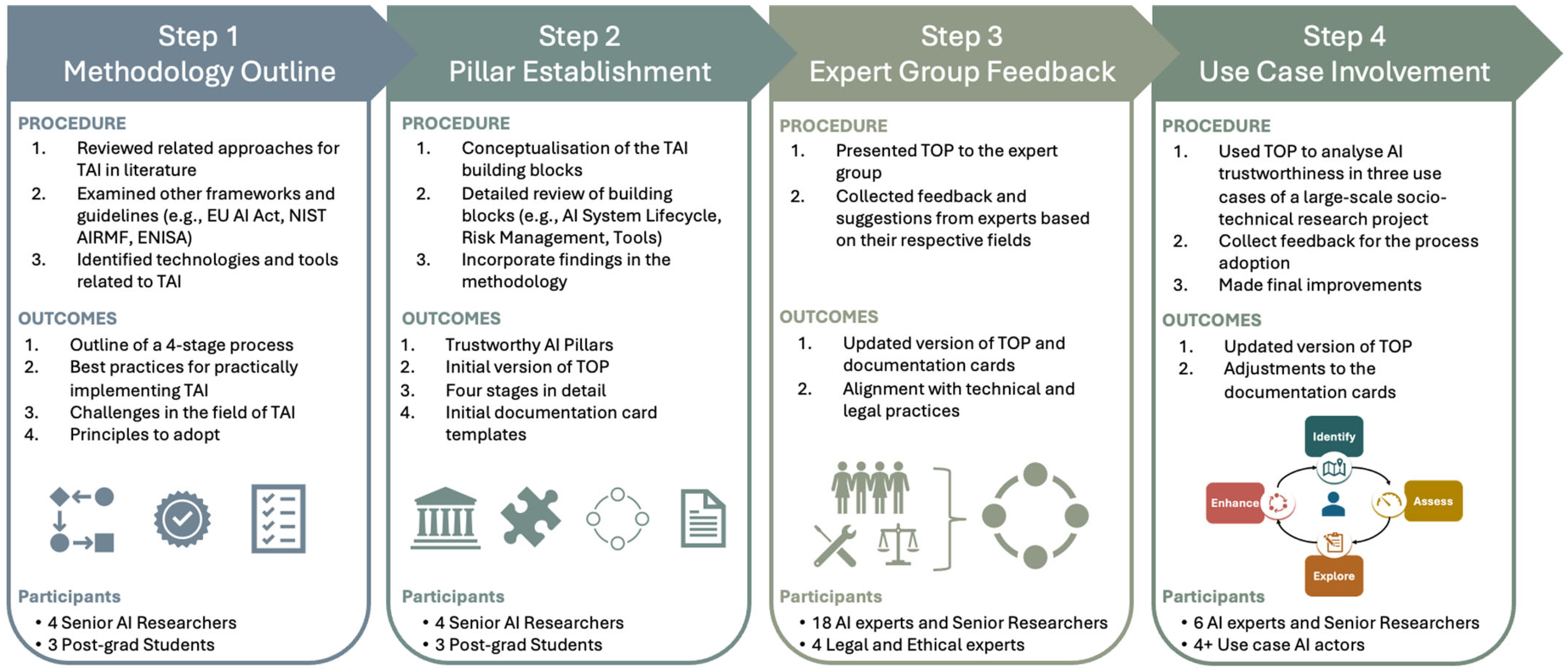

3. Research Method

- Vertical compatibility with the AI lifecycle, enabling application across all stages;

- Extensibility, facilitating continuous enrichment of the available trustworthiness methods pool;

- Conflict consideration to tackle friction and negative implications between the TC;

- Human-in-the-centre approach, placing humans as the focal point and including them throughout the process;

- Multidisciplinary engagement, where multiple stakeholders participate and provide inputs where needed.

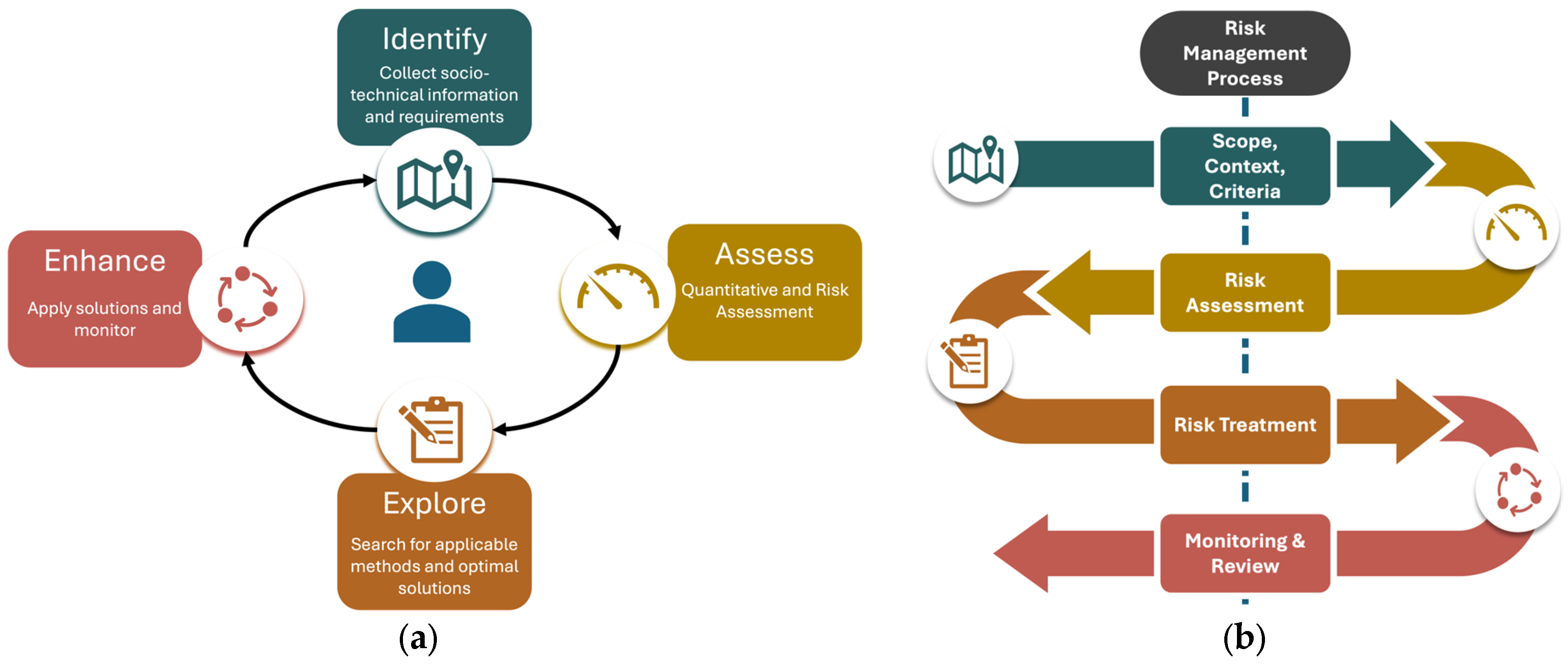

4. Methodology

4.1. Problem Definition

4.2. Identify

4.2.1. System Contextualisation

- Organisational codes of conduct, guidelines, rules, and procedures;

- Legal requirements and compliance with regulatory frameworks;

- Business environment targets and Key Performance Indicators (KPIs);

- Technical documentation of assets;

- Purpose and specifications of the AI system;

- End-user requirements;

- Possible risks, limitations, and misuse scenarios;

- Third-party agreements, collaborators, and artefacts;

- Environmental and climate concerns;

- User preferences regarding trustworthiness.

4.2.2. Information Gathering

4.2.3. Metric and Method Linking

4.2.4. Risk and Vulnerability Deriving

4.2.5. Documenting Information

4.3. Assess

4.4. Explore

- The solution sets are represented by the alternatives $A_i$, where $i = 1, \dots, m$;

- The indicators, such as metrics and risk levels, are represented by the criteria $C_j$, where $j = 1, \dots, n$;

- The organisational or supervisor preferences of the actors are represented by the weights $w_j$, associated with each criterion $C_j$;

- The performance of each alternative $A_i$ with respect to the criterion $C_j$ is represented by $x_{ij}$.
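The four elements listed above fit the usual layout of a multi-criteria decision-making problem. The block below is a sketch in conventional notation, which is assumed here rather than taken from the source: $m$ alternatives $A_i$, $n$ criteria $C_j$, weights $w_j$, and performances $x_{ij}$ collected in a decision matrix $X$. The weighted sum in the second line is only one common way to aggregate such a matrix and is not necessarily the aggregation used in this methodology; $\tilde{x}_{ij}$ denotes a hypothetical normalised performance.

$$
X = \begin{pmatrix}
x_{11} & \cdots & x_{1n} \\
\vdots & \ddots & \vdots \\
x_{m1} & \cdots & x_{mn}
\end{pmatrix},
\qquad
w = (w_1, \dots, w_n), \quad w_j \ge 0
$$

$$
S_i = \sum_{j=1}^{n} w_j \, \tilde{x}_{ij}, \qquad i = 1, \dots, m
$$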

4.5. Enhance

5. Case Study

5.1. Identify

5.2. Assess

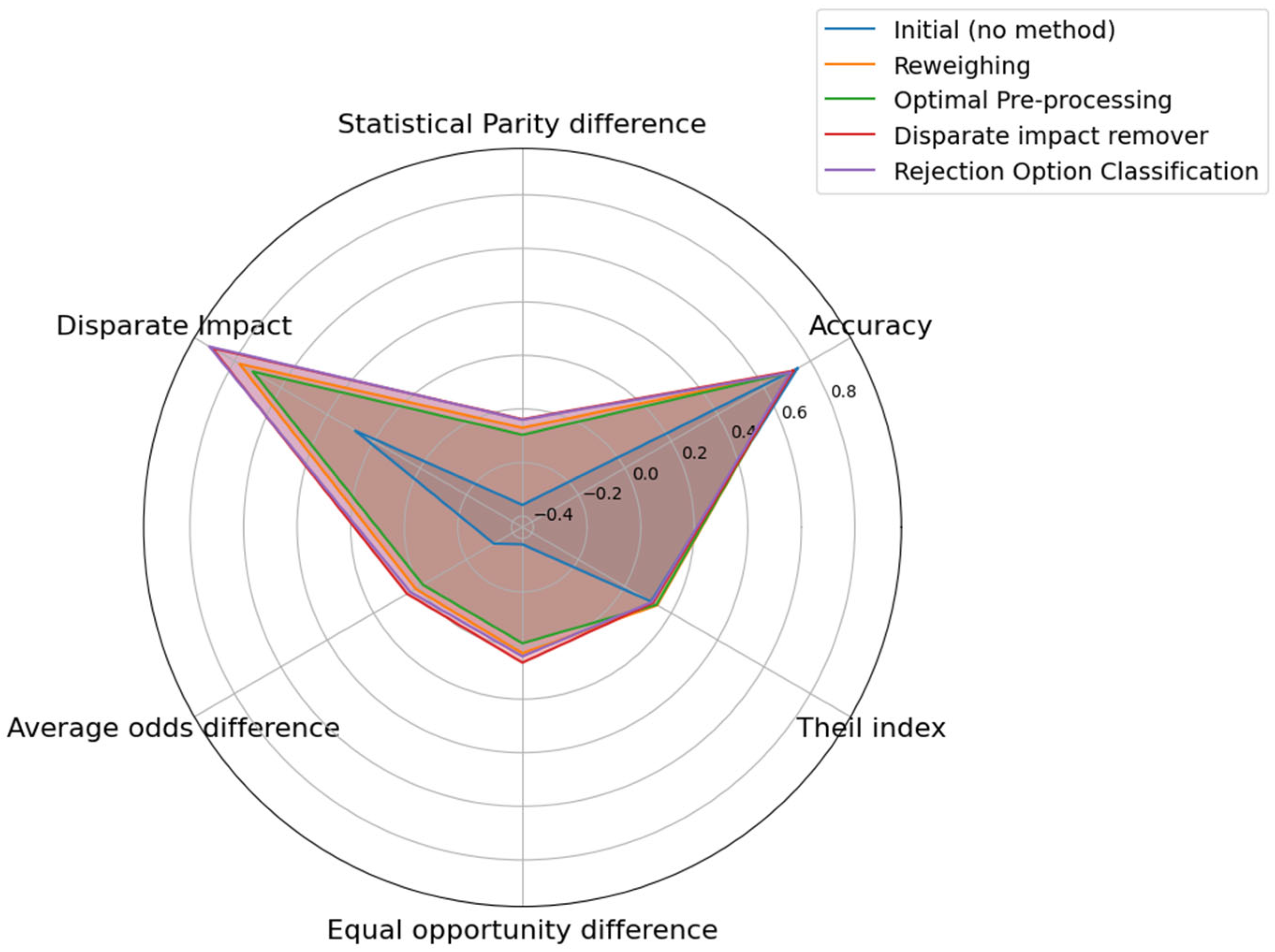

5.3. Explore

5.4. Enhance

6. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Singla, A.; Sukharevsky, A.; Yee, L.; Chui, M.; Hall, B. The State of AI in Early 2024: Gen AI Adoption Spikes and Starts to Generate Value; McKinsey and Company: Brussels, Belgium, 2024.

2. Baeza-Yates, R.; Fayyad, U.M. Responsible AI: An Urgent Mandate. IEEE Intell. Syst. 2024, 39, 12–17.

3. Mariani, R.; Rossi, F.; Cucchiara, R.; Pavone, M.; Simkin, B.; Koene, A.; Papenbrock, J. Trustworthy AI—Part 1. Computer 2023, 56, 14–18.

4. Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; de Prado, M.L.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion 2023, 99, 101896.

5. Prem, E. From Ethical AI Frameworks to Tools: A Review of Approaches. AI Ethics 2023, 3, 699–716.

6. Narayanan, M.; Schoeberl, C. A Matrix for Selecting Responsible AI Frameworks. Center for Security and Emerging Technology. 2023. Available online: https://cset.georgetown.edu/publication/a-matrix-for-selecting-responsible-ai-frameworks/ (accessed on 26 February 2025).

7. Kamiran, F.; Calders, T. Data Preprocessing Techniques for Classification without Discrimination. Knowl. Inf. Syst. 2012, 33, 1–33.

8. Zhang, B.H.; Lemoine, B.; Mitchell, M. Mitigating Unwanted Biases with Adversarial Learning. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 1–3 February 2018; pp. 335–340.

9. Hardt, M.; Price, E.; Srebro, N. Equality of Opportunity in Supervised Learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Nice, France, 2016; Volume 29. Available online: https://proceedings.neurips.cc/paper/2016/hash/9d2682367c3935defcb1f9e247a97c0d-Abstract.html (accessed on 26 February 2025).

10. Boza, P.; Evgeniou, T. Implementing AI Principles: Frameworks, Processes, and Tools; SSRN Scholarly Paper; SSRN: Rochester, NY, USA, 2021.

11. Floridi, L.; Holweg, M.; Taddeo, M.; Amaya, J.; Mökander, J.; Wen, Y. capAI—A Procedure for Conducting Conformity Assessment of AI Systems in Line with the EU Artificial Intelligence Act; SSRN Scholarly Paper; SSRN: Rochester, NY, USA, 2022.

12. Mökander, J.; Schuett, J.; Kirk, H.R.; Floridi, L. Auditing Large Language Models: A Three-Layered Approach. AI Ethics 2024, 4, 1085–1115.

13. Mökander, J.; Floridi, L. Operationalising AI governance through ethics-based auditing: An industry case study. AI Ethics 2022, 3, 451–468.

14. Zicari, R.V.; Brodersen, J.; Brusseau, J.; Dudder, B.; Eichhorn, T.; Ivanov, T.; Kararigas, G.; Kringen, P.; McCullough, M.; Moslein, F.; et al. Z-Inspection®: A Process to Assess Trustworthy AI. IEEE Trans. Technol. Soc. 2021, 2, 83–97.

15. Allahabadi, H.; Amann, J.; Balot, I.; Beretta, A.; Binkley, C.; Bozenhard, J.; Bruneault, F.; Brusseau, J.; Candemir, S.; Cappellini, L.A.; et al. Assessing Trustworthy AI in Times of COVID-19: Deep Learning for Predicting a Multiregional Score Conveying the Degree of Lung Compromise in COVID-19 Patients. IEEE Trans. Technol. Soc. 2022, 3, 272–289.

16. Poretschkin, M.; Schmitz, A.; Akila, M.; Adilova, L.; Becker, D.; Cremers, A.B.; Hecker, D.; Houben, S.; Mock, M.; Rosenzweig, J.; et al. Guideline for Trustworthy Artificial Intelligence—AI Assessment Catalog. arXiv 2023, arXiv:2307.03681.

17. Vakkuri, V.; Kemell, K.-K.; Jantunen, M.; Halme, E.; Abrahamsson, P. ECCOLA—A method for implementing ethically aligned AI systems. J. Syst. Softw. 2021, 182, 111067.

18. Nasr-Azadani, M.M.; Chatelain, J.-L. The Journey to Trustworthy AI- Part 1: Pursuit of Pragmatic Frameworks. arXiv 2024, arXiv:2403.15457.

19. Brunner, S.; Frischknecht-Gruber, C.M.-L.; Reif, M.; Weng, J. A Comprehensive Framework for Ensuring the Trustworthiness of AI Systems. In Proceedings of the 33rd European Safety and Reliability Conference; Research Publishing Services: Southampton, UK, 2023; pp. 2772–2779.

20. Baker-Brunnbauer, J. TAII Framework. In Trustworthy Artificial Intelligence Implementation: Introduction to the TAII Framework; Springer International Publishing: Cham, Switzerland, 2022; pp. 97–127.

21. Guerrero Peño, E. How the TAII Framework Could Influence the Amazon’s Astro Home Robot Development; SSRN Scholarly Paper; SSRN: Rochester, NY, USA, 2022.

22. Baldassarre, M.T.; Gigante, D.; Kalinowski, M.; Ragone, A. POLARIS: A Framework to Guide the Development of Trustworthy AI Systems. In Proceedings of the IEEE/ACM 3rd International Conference on AI Engineering—Software Engineering for AI, CAIN’24, Lisbon, Portugal, 14–15 April 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 200–210.

23. Stettinger, G.; Weissensteiner, P.; Khastgir, S. Trustworthiness Assurance Assessment for High-Risk AI-Based Systems. IEEE Access 2024, 12, 22718–22745.

24. Confalonieri, R.; Alonso-Moral, J.M. An Operational Framework for Guiding Human Evaluation in Explainable and Trustworthy Artificial Intelligence. IEEE Intell. Syst. 2023, 39, 18–28.

25. Hohma, E.; Lütge, C. From Trustworthy Principles to a Trustworthy Development Process: The Need and Elements of Trusted Development of AI Systems. AI 2023, 4, 904–925.

26. Ronanki, K.; Cabrero-Daniel, B.; Horkoff, J.; Berger, C. RE-Centric Recommendations for the Development of Trustworthy(Er) Autonomous Systems. In Proceedings of the First International Symposium on Trustworthy Autonomous Systems, TAS ’23, Edinburgh, UK, 11–12 July 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–8.

27. Microsoft. Responsible AI Transparency Report: How We Build, Support Our Customers, and Grow. 2024. Available online: https://cdn-dynmedia-1.microsoft.com/is/content/microsoftcorp/microsoft/msc/documents/presentations/CSR/Responsible-AI-Transparency-Report-2024.pdf (accessed on 26 February 2025).

28. PwC. From Principles to Practice: Responsible AI in Action. 2024. Available online: https://www.strategy-business.com/article/From-principles-to-practice-Responsible-AI-in-action (accessed on 26 February 2025).

29. Digital Catapult. Operationalising Ethics in AI. 20 March 2024. Available online: https://www.digicatapult.org.uk/blogs/post/operationalising-ethics-in-ai/ (accessed on 26 February 2025).

30. IBM. Scale Trusted AI with watsonx.governance. 2024. Available online: https://www.ibm.com/products/watsonx-governance (accessed on 26 February 2025).

31. Accenture. Responsible AI: From Principles to Practice. 2021. Available online: https://www.accenture.com/us-en/insights/artificial-intelligence/responsible-ai-principles-practice (accessed on 26 February 2025).

32. Vella, H. Accenture, AWS Launch Tool to Aid Responsible AI Adoption. AI Business, 28 August 2024. Available online: https://aibusiness.com/responsible-ai/accenture-aws-launch-tool-to-aid-responsible-ai-adoption (accessed on 26 February 2025).

33. Micheli, M.; Hupont, I.; Delipetrev, B.; Soler-Garrido, J. The landscape of data and AI documentation approaches in the European policy context. Ethics Inf. Technol. 2023, 25, 56.

34. Königstorfer, F. A Comprehensive Review of Techniques for Documenting Artificial Intelligence. Digit. Policy Regul. Gov. 2024, 26, 545–559.

35. Oreamuno, E.L.; Khan, R.F.; Bangash, A.A.; Stinson, C.; Adams, B. The State of Documentation Practices of Third-Party Machine Learning Models and Datasets. IEEE Softw. 2024, 41, 52–59.

36. Pushkarna, M.; Zaldivar, A.; Kjartansson, O. Data Cards: Purposeful and Transparent Dataset Documentation for Responsible AI. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; pp. 1776–1826.

37. Mitchell, M.; Wu, S.; Zaldivar, A.; Barnes, P.; Vasserman, L.; Hutchinson, B.; Spitzer, E.; Raji, I.D.; Gebru, T. Model Cards for Model Reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; ACM: Atlanta, GA, USA, 2019; pp. 220–229.

38. Crisan, A.; Drouhard, M.; Vig, J.; Rajani, N. Interactive Model Cards: A Human-Centered Approach to Model Documentation. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’22, Seoul, Republic of Korea, 21–24 June 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 427–439.

39. Liang, W.; Rajani, N.; Yang, X.; Ozoani, E.; Wu, E.; Chen, Y.; Smith, D.S.; Zou, J. What’s Documented in AI? Systematic Analysis of 32K AI Model Cards. arXiv 2024, arXiv:2402.05160.

40. Alsallakh, B.; Cheema, A.; Procope, C.; Adkins, D.; McReynolds, E.; Wang, E.; Pehl, G.; Green, N.; Zvyagina, P. System-Level Transparency of Machine Learning. Meta AI. 2022. Available online: https://ai.meta.com/research/publications/system-level-transparency-of-machine-learning/ (accessed on 26 February 2025).

41. Meta. Introducing 22 System Cards That Explain How AI Powers Experiences on Facebook and Instagram. 29 June 2023. Available online: https://ai.meta.com/blog/how-ai-powers-experiences-facebook-instagram-system-cards/ (accessed on 26 February 2025).

42. Pershan, C.; Vasse’i, R.M.; McCrosky, J. This Is Not a System Card: Scrutinising Meta’s Transparency Announcements. 1 August 2023. Available online: https://foundation.mozilla.org/en/blog/this-is-not-a-system-card-scrutinising-metas-transparency-announcements/ (accessed on 26 February 2025).

43. Hupont, I.; Fernández-Llorca, D.; Baldassarri, S.; Gómez, E. Use Case Cards: A Use Case Reporting Framework Inspired by the European AI Act. arXiv 2023, arXiv:2306.13701.

44. Gursoy, F.; Kakadiaris, I.A. System Cards for AI-Based Decision-Making for Public Policy. arXiv 2022, arXiv:2203.04754.

45. Golpayegani, D.; Hupont, I.; Panigutti, C.; Pandit, H.J.; Schade, S.; O’Sullivan, D.; Lewis, D. AI Cards: Towards an Applied Framework for Machine-Readable AI and Risk Documentation Inspired by the EU AI Act. In Privacy Technologies and Policy; Jensen, M., Lauradoux, C., Rannenberg, K., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2024; Volume 14831, pp. 48–72.

46. Giner-Miguelez, J.; Gómez, A.; Cabot, J. Using Large Language Models to Enrich the Documentation of Datasets for Machine Learning. arXiv 2024, arXiv:2404.15320.

47. Yang, X.; Liang, W.; Zou, J. Navigating Dataset Documentations in AI: A Large-Scale Analysis of Dataset Cards on Hugging Face. arXiv 2024, arXiv:2401.13822.

48. Mehta, S.; Rogers, A.; Gilbert, T.K. Dynamic Documentation for AI Systems. arXiv 2023, arXiv:2303.10854.

49. Chmielinski, K.; Newman, S.; Kranzinger, C.N.; Hind, M.; Vaughan, J.W.; Mitchell, M.; Stoyanovich, J.; McMillan-Major, A.; McReynolds, E.; Esfahany, K. The CLeAR Documentation Framework for AI Transparency. Harvard Kennedy School Shorenstein Center Discussion Paper 2024. Available online: https://shorensteincenter.org/clear-documentation-framework-ai-transparency-recommendations-practitioners-context-policymakers/ (accessed on 26 February 2025).

50. Wing, J.M. Trustworthy AI. Commun. ACM 2021, 64, 64–71.

51. AI HLEG. Ethics Guidelines for Trustworthy AI. 2019. Available online: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed on 26 February 2025).

52. ENISA; Adamczyk, M.; Polemi, N.; Praça, I.; Moulinos, K. A Multilayer Framework for Good Cybersecurity Practices for AI: Security and Resilience for Smart Health Services and Infrastructures; Adamczyk, M., Moulinos, K., Eds.; European Union Agency for Cybersecurity: Athens, Greece, 2023.

53. NIST. Artificial Intelligence Risk Management Framework (AI RMF 1.0); NIST: Gaithersburg, MD, USA, 2023.

54. Kaur, D.; Uslu, S.; Rittichier, K.J.; Durresi, A. Trustworthy Artificial Intelligence: A Review. ACM Comput. Surv. 2022, 55, 1–38.

55. Li, B.; Qi, P.; Liu, B.; Di, S.; Liu, J.; Pei, J.; Yi, J.; Zhou, B. Trustworthy AI: From Principles to Practices. ACM Comput. Surv. 2023, 55, 1–46.

56. U.S.G.S.A. Understanding and Managing the AI Lifecycle. AI Guide for Government; 2023. Available online: https://coe.gsa.gov/coe/ai-guide-for-government/understanding-managing-ai-lifecycle/ (accessed on 26 February 2025).

57. OECD. OECD Framework for the Classification of AI Systems; OECD Digital Economy Papers, No. 323; OECD: Paris, France, 2022.

58. Calegari, R.; Castañé, G.G.; Milano, M.; O’Sullivan, B. Assessing and Enforcing Fairness in the AI Lifecycle. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, Macau, China, 19–25 August 2023; International Joint Conferences on Artificial Intelligence Organization: Macau, China, 2023; pp. 6554–6662.

59. Schlegel, M.; Sattler, K.-U. Management of Machine Learning Lifecycle Artifacts: A Survey. arXiv 2022, arXiv:2210.11831.

60. Toreini, E.; Aitken, M.; Coopamootoo, K.P.L.; Elliott, K.; Zelaya, V.G.; Missier, P.; Ng, M.; van Moorsel, A. Technologies for Trustworthy Machine Learning: A Survey in a Socio-Technical Context. arXiv 2022, arXiv:2007.08911.

61. Suresh, H.; Guttag, J.V. A Framework for Understanding Sources of Harm throughout the Machine Learning Life Cycle. arXiv 2021, arXiv:1901.10002.

62. Liu, H.; Wang, Y.; Fan, W.; Liu, X.; Li, Y.; Jain, S.; Liu, Y.; Jain, A.K.; Tang, J. Trustworthy AI: A Computational Perspective. ACM Trans. Intell. Syst. Technol. 2022, 14, 1–59.

63. Mentzas, G.; Fikardos, M.; Lepenioti, K.; Apostolou, D. Exploring the landscape of trustworthy artificial intelligence: Status and challenges. Intell. Decis. Technol. 2024, 18, 837–854.

64. Zhao, W.; Alwidian, S.; Mahmoud, Q.H. Adversarial Training Methods for Deep Learning: A Systematic Review. Algorithms 2022, 15, 283.

65. OECD. Advancing Accountability in AI: Governing and Managing Risks Throughout the Lifecycle for Trustworthy AI; OECD Digital Economy Papers, No. 349; OECD Publishing: Paris, France, 2023.

66. EU AI Act: First Regulation on Artificial Intelligence. Topics|European Parliament. 8 June 2023. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 26 February 2025).

67. ISO/IEC TR 24028:2020(En); Information Technology—Artificial Intelligence—Overview of Trustworthiness in Artificial Intelligence. ISO: Geneva, Switzerland, 2020. Available online: https://www.iso.org/obp/ui/en/#iso:std:iso-iec:tr:24028:ed-1:v1:en (accessed on 2 December 2024).

68. Mannion, P.; Heintz, F.; Karimpanal, T.G.; Vamplew, P. Multi-Objective Decision Making for Trustworthy AI. In Proceedings of the Multi-Objective Decision Making (MODeM) Workshop, Online, 14–16 July 2021.

69. Alsalem, M.; Alamoodi, A.; Albahri, O.; Albahri, A.; Martínez, L.; Yera, R.; Duhaim, A.M.; Sharaf, I.M. Evaluation of trustworthy artificial intelligent healthcare applications using multi-criteria decision-making approach. Expert Syst. Appl. 2024, 246, 123066.

70. Mattioli, J.; Sohier, H.; Delaborde, A.; Pedroza, G.; Amokrane-Ferka, K.; Awadid, A.; Chihani, Z.; Khalfaoui, S. Towards a Holistic Approach for AI Trustworthiness Assessment Based upon Aids for Multi-Criteria Aggregation. In Proceedings of the SafeAI 2023-The AAAI’s Workshop on Artificial Intelligence Safety (Vol. 3381), Washington, DC, USA, 13–14 February 2023.

71. ISO 31000:2018; Risk Management—Guidelines. ISO: Geneva, Switzerland, 2023. Available online: https://www.iso.org/standard/65694.html (accessed on 5 February 2025).

72. ISO/IEC 42001:2023; Information Technology—Artificial Intelligence—Management System. ISO: Geneva, Switzerland, 2023. Available online: https://www.iso.org/standard/81230.html (accessed on 5 February 2025).

73. ISO/IEC 23894:2023; Information Technology—Artificial Intelligence—Guidance on Risk Management. ISO: Geneva, Switzerland, 2023. Available online: https://www.iso.org/standard/77304.html (accessed on 5 February 2025).

74. ISO/IEC 27005:2022; Information Security, Cybersecurity and Privacy Protection—Guidance on Managing Information Security Risks. ISO: Geneva, Switzerland, 2022. Available online: https://www.iso.org/standard/80585.html (accessed on 5 February 2025).

75. Hwang, C.-L.; Yoon, K. Methods for Multiple Attribute Decision Making. In Multiple Attribute Decision Making: Methods and Applications A State-of-the-Art Survey; Hwang, C.-L., Yoon, K., Eds.; Springer: Berlin/Heidelberg, Germany, 1981; pp. 58–191.

76. Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

77. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

78. Verma, S.; Dickerson, J.; Hines, K. Counterfactual explanations for machine learning: A review. arXiv 2020, arXiv:2010.10596.

79. Kohavi, R. Scaling Up the Accuracy of Naive-Bayes Classifiers: A Decision-Tree Hybrid. AAAI. Available online: https://aaai.org/papers/kdd96-033-scaling-up-the-accuracy-of-naive-bayes-classifiers-a-decision-tree-hybrid/ (accessed on 21 November 2024).

80. Bellamy, R.K.E.; Dey, K.; Hind, M.; Hoffman, S.C.; Houde, S.; Kannan, K.; Lohia, P.; Martino, J.; Mehta, S.; Mojsilovic, A.; et al. AI Fairness 360: An Extensible Toolkit for Detecting, Understanding, and Mitigating Unwanted Algorithmic Bias. arXiv 2018, arXiv:1810.01943.

81. Calmon, F.; Wei, D.; Vinzamuri, B.; Ramamurthy, K.N.; Varshney, K.R. Optimized Pre-Processing for Discrimination Prevention. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Nice, France, 2017; Volume 30. Available online: https://proceedings.neurips.cc/paper_files/paper/2017/hash/9a49a25d845a483fae4be7e341368e36-Abstract.html (accessed on 26 February 2025).

82. Pleiss, G.; Raghavan, M.; Wu, F.; Kleinberg, J.; Weinberger, K.Q. On Fairness and Calibration. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Nice, France, 2017; Volume 30. Available online: https://proceedings.neurips.cc/paper/2017/hash/b8b9c74ac526fffbeb2d39ab038d1cd7-Abstract.html (accessed on 26 February 2025).

83. Kamishima, T.; Akaho, S.; Asoh, H.; Sakuma, J. Fairness-Aware Classifier with Prejudice Remover Regularizer. In Machine Learning and Knowledge Discovery in Databases; Flach, P.A., De Bie, T., Cristianini, N., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7524, pp. 35–50.

84. Kamiran, F.; Karim, A.; Zhang, X. Decision Theory for Discrimination-Aware Classification. In Proceedings of the 2012 IEEE 12th International Conference on Data Mining, Brussels, Belgium, 10–13 December 2012; pp. 924–929.

85. Zemel, R.; Wu, Y.; Swersky, K.; Pitassi, T.; Dwork, C. Learning Fair Representations. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 17–19 June 2013; pp. 325–333. Available online: https://proceedings.mlr.press/v28/zemel13.html (accessed on 26 February 2025).

86. Kearns, M.; Neel, S.; Roth, A.; Wu, Z.S. Preventing Fairness Gerrymandering: Auditing and Learning for Subgroup Fairness. arXiv 2018, arXiv:1711.05144.

87. Feldman, M.; Friedler, S.A.; Moeller, J.; Scheidegger, C.; Venkatasubramanian, S. Certifying and Removing Disparate Impact. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, NSW, Australia, 10–13 August 2015; ACM: Sydney, NSW, Australia, 2015; pp. 259–268.

88. Sanderson, C.; Douglas, D.; Lu, Q. Implementing responsible AI: Tensions and trade-offs between ethics aspects. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, Australia, 18–23 June 2023.

89. Sanderson, C.; Schleiger, E.; Douglas, D.; Kuhnert, P.; Lu, Q. Resolving Ethics Trade-Offs in Implementing Responsible AI. arXiv 2024, arXiv:2401.08103.

90. Pan, S.; Luo, L.; Wang, Y.; Chen, C.; Wang, J.; Wu, X. Unifying Large Language Models and Knowledge Graphs: A Roadmap. arXiv 2023, arXiv:2306.08302.

91. Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Li, B.; Zhu, E.; Jiang, L.; Zhang, X.; Zhang, S.; Liu, J.; et al. AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation. arXiv 2023, arXiv:2308.08155.

| Use Case | AI System Lifecycle Stage | Available Assets | Completed Cards | Lifecycle Characteristic |
|---|---|---|---|---|
| S1—Design | Design | Application Domain, Data | Use case, Data | pre-processing |
| S2—Develop | Develop | Application Domain, Data, AI Model | Use case, Data, Model | pre-processing, in-processing |
| S3—Deploy | Deploy | Application Domain, Data, AI Model | Use case, Data, Model | pre-processing, in-processing, post-processing |

| Name | Description | Ideal Value | Acceptable Range |
|---|---|---|---|
| BALANCED ACCURACY | Accuracy metric for the classifier | - | >0.7 |
| STATISTICAL PARITY DIFFERENCE | Difference between the rate of favourable outcomes received by the unprivileged group and that of the privileged group | 0 | [−0.1, 0.1] |
| DISPARATE IMPACT | Ratio of the rate of favourable outcomes for the unprivileged group to that of the privileged group | 1 | [0.7, 1.3] |
| AVERAGE ODDS DIFFERENCE | Average of the differences in false positive rate and true positive rate between the unprivileged and privileged groups | 0 | [−0.1, 0.1] |
| EQUAL OPPORTUNITY DIFFERENCE | Difference of true positive rates between the unprivileged and privileged groups | 0 | [−0.1, 0.1] |
| THEIL INDEX | Generalised entropy of benefit for all individuals in the dataset; measures the inequality in benefit allocation for individuals | 0 | - |
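The group-fairness definitions in the table above can be made concrete with a short computation. The following is a minimal sketch, assuming binary labels where 1 is the favourable outcome and a boolean mask marking the privileged group; the function names and the toy arrays are hypothetical and are not taken from the case study. The Theil index is left out for brevity.

```python
import numpy as np

def group_rates(y_true, y_pred, mask):
    """Return (selection rate, true positive rate, false positive rate) for one group."""
    yt, yp = y_true[mask], y_pred[mask]
    selection_rate = yp.mean()
    tpr = yp[yt == 1].mean()
    fpr = yp[yt == 0].mean()
    return selection_rate, tpr, fpr

def fairness_metrics(y_true, y_pred, privileged):
    """Compute the table's metrics; differences are unprivileged minus privileged."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    priv = np.asarray(privileged, dtype=bool)
    sel_u, tpr_u, fpr_u = group_rates(y_true, y_pred, ~priv)
    sel_p, tpr_p, fpr_p = group_rates(y_true, y_pred, priv)
    # Balanced accuracy over the whole dataset: mean of TPR and TNR.
    tpr_all = y_pred[y_true == 1].mean()
    tnr_all = 1.0 - y_pred[y_true == 0].mean()
    return {
        "balanced_accuracy": (tpr_all + tnr_all) / 2,
        "statistical_parity_difference": sel_u - sel_p,
        "disparate_impact": sel_u / sel_p,
        "average_odds_difference": ((fpr_u - fpr_p) + (tpr_u - tpr_p)) / 2,
        "equal_opportunity_difference": tpr_u - tpr_p,
    }

# Hypothetical toy data: first four individuals privileged, last four unprivileged.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
privileged = [1, 1, 1, 1, 0, 0, 0, 0]

metrics = fairness_metrics(y_true, y_pred, privileged)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Negative difference values and a disparate impact below 1 indicate that the unprivileged group receives favourable outcomes less often, which is the direction the acceptable ranges in the table are meant to bound.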

| Pre-Processing | In-Processing | Post-Processing |
|---|---|---|
| Optimised pre-processing [81] | Adversarial debiasing [8] | Calibrated equalized odds [82] |
| Reweighting [7] | Prejudice remover [83] | Rejection option classification [84] |
| Learning fair representations [85] | Gerry-Fair (FairFictPlay) [86] | |
| Disparate impact remover [87] | | |
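How the method pool narrows with the lifecycle stage can be sketched as a simple lookup. The snippet below is illustrative only: the method names are transcribed from the table above, the stage-to-category mapping follows the "Lifecycle Characteristic" column of the scenario table, and the names `METHOD_POOL`, `STAGE_CATEGORIES` and `candidate_methods` are hypothetical.

```python
# Method pool grouped by the point in the pipeline where each method intervenes.
METHOD_POOL = {
    "pre-processing": [
        "Optimised pre-processing",
        "Reweighting",
        "Learning fair representations",
        "Disparate impact remover",
    ],
    "in-processing": [
        "Adversarial debiasing",
        "Prejudice remover",
        "Gerry-Fair (FairFictPlay)",
    ],
    "post-processing": [
        "Calibrated equalized odds",
        "Rejection option classification",
    ],
}

# Categories available at each lifecycle stage (Design, Develop, Deploy).
STAGE_CATEGORIES = {
    "Design": ["pre-processing"],
    "Develop": ["pre-processing", "in-processing"],
    "Deploy": ["pre-processing", "in-processing", "post-processing"],
}

def candidate_methods(stage):
    """Return every method whose category is available at the given stage."""
    return [m for cat in STAGE_CATEGORIES[stage] for m in METHOD_POOL[cat]]

for stage in STAGE_CATEGORIES:
    print(stage, len(candidate_methods(stage)), "candidate methods")
```

Keeping the pool as plain data means a new method can be added by appending one entry, which is in the spirit of the extensibility requirement stated in the research method.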

| Scenario | Use Case Card | Data Card | Model Card | Method Card |
|---|---|---|---|---|
| S1—Design | Partially | Partially | Incomplete | Partially |
| S2—Develop | Partially | Completed | Partially | Completed |
| S3—Deploy | Completed | Completed | Completed | Completed |

| Scenario | Accuracy | Statistical Parity Difference | Disparate Impact | Average Odds Difference | Equal Opportunity Difference | Theil Index |
|---|---|---|---|---|---|---|
| S1—Design | - | −0.1532 | 0.5949 | - | - | - |
| S2—Develop, S3—Deploy | 0.7437 | −0.3580 | 0.2794 | −0.3181 | −0.3768 | 0.1129 |
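As a worked check, the values in the S2/S3 row above can be compared against the acceptable ranges given in the metric definitions table. This is a sketch under two assumptions: that the "Accuracy" column corresponds to the balanced accuracy range (">0.7"), and that the interval bounds are inclusive. The dictionary and function names are hypothetical.

```python
import math

# Acceptable ranges from the metric definitions table; None means no range is given.
ACCEPTABLE_RANGE = {
    "Accuracy": (0.7, math.inf),  # assumption: maps to the balanced accuracy range
    "Statistical Parity Difference": (-0.1, 0.1),
    "Disparate Impact": (0.7, 1.3),
    "Average Odds Difference": (-0.1, 0.1),
    "Equal Opportunity Difference": (-0.1, 0.1),
    "Theil Index": None,
}
STRICT_LOWER_BOUND = {"Accuracy"}  # the table states ">0.7", not ">=0.7"

# Values reported for scenarios S2 (Develop) and S3 (Deploy).
S2_S3_VALUES = {
    "Accuracy": 0.7437,
    "Statistical Parity Difference": -0.3580,
    "Disparate Impact": 0.2794,
    "Average Odds Difference": -0.3181,
    "Equal Opportunity Difference": -0.3768,
    "Theil Index": 0.1129,
}

def within_range(metric, value):
    """Return True/False for metrics with a range, None when no range is defined."""
    bounds = ACCEPTABLE_RANGE[metric]
    if bounds is None:
        return None
    low, high = bounds
    if metric in STRICT_LOWER_BOUND:
        return low < value <= high
    return low <= value <= high

flagged = [m for m, v in S2_S3_VALUES.items() if within_range(m, v) is False]
print("Outside acceptable range:", flagged)
```

Under these assumptions only accuracy falls inside its range, while the four group-fairness metrics are flagged, which is the kind of outcome that motivates moving on to the Explore stage.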

| Method | Statistical Parity Difference | Disparate Impact |
|---|---|---|
| No method | −0.1902 | 0.3677 |
| Reweighting | 0.0 | 1.0 |
| Disparate impact remover | −0.1962 | 0.3580 |
| Optimised pre-processing | −0.0473 | 0.8199 |
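The Reweighting row reports a statistical parity difference of 0.0 and a disparate impact of 1.0. If the method is the reweighing scheme of the reference cited for it in the method table, this is expected by construction: each instance receives the weight P(group) · P(label) / P(group, label), which makes the weighted favourable rate identical across groups. The sketch below illustrates this on hypothetical toy data; the function names are assumptions and do not come from any toolkit.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each instance by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        (n_group[g] * n_label[y]) / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_favourable_rate(groups, labels, weights, group, favourable=1):
    """Weighted share of favourable labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights)
              if g == group and y == favourable)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical toy dataset: 4 unprivileged ("u") and 6 privileged ("p") individuals.
groups = ["u"] * 4 + ["p"] * 6
labels = [1, 0, 0, 0] + [1, 1, 1, 1, 0, 0]

uniform = [1.0] * len(labels)
weights = reweighing_weights(groups, labels)

rate_u_before = weighted_favourable_rate(groups, labels, uniform, "u")
rate_p_before = weighted_favourable_rate(groups, labels, uniform, "p")
rate_u_after = weighted_favourable_rate(groups, labels, weights, "u")
rate_p_after = weighted_favourable_rate(groups, labels, weights, "p")

print("SPD before:", rate_u_before - rate_p_before)
print("SPD after: ", rate_u_after - rate_p_after)
print("DI after:  ", rate_u_after / rate_p_after)
```

The guarantee applies to the weighted training data only; a classifier trained on those weights can still show non-zero disparity on its predictions, as the non-zero values reported for the trained models indicate.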

| Method | Scenario Applicability | Accuracy | Statistical Parity Difference | Disparate Impact | Average Odds Difference | Equal Opportunity Difference | Theil Index |
|---|---|---|---|---|---|---|---|
| Reweighting | S2—Develop, S3—Deploy | 0.7133 | −0.0705 | 0.7785 | 0.0188 | 0.0293 | 0.1400 |
| Optimised pre-processing | S2—Develop, S3—Deploy | 0.7153 | −0.0962 | 0.7207 | −0.0119 | −0.0082 | 0.1366 |
| Disparate impact remover | S2—Develop, S3—Deploy | 0.7258 | −0.0382 | 0.8965 | 0.0559 | 0.0639 | 0.1224 |
| Adversarial debiasing | S2—Develop, S3—Deploy | 0.6637 | −0.2095 | 0.0 | −0.279 | −0.4595 | 0.1793 |
| Prejudice remover | S2—Develop, S3—Deploy | 0.6675 | −0.2184 | 0.0 | −0.2900 | −0.4734 | 0.1769 |
| Gerry-Fair (FairFictPlay) | S2—Develop, S3—Deploy | 0.4698 | 0.0 | NaN | 0.0 | 0.0 | 0.2783 |
| Calibrated equalized odds | S3—Deploy | 0.5 | 0.0 | NaN | 0.0 | 0.0 | 0.2783 |
| Rejection option classification | S3—Deploy | 0.7140 | −0.0402 | 0.9088 | 0.0423 | 0.0407 | 0.1171 |

| Scenario | Weights | Selected |
|---|---|---|
| S2—Develop | [16.6, 16.6, 16.6, 16.6, 16.6, 16.6] | Disparate impact remover |
| S2—Develop | [80, 4, 4, 4, 4, 4] | Initial |
| S2—Develop | [4, 4, 4, 80, 4, 4] | Optimised pre-processing, Disparate impact remover |
| S3—Deploy | [16.6, 16.6, 16.6, 16.6, 16.6, 16.6] | Disparate impact remover |
| S3—Deploy | [4, 4, 4, 80, 4, 4] | Optimised pre-processing, Disparate impact remover |
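To illustrate how a weight vector can steer the selection, the sketch below ranks the three pre-processing methods by a weighted sum of absolute deviations from an ideal point. This is a simple stand-in and not necessarily the aggregation used by the authors. Several things are assumptions: the weights are taken to follow the column order of the results table (Accuracy, Statistical Parity Difference, Disparate Impact, Average Odds Difference, Equal Opportunity Difference, Theil Index), the ideal accuracy is set to 1.0 because the metric table gives no ideal value for it, and the names `IDEAL`, `ALTERNATIVES` and `rank` are hypothetical. The metric values are copied from the results table.

```python
import numpy as np

# Ideal value per criterion; the 1.0 for accuracy is an assumption.
IDEAL = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 0.0])

# Rows copied from the results table (pre-processing methods only).
ALTERNATIVES = {
    "Reweighting": [0.7133, -0.0705, 0.7785, 0.0188, 0.0293, 0.1400],
    "Optimised pre-processing": [0.7153, -0.0962, 0.7207, -0.0119, -0.0082, 0.1366],
    "Disparate impact remover": [0.7258, -0.0382, 0.8965, 0.0559, 0.0639, 0.1224],
}

def rank(weights):
    """Return method names sorted from best (smallest weighted deviation) to worst."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalise so that the weights sum to 1
    scores = {
        name: float(np.dot(w, np.abs(np.asarray(values) - IDEAL)))
        for name, values in ALTERNATIVES.items()
    }
    return sorted(scores, key=scores.get)

equal_ranking = rank([16.6] * 6)
fourth_criterion_ranking = rank([4, 4, 4, 80, 4, 4])
print("Equal weights:           ", equal_ranking)
print("Emphasis on 4th criterion:", fourth_criterion_ranking)
```

Under these assumptions the equal-weight profile places Disparate impact remover first and the profile emphasising the fourth criterion places Optimised pre-processing first. That agreement with the table should not be read as a reproduction of the authors' procedure, since the scoring rule here was chosen only for illustration.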

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fikardos, M.; Lepenioti, K.; Apostolou, D.; Mentzas, G. Trustworthiness Optimisation Process: A Methodology for Assessing and Enhancing Trust in AI Systems. Electronics 2025, 14, 1454. https://doi.org/10.3390/electronics14071454
