Abstract

Aesthetics primarily focuses on the study of art, encompassing the aesthetic categories of beauty and ugliness, as well as human aesthetic activities. Image Aesthetics Assessment (IAA) seeks to automatically evaluate the aesthetic quality of images by mimicking the perceptual mechanisms of humans. Recently, researchers have increasingly explored using user comments to assist in IAA tasks. However, human aesthetics are subjective, and individuals may have varying preferences for the same image, leading to diverse comments that can influence model decisions. Moreover, in practical scenarios, user comments are often unavailable. Thus, this paper proposes a CLIP-based IAA method (IAACLIP) that uses generative descriptions and prompts. First, leveraging the growing capabilities of multimodal large language models (MLLMs), we generate objective and consistent aesthetic descriptions for images, termed generative aesthetic descriptions (GADs). Second, based on aesthetic images, labels, and GADs, we introduce a unified contrastive pre-training approach to transfer the network from the general domain to the aesthetic domain. Lastly, we employ prompt templates for perceptual training to address the lack of real-world comments. Experiments on three mainstream IAA datasets demonstrate the effectiveness of the proposed method.

1. Introduction

Aesthetics is a discipline that primarily focuses on the study of art, exploring the aesthetic categories of beauty and ugliness, as well as human aesthetic activities. Image Aesthetics Assessment (IAA) [1,2,3] seeks to automatically evaluate the aesthetic quality of images by mimicking the perceptual mechanisms of humans. IAA is divided into two types: General Image Aesthetics Assessment (GIAA) and Personalized Image Aesthetics Assessment (PIAA). GIAA concerns the collective perception of image aesthetics shared by most people, whereas PIAA [4,5,6,7] focuses on the distinct aesthetic views of individual users. Benefiting from recent technological advances, IAA applications have expanded to fields such as style transfer [8], image retrieval [9], and image enhancement [10]. However, owing to its inherent subjectivity, IAA still faces challenges.

In the early stages of IAA research [11,12,13,14], researchers constructed handcrafted features based on composition rules and photography standards. This approach has drawbacks: extracting complex handcrafted features is costly, and such features cannot fully capture the subtle differences in how humans perceive various photographic principles. Driven by rapid advances in deep learning [15,16,17,18,19,20,21,22,23], IAA models are generally developed to tackle three key tasks: binary aesthetic classification [11], aesthetic score regression [16], and aesthetic distribution prediction [17]. However, current methods mostly focus on the visual modality. For example, Celona et al. [20] developed a method that automatically evaluates the aesthetic appeal of images by analyzing their semantic content, artistic style, and composition.

Previous approaches have predominantly assessed aesthetic quality based solely on visual data, which has inherent limitations. Several studies have therefore explored combining visual and textual information for IAA. Nie et al. [22] aimed to simulate human implicit memory to trigger subjective aesthetic expression, proposing a brain-inspired multimodal interaction network that accomplishes IAA by mimicking how associative areas of the cerebral cortex process sensory stimuli. Many multimodal methods, however, require both images and comments as input during testing, which restricts their applicability in real-world scenarios. One promising approach, described in [23], uses multimodal IAA datasets for training so that the trained image encoder can perform aesthetic inference directly at test time. Another is to use visual–textual bimodal data during training and to construct prompt templates at test time to assist IAA.

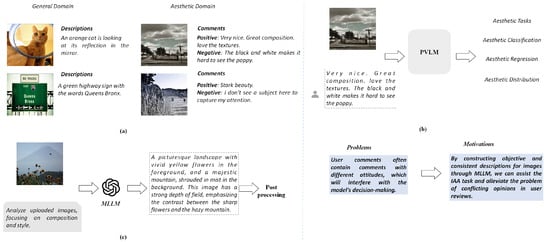

CLIP [24] is a model with multimodal understanding capabilities. In [23,25], the researchers noted that, owing to its extensive pre-training on diverse data, CLIP is highly effective in multimodal tasks. However, because this pre-training uses general image–text pairs rather than aesthetics-specific data, applying CLIP directly to IAA is challenging. A method is therefore needed to transfer CLIP from the general domain to the aesthetic domain. Previous studies have used images from aesthetic databases together with their user comments. However, human aesthetic preferences are diverse, and different individuals evaluate the same image differently, so these comments may interfere with the model’s aesthetic evaluation, as illustrated in Figure 1. The figure contrasts text descriptions in the general domain with image comments in the aesthetic domain, revealing a clear domain gap between the two. Among the user comments in an aesthetic database, for example, some individuals may describe an image as “very beautiful”, a positive evaluation, while others may feel that it lacks focus and fails to attract their attention; these two comments express opposite judgments. Some existing multimodal aesthetic evaluation methods use the original user comments, which we consider problematic for this reason. With the rise of multimodal large language models (MLLMs), we instead employ the MLLM ChatGPT4 to generate objective and consistent comments, thereby facilitating the transition from the general domain to the aesthetic domain.

Figure 1.

The challenges and solutions related to the domain gap in Image Aesthetics Assessment. (a) illustrates the gap between the general domain and the domain of image aesthetics. (b) illustrates the problems with existing methods using user reviews. (c) illustrates our starting point to alleviate the problem of inconsistent user review opinions by using MLLMs to construct objective and consistent descriptions.

Building on these considerations, we propose a CLIP-based framework comprising two key stages: unified contrastive pre-training and perception training. In the unified contrastive pre-training stage, we use generated aesthetic descriptions to construct aesthetic image–description pairs. Through this process, CLIP is adapted to the aesthetic domain by learning to associate images with their corresponding aesthetic descriptions. In the perception training stage, we design structured prompt templates based on the generated aesthetic descriptions. These templates, combined with images, are then used for IAA tasks. This approach addresses the scarcity of real-world aesthetic image comments while providing a flexible and customizable framework for aesthetic evaluation.

The main contributions of this work are as follows:

1. Unlike other multimodal methods, we use MLLMs to generate objective and consistent aesthetic descriptions for aesthetic images to assist in IAA tasks.

2. Based on the generated aesthetic image–description pairs, we propose a unified learning method for the unified contrastive pre-training stage of our framework, facilitating CLIP’s transition from the general domain to the aesthetic domain.

3. We construct composite prompt templates based on the generated aesthetic descriptions to realize the perception training stage of our framework. This approach addresses the lack of comments in the real world, and experiments on three IAA databases show that our method achieves competitive performance compared with other state-of-the-art (SOTA) models.

2. Related Works

2.1. Image Aesthetics Assessment

General Image Aesthetics Assessment (GIAA): Early GIAA work focused on using handcrafted features to describe image aesthetics. Datta et al. [11] established a significant correlation between various visual characteristics of photographic images and their aesthetic ratings. By employing an SVM classifier, they achieved good accuracy in distinguishing high-rated and low-rated photos using 15 visual features. Ke et al. [12] proposed a principled approach to designing advanced features for photo quality assessment, considering multiple perceptual features to make aesthetic decisions.

In past research, handcrafted features achieved limited success in IAA because aesthetics is highly abstract and difficult for handcrafted features to capture fully. Benefiting from advances in deep learning, IAA has shifted from handcrafted features to deep features [26,27,28,29,30,31]. Lu et al. [26] merged global and local views generated from images and used a dual-column deep convolutional neural network for IAA tasks. Ma et al. [28] proposed an architecture that accepts images of arbitrary sizes and learns aesthetic knowledge from both fine details and the overall image layout simultaneously. Huang et al. [29] proposed a coarse-to-fine image aesthetic evaluation model guided by dynamic attribute selection, which simulates the human aesthetic perception process through coarse-to-fine aesthetic reasoning.

Personalized Image Aesthetics Assessment (PIAA): PIAA is subjective and individual, reflecting personal preferences and emotional responses to images. Ren et al. [4] proposed two personalized aesthetics datasets to predict individuals’ opinions on general image aesthetics. Since PIAA is a typical few-shot task, refs. [5,6] introduced meta-learning to solve it. Zhu et al. [5] introduced a bi-level optimization meta-learning algorithm for the PIAA task. Yan et al. [6] proposed a meta-learning-based dual-column network that considers both global and local features of personalized aesthetic images. In [7], a user-guided PIAA framework was introduced that leverages user interactions to adjust and rank images, using deep reinforcement learning (DRL) for aesthetic evaluation.

2.2. Multimodal Learning in IAA

Multimodal approaches have shown significant performance improvements over single-modality approaches. In recent IAA studies [22,23,32], researchers have applied multimodal learning to the field with great success. Sheng et al. [23] introduced a CLIP-based IAA framework using multi-attribute contrastive learning, with an emphasis on fine-grained aesthetic domain attributes in comments. They categorized comments into different classes to exploit the rich semantics of these attributes and proposed attribute-aware learning to capture the relationship between images and their aesthetic features. Nie et al. [22] argued that existing multimodal IAA approaches overlook the interactions between modalities. They proposed a brain-inspired multimodal interaction network that simulates how associative areas of the cerebral cortex process sensory stimuli, and introduced a Knowledge-Integrated LSTM to learn the interaction between images and text. Their Scalable Multimodal Fusion approach, based on low-rank decomposition, integrates the image, text, and interaction modalities to predict the final aesthetic distribution.

2.3. Multimodal Large Language Models

In recent years, driven by improvements in hardware capabilities and the progress of deep learning technologies, large language models (LLMs) have made remarkable progress. Models such as ChatGPT and LLaMA [33] have achieved significant milestones in understanding, reasoning, and generation, drawing considerable attention from both academia and industry. Building on the achievements of LLMs in natural language processing, the rise of multimodal large language models (MLLMs) has ignited a fresh wave of innovation in visual language processing. Notable works such as MiniGPT [34], Otter [35], and LLaVA [36] have demonstrated exceptional capabilities in combining visual and textual information. However, the performance of MLLMs in image aesthetic perception remains uncertain. To address this, Huang et al. [37] proposed an expert benchmark called AesBench. First, an expert-labeled aesthetic perception database (EAPD) was constructed, featuring diverse image content and high-quality annotations. Second, a set of comprehensive criteria was proposed to assess the aesthetic perception abilities of MLLMs along four dimensions. In this work, we construct aesthetic descriptions for images from the PARA dataset using the MLLM ChatGPT4.

3. Methodology

3.1. Overview

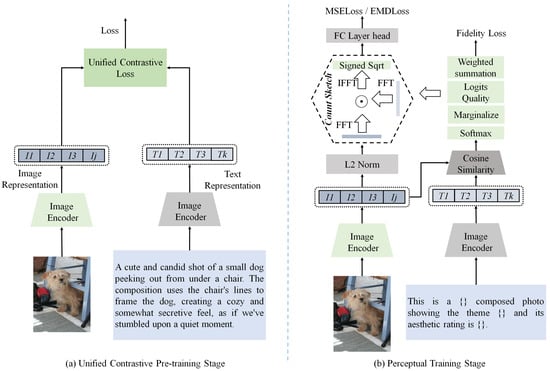

The model presented in this work is built upon the pre-trained CLIP model, which features two encoder branches for processing text and images simultaneously. Our approach involves two training stages, as illustrated in Figure 2: the unified contrastive pre-training stage and the perceptual training stage. To address the cross-domain challenges in CLIP, we introduce a unified contrastive pre-training method to link IAA-style visual and textual patterns. Given the scarcity of text data in real-world scenarios, we also propose the use of composite prompts to guide IAA during the perception training stage, alongside image data.

Figure 2.

Detailed overview of the proposed framework. (a) shows the unified contrastive pre-training stage framework. (b) illustrates the perceptual training stage framework.

3.2. The Construction of Aesthetic Descriptions

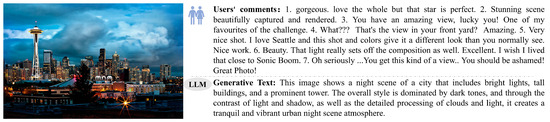

In previous work, researchers successfully utilized images and corresponding user comments for Image Aesthetics Assessment tasks. However, to our knowledge, the use of MLLMs, such as ChatGPT4, to generate aesthetic descriptions for assisting IAA remains unexplored. As illustrated in Figure 3, we find that human comments are often constrained by subjective perceptions and personal experiences, leading to incomplete or inaccurate descriptions of the visual features and rules of images. While terms like ‘love’ and ‘gorgeous’ reflect individuals’ fondness for images, they are heavily influenced by personal aesthetic preferences and emotions. A photograph may be appreciated by some and disliked by others, making such comments potentially unreliable for aesthetic evaluation. In contrast, MLLMs can generate objective and consistent descriptions by considering various aesthetic attributes and rules. This approach allows for high-quality descriptions even without specialized knowledge in art or photography.

Figure 3.

Comparison between users’ comments and generative text.

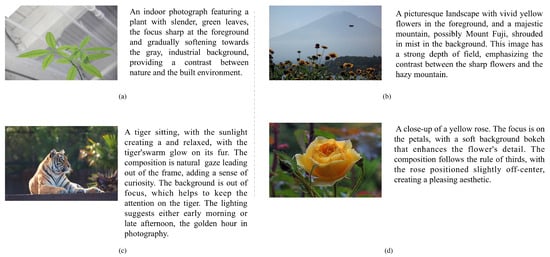

The PARA dataset is a relatively new IAA dataset comprising over 31,000 images. From this dataset, we selected 10,680 images covering nine topics: ‘Plants’, ‘Night Scenes’, ‘Still Life’, ‘Scenes’, ‘Indoor’, ‘Animals’, ‘Food’, ‘Portraits’, and ‘Architecture’. Using ChatGPT4 with the prompt ‘Analyze the composition and style of the image’, we constructed generative aesthetic descriptions (GADs). As shown in Figure 4, the four generated textual descriptions (a, b, c, and d) reflect a detailed understanding of the aesthetic details and composition of the images. Thus, even without expertise in art or photography, high-quality aesthetic descriptions can be generated with simple prompts, and these easily interpretable descriptions can assist image aesthetic evaluation.

Figure 4.

Examples of aesthetic descriptions generated based on the PARA [38] database.

3.3. Unified Contrastive Pre-Training

After constructing the multimodal aesthetics dataset, we use it for the unified contrastive pre-training stage of our method. CLIP is a model for joint language–image representation. Because CLIP is trained on large-scale general image–text pairs, applying it directly to the IAA domain yields subpar performance. Inspired by [39] and based on the aesthetic textual descriptions constructed above, we propose a unified contrastive pre-training method for image–text pairs to establish a strong correlation between CLIP and aesthetic tasks. As illustrated in Figure 2a, this method allows the CLIP model to better understand aesthetic images and their corresponding generative aesthetic descriptions (GADs).

Specifically, we combine images and GADs into a shared image–text–label space, enabling the CLIP model to use both the visual information in the images and the aesthetic information in the GADs, together with the associated aesthetic labels. This integration allows CLIP to learn richer aesthetic feature representations for transfer to the aesthetic domain. Formally, the aesthetic dataset $\mathcal{D} = \{(I_i, T_i, y_i)\}_{i=1}^{N}$ comprises $N$ triplets, where $I_i$ denotes the image, $T_i$ denotes the GAD, and $y_i$ denotes the aesthetic label.

First, the image encoder $E_I$ processes the image $I_i$ and the text encoder $E_T$ processes the corresponding GAD $T_i$, yielding the image features $f_i^I$ and the GAD features $f_i^T$, respectively:

$$f_i^I = E_I(I_i), \qquad f_i^T = E_T(T_i). \tag{1}$$

For the images and GADs in a batch $\mathcal{B}$, we apply $\ell_2$ normalization to their feature vectors, mapping them onto a unit hypersphere: $u_i = f_i^I / \lVert f_i^I \rVert_2$ and $v_j = f_j^T / \lVert f_j^T \rVert_2$. We then compute the cosine similarity score matrix $M$ for all image–text sample pairs, $M_{ij} = u_i^{\top} v_j / \tau$, where the temperature coefficient $\tau$ controls the strength of the penalty on hard samples. We consider bidirectional learning objectives between images and GADs:

$$\mathcal{L}_{\mathrm{UCP}} = \mathcal{L}_{i2t} + \mathcal{L}_{t2i}.$$

We first compute the contrastive loss from images to GADs, $\mathcal{L}_{i2t}$. Specifically, we apply a softmax to each row of the similarity matrix to obtain the relative probability distribution of each image over all texts in the batch $\mathcal{B}$. We then compute the negative log-likelihood of the texts that share the image’s label and average it over those positives and over the batch:

$$\mathcal{L}_{i2t} = -\frac{1}{|\mathcal{B}|}\sum_{i \in \mathcal{B}} \frac{1}{|\mathcal{P}(i)|} \sum_{k \in \mathcal{P}(i)} \log \frac{\exp(M_{ik})}{\sum_{j \in \mathcal{B}} \exp(M_{ij})},$$

where $\mathcal{B}$ denotes a batch of image and GAD data, $M$ denotes the similarity matrix between images and GADs, $M_{ik}$ is the similarity between image feature $u_i$ and a GAD feature $v_k$ with the same label $y_i$, and $\mathcal{P}(i) = \{k \in \mathcal{B} \mid y_k = y_i\}$ is the set of indices of all GAD features that have the same label as image $I_i$.

Similarly, we compute the contrastive loss from text to images, $\mathcal{L}_{t2i}$:

$$\mathcal{L}_{t2i} = -\frac{1}{|\mathcal{B}|}\sum_{j \in \mathcal{B}} \frac{1}{|\mathcal{Q}(j)|} \sum_{k \in \mathcal{Q}(j)} \log \frac{\exp(M_{kj})}{\sum_{i \in \mathcal{B}} \exp(M_{ij})},$$

where $M_{kj}$ is the similarity between the $j$-th GAD feature $v_j$ and an image feature $u_k$ with the same label $y_j$, and $\mathcal{Q}(j) = \{k \in \mathcal{B} \mid y_k = y_j\}$ is the set of indices of all image features that have the same label as GAD $T_j$.
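To make the label-aware contrastive objective concrete, the following is a minimal NumPy sketch of the bidirectional image-to-GAD and GAD-to-image losses for one batch. It is not the authors’ implementation: it assumes the aesthetic labels are integers (for example, discretized score bins), that row i of each feature matrix comes from the same triplet, and it uses a hypothetical function name `ucp_loss` and an illustrative temperature of 0.07.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def ucp_loss(img_feats, txt_feats, labels, tau=0.07):
    """Label-aware bidirectional contrastive loss (image->GAD plus GAD->image).

    img_feats, txt_feats: (B, D) arrays; row i of both comes from triplet i.
    labels: (B,) integer aesthetic labels; any image/GAD pair sharing a label
    is treated as a positive pair. tau is an assumed temperature value.
    """
    img_feats = np.asarray(img_feats, dtype=float)
    txt_feats = np.asarray(txt_feats, dtype=float)
    # L2-normalize so that dot products are cosine similarities on the unit hypersphere
    u = img_feats / np.linalg.norm(img_feats, axis=1, keepdims=True)
    v = txt_feats / np.linalg.norm(txt_feats, axis=1, keepdims=True)
    sim = u @ v.T / tau  # sim[i, j]: image i vs. GAD j
    labels = np.asarray(labels)
    pos = (labels[:, None] == labels[None, :]).astype(float)  # positive-pair mask

    # Image-to-GAD: softmax over each row, averaged over that row's positives
    log_p_i2t = log_softmax(sim, axis=1)
    loss_i2t = -((pos * log_p_i2t).sum(axis=1) / pos.sum(axis=1)).mean()

    # GAD-to-image: softmax over each column, averaged over that column's positives
    log_p_t2i = log_softmax(sim, axis=0)
    loss_t2i = -((pos * log_p_t2i).sum(axis=0) / pos.sum(axis=0)).mean()
    return loss_i2t + loss_t2i
```

Because each triplet’s own GAD always shares its label, every row and column has at least one positive, so the division by the positive count is always defined.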

Through the unified contrastive pre-training method described above, the gap between IAA-style visual and textual modalities is narrowed, improving the model’s ability to understand the complex interactions between aesthetic images and their corresponding GADs.

3.4. Integration of Textual Prompts and Perceptual Training

In real-world scenarios, users’ comments may not be available, and generating aesthetic descriptions might not be convenient. However, after the unified contrastive pre-training process in Section 3.3, the model’s understanding of the complex interactions between aesthetic images and GADs is enhanced, facilitating the transfer to the aesthetic domain. Inspired by [40], we select three prompts—composition, theme, and aesthetic quality—to form a text template to assist in Image Aesthetics Assessment and complete subsequent perception training.

Specifically, we select eight composition techniques (‘Rule of Thirds’, ‘Negative Space’, ‘Color and Contrast’, ‘Leading Lines’, ‘Depth and Perspective’, ‘Symmetry and Balance’, ‘Focal Point’, and ‘Pattern and Repetition’), the nine themes listed in Section 3.2, and five quality ratings (‘bad’, ‘poor’, ‘fair’, ‘good’, and ‘perfect’) to create combined templates of the form “This is a {composition} composed photo showing the {theme} theme and its aesthetic rating is {quality}”, resulting in a total of 8 × 9 × 5 = 360 prompts $\{p_m\}_{m=1}^{360}$.
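The 360 prompts can be enumerated as a Cartesian product of the three slot vocabularies. In this sketch the exact wording and slot positions of the template are an assumption inferred from the example sentence, and `build_prompts` is a hypothetical helper rather than the authors’ code.

```python
from itertools import product

# Slot vocabularies as listed in Sections 3.2 and 3.4
COMPOSITIONS = [
    "Rule of Thirds", "Negative Space", "Color and Contrast", "Leading Lines",
    "Depth and Perspective", "Symmetry and Balance", "Focal Point",
    "Pattern and Repetition",
]
THEMES = [
    "Plants", "Night Scenes", "Still Life", "Scenes", "Indoor",
    "Animals", "Food", "Portraits", "Architecture",
]
QUALITIES = ["bad", "poor", "fair", "good", "perfect"]

# Assumed template wording; the slot placement follows the example sentence
TEMPLATE = "This is a {c} composed photo showing the {t} theme and its aesthetic rating is {q}"


def build_prompts():
    """Return (composition, theme, quality, prompt) tuples for every combination.

    Quality varies fastest, so the list reshapes to (8, 9, 5) in that order.
    """
    return [
        (c, t, q, TEMPLATE.format(c=c, t=t, q=q))
        for c, t, q in product(COMPOSITIONS, THEMES, QUALITIES)
    ]
```

Keeping quality as the innermost index makes it easy to marginalize over composition and theme later by reshaping the per-prompt probabilities.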

During the perceptual training stage, we concentrate on the task of image aesthetic quality assessment. To improve the generalization capability and performance of our model, we optimize it with multiple loss functions. Specifically, our perceptual training framework, shown in Figure 2b, uses the model obtained from unified contrastive pre-training to encode aesthetic images and the constructed prompt templates into image embeddings $f_i^I$ and text embeddings $g_{c,t,l}$, respectively, as in Formula (1), where $c$, $t$, and $l$ index the composition, theme, and quality level of a prompt. The image and text embeddings are then normalized, and the cosine similarity between them is computed. On the one hand, the resulting cosine similarities are passed through a softmax and marginalized to obtain quality logits, which are then weighted and summed to obtain the predicted quality score $S_i$:

$$\hat{p}(c, t, l \mid I_i) = \frac{\exp\big(\cos(f_i^I, g_{c,t,l}) / \tau\big)}{\sum_{c', t', l'} \exp\big(\cos(f_i^I, g_{c',t',l'}) / \tau\big)}, \qquad \hat{p}(l \mid I_i) = \sum_{c}\sum_{t} \hat{p}(c, t, l \mid I_i),$$

$$S_i = \sum_{l=1}^{L} l \, \hat{p}(l \mid I_i),$$

where $L = 5$ is the number of aesthetic quality levels, $\tau$ is a temperature coefficient, and $\hat{p}(l \mid I_i)$ is the marginal probability of level $l$, obtained by summing over all composition and theme prompts. We use a monotonicity-related loss for optimization. First, we compute binary labels from the ground-truth (GT) scores of the given aesthetic database: for an image pair $(I_i, I_j)$ with GT scores $q_i$ and $q_j$, we set $b_{ij} = 1$ if $q_i \geq q_j$ and $b_{ij} = 0$ otherwise. We then optimize with the fidelity loss [41].
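The softmax, marginalization, and weighted-sum steps can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors’ code: the 360 cosine similarities for one image are assumed to be ordered with quality as the innermost index (as produced by `itertools.product` over composition, theme, quality), the temperature of 0.01 is an assumed value, the quality levels are weighted 1 to 5, and `quality_score` is a hypothetical name.

```python
import numpy as np


def quality_score(cos_sims, n_comp=8, n_theme=9, n_quality=5, tau=0.01):
    """Convert one image's prompt cosine similarities into a scalar score.

    cos_sims: (n_comp * n_theme * n_quality,) cosine similarities, ordered
    composition-major with quality varying fastest. tau is an assumed value.
    Returns (score, p_quality) where p_quality[l-1] is the marginal p(l | I).
    """
    logits = np.asarray(cos_sims, dtype=float) / tau
    logits = logits - logits.max()  # numerical stability before exponentiating
    joint = np.exp(logits)
    joint = joint / joint.sum()  # softmax over all prompts
    joint = joint.reshape(n_comp, n_theme, n_quality)
    p_quality = joint.sum(axis=(0, 1))  # marginalize out composition and theme
    levels = np.arange(1, n_quality + 1)  # quality levels l = 1..L
    return float((levels * p_quality).sum()), p_quality
```

With uniform similarities every level gets probability 0.2 and the score is the midpoint 3; similarities that favor the ‘perfect’ prompts push the score toward 5.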

On the other hand, we fuse the obtained aesthetic quality logits with the image embeddings. Because these two vectors come from different modalities and differ in form, they must be fused into a joint representation. Traditional vector fusion methods include element-wise multiplication, element-wise addition, and concatenation, but these are less expressive than the outer product of the two vectors; the outer product, however, causes a dramatic increase in dimensionality [42]. Therefore, we introduce multimodal compact bilinear pooling (MCBP) to integrate the image embedding $f_i^I$ and the quality logits $\mathbf{z}_i$. Specifically, we use Count Sketch, a hashing technique, to map the high-dimensional aesthetic visual features and the quality logits into a lower-dimensional space of dimension $d$. This reduces dimensionality while preserving the relationships in the original data as much as possible. For a vector $\mathbf{x} \in \mathbb{R}^{n}$, a random hash function $h: \{1, \dots, n\} \to \{1, \dots, d\}$, and a random sign function $s: \{1, \dots, n\} \to \{-1, +1\}$, the dimensionality-reduced features are obtained as

$$\Psi(\mathbf{x}, h, s)_k = \sum_{j:\, h(j) = k} s(j)\, x_j, \quad k = 1, \dots, d, \qquad \tilde{\mathbf{v}}_i = \Psi(f_i^I, h_v, s_v), \qquad \tilde{\mathbf{z}}_i = \Psi(\mathbf{z}_i, h_z, s_z).$$

The dimensionality-reduced features are then converted to the frequency domain with a Fast Fourier Transform (FFT), multiplied element-wise there (which is equivalent to a circular convolution in the time domain), and converted back with an Inverse Fast Fourier Transform (IFFT):

$$\boldsymbol{\phi}_i = \mathrm{IFFT}\big(\mathrm{FFT}(\tilde{\mathbf{v}}_i) \odot \mathrm{FFT}(\tilde{\mathbf{z}}_i)\big).$$

Finally, we apply a signed square root to the resulting time-domain features:

$$\boldsymbol{\phi}_i' = \mathrm{sign}(\boldsymbol{\phi}_i) \odot \sqrt{\lvert \boldsymbol{\phi}_i \rvert}.$$
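The Count Sketch, FFT, and signed-square-root steps can be sketched in NumPy as below. This is an illustrative version, not the authors’ implementation; the helper names and the sketch dimension `d` are hypothetical, and, as is usual for Count Sketch, the hash and sign functions are sampled once and then kept fixed. A useful property to check is that the FFT route yields exactly the count sketch of the outer product of the two inputs, which is why it approximates bilinear pooling without ever forming the outer product.

```python
import numpy as np


def sketch_params(n, d, rng):
    """Sample a fixed hash h: [0, n) -> [0, d) and sign s: [0, n) -> {-1, +1}."""
    h = rng.integers(0, d, size=n)
    s = rng.choice(np.array([-1.0, 1.0]), size=n)
    return h, s


def count_sketch(x, h, s, d):
    """Count Sketch projection: y[h[j]] += s[j] * x[j]."""
    y = np.zeros(d)
    np.add.at(y, h, s * np.asarray(x, dtype=float))  # unbuffered add: colliding buckets accumulate
    return y


def compact_bilinear(x, z, h_x, s_x, h_z, s_z, d):
    """Sketch of the outer product of x and z, computed via FFT."""
    x_s = count_sketch(x, h_x, s_x, d)
    z_s = count_sketch(z, h_z, s_z, d)
    # Element-wise product in the frequency domain == circular convolution in time domain
    return np.fft.irfft(np.fft.rfft(x_s) * np.fft.rfft(z_s), n=d)


def mcb(x, z, h_x, s_x, h_z, s_z, d):
    """Compact bilinear pooling followed by the signed square root."""
    phi = compact_bilinear(x, z, h_x, s_x, h_z, s_z, d)
    return np.sign(phi) * np.sqrt(np.abs(phi))
```

For example, a 512-dimensional image embedding and 5 quality logits could be fused into `d = 1024` dimensions by calling `sketch_params(512, 1024, rng)` and `sketch_params(5, 1024, rng)` once at initialization; these sizes are illustrative only.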

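The fused features then undergo fine-grained feature interactions in the multimodal space. We optimize the network with dataset-dependent loss functions: for the fused multimodal features, we use the EMD loss on the AVA dataset and the MSE loss on the TAD66K and AADB datasets. For the predicted aesthetic quality score $S_i$ obtained by weighted summation, we employ the fidelity loss for an image pair $(I_i, I_j)$:

$$\mathcal{L}_{\mathrm{fid}}(I_i, I_j) = 1 - \sqrt{b_{ij}\, \hat{p}_{ij}} - \sqrt{(1 - b_{ij})(1 - \hat{p}_{ij})}, \qquad \hat{p}_{ij} = \Phi\!\left(\frac{S_i - S_j}{\sqrt{2}}\right),$$

where $b_{ij} \in \{0, 1\}$ is the binary GT label of the pair (1 if the GT score of $I_i$ is not lower than that of $I_j$), $\hat{p}_{ij}$ is the predicted probability that $I_i$ is aesthetically better than $I_j$, and $\Phi(\cdot)$ is the standard normal cumulative distribution function.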
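A scalar sketch of the pairwise fidelity loss follows. It assumes a Thurstone-style probability with unit variance per predicted score (hence the division by √2), and `fidelity_loss` is a hypothetical name; in training, pairs would be formed within a mini-batch and the loss averaged over them.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def fidelity_loss(s_i: float, s_j: float, q_i: float, q_j: float) -> float:
    """Fidelity loss for one pair.

    s_i, s_j: predicted scores; q_i, q_j: ground-truth scores.
    """
    b = 1.0 if q_i >= q_j else 0.0  # binary GT label b_ij
    p_hat = normal_cdf((s_i - s_j) / math.sqrt(2.0))  # P(image i better than j)
    return 1.0 - math.sqrt(b * p_hat) - math.sqrt((1.0 - b) * (1.0 - p_hat))
```

The loss is zero only when the predicted preference probability matches the binary label, so it penalizes wrongly ordered pairs regardless of the absolute score scale.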

In our experiments, combining the above losses enables the model to perform well across datasets and evaluation criteria: the MSE/EMD losses drive the accuracy of the predictions, while the fidelity loss encourages predictions that are consistent and correctly ordered.

4. Experimental Section

4.1. Experimental Datasets

We conducted experiments on three mainstream image aesthetics databases: AVA, AADB, and TAD66K. The unified contrastive pre-training (UCP) stage was performed on the PARA database.

AVA [43]: The AVA database is a large-scale dataset containing 250,000 images, each accompanied by approximately 210 user-provided aesthetic scores ranging from 1 to 10. We use the training and test split defined in [43].

AADB [44]: The AADB database consists of 10,000 images annotated by Amazon Mechanical Turk (AMT) workers with aesthetic scores and aesthetic attributes.

TAD66K [2]: The TAD66K database includes 67,125 images across 47 popular themes; each image was annotated by more than 1200 people according to theme-specific evaluation criteria.

PARA [38]: PARA consists of more than 31,000 images and was originally designed for personalized image aesthetics assessment. It has rich annotations, including aesthetic scores, composition scores, emotions, and scene categories. We constructed our GADs from this dataset to perform UCP.

4.2. Evaluation Metrics

In our experiments, we evaluate the proposed method using seven widely used criteria.

Accuracy (ACC) is an important indicator for evaluating aesthetic binary classification:

$$\mathrm{ACC} = \frac{TP + TN}{P + N},$$

where $P$ and $N$ denote the numbers of high- and low-aesthetic images, and $TP$ and $TN$ denote the numbers of correctly classified high- and low-aesthetic images, respectively.

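For aesthetic regression tasks, common evaluation metrics include the Pearson Linear Correlation Coefficient (PLCC), Spearman Rank-order Correlation Coefficient (SRCC), Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and Mean Square Error (MSE). The PLCC measures the linear correlation between predicted and actual scores, with higher values indicating better correlation. Formally, the PLCC is defined as

$$\mathrm{PLCC} = \frac{\sum_{i=1}^{N}(y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})}{\sqrt{\sum_{i=1}^{N}(y_i - \bar{y})^2}\,\sqrt{\sum_{i=1}^{N}(\hat{y}_i - \bar{\hat{y}})^2}},$$

where $y_i$ and $\hat{y}_i$ are the GT and predicted scores of the $i$-th of $N$ test images, and $\bar{y}$ and $\bar{\hat{y}}$ represent the averages of $y_i$ and $\hat{y}_i$ on the test set. Note that before the PLCC is calculated, a nonlinear logistic mapping function is applied to the predictions to compensate for nonlinearity:

$$\hat{y} = \beta_1\left(\frac{1}{2} - \frac{1}{1 + \exp\big(\beta_2(x - \beta_3)\big)}\right) + \beta_4 x + \beta_5,$$

where $x$ and $\hat{y}$ represent the prediction score and mapped score, respectively, and $\beta_1, \dots, \beta_5$ are the five parameters determined by fitting [45].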

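The SRCC evaluates the degree of monotonicity of predictions:

$$\mathrm{SRCC} = 1 - \frac{6\sum_{i=1}^{N} d_i^2}{N(N^2 - 1)},$$

where $N$ is the number of samples and $d_i$ is the difference between the ranks of the GT and predicted aesthetic scores of the $i$-th image. With $y_i$ and $\hat{y}_i$ denoting the GT and predicted scores of the $i$-th image, the MSE, RMSE, and MAE are calculated as follows:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2}, \qquad \mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\lvert y_i - \hat{y}_i \rvert.$$

Higher SRCC and PLCC values and lower MSE, RMSE, and MAE values indicate a better IAA model.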
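The classification and regression metrics above can be computed with a few lines of NumPy. This sketch is illustrative: the binarization threshold of 5.0 for ACC is an assumed convention rather than something stated in this section, ties in SRCC are handled with average ranks, and the five-parameter logistic mapping is only defined, not fitted (fitting would require a least-squares routine such as `scipy.optimize.curve_fit`). All function names are hypothetical.

```python
import numpy as np


def accuracy(y_true, y_pred, threshold=5.0):
    """(TP + TN) / (P + N) after binarizing scores; the threshold is an assumption."""
    t = np.asarray(y_true, dtype=float) > threshold
    p = np.asarray(y_pred, dtype=float) > threshold
    return float(np.mean(t == p))


def logistic5(x, b1, b2, b3, b4, b5):
    """Five-parameter logistic mapping applied to predictions before PLCC."""
    x = np.asarray(x, dtype=float)
    return b1 * (0.5 - 1.0 / (1.0 + np.exp(b2 * (x - b3)))) + b4 * x + b5


def plcc(y_true, y_pred):
    """Pearson linear correlation between GT and (mapped) predicted scores."""
    y = np.asarray(y_true, dtype=float)
    yh = np.asarray(y_pred, dtype=float)
    yc, yhc = y - y.mean(), yh - yh.mean()
    return float((yc * yhc).sum() / np.sqrt((yc ** 2).sum() * (yhc ** 2).sum()))


def average_ranks(a):
    """1-based ranks; tied values share the mean of their ranks."""
    a = np.asarray(a, dtype=float)
    ranks = np.empty(len(a))
    ranks[np.argsort(a, kind="mergesort")] = np.arange(1, len(a) + 1)
    for value in np.unique(a):
        tied = a == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def srcc(y_true, y_pred):
    """PLCC of ranks; equals 1 - 6 * sum(d_i^2) / (N (N^2 - 1)) without ties."""
    return plcc(average_ranks(y_true), average_ranks(y_pred))


def mse(y_true, y_pred):
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(diff ** 2))


def rmse(y_true, y_pred):
    return float(np.sqrt(mse(y_true, y_pred)))


def mae(y_true, y_pred):
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))
```

Computing SRCC as the PLCC of ranks reduces to the closed-form expression above when there are no ties, while remaining well defined when predictions repeat.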

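The Earth mover’s distance (EMD) measures how close the predicted aesthetic distribution is to the GT aesthetic distribution; lower EMD values are better for IAA. It is calculated as follows:

$$\mathrm{EMD}(\mathbf{p}, \hat{\mathbf{p}}) = \left(\frac{1}{K}\sum_{k=1}^{K}\big\lvert \mathrm{CDF}_{\mathbf{p}}(k) - \mathrm{CDF}_{\hat{\mathbf{p}}}(k) \big\rvert^{r}\right)^{1/r},$$

where $\mathbf{p}$ and $\hat{\mathbf{p}}$ are the GT and predicted score distributions over $K$ score buckets, and $\mathrm{CDF}_{\mathbf{p}}(k) = \sum_{j=1}^{k} p_j$ represents the cumulative distribution function. In this article, we set $r = 2$.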
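A NumPy sketch of the EMD with r = 2 follows. `emd` is a hypothetical helper, and the inputs are assumed to be normalized score distributions over the same K buckets (K = 10 for AVA’s 1 to 10 scale); batched inputs of shape (batch, K) also work because the reduction runs over the last axis.

```python
import numpy as np


def emd(p, p_hat, r=2):
    """Earth mover's distance between two score distributions via their CDFs."""
    p = np.asarray(p, dtype=float)
    p_hat = np.asarray(p_hat, dtype=float)
    # Difference of cumulative distribution functions at each bucket k
    cdf_diff = np.cumsum(p, axis=-1) - np.cumsum(p_hat, axis=-1)
    # Mean of |difference|^r over buckets, then the r-th root
    return np.mean(np.abs(cdf_diff) ** r, axis=-1) ** (1.0 / r)
```

Comparing CDFs rather than per-bucket probabilities means that mass predicted in a neighboring bucket is penalized less than mass predicted far away, which suits ordinal score distributions.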

4.3. Implementation Details

Environment: we implemented our method in PyTorch (v2.5.1) and conducted experiments on an NVIDIA GeForce RTX 3090 GPU.

Data preprocessing: During the training stage, images were initially resized to 256 × 256, followed by data augmentation through flipping and random cropping to 224 × 224 for enhanced data variation. During the testing stage, original images were directly resized to 224 × 224 × 3. All input images underwent normalization.

Pre-training strategy: We employed CLIP’s ViT-B/16 as the image encoder and CLIP’s Transformer as the text encoder. We first constructed GADs from PARA data for unified contrastive pre-training, which used the Adam optimizer with an initial learning rate of 1 × 10−5 and a batch size of 32 and ran for 20 epochs.

Training strategy: In the perceptual training stage, we formed prompt templates by combining prompts to assist images in IAA tasks. We used an initial learning rate of 5 × 10−6 with a cosine annealing scheduler. The batch size was the same as in pre-training, and training lasted 30 epochs. We optimized using the L2 (MSE) loss and the fidelity loss on the TAD66K and AADB datasets, and the EMD loss and the fidelity loss on the AVA dataset.

4.4. Performance Evaluation with Competing Models

Performance on the AVA: Following the official split [43], we report the performance of the proposed method on the AVA database in Table 1. AVA is the largest IAA database to date. As Table 1 shows, with the official split, the proposed model using CLIP as the backbone achieves the best performance on most evaluation metrics. For aesthetic regression, our model achieves the best SRCC and PLCC. For aesthetic classification and distribution prediction, it achieves the second-best and best performance, respectively. These results demonstrate the versatility and effectiveness of our model across various IAA tasks.

Table 1.

Performance comparison of different IAA models in AVA database. “/” means results that were not reported in the original paper. The best results are highlighted in bold.

Performance on the AADB: Most existing methods only report SRCC values, so we evaluate our model on the aesthetic score regression task. The experimental results are summarized in Table 2. Our model achieves the best results, demonstrating the effectiveness of the proposed two-stage training and prompt template construction for IAA.

Table 2.

Performance comparison in AADB dataset.

Performance on the TAD66K: We further conduct experiments on TAD66K, a recently released database for topic-based IAA on which most existing IAA models have not been evaluated. We adopt the official partition, and Table 3 shows our experimental results. Our model is competitive on TAD66K: compared with the listed models, it achieves the best MSE and PLCC and the second-best SRCC.

Table 3.

Performance comparison in TAD66K dataset.

4.5. Cross-Database Evaluation

We trained the models using the TAD66K dataset and then evaluated their performance on the AADB dataset without fine-tuning. To further test the model’s generalization ability, we also conducted cross-dataset validation by training on AADB and testing on TAD66K. The results presented in Table 4 demonstrate that the proposed model maintains a strong generalization performance, effectively adapting to different datasets and performing well across various aesthetic evaluation tasks.

Table 4.

Performance results of cross-dataset validation.

4.6. Ablation Study

Effectiveness of unified contrastive pre-training and perceptual training: We validated the effectiveness of the two proposed training stages on the AVA database. As shown in Table 5, our CLIP model produced encouraging results after the two training stages. On the AVA database, CLIP with unified contrastive pre-training clearly outperforms the CLIP baseline: the PLCC and SRCC increase by 0.019 and 0.021, respectively, indicating that CLIP successfully transitioned from the general domain to the aesthetic domain after unified contrastive pre-training. During perceptual training, the constructed prompt templates further improved performance on the AVA database. Note that in the experiments with the CLIP baseline and with CLIP after unified contrastive pre-training, we used only the visual encoder (ViT) because there was no text input; in the subsequent perceptual training, where prompt templates compensate for the unavailability of real-world comments, we used both the image encoder (ViT) and the text encoder (Transformer). These results indicate that combining the two training stages to infer image aesthetic scores is necessary and reasonable.

Table 5.

Ablation experiments on the UCP stage and the perceptual training stage with prompts on AVA.

Effectiveness of Multimodal Compact Bilinear Pooling: In this work, we proposed using a multimodal compact bilinear pooling strategy to fuse visual features and quality logits during perceptual training. To investigate the effectiveness of multimodal compact bilinear pooling, we conducted comparative experiments on the AVA, AADB, and TAD66K databases. Specifically, we compared three other fusion strategies—element-wise multiplication, element-wise addition, and concatenation—by replacing the proposed multimodal compact bilinear pooling. For a fair comparison, all comparative experiments were conducted using the same experimental settings. Table 6 shows the experimental results in terms of the PLCC/SRCC values. The results indicate that the proposed multimodal compact bilinear pooling outperforms other feature fusion strategies on all databases, further proving that multimodal compact bilinear pooling better captures the interaction information of multimodal aesthetic vectors.

Table 6.

Performance results of the proposed model using different feature fusion blocks.

4.7. Visualization

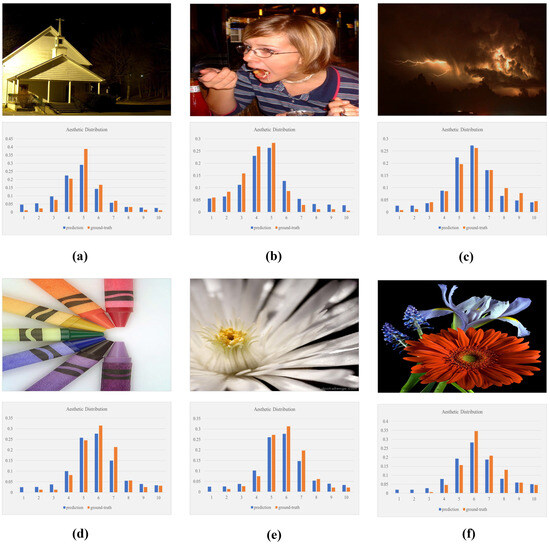

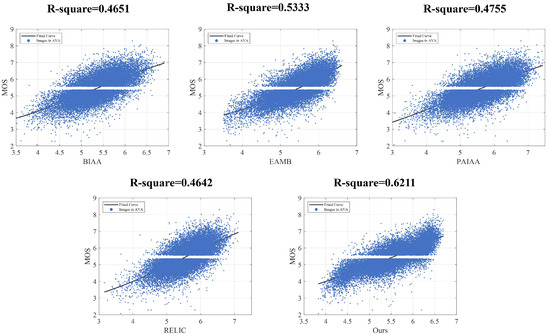

Aesthetic distribution visualization: We visualized the predicted aesthetic distributions on the AVA test set. Figure 5 shows the predicted distributions for several images; regardless of aesthetic quality, the predictions closely match the ground truth (GT). Figure 6 presents scatter plots of the ground-truth versus predicted aesthetic scores, illustrating their consistency and monotonicity, together with the r-squared (R²) values. A high R² value, with points lying close to the diagonal, indicates that our model learns aesthetic distributions effectively.
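AVA provides, for each image, a histogram of votes over the ratings 1 to 10, so a predicted distribution is usually reduced to a mean score before it is plotted against the ground truth. The pure-Python sketch below shows that reduction and one way to obtain an R² value for such a scatter plot, namely the coefficient of determination measured against the identity line y = x. The section does not state which R² definition Figure 6 uses, so this choice, like the function names, is an assumption.

```python
from typing import Sequence


def mean_score(dist: Sequence[float]) -> float:
    """Expected rating of a 10-bin AVA-style distribution (bin k holds rating k + 1).

    Works for both probabilities and raw vote counts, since it divides by the total.
    """
    return sum((k + 1) * p for k, p in enumerate(dist)) / sum(dist)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination of y_pred as a direct estimate of y_true."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


one_hot_five = [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
print(mean_score(one_hot_five))  # 5.0
print(mean_score([1] * 10))      # 5.5
```

An alternative reading of "r-squared" is the squared Pearson correlation of a fitted regression line; unlike the version above, it does not penalize a constant offset between predictions and ground truth.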

Figure 5.

Sample predictions by our model on the AVA test set. (a,b) Images with low aesthetic quality. (c–f) Images with high aesthetic quality.

Figure 6.

Visualization of the aesthetic distribution on the AVA dataset.

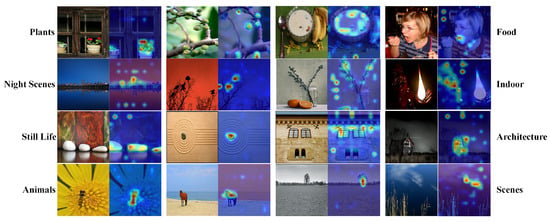

Attention visualization: We visualized attention maps on AVA. Figure 7 shows the attention maps for images from eight thematic categories. The maps show that our model focuses on key regions across images of different themes, supporting its ability to capture aesthetic attributes.
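The section does not describe how the attention maps in Figure 7 were computed. One common technique for ViT encoders is attention rollout (Abnar and Zuidema, 2020), sketched below in NumPy purely as an illustration: head-averaged attention matrices are combined with the identity to account for residual connections, multiplied across layers, and the [CLS] row is reshaped into a patch grid. The function name and the assumption that token 0 is [CLS] are ours; for a ViT-B/16 with a 224 × 224 input, the grid would be 14 × 14.

```python
import numpy as np


def attention_rollout(attentions, grid_size: int) -> np.ndarray:
    """Attention rollout over ViT layers (hypothetical helper).

    attentions: list of arrays of shape (heads, tokens, tokens), one per layer,
                each row already softmax-normalized; token 0 is assumed to be [CLS].
    Returns a (grid_size, grid_size) heat map scaled to a maximum of 1.
    """
    n = attentions[0].shape[-1]
    rollout = np.eye(n)
    for attn in attentions:
        a = attn.mean(axis=0) + np.eye(n)       # average heads, add residual path
        a = a / a.sum(axis=-1, keepdims=True)   # keep rows stochastic
        rollout = a @ rollout                   # propagate through this layer
    cls_to_patches = rollout[0, 1:]             # how much [CLS] draws on each patch
    heat = cls_to_patches.reshape(grid_size, grid_size)
    return heat / heat.max()


rng = np.random.default_rng(0)
layers = []
for _ in range(3):                              # toy: 3 layers, 4 heads, 1 + 2x2 tokens
    raw = rng.random((4, 5, 5))
    layers.append(raw / raw.sum(axis=-1, keepdims=True))
heat = attention_rollout(layers, grid_size=2)
```

In practice, the resulting map is upsampled to the image resolution and overlaid on the input as a heat map.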

Figure 7.

Attention maps from the image encoder (ViT) of the proposed model.

4.8. Further Discussion

Performance on the art aesthetics domain: The BAID [58] dataset, designed for artistic IAA, contains 60,337 artistic images covering various art forms. To test the applicability of our method in other domains, we compared performance on BAID; the results are shown in Table 7. Our method outperforms the other methods in terms of both SRCC and PLCC, demonstrating its competitiveness and its ability to generalize across domains.
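Because the comparisons in this section are reported as PLCC and SRCC, a pure-Python sketch of both metrics may help when reproducing them: PLCC is the Pearson correlation between predicted and ground-truth scores, and SRCC is the Pearson correlation of their ranks, with tied values given their average rank. Some quality-assessment works fit a nonlinear logistic mapping before computing PLCC; whether that step is used here is not stated, so the sketch omits it, and the function names are illustrative.

```python
import math
from typing import List, Sequence


def plcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson linear correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(sxx * syy)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def srcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return plcc(average_ranks(x), average_ranks(y))


pred = [1.0, 2.0, 3.0, 4.0]
gt = [1.0, 4.0, 9.0, 16.0]
print(srcc(pred, gt))        # 1.0 (perfectly monotonic)
print(plcc(pred, gt) < 1.0)  # True (not perfectly linear)
```

The example illustrates why both metrics are reported: SRCC only checks that the predicted ordering is right, whereas PLCC also rewards a linear relationship with the ground-truth scores.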

Table 7.

Performance comparison on BAID [58].

Limitations: Although our proposed method performs well, the large parameter count of the CLIP-based model is a drawback, as shown in Table 7. The large number of parameters consumes substantial computational resources and storage during training and inference, which also lengthens training and inference time. In addition, because of differences in data distribution, applying CLIP to the aesthetic domain requires fine-tuning. In future work, we will focus on reducing model complexity through lightweight design.
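To put the parameter cost in perspective, the sketch below estimates the size of a standard ViT image encoder from its width and depth and converts it to weight storage. It assumes a generic ViT-B/16-style layout (biases throughout, two LayerNorms per block, MLP ratio 4, 224 × 224 input). CLIP's visual encoder differs in small details (for example, an extra pre-LayerNorm and an output projection), the text encoder adds further parameters, and this section does not state which CLIP variant is used, so the numbers are illustrative only.

```python
def vit_params(width: int, depth: int, patch: int = 16, image: int = 224,
               channels: int = 3, mlp_ratio: int = 4) -> int:
    """Approximate parameter count of a plain ViT encoder (no classification head)."""
    tokens = (image // patch) ** 2 + 1                      # patches plus [CLS]
    patch_embed = patch * patch * channels * width + width  # conv weights + bias
    pos_embed = tokens * width
    cls_token = width
    hidden = mlp_ratio * width
    attention = 4 * width * width + 4 * width               # q, k, v and output projections
    mlp = 2 * width * hidden + hidden + width               # two linear layers with biases
    norms = 4 * width                                       # two LayerNorms (scale, shift)
    final_norm = 2 * width
    return (patch_embed + pos_embed + cls_token
            + depth * (attention + mlp + norms) + final_norm)


def storage_mib(params: int, bytes_per_param: int) -> float:
    """Weight storage in MiB (4 bytes per parameter for fp32, 2 for fp16)."""
    return params * bytes_per_param / 2**20


n = vit_params(width=768, depth=12)
print(n)  # 85798656, i.e. about 86M parameters
print(round(storage_mib(n, 4)), round(storage_mib(n, 2)))  # 327 164 (MiB)
```

Each transformer block contributes 12·width² + 13·width parameters, so the cost grows quadratically with width; this is why lightweight variants typically shrink the width before the depth.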

5. Conclusions

CLIP has demonstrated the advantages of cross-modal interaction and has been widely adopted in various downstream tasks. However, because of differences in data distribution, its performance in the aesthetic domain has been less satisfactory. Previous multimodal aesthetic assessment approaches have relied primarily on user comments, which, while informative, are inherently subjective and vary with individual preferences. This subjectivity makes it difficult to establish a robust and consistent aesthetic evaluation framework. To address these limitations, we propose a multimodal network that moves from contrastive learning to perceptual training for image aesthetics assessment. Specifically, in the unified contrastive pre-training stage, we use a multimodal large language model (MLLM) to generate objective and consistent aesthetic descriptions, mitigating the variability introduced by human subjectivity. On this basis, we use image–description–label triples to adapt CLIP from a general-domain representation model to an aesthetic-aware model, so that the learned representations capture aesthetic characteristics beyond standard semantic understanding. Moreover, in real-world applications, user comments are often unavailable, and generating aesthetic descriptions is impractical in many scenarios. To overcome this challenge, we introduce a combined prompt template for perceptual training, enabling the model to generalize effectively even without explicit textual feedback and improving its adaptability and usability across diverse aesthetic assessment tasks. Extensive experiments on three popular IAA datasets demonstrate the effectiveness of our approach. Our model consistently outperforms existing baselines, narrowing the gap between human aesthetic perception and automated evaluation. These findings highlight the potential of integrating contrastive learning with perceptual training to advance multimodal aesthetic assessment.

Author Contributions

Conceptualization, Z.L. and X.Y.; methodology, Z.L.; software, Z.L. and X.W.; validation, X.W.; investigation, Z.L.; writing—original draft preparation, Z.L., X.Y., X.W. and F.S.; writing—review and editing, Z.L., X.Y., X.W. and F.S.; visualization, Z.L.; supervision, F.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the “Science and Technology Innovation Yongjiang 2035” Major Application Demonstration Plan Project in Ningbo (2024Z005).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Authors Zhuo Li and Xuebin Wei were employed by the company Digital Ningbo Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zeng, H.; Cao, Z.; Zhang, L.; Bovik, A.C. A unified probabilistic formulation of image aesthetic assessment. IEEE Trans. Image Process. 2020, 29, 142–149.

- He, S.; Zhang, Y.; Xie, R.; Jiang, D.; Ming, A. Rethinking image aesthetics assessment: Models, datasets and benchmarks. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 942–948.

- Chen, Q.; Zhang, W.; Zhou, N.; Lei, P.; Xu, Y.; Zheng, Y.; Fan, J. Adaptive fractional dilated convolution network for image aesthetics assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14114–14123.

- Ren, J.; Shen, X.; Lin, Z.; Mech, R.; Foran, D.J. Personalized image aesthetics. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 638–647.

- Zhu, H.; Li, L.; Wu, J.; Zhao, S.; Ding, G.; Shi, G. Personalized image aesthetics assessment via meta-learning with bilevel gradient optimization. IEEE Trans. Cybern. 2022, 52, 1798–1811.

- Yan, X.; Shao, F.; Chen, H.; Jiang, Q. Hybrid CNN-transformer based meta-learning approach for personalized image aesthetics assessment. J. Vis. Commun. Image Represent. 2024, 98, 104044.

- Lv, P.; Fan, J.; Nie, X.; Dong, W.; Jiang, X.; Zhou, B.; Xu, M.; Xu, C. User-guided personalized image aesthetic assessment based on deep reinforcement learning. IEEE Trans. Multimed. 2023, 25, 736–749.

- Chen, H.; Shao, F.; Chai, X.; Gu, Y.; Jiang, Q.; Meng, X.; Ho, Y.-S. Quality evaluation of arbitrary style transfer: Subjective study and objective metric. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3055–3070.

- Luo, Y.; Tang, X. Photo and video quality evaluation: Focusing on the subject. In Proceedings of the European Conference on Computer Vision (ECCV), Marseille, France, 12–18 October 2008; pp. 386–399.

- Chai, X.; Shao, F.; Jiang, Q.; Ho, Y.-S. Roundness-preserving warping for aesthetic enhancement-based stereoscopic image editing. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1463–1477.

- Datta, R.; Joshi, D.; Li, J.; Wang, J.Z. Studying aesthetics in photographic images using a computational approach. In Proceedings of the European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 288–301.

- Ke, Y.; Tang, X.; Jing, F. The design of high-level features for photo quality assessment. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; pp. 419–426.

- Tang, X.; Luo, W.; Wang, X. Content-based photo quality assessment. IEEE Trans. Multimed. 2013, 15, 1930–1943.

- Wong, L.-K.; Low, K.-L. Saliency-enhanced image aesthetics class prediction. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 997–1000.

- Luo, Y.; Shao, F.; Xie, Z.; Wang, H.; Chen, H.; Mu, B.; Jiang, Q. HFMDNet: Hierarchical fusion and multilevel decoder network for RGB-D salient object detection. IEEE Trans. Instrum. Meas. 2013, 15, 1930–1943.

- Lu, X.; Lin, Z.; Jin, H.; Yang, J.; Wang, J.Z. Rating image aesthetics using deep learning. IEEE Trans. Multimed. 2015, 17, 2021–2034.

- Talebi, H.; Milanfar, P. NIMA: Neural image assessment. IEEE Trans. Image Process. 2018, 27, 3998–4011.

- Li, L.; Zhu, H.; Zhao, S.; Ding, G.; Lin, W. Personality-assisted multi-task learning for generic and personalized image aesthetics assessment. IEEE Trans. Image Process. 2020, 29, 3898–3910.

- Li, L.; Zhi, T.; Shi, G.; Yang, Y.; Xu, L.; Li, Y.; Guo, Y. Anchor-based knowledge embedding for image aesthetics assessment. Neurocomputing 2023, 539, 126197.

- Celona, L.; Leonardi, M.; Napoletano, P.; Rozza, A. Composition and style attributes guided image aesthetic assessment. IEEE Trans. Image Process. 2022, 31, 5009–5024.

- Li, L.; Zhu, T.; Chen, P.; Yang, Y.; Li, Y.; Lin, W. Image aesthetics assessment with attribute-assisted multimodal memory network. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7413–7424.

- Nie, X.; Hu, B.; Gao, X.; Li, L.; Zhang, X.; Xiao, B. BMI-Net: A brain-inspired multimodal interaction network for image aesthetic assessment. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5514–5522.

- Sheng, X.; Li, L.; Chen, P.; Wu, J.; Dong, W.; Yang, Y.; Xu, L.; Li, Y.; Shi, G. AesCLIP: Multi-attribute contrastive learning for image aesthetics assessment. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1117–1126.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Qu, B.; Li, H.; Gao, W. Bringing textual prompt to AI-generated image quality assessment. arXiv 2024, arXiv:2403.18714.

- Lu, X.; Lin, Z.; Jin, H.; Yang, J.; Wang, J.Z. RAPID: Rating pictorial aesthetics using deep learning. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 457–466.

- Lu, X.; Lin, Z.; Shen, X.; Mech, R.; Wang, J.Z. Deep multi-patch aggregation network for image style, aesthetics, and quality estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 990–998.

- Ma, S.; Liu, J.; Chen, C.W. A-Lamp: Adaptive layout-aware multi-patch deep convolutional neural network for photo aesthetic assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 722–731.

- Huang, Y.; Li, L.; Chen, P.; Wu, J.; Yang, Y.; Li, Y.; Shi, G. Coarse-to-fine image aesthetics assessment with dynamic attribute selection. IEEE Trans. Multimed. 2024, 26, 9316–9329.

- Li, L.; Huang, Y.; Wu, J.; Yang, Y.; Li, Y.; Guo, Y.; Shi, G. Theme-aware visual attribute reasoning for image aesthetics assessment. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4798–4811.

- Chen, H.; Shao, F.; Mu, B.; Jiang, Q. Image aesthetics assessment with emotion-aware multibranch network. IEEE Trans. Instrum. Meas. 2024, 73, 1–15.

- Zhang, X.; Xiao, Y.; Peng, J.; Gao, X.; Hu, B. Confidence-based dynamic cross-modal memory network for image aesthetic assessment. Pattern Recognit. 2024, 149, 110227.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592.

- Li, B.; Zhang, Y.; Chen, L.; Wang, J.; Yang, J.; Liu, Z. Otter: A multi-modal model with in-context instruction tuning. arXiv 2023, arXiv:2305.03726.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y. Visual instruction tuning. arXiv 2023, arXiv:2304.08485.

- Huang, Y.; Yuan, Q.; Sheng, X.; Yang, Z.; Wu, H.; Chen, P.; Yang, Y.; Li, L.; Lin, W. AesBench: An expert benchmark for multimodal large language models on image aesthetics perception. arXiv 2024, arXiv:2401.08276.

- Yang, Y.; Xu, L.; Li, L.; Qie, N.; Li, Y.; Zhang, P.; Guo, Y. Personalized image aesthetics assessment with rich attributes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 19829–19837.

- Yang, J.; Li, C.; Zhang, P.; Xiao, B.; Liu, C.; Yuan, L.; Gao, J. Unified contrastive learning in image-text-label space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 19141–19151.

- Zhang, W.; Zhai, G.; Wei, Y.; Yang, X.; Ma, K. Blind image quality assessment via vision-language correspondence: A multitask learning perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14071–14081.

- Tsai, M.; Liu, T.; Qin, T.; Chen, H.; Ma, W. FRank: A ranking method with fidelity loss. In Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Amsterdam, The Netherlands, 23–27 July 2007; pp. 383–390.

- Fukui, A.; Park, D.H.; Yang, D.; Rohrbach, A.; Darrell, T.; Rohrbach, M. Multimodal compact bilinear pooling for visual question answering and visual grounding. arXiv 2016, arXiv:1606.01847.

- Murray, N.; Marchesotti, L.; Perronnin, F. AVA: A large-scale database for aesthetic visual analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2408–2415.

- Kong, S.; Shen, X.; Lin, Z.; Mech, R.; Fowlkes, C. Photo aesthetics ranking network with attributes and content adaptation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 662–679.

- Wang, Y.; Cui, Y.; Lin, J.; Jiang, G.; Yu, M.; Fang, C.; Zhang, S. Blind quality evaluator for enhanced colonoscopy images by integrating local and global statistical features. IEEE Trans. Instrum. Meas. 2024, 73, 1–15.

- Murray, N.; Gordo, A. A deep architecture for unified aesthetic prediction. arXiv 2017, arXiv:1708.04890.

- Zhang, X.; Gao, X.; Lu, W.; He, L. A gated peripheral-foveal convolutional neural network for unified image aesthetic prediction. IEEE Trans. Multimed. 2019, 21, 2815–2826.

- Hosu, V.; Goldlucke, B.; Saupe, D. Effective aesthetics prediction with multi-level spatially pooled features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9367–9375.

- Ke, J.; Wang, Q.; Wang, Y.; Milanfar, P.; Yang, F. MUSIQ: Multi-scale image quality transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 5128–5137.

- Niu, Y.; Chen, S.; Song, B.; Chen, Z.; Liu, W. Comment-guided semantics-aware image aesthetics assessment. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1487–1492.

- He, S.; Ming, A.; Zheng, S.; Zhong, H.; Ma, H. EAT: An enhancer for aesthetics-oriented transformers. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1023–1032.

- Ke, J.; Ye, K.; Yu, J.; Wu, Y.; Milanfar, P.; Yang, F. VILA: Learning image aesthetics from user comments with vision-language pretraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10041–10051.

- Zhou, H.; Yang, R.; Tang, L.; Qin, G.; Zhang, Y.; Hu, R.; Li, X. Gamma: Toward generic image assessment with mixture of assessment experts. arXiv 2025, arXiv:2503.06678.

- Malu, G.; Bapi, R.; Indurkhya, B. Learning photography aesthetics with deep CNNs. arXiv 2017, arXiv:1707.03981.

- Liu, D.; Puri, R.; Kamath, N.; Bhattacharya, S. Composition-aware image aesthetics assessment. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass, CO, USA, 1–5 March 2020; pp. 3558–3567.

- Li, L.; Duan, J.; Yang, Y.; Xu, L.; Li, Y.; Guo, Y. Psychology inspired model for hierarchical image aesthetic attribute prediction. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- She, D.; Lai, Y.-K.; Yi, G.; Xu, K. Hierarchical layout-aware graph convolutional network for unified aesthetics assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8471–8480.

- Yi, R.; Tian, H.; Gu, Z.; Lai, Y.-K.; Rosin, P.L. Towards artistic image aesthetics assessment: A large-scale dataset and a new method. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 22388–22397.

- Sheng, K.; Dong, W.; Ma, C.; Mei, X.; Huang, F.; Hu, B.-G. Attention-based multi-patch aggregation for image aesthetic assessment. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 879–886.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).