Abstract

In Mexico, around 2.4 million people (1.9% of the national population) are deaf, and Mexican Sign Language (MSL) support is essential for people with communication disabilities. Research and technological prototypes of sign language recognition have been developed to support public communication systems without human interpreters. However, most of these systems and studies focus on American Sign Language (ASL) or other sign languages, for which the highest levels of accuracy in letter and word recognition have been reported. The objective of the current study is to develop and evaluate a sign language recognition system tailored to MSL. The research aims to achieve accurate recognition of dactylology and the first ten numbers (1–10) in MSL. A database was created by recording, with a camera, the 29 characters of MSL's dactylology and the first ten numbers. Then, MediaPipe was applied to extract features from both hands (21 landmarks per hand). Once the features were extracted, Machine Learning and Deep Learning techniques were applied to recognize MSL signs. The recognition of static (29 classes) and continuous (10 classes) MSL signs yielded an accuracy of 92% with a Support Vector Machine (SVM) and 86% with a Gated Recurrent Unit (GRU), respectively. The models were trained on full scenes that include both hands; therefore, they are expected to perform best under these conditions. Increasing the number of samples is suggested to improve accuracy.

1. Introduction

Based on data from the World Health Organization (WHO), approximately 5% of the global population, an estimated 450 million individuals, have a hearing impairment. Projections indicate that by 2050 the number of people with hearing loss will surpass 700 million [1]. In Mexico, according to the National Institute of Statistics and Geography (Instituto Nacional de Estadística y Geografía, INEGI), 2.4 million people (approximately 1.9% of the national population) were deaf in 2020 [2].

Sign Language (SL) is a communication tool that supports deaf people in daily life. However, since 300 different sign languages exist around the globe [3], it is difficult for deaf people to communicate both with non-SL speakers and with speakers of other sign languages. SL speakers therefore need support that facilitates communication with speakers of other sign languages and with non-SL speakers.

It is worth mentioning that research that supports SL speakers can potentially have a positive impact on 5 of the 17 United Nations (UN) Sustainable Development Goals: (4) Quality Education; (8) Decent Work and Economic Growth; (9) Industry, Innovation, and Infrastructure; (10) Reduced Inequalities; and (11) Sustainable Cities and Communities [4]. Hence, fostering inclusion through SL, in person or online, at school or at work, will improve the quality of life of deaf people and will preserve and promote their sense of identity and community.

Mexican Sign Language (MSL) has played a vital role in Mexico and its history. In 2005, MSL was officially recognized as a national language and part of Mexico's linguistic heritage [5]. This recognition facilitated the creation of many schools that teach MSL as a cultural right of deaf people; in fact, the General Law on Disability was created in 2005, and its last reform occurred in 2008 [6], which supports research for deaf people at the national level.

The significant contributions of the research work are as follows:

- This study demonstrates the feasibility of real-time, low-cost computational algorithms for both static and continuous Mexican Sign Language (MSL) recognition. The approach includes full MSL dactylology [7] (covering letters such as “LL” and “RR”) and the recognition of the first ten numbers.

- To overcome the scarcity of resources, we developed and released an open-source MSL database. This database is now available to the research community, serving as a crucial resource for further innovation in SL recognition.

- Unlike previous studies that relied on cropped-hand images, controlled environments, or complex sensor data, our method utilizes full-frame video recordings—from the waist to the head—in real-world scenarios. This comprehensive capture enables the effective application of state-of-the-art algorithms for sign language recognition.

2. Literature Review

Technology has demonstrated advances in supporting communication with SL speakers by building systems that recognize SL. In a systematic literature review [8], three types of sign language recognition systems were found: gloves, computer vision, and hybrid systems. Glove-based systems have demonstrated high accuracy (>90%), with hybrid systems achieving even greater precision (>99%). In the domain of computer vision, sophisticated sensors such as the Xbox One Kinect and the Leap Motion Controller have been widely utilized for SL recognition [9]. However, the high cost of practical glove-based solutions and specialized sensors remains a major barrier for most deaf individuals, as their financial resources are often constrained to essential expenditures due to the overall economic conditions of the SL-speaking population [10].

A systematic review of sign language recognition systems [11] found that the webcam is the only device that combines low cost with excellent scalability, and it was used in more than 50% of the reviewed papers. Moreover, most people and institutions already have a camera integrated into their cell phones or computers. Therefore, an SL recognition system can be deployed simply by downloading the software, code repository, or app.

2.1. General Process of Sign Language Recognition

According to [11], the general steps for a sign language recognition system are the following:

- Data Acquisition: receive image or video inputs and store them.

- Preprocessing: standardize, filter, or crop images.

- Segmentation: remove the background or other irrelevant parts.

- Feature Extraction: extract the features for classification.

- Classification: assign a label or category to the input with a classification algorithm.

2.2. Related Work

Many models have been developed to recognize and classify the signs of a sign language. At the beginning of the last decade, the recognition of a static sign in an image or video frame relied on Machine Learning techniques [11], such as k-Nearest Neighbors, Artificial Neural Networks (ANNs), Support Vector Machine (SVM), Hidden Markov Model (HMM), Convolutional Neural Network (CNN), Fuzzy Logic, and Ensemble Learning. However, these models do not achieve the desired accuracy when deployed in real-life scenarios. Only two models have shown the desired results: SVM and CNN. SVM on its own can recognize a sign only if the features have previously been extracted by another algorithm or by filters. CNN, on the other hand, can achieve the desired accuracy, but at a high computational cost. Moreover, as reported by [11], among the top-performing models reviewed for static sign recognition, CNN-based approaches achieved accuracies exceeding 99%, further underscoring CNN's potential despite its higher computational demands.

During the last decade, researchers have optimized Deep Learning techniques such as CNN and Recurrent Neural Network (RNN) models for sign language recognition, including continuous signs, since a continuous sign can be treated as a sequence of images or frames. Recent work by [12] on TARNet demonstrates that combining CNNs for spatial feature extraction with LSTMs for temporal modeling can achieve high accuracy (up to 99.92%) on trajectory-based recognition tasks. This hybrid approach reinforces the rationale for using similar architectures in dynamic sign language recognition.

Table 1 lists the essential models identified in other research. Among them, Single Shot Detector (SSD) and MobileNetV2 achieved more than 90% accuracy in recognizing static signs in real time, meaning that the system returns a translation of the hand or hands as soon as it receives an input [13].

Table 1.

Models applied for SL recognition with CNN and RNN.

In contrast, 3D CNN showed the best accuracy for continuous signs among the models but required a higher computational cost. Thus, real-world deployment requires a balance: accuracy comparable to that of a 3D CNN with latency equal to or lower than that of MobileNetV2 or SSD. Furthermore, practical model training requires an automated labeling approach to consistently identify and categorize the hand in each frame.

Human pose detection has become a prolific area of research following the groundbreaking work of [22], which inspired numerous scholarly articles on the topic. Among the algorithms that detect hands and track their changes during movement, one with high detection accuracy is MediaPipe, developed by Google researchers [23], an ML pipeline for hand tracking and gesture recognition. Instead of detecting the whole hand directly, the approach first detects the palm. The method achieved an average precision of 95.7% in palm detection, compared with a baseline of 86.22% obtained with a standard cross-entropy loss and no decoder.

MediaPipe's hand-tracking solution is divided into two stages. The first detects the palm with a modified and adapted version of the SSD algorithm, named the BlazePalm detector. Once the palm is detected, a second model estimates the 21 landmarks of the hand. Notably, the palm detector does not run on every frame: it is re-run only when the landmark model no longer detects a hand, which lowers the computational cost and latency (see Figure 1).
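MediaPipe avoids running the palm detector on every frame: it reuses the previous frame's landmarks to locate the hand and falls back to detection only when tracking is lost. The Python sketch below illustrates that control flow under stated assumptions. It is not MediaPipe's internal code or API; `detect_palm` and `predict_landmarks` are hypothetical callables standing in for the palm detector and the landmark model, and the 0.5 presence threshold is an assumed value.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Landmarks = List[Tuple[float, float, float]]


def track_hands(
    frames: Sequence[object],
    detect_palm: Callable[[object], Optional[object]],
    predict_landmarks: Callable[[object, object], Tuple[Landmarks, float, object]],
    presence_threshold: float = 0.5,
) -> Tuple[List[Optional[Landmarks]], int]:
    """Run the (expensive) palm detector only when no hand is being tracked."""
    outputs: List[Optional[Landmarks]] = []
    region = None        # hand region carried over from the previous frame
    detector_calls = 0
    for frame in frames:
        if region is None:
            detector_calls += 1
            region = detect_palm(frame)       # hypothetical stand-in for BlazePalm
            if region is None:
                outputs.append(None)          # no hand in this frame
                continue
        landmarks, presence, next_region = predict_landmarks(frame, region)
        if presence < presence_threshold:
            region = None                     # tracking lost: detect again next frame
            outputs.append(None)
        else:
            region = next_region              # landmarks define the next frame's crop
            outputs.append(landmarks)
    return outputs, detector_calls
```

With a stream in which the hand is lost once, the detector runs only twice (on the first frame and after the loss) instead of on every frame, which is where the latency savings come from.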

Figure 1.

Sample of using MediaPipe Holistic for hand detection for both hands.

As shown in Table 2, 15 articles on sign language recognition, with and without MediaPipe, were compared with our study. Of these studies, 13 (86.67%) created a new database, 10 (66.67%) used MediaPipe, 7 (46.67%) used a normal web camera, and 9 (60%) addressed MSL. These comparisons highlight the current gap between state-of-the-art algorithms and their application to MSL and other sign languages using similar recognition methods and models. Additionally, several MSL studies in Table 2 rely on specialized sensors such as Kinect [24,25,26], achieving high accuracy (95–100%). However, Kinect-based solutions are more expensive and less portable. In contrast, our approach uses a conventional camera (iPhone 11) to capture full-body frames from the waist to the head, which aligns more closely with real-world conditions and is affordable to a broader audience. Moreover, among MSL-focused works, only a few have publicly shared their databases (e.g., Mejía-Peréz et al., 2022 [27] with OAK-D, and Sosa-Jiménez et al., 2022 [26] with Kinect). Sánchez-Vicinaiz et al. (2024) [28] generated their own dataset of static MSL signs, but it is available only on request. By providing an open-source dataset of 29 dactylology characters and 10 numeric signs in MSL, the current study not only offers a viable low-cost setup but also addresses the scarcity of publicly accessible data, fostering reproducibility and encouraging further innovation in computer-vision-based MSL recognition.

Sign language translation is considered one of the more complex problems in SL because real-time recognition requires low latency. Another approach applied a dense pose detector to German Sign Language [29]: spatio-temporal cues served for dense pose detection and word recognition, and Transformers translated the recognized glosses into text (Gloss2Text, i.e., from sign language glosses to ordinary written language).

A relevant work [30] detected human pose from Wi-Fi signals with low latency, because the signals are received in 1D; unfortunately, the resulting dense pose estimation reached only about 50% accuracy. Nonetheless, this research points toward new sensors for human pose estimation that could replace webcams and offer greater scalability.

Table 2.

Comparison table of the literature review applied to sign language recognition related to MediaPipe.


| Reference | Sign Language | Device | Created New Dataset | Full Frame/Image Scenario | # of Participants | # of Samples in the Dataset | MediaPipe | # of Landmarks Used (If MediaPipe) | # of Hands Considered for Detection at the Same Time | # of Classes from Dactylology | # of Classes from Numbers (From 1 to 10) | # of Classes from Words | Trained Models for Static SL | Trained Models for Continuous SL | Best Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Solís et al., 2015 [31] | Mexican | Digital Canon EOS rebel T3 EF-S 18–55 Camera | 1 | - | N.M. | N.M. | - | - | 1 | 24 | - | - | Jacobi-Fourier Moment + Multilayer Perceptron | - | 95% Jacobi–Fourier Moment + Multilayer Perceptron |
| Solís et al., 2016 [32] | Mexican | Digital Camera and four LED reflectors placed at the corners | 1 | - | N.M. | N.M. | - | - | 1 | 21 | - | - | Normalized moment + Multilayer Perceptron | - | 93% Normalized moment + Multilayer Perceptron |
| Jimenez et al., 2017 [24] | Mexican | Kinect | 1 | - | 100 | 1000 | - | - | 1 | 5 | 5 | - | HAAR 2D/HAAR 3D + AdaBoost | - | 95% HAAR 3D + AdaBoost |
| Shin et al., 2021 [33] | American | Camera | - | - | N.M. | ASL Alphabet 780,000 Finger Spelling A 65,774 Massey 1815 | 1 | 21 | 1 | 26 | - | - | Angle and/or Distance Features + SVM, GBL | Angle and/or Distance Features + SVM, GBL | With Angle and Distance Features + SVM: Massey dataset: 99.39% Finger Spelling A: 98.45% ASL alphabet: 87.60% |
| Indriani et al., 2021 [34] | American | Kinect | 1 | - | N.M. | 900 | 1 | 21 | 1 | - | 10 (From 0 to 9) | - | Hand condition detector | Hand condition detector | 95% Hand condition detector |
| Halder and Tayade, 2021 [35] | American Indian Italian Turkish | Camera | - | - | N.M. | N.M. | 1 | 21 | 1 | American 26 Indian 26 Italian 22 | American 10 Indian Turkish 10 | - | SVM, KNN, Random Forest, Decision Tree, Naive Bayes, ANN, MLP | - | 99% SVM in every SL |
| Espejel-Cabrera et al., 2021 [36] | Mexican | Camera with black clothes and background | 1 | 1 | 11 | 2480 | - | - | 2 | - | - | 249 | Decision Tree, SVM, Neural networks, Naive Bayes | - | +96% SVM |
| Sundar and Bagyammal, 2022 [37] | American | Camera | 1 | 1 | 4 | 93,600 | 1 | 21 | 1 | 26 | - | - | LSTM | LSTM | 99% LSTM |
| Chen et al., 2022 [38] | American | Camera | - | - | Dataset 1: 2062 Dataset 2: 5000 Dataset 3: 12,000 Dataset 4: 300 | N.M. | 1 | 21 | 1 | 21 | - | - | Recursive Feature Elimination (RFE) + proposed method, CNN | - | 96.3% RFE + proposed method |
| Samaan et al., 2022 [39] | American | Camera of OPPO Reno3 Pro mobile | 1 | 1 | 5 | 750 | 1 | 258 | 2 | - | - | 10 | - | GRU, LSTM, and BiLSTM | 99% GRU |
| Subramanian et al., 2022 [40] | Indian | Camera | 1 | 1 | N.M. | 900 | 1 | 540+ | 2 | - | - | 13 | - | Simple RNN, LSTM, Standard GRU, BiGRU, BiLSTM, MOPGRU (MediaPipe + GRU) | 99.92% MOPGRU (MediaPipe + GRU) |
| Mejía-Peréz et al., 2022 [27] | Mexican | Depth Camera (OAK-D) | 1 | 1 | 4 | 3000 | 1 | 67 | 2 | 8 | - | 22 | Gaussian Noise + RNN, LSTM, and GRU | Gaussian Noise + RNN, LSTM, and GRU | 97.11% Gaussian Noise + GRU |
| Sosa-Jiménez et al., 2022 [26] | Mexican | Kinect | 1 | 1 | 22 | 18,040 | - | - | 2 | 29 | 10 (From 0 to 9) | 43 | Hidden Markov Model (HMM) | Hidden Markov Model (HMM) | 99% HMM |
| Rios-Figueroa et al., 2022 [25] | Mexican | Kinect | 1 | - | 15 | N.M. | - | - | - | 21 | - | - | Use of Spherical and/or Cartesian features classified by a Maximum A Posteriori (MAP) | - | 100% Spherical and Cartesian features classified by a Maximum A Posteriori (MAP) |
| Sánchez-Vicinaiz et al., 2024 [28] | Mexican | Camera | 1 | - | 1 internal DB | 3822 internal DB + 4347 external DB | 1 | 21 | 1 | 21 | - | - | MediaPipe + Customized CNN | - | 99.93% training 98.43% validation 83.63% testing |
| Current Study | Mexican | Camera | 1 | 1 | 11 | 3677 | 1 | 42 | 2 | 29 | 10 | - | SVM, GBL | LSTM, GRU | 92% SVM 85% GRU |

Note: N.M. = not mentioned.

3. Materials and Methods

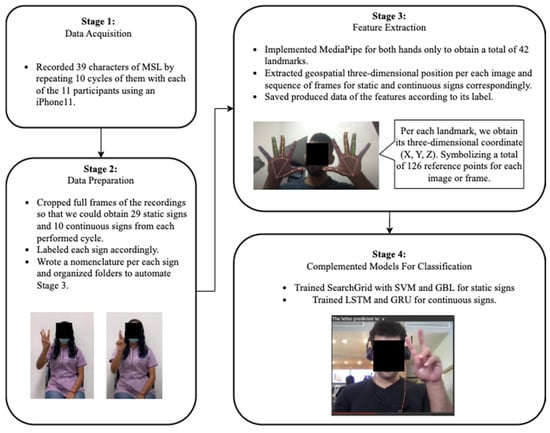

In this section, we adapted the general methodology of [11] to MediaPipe, because MediaPipe allowed us to extract the features without any preprocessing filters other than those built into the algorithm, and then to classify them (see Figure 2 and Algorithm 1).

Algorithm 1. Pseudocode for the main pipeline of the current work.

    BEGIN DataAcquisition
        INITIALIZE dataset
        FOR each participant IN participant_list DO
            FOR cycle FROM 1 TO 10 DO
                // Record with both hands for augmentation
                IF cycle < 6 THEN
                    RECORD video WITH right hand for current cycle
                ELSE
                    RECORD video WITH left hand for current cycle
                END IF
                STORE recorded videos IN dataset
            END FOR
        END FOR
        SAVE dataset
    END DataAcquisition

    BEGIN DataPreparation
        FOR each video IN dataset DO
            IF video IS a static sign THEN
                EXTRACT representative frame (screenshot)
                LABEL frame WITH corresponding sign label (using PASCAL VOC format)
                SAVE frame INTO prepared_dataset
            ELSE IF video IS a continuous sign THEN
                TRIM video TO appropriate start and end times
                LABEL video WITH corresponding sign label
                SAVE trimmed video INTO prepared_dataset
            END IF
        END FOR
        SAVE prepared_dataset
    END DataPreparation

    BEGIN FeatureExtraction
        FOR each sample (frame or video frame) IN prepared_dataset DO
            APPLY MediaPipe algorithm TO extract hand landmarks
            // Extract 21 landmarks per hand, thus 42 landmarks in total
            FOR each landmark DO
                RETRIEVE 3D coordinates (X, Y, Z)
            END FOR
            COMPILE coordinates INTO feature vector (126 data points)
            ASSOCIATE feature vector WITH its label
            STORE feature vector IN feature_dataset
        END FOR
        SAVE feature_dataset
    END FeatureExtraction

    BEGIN ModelTrainingAndClassification
        // Process static sign samples
        LOAD static_signs FROM feature_dataset
        APPLY grid search TO train SVM model using static_signs data
        APPLY grid search TO train Gradient Boost Light (GBL) model using static_signs data
        SAVE trained static_sign_models
        // Process continuous sign samples
        LOAD continuous_signs FROM feature_dataset
        CONFIGURE LSTM model
        TRAIN LSTM model using continuous_signs data
        CONFIGURE GRU model
        TRAIN GRU model using continuous_signs data
        SAVE trained continuous_sign_models
    END ModelTrainingAndClassification

    BEGIN MainPipeline
        // Step 1: Data Acquisition
        CALL DataAcquisition()
        // Step 2: Data Preparation
        CALL DataPreparation()
        // Step 3: Feature Extraction
        CALL FeatureExtraction()
        // Step 4: Model Training and Classification
        CALL ModelTrainingAndClassification()
    END MainPipeline

Figure 2.

Adapted process for sign language recognition.

3.1. Data Acquisition

We created a database of MSL containing its dactylology (29 characters) and the numbers from one to ten. The recordings were produced by eleven MSL speakers, each of whom performed ten series of the dactylology characters and numbers. The ten series were performed with both hands (five with the right hand and five with the left hand) to augment the data and reduce overfitting [41,42,43,44].

The speakers’ learning of MSL came from different sources:

- Two native speakers who are deaf and learned MSL to communicate.

- Two bilingual speakers who learned MSL from their parents.

- Seven speakers who learned MSL in an educational program.

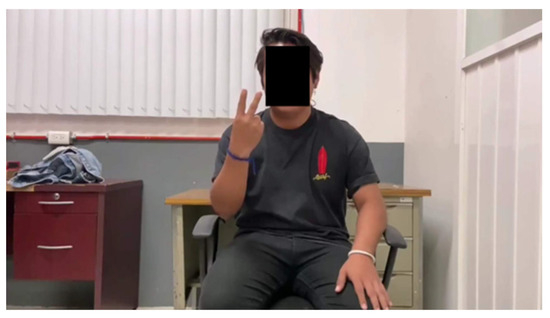

The recordings were captured with an iPhone 11 (model MWM32LZ/A; Apple Inc., Cupertino, CA, USA) in 4K resolution (3840 × 2160). All samples were recorded at 30 FPS (frames per second), with the iPhone held horizontally and balanced on a tripod (see Figure 3).

Figure 3.

Example of the sign for the number 2 (static sign).

3.2. Data Preparation

Together with two social-service volunteers from the Universidad Autónoma del Estado de Morelos (UAEM), we carefully extracted each static and continuous sign from the recorded cycles. The videos of continuous signs were then edited to have the appropriate start and end times, and a screenshot was taken for each static sign. On average, each video lasted about one second.

Moreover, static signs were tagged in Pattern Analysis, Statistical Modelling and Computational Learning Visual Object Classes (PASCAL VOC) format in XML with LabelImg for future researchers who would like to perform other experiments [45,46].
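Because the static-sign annotations are distributed as PASCAL VOC XML, they can be read with Python's standard library alone. The sketch below assumes the usual LabelImg layout (a `filename` element and one `object` element with `name` and `bndbox` per box); the file name and coordinates in `SAMPLE` are made up for illustration and are not taken from the released dataset.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

BBox = Tuple[str, int, int, int, int]  # (label, xmin, ymin, xmax, ymax)


def read_voc_boxes(xml_text: str) -> Tuple[str, List[BBox]]:
    """Return the image file name and every labeled bounding box in a VOC file."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename", default="")
    boxes: List[BBox] = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # Some tools write coordinates as floats, so parse via float first.
        coords = [int(float(bb.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.findtext("name"), *coords))
    return filename, boxes


# Hypothetical annotation for the letter "A" in a 4K frame.
SAMPLE = """<annotation>
  <filename>letter_A_p01_c01.jpg</filename>
  <size><width>3840</width><height>2160</height><depth>3</depth></size>
  <object>
    <name>A</name>
    <bndbox><xmin>1510</xmin><ymin>820</ymin><xmax>1905</xmax><ymax>1290</ymax></bndbox>
  </object>
</annotation>"""

print(read_voc_boxes(SAMPLE))
```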

3.3. Feature Extraction

To extract the features of each image of the static sign samples and of every video frame of the continuous sign samples, MediaPipe was used to obtain 21 landmarks from each hand, that is, 42 landmarks in total per image or frame. Each landmark has three values, its three-dimensional coordinates (X, Y, Z), so 126 coordinate values (42 × 3) were extracted per image or frame.
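The layout of the 126-value feature vector can be made concrete with a short NumPy sketch. Two details are our assumptions, since the text does not specify them: the left hand occupies the first 63 values and the right hand the last 63, and a hand that MediaPipe does not detect is encoded as 63 zeros (a convention common in MediaPipe Holistic tutorials). The function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

import numpy as np

Point = Tuple[float, float, float]
N_LANDMARKS = 21  # MediaPipe hand model: 21 landmarks per hand


def hand_to_array(hand: Optional[Sequence[Point]]) -> np.ndarray:
    """Flatten one hand's 21 (x, y, z) landmarks; zeros if the hand is missing."""
    if hand is None:
        return np.zeros(N_LANDMARKS * 3, dtype=np.float32)
    arr = np.asarray(hand, dtype=np.float32)
    if arr.shape != (N_LANDMARKS, 3):
        raise ValueError(f"expected ({N_LANDMARKS}, 3) landmarks, got {arr.shape}")
    return arr.reshape(-1)


def frame_features(left: Optional[Sequence[Point]],
                   right: Optional[Sequence[Point]]) -> np.ndarray:
    """Concatenate left- and right-hand landmarks into one 126-value vector."""
    return np.concatenate([hand_to_array(left), hand_to_array(right)])
```

With MediaPipe Holistic, each hand could be converted beforehand with, for example, `[(p.x, p.y, p.z) for p in results.left_hand_landmarks.landmark]` whenever `results.left_hand_landmarks` is not `None`.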

3.4. Complemented Models for Classification

Once the three-dimensional coordinates were extracted, SVM and Gradient Boost Light (GBL) models were trained with a grid search for the static signs. For the continuous signs, Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) models, each with three recurrent layers, were applied [47].

3.5. Evaluation Metrics

We used multiple metrics, namely accuracy, precision, recall, and F1 score, to evaluate the performance of our models. Since our study involves multiclass classification, we used macro-averaging to calculate precision, recall, and F1 score. As described in [48], macro-averaging ($M$) computes a metric separately for every class $C_i$, $i = 1, \dots, K$, and then takes the unweighted mean over the $K$ classes.

Each per-class value lies between zero and one and is computed from that class's true positives ($TP_i$), true negatives ($TN_i$), false positives ($FP_i$), and false negatives ($FN_i$), so every class contributes equally to the macro-averaged result regardless of its number of samples.

Accuracy ($Acc$) is the proportion of correct predictions made by a model. As shown in Equation (1), it is obtained by adding the true positives ($TP$) and true negatives ($TN$) and dividing this sum by the total of true positives, true negatives, false positives ($FP$), and false negatives ($FN$):

$$\text{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (1)$$

Precision ($P$) is the ratio of true positives to the sum of true positives and false positives, as shown in Equation (2). For the multiclass case, the per-class precisions are averaged as shown in Equation (3) (macro-averaged precision, $P_M$):

$$P = \frac{TP}{TP + FP} \qquad (2)$$

$$P_M = \frac{1}{K}\sum_{i=1}^{K} \frac{TP_i}{TP_i + FP_i} \qquad (3)$$

Recall ($R$) is the ratio of true positives to the sum of true positives and false negatives, as shown in Equation (4). The per-class recalls are averaged in the same way in Equation (5) (macro-averaged recall, $R_M$):

$$R = \frac{TP}{TP + FN} \qquad (4)$$

$$R_M = \frac{1}{K}\sum_{i=1}^{K} \frac{TP_i}{TP_i + FN_i} \qquad (5)$$

The weighted F1 score ($F1_w$) is a weighted mean of the per-class F1 scores, as shown in Equation (6), where $P_i$ and $R_i$ are the per-class precision and recall from Equations (3) and (5). Each class receives a weight $w_i$ equal to its probability, i.e., its share $n_i / N$ of the $N$ samples:

$$F1_w = \sum_{i=1}^{K} w_i \, \frac{2 P_i R_i}{P_i + R_i}, \qquad w_i = \frac{n_i}{N} \qquad (6)$$
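The metrics in Equations (1)–(6) can be computed directly from a confusion matrix. The NumPy sketch below assumes that rows are true classes and columns are predicted classes, and it treats 0/0 as 0 for classes that are never predicted (an assumed convention). Accuracy is computed as the fraction of correctly classified samples (trace over total), which reduces to Equation (1) in the binary case. The function name is hypothetical.

```python
import numpy as np


def classification_metrics(cm) -> dict:
    """Accuracy, macro P/R/F1, and weighted F1 from a K x K confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp        # predicted as class i but belonging elsewhere
    fn = cm.sum(axis=1) - tp        # belonging to class i but predicted elsewhere
    support = cm.sum(axis=1)        # number of true samples per class (n_i)

    # Per-class precision, recall, and F1; 0/0 is defined as 0.
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)

    weights = support / support.sum()   # w_i = n_i / N
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision_macro": float(precision.mean()),
        "recall_macro": float(recall.mean()),
        "f1_macro": float(f1.mean()),
        "f1_weighted": float((weights * f1).sum()),
    }


cm = np.array([[5, 0, 0],
               [1, 3, 1],
               [0, 2, 8]])
print(classification_metrics(cm))
```

For the same predictions, scikit-learn's `precision_score`, `recall_score`, and `f1_score` with `average="macro"` or `average="weighted"` should give matching values.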

4. Results (Including Experimentation)

4.1. Dataset

We conducted our experiments on a self-created database containing the MSL dactylology and number datasets. All datasets and generated results were stored in a Google Drive repository for use with Google Colab and were later published in Mendeley Data [41,42,43,44,45,46,47,49].

4.2. Implementation Details

Although 110 samples per character were planned (11 participants × 10 series), only 90–100 per character were recovered from the recorded videos because of losses during data transfer (Appendix A).

During landmark extraction, for videos shorter than 1 s (fewer than 30 frames at 30 FPS), the data of the last frame were repeated until 30 frames were obtained; videos longer than 1 s were truncated to their first 30 frames. Code from [50] was used as the baseline and adapted to the dataset and the models' needs.
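The padding rule above (repeat the last frame for short clips, keep the first 30 frames for long ones) can be written as a small NumPy function. This is a minimal sketch that assumes each clip has already been converted to an array of per-frame feature vectors, e.g., of shape `(n_frames, 126)`; the function name is ours, not from the baseline code.

```python
import numpy as np

SEQ_LEN = 30  # 1 s at 30 FPS


def fix_length(frames, seq_len: int = SEQ_LEN) -> np.ndarray:
    """Pad by repeating the last frame, or truncate to the first `seq_len` frames."""
    frames = np.asarray(frames)
    if frames.ndim != 2 or len(frames) == 0:
        raise ValueError("expected a non-empty (n_frames, n_features) array")
    if len(frames) >= seq_len:
        return frames[:seq_len]                      # keep only the first frames
    pad = np.repeat(frames[-1:], seq_len - len(frames), axis=0)
    return np.concatenate([frames, pad], axis=0)     # repeat the last frame
```

A 24-frame clip thus gains six copies of its last frame, while a 45-frame clip loses its last 15 frames; either way every training sequence has shape `(30, 126)`.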

The data were split as follows: 80% for training, 10% for testing, and 10% for validation. The free version of Google Colab was used [49].
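An 80/10/10 split can be sketched as follows. The text does not state whether the split was shuffled or stratified by class, so the random, non-stratified split and the fixed seed here are assumptions; with only 90–100 samples per class, a per-class (stratified) split would guarantee that every class appears in the test and validation sets.

```python
import numpy as np


def split_80_10_10(n_samples: int, seed: int = 0):
    """Shuffle sample indices and split them 80/10/10 into train/test/validation."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(0.8 * n_samples)
    n_test = int(0.1 * n_samples)
    train = idx[:n_train]
    test = idx[n_train:n_train + n_test]
    val = idx[n_train + n_test:]     # the remainder goes to validation
    return train, test, val
```

Applied to the 3677 samples listed in Table 2, this yields 2941 training, 367 test, and 369 validation indices.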

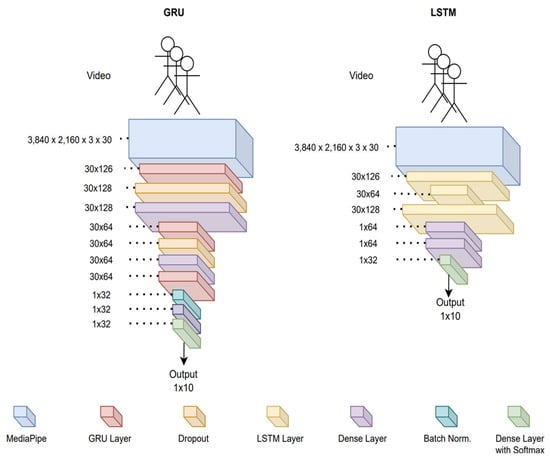

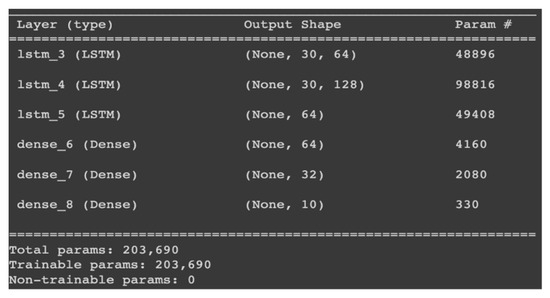

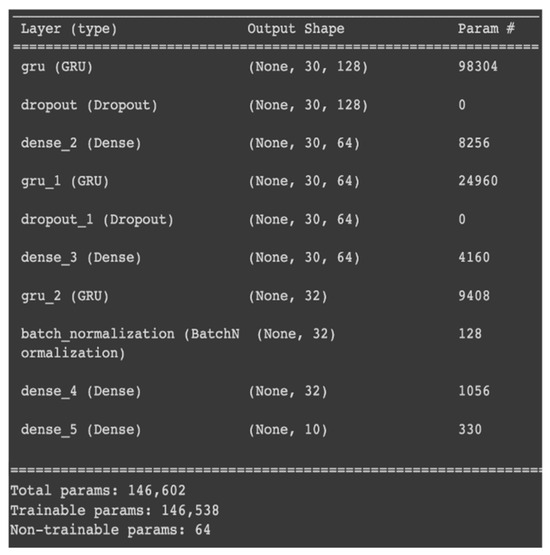

For static signs, SVM and GBL were trained using grid search with cross-validation (see Table 3). For continuous signs, TensorFlow and Keras were used to build the LSTM and GRU models (see Figure 4), with "he_normal" initializers and "elu" activation functions instead of random initializers and "relu" activation functions, respectively, because the "elu" activation function has shown faster convergence in other studies ([51], pp. 335–338). The only exception in both models was the last dense layer, which used a "softmax" activation function for classification, with a "glorot_uniform" kernel initializer and L2 regularization as the kernel regularizer. In addition, the GRU model used a dropout rate of 0.2 in both layers. The LSTM and GRU models were trained for 350 and 1200 epochs, respectively, and had 203,690 parameters (all trainable) and 146,602 parameters (62 of them non-trainable), respectively (see Appendix B).
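The reported LSTM parameter count can be checked by hand. A Keras LSTM layer has four gates, each with input weights, recurrent weights, and a bias, giving `4 * (units * (input_dim + units) + units)` parameters; a dense layer has `units * input_dim + units`. The layer sizes below (LSTM 64, 128, 64, then Dense 64, 32, 10 on 126 input features) are our assumption: they are not stated in the text, but they reproduce the reported 203,690 parameters exactly. The 30-frame sequence length does not affect the count.

```python
def lstm_params(units: int, input_dim: int) -> int:
    # Four gates, each with an input kernel, a recurrent kernel, and a bias.
    return 4 * (units * (input_dim + units) + units)


def dense_params(units: int, input_dim: int) -> int:
    return units * input_dim + units


N_FEATURES = 126   # 42 landmarks x (X, Y, Z)
N_CLASSES = 10     # numbers 1-10

# Hypothetical layer sizes (not stated in the text) that match the reported total.
lstm_sizes = [64, 128, 64]
dense_sizes = [64, 32, N_CLASSES]

total, prev = 0, N_FEATURES
for units in lstm_sizes:
    total += lstm_params(units, prev)
    prev = units
for units in dense_sizes:
    total += dense_params(units, prev)
    prev = units
print(total)  # 203690
```

For a Keras GRU layer with the default `reset_after=True`, the analogous count is `3 * (units * (input_dim + units) + 2 * units)`; because the GRU layer sizes are not given in the text, we do not attempt to reproduce its 146,602 parameters.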

Table 3.

Grid search parameters and best parameters for SVM and GBL.

Figure 4.

LSTM and GRU’s architecture.

Figure 4 shows a representation of the layers. The number given for each layer is the size of the input it receives. Only the MediaPipe stage can accept inputs of different sizes (depending, for example, on camera quality and other factors), which it adapts internally.

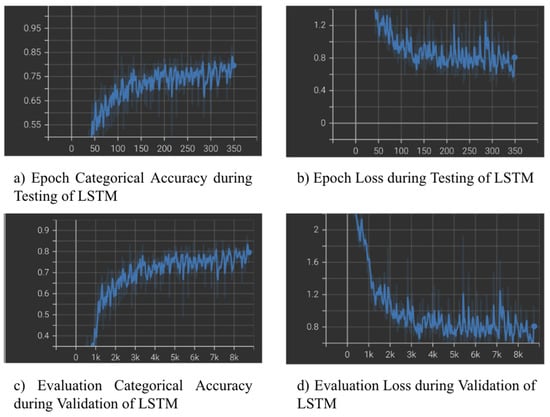

4.3. Quantitative Analysis

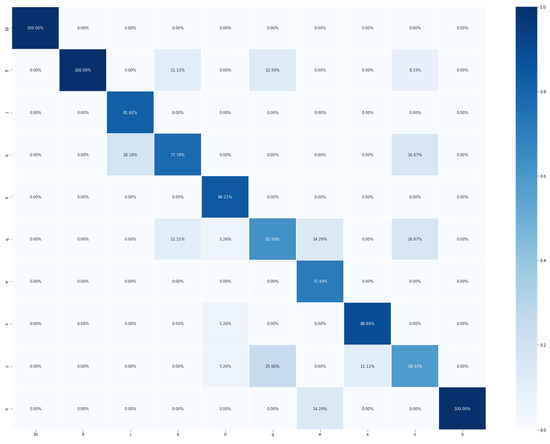

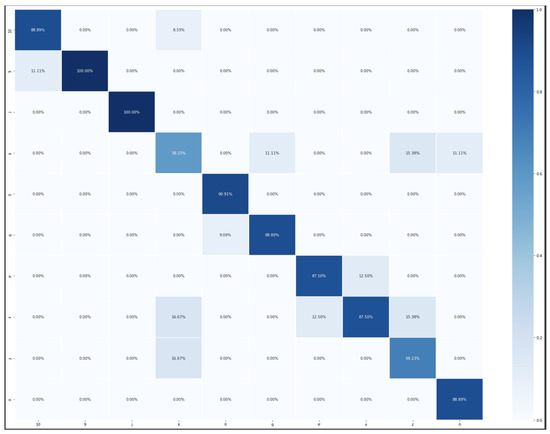

As shown in Table 4, SVM outperforms GBL on every metric in both the test and validation sets, with an accuracy above 91%. Likewise, GRU outperforms LSTM on every metric in both sets, with an accuracy above 81%.

Table 4.

Evaluation metrics.

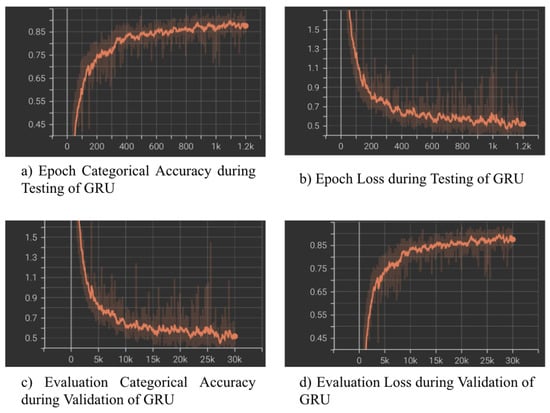

Figures 5 and 6 compare the accuracy and loss curves of the LSTM and GRU models during testing and validation. The GRU curves are smoother than the LSTM curves, which suggests that, for this problem, the GRU model learns to recognize continuous sign language more stably than the LSTM model.

Figure 5.

LSTM’s categorical accuracy and epoch loss graphs.

Figure 6.

GRU’s categorical accuracy and epoch loss graphs.

4.4. Comparative Analysis

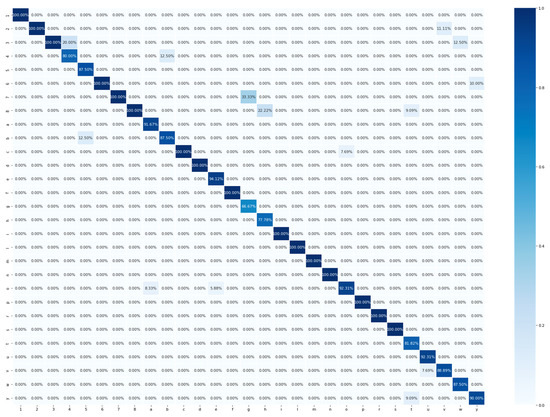

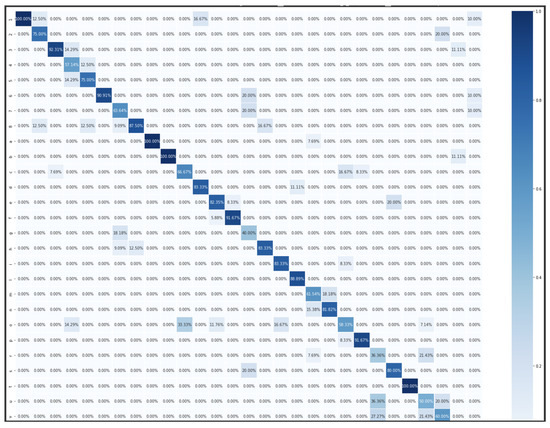

The confusion matrices of GBL and LSTM show more misclassifications than those of SVM and GRU (Appendix C). Furthermore, the categorical accuracy and loss curves of LSTM and GRU in testing and validation show that GRU trains more stably. Therefore, SVM and GRU are the more accurate models for the multiclass recognition of static and continuous MSL signs, respectively.

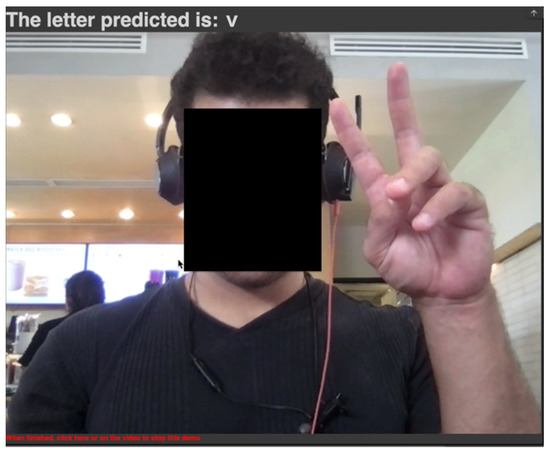

All models were stored in the same repository using the joblib library; the GRU and LSTM models were also saved manually in HDF5 (".h5") format. Furthermore, a demonstration using the models was implemented in Google Colab with JavaScript (see Figure 7), adapting the code from [52] to access a webcam in Google Colab and apply the trained models [49].
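For real-time use, a continuous-sign model trained on 30-frame sequences needs a rolling window over the live feature stream. The internals of the Colab/JavaScript demo are not described here, so the following is only a sketch of one common approach: keep the most recent 30 feature vectors in a `collections.deque` and classify them each time a new frame arrives. `predict` is a hypothetical callable (for instance, a wrapper around a trained GRU's softmax output), and the 0.8 confidence threshold is an assumed value.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Optional, Sequence


def stream_predictions(
    frame_features: Iterable[Sequence[float]],
    predict: Callable[[List[Sequence[float]]], Sequence[float]],
    labels: Sequence[str],
    window: int = 30,
    threshold: float = 0.8,
) -> List[Optional[str]]:
    """Classify the most recent `window` frames each time a new frame arrives."""
    buffer: Deque[Sequence[float]] = deque(maxlen=window)  # oldest frame drops out
    outputs: List[Optional[str]] = []
    for feats in frame_features:
        buffer.append(feats)
        if len(buffer) < window:
            outputs.append(None)            # not enough context yet
            continue
        probs = predict(list(buffer))       # class probabilities for this window
        best = max(range(len(probs)), key=probs.__getitem__)
        # Report a label only when the model is confident enough.
        outputs.append(labels[best] if probs[best] >= threshold else None)
    return outputs
```

Matching the window to the 30-frame training length means the model always sees inputs of the shape it was trained on; the threshold suppresses low-confidence outputs while a sign is still in progress.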

Figure 7.

Example of letter “V” recognition.

5. Discussion

MediaPipe supported us in gathering the essential landmarks of the hands with great accuracy as shown in Table 2 and in performing real-time detection. In addition, it could give us the whole body and face landmarks if we desired. Nevertheless, the current research was only focused on MSL’s dactylology and the first ten digits, so we focused on only what was necessary. Furthermore, we think other dense pose algorithms and different sensors will be developed that could replace MediaPipe and the webcam if the results surpass current state-of-the-art metrics.

It is important to note that although we trained our models on high-quality iPhone 11 footage, MediaPipe [53] can still extract landmarks from lower-resolution inputs (224 × 224 pixels or higher). Additionally, because complete scenes from the hip to the head, including both hands, were recorded, the models were trained on these realistic scenarios. Under these conditions, SVM and GRU outperformed GBL and LSTM, respectively, on every evaluation metric. For static signs, SVM achieved excellent accuracy (above 90%) even with few samples (fewer than 100 per sign). In line with other studies [35], this result shows that SVM has potential for recognizing static signs when features (landmarks, in this case) are extracted first. Moreover, since GBL is less accurate than SVM, it may need more samples to produce a more accurate model and avoid overfitting and underfitting.

On the other hand, for continuous signs, we did not standardize or normalize the video duration per sign class during recording, since the signs were recorded at each participant's own rhythm; this adds natural variability that can act as data augmentation and better simulates real-world use. Still, further summarization, standardization, or normalization of the data or features may be needed when the number of samples increases. Angle and distance features could also be added for training, as in [33]. However, any additional processing must keep latency low for real-time use.
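As an illustration of such features, the sketch below derives wrist-to-fingertip distances and finger-joint angles from one hand's 21 MediaPipe landmarks. It uses MediaPipe's hand landmark numbering (0 = wrist; 4, 8, 12, 16, 20 = fingertips; 5–7, 9–11, 13–15, 17–19 = MCP, PIP, and DIP joints of the index to pinky fingers), but the particular choice of distances and angles is ours and is not the feature set used in [33].

```python
import numpy as np

WRIST = 0
FINGERTIPS = (4, 8, 12, 16, 20)   # thumb, index, middle, ring, pinky tips
# (a, b, c): angle at joint b between segments b->a and b->c (PIP joints, index..pinky)
JOINT_TRIPLES = ((5, 6, 7), (9, 10, 11), (13, 14, 15), (17, 18, 19))


def distance_angle_features(hand) -> np.ndarray:
    """Wrist-to-fingertip distances plus PIP joint angles (radians) for a (21, 3) hand."""
    hand = np.asarray(hand, dtype=float)
    dists = np.linalg.norm(hand[list(FINGERTIPS)] - hand[WRIST], axis=1)
    angles = []
    for a, b, c in JOINT_TRIPLES:
        u, v = hand[a] - hand[b], hand[c] - hand[b]
        nu, nv = np.linalg.norm(u), np.linalg.norm(v)
        if nu == 0 or nv == 0:
            angles.append(0.0)            # degenerate joint, e.g. a zero-filled hand
            continue
        cos = np.dot(u, v) / (nu * nv)
        angles.append(float(np.arccos(np.clip(cos, -1.0, 1.0))))
    return np.concatenate([dists, angles])  # 5 distances + 4 angles
```

Because distances and angles change little with the hand's position in the frame, features of this kind may complement the raw coordinates; the cost is a few extra vector operations per frame, which matters for the latency concern raised above.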

When the models were used in real time, we observed that, because they were trained on recordings in which both hands were visible but one hand was almost always resting around the waist, recognition became inaccurate when only one hand was visible and the other was missing, or when both hands appeared and the second hand was not at rest. These cases indicate that more samples for data augmentation, as well as additional features, will be needed for training: roughly four to five times more data, compared with another MSL study [26].

Compared to existing MSL recognition systems, our method achieves competitive results while relying solely on a standard camera and MediaPipe for landmark extraction. For instance, ref. [26] reported 99% accuracy for 29 letters and 10 digits but required Kinect. By contrast, our SVM-based model reaches 92% accuracy for static signs, and our GRU-based model achieves 86% for continuous signs in a more cost-effective setup. This indicates that affordable camera-only solutions can also yield robust recognition performance, especially when paired with an appropriate feature extractor such as MediaPipe.

Furthermore, our dataset is recorded in a realistic full-frame scenario—from the waist to the head—without cropping the hands or using specialized backgrounds or clothing. This approach is distinctive compared to previous MSL research that often relied on carefully cropped hand images [31,32] or depth sensors [24,25]. By offering an open-source database under real-world conditions, our study moves the field closer to practical, large-scale deployment in public systems.

A deeper statistical comparison (e.g., confidence intervals) could provide further insight into whether the differences between the tested methods are significant. Due to time constraints and the current dataset size, we focused on conventional multi-class classification metrics (accuracy, precision, recall, and F1-score), presented in Table 4; these are also the metrics reported in the other studies in our literature review. In future work, when larger datasets become available, we aim to conduct a more comprehensive statistical analysis to assess the robustness and significance of performance differences.
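For reference, the four metrics named above and one simple confidence interval can be computed directly from a confusion matrix. This is a sketch, not the procedure behind Table 4: it assumes macro averaging (the unweighted mean of per-class scores, with macro F1 taken as the mean of per-class F1, which matches scikit-learn's `average="macro"`), and it uses a Wilson score interval for accuracy, which treats every test sample as independent. Because several clips come from the same participant, such an interval would likely be optimistic unless the test set is split by subject.

```python
import math
from typing import Dict, List, Sequence, Tuple

Matrix = Sequence[Sequence[int]]  # cm[i][j]: samples of true class i predicted as class j


def per_class_scores(cm: Matrix) -> List[Dict[str, float]]:
    """Precision, recall, and F1 for each class (0.0 when a denominator is zero)."""
    k = len(cm)
    scores = []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                        # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append({"precision": precision, "recall": recall, "f1": f1})
    return scores


def macro_average(scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Unweighted mean over classes, so rare classes count as much as common ones."""
    return {key: sum(s[key] for s in scores) / len(scores) for key in ("precision", "recall", "f1")}


def accuracy_wilson(cm: Matrix, z: float = 1.96) -> Tuple[float, float, float]:
    """Accuracy and its Wilson score interval (z = 1.96 gives roughly 95% coverage)."""
    n = sum(sum(row) for row in cm)
    if n == 0:
        raise ValueError("empty confusion matrix")
    correct = sum(cm[i][i] for i in range(len(cm)))
    p = correct / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return p, centre - half, centre + half


if __name__ == "__main__":
    cm = [[8, 2], [1, 9]]  # toy 2-class matrix, not data from this study
    print(macro_average(per_class_scores(cm)))
    acc, low, high = accuracy_wilson(cm)
    print(f"accuracy={acc:.3f}, 95% CI=({low:.3f}, {high:.3f})")
```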

In addition, future research will explore hybrid CNN architectures, such as the MediaPipe + customized CNN architecture proposed by [28] for static signs, or TARNet, the CNN-LSTM architecture proposed by [12], which could enhance continuous sign recognition. The MediaPipe + customized CNN model achieved 99.93% accuracy on training data, 98.43% on validation data, and 83.63% on test data, for static signs only. TARNet, in turn, demonstrated the ability to capture both spatial and temporal dynamics, achieving 95–99% accuracy on trajectory-based gesture datasets. Both results suggest that similar approaches could improve our system’s accuracy and real-time responsiveness.

The current study did not address word recognition or sentence translation because of time constraints and the lack of open-source MSL datasets. As MSL research develops, a dataset similar to PHOENIX14 [54,55] for German Sign Language, covering different conversational topics and contexts, should be created.

6. Conclusions

In conclusion, this study advances Mexican Sign Language (MSL) recognition by introducing an open-source MSL database, covering the 29 dactylology characters and the first ten digits, and by developing a low-cost, real-time recognition system that uses MediaPipe for hand feature extraction together with SVM and GRU models. These contributions have the potential to enhance inclusive communication systems for individuals with hearing impairments in Mexico.

Future work will focus on improving the generalizability of our approach through subject-wise data splitting, validating the system under low-resolution conditions, and conducting more comprehensive statistical analyses. These steps are expected to further refine the system and expand its practical applicability in real-world scenarios.
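Subject-wise splitting means that every clip from a given participant goes entirely to either training or testing, so the test score reflects performance on unseen signers. A minimal stdlib sketch follows; it assumes each sample carries a participant identifier (the raw-video records list recordings from Person #1 to Person #11 [41–44]), and the function names are hypothetical. scikit-learn's `GroupShuffleSplit` and `LeaveOneGroupOut` provide the same behavior in an existing library.

```python
import random
from typing import Hashable, Iterator, List, Sequence, Tuple


def subject_wise_split(
    subject_ids: Sequence[Hashable], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Return (train_idx, test_idx) so that no subject appears in both sets."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must lie strictly between 0 and 1")
    subjects = sorted(set(subject_ids), key=str)  # deterministic order before shuffling
    if len(subjects) < 2:
        raise ValueError("at least two subjects are needed")
    random.Random(seed).shuffle(subjects)
    # At least one test subject, and at least one subject left for training.
    n_test = min(max(1, round(len(subjects) * test_fraction)), len(subjects) - 1)
    test_subjects = set(subjects[:n_test])
    train_idx = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(subject_ids) if s in test_subjects]
    return train_idx, test_idx


def leave_one_subject_out(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_idx, test_idx), one fold per subject."""
    for held_out in sorted(set(subject_ids), key=str):
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        test_idx = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train_idx, test_idx


if __name__ == "__main__":
    ids = [f"person{p}" for p in range(1, 12) for _ in range(3)]  # 11 subjects, 3 toy clips each
    train, test = subject_wise_split(ids, test_fraction=0.2, seed=42)
    assert not {ids[i] for i in train} & {ids[i] for i in test}
    print(len(train), len(test))  # 27 6
    print(sum(1 for _ in leave_one_subject_out(ids)))  # 11
```

With only 11 participants, leave-one-subject-out (11 folds) would give a less noisy estimate than a single held-out split, at the cost of training 11 models.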

Author Contributions

M.R.: Conceptualization, Methodology, Software, Validation, Formal Analysis, Investigation, Resources, Data Curation, Writing—Original Draft, Writing—Review and Editing, Visualization, Project Administration. O.O.: Conceptualization, Methodology, Investigation, Resources, Writing—Review and Editing, Visualization, Supervision. A.B.: Conceptualization, Methodology, Investigation, Resources, Writing—Review and Editing, Visualization, Supervision. N.L.: Conceptualization, Methodology, Investigation, Resources, Writing—Review and Editing, Visualization, Supervision. R.T.: Methodology, Investigation, Writing—Review and Editing, Visualization, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank Tecnológico de Monterrey for the financial support provided through the ‘Challenge-Based Research Funding Program 2023’, Project ID #IJXT070-23EG99001, titled ‘Complex Thinking Education for All (CTE4A): A Digital Hub and School for Lifelong Learners’.

Data Availability Statement

The data that support the findings of this study are openly available in Mendeley Data, a public repository that issues DOIs for datasets, at the following DOIs: 10.17632/48xybsmvpv.1 [41], 10.17632/jp4ymf2vjw.1 [42], 10.17632/69fmdb25xm.1 [43], 10.17632/3dzn5rstwx.1 [44], 10.17632/5s4mt7xrd9.1 [45], 10.17632/67htnzmwbb.1 [46], and 10.17632/hmsc33hmkz.1 [47].

Acknowledgments

The first author would like to thank the Secretaría de Ciencia, Humanidades, Tecnología e Innovación (SECIHTI), formerly the National Council of Humanities, Science, and Technology of Mexico (Consejo Nacional de Humanidades, Ciencias y Tecnologías, CONAHCyT), for funding his studies with a full-coverage scholarship. We also thank the Unit for Educational Inclusion and Attention to Diversity of the UAEM (Universidad Autónoma del Estado de Morelos), the Faculty of Human Communication of the UAEM, and the Faculty of Mathematics of the UADY (Universidad Autónoma de Yucatán) for their support. We thank Eliseo Guajardo Ramo, from the Unit for Educational Inclusion and Attention to Diversity of UAEM (Unidad para la Inclusión Educativa y Atención a la Diversidad); Alma Janeth Moreno Aguirre, from the Faculty of Human Communication of UAEM (Facultad de Comunicación Humana); Viridiana Aydee León Hernández, from the Faculty of Chemical Sciences and Engineering of UAEM (Facultad de Ciencias Químicas e Ingeniería, FCQeI); and María del Carmen Torres Salazar, from the FCQeI of UAEM (Secretaría de Investigación de la FCQeI), for their invaluable guidance and support throughout this study. Finally, we thank the following people for generating samples and performing data annotation: Patricia Salazar Díaz, María Fernanda Gutiérrez Álvarez, Luz Patricia Capistrán Pérez, Kevin Manuel Rivera Ramirez, Angel Sanyey Diaz Ceballos, Ariana Rodriguez Rojas, Irasema Rodriguez Rojas, Maria Guadalupe Sarai Guillen Garcia, Mayra Vianey Huerta, Moses Martínez Martínez, Noreli Rodriguez Rojas, Ahinoam Concha Olascuaga, Roberto Carlos Valle Reyes, Peralta Miraselva Fernando, and Jaimes Cortez Cristian Giovanni.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Labeled Dataset

Table A1. Labeled dataset and the number of samples for static sign language.

| Character/Number | Label | # of Samples |
|---|---|---|
| 1 | 0 | 92 |
| 2 | 1 | 92 |
| 3 | 2 | 92 |
| 4 | 3 | 92 |
| 5 | 4 | 92 |
| 6 | 5 | 92 |
| 7 | 6 | 92 |
| 8 | 7 | 92 |
| a | 8 | 94 |
| b | 9 | 94 |
| c | 10 | 94 |
| d | 11 | 94 |
| e | 12 | 94 |
| f | 13 | 94 |
| g | 14 | 94 |
| h | 15 | 94 |
| i | 16 | 93 |
| l | 17 | 93 |
| m | 18 | 93 |
| n | 19 | 93 |
| o | 20 | 93 |
| p | 21 | 93 |
| r | 22 | 93 |
| s | 23 | 93 |
| t | 24 | 73 |
| u | 25 | 92 |
| v | 26 | 93 |
| w | 27 | 93 |
| y | 28 | 93 |
| Total | - | 2696 |

Table A2. Labeled dataset and the number of samples for continuous sign language.

| Character/Number | Label | # of Samples |
|---|---|---|
| 9 | 0 | 100 |
| 10 | 1 | 100 |
| j | 2 | 102 |
| k | 3 | 102 |
| ll | 4 | 86 |
| ñ | 5 | 101 |
| q | 6 | 88 |
| rr | 7 | 101 |
| x | 8 | 101 |
| z | 9 | 100 |
| Total | - | 981 |

Appendix B. Detailed Architecture of the Models for Continuous Sign Language

Figure A1. LSTM model architecture.

Figure A2. GRU model architecture.

Appendix C. Confusion Matrices and Categorical Accuracy and Loss Curves

Figure A3. Confusion matrix of the static signs of MSL with the SVM model.

Figure A4. Confusion matrix of the static signs of MSL with the GBL model.

Figure A5. Confusion matrix of the continuous signs of MSL with the LSTM model.

Figure A6. Confusion matrix of the continuous signs of MSL with the GRU model.

References

- World Health Organization. Deafness and Hearing Loss; World Health Organization: Geneva, Switzerland, 2023; Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 27 February 2023).

- Mariano, M. Enfrentan Personas con Discapacidad Auditiva retos en Escenario Actual. 2020. Available online: https://conecta.tec.mx/es/noticias/monterrey/educacion/enfrentan-personas-con-discapacidad-auditiva-retos-en-escenario-actual (accessed on 5 November 2021).

- Department of Economic and Social Affairs of the U.N. International Day of Sign Languages, 23 September 2019. 2019. Available online: https://www.un.org/development/desa/disabilities/news/news/sign-languages.html (accessed on 15 August 2021).

- United Nations. The 17 Goals. 2016. Available online: https://sdgs.un.org/goals (accessed on 19 November 2022).

- Consejo para Prevenir y Eliminar la Discriminación en la Ciudad de México. Lenguas de Señas son Fundamentales para el Desarrollo de las Personas Sordas y el Acceso a sus Derechos. 2019. Available online: https://copred.cdmx.gob.mx/comunicacion/nota/lenguas-de-senas-son-fundamentales-para-el-desarrollo-de-las-personas-sordas-y-el-acceso-sus-derechos (accessed on 7 August 2021).

- Cámara de Diputados del H. Congreso de la Unión. Ley General de las Personas con Discapacidad. General Secretary; DOF 30-05-2011. 2008. Available online: https://www.diputados.gob.mx/LeyesBiblio/abro/lgpd/LGPD_abro.pdf (accessed on 20 October 2021).

- Serafín, M.E.; González, R. Manos con voz. Diccionario de la Lengua de Señas Mexicana; Consejo Nacional para Prevenir la Discriminación (CONAPRED) & Libre Acceso, A.C. Primera Edición, 2011; ISBN 978-607-7514-35-0. Available online: https://www.conapred.org.mx/publicaciones/manos-con-voz-diccionario-de-lengua-de-senas-mexicana/ (accessed on 18 January 2022).

- Ahmed, M.A.; Zaidan, B.B.; Zaidan, A.A.; Salih, M.M.; bin Lakulu, M.M. A Review on Systems-Based Sensory Gloves for Sign Language Recognition State of the Art between 2007 and 2017. Sensors 2018, 18, 2208.

- Guzsvinecz, T.; Szucs, V.; Sik-Lanyi, C. Suitability of the Kinect Sensor and Leap Motion Controller—A Literature Review. Sensors 2019, 19, 1072.

- Kim, E.J.; Byrne, B.; Parish, S.L. Deaf people and economic well-being: Findings from the Life Opportunities Survey. Disabil. Soc. 2018, 33, 374–391.

- Adeyanju, I.A.; Bello, O.O.; Adegboye, M.A. Machine learning methods for sign language recognition: A critical review and analysis. Intell. Syst. Appl. 2021, 12, 200056.

- Alam Md, S.; Kwon, K.-C.; Md Imtiaz, S.; Hossain, M.B.; Kang, B.-G.; Kim, N. TARNet: An Efficient and Lightweight Trajectory-Based Air-Writing Recognition Model Using a CNN and LSTM Network. In Human Behavior and Emerging Technologies; Yan, Z., Ed.; Wiley: Hoboken, NJ, USA, 2022; Volume 2022, pp. 1–13.

- Mohammedali, A.H.; Abbas, H.H.; Shahadi, H.I. Real-time sign language recognition system. Int. J. Health Sci. 2022, 6, 10384–10407.

- Mujahid, A.; Awan, M.J.; Yasin, A.; Mohammed, M.A.; Damaševičius, R.; Maskeliūnas, R.; Abdulkareem, K.H. Real-Time Hand Gesture Recognition Based on Deep Learning YOLOv3 Model. Appl. Sci. 2021, 11, 4164.

- Liu, P.; Li, X.; Cui, H.; Li, S.; Yuan, Y. Hand Gesture Recognition Based on Single-Shot Multibox Detector Deep Learning. Mob. Inf. Syst. 2019, 2019, 3410348.

- Bilgin, M.; Mutludogan, K. American Sign Language Character Recognition with Capsule Networks. In Proceedings of the 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 11–13 October 2019; pp. 1–6.

- Lum, K.Y.; Goh, Y.H.; Lee, Y.B. American Sign Language Recognition Based on MobileNetV2. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 481–488.

- Rathi, D. Optimization of Transfer Learning for Sign Language Recognition Targeting Mobile Platform. arXiv 2018, arXiv:1805.06618.

- Bantupalli, K.; Xie, Y. American Sign Language Recognition Using Machine Learning and Computer Vision. Master’s Thesis, Kennesaw State University, Kennesaw, GA, USA, 2019. Available online: https://digitalcommons.kennesaw.edu/cs_etd/21 (accessed on 22 October 2021).

- Elhagry, A.; Elrayes, R.G. Egyptian Sign Language Recognition Using CNN and LSTM. arXiv 2021, arXiv:2107.13647.

- Li, D.; Opazo, C.R.; Yu, X.; Li, H. Word-level Deep Sign Language Recognition from Video: A New Large-scale Dataset and Methods Comparison. arXiv 2020, arXiv:1910.11006.

- Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense Human Pose Estimation In The Wild. arXiv 2018, arXiv:1802.00434.

- Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.-L.; Grundmann, M. MediaPipe Hands: On-device Real-time Hand Tracking. arXiv 2020, arXiv:2006.10214.

- Jimenez, J.; Martin, A.; Uc, V.; Espinosa, A. Mexican Sign Language Alphanumerical Gestures Recognition using 3D Haar-like Features. IEEE Lat. Am. Trans. 2017, 15, 2000–2005.

- Rios-Figueroa, H.V.; Sánchez-García, A.J.; Sosa-Jiménez, C.O.; Solís-González-Cosío, A.L. Use of Spherical and Cartesian Features for Learning and Recognition of the Static Mexican Sign Language Alphabet. Mathematics 2022, 10, 2904.

- Sosa-Jiménez, C.O.; Rios-Figueroa, H.V.; Solís-González-Cosio, A.L. A Prototype for Mexican Sign Language Recognition and Synthesis in Support of a Primary Care Physician. IEEE Access 2022, 10, 127620–127635.

- Mejía-Peréz, K.; Córdova-Esparza, D.-M.; Terven, J.; Herrera-Navarro, A.-M.; García-Ramírez, T.; Ramírez-Pedraza, A. Automatic Recognition of Mexican Sign Language Using a Depth Camera and Recurrent Neural Networks. Appl. Sci. 2022, 12, 5523.

- Sánchez-Vicinaiz, T.J.; Camacho-Pérez, E.; Castillo-Atoche, A.A.; Cruz-Fernandez, M.; García-Martínez, J.R.; Rodríguez-Reséndiz, J. MediaPipe Frame and Convolutional Neural Networks-Based Fingerspelling Detection in Mexican Sign Language. Technologies 2024, 12, 124.

- Koishybay, K.; Mukushev, M.; Sandygulova, A. Continuous Sign Language Recognition with Iterative Spatiotemporal Fine-tuning. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 10211–10218.

- Geng, J.; Huang, D.; De la Torre, F. DensePose From WiFi. arXiv 2023, arXiv:2301.00250.

- Solís, F.; Toxqui, C.; Martínez, D. Mexican Sign Language Recognition Using Jacobi-Fourier Moments. Engineering 2015, 7, 700–705.

- Solís, F.; Martínez, D.; Espinoza, O. Automatic Mexican Sign Language Recognition Using Normalized Moments and Artificial Neural Networks. Engineering 2016, 8, 733–740.

- Shin, J.; Matsuoka, A.; Hasan Md, A.M.; Srizon, A.Y. American Sign Language Alphabet Recognition by Extracting Feature from Hand Pose Estimation. Sensors 2021, 21, 5856.

- Indriani Harris, M.; Agoes, A.S. Applying Hand Gesture Recognition for User Guide Application Using MediaPipe. In Proceedings of the 2nd International Seminar of Science and Applied Technology (ISSAT 2021), Bandung, Indonesia, 12 October 2021.

- Halder, A.; Tayade, A. Real-time Vernacular Sign Language Recognition using MediaPipe and Machine Learning. Int. J. Res. Publ. Rev. 2021, 2, 9–17.

- Espejel-Cabrera, J.; Cervantes, J.; García-Lamont, F.; Ruiz Castilla, J.S.; D Jalili, L. Mexican sign language segmentation using color based neuronal networks to detect the individual skin color. Expert Syst. Appl. 2021, 183, 115295.

- Sundar, B.; Bagyammal, T. American Sign Language Recognition for Alphabets Using MediaPipe and LSTM. Procedia Comput. Sci. 2022, 215, 642–651.

- Chen, R.-C.; Manongga, W.E.; Dewi, C. Recursive Feature Elimination for Improving Learning Points on Hand-Sign Recognition. Future Internet 2022, 14, 352.

- Samaan, G.H.; Wadie, A.R.; Attia, A.K.; Asaad, A.M.; Kamel, A.E.; Slim, S.O.; Abdallah, M.S.; Cho, Y.-I. MediaPipe’s Landmarks with RNN for Dynamic Sign Language Recognition. Electronics 2022, 11, 3228.

- Subramanian, B.; Olimov, B.; Naik, S.M.; Kim, S.; Park, K.-H.; Kim, J. An integrated mediapipe-optimized GRU model for Indian sign language recognition. Sci. Rep. 2022, 12, 11964.

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Raw Videos. From Person #1 to #3 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/48xybsmvpv/1 (accessed on 30 May 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Raw Videos. From Person #4 to #7 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/jp4ymf2vjw/1 (accessed on 30 May 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Raw Videos. From Person #8 to #10 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/69fmdb25xm/1 (accessed on 1 June 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Raw Videos. Person #11 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/3dzn5rstwx/1 (accessed on 1 June 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Labeled Images and Videos. From Person #1 to #5 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/5s4mt7xrd9/1 (accessed on 30 May 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Labeled Images and Videos. From Person #6 to #11 [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/67htnzmwbb/1 (accessed on 30 May 2023).

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. Mexican Sign Language’s Dactylology and Ten First Numbers—Extracted Features and Models [Data Set]. Mendeley. V1. 2023. Available online: https://data.mendeley.com/datasets/hmsc33hmkz/1 (accessed on 30 May 2023).

- Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:2008.05756.

- Rodriguez, M.; Outmane, O.; Bassam, A.; Noureddine, L. TrainingAndExtractionForMSLRecognition. Github. 2023. Available online: https://github.com/EmmanuelRTM/TrainingAndExtractionForMSLRecognition (accessed on 13 July 2023).

- Renotte, N. ActionDetectionforSignLanguage. GitHub. 2020. Available online: https://github.com/nicknochnack/ActionDetectionforSignLanguage (accessed on 11 May 2022).

- Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, 2nd ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2019; pp. 335–338.

- TheAIGuysCode. Colab-webcam. GitHub. 2020. Available online: https://github.com/theAIGuysCode/colab-webcam (accessed on 5 December 2022).

- Google. Hand Landmarks Detection Guide. 2024. Available online: https://ai.google.dev/edge/mediapipe/solutions/vision/hand_landmarker (accessed on 26 December 2024).

- Camgoz, N.C.; Koller, O.; Hadfield, S.; Bowden, R. Sign Language Transformers: Joint End-to-end Sign Language Recognition and Translation. arXiv 2020, arXiv:2003.13830.

- Min, Y.; Hao, A.; Chai, X.; Chen, X. Visual Alignment Constraint for Continuous Sign Language Recognition. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 11522–11531.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).