Abstract

In dynamic concurrent data structures, memory management poses a significant challenge due to the diverse types of memory access and operations. Timestamps are widely used in concurrent algorithms, but existing safe memory reclamation algorithms that utilize timestamps often fail to achieve a balance among performance, applicability, and robustness. With the development of the CPU timestamp counter, using it as the timestamp has proven to be efficient. Based on this, we introduce TSC-SIG in this paper to guarantee safe memory reclamation and successfully avoid use-after-free errors. TSC-SIG effectively reduces synchronization overhead, thereby improving the performance of concurrent operations. It leverages the POSIX signal mechanism to restrict the memory footprint. Furthermore, TSC-SIG can be integrated into various data structures. We conducted extensive experiments on diverse data structures and workloads, and the results clearly demonstrate the excellence of TSC-SIG in terms of performance, applicability, and robustness. TSC-SIG shows remarkable performance in read-dominated workloads. As related techniques continue to evolve, TSC-SIG exhibits significant development and application potential.

1. Introduction

In order to achieve lock-freedom, modern concurrent data structures often allow multiple readers and updaters to access a shared memory node concurrently without acquiring locks. This makes the synchronization of the node’s state among all threads highly complex. A thread reclaiming a node may not be aware that other threads are accessing or will access it. Thus, the safe memory reclamation (SMR) problem [1,2,3,4] poses a challenging issue in concurrent data structures.

For high-level managed languages, automatic garbage collection is capable of handling the memory reclamation issue in concurrent data structures. In unmanaged languages, such as C/C++, the reclamation of shared memory nodes within lock-free concurrent data structures typically requires manual handling. For manual methods, the reclamation of a node is divided into two stages. First, the node is retired by unlinking it from the relevant data structure. Then, the shared memory block of the node is freed once it is determined to be safely reclaimable. This indicates that memory reclamation algorithms require the use of synchronization mechanisms to enable threads to determine whether a retired node can be safely reclaimed.

The most intuitive scheme for implementing the synchronization mechanism within memory reclamation algorithms is to reserve the nodes that a thread wishes to use by employing shared variables to record some information associated with those nodes. Hazard Pointer (HP) [4,5,6] records the pointer to the node in a shared list before using it. However, this implies that every access to a shared memory node requires issuing a memory fence, which results in significant performance loss. Additionally, for concurrent data structures in which operations manipulate nodes that have not been accessed previously, HP is incompatible [7].

Epoch-Based Reclamation (EBR) algorithms [8,9,10] utilize a global epoch counter implemented as a shared atomic variable. When a thread performs a shared memory operation, it reads the current epoch value and announces it to other threads by recording this value in a shared list. The global epoch counter is incremented only after all threads have announced the same epoch value. This allows EBR to mark threads’ shared memory operations with an epoch. Once all threads have announced the current epoch, nodes retired by operations marked with the previous epoch can be safely reclaimed. These methods are faster than HP because they issue memory fences once per operation rather than once per pointer. However, a single stalled thread can prevent the global epoch counter from advancing, in which case retired nodes are never reclaimed.

A variety of hybrid methods have been proposed by combining EBR and HP. Hazard Eras (HE) [11] maintains a global epoch counter while adopting a reservation mechanism analogous to the HP approach. Rather than protecting specific memory pointers, HE reserves the instantaneous value of the global epoch counter prior to each node access. To enable safe memory reclamation, HE augments each node with two pieces of metadata to record its allocation epoch and retirement epoch. The reclamation safety is verified by ensuring no reserved epoch values exist between these two recorded epochs. HE’s key advantage lies in its capability to advance global epochs even with stalled threads present. Nevertheless, HE inherits HP’s applicability limitations stemming from their analogous reservation mechanisms.

In Interval-Based Reclamation (IBR) [12], the thread reserves a continuous epoch interval by reserving two epochs, transitioning from discrete epochs in HE. The global epoch is recorded as the begin epoch at the start of an operation and updated to the latest epoch upon accessing shared memory. Two epochs are utilized to cover the active intervals of threads. If the active intervals of all threads do not overlap with the lifetime of a retired node, the node can be safely reclaimed. This method enhances both applicability and performance but requires a larger memory footprint compared with HE.

Several methods have been proposed to enable thread awareness of reclamation through the use of POSIX signals. When a thread intends to perform reclamation, it sends a signal to other threads. ThreadScan (TS) [13], a variant of HP, requires a thread to reserve a pointer after receiving a signal. While TS is straightforward to implement, its applicability remains limited. Neutralization-Based Reclamation (NBR) [7] and DEBRA+ [2] leverage the non-local goto feature of the C/C++ language. These methods partition the operation into multiple phases. Upon receiving a neutralization signal, a thread determines whether to revert to a previously set checkpoint based on its current phase. DEBRA+, which builds on EBR, addresses the issue of stalled threads by rolling back to a checkpoint upon receiving a signal during any shared memory operation. However, DEBRA+ mandates the execution of specific recovery code when a signal is received during the write phase. In contrast, NBR performs rollback exclusively when the thread is in the read phase and maintains reserved pointers prior to entering write phases.

In this study, inspired by NBR and IBR, we propose a novel method to address the SMR problem. Our approach, named TSC-SIG, which is short for the integration of the CPU timestamp counter (TSC) [14] and POSIX signals, combines timestamp reservations with the neutralization signal mechanism. In TSC-SIG, we employ the value of the TSC as the timestamp, instead of the shared atomic variable employed in previous SMR methods. The TSC possesses the properties of monotonic increment, low overhead, and synchronization across multiple processes [15,16]. By leveraging the TSC, TSC-SIG avoids the performance degradation that can arise from high contention on the shared atomic variable. In the neutralization signal mechanism, the reclaimer sends a neutralization signal before reclaiming. After a thread receives a signal while reading, it executes a rollback to the established checkpoint. Furthermore, the thread completes the reservation before initiating the write phase. This mechanism enables TSC-SIG to circumvent the need for making reservations for each node access and limit memory usage by stalled threads.

TSC-SIG exhibits outstanding performance and broad applicability. Compared with HP, EBR, and most hybrid methods, TSC-SIG exhibits significant performance advantages. Additionally, both DEBRA+ and NBR employ a reservation mechanism based on Hazard Pointers. We propose to eliminate this mechanism due to its inherent applicability limitations. Furthermore, we aim to explore the integration of TSC-SIG into the increasingly prevalent class of concurrent data structures that utilize hardware timestamps.

The paper is organized as follows: Section 2 discusses related work. Section 3 introduces the model and assumptions, provides a detailed description of TSC-SIG and its implementation, and formally proves its safety and robustness. Section 4 demonstrates performance experiments. Finally, Section 5 concludes the paper with a summary of our contributions and future research directions.

2. Related Work

There are numerous memory reclamation algorithms that address the safe memory reclamation (SMR) problem through various approaches. We present a simple classification of these algorithms based on whether the thread executing shared memory operations is aware of reclamation.

2.1. Unawareness of Reclamation

The thread responsible for reclaiming shared memory nodes is unaware of whether other threads hold pointers to these nodes. To avoid a segmentation fault, this type of algorithm implements synchronization to ensure that threads coordinate before accessing shared memory nodes, protecting them from reclamation by other threads.

Hazard Pointers (HP) [4,5,6] is a typical memory reclamation algorithm widely used in concurrent data structures. The principle of HP is simple and easy to understand, making it suitable for explaining how threads coordinate on shared memory nodes without being aware of reclamation by others. In HP, a thread reserves a pointer by recording its value in a shared list before using it. The reclaimer can determine if a node is safely reclaimable by checking whether its address is present in the HP lists of all threads. For synchronization across all threads, each reservation requires the issuance of a memory fence, which significantly impacts performance. Additionally, the reserved pointer’s accessibility must be verified because other threads may modify the data structure during the reservation process. If validation fails, the thread must retry reserving the pointer.
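
The reserve-then-validate loop described above can be sketched as follows. This is a minimal illustration rather than the implementation from [4,5,6]: the names `Node`, `hazard_slot`, `protect`, `clear`, and `is_protected`, the fixed thread count, and the single slot per thread are all assumptions made for the example.

```cpp
#include <atomic>
#include <cassert>

struct Node { int key; };

constexpr int kMaxThreads = 4;                  // hypothetical fixed thread count
std::atomic<Node*> hazard_slot[kMaxThreads];    // one shared slot per thread, initially null

// Reserve the node currently referenced by `src` on behalf of thread `tid`.
Node* protect(int tid, const std::atomic<Node*>& src) {
    Node* p = src.load();
    for (;;) {
        hazard_slot[tid].store(p);              // sequentially consistent store: the costly fence
        Node* q = src.load();                   // validate that `src` still refers to p
        if (q == p) return p;                   // reservation is visible and still valid
        p = q;                                  // the structure changed; retry with the new pointer
    }
}

// Drop the reservation once the node is no longer used.
void clear(int tid) { hazard_slot[tid].store(nullptr); }

// Reclaimer side: a retired node may be freed only if no slot holds its address.
bool is_protected(const Node* n) {
    for (int t = 0; t < kMaxThreads; ++t)
        if (hazard_slot[t].load() == n) return true;
    return false;
}
```

The store inside the loop runs once per protected pointer, which is where the per-access fence cost discussed above comes from.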

HP faces challenges in scenarios where the number of accessed nodes is uncertain, such as in tree rotations [17] or when traversing a lazy linked list [18]. Additionally, in lock-free data structures [19], where nodes are logically deleted by marking them, HP cannot be directly applied because it cannot determine whether a node is unlinked [2]. Furthermore, HP is not applicable to many data structures [20,21] that employ optimistic traversal because Hazard Pointers only protects accessed nodes from reclamation. In such cases, a thread may traverse to the next node even if it has been detached, potentially leading to unsafe behavior. For instance, if thread A stalls after reserving node N, and thread B subsequently adds and deletes another node after node N, thread A could potentially face an unsafe situation when resuming and attempting to access the node it believed to be the next one (relative to N), as that node might have been freed.

Drop the Anchor [1] extends HP to reduce overhead, but it can only be applied to a linked list. HP++ [22] represents a more widely applicable variant of HP, though the issue of high overhead persists.

Epoch-Based Reclamation (EBR) algorithms [8,9,10] maintain a global epoch counter, and threads synchronize based on this counter. According to RCU [8], a thread is considered to be in a quiescent state if it does not hold any pointer referencing the shared memory node. At the beginning of its operations, a thread must announce the current global epoch, at which point it is clearly in a quiescent state. These announcements are made by reserving the value of the global epoch counter in a shared list. The global epoch counter is incremented only when all threads have announced the current global epoch. Each advance of the global epoch signifies that all threads have reached a quiescent state. In EBR, each thread maintains three retired bags, each tagged with an epoch value. Nodes retired after announcing epoch n are added to the retired bag tagged by n. Once all threads have announced epoch n + 1, the global epoch is advanced to n + 2, indicating that all threads have experienced a quiescent state and that all nodes in the retired bags tagged by n are safe for reclamation.
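
The three-bag scheme can be illustrated with a small sketch. It is a simplified model written for this explanation, not code from [8,9,10]: the names `global_epoch`, `announced`, `ThreadState`, `enter`, `retire`, and `try_advance` are hypothetical, nodes are plain `int` allocations, and a thread frees the bag tagged two epochs earlier at the moment it announces a new epoch.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <vector>

constexpr int kThreads = 2;                     // hypothetical fixed thread count
std::atomic<uint64_t> global_epoch{0};
std::atomic<uint64_t> announced[kThreads];      // epoch each thread last announced (starts at 0)

struct ThreadState {
    std::vector<int*> bag[3];                   // retired bags, indexed by epoch % 3
    std::size_t freed = 0;                      // bookkeeping for the example
};

// Called at the start of every operation, when the thread holds no pointers.
void enter(int tid, ThreadState& ts) {
    uint64_t e = global_epoch.load();
    if (announced[tid].load() != e) {
        // Slot (e + 1) % 3 equals (e - 2) % 3: nodes retired two epochs ago.
        auto& old = ts.bag[(e + 1) % 3];
        for (int* p : old) { delete p; ++ts.freed; }
        old.clear();
        announced[tid].store(e);
    }
}

// Place a retired node in the bag tagged with the thread's announced epoch.
void retire(int tid, ThreadState& ts, int* p) {
    ts.bag[announced[tid].load() % 3].push_back(p);
}

// The epoch advances only if every thread has announced the current one.
bool try_advance() {
    uint64_t e = global_epoch.load();
    for (int t = 0; t < kThreads; ++t)
        if (announced[t].load() != e) return false;
    return global_epoch.compare_exchange_strong(e, e + 1);
}
```

In this model, a thread that never calls `enter()` again keeps `try_advance()` returning false indefinitely, which is the stalled-thread weakness of EBR.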

EBR achieves excellent performance because each thread performs only one reservation per operation. However, the synchronization of the global epoch can be hindered by any stalled thread, leading to a significantly large memory footprint.

Hazard Eras (HE) [11] addresses the issue of excessive memory footprint in EBR caused by stalled threads by utilizing Hazard Pointers to discretely reserve epochs. In HE, each node is augmented with two extra variables to record its allocation epoch and retirement epoch, effectively representing its lifetime. The reclamation verification of a node is transformed into a process of checking all threads’ reserved epochs and the node’s recorded lifetime. Accessibility verification after reservation involves comparing the value of the global epoch counter after reservation with the reserved epoch. A key advantage of this approach is that it reduces issues related to memory fences caused by reservations. However, the frequency of global epoch advancement remains a critical factor that significantly impacts both performance and memory usage. Similar to HP, HE faces the same applicability limitations. Wait-Free Eras (WFE) [23] enhances HE by incorporating the helping pattern, thereby achieving wait-freedom guarantees and improved performance. However, its applicability remains limited.
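
The reclamation check in HE reduces to a comparison between a node's recorded lifetime and the reserved eras. A minimal sketch, assuming a hypothetical `Node` layout, a `can_reclaim` helper, and a sentinel `kNoEra` for empty reservation slots (none of these names come from [11]):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr uint64_t kNoEra = 0;   // hypothetical sentinel: the slot holds no reservation

struct Node {
    uint64_t birth_era;          // global epoch at allocation
    uint64_t retire_era;         // global epoch at retirement
};

// A node is reclaimable only if no reserved era lies within its lifetime.
bool can_reclaim(const Node& n, const std::vector<uint64_t>& reserved_eras) {
    for (uint64_t e : reserved_eras) {
        if (e == kNoEra) continue;
        if (n.birth_era <= e && e <= n.retire_era) return false;
    }
    return true;
}
```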

Interval-Based Reclamation (IBR) [12], similar to HE, presents a hybrid approach integrating HP and EBR. In IBR, a thread records the value of the global epoch as the start epoch. Prior to accessing a node, the thread updates the latest epoch to extend the scope of its reservation. The reclaimer determines whether a node can be safely reclaimed by comparing the node’s lifetime with the reservations of all threads. For a thread that moves slowly or progresses intermittently, its reservation interval may span an excessive number of epochs, resulting in increased memory overhead. Although IBR’s reservation method is capable of covering all traversed nodes and handling operations in which the number of manipulated nodes is indeterminate, similar to HP and HE, IBR still confronts obstacles when applied to data structures that adopt optimistic traversal strategies.

Another way used in SMR algorithms to implement synchronization among threads is to leverage reference counting. Traditional reference counting methods suffer from reference cycles when using weak pointers and incur significant overhead. CDRC [24] has demonstrated improved performance in read-heavy workloads, but it still imposes significant overhead in update-heavy scenarios. Hyaline (HY) [25] and Crystalline (CY) [26] use a novel approach to manage reference counts. They record the number of active threads when retiring a node. When a thread completes its operations, it must decrement the active threads counter of nodes that were retired during its active state. HY and CY also cannot be used with data structures in which threads optimistically traverse chains of unlinked records.

2.2. Awareness of Reclamation

Reclamation algorithms can enable threads to be aware of reclamation occurrences, either by detecting them or by receiving notifications, to reduce the overhead of synchronization.

Optimistic Access (OA) [27] permits a rollback to a safe point when a thread accesses reclaimed nodes. OA requires data structures to be in a normalized form [28], and Automatic Optimistic Access (AOA) [29] and FreeAccess (FA) [30] aim to eliminate this requirement. However, all of these algorithms support only optimistic reads; write operations are still applied conservatively, using HP protection to avoid writes to reclaimed shared memory nodes. Version-Based Reclamation (VBR) [31] maintains a global epoch counter and uses it to record threads’ active epoch scope and the lifetime of a node. This allows write operations in VBR to access reclaimed nodes in the manually managed node pools [32,33]. All Optimistic Access algorithms require the implementation of a user-level allocator to support accessing reclaimed nodes.

Some algorithms [2,7,13] employ POSIX signals. In these algorithms, a thread signals other threads before reclamation. The main differences among them lie in the signal handler and the type of reservations. ThreadScan (TS) [13] combines HP and POSIX signals. TS delays the reservation of pointers until a signal is received. Although TS reduces how often memory fences are issued and thereby improves performance, it still suffers from the applicability problem inherent in HP.

DEBRA+ [2], which is based on EBR, introduces POSIX signals to eliminate the impact of stalled threads. After receiving a signal, the thread executing shared memory operations must execute special recovery code to undo its modifications to the data structure. In this way, DEBRA+ forces a stalled thread in a non-quiescent state to return to the quiescent state. DEBRA+ requires recovery code to be written for each data structure and uses HP to reserve pointers used in the recovery process, which makes it difficult to apply.

Neutralization-Based Reclamation (NBR) [7] is similar to DEBRA+ in adding the rollback mechanism. If a thread receives a signal while performing read operations, it must restart the read from the checkpoint it set earlier. Unlike DEBRA+, NBR does not perform rollback when executing write operations. Before executing write operations, it reserves the pointers that will be used during writing to limit the memory usage of stalled threads.

3. Materials and Methods

3.1. System Model and Assumptions

We adopt the standard asynchronous shared memory model, as described in [7,34]. Access to the shared memory is mediated through atomic instructions supported by hardware. This set of operations encompasses atomic reads and writes, and the compare-and-swap (CAS) and fetch-and-add (FAA) instructions. Additionally, processors support the invariant CPU timestamp counter (TSC), and a thread can read the TSC’s value with a dedicated instruction. The data structure is composed of nodes that are accessed through an immutable entry point. Each node can exist in one of five states: allocated, reachable, retired, safe, or reclaimed. When a node is assigned shared memory, it is allocated. Upon being incorporated into the data structure, it becomes reachable, indicating that it can be reached from the entry point. Once it is removed from the data structure, it becomes retired. When no thread will access it again, it is deemed safe and thus eligible to be reclaimed.

Like NBR [7], the data structure to which TSC-SIG can be applied follows a similar template, where each operation should consist of a sequence of phases: preamble, read, reservation, and write. To put it simply, access to shared memory nodes must adhere to the read–reservation–write pattern. The thread is prohibited from making system calls during the read phase and must not perform any modification operations, such as writing to shared nodes or globals or modifying thread-local data structures. Before writing, the thread must make reservations. During the write phase, only the shared memory nodes protected by the previous reservations can be modified. Other parts of the operation that do not belong to the read–reservation–write sequence are part of the preamble phase.

3.2. TSC-SIG

In TSC-SIG, we utilize the value of the CPU timestamp counter (TSC), read with a dedicated instruction, as the timestamp for manipulations on shared memory nodes. By comparing timestamps, threads can be synchronized in memory management, i.e., ensuring safe memory reclamation. A very large memory footprint due to stalled threads is a common problem of quiescent-based reclamation [8,9,10,11,12]. To eliminate this impact, we introduce the POSIX signal mechanism. When a thread collects a certain number of retired nodes, it sends a neutralization signal to all threads. What a thread does when it receives a signal depends on its state.

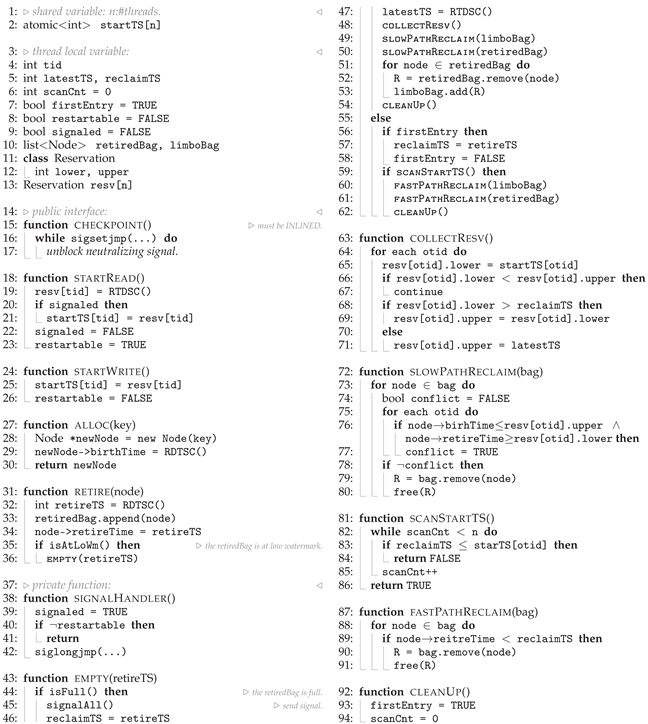

The pseudocode of TSC-SIG is presented in Algorithm 1. We use a dedicated instruction to acquire the value of the invariant TSC, which is supported by most modern x86 processors.
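
As a rough illustration of reading the TSC from user space, the sketch below assumes the RDTSCP instruction, accessed through the `__rdtscp` intrinsic that GCC and Clang provide in `<x86intrin.h>`; the unordered RDTSC could be used the same way through `__rdtsc()`. The function name `read_timestamp` and the non-x86 fallback are assumptions added only so that the example builds everywhere.

```cpp
#include <cassert>
#include <cstdint>
#if defined(__x86_64__) || defined(__i386__)
#include <x86intrin.h>
#else
#include <chrono>
#endif

// Returns a timestamp; on x86 this is the raw TSC value.
inline uint64_t read_timestamp() {
#if defined(__x86_64__) || defined(__i386__)
    unsigned int aux;            // receives IA32_TSC_AUX; not used in this sketch
    return __rdtscp(&aux);
#else
    // Fallback for non-x86 builds only: a monotonic software clock, not a TSC.
    return static_cast<uint64_t>(
        std::chrono::steady_clock::now().time_since_epoch().count());
#endif
}
```

Unlike a shared atomic epoch counter, this read touches no shared cache line, so concurrent readers do not contend with each other.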

checkpoint() and signalHandler(): To implement the rollback mechanism, we use the C/C++ procedures sigsetjmp and siglongjmp. In brief, the thread executes sigsetjmp to save the local state, and subsequently, it can execute siglongjmp to restore the previously saved state. TSC-SIG encapsulates sigsetjmp within checkpoint() for creating a checkpoint (line 16). If a neutralization signal is received, the thread immediately executes signalHandler(). Within signalHandler(), the thread checks whether the local restartable flag is set; if so, it executes siglongjmp (line 42) to roll back to the checkpoint and restart the operation.
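
The rollback mechanism can be demonstrated in a single thread. The sketch below assumes the POSIX `sigsetjmp`/`siglongjmp` pair and uses `SIGUSR1` with `raise()` as a stand-in for a neutralization signal sent by another thread. The names `checkpoint_buf`, `restartable`, `rollbacks`, and `demo_operation` are hypothetical, and a real implementation would keep this state per thread.

```cpp
#include <cassert>
#include <setjmp.h>
#include <signal.h>
#include <string.h>

static sigjmp_buf checkpoint_buf;                 // per-thread in a real system
static volatile sig_atomic_t restartable = 0;     // set only during the read phase
static volatile sig_atomic_t rollbacks = 0;       // bookkeeping for this demo

static void signal_handler(int) {
    if (restartable) {
        rollbacks = rollbacks + 1;
        siglongjmp(checkpoint_buf, 1);            // abandon the read phase, resume at checkpoint
    }
    // Write phase: return normally; the thread relies on its reservations instead.
}

// Runs one operation that is neutralized once while reading; returns the rollback count.
int demo_operation() {
    struct sigaction sa;
    memset(&sa, 0, sizeof sa);
    sa.sa_handler = signal_handler;
    sigemptyset(&sa.sa_mask);
    sigaction(SIGUSR1, &sa, nullptr);

    rollbacks = 0;
    sigsetjmp(checkpoint_buf, 1);                 // checkpoint; savemask = 1 restores the signal mask
    restartable = 1;                              // read phase begins
    if (rollbacks == 0) raise(SIGUSR1);           // simulate one neutralization signal
    restartable = 0;                              // write phase begins
    raise(SIGUSR1);                               // handler runs but performs no rollback
    return rollbacks;
}
```

Passing a nonzero `savemask` to `sigsetjmp` matters here: the handler is left through `siglongjmp` rather than a normal return, so the signal mask must be restored explicitly or the next signal would stay blocked.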

startRead() and startWrite(): Some preparatory work must be completed before entering the read and write phases. Before entering the read phase, one must read the current value of the TSC to mark the start time of the read operation (line 19). Subsequently, the restartable flag is set, indicating the beginning of the read operation. This also signifies that should a neutralization signal be received after this point, the thread will perform a rollback to the previously established checkpoint. The cost associated with rollbacks during the read phase is significantly lower than during the write phase. If rollbacks are performed during the write phase, specific recovery code must be executed to undo any changes already made to the data structure. Therefore, in startWrite(), timestamps must be recorded in a single-writer multi-reader array before entering the write phase (line 25). The thread then sets the restartable flag to FALSE (line 26) to prevent rollbacks when a signal is received during the write phase.

To avoid the situation where the startTS reservation is not updated for extended periods in read-dominant workloads, we added a signaled flag. Each time signalHandler() is executed, the signaled flag is set. If the signaled flag is set when startRead() is called, the reservation is made early, in startRead() itself.
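
One possible reading of startRead() and startWrite() is sketched below. It is an interpretation of the description above, not the paper's Algorithm 1: the `ThreadCtx` structure, the `start_ts` array name, clearing the signaled flag after use, and passing the timestamp in as the parameter `now` (a stand-in for a TSC read) are all assumptions.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

constexpr int kThreads = 4;                     // hypothetical fixed thread count
std::atomic<uint64_t> start_ts[kThreads];       // single-writer multi-reader reservations

struct ThreadCtx {
    uint64_t local_start = 0;                   // start time of the current read phase
    bool restartable = false;                   // true only during the read phase
    bool signaled = false;                      // set by the signal handler
};

// `now` stands in for a TSC read.
void start_read(int tid, ThreadCtx& c, uint64_t now) {
    c.local_start = now;
    if (c.signaled) {                           // a signal arrived: publish the reservation early
        start_ts[tid].store(now);
        c.signaled = false;                     // assumption: the flag is consumed here
    }
    c.restartable = true;                       // signals now trigger a rollback
}

void start_write(int tid, ThreadCtx& c) {
    start_ts[tid].store(c.local_start);         // reservation must be visible before writing
    c.restartable = false;                      // no rollback during the write phase
}
```

In the common case the read phase performs no shared store at all, so a read-only operation costs one timestamp read plus a local flag update.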

Algorithm 1. Overview of pseudocode for TSC-SIG.

alloc() and retire(): To ensure safe reclamation, TSC-SIG adds two variables (birthTime and retireTime) to each node. In alloc() and retire(), the thread needs to read the value of the TSC and assign it to birthTime (line 29) and retireTime (line 34), respectively. When the number of nodes in retiredBag reaches the low-set watermark threshold, the thread calls empty() to conduct the reclamation process (line 36).
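
The bookkeeping performed by alloc() and retire() can be sketched as follows. The `Reclaimer` class, its logical `clock` (a stand-in for the TSC), and the placeholder `empty()` that only counts invocations are assumptions made to keep the example self-contained.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Node {
    uint64_t birth_time = 0;     // timestamp taken in alloc()
    uint64_t retire_time = 0;    // timestamp taken in retire()
    int key = 0;
};

struct Reclaimer {
    std::size_t low_watermark;           // bag size that triggers reclamation
    std::vector<Node*> retired_bag;
    std::size_t empty_calls = 0;         // bookkeeping for the example
    uint64_t clock = 0;                  // logical stand-in for the TSC

    explicit Reclaimer(std::size_t lw) : low_watermark(lw) {}

    uint64_t now() { return ++clock; }

    Node* alloc(int key) {
        Node* n = new Node;
        n->birth_time = now();           // start of the node's lifetime
        n->key = key;
        return n;
    }

    void retire(Node* n) {
        n->retire_time = now();          // end of the node's lifetime
        retired_bag.push_back(n);
        if (retired_bag.size() >= low_watermark) empty();
    }

    void empty() { ++empty_calls; }      // placeholder: the real routine reclaims nodes
};
```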

empty(): The task of empty() is to complete the reclamation of retired nodes. If the size of retiredBag reaches the low watermark but does not exceed the maximum upper limit, the reclamation process takes the fast path. When it reaches the previously set maximum threshold, it enters the slow path.

First, we focus on the fast path. When the reclamation process enters the fast path for the first time, the thread updates reclaimTS to help determine which nodes can subsequently be safely reclaimed (line 57). Then, the thread invokes scanStartTS(), which sequentially reads the startTS value of each thread until all the read startTS values exceed the reclaimTS value. If scanStartTS() is completed before the size of retiredBag exceeds the maximum value, fastPathReclaim() can be directly called to reclaim all the nodes retired before reclaimTS. Otherwise, the process enters the slow path.
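
A simplified sketch of the fast path follows. The function names mirror scanStartTS() and fastPathReclaim(), but the signatures are hypothetical, the shared startTS array is modeled as a plain vector snapshot, and `scan_start_ts` performs a single pass instead of repeating until the condition holds.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Node { uint64_t birth_time; uint64_t retire_time; };

// True once every thread's published startTS is newer than reclaim_ts.
bool scan_start_ts(const std::vector<uint64_t>& start_ts, uint64_t reclaim_ts) {
    for (uint64_t s : start_ts)
        if (s <= reclaim_ts) return false;
    return true;
}

// Frees every node retired before reclaim_ts and returns how many were freed.
std::size_t fast_path_reclaim(std::vector<Node*>& bag, uint64_t reclaim_ts) {
    std::size_t freed = 0;
    auto keep = bag.begin();                     // compacts surviving nodes to the front
    for (Node* n : bag) {
        if (n->retire_time < reclaim_ts) { delete n; ++freed; }
        else *keep++ = n;
    }
    bag.erase(keep, bag.end());
    return freed;
}
```

No signal is sent on this path: the reclaimer only reads timestamps that the other threads have already published.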

In the slow path, the thread first sends a neutralization signal to other threads by calling signalAll() (line 45). In signalAll(), the reclaimer uses pthread_kill to send the signal. After all threads have completed the signal handler, the thread records the value of the TSC in latestTS as the timestamp of this signal sending and updates reclaimTS. Before starting to reclaim nodes, the thread reads startTS by calling collectResv() to complete the collection of reservations (line 48). Finally, by comparing the birthTime and retireTime of each individual retired node with the reservations of all threads, it can be determined which nodes can be safely reclaimed. If there is no thread whose active interval overlaps with the lifetime of the node, the node will not be accessed by any thread. In addition, to avoid frequent signal sending due to stalled threads keeping retiredBag in a high-load state, we use an additional list to store the nodes protected by reservations (line 53).
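
The final comparison step of the slow path amounts to an interval-overlap test. The sketch below covers only that step, not signal sending or reservation collection. The `Reservation` structure with `lower` and `upper` bounds, the helper names, and the use of closed intervals are assumptions.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Node { uint64_t birth_time; uint64_t retire_time; };
struct Reservation { uint64_t lower; uint64_t upper; };   // a thread's active interval

// Closed-interval overlap between a node's lifetime and a reservation.
bool overlaps(const Node& n, const Reservation& r) {
    return r.lower <= n.retire_time && n.birth_time <= r.upper;
}

// Frees unprotected nodes; protected ones move to limbo_bag so that they
// no longer keep retired_bag full. Returns the number of freed nodes.
std::size_t slow_path_reclaim(std::vector<Node*>& retired_bag,
                              std::vector<Node*>& limbo_bag,
                              const std::vector<Reservation>& resv) {
    std::size_t freed = 0;
    for (Node* n : retired_bag) {
        bool is_protected = false;
        for (const Reservation& r : resv)
            if (overlaps(*n, r)) { is_protected = true; break; }
        if (is_protected) limbo_bag.push_back(n);
        else { delete n; ++freed; }
    }
    retired_bag.clear();
    return freed;
}
```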

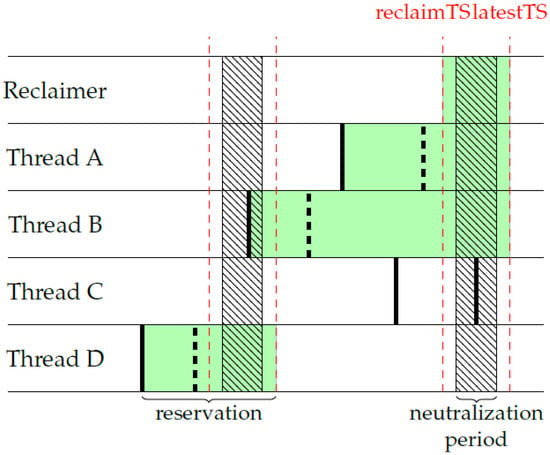

In collectResv(), we adopt an over-approximation method, as shown in Figure 1. When the startTS of another thread is read and found to be smaller than the previously recorded upper value (line 66), it indicates that this thread is still in the write phase. It has not yet started the next read phase since the last signal was sent. In this case, there is no need to update the reservations of this thread. If the read startTS is smaller than reclaimTS, regardless of the phase this thread is in, updating the upper value with the latestTS can cover its active interval. Otherwise, it cannot be determined whether the reserving startTS occurred before the signal was sent. Then, we update the upper value to the lower value and leave it to be handled when the next signal is sent. The reclaimer that executes collectResv() will record its own lower value as startTS and then update the upper value to latestTS. Therefore, its reservations span the range from reclaimTS to latestTS.

Figure 1. Reservation collection in slow-path reclamation. The thick solid black line represents the startTS reading, while the thick dashed black line represents the startTS reservation. The green rectangle illustrates the collected results.

TSC-SIG adopts the same interface as NBR [7]. Similar to NBR, we use the Harris List [35] as an example to demonstrate the use of TSC-SIG in Algorithm 2. Compared with the examples in NBR, we have added some details. The thread allocates memory for a new node by invoking alloc() (line 2). In the search operation, before traversal, the thread calls checkpoint() (line 19) and startRead() (line 20). After search() returns, a write operation like insert or delete might be performed. Hence, the thread needs to call startWrite() (line 41) before returning. Before performing the CAS operation (line 48) to trim the marked node from the data structure, the thread also needs to call startWrite() (line 47). After unlinking the node from the data structure, retire() is used instead of free (line 53).

Algorithm 2. Applying TSC-SIG to Harris List [35].

TSC-SIG is suitable for data structures that exhibit patterns of alternating read and write phases. In such data structures, all references to shared pointers that could be accessed during the write phase must have been read by the most recent preceding read phase. TSC-SIG reserves timestamps rather than pointers. As a result, unlike NBR, it can be applied without modification to data structures that do not require restarting from the entry point. However, it still cannot be applied to data structures in which read and write phases interleave in a certain way. Specifically, during the write phase, a writer may manipulate nodes traversed during previous read phases, not just those accessed by the immediately preceding read phase. Future research could explore having each thread reserve a startTS for each type of write operation to adapt to these data structures.

3.3. Correctness

Lemma 1.

In fast-path reclamation, no reclaimer thread reclaims an unsafe shared memory node.

Proof.

Let us suppose that an unsafe node N is reclaimed by thread T through fast-path reclamation. T verifies that the startTS of all threads is greater than reclaimTS before reclaiming nodes. T then reclaims all nodes in retiredBag whose retireTime is less than reclaimTS. Node N will be reclaimed by T only when its retireTime is less than reclaimTS. If unsafe reclamation occurs, it is possible that either a writer or a reader accessed N while T was reclaiming its retiredBag. The reservation of startTS must be completed before entering the write phase. During the read phase, startTS records the timestamp value reserved before the previous write phase. Therefore, the retireTime of N, accessed by the reader or writer, should be greater than their respective startTS values. This contradicts what was stated before—that the retireTime of N is less than reclaimTS. Therefore, the previous supposition does not hold. □

Lemma 2.

In slow-path reclamation, no reclaimer thread reclaims an unsafe shared memory node.

Proof.

Let us suppose that an unsafe node N is reclaimed by thread T1 during slow-path reclamation. If this occurs, only two possibilities exist: (1) a thread T2 accessed N without the reservation during the write phase, or (2) a thread T2 accesses N during the read phase after it has been reclaimed by T1. T1 must send a neutralization signal to all other threads before starting reclamation. Case (1) clearly cannot occur because T2 must complete the reservation before entering the write phase and, within the write phase, will not access any node other than those accessed during the read phase. For (2), once T2 receives the signal during the read phase, it will execute signalHandler() to relinquish all the acquired pointers and roll back to the previously established checkpoint. T1 then reclaims the nodes in retiredBag after all threads have executed signalHandler(). Therefore, when T1 reclaims node N, T2 has already relinquished the pointer pointing to N, and (2) will not occur. □

Lemma 3

(TSC-SIG is safe). No reclaimer thread in TSC-SIG reclaims an unsafe node.

Proof.

According to Lemma 1 and Lemma 2, TSC-SIG will not reclaim an unsafe node in either fast-path or slow-path reclamation; thus, TSC-SIG is safe. □

Lemma 4

(TSC-SIG is robust). The number of nodes that are retired but not yet reclaimed is bounded.

Proof.

Let us suppose that the maximum size of retiredBag is m and the number of threads is n. T1 is a reclaimer, and T2 is any other thread. T2 gets stalled in the write phase after it finishes executing signalHandler() in response to the last neutralization signal sent by T1. When the number of nodes in the retiredBag of T1 reaches m, T1 sends the signal again, reads the startTS of T2, and updates its own reservations of T2. In the worst case, T2 prevents T1 from reclaiming all the nodes in retiredBag. The situation is the same when T2 is starved rather than stalled. When all the other threads are stalled, the number of retired nodes in the limboBag and retiredBag of T1 that are prevented from being reclaimed is bounded by a function of m and n. Therefore, in TSC-SIG, the number of nodes that are retired but not yet reclaimed is bounded. □

4. Results and Discussion

Our experiments were carried out on a dual-socket Intel Xeon E5-2683 v3 machine with 56 threads, 32 GB of RAM, and 35 MB of L3 cache per socket (total of 70 MB). The authors of NBR [7] have conducted extensive research on SMR algorithms, and we adopted their implementations of various data structures and memory reclamation schemes. Like them, we implemented our algorithm in the Setbench [36] benchmark. We compiled with the -O3 optimization flag and used jemalloc [37] as the memory allocator.

We selected several data structures with different memory access patterns. For lists, we selected the LazyList (LL) [18], the Harris List (HL) [35], and the Harris–Michael List (HMList) [4]. For trees, we selected the external binary search tree by David et al. (DGT) [38] and Brown’s relaxed (a,b)-tree (BABT) [39]. For hashtables, we selected the HMList chaining-based hashtable (HMHT) [19].

In our experiment, we selected several representative SMR algorithms. Specifically, we selected numerous quiescent-based methods that utilize timestamps.

- RCU, an EBR scheme implemented in IBR [12].

- DEBRA, a fast variant of EBR introduced by Brown et al. [2]. Since using DEBRA+ requires adding specific recovery code to each data structure, we did not include it.

- HE, the Hazard Eras scheme by Ramalhete and Correia [11], which is a hybrid of Hazard Pointers and EBR.

- WFE, i.e., Wait-Free Eras, by Nikolaev and Ravindran [23], which is a wait-free variant of HE.

- 2GEIBR, the two-global-epochs IBR scheme introduced by Wen et al. [12].

In addition, for comparison with algorithms using different synchronization schemes, we selected the following:

- HP, the original Hazard Pointers-based reclamation scheme [4].

- Crystalline-L, a scheme by Nikolaev and Ravindran [26] that employs an efficient form of reference counting.

- NBR+, the Neutralization-Based Reclamation scheme [7], which uses the POSIX signal mechanism and Hazard Pointers.

We excluded Optimistic Access algorithms [27,29,30,31] because they require a type-preserving allocator. HP cannot be applied to the Harris List, so it is omitted from the Harris List comparison. Crystalline-L crashed intermittently in oversubscribed scenarios with BABT and DGT, so it is excluded from the diagrams for these two data structures.

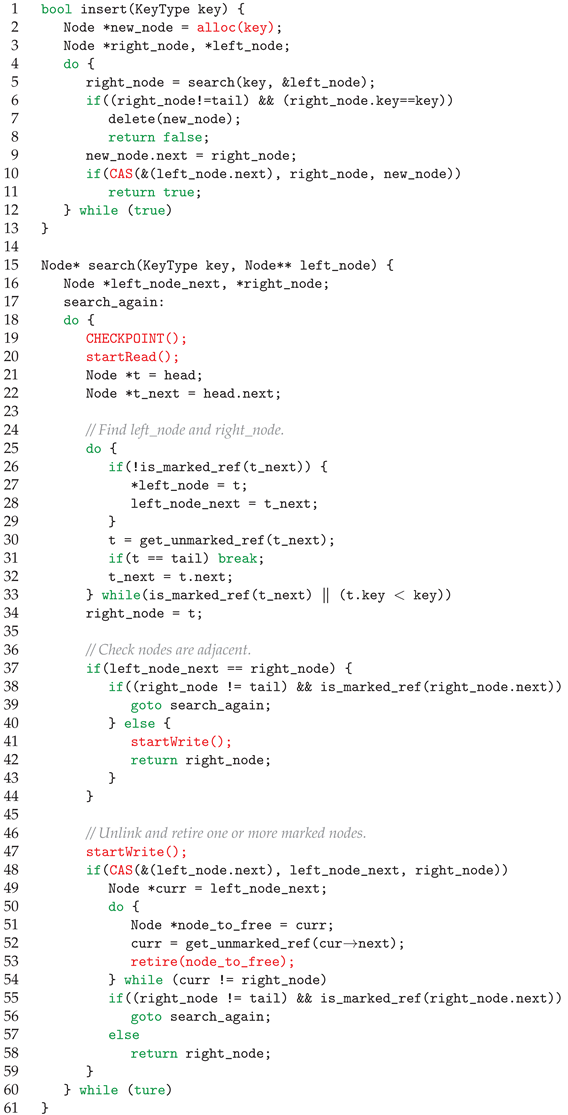

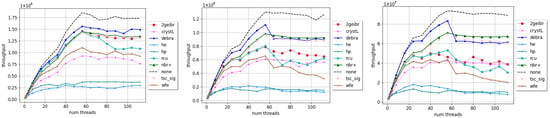

Figure 2 illustrates the performance comparison of SMR algorithms in list-type data structures. TSC-SIG exhibits performance similar to NBR+. Notably, in the LazyList implementation, TSC-SIG surpasses NBR+ in performance. Compared with HP and Crystalline-L, TSC-SIG demonstrates significantly better performance. While Crystalline-L outperforms traditional reference counting methods, the overhead of reference counting results in relatively poor performance in our experimental setup. When compared with quiescent-based methods, TSC-SIG performs slightly worse than RCU but surpasses most other quiescent-based methods in most scenarios.

Figure 2. Throughput evaluation of list-type data structures. Y-axis: throughput in million operations per second. X-axis: number of threads. Proportion of updates: left: 10%; middle: 50%; right: 100%. Max size: 2000.
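The workload parameters named in the caption (proportion of updates, maximum size, and throughput in million operations per second) can be made concrete with a small sketch. The single-threaded Python harness below is not Setbench and does not reproduce the reported numbers. The function name `run_workload`, the even split of updates into inserts and deletes, the prefill to half of the key range, and the use of a built-in `set` as the data structure are all assumptions made for illustration.

```python
import random
import time


def run_workload(update_ratio, max_size=2000, ops=100_000, seed=1):
    """Run a mixed workload on a set; return (operation counts, throughput in Mops/s)."""
    rng = random.Random(seed)
    # Assumption: prefill with half of the key range so inserts and deletes both succeed often.
    keys = set(rng.sample(range(max_size), max_size // 2))
    counts = {"contains": 0, "insert": 0, "delete": 0}

    start = time.perf_counter()
    for _ in range(ops):
        key = rng.randrange(max_size)
        roll = rng.random()
        if roll < update_ratio / 2:      # first half of the update share: inserts
            keys.add(key)
            counts["insert"] += 1
        elif roll < update_ratio:        # second half of the update share: deletes
            keys.discard(key)
            counts["delete"] += 1
        else:                            # remainder: read-only lookups
            _ = key in keys
            counts["contains"] += 1
    elapsed = time.perf_counter() - start

    # Throughput as plotted on the Y-axis: operations per second, in millions.
    return counts, ops / elapsed / 1e6


if __name__ == "__main__":
    for ratio in (0.10, 0.50, 1.00):
        counts, mops = run_workload(ratio)
        print(f"updates={ratio:.0%} counts={counts} throughput={mops:.2f} Mops/s")
```

In a real multi-threaded benchmark, each thread would run such a loop against a shared concurrent data structure, and the per-thread operation counts would be summed before dividing by the elapsed time.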

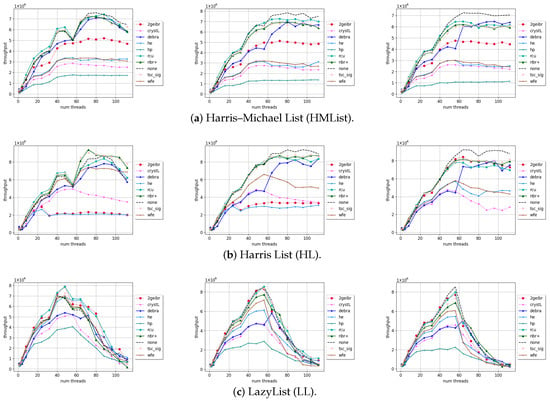

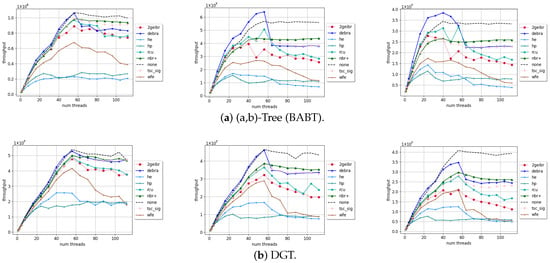

Figures 3 and 4 show the throughput of these SMR algorithms in tree-type and hashtable-type data structures, respectively. Here, TSC-SIG performs slightly worse than DEBRA and NBR+, but still better than most quiescent-based methods. Under oversubscription, DEBRA experiences reclamation bursts [7], in which numerous nodes are reclaimed simultaneously, resulting in performance degradation and significant memory consumption. In these three data structures (BABT, DGT, and HMHT), throughput is much higher than in list-type structures. At higher throughput, the over-approximation reservation scheme causes TSC-SIG to hold more retired but not-yet-reclaimed nodes than NBR+. TSC-SIG therefore sends signals more frequently than NBR+, which makes its performance somewhat poorer. This gap widens as the proportion of update operations increases.

Figure 3. Throughput evaluation of tree-type data structures. Y-axis: throughput in million operations per second. X-axis: number of threads. Proportion of updates: left: 10%; middle: 50%; right: 100%. Max size: 1,000,000.

Figure 4. Throughput evaluation of HMList-based hashtable (HMHT). Y-axis: throughput in million operations per second. X-axis: number of threads. Proportion of updates: left: 10%; middle: 50%; right: 100%. Load factor: 5. Buckets: 10K.

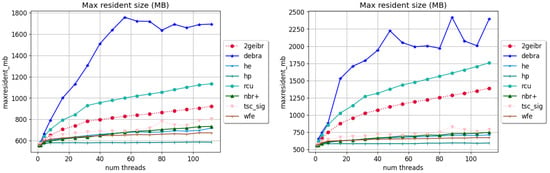

To investigate the memory footprint of TSC-SIG in the presence of stalled threads, we conducted experiments by suspending one thread and running the tests for durations of 10 s and 40 s, respectively. In 2GEIBR, we strategically employed intermittent thread execution to deliberately prolong reservation intervals, thereby maximizing memory occupation by emulating pathological scheduling patterns. While these experiments do not enable the precise measurement of memory usage with stalled threads, they effectively uncover memory usage patterns. As depicted in Figure 5, traditional algorithms such as DEBRA, RCU, and 2GEIBR all exhibit increasing memory consumption over time. In contrast, TSC-SIG functions as anticipated, maintaining consistent memory levels throughout the experimental runs.

Figure 5. (Left) DGT with stalled threads running for 10 s. (Right) DGT with stalled threads running for 40 s.
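The contrast between growing and constant memory under a stalled thread can be illustrated with a toy discrete-time model. The sketch below is not the experiment reported above and does not model signals or real threads. The function name `unreclaimed_after`, the fixed node lifetime, and both reclamation rules are simplifying assumptions. In particular, the "reservation" rule is a generic stand-in (a node stays blocked only if its lifetime covers the stalled thread's reserved timestamp) and may differ from the check TSC-SIG actually performs.

```python
def unreclaimed_after(steps, stall_at, lifetime, rule):
    """Count retired-but-unfreed nodes after `steps` ticks with one thread stalled at `stall_at`.

    One node is retired per tick; each node was allocated `lifetime` ticks before its retirement.
    """
    pending = []  # (birth_ts, retire_ts) of nodes that are retired but not yet freed
    for now in range(1, steps + 1):
        pending.append((now - lifetime, now))
        if rule == "epoch":
            # Epoch-style rule: a node can be freed only if it was retired before the
            # time announced by every thread. The stalled thread's announcement is
            # frozen at `stall_at`, so everything retired from then on stays pending.
            pending = [n for n in pending if n[1] >= stall_at]
        elif rule == "reservation":
            # Assumed timestamp-reservation rule: the stalled thread pins only nodes
            # whose lifetime [birth_ts, retire_ts] contains its reserved timestamp.
            pending = [n for n in pending if n[0] <= stall_at <= n[1]]
        else:
            raise ValueError(f"unknown rule: {rule}")
    return len(pending)


if __name__ == "__main__":
    # A short run and a run four times longer, mirroring the 10 s and 40 s experiments.
    for steps in (1000, 4000):
        epoch = unreclaimed_after(steps, stall_at=100, lifetime=5, rule="epoch")
        reserved = unreclaimed_after(steps, stall_at=100, lifetime=5, rule="reservation")
        print(f"steps={steps} epoch={epoch} reservation={reserved}")
```

With these parameters, the epoch-style count grows from 901 to 3901 as the run lengthens, whereas the reservation-style count stays at 6. This matches the qualitative pattern described above: unbounded growth for schemes that a stalled thread can block indefinitely, and a flat footprint for a bounded scheme.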

The neutralization signal mechanism significantly reduces the frequency of memory barrier operations, which occur primarily during reservation phases. As our experimental results show, TSC-SIG delivers highly competitive performance across most scenarios. In list-type data structures, timestamp reservations give TSC-SIG a slight performance advantage over NBR, though this benefit is offset by the over-approximation reservation scheme, which induces more frequent signal transmissions. Nevertheless, timestamp reservations offer superior applicability in concurrent data structures. Notably, TSC-SIG is more robust than other quiescent-based reclamation approaches, which broadens its potential for practical deployment. As the TSC continues to evolve toward higher precision and lower access latency, TSC-SIG’s design aligns well with hardware trends, suggesting expanding applicability in concurrent data structures.

5. Conclusions

In this paper, we propose a safe and robust approach, called TSC-SIG, which combines the neutralization signal and timestamp reservations for memory reclamation in dynamic concurrent data structures. By leveraging the CPU timestamp counter as the timestamp, TSC-SIG enables synchronization among threads, thereby effectively preventing use-after-free errors. Compared with other methods that use software timestamps, it reduces the contention on shared atomic variables. Meanwhile, by utilizing the POSIX signal mechanism, TSC-SIG reduces the overhead caused by synchronization. Additionally, TSC-SIG restricts the memory footprint that might be incurred by stalled (preempted) threads. TSC-SIG demonstrates highly competitive performance under most circumstances, particularly in read-dominated workloads. As the CPU timestamp counter continues to evolve and its application in the domain of concurrent data structures expands, the application prospects of TSC-SIG are becoming increasingly broad. In future investigations, TSC-SIG will be integrated into timestamp-based concurrent data structures to enable safe memory reclamation, while preserving the data structures’ inherent efficiency.

Author Contributions

Z.Y. and C.Z. collaboratively conceived and designed the concept for the study. C.Z. performed the experiments and wrote the initial draft of the manuscript. X.Z. provided valuable guidance and support throughout the project. All authors reviewed the results, contributed to the revision of the manuscript, and approved the final version for publication. All authors have read and agreed to the published version of the manuscript.

Funding

This research study was funded by the Hunan Provincial Department of Education Scientific Research Outstanding Youth Project (grant number 22B0222).

Data Availability Statement

The code is available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Braginsky, A.; Kogan, A.; Petrank, E. Drop the Anchor: Lightweight Memory Management for Non-Blocking Data Structures. In Proceedings of the Twenty-Fifth Annual ACM Symposium on Parallelism in Algorithms and Architectures, Montréal, QC, Canada, 23–25 July 2013; pp. 33–42.

2. Brown, T.A. Reclaiming Memory for Lock-Free Data Structures: There has to be a Better Way. In Proceedings of the 2015 ACM Symposium on Principles of Distributed Computing, Donostia-San Sebastián, Spain, 21–23 July 2015; PODC’15. pp. 261–270.

3. Kang, J.; Jung, J. A Marriage of Pointer- and Epoch-Based Reclamation. In Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation, London, UK, 15–20 June 2020; pp. 314–328.

4. Michael, M. Hazard Pointers: Safe Memory Reclamation for Lock-Free Objects. IEEE Trans. Parallel Distrib. Syst. 2004, 15, 491–504.

5. Herlihy, M.; Luchangco, V.; Martin, P.; Moir, M. Nonblocking Memory Management Support for Dynamic-Sized Data Structures. ACM Trans. Comput. Syst. 2005, 23, 146–196.

6. Dice, D.; Herlihy, M.; Kogan, A. Fast Non-Intrusive Memory Reclamation for Highly-Concurrent Data Structures. In Proceedings of the 2016 ACM SIGPLAN International Symposium on Memory Management, Santa Barbara, CA, USA, 14 June 2016; pp. 36–45.

7. Singh, A.; Brown, T.A.; Mashtizadeh, A.J. Simple, Fast and Widely Applicable Concurrent Memory Reclamation via Neutralization. IEEE Trans. Parallel Distrib. Syst. 2024, 35, 203–220.

8. McKenney, P.E.; Slingwine, J.D. Read-copy update: Using execution history to solve concurrency problems. In Parallel and Distributed Computing and Systems; Citeseer: Princeton, NJ, USA, 1998; Volume 509518, pp. 509–518.

9. Fraser, K. Practical Lock-Freedom; Technical Report UCAM-CL-TR-579; University of Cambridge, Computer Laboratory: Cambridge, UK, 2004.

10. Hart, T.E.; McKenney, P.E.; Brown, A.D.; Walpole, J. Performance of Memory Reclamation for Lockless Synchronization. J. Parallel Distrib. Comput. 2007, 67, 1270–1285.

11. Ramalhete, P.; Correia, A. Brief Announcement: Hazard Eras—Non-Blocking Memory Reclamation. In Proceedings of the 29th ACM Symposium on Parallelism in Algorithms and Architectures, Washington, DC, USA, 24–26 July 2017; pp. 367–369.

12. Wen, H.; Izraelevitz, J.; Cai, W.; Beadle, H.A.; Scott, M.L. Interval-Based Memory Reclamation. In Proceedings of the 23rd ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, Vienna, Austria, 24–28 February 2018; pp. 1–13.

13. Alistarh, D.; Leiserson, W.M.; Matveev, A.; Shavit, N. ThreadScan: Automatic and Scalable Memory Reclamation. In Proceedings of the 27th ACM Symposium on Parallelism in Algorithms and Architectures, Portland, OR, USA, 13–15 June 2015; pp. 123–132.

14. Intel. Intel 64 and IA-32 Architectures Software Developer’s Manual. 2024. Available online: https://www.intel.com/content/www/us/en/developer/articles/technical/intelsdm.html (accessed on 12 November 2024).

15. Ruan, W.; Liu, Y.; Spear, M. Boosting Timestamp-Based Transactional Memory by Exploiting Hardware Cycle Counters. ACM Trans. Archit. Code Optim. 2013, 10, 1–21.

16. Grimes, O.; Nelson-Slivon, J.; Hassan, A.; Palmieri, R. Opportunities and Limitations of Hardware Timestamps in Concurrent Data Structures. In Proceedings of the 2023 IEEE International Parallel and Distributed Processing Symposium (IPDPS), St. Petersburg, FL, USA, 15–19 May 2023; pp. 624–634.

17. Clements, A.T.; Kaashoek, M.F.; Zeldovich, N. Scalable address spaces using RCU balanced trees. In Proceedings of the Seventeenth International Conference on Architectural Support for Programming Languages and Operating Systems, London, UK, 3–7 March 2012; ASPLOS XVII. pp. 199–210.

18. Heller, S.; Herlihy, M.; Luchangco, V.; Moir, M.; Scherer, W.N.; Shavit, N. A Lazy Concurrent List-Based Set Algorithm. In Principles of Distributed Systems; Anderson, J.H., Prencipe, G., Wattenhofer, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 3–16.

19. Michael, M.M. High performance dynamic lock-free hash tables and list-based sets. In Proceedings of the Fourteenth Annual ACM Symposium on Parallel Algorithms and Architectures, Winnipeg, MB, Canada, 11–13 August 2002; SPAA’02. pp. 73–82.

20. Brown, T.; Ellen, F.; Ruppert, E. A general technique for non-blocking trees. In Proceedings of the 19th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, Orlando, FL, USA, 15–19 February 2014; pp. 329–342.

21. Drachsler, D.; Vechev, M.; Yahav, E. Practical concurrent binary search trees via logical ordering. In Proceedings of the 19th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, Orlando, FL, USA, 15–19 February 2014; pp. 343–356.

22. Jung, J.; Lee, J.; Kim, J.; Kang, J. Applying Hazard Pointers to More Concurrent Data Structures. In Proceedings of the 35th ACM Symposium on Parallelism in Algorithms and Architectures, Orlando, FL, USA, 17–19 June 2023; pp. 213–226.

23. Nikolaev, R.; Ravindran, B. Universal Wait-Free Memory Reclamation. In Proceedings of the 25th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, San Diego, CA, USA, 22–26 February 2020; pp. 130–143.

24. Anderson, D.; Blelloch, G.E.; Wei, Y. Concurrent Deferred Reference Counting with Constant-Time Overhead. In Proceedings of the 42nd ACM SIGPLAN International Conference on Programming Language Design and Implementation, Virtual Event, Canada, 20–25 June 2021; pp. 526–541.

25. Nikolaev, R.; Ravindran, B. Hyaline: Fast and Transparent Lock-Free Memory Reclamation. In Proceedings of the 2019 ACM Symposium on Principles of Distributed Computing, Toronto, ON, Canada, 29 July–2 August 2019; pp. 419–421.

26. Nikolaev, R.; Ravindran, B. Crystalline: Fast and memory efficient wait-free reclamation. arXiv 2021, arXiv:2108.02763.

27. Cohen, N.; Petrank, E. Efficient Memory Management for Lock-Free Data Structures with Optimistic Access. In Proceedings of the 27th ACM Symposium on Parallelism in Algorithms and Architectures, Portland, OR, USA, 13–15 June 2015; SPAA’15. pp. 254–263.

28. Petrank, E. A Practical Wait-Free Simulation for Lock-Free Data Structures. ACM SIGPLAN Not. 2014, 49, 357–368.

29. Cohen, N.; Petrank, E. Automatic Memory Reclamation for Lock-Free Data Structures. In Proceedings of the 2015 ACM SIGPLAN International Conference on Object-Oriented Programming, Systems, Languages, and Applications, Pittsburgh, PA, USA, 25–30 October 2015; pp. 260–279.

30. Cohen, N. Every Data Structure Deserves Lock-Free Memory Reclamation. Proc. ACM Program. Lang. 2018, 2, 1–24.

31. Sheffi, G.; Herlihy, M.; Petrank, E. VBR: Version Based Reclamation. In Proceedings of the 33rd ACM Symposium on Parallelism in Algorithms and Architectures, Virtual, 6–8 July 2021; SPAA’21. pp. 443–445.

32. Herlihy, M. A Methodology for Implementing Highly Concurrent Data Objects. ACM Trans. Program. Lang. Syst. 1993, 15, 745–770.

33. Treiber, R.K. Systems Programming: Coping with Parallelism; International Business Machines Incorporated, Thomas J. Watson Research Center: New York, NY, USA, 1986.

34. Singh, A.; Brown, T.; Mashtizadeh, A. NBR: Neutralization Based Reclamation. In Proceedings of the 26th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, Virtual Event, Republic of Korea, 27 February 2021; pp. 175–190.

35. Harris, T.L. A Pragmatic Implementation of Non-blocking Linked-lists. In Distributed Computing; Welch, J., Ed.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 300–314.

36. Brown, T.; Prokopec, A.; Alistarh, D. Non-Blocking Interpolation Search Trees with Doubly-Logarithmic Running Time. In Proceedings of the 25th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, San Diego, CA, USA, 22–26 February 2020; pp. 276–291.

37. Evans, J. A Scalable Concurrent Malloc(3) Implementation for FreeBSD. 2006. Available online: https://papers.freebsd.org/2006/bsdcan/evans-jemalloc/ (accessed on 12 November 2024).

38. David, T.; Guerraoui, R.; Trigonakis, V. Asynchronized Concurrency: The Secret to Scaling Concurrent Search Data Structures. ACM SIGARCH Comput. Archit. News 2015, 43, 631–644.

39. Brown, T. Techniques for Constructing Efficient Lock-free Data Structures. arXiv 2017, arXiv:1712.05406.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).