Abstract

Data distillation is an emerging research area that is attracting the attention of machine learning (ML) and big data scientists and experts. The main goal of a distillation approach is to generate a compact dataset that preserves the essential characteristics of a larger one. In our study, we considered an initial large set of images and developed a novel method for distilling images from it. The method combines the discrete wavelet transform (DWT) and a modified principal component analysis (M-PCA). Our method first transforms images into vectors of low-band (LL) wavelet coefficients and then applies M-PCA to modify the vectors and reduce their number rather than their dimensionality. This distinguishes our approach from the traditional PCA method. We implemented the new method in Python 3.10 and validated it on the public image databases Extended YaleB, digit-MNIST, and ISIC2020. We demonstrated that building a dictionary from a small set of distilled images and training a sparse representation wavelet-based classifier (SRWC) with it yields higher accuracy than training the SRWC method with the entire initial training set of images.

1. Introduction

An important meta parameter in machine learning (ML) is the training set. Deep ML classifiers require extensive training datasets to achieve robust statistical results. If such large training sets also contain relatively high-resolution images, the training process becomes extremely time-consuming. The training time could be significantly decreased if a smaller training dataset with the same features, qualities, statistical properties, and training abilities were used.

Therefore, methods capable of generating or extracting, from a given large training set, a smaller one with the above abilities have become a subject of strong interest in the ML community. Early works in this field emerged around the middle of the last decade [1,2], with significant developments in the subsequent years.

Methods for reducing training data while preserving data quality and model performance can be broadly categorized into two approaches: methods based on ML models [3,4,5] and data pruning (erasing) methods [6,7,8].

Another concept gaining popularity in the ML community is transfer learning. It aims to develop methods and approaches that transfer knowledge across different tasks, for example, between neural network models for image segmentation and models for classification [4]. Since knowledge transfer methods do not necessarily decrease the training time of the receiving model, we do not include them among the time-reducing methods.

ML models for distillation are applied to knowledge and/or data. Knowledge distillation methods transfer knowledge from teacher to student neural networks (NNs) [9,10,11]. Teacher NNs are generally large and complex, while student NNs are smaller and architecturally simpler. These teacher–student NN models significantly reduce the training time of student NNs while preserving the original training data and the statistical outcomes. They can solve object classification, detection, and segmentation problems in a variety of application areas in medicine and industry.

Data distillation methods keep the complexity and the architecture of the NN model unchanged but generate (distill) new data from the existing data. The distilled data has a smaller cardinality than the original training data while preserving its information quality and outcome statistics [5,12]. Note that in some cases, the newly distilled (generated) small training set may contain images that do not visually resemble the original images at all [13]. Despite this dramatic change in the visual image features and the image theme, the small distilled image sets train the NN such that it provides the same or sometimes even better statistical measures. However, the visual form and the training ability of ML-distilled images depend on the number of epochs used in the distillation process [14]. This number could be high for some databases and may require more computer resources and longer run times, especially if the original images in the dataset are relatively large [14].

A comprehensive survey of recent data distillation methods, including those based on NNs, is given in [4]. The authors provide a detailed description of multiple data distillation techniques based on the concepts of “meta-model”, “gradient”, “distribution”, “trajectory”, and “factorization” matching.

On the other hand, data pruning methods are designed to remove less important and/or ill-defined samples from the original training set [6,7,15]. To decide which samples to remove, pruning methods use optimization techniques that determine the importance of each sample [7]. Hence, optimization-based pruning methods can determine the smallest efficient training set from the original samples.

In 2024, the authors of [8] published a new method capable of distilling vectors. This method was based on a modification of the principal component analysis (PCA) approach [16,17] and proved efficient for distilling vectors of relatively small dimension. It improved the classification statistics of ML methods trained on sets augmented with distilled vectors. A disadvantage of this modified PCA distillation method is that training an ML classifier only with distilled vectors yields poor statistical outcomes.

In the present study, we developed a new image distillation method that modifies the training images and reduces the cardinality of the training set, yet improves the classification accuracy of the ML classifier trained with this set. The new method does not use ML techniques; it was developed to reduce the training sets required by ML methods such as sparse representation classification (SRC) [18,19]. Such classifiers use a dictionary in the process of classification, and the dictionary is built from a set of atomic samples (atoms) selected from the training samples.

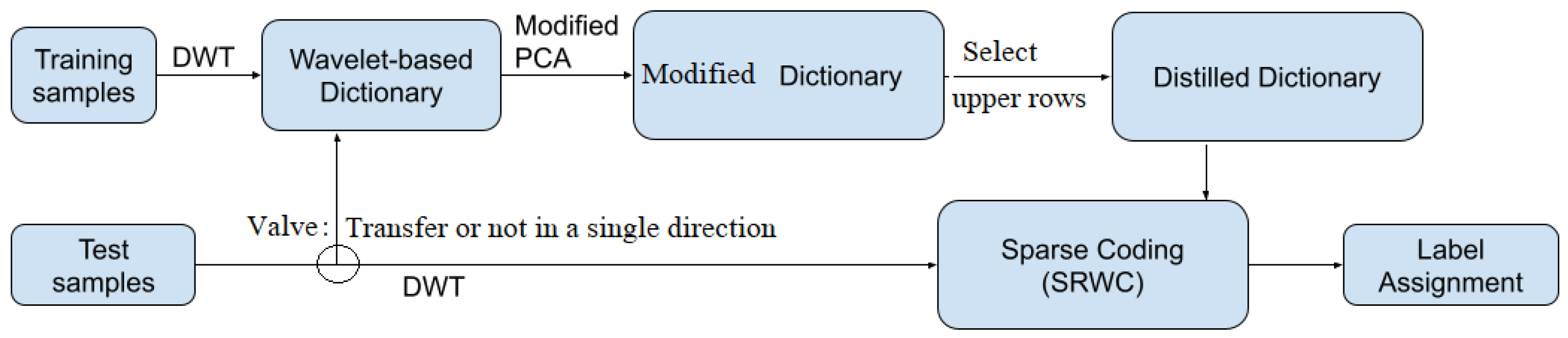

The first part of the new distillation method applies the discrete wavelet transform (DWT) [20,21], which represents the image by its low-band (LL) wavelet coefficients. Next, we describe every image as a vector of LL wavelet coefficients and apply an adapted version of the modified PCA method [8] to generate modified versions of these vectors. From the modified vectors, we select the top m vectors of every modified class (matrix) and call them distilled vectors. They are used to build the distilled training dictionary of an SRC method. A block diagram of the new data distillation method is shown in Figure 1.

Figure 1.

The main components of our data distillation method and the flow of its image classification.

Note that m is a user-selected integer that should be smaller than the cardinality of the smallest class in the training image dataset. Even though we verified the new image distillation method by creating a distilled wavelet dictionary (DWD) for the sparse representation wavelet classification (SRWC) [19] method, the distilled images could also be used to train other ML methods, including NNs.

The main contribution of the present study is the development of the DWD with the help of the new image distillation method, which implements the DWT, low-band wavelet (LL) coefficients (features), and a modified PCA method (M-PCA). The advantage of using our DWT-LL-M-PCA method is a reduced set of training images that possesses the same training capabilities as the entire original training set.

The rest of this paper is organized as follows: Section 2 presents the DWT, shows an example of image wavelet decomposition, and describes the development of the training dictionary for the wavelet vectors of images; Section 3 describes the new approach for modifying the wavelet matrix of each training class with the M-PCA method and the creation of the DWD; Section 4 comprises five subsections that describe the SRWC method, the public image databases YaleB [22], MNIST (digit and fashion) [23,24], and ISIC2020 [25] used in the experiments, the metrics used to measure the effectiveness of the methods, the experimental scheme, and the image classification results. The paper ends with Section 5 and Section 6, which present the discussion and conclusions, where we compare classifications with original and distilled training sets, list the contributions and advantages of the present study, and outline future work.

2. Wavelet-Based Training Dictionary Design

2.1. Image Decomposition to Wavelet Sub-Bands

In the context of two-dimensional signals, let $I$ represent an image wherein $I(x, y)$ indicates the grey-level value at the pixel $(x, y)$. A single-level two-dimensional wavelet transform of an image can be implemented with the Haar kernels introduced in [26]. Therefore, the half-band high-pass filter $h_H$ and the half-band low-pass filter $h_L$ can be characterized, in the spatial domain, by the Haar wavelet kernels, as shown below [26]:

$$h_H = \frac{1}{\sqrt{2}}\begin{bmatrix} 1 & -1 \end{bmatrix}, \qquad h_L = \frac{1}{\sqrt{2}}\begin{bmatrix} 1 & 1 \end{bmatrix}.$$

Note that the high-pass filters attenuate the low-band image features while passing the high-band features, whereas the low-pass filters attenuate the high-band features while passing the low-band ones. In the wavelet domain, the low-band features describe the overall properties, structures, and large-scale patterns of the image, while the high-band features describe details such as corners, edges, and noise.

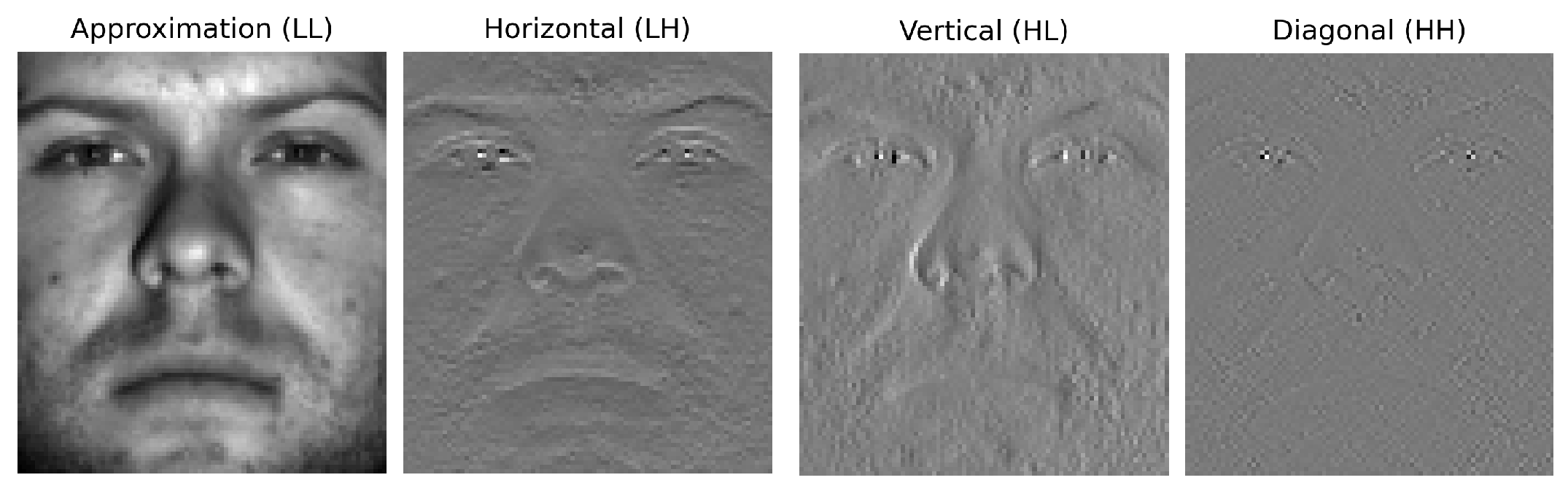

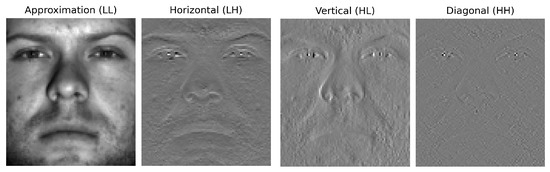

Further, the 2D DWT [20] decomposes an image into four distinct sub-bands: approximation (LL), vertical (HL), horizontal (LH), and diagonal (HH) components. Of the four sub-bands, LH, HL, and HH are considered high-band features, while LL describes the low-band features. An example of image decomposition into the four sub-bands is shown in Figure 2.
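The single-level decomposition described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes the orthonormal Haar kernels with the factor $1/\sqrt{2}$ and an image with even height and width, and the function name `haar_dwt2` is hypothetical. The kernels are applied first along the horizontal axis and then along the vertical axis, and the sub-bands are named as in the text (LH horizontal, HL vertical).

```python
import numpy as np


def haar_dwt2(image):
    """Single-level 2D Haar DWT with orthonormal 1/sqrt(2) kernels (sketch).

    Returns (LL, LH, HL, HH); each sub-band is half the height and width
    of the input. LH holds horizontal detail, HL vertical detail.
    """
    x = np.asarray(image, dtype=float)
    if x.ndim != 2 or x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("expected a 2D image with even height and width")
    s = 1.0 / np.sqrt(2.0)
    # Filter along the horizontal axis by pairing adjacent columns.
    lo_x = (x[:, 0::2] + x[:, 1::2]) * s   # low-pass in x
    hi_x = (x[:, 0::2] - x[:, 1::2]) * s   # high-pass in x
    # Filter along the vertical axis by pairing adjacent rows.
    ll = (lo_x[0::2, :] + lo_x[1::2, :]) * s  # low x, low y: approximation
    lh = (lo_x[0::2, :] - lo_x[1::2, :]) * s  # low x, high y: horizontal edges
    hl = (hi_x[0::2, :] + hi_x[1::2, :]) * s  # high x, low y: vertical edges
    hh = (hi_x[0::2, :] - hi_x[1::2, :]) * s  # high x, high y: diagonal detail
    return ll, lh, hl, hh


if __name__ == "__main__":
    img = np.arange(16, dtype=float).reshape(4, 4)
    bands = haar_dwt2(img)
    print(bands[0].shape)  # (2, 2)
    # The orthonormal transform preserves the energy (sum of squares).
    print(np.isclose((img ** 2).sum(), sum((b ** 2).sum() for b in bands)))
```

For a constant image, every LL coefficient equals twice the pixel value and the three detail sub-bands vanish, which reflects that LL keeps the large-scale content. Libraries such as PyWavelets offer an equivalent transform (`pywt.dwt2(image, "haar")`), although their sub-band ordering and sign conventions may differ from this sketch.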

Figure 2.

Decomposition of a grey-level image from YaleB [22] into four wavelet sub-band images.

2.2. Training Wavelet Dictionary Design

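Consider a set of $N$ training images distributed in $k$ different classes. Denote the $i$-th class of the training images by $C_i$ and the label of this class by $l_i$, for $i = 1, \dots, k$. We indicate the images in class $C_i$ by $I_{ij} \in \mathbb{R}^{r_{ij} \times c_{ij}}$, where $r_{ij}$ denotes the number of rows (height) of the image and $c_{ij}$ denotes the number of columns (width), for $j = 1, \dots, n_i$, where $n_i$ denotes the number of images in class $C_i$. For the subsequent steps, we resize the entire set of training images such that $r_{ij} = r$ and $c_{ij} = c$ for $i = 1, \dots, k$ and $j = 1, \dots, n_i$.

In the next step, using the 2D DWT, we decompose every image $I_{ij}$, for $i = 1, \dots, k$ and $j = 1, \dots, n_i$, into the four wavelet sub-bands LL, HL, LH, and HH. Following the idea introduced in [19], we flatten every image into a vector, where, for every image pixel, there is an LL wavelet value. Hence, we map every image $I_{ij}$ to a vector of wavelet coefficients $w_{ij} \in \mathbb{R}^{d}$, which has $d$ entries and the label $l_i$. Therefore, the class $C_i$ can be presented as the matrix $W_i \in \mathbb{R}^{n_i \times d}$, with its extended form being $(W_i \mid L_i)$, where

$$W_i = \begin{pmatrix} w_{i1} \\ \vdots \\ w_{in_i} \end{pmatrix}, \qquad L_i = \begin{pmatrix} l_i \\ \vdots \\ l_i \end{pmatrix} \in \mathbb{R}^{n_i}, \qquad i = 1, \dots, k.$$

If we vertically concatenate all training wavelet matrices $W_i$, we obtain the matrix

$$W = \begin{pmatrix} W_1 \\ \vdots \\ W_k \end{pmatrix} \in \mathbb{R}^{N \times d}.$$

Note that $N = \sum_{i=1}^{k} n_i$, where $n_i$, for $i = 1, \dots, k$, is the cardinality of the $i$-th training class. We call the matrix $W$ the training wavelet dictionary (TWD); its extended form with labels is $(W \mid L)$, where the vector of labels is $L = (L_1^{T}, \dots, L_k^{T})^{T}$.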
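A minimal sketch of the TWD construction follows. It assumes NumPy, images whose height and width are even, and integer labels 0, 1, ..., k-1 standing in for the class labels. As a further assumption, it uses the decimated LL sub-band of a single-level Haar DWT, so each vector has one quarter as many entries as the image has pixels; the text's vectorization (one LL value per pixel) may instead rely on an undecimated transform. The helper names `haar_ll` and `build_twd` are hypothetical.

```python
import numpy as np


def haar_ll(image):
    """LL sub-band of a single-level orthonormal Haar DWT (sketch).

    Each coefficient equals the sum of a 2x2 pixel block divided by 2.
    """
    x = np.asarray(image, dtype=float)
    if x.ndim != 2 or x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("expected a 2D image with even height and width")
    return (x[0::2, 0::2] + x[0::2, 1::2] + x[1::2, 0::2] + x[1::2, 1::2]) / 2.0


def build_twd(classes):
    """Build the training wavelet dictionary (TWD) and its label vector.

    classes: list of k sequences; class i holds its images, all resized
    to the same r x c. Returns the TWD (one flattened LL vector per row),
    the label vector, and the list of per-class matrices.
    """
    class_matrices, labels = [], []
    for label, images in enumerate(classes):
        # One row per image: the flattened LL coefficients.
        W_i = np.vstack([haar_ll(img).ravel() for img in images])
        class_matrices.append(W_i)
        labels.extend([label] * W_i.shape[0])
    # Vertical concatenation of the class matrices gives the TWD.
    return np.vstack(class_matrices), np.array(labels), class_matrices
```

Keeping the per-class matrices alongside the stacked TWD is a convenience for the next step, where each class matrix is modified separately.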

3. Distilling a Dictionary from the TWD

3.1. Modifying the Wavelet-Based Class Matrices

Consider the TWD $W$, constructed from the vectors of wavelet coefficients $w_{ij}$, $i = 1, \dots, k$ and $j = 1, \dots, n_i$. Denote $w_{ij} = (w_{ij1}, \dots, w_{ijd})$, where $w_{ijp}$ represents a wavelet coefficient, which we call a feature.

Analogously to [8], we construct the $p$-th feature vector of the $i$-th class, $f_{ip} = (w_{i1p}, \dots, w_{in_ip})$, such that $p = 1, \dots, d$ and $i = 1, \dots, k$. We then denote the mean of the feature vector $f_{ip}$ by $\bar{f}_{ip}$ and the rank of the matrix $W_i$ by $\rho_i$. Then, we normalize every LL wavelet coefficient as described in [8].

Further, we calculate the covariance matrix, which measures the linear relationship between the features in the matrix (class) $W_i$ [17], for $i = 1, \dots, k$. For this purpose, we calculate every entry of the covariance matrix of the class, which we denote by $\Sigma_i$, as in [8].

In the next stage, we determine the eigenvectors $v_q$ and the eigenvalues $\lambda_q$ of $\Sigma_i$ from the equation below, where $E$ is the unit matrix:

$$(\Sigma_i - \lambda_q E)\, v_q = 0.$$

Let us consider the eigenpairs $(\lambda_q, v_q)$ of $\Sigma_i$. Recall that $d$ is the number of features in $W_i$, while $\rho_i$ is its rank, and that the number of classes in $W$ is $k$. We now select the integer $m$ and find the $m$ largest eigenvalues $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_m$ as well as their corresponding eigenvectors $v_1, \dots, v_m$. Then, we construct the matrix $V_i$ from these eigenvectors, arranged according to decreasing eigenvalues from right to left.

Here, $m$ is the user-selected integer. The idea behind the construction of the matrix $V_i$ is that the larger the eigenvalue, the stronger the influence of its eigenvector.

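Recall that the class matrix is $W_i \in \mathbb{R}^{n_i \times d}$. Therefore, the matrix product below,

$$T_i = V_i^{T} W_i, \tag{6}$$

maps the matrix $W_i$ of the class $C_i$ to a transformed matrix $T_i$, for $i = 1, \dots, k$. The next left product, with the matrix $V_i$, maps the transformed matrix $T_i$,

$$W_i^{*} = V_i T_i = V_i V_i^{T} W_i, \tag{7}$$

back into the original space, such that $W_i^{*}$ has the same size as $W_i$. Note that unless $V_i V_i^{T} = E$, where $E$ is the unit matrix, the new matrix $W_i^{*} \neq W_i$. Hence, we call the matrix $W_i^{*}$ the modified matrix of the $i$-th training class.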

Please note that the modified version of the PCA method described above decreases the number of vectors in a set, while the original PCA decreases the dimension of the vectors in the set.
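The modification of a single class by the two left multiplications of Equations (6) and (7) can be sketched as follows. This is a hypothetical illustration, not the authors' implementation. It assumes that the covariance matrix is formed between the wavelet vectors of the class (a square matrix whose size equals the number of vectors in the class), which is the orientation that lets the eigenvector matrix left-multiply the class matrix, and it omits the normalization step of [8]. The function name `mpca_modify` is hypothetical.

```python
import numpy as np


def mpca_modify(W_i, m):
    """Sketch of the M-PCA modification of one class matrix.

    W_i: n_i x d array with one LL wavelet vector per row; 1 <= m <= n_i.
    ASSUMPTION: the covariance is taken between the n_i row vectors
    (an n_i x n_i matrix); the normalization of [8] is not reproduced.
    """
    W_i = np.asarray(W_i, dtype=float)
    n_i = W_i.shape[0]
    if not 1 <= m <= n_i:
        raise ValueError("m must satisfy 1 <= m <= n_i")
    # np.cov treats every row as one variable and every column as an observation.
    sigma = np.atleast_2d(np.cov(W_i))
    eigvals, eigvecs = np.linalg.eigh(sigma)   # symmetric solver, ascending order
    top = np.argsort(eigvals)[::-1][:m]        # indices of the m largest eigenvalues
    V_i = eigvecs[:, top]                      # n_i x m, orthonormal columns
    T_i = V_i.T @ W_i                          # Eq. (6): m x d transformed matrix
    return V_i @ T_i                           # Eq. (7): n_i x d modified matrix
```

Because the product of the eigenvector matrix with its transpose does not depend on the order of the columns, the right-to-left arrangement of the eigenvectors does not change the result of this sketch. When m equals the number of vectors in the class, the eigenvectors form a full orthonormal basis and the class matrix is returned unchanged; for smaller m, the modified matrix has rank at most m.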

3.2. Creating a Distilled Wavelet Dictionary

Recall that we vertically concatenated the original classes of wavelet vectors to obtain the training wavelet dictionary (TWD) $W$. If we do the same with the modified training matrices (classes) $W_i^{*}$, for $i = 1, \dots, k$, we obtain the modified training wavelet dictionary

$$W^{*} = \begin{pmatrix} W_1^{*} \\ \vdots \\ W_k^{*} \end{pmatrix}, \qquad W_i^{*} = \begin{pmatrix} w_{i1}^{*} \\ \vdots \\ w_{in_i}^{*} \end{pmatrix}.$$

Note that in the present notation of vectors, the superscript $*$ denotes that the vectors are modified.

Recall also that the modified training wavelet matrices $W_i^{*}$ are obtained by two left multiplications (see Equations (6) and (7)) of the original training wavelet matrices $W_i$, for $i = 1, \dots, k$. Since the multiplication of matrices does not change the order of the rows, it follows that the rows in $W_i^{*}$ are modified versions of the rows in $W_i$.

To create the distilled training wavelet dictionary (DWD), we select the upper $m$ vector rows from every modified matrix (class) $W_i^{*}$ of training wavelet vectors, for $i = 1, \dots, k$. Concatenating the $k$ selected sets of $m$ modified vectors, we obtain the distilled wavelet dictionary (DWD):

$$\tilde{W} = \begin{pmatrix} \tilde{W}_1 \\ \vdots \\ \tilde{W}_k \end{pmatrix}, \qquad \tilde{W}_i = \begin{pmatrix} w_{i1}^{*} \\ \vdots \\ w_{im}^{*} \end{pmatrix}.$$

Note that $\tilde{W}_i$ contains only the $m$ upper rows (vectors) selected from the matrix $W_i^{*}$ of the modified training wavelet class. Thus, the number of training vectors (rows) in the DWD is $km$, while the number of vectors in the modified training wavelet dictionary is $N$. Also, the selected vectors $w_{ij}^{*}$, $j = 1, \dots, m$, belong to $W_i^{*}$, which complies with $m < n_i$ for every $i$.
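Selecting the upper m rows of every modified class and stacking them into the DWD can be sketched as below. The sketch assumes NumPy arrays as input and integer class labels 0, 1, ..., k-1; `build_dwd` is a hypothetical name.

```python
import numpy as np


def build_dwd(modified_classes, m):
    """Distilled wavelet dictionary: the upper m rows of every modified class.

    modified_classes: list of k arrays (one modified class matrix each,
    n_i x d); m must not exceed the smallest n_i.
    Returns the DWD (k*m x d) and its label vector (length k*m).
    """
    blocks, labels = [], []
    for label, W_star in enumerate(modified_classes):
        W_star = np.asarray(W_star, dtype=float)
        if W_star.shape[0] < m:
            raise ValueError("m exceeds the number of vectors in a class")
        blocks.append(W_star[:m])      # keep the upper m rows of this class
        labels.extend([label] * m)
    # Vertical concatenation of the k selected blocks gives the DWD.
    return np.vstack(blocks), np.array(labels)
```

In a full pipeline, the list passed in would hold the modified class matrices, for example the outputs of an M-PCA step applied to each class.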

4. Experimental Validation

In the present section, we first introduce the sparse representation wavelet-based classification (SRWC) method, which we used to measure the classification statistics when training with the original and the distilled training dictionaries. Recall that the set of distilled training images is smaller than the set of original images.

Next, we introduce the image databases and the metrics used for evaluation. In the last subsection, we describe the experimental procedure and the results obtained by the SRWC method when using the original TWD and the DWD.

4.1. Sparse Representation Wavelet-Based Classification

The sparse representation wavelet-based classification (SRWC) method [19] operates in the wavelet domain and constructs a feature dictionary from the training samples. Paper [19] demonstrates that using features exclusively from the low-pass sub-band (LL) wavelet coefficients can significantly enhance image classification performance. Hence, we used the LL coefficients to generate the TWD.

The preprocessing for the TWD design included several steps: labeling the $k$ training classes and conducting principal component analysis (PCA) to reduce the dimensionality of each vectorized sample, called an “atom”. As shown in Section 2.2, for each class $i$, the vectorized training atoms $w_{ij} \in \mathbb{R}^{d}$, $j = 1, \dots, n_i$, form a sub-dictionary matrix $W_i$, where $d$ represents the dimension of each vectorized training atom and $n_i$ denotes the number of atoms in the class. Aggregating the atoms from the $k$ categories (classes), the comprehensive wavelet training matrix $W$ of original vectors was constructed and modified to $W^{*}$ with Equation (7), where every modified class $W_i^{*}$ was distilled to $\tilde{W}_i$.

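Based on the assumption of sparse representation, let $D$ denote the dictionary in use (the TWD $W$ or the DWD $\tilde{W}$), whose rows are training atoms, and let $D_i$ be its block of rows for class $i$. A new unknown test atom $y \in \mathbb{R}^{d}$ was associated with a specific class $i$ if it could be represented as a sparse linear combination of the atoms of this class:

$$y \approx D_i^{T} \alpha_i,$$

where $\alpha_i$ constitutes the sparse vector of coefficients corresponding to class $i$. The sparse classification methodology centers on identifying the set of training atoms that best approximates $y$. This was accomplished by determining a sparse approximation vector $\hat{x}$, whose non-zero entries are associated with the class, by solving the following Lasso optimization problem [19]:

$$\hat{x} = \arg\min_{x} \; \frac{1}{2}\left\lVert y - D^{T} x \right\rVert_2^2 + \lambda \lVert x \rVert_1,$$

where $\lambda > 0$ is a regularization parameter. The classification of the unknown vector $y$ was ultimately determined by analyzing the non-zero coefficients of $\hat{x}$. The final classification minimizes the discrepancy between the sparse reconstruction and the original sample:

$$\operatorname{class}(y) = \arg\min_{i = 1, \dots, k} \left\lVert y - D^{T} \delta_i(\hat{x}) \right\rVert_2,$$

where $\delta_i$ is a characteristic function that keeps the elements of $\hat{x}$ associated with class $i$ and sets all other elements to zero.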
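The classification rule above can be illustrated with a small NumPy sketch. It is not the SRWC code of [19]: as an assumption, the Lasso problem is solved with the iterative shrinkage-thresholding algorithm (ISTA), the atoms are normalized to unit length, and the PCA preprocessing step is omitted. Atoms are stored as rows, as in the TWD and DWD, and are transposed into columns before solving. The names `lasso_ista` and `src_classify` and the default regularization value are hypothetical.

```python
import numpy as np


def lasso_ista(A, y, lam=0.01, n_iter=500):
    """Minimise 0.5*||y - A x||_2^2 + lam*||x||_1 with ISTA.

    A: d x N matrix whose columns are dictionary atoms.
    """
    step = 1.0 / (np.linalg.norm(A, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = x - step * (A.T @ (A @ x - y))                         # gradient step
        x = np.sign(z) * np.maximum(np.abs(z) - lam * step, 0.0)  # soft threshold
    return x


def src_classify(dictionary, labels, y, lam=0.01):
    """Assign y to the class whose coefficients give the smallest residual.

    dictionary: N x d array with one atom per row (as in the TWD or DWD).
    """
    A = np.asarray(dictionary, dtype=float).T
    norms = np.linalg.norm(A, axis=0)
    A = A / np.where(norms > 0, norms, 1.0)   # unit-norm atoms
    y = np.asarray(y, dtype=float)
    labels = np.asarray(labels)
    x_hat = lasso_ista(A, y, lam)
    classes = np.unique(labels)
    # delta_i(x_hat): keep the coefficients of class i and zero all others.
    residuals = [np.linalg.norm(y - A @ np.where(labels == c, x_hat, 0.0))
                 for c in classes]
    return classes[int(np.argmin(residuals))]
```

ISTA is used here only because it needs nothing beyond NumPy; a dedicated Lasso solver, such as coordinate descent, would normally converge faster on dictionaries with thousands of atoms.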

4.2. Original Datasets

To experimentally validate the new image distillation method based on the DWT, LL wavelet coefficients, and M-PCA methods, we used the public image databases YaleB [22], digit-MNIST [24], and ISIC2020 [25].

The YaleB [22] database contains 2414 frontal-face images of 38 individuals. Therefore, the database consists of 38 classes, and every class contains 64 pictures taken under different illumination conditions.

The public image database digit-MNIST [24] consists of 60,000 hand-written digit images for training and 10,000 hand-written digit images for testing. Both the training and testing sets are split into 10 distinct classes. Every image has a size of 28 × 28 pixels.

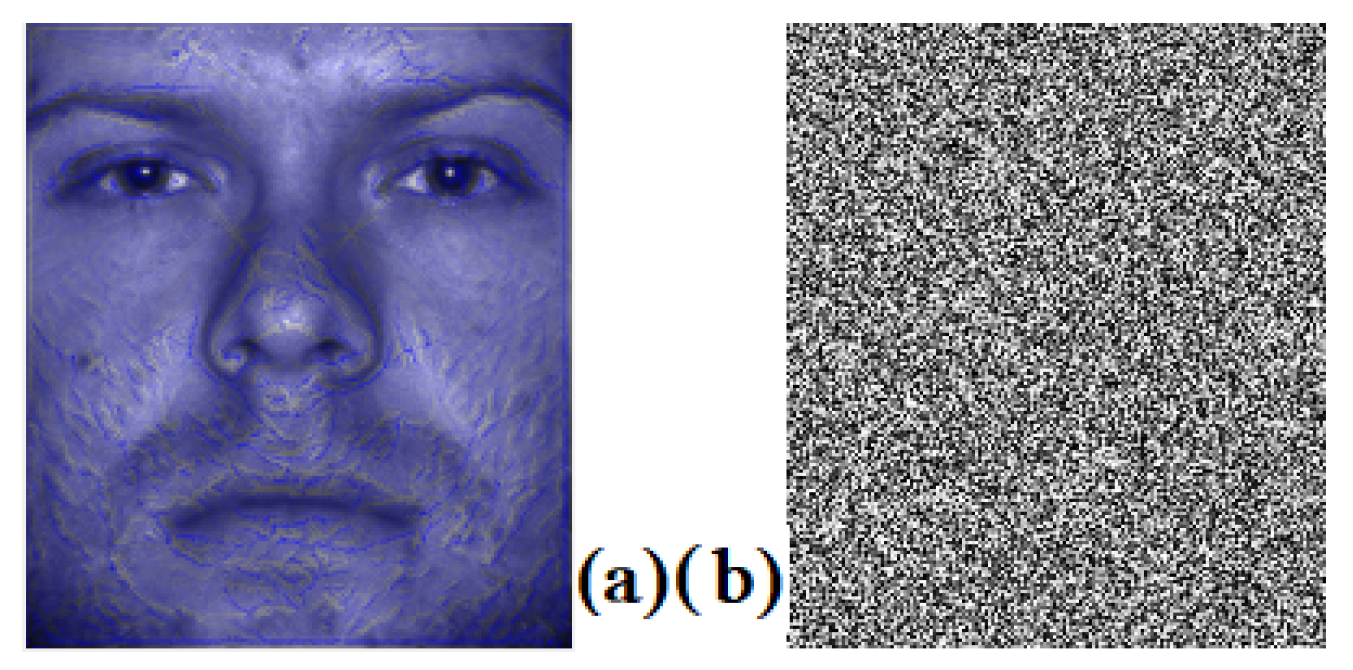

The skin lesion image database ISIC2020 [25] contains 33,126 training images and 10,982 unlabeled benign and malignant images for testing. The training samples are split into 32,542 benign and 584 malignant images. Experienced clinicians and dermatologists labeled the training images. The original image sizes vary between 480 and 6000 rows and between 640 and 6000 columns. To standardize the images, we resized them to two fixed sizes. In Figure 3, we show a resized original training sample from ISIC2020. On the right side of the same figure, we show original training samples from digit-MNIST.

Figure 3.

On the left side of the figure, we show a resized original training skin lesion image from ISIC2020 [25]. On the right side, we show original training samples from digit-MNIST [24].

4.3. Classification Metrics

The notations TP = True Positives, TN = True Negatives, FP = False Positives, and FN = False Negatives describe the outcomes of a classification method. They are used to calculate accuracy, the most commonly used metric for evaluating the effectiveness of classification.

- Accuracy (Ac): measures the overall correctness of a model’s predictions:

$$\mathrm{Ac} = \frac{TP + TN}{TP + TN + FP + FN}.$$
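As a small worked example of the formula, the first helper below evaluates Ac from the four counts. For the multi-class experiments (38 YaleB classes, 10 digit-MNIST classes), we assume that accuracy reduces to the fraction of correctly labeled test images, which the second helper computes. Both function names are hypothetical.

```python
def accuracy(tp, tn, fp, fn):
    """Ac = (TP + TN) / (TP + TN + FP + FN) for a binary confusion matrix."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("empty confusion matrix")
    return (tp + tn) / total


def multiclass_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


print(accuracy(tp=40, tn=50, fp=5, fn=5))  # 0.9
```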

4.4. Experimental Schemes

Our experimental scheme consisted of two major stages. In the first one, we distilled m images per class by applying the DWT [20], LL, and M-PCA [8] methods. In the next stage, we trained the SRWC method with original images and classified original testing images. Afterward, we trained the same classifier under the same conditions and parameters but with distilled samples, and classified distilled testing images (Case 1) or original testing images (Case 2 and Case 3, defined in Section 4.5).

If the training or testing was conducted with the entire original or distilled training/testing image dataset, a single classification was performed. If, however, a smaller number of original or distilled training or testing images was used, we implemented the Monte Carlo scheme [27] to select the necessary set for the experiments. This scheme increases the statistical objectivity of the classification results and consists of $n$ runs of the classifier under the same conditions and parameters but with different sets of training samples. At the end, the average of the experimental outcomes was calculated and used for comparisons. In most cases, $n = 5$ or $n = 10$ runs were used, which we refer to as 5-fold or 10-fold cross-validation, respectively.
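The Monte Carlo scheme can be sketched as repeated random subset selection followed by averaging. This is a minimal illustration using only the Python standard library; `monte_carlo_score` and the `evaluate` callback (for example, a function that trains and tests the SRWC classifier on the drawn subset) are hypothetical names.

```python
import random
from statistics import mean


def monte_carlo_score(evaluate, pool, subset_size, n_runs=5, seed=None):
    """Average `evaluate` over n_runs independently drawn random subsets of `pool`.

    evaluate: callable mapping a list of samples (e.g. images) to a score.
    """
    items = list(pool)
    if not 0 < subset_size <= len(items):
        raise ValueError("subset_size must be in 1..len(pool)")
    rng = random.Random(seed)  # seeded generator for reproducible runs
    scores = [evaluate(rng.sample(items, subset_size)) for _ in range(n_runs)]
    return mean(scores)
```

Because every run draws its subset independently, subsets from different runs may overlap, unlike the disjoint folds of classical k-fold cross-validation.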

4.5. Experimental Results

To validate our new method for training image dataset distillation, based on the DWT [20], LL wavelet coefficients, and M-PCA [8], we implemented it in Python alongside the SRWC method [19]. Using this implementation, we conducted three kinds of experiments with the public image databases described in Section 4.2.

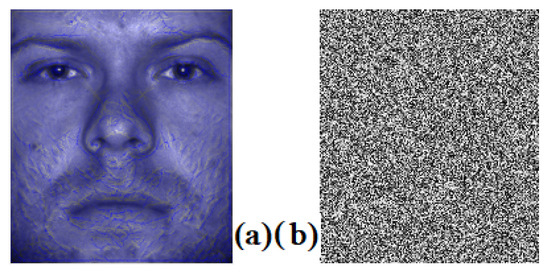

In the first kind of experiment, Case 1, we distilled images from the union of the training and testing vectors of every class (recall that every image was represented as a vector of LL wavelet coefficients). Then, we split the distilled vectors (images) of every class into training and testing sets. Further, we created the DWD by concatenating the distilled training vectors from all classes. Similarly, we merged the distilled testing vectors from all classes to form the test set for each experiment. The experimental results for four different numbers of distilled vectors (images) from the YaleB image database are presented in Table 1. An image from this database and a sample from its DWD are shown in Figure 4.

Table 1.

Accuracy (Ac) results obtained by the SRWC method [19] applied to the original YaleB image database and classification results with distilled images from each of the 38 classes of YaleB, implementing Case 1 and Case 2. In the Ac column, the highest value is in bold.

Figure 4.

(a) Image from YaleB [22]; (b) distilled image in DWD of the image database YaleB.

In the second kind of experiment, Case 2, we distilled training vectors (images) from the original training and testing sets of every class. We used the distilled vectors to construct the DWD, which we applied to train the SRWC [19] method and to classify a randomly selected subset of original testing vectors (images). The selection was conducted with the Monte Carlo scheme [27]. The results obtained with the distilled images in Case 2 are presented at the bottom of Table 1, which shows the classification results when training with distilled images from YaleB.

In the distillation Case 3, we distilled training vectors (images) only from the original training set of every class. Then, we created the DWD, trained the SRWC method, and classified the original testing vectors (images). The experimental results for this setup with the image databases digit-MNIST and ISIC2020 are shown in Table 2 and Table 3, respectively.

Table 2.

Accuracy (Ac) results obtained by SRWC [19] for the images distilled from digit-MNIST implementing the distillation described in Case 3. In the Ac column the highest value is in bold.

Table 3.

The leftmost and 2nd columns show the results of classifying the 10,982 original ISIC2020 testing images with the SRWC method. The 3rd column shows the results when the original training and testing images are sharpened with the Laplace operator. All results are given in the “score” metric evaluated on the ISIC2020 web page [25]. The highest value is in bold.

5. Discussion

Examining the results in Table 1 for the Case 1 distillation and classification, we observe that the accuracy (Ac) achieved with each of the four distilled sets was higher than the Ac obtained with the original dataset. Note that the number of distilled training images was the same (30) in all experiments, while the set of distilled testing images decreased. The largest Ac was achieved in the 3rd experiment, when we distilled 1444 images out of the 2432 original images.

These results suggest that distilling from the combined training and testing sets improved classification accuracy, even when the total number of images used was reduced. However, this approach was feasible only if the distilled training images could be identified, as demonstrated with the SRWC method.

Recall that in Case 2, we distilled images from both the training and testing sets, used the distilled images for training, and tested a set of original images. The experiment for this case with the YaleB dataset is presented at the bottom of Table 1. Note that the 18 training images per class were distilled from the 64 original images of the same class. Then, 26 original testing images per class were selected, with the Monte Carlo scheme [27], from the original images of the class under consideration. The obtained Ac was lower than the accuracies achieved for Case 1 in Table 1. We attribute this drop to the smaller number of distilled training images and to the fact that the SRWC method was trained with distilled images but classified original ones.

In Case 3, we conducted two classifications with distilled images from digit-MNIST. In the first experiment, we distilled 157 training images per class from the 6000 original images of the same class. Further, applying the Monte Carlo scheme [27], we selected 1000 original images from the digit-MNIST testing set and classified them using the DWD. We obtained Ac = 95.25%. In the second experiment, we repeated the above but distilled 94 images per class and obtained Ac = 93.24%.

These two results are many times higher than those obtained when 600 and 1000 original images per class were used for training. The poor SRWC classification performance with the original digit-MNIST images was likely due to the small size of the original images (28 × 28 pixels), which do not exhibit sufficient image features. The distilled images have the same size but are “filled” with more condensed information, which led to higher classification accuracy.

Further, we conducted additional experiments distilling images from the training set of ISIC2020 [25] under the conditions of Case 3, described in Section 4.5. In the first experiment, we distilled 584 benign and 292 malignant skin lesion images from the original 32,542 benign and 584 malignant training images, respectively. In the second experiment, we distilled 7704 benign and 523 malignant images from the ISIC2020 training set. In both experiments, we trained the SRWC method with the DWDs created from the distilled sets and classified the 10,982 original images of the ISIC2020 testing set. The classification scores are presented in Table 3.

The score measure was provided by the International Skin Imaging Collaboration (ISIC) [25]. It is the area under the ROC (receiver operating characteristic) curve, calculated after we uploaded our classification results to the ISIC [25] web page.
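Since the ISIC score is an area under the ROC curve, a rough local check is possible whenever labels are known, for example on a held-out part of the labeled training set. The sketch below uses the rank interpretation of this area: the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign one, with ties counted as one half. How the ISIC server computes its score is not described here, so this is an assumption-based illustration; the function name `roc_auc` is hypothetical.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via its rank (pairwise comparison) interpretation.

    labels: 1 = malignant (positive), 0 = benign (negative).
    scores: higher values mean "more likely malignant".
    Ties between a positive and a negative sample count as 1/2.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The double loop performs one comparison per positive/negative pair, which is acceptable for illustration; sorting-based implementations are preferable for large test sets.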

Comparing the new DWT-LL-M-PCA distillation method with the ML-based distillation methods presented in [4,5] on the digit-MNIST image database, one may see that the ML-based methods provide higher accuracy. For example, the Kip ScatterNet from [4] achieves Ac = 99% when trained with 10 images distilled from every digit-MNIST class and Ac = 99.4% when trained with 50 images distilled from every class.

At the same time, the SRWC [19] gives Ac = 95.25% and Ac = 93.24% when trained with 157 and 94 images distilled by the DWT-LL-M-PCA method from every class, respectively. Note that our method takes a single pass through the original data to create the DWD and a single pass through the training data to conduct the classification with the DWD.

Note that the Kip ScatterNet takes up to 2000 epochs (2000 passes through the data) to distill 50 images from every digit-MNIST class [14]. This is a time-consuming procedure that requires large computer resources, even though the images are very small (28 × 28 pixels in digit-MNIST). If the images to distill from are large, as in the case of ISIC2020 [25], the required computer resources may increase considerably. In [29], the authors used V100 GPUs; the number of GPUs needed was later reduced with the method presented in [14]. However, [14] does not provide details regarding the computational complexity or the run time of distillation, whereas our new method distilled 157 images per class (1570 images in total) in about 600 seconds on a regular desktop computer.

6. Conclusions

The main novelty of this study is the development of a new image distillation method that utilizes the discrete wavelet transform (DWT), low-band wavelet (LL) coefficients, and a modified principal component analysis method (M-PCA). The DWT-LL-M-PCA method generates the DWD, which trains the SRWC method and can be used to train any other machine learning (ML) method. Thus, a smaller training image dataset is distilled from a larger original one, with the advantage of increased classification accuracy.

Compared to the method presented in [8], which distills vectors, the newly proposed DWT-LL-M-PCA method distills images. Also, the images distilled by the new method are used alone for training and form the DWD, which provides higher accuracy than that achieved with the entire original training set. In contrast, the method published in [8] distills vectors that are not efficient when used alone for training ML methods; they provide high and competitive classification accuracy only when added to the original set of vectors.

Studying the classification results in Table 1, Table 2 and Table 3, one may observe that the user-selected number m of vectors (images) to be distilled affects the classification statistics. Finding the optimal m is the subject of a full-length investigation and will be developed in a new publication. Other future avenues of research are to integrate k-means or another ML technique to enhance the distillation from the modified training wavelet dictionary and investigate the distillation behavior of our method when applied to databases with noisy, degraded, blurred, or sharp images and/or images with distorted or sheared objects.

Author Contributions

Conceptualization, N.M.S.; methodology, N.M.S.; software, L.H.N.; validation, L.H.N.; formal analysis, N.M.S. and L.H.N.; resources, N.M.S. and L.H.N.; data curation, L.H.N.; writing—original draft preparation, N.M.S.; writing—review and editing, N.M.S. and L.H.N.; supervision, N.M.S.; project administration, N.M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The code for the SRWC method used for image classification is given at the website below in Software Downloads. The links to the image databases ISIC2020 [25], YaleB [22], and digit-MNIST [24], are located in Databases Downloads, Links to Original Databases: https://www.tamuc.edu/projects/augmented-image-repository/?redirect=none (accessed on 23 March 2025).

Conflicts of Interest

Author Long H. Ngo was employed by the Smile company. He conducted the present research in his own time outside of work and has no conflict of interest. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ML | Machine learning |

| LL | Low-band wavelet coefficients |

| M-PCA | Modified principal component analysis |

| SRWC | Sparse representation wavelet-based classification |

| DWT | Discrete wavelet transform |

| TWD | Training wavelet dictionary |

| DWD | Distilled wavelet dictionary |

References

- Zhou, L.; Pan, S.; Wang, J.; Vasilakos, A.V. Machine learning on big data: Opportunities and challenges. Neurocomputing 2017, 237, 350–361.

- Leevy, J.L.; Khoshgoftaar, T.M.; Bauder, R.A.; Naeem, S. A survey on addressing high-class imbalance in big data. J. Big Data 2018, 5, 1–30.

- Nguyen, T.; Chen, Z.; Lee, J. Dataset meta-learning from kernel ridge-regression. In Proceedings of the International Conference on Learning Representations, ICLR, Vienna, Austria, 4 May 2024.

- Sachdeva, N.; McAuley, J. Data Distillation: A Survey. Trans. Mach. Learn. Res. 2023, 7, 1–21.

- Radosavovic, I.; Dollár, P.; Girshick, R.; Gkioxari, G.; He, K. Data Distillation: Towards Omni-Supervised Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4119–4128.

- Yang, Z.; Yang, H.; Majumder, S.; Cardoso, J.; Gallego, G. Data Pruning Can Do More: A Comprehensive Data Pruning Approach for Object Re-identification. Trans. Mach. Learn. Res. 2024, 2835–8856. Available online: https://openreview.net/forum?id=vxxi7xzzn7 (accessed on 27 December 2024).

- Yang, S.; Xie, Z.; Peng, H.; Xu, M.; Sun, M.; Li, P. Dataset Pruning: Reducing Training Data by Examining Generalization Influence. In Proceedings of the 2023 International Conference on Learning Representations, ICLR, Kigali, Rwanda, 1–5 May 2023.

- Sirakov, N.M.; Shahnewaz, T.; Nakhmani, A. Training Data Augmentation with Data Distilled by the Principal Component Analysis. Electronics 2024, 13, 282.

- Yu, X.; Teng, L.; Zhang, D.; Zheng, J.; Chen, H. Attention correction feature and boundary constraint knowledge distillation for efficient 3D medical image segmentation. Expert Syst. Appl. 2025, 262, 125670.

- Zhang, W.; Zhang, Y.; Huang, F.; Qi, X.; Wang, L.; Liu, X. Negative-Core Sample Knowledge Distillation for Oriented Object Detection in Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2024, 62, 56486182.

- Li, X.; Huang, G.; Cheng, L.; Zhong, G.; Liu, W.; Chen, X.; Cai, M. Cross-domain visual prompting with spatial proximity knowledge distillation for histological image classification. J. Biomed. Inform. 2024, 158, 104728.

- Zhao, B.; Mopuri, K.R.; Bilen, H. Dataset Condensation with Gradient Matching. In Proceedings of the International Conference on Learning Representations, ICLR, Vienna, Austria, 4 May 2021.

- Wang, T.; Zhu, J.-Y.; Torralba, A.; Efros, A. Dataset Distillation. arXiv 2020, arXiv:1811.10959v3.

- Vinaroz, M.; Park, M. Differentially private kernel inducing points using features from scatternets (DP-KIP-scatternet) for privacy preserving data distillation. arXiv 2024, arXiv:2301.13389. Available online: https://arxiv.org/pdf/2301.13389v2 (accessed on 15 January 2025).

- Cazenavette, G.; Wang, T.; Torralba, A.; Efros, A.A.; Zhu, J.Y. Dataset distillation by matching training trajectories. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4750–4759.

- Hotelling, H. Analysis of a complex of statistical variables into principal components. J. Educ. Psychol. 1933, 24, 417.

- Abdi, H.; Williams, L.J. Principal component analysis. WIREs Comput. Stat. 2010, 2, 433–459.

- Ngo, L.H.; Luong, M.; Sirakov, N.M.; Viennet, E.; Le-Tien, T. Skin lesion image classification using sparse representation in quaternion wavelet domain. Signal Image Video Process. 2022, 16, 1721–1729.

- Ngo, L.H.; Luong, M.; Sirakov, N.M.; Le-Tien, T.; Guerif, S.; Viennet, E. Sparse Representation Wavelet Based Classification. In Proceedings of the International Conference in Image Processing, ICIP2018, Athens, Greece, 7–10 October 2018; IEEE Xplore: Piscataway, NJ, USA, 2018; pp. 2974–2978.

- Mallat, S. A Wavelet Tour of Signal Processing: The Sparse Way; Academic Press: Cambridge, MA, USA, 2008.

- Zou, W.; Li, Y. Image classification using wavelet coefficients in low-pass bands. In Proceedings of the International Joint Conference on Neural Networks, IJCNN, Orlando, FL, USA, 12–17 August 2007; IEEE Xplore: Piscataway, NJ, USA, 2007; pp. 114–118.

- Georghiades, A.S.; Belhumeur, P.N.; Kriegman, D. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 643–660.

- Kadam, S.S.; Adamuthe, A.C.; Patil, A.B. CNN model for image classification on MNIST and fashion-MNIST dataset. J. Sci. Res. 2020, 64, 374–384.

- General Site for the MNIST Database. Available online: http://yann.lecun.com/exdb/mnist (accessed on 12 September 2024).

- International Skin Imaging Collaboration. SIIM-ISIC 2020 Challenge Dataset. Internat. Skin Imaging Collaboration. Available online: https://challenge2020.isic-archive.com/ (accessed on 17 May 2023).

- Guo, T.; Mousavi, H.S.; Vu, T.H.; Monga, V. Deep wavelet prediction for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Workshops, Honolulu, HI, USA, 21–26 July 2017.

- Dubitzky, W.; Granzow, M.; Berrar, D.P. Fundamentals of Data Mining in Genomics and Proteomics; Springer: Boston, MA, USA, 2007.

- Sirakov, N.M.; Bowden, A.; Chen, M.; Ngo, L.H.; Luong, M. Embedding vector field into image features to enhance classification. J. Comput. Appl. Math. 2024, 44, 115685.

- Nguyen, T.; Novak, R.; Xiao, L.; Lee, J. Dataset distillation with infinitely wide convolutional networks. In Proceedings of the NeurIPS 2021, 35th Annual Conference on Neural Information Processing Systems, Virtual Conference, 7–10 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P.S., Vaughan, J.W., Eds.; Curran Associates Inc.: Red Hook, NY, USA; Volume 34, pp. 5186–5198.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).