Abstract

Few-shot relation extraction is a critical task in natural language processing. Its aim is to train a model from a limited number of labeled samples when labeled data are scarce, so that the model can rapidly learn to identify relationships between entities in text. Prototypical networks are widely used in few-shot relation extraction for their simplicity and efficiency. However, prototypical networks derive each prototype by averaging the features of the instances within a category. When the number of instances is small, the prototype may not adequately represent the true category centroid, which diminishes classification accuracy. In this paper, we propose an approach for few-shot relation extraction that leverages instances from the query set to enhance the construction of prototypes from the support set. The weights of the support instances are assigned dynamically by quantifying the semantic similarity between sentences. This strengthens the emphasis on critical samples and mitigates the bias that arises when class prototypes are computed as a plain mean over very few instances. Furthermore, an adaptive fusion module is introduced to integrate prototype and relational information more deeply, resulting in more accurate prototype representations. Extensive experiments have been performed on the widely used FewRel benchmark dataset. The experimental findings demonstrate that our AIRE model surpasses the existing baseline models, reaching accuracies of 91.53% and 86.36% on the 5-way 1-shot and 10-way 1-shot tasks, respectively.

1. Introduction

Relation extraction [1] is a foundational task in natural language processing. It focuses on determining and representing relational information from unstructured text as structured triples, such as (x, e1, e2, r). Here, x denotes a sentence comprised of multiple words. e1 and e2 denote the head and tail entities in the sentence, respectively. And r is expressed as the relationship between the two entities. For instance, in the sentence “Elon Reeve Musk founded SpaceX”, the entities are “Elon Reeve Musk” and “SpaceX”, with the relation being “founded”. Relation extraction facilitates a wide range of applications, including Knowledge Graph (KG) Construction [2], Social Networking Analysis, Intelligent Question-answering Systems, and Machine Reading Comprehension, etc.

In real life, humans can learn from few-shot or even zero-shot instances because they have the ability to reason [3]. Inspired by this, few-shot learning was initially introduced within the realm of computer vision. Subsequently, it was applied to relation extraction tasks to mitigate the challenges associated with long-tail distributions. Few-shot relation extraction involves training a model on extensive labeled datasets of known relation types, followed by rapid adaptation to perform relation classification using limited data from novel relation categories. In this context, the known relation types are the relation categories that do not appear during the test phase, whereas the new relation types are the categories to which the test instances belong.

Han et al. constructed a dataset called FewRel [4]. All instances in the dataset are made up of sentences, each of which contains annotated entity pairs. Table 1 is a subset of the dataset from the 3-way 3-shot scenario. There are three relation categories, R1, R2, and R3, listed in the table’s leftmost column. Each category comprises the name and its corresponding explanation in parentheses. The rightmost column of the table represents the support set. It provides three sentences for each relation category. In a sentence, head entities are denoted by bold formatting, while tail entities are indicated through italic styling. The query set, located at the bottom of the table, is utilized to ascertain the relation type to which each query instance belongs. That is, the last row in the table serves to discern which relation the query instance belongs to among R1, R2, or R3.

Table 1.

Example of few-shot relation extraction. The relation structure comprises three relation categories, each of which contains three instances.

Owing to their simplicity and efficiency, prototypical networks have emerged as the predominant methodology employed in few-shot relation extraction tasks [5]. A prototypical network presumes that each relation category has a prototype, which is derived by calculating the mean value of the feature vectors across all instances within the support set. The relation classification of a query instance is established by measuring the Euclidean distance between the query instance and the representative prototypes of different categories. However, a limited number of instances are provided for each relation category in the support set. This can lead to deviations in the relation prototypes. Consequently, the performance of relation classification in few-shot relation extraction tasks may be impacted.

To tackle the challenges outlined above, we propose an adaptive hybrid prototypical network for interactive few-shot relation extraction (AIRE). Firstly, we integrate the labels and descriptive information of the relations into the model. This richer information improves the representation of each relation. Secondly, we incorporate the semantic knowledge from the query instances into the support instances to enhance data utilization efficiency. This helps reduce the prototype deviations caused by limited data and strengthens the representation capabilities of the prototypical networks. Furthermore, we introduce an adaptive framework for fusing relational information with prototype networks, which yields more accurate class prototypes. We performed empirical evaluations on FewRel to assess the proposed AIRE model. The experimental findings indicate that our method attains substantial performance gains relative to state-of-the-art baseline approaches.

In summary, the main contributions of this paper are as follows.

- (1) An adaptive hybrid prototypical network for interactive few-shot relation extraction is proposed. The relation labels and the relation explanations are integrated into the model as external data to assist in prototype representation. Furthermore, the model mitigates performance degradation due to data scarcity by leveraging the interactive knowledge between the query instances and the support instances;

- (2) An adaptive prototype fusion mechanism is proposed, which combines prototype networks with relational information adaptively to obtain more accurate prototypes;

- (3) The experimental results illustrate that the two mechanisms incorporated into our model play crucial roles in the proposed model, leading to significant improvements in performance.

The remainder of this paper is structured as follows. In Section 2, we analyze existing methods for few-shot relation extraction, focusing particularly on those utilizing prototypical networks. The task definition of few-shot relation extraction is elaborated in Section 3. Section 4 details the specific components of the proposed approach. Section 5 reports the experimental findings obtained on FewRel. We provide a summary of the main contributions and explore potential avenues for future work in Section 6.

2. Related Work

Supervised learning methods for relation extraction depend heavily on large volumes of high-quality annotated training instances [6,7,8,9]. Distant supervision can effectively reduce the dependence on manually annotated data [10]. However, it relies on strong assumptions, which can introduce noise into the dataset inadvertently. Moreover, there may be numerous categories with only a few instances, leading to a long-tail distribution throughout the dataset. This imbalance can diminish the effectiveness of relation extraction considerably. The introduction of the few-shot relation extraction methods can make up for the aforementioned deficiencies in a meaningful way.

In this section, we review the optimization-based and metric-based methods. Following this, we delve into the methodologies of the prototypical networks for few-shot relation extraction. Finally, we point out the limitations of existing methods and present our proposed solutions.

2.1. Optimization-Based Approaches for Few-Shot Relation Extraction

As a specialized form of relation classification tasks, few-shot relation extraction is designed to facilitate model recognition of novel relation categories using only a few labeled instances. Oriol Vinyals, a research scientist in Google DeepMind, first summarized the model-based, optimization-based, and metric-based methods of meta-learning at the NIPS 2018 Meta-Learning Workshop. Metric-based and optimization-based methods are predominant in the domain of few-shot relation extraction [11,12]. In few-shot relation extraction, optimization-based methods handle the relations between entities as distinct tasks and train a multi-class classifier to manage all possible relations. MetaNet [13] is a technique that acquires meta-level information across multiple tasks and adjusts its inductive biases through rapid parameter tuning to promote quick generalization. MAML (Model-Agnostic Meta-Learning) [14] is a flexible meta-learning framework that is applicable to any model trained using gradient descent, allowing it to tackle new learning tasks with only a few training instances. However, despite these advantages, optimization-based methods do not achieve the same level of performance as simpler metric-based techniques. As a result, most researchers have concentrated their attention on methods that are based on metrics.

2.2. Metric-Based Approaches for Few-Shot Relation Extraction

Metric-based approaches for few-shot relation extraction have attracted significant attention owing to their simplicity and efficiency. Researchers have explored various techniques, including Siamese networks, graph neural networks, and prototypical networks, to advance few-shot relation extraction. These approaches aim to achieve relation classification by embedding and encoding instances in a manner that facilitates accurate and efficient categorization. Yuan et al. introduced Siamese networks [15], a metric-based algorithm that processes pairs of data through two identically structured neural networks sharing the same weights and parameters. The Siamese network performs pairwise comparisons between test and training data: it feeds two examples into the two identical networks and then calculates the distance between their respective outputs. When this distance is small enough, the two samples are assigned to the same category. Xie et al. [16] presented a heterogeneous graph neural network that integrates sentence and entity nodes to enhance few-shot relation classification. By enabling message passing between these nodes, the model captures more comprehensive contextual information. Prototypical networks assign each instance to the nearest class prototype, where a prototype is typically obtained by averaging all instances within a category. Prototypical networks have become a popular approach for few-shot relation classification tasks thanks to their simplicity and effectiveness.

2.3. Prototypical Networks Approaches for Few-Shot Relation Extraction

Following the introduction of FewRel, a large-scale supervised dataset designed for few-shot relation classification, Han et al. [4] validated experimentally that the prototypical network architecture excels in this domain. Consequently, an increasing number of researchers have adopted prototypical networks to tackle few-shot relation extraction challenges. Gao et al. [17] proposed a prototypical hybrid attention network that adopts both instance-level and feature-level attention to enhance the performance of relation classification. Ye et al. [18] introduced a multi-level matching and aggregation network. This framework captures interaction information between query instances and support sets through local and instance-level matching and aggregation, thereby enhancing the model’s expressive capability. Yang et al. [19] incorporated the descriptive information of entities and relations, dynamically integrating sentence and entity information to obtain more effective instance representations. Furthermore, Yang et al. [20] introduced the inherent conceptual information of entities and proposed a few-shot relation extraction model enhanced by entity concepts. These approaches enhance the accuracy of relation classification, but they neglect the data information in the query set. Wen et al. [21] proposed to generate more precise relation prototypes by mining the implicit category information in the query set. Gao et al. [22] pioneered the use of BERT [23] as an encoder in their experiments, integrating it into the prototypical network framework. This approach yielded superior accuracy in relation classification tasks compared to models utilizing CNN encoders. Qu et al. [24] proposed to study the relations through a global relation graph, with the relations in this graph being sourced from Wikidata. Liu et al. [25] proposed a simple and effective approach for few-shot relation extraction, which maps sentences and relational information into the same semantic space and concatenates the two views of relation representations. The resulting relation representation is combined with the prototype through direct addition, which not only simplifies the model but also reduces the number of model parameters. Ma et al. [26] enhanced the prototypical network through relation concepts to learn new classes from a few instances incrementally.

Some studies rely on naive prototypical networks to generate prototypes. When the sample size is relatively small, the resulting prototypes might not be accurate enough, thereby affecting the performance of relation classification. Other studies have utilized external knowledge, such as Wikipedia, but have neglected the data within the task and failed to make full use of the interaction between the query set and the support set, which goes against the original intention of few-shot learning. A detailed comparative analysis of the models is provided in Table 2.

Table 2.

Technical comparison between AIRE and other existing models. “NEK” refers to no external knowledge. “ISQ” refers to interaction between support set (S) and query set (Q). “AH” refers to adaptive hybrid.

In this paper, we introduce an interactive few-shot relation extraction framework that refines naive prototypical networks. We fully leverage the potential of the data, seeking to enhance the effectiveness of available data in few-shot scenarios by improving upon the prototypical network approach. Specifically, we utilize the semantic connections between the query and supporting instances to assign weights, thereby establishing an initial prototypical network. Subsequently, we dynamically incorporate each category’s relation, along with its name and explanation. This process culminates in the generation of a more precise and robust prototype.

3. Task Definition

For few-shot relation extraction, the model is generally trained and evaluated within the episodic training framework [27] of meta-learning [28,29]. Meta-learning algorithms acquire a general learning strategy by training on multiple related tasks and then apply this strategy to the target task. The data required for training usually include the auxiliary set (A), the support set (S), and the query set (Q). In the context of few-shot classification, S and Q are analogous to the training and test sets in supervised learning. Given the limited instances in the support set, training a classification model directly becomes challenging. To overcome this limitation, transferable knowledge is acquired from set A to enhance model performance. The relation extraction model can be trained on A by constructing many episodes that closely resemble the target tasks. Subsequently, the model can be adapted to the target task (comprising S and Q) to improve its generalization capabilities. Specifically, N classes are randomly drawn from set A, and K instances are randomly chosen for each class to construct set S, resulting in N × K instances. This type of task is formally designated as an N-way K-shot classification task. During the training phase, a 5-way 1-shot task is adopted, and the same task is applied during testing to maintain consistency across both stages. This methodology is known as the episodic task framework.

The support set can be represented as $S = \{s_j^i \mid i = 1, \dots, N;\ j = 1, \dots, K\}$. The set of relations can be denoted by $R = \{r_1, \dots, r_N\}$. Each relation $r_i$ contains $K$ instances, and $s_j^i$ is the j-th instance of the relation $r_i$. Furthermore, $s_j^i$ comprises a pair of entities $(e_1, e_2)$, where $e_1$ and $e_2$ represent the head entity and tail entity, respectively. The query set is denoted by $Q = \{q_t \mid t = 1, \dots, T\}$. The support set and the query set are entirely disjoint, that is, $S \cap Q = \emptyset$. The task of few-shot relation extraction is to determine the relation between the entity pairs in the query set Q by referencing the given relation set R and support set S.
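The episodic construction described in this section can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the function name `sample_episode`, the parameter `n_query` (number of query instances drawn per relation), and the toy data are hypothetical and are not taken from the FewRel toolkit. Support and query instances are drawn without replacement from the same pool so that the two sets stay disjoint.

```python
import random


def sample_episode(data, n_way, k_shot, n_query, rng=None):
    """Sample one N-way K-shot episode from `data`.

    `data` maps a relation name to a list of instances.
    Returns (relations, support, query), where support[i] holds the K
    instances of relations[i] and query is a list of (instance, label) pairs.
    """
    rng = rng or random.Random()
    relations = rng.sample(sorted(data), n_way)  # draw N relation classes
    support, query = [], []
    for label, rel in enumerate(relations):
        # Draw K + n_query distinct instances so that S and Q never overlap.
        picked = rng.sample(data[rel], k_shot + n_query)
        support.append(picked[:k_shot])
        query.extend((inst, label) for inst in picked[k_shot:])
    return relations, support, query


# Toy auxiliary set (hypothetical): 8 relations with 10 placeholder sentences each.
toy = {f"rel{r}": [f"rel{r}-sent{i}" for i in range(10)] for r in range(8)}
relations, support, query = sample_episode(
    toy, n_way=5, k_shot=1, n_query=2, rng=random.Random(0)
)
```

With `n_way=5` and `k_shot=1`, the call corresponds to the 5-way 1-shot setting; the support set then holds N × K = 5 instances in total.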

4. Methodology

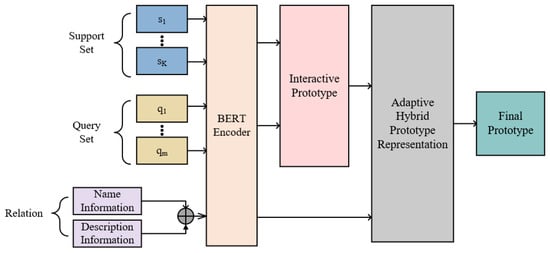

In this section, we provide a detailed overview of the proposed AIRE method, an adaptive interactive prototype network for few-shot relation extraction. Figure 1 describes the overall model architecture. The sentence instances in the support set and query set, together with the relation information, are all fed into the BERT encoder. Here, the relation information is constructed by concatenating the relation name and relation description so that it matches the representation dimension of the sentence information. Classifying all query instances against a single shared prototype ignores how each query relates to the individual support instances. Consequently, we propose constructing an interactive prototype network tailored to each query instance based on its semantic correlation with the supporting instances. Furthermore, an adaptive fusion module is introduced to integrate the interactive prototype network with the relation information, thereby enabling the derivation of a precise prototype.

Figure 1.

The framework of the proposed AIRE model. On the left side is the input of data. In the middle part is the core processing module, including the construction and fusion process of the prototype network. On the right side is the output module, showing the final results of relation extraction. The arrows indicate the flow direction of data.

We describe the training process of the AIRE model in Algorithm 1. For a deeper understanding, more detailed information will be provided in the next subsection.

| Algorithm 1 Architecture of AIRE during training |
| --- |
| Input: support set S, query set Q, relation set R |
| Output: T = {(x, e1, e2, r) \| e1, e2 ∈ E, r ∈ R} |
| 1: Obtain the context representation of each instance of S and Q by using the sentence encoder BERT |
| 2: Concatenate the relation name and relation description of the relation information |
| 3: For each episode do |
| 4: For i = 1→N do |
| 5: Build support prototypes by Equations (1) and (2) |
| 6: Build an interactive prototype based on the query instances by Equations (3) and (4) |
| 7: Fuse the interactive prototype and relation information by Equation (5) |
| 8: End for |
| 9: End for |

4.1. Sentence Encoder

We use BERT to perform context encoding on the sentences from the support and query sets. Each sentence is annotated with head and tail entities. Specifically, a sentence is given by $x = \{w_1, w_2, \dots, w_n\}$, which is composed of $n$ words. The head entity is indicated by $e_1$, while the tail entity is represented by $e_2$. We construct each input sequence to match the input format of BERT: the [CLS] token is placed at the beginning of each sentence, the [SEP] token is used to separate input sentences, and the [PAD] token is used to extend sentences to a predefined maximum length.

The head entity is enclosed by the special entity markers [E1] and [/E1], which indicate its beginning and ending. Likewise, the tail entity is marked with [E2] and [/E2]. By feeding these sequences into the BERT encoder, we derive the hidden states of the sentence. Following Soares et al. [30], we concatenate the hidden states $h_1$ and $h_2$ associated with the start tokens of the head and tail entities to construct the sentence representation. This holds for the instances in both the support set S and the query set Q. We represent support and query sentences by $s_j^i$ and $q_t$, where $s_j^i \in \mathbb{R}^{2d}$, $q_t \in \mathbb{R}^{2d}$, and $d$ is the length of a single hidden-state vector.
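The input construction described in this subsection can be illustrated with a small sketch. It assumes whitespace tokens rather than BERT's WordPiece tokens, entity spans given as (start, end) token indices with an exclusive end, and non-overlapping entities; the function name `mark_entities` and the short `max_len` in the example are hypothetical. The returned marker positions are the indices whose hidden states would be concatenated to form the sentence representation.

```python
def mark_entities(tokens, head_span, tail_span, max_len=128):
    """Wrap the head and tail entities in marker tokens and pad the sequence.

    `head_span` and `tail_span` are (start, end) token indices, end exclusive,
    and are assumed not to overlap. Returns the padded sequence and the
    positions of the [E1] and [E2] start markers.
    """
    out = ["[CLS]"]
    for i, tok in enumerate(tokens):
        if i == head_span[0]:
            out.append("[E1]")
        if i == tail_span[0]:
            out.append("[E2]")
        out.append(tok)
        if i == head_span[1] - 1:
            out.append("[/E1]")
        if i == tail_span[1] - 1:
            out.append("[/E2]")
    out.append("[SEP]")
    if len(out) > max_len:
        raise ValueError("sequence is longer than max_len")
    out += ["[PAD]"] * (max_len - len(out))  # extend to the fixed length
    return out, out.index("[E1]"), out.index("[E2]")


tokens = "Elon Reeve Musk founded SpaceX".split()
marked, e1_pos, e2_pos = mark_entities(
    tokens, head_span=(0, 3), tail_span=(4, 5), max_len=16
)
```

For the example sentence, the marked sequence begins `[CLS] [E1] Elon Reeve Musk [/E1] founded [E2] SpaceX [/E2] [SEP]` and is followed by padding tokens.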

4.2. Relation Representation

Following the idea of Liu et al. [25], the name and explanation of every relation are concatenated, and the resulting sequence is provided as input to the BERT encoder. Specifically, we concatenate two views of the encoder output, $r_i^{1}$ and $r_i^{2}$, through the function concat(), where $r_i^{1}$ represents the embeddings of all tokens and $r_i^{2}$ represents the average embedding of all the tokens. This approach yields a relation representation $r_i$ that matches the dimensionality of the prototypical network. That is to say, $r_i$ lies in the same space as the sentence representations.

4.3. Interactive Prototypical Network

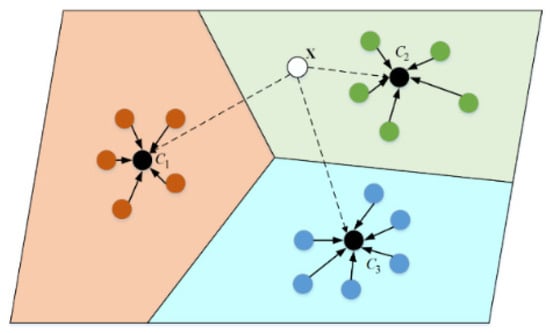

Besides being simple and efficient, the prototypical network excels at learning an embedding space that clusters the instances from the same category while maintaining clear separation between instances from the different categories. The new instances of unknown categories can be directly identified by measuring the distance in the embedding space. As illustrated in Figure 2, the query instance x is evaluated against three class prototypes (C1, C2, C3) by utilizing Euclidean distance and is ultimately found to be nearest to the C2 class prototype. Consequently, x is classified as belonging to the C2 category.

Figure 2.

Prototypical network. Different color regions serve to differentiate classes, with black dots representing class prototypes and thus symbolizing each respective class.

In few-shot relation extraction, the prototypical network learns an embedding function $f_\phi: \mathbb{R}^U \rightarrow \mathbb{R}^V$ that maps instances from a U-dimensional space to a V-dimensional space. More precisely, the relation prototype is the mean of the embedding vectors of all the support instances of a class, which establishes it as the central point of the class. Equation (1) produces a prototype representation $c_i$ for every relation class:

$$c_i = \frac{1}{K} \sum_{j=1}^{K} f_\phi(s_j^i) \quad (1)$$

Here, $f_\phi(s_j^i)$ is the embedding vector of the j-th instance of the i-th relation in the support set, and there are K instances in each relation class. Equation (2) calculates the probability distribution across classes for a given query instance $x$ by comparing it with the prototype of each relation class through the Softmax function:

$$p(y = r_i \mid x) = \frac{\exp\left(-\mathrm{dist}(f_\phi(x), c_i)\right)}{\sum_{n=1}^{N} \exp\left(-\mathrm{dist}(f_\phi(x), c_n)\right)} \quad (2)$$

The distance between the query instance $x$ and the prototype of each class in the relation set is measured with the Euclidean distance function $\mathrm{dist}(\cdot, \cdot)$, which serves as the metric.
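As a concrete illustration of Equations (1) and (2), the following sketch computes mean prototypes and the Softmax over negative Euclidean distances in plain Python. It is a minimal example under simplifying assumptions: the two-dimensional vectors stand in for encoder outputs, and the function names are hypothetical rather than part of the AIRE implementation.

```python
import math


def mean_prototype(embeddings):
    """Equation (1): average the K support embeddings of one relation."""
    k = len(embeddings)
    return [sum(column) / k for column in zip(*embeddings)]


def euclidean(u, v):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def class_probabilities(query, prototypes):
    """Equation (2): Softmax over negative distances to each prototype."""
    logits = [-euclidean(query, c) for c in prototypes]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 3-way 2-shot support set with 2-dimensional embeddings.
support = [
    [[0.0, 0.0], [0.2, 0.0]],    # relation 0
    [[1.0, 1.0], [1.0, 0.8]],    # relation 1
    [[-1.0, 1.0], [-0.8, 1.0]],  # relation 2
]
prototypes = [mean_prototype(s) for s in support]
probs = class_probabilities([0.9, 1.0], prototypes)
predicted = max(range(len(probs)), key=probs.__getitem__)
```

In this toy example the query lies closest to the prototype of relation 1, so `predicted` is 1.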

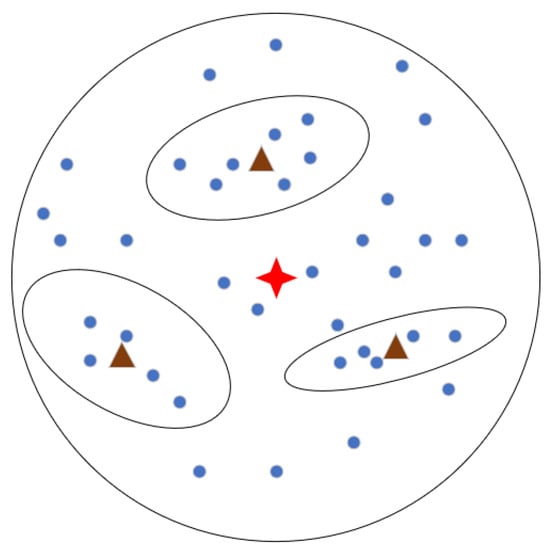

However, only a few training sentences are available in the support set in the few-shot scenario. These instances may lie far from the center of their class in the embedding space, which can bias the calculated class prototypes. As shown in Figure 3, the circle encloses all instances, which are denoted by blue dots. The red star in the center of the circle is the expected prototype. The three ellipses represent three support sets, and the brown triangles inside the ellipses are the class prototypes calculated from those support sets. The brown triangles deviate from the red star, indicating that the actual class prototype deviates from the expected prototype when the support set contains a limited number of instances. This deviation can degrade relation classification performance. Consequently, in light of the limited data size, we introduce an interactive prototypical network architecture that harnesses the interaction knowledge between the query and support sets to enhance data utilization.

Figure 3.

An example demonstrates the difference between the anticipated prototype and the biased prototype. All samples are located within the outer circle, while the three ellipses constitute the support set.

The central idea of the interactive prototypical network is to capture the interaction information between sentences. In other words, the interactive prototypical network computes a different relation prototype for each query instance by measuring the semantic relatedness between the query and supporting instances and assigning an appropriate weight to each support instance. In Equation (3), $\tilde{c}_i$ represents the new interactive prototype. $\alpha_j^i$ indicates the semantic relatedness, expressed as the weight between the query instance and the j-th support instance of the i-th relation, as given by Equation (4). $q_t$ denotes the t-th instance in the query set.
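The query-dependent weighting described above can be sketched as follows. This is a hedged illustration rather than the paper's exact formulation: it assumes that the weights are a Softmax over dot-product similarities between the query and each support instance, which is one common choice and may differ from the similarity actually used in Equation (4). All function and variable names are hypothetical.

```python
import math


def softmax(scores):
    """Normalize raw scores into weights that sum to one."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def interactive_prototype(query, support_embeddings):
    """Query-specific prototype of one relation.

    Each of the K support embeddings is weighted by its similarity to the
    query (assumed here: Softmax over dot products), and the prototype is
    the weighted sum instead of the plain mean.
    """
    weights = softmax([dot(query, s) for s in support_embeddings])
    dim = len(query)
    proto = [
        sum(w * s[d] for w, s in zip(weights, support_embeddings))
        for d in range(dim)
    ]
    return proto, weights


# Three support instances of one relation and a single query instance.
support_one_relation = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query = [2.0, 0.0]
proto, weights = interactive_prototype(query, support_one_relation)
```

Compared with the plain mean, the support instance that is least similar to the query (the second one here) contributes least to the prototype, which is the behavior the weighting is meant to achieve.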

4.4. Adaptive Hybrid Prototype Representation

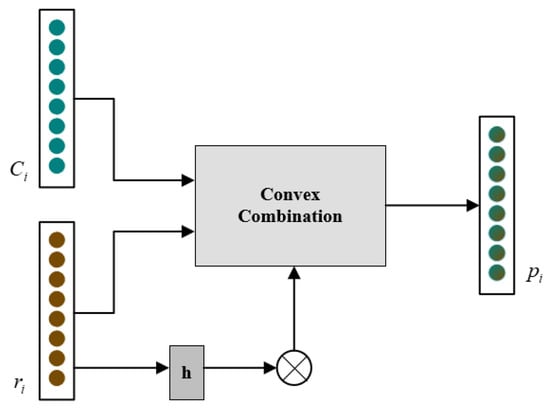

Inspired by the literature [31], we have designed a novel adaptive hybrid prototype fusion mechanism that employs a convex combination of the interactive prototype network representation and relational information representation to derive the final prototype, as presented in Equation (5).

Figure 4 illustrates the adaptive hybrid module. In Section 4.3, we obtained the interactive prototype network representation $\tilde{c}_i$. The relation label name and its corresponding descriptive information have been concatenated to express the relational information, denoted as $r_i$. Furthermore, $\lambda_i$ represents the adaptive hybrid coefficient, which is determined by the information representation of the relation class to ensure compatibility with the computed class prototype. $\lambda_i$ is computed by Equation (6), where h(·) is the adaptive hybrid function. We employ a fully connected network to distinguish between similar categories in the feature embedding space. In this way, the final class prototype $p_i$ can be obtained.

Figure 4.

Adaptive hybrid module. The middle box represents a convex combination, where the prototype and relation are adaptively fused to generate the final output.
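The convex combination performed by the adaptive hybrid module can be sketched as below. Several details are assumptions made only for illustration: the coefficient is obtained by applying a sigmoid to a single linear layer on the relation vector (standing in for the fully connected network h(·)), the coefficient multiplies the prototype while its complement multiplies the relation vector, and the weight values are hypothetical placeholders for learned parameters.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def adaptive_fusion(proto, relation, weight, bias=0.0):
    """Convex combination of an interactive prototype and a relation vector.

    The mixing coefficient `lam` is computed from the relation vector by a
    single linear layer followed by a sigmoid (an assumed form of h(.)).
    """
    lam = sigmoid(sum(w * r for w, r in zip(weight, relation)) + bias)
    fused = [lam * c + (1.0 - lam) * r for c, r in zip(proto, relation)]
    return fused, lam


proto = [1.0, 0.0, 0.5]     # interactive prototype of one relation
relation = [0.0, 1.0, 0.5]  # relation representation of the same relation
weight = [0.3, -0.2, 0.1]   # hypothetical parameters; learned in practice
fused, lam = adaptive_fusion(proto, relation, weight)
```

Because the coefficient lies strictly between 0 and 1, every coordinate of the fused prototype stays between the corresponding coordinates of the two inputs, which is what makes the combination convex.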

5. Experiments

5.1. Dataset and Evaluation

Our model is evaluated using the public dataset of FewRel. It is a large-scale resource for few-shot relation extraction that contains 100 distinct relations. Each relation includes 700 labeled instances. Every instance is a sentence, and the head and the tail entity are annotated. On average, each sentence contains about 25 tokens. The experimental setup follows the official benchmark splitting method, which divides the dataset into three subsets. A total of 64 relations are used to train the model, 16 relations are used to verify model performance, and 20 relations are used for final test evaluation. Consistent with common evaluation practices in the literature [5,19,20], we perform experiments on FewRel involving four tasks: 5-way 1-shot, 5-way 5-shot, 10-way 1-shot, and 10-way 5-shot.

5.2. Baselines

In our experiments, we also utilize BERT as an encoder and compare its performance against several strong baseline models.

Proto [5] is an algorithm based on prototypical networks, utilizing CNN and BERT as encoders, respectively.

GNN [32] is a model that integrates the benefits of graph neural networks and few-shot learning, treating each instance both in the support and query set as nodes.

SNAIL [33] is an algorithm in the domain of meta-learning that employs CNN as an encoder and attentional mechanisms to rapidly learn from previous experiences.

Proto-HATT [17] is a model that uses the prototypical networks adapting attention mechanisms at both the instance and feature levels.

MLMAN [18] is a model that encodes instances from both the query set and support set by considering their matching information in an interactive way.

BERT-PAIR [22] is a measurement model that utilizes sentence pairs processed by BERT.

TD-Proto [19] is a model developed to enhance the representation of prototypical networks by incorporating relation and entity explanations.

REGRAB [24] is a Bayesian meta-learning algorithm designed to learn the posterior distribution of prototype vectors for various relations in the support set. The prior prototype vectors are parameterized using a graph neural network (GNN) on the global relation graph.

ConceptFERE [20] is a model that incorporates conceptual information about the entities to enhance the efficiency of few-shot relation extraction.

CTEG [34] is a model that employs two entity-guided attention mechanisms and confusion-aware techniques to address the relation confusion problem.

CBPM [21] is a model that leverages category information from the query set to generate precise prototypes.

DAPL [35] is a model that leverages dependency trees and shortest dependency paths as structural features to enhance the context representation of input sentences.

DualGraph [36] is a model that utilizes an edge-labeled dual graph to construct information within each graph and capture instance and relational information between graphs.

5.3. Implementation Details

In our work, we employ an episodic training strategy, where each episode corresponds to an N-way K-shot task. We carry out several independent runs for each task to ensure the stability and reliability of the results. We use BERT$_{\text{BASE}}$ as the sentence encoder. The learning rate is set to $2 \times 10^{-5}$, the maximum sentence length to 128, and the word embedding dimension to 768. AdamW is employed as the optimizer for loss minimization. Training involves 30,000 iterations. Table 3 lists all the hyperparameters involved in our model.

Table 3.

Hyperparameters and values of the models in our experiment.
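For readers who want to reproduce the setup, the settings stated in Section 5.3 can be collected in a small configuration object. This is only a sketch: the class name `TrainConfig` and its field names are hypothetical, and only values stated in the text are included.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings stated in Section 5.3 (field names are illustrative)."""

    learning_rate: float = 2e-5     # 2 x 10^-5
    max_length: int = 128           # maximum sentence length in tokens
    hidden_size: int = 768          # word embedding dimension of the encoder
    train_iterations: int = 30_000  # number of training iterations
    optimizer: str = "AdamW"
    n_way: int = 5                  # default episode setting: 5-way 1-shot
    k_shot: int = 1


cfg = TrainConfig()
ten_way = TrainConfig(n_way=10)  # e.g. the 10-way 1-shot task
```

Freezing the dataclass keeps the settings immutable during a run, and a different task such as 10-way 1-shot is expressed by constructing a new instance.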

5.4. Overall Evaluation Results

We evaluate our model against several strong baseline models using FewRel, with accuracy serving as the performance metric. Table 4 presents the experimental results, allowing us to draw the following conclusions:

Table 4.

Accuracy results on FewRel, with boldface figures indicating the optimal performance. The dataset has been released at https://github.com/thunlp/FewRel.

- It is evident that the models utilizing BERT as the sentence encoder achieve generally higher performance compared to those using CNN. This suggests that BERT serves as a more effective sentence encoder;

- The table shows that AIRE outperforms the strong baseline models. Specifically, AIRE attains an average accuracy of 90.19% on the test set, surpassing all other BERT$_{\text{BASE}}$ models. Notably, AIRE reaches accuracies of 91.53% and 86.36% on the 5-way 1-shot and 10-way 1-shot tasks, exceeding the second-best model by 0.64 and 2.27 percentage points, respectively;

- Both the TD-Proto and ConceptFERE models leverage external data as auxiliary information to improve their performance. In contrast, our proposed AIRE model does not rely on external data. Instead, it extracts valuable information from the query set within the task, thereby achieving superior results;

- The AIRE model is built upon the prototypical network. It outperforms models based on graph neural networks, such as DualGraph and REGRAB, which supports the efficacy of the prototypical network. Additionally, since our model does not employ an attention mechanism, it has lower model complexity and computational cost than Proto-HATT and CTEG;

- Like the CBPM model, AIRE harnesses latent category information within the query set to produce more precise relation prototypes. Nevertheless, our proposed model achieves higher average accuracy, with a substantial gain of 3.82% on the 10-way 1-shot task. These results provide additional evidence of the efficacy of our AIRE model.
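Since accuracy is the reported metric and the prototypical network is the underlying classifier, a small sketch may help make both concrete. It shows the standard mean-prototype, nearest-prototype rule and the averaging of accuracy over episodes; it is not the AIRE model. The function names and the 2-dimensional toy features are assumptions made for illustration.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors (the class prototype)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def classify(query_vec, prototypes):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes,
               key=lambda label: math.dist(query_vec, prototypes[label]))


def episode_accuracy(support, queries):
    """support: label -> list of vectors; queries: list of (vector, label)."""
    prototypes = {label: mean_vector(vecs) for label, vecs in support.items()}
    correct = sum(classify(vec, prototypes) == label for vec, label in queries)
    return correct / len(queries)


def mean_accuracy(episodes):
    """Average accuracy in percent over a list of (support, queries) episodes."""
    total = sum(episode_accuracy(s, q) for s, q in episodes)
    return 100.0 * total / len(episodes)


# Toy 2-way 2-shot episode with 2-dimensional placeholder features.
support = {
    "founded": [[1.0, 0.0], [0.8, 0.2]],
    "born_in": [[0.0, 1.0], [0.2, 0.8]],
}
queries = [
    ([0.9, 0.1], "founded"),
    ([0.1, 0.9], "born_in"),
    ([0.4, 0.6], "founded"),  # lies closer to the "born_in" prototype
]
acc = mean_accuracy([(support, queries)])
```

In this toy episode two of the three queries are classified correctly, so `acc` is two thirds expressed in percent.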

5.5. Ablation Analysis

We perform ablation analyses to evaluate the contribution of each module to our model, as presented in Table 5. The results show that both the semantic interaction between support and query instances and the adaptive fusion of hybrid prototypes with relation information significantly influence the model's overall performance. When both the semantic interaction module and the adaptive hybrid module are removed, accuracy drops by 2.18% and 4.22% on the 5-way 1-shot and 10-way 1-shot tasks, respectively, which demonstrates the effectiveness of our proposed method in few-shot relation extraction. To isolate each component, we also remove the modules one at a time: removing the interaction module lowers accuracy by 0.32% and 0.64% on the two tasks, and removing the adaptive hybrid module lowers it by 0.26% and 0.93%, which shows that each module contributes on its own.

Table 5.

Ablation study of AIRE on FewRel.

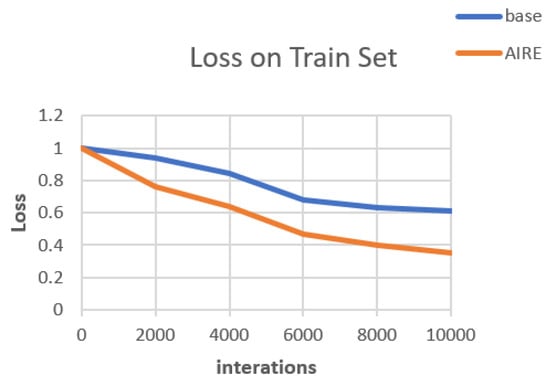
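To make the ablation variants easier to picture, the following sketch shows how the two components could be switched on and off independently. The formulas are assumptions, not the authors' definitions: the interaction step is approximated by a softmax over cosine similarities to the support mean, and the adaptive fusion is reduced to a fixed scalar `gate` where the paper's fusion is adaptive. All function and parameter names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def build_prototype(support, queries, relation,
                    use_interaction=True, use_fusion=True, gate=0.5):
    """Build one class prototype with optional (assumed) AIRE-style components."""
    anchor = mean_vector(support)  # plain prototypical-network prototype
    proto = anchor
    if use_interaction:
        # Assumed form: weight support and query instances by their
        # similarity to the support mean, then take the weighted sum.
        candidates = support + queries
        weights = softmax([cosine(c, anchor) for c in candidates])
        proto = [sum(w * c[d] for w, c in zip(weights, candidates))
                 for d in range(len(anchor))]
    if use_fusion:
        # Assumed form: convex combination with the relation embedding.
        proto = [gate * p + (1.0 - gate) * r for p, r in zip(proto, relation)]
    return proto


support = [[1.0, 0.0]]                 # 1-shot support instance
queries = [[0.9, 0.1], [0.0, 1.0]]     # unlabeled query instances
relation = [0.0, 1.0]                  # placeholder relation embedding

full = build_prototype(support, queries, relation)
no_interaction = build_prototype(support, queries, relation,
                                 use_interaction=False)
no_fusion = build_prototype(support, queries, relation, use_fusion=False)
neither = build_prototype(support, queries, relation,
                          use_interaction=False, use_fusion=False)
```

With both switches off, the function falls back to the ordinary mean prototype, which corresponds to the variant in which both modules are removed.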

Furthermore, we compare a base model that uses only the BERT sentence encoder with our proposed AIRE model over the first 10,000 training iterations on the 5-way 1-shot task, recording results every 2000 iterations. The comparison shows that our model converges more rapidly, which supports the efficiency of the proposed method. The experimental results are illustrated in Figure 5.

Figure 5.

The training convergence using the base model and AIRE model.
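The recording schedule used for the convergence comparison (an evaluation every 2000 iterations over the first 10,000) can be sketched as follows. The `train_step` and `evaluate` callables are placeholders standing in for real training and validation code, and the function names are hypothetical.

```python
def evaluation_steps(total_iterations=10_000, eval_every=2_000):
    """Iterations at which results are recorded."""
    return list(range(eval_every, total_iterations + 1, eval_every))


def record_curve(train_step, evaluate, total_iterations=10_000,
                 eval_every=2_000):
    """Run training and return [(iteration, accuracy)] at each recorded step."""
    checkpoints = set(evaluation_steps(total_iterations, eval_every))
    curve = []
    for iteration in range(1, total_iterations + 1):
        train_step(iteration)
        if iteration in checkpoints:
            curve.append((iteration, evaluate(iteration)))
    return curve


# Stand-in callables so the sketch runs without a model.
curve = record_curve(train_step=lambda it: None, evaluate=lambda it: 0.0)
recorded_at = [iteration for iteration, _ in curve]
```

Running the same loop for the base model and for AIRE yields two curves sampled at identical iterations, which is what a plot such as Figure 5 compares.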

6. Conclusions and Future Works

This paper proposes AIRE, a model for few-shot relation extraction. To improve data utilization when labeled data are limited, we incorporate query set instance information into the construction of prototypical networks, yielding an interactive prototypical network. Additionally, we introduce an adaptive hybrid prototype representation that integrates relational information with the interactive prototypical network to form the final prototype. Experimental results on the FewRel dataset show that the AIRE model achieves higher average accuracy than the baseline models, and ablation studies further validate the effectiveness of each module. These results indicate that the proposed model captures the semantic information of relations more effectively. In the future, we plan to explore more complex few-shot relation extraction scenarios and to extend our approach to other areas, such as the construction of knowledge graphs in specialized domains including finance and healthcare.

Author Contributions

Conceptualization, B.L. and S.L.; methodology, B.L.; software, L.Z.; validation, S.L., S.H., and L.Z.; formal analysis, S.H.; investigation, L.Z.; data curation, L.Z.; writing—original draft preparation, B.L., S.L., S.H., and L.Z.; writing—review and editing, B.L. and L.Z.; funding acquisition, S.L. and S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Anhui Provincial Natural Science Foundation (2308085MF220) and the Anhui University Natural Science Foundation (2022AH050972, KJ2021A0516).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhao, X.; Deng, Y.; Yang, M.; Wang, L.; Zhang, R.; Cheng, H.; Lam, W.; Shen, Y.; Xu, R. A Comprehensive Survey on Relation Extraction: Recent Advances and New Frontiers. ACM Comput. Surv. 2024, 56, 293:1–293:39.

- Zhong, L.; Wu, J.; Li, Q.; Peng, H.; Wu, X. A Comprehensive Survey on Automatic Knowledge Graph Construction. ACM Comput. Surv. 2024, 56, 1–62.

- Bertinetto, L.; Henriques, J.F.; Valmadre, J.; Torr, P.H.S.; Vedaldi, A. Learning Feed-Forward One-Shot Learners; NIPS Foundation: La Jolla, CA, USA, 2016; pp. 523–531.

- Han, X.; Zhu, H.; Yu, P.; Wang, Z.; Yao, Y.; Liu, Z.; Sun, M. FewRel: A Large-Scale Supervised Few-Shot Relation Classification Dataset with State-of-the-Art Evaluation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4803–4809.

- Snell, J.; Swersky, K.; Zemel, R.S. Prototypical Networks for Few-Shot Learning; NIPS Foundation: La Jolla, CA, USA, 2017; pp. 4077–4087.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation Classification via Convolutional Deep Neural Network; Dublin City University and Association for Computational Linguistics: Dublin, Ireland, 2014; pp. 2335–2344.

- Nguyen, T.H.; Grishman, R. Relation Extraction: Perspective from Convolutional Neural Networks. In Proceedings of the 1st Workshop on Vector Space Modeling for Natural Language Processing, Denver, CO, USA, 5 June 2015; pp. 39–48.

- Miwa, M.; Bansal, M. End-to-End Relation Extraction using LSTMs on Sequences and Tree Structures. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1105–1116.

- Lin, C.; Miller, T.; Dligach, D.; Amiri, H.; Bethard, S.; Savova, G. Self-training improves Recurrent Neural Networks performance for Temporal Relation Extraction. In Proceedings of the Ninth International Workshop on Health Text Mining and Information Analysis, Brussels, Belgium, 31 October 2018; pp. 165–176.

- Mintz, M.; Bills, S.; Snow, R.; Jurafsky, D. Distant Supervision for Relation Extraction Without Labeled Data; Association for Computational Linguistics: Suntec, France; Singapore, 2009; pp. 1003–1011.

- Yin, W. Meta-learning for Few-shot Natural Language Processing: A Survey. arXiv 2020, arXiv:2007.09604.

- Han, X.; Gao, T.; Lin, Y.; Peng, H.; Yang, Y.; Xiao, C.; Liu, Z.; Li, P.; Zhou, J.; Sun, M. More Data, More Relations, More Context and More Openness: A Review and Outlook for Relation Extraction. In Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing, Suzhou, China, 4–7 December 2020; pp. 745–758.

- Munkhdalai, T.; Yu, H. Meta Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2554–2563.

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Yuan, J.; Guo, H.; Jin, Z.; Jin, H.; Zhang, X.; Luo, J. One-shot learning for fine-grained relation extraction via convolutional siamese neural network. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 2194–2199.

- Xie, Y.; Xu, H.; Li, J.; Yang, C.; Gao, K. Heterogeneous graph neural networks for noisy few-shot relation classification. Knowl. Based Syst. 2020, 194, 105548.

- Gao, T.; Han, X.; Liu, Z.; Sun, M. Hybrid Attention-Based Prototypical Networks for Noisy Few-Shot Relation Classification. Proc. AAAI Conf. Artif. Intell. 2019, 33, 6407–6414.

- Ye, Z.-X.; Ling, Z.-H. Multi-Level Matching and Aggregation Network for Few-Shot Relation Classification. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2872–2881.

- Yang, K.; Zheng, N.; Dai, X.; He, L.; Huang, S.; Chen, J. Enhance Prototypical Network with Text Descriptions for Few-shot Relation Classification. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management; Association for Computing Machinery: New York, NY, USA, 2020; pp. 2273–2276.

- Yang, S.; Zhang, Y.; Niu, G.; Zhao, Q.; Pu, S. Entity Concept-enhanced Few-shot Relation Extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), Online, 1–6 August 2021; pp. 987–991.

- Wen, M.; Xia, T.; Liao, B.; Tian, Y. Few-shot relation classification using clustering-based prototype modification. Knowl. Based Syst. 2023, 268, 110477.

- Gao, T.; Han, X.; Zhu, H.; Liu, Z.; Li, P.; Sun, M.; Zhou, J. FewRel 2.0: Towards More Challenging Few-Shot Relation Classification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6249–6254.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding; Association for Computational Linguistics: Dublin, Ireland, 2019; pp. 4171–4186.

- Qu, M.; Gao, T.; Xhonneux, L.-P.A.C.; Tang, J. Few-shot Relation Extraction via Bayesian Meta-learning on Relation Graphs. In Proceedings of the ICML2020, Virtual, 13–18 July 2020; pp. 7867–7876.

- Liu, Y.; Hu, J.; Wan, X.; Chang, T.-H. A Simple yet Effective Relation Information Guided Approach for Few-Shot Relation Extraction. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 757–763.

- Ma, R.; Ma, B.; Wang, L.; Zhou, X.; Wang, Z.; Yang, Y. Relational concept enhanced prototypical network for incremental few-shot relation classification. Knowl. Based Syst. 2024, 284, 111282.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning; NIPS Foundation: La Jolla, CA, USA, 2016; pp. 3630–3638.

- Huisman, M.; Rijn, J.N.v.; Plaat, A. A Survey of Deep Meta-Learning. Artif. Intell. Rev. 2021, 54, 4483–4541.

- Hospedales, T.M.; Antoniou, A.; Micaelli, P.; Storkey, A.J. Meta-Learning in Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5149–5169.

- Baldini Soares, L.; FitzGerald, N.; Ling, J.; Kwiatkowski, T. Matching the Blanks: Distributional Similarity for Relation Learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2895–2905.

- Xing, C.; Rostamzadeh, N.; Oreshkin, B.N.; Pinheiro, P.O. Adaptive Cross-Modal Few-Shot Learning; NIPS Foundation: La Jolla, CA, USA, 2019; pp. 4848–4858.

- Satorras, V.G.; Estrach, J.B. Few-Shot Learning with Graph Neural Networks. In Proceedings of the ICLR 2018 Conference, Vancouver, BC, Canada, 30 April–3 May 2018.

- Mishra, N.; Rohaninejad, M.; Chen, X.; Abbeel, P. A Simple Neural Attentive Meta-Learner. arXiv 2018, arXiv:1707.03141.

- Wang, Y.; Bao, J.; Liu, G.; Wu, Y.; He, X.; Zhou, B.; Zhao, T. Learning to Decouple Relations: Few-Shot Relation Classification with Entity-Guided Attention and Confusion-Aware Training. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5799–5809.

- Yu, T.; Yang, M.; Zhao, X. Dependency-Aware Prototype Learning for Few-Shot Relation Classification; International Committee on Computational Linguistics: Gyeongju, Republic of Korea, 2022; pp. 2339–2345.

- Li, J.; Feng, S.; Chiu, B. Few-Shot Relation Extraction With Dual Graph Neural Network Interaction. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 14396–14408.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).