Abstract

While Large Language Models (LLMs) have significantly advanced various benchmarks in Natural Language Processing (NLP), low-resource tasks remain challenging, primarily because of data scarcity and the difficulty of annotation. In these tasks, labeled data are too limited for traditional supervised learning to be practical. This study introduces LoRE, a framework for zero-shot relation extraction in low-resource settings that combines distant supervision with the capabilities of LLMs. LoRE addresses the data sparsity and noise inherent in traditional distant supervision methods, enabling high-quality relation extraction without extensive labeled data. LoRE uses LLMs for zero-shot open information extraction and adds heuristic entity and relation alignment with semantic disambiguation, which improves the accuracy and relevance of the extracted data. Beyond tackling these challenges, this study aims to demonstrate the theoretical and practical implications of zero-shot relation extraction. The Chinese Person Relationship Extraction (CPRE) dataset, constructed with this framework, demonstrates LoRE’s proficiency in extracting person-related triples; it comprises 9611 instances across ten categories of interpersonal relationships. Extensive experiments on the CPRE, IPRE, and DuIE datasets show significant improvements in dataset quality and a reduction in manual annotation effort. These findings highlight LoRE’s potential to advance both the theoretical understanding and the practical application of relation extraction in low-resource settings.
Notably, evaluations involving the manually annotated DuIE dataset attest to the quality of the CPRE dataset, which rivals that of manually curated datasets. They also highlight LoRE’s potential for reducing the complexity and cost of dataset construction for zero-shot and low-resource tasks.

1. Introduction

Relation extraction (RE) in Natural Language Processing (NLP) plays a crucial role in transforming unstructured text into structured knowledge, which is essential for applications such as knowledge graph construction and information retrieval. However, RE faces significant challenges in low-resource settings, primarily due to the lack of labeled data and the difficulties in annotating complex relations [1]. Traditional few-shot RE approaches often struggle to effectively capture nuanced relationships, particularly when data are scarce or noisy [2]. Large Language Models (LLMs) like GPT-3.5, while offering powerful data augmentation capabilities, may still fail to fully grasp the intricacies of complex relational dynamics [3,4,5]. Noisy data introduce inconsistencies and inaccuracies during the training process, which negatively impact model performance. For instance, incorrect labels generated during data alignment can reduce the overall effectiveness of RE models. In this study, we introduce a novel framework, LoRE, that combines distant supervision with the advanced capabilities of LLMs to tackle low-resource RE tasks. Specifically, LoRE is designed to enhance the accuracy and efficiency of relation extraction by addressing the common issues of noisy data and limited training resources, making it particularly well suited for zero-shot learning tasks in low-resource scenarios.

Distant supervision, which generates labeled data by aligning text with external knowledge bases [6], has been a valuable tool for RE. However, it is often affected by data noise due to the misalignment between knowledge base relations and real-world textual data. This issue, compounded by the scarcity of labeled data in many domains, limits the effectiveness of traditional RE models. LoRE addresses these challenges by integrating distant supervision with the powerful zero-shot learning capabilities of LLMs, offering a more robust and accurate approach to RE.

Zero-shot learning capabilities, in this context, refer to a model’s ability to perform RE tasks without being explicitly trained on task-specific labeled data. By leveraging the pre-trained knowledge of LLMs, LoRE can generalize to unseen relations, enabling scalable solutions for low-resource settings. In low-resource RE tasks, the lack of high-quality annotated data remains a key bottleneck. Advancements in multilingual NLP, such as zero-shot cross-lingual transfer and multi-task learning, have shown promise [7]. However, their applicability to specific RE tasks is often limited by domain-specific complexities. Few-shot learning, particularly through prompt-based approaches with LLMs like GPT-3.5, has demonstrated potential for augmenting RE data. It nevertheless faces practical challenges, especially with noisy or incomplete data. Traditional distant supervision-based RE models, meanwhile, suffer from error propagation, where incorrect annotations are carried through the training process [8].

LoRE overcomes these limitations by integrating the zero-shot learning abilities of LLMs. This allows it to generalize across unseen relations and improve the robustness of RE models without requiring extensive labeled datasets. The framework combines heuristic entity and relation alignment, semantic disambiguation, and dynamic relationship mapping, which together enhance data quality and enable more accurate relation extraction in low-resource environments. LoRE’s adaptability to various domains and its ability to refine its performance over time make it a powerful tool for low-resource NLP tasks, especially where labeled data are scarce or difficult to obtain.

This study aims to address the following research questions: 1. How can distant supervision be effectively combined with Large Language Models to improve relation extraction in low-resource settings? 2. How does the proposed LoRE framework mitigate the challenges associated with noisy data in low-resource RE tasks?

2. Related Work

Relation extraction in low-resource contexts has historically relied on a range of techniques, starting with early rule-based systems. These foundational methods, while providing valuable insights, were hindered by their lack of scalability and high dependency on manual effort [9]. As the field progressed, machine learning (ML) techniques emerged, offering more flexibility and automation, but still faced significant challenges due to the need for large annotated datasets [10], which remain scarce in low-resource settings.

One persistent challenge in low-resource RE is the reliance on distant supervision, a technique that generates labeled data by aligning unstructured text with knowledge bases [11]. Distant supervision has facilitated data generation, but it often produces noisy annotations. The cause is its strong assumption that any co-occurrence of entities in a sentence implies a known relationship. This assumption frequently leads to misalignment between assumed and actual relationships, resulting in data quality issues [12]. To alleviate data scarcity, recent work has turned to few-shot learning methods that leverage small amounts of labeled data. For instance, Gururaja et al. [2] demonstrated the effectiveness of linguistic representations for few-shot RE across diverse domains. Nevertheless, few-shot methods still struggle to generalize across varied contexts, especially when training data diversity is limited [14]. Kumar et al. [13] further highlight that bias and hallucination in LLM-generated data persist, underscoring the need for robust frameworks that mitigate these issues in RE tasks.

The introduction of LLMs has significantly reshaped low-resource RE. LLMs such as GPT-3 and its successors have demonstrated remarkable abilities in few-shot and even zero-shot settings, generalizing to unseen tasks without extensive task-specific data. Wadhwa et al. [15] revisited RE in the context of LLMs, highlighting how these models can understand and extract complex textual relations with minimal tuning. Similarly, Xu et al. [16] showed that LLMs can perform few-shot RE effectively, even with limited labeled data. Despite these capabilities, LLMs remain limited in specialized tasks where domain-specific knowledge is crucial [17]. Nonetheless, LLMs excel in open-domain information extraction (OpenIE), showing robust performance in distilling structured information from unstructured text. This suggests that they can handle a range of information extraction tasks, including relation extraction.

In this context, our LoRE framework builds upon the strengths of LLMs in open-domain information extraction and integrates them with distant supervision techniques to address the challenges of low-resource RE tasks. LoRE taps into the zero-shot learning capabilities of LLMs, allowing it to generalize to unseen relationships and improve the robustness of RE models without the need for extensive labeled datasets. Unlike traditional distant supervision approaches that rely on rigid assumptions, LoRE employs heuristic methods for semantic disambiguation and relationship validation, thereby improving the quality and accuracy of the generated data. This dual approach significantly enhances the overall performance of RE tasks in low-resource settings, overcoming the limitations of earlier methods.

3. The LoRE Framework

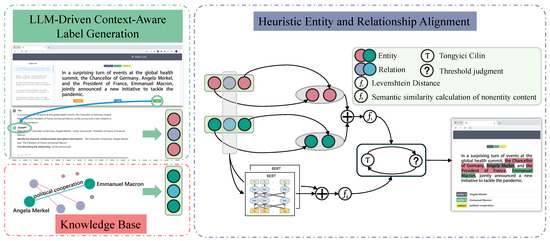

In the LoRE framework, RE in low-resource settings is achieved by combining distant supervision with LLMs. Initially, texts are annotated based on a pre-constructed knowledge base of entity relations. LLMs are then employed for OpenIE to autonomously identify entities and relationships. Entity alignment uses Levenshtein Distance, while relationship alignment relies on semantic similarity calculations, further refined by TongYiCi CiLin for semantic disambiguation. Semantic disambiguation refers to the process of resolving the ambiguity of words or phrases by identifying their intended meaning in a given context. TongYiCi CiLin provides a structured lexical resource that facilitates this process by aligning words with predefined semantic categories. This approach enhances the accuracy and reliability of alignment, as illustrated in Figure 1.

Figure 1.

The LoRE framework. This framework automates the extraction of open triples from unstructured text using ChatGPT, leveraging zero-shot learning to hypothesize their alignment with corresponding entities and relations in a knowledge base. Heuristic methods, reinforced by BERT-based semantic validation, assess the accuracy of these triples. The integration of TongYiCi CiLin aids in semantic disambiguation, ensuring the correct mapping of entity relationships and enhancing the overall quality of the extracted knowledge.

3.1. Knowledge Base for Enhanced RE

Central to our framework is the establishment of a robust knowledge base for RE. This knowledge base, composed of triples representing binary relationships, covers twelve types of interpersonal relationships. We curated triples from the PersonGraphDataSet [18] and supplemented them with facts from a large-scale, open-domain encyclopedia database to enhance their coverage. To ensure high-quality triples, we prioritized entries with clear semantic relationships and high-confidence annotations. The selection process involved filtering ambiguous or noisy triples based on heuristic rules, such as consistency checks across multiple sources. Additionally, we conducted a manual verification process for a subset of the triples to validate their correctness. The final knowledge base includes 35,995 triple instances.
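The consistency-based filtering can be sketched as follows. This is a minimal illustration under stated assumptions. Each source is assumed to yield (head, relation, tail) tuples. "Consistency across multiple sources" is read as keeping a triple only when at least `min_sources` sources agree on it. The function name, the `min_sources` parameter, and the example relation names are hypothetical and are not the authors' actual rules.

```python
from collections import defaultdict
from typing import Iterable

Triple = tuple[str, str, str]  # (head entity, relation, tail entity)


def build_knowledge_base(
    sources: dict[str, Iterable[Triple]],
    allowed_relations: set[str],
    min_sources: int = 2,
) -> set[Triple]:
    """Keep whitelisted, non-empty triples that appear in at least `min_sources` sources.

    Hypothetical consistency rule: agreement across independent sources is used
    as a proxy for a high-confidence, unambiguous triple.
    """
    support: dict[Triple, set[str]] = defaultdict(set)
    for source_name, triples in sources.items():
        for head, relation, tail in triples:
            triple = (head.strip(), relation.strip(), tail.strip())  # normalize whitespace
            if triple[1] in allowed_relations and triple[0] and triple[2]:
                support[triple].add(source_name)
    return {t for t, names in support.items() if len(names) >= min_sources}
```

With the default `min_sources=2`, a triple that appears in only one source, or whose relation lies outside the twelve relationship types, is filtered out. The manual verification step described above would then run on a subset of the surviving triples.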

3.2. LLM-Driven OpenIE

In our framework, we leverage GPT-3.5-turbo for zero-shot OpenIE, extracting relational data directly from unstructured text. Traditional methods rely on predefined patterns and manually associate entity pairs with fixed relational categories; our approach instead treats these associations as tentative. We chose GPT-3.5-turbo for its accessibility and cost-effectiveness at the time the study began. GPT-4 offers stronger contextual understanding and reasoning, but GPT-3.5-turbo performed sufficiently well on the target task in terms of both accuracy and computational efficiency. GPT-3.5-turbo dynamically identifies binary relationships in plain text without task-specific training or predefined relational labels. Its grasp of linguistic context and its zero-shot reasoning ability allow it to identify and refine relationships directly from the text. This zero-shot capability makes our approach particularly suitable for low-resource settings, where labeled data are scarce. The framework further applies heuristic refinement to mitigate errors stemming from the limitations of GPT-3.5-turbo, ensuring the plausibility and quality of the extracted triples. The final output is a set of coherent triples suitable for populating knowledge bases, improving data quality for downstream applications.
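A zero-shot OpenIE step of this kind reduces to two operations: building an instruction prompt and parsing the model's reply into triples. The sketch below assumes a hypothetical prompt wording and a reply format of one `(head | relation | tail)` line per triple; the paper does not give its actual prompt. No API is called here, so the reply string is passed in directly. In practice, it would come from a GPT-3.5-turbo chat completion. Dropping malformed lines and self-relations is only a simple stand-in for the heuristic refinement described above.

```python
import re

# Hypothetical zero-shot instruction; not the prompt used in the paper.
PROMPT_TEMPLATE = (
    "Extract all binary relations between people from the text below.\n"
    "Return one triple per line in the form (head | relation | tail).\n"
    "Text: {text}"
)

# Matches "(head | relation | tail)", trimming whitespace around each field.
TRIPLE_PATTERN = re.compile(
    r"^\(\s*([^|()]+?)\s*\|\s*([^|()]+?)\s*\|\s*([^|()]+?)\s*\)$"
)


def build_prompt(text: str) -> str:
    """Fill the zero-shot prompt template with the input passage."""
    return PROMPT_TEMPLATE.format(text=text)


def parse_triples(reply: str) -> list[tuple[str, str, str]]:
    """Parse one triple per line, skipping lines that do not match the expected format."""
    triples = []
    for line in reply.splitlines():
        match = TRIPLE_PATTERN.match(line.strip())
        if match:
            head, relation, tail = match.groups()
            if head != tail:  # discard degenerate self-relations
                triples.append((head, relation, tail))
    return triples
```

The parsed triples remain tentative at this point. They only become candidate training instances after passing the entity and relationship alignment steps.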

3.3. Heuristic Entity Alignment

Entity alignment involves identifying the entity pairs in the extracted open triples and matching them against those in the knowledge base. Entities may fully match, partially match, or be unrelated. For instance, the entity pairs ⟨Zhongshan Sun, Qingling Song⟩ and ⟨Zhongshan Mr. Sun, Qingling Mrs. Song⟩ do not match exactly but can be considered equivalent for our purposes. It is therefore necessary to assess the degree of match by computing the similarity between entity pairs. We employ the Levenshtein Distance as a heuristic measure. It is defined as the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one string into another. To enhance interpretability, we normalize the distance to the range 0 to 1 by dividing it by the length of the longer of the two strings; a smaller normalized distance therefore indicates higher similarity. In line with previous work on handling ambiguous or noisy LLM outputs [19], such heuristic measures make entity alignment more reliable. The match threshold was tuned experimentally on a development set. A threshold of 0.8 gave the best trade-off between precision and recall in entity matching, although it can be adjusted to suit the characteristics of a given dataset. The normalized distance is thus crucial for aligning entities extracted from OpenIE triples with those in the knowledge base.
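The normalized distance can be computed with the standard dynamic-programming edit distance. A minimal sketch follows. The text reports the 0.8 threshold without stating whether it applies to distance or to similarity. This sketch therefore assumes it is a lower bound on similarity, defined as one minus the normalized distance. The function names are illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a  # iterate over the longer string so each row has len(b) + 1 cells
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def normalized_distance(a: str, b: str) -> float:
    """Levenshtein distance divided by the longer string's length, in [0, 1]."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0


def entities_match(a: str, b: str, threshold: float = 0.8) -> bool:
    """Assumed rule: match when similarity = 1 - normalized distance reaches the threshold."""
    return 1.0 - normalized_distance(a, b) >= threshold
```

Under this assumed rule, "Zhongshan Sun" and "Zhongshan Mr. Sun" differ by four insertions and score about 0.76, which falls just below 0.8. The rule actually used may therefore combine the distance with other heuristics, such as substring containment.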

3.4. Heuristic Relationship Alignment

Heuristic relationship alignment validates entity pairs against known relationships in the knowledge base using semantic similarity. We use BERT to compute this similarity, since its bidirectional contextual encoding captures subtle semantic relationships. First, we encode the non-entity component of each open triple (its relation phrase) and each relationship descriptor into high-dimensional vectors. We then measure the semantic proximity of these vectors with cosine similarity. This shows how closely an open triple matches the semantic signature of a known relationship type, which is the basis for categorizing open triples within our knowledge base. Grounding relationship alignment in BERT’s contextual representations improves the precision of the LoRE framework.
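Relationship alignment comes down to comparing one relation phrase against every relationship descriptor and keeping the closest match by cosine similarity. The sketch below keeps the encoder abstract. The vectors are plain lists of floats standing in for BERT embeddings; in practice these might be mean-pooled `bert-base-chinese` outputs, but that is an assumption, since the pooling strategy is not specified. The relation names and toy vectors are hypothetical.

```python
import math
from typing import Optional, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """cos(u, v) = (u . v) / (|u| |v|); returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def best_relation(
    phrase_vec: Sequence[float],
    relation_vecs: dict[str, Sequence[float]],
) -> tuple[Optional[str], float]:
    """Return the known relation type whose descriptor embedding is closest to the phrase."""
    best_name, best_score = None, -1.0
    for name, vec in relation_vecs.items():
        score = cosine_similarity(phrase_vec, vec)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score
```

The returned score is what would then be compared against the BERT alignment threshold reported in Section 3.5.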

3.5. Semantic Disambiguation

We perform semantic disambiguation by setting a semantic similarity threshold. When the BERT similarity score exceeds this threshold, the entity–relation pair is aligned; otherwise, supplementary semantic enhancement is initiated. To broaden the coverage of semantic similarity, this study introduces a novel sentence semantic similarity algorithm that draws on the extensive lexical database TongYiCi CiLin [20]. The algorithm is invoked when the BERT similarity between the relationship category word and the text falls below the established threshold, which indicates that the category word is not adequately covered. The CiLin similarity algorithm uses the structured organization of TongYiCi CiLin to compute word similarity from semantic distance and the number of senses a word has. TongYiCi CiLin encodes the semantic relationships between words in a hierarchical tree, where the closeness of two word meanings depends on the branches they occupy. The computation locates the level at which the two words’ branches diverge and then adjusts the similarity with specific control parameters. The formula applied for this calculation is as follows:

$$\mathrm{Sim}(A,B) = d \cdot \cos\left(\frac{n\pi}{180}\right) \cdot \frac{n-k+1}{n}$$

where $\cos(n\pi/180)$ is the adjustment parameter that keeps the result within the [0,1] range and $(n-k+1)/n$ is the control parameter. $d$ is the initial value corresponding to the semantic level at which the two words diverge, $n$ is the number of branch elements, and $k$ is the branch spacing. The value of $d$ is set by level as follows: 0.1, 0.65, 0.8, 0.9, and 0.96. The Harbin Institute of Technology’s extended version of TongYiCi CiLin serves as the foundation for this algorithm; it comprises 77,456 words across 12 main categories, 95 categories, and 1428 subcategories. In our empirical analysis, we sampled 1000 word pairs with known semantic relationships and computed their semantic similarity with BERT. We took the mean score, 0.872, as the alignment threshold. Analogously, we set the CiLin-based sentence semantic similarity threshold to 0.915. Open triples whose similarity scores exceed these thresholds in both the BERT and CiLin evaluations are classified as positive examples; otherwise, they are negative.
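The CiLin word similarity can be sketched under a few stated assumptions. It follows the standard CiLin formulation Sim = d · cos(nπ/180) · (n − k + 1)/n, with d, n, and k as defined above. Codes are assumed to use the 8-character layout of the HIT extended CiLin, for example `Aa01A01=`: one character each for levels 1, 2, and 4, two digits each for levels 3 and 5, plus a trailing flag. n is taken as the number of sibling branches under the shared parent node, and k as the distance between the two words' branches in that ordering. Identical codes are assumed to score 1.0, and the toy lexicon used in the tests is hypothetical. The final helper encodes one reading of the decision rule: the fallback cascade described at the start of this section, in which CiLin is consulted only when the BERT score does not clear 0.872.

```python
import math

# d for the level (1 to 5) at which two codes first differ, as listed in the text.
D_BY_LEVEL = {1: 0.1, 2: 0.65, 3: 0.8, 4: 0.9, 5: 0.96}

# (start, end) character slices of an 8-character code such as "Aa01A01=":
# level 1 = "A", level 2 = "a", level 3 = "01", level 4 = "A", level 5 = "01".
LEVEL_SLICES = [(0, 1), (1, 2), (2, 4), (4, 5), (5, 7)]

BERT_THRESHOLD = 0.872   # mean BERT similarity over the 1000 sampled word pairs
CILIN_THRESHOLD = 0.915  # CiLin-based threshold reported in the text


def divergence_level(code_a: str, code_b: str) -> int:
    """First level (1 to 5) at which two codes differ, or 0 if all five levels agree."""
    for level, (start, end) in enumerate(LEVEL_SLICES, start=1):
        if code_a[start:end] != code_b[start:end]:
            return level
    return 0


def cilin_code_similarity(code_a: str, code_b: str, lexicon: list[str]) -> float:
    """Sim = d * cos(n*pi/180) * (n - k + 1) / n, evaluated at the divergence level."""
    level = divergence_level(code_a, code_b)
    if level == 0:
        return 1.0  # assumption: identical codes at all five levels
    if level == 1:
        return D_BY_LEVEL[1]  # different top-level categories
    parent = code_a[: LEVEL_SLICES[level - 2][1]]  # shared prefix (levels 1..level-1)
    start, end = LEVEL_SLICES[level - 1]
    # Sibling branches under the shared parent, in code order (lexicon must contain both codes).
    branches = sorted({code[start:end] for code in lexicon if code.startswith(parent)})
    n = len(branches)
    k = abs(branches.index(code_a[start:end]) - branches.index(code_b[start:end]))
    return D_BY_LEVEL[level] * math.cos(n * math.pi / 180) * (n - k + 1) / n


def cilin_word_similarity(codes_a: list[str], codes_b: list[str], lexicon: list[str]) -> float:
    """A word may have several senses (codes); take the maximum over all sense pairs."""
    return max(cilin_code_similarity(a, b, lexicon) for a in codes_a for b in codes_b)


def is_positive(bert_score: float, cilin_score: float) -> bool:
    """Fallback reading: BERT decides if it clears its threshold, otherwise CiLin does."""
    return bert_score > BERT_THRESHOLD or cilin_score > CILIN_THRESHOLD
```

Because n is small for most branches, cos(nπ/180) stays close to 1. The level coefficient d therefore dominates the score, and (n − k + 1)/n penalizes branches that lie far apart.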

4. Experiment Setting

Datasets. This study employs three relation extraction datasets to comprehensively assess the performance of the LoRE framework. DuIE [21], provided by Baidu, features a broad range of relationship types and entity categories. It is used to evaluate the model’s generalization across diverse and complex data. IPRE [22] contains interpersonal relationship information and is compared with CPRE to demonstrate LoRE’s improvements over traditional distant supervision methods. CPRE, the Chinese Person Relationship Extraction dataset, was constructed with the LoRE framework to rigorously test its capability. It comprises 9611 instances across ten categories of interpersonal relationships. Beyond serving as a benchmark for evaluating LoRE’s efficacy, CPRE also contributes to the broader field of RE research.

Experimental Stages. The experiments comprise an expert evaluation and a three-stage model evaluation. The expert evaluation assesses the proportion of positive examples in sampled data. The three-stage model evaluation is as follows [23]:

- Stage 1: Individual Dataset Performance Assessment. In this phase, our goal is to establish a baseline performance by evaluating models trained and tested on individual datasets separately. By doing so, we can understand the unique challenges and characteristics of each dataset. This baseline helps to identify the specific strengths and weaknesses of the CPRE, DuIE, and IPRE datasets.

- Stage 2: Comparative Performance Assessment on Mixed CPRE and IPRE Test Sets. In this phase, we aim to assess the generalization capabilities of models trained on the CPRE dataset. We create mixed test sets by combining CPRE and IPRE data in different ratios (3:7 and 1:1) and evaluate the models’ performance on these test sets. This approach allows us to determine how well the CPRE data, generated by the LoRE framework, can improve the models’ robustness against noisier data from the IPRE dataset.

- Stage 3: Comprehensive Evaluation on Mixed CPRE and DuIE Test Sets. The objective of this phase is to further test the quality of the CPRE dataset by integrating it with the high-quality, manually annotated DuIE dataset. We train the models on the CPRE dataset and test them on mixed test sets with various proportions of CPRE and DuIE data. This evaluation helps to validate whether the CPRE dataset can effectively supplement and enhance the performance of models when combined with manually annotated data.
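A mixed test set at a given ratio, as used in Stages 2 and 3, can be sketched as follows. The ratio is assumed to be expressed as CPRE:other, so 3:7 means 30% CPRE, which matches the description of 1:1 as an increased CPRE proportion. Instances are assumed to be sampled without replacement with a fixed seed. The paper does not state how the mixed sets were sized or sampled, so `total` and `seed` are hypothetical parameters.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def mix_test_sets(
    cpre: Sequence[T],
    other: Sequence[T],
    ratio: tuple[int, int],
    total: int,
    seed: int = 0,
) -> list[T]:
    """Sample `total` instances with CPRE:other proportions given by `ratio`."""
    rng = random.Random(seed)  # fixed seed for reproducible test sets
    n_cpre = total * ratio[0] // (ratio[0] + ratio[1])
    n_other = total - n_cpre
    if n_cpre > len(cpre) or n_other > len(other):
        raise ValueError("not enough instances for the requested mix")
    mixed = rng.sample(list(cpre), n_cpre) + rng.sample(list(other), n_other)
    rng.shuffle(mixed)  # interleave sources so order carries no signal
    return mixed
```

For example, `mix_test_sets(cpre_test, ipre_test, (3, 7), total=1000)` would yield 300 CPRE and 700 IPRE instances. Here `cpre_test` and `ipre_test` are hypothetical lists of test instances.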

Models for comparison. To substantiate the performance of our framework, we selected a representative set of models for comparison: Bert-base-chinese [24], mREBEL [25], GPT-2 [26], OneRel [27], and CasRel [28].

Implementation Details. All models were trained and evaluated on an NVIDIA GeForce RTX 4090 GPU within the PyTorch framework. We initialized the training with a learning rate of and employed a step decay strategy for learning rate adjustment. Batch sizes of 32 and 64 were tested to assess their impact on model performance. Parameters were optimized with the AdamW optimizer, and dropout was applied as a regularization technique to prevent overfitting. Models were evaluated on the validation set after each epoch, and the best-performing model was retained for final evaluation on the test set.
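The step-decay schedule and best-on-validation model selection described above can be sketched in plain Python. In PyTorch, this corresponds to `torch.optim.AdamW` combined with `torch.optim.lr_scheduler.StepLR`, stepped once per epoch. The initial learning rate is not given, so `base_lr`, `step_size`, and `gamma` below are hypothetical placeholders. `train_one_epoch` and `evaluate` stand in for the model-specific training and validation code.

```python
from typing import Callable


def step_decay_lr(base_lr: float, epoch: int, step_size: int, gamma: float) -> float:
    """Learning rate at `epoch`: multiplied by `gamma` once every `step_size` epochs."""
    return base_lr * gamma ** (epoch // step_size)


def train_with_model_selection(
    num_epochs: int,
    train_one_epoch: Callable[[float], object],  # hypothetical: trains one epoch at lr, returns a checkpoint
    evaluate: Callable[[object], float],         # hypothetical: validation score (e.g., F1) of a checkpoint
    base_lr: float,                              # placeholder: the paper's value is not given
    step_size: int = 2,                          # placeholder decay interval (epochs)
    gamma: float = 0.5,                          # placeholder decay factor
) -> tuple[object, float]:
    """Evaluate after every epoch and keep the checkpoint with the best validation score."""
    best_checkpoint, best_score = None, float("-inf")
    for epoch in range(num_epochs):
        lr = step_decay_lr(base_lr, epoch, step_size, gamma)
        checkpoint = train_one_epoch(lr)
        score = evaluate(checkpoint)
        if score > best_score:
            best_checkpoint, best_score = checkpoint, score
    return best_checkpoint, best_score
```

Only the retained checkpoint is evaluated on the test set, so test data never influences model selection.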

5. Experimental Result

5.1. Expert Evaluation

A systematic quality assessment of the CPRE dataset was conducted on a 30% random sample comprising 2883 instances. Three NLP domain experts performed an in-depth evaluation against three predefined criteria. These were the clarity with which each sentence expresses its semantic information, the accuracy of the entity pairs’ correspondence to the knowledge base, and the consistency of each sentence with its predefined relationship category.
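The sampling arithmetic and the per-category precision can be reproduced with a short sketch. Taking 30% of the 9611 CPRE instances and rounding down gives the 2883 evaluated instances. Per-category precision is the share of sampled instances that the experts judged positive. The record fields (`category`, `is_positive`) and the fixed seed are hypothetical.

```python
import random
from collections import Counter


def draw_sample(instances: list, fraction: float = 0.3, seed: int = 0) -> list:
    """Uniform random sample without replacement; the size is rounded down."""
    size = int(len(instances) * fraction)
    return random.Random(seed).sample(instances, size)


def precision_by_category(judged: list[dict]) -> dict[str, float]:
    """Share of expert-judged positive instances per relationship category."""
    totals, positives = Counter(), Counter()
    for record in judged:
        totals[record["category"]] += 1
        positives[record["category"]] += int(record["is_positive"])
    return {category: positives[category] / totals[category] for category in totals}
```

For the CPRE dataset, `len(draw_sample(all_instances))` equals 2883 when `all_instances` holds the 9611 instances.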

The expert evaluation results, detailed in Table 1, show an average precision above 70% for most categories. The ‘Spouses’ category has the highest precision, suggesting that the LoRE framework is particularly adept at identifying and classifying this relationship type. The lower precision of the ‘Classmates’ category, by contrast, points to opportunities for further fine-tuning. These results underscore the strengths of the LoRE framework in accurately extracting and categorizing relationships. They also indicate clear directions for improving its precision and reliability across all relationship types in future iterations.

Table 1.

Expert evaluation of a 30% random sample of the CPRE dataset. The evaluation includes both positive and negative instances for each category.

5.2. Model Evaluation

Stage 1: Results of Individual Dataset Performance Assessment. The experimental results, summarized in Table 2, reveal distinct trends across the CPRE, DuIE, and IPRE datasets. On CPRE, OneRel performs best with an F1 score of 0.920, closely followed by CasRel with 0.917. This suggests that models designed for joint entity and relation extraction, such as OneRel and CasRel, are well suited to datasets generated by frameworks like LoRE that prioritize data quality and precise annotations. DuIE is larger and covers more diverse relation types. On this dataset, CasRel outperforms the other models with an F1 score of 0.937, reflecting the robustness of its architecture on diverse and complex relational structures. The IPRE dataset shows a different trend, with CasRel and OneRel reaching F1 scores of 0.770 and 0.731, respectively. Models such as Bert-base-chinese and mREBEL show lower precision on IPRE because of the noise introduced by its traditional distant supervision. The higher scores on CPRE indicate that the LoRE framework mitigates these noise-related challenges. Across the three datasets, the models generally perform best on DuIE, likely because its manual annotations provide clearer training signals. CPRE, though still influenced by distant supervision noise, yields satisfactory performance, suggesting that LoRE effectively improves data quality for RE tasks in low-resource settings.

Table 2.

Performance comparison of RE models on CPRE, DuIE, and IPRE datasets.
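The F1 scores compared in this stage are conventionally computed at the triple level. The following is a minimal sketch of that computation. It assumes exact-match micro-averaging over (head, relation, tail) triples, since the paper does not state its matching criterion or averaging scheme.

```python
def micro_prf(gold: list[set], predicted: list[set]) -> tuple[float, float, float]:
    """Micro precision, recall, and F1 over per-sentence sets of (head, relation, tail) triples."""
    true_pos = sum(len(g & p) for g, p in zip(gold, predicted))  # exact triple matches
    n_pred = sum(len(p) for p in predicted)
    n_gold = sum(len(g) for g in gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Micro-averaging weights every triple equally, so frequent relation types dominate the score. A macro-average over relation types would instead expose weaker categories such as ‘Classmates’.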

Stage 2: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the CPRE and IPRE Datasets. When evaluating models on mixed datasets with varying proportions of CPRE and IPRE data, we observed performance differences based on the dataset composition, as shown in Table 3. Specifically, in the 3:7 mixed test set scenario, all models experienced a performance decline, though the CasRel model demonstrated notable robustness, with an F1 score of 0.783. The adaptability of CasRel under such conditions further underscores its suitability for mixed dataset scenarios. Increasing the CPRE data proportion to a 1:1 ratio resulted in significant performance improvements, with the CasRel and OneRel models achieving F1 scores of 0.843 and 0.825, respectively. This highlights their adaptability and generalization capabilities in handling mixed data. For models trained on the IPRE dataset, the introduction of CPRE data into mixed test sets improved performance metrics. While CasRel’s performance slightly decreased in the 3:7 mixed test set, with an F1 score of 0.762, a balanced 1:1 ratio led to a substantial improvement, achieving an F1 score of 0.790. This indicates that the high-quality CPRE dataset positively impacts model generalization when exposed to mixed data conditions, emphasizing the value of integrating high-quality datasets to counteract noise-induced performance degradation. Detailed analysis and data for this experiment are provided in Appendix B.

Table 3.

Comparative performance of models trained on different datasets across mixed CPRE and IPRE (DuIE) test sets with varied ratios.

Stage 3: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the CPRE and DuIE Datasets. When evaluating models on mixed test sets with varying proportions of CPRE and DuIE data, distinct performance trends emerged. In the 3:7 mixed test set scenario, all models exhibited a reduction in performance, with the CasRel model experiencing the least decline, indicating its stability when tested predominantly with high-quality annotated data. The stability of CasRel across these settings highlights its capability to balance performance under varied data distributions. In contrast, the 1:1 mixed test set showed that, despite a performance decrease, the OneRel and CasRel models achieved performances comparable to their results on the DuIE-only test set, suggesting that datasets constructed by the LoRE framework, when combined equally with manually annotated data, offer a balanced informational foundation beneficial for model generalization. For models trained on the DuIE dataset, the introduction of CPRE data in mixed test sets resulted in performance declines, as evidenced by the F1 scores. However, the 1:1 mixed test set demonstrated a nuanced performance recovery, particularly for the CasRel model, which achieved an F1 score of 0.935. This recovery underscores the complementary nature of CPRE and DuIE data, despite differences in annotation quality and data distribution. Detailed analysis and data for this experiment are provided in Appendix C.

In conclusion, the investigation into mixed test sets shows that the LoRE framework can autonomously synthesize high-quality datasets for RE tasks. While over-reliance on automatically generated data may introduce performance constraints, the results highlight LoRE’s utility in producing datasets that approximate the quality of manually annotated data. This indicates the framework’s significant role in advancing data-driven approaches in NLP, especially for RE tasks.

5.3. Ablation Study

To understand how each component of the LoRE framework contributes to the quality of the generated dataset, we conducted a series of ablation experiments. The configurations were: (1) complete framework, (2) without LLM-OpenIE, (3) without heuristic entity alignment, (4) without heuristic relationship alignment, and (5) without semantic disambiguation. Each configuration generated 100 data instances covering the relationship types defined in the CPRE dataset. The evaluation metrics were the proportion of positive instances and a data richness score on a scale of 1 to 5. Domain experts assigned this score according to the depth and complexity of the relationship expressions in the generated samples for each relationship type.

Table 4 presents the results for each configuration. Removing the LLM-OpenIE component caused the largest drop: the proportion of positive instances fell from 85% to 65%, and the data richness score fell from 4.5 to 2.5. This underscores the central role of LLM-OpenIE in generating high-quality datasets, especially in enhancing the depth and accuracy of relationship expressions. Removing any of the other components (heuristic entity alignment, heuristic relationship alignment, or semantic disambiguation) also degraded performance, but to a more modest extent. These components therefore contribute less to data quality than LLM-OpenIE does. These findings suggest that future work should focus on optimizing LLM-OpenIE and on integrating the other components more effectively to further improve the overall quality and applicability of the dataset.

Table 4.

Impact of key components on framework performance. The upward arrows (↑) indicate that higher values are better.

5.4. Evaluation of Different LLMs on the LLM-OpenIE Component

This section investigates how the choice of LLM affects the LLM-OpenIE component of the LoRE framework. The baseline configuration uses GPT-3.5, which produced robust results in the previous evaluations. We replace it in turn with Gemini-pro, Claude2, and GPT-4, evaluating each model under the same conditions to ensure consistency. Performance is assessed with the Positive Instance Proportion and the Data Richness Score, which reflect the quality and depth of the relationships extracted by each model.

The results are summarized in Table 5. Replacing GPT-3.5 with Gemini-pro decreased both metrics, indicating that it is less suitable for this application. Claude2 performed slightly below GPT-3.5 but remained competitive, making it a viable alternative with near-baseline performance. GPT-4 outperformed both GPT-3.5 and Claude2, improving both the Positive Instance Proportion and the Data Richness Score. These results suggest that advances in LLM capabilities can substantially enhance frameworks like LoRE, particularly in tasks requiring deep semantic processing and rich data generation. Selecting an effective and cost-efficient LLM can also yield more accurate and comprehensive datasets, broadening the framework’s applicability to a wider range of NLP tasks.

Table 5.

Performance comparison of different LLMs within the LLM-OpenIE component. The upward arrows (↑) indicate that higher values are better.

Overall, our experimental results provide strong evidence that the LoRE framework addresses low-resource relation extraction effectively. In particular, the datasets it generates allow RE models to perform competitively, as shown by the close performance metrics obtained with the CPRE and DuIE datasets. This supports the framework's use in scenarios where annotated data are scarce. However, the performance dip observed on mixed test sets points to limitations. The framework can enhance model generalization when its output is balanced with high-quality data. Yet the returns diminish as the proportion of generated data grows, which highlights the importance of maintaining a favorable signal-to-noise ratio in the training material and calls for careful calibration of automated data generation. Furthermore, results across relation types indicate that the framework captures general relationships well but may require further refinement to handle more complex or nuanced inter-entity associations. This need is particularly evident when automated data are integrated with manually annotated resources, where maintaining consistency and accuracy becomes critical.

6. Conclusions

The LoRE framework demonstrates significant potential for constructing relation extraction datasets, particularly in low-resource and zero-shot learning contexts. By combining distant supervision with LLMs, LoRE automates dataset generation and offers a promising solution to the problem of data scarcity. Its ability to produce high-quality data in zero-shot scenarios, as evidenced by the performance comparisons on the CPRE and DuIE datasets, highlights its value for robust model training and generalization.

The contributions of this study are summarized as follows:

- Innovative Proposition of the LoRE Framework. We introduce LoRE, a novel approach to low-resource relation extraction that integrates distant supervision with LLMs. By incorporating zero-shot learning capabilities, LoRE addresses key challenges such as data noise, sparsity, and the difficulty of labeling complex relations in low-resource settings. Its entity recognition, context-aware relation extraction, and heuristic alignment mechanisms offer a significant improvement over traditional methods.

- Development of the CPRE Dataset. To validate the effectiveness of LoRE, we constructed the CPRE dataset. This dataset contains a diverse set of annotated relational data specifically focused on Chinese interpersonal relationships. It serves as a key resource for testing LoRE in the context of low-resource RE and demonstrates its potential for improving relation extraction performance in languages with limited annotated data.

- Advancement in Low-Resource RE. LoRE's design makes it particularly effective in low-resource settings, as demonstrated by its application to Chinese person relationship extraction. In this setting, it improves performance while learning efficiently from limited data. Its flexibility should also make it adaptable to other domains with similar resource constraints, offering a scalable solution for low-resource RE tasks.

Moving forward, we aim to extend the framework to other low-resource tasks and to strengthen its zero-shot learning capabilities so that it handles a broader range of relation types, especially in domains with limited labeled data. Future work will focus on refining the noise management strategies used in distant supervision and on integrating more advanced techniques for entity recognition and complex relation extraction. These improvements are intended to capture more nuanced relationships and make LoRE more adaptable to a wide range of NLP tasks, particularly in low-resource and zero-shot settings.

7. Limitations

While the LoRE framework holds significant promise for low-resource relation extraction, several limitations should be addressed to enhance its effectiveness. One key challenge is the noise inherently introduced by integrating distant supervision with LLMs, which can degrade the quality of the automatically generated datasets. In addition, LoRE relies on pre-constructed knowledge bases for initial annotation and entity alignment, which can limit its performance in underexplored domains or in languages with limited resources. LLMs, including ChatGPT, are also known to suffer from hallucinations, stochastic parroting, and contextual misinterpretation, all of which can introduce inaccuracies into the generated relational data. To mitigate these issues, LoRE incorporates heuristic refinement and semantic disambiguation techniques that improve the plausibility and credibility of the data.

The framework was validated primarily on the CPRE dataset. Although valuable, this dataset may not fully represent the diversity of relation types or the linguistic and cultural variation found across datasets. Evaluation metrics such as precision, recall, and F1 score, though important, may not capture the full complexity of real-world use or the adaptability required in dynamic, continuously evolving domains. Broader challenges of using LLMs in downstream NLP tasks, such as bias and domain-specific limitations, also apply. These challenges were considered in this study, and future work aims to develop more robust strategies to address them and improve the framework's generalizability. Finally, the framework's long-term applicability is constrained by the dynamic nature of language and the constant evolution of knowledge, which make it challenging to keep the framework relevant over time.
Addressing these limitations, particularly by strengthening the robustness of zero-shot learning and extending the framework to new domains and languages, is essential for maximizing its impact and broadening its applicability across NLP tasks and real-world scenarios.

Author Contributions

Conceptualization, P.H. and G.L.; Data curation, G.L. and Y.W.; Funding acquisition, P.H.; Methodology, P.H. and Y.W.; Project administration, P.H.; Resources, Y.W.; Software, G.L. and Y.W.; Supervision, P.H.; Visualization, G.L.; Writing—original draft, G.L.; Writing—review and editing, P.H. and Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (61572517).

Data Availability Statement

The datasets can be found in the following repositories: DuIE https://aistudio.baidu.com/datasetdetail/78774 (accessed on 15 December 2024); IPRE https://github.com/SUDA-HLT/IPRE (accessed on 15 December 2024); CPRE https://github.com/anonymous-12345678/LoRE/tree/main (accessed on 15 December 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| RE | Relation Extraction |
| NLP | Natural Language Processing |
| LLM | Large Language Model |
| CPRE | The Chinese Person Relationship Extraction Dataset |

Appendix A. The Calculation of the Levenshtein Distance
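The Levenshtein (edit) distance between two strings is the minimum number of single-character insertions, deletions, and substitutions needed to turn one into the other. The following is a minimal sketch of the standard dynamic-programming computation. This is the textbook formulation and may differ in detail from the exact procedure used in LoRE. Each Chinese character is a single Python `str` element, so the code works on Chinese entity names without segmentation. The `normalized_similarity` helper scales the distance into [0, 1] so that names of different lengths can be compared. This helper is an illustrative assumption, not a documented part of the framework.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions turning a into b."""
    if len(a) < len(b):
        a, b = b, a  # distance is symmetric; keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))  # distances from the empty prefix of a to each prefix of b
    for i, ca in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string takes i deletions
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free when characters match)
            ))
        previous = current
    return previous[-1]


def normalized_similarity(a: str, b: str) -> float:
    """1 - distance / longer length; 1.0 means identical strings (hypothetical helper)."""
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))
```

For example, `levenshtein("kitten", "sitting")` is 3, and `levenshtein("刘德华", "刘德")` is 1. The two-row layout keeps memory proportional to the shorter string rather than to the product of both lengths.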

Appendix B. Detailed Analysis of Experimental Results in Stage 2

Stage 2: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the CPRE Dataset. On the 3:7 mixed test set, all models showed a noticeable decline in performance metrics relative to the standalone CPRE test set, as detailed in Table 3. Despite the inclusion of noisier IPRE data, the casRel model remained resilient, with an F1 score of 0.783, indicating robustness to data impurities. The OneRel model followed closely with an F1 score of 0.753, suggesting that its joint entity and relation extraction mechanism handles heterogeneous data effectively. Moving to a 1:1 mixed test set yielded a substantial improvement, with casRel reaching an F1 score of 0.843 and OneRel reaching 0.825. This improvement implies that a balanced share of high-quality CPRE data mitigates the adverse effects of the noise prevalent in the IPRE dataset, and that both models adapt well to diverse data characteristics. Overall, these results show that models trained on the CPRE dataset, constructed with the LoRE framework, adapt robustly to mixed test sets. The casRel and OneRel models maintain high performance on both the 3:7 and 1:1 mixtures, reflecting the quality and generalizability of the CPRE dataset. These outcomes reinforce LoRE's potential for constructing datasets that support model generalization, even when the test sets diverge significantly from the training data distribution.
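The mixed test sets can be built by sampling each source dataset in the stated proportion. The following sketch assumes that the first number in a ratio such as 3:7 refers to CPRE and the second to the other dataset. This assumption is consistent with the 3:7 set being described as dominated by DuIE data in Appendix C. The function name `mix_test_sets`, the `total` size, and the fixed seed are hypothetical choices, not the authors' script.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_test_sets(
    set_a: Sequence[T],
    set_b: Sequence[T],
    ratio: Tuple[int, int],
    total: int,
    seed: int = 0,
) -> List[T]:
    """Sample `total` items so that set_a : set_b follows `ratio` (e.g. (3, 7)), then shuffle."""
    parts_a, parts_b = ratio
    n_a = round(total * parts_a / (parts_a + parts_b))
    n_b = total - n_a
    if n_a > len(set_a) or n_b > len(set_b):
        raise ValueError("not enough examples to honour the requested ratio")
    rng = random.Random(seed)  # fixed seed so every model is tested on the same mixture
    mixed = rng.sample(list(set_a), n_a) + rng.sample(list(set_b), n_b)
    rng.shuffle(mixed)  # avoid ordering effects from concatenating the two sources
    return mixed
```

With this setup, `mix_test_sets(cpre_test, ipre_test, (3, 7), 1000)` would draw 300 CPRE and 700 IPRE examples. Sampling without replacement from each source prevents duplicated examples from inflating either share.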

Stage 2: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the IPRE Dataset. On the mixed test sets comprising both CPRE and IPRE data, the models trained on IPRE data showed a decline in performance metrics, as shown in Table 3. On the 3:7 mixed test set, the decline in casRel's performance was marginal, with an F1 score of 0.762. This suggests that the model remains robust when higher-quality CPRE data are mixed in. When the proportion of CPRE data increased to 1:1, casRel's performance improved noticeably, reaching an F1 score of 0.790 and indicating a beneficial influence of the CPRE dataset's quality. These outcomes point to a discernible difference in dataset quality. Models trained on IPRE performed to varying degrees on mixed test sets containing CPRE data. Including CPRE data in the test sets was associated with performance improvements, which suggests that the CPRE dataset constructed with the LoRE framework is of higher quality. The effect is most pronounced on the balanced 1:1 mixed test set. Because CPRE data appeared only in the test sets and were not used for training, these findings indirectly affirm the quality of the CPRE dataset and its positive effect on the models' ability to generalize under mixed data conditions.

Appendix C. Detailed Analysis of Experimental Results in Stage 3

Stage 3: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the CPRE Dataset. As shown in Table 3, all models performed worse on the 3:7 and 1:1 mixed CPRE and DuIE test sets than on the original test sets. Performance dropped generally on the 3:7 mixed test set, which is dominated by DuIE data, and casRel showed the smallest decline. This suggests that although the LoRE framework can generate valuable training data, the noise inherent in these data may still hurt performance when models are tested predominantly on high-quality annotated data. By contrast, on the 1:1 mixed test set, OneRel and casRel performed comparably to the DuIE-only test set despite some decrease, and GPT-2 showed a marked performance gain. This implies that datasets constructed by the LoRE framework, when combined in equal proportion with manually annotated data, can provide a rich and balanced informational foundation that benefits model generalization.

The decrease in performance on the mixed test sets may indicate that, although the LoRE framework can construct useful datasets, combining them with manually annotated data may introduce noise that challenges the models when they encounter previously unseen expressions during testing. Mixing the datasets may also alter the data distribution, which could further limit the models' ability to generalize to new data. The better performance on the 1:1 mixed test set than on the 3:7 mixture suggests that a more even data distribution favors model generalization. A likely reason is that the 1:1 mixture balances the characteristics of the LoRE-generated CPRE data and the DuIE data. In the 3:7 mixture, the features of the DuIE dataset may dominate, so the distinctive aspects of the CPRE data may be underused.

Stage 3: Performance on Varying Proportions of Mixed Test Sets of Models Trained on the DuIE Dataset. Models originally trained on DuIE showed a decline in performance on mixed test sets containing CPRE data, as reflected in the F1 scores in Table 3. The 3:7 mixed test set produced slightly lower F1 scores, such as 0.897 for the Bert-base-chinese model and 0.899 for mREBEL. GPT-2 declined more sharply, to 0.875. This decrease is consistent with the expectation that models are optimized for the distribution on which they were trained. On the balanced 1:1 mixed test set, performance partially recovered but did not surpass the original DuIE-trained results. The casRel model's result on the 1:1 mixed set is notable: its F1 score of 0.935 is strong but still falls short of its performance on DuIE alone. This indicates that although the CPRE dataset's influence is beneficial, it does not fully bridge the gap between the two data distributions.
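In most relational-triple extraction work, F1 scores such as those reported above are micro-averaged over exactly matched (subject, relation, object) triples. This section does not state that convention explicitly. Assuming it applies, the metric can be computed as in the following sketch. The function name `triple_prf` is hypothetical, and each element of `gold` and `pred` is assumed to be the set of triples for one sentence.

```python
from typing import Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def triple_prf(
    gold: Iterable[Set[Triple]],
    pred: Iterable[Set[Triple]],
) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 with exact triple matching per sentence."""
    gold_list: List[Set[Triple]] = list(gold)
    pred_list: List[Set[Triple]] = list(pred)
    if len(gold_list) != len(pred_list):
        raise ValueError("gold and pred must cover the same sentences")
    tp = n_pred = n_gold = 0
    for g, p in zip(gold_list, pred_list):
        tp += len(g & p)  # a triple counts only if subject, relation, and object all match
        n_pred += len(p)
        n_gold += len(g)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Micro-averaging pools the counts over all sentences before computing the ratios, so sentences with many triples carry proportionally more weight than in a per-sentence (macro) average.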

Taken together, these results give a clear picture of relative dataset quality. The CPRE dataset contributes positively to the models' adaptability but does not fully substitute for the specialized training that models receive from the DuIE dataset. The performance metrics show that LoRE's CPRE data strengthen model robustness to a certain extent, as evidenced by the smaller performance drop in mixed data scenarios. The models' sustained performance on mixed test sets also suggests that the CPRE dataset is of high quality. In short, the LoRE framework can generate quality datasets that complement existing resources such as DuIE.

References

- Zhou, W.; Zhang, S.; Naumann, T.; Chen, M.; Poon, H. Continual Contrastive Finetuning Improves Low-Resource Relation Extraction. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 13249–13263.

- Gururaja, S.; Dutt, R.; Liao, T.; Rosé, C. Linguistic representations for fewer-shot relation extraction across domains. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 7502–7514.

- Xu, B.; Wang, Q.; Lyu, Y.; Dai, D.; Zhang, Y.; Mao, Z. S2ynRE: Two-stage Self-training with Synthetic data for Low-resource Relation Extraction. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 8186–8207.

- Bonifacio, L.; Abonizio, H.; Fadaee, M.; Nogueira, R. Inpars: Data augmentation for information retrieval using large language models. arXiv 2022, arXiv:2202.05144.

- Gholami, S.; Omar, M. Does Synthetic Data Make Large Language Models More Efficient? arXiv 2023, arXiv:2310.07830.

- Mintz, M.; Bills, S.; Snow, R.; Jurafsky, D. Distant Supervision for Relation Extraction without Labeled Data. In Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP: Volume 2 - Volume 2, ACL ’09, Singapore, 2–7 August 2009; pp. 1003–1011.

- Schick, T.; Schütze, H. True Few-Shot Learning with Prompts - A Real-World Perspective. Trans. Assoc. Comput. Linguist. 2022, 10, 716–731.

- Xiao, Y.; Jin, Y.; Cheng, R.; Hao, K. Hybrid attention-based transformer block model for distant supervision relation extraction. Neurocomputing 2022, 470, 29–39.

- Ben Abacha, A.; Zweigenbaum, P. Automatic extraction of semantic relations between medical entities: A rule based approach. J. Biomed. Semant. 2011, 2, 1–11.

- Cui, M.; Li, L.; Wang, Z.; You, M. A Survey on Relation Extraction. In Proceedings of the Knowledge Graph and Semantic Computing. Language, Knowledge, and Intelligence, Chengdu, China, 26–29 August 2017; Li, J., Zhou, M., Qi, G., Lao, N., Ruan, T., Du, J., Eds.; Springer: Singapore, 2017; pp. 50–58.

- Zhou, K.; Qiao, Q.; Li, Y.; Li, Q. Improving Distantly Supervised Relation Extraction by Natural Language Inference. Proc. AAAI Conf. Artif. Intell. 2023, 37, 14047–14055.

- Chen, J.; Guo, Z.; Yang, J. Distant Supervision for Relation Extraction via Noise Filtering. In Proceedings of the 2021 13th International Conference on Machine Learning and Computing, ICMLC ’21, Shenzhen, China, 26 February–1 March 2021; pp. 361–367.

- Kumar, V.; Ntoutsi, E.; Rajawat, P.S.; Medda, G.; Recupero, D.R. Unlocking LLMs: Addressing Scarce Data and Bias Challenges in Mental Health. arXiv 2024, arXiv:2412.12981.

- Hiller, M.; Ma, R.; Harandi, M.; Drummond, T. Rethinking generalization in few-shot classification. Adv. Neural Inf. Process. Syst. 2022, 35, 3582–3595.

- Wadhwa, S.; Amir, S.; Wallace, B. Revisiting Relation Extraction in the era of Large Language Models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15566–15589.

- Xu, X.; Zhu, Y.; Wang, X.; Zhang, N. How to Unleash the Power of Large Language Models for Few-shot Relation Extraction? In Proceedings of the Fourth Workshop on Simple and Efficient Natural Language Processing (SustaiNLP), Toronto, ON, Canada, 13 July 2023; pp. 190–200.

- Li, B.; Fang, G.; Yang, Y.; Wang, Q.; Ye, W.; Zhao, W.; Zhang, S. Evaluating ChatGPT’s Information Extraction Capabilities: An Assessment of Performance, Explainability, Calibration, and Faithfulness. arXiv 2023, arXiv:2304.11633.

- Liu, H. Person Relation Knowledge Graph. GitHub Repository. 2018. Available online: https://github.com/liuhuanyong/PersonRelationKnowledgeGraph (accessed on 15 December 2024).

- Li, J.; Cheng, X.; Zhao, W.X.; Nie, J.Y.; Wen, J.R. Halueval: A large-scale hallucination evaluation benchmark for large language models. arXiv 2023, arXiv:2305.11747.

- Huang, C.; Li, X.; Jiang, Y.; Lv, W.; Xu, M. Feature Extension for Chinese Short Text Based on Tongyici Cilin. In Computer and Information Science; Springer International Publishing: Cham, Switzerland, 2023; pp. 167–180.

- Li, S.; He, W.; Shi, Y.; Jiang, W.; Liang, H.; Jiang, Y.; Zhang, Y.; Lyu, Y.; Zhu, Y. DuIE: A Large-Scale Chinese Dataset for Information Extraction. In Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019, Proceedings, Part II; Tang, J., Kan, M.Y., Zhao, D., Li, S., Zan, H., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 791–800.

- Wang, H.; He, Z.; Ma, J.; Chen, W.; Zhang, M. IPRE: A Dataset for Inter-Personal Relationship Extraction. In Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019, Proceedings, Part II; Tang, J., Kan, M.Y., Zhao, D., Li, S., Zan, H., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 103–115.

- Brody, S.; Wu, S.; Benton, A. Towards realistic few-shot relation extraction. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 5338–5345.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Huguet Cabot, P.L.; Navigli, R. REBEL: Relation Extraction By End-to-end Language generation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 16–20 November 2021; pp. 2370–2381.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Shang, Y.M.; Huang, H.; Mao, X. OneRel: Joint Entity and Relation Extraction with One Module in One Step. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 11285–11293.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A Novel Cascade Binary Tagging Framework for Relational Triple Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1476–1488.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).