How to Construct Behavioral Patterns in Immersive Learning Environments: A Framework, Systematic Review, and Research Agenda

Abstract

1. Introduction

2. Methods

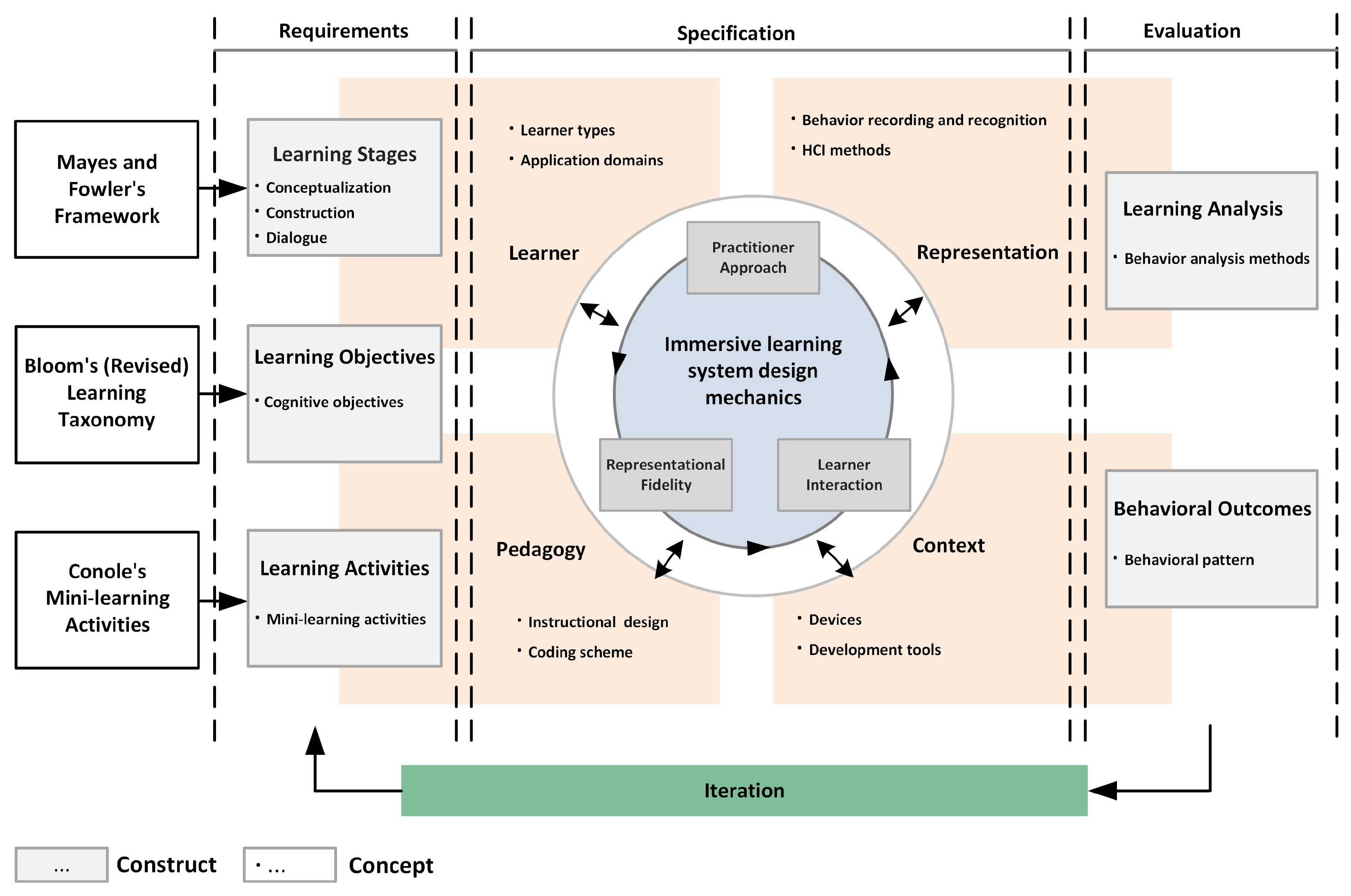

2.1. Conceptual Framework

2.1.1. Requirement

2.1.2. Specification

- The 1st dimension in the framework involves learner specification. In this study, the learner types and application domains are examined.

- The 2nd dimension in the framework analyzes the pedagogical perspective when conducting learning activities and includes a consideration of instructional models to support learners throughout the learning process. The selection of learning theories can particularly influence the analysis of intended learning outcomes. Consequently, the systematic review of pedagogical perspectives, such as instructional design and coding scheme development, is essential for identifying effective ways to facilitate knowledge construction and transformation in 3D VLEs.

- The 3rd dimension in the framework outlines the representation of the immersive learning system, including the interactive representation of the learning experience and pedagogical characteristics. This highlights the significance of learner interaction, one of the two unique characteristics of 3D VLEs identified in Dalgarno and Lee’s model [53]. In this study, learning behavior related to human–computer interactions and observation data collection are examined.

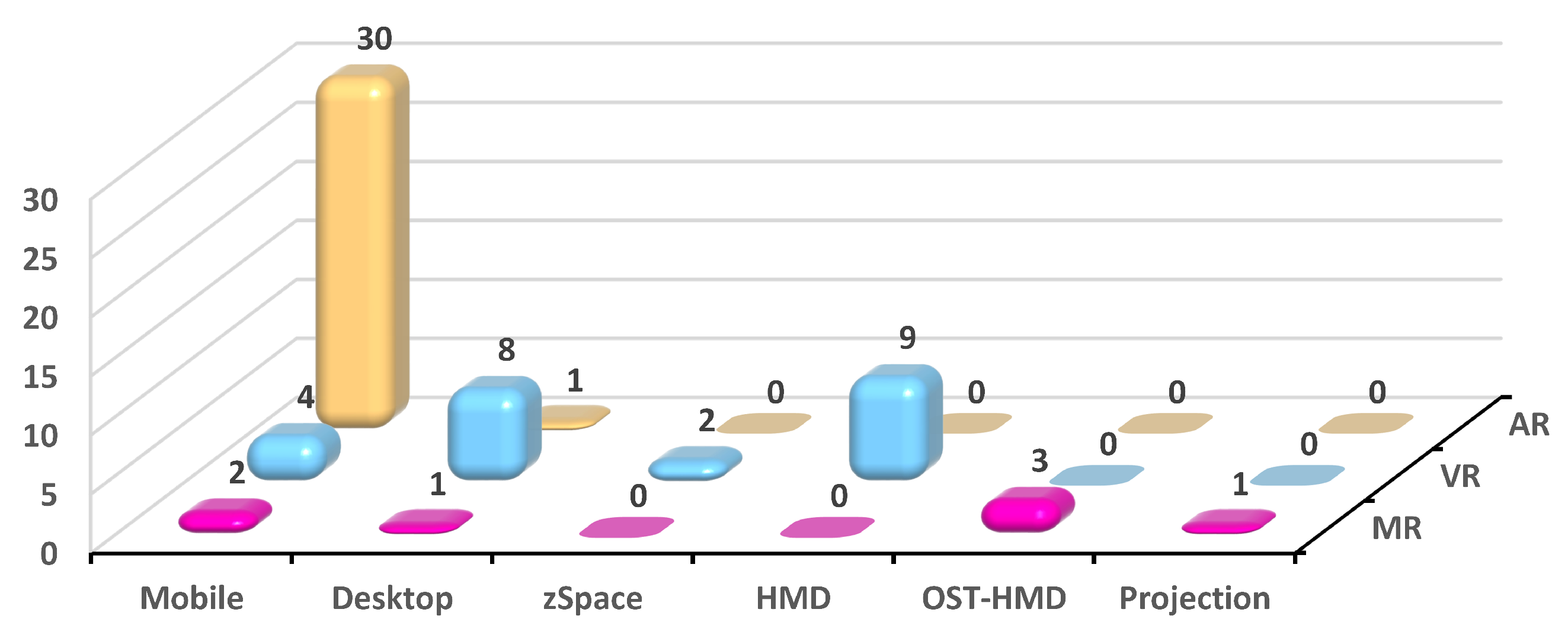

- The 4th dimension in the framework focuses on the context in which immersive learning occurs. Hardware and software platforms are critical factors in supporting the construction of the learning context and its fidelity, which corresponds to the second unique characteristic of 3D VLEs identified in Dalgarno and Lee’s model [53].

2.1.3. Evaluation

2.1.4. Iteration

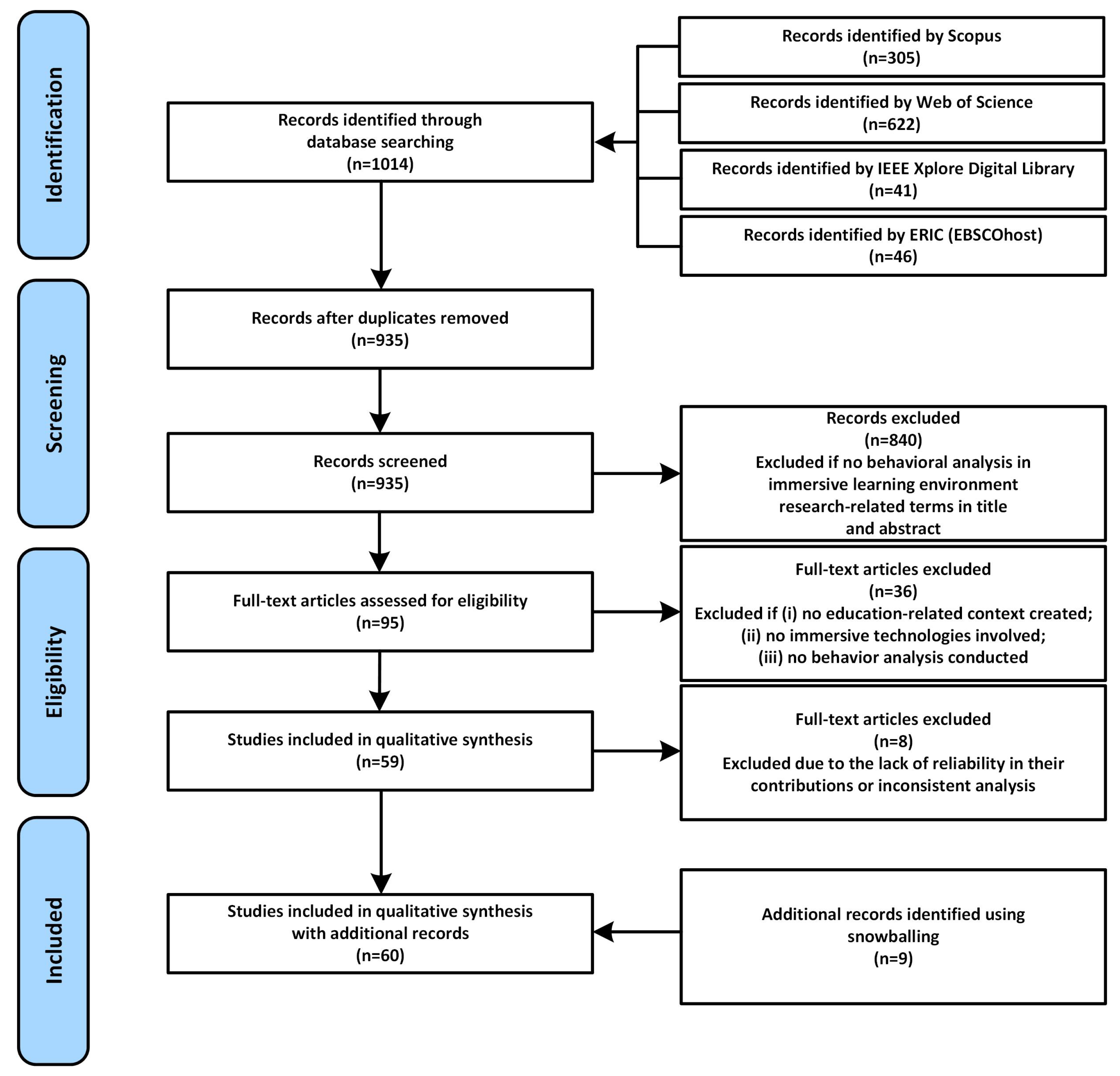

2.2. Systematic Literature Review

2.2.1. Information Sources

2.2.2. Search Criteria

2.2.3. Inclusion and Exclusion Criteria

Inclusion criteria:

- (1) Papers were peer-reviewed, primary-source articles.

- (2) The full text of the paper was accessible.

- (3) Papers belonged to education-related and immersive technology-related research areas.

- (4) Papers dealt with behavioral pattern analysis and construction.

Exclusion criteria:

- (1) Papers were commentaries, literature reviews, or book chapters.

- (2) The full text of the paper was not accessible.

- (3) The focus of the study was not on an education-related, immersive technology-related context.

- (4) The analysis in the study did not involve learners’ behavior.

- (5) The behavior analysis techniques extended beyond those outlined in Table 1.
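The criteria above amount to a screening rule that can be applied record by record. The following is a minimal sketch of such a rule, not the authors' screening tool: the `Candidate` record, its field names, and the `exclusion_reasons` helper are hypothetical, and exclusion criterion (5) is interpreted here as "the paper uses at least one technique that is not listed in Table 1".

```python
from dataclasses import dataclass

# Technique labels taken from Table 1 of the review.
TABLE1_TECHNIQUES = frozenset({
    "behavior frequency analysis", "QCA", "LSA", "SNA", "cluster analysis",
})


@dataclass(frozen=True)
class Candidate:
    """Hypothetical screening record for one retrieved paper."""
    title: str
    peer_reviewed_primary: bool       # not a commentary, review, or book chapter
    full_text_accessible: bool
    education_and_immersive: bool     # education-related and immersive technology-related
    analyzes_learner_behavior: bool
    techniques: frozenset             # behavior analysis techniques used in the paper


def exclusion_reasons(paper: Candidate) -> list[int]:
    """Return the numbers of the exclusion criteria that the paper triggers."""
    reasons = []
    if not paper.peer_reviewed_primary:
        reasons.append(1)
    if not paper.full_text_accessible:
        reasons.append(2)
    if not paper.education_and_immersive:
        reasons.append(3)
    if not paper.analyzes_learner_behavior:
        reasons.append(4)
    # Assumed reading of criterion (5): any technique outside Table 1 excludes the paper.
    if not paper.techniques <= TABLE1_TECHNIQUES:
        reasons.append(5)
    return reasons


kept = Candidate("Illustrative AR study", True, True, True, True,
                 frozenset({"LSA", "QCA"}))
dropped = Candidate("Illustrative VR study", True, True, True, True,
                    frozenset({"LSA", "technique not in Table 1"}))
print(exclusion_reasons(kept))
print(exclusion_reasons(dropped))
```

A paper is retained only when the returned list is empty; keeping the triggered criterion numbers, rather than a single yes/no flag, makes it easier to report exclusion counts per criterion.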

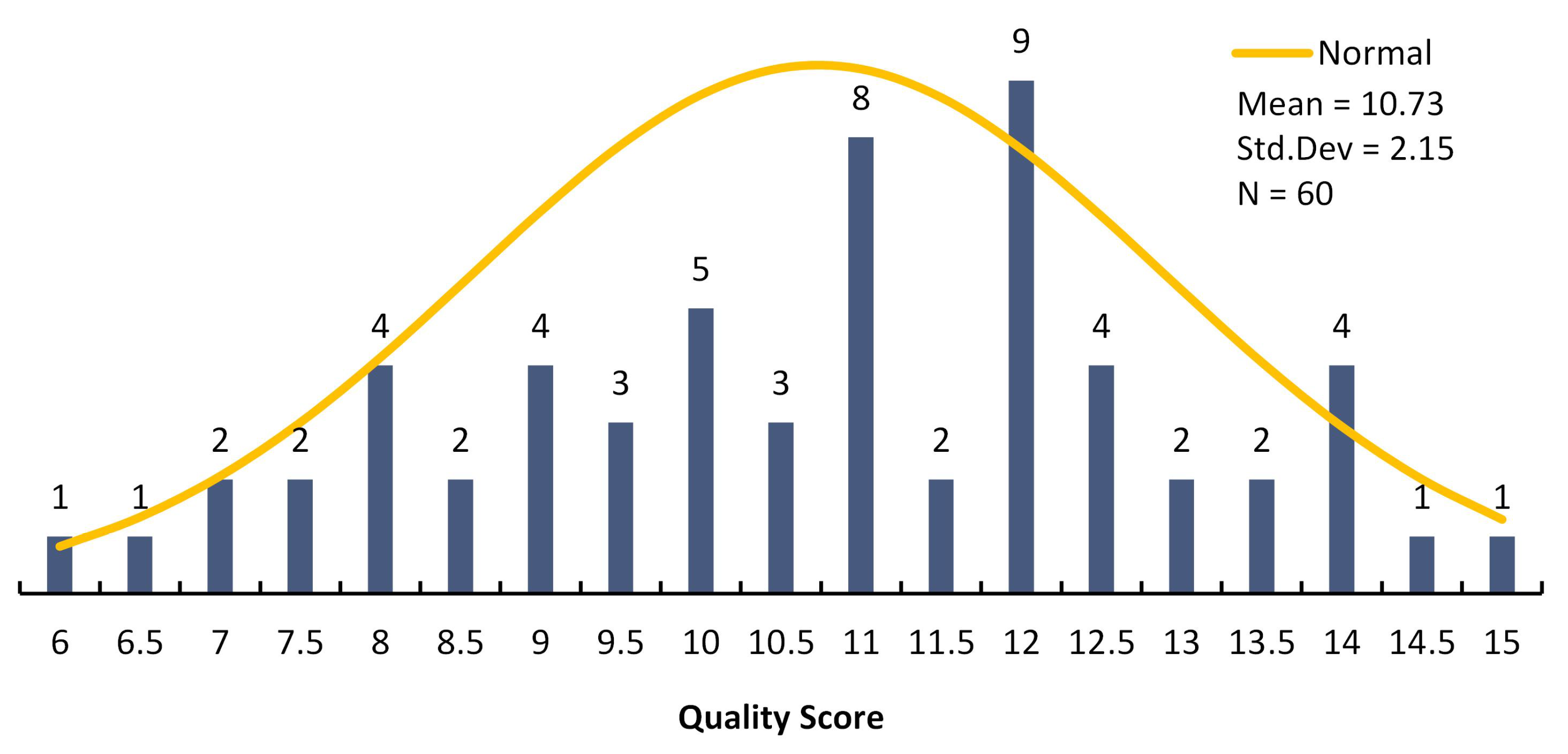

2.2.4. Paper Quality Assessment

- (1) How suitable is the study design for addressing this review’s research questions and sub-questions (with a higher weighting for studies that include a control group)?

  - High (3), e.g., randomized controlled trial

  - Medium (2), e.g., quasi-experimental trial with a control group

  - Low (1), e.g., pre-test/post-test study design, single-subject experimental study

- (2) How adequate are the data processing and evaluation techniques for addressing this review’s research questions and sub-questions?

- (3) How applicable are the results of the research to the target population, given the typicality of the sample size?

- (4) How relevant is the specific emphasis of the research (including immersive system prototype design, implementation context, and behavior measurement methods) to the research questions and sub-questions of this review?

- (5) How reliable are the research results in addressing the research questions?
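The five questions above can be recorded as a simple rubric. The following is a minimal sketch under two assumptions that the text does not confirm: every criterion is rated on the same 1 to 3 scale shown for criterion (1), and the ratings are combined as an equal-weight sum. The `QualityRating` class and its field names are illustrative.

```python
from dataclasses import dataclass, fields

# Assumption: all five criteria share the 1-3 scale given for criterion (1).
VALID_RATINGS = {1, 2, 3}


@dataclass(frozen=True)
class QualityRating:
    """Hypothetical record of one paper's ratings on the five criteria."""
    study_design: int      # (1) suitability of the study design
    data_processing: int   # (2) adequacy of data processing and evaluation techniques
    applicability: int     # (3) applicability of results to the target population
    relevance: int         # (4) relevance of the research emphasis
    reliability: int       # (5) reliability of the research results

    def __post_init__(self):
        # Reject any rating outside the assumed 1-3 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if value not in VALID_RATINGS:
                raise ValueError(f"{f.name} must be 1, 2, or 3, got {value!r}")

    def total(self) -> int:
        """Equal-weight sum of the five ratings (assumed aggregation rule)."""
        return sum(getattr(self, f.name) for f in fields(self))


rating = QualityRating(study_design=2, data_processing=3, applicability=2,
                       relevance=3, reliability=2)
print(rating.total())
```

Under these assumptions the total ranges from 5 to 15; any cut-off for including a paper would be a further choice that is not specified here.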

2.2.5. Coding Procedure

- RQ1: What are the learning requirements (1.1, 1.2, and 1.3 in Table 3) in the design of behavioral pattern construction within immersive learning environments?

- RQ2: What are the learner specifications based on the 4DF (2.1 and 2.2 in Table 3) for behavioral analysis within immersive learning environments?

- RQ3: What are the pedagogical considerations based on the 4DF (3.1 and 3.2 in Table 3) for behavioral analysis within immersive learning environments?

- RQ4: How can immersive contexts be constructed based on the 4DF (4.1 and 4.2 in Table 3) for behavioral analysis?

- RQ5: What interactive representation dimensions based on the 4DF (5.1 and 5.2 in Table 3) are suitable for behavioral analysis within immersive learning environments?

- RQ6: What behavioral patterns (6.1 and 6.2 in Table 3) are identified within immersive learning environments?

- RQ7: What are the challenges (7.1 in Table 3) in analyzing learners’ behavior within immersive learning environments?

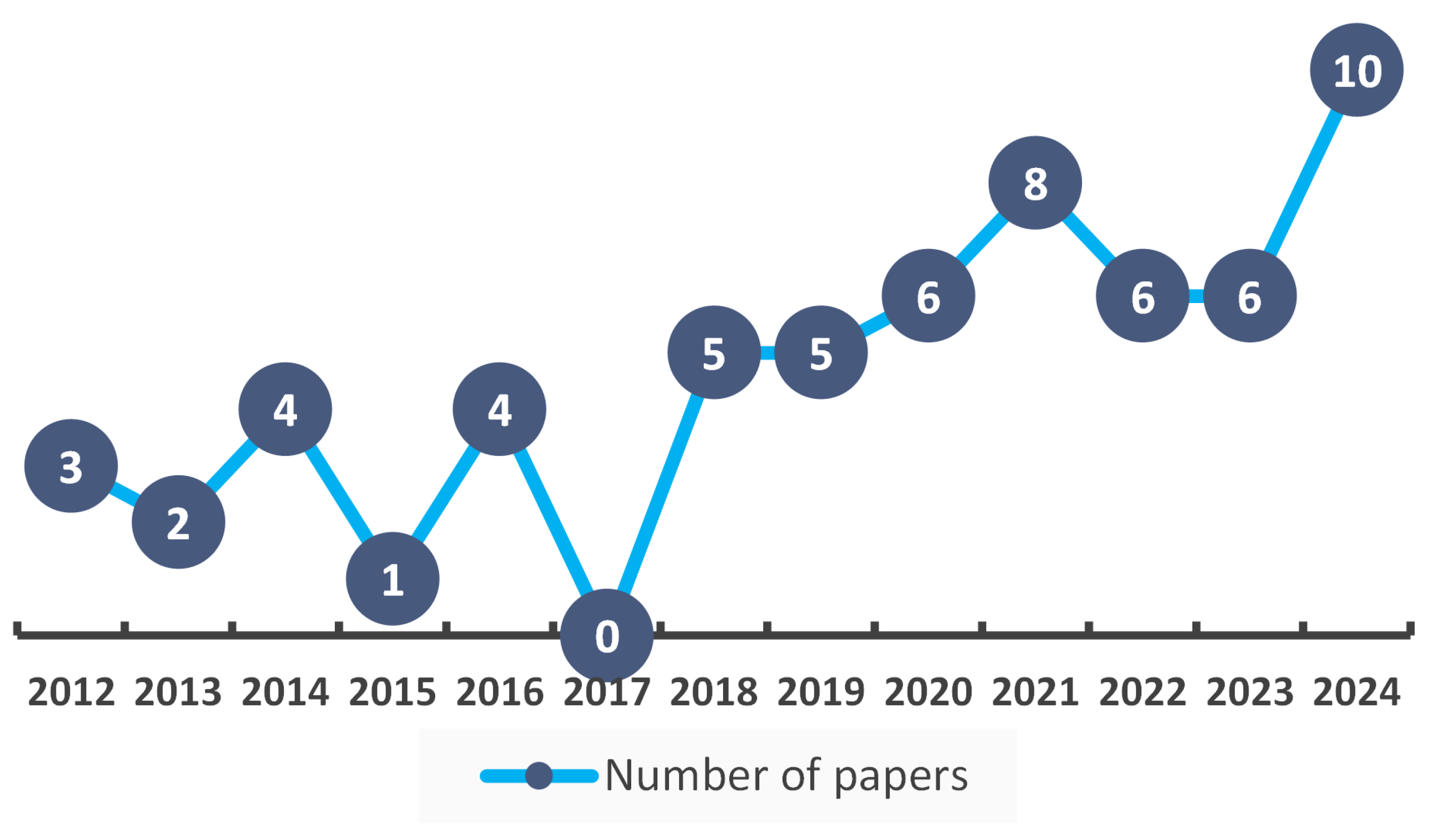

3. Results

3.1. RQ1: What Are the Learning Requirements in the Design of Behavioral Pattern Construction Within Immersive Learning Environments?

3.2. RQ2: What Are the Learner Specifications Based on the 4DF for Behavioral Analysis Within Immersive Learning Environments?

3.3. RQ3: What Are the Pedagogical Considerations Based on the 4DF for Behavioral Analysis Within Immersive Learning Environments?

3.3.1. Instructional Design Methods

3.3.2. Coding Scheme

3.4. RQ4: How Can Immersive Contexts Be Constructed Based on the 4DF for Behavioral Analysis?

3.5. RQ5: What Interactive Representation Dimensions Based on the 4DF Are Suitable for Behavioral Analysis Within Immersive Learning Environments?

3.6. RQ6: What Behavioral Patterns Are Identified Within Immersive Learning Environments?

3.6.1. Techniques Used in Constructing Behavioral Patterns

3.6.2. Behavioral Pattern Outcomes in Immersive Learning Environments

3.7. RQ7: What Are the Challenges in Analyzing Learners’ Behavior Within Immersive Learning Environments?

3.7.1. Technology-Related Challenges

3.7.2. Implementation-Related Challenges

3.7.3. Analysis-Related Challenges

4. Discussion

4.1. Key Findings and Future Research Agenda

4.1.1. Focusing on Meeting Learning Requirements

4.1.2. Elaborating on Learning Specification

4.1.3. Revealing More Profound Pedagogical Implications Through Learning Evaluation

4.1.4. Refining Instructional Implementation Through Learning Iteration

- Coding schemes can be designed iteratively so that the coded behaviors align more closely with the intended behavioral learning outcomes.

- Pilot studies and multi-round study designs allow instructors to gather prior information, such as learners’ preferences for immersive technologies, particular behavioral habits, and design defects, and thus to prepare adequately for the final main study.

- The results of various behavioral analysis methods applied to the same behavioral sequence data can be compared to understand, from different perspectives, how behavior differs in the immersive learning environment.

- More studies addressing technology-related challenges are needed to enhance the stability and usability of immersive systems. The adverse effects of immersive learning systems on learners, such as the Hawthorne effect and simulator sickness, should also be examined.

- Regarding implementation-related challenges, small sample sizes, restricted research time, and the absence of a control group are the most severe challenges in practice and can negatively affect the evaluation of the technology.

- Regarding analysis-related challenges, advanced instruments and adequate equipment are needed to record requisite behavioral data for subsequent analysis.

4.2. Theoretical Implications

4.3. Practical Implications

4.4. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Yuen, S.C.Y.; Yaoyuneyong, G.; Johnson, E. Augmented Reality and Education: Applications and Potentials. In Reshaping Learning: Frontiers of Learning Technology in a Global Context; Huang, R., Kinshuk, Spector, J.M., Eds.; Springer: Heidelberg, Germany, 2013; pp. 385–414. [Google Scholar] [CrossRef]

- Dede, C.J.; Jacobson, J.; Richards, J. Introduction: Virtual, Augmented, and Mixed Realities in Education. In Virtual, Augmented, and Mixed Realities in Education; Liu, D., Dede, C., Huang, R., Richards, J., Eds.; Springer: Singapore, 2017; pp. 1–16. [Google Scholar] [CrossRef]

- Beck, D.; Morgado, L.; O’Shea, P. Educational Practices and Strategies With Immersive Learning Environments: Mapping of Reviews for Using the Metaverse. IEEE Trans. Learn. Technol. 2024, 17, 319–341. [Google Scholar] [CrossRef]

- Huang, T.C.; Chen, C.C.; Chou, Y.W. Animating eco-education: To see, feel, and discover in an augmented reality-based experiential learning environment. Comput. Educ. 2016, 96, 72–82. [Google Scholar] [CrossRef]

- Wei, X.; Weng, D.; Liu, Y.; Wang, Y. Teaching based on augmented reality for a technical creative design course. Comput. Educ. 2015, 81, 221–234. [Google Scholar] [CrossRef]

- Han, I. Immersive virtual field trips and elementary students’ perceptions. Br. J. Educ. Technol. 2021, 52, 179–195. [Google Scholar] [CrossRef]

- Daponte, P.; De Vito, L.; Picariello, F.; Riccio, M. State of the art and future developments of the Augmented Reality for measurement applications. Meas. J. Int. Meas. Confed. 2014, 57, 53–70. [Google Scholar] [CrossRef]

- Sereno, M.; Wang, X.; Besancon, L.; Mcguffin, M.J.; Isenberg, T. Collaborative Work in Augmented Reality: A Survey. IEEE Trans. Vis. Comput. Graph. 2020, 2626, 2530–2549. [Google Scholar] [CrossRef]

- Alkhabra, Y.A.; Ibrahem, U.M.; Alkhabra, S.A. Augmented reality technology in enhancing learning retention and critical thinking according to STEAM program. Humanit. Soc. Sci. Commun. 2023, 10, 174. [Google Scholar] [CrossRef]

- Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385. [Google Scholar] [CrossRef]

- Wang, X.; Ong, S.K.; Nee, A.Y. A comprehensive survey of augmented reality assembly research. Adv. Manuf. 2016, 4, 1–22. [Google Scholar] [CrossRef]

- Che Dalim, C.S.; Sunar, M.S.; Dey, A.; Billinghurst, M. Using augmented reality with speech input for non-native children’s language learning. Int. J. Hum.-Comput. Stud. 2020, 134, 44–64. [Google Scholar] [CrossRef]

- Limbu, B.; Vovk, A.; Jarodzka, H.; Klemke, R.; Wild, F.; Specht, M. WEKIT.One: A Sensor-Based Augmented Reality System for Experience Capture and Re-enactment. In Transforming Learning with Meaningful Technologies; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 158–171. [Google Scholar] [CrossRef]

- Alvarez, H.; Aguinaga, I.; Borro, D. Providing guidance for maintenance operations using automatic markerless Augmented Reality system. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 181–190. [Google Scholar] [CrossRef]

- Kardong-Edgren, S.S.; Farra, S.L.; Alinier, G.; Young, H.M. A Call to Unify Definitions of Virtual Reality. Clin. Simul. Nurs. 2019, 31, 28–34. [Google Scholar] [CrossRef]

- Yang, C.; Zhang, J.; Hu, Y.; Yang, X.; Chen, M.; Shan, M.; Li, L. The impact of virtual reality on practical skills for students in science and engineering education: A meta-analysis. Int. J. STEM Educ. 2024, 11, 28. [Google Scholar] [CrossRef]

- Slater, M.; Wilbur, S. A framework for immersive virtual environments (FIVE): Speculations on the role of presence in virtual environments. Presence Teleoperators Virtual Environ. 1997, 6, 603–616. [Google Scholar] [CrossRef]

- Rose, T.; Nam, C.S.; Chen, K.B. Immersion of virtual reality for rehabilitation—Review. Appl. Ergon. 2018, 69, 153–161. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Benford, S.; Greenhalgh, C.; Reynard, G.; Brown, C.; Koleva, B. Understanding and Constructing Shared Spaces with Mixed-Reality Boundaries. ACM Trans. Comput.-Hum. Interact. 1998, 5, 185–223. [Google Scholar] [CrossRef]

- Rauschnabel, P.A.; Felix, R.; Hinsch, C.; Shahab, H.; Alt, F. What is XR? Towards a Framework for Augmented and Virtual Reality. Comput. Hum. Behav. 2022, 133, 107289. [Google Scholar] [CrossRef]

- Petersen, G.B.; Petkakis, G.; Makransky, G. A study of how immersion and interactivity drive VR learning. Comput. Educ. 2022, 179, 104429. [Google Scholar] [CrossRef]

- Miller, H.L.; Bugnariu, N.L. Level of Immersion in Virtual Environments Impacts the Ability to Assess and Teach Social Skills in Autism Spectrum Disorder. Cyberpsychology Behav. Soc. Netw. 2016, 19, 246–256. [Google Scholar] [CrossRef]

- Lämsä, J.; Hämäläinen, R.; Koskinen, P.; Viiri, J.; Lampi, E. What do we do when we analyse the temporal aspects of computer-supported collaborative learning? A systematic literature review. Educ. Res. Rev. 2021, 33, 100387. [Google Scholar] [CrossRef]

- Chen, J.C.; Huang, Y.; Lin, K.Y.; Chang, Y.S.; Lin, H.C.; Lin, C.Y.; Hsiao, H.S. Developing a hands-on activity using virtual reality to help students learn by doing. J. Comput. Assist. Learn. 2020, 36, 46–60. [Google Scholar] [CrossRef]

- Wu, W.; Sandoval, A.; Gunji, V.; Ayer, S.K.; London, J.; Perry, L.; Patil, K.; Smith, K. Comparing Traditional and Mixed Reality-Facilitated Apprenticeship Learning in a Wood-Frame Construction Lab. J. Constr. Eng. Manag. 2020, 146, 04020139. [Google Scholar] [CrossRef]

- Cai, S.; Niu, X.; Wen, Y.; Li, J. Interaction analysis of teachers and students in inquiry class learning based on augmented reality by iFIAS and LSA. Interact. Learn. Environ. 2021, 31, 5551–5567. [Google Scholar] [CrossRef]

- Chiang, T.H.; Yang, S.J.; Hwang, G.J. Students’ online interactive patterns in augmented reality-based inquiry activities. Comput. Educ. 2014, 78, 97–108. [Google Scholar] [CrossRef]

- Zhang, N.; Liu, Q.; Zheng, X.; Luo, L.; Cheng, Y. Analysis of Social Interaction and Behavior Patterns in the Process of Online to Offline Lesson Study: A Case Study of Chemistry Teaching Design based on Augmented Reality. Asia Pac. J. Educ. 2021, 42, 815–836. [Google Scholar] [CrossRef]

- Hou, H.T. Exploring the behavioral patterns of learners in an educational massively multiple online role-playing game (MMORPG). Comput. Educ. 2012, 58, 1225–1233. [Google Scholar] [CrossRef]

- Liu, S.; Hu, Z.; Peng, X.; Liu, Z.; Cheng, H.N.; Sun, J. Mining learning behavioral patterns of students by sequence analysis in cloud classroom. Int. J. Distance Educ. Technol. 2017, 15, 15–27. [Google Scholar] [CrossRef]

- Poldner, E.; Simons, P.R.; Wijngaards, G.; van der Schaaf, M.F. Quantitative content analysis procedures to analyse students’ reflective essays: A methodological review of psychometric and edumetric aspects. Educ. Res. Rev. 2012, 7, 19–37. [Google Scholar] [CrossRef]

- Riff, D.; Lacy, S.; Fico, F. Analyzing Media Messages: Using Quantitative Content Analysis in Research, 3rd ed.; Routledge: New York, NY, USA, 2014; pp. 1–214. [Google Scholar] [CrossRef]

- Bakeman, R.; Quera, V. Sequential Analysis and Observational Methods for the Behavioral Sciences; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar] [CrossRef]

- Hou, H.T. A Framework for Dynamic Sequential Behavioral Pattern Detecting and Automatic Feedback/Guidance Designing for Online Discussion Learning Environments. In Advanced Learning; Hijon-Neira, R., Ed.; IntechOpen: Rijeka, Croatia, 2009; Chapter 19. [Google Scholar] [CrossRef]

- Scott, J.; Carrington, P.J. The SAGE Handbook of Social Network Analysis; SAGE Publications: Thousand Oaks, CA, USA, 2011. [Google Scholar]

- Wu, J.Y.; Nian, M.W. The dynamics of an online learning community in a hybrid statistics classroom over time: Implications for the question-oriented problem-solving course design with the social network analysis approach. Comput. Educ. 2021, 166, 104120. [Google Scholar] [CrossRef]

- Tan, P.N.; Steinbach, M.; Karpatne, A.; Kumar, V. Introduction to Data Mining; Pearson: London, UK, 2019. [Google Scholar]

- Cheng, K.H.; Tsai, C.C. Affordances of Augmented Reality in Science Learning: Suggestions for Future Research. J. Sci. Educ. Technol. 2013, 22, 449–462. [Google Scholar] [CrossRef]

- Luft, J.A.; Jeong, S.; Idsardi, R.; Gardner, G. Literature Reviews, Theoretical Frameworks, and Conceptual Frameworks: An Introduction for New Biology Education Researchers. CBE-Life Sci. Educ. 2022, 21, rm33. [Google Scholar] [CrossRef] [PubMed]

- Rocco, T.S.; Plakhotnik, M.S. Literature Reviews, Conceptual Frameworks, and Theoretical Frameworks: Terms, Functions, and Distinctions. Hum. Resour. Dev. Rev. 2009, 8, 120–130. [Google Scholar] [CrossRef]

- Fowler, C. Virtual reality and learning: Where is the pedagogy? Br. J. Educ. Technol. 2015, 46, 412–422. [Google Scholar] [CrossRef]

- Biggs, J.; Tang, C. Teaching for Quality Learning at University; Open University Press: Maidenhead, UK, 2011. [Google Scholar]

- Anderson, L.W.; Krathwohl, D.R. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Longmans: New York, NY, USA, 2001. [Google Scholar]

- Bloom, B.S.; Engelhart, M.D.; Furst, E.L.; Hill, W.H.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals; Number 1 in Taxonomy of Educational Objectives: The Classification of Educational Goals; Longmans: New York, NY, USA, 1956. [Google Scholar]

- de Freitas, S.; Rebolledo-Mendez, G.; Liarokapis, F.; Magoulas, G.; Poulovassilis, A. Developing an evaluation methodology for immersive learning experiences in a virtual world. In Proceedings of the 2009 Conference in Games and Virtual Worlds for Serious Applications, VS-GAMES 2009, Coventry, UK, 23–24 March 2009; pp. 43–50. [Google Scholar] [CrossRef]

- de Freitas, S.; Rebolledo-Mendez, G.; Liarokapis, F.; Magoulas, G.; Poulovassilis, A. Learning as immersive experiences: Using the four-dimensional framework for designing and evaluating immersive learning experiences in a virtual world. Br. J. Educ. Technol. 2010, 41, 69–85. [Google Scholar] [CrossRef]

- de Freitas, S.; Neumann, T. The use of ‘exploratory learning’ for supporting immersive learning in virtual environments. Comput. Educ. 2009, 52, 343–352. [Google Scholar] [CrossRef]

- Mayer, I.; Bekebrede, G.; Harteveld, C.; Warmelink, H.; Zhou, Q.; Van Ruijven, T.; Lo, J.; Kortmann, R.; Wenzler, I. The research and evaluation of serious games: Toward a comprehensive methodology. Br. J. Educ. Technol. 2014, 45, 502–527. [Google Scholar] [CrossRef]

- Lai, J.W.; Cheong, K.H. Adoption of Virtual and Augmented Reality for Mathematics Education: A Scoping Review. IEEE Access 2022, 10, 13693–13703. [Google Scholar] [CrossRef]

- Garris, R.; Ahlers, R.; Driskell, J.E. Games, motivation, and learning: A research and practice model. Simul. Gaming 2002, 33, 441–467. [Google Scholar] [CrossRef]

- de Freitas, S.; Routledge, H. Designing leadership and soft skills in educational games: The e-leadership and soft skills educational games design model (ELESS). Br. J. Educ. Technol. 2013, 44, 951–968. [Google Scholar] [CrossRef]

- Dalgarno, B.; Lee, M.J. What are the learning affordances of 3-D virtual environments? Br. J. Educ. Technol. 2010, 41, 10–32. [Google Scholar] [CrossRef]

- Ak, O. A Game Scale to Evaluate Educational Computer Games. Procedia-Soc. Behav. Sci. 2012, 46, 2477–2481. [Google Scholar] [CrossRef]

- Hsiao, H.S.; Chen, J.C. Using a gesture interactive game-based learning approach to improve preschool children’s learning performance and motor skills. Comput. Educ. 2016, 95, 151–162. [Google Scholar] [CrossRef]

- de Freitas, S.; Oliver, M. How can exploratory learning with games and simulations within the curriculum be most effectively evaluated? Comput. Educ. 2006, 46, 249–264. [Google Scholar] [CrossRef]

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32. [Google Scholar] [CrossRef]

- Khan, K.S.; Kunz, R.; Kleijnen, J.; Antes, G. Five steps to conducting a systematic review. J. R. Soc. Med. 2003, 96, 118–121. [Google Scholar] [CrossRef]

- Wendler, R. The maturity of maturity model research: A systematic mapping study. Inf. Softw. Technol. 2012, 54, 1317–1339. [Google Scholar] [CrossRef]

- Wohlin, C. Guidelines for Snowballing in Systematic Literature Studies and a Replication in Software Engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, EASE’14, London, UK, 13–14 May 2014. [Google Scholar] [CrossRef]

- Fabbri, S.; Silva, C.; Hernandes, E.; Octaviano, F.; Di Thommazo, A.; Belgamo, A. Improvements in the StArt Tool to Better Support the Systematic Review Process. In Proceedings of the 20th International Conference on Evaluation and Assessment in Software Engineering, EASE ’16, Limerick, Ireland, 1–3 June 2016. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269. [Google Scholar] [CrossRef] [PubMed]

- Connolly, T.M.; Boyle, E.A.; MacArthur, E.; Hainey, T.; Boyle, J.M. A systematic literature review of empirical evidence on computer games and serious games. Comput. Educ. 2012, 59, 661–686. [Google Scholar] [CrossRef]

- Johnson, D.; Deterding, S.; Kuhn, K.A.; Staneva, A.; Stoyanov, S.; Hides, L. Gamification for health and wellbeing: A systematic review of the literature. Internet Interv. 2016, 6, 89–106. [Google Scholar] [CrossRef]

- Salipante, P.; William, N.; Bigelow, J. A matrix approach to literature reviews. Res. Organ. Behav. 1982, 4, 321–348. [Google Scholar]

- Webster, J.; Watson, R.T. Analyzing the Past to Prepare for the Future: Writing a Literature Review. MIS Q. 2002, 26, 13–23. [Google Scholar]

- Radmehr, F.; Drake, M. Revised Bloom’s taxonomy and major theories and frameworks that influence the teaching, learning, and assessment of mathematics: A comparison. Int. J. Math. Educ. Sci. Technol. 2019, 50, 895–920. [Google Scholar] [CrossRef]

- Ibáñez, M.B.; Delgado-Kloos, C. Augmented reality for STEM learning: A systematic review. Comput. Educ. 2018, 123, 109–123. [Google Scholar] [CrossRef]

- Mayes, J.T.; Fowler, C.J. Learning technology and usability: A framework for understanding courseware. Interact. Comput. 1999, 11, 485–497. [Google Scholar] [CrossRef]

- Cheng, K.H.; Tsai, C.C. Children and parents’ reading of an augmented reality picture book: Analyses of behavioral patterns and cognitive attainment. Comput. Educ. 2014, 72, 302–312. [Google Scholar] [CrossRef]

- Cheng, K.H.; Tsai, C.C. The interaction of child-parent shared reading with an augmented reality (AR) picture book and parents’ conceptions of AR learning. Br. J. Educ. Technol. 2016, 47, 203–222. [Google Scholar] [CrossRef]

- Hsu, T.Y.; Liang, H.Y.; Chen, J.M. Engaging the families with young children in museum visits with a mixed-reality game: A case study. In Proceedings of the ICCE 2020-28th International Conference on Computers in Education, Online, 23–27 November 2020; Volume 1, pp. 442–447. [Google Scholar]

- Yilmaz, R.M. Educational magic toys developed with augmented reality technology for early childhood education. Comput. Hum. Behav. 2016, 54, 240–248. [Google Scholar] [CrossRef]

- Wu, W.; Hartless, J.; Tesei, A.; Gunji, V.; Ayer, S.; London, J. Design Assessment in Virtual and Mixed Reality Environments: Comparison of Novices and Experts. J. Constr. Eng. Manag. 2019, 145, 04019049. [Google Scholar] [CrossRef]

- Lee, K. Augmented Reality in Education and Training. TechTrends 2012, 56, 13–21. [Google Scholar] [CrossRef]

- Law, E.L.C.; Heintz, M. Augmented reality applications for K-12 education: A systematic review from the usability and user experience perspective. Int. J. Child-Comput. Interact. 2021, 30, 100321. [Google Scholar] [CrossRef]

- Akdeniz, C. (Ed.) Instructional Process and Concepts in Theory and Practice: Improving the Teaching Process; Springer: Singapore, 2016. [Google Scholar] [CrossRef]

- Halawa, S.; Lin, T.C.; Hsu, Y.S. Exploring instructional design in K-12 STEM education: A systematic literature review. Int. J. STEM Educ. 2024, 11, 43. [Google Scholar] [CrossRef]

- Wang, C.; Xu, L.; Liu, H. Exploring behavioural patterns of virtual manipulatives supported collaborative inquiry learning: Effect of device-student ratios and external scripts. J. Comput. Assist. Learn. 2022, 38, 392–408. [Google Scholar] [CrossRef]

- Gündüz, G.F. Instructional Techniques. In Instructional Process and Concepts in Theory and Practice: Improving the Teaching Process; Akdeniz, C., Ed.; Springer: Singapore, 2016; pp. 147–232. [Google Scholar] [CrossRef]

- Cheng, K.H.; Tsai, C.C. A case study of immersive virtual field trips in an elementary classroom: Students’ learning experience and teacher-student interaction behaviors. Comput. Educ. 2019, 140, 103600. [Google Scholar] [CrossRef]

- Hou, H.T. Exploring the behavioural patterns in project-based learning with online discussion: Quantitative content analysis and progressive sequential analysis. Turk. Online J. Educ. Technol. 2010, 9, 52–60. [Google Scholar]

- Chang, Y.S.; Chou, C.H.; Chuang, M.J.; Li, W.H.; Tsai, I.F. Effects of virtual reality on creative design performance and creative experiential learning. Interact. Learn. Environ. 2020, 31, 1142–1157. [Google Scholar] [CrossRef]

- Ibáñez, M.B.; Di-Serio, Á.; Villarán-Molina, D.; Delgado-Kloos, C. Support for Augmented Reality Simulation Systems: The Effects of Scaffolding on Learning Outcomes and Behavior Patterns. IEEE Trans. Learn. Technol. 2016, 9, 46–56. [Google Scholar] [CrossRef]

- Cheng, M.T.; Lin, Y.W.; She, H.C. Learning through playing Virtual Age: Exploring the interactions among student concept learning, gaming performance, in-game behaviors, and the use of in-game characters. Comput. Educ. 2015, 86, 18–29. [Google Scholar] [CrossRef]

- Lorenzo, C.M.; Ángel Sicilia, M.; Sánchez, S. Studying the effectiveness of multi-user immersive environments for collaborative evaluation tasks. Comput. Educ. 2012, 59, 1361–1376. [Google Scholar] [CrossRef]

- Mystakidis, S.; Christopoulos, A.; Pellas, N. A systematic mapping review of augmented reality applications to support STEM learning in higher education. Educ. Inf. Technol. 2022, 27, 1883–1927. [Google Scholar] [CrossRef]

- Gao, Y.; Liu, Y.; Normand, J.M.; Moreau, G.; Gao, X.; Wang, Y. A study on differences in human perception between a real and an AR scene viewed in an OST-HMD. J. Soc. Inf. Disp. 2019, 27, 155–171. [Google Scholar] [CrossRef]

- Zhang, J.; Ogan, A.; Liu, T.C.; Sung, Y.T.; Chang, K.E. The Influence of using Augmented Reality on Textbook Support for Learners of Different Learning Styles. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2016, Merida, Mexico, 19–23 September 2016; pp. 107–114. [Google Scholar] [CrossRef]

- Lin, T.J.; Duh, H.B.L.; Li, N.; Wang, H.Y.; Tsai, C.C. An investigation of learners’ collaborative knowledge construction performances and behavior patterns in an augmented reality simulation system. Comput. Educ. 2013, 68, 314–321. [Google Scholar] [CrossRef]

- Lin, X.F.; Hwang, G.J.; Wang, J.; Zhou, Y.; Li, W.; Liu, J.; Liang, Z.M. Effects of a contextualised reflective mechanism-based augmented reality learning model on students’ scientific inquiry learning performances, behavioural patterns, and higher order thinking. Interact. Learn. Environ. 2022, 31, 6931–6951. [Google Scholar] [CrossRef]

- Sarkar, P.; Kadam, K.; Pillai, J.S. Learners’ approaches, motivation and patterns of problem-solving on lines and angles in geometry using augmented reality. Smart Learn. Environ. 2020, 7, 17. [Google Scholar] [CrossRef]

- Dosoftei, C.C. The Immersive Mixed Reality: A New Opportunity for Experimental Labs in Engineering Education Using HoloLens 2. In Service Oriented, Holonic and Multi-Agent Manufacturing Systems for Industry of the Future; Borangiu, T., Trentesaux, D., Leitão, P., Eds.; Springer: Cham, Switzerland, 2023; pp. 278–287. [Google Scholar] [CrossRef]

- Prilla, M.; Janßen, M.; Kunzendorff, T. How to interact with AR head mounted devices in care work? A study comparing Handheld Touch (hands-on) and Gesture (hands-free) Interaction. AIS Trans. Hum.-Comput. Interact. 2019, 11, 157–178. [Google Scholar] [CrossRef]

- Wang, H.Y.; Duh, H.B.L.; Li, N.; Lin, T.J.; Tsai, C.C. An investigation of university students’ collaborative inquiry learning behaviors in an augmented reality simulation and a traditional simulation. J. Sci. Educ. Technol. 2014, 23, 682–691. [Google Scholar] [CrossRef]

- Wan, T.; Doty, C.M.; Geraets, A.A.; Nix, C.A.; Saitta, E.K.; Chini, J.J. Evaluating the impact of a classroom simulator training on graduate teaching assistants’ instructional practices and undergraduate student learning. Phys. Rev. Phys. Educ. Res. 2021, 17, 10146. [Google Scholar] [CrossRef]

- Chen, C.Y.; Chang, S.C.; Hwang, G.J.; Zou, D. Facilitating EFL learners’ active behaviors in speaking: A progressive question prompt-based peer-tutoring approach with VR contexts. Interact. Learn. Environ. 2021, 31, 2268–2287. [Google Scholar] [CrossRef]

- Cheng, Y.W.; Wang, Y.; Cheng, I.L.; Chen, N.S. An in-depth analysis of the interaction transitions in a collaborative Augmented Reality-based mathematic game. Interact. Learn. Environ. 2019, 27, 782–796. [Google Scholar] [CrossRef]

- Hou, H.T.; Keng, S.H. A Dual-Scaffolding Framework Integrating Peer-Scaffolding and Cognitive-Scaffolding for an Augmented Reality-Based Educational Board Game: An Analysis of Learners’ Collective Flow State and Collaborative Learning Behavioral Patterns. J. Educ. Comput. Res. 2021, 59, 547–573. [Google Scholar] [CrossRef]

- Wang, H.Y.; Sun, J.C.Y. Influences of Online Synchronous VR Co-Creation on Behavioral Patterns and Motivation in Knowledge Co-Construction. Educ. Technol. Soc. 2022, 25, 31–47. [Google Scholar]

- Yang, X.; Cheng, P.Y.; Lin, L.; Huang, Y.M.; Ren, Y. Can an Integrated System of Electroencephalography and Virtual Reality Further the Understanding of Relationships Between Attention, Meditation, Flow State, and Creativity? J. Educ. Comput. Res. 2019, 57, 846–876. [Google Scholar] [CrossRef]

- Chang, K.E.; Chang, C.T.; Hou, H.T.; Sung, Y.T.; Chao, H.L.; Lee, C.M. Development and behavioral pattern analysis of a mobile guide system with augmented reality for painting appreciation instruction in an art museum. Comput. Educ. 2014, 71, 185–197. [Google Scholar] [CrossRef]

- Hwang, G.J.; Chang, S.C.; Chen, P.Y.; Chen, X.Y. Effects of integrating an active learning-promoting mechanism into location-based real-world learning environments on students’ learning performances and behaviors. Educ. Technol. Res. Dev. 2018, 66, 451–474. [Google Scholar] [CrossRef]

- Yang, X.X.; Lin, L.; Cheng, P.Y.; Yang, X.X.; Ren, Y.; Huang, Y.M. Examining creativity through a virtual reality support system. Educ. Technol. Res. Dev. 2018, 66, 1231–1254. [Google Scholar] [CrossRef]

- Zhang, J.; Huang, Y.T.; Liu, T.C.; Sung, Y.T.; Chang, K.E. Augmented reality worksheets in field trip learning. Interact. Learn. Environ. 2020, 31, 4–21. [Google Scholar] [CrossRef]

- Parsons, S. Authenticity in Virtual Reality for assessment and intervention in autism: A conceptual review. Educ. Res. Rev. 2016, 19, 138–157. [Google Scholar] [CrossRef]

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778. [Google Scholar] [CrossRef]

- Wang, H.; He, M.; Zeng, C.; Qian, L.; Wang, J.; Pan, W. Analysis of learning behaviour in immersive virtual reality. J. Intell. Fuzzy Syst. 2023, 45, 5927–5938. [Google Scholar] [CrossRef]

| Behavioral Analysis Techniques | Definition | References |
|---|---|---|
| Behavior frequency analysis | Behavior frequency analysis performs statistical analysis of the log of the coded behaviors recorded in the interaction system to obtain the behavior’s frequency and distribution information. | [30,31] |
| Quantitative content analysis (QCA) | QCA is a research method defined as systematically, objectively, and quantitatively assigning communication content to categories according to specific coding schemes and rules, and using statistical techniques to analyze the relationships involving these categories. | [32,33] |
| Lag sequential analysis (LSA) | LSA is a research method well suited to analyzing the dynamic aspects of interaction behaviors over time; it presents sequential, chronological information about users’ activities. | [34,35] |
| Social network analysis (SNA) | SNA is an effective quantitative analytical method for analyzing social structures between individuals in social life. It starts from the premise that social life is constructed primarily by nodes (e.g., individuals, groups, or committees), the relations between those nodes, and the patterns generated by those relations. | [36,37] |
| Cluster analysis | Cluster analysis classifies data to form meaningful data groups based on similarity (or homogeneity) in describing the data objects and the relationships among data. | [38] |
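
To make the first and third techniques in the table concrete, the following is a minimal sketch in Python, not the procedure used by any of the reviewed studies. It assumes that behaviors have already been coded into a single chronological list of labels. The function names are hypothetical, and the adjusted-residual (z-score) formula and the 1.96 threshold are the conventional choices for lag-1 sequential analysis, stated here as assumptions because the table does not specify them.

```python
from collections import Counter
from math import sqrt


def behavior_frequencies(codes):
    """Behavior frequency analysis: count how often each coded behavior occurs."""
    return Counter(codes)


def transition_counts(codes):
    """Count lag-1 transitions (behavior at step t followed by behavior at t+1)."""
    return Counter(zip(codes, codes[1:]))


def adjusted_residuals(codes):
    """Return a z-score for every (given, target) behavior pair.

    Assumed formula (conventional adjusted residual):
    z = (observed - expected) / sqrt(expected * (1 - p_row) * (1 - p_col)),
    where expected = row_total * col_total / total_transitions.
    """
    counts = transition_counts(codes)
    total = sum(counts.values())
    row, col = Counter(), Counter()
    for (given, target), n in counts.items():
        row[given] += n
        col[target] += n

    z = {}
    for given in row:
        for target in col:
            expected = row[given] * col[target] / total
            variance = expected * (1 - row[given] / total) * (1 - col[target] / total)
            if variance > 0:  # skip degenerate cells where z is undefined
                z[(given, target)] = (counts[(given, target)] - expected) / sqrt(variance)
    return z


def significant_sequences(codes, threshold=1.96):
    """Keep transitions whose z-score exceeds the threshold (1.96 is an assumed default)."""
    return {pair: score for pair, score in adjusted_residuals(codes).items()
            if score > threshold}
```

Calling `significant_sequences` on a coded log returns the transitions that occur more often than chance would predict under these assumptions; such transitions are the usual building blocks of a behavioral transition diagram.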

| Immersive Technology-Related Concept | AND | Behavioral Analysis-Related Concept | AND | Education-Related Concept |
|---|---|---|---|---|
| Immersive technologies* OR Virtual reality* OR VR OR Augmented reality* OR AR OR Mixed reality* OR MR OR Cross reality* OR Extended reality* OR XR | | Behavior* analysis OR Behavioral pattern* OR Quantitative content analysis* OR QCA OR Lag sequential analysis* OR LSA OR Social network analysis* OR SNA OR Cluster analysis | | Education* OR Learn* OR Train* OR Teach* OR Student* |
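
The structure of the search string in the table (synonyms joined by OR within a concept, concepts joined by AND) can be sketched in a few lines of Python. This is an illustrative helper only: the function name `build_query` is hypothetical, the parentheses used for grouping are an assumption, and individual databases may require different phrase or wildcard syntax.

```python
def build_query(concept_groups):
    """Join terms with OR inside each concept group and AND between groups.

    concept_groups: a list of lists of search terms, one inner list per concept.
    Parenthesized grouping is an assumed convention, not taken from the source.
    """
    clauses = ["(" + " OR ".join(terms) + ")" for terms in concept_groups]
    return " AND ".join(clauses)


# Abbreviated term lists taken from the table above.
immersive = ["Virtual reality*", "VR", "Augmented reality*", "AR"]
analysis = ["Behavioral pattern*", "Lag sequential analysis*", "LSA"]
education = ["Education*", "Learn*", "Student*"]

query = build_query([immersive, analysis, education])
```

Keeping each concept as its own list makes it easy to add or remove synonyms without touching the Boolean structure.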

| Concept Matrix Facets | Categories | Description |
|---|---|---|
| 1.1 Learning stages | Conceptualization | Learners come into contact with concepts through presentation and visualization in the immersive learning environment. |
| | Construction | Learners construct new knowledge through interactivity with others or virtual learning content in the immersive learning environment. |
| | Dialog | Learners test their emerging understanding of new knowledge through discussion with others or a more comprehensive range of interactivity in VLEs. |
| 1.2 Cognitive learning outcomes/objectives | Lower-level cognitive category | Remembering is a cognitive process with low cognitive complexity, including identifying and recalling relevant information from long-term memory. Understanding is a cognitive process that helps learners construct meaning from instructional activities and has sub-categories such as interpreting, exemplifying, classifying, summarizing, inferring, and explaining [67]. |
| | Higher-level cognitive category | Applying is to put acquired knowledge into practice. Analyzing is to break learned knowledge into constituent parts and determine the relationship of the parts with an overall structure. Evaluating is to judge learned knowledge based on specific criteria. Creating is to make new learning products by mentally reorganizing fragmented elements into new knowledge patterns or structures. |
| 1.3 Learning activities | List of mini-learning activities | Learning activities are the actions learners display to reach intended learning goals. Individual mini-learning activities (behaviors) can be grouped into learning activities with an extensive range of granularity through specific behavioral patterns. |
| 2.1 Learner types | Specific learner types | The specific type of learners participating in immersive learning activities. |
| 2.2 Application domains | STEM | Science, technology, engineering, and mathematics (STEM) describes various academic disciplines related to these four terms, such as biology, chemistry, engineering, mathematics, physics, and more. |
| | Humanities | Humanities describe academic disciplines that study aspects of human society and culture, including culture, history, language, and more. |
| | General knowledge and skills | General knowledge and skills describe the application domains where learners study basic knowledge and skills to cultivate the essential ability to deal with daily affairs, such as cognitive and social skills, art and design, and reading. |
| 3.1 Instructional design methods | Instructional strategies | Instructional strategies entail a set of instructional models to lead learners to understand what information has been provided, how the learning process functions, and how to acquire knowledge effectively. |
| | Instructional techniques | Instructional techniques are the rules, procedures, tools, and skills used to implement instructional strategies in practice. |
| 3.2 Coding schemes | Specific coding schemes | Coding schemes define the specific behavior sequences that would be analyzed using various behavioral analysis techniques. |
| 4.1 Hardware devices | Specific hardware devices | Hardware devices used in immersive learning activities. |
| 4.2 Software development tools | Specific software tools | Software tools used to develop immersive learning systems. |
| 5.1 HCI methods in VLEs | Specific HCI methods | Interaction methods between learners and VLEs. |
| 5.2 Behavior recording and recognition methods | Specific behavior recording methods | The applied methods used to record learners’ behavior sequences, such as videotaping, classroom observation, and automatic recording methods by software tools. |
| | Manual and automatic coding methods | The manual coding method refers to behavior recognition that is conducted manually and usually independently by two or more coders. Automatic coding methods refer to behavior recognition that is automatically conducted using software tools. |
| 6.1 Behavioral analysis methods | Specific behavioral analysis methods | Behavioral analysis methods are used to analyze learners’ behavior sequences to construct behavioral patterns, such as behavior frequency analysis, QCA, LSA, SNA, and cluster analysis. |
| 6.2 Behavioral pattern outcomes | Constructed behavioral patterns | Behavioral patterns are constructed as the vital outcome of behavioral analysis in immersive learning environments. |
| 7.1 Learning iteration | Iteration expectation and difficulties | The iteration requirements identified when implementing learning activities for behavioral analysis in immersive learning environments. |

| Instructional Design Methods | Categories | Description | Representative Articles |
|---|---|---|---|
| Instructional Strategies | Presentation | Presentation is an instructional strategy that suggests that learners obtain new knowledge through the presentation of learning tasks or material to strengthen cognitive organization ([77], p. 65). | A23, V20 |
| | Discovery | Discovery is an instructional strategy that suggests that learners obtain new knowledge through discovering rather than being told about information ([77], p. 65). | A8, A9 |
| | Inquiry | Inquiry is an instructional strategy that emphasizes that learners actively participate in the learning process, where the learners’ inquiries, thoughts, and observations are used as the focal spot of the learning process ([77], p. 67). | A1, A10 |
| | Collaboration | Collaboration is an instructional strategy that suggests that learners obtain new knowledge through working in a social setting to solve problems ([77], p. 68). | A3, A5 |
| | Collaborative Inquiry | Collaborative inquiry is an instructional strategy that suggests that learners conduct scientific inquiry learning through face-to-face collaboration [28,79]. | A6, A12 |
| Instructional Techniques | Observation | The observation technique is an instructional technique that suggests that learners monitor and examine the indicators or conditions of objects, facts, or materials within a well-designed plan through their eyes or available visual equipment ([80], pp. 204–205). | A1, A2 |
| | Field Trip | A field trip is an instructional technique that suggests that learners gain additional knowledge through direct experiences in conducting an active research-oriented field project ([80], pp. 196–198). | A6, A12 |
| | Educational Game | An educational game is an instructional technique that suggests that learners gain knowledge through playing an educational game to increase learning motivation and promote creative work ([80], pp. 201–203). | A5, A7 |
| | Role-play | Role-play is an instructional technique that suggests that learners play specific roles in an explicitly established situation and gain knowledge through experiencing their “character” ([80], pp. 172–174). | A7, V3 |
| | Simulation | Simulation is an instructional technique that suggests that learners gain knowledge in a controlled, detailed situation that intends to reflect real-life conditions ([80], pp. 187–189). | A10, A11 |
| | Project | A project is an instructional technique that suggests that learners are involved in whole-hearted purposeful learning activities to accomplish a specific goal ([80], pp. 198–201). | V1, V4 |

| Behavior Recording Methods | Behavior Recognition Methods | Representative Articles |
|---|---|---|
| Videotaping | Manual coding | A1, M5 |
| | Automatic coding | A3, A4 |
| Videotaping combined with other manual recording methods | Manual coding | A11, A16 |
| | Automatic coding | M4 |
| Classroom observation by observers | Manual coding | M3 |
| Automatic behavior recording | Manual coding | A6, A15 |
| | Automatic coding | A8, A9 |
| Mixed recording methods combining manual and automatic methods | Manual coding | A21, V7 |
| | Automatic coding | V8, M2 |

| Challenge Categories | Challenge Description | Representative Articles |
|---|---|---|
| Technology-related challenges | AR software requires excessive effort in designing compatible educational applications | A1 |
| | Low stability and correctness in AR marker recognition | A2, A9 |
| | Highly immersive VR can distract students’ attention or produce a Hawthorne effect | V1, V14 |
| | Simulator sickness | V1 |
| | Large differences in tools and context between VR learning and practical applications | V2 |
| | VR learning system stability problems | V5 |
| | Novelty effect of emerging VR technologies | V13, V14 |
| Implementation-related challenges | Small sample sizes | A4, A7 |
| | Research time restrictions | A5, A12 |
| | Absence of a control group | A7, A11 |
| | Limited quantity of equipment | V4, V5 |
| | Knowledge diffusion between groups | V4 |
| | Unequal gender ratio | V4, V11 |
| | Unfriendly MR manipulation and context settings for young children | M1 |
| | Low participation or response rate | M3, M5 |
| Analysis-related challenges | Insufficient interaction of learners with the physical learning environment when using an AR system | A19 |
| | Restrictions on recording special behavior sequences | V4, M4 |
| | Insufficient recording due to limited amount of equipment or number of observations | V9, M3 |
| | Lack of observation among group members using other behavioral analysis methods | V11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Yue, K.; Liu, Y.; Yang, S.; Gao, H.; Sha, H. How to Construct Behavioral Patterns in Immersive Learning Environments: A Framework, Systematic Review, and Research Agenda. Electronics 2025, 14, 1278. https://doi.org/10.3390/electronics14071278
