1. Introduction

In recent years, the global navigation satellite system (GNSS) has become the primary tool for obtaining geographic location information, and it plays a crucial role in daily human activities. However, GNSS signals are often unstable in complex urban environments, particularly when they are obstructed by dense buildings or disturbed by interference from other electronic signals [1]. Under such conditions, GNSS positioning accuracy often fails to meet the requirements for high-precision localization. Image-based visual geo-localization (VG) methods can then serve as an effective complement, helping to complete localization tasks when GNSS signals are obstructed.

VG, also known as visual place recognition or image-based localization [

2], aims to estimate the geographic location of a query image without relying on additional information, such as GNSSs, as illustrated in

Figure 1. This task is commonly treated as an image retrieval task, in which the key challenge is to learn discriminative feature representations [

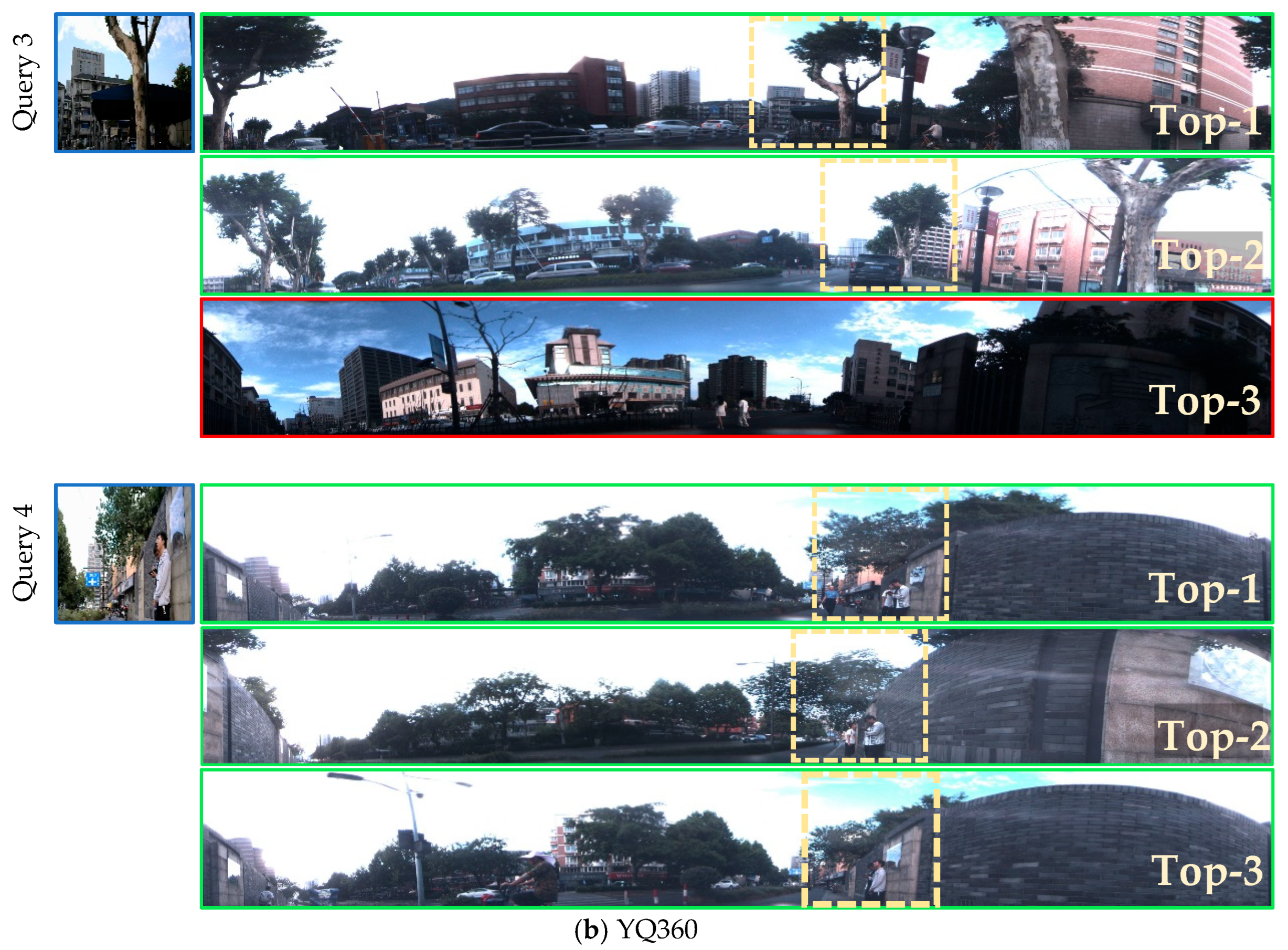

3]. Recently, deep learning has achieved remarkable success in computer vision because of its powerful feature extraction capabilities, which have significantly advanced the field of VG. However, two main challenges remain to be addressed in deep learning-based VG. The first is the increase in false positive samples caused by weakly supervised learning that relies on GPS labels. As illustrated in

Figure 2, owing to the limited field of view (FOV) of perspective images from pinhole cameras, like those in the Norland database [

4], or from cropped panoramic images in the Pitts250k [

5,

6] and SF-XL [

2] databases, geographically close images can exhibit varying scene content when oriented differently [

7,

8]. However, current deep learning-based algorithms typically select positive and negative training samples based solely on the GPS labels [

9,

10]. Specifically, reference images located within 10 m of the query image are considered positive samples; otherwise, they are classified as negative samples. This leads to the wrong selection of a large number of false positive samples, which might not have any overlapping regions with a query. During training, these false positive samples inevitably introduce substantial misleading information, ultimately degrading model performance. The second problem is the inability to achieve stable matching and localization in complex and dynamic urban environments. Urban scene appearances are highly susceptible to changes in lighting, seasonal changes, and the movement of objects (e.g., pedestrians, vehicles, and obstacles) [

11,

12], which results in significant visual differences between images captured at different times in the same location. This remains a major challenge to the stability of matching and localization tasks.

To address the problem of inaccurate GPS labels in the context of weakly supervised learning, Kim et al. [13] attempted to extract true positive images by verifying their geometric relations; however, this approach was limited by the accuracy of off-the-shelf geometric techniques. Some researchers [14,15,16] have adopted panoramic images as both query and reference inputs. However, the high-dimensional feature similarity computation between panoramic image pairs imposes substantial computational overhead. Moreover, the precision optical components required in panoramic imaging systems raise the cost of industrial-scale deployment.

To achieve stable geo-localization in dynamic urban environments, researchers have focused on constructing image datasets that include images taken at multiple time points and under various conditions to improve the robustness of the model to urban scene variations [11]. However, this approach significantly increases the complexity of data collection and preprocessing. Other studies [17,18,19] have concentrated on modifying network architectures and integrating attention mechanisms to enhance the ability to learn discriminative features, at the cost of increased network complexity. Nevertheless, these methods primarily emphasize the extraction of visual appearance information while neglecting structural information.

To address these challenges, we propose a VG framework that matches perspective images to panoramic images. This framework compares query perspective images with panoramic reference images tagged with GPS labels, which eliminates potential errors in sample selection caused by the limited FOV. However, directly extracting and matching features between perspective and panoramic images introduces substantial redundant information into the matching process. This redundancy stems primarily from the inherent asymmetry in information content and data volume between narrow-FOV perspective images and wide-FOV panoramic images. Resolving it is critical to achieving high-precision and computationally efficient matching between perspective and panoramic imagery, and the adaptive scene alignment (ASA) module is proposed for this purpose. Furthermore, we propose the structural feature enhancement (SFE) module, which is based on linear feature extraction. This module automatically identifies and enhances continuous and clear linear features in an image, guiding the model to focus on regions rich in structural information and thereby extract long-term stable features in dynamic urban environments.

In summary, the contributions of this study are as follows:

(1) An ASA-based perspective-to-panoramic image VG framework is proposed. The framework uses panoramic images as reference images to address the problem of inaccurate GPS labels in the context of weakly supervised learning. The ASA module is designed to eliminate redundant scene information between the perspective and panoramic images.

(2) An SFE module is proposed to actively exploit and capture linear features. The linear features contain rich and stable structural attributes, providing crucial cues for aligning the image semantics.

(3) State-of-the-art performance is achieved on two benchmark datasets for VG. The results of extensive experiments demonstrate the effectiveness of the proposed method in terms of both accuracy and efficiency.

3. Methodologies

This section describes our proposed method in detail. First, we provide an overview of the network architecture. Next, we present several key modules in the architecture. Finally, we describe the loss function.

3.1. Overall Framework

Current deep learning-based VG methods typically use perspective images with a limited FOV as reference images. However, because weakly supervised learning relies on GPS labels, the model may have difficulty correctly distinguishing between positive and negative samples. In contrast, we use panoramic images with a wide FOV as references, which makes it possible to select positive and negative samples correctly even when relying solely on GPS labels.

Figure 3 presents our proposed network architecture. First, we set the panoramic image $I_p$ as the reference and input it into the network along with the query perspective image $I_q$, which effectively reduces errors in sample selection. Then, we leverage the LskNet [26] encoder as the backbone network to extract information about the target of interest and the background context required for target recognition, thereby generating a feature representation that is robust to changes in the appearance of the scene. We regard the four convolutional residual blocks in LskNet as four stages, and the features extracted at different stages have different depth levels. To further enhance the model's ability to adapt to changes in the appearance of the scene, we integrate the proposed SFE module into the shallow stage 2, which increases the weight of reliable matching features and reduces the weight of irrelevant features. In addition, to eliminate the feature redundancy between perspective and panoramic images, the deep features extracted from stage 3 are input into the proposed ASA module, where the correlations between the perspective and panoramic image features are computed along the horizontal direction to search for aligned scene regions. The feature aggregation module MixVPR [27] is then used to aggregate the aligned scene region features into global representations. Finally, a weighted soft margin triplet loss function [28] is used to train the model.

3.2. SFE: Structure Feature Enhancement by Exploiting Linear Features

To extract features, the proposed model employs LskNet [26] as the backbone, which adaptively uses different large kernels and adjusts the receptive field for each target in space as required. This capability enables the model to capture not only the feature information of the target itself but also background information related to the foreground target. Building on this backbone, we develop the SFE module and integrate it into LskNet to strengthen the capacity for learning stable structural features, thus facilitating the generation of more comprehensive and robust feature representations.

The primary focus of the VG task is large urban areas. By analyzing urban street scenes, we observed that objects with stable geometric structures, such as buildings, roads, and streetlights, are ubiquitous. In contrast to visual appearance features, the features of geometric structures rarely change over time [29,30]. This universality and long-term stability make them highly suitable key features for street-view imagery matching. In general, objects with stable structural features have clear and continuous linear features, whereas those with ambiguous structures, such as pedestrians, trees, and vehicles, often lack pronounced linear features and appear as irregular textures and shapes in images. Therefore, the proposed SFE module is based on linear feature extraction and can automatically identify and capture linear features. These linear features focus the model on the image regions with stable structural features, ultimately generating long-term stable feature representations. The module can be flexibly integrated into different stages of the network, and its detailed architecture is shown in the blue dashed frame at the bottom of Figure 3.

For the input feature map $F \in \mathbb{R}^{C \times H \times W}$, to emphasize its salient regions, we perform a sum-pooling operation along the channel axis. The aggregated feature map $F_s \in \mathbb{R}^{1 \times H \times W}$ can be obtained using

$$F_s = \mathrm{SumPool}(F),$$

where $\mathrm{SumPool}(\cdot)$ [31] is the sum-pooling operation.

The aggregated feature map $F_s$ is processed using two 3 × 3 convolutional kernels, as illustrated in Figure 4. The kernels $K_x$ and $K_y$ are specifically designed to maximize the responses to vertical and horizontal edges, respectively. Treating these two convolution kernels as sliding windows that move over the entire feature map $F_s$, the convolution results $G_x(i,j)$ and $G_y(i,j)$ at any spatial location $(i,j)$ of the feature map $F_s$ can be represented by the following formulas:

$$G_x(i,j) = \sum_{m=-1}^{1}\sum_{n=-1}^{1} K_x(m,n)\,F_s(i+m,\,j+n),$$

$$G_y(i,j) = \sum_{m=-1}^{1}\sum_{n=-1}^{1} K_y(m,n)\,F_s(i+m,\,j+n),$$

where $(i,j)$ denotes the spatial coordinates on the feature map $F_s$, $(m,n)$ denotes the relative coordinates inside the two convolution kernels $K_x$ and $K_y$, and $m, n \in \{-1, 0, 1\}$.

Next, we compute the sum of the squares of these two convolution results $G_x(i,j)$ and $G_y(i,j)$ and take the square root. The resulting value represents the intensity $G(i,j)$ of pixel $(i,j)$ in the output mask, directly reflecting the significance of the pixel as a line feature. This process can be mathematically formulated as

$$G(i,j) = \sqrt{G_x(i,j)^2 + G_y(i,j)^2}.$$

After normalization, the final line attention mask $M_l$ is obtained. This process is mathematically expressed as follows:

$$M_l = \mathrm{ReLU}(\mathrm{BN}(G)),$$

where $\mathrm{BN}(\cdot)$ is batch normalization [32] and $\mathrm{ReLU}(\cdot)$ [33] is the rectified linear unit activation function.

To utilize the generated line attention mask $M_l$ for highlighting important regions in the feature map $F$, we first replicate its channels to match the size of $F$ and generate the expanded channel-line attention mask as

$$M_c = \mathrm{Expand}(M_l),$$

where $\mathrm{Expand}(\cdot)$ extends the dimensions of a tensor by using the tensor broadcasting mechanism to replicate the single-channel attention mask $M_l \in \mathbb{R}^{1 \times H \times W}$ to $M_c \in \mathbb{R}^{C \times H \times W}$, and $C$ is the channel size of $F$.

$M_c$ is a soft mask acting as a weighting function. Straightforwardly stacking the line feature mask onto the original feature map (i.e., directly performing element-wise addition on $F$ and $M_c$) may lead to a decline in performance. To mitigate this issue, we employ attentional residual learning [34]. Specifically, we use the element-wise product to re-weight the values in the feature map $F$ and obtain the final feature map as follows:

$$F' = F \oplus (F \otimes M_c),$$

where $\otimes$ represents element-wise multiplication, i.e., the corresponding elements of the two matrices are multiplied; $\oplus$ represents element-wise addition, i.e., the corresponding elements of the two matrices are added.
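The SFE steps described in this subsection (channel-wise sum-pooling, two 3 × 3 edge kernels, gradient magnitude, normalization with ReLU, channel expansion, and residual re-weighting) can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the implementation used in the paper: the kernel values are assumed to be Sobel-style (Figure 4 defines the actual ones), borders are handled by edge replication, and batch normalization is replaced by a plain standardization of the single map without learned parameters. The names `K_X`, `K_Y`, `conv3x3_same`, and `sfe` are hypothetical.

```python
import numpy as np

# Assumed Sobel-style kernels; the kernels actually used are those in Figure 4.
K_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)  # vertical edges
K_Y = K_X.T                                                             # horizontal edges


def conv3x3_same(x, k):
    """Slide a 3x3 kernel over a 2D map, keeping its size (edge-replicated border)."""
    h, w = x.shape
    padded = np.pad(x, 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for m in range(3):
        for n in range(3):
            out += k[m, n] * padded[m:m + h, n:n + w]
    return out


def sfe(feat, eps=1e-5):
    """feat: (C, H, W) feature map -> re-weighted (C, H, W) feature map."""
    f_s = feat.sum(axis=0)                       # sum-pooling along the channel axis
    g_x = conv3x3_same(f_s, K_X)                 # response to vertical edges
    g_y = conv3x3_same(f_s, K_Y)                 # response to horizontal edges
    mag = np.sqrt(g_x ** 2 + g_y ** 2)           # line-feature intensity per pixel
    # Stand-in for batch normalization: standardize the map (no learned scale/shift).
    norm = (mag - mag.mean()) / np.sqrt(mag.var() + eps)
    mask = np.maximum(norm, 0.0)                 # ReLU -> single-channel line mask
    mask_c = np.broadcast_to(mask, feat.shape)   # replicate the mask to C channels
    return feat + feat * mask_c                  # attentional residual re-weighting
```

With a feature map that contains a single vertical step edge, the output equals the input in flat regions (mask of zero) and is amplified along the edge, which is the intended effect of the residual form: the mask can only strengthen features, never suppress them below their original value.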

3.3. ASA Module

Because the limited field of view of perspective street images leaves out part of the scene, GPS proximity filtering incorrectly selects many false positive samples that have no overlapping area with the query image. To address this challenge, panoramic images serve as reference images in this framework; their comprehensive FOV encompasses all scene information at a given location. By contrast, the query perspective images captured by a standard camera include only partial scene information. Due to the significant differences in FOV coverage and information content between the two image types, a direct comparison of their features would introduce a substantial amount of redundant data, consequently reducing the accuracy and efficiency of the matching process. Drawing inspiration from how the human visual system discerns scenes, in particular by naturally scanning the environment and acquiring scene information from multiple directions to enable precise comparison and positioning, we developed the ASA module to emulate this process. Specifically, after extracting the deep features from the images, the module calculates the correlation between the perspective and panoramic features along the equatorial direction. The network then uses this correlation to adaptively crop the region with the highest response. This strategy preselects candidate regions with high scene similarity, thereby providing a strong initial condition for subsequent matching and localization. The detailed workflow is illustrated in Figure 5.

Upon the completion of feature extraction by the backbone network, the perspective feature image $F_q \in \mathbb{R}^{C \times H \times W_q}$ can be used as a sliding window to search across the panoramic feature image $F_p \in \mathbb{R}^{C \times H \times W_p}$. However, because the panoramic image is formed by equirectangular projection [35], the image unfolding process cuts the scene at the left and right boundaries. To restore the continuity and completeness of the real-world scene, the left and right boundaries of the panoramic feature image are first circularly stitched to obtain a seamless panoramic feature image $\tilde{F}_p$ before the sliding search is performed. While the perspective and panoramic features maintain a consistent vertical viewpoint, they differ in horizontal viewpoint, necessitating traversal along the equatorial axis. During the sliding search, we calculate the similarity $S$ between the perspective and panoramic features in all directions, thereby quantitatively evaluating the correlation between them. This strategy helps the model adaptively locate the scene alignment region of the panoramic image with respect to the perspective image. The correlation $S$ between the perspective and panoramic features is expressed as

$$S(i) = \sum_{c=1}^{C}\sum_{h=1}^{H}\sum_{w=0}^{W_q-1} F_q(c,h,w)\,\tilde{F}_p\big(c,h,(i+w) \bmod W_p\big), \quad i = 0, \dots, W_p - 1,$$

where $C$ and $H$ denote the number of channels and height of the feature images, respectively, and $W_q$, $W_p$ denote the widths of the perspective and panoramic feature images, respectively.

After the correlation has been computed, the region with the maximum similarity score is taken as the scene alignment area of the panoramic image with respect to the perspective image. This area is then extracted from the panoramic feature image and fed into MixVPR along with the perspective feature for further processing.
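The sliding search can be illustrated with a small NumPy sketch: the panoramic feature map is wrapped around in width, a plain (unnormalized) correlation is evaluated at every horizontal offset, and the window with the highest score is cropped. The function name `asa_align`, the use of an unnormalized dot product as the similarity, and the assumption that the perspective width does not exceed the panoramic width are illustrative choices, not details confirmed by the paper.

```python
import numpy as np


def asa_align(f_q, f_p):
    """Locate the panoramic region that best matches the perspective features.

    f_q: (C, H, W_q) perspective features; f_p: (C, H, W_p) panoramic features,
    with W_q <= W_p assumed. Returns (best offset, scores, cropped region).
    """
    w_q = f_q.shape[2]
    w_p = f_p.shape[2]
    # Circular stitching: append the first W_q - 1 columns so that windows
    # starting near the right boundary wrap around to the left boundary.
    f_p_wrap = np.concatenate([f_p, f_p[:, :, :w_q - 1]], axis=2)
    scores = np.empty(w_p)
    for i in range(w_p):
        window = f_p_wrap[:, :, i:i + w_q]
        scores[i] = np.sum(f_q * window)      # correlation at horizontal offset i
    best = int(np.argmax(scores))
    return best, scores, f_p_wrap[:, :, best:best + w_q]


# Toy example: the query matches panorama columns 6, 7, 0 (a wrap-around window).
f_p = np.array([[[1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.0, 3.0]]])
f_q = np.array([[[2.0, 3.0, 1.0]]])
best, scores, crop = asa_align(f_q, f_p)
```

In the toy example the best offset is 6, which is only reachable because of the circular stitching; without it, a query straddling the panorama seam could not be aligned. An explicit loop is used for readability; in practice the same correlation would typically be computed as a convolution over the padded feature map.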

3.4. Feature Aggregation Strategy

To achieve efficient and accurate visual geo-localization in city-scale databases, it is necessary to adopt an appropriate feature aggregation strategy that encodes the scene-aligned feature image pairs produced by the ASA module into compact and robust feature representations. To effectively integrate both visual features and spatial structural characteristics into the feature representation, this paper adopts MixVPR, an efficient feature aggregation strategy. Unlike traditional pyramid-style hierarchical aggregation that manually weights local regions, MixVPR employs multiple structurally identical feature mixers composed of multi-layer perceptrons (MLPs) [36] to iteratively fuse feature maps. These feature mixers leverage the capability of fully connected layers to automatically aggregate features in a holistic manner rather than focusing on localized features, thereby generating more compact and robust feature representations. The detailed structure is illustrated in Figure 6.

The feature image $F \in \mathbb{R}^{C \times H \times W}$ extracted from the LskNet can be regarded as a collection of $C$ two-dimensional features $X^i$ of size $H \times W$:

$$F = \{X^i\}, \quad i = 1, \dots, C,$$

where $C$, $H$, and $W$ denote the channels, height, and width of $F$, and $X^i$ denotes the $i$-th 2D feature along the channel dimension in $F$.

Next, each $X^i$ in $F$ is flattened into a one-dimensional vector using the Flatten() function, resulting in the flattened feature maps $F \in \mathbb{R}^{C \times n}$, $n = H \times W$.

Then, a feature mixer $\mathrm{FM}_1$ is applied to $F$ to produce an output $Z_1$. This process can be mathematically expressed as

$$X^i \leftarrow W_2\,\sigma(W_1 X^i) + X^i, \quad i = 1, \dots, C,$$

where $W_1$ and $W_2$ denote the weights of two fully connected layers and $\sigma$ denotes the nonlinear activation function.

This output is then fed into a second feature mixer $\mathrm{FM}_2$ and so on until it is passed through the $L$-th feature mixer. Through iterative fusion, cross-level feature relationships are effectively integrated, and this process can be mathematically expressed as

$$Z = \mathrm{FM}_L(\mathrm{FM}_{L-1}(\cdots \mathrm{FM}_1(F))),$$

where $\mathrm{FM}$ denotes the feature mixer and $L$ denotes the number of iterations.

The dimensions of $Z$ are the same as those of the feature map $F$. To generate low-dimensional global descriptors, two fully connected layers are added after the feature mixers. The first fully connected layer is used to reduce the depth (channel) dimension, and the second fully connected layer is used to reduce the row dimension. Specifically, a depth projection maps $Z$ from $\mathbb{R}^{C \times n}$ to $\mathbb{R}^{d \times n}$, and the process is expressed as follows:

$$Z' = W_d(\mathrm{Transpose}(Z)),$$

where $W_d$ denotes the weight of the first fully connected layer. Next, a row projection is applied to map $Z'$ from $\mathbb{R}^{d \times n}$ to $\mathbb{R}^{d \times r}$, and the process is expressed as follows:

$$O = W_r(\mathrm{Transpose}(Z')),$$

where $W_r$ denotes the weight of the second fully connected layer and the dimensions of the final output $O$ are $d \times r$. Finally, the global feature descriptor $f$ is produced by flattening and L2-normalizing $O$.
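The aggregation pipeline (flatten, cascaded feature mixers, depth projection, row projection, flatten, L2 normalization) can be traced with explicit shapes in the following NumPy sketch. It is a simplified illustration rather than the MixVPR reference implementation: biases and any normalization layers inside the mixers are omitted, ReLU is assumed as the nonlinearity, and the function names and weight shapes are hypothetical.

```python
import numpy as np


def feature_mixer(x, w1, w2):
    """One mixer block on flattened maps x of shape (C, n): x + W2 relu(W1 x)."""
    hidden = np.maximum(x @ w1.T, 0.0)   # first fully connected layer + ReLU
    return x + hidden @ w2.T             # second fully connected layer + skip connection


def aggregate(feat, mixers, w_d, w_r):
    """feat: (C, H, W); mixers: list of (W1, W2), each (n, n); w_d: (d, C); w_r: (r, n)."""
    c, h, w = feat.shape
    z = feat.reshape(c, h * w)           # flatten each 2D feature to length n = H * W
    for w1, w2 in mixers:                # L cascaded feature mixers
        z = feature_mixer(z, w1, w2)
    z_d = w_d @ z                        # depth projection: (C, n) -> (d, n)
    o = z_d @ w_r.T                      # row projection:   (d, n) -> (d, r)
    g = o.reshape(-1)                    # flatten to a vector of length d * r
    return g / np.linalg.norm(g)         # L2 normalization


rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 4, 5))                      # C = 8, n = 20
mixers = [(0.1 * rng.normal(size=(20, 20)),
           0.1 * rng.normal(size=(20, 20))) for _ in range(2)]
descriptor = aggregate(feat, mixers, rng.normal(size=(4, 8)), rng.normal(size=(3, 20)))
```

Here the descriptor has length d × r = 4 × 3 = 12 and unit norm. Because every fully connected layer in a mixer acts on the whole flattened spatial dimension, each output element depends on all spatial positions, which is the holistic aggregation behavior described above.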

3.5. Weighted Soft Margin Triplet Loss

During training, the ASA module is applied to all perspective–panoramic pairs. For the matching pairs, the training process focuses on maximizing the similarity score between the feature descriptors of the perspective image and the scene alignment area (the area of the panoramic image that is the most similar to the perspective image). This approach enhances the identification of viewpoint differences. For non-matching pairs, no region of the panoramic image shares the same FOV as the perspective image; nonetheless, a region exhibiting the highest degree of similarity will always be present. By minimizing the similarity score between the feature descriptors of the perspective image and this most similar region, the ability to distinguish features that are challenging to classify can be enhanced. Therefore, in the experiment, we train our network using the weighted soft margin triplet loss [28]. The loss is calculated as follows:

$$\mathcal{L} = \log\left(1 + e^{\alpha\,\left(d(f_q,\,f_p^{+}) - d(f_q,\,f_p^{-})\right)}\right),$$

where $f_q$ denotes the feature descriptor of the perspective image, and $f_p^{+}$ and $f_p^{-}$ denote the feature descriptors of the scene alignment areas cropped from the matching and non-matching panoramic images, respectively. Function $d(\cdot,\cdot)$ calculates the Euclidean distance of the matching and non-matching pairs, and parameter $\alpha$ controls the convergence rate of the training process.
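A minimal NumPy sketch of the weighted soft margin triplet loss, in its standard form log(1 + exp(α(d_pos − d_neg))), is given below. The function name, the batch averaging, and the default value of `alpha` are assumptions made for illustration; the value used in the experiments is not stated in this section.

```python
import numpy as np


def weighted_soft_margin_triplet_loss(f_q, f_pos, f_neg, alpha=10.0):
    """f_q, f_pos, f_neg: (B, D) descriptors; alpha=10.0 is an assumed value."""
    d_pos = np.linalg.norm(f_q - f_pos, axis=-1)   # distance to the matching region
    d_neg = np.linalg.norm(f_q - f_neg, axis=-1)   # distance to the non-matching region
    # log(1 + exp(x)) evaluated stably as logaddexp(0, x), averaged over the batch.
    return float(np.mean(np.logaddexp(0.0, alpha * (d_pos - d_neg))))


f_q = np.array([[1.0, 0.0]])
near = np.array([[1.0, 0.0]])
far = np.array([[0.0, 1.0]])
easy = weighted_soft_margin_triplet_loss(f_q, near, far)   # close to zero
hard = weighted_soft_margin_triplet_loss(f_q, far, near)   # large penalty
```

The loss approaches zero when the matching region is much closer than the non-matching one and grows roughly linearly in the distance gap when the order is reversed. A larger `alpha` sharpens this transition, which is why it affects how quickly training converges.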

5. Conclusions

Traditional deep learning-based VG methods typically rely on GPS labels to select positive and negative samples. When perspective images are used as training samples, this often leads to errors in sample selection. In this paper, we propose the ASA-VG framework, in which we select panoramic images with a complete FOV as reference images and leverage the LskNet backbone network integrated with the SFE module to extract contextual information containing geometric spatial relationships. Additionally, we design the ASA module to achieve scene alignment between perspective and panoramic images.

Experimental results demonstrate that our proposed ASA-VG algorithm achieves significant improvements in accuracy and efficiency compared to state-of-the-art (SOTA) algorithms. By modeling geometric structure information and contextual information in images, this method can better adapt to changes in scene appearance over time. Additionally, benefiting from the scene pre-alignment operation, the framework provides faster and more accurate retrieval and localization.

However, we still face a challenge compared to traditional VG algorithms: the benchmark datasets required for this algorithm are relatively limited, and the generalization performance may be lower than that of traditional VG algorithms when the training sample is small.

With the development of visual foundation models (VFMs), universal feature representations can be obtained using limited samples. In the future, we will continue to explore how to combine perspective–panoramic VG with VFMs to improve the model's generalization performance.