Implicit Identity Authentication Method Based on User Posture Perception

Abstract

1. Introduction

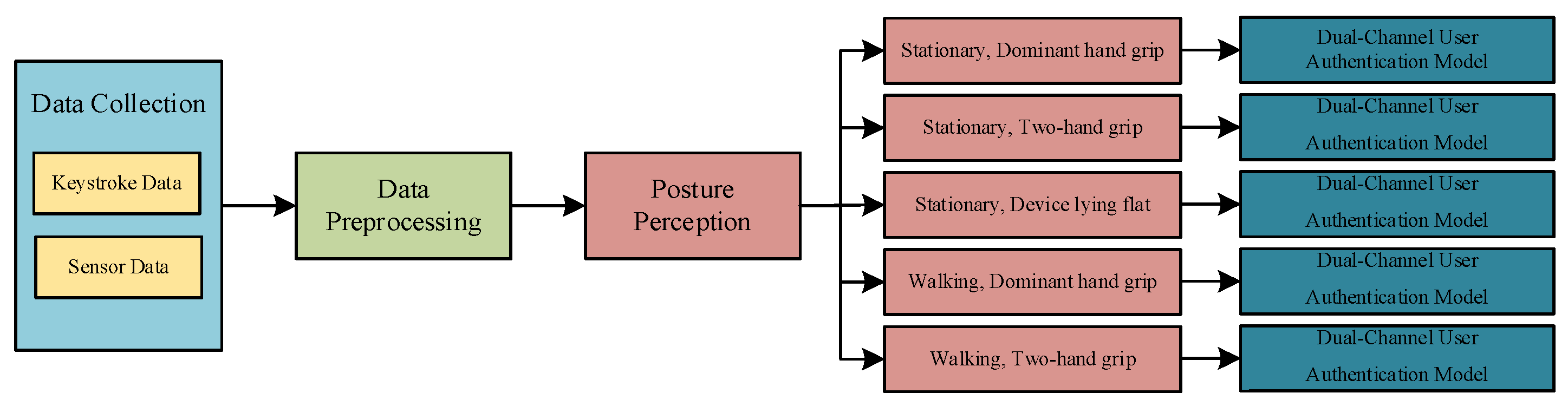

- An implicit authentication dataset containing two activity scenarios and three holding postures is constructed.

- An algorithm that recognizes user gestures from sensor data is proposed, which addresses the excessive feature differences caused by different operating gestures.

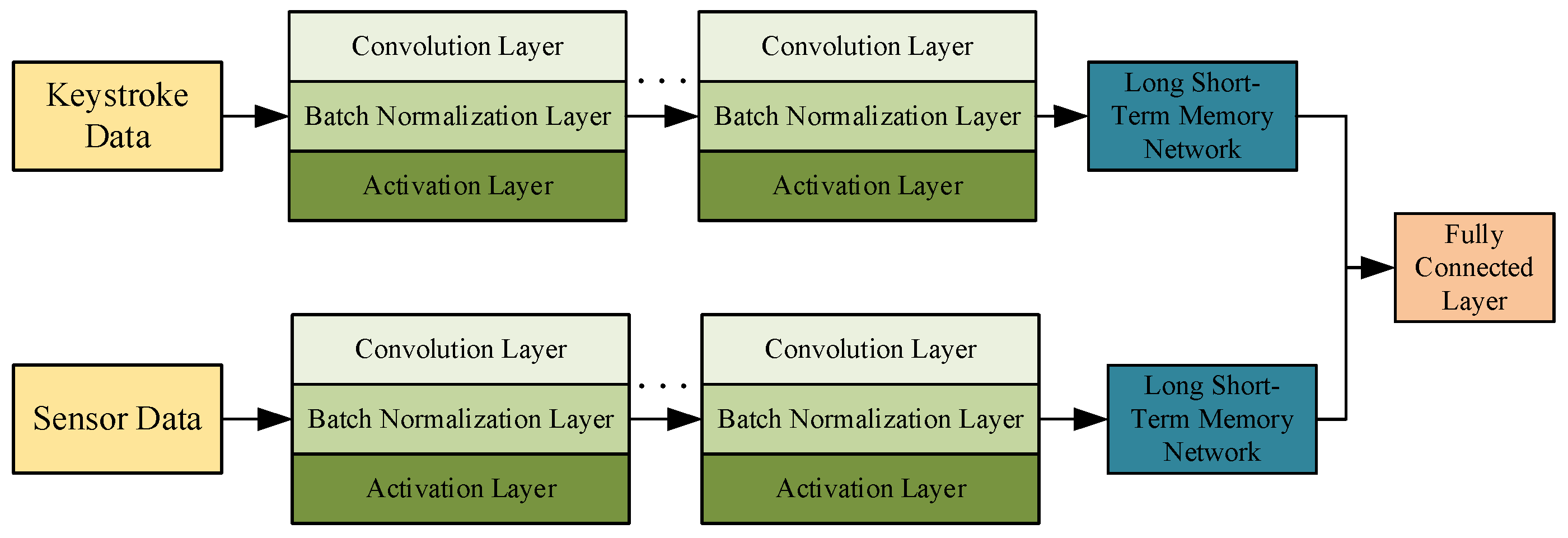

- An implicit authentication method that fuses keystroke and sensor data is proposed. It combines the CNN's local spatial feature extraction with the LSTM's temporal feature extraction to capture both the continuity and the user-specific behavioral features of the keystroke and sensor data.

2. Related Work

3. Data Collection and Preprocessing

3.1. Data Collection

3.2. Data Preprocessing

4. Experimental Analysis

4.1. Posture Perception Model

4.2. Dual-Channel User Authentication Model

4.3. Experimental Environment

4.4. Experimental Result

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Khan, S.; Nauman, M.; Othman, A.T.; Musa, S. How Secure is Your Smartphone: An Analysis of Smartphone Security Mechanisms. In Proceedings of the 2012 International Conference on Cyber Security, Cyber Warfare and Digital Forensic (CyberSec), Kuala Lumpur, Malaysia, 26–28 June 2012; pp. 76–81.

- Naji, Z.; Bouzidi, D. Deep learning approach for a dynamic swipe gestures based continuous authentication. In Proceedings of the 3rd International Conference on Artificial Intelligence and Computer Vision (AICV2023), Marrakesh, Morocco, 5–7 March 2023; Springer: Cham, Switzerland, 2023; pp. 48–57.

- Stylios, I.; Chatzis, S.; Thanou, O.; Kokolakis, S. Continuous authentication with feature-level fusion of touch gestures and keystroke dynamics to solve security and usability issues. Comput. Secur. 2023, 132, 103363.

- Al-Saraireh, J.; AlJa’afreh, M.R. Keystroke and swipe biometrics fusion to enhance smartphones authentication. Comput. Secur. 2023, 125, 103022.

- Chao, J.; Hossain, M.S.; Lancor, L. Swipe gestures for user authentication in smartphones. J. Inf. Secur. Appl. 2023, 74, 103450.

- Sejjari, A.; Moujahdi, C.; Assad, N.; Abdelfatteh, H. Dynamic authentication on mobile devices: Evaluating continuous identity verification through swiping gestures. SIViP 2024, 18, 9095–9103.

- Li, Z.; Han, W.; Xu, W. A Large-Scale Empirical Analysis of Chinese Web Passwords. In Proceedings of the 23rd USENIX Security Symposium (USENIX Security 14), San Diego, CA, USA, 20–22 August 2014; pp. 559–574.

- Mazurek, M.L.; Komanduri, S.; Vidas, T.; Bauer, L.; Christin, N.; Cranor, L.F.; Kelley, P.G.; Shay, R.; Ur, B. Measuring Password Guessability for an Entire University. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security, Berlin, Germany, 4–8 November 2013; pp. 173–186.

- Yang, W.Q.; Wang, M.; Zou, S.H.; Peng, J.H.; Xu, G.S. An Implicit Identity Authentication Method Based on Deep Connected Attention CNN for Wild Environment. In Proceedings of the 2021 9th International Conference on Communications and Broadband Networking, Shanghai, China, 27 February 2021; pp. 94–100.

- Kokal, S.; Vanamala, M.; Dave, R. Deep Learning and Machine Learning, Better Together Than Apart: A Review on Biometrics Mobile Authentication. J. Cybersecur. Priv. 2023, 3, 227–258.

- Sandhya, M.; Morampudi, M.K.; Pruthweraaj, I.; Garepally, P.S. Multi-instance cancelable iris authentication system using triplet loss for deep learning models. Vis. Comput. 2022, 39, 1571–1581.

- Zeroual, A.; Amroune, M.; Derdour, M.; Bentahar, A. Lightweight deep learning model to secure authentication in Mobile Cloud Computing. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 6938–6948.

- Cao, Q.; Xu, F.; Li, H. User Authentication by Gait Data from Smartphone Sensors Using Hybrid Deep Learning Network. Mathematics 2022, 10, 2283.

- Delgado-Santos, P.; Tolosana, R.; Guest, R.; Vera-Rodriguez, R.; Deravi, F.; Morales, A. GaitPrivacyON: Privacy-preserving mobile gait biometrics using unsupervised learning. Pattern Recognit. Lett. 2022, 161, 30–37.

- Stragapede, G.; Delgado-Santos, P.; Tolosana, R.; Vera-Rodriguez, R.; Guest, R.; Morales, A. Mobile Keystroke Biometrics Using Transformers. In Proceedings of the 2023 IEEE 17th International Conference on Automatic Face and Gesture Recognition (FG), Waikoloa Beach, HI, USA, 5–8 January 2023.

- Zheng, Y.; Wang, S.; Chen, B. Multikernel correntropy based robust least squares one-class support vector machine. Neurocomputing 2023, 545, 126324.

- Peralta, D.; Galar, M.; Triguero, I.; Paternain, D.; García, S.; Barrenechea, E.; Benítez, J.M.; Bustince, H.; Herrera, F. A Survey on Fingerprint Minutiae-based Local Matching for Verification and Identification: Taxonomy and Experimental Evaluation. Inf. Sci. 2015, 315, 67–87.

- Huang, P.; Gao, G.; Qian, C.; Yang, G.; Yang, Z. Fuzzy Linear Regression Discriminant Projection for Face Recognition. IEEE Access 2017, 5, 4340–4349.

- Dovydaitis, L.; Rasymas, T.; Rudžionis, V. Speaker Authentication System based on Voice Biometrics and Speech Recognition. In Proceedings of the International Conference on Business Information Systems, Leipzig, Germany, 6–8 July 2016; pp. 79–84.

- Ignat, A.; Luca, M.; Ciobanu, A. Iris Features Using Dual Tree Complex Wavelet Transform in Texture Evaluation for Biometrical Identification. In Proceedings of the 2013 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2013; pp. 1–4.

- Alzubaidi, A.; Kalita, J. Authentication of Smartphone Users Using Behavioral Biometrics. IEEE Commun. Surv. Tutor. 2016, 18, 1998–2026.

- Liu, J.; Yuan, Q.; Li, Y.; Lv, X. Recognition of Human Motion Based on Built-in Sensors of Android Smartphones. J. Integr. Technol. 2014, 3, 61–67.

- McLoughlin, I.V. Keypress Biometrics for User Validation in Mobile Consumer Devices. In Proceedings of the 2009 IEEE 13th International Symposium on Consumer Electronics, Kyoto, Japan, 25–28 May 2009; pp. 280–284.

- Wu, J.S.; Lin, W.C.; Lin, C.T.; Wei, T.E. Smartphone Continuous Authentication based on Keystroke and Gesture Profiling. In Proceedings of the 2015 International Carnahan Conference on Security Technology (ICCST), Taipei, Taiwan, 21–24 September 2015; pp. 191–197.

- Buchoux, A.; Clarke, N.L. Deployment of Keystroke Analysis on a Smartphone. In Proceedings of the 6th Australian Information Security Management Conference, Perth, Australia, 1 December 2008; pp. 29–39.

- Zahid, S.; Shahzad, M.; Khayam, S.A.; Farooq, M. Keystroke-based User Identification on Smart Phones. In Proceedings of the International Workshop on Recent Advances in Intrusion Detection, Saint-Malo, France, 23–25 September 2009; pp. 224–243.

- Kambourakis, G.; Damopoulos, D.; Papamartzivanos, D.; Pavlidakis, E. Introducing Touchstroke: Keystroke-based Authentication System for Smartphones. Secur. Commun. Netw. 2016, 9, 542–554.

- Zhang, H.; Yan, C.; Zhao, P.; Wang, M. Model Construction and Authentication Algorithm of Virtual Keystroke Dynamics for Smartphone Users. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 171–175.

- Krishnamoorthy, S.; Rueda, L.; Saad, S.; Elmiligi, H. Identification of User Behavioral Biometrics for Authentication Using Keystroke Dynamics and Machine Learning. In Proceedings of the 2018 2nd International Conference on Biometric Engineering and Applications (ICBEA’18), Amsterdam, The Netherlands, 16–18 May 2018; pp. 50–57.

- Zheng, N.; Bai, K.; Huang, H.; Wang, H. You Are How You Touch: User Verification on Smartphones via Tapping Behaviors. In Proceedings of the 2014 IEEE 22nd International Conference on Network Protocols, Raleigh, NC, USA, 21–24 October 2014; pp. 221–232.

- Kang, P.; Cho, S. Keystroke Dynamics-based User Authentication Using Long and Free Text Strings from Various Input Devices. Inf. Sci. 2015, 308, 72–93.

- Kim, J.; Kim, H.; Kang, P. Keystroke Dynamics-based User Authentication Using Freely Typed Text based on User-Adaptive Feature Extraction and Novelty Detection. Appl. Soft Comput. 2018, 62, 1077–1087.

- Buschek, D.; De Luca, A.; Alt, F. Improving Accuracy, Applicability and Usability of Keystroke Biometrics on Mobile Touchscreen Devices. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 1393–1402.

- Giuffrida, C.; Majdanik, K.; Conti, M.; Bos, H. I Sensed It Was You: Authenticating Mobile Users with Sensor-Enhanced Keystroke Dynamics. In Proceedings of the 11th International Conference on Detection of Intrusions and Malware, and Vulnerability Assessment, Egham, UK, 10–11 July 2014; pp. 92–111.

- Shankar, V.; Singh, K. An Intelligent Scheme for Continuous Authentication of Smartphone Using Deep Auto Encoder and Softmax Regression Model Easy for User Brain. IEEE Access 2019, 7, 48645–48654.

- Buriro, A.; Crispo, B.; Gupta, S.; Frari, F.D. Dialerauth: A Motion-Assisted Touch-based Smartphone User Authentication Scheme. In Proceedings of the Eighth ACM Conference on Data and Application Security and Privacy, Tempe, AZ, USA, 19–21 March 2018; pp. 267–276.

- Kim, J.; Kang, P. Freely Typed Keystroke Dynamics-Based User Authentication for Mobile Devices Based on Heterogeneous Features. Pattern Recognit. 2020, 108, 107556.

- Sitová, Z.; Šeděnka, J.; Yang, Q.; Peng, G.; Zhou, G.; Gasti, P.; Balagani, K.S. HMOG: New Behavioral Biometric Features for Continuous Authentication of Smartphone Users. IEEE Trans. Inf. Forensics Secur. 2015, 11, 877–892.

- Lamiche, I.; Bin, G.; Jing, Y.; Yu, Z.; Hadid, A. A Continuous Smartphone Authentication Method based on Gait Patterns and Keystroke Dynamics. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 4417–4430.

- Centeno, M.P.; Guan, Y.; Moorsel, A.V. Mobile based Continuous Authentication Using Deep Features. In Proceedings of the 2nd International Workshop on Embedded and Mobile Deep Learning, Munich, Germany, 15 June 2018; pp. 19–24.

- Volaka, H.C.; Alptekin, G.; Basar, O.E.; Isbilen, M.; Incel, O.D. Towards Continuous Authentication on Mobile Phones Using Deep Learning Models. Procedia Comput. Sci. 2019, 155, 177–184.

| Field | Type | Description |
|---|---|---|
| user ID | string | Identifier assigned to the data collector. |
| action | int | 1 for stationary, 2 for walking. |
| Pose | int | 1 for dominant-hand grip, 2 for two-hand grip, 3 for device lying flat on a table. |
| Accelerometer | List | Accelerometer data collected during user input. Each set of three values represents the x-axis, y-axis, and z-axis. |
| Gyroscope | List | Gyroscope data collected during user input. Each set of three values represents the x-axis, y-axis, and z-axis. |
| Gap | List | Press time, release time, and press position of each key the user taps on the keyboard. |
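
The record layout in the table above can be sketched as a small Python data class. This is only an illustration: the serialization (a dict keyed by the table's field names), the names `Record`, `to_triples`, and `parse_record`, and the choice to leave `Gap` unparsed are assumptions, since the table does not specify the storage format or the internal layout of `Gap`.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple

Triple = Tuple[float, float, float]


def to_triples(flat: List[float]) -> List[Triple]:
    """Group a flat [x0, y0, z0, x1, y1, z1, ...] list into (x, y, z) readings."""
    if len(flat) % 3 != 0:
        raise ValueError("sensor list length must be a multiple of 3")
    return [(flat[i], flat[i + 1], flat[i + 2]) for i in range(0, len(flat), 3)]


@dataclass
class Record:
    user_id: str
    action: int  # 1 = stationary, 2 = walking
    pose: int    # 1 = dominant-hand grip, 2 = two-hand grip, 3 = lying flat
    accelerometer: List[Triple]
    gyroscope: List[Triple]
    gap: List[Any]  # kept raw: its internal layout is not given in the table


def parse_record(raw: Dict[str, Any]) -> Record:
    """Validate one raw record (assumed: a dict keyed by the table's field names)."""
    if raw["action"] not in (1, 2):
        raise ValueError("action must be 1 or 2")
    if raw["Pose"] not in (1, 2, 3):
        raise ValueError("Pose must be 1, 2, or 3")
    return Record(
        user_id=str(raw["user ID"]),
        action=raw["action"],
        pose=raw["Pose"],
        accelerometer=to_triples(raw["Accelerometer"]),
        gyroscope=to_triples(raw["Gyroscope"]),
        gap=list(raw["Gap"]),
    )
```

Grouping the sensor lists into triples at parse time makes a truncated reading fail early instead of silently shifting the axes.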

| Experimental Element | Experimental Arrangement |
|---|---|
| Number of Participants | 60 people. |
| Experimental Equipment | Personal Android phone. |
| Activity Scenarios | Stationary, Walking. |
| Holding Postures | Dominant-hand grip, Two-hand grip, Device lying flat. |
| Time Span | Each volunteer participates in data collection for 7 rounds, at least one round per day, with each round including data collection in 2 activity scenarios and 3 holding postures. |

| Layer | Convolution Kernel Size | Number of Convolution Kernels |
|---|---|---|
| Convolution Layer | 1 × 5 | 12 |
| Batch Normalization Layer | / | / |
| Activation Layer | / | / |
| Convolution Layer | 1 × 5 | 24 |
| Activation Layer | / | / |
| Batch Normalization Layer | / | / |
| Convolution Layer | 1 × 5 | 36 |
| Activation Layer | / | / |
| Batch Normalization Layer | / | / |
| Bi-directional LSTM Module | / | / |
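
As a rough check on the size of this stack, the sketch below works out the output length and weight count of the three rows that list a 1 × 5 kernel (12, 24, and 36 kernels). It is arithmetic only, not the authors' implementation. Stride 1, no padding, one bias per kernel, 6 input channels (3 accelerometer + 3 gyroscope axes), and a 128-step window are all assumptions, because the table gives none of them; `conv_stack_summary` is a hypothetical helper name.

```python
from typing import List, Tuple

# (kernel_width, num_kernels) for the three rows that list a 1 x 5 kernel.
CONV_LAYERS: List[Tuple[int, int]] = [(5, 12), (5, 24), (5, 36)]


def conv_stack_summary(
    in_channels: int,
    seq_len: int,
    layers: List[Tuple[int, int]] = CONV_LAYERS,
) -> List[Tuple[int, int, int]]:
    """Return (output_channels, output_length, weight_count) for each layer.

    Assumes stride 1 and no padding, so a width-5 kernel shortens the
    sequence by 4 steps. weight_count includes one bias per kernel.
    """
    summary = []
    channels, length = in_channels, seq_len
    for kernel_width, num_kernels in layers:
        length = length - kernel_width + 1
        if length <= 0:
            raise ValueError("sequence too short for this kernel stack")
        weights = channels * kernel_width * num_kernels + num_kernels
        summary.append((num_kernels, length, weights))
        channels = num_kernels
    return summary


# Assumed input: 6 channels over a 128-step window.
for out_channels, out_len, weights in conv_stack_summary(6, 128):
    print(out_channels, out_len, weights)
# prints:
# 12 124 372
# 24 120 1464
# 36 116 4356
```

Under these assumptions the convolutional front end has 6,192 trainable weights, and a sequence of 116 steps with 36 features each would reach the bi-directional LSTM module.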

| Type | Configuration |
|---|---|
| Operating System | Ubuntu 18.04 LTS |
| Python | 3.8 |
| CUDA | 11.2 |
| NVIDIA Driver | 460.8 |
| CPU | 2 × Intel(R) Xeon(R) Silver 4216 CPU @ 2.10 GHz |
| Memory | 8 × 32 GB |
| GPU | 8 × NVIDIA Quadro RTX 8000 |

| Activity Scenario | Holding Posture | Accuracy | FAR | FRR | EER |
|---|---|---|---|---|---|
| Stationary | Dominant-hand grip | 81.64% | 0.1782 | 0.1904 | 0.1880 |
| Stationary | Two-hand grip | 86.78% | 0.0910 | 0.0916 | 0.0924 |
| Stationary | Device lying flat | 95.83% | 0.0624 | 0.0623 | 0.0624 |
| Walking | Dominant-hand grip | 76.28% | 0.1927 | 0.2018 | 0.1996 |
| Walking | Two-hand grip | 83.24% | 0.1237 | 0.1224 | 0.1276 |
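
For readers unfamiliar with the FAR, FRR, and EER columns, the sketch below shows one common way to compute them from match scores. The exact procedure used for the table is not stated here, so this is an assumption: a sample is accepted when its score is at or above the threshold, and the EER is approximated as the mean of FAR and FRR at the threshold where the two are closest. The function names and the toy scores are hypothetical.

```python
from typing import List, Tuple


def far_frr(
    genuine: List[float], impostor: List[float], threshold: float
) -> Tuple[float, float]:
    """FAR: share of impostor scores accepted. FRR: share of genuine scores rejected.

    A score is accepted when it is greater than or equal to the threshold.
    """
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(
    genuine: List[float], impostor: List[float]
) -> Tuple[float, float]:
    """Return (eer, threshold), trying every observed score as a threshold.

    The EER is taken as the mean of FAR and FRR where |FAR - FRR| is smallest.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Toy scores for illustration only (not data from the paper).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.6, 0.3, 0.2, 0.1]
print(equal_error_rate(genuine_scores, impostor_scores))  # prints (0.25, 0.6)
```

With a finite set of scores, FAR and FRR rarely cross at exactly the same value, which is one reason a reported EER can differ slightly from both the FAR and the FRR listed beside it.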

| User Posture | Data Type | Accuracy |
|---|---|---|
| Stationary, Dominant-hand grip | Motion sensor | 78.32% |
| Stationary, Dominant-hand grip | Motion sensor + Keystroke | 81.64% |
| Stationary, Two-hand grip | Motion sensor | 83.91% |
| Stationary, Two-hand grip | Motion sensor + Keystroke | 86.78% |
| Stationary, Device lying flat | Motion sensor | 93.76% |
| Stationary, Device lying flat | Motion sensor + Keystroke | 95.83% |
| Walking, Dominant-hand grip | Motion sensor | 71.53% |
| Walking, Dominant-hand grip | Motion sensor + Keystroke | 76.28% |
| Walking, Two-hand grip | Motion sensor | 78.42% |
| Walking, Two-hand grip | Motion sensor + Keystroke | 83.24% |
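
The effect of adding keystroke data is easier to see as a per-posture difference. The short sketch below subtracts the motion-sensor-only accuracy from the fused accuracy for each posture; the numbers are copied from the table above, while the names `ACCURACY` and `fusion_gain` are hypothetical.

```python
from typing import Dict, Tuple

# Accuracy in percent, copied from the table above:
# posture -> (motion sensor only, motion sensor + keystroke).
ACCURACY: Dict[str, Tuple[float, float]] = {
    "Stationary, Dominant-hand grip": (78.32, 81.64),
    "Stationary, Two-hand grip": (83.91, 86.78),
    "Stationary, Device lying flat": (93.76, 95.83),
    "Walking, Dominant-hand grip": (71.53, 76.28),
    "Walking, Two-hand grip": (78.42, 83.24),
}


def fusion_gain(table: Dict[str, Tuple[float, float]] = ACCURACY) -> Dict[str, float]:
    """Gain in percentage points from adding keystroke data, per posture."""
    return {
        posture: round(fused - sensor_only, 2)
        for posture, (sensor_only, fused) in table.items()
    }


for posture, gain in fusion_gain().items():
    print(f"{posture}: +{gain:.2f}")
# prints:
# Stationary, Dominant-hand grip: +3.32
# Stationary, Two-hand grip: +2.87
# Stationary, Device lying flat: +2.07
# Walking, Dominant-hand grip: +4.75
# Walking, Two-hand grip: +4.82
```

In these figures the two walking postures gain the most from fusion (4.75 and 4.82 points), and the device lying flat gains the least (2.07 points).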

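| User Posture | Model Type | Accuracy |
|---|---|---|
| Stationary, Dominant-hand grip | CNN | 74.84% |
| Stationary, Dominant-hand grip | Stacked LSTM | 77.38% |
| Stationary, Dominant-hand grip | CNN-LSTM | 81.64% |
| Stationary, Two-hand grip | CNN | 81.24% |
| Stationary, Two-hand grip | Stacked LSTM | 83.75% |
| Stationary, Two-hand grip | CNN-LSTM | 86.78% |
| Stationary, Device lying flat | CNN | 91.59% |
| Stationary, Device lying flat | Stacked LSTM | 93.75% |
| Stationary, Device lying flat | CNN-LSTM | 95.83% |
| Walking, Dominant-hand grip | CNN | 68.88% |
| Walking, Dominant-hand grip | Stacked LSTM | 71.42% |
| Walking, Dominant-hand grip | CNN-LSTM | 76.28% |
| Walking, Two-hand grip | CNN | 75.69% |
| Walking, Two-hand grip | Stacked LSTM | 79.12% |
| Walking, Two-hand grip | CNN-LSTM | 83.24% |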

| Method | Data Source | Classifier | Activity Scenario | Holding Posture | Experimental Performance |
|---|---|---|---|---|---|
| Reference [38] | Ac, Gy, Ma | Scaled Manhattan | Stationary/Walking | - | EER: Stationary 10.05%, Walking 7.16% |
| Reference [39] | Ac | MLP | Walking | - | ACC: 99.11%; EER: 1% |
| Reference [40] | Ac, Gy, Ma | CNN | Stationary/Walking | - | ACC: 97.8% |
| Reference [41] | Screen, Ac, Gy, Ma | CNN | Stationary/Walking | - | ACC: 88%; EER: 15% |
| Ours | Screen, Ac, Gy | CNN-LSTM | Stationary/Walking | Dominant-hand grip/Two-hand grip/Device lying flat | ACC: 85.76% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, B.; Tang, S.; Huang, F.; Yin, G.; Cai, J. Implicit Identity Authentication Method Based on User Posture Perception. Electronics 2025, 14, 835. https://doi.org/10.3390/electronics14050835
