Spatial Plane Positioning of AR-HUD Graphics: Implications for Driver Inattentional Blindness in Navigation and Collision Warning Scenarios

Abstract

1. Introduction

2. Experiment 1

2.1. Method

2.1.1. Experimental Participants and Equipment

2.1.2. Experimental Material

- (1) AR-HUD navigation interface design

- (2) AR-HUD navigation-assisted driving simulation scenarios

2.1.3. Experimental Variables and Design

2.1.4. Experimental Process

2.2. Results

2.2.1. Inattentional Blindness

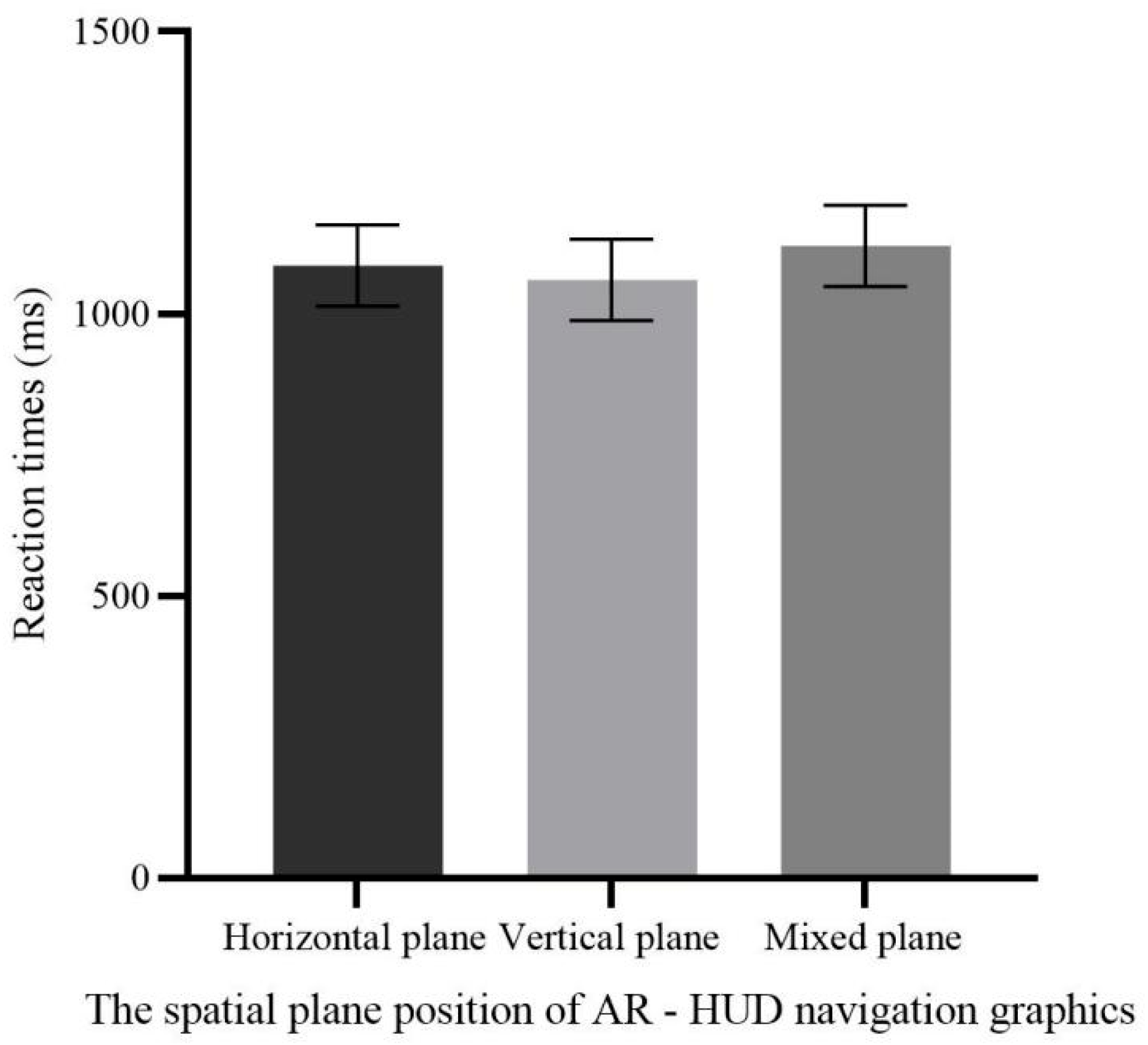

2.2.2. Reaction Time

2.2.3. Workload

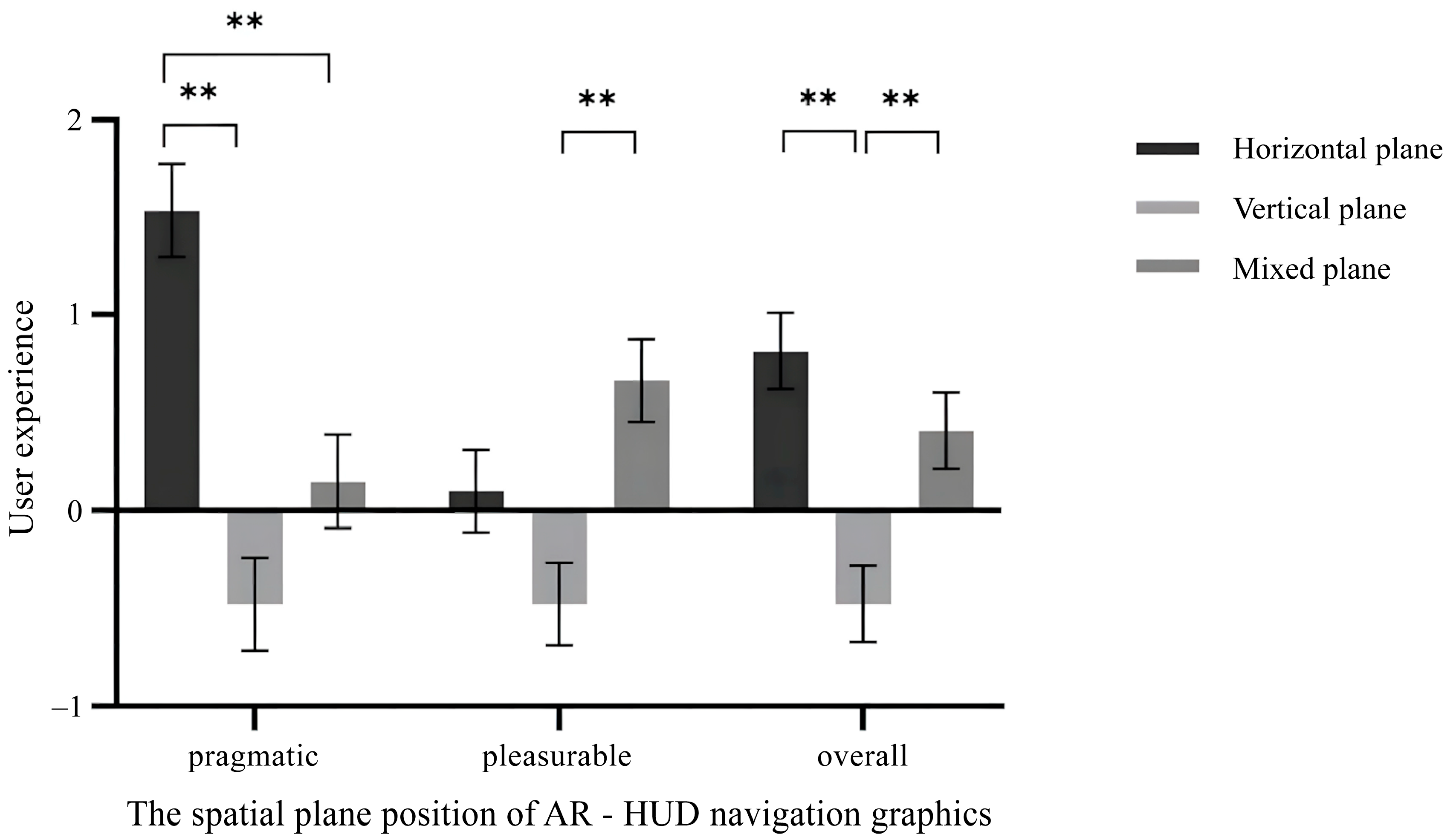

2.2.4. Subjective Evaluation of User Experience

2.2.5. Driving Behavior Is Related to Subjective Workload and User Experience

3. Experiment 2

3.1. Method

3.1.1. Experimental Participants and Equipment

3.1.2. Experimental Materials

- (1) AR-HUD collision warning interface design

- (2) AR-HUD collision warning-assisted driving simulation scenarios

3.1.3. Experimental Variables and Design

3.1.4. Experimental Procedure

3.2. Results

3.2.1. Inattentional Blindness

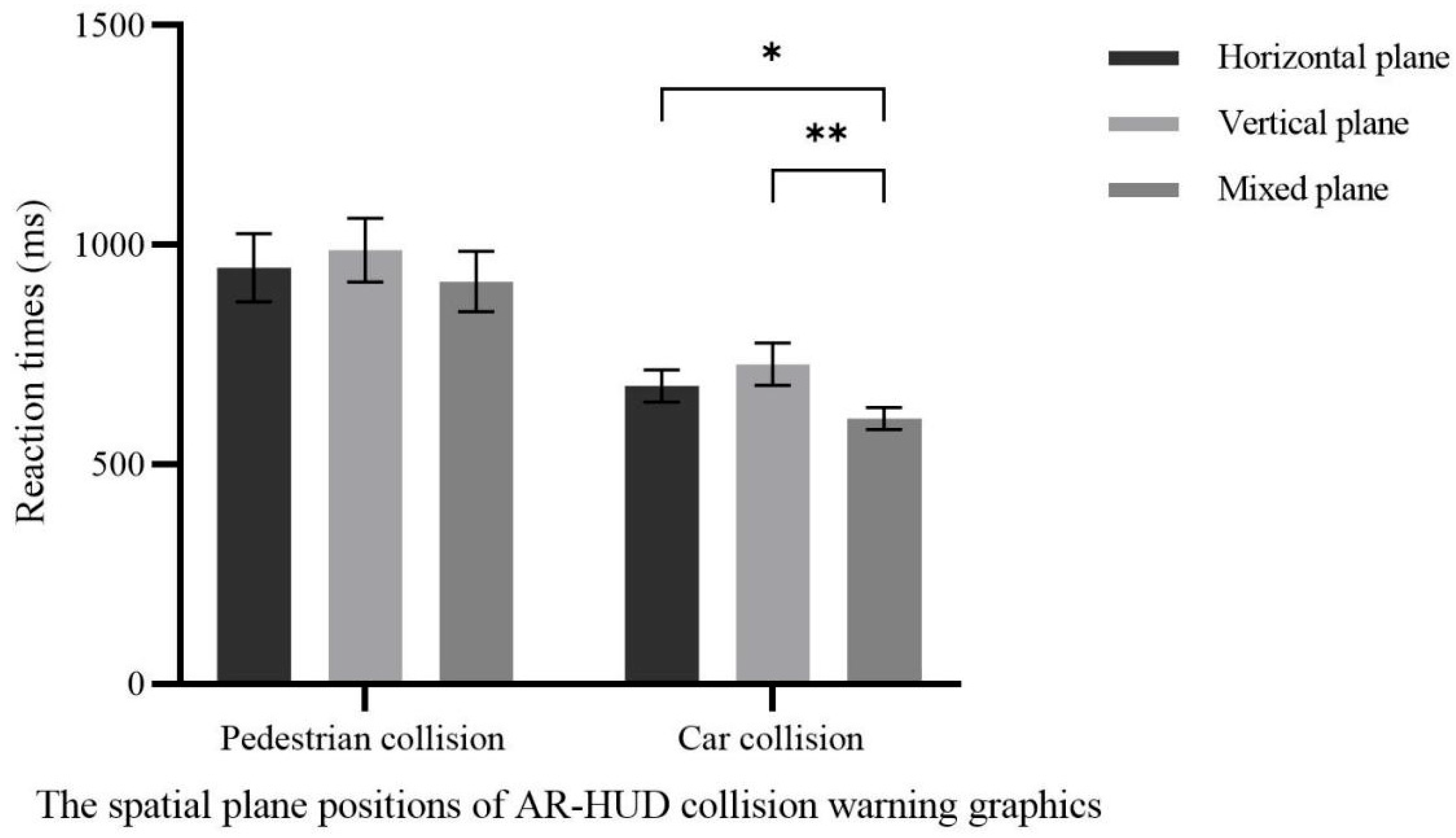

3.2.2. Reaction Time

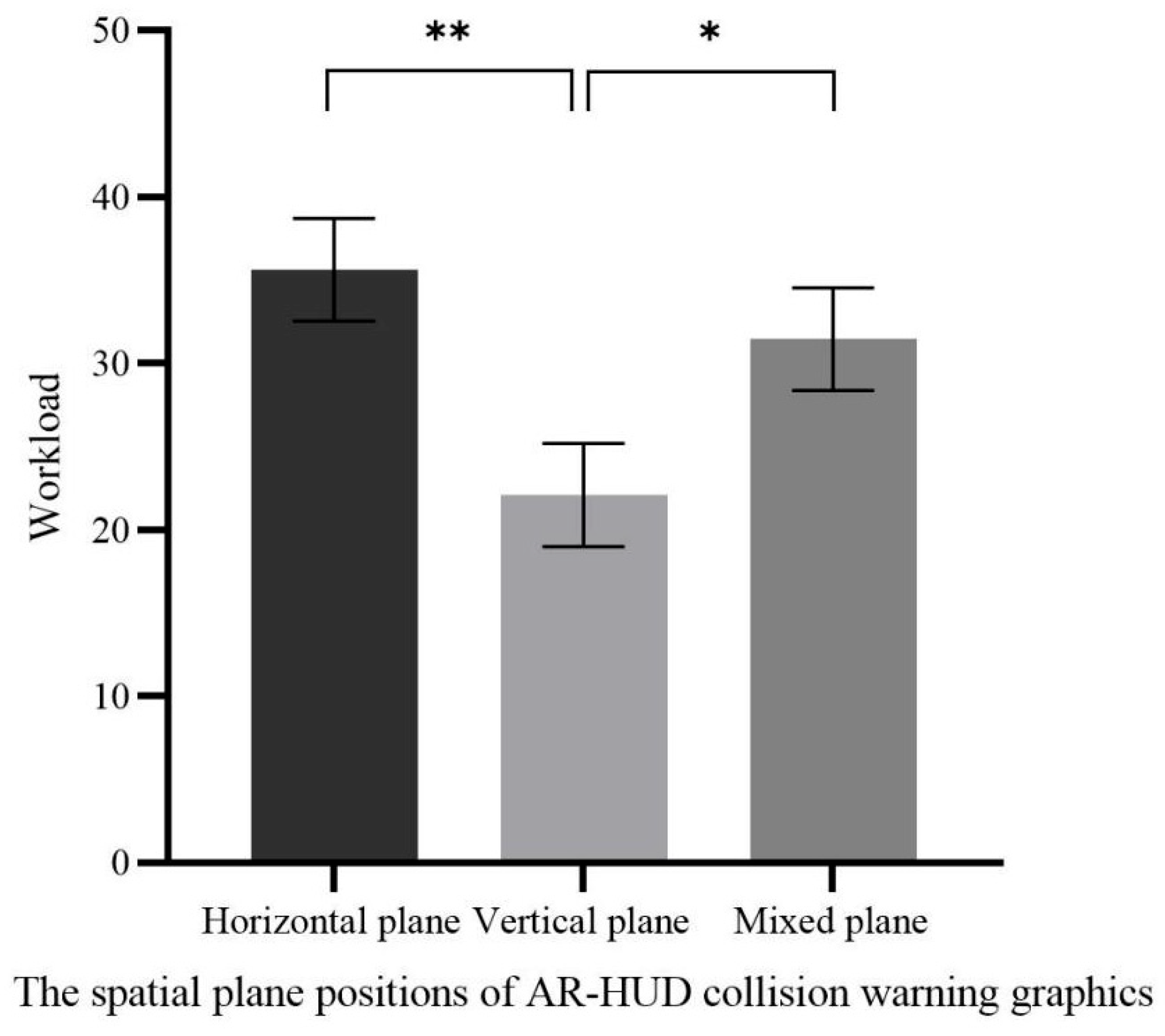

3.2.3. Workload

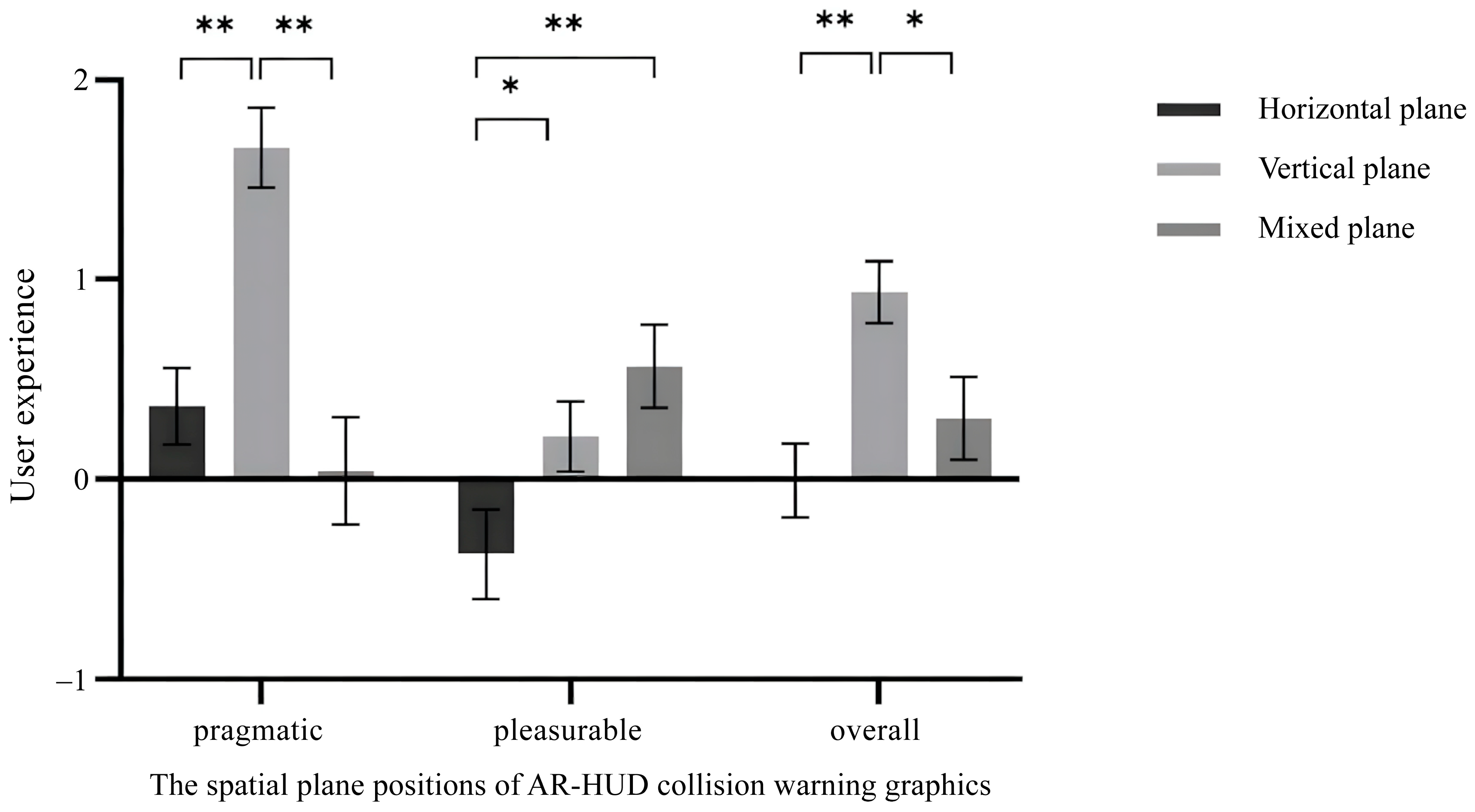

3.2.4. Subjective Evaluation of User Experience

3.2.5. Relationship Between Workload and User Experience

4. Discussion

4.1. Impact of Spatial Plane Position of Navigation Graphics on Inattentional Blindness Behavior and Reaction Time

4.2. The Spatial Plane Position of Navigation Graphics Affects Driving Workload and User Experience

4.3. The Spatial Plane Position of Collision Warning Graphics Affects Driving Behavior and Perception

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Spatial Position | Straight Driving Stage | Turning Stage |
|---|---|---|
| Horizontal planar position |  |  |
| Vertical planar position |  |  |
| Mixed planar position |  |  |

| Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|
| Horizontal plane | Vertical plane | 25.103704 | 101.6220857 | 0.805 | −174.071924 | 224.279332 |
| Horizontal plane | Mixed plane | −35.23703 | 101.6220857 | 0.729 | −234.412665 | 163.938591 |
| Vertical plane | Mixed plane | −60.34074 | 101.6220857 | 0.553 | −259.516369 | 138.834887 |
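The interval and p columns in the table above appear consistent with a standard two-sided Wald test, in which the interval is the mean difference plus or minus a normal critical value times the standard error. The following is a minimal sketch under that assumption; the function name `wald_summary` is hypothetical, and the source does not state which software or procedure produced the values.

```python
from statistics import NormalDist


def wald_summary(mean_diff, std_err, level=0.95):
    """Return (lower, upper, p) for a two-sided Wald test of mean_diff = 0.

    Assumes a standard normal reference distribution (an assumption,
    not something confirmed by the article).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    half_width = z_crit * std_err
    z_stat = mean_diff / std_err
    p_value = 2 * (1 - std_normal.cdf(abs(z_stat)))
    return mean_diff - half_width, mean_diff + half_width, p_value


# Horizontal vs. vertical plane row, values copied from the table.
lower, upper, p = wald_summary(25.103704, 101.6220857)
print(f"lower={lower:.3f}, upper={upper:.3f}, p={p:.3f}")
```

Applied to the horizontal-versus-vertical row, the sketch reproduces the tabulated limits and p value to within rounding, which supports (but does not prove) the normal-approximation reading of the table.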

| Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|
| Horizontal plane | Vertical plane | −13.6508 | 4.89353 | 0.005 | −23.2419 | −4.0596 |
| Horizontal plane | Mixed plane | −4.0873 | 4.89353 | 0.404 | −13.6785 | 5.5039 |
| Vertical plane | Mixed plane | 9.5635 | 4.89353 | 0.051 | −0.0277 | 19.1546 |

| Dimension | Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|---|
| Pragmatic | Horizontal plane | Vertical plane | 2.0111 | 0.33609 | <0.001 | 1.3524 | 2.6698 |
| Pragmatic | Horizontal plane | Mixed plane | 1.3833 | 0.33609 | <0.001 | 0.7246 | 2.0421 |
| Pragmatic | Vertical plane | Mixed plane | −0.6278 | 0.33609 | 0.062 | −1.2865 | 0.0309 |
| Pleasurable | Horizontal plane | Vertical plane | 0.5778 | 0.29844 | 0.053 | −0.0072 | 1.1627 |
| Pleasurable | Horizontal plane | Mixed plane | −0.5667 | 0.29844 | 0.058 | −1.1516 | 0.0183 |
| Pleasurable | Vertical plane | Mixed plane | −1.1444 | 0.29844 | <0.001 | −1.7294 | −0.5595 |
| Overall | Horizontal plane | Vertical plane | 1.29444 | 0.27507 | <0.001 | 0.75531 | 1.83358 |
| Overall | Horizontal plane | Mixed plane | 0.40833 | 0.27507 | 0.138 | −0.13080 | 0.94747 |
| Overall | Vertical plane | Mixed plane | −0.88611 | 0.27507 | 0.001 | −1.42525 | −0.34698 |
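With three conditions, the pairwise mean differences are not independent: if each value is the first condition's mean minus the second's, then (horizontal minus vertical) plus (vertical minus mixed) should equal (horizontal minus mixed). The sketch below checks the table above against that identity. The sign convention is an assumption, and the helper name `closure_gap` is hypothetical.

```python
# Pairwise mean differences copied from the table above, per dimension, as
# (horizontal - vertical, horizontal - mixed, vertical - mixed).
# Assumption: each value is "first condition minus second condition".
rows = {
    "pragmatic": (2.0111, 1.3833, -0.6278),
    "pleasurable": (0.5778, -0.5667, -1.1444),
    "overall": (1.29444, 0.40833, -0.88611),
}


def closure_gap(h_v, h_m, v_m):
    """Return (H-V) + (V-M) - (H-M); near zero when the rows are consistent."""
    return h_v + v_m - h_m


gaps = {name: closure_gap(*diffs) for name, diffs in rows.items()}
for name, gap in gaps.items():
    print(f"{name}: gap = {gap:+.4f}")
```

A gap at the level of the last printed decimal is just rounding; a larger gap would suggest a transcription error in one of the rows.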

| Spatial Position | Pedestrian Collision Scenario | Front-Vehicle Collision Scenario |
|---|---|---|
| Horizontal planar position |  |  |
| Vertical Planar Display |  |  |
| Mixed Planar Display |  |  |

| Scenario | Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|---|
| Pedestrian collision | Horizontal plane | Vertical plane | −39.9333 | 57.33557 | 0.486 | −152.3090 | 72.4423 |
| Pedestrian collision | Horizontal plane | Mixed plane | 30.8667 | 64.42707 | 0.632 | −95.4081 | 157.1414 |
| Pedestrian collision | Vertical plane | Mixed plane | 70.8000 | 55.14147 | 0.199 | −37.2753 | 178.8753 |
| Front-vehicle collision | Horizontal plane | Vertical plane | −49.5333 | 43.85844 | 0.259 | −135.4943 | 36.4276 |
| Front-vehicle collision | Horizontal plane | Mixed plane | 74.6963 | 35.72782 | 0.037 | 4.6710 | 144.7215 |
| Front-vehicle collision | Vertical plane | Mixed plane | 124.2296 | 36.65850 | 0.001 | 52.3803 | 196.0790 |

| Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|
| Horizontal plane | Vertical plane | 13.5370 | 4.37693 | 0.002 | 4.9584 | 22.1157 |
| Horizontal plane | Mixed plane | 4.1667 | 4.37693 | 0.341 | −4.4120 | 12.7453 |
| Vertical plane | Mixed plane | −9.3704 | 4.37693 | 0.032 | −17.9490 | −0.7918 |

| Dimension | Spatial Position | Compared With | Mean Difference | Standard Error | p | 95% Wald CI Lower Limit | 95% Wald CI Upper Limit |
|---|---|---|---|---|---|---|---|
| Pragmatic | Horizontal plane | Vertical plane | −1.2944 | 0.28526 | <0.001 | −1.8536 | −0.7353 |
| Pragmatic | Horizontal plane | Mixed plane | 0.3222 | 0.35422 | 0.363 | −0.3720 | 1.0165 |
| Pragmatic | Vertical plane | Mixed plane | 1.6167 | 0.38575 | <0.001 | 0.8606 | 2.3727 |
| Pleasurable | Horizontal plane | Vertical plane | −0.5889 | 0.25278 | 0.020 | −1.0843 | −0.0934 |
| Pleasurable | Horizontal plane | Mixed plane | −0.9389 | 0.28188 | 0.001 | −1.4914 | −0.3864 |
| Pleasurable | Vertical plane | Mixed plane | −0.3500 | 0.26334 | 0.184 | −0.8661 | 0.1661 |
| Overall | Horizontal plane | Vertical plane | −0.9417 | 0.23239 | <0.001 | −1.3971 | −0.4862 |
| Overall | Horizontal plane | Mixed plane | −0.3083 | 0.28020 | 0.271 | −0.8575 | 0.2408 |
| Overall | Vertical plane | Mixed plane | 0.6333 | 0.29174 | 0.030 | 0.0615 | 1.2051 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ye, M.; Yin, J. Spatial Plane Positioning of AR-HUD Graphics: Implications for Driver Inattentional Blindness in Navigation and Collision Warning Scenarios. Electronics 2025, 14, 4768. https://doi.org/10.3390/electronics14234768