1. Introduction

Large-scale disasters, such as data center fires or power outages, pose a serious threat to IT service continuity. To ensure service availability, most systems are designed to prevent service interruptions in the event of failures through hardware (HW) and software (SW) redundancy. However, redundancy concentrated in a single data center is ineffective in the event of a site-level disaster, such as a wide-area power outage or fire. In such situations, a truly functional disaster recovery (DR) system is essential to ensure the continuity of core services.

To ensure business continuity, organizations obtain and maintain international standard certifications from the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), such as ISO 22301 and ISO/IEC 27001 [1,2]. These standards define structured procedures for establishing and validating business continuity plans (BCPs) and DR plans, and mandate periodic reviews and updates to enhance organizational resilience.

However, recent data center incidents have revealed issues such as delayed recovery times and prolonged service outages, even in organizations that maintain standard certifications. This indicates not a flaw in the standards themselves, but rather the absence of mechanisms for quantitatively validating resilience performance. In other words, document-based audits and procedure-oriented inspections alone are insufficient to objectively assess resilience capabilities under real-world failure conditions.

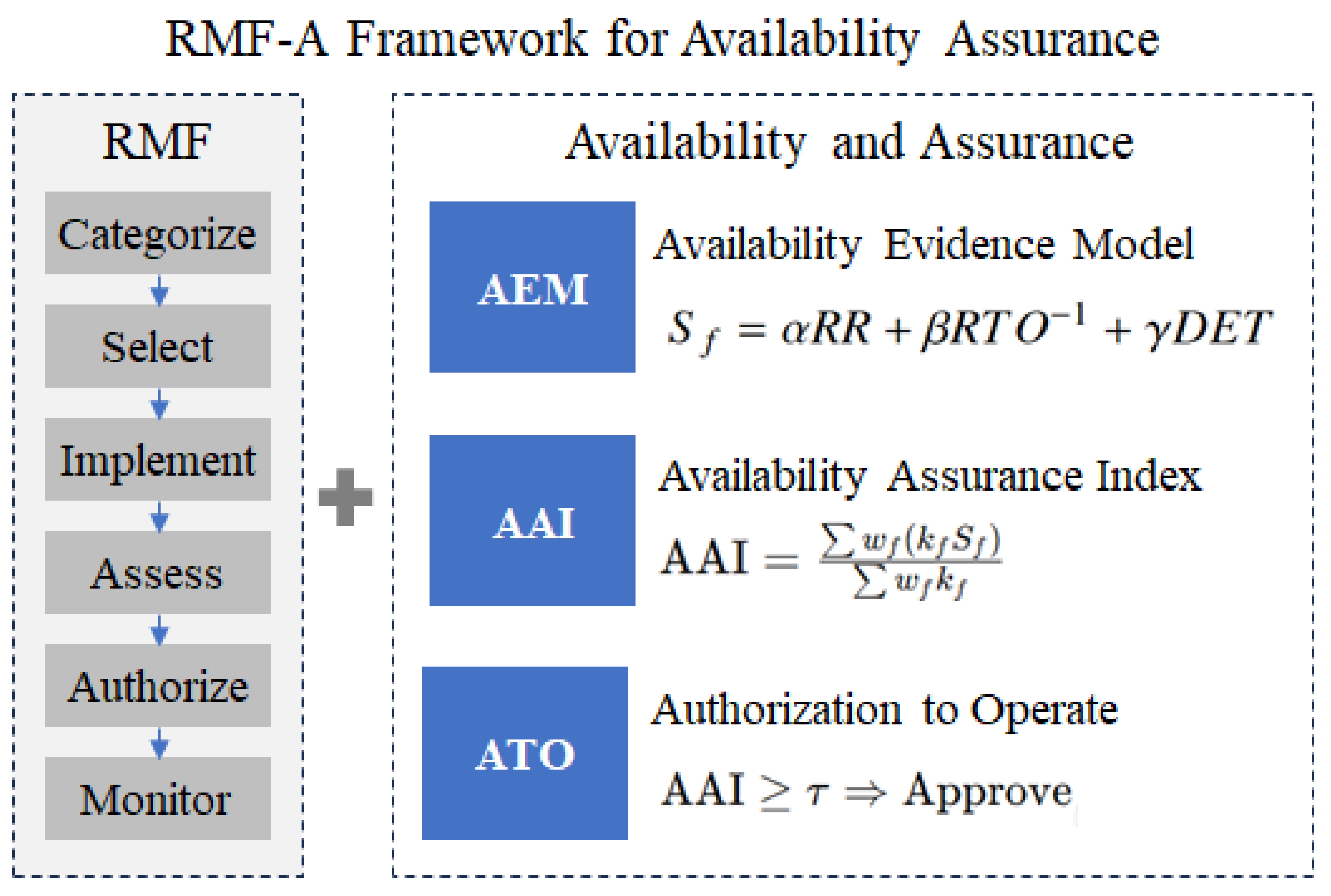

To address these limitations, this study does not seek to replace existing ISO- and National Institute of Standards and Technology (NIST)-based management systems, but rather to enhance practical resilience verification by introducing quantitative availability indicators (KPIs). Accordingly, we propose the Availability Assurance Framework (RMF-A)—an extension of the NIST Risk Management Framework (RMF)—and define an Availability Assurance Index (AAI) which integrates the Recovery Rate (RR), Recovery Time Objective (RTO), and Detection Effectiveness (DET) as core resilience metrics.

RMF-A extends the Assess–Authorize–Monitor phase of the NIST RMF into an evidence-based structure, enabling quantitative assurance while preserving procedural alignment with ISO-based management systems. To this end, this study introduces a proactive resilience enhancement mechanism driven by data-oriented KPI management, moving beyond passive, compliance-focused approaches.

The main contributions of this study are as follows:

- Proposed the RMF-A, which enables quantitative resilience assessment using empirical data while maintaining the management procedures of ISO 22301 and ISO/IEC 27001.

- Defined the AAI that quantifies the level of resilience by integrating RR, RTO, and DET.

- Extended the Assess–Authorize–Monitor phase of the NIST RMF to implement a data-driven and proactive resilience management system.

2. Related Work

Most organizations demonstrate their resilience quality through compliance with international standards and regulatory frameworks such as those of ISO, NIST, and the EU. ISO 22301 defines the requirements for a Business Continuity Management System (BCMS), while ISO/IEC 27001 specifies an Information Security Management System (ISMS) [1,2]. However, these standards do not include procedures for verifying resilience performance using empirical operational data, such as logs generated in real-world operations, recovery records, and monitoring output. ISO/IEC 27031 also provides general guidance for ensuring ICT resilience, but it does not link to a certification framework or include real-time assessment procedures [3].

NIST SP 800-37 Rev. 2 (RMF) verifies control effectiveness through the Assess–Authorize–Monitor process [4], and the Contingency Planning (CP), Incident Response (IR), and System and Communications Protection (SC) control families in SP 800-53 specifically define recovery and detection functions [5]. This approach provides a foundation for extending ISO’s procedure-oriented management systems into a structure that enables quantitative verification.

The EU mandates operational resilience testing and evidence-based assessments for cloud service providers and financial institutions through the Network and Information Security Directive 2 (NIS2) and the Digital Operational Resilience Act (DORA) [6,7].

Previous studies have primarily focused on the linkage between BCM maturity assessment based on ISO 22301 and risk management frameworks [8,9,10,11]. Russo et al. [8] and Khaghani and Jazizadeh [9] presented the maturity and metricization of ISO management processes, while Cheng et al. [10] and Almaleh [11] discussed quantitative resilience models utilizing RTO, RR, and DET. However, these studies remained centered on individual indicators and provided only limited structures for integrated evaluation of resilience metrics. The RMF-A proposed in this study addresses this gap by combining the procedural management framework of ISO with the evidence-based assessment approach of the NIST RMF, and by quantifying the level of resilience through the AAI.

3. Methodology

The RMF-A extends the six-step process of the NIST RMF by adding an Availability Assurance phase, thereby expanding ISO’s managerial controls into an evidence-based verification structure.

3.1. RMF-A Structure

The RMF-A is based on the procedural structure of the NIST RMF. Figure 1 shows this structure, which is enhanced by an Availability Assurance layer. This layer is composed of the Availability Evidence Model (AEM), the Availability Assurance Index (AAI), and the Authorization to Operate (ATO) modules. The model maintains the six-step RMF process (Categorize–Select–Implement–Assess–Authorize–Monitor) while utilizing empirical operational data to quantify the availability-focused AAI and determine the ATO decision.

- Categorize: Define system criticality and set the availability threshold.

- Select: Map ISO 22301 and ISO/IEC 27001 controls to the NIST SP 800-53 control families.

- Implement: Apply selected controls and collect operational data.

- Assess: Quantitatively evaluate control effectiveness using RR, RTO, and DET.

- Authorize: Decide the ATO based on the assessment results.

- Monitor: Continuously monitor and re-assess upon anomaly detection.

- Availability Assurance: Integrate all phases to form the AEM and compute the AAI.

3.2. AEM in RMF-A

The AEM provides a structure for mapping ISO and NIST control items, collecting evidence for each control, and quantitatively verifying their effectiveness. For each control $i$, the process of “Control–Evidence–Verification–Evaluation” is performed, and indicator values are measured from operational data such as recovery logs, backup success rates, and detection success rates. The control score takes the following form:

$$S_i = w_{RR}\,\min\!\left(1, \frac{RR_i}{RR^{*}}\right) + w_{RTO}\,\min\!\left(1, \frac{RTO^{*}}{\max(RTO_i, \varepsilon)}\right) + w_{DET}\,\min\!\left(1, \frac{DET_i}{DET^{*}}\right) \tag{1}$$

Here, $S_i$ denotes the evaluation score of control $i$, and $RR_i$, $RTO_i$, and $DET_i$ represent the corresponding metrics, with $RR^{*}$, $RTO^{*}$, and $DET^{*}$ denoting their thresholds. The function min() constrains normalized values not to exceed 1. When $RTO_i = 0$, a small lower bound $\varepsilon$ is applied to the denominator to avoid division by zero, which saturates the term at 1 and represents an ideal (instant recovery) condition. The indicator weights $(w_{RR}, w_{RTO}, w_{DET})$ are set by assigning a higher weight to RTO, reflecting its central role in business continuity management (BCM) and availability evaluation [7,12,13]. This ratio also aligns with the weighting structures proposed in previous resilience models [8,9,10,11], where RR and DET are equally weighted to represent their balanced importance in resilience management.
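As a minimal sketch of how such a control score could be computed, the Python function below combines three capped, normalized indicators into a weighted sum. The function name, the weight values (0.3, 0.4, 0.3), and the target values are illustrative assumptions chosen only to respect the constraints stated above (RTO weighted highest, RR and DET weighted equally); they are not the settings used in this study.

```python
def control_score(rr, rto_min, det,
                  rr_target=1.0, rto_target_min=60.0, det_target=1.0,
                  weights=(0.3, 0.4, 0.3), eps=1e-9):
    """Weighted sum of capped, normalized RR, RTO, and DET terms.

    All default values are illustrative assumptions, not the study's settings.
    """
    w_rr, w_rto, w_det = weights
    # Each normalized term is capped at 1 so over-performance cannot inflate the score.
    rr_term = min(1.0, rr / rr_target)
    # Lower-bound the denominator so that RTO = 0 saturates the term at 1.
    rto_term = min(1.0, rto_target_min / max(rto_min, eps))
    det_term = min(1.0, det / det_target)
    return w_rr * rr_term + w_rto * rto_term + w_det * det_term


# Hypothetical inputs: instant recovery, and a recovery twice as slow as the target.
ideal = control_score(rr=1.0, rto_min=0.0, det=1.0)
degraded = control_score(rr=0.5, rto_min=120.0, det=0.5)
```

With these assumed defaults, an instantly recovering control saturates every term, while halving RR and DET and doubling the recovery time halves each term.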

3.3. AAI in RMF-A

The control-family scores derived in the AEM stage are combined with the relative importance of each control family and with environmental deviations to quantify the organization's overall level of availability assurance. The result of this process is the AAI, which represents the comprehensive assurance level.

3.3.1. Baseline Weight Configuration

The baseline weights $w_k$ were determined with reference to the relative importance of the resilience-related control families (CP, IR, and SC) defined in ISO 22301 Clause 8 on business continuity and NIST SP 800-53 Rev. 5. A weight of 0.35 was assigned to CP, 0.25 to IR, 0.20 to SC, and 0.20 to other control families. This configuration reflects that the CP category accounts for approximately one-third of all operational controls in ISO 22313 Annex C, and that in the NIST RMF, the CP family is classified as the highest-priority element for ensuring availability [5,12].

3.3.2. Application of Tuning Coefficients

To reflect the characteristics of different operational environments, a tuning coefficient $\phi_k$ was introduced for each control family $k$. The coefficient $\phi_k$ represents the relative deviation between the observed and expected values in the baseline environment, and the coefficients are normalized so that their mean equals 1. That is,

$$\frac{1}{K}\sum_{k=1}^{K}\phi_k = 1, \tag{2}$$

where $K$ is the number of control families. When the performance of a control family exceeds the baseline level ($\phi_k > 1$), the other control families are slightly adjusted to maintain an average value of 1, thereby ensuring the stability of the overall assurance scale.

The tuning coefficient is applied as a weight to each control-family score $S_k$ to yield an adjusted score $\tilde{S}_k = \phi_k S_k$, which is then used as an input in the subsequent AAI computation process.
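The mean-one normalization described above can be sketched in a few lines of Python. The function name and the raw deviation values are hypothetical and serve only to illustrate how raising one coefficient lowers the others after rescaling.

```python
def normalize_coefficients(raw):
    """Rescale positive tuning coefficients so that their mean equals 1."""
    mean = sum(raw.values()) / len(raw)
    return {family: value / mean for family, value in raw.items()}


# Hypothetical raw deviations: CP performs above its baseline, the rest match it.
raw = {"CP": 1.3, "IR": 1.0, "SC": 1.0, "Other": 1.0}
phi = normalize_coefficients(raw)
mean_phi = sum(phi.values()) / len(phi)
```

After normalization, the CP coefficient stays above 1 while the other three fall slightly below 1, so the average remains 1.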

3.3.3. AAI Calculation Formula

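The AAI reflecting the tuning coefficients is defined as follows:

$$\mathrm{AAI} = \frac{\sum_{k} w_k\,\phi_k\,S_k}{\sum_{k} w_k\,\phi_k}, \tag{3}$$

where $S_k$ is the score of control family $k$, $w_k$ is its baseline weight, and $\phi_k$ is its tuning coefficient. The denominator serves as a normalization term, ensuring that the sum of the effective weights remains equal to 1 even after applying the tuning coefficients.

In implementation, the following equivalent expression can be used:

$$\mathrm{AAI} = \frac{1}{Z}\sum_{k} (w_k\,\phi_k)\,S_k, \qquad Z = \sum_{k} w_k\,\phi_k \tag{4}$$

That is, in the actual validation code, each $S_k$ is multiplied by its corresponding $w_k\,\phi_k$ and then divided by the normalization term $Z$, which is mathematically equivalent to the theoretical Formula (3).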
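To make the role of the normalization term concrete, the short derivation below shows that the effective weights sum to 1. The notation is assumed here for illustration: $w_k$ for the baseline weights, $\phi_k$ for the tuning coefficients, $S_k$ for the control-family scores, and $\tilde{w}_k$ for the effective weights.

```latex
\tilde{w}_k = \frac{w_k\,\phi_k}{Z}, \qquad Z = \sum_{j} w_j\,\phi_j
\quad\Longrightarrow\quad
\sum_{k} \tilde{w}_k = \frac{1}{Z}\sum_{k} w_k\,\phi_k = 1,
\qquad
\mathrm{AAI} = \sum_{k} \tilde{w}_k\,S_k .
```

Under this reading, the AAI is a convex combination of the control-family scores, so it stays within the range spanned by the smallest and largest $S_k$.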

3.3.4. ATO Decision Criteria

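The final AAI value is evaluated against the thresholds $\theta_{high} = 0.85$ and $\theta_{low} = 0.70$, and the ATO result is classified as follows:

- $\mathrm{AAI} \geq \theta_{high}$: High Assurance (ATO-Approve)

- $\theta_{low} \leq \mathrm{AAI} < \theta_{high}$: Conditional (ATO-Conditional)

- $\mathrm{AAI} < \theta_{low}$: Low Assurance (ATO-Deny)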

The ATO thresholds are determined based on the practical attainability associated with system operation tiers and the compliance level with industry-standard RTO.

In the industry, systems are generally classified by criticality into three tiers: Tier 1 (Mission-Critical), Tier 2 (High-Priority), and Tier 3 (Moderate-Priority). Tier 1 systems require recovery within seconds to minutes due to potential financial, regulatory, or safety impacts, whereas Tier 2 systems target recovery within four hours (240 min).

This study focuses on Tier 2 systems, with a baseline RTO of 60 min. Validation results showed that even when recovery time doubled relative to this baseline, the AAI remained around 0.85, which was adopted as the high-assurance threshold. When recovery was delayed further and both RR and DET declined to roughly 0.8, the AAI dropped to 0.70, corresponding to the conditional range in which business continuity is only partially maintained. This indicates that organizations must strengthen short-term recovery capability through improved procedures and resource allocation. Accordingly, the values 0.85 and 0.70 represent quantitative thresholds reflecting the practical recovery tolerance of Tier 2 systems relative to the 60 min baseline, consistent with industry practice and standard recovery metrics (≤4 h).

3.4. RMF-A Integration Procedure

The RMF-A integrates the AEM and AAI stages into a systematic process for quantifying an organization’s level of availability assurance. The overall procedure is summarized in four steps as follows:

Control Mapping: Link ISO/IEC 27001 and NIST SP 800-53 controls to identify availability-related families (CP, IR, SC).

Evidence Collection: Gather logs, backup/recovery tests, and incident records.

Quantitative Assessment: Compute control scores and aggregate them with weights to obtain the AAI.

Assurance Integration: Compare AAI with thresholds to determine the ATO level.

Through this process, the traditional document-based ISO auditing procedures can be transformed into a quantitative and automated assurance process, ensuring both consistency and reproducibility of the assurance outcomes.

The algorithm of the RMF-A Framework is described in Algorithm 1.

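Algorithm 1 RMF-A: AAI computation and authorization decision

Require: Control scores $S_k$, Base weights $w_k$, Coefficients $\phi_k$

Require: Assurance thresholds $\theta_{high}$, $\theta_{low}$

Ensure: AAI and authorization outcome

1: $Z \leftarrow \sum_{k} w_k\,\phi_k$

2: $\mathrm{AAI} \leftarrow \frac{1}{Z}\sum_{k} w_k\,\phi_k\,S_k$

3: if $\mathrm{AAI} \geq \theta_{high}$ then

4: Outcome ← ATO-Approve

5: else if $\mathrm{AAI} \geq \theta_{low}$ then

6: Outcome ← ATO-Conditional

7: else

8: Outcome ← ATO-Deny

9: end if

10: return (AAI, Outcome)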
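The steps of Algorithm 1 can be sketched in Python as follows. This is an illustrative rendering rather than the validation code used in this study. The function name and dictionary layout are assumptions, the thresholds 0.85 and 0.70 are the values discussed in Section 3.3.4, and treating both comparisons as inclusive is also an assumption.

```python
def rmf_a_decision(scores, weights, coeffs, theta_high=0.85, theta_low=0.70):
    """Return (AAI, outcome) from per-family scores, base weights, and tuning coefficients."""
    # Normalization term Z keeps the effective weights summing to 1.
    z = sum(weights[k] * coeffs[k] for k in scores)
    aai = sum(weights[k] * coeffs[k] * scores[k] for k in scores) / z
    if aai >= theta_high:
        outcome = "ATO-Approve"
    elif aai >= theta_low:
        outcome = "ATO-Conditional"
    else:
        outcome = "ATO-Deny"
    return aai, outcome


weights = {"CP": 0.35, "IR": 0.25, "SC": 0.20, "Other": 0.20}  # baseline weights, Section 3.3.1
coeffs = {k: 1.0 for k in weights}    # neutral tuning coefficients (assumed)
scores = {k: 0.744 for k in weights}  # hypothetical uniform family score
aai, outcome = rmf_a_decision(scores, weights, coeffs)
```

With a uniform score of 0.744 the function returns an AAI of about 0.744 and the outcome ATO-Conditional, since the value lies between the two thresholds.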

4. Validation and Evaluation

4.1. Trace-Driven Validation Environment

To verify the effectiveness of the RMF-A, a validation environment was constructed that reflects the statistical characteristics of publicly available datasets. Based on representative samples extracted from each dataset, the failure occurrence and recovery processes were modeled.

4.1.1. Validation Design

Three public datasets—Google Cluster Trace, Azure Cloud Trace, and LANL HPC logs—were analyzed in a Python 3.11 environment using pandas and numpy. The datasets included approximately 99k, 91k, and 2k failure–recovery events, yielding RR–RTO–DET–AAI results of (17.3%, 0 min, 0.988, 0.758), (78.7%, 14.5 min, 0.227, 0.720), and (19.6%, 32.4 min, 0.94, 0.744), respectively. This setup verified the consistency of the RMF-A model across heterogeneous infrastructures.
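For readers who want to reproduce this kind of extraction, the sketch below shows one way RR, mean recovery time, and DET could be derived from failure-recovery events. It is illustrative only: the event schema (`failed_at`, `detected_at`, `recovered_at`) and the metric definitions (share of failures with an observed recovery, mean recovery duration, share of failures with an observed detection) are assumptions and not the procedure used in this study.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class FailureEvent:
    failed_at: float               # minutes since trace start
    detected_at: Optional[float]   # None if the failure was never detected
    recovered_at: Optional[float]  # None if no recovery was observed


def resilience_metrics(events):
    """Compute RR, mean recovery time (minutes), and DET for a non-empty event list."""
    recovered = [e for e in events if e.recovered_at is not None]
    detected = [e for e in events if e.detected_at is not None]
    durations = [e.recovered_at - e.failed_at for e in recovered]
    return {
        "RR": len(recovered) / len(events),
        "RTO_min": mean(durations) if durations else float("nan"),
        "DET": len(detected) / len(events),
    }


# Hypothetical events, not drawn from any of the three datasets.
events = [
    FailureEvent(0.0, 1.0, 5.0),
    FailureEvent(10.0, None, None),
    FailureEvent(20.0, 21.0, 50.0),
    FailureEvent(30.0, 32.0, None),
]
metrics = resilience_metrics(events)
```

For these four hypothetical events, two are recovered (after 5 and 30 min) and three are detected, giving RR = 0.5, a mean recovery time of 17.5 min, and DET = 0.75.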

4.1.2. Validation Parameters and Tuning Coefficients

Table 1 summarizes the main parameters applied to each dataset.

Since the dataset specifications did not include detailed environmental attributes or risk profiles, empirical assignment of the control-family tuning coefficients was infeasible, and all coefficients were fixed at 1 to eliminate environmental bias. Accordingly, the primary focus of the validation shifted to verifying the structural consistency and computational stability of the RMF-A model rather than environment-specific tuning. Sensitivity analysis confirmed that varying the coefficients changed the AAI by less than 0.03, indicating model stability.

4.1.3. AAI Calculation Methodology

The control scores $S_k$ were calculated using the metrics (RR, RTO, DET) extracted from each dataset, with the RTO threshold set to 60 min. Based on these scores, the results for each control family, namely CP (Contingency Planning), IR (Incident Response), SC (System and Communications Protection), and Other, were derived.

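The final AAI was computed by applying the control-family weights $w_{CP} = 0.35$, $w_{IR} = 0.25$, $w_{SC} = 0.20$, and $w_{Other} = 0.20$ as follows:

$$\mathrm{AAI} = 0.35\,S_{CP} + 0.25\,S_{IR} + 0.20\,S_{SC} + 0.20\,S_{Other}$$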

4.1.4. Validation and Adjustment

To verify the reliability and robustness of the results, the following procedures were performed:

- Statistical Validation: For the Google and LANL datasets, 95% confidence intervals were computed using 30 bootstrap iterations, and the standard deviation of AAI was found to be less than 0.01.

- Cross-Validation: The RTO, RR, and DET results from the Azure dataset were compared with recovery logs from public cloud services, confirming their consistency.

- Sensitivity Analysis: When the weights were varied around their baseline values, the maximum fluctuation of AAI remained within 0.03, demonstrating the stability of the model.
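The bootstrap step can be illustrated with a short, standard-library Python sketch. The exact bootstrap variant is not stated here, so the percentile method, the function name, the fixed seed, and the synthetic input are all assumptions; only the 30 iterations and the 95% level follow the text.

```python
import random
from statistics import mean, stdev


def bootstrap_ci(values, stat=mean, n_iter=30, alpha=0.05, seed=0):
    """Percentile bootstrap: resample with replacement, then take empirical quantiles."""
    rng = random.Random(seed)
    estimates = sorted(
        stat(rng.choices(values, k=len(values))) for _ in range(n_iter)
    )
    lo_idx = int((alpha / 2) * (n_iter - 1))
    hi_idx = int(round((1 - alpha / 2) * (n_iter - 1)))
    return estimates[lo_idx], estimates[hi_idx], stdev(estimates)


# Synthetic recovery outcomes (1 = recovered); not data from the study.
outcomes = [1] * 17 + [0] * 83
ci_low, ci_high, spread = bootstrap_ci(outcomes)
```

With only 30 resamples the interval endpoints are coarse; the same function can be called with a larger `n_iter` when tighter quantile estimates are needed.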

4.1.5. Validation Environment Configuration

The validation environment was configured as shown in Table 2.

4.2. Results

The results of applying the RMF-A to each dataset are summarized in Table 3.

4.2.1. Result Analysis

The Google Cluster Trace dataset is based on instance scheduling and event logs from a large-scale cluster environment, where the linkage between failure (Fail) and recovery (Recovery) events is limited due to sampling constraints. As a result, RR appeared relatively low, while most recovery processes were completed within seconds, yielding a high RTO-related score and high DET values. These results indicate that the RMF-A’s recovery-related metrics (RR, RTO, DET) act complementarily, suggesting that automated scheduling environments strongly contribute to AAI improvement through immediate recovery characteristics.

The Azure Cloud Trace dataset records the allocation and reassignment of virtual machines, focusing primarily on resource relocation rather than explicit failure detection. In this dataset, DET appeared low, whereas RR and RTO showed relatively strong performance, resulting in an AAI of 0.720 (ATO-Conditional). This demonstrates that among the RMF-A metrics (RR, RTO, DET), recovery-related indicators contribute more significantly to the AAI, implying that even in environments with limited detection visibility, consistent service continuity and resource recovery can sustain acceptable AAI levels.

The LANL HPC dataset represents operational logs from a large-scale HPC environment, where recovery operations rely more on manual restarts and job resubmissions than automated failover mechanisms. Consequently, RR was low, but recovery times were explicitly recorded, resulting in a stable average RTO of 32.4 min and a high DET value of 0.94. These findings indicate that DET and RTO positively influence AAI within RMF-A, and that, even in HPC environments, established failure detection and response procedures can achieve conditional approval (AAI = 0.744) despite limited recovery rates.

In this validation, individual datasets for each control family (CP, IR, SC, Other) were not constructed. Instead, the same event logs from Google, Azure, and LANL were applied uniformly because the public datasets do not distinctly separate logs by control family (e.g., recovery, detection, or protection procedures). Therefore, identical input metrics (RR, RTO, DET) were used across all control families to verify the structural consistency and computational stability of RMF-A:

$$S_{CP} = S_{IR} = S_{SC} = S_{Other}$$

In practical implementations, however, validation using control-family-specific datasets will be necessary.
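A brief consequence of using identical inputs is worth spelling out. Assuming the AAI is the normalized weighted average described in Section 3.3.3, and writing $S$ for the common control-family score, $w_k$ for the baseline weights, and $\phi_k$ for the tuning coefficients (notation assumed here), the family weights cancel:

```latex
S_{CP} = S_{IR} = S_{SC} = S_{Other} = S
\quad\Longrightarrow\quad
\mathrm{AAI} = \frac{\sum_{k} w_k\,\phi_k\,S}{\sum_{k} w_k\,\phi_k} = S .
```

Under this assumption, the AAI reported for each dataset in this validation coincides with its single control score, which is why family-specific datasets are needed to exercise the control-family weights.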

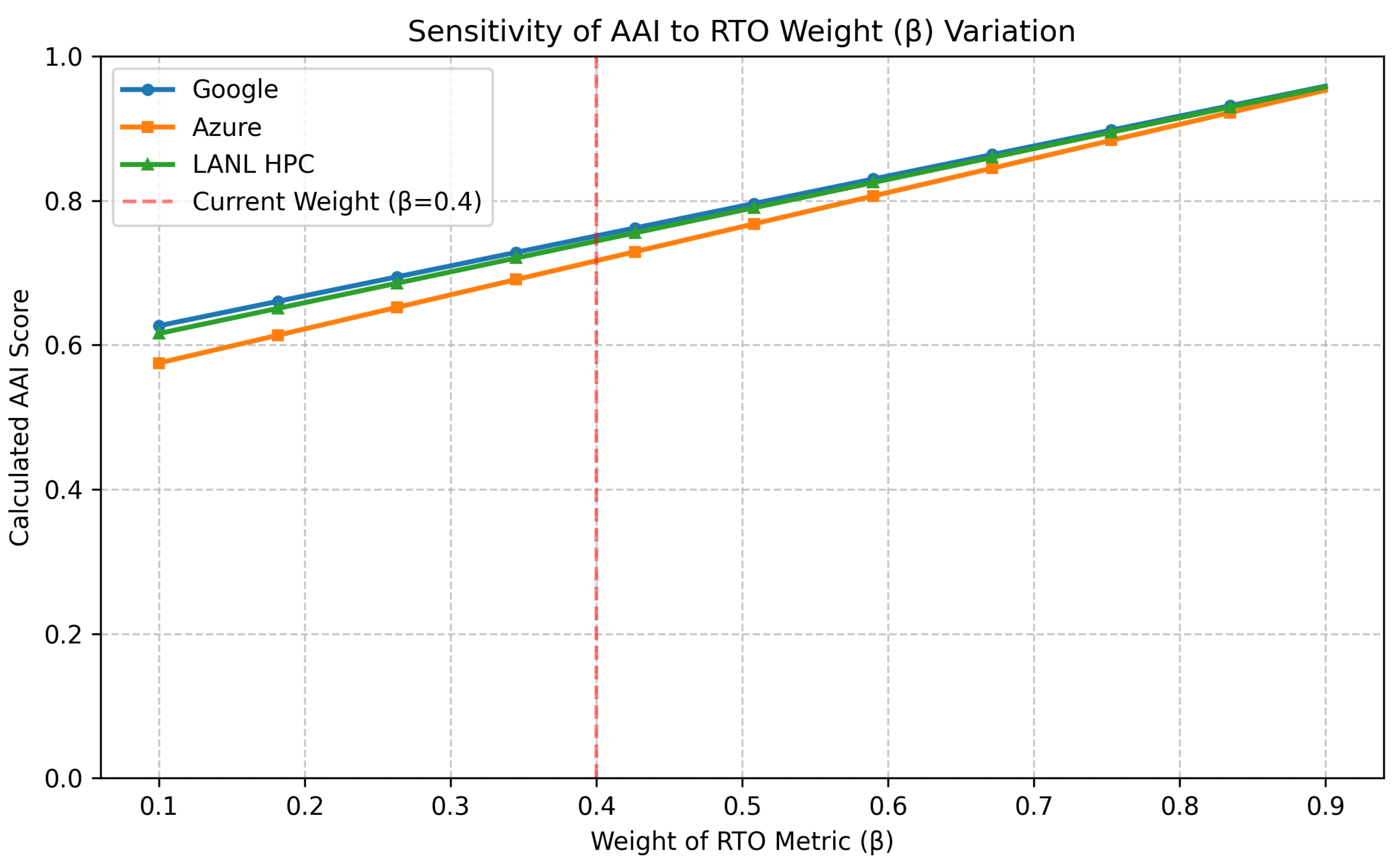

4.2.2. Correlation of Metrics and Model Validity Verification

To verify the stability of the RMF-A model against parameter variations, we analyzed the sensitivity of the AAI to changes in the most critical weighting factor, the RTO weight ($w_{RTO}$). Figure 2 illustrates the fluctuation of AAI scores for the Google, Azure, and LANL datasets when $w_{RTO}$ is adjusted from 0.1 to 0.9. The analysis shows that varying this primary weight across the full range (0.1 to 0.9) results in a consistent linear response in AAI scores. This observation supports the model’s validity in two respects:

- Linear Stability: Unlike unstable models that might show erratic fluctuations or sudden jumps, the RMF-A model shows a smooth, linear progression even under extreme weight variations. This indicates that the model is mathematically stable and predictable.

- Reflection of Performance: The upward trend in AAI as $w_{RTO}$ increases correctly reflects the high RTO performance of the datasets (mostly under 60 min). This confirms that the AAI is primarily driven by intrinsic metric performance rather than being arbitrarily skewed by weights.

Therefore, the weights act as transparent scaling factors to reflect policy priorities (e.g., emphasizing time vs. reliability) without distorting the fundamental reliability of the evaluation. This experimental evidence supports the use of the proposed baseline weights as a balanced standard.
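The linear response can be reproduced with a small weight sweep. The sketch below assumes that RR and DET share the remaining weight equally as the RTO weight changes, and it uses hypothetical normalized indicator terms rather than values from the three datasets.

```python
def score(rr_term, rto_term, det_term, w_rto):
    """Weighted score with RR and DET sharing the remaining weight equally (assumption)."""
    w_other = (1.0 - w_rto) / 2.0
    return w_other * rr_term + w_rto * rto_term + w_other * det_term


# Hypothetical normalized terms in [0, 1]; not values from the datasets.
rr_term, rto_term, det_term = 0.2, 1.0, 0.9
sweep = [(round(0.1 * i, 1), score(rr_term, rto_term, det_term, 0.1 * i))
         for i in range(1, 10)]
# Differences between consecutive sweep points; constant steps indicate linearity.
steps = [b[1] - a[1] for a, b in zip(sweep, sweep[1:])]
```

Under this assumption each 0.1 increase in the RTO weight changes the score by the same amount, 0.1 × (rto_term − (rr_term + det_term) / 2). Linearity therefore follows from the weighted-sum form itself, and the trend is upward only when the RTO term exceeds the average of the other two terms.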

4.3. Comparison with Existing Resilience Metrics

To demonstrate the superiority and distinctiveness of the proposed RMF-A framework, we performed a qualitative comparison with existing resilience metrics widely used in the industry and academia. As summarized in Table 4, traditional metrics such as MTTR/MTBF focus solely on the time dimension, failing to capture the quality of recovery. Similarly, while Site Reliability Engineering (SRE) metrics (SLI/SLO) effectively monitor service availability, they often lack direct linkage to management control families (e.g., ISO 22301). Previous academic resilience indices [10,11] have attempted to integrate recovery and detection metrics but typically do not support environment-specific tuning or alignment with standardized security controls. As shown in Table 4, RMF-A is the only framework that simultaneously satisfies all three critical requirements: (1) the integration of quantitative indicators (RR, RTO, DET), (2) the granularity of control families (CP, IR, SC) aligned with international standards, and (3) the capability for environmental tuning through the tuning coefficients ($\phi_k$). This comparison highlights that RMF-A not only quantifies technical resilience but also bridges the gap between managerial certification and operational reality, offering a more comprehensive assurance structure than existing alternatives.

4.4. Limitations and Future Work

Although RMF-A was validated on three large-scale public datasets, the following limitations stem from data availability and scope:

- Dataset-specific recovery pairing: Google and LANL HPC logs lack explicit failure-recovery linkages, potentially underestimating RR (0.173 and 0.196). Azure’s higher RR (0.787) may reflect more complete event tracing.

- Absence of control-family granularity: Public datasets do not provide logs segmented by NIST control families (e.g., CP, IR, SC). Future enterprise deployments should collect family-specific failure data to compute granular scores.

- Static weighting (tuning coefficients $\phi_k$ fixed at 1): Without operational metadata (e.g., system criticality, risk appetite), adaptive tuning of $\phi_k$ was not applied. Real-world systems require dynamic weighting based on business impact.

These constraints are inherent to public data and do not invalidate RMF-A’s core model. To address them, future work will focus on enterprise legacy system validation:

Deploy RMF-A in production environments with legacy infrastructure (manufacturing MES) to collect control-family-specific logs and validate differentiation.

Implement machine learning-based tuning using historical incident severity and recovery outcomes.

Develop a real-time AAI monitoring dashboard with API integration for continuous NIST RMF compliance and automated ATO decisions.

Conduct a comparative study aligning RMF-A outcomes with ISO 22301 audit results to quantify the certification reality gap in operational contexts.

These enhancements will transform RMF-A into a deployable, adaptive framework bridging ISO procedures with NIST’s evidence-driven assurance—particularly for modernizing legacy enterprise systems.

5. Conclusions

The ISO/IEC 27001 and ISO 22301 management systems are effective in systematically establishing organizational business continuity and information security procedures; however, they lack quantitative criteria for verifying resilience and availability in actual disaster situations. To address this limitation, this study proposed the RMF-A (Availability Assurance Framework), a quantitative assurance structure that extends the evaluation procedures of the NIST RMF and integrates ISO control items.

The empirical validity of the RMF-A was verified using three public datasets: Google Cluster Trace, Azure Cloud, and LANL HPC Failure Logs. Although all datasets yielded conditional ATO decisions, this outcome is attributed to the dataset characteristics, such as event linkage limitations and sampling constraints, rather than any overestimation or underestimation of the RMF-A evaluation model. Accordingly, the evaluation metrics proposed in this study reflect dataset biases while maintaining quantitative validity in resilience measurement.

These findings demonstrate that the RMF-A provides consistent assurance indicators across diverse operational environments and enables the establishment of a continuous availability evaluation framework grounded in quantitative evidence.

Future research will focus on validating the RMF-A using real-world operational logs and performance data, enhancing the precision of AAI calculations through AI-based weight adjustment and dynamic evaluation modeling. Furthermore, by developing a composite AAI–RI assurance model integrating resilience indicators, the framework will be advanced into a unified operational and certification-linked architecture that harmonizes ISO and NIST RMF systems.

Author Contributions

Conceptualization, Writing—Original draft, Methodology, Software, Visualization, Project administration, C.-H.M.; Funding acquisition, Supervision, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Technology Innovation Program (RS-2024-00443436) funded by the Ministry of Trade, Industry & Energy (MOTIE, Republic of Korea) and supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Republic of Korea government (MSIT) (No. RS-2024-00400302, Development of Cloud Deep Defense Security Framework Technology for a Safe Cloud Native Environment).

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

- ISO 22301; Security and Resilience—Business Continuity Management Systems—Requirements. ISO: Geneva, Switzerland, 2019.

- ISO/IEC 27001; Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. ISO: Geneva, Switzerland, 2022.

- ISO/IEC 27031; Cybersecurity—Information and Communication Technology Readiness for Business Continuity. ISO: Geneva, Switzerland, 2025.

- National Institute of Standards and Technology. Joint Task Force Transformation Initiative. In Risk Management Framework for Information Systems and Organizations; Technical report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018.

- Joint Task Force Interagency Working Group. Security and Privacy Controls for Information Systems and Organizations; Technical report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020.

- European Parliament and Council of the European Union. Directive (EU) 2022/2555 of the European Parliament and of the Council of 14 December 2022 on Measures for a High Common Level of Cybersecurity Across the Union, Amending Regulation (EU) No 910/2014 and Directive (EU) 2018/1972, and Repealing Directive (EU) 2016/1148 (NIS 2 Directive) (Text with EEA Relevance). Off. J. Eur. Union L 2022, 333, 80–152. Available online: http://data.europa.eu/eli/dir/2022/2555/2022-12-27 (accessed on 1 November 2025).

- European Parliament and Council of the European Union. Regulation (EU) 2022/2554 of the European Parliament and of the Council of 14 December 2022 on digital operational resilience for the financial sector and amending Regulations (EC) No 1060/2009, (EU) No 648/2012, (EU) No 600/2014, (EU) No 909/2014 and (EU) 2016/1011 (Text with EEA Relevance). Off. J. Eur. Union L 2022, 333, 1–79. Available online: https://eur-lex.europa.eu/eli/reg/2022/2554/oj (accessed on 1 November 2025).

- Russo, N.; Reis, L.; Silveira, C.; Mamede, H.S. Towards a comprehensive framework for the multidisciplinary evaluation of organizational maturity on business continuity program management: A systematic literature review. Inf. Secur. J. Glob. Perspect. 2023, 33, 54–72.

- Khaghani, F.; Jazizadeh, F. mD-Resilience: A Multi-Dimensional Approach for Resilience-Based Performance Assessment in Urban Transportation. Sustainability 2020, 12, 4879.

- Cheng, Y.; Elsayed, E.A.; Huang, Z. Systems resilience assessments: A review, framework and metrics. Int. J. Prod. Res. 2022, 60, 595–622.

- Almaleh, A. Measuring Resilience in Smart Infrastructures: A Comprehensive Review of Metrics and Methods. Appl. Sci. 2023, 13, 6452.

- ISO 22313; Security and Resilience—Business Continuity Management Systems—Guidance on the Use of ISO 22301. ISO: Geneva, Switzerland, 2020.

- Beyer, B.; Jones, C.; Petoff, J.; Murphy, N.R. Site Reliability Engineering: How Google Runs Production Systems; O’Reilly Media: Sebastopol, CA, USA, 2016.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).