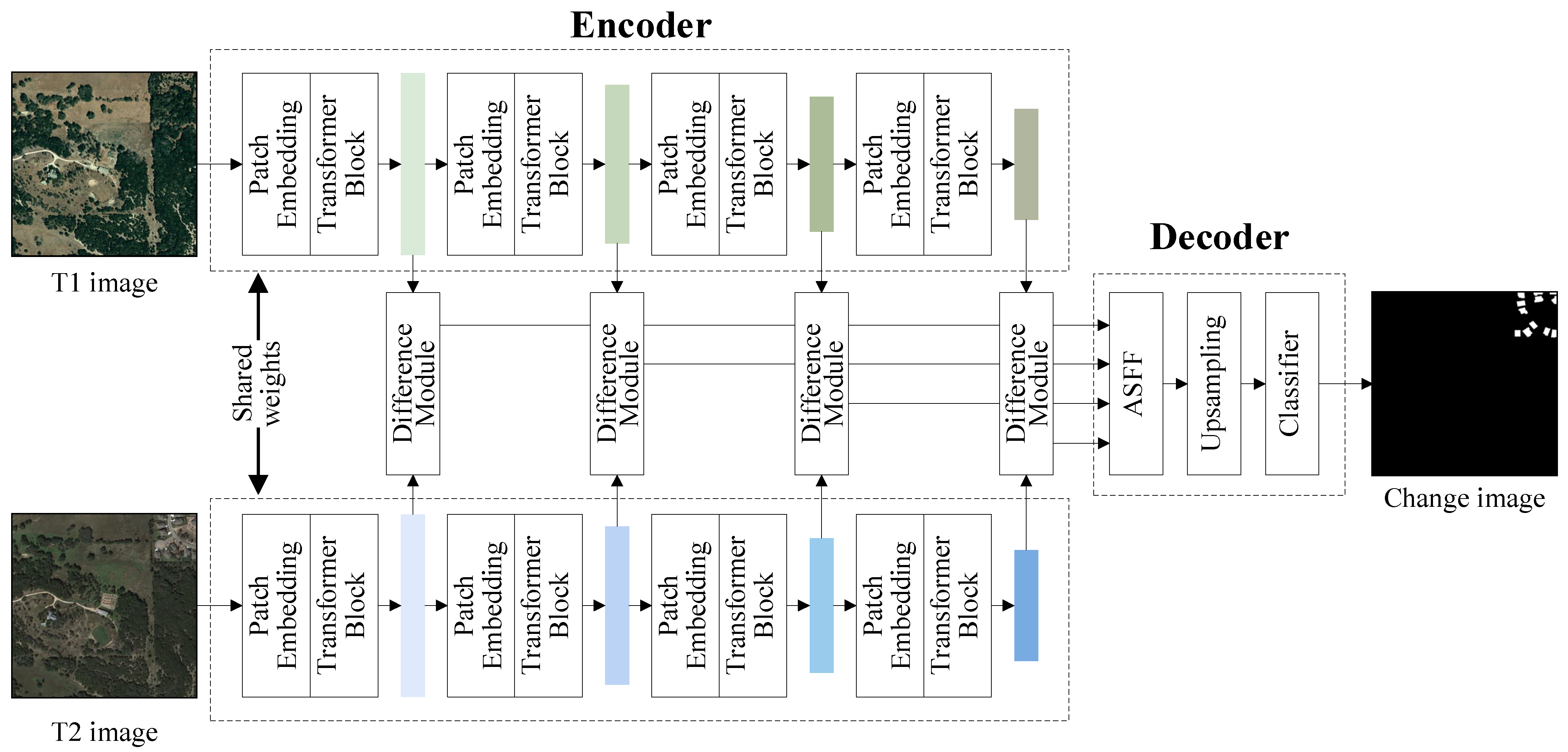

In this section, we present the experimental setup, along with the corresponding results and discussions. Specifically, we detail the datasets used and the implementation specifics. Subsequently, the performance of the MSTAN model is evaluated and compared with existing approaches to demonstrate its effectiveness and generalization. In addition, ablation experiments and a complexity analysis of the four-layer ASFF module were conducted to assess its contribution and its computational cost.

3.2. Comparative Experiments

To evaluate the performance of the proposed model in change detection, comprehensive comparative experiments were conducted on the LEVIR-CD and CLCD datasets. The method was compared with several approaches: FC-EF [7], FC-Siam-Di [7], FC-Siam-Conc [7], DTCDSCN [20], BIT [21], and RDP [22]. Below is a brief introduction to these models:

FC-EF: employs a Fully ConvNet to process concatenated bitemporal images for change detection.

FC-Siam-Di: utilizes a Siamese Fully ConvNet to extract multi-level features and detect changes through feature differences.

FC-Siam-Conc: also based on a Siamese Fully ConvNet, detects changes by concatenating multi-level features. As described in [7], these three fully convolutional models surpassed the state-of-the-art change detection methods (2018) in both accuracy and inference speed.

DTCDSCN: leverages a dual attention module to exploit channel and spatial interdependencies of ConvNet features for change detection. On the WHU building dataset, it has surpassed the state-of-the-art change detection methods (2022) as described in [20].

BIT: employs a simple CNN backbone to extract paired feature maps and designs a bi-temporal image Transformer to efficiently model contextual information within the spatio-temporal domain. On three change detection datasets, it has surpassed several state-of-the-art change detection methods (2022) as described in [21].

RDP: utilizes a dual pyramid architecture with residual connections to capture multi-scale features, enhancing change detection through oriented pooling. It has achieved state-of-the-art empirical performance (2022) as described in [22].

Performance was assessed using five standard metrics: Accuracy, IoU, F1-score, Precision, and Recall. The evaluation results of different methods on the LEVIR-CD and CLCD datasets are shown in Table 2 and Table 3, respectively.
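For reference, the five metrics can be written in terms of pixel-level confusion counts for the "changed" class. The following is a minimal sketch using the standard definitions; the function names and the toy masks are illustrative assumptions, and the aggregation protocol behind the reported tables (for example per-image versus whole-test-set counting) is not stated in this section.

```python
from typing import Dict, List

Mask = List[List[int]]  # 1 = changed pixel, 0 = unchanged pixel


def confusion_counts(pred: Mask, truth: Mask) -> Dict[str, int]:
    """Count TP, FP, FN and TN for the 'changed' class over one mask pair."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                counts["tp"] += 1      # change correctly detected
            elif p:
                counts["fp"] += 1      # false alarm
            elif t:
                counts["fn"] += 1      # missed detection
            else:
                counts["tn"] += 1      # unchanged, correctly ignored
    return counts


def change_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard definitions; a zero denominator yields 0.0."""
    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "iou": ratio(tp, tp + fp + fn),
        "f1": ratio(2 * precision * recall, precision + recall),
        "precision": precision,
        "recall": recall,
    }


# Toy 2 x 4 masks (hypothetical data, not taken from either dataset).
pred = [[1, 1, 0, 0],
        [0, 1, 0, 0]]
truth = [[1, 0, 0, 0],
         [0, 1, 1, 0]]
metrics = change_metrics(**confusion_counts(pred, truth))
```

In this toy case one false alarm lowers Precision and one missed pixel lowers Recall, while IoU penalizes both at once.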

On the LEVIR-CD dataset, MSTAN achieves the highest performance in Accuracy (98.046%), IoU (82.088%), F1-score (89.321%), and Precision (91.720%), while ranking fourth in Recall (87.213%). The relatively lower Recall means that some change areas are missed. The F1-score, which balances Precision and Recall, indicates the overall effectiveness of change detection. The superior F1-score of MSTAN shows that the model identifies change areas accurately overall.

On the CLCD dataset, MSTAN achieves the best results in IoU (70.262%), F1-score (80.101%), and Recall (78.757%), demonstrating strong sensitivity and low omission rates. Although FC-EF achieves the highest Precision (86.594%), its performance on the other metrics is relatively low, especially IoU (55.267%) and Recall (58.748%). This indicates that FC-EF has a high rate of missed detections and low agreement with the ground truth, so it is not well suited to precise change detection tasks. Although the Accuracy (94.764%) and Precision (81.611%) of MSTAN are lower than those of BIT, MSTAN achieves a higher Recall and a higher F1-score than BIT. This indicates that MSTAN possesses better generalization capability and robustness.

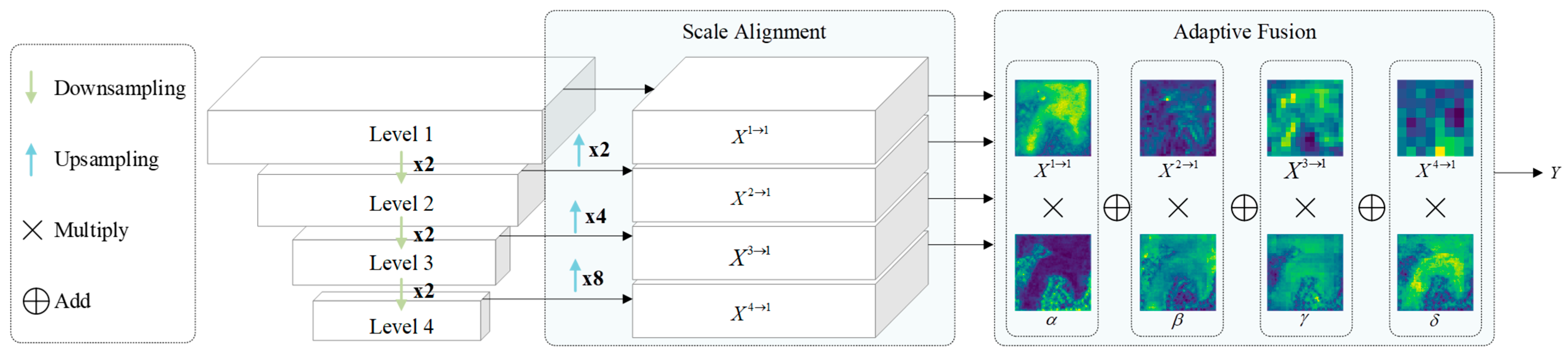

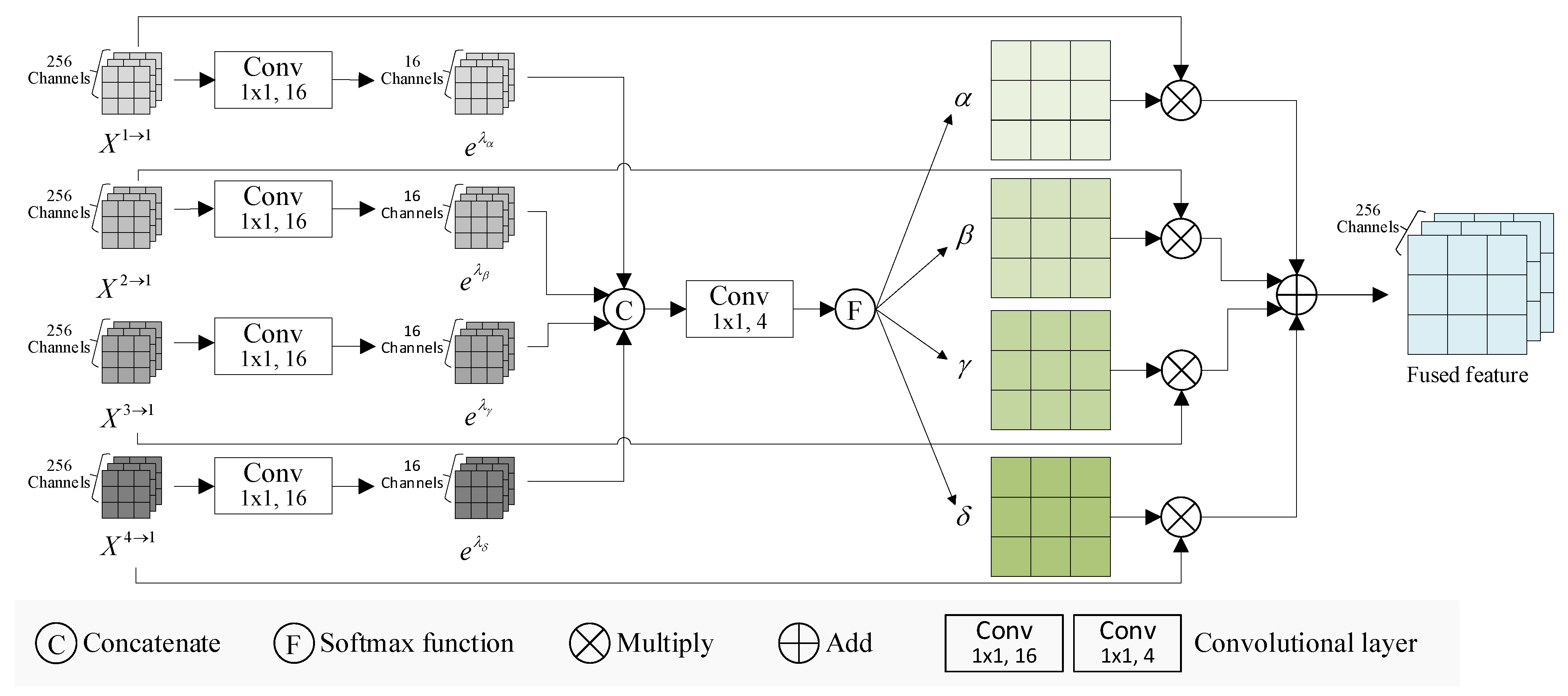

Overall, MSTAN exhibits consistent and robust performance across both datasets, reflecting strong generalization, stability, and accuracy. The performance advantage of MSTAN stems from the multi-scale Transformer encoder and the four-layer ASFF module. The multi-scale Transformer encoder captures hierarchical features across spatial scales. The adaptive learning mechanism and dynamic weight adjustment strategy of the four-layer ASFF enhance the model’s representation capability for various types of targets (e.g., building targets on LEVIR-CD dataset and farmland targets on CLCD), thereby improving the model’s generalization and robustness, leading to superior overall performance on both datasets.

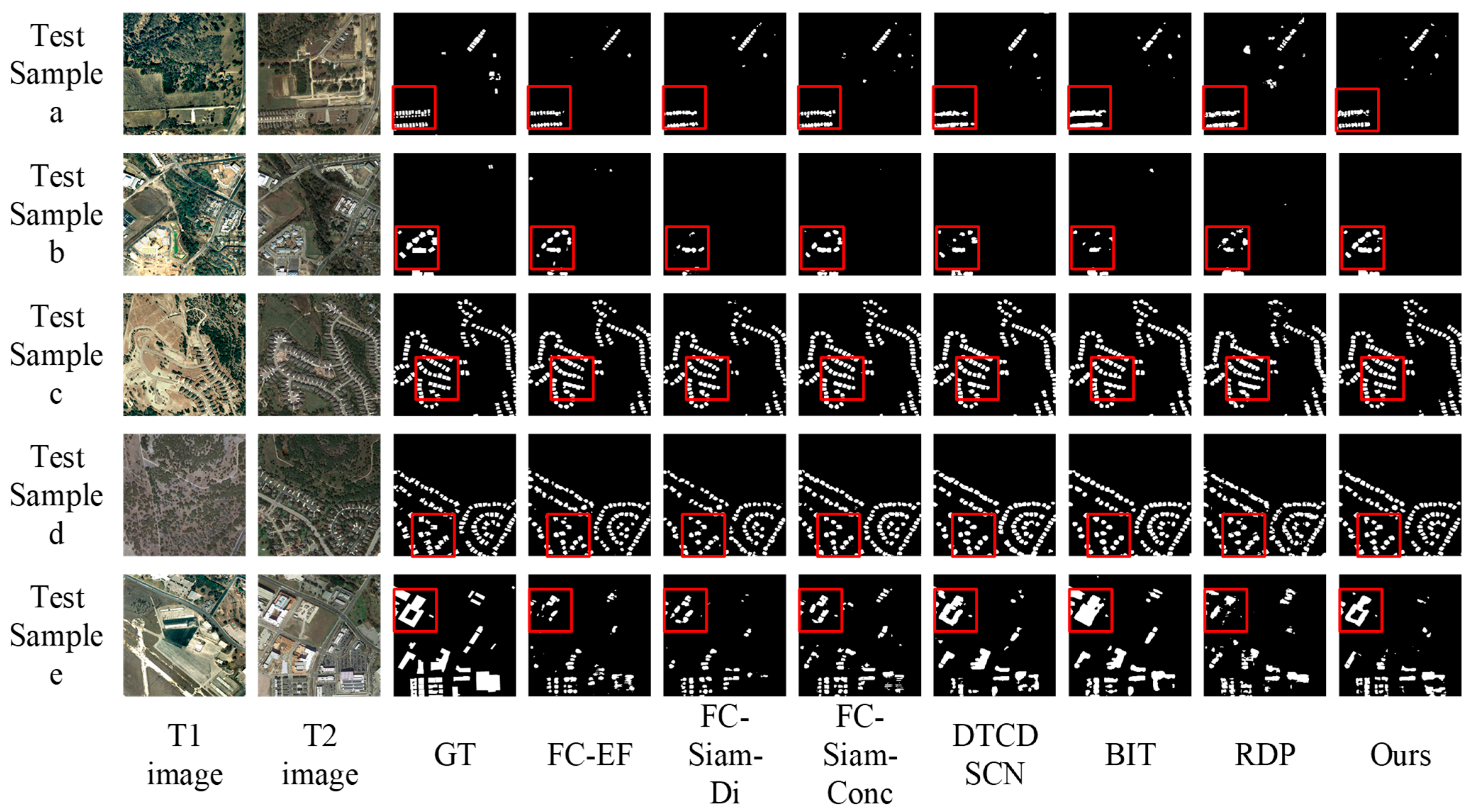

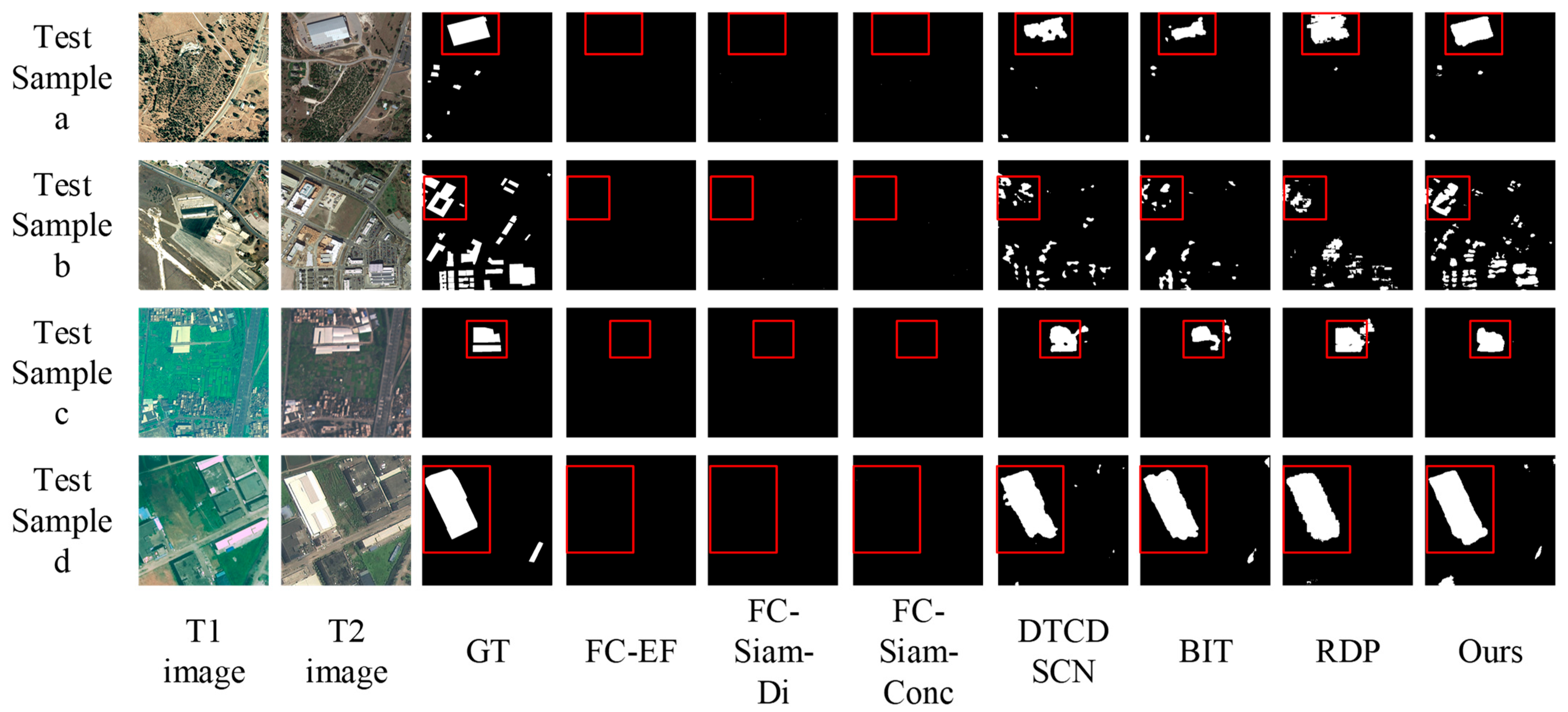

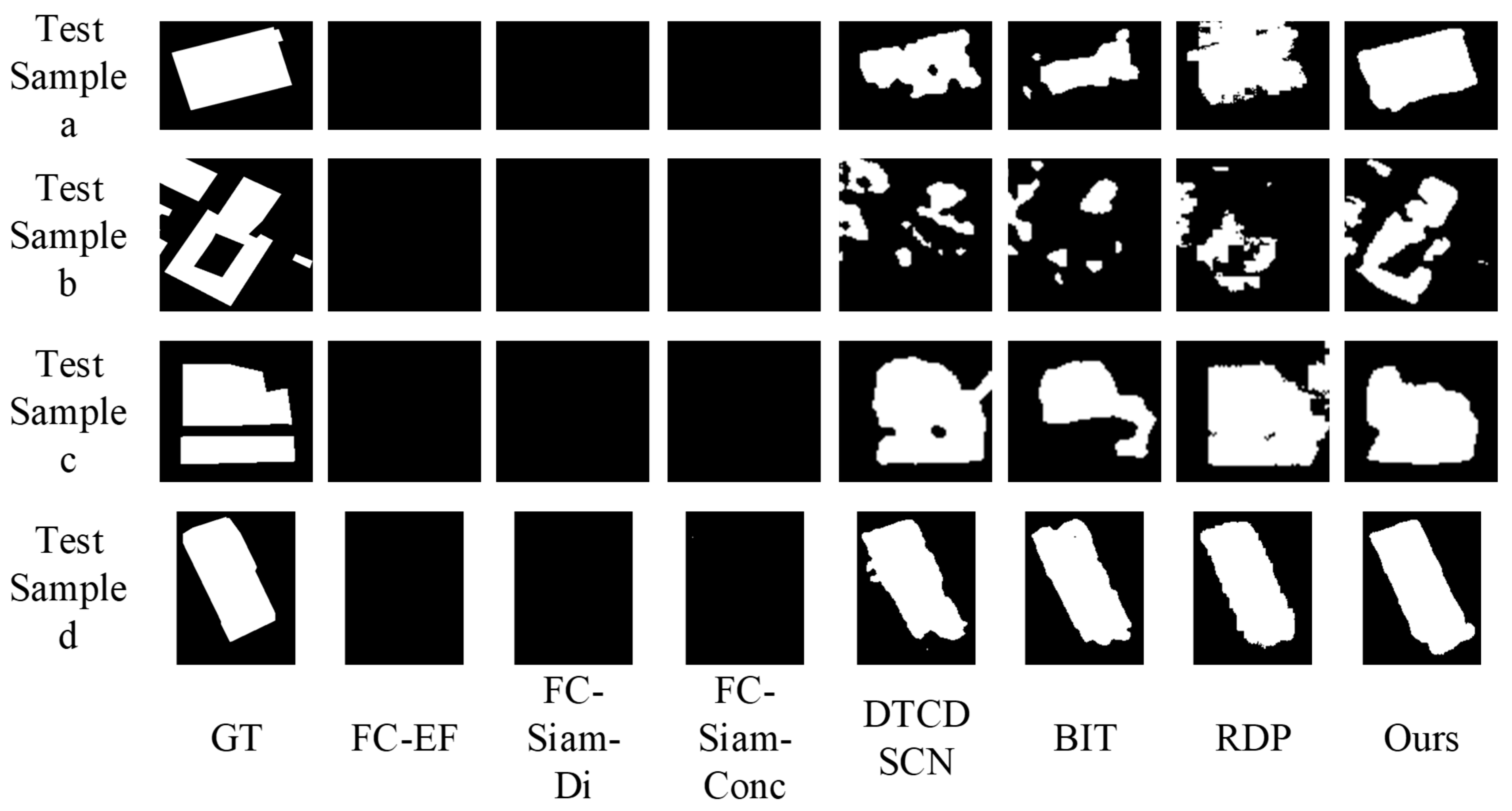

To visually demonstrate the effectiveness of different models in extracting change areas, the visual detection comparisons of various methods on the LEVIR-CD dataset are provided in Figure 4.

Figure 4. Change detection results of different methods on LEVIR-CD. The regions marked by red rectangles are the pixel areas of interest. More detailed texture information for these regions is shown in Figure 5.

In the figure, each row corresponds to a test sample. The first and second columns show the T1 and T2 images, respectively, while the third column displays the ground truth. Subsequent columns present the change detection maps produced by FC-EF, FC-Siam-Di, FC-Siam-Conc, DTCDSCN, BIT, RDP, and the proposed MSTAN model.

On the LEVIR-CD dataset, as shown in Figure 4, all models detect major changes (e.g., test samples c and d), but differ in detail preservation. FC-EF, FC-Siam-Di, FC-Siam-Conc and RDP exhibit notable edge degradation and missed detections (e.g., test samples b and e). This is consistent with their lower Recall scores. BIT captures a larger number of changed areas, but suffers from false alarms (e.g., test samples a and e), which is consistent with its lower Precision score. DTCDSCN shows inferior boundary delineation compared to MSTAN (e.g., test samples a and b). Although MSTAN accurately detects most of the changed areas, it misses some detections that models such as BIT capture (e.g., test sample e), which directly reflects its relatively low Recall score on this dataset.
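The terms used in this visual comparison map directly onto pixel categories: a false alarm is a false positive (FP) and a missed detection is a false negative (FN). As a minimal sketch, a prediction can be labeled pixel by pixel against the ground truth as follows; the function names and the toy masks are hypothetical and are not part of the evaluation pipeline described here.

```python
from typing import List

Mask = List[List[int]]  # 1 = changed pixel, 0 = unchanged pixel

# (prediction, ground truth) -> category
LABELS = {(1, 1): "TP", (1, 0): "FP", (0, 1): "FN", (0, 0): "TN"}


def error_map(pred: Mask, truth: Mask) -> List[List[str]]:
    """Label every pixel as TP, FP (false alarm), FN (missed) or TN."""
    return [
        [LABELS[(int(bool(p)), int(bool(t)))] for p, t in zip(pred_row, truth_row)]
        for pred_row, truth_row in zip(pred, truth)
    ]


def count_label(emap: List[List[str]], label: str) -> int:
    """Number of pixels carrying the given category label."""
    return sum(row.count(label) for row in emap)


# Toy masks (hypothetical data).
pred = [[1, 1, 0, 0],
        [0, 1, 0, 0]]
truth = [[1, 0, 0, 0],
         [0, 1, 1, 0]]
emap = error_map(pred, truth)
false_alarms = count_label(emap, "FP")
missed = count_label(emap, "FN")
```

Rendering the FP and FN pixels in distinct colors is a common way to make the Precision and Recall behavior of each model visible in such figures.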

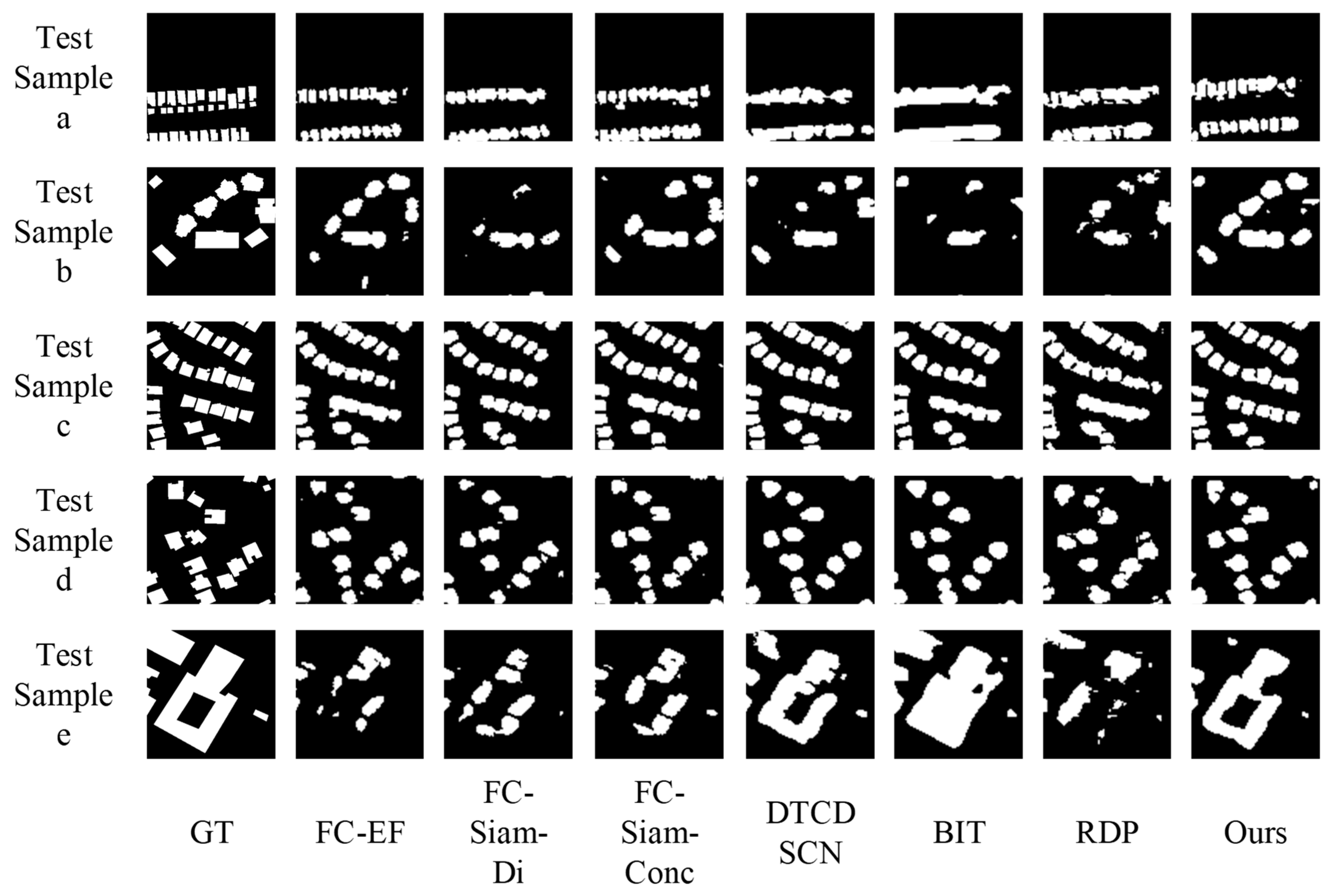

Figure 5. Detailed texture display of the regions of interest on LEVIR-CD.

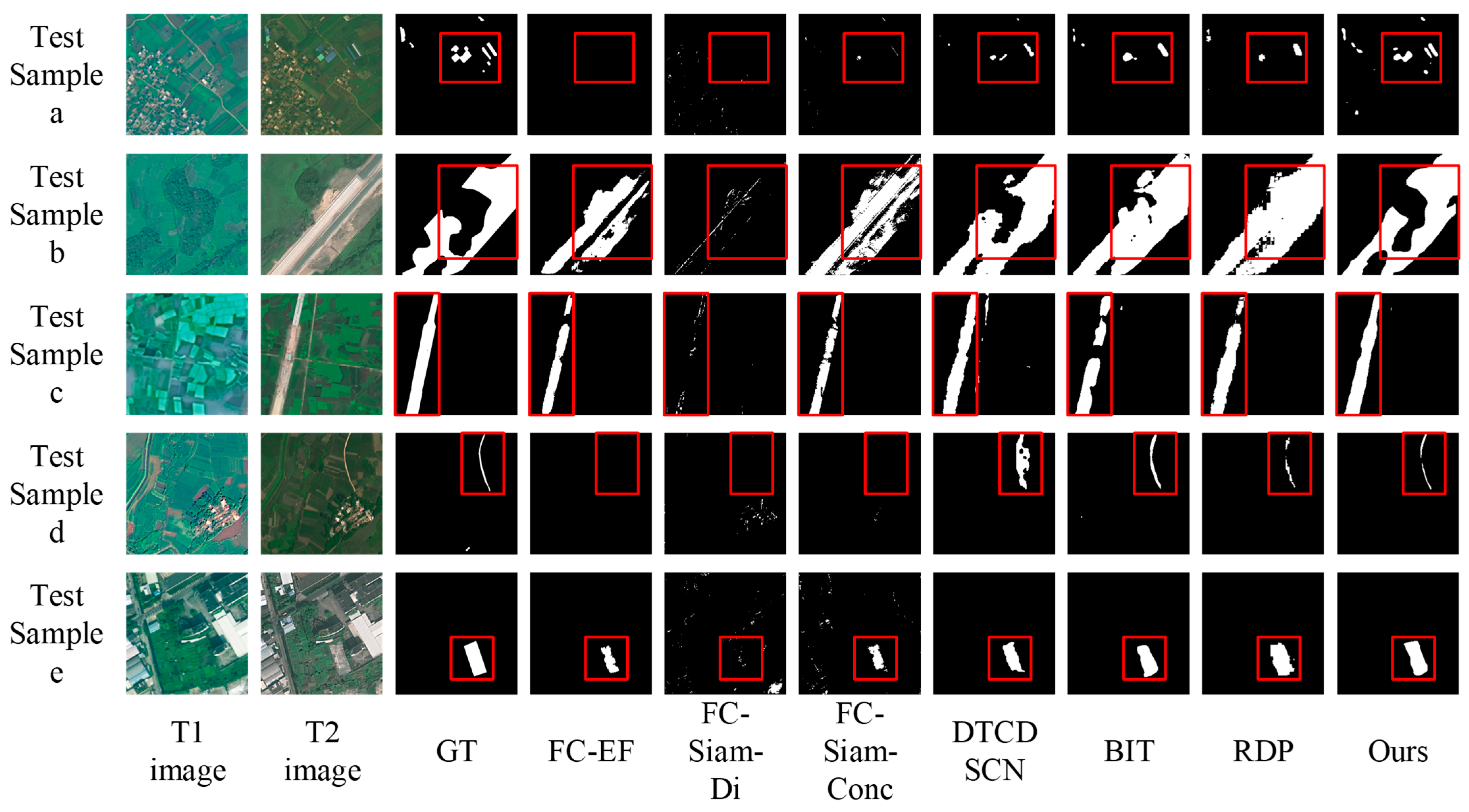

The visual detection comparisons of various methods on the CLCD dataset are provided in Figure 6. The FC-EF, FC-Siam-Di, and FC-Siam-Conc models exhibit a significant drop in precision and a notable increase in missed detections (e.g., test samples a, d, and e). The RDP, BIT, and DTCDSCN models suffer from severe false and missed detections. In contrast, MSTAN shows a marked improvement in reducing missed detections (e.g., test samples b and c), which corresponds to its higher Recall value. However, it also produces some false detections (e.g., test sample e), which corresponds to its lower Precision value.

Figure 6. Change detection results of different methods on CLCD. The models and test samples shown in this figure are the same as those in Figure 4. The regions marked by red rectangles are the pixel areas of interest. Detailed texture information for these regions is shown in Figure 7.

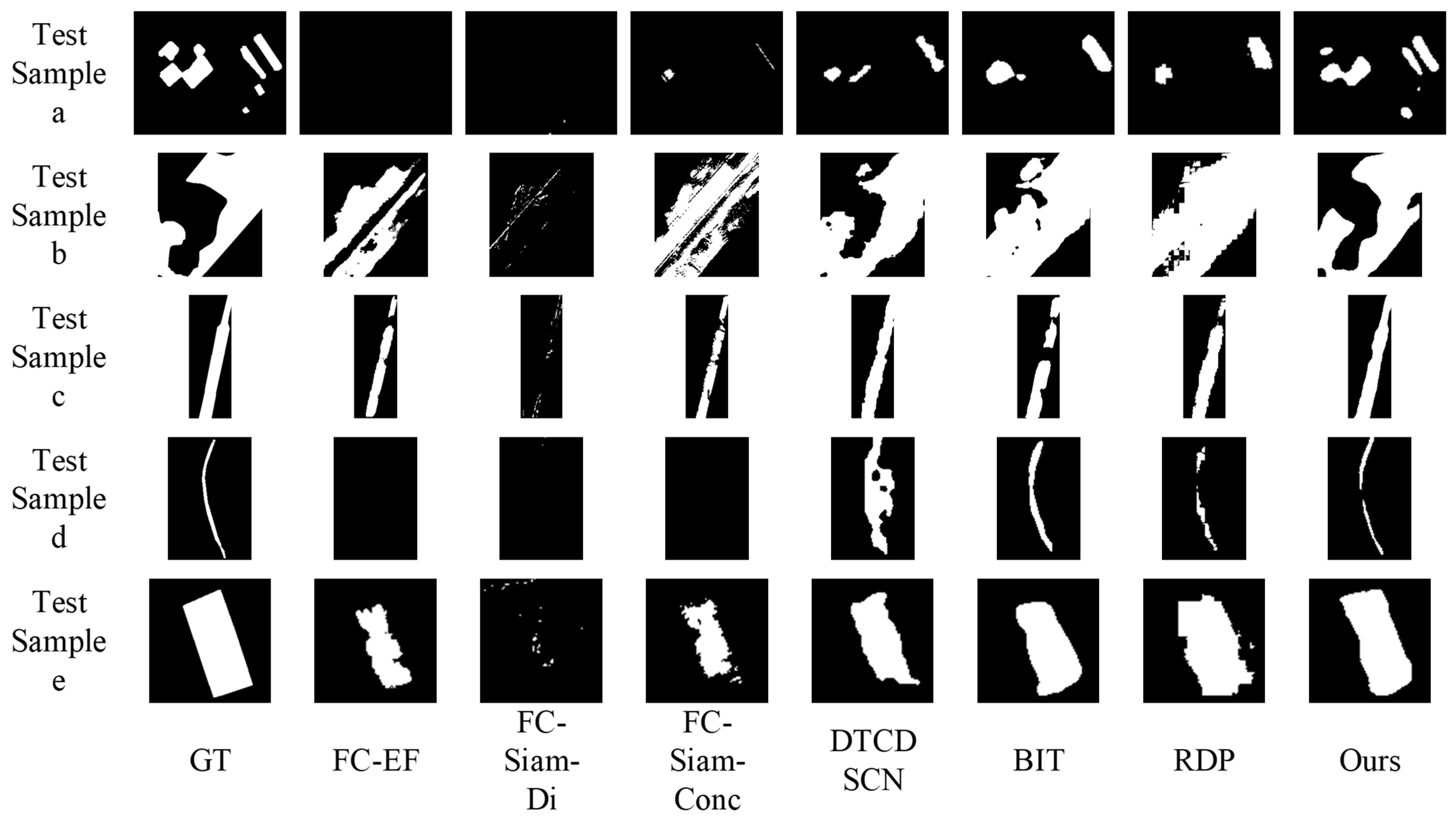

Figure 7. Detailed texture display of the regions of interest on CLCD.

In summary, the above comparative analysis shows that MSTAN exhibits more balanced performance metrics and superior generalization capability across the two datasets. Despite the superior performance of MSTAN in most scenarios, it still has certain limitations.

In terms of handling extremely subtle changes, such as tiny alterations in vegetation coverage on the LEVIR-CD dataset, MSTAN may occasionally miss some of these minute changes, which may limit its applicability in certain sensitive target perception tasks. This is because the four-layer ASFF module, while excellent at fusing multi-scale features for prominent changes, might not be sufficiently sensitive to such extremely fine-grained variations, which can lead to a slight decrease in Recall for these specific cases.

When dealing with large-scale, complex scene changes on the CLCD dataset, MSTAN might have difficulty in accurately distinguishing and segmenting each individual object. In such highly complex and ambiguous situations, the adaptive fusion of the four-layer ASFF module may not always assign optimal weights to the different scale features, resulting in some inaccuracies in the detailed boundaries of change regions.

3.3. Cross-Dataset Evaluation

To further validate the generalization capability of MSTAN, particularly its universality across two distinct target types (building changes in the LEVIR-CD dataset and cropland changes in the CLCD dataset), we merge the two datasets into a unified data source and train on it jointly. This approach exposes the training process to varying target scales, thereby reducing the dependence of the model on the statistical characteristics of a single scene.
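The merging step can be illustrated with a short sketch. It assumes each sample is a record of two image paths and a label path. The `Sample` type, the file paths and the fixed-seed shuffle are hypothetical choices for illustration, since the section does not describe how the unified data source was actually assembled.

```python
import random
from typing import List, NamedTuple


class Sample(NamedTuple):
    t1_path: str     # image at time T1
    t2_path: str     # image at time T2
    label_path: str  # binary change mask
    source: str      # originating dataset, kept for later per-dataset analysis


def merge_datasets(*datasets: List[Sample], seed: int = 0) -> List[Sample]:
    """Concatenate several sample lists and shuffle them reproducibly."""
    merged = [sample for dataset in datasets for sample in dataset]
    random.Random(seed).shuffle(merged)  # local RNG, global state untouched
    return merged


# Hypothetical file names, for illustration only.
levir = [Sample(f"levir/A/{i}.png", f"levir/B/{i}.png", f"levir/label/{i}.png", "LEVIR-CD")
         for i in range(3)]
clcd = [Sample(f"clcd/A/{i}.png", f"clcd/B/{i}.png", f"clcd/label/{i}.png", "CLCD")
        for i in range(2)]
train_set = merge_datasets(levir, clcd, seed=42)
```

Keeping the `source` field makes it possible to report results per originating dataset after joint training.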

We use the same standard metrics and comparison models as in Section 3.2, and the comparison results of the cross-dataset evaluation are shown in Table 4.

Experimental results demonstrate that MSTAN achieves the best performance across all evaluation metrics, with Accuracy, IoU, F1-score, Precision, and Recall reaching 96.161%, 72.905%, 82.227%, 85.168%, and 79.806%, respectively. This indicates its outstanding adaptability to diverse scenarios. In detail, the F1-score of MSTAN exceeds that of the second-ranked model, DTCDSCN, by 3.145 percentage points; its Precision exceeds that of the second-ranked BIT by 0.645 points; and its Recall exceeds that of the second-ranked DTCDSCN by 2.348 points. This demonstrates its overall robust performance in change detection tasks and superior control over misclassifications and missed detections. The IoU is 3.554 points higher than that of the second-ranked model, BIT, indicating that the predictions of MSTAN overlap more with the ground-truth labels and locate change region boundaries more accurately. In contrast, models such as RDP, FC-Siam-Conc, FC-Siam-Di, and FC-EF performed poorly across the board.

Comparing these results with the single-dataset test results on LEVIR-CD in Table 2, it can be seen that MSTAN exhibits a certain degree of decline in the cross-dataset experiments. For instance, its F1-score on the LEVIR-CD single dataset (89.321%) is significantly higher than the cross-dataset test result (82.227%). Conversely, all cross-dataset metrics for MSTAN are higher than the CLCD single-dataset test results shown in Table 3. This indicates that while MSTAN demonstrates exceptional generalization capabilities, there remains room for improvement in handling the compound complexity of multiple datasets. Furthermore, similar trends were observed in other comparison models, where certain metrics in cross-dataset experiments fell below their respective single-dataset test results.
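The comparison can be made concrete from the MSTAN values quoted in the text. The sketch below only subtracts the reported single-dataset scores from the cross-dataset scores, in percentage points; the variable and function names are illustrative.

```python
METRICS = ("Accuracy", "IoU", "F1", "Precision", "Recall")

# MSTAN scores in percent, as quoted in the text.
levir_single = (98.046, 82.088, 89.321, 91.720, 87.213)
clcd_single = (94.764, 70.262, 80.101, 81.611, 78.757)
cross_dataset = (96.161, 72.905, 82.227, 85.168, 79.806)


def deltas(reference, other):
    """Difference other - reference per metric, in percentage points."""
    return {name: round(o - r, 3) for name, r, o in zip(METRICS, reference, other)}


vs_levir = deltas(levir_single, cross_dataset)
vs_clcd = deltas(clcd_single, cross_dataset)

for name in METRICS:
    print(f"{name:<10} vs LEVIR-CD: {vs_levir[name]:+.3f}   vs CLCD: {vs_clcd[name]:+.3f}")
```

Every difference against LEVIR-CD is negative (the largest drop is IoU, about 9.2 points), while every difference against CLCD is positive. The cross-dataset scores lie between the two single-dataset results, which is what one would expect if the merged test data mixes easier building scenes with harder cropland scenes.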

Overall, MSTAN stands out with optimal generalization performance, yet all models face challenges in maintaining consistent performance when handling datasets with significant variations in data distribution.

To visually demonstrate the generalization of different models in extracting change areas, the visual detection comparisons of various methods in the cross-dataset setting are provided in Figure 8.

Figure 8. Change detection results of different methods in the cross-dataset setting. The regions marked by red rectangles are the pixel areas of interest. More detailed texture information for these regions is shown in Figure 9.

In the figure, the first two rows of test samples are from LEVIR-CD dataset, while the last two rows are from CLCD dataset. The first and second columns show the T1 and T2 images, respectively, while the third column displays the ground truth. Subsequent columns present the change detection maps produced by FC-EF, FC-Siam-Di, FC-Siam-Conc, DTCDSCN, BIT, RDP, and the proposed MSTAN model.

As shown in the figure, the FC-EF, FC-Siam-Di, and FC-Siam-Conc models performed poorly overall, detecting almost no regions of change. This represents a significant deviation from their single-dataset test results, indicating weak cross-dataset generalization capabilities. The RDP model exhibits numerous false-positive regions (e.g., test samples a, c), corroborating its low Precision metric in Table 4. The BIT and DTCDSCN models show significant false-negative regions (e.g., test samples a, b), confirming their low Recall scores. In contrast, the MSTAN model exhibits high spatial agreement with the ground truth, demonstrating strong adaptability and accuracy across complex and diverse change scenarios. This indicates its robust generalization capability and resilience. Its performance advantage likely stems from the adaptive learning strategy of the four-layer ASFF module, which effectively learns features that generalize across datasets, enhancing the target representation capability of the model and improving change detection performance. However, MSTAN exhibits suboptimal edge detail representation (e.g., test samples b, c), which suggests that the model struggles to capture or utilize shallow-layer features effectively.

Furthermore, compared to the single-dataset test results in Figure 4 and Figure 6, a certain degree of decline in detection performance can be observed across all models (e.g., test sample b). This indicates that the generalization capability of MSTAN still has room for improvement.

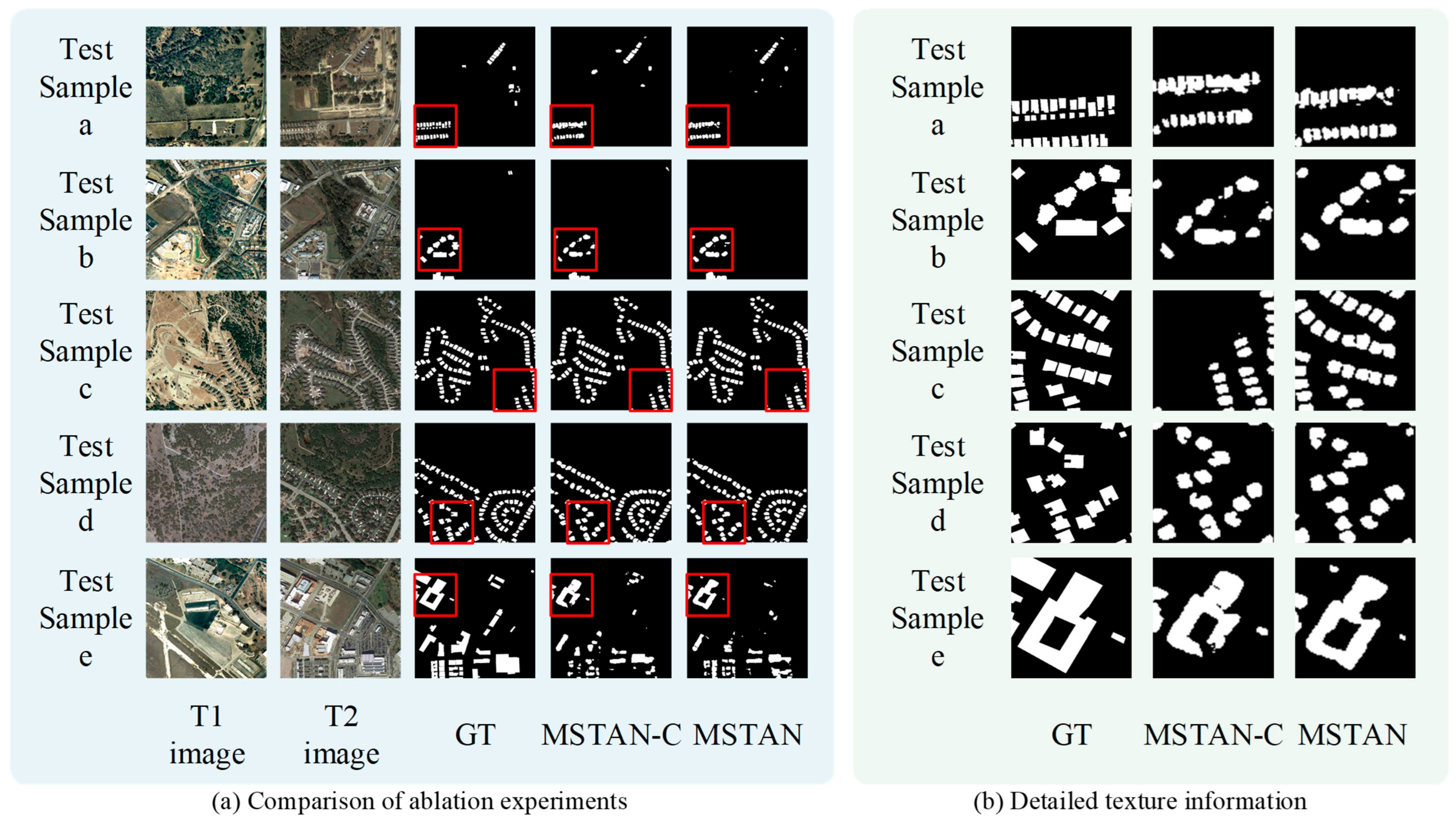

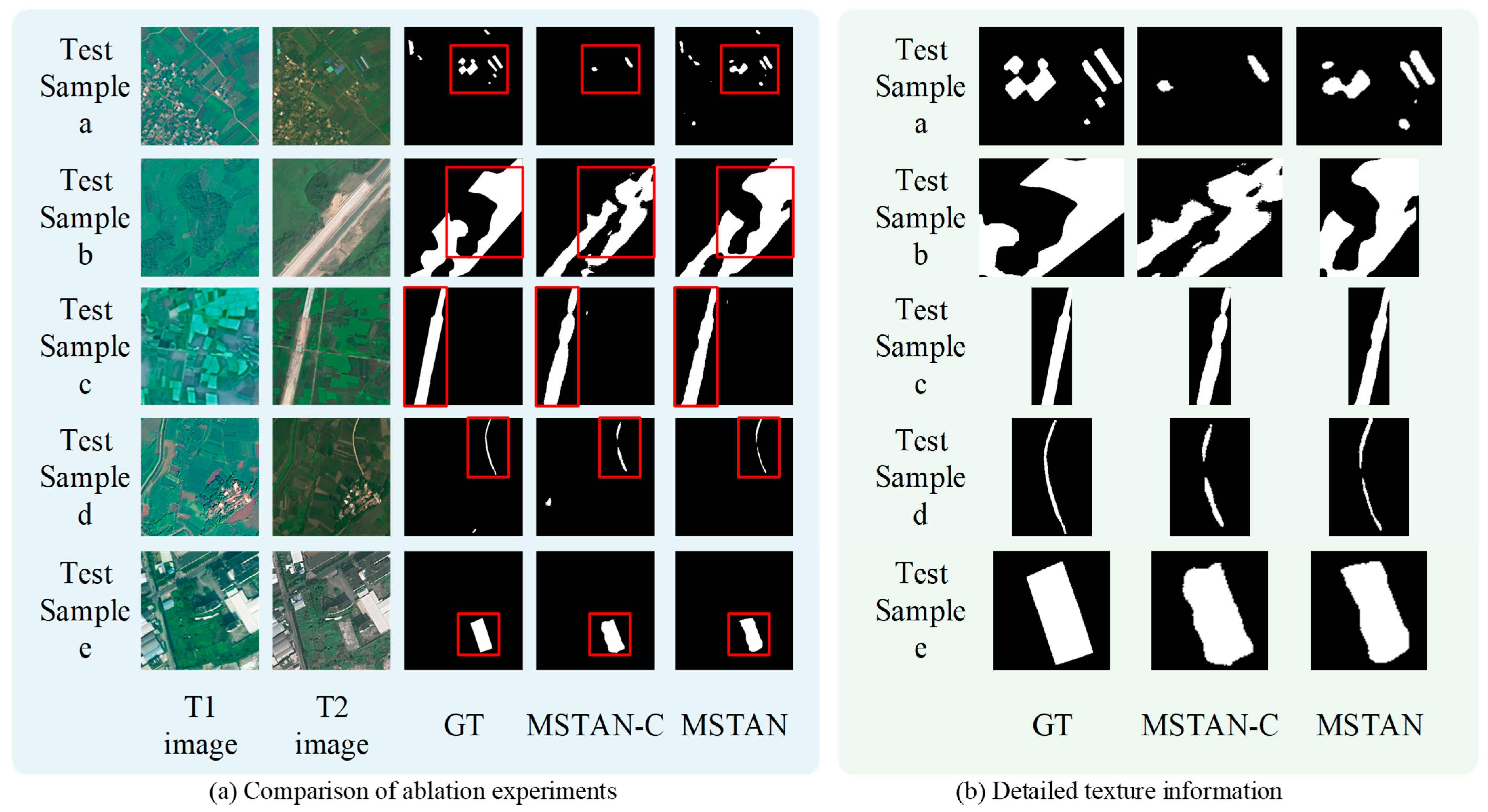

3.4. Ablation Experiments

To validate the effectiveness of the four-layer ASFF module in change detection tasks, this section conducts comparative ablation experiments on the LEVIR-CD and CLCD datasets. The models compared are MSTAN and MSTAN-C, where MSTAN employs the four-layer ASFF module for multi-scale feature fusion, while MSTAN-C uses the concatenate operation to fuse multi-scale features following the approach in [8]. The comparison results are shown in Table 5 and Table 6.

On the LEVIR-CD dataset, MSTAN shows improvements across all evaluation metrics, with an IoU increase of 0.680 and an F1-score improvement of 0.486, indicating that the model can more accurately predict changed regions overall, thereby helping to reduce false alarms and missed detections in practical applications.

On the CLCD dataset, MSTAN also demonstrates superior performance, achieving an IoU improvement of 1.673, an F1-score increase of 1.487, and a precision gain of 2.296. A possible reason is that differences in target representation between the LEVIR-CD and CLCD datasets make the traditional concatenate fusion mechanism less effective in learning multi-scale target features. For instance, the two datasets differ in terms of target types, scale sizes, and scale ranges. In contrast, the four-layer ASFF module’s adaptive feature learning and dynamic fusion strategy enable the model to effectively learn more generalizable feature representations, thereby enhancing its generalization capability and adaptability. These results indicate that the proposed ASFF feature fusion module is effective in improving performance for remote sensing image change detection tasks.

Figure 10 and Figure 11 present the visual comparison of detection results on the two datasets, along with the corresponding detailed information displays.

From the visualization results, MSTAN demonstrates clear advantages on multiple test samples, which is consistent with the aforementioned quantitative analysis findings.

The improvement of MSTAN on the LEVIR-CD dataset is not particularly pronounced. The result generated by MSTAN-C exhibits irregular edges in the red-boxed region (e.g., test sample e), whereas the change region produced by MSTAN shows higher shape fidelity to the ground truth, with smoother and more accurate boundaries. This observation corresponds to the improvements in the IoU and F1-score metrics of MSTAN on the LEVIR-CD dataset in the quantitative analysis, indicating that the four-layer ASFF module enables more precise reconstruction of the morphology of changed regions and enhances the intersection-over-union ratio. The performance improvement of MSTAN is more significant on the CLCD dataset. The region detected by MSTAN-C not only deviates in shape but also contains several missed detections (e.g., test sample a). The detected edges are less smooth and continuous (e.g., test sample a). In contrast, MSTAN’s detection results are closer to the ground truth, with reduced noise. In test sample d, the ground truth contains a thin, elongated change line. MSTAN-C fails to detect this line continuously, exhibiting fragmentation, while MSTAN, although not fully capturing the entire line, handles the edge details more effectively.

However, the four-layer ASFF module clearly has limitations. For instance, noticeable missed detections occur in test sample e on LEVIR-CD and test sample b on CLCD, indicating that MSTAN’s Recall metric still needs improvement—this is particularly critical for sensitive target detection. A possible reason is that the four-layer ASFF module may lack sufficient sensitivity to subtle or fine-grained changes.

In summary, the design of the four-layer ASFF module can effectively address the detection challenges in remote sensing image change detection tasks caused by inconsistent target scales and scene variations. The ASFF module is not merely a technical improvement over concatenation-based fusion; rather, it serves as a fusion framework that enhances generalization capability, providing a more robust fusion strategy for change detection tasks.

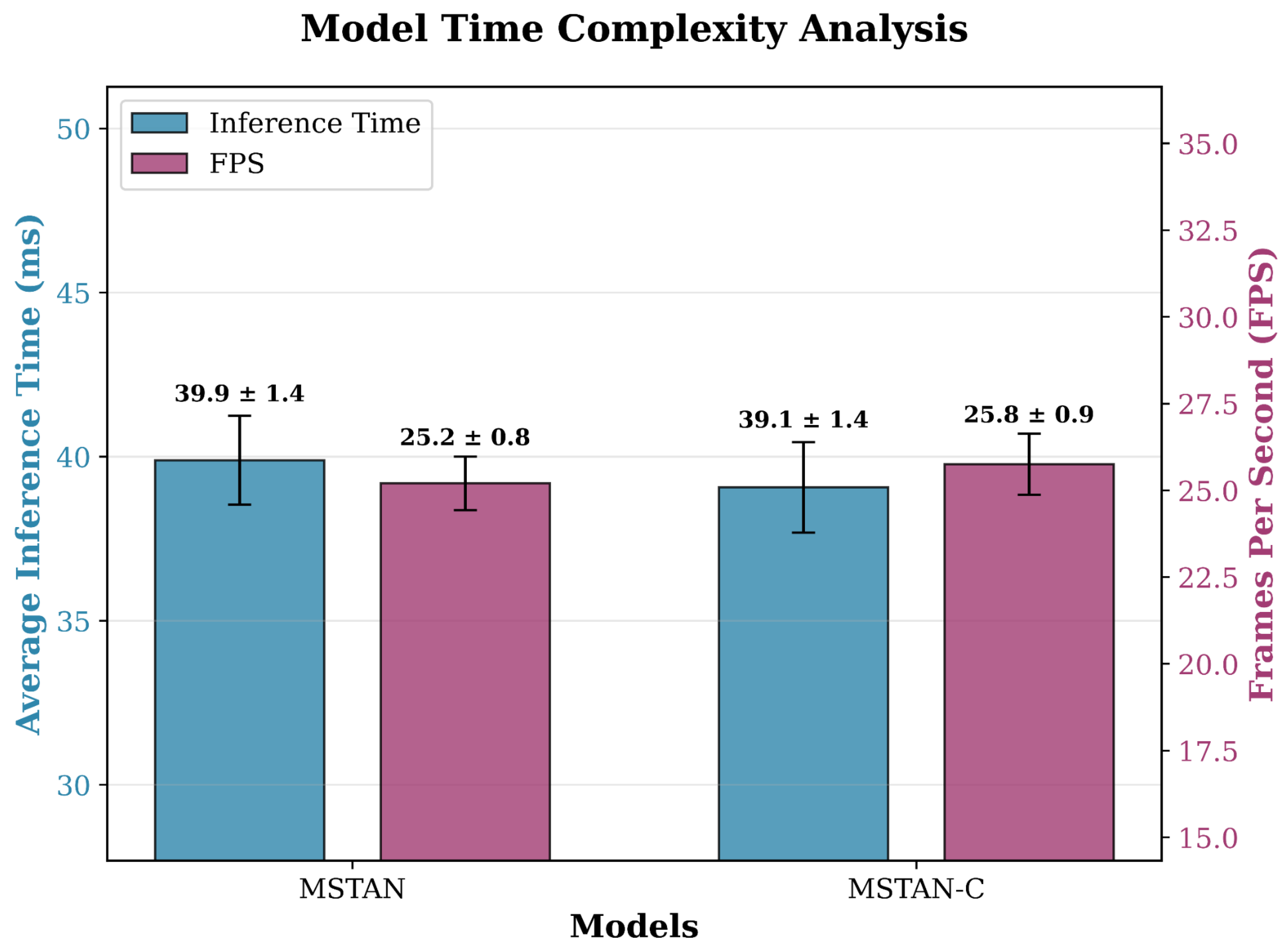

3.5. Complexity Analysis

To comprehensively evaluate the impact of the four-layer ASFF module on the computational complexity of the model, we analyze the floating-point operations (FLOPs) and model parameters (Params) of MSTAN (the model with the four-layer ASFF module) and MSTAN-C (the model that fuses multi-scale features with the concat operation instead of the four-layer ASFF module) in Table 7.

MSTAN is designed to leverage the four-layer ASFF module for adaptive fusion of multi-scale features, aiming to capture the complementary information across different scales more effectively in remote sensing image change detection. In contrast, MSTAN-C adopts a simpler concat operation, which directly concatenates multi-scale features without adaptive weight learning.
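The difference between the two fusion strategies can be sketched in a few lines. This is a generic illustration of spatially adaptive fusion, in which per-pixel softmax weights over the scales are followed by a weighted sum. It is not the authors' four-layer ASFF implementation, whose internal structure is not given in this section. The sketch assumes that all feature maps have already been resized to a common resolution, that each map has a single channel, and that the weight logits are supplied directly; in a real module the logits would be produced by learned layers.

```python
import math
from typing import List

FeatureMap = List[List[float]]  # single-channel map of shape H x W


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over a short list."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fuse(features: List[FeatureMap], logits: List[FeatureMap]) -> FeatureMap:
    """Weighted sum of the scales; weights are normalized per spatial position."""
    height, width = len(features[0]), len(features[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weights = softmax([scale[y][x] for scale in logits])
            fused[y][x] = sum(w * f[y][x] for w, f in zip(weights, features))
    return fused


def concat_fuse(features: List[FeatureMap]) -> List[List[List[float]]]:
    """Concatenation baseline: stack the scales along a channel axis (H x W x S)."""
    height, width = len(features[0]), len(features[0][0])
    return [[[f[y][x] for f in features] for x in range(width)] for y in range(height)]


# Four scales, each a 1 x 2 map (toy values).
features = [[[1.0, 1.0]], [[2.0, 2.0]], [[3.0, 3.0]], [[4.0, 4.0]]]
# Pixel 0: equal logits; pixel 1: the fourth scale dominates.
logits = [[[0.0, 0.0]], [[0.0, 0.0]], [[0.0, 0.0]], [[0.0, 50.0]]]
fused = adaptive_fuse(features, logits)
stacked = concat_fuse(features)
```

With equal logits the fused value is the plain average of the four scales, and with one dominant logit the output follows that scale almost entirely. Concatenation keeps all four values and leaves their weighting to later layers.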

From the perspective of FLOPs, MSTAN requires 204.472 G FLOPs, while MSTAN-C requires 202.788 G FLOPs. The four-layer ASFF module needs to learn adaptive fusion weights for multi-scale features. This process involves operations such as generating weight coefficients for each spatial position and each scale, which introduces additional computation. As a result, MSTAN requires slightly more floating-point operations than MSTAN-C, which uses the concat operation.

Regarding the model parameters, MSTAN has 41.437 M parameters, and MSTAN-C has 41.027 M parameters. The four-layer ASFF module contains network structures responsible for learning fusion weights. These structures, including lightweight convolutional layers for weight generation, bring in a certain number of parameters, leading to a slightly higher parameter count in MSTAN than in MSTAN-C.
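To put "slightly higher" into numbers, the relative overhead can be computed directly from the FLOPs and parameter counts quoted above. The helper name is illustrative.

```python
def relative_overhead(with_module: float, baseline: float) -> float:
    """Relative increase of `with_module` over `baseline`, in percent."""
    return 100.0 * (with_module - baseline) / baseline


# Values quoted in the text: MSTAN (with ASFF) versus MSTAN-C (concat).
flops_overhead = relative_overhead(204.472, 202.788)   # G FLOPs
params_overhead = relative_overhead(41.437, 41.027)    # M parameters

print(f"FLOPs overhead:  {flops_overhead:.2f}%")
print(f"Params overhead: {params_overhead:.2f}%")
```

Both overheads are about 1% or less (roughly 0.83% for FLOPs and 1.00% for parameters), corresponding to an extra 1.684 G FLOPs and 0.410 M parameters.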

Meanwhile, we conducted five test trials to measure the inference time and FPS (frames per second) on the same input image pair. For each trial, the average inference time and FPS were computed over 100 forward passes with identical input data. The experimental results are presented in Table 8. The definition of FPS is given in Equation (9).

$$\mathrm{FPS} = \frac{1}{T} \qquad (9)$$

where T denotes the inference time.

In the table, “Mean” denotes the mean value of repeated trials for each metric, and “Std” represents the standard error of the repeated trials.
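The measurement protocol described above (five trials, each averaging over 100 forward passes) can be sketched as follows. The sketch assumes that FPS is the reciprocal of the average per-pass inference time in seconds, and that the reported spread is the sample standard deviation across trials. `dummy_forward` is a hypothetical stand-in for the model's forward pass.

```python
import statistics
import time
from typing import Callable, Dict


def time_trial(forward: Callable[[], object], passes: int = 100) -> float:
    """Average wall-clock seconds per forward pass over `passes` calls."""
    start = time.perf_counter()
    for _ in range(passes):
        forward()
    return (time.perf_counter() - start) / passes


def benchmark(forward: Callable[[], object], trials: int = 5,
              passes: int = 100) -> Dict[str, float]:
    """Mean and sample standard deviation of inference time and FPS."""
    times = [time_trial(forward, passes) for _ in range(trials)]
    fps = [1.0 / t for t in times]  # assumed definition: FPS = 1 / T
    return {
        "time_mean": statistics.mean(times),
        "time_std": statistics.stdev(times),
        "fps_mean": statistics.mean(fps),
        "fps_std": statistics.stdev(fps),
    }


def dummy_forward() -> int:
    """Placeholder workload standing in for one model inference."""
    return sum(i * i for i in range(1000))


report = benchmark(dummy_forward)
```

When the model runs on a GPU, the device typically has to be synchronized before each clock reading (for example with `torch.cuda.synchronize()` in PyTorch). Otherwise only the kernel launch time is measured.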

Figure 12 shows the error bar plots of inference time and FPS metrics. From these charts, it can be observed that MSTAN has a slightly longer response time and a correspondingly lower FPS compared to MSTAN-C. This is consistent with the earlier analysis of the FLOPs and Parameters metrics. Statistical significance tests across 5 independent training runs confirm the robustness of these results, with standard deviations below 0.3% for all metrics, indicating stable performance free from random training variance.

In summary, the four-layer ASFF module introduces a certain level of additional computational complexity; this increase is the cost of more accurate adaptive fusion of multi-scale features, which is crucial for enhancing the model’s generalization and robustness. However, for rapid-response systems dedicated to specific tasks, whether MSTAN is responsive enough remains an open question.