1. Introduction

Age recognition has attracted significant attention in computer vision and biometrics due to its broad practical applications [1]. Accurate age recognition holds irreplaceable value in scenarios such as precision marketing, healthcare, and public safety [2]. The application requirements of these areas impose strict demands on the accuracy and real-time performance of the technology, driving age recognition algorithms to iterate towards higher efficiency and robustness [3].

Although age recognition has become a research hotspot, the complexity and difficulty of facial age recognition far exceed those of ordinary image recognition tasks [4]. Firstly, the individual differences in the aging process are significant. Factors such as genetics, living environment, and dietary habits lead to variations in the rate of facial aging among different people, and people of different ages may also exhibit similar facial features [5]. Secondly, the ambiguity of adjacent age labels is difficult to avoid. Human facial features change continuously and gradually with age, and the differences in facial appearance between adjacent ages are often so subtle that they cannot be defined by a single label. It is difficult for traditional “either-or” classification or regression methods to capture this continuity [6]. Thirdly, external interference factors are complex. Changes in lighting, shooting angles, makeup, facial expressions, and even racial differences can all mask age characteristics, making the model susceptible to interference from irrelevant information. Moreover, the inherent biases of the datasets also exacerbate the challenges [7]. The existing public datasets mostly focus on specific age ranges or racial groups, making it difficult to cover the real distribution of all ages and multiple ethnic groups, resulting in limited generalization ability of the model.

The development of facial age recognition technology demonstrates the evolution from traditional machine learning to deep learning. Traditional machine learning methods extract hand-designed visual cues related to age, such as facial wrinkles, skin color, and eye area textures, and then use classic algorithms like regression and classification to complete age recognition [8]. However, the expressive ability of manual features is limited, making it difficult to capture the complex patterns of facial aging. Deep learning has significantly advanced the technology of facial age recognition. Convolutional Neural Networks (CNNs) have become the mainstream in this field because they can directly extract high-dimensional and complex age-related features from raw facial images [9]. However, CNNs rely on a large number of parameters and require a large amount of labeled data. In reality, the construction of large-scale face datasets is costly, labeling is labor-intensive, and existing datasets often have problems such as insufficient data and imbalanced distribution, which severely limit the generalization ability of the model. Moreover, the high-dimensional features extracted by CNNs often contain redundant information, which not only increases computational complexity but also reduces the running efficiency of the model [10].

There are significant differences in appearance among peers. This is because aging is a complex multi-dimensional biological process, and facial appearance is influenced by various factors such as genetics, lifestyle, environment, psychology and health. Therefore, relying solely on facial features for age recognition has inherent limitations. To overcome this limitation, research in recent years has shifted its focus to modeling the individual aging process. Geng [11] was the first to propose an individual aging spatial model, extracting feature parameters through traditional appearance models and predicting age based on the projection of the face in space. Zhang et al. [12] further proposed a Recurrent Age Estimation (RAE) model, inputting the facial feature sequence extracted by a CNN into a recurrent neural network (RNN), and improving the recognition accuracy by learning the aging temporal patterns. However, the RAE model still has significant shortcomings. On the one hand, the features extracted by the CNN contain a large amount of high-dimensional redundant information, which interferes with the model’s focus on key age features. On the other hand, the insufficient training data limits the modeling ability for the individual aging process, ultimately restricting the improvement of recognition performance.

In recent years, architectures that integrate multiple modules and attention mechanisms have become an important research focus for improving age recognition performance. For example, Zhao et al. [8] integrated multiple deep networks to effectively learn aging features of faces. Liu et al. [4] utilized local and global multi-attention mechanisms to capture global and local facial features, thereby enhancing facial age recognition. An et al. [13] improved age-related key region focusing by hybridizing spatial-channel attention modules. However, these methods rely on massive static image data rather than longitudinal temporal data of the same individual, making it difficult to capture the continuous dynamics of individual aging.

Meanwhile, technological advancements in related visual tasks also offer valuable references for age recognition. For instance, the feature fusion and attention mechanisms used in 3D face prediction [14] and reconstruction [15], the temporal modeling in human action recognition [16], and the feature dependency modeling in expression recognition [17] all demonstrate the effectiveness of attention mechanisms, feature dependencies, and feature optimization in learning complex visual patterns.

Although attention mechanisms, feature dependencies, and feature optimization have demonstrated effectiveness in general visual tasks, systematically integrating them into individual aging modeling remains a gap in current research. To address this, we propose an identification model based on Individual Aging Patterns (IAP). Compared to existing works, IAP synergistically optimizes age label distribution, manifold learning, and attention mechanisms. By constructing a label distribution covering reasonable adjacent ages, it can more accurately reflect the ambiguity of age concepts. The attention mechanism is utilized to highlight the key aging features, and low-dimensional discriminative features are extracted by combining CNN with manifold learning, thereby improving the accuracy and robustness of IAP.

This work makes the following contributions to facial age recognition:

1. A new age recognition method based on IAP is proposed. IAP overcomes the limitation of traditional age recognition, which relies solely on static facial features, by focusing on the dynamic temporal process of individual aging, so that it can reflect the personalized aging of individuals.

2. A feature optimization strategy combining CNN and manifold learning is constructed, which eliminates redundant information and retains the aging-related manifold structure.

3. Multiple collaborative optimization techniques are integrated: the distribution of age labels is improved, deep features are combined with manifold learning, and attention mechanisms are integrated with temporal modeling, enhancing the effectiveness of the features and the robustness of the model along multiple dimensions.

2. Related Work

Facial age recognition involves analyzing and extracting various features from facial images, such as facial geometric features, texture features, shape features, contour edges, and skin relaxation degree, to infer the age or age range of an individual. Feature extraction of age is the most crucial part in age recognition. Effective age features can enhance the performance of the age recognition system and reduce the complexity of the age recognition algorithm.

Traditional feature extraction methods mainly include anthropometric models, flexible models, appearance models, bio-inspired methods, local binary patterns (LBP), and Gabor filters [18]. Anthropometric models construct geometric models based on the distance features of key facial organs such as eyes, nose, and mouth, achieving age identification by quantifying shape changes, especially suitable for determining the age of minors. However, this model is sensitive to pose changes and can only effectively process frontal face images [19]. A flexible model integrates facial shape with grayscale/texture information, separately extracting shape parameters and global texture features, establishing a mapping function between age and feature parameters. Its advantage lies in considering both geometric and appearance information, but it lacks robustness against complex poses and lighting changes. Appearance models integrate global and local features (such as texture details, frequency characteristics, skin tone distribution, etc.) and combine them with geometric features for comprehensive description [20]. However, such methods are sensitive to factors like lighting and pose, and they struggle to capture subtle changes in facial shape and texture across different age groups (such as skin texture degradation and gradual signs of aging). This limits the stability and accuracy of the extracted features. Biologically inspired feature extraction methods, designed based on biological principles, mimic natural observation methods. LBP and Gabor filters are also commonly used methods for extracting texture features in facial recognition. These methods help capture the texture patterns of the face, enabling tasks related to describing and recognizing facial appearance. Traditional methods are sensitive to lighting and facial pose, and they find it difficult to capture deep age-related features, such as minor changes in skin texture and subtle differences in the aging process of the face, leading to unstable or inaccurate extracted features.

On the other hand, manifold learning maps the high-dimensional data of the original face images to a low-dimensional manifold space, achieving feature extraction and dimensionality reduction. Typical methods include principal component analysis (PCA) [21], locally linear embedding (LLE) [22], Isomap [23], etc. These methods compress the features while retaining the key information. However, manifold learning has inherent bottlenecks in terms of computational complexity, processing efficiency, and scalability for large-scale data, which limits its application in practical scenarios.

Deep learning, especially CNN, has made significant progress and achievements in the field of facial age recognition. Through multi-level feature extraction, CNN can extract high-level abstract features of faces, enabling the model to better capture and understand the complex changes related to age in face images [24]. Chen [25] trained and extracted face features using a set of CNNs and summarized the binary outputs of each CNN for final age prediction. Tripathi et al. [26] applied the improved ResNet to the training and testing of face images, improving the performance of the model. Akhand et al. [27] pre-trained the deep learning model and transferred it to facial age recognition, improving the accuracy of age recognition. Zhang et al. [28] employed a feature fusion method to combine the identity of the person and the face age features, comprehensively considering the relationships between the features to enhance the overall representation ability of the model and improve the accuracy of age recognition.

It is now widely acknowledged that individual differences significantly limit age recognition accuracy, driving the field toward more refined modeling strategies. A mainstream approach is to utilize attention mechanisms to capture the complexity of aging. Zhu et al. [29] used the ResNet-50 model to extract facial aging features by introducing an attention mechanism, further improving the accuracy of age recognition. Zhang et al. [30] used a multi-attention mechanism to capture rich facial information. Meanwhile, Transformers and their variants have also been introduced for facial age recognition. Liu et al. [4] adopted the transformer to capture global facial dependency features among local features of the face. Alhaeer et al. [31] used the transformer variant T2T-ViT network to extract rich facial features and then used multi-scale attention to extract age-related features.

Although deep learning has achieved remarkable results in the field of age recognition, its training heavily relies on large-scale labeled data. The currently available datasets have a limited sample size, which poses challenges for achieving high-precision age recognition. To alleviate the problems of data scarcity and imbalance, Zhang et al. [3] and Akgül [32] proposed improvement strategies, emphasizing the necessity of designing for data imbalance. Moreover, there are significant differences in the aging process of individuals, and the rate of changes in skin condition and facial features varies among different people, directly leading to large errors in the model’s age prediction. The insufficient understanding of individual aging patterns is one of the main reasons for the low accuracy of age recognition.

In fact, by modeling the individualized aging process from facial images, we can more accurately infer a subject’s true age and thereby predict their future appearance. However, learning an individual’s aging pattern requires multiple sequential facial images of the same person. Therefore, how to use a limited number of facial images to discover effective individual aging patterns, and thereby improve the accuracy and reliability of age recognition, has become a key issue that urgently needs to be addressed in this field.

3. IAP Facial Age Recognition Algorithm

3.1. Relevant Concepts

3.1.1. Improved Age Label Distribution

Human aging is a continuous and gradual process, resulting in a high degree of overlap in facial features between adjacent ages. This continuity makes it difficult for a single, absolute age label to describe a sample accurately. For example, a facial image of a person with a physiological age of 29 may be judged as 28 or 30 by different annotators, revealing the inherent ambiguity of age labels.

To quantify the ambiguity of age, researchers proposed age label distribution learning (LDL) [5]. Facial age is no longer a single label but is described by a class probability distribution structure, which indicates the degree of matching between the sample and multiple related ages. Specifically, for a facial image sample $x$ with physiological age $u$, this distribution quantifies the matching degree $d_x^y$ of age $y$ with $x$ (where $y$ is a related age), and it must satisfy three conditions: (1) The degree of description increases as $y$ gets closer to the physiological age $u$ (that is, the closer $y$ is to the actual age $u$, the larger $d_x^y$ is). (2) The degree of description of the real age $u$ for $x$ is the highest. (3) The sum of the degrees of description for all related ages is 1, and the value range of each degree of description is [0, 1].

The calculation of description degree usually employs the Gaussian distribution model:

$$d_x^y = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(y - u)^2}{2\sigma^2}\right),$$

where $u$ represents the physiological age of the sample, and $\sigma$ is the standard deviation of the Gaussian distribution. Over a discrete set of related ages, the values are normalized so that condition (3) holds. The bell-shaped characteristic of the Gaussian distribution precisely fits the pattern that the closer the age is, the higher the description degree is. Taking $u = 25$ and $\sigma = 3$ as an example, the description degree at age 25 is the highest, while the description degrees at ages 22 and 28 are lower and decrease further as the distance from 25 increases, naturally simulating the continuity and correlation of age characteristics. Each image not only provides information for the training of its own actual age but can also assist the model in learning the features of the transitional stage through the description degrees of adjacent ages, thereby alleviating the problem of insufficient training data.

The existing age label distribution has significant limitations. On one hand, the adjacent age ranges are often set unreasonably, which leads to information loss when the coverage is too narrow or introduces noise when it is too wide [7]. On the other hand, the degree of correlation between adjacent ages has not undergone systematic verification and quantitative analysis. To address these issues, the age label distribution is improved in two ways: the key parameter σ is tuned precisely, and the adjacent age range is set based on the characteristics of the physiological age u. This optimization scheme can more accurately capture the fuzzy characteristics and continuous change trends during the age transition process. While retaining the key feature information of the original data, it further alleviates the problem of insufficient training data, providing a more reliable data foundation for subsequent model training and application.
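As an illustration of the improved label distribution, the following minimal sketch builds a discrete Gaussian over a bounded window of adjacent ages and normalizes it so that the description degrees sum to 1. The function name, the `window` parameter, and the age bounds are assumptions made for this example and are not taken from the authors' implementation.

```python
import math

def gaussian_label_distribution(u, sigma, window=5, min_age=0, max_age=100):
    """Return {age: description degree} for ages in [u - window, u + window].

    u      : physiological (ground-truth) age of the sample
    sigma  : standard deviation of the Gaussian
    window : half-width of the adjacent-age range (assumed parameter)
    """
    lo = max(min_age, u - window)
    hi = min(max_age, u + window)
    ages = range(lo, hi + 1)
    # Unnormalized Gaussian scores centered on the physiological age u.
    scores = [math.exp(-((y - u) ** 2) / (2.0 * sigma ** 2)) for y in ages]
    total = sum(scores)
    # Normalize so that the description degrees sum to 1.
    return {y: s / total for y, s in zip(ages, scores)}

dist = gaussian_label_distribution(u=25, sigma=3.0)
peak_age = max(dist, key=dist.get)
```

With `u=25` and `sigma=3.0`, the peak falls at age 25 and the degrees at ages 22 and 28 are equal, which matches the symmetry described in the text. A smaller `sigma` concentrates more mass on the true age.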

3.1.2. Deep Facial Feature Manifold Learning

Manifold learning directly projects the original high-dimensional face image space using algorithms such as Isomap and LLE, attempting to reveal the hidden low-dimensional manifold structure within the original pixel data, thereby achieving dimensionality reduction and feature extraction. However, this direct projection method has obvious limitations. On the one hand, the original face images contain a large amount of redundant information (such as background noise, illumination changes, expression fluctuations, etc.) that interferes with the capture of the core structure (such as facial features, skin texture, etc.) by manifold learning. On the other hand, directly projecting onto the original space often fails to focus on the structures related to aging, making it difficult to separate out the low-dimensional manifold features related to age.

Deep manifold learning emerged to address this challenge [33]. The core idea is to combine the feature extraction capability of deep learning with the low-dimensional structure mining ability of manifold learning. CNNs extract abstract features from low-level to high-level through multiple convolutional layers, pooling, nonlinear activation, and finally output high-dimensional task-related feature vectors through fully connected layers. However, this method has a key limitation: there is redundant information in the high-dimensional features and the intrinsic geometric structure of the feature space is ignored. Directly using the features extracted by CNNs for age recognition will increase computational complexity and is prone to overfitting. At the same time, blindly reducing the dimension may also lose key information. In response to the problems with the features extracted by CNNs, a manifold constraint is designed to ensure that the extracted features are adapted to the geometric structure of the low-dimensional manifold. Therefore, after extracting features through CNNs, manifold learning is introduced to reduce the high-dimensional features and embed them into the low-dimensional space, removing noise and retaining core correlations, thereby obtaining low-dimensional manifold features with strong discriminative ability.

Suppose the original face images are $X = [x_1, x_2, \ldots, x_n] \in \mathbb{R}^{D \times n}$, where $D$ is the dimension of the original face space, $n$ is the number of face images, and the real age labels are $y = [y_1, y_2, \ldots, y_n]$. We want to extract a high-dimensional deep face feature space $F \in \mathbb{R}^{m \times n}$ through the deep learning model, where $m$ is the dimension of the deep facial feature space extracted by CNNs from the original facial feature space. We then want to learn a low-dimensional manifold embedded in $F$ to obtain a manifold aging feature subspace $Z \in \mathbb{R}^{d \times n}$, with $d \ll m$. More specifically, our learning objective is to find $F$ and the projection matrices that embed the deep features in $Z$.

3.1.3. Attention Mechanism

The attention mechanism simulates the human capacity for selective perception and information processing. It enables the efficient handling of tasks within complex perceptual, cognitive, and decision-making processes by dynamically allocating computational resources, focusing on core information, and filtering out irrelevant interference. Within a model, it flexibly captures long-range dependencies in sequential data by assigning attention weights to different parts of the input, thereby providing a precise mechanism for weighting information during aggregation.

The attention mechanism usually includes the following three parts:

1. Calculate attention weights. Based on the input features, the attention weight for each position is calculated, representing its importance relative to the current context. In mathematical terms, let $X = [x_1, x_2, \ldots, x_N]$ denote an input sequence of $N$ elements. Given a query vector $q$ that retrieves information from $X$, the relevance between each $x_i$ and $q$ is evaluated using a scoring function $S$. The attention weight $\alpha_i$ is obtained by applying the softmax function to these relevance scores: $\alpha_i = \exp(S(x_i, q)) / \sum_{j=1}^{N} \exp(S(x_j, q))$.

2. Based on the calculated weights, perform a weighted summation of the input feature sequence to obtain the final feature vector.

3. The weighted feature vectors are used as context vectors and provided to subsequent neural network models for further processing.

The weighted aggregation result serves as a context vector, retaining the core information relevant to the task from the input, providing concise and crucial feature inputs for subsequent neural networks, supporting deeper reasoning and decision-making.
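The three steps can be sketched in a few lines of plain Python. The dot product is used as the scoring function here purely as an assumption for illustration, since the text does not fix a particular choice of scoring function; the function names are likewise hypothetical.

```python
import math

def dot(a, b):
    """Dot-product score between two equal-length vectors (assumed scoring function)."""
    return sum(ai * bi for ai, bi in zip(a, b))

def attention(X, q):
    """Return (weights, context) for input vectors X and query vector q."""
    # Step 1: relevance scores, then softmax (shifted by the max for numerical stability).
    scores = [dot(x, q) for x in X]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Step 2: weighted sum of the input vectors gives the context vector.
    dim = len(X[0])
    context = [sum(w * x[k] for w, x in zip(weights, X)) for k in range(dim)]
    # Step 3: the context vector is what a downstream layer would consume.
    return weights, context

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
q = [1.0, 1.0]
weights, context = attention(X, q)
```

In this toy input the third vector is most aligned with the query, so it receives the largest weight and dominates the context vector.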

3.2. Learning of IAP

Individual aging is a complex process influenced by multiple factors. When judging age, humans not only rely on single static facial features but also combine the unique dynamic patterns of individual aging, inferring the laws of aging by comparing temporal facial changes. This indicates that age recognition needs to rely on the temporal evolution of an individual’s historical facial features, learning aging patterns from dynamic changes, rather than relying solely on a single static image.

To model this process, the temporal changes in facial features are treated as a dynamic sequence $\{(x_t, y_t)\}_{t=1}^{T}$. Here, $x_t$ is the facial feature vector at time $t$, and $y_t$ is the corresponding age. Aging is a continuous process, meaning that $y_t$ is determined by both historical states and the current features. Therefore, this aging pattern can be represented by a recurrent function:

$$y_t = f(x_t, h_{t-1}; \theta) \qquad (4)$$

The function $f$ models the dynamics of the aging process, and its parameter vector $\theta$ needs to be learned from the individual age time-series data. $h_{t-1}$ represents the age state at time $t-1$, which captures the influence of historical information on the current age.

The learning objective for the individual aging pattern is to optimize the parameter vector $\theta$ in Equation (4), enabling the model to accurately predict the current age $y_t$ from the input sequence of facial features $(x_1, \ldots, x_t)$ given the corresponding age sequence $(y_1, \ldots, y_t)$.

Long Short-Term Memory (LSTM) networks, a specialized type of Recurrent Neural Network (RNN), are particularly suited for modeling the continuous dynamics of individual aging due to their ability to capture long-term dependencies in sequential data. Given that aging involves long-range correlations in facial feature changes, LSTM’s gating mechanisms effectively mitigate the gradient vanishing problem of traditional RNNs and facilitate stable learning over extended time periods. Therefore, using LSTM to model the IAP effectively captures the evolutionary pathway from historical features to the current age, providing a dynamic foundation for age prediction that is consistent with physiological aging characteristics.
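For reference, the gating mechanism mentioned above is usually written as follows. This is the standard LSTM formulation, given here as background rather than as the exact variant used in IAP. The symbols are assumptions of this sketch: $x_t$ is the input feature at step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma_g$ the logistic sigmoid (written with a subscript to distinguish it from the Gaussian $\sigma$), and $\odot$ element-wise multiplication.

```latex
\begin{aligned}
f_t &= \sigma_g\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma_g\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{(input gate)} \\
o_t &= \sigma_g\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{(output gate)} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{(hidden state)}
\end{aligned}
```

The additive update of $c_t$ is what lets gradients flow across many time steps, which is the property the text relies on when it says that LSTM mitigates the vanishing gradient problem of plain RNNs.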

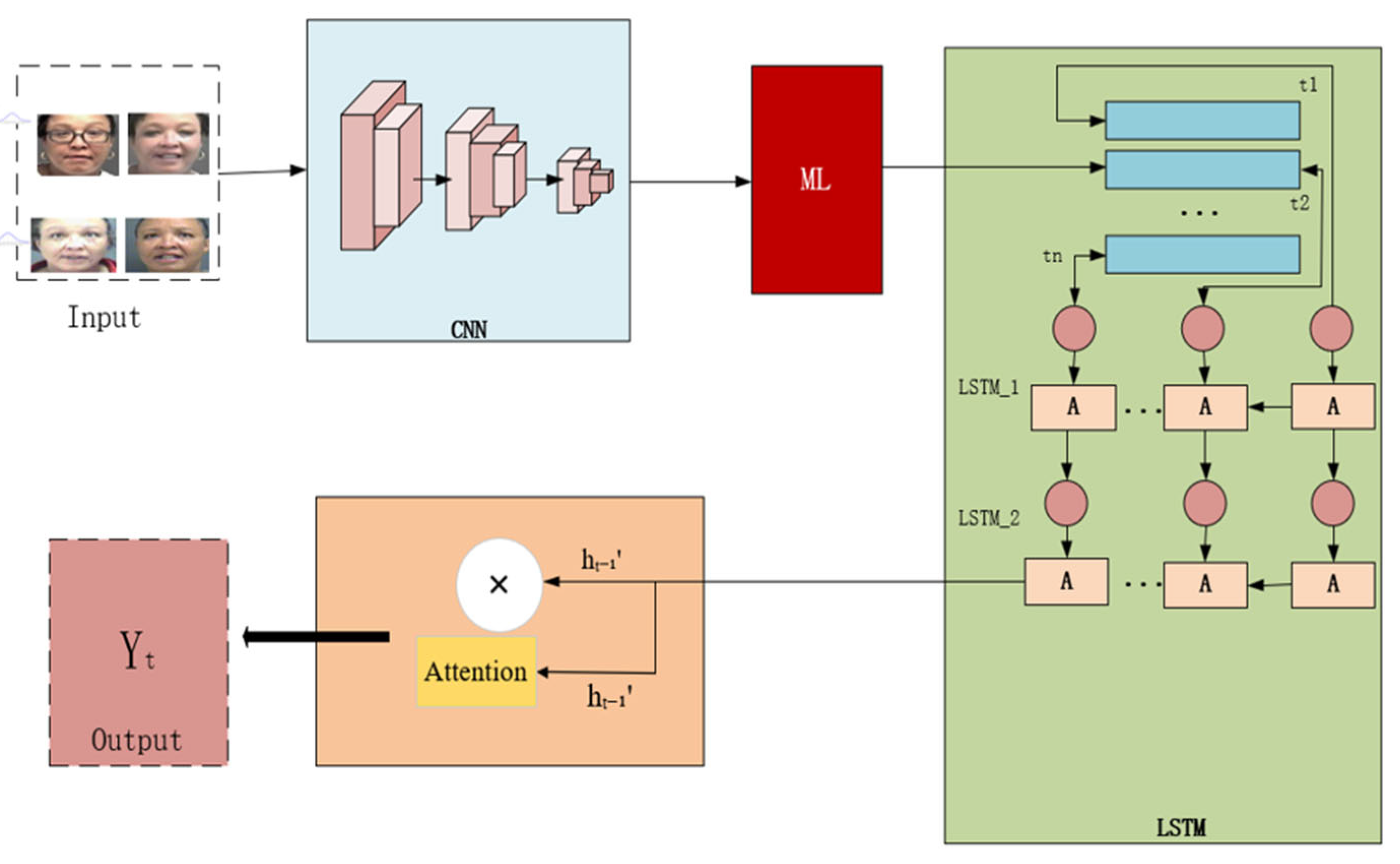

3.3. IAP Algorithm Framework

The proposed IAP algorithm learns personalized aging trajectories from an individual’s facial image sequences for age estimation. As illustrated in Figure 1, the framework first preprocesses the input raw facial images and generates an improved label distribution that covers adjacent ages. This leverages the inherent ambiguity of age labels to provide richer supervisory information. Subsequently, high-dimensional deep features are extracted from each image using a CNN. Manifold learning is then applied to eliminate redundancy and reduce dimensionality, yielding refined, age-related low-dimensional features. These features are sorted along each individual’s timeline to form the sequence.

This sequence is fed into an LSTM to capture the dynamic process of individual aging. Within the LSTM, an attention mechanism is integrated, which enables the model to focus on the most critical features for current age prediction by dynamically evaluating and weighting the hidden states of historical time steps, thereby generating a weighted context vector. Finally, based on this context vector, a fully connected layer outputs the predicted age value. In this way, IAP synergistically integrates label distribution, deep feature extraction, manifold learning, and attention mechanism to collectively achieve precise individualized age recognition.
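The step that turns per-image features into per-individual sequences can be sketched as below. The record layout `(subject_id, age, feature_vector)` and the function name are assumptions for this example; the feature vectors stand in for the low-dimensional features produced after manifold learning.

```python
from collections import defaultdict

def build_sequences(records):
    """Group (subject_id, age, feature_vector) records by subject, sorted by age."""
    by_subject = defaultdict(list)
    for subject_id, age, feature in records:
        by_subject[subject_id].append((age, feature))
    sequences = {}
    for subject_id, items in by_subject.items():
        # Sort each individual's images chronologically to form the aging sequence.
        items.sort(key=lambda item: item[0])
        sequences[subject_id] = {
            "ages": [age for age, _ in items],
            "features": [feature for _, feature in items],
        }
    return sequences

records = [
    ("s1", 30, [0.3]),
    ("s2", 18, [0.9]),
    ("s1", 12, [0.1]),
    ("s1", 21, [0.2]),
]
sequences = build_sequences(records)
```

Each entry of `sequences` then corresponds to one input sequence for the recurrent model, with the ages serving as the per-step targets.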

4. Experiments and Results Analysis

4.1. Datasets

We evaluated our method on two public datasets with longitudinal facial images, FG-NET [34] and MORPH [35], which are well-suited for building individual age time-series models.

FG-NET, from the University of Chicago, contains 1002 images of 82 subjects (ages 0–69), averaging 12 images per person over spans of up to 18 years. The sequences of each individual, documenting the transition from childhood to later life, provide a continuous record of aging, ideal for capturing its gradual trajectory. MORPH, from the University of Cape Town’s CIPR Lab, is larger and more diverse. It includes 55,134 images from 13,000 subjects (ages 16–77), with an average of 4 images per person collected between 2003 and 2007. By race, 76.5% of the images are of Black subjects, 17.5% are of White subjects, and 6.0% are of subjects of other races (including Hispanic, Asian, etc.). The distribution of various races and age groups is shown in Table 1. Despite the racial imbalance, MORPH’s broad age range and large population base still make it a valuable resource for learning diverse aging patterns and validating model generalization capabilities. In this study, we fully considered this data distribution characteristic and specifically evaluated the model’s performance on different racial subsets in subsequent analyses (Section 4.8).

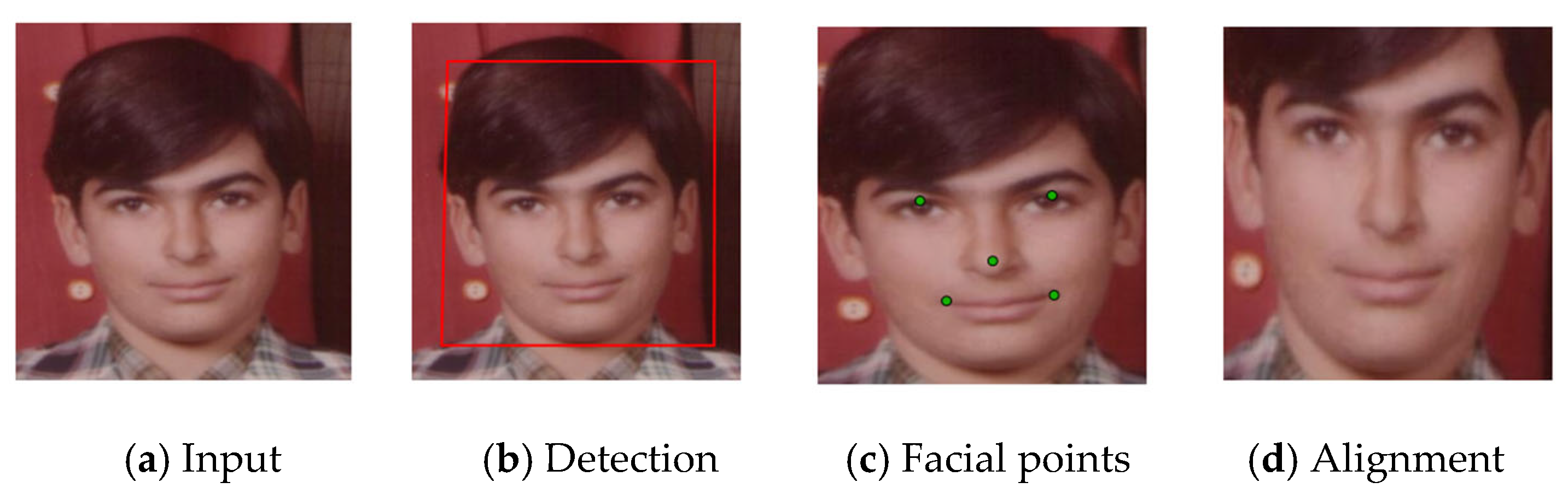

Facial images are preprocessed with the DPM model [7]. The pipeline, as shown in Figure 2, consists of detection, alignment, cropping, and normalization. First, the facial region and five key landmark points are detected: the centers of the left and right eyes, the tip of the nose, and the left and right corners of the mouth. The face is then aligned to an upright pose based on these landmarks, and the central facial region is cropped and normalized.
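One common way to perform the alignment step is to rotate the image so that the line through the two eye centers becomes horizontal. The sketch below computes that rotation from landmark coordinates only. It is an assumed illustration of the idea, not the DPM-based procedure itself, and the function names and sample coordinates are hypothetical.

```python
import math

def roll_angle_degrees(left_eye, right_eye):
    """Angle (in degrees) of the eye line relative to the horizontal axis."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))

def rotate_point(point, center, angle_degrees):
    """Rotate a 2D point about center using the standard rotation matrix."""
    theta = math.radians(angle_degrees)
    x, y = point[0] - center[0], point[1] - center[1]
    xr = x * math.cos(theta) - y * math.sin(theta)
    yr = x * math.sin(theta) + y * math.cos(theta)
    return (xr + center[0], yr + center[1])

# Hypothetical eye-center landmarks (x, y) in pixel coordinates.
left_eye, right_eye = (30.0, 40.0), (70.0, 50.0)
angle = roll_angle_degrees(left_eye, right_eye)
center = ((left_eye[0] + right_eye[0]) / 2.0, (left_eye[1] + right_eye[1]) / 2.0)

# Rotating by the negative of the measured angle levels the eye line.
aligned_left = rotate_point(left_eye, center, -angle)
aligned_right = rotate_point(right_eye, center, -angle)
```

After the rotation both eye centers share the same vertical coordinate and their distance is unchanged, so a fixed crop around the landmarks yields consistently framed faces.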

4.2. Evaluation Criteria

The performance of the IAP is evaluated using Mean Absolute Error (MAE) and Cumulative Scores (CS). The formula for calculating MAE is

$$\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| \hat{y}_i - y_i \right|,$$

where $N$ is the number of test face samples, $y_i$ is the actual age, and $\hat{y}_i$ is the predicted age. The smaller the MAE, the closer the model’s predicted age is to the actual age, indicating better performance of the model.

CS represents the accuracy of different error values measured in years, with error levels ranging from 0 to 10, defined as follows:

$$\mathrm{CS}(l) = \frac{N_{e \le l}}{N} \times 100\%,$$

where $N_{e \le l}$ is the number of test images on which the predicted age has an absolute error no higher than $l$ years (the error level), and $N$ is the total number of test images.
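Both criteria are straightforward to compute from paired lists of actual and predicted ages. The following sketch uses a small made-up set of ages purely to show the arithmetic; the function names and sample values are illustrative and are not results from the paper.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between predicted and actual ages."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def cumulative_score(y_true, y_pred, level):
    """Percentage of samples whose absolute error is at most `level` years."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(p - t) <= level)
    return 100.0 * hits / len(y_true)

# Made-up ages for illustration only.
y_true = [25, 40, 33, 18]
y_pred = [27, 39, 33, 24]

mae = mean_absolute_error(y_true, y_pred)
# CS curve over error levels 0..10, as used in the evaluation criteria.
cs_curve = {level: cumulative_score(y_true, y_pred, level) for level in range(0, 11)}
```

Here the absolute errors are 2, 1, 0, and 6 years, so the MAE is 2.25 and the CS curve rises from 25% at level 0 to 100% at level 6.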

4.3. Network and Training

Various CNN architectures, such as AlexNet, VGGNet, ResNet, Inception V4, DenseNet, and EfficientNet, are commonly used in computer vision and image processing. AlexNet is characterized by its simplicity and limited depth. Although VGGNet has a simple architecture with 16 or 19 layers, its large number of parameters results in limited generalization ability when trained on small datasets. Deeper networks including ResNet, DenseNet, and EfficientNet can contain over 100 layers and extensive parameters, which often lead to overfitting, gradient vanishing, or gradient explosion on small-scale datasets. In comparison, Inception V4 captures multi-scale features via different convolutional kernel sizes and pooling operations, and introduces residual connections to alleviate gradient issues, thereby improving both training stability and generalization. Given these attributes, Inception V4 is employed in this study for facial feature training and extraction.

We conducted the training using the TensorFlow 1.13.0 framework in the PyCharm IDE, with hardware acceleration from an NVIDIA RTX3060-12G GPU. We randomly partitioned both MORPH and FG-NET, allocating 80% for training and 20% for testing. The network initialization parameters for Inception V4 on each dataset are provided in Table 2.

The mini-batch sizes are set differently for the two datasets to accommodate their distinct scales and to facilitate effective sequential learning. For the large-scale MORPH, a standard mini-batch size of 80 is used. For the FG-NET, a smaller batch size of 2 is adopted to prevent overfitting, provide a more stable gradient direction, and enable the model to learn more thoroughly from the limited samples. This is crucial for using LSTM models to learn coherent individual aging patterns.

4.4. Configuration of Label Distribution Parameters

Following the analysis in Section 3.1.1 and reference [5], the label distribution for each face image is initialized as a discrete Gaussian distribution that covers a reasonable range of related ages. Experimental comparison shows that the best performance is obtained with σ = 3.0 for MORPH and σ = 1.5 for FG-NET, with the label distribution restricted to [u − 5, u + 5].

4.5. Selection of Manifold Learning Methods

To justify the choice of manifold learning method, we conducted a comparative analysis of three representative techniques: principal component analysis (PCA), locally linear embedding (LLE), and Isomap [33]. These methods are evaluated within the same Inception V4 framework and compared by MAE.

As shown in Table 3 and Table 4, PCA achieves the lowest MAE across nearly all evaluated dimensions on both MORPH and FG-NET, demonstrating robust and stable age recognition accuracy. This advantage stems from the core characteristic of PCA, which preserves the global variance of the data and is therefore consistent with the fact that facial aging is a continuous and global process. In contrast, nonlinear methods like LLE and Isomap, which focus on local neighborhoods, showed greater performance volatility and sensitivity to parameters, making them less reliable. Therefore, we adopt PCA in our framework.

For facial age recognition, CNN-derived features are often discriminative yet redundant and noisy. The application of PCA specifically mitigates these issues. It reduces the dimensionality of the original features, eliminating redundant information while preserving components critical for age estimation. This process not only lowers the costs of data storage and subsequent computation but also reduces the risk of overfitting by simplifying the feature space. Ultimately, these enhancements lead to a more accurate and robust age recognition system.

Inception V4 employs a global average pooling (GAP) layer on the final convolutional feature maps to produce a 1536-dimensional feature vector. Subsequently, PCA is applied to this vector, reducing its dimensionality to 300 for MORPH and 291 for FG-NET while preserving 98% of the original feature information. This approach maintains data fidelity while significantly enhancing computational efficiency.
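If "preserving 98% of the original feature information" is read as retaining 98% of the explained variance, which is an assumption of this sketch, the number of principal components can be chosen from the eigenvalues of the feature covariance matrix as shown below. The function name and the toy eigenvalues are illustrative only.

```python
def components_for_variance(eigenvalues, target=0.98):
    """Smallest k whose top-k eigenvalues explain at least `target` of the variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative = 0.0
    for k, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= target:
            return k
    return len(ordered)

# Toy eigenvalue spectrum; a real one would come from the 1536-dimensional CNN features.
eigenvalues = [50.0, 30.0, 15.0, 4.0, 1.0]
k = components_for_variance(eigenvalues, target=0.98)
```

For the toy spectrum the cumulative ratios are 0.50, 0.80, 0.95, and 0.99, so four components are needed to reach the 98% threshold. Applied to the two datasets' feature spectra, the same rule would give a different `k` for each, in line with the 300 and 291 dimensions reported above.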

The individual sequence features, after dimensionality reduction via PCA, were fed into the LSTM network to learn individual aging patterns and perform age recognition. In our experiments, we randomly split the MORPH and FG-NET datasets by subject, using 80% for training and 20% for testing. The initialization parameters of the LSTM are listed in Table 5.
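One way to realize a subject-wise split is sketched below. It is an interpretation rather than the authors' protocol: it assumes that 80% of the subjects (not 80% of each subject's images) go to training, so that no individual appears in both sets, and that each subject's images are ordered by age to form a sequence. The tuple layout `(subject_id, image_path, age)` and the function name are hypothetical.

```python
import random
from collections import defaultdict

def split_by_subject(samples, train_fraction=0.8, seed=0):
    """Split (subject_id, image_path, age) samples into subject-disjoint train/test sets.

    Returns two dicts mapping subject_id to that subject's images sorted by age.
    """
    by_subject = defaultdict(list)
    for subject_id, image_path, age in samples:
        by_subject[subject_id].append((image_path, age))

    # Shuffle subject ids reproducibly, then cut at the requested fraction
    subject_ids = sorted(by_subject)
    random.Random(seed).shuffle(subject_ids)
    n_train = round(train_fraction * len(subject_ids))

    def ordered(ids):
        # Age-ordered sequence per subject, as input for a sequence model
        return {sid: sorted(by_subject[sid], key=lambda item: item[1]) for sid in ids}

    return ordered(subject_ids[:n_train]), ordered(subject_ids[n_train:])

# Toy data: 10 subjects with 3 images each (file names are placeholders)
samples = [
    (f"subject_{s:02d}", f"img_{s:02d}_{age}.jpg", age)
    for s in range(10)
    for age in (40, 20, 30)
]
train, test = split_by_subject(samples)
```

Splitting at the subject level keeps all images of one person on the same side of the split, which avoids evaluating on individuals whose aging sequence was partly seen during training.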

4.6. Parameter Sensitivity Analysis

To verify the robustness of the model’s parameter selection, we conducted a parameter sensitivity analysis building on previous systematic studies [5,33].

We conducted extensive experiments on MORPH and FG-NET, with σ ranging from 0.5 to 5.0. The results showed that MORPH and FG-NET achieved the lowest MAE at σ = 3.0 and σ = 1.5, respectively, and that performance declined as σ deviated from these optimal values, which supports the selected values. This behavior is related to the characteristics of the datasets. MORPH has a wide age distribution and high diversity, so a larger σ is needed to smooth the label distribution, whereas FG-NET has a small sample size and concentrated age variations, so a smaller σ provides more targeted supervision signals.

For MORPH, retaining 98% of the information (corresponding to 300 dimensions) directly yields the best performance (MAE = 1.54). For FG-NET, although the lowest MAE (2.48) was achieved at 20 dimensions, we ultimately chose the 291 dimensions corresponding to 98% of the information (MAE = 3.70). This decision prioritizes the integrity of the discriminative information in order to avoid the overfitting risk caused by excessive dimensionality reduction on small-scale datasets, and keeps the processing strategy consistent with that used for MORPH, ensuring the overall uniformity of the methodology. This trade-off reflects our emphasis on the generalization ability of the model and the rigor of the method.

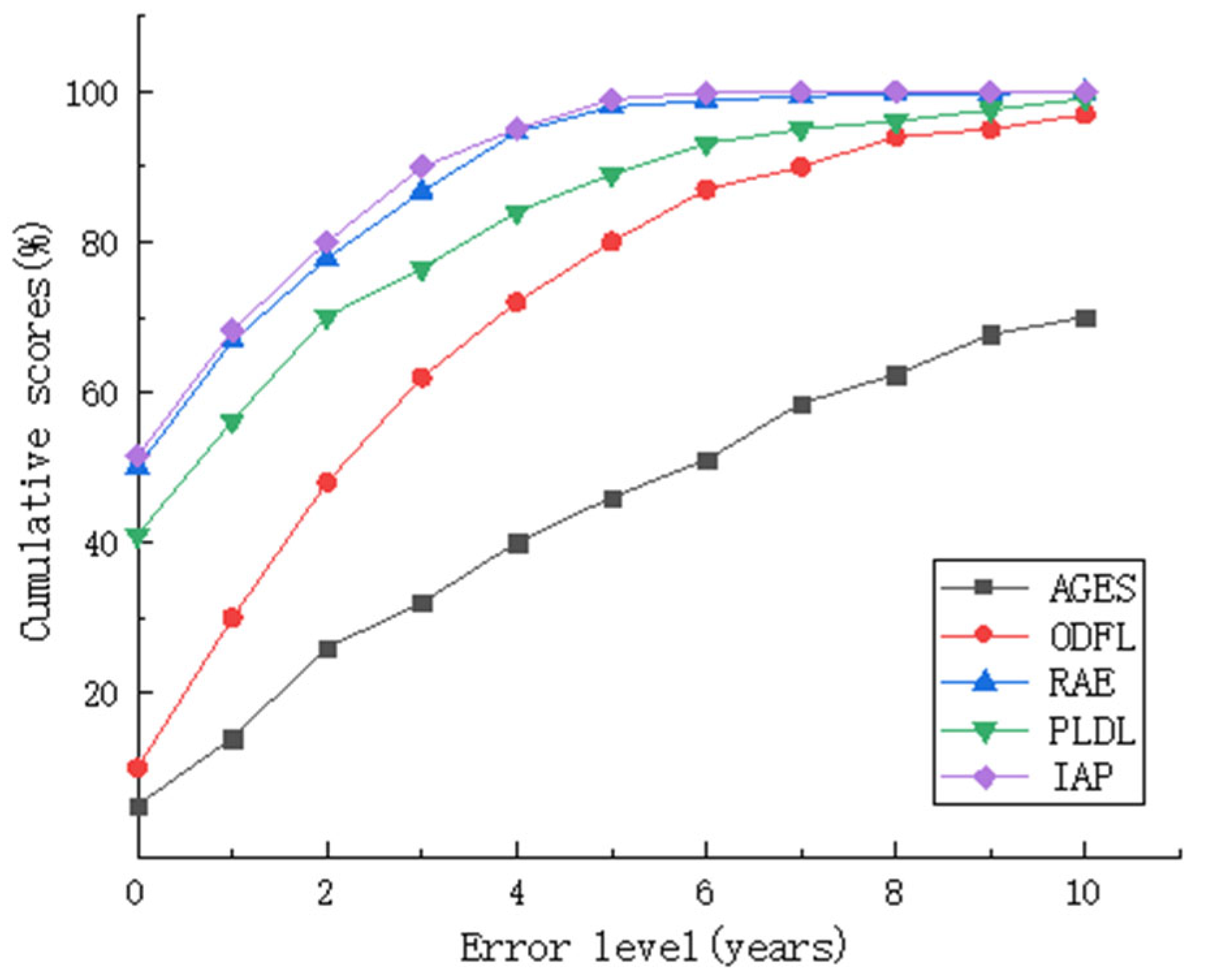

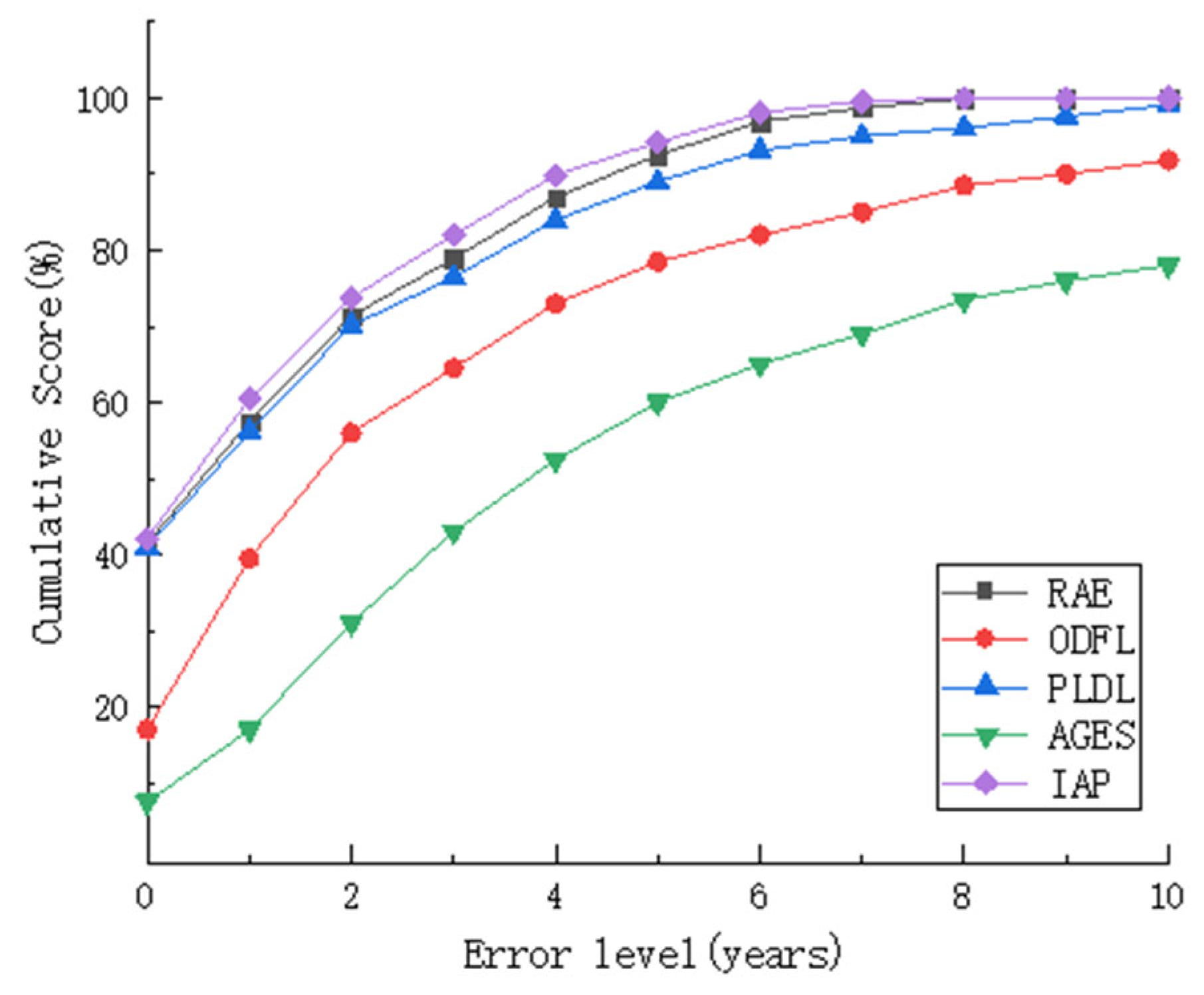

4.7. Experimental Results and Analysis

The proposed IAP was compared against existing approaches, including AGES [11], RAE [12], PLDL [5], ODFL [36], LRA-GNN [30], and GroupFace [3]. As shown in Table 6, the IAP model achieved the lowest MAE values of 1.07 on MORPH and 1.92 on FG-NET, outperforming all benchmark methods. Among the compared methods, AGES [11] models aging patterns by building an AAM-based feature subspace. ODFL [36] relies on CNN features. PLDL [5] uses distribution learning across adjacent ages. RAE [12] combines CNN and LSTM to learn aging patterns. LRA-GNN [30] divides the face into multiple patches, uses a multi-head attention mechanism to capture potential relationships between nodes, and employs residual networks to extract facial features for age recognition. GroupFace [3] uses an enhanced multi-hop attention graph convolutional network to extract discriminative features of different groups and employs reinforcement learning to address the class imbalance problem.

The experimental findings, comprising the MAE values in Table 6 and the CS curves in Figure 3 and Figure 4, collectively demonstrated that the proposed IAP achieved state-of-the-art performance on both benchmark datasets. The results revealed a noticeable performance disparity between the MORPH and FG-NET datasets, primarily stemming from differences in their data characteristics. MORPH offers a substantial number of individual samples distributed across diverse age groups and demographic attributes (e.g., gender and ethnicity), thereby closely mirroring real-world facial aging patterns and enabling the learning of multi-factor aging dynamics. In contrast, FG-NET is limited in scale, which restricts the model’s capacity to learn refined personalized aging features and results in relatively lower accuracy.

To provide a more precise performance analysis, Table 7 lists the CS of the IAP at different error levels in detail. The quantitative results corroborate the curve trends shown in Figure 3 and Figure 4, confirming the high accuracy and robustness of the IAP in age recognition.
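For reference, the two evaluation metrics can be computed as in the following sketch. It assumes the definitions commonly used in the age estimation literature: MAE is the mean absolute difference between predicted and true ages, and CS at error level θ is the percentage of test samples whose absolute error does not exceed θ years. The ages below are made-up toy values, not results from the paper.

```python
def mean_absolute_error(true_ages, predicted_ages):
    """Mean absolute difference between predicted and true ages, in years."""
    errors = [abs(t - p) for t, p in zip(true_ages, predicted_ages)]
    return sum(errors) / len(errors)

def cumulative_score(true_ages, predicted_ages, max_error):
    """Percentage of samples whose absolute error is at most max_error years."""
    hits = sum(1 for t, p in zip(true_ages, predicted_ages) if abs(t - p) <= max_error)
    return 100.0 * hits / len(true_ages)

# Toy example with absolute errors of 1, 1, 3 and 0 years
true_ages = [25, 31, 47, 60]
predicted_ages = [26, 30, 50, 60]

mae = mean_absolute_error(true_ages, predicted_ages)
cs_by_level = {level: cumulative_score(true_ages, predicted_ages, level) for level in range(6)}
```

Plotting `cs_by_level` against the error level gives a CS curve of the kind compared across methods: a curve that rises faster indicates that more predictions fall within a small error tolerance.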

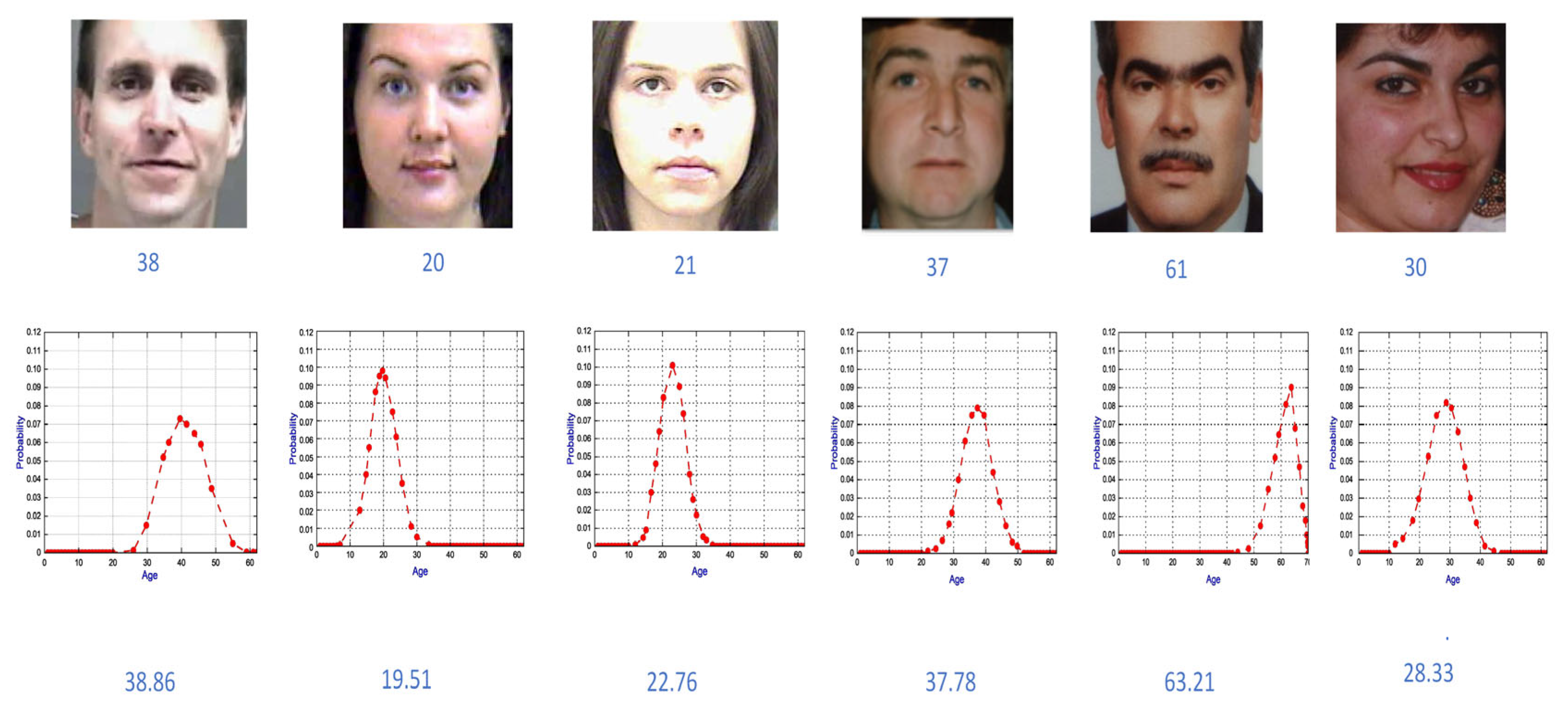

The visualization of prediction results illustrates the model’s robust cross-age generalization capability. As shown in Figure 5, on both MORPH and FG-NET, the model maintains a low prediction error for a wide range of samples from young adults to the elderly, with predictions consistent with the true ages.

The effectiveness of the IAP stems from its integrated architectural design, which synergistically combines the following key components:

1. The improved label distribution more accurately reflects the polysemy of age and more fully explores the implicit ambiguous information in facial images.
2. By using multiple layers of convolution to gradually extract features from the image, rich visual feature representations can be learned.
3. Manifold learning learns the underlying manifold structure from data, which helps models better understand complex data features.
4. Integrating the attention mechanism into the learning process of IAP enables the model’s predictions to more closely align with the actual aging trajectories of individuals, significantly enhancing the accuracy and reliability of the recognition task.

The IAP collaboratively optimizes the three core aspects of feature learning, individual modeling, and data utilization, providing a scientific paradigm for age recognition technology.

4.8. Model Generalization Ability Analysis

4.8.1. Generalization Ability Within the Dataset

To evaluate the generalization ability of the IAP, we conduct group performance analysis based on the MORPH.

Table 8 shows the MAE performance of the model on different subsets of races and age groups.

Prediction results on subsets of MORPH showed that the performance of the IAP model exhibited systematic differences consistent with the data distribution and biological characteristics. It performed best in the African group, for which data are sufficient (MAE = 1.02), and although performance decreased slightly in groups with less data, it still maintained a high level of recognition ability. Across age groups, the model performed best in the 21–40 age range, where facial features are stable (MAE = 1.46–1.51), and its performance degraded, as expected, in the elderly group, for which data are scarce. This error distribution mainly stems from the inherent sample bias in the dataset rather than from defects in the model architecture, which supports the effectiveness of the IAP framework under balanced data conditions and its basic generalization ability in challenging scenarios.

4.8.2. Generalization Ability Across Different Datasets

We rigorously evaluated the model’s robustness through cross-dataset validation. The results revealed an MAE of 4.85 when training on MORPH and testing on FG-NET, and 5.91 for the reverse scenario. These represent a significant decline from the within-dataset performance (MORPH: 1.07, FG-NET: 1.92). We attribute this drop to the substantial domain shift between the datasets, including disparities in age distribution, image quality, ethnicity, and acquisition conditions, which collectively pose a formidable challenge for the model.

However, a closer analysis reveals a positive aspect of our model. Despite the domain shift, when trained on MORPH and tested on FG-NET, its MAE (4.85) is still lower than the within-dataset FG-NET result of some traditional methods, such as AGES [11] (6.22). This indicates that, by learning individualized aging patterns, IAP is more robust than methods relying on handcrafted features.

4.9. Ablation Experiments

We conducted a series of ablation studies to evaluate the contribution of each key component in the IAP framework. Starting from a baseline that used a CNN (Inception V4) and single age labels, we incrementally integrated our proposed modules: improved label distribution learning (ILDL), manifold learning (ML), LSTM for temporal modeling, and an attention mechanism.

Table 9 summarizes the configurations and corresponding MAE results on the MORPH and FG-NET for all compared models.

The ablation results led to the following observations:

1. Impact of ILDL. Compared to the single-label baseline, introducing the ILDL strategy reduced the MAE by 0.28 and 0.60 on the MORPH and FG-NET, respectively. This demonstrates that our proposed label distribution effectively captures the ambiguity and continuity of age labels, thereby mitigating issues arising from insufficient training data.
2. Impact of ML. Incorporating ML for feature dimensionality reduction and optimization led to a further decrease in MAE, particularly notable on the MORPH dataset. This indicates that ML successfully extracts a low-dimensional, age-related manifold structure from the high-dimensional CNN features, effectively eliminating redundancy and enhancing feature discriminability.
3. Impact of LSTM. Integrating LSTM to learn individual aging patterns from sequential data resulted in a substantial performance improvement. This confirms the importance of modeling the temporal dynamics of aging for accurate age recognition.
4. Impact of attention mechanism. The introduction of the attention mechanism within the LSTM (forming the IAP) yielded the most significant performance gain, achieving the lowest MAE on both datasets. This verifies that the attention mechanism enables the model to dynamically focus on key features that are more relevant to age progression during sequence modeling, thereby more accurately capturing individual aging patterns.

In conclusion, the ablation studies systematically confirm the individual and collective contributions of each proposed module. The results highlight the core value of our integrated design, wherein the synergistic integration of improved label distribution, manifold learning, LSTM-based temporal modeling, and an attention mechanism is responsible for the superior performance of the IAP framework.

5. Conclusions

This paper addresses the challenges in facial age recognition posed by the complexity and individuality of the aging process by proposing a novel model based on IAP. The proposed framework extracts facial features via CNN, refines them through manifold learning to retain highly discriminative information, and models the temporal progression of aging using recurrent neural networks. It further incorporates an attention mechanism to focus on age-sensitive facial regions and an improved label distribution strategy to capture the ambiguity inherent in age labels, thereby mitigating data scarcity. By collaboratively optimizing feature learning, temporal modeling, and data utilization, IAP establishes a scientific paradigm that significantly enhances recognition accuracy.

However, this study also has certain limitations that point to valuable directions for future research. First, the performance of the IAP is contingent on the availability of longitudinal facial sequences, which are scarce and costly to collect compared to static image datasets. This data dependency may restrict its applicability in scenarios where only a single facial image is available. Second, the integration of LSTM and attention mechanisms, while crucial for capturing temporal dynamics, increases the computational complexity and inference time compared to simpler CNN-based models. This limits its deployment in real-time applications requiring immediate feedback. Third, although the model demonstrates strong generalization ability within the dataset, its performance exhibits a drop in cross-dataset testing and may be affected by inherent biases in the data, such as the uneven distribution of dataset size, age, race, and gender.

Future work will focus on addressing these limitations. We plan to explore data augmentation techniques and transfer learning to reduce reliance on large longitudinal datasets. To tackle computational cost issues, we will investigate lightweight models to improve inference efficiency. Additionally, we will actively incorporate more diverse and balanced datasets and explore advanced architectural innovations such as Transformers to enable more efficient long-range dependency modeling, thereby further enhancing the model’s generalization ability and practical application value.