1. Introduction

Inclement weather poses significant challenges for image perception in self-driving systems, as cameras are critical for object detection, lane recognition, and traffic sign interpretation [1]. Adverse conditions such as rain, snow, fog, or sleet degrade image quality through raindrop-obscured lenses, snow accumulation, fog-induced contrast loss, or glare from wet surfaces, introducing noise and distortion that degrade computer vision algorithm performance. Researchers have explored real-time image enhancement using convolutional neural networks (CNNs) or generative adversarial networks (GANs) to enhance raw camera feeds, alongside training models on synthetic or augmented datasets that simulate weather-corrupted visuals [2]. However, dynamic or extreme conditions continue to challenge these methods.

Current state-of-the-art self-driving systems are black-box ML models that provide limited insight into their decision-making processes. Under uncertain conditions, such systems can produce outputs that endanger passengers and nearby individuals. In high-risk scenarios where lives are at stake, safe decision-making requires high certainty in the accuracy of the information used. Uncertainty quantification addresses this by enabling models to indicate their confidence levels, allowing users to determine when to trust outputs and when models are likely to struggle.

These problems are exacerbated by the inherent uncertainty introduced by adverse weather, which is rarely quantified or leveraged effectively in existing approaches. Without explicit modeling of this uncertainty, segmentation models cannot adapt their confidence levels or focus computational resources on the most challenging regions. This limitation is particularly problematic for foundation models like SAM and SAM2, which, despite their capabilities in standard conditions, lack mechanisms to handle the uncertainty introduced by inclement weather.

We address these challenges through two complementary uncertainty-aware approaches: one targeting extreme conditions where object detection is critical for safety, and another improving overall segmentation quality across varying weather conditions. These approaches enhance the robustness of autonomous driving perception systems by explicitly incorporating uncertainty estimation into the segmentation process, enabling more reliable operation in dynamic and unpredictable environmental conditions.

2. Related Work

Recent advancements in computer vision have led to significant improvements in semantic segmentation models, particularly with the introduction of the Segment Anything Model (SAM) [3]. SAM represents a paradigm shift, utilizing a prompt-based architecture that enables zero-shot segmentation across diverse domains. Building upon this foundation, SAM2 [4] further enhances these capabilities with improved performance and efficiency. These models have demonstrated versatility across various applications [5,6], but face challenges in complex environmental conditions such as those encountered in autonomous driving scenarios.

Uncertainty estimation in deep learning has emerged as a critical research direction [7], particularly for safety-critical applications like autonomous driving. The seminal work by Kendall and Gal [8] established a framework for distinguishing between epistemic uncertainty (model uncertainty) and aleatoric uncertainty (data uncertainty), both essential for reliable decision-making systems. Techniques such as Monte Carlo dropout provide practical approximations of Bayesian inference in deep neural networks, offering computationally efficient uncertainty estimates [9]. These approaches have been extended to various computer vision tasks, including semantic segmentation.

In medical imaging, uncertainty-aware training has proven valuable, with several studies demonstrating improved segmentation performance in regions with ambiguous boundaries or pathological variations. SAM-based models, such as SAM-Med2D, have improved CT and MRI image segmentation by fine-tuning adapters in the SAM architecture [10]. Despite these improvements, medical images remain ambiguous, with physicians frequently providing different annotations for lesions in CT images [11].

The Uncertainty-Aware Adapter (UAT) [11] provides a foundation for addressing perceptual ambiguity through aleatoric uncertainty modeling. This architecture creates a dedicated latent space for sampling possible segmentation variants, building upon previous uncertainty works like Probabilistic U-Net [12]. Its core innovation is the Condition Modifies Sample Module (CMSM), which establishes deeper integration between uncertainty samples and model features, unlike previous approaches that simply concatenate stochastic samples at the output layer. The Uncertainty-Aware Adapter is a lightweight component attached to the pre-trained SAM model, preserving SAM’s foundation while enabling the generation of multiple plausible segmentation hypotheses. Rather than relying on one-to-one ground truth-to-image mappings, it calibrates the model on real-world scenarios with multiple valid interpretations.

This approach mirrors challenges in autonomous driving during inclement weather, where environmental conditions create similar perceptual ambiguities [13]. Just as medical images contain regions where multiple expert interpretations are valid, driving scenes during snow, rain, or fog present objects with unclear boundaries and varying visibility. Generating multiple plausible segmentation hypotheses rather than a single prediction enables more robust decision-making in safety-critical autonomous systems, allowing conservative action planning when uncertainty is high. These medical applications provide insights transferable to autonomous driving, particularly for identifying critical regions under adverse conditions where traditional deterministic segmentation approaches fail due to reduced sensor reliability.

We leverage three prominent datasets to validate our approach: CamVid [14], BDD100K [15], and GTA Driving. CamVid is a popular benchmark for evaluating semantic segmentation in driving scenarios, providing high-definition video sequences with pixel-level annotations. BDD100K offers diverse driving scenes across different weather conditions and times of day. The GTA Driving dataset complements these with synthetic driving scenes and perfect ground truth annotations. While several studies have utilized these datasets to evaluate segmentation algorithms across varying conditions, comprehensive analyses of uncertainty estimation remain limited [16,17]. These three datasets provide a foundation for evaluating uncertainty-aware segmentation approaches in autonomous driving applications, particularly for addressing challenges posed by inclement weather.

While existing research has made progress in both uncertainty estimation and robust segmentation for autonomous driving, a gap remains in effectively combining these approaches to address the specific challenges posed by inclement weather. This paper integrates uncertainty-aware training techniques with state-of-the-art segmentation models (SAM and SAM2) to develop complementary approaches addressing different aspects of the inclement weather challenge: one improving overall accuracy through uncertainty-guided fine-tuning (SAM2 with Multistep Fine-Tuning), and another focused on extreme conditions through adaptive region focusing (UAT-SAM).

3. Datasets

Three datasets were used in this study: BDD100K, CamVid, and GTA Driving.

BDD100K was split into 7000 training, 1000 validation, and 2000 testing samples.

CamVid was split into 369 training, 100 validation, and 268 testing samples.

GTA Driving contained 2500 training samples used exclusively for training and not for evaluation. The GTA Driving dataset was incorporated only during the fine-tuning phase to improve domain adaptation and model generalization; therefore, it is not included in the quantitative results reported in Section 5.

SAM2 used all three datasets for uncertainty-aware fine-tuning, while UAT-SAM was trained only on the CamVid dataset using car instances (1047 training, 274 validation, and 177 testing).

4. Methodology

4.1. SAM2 with Multistep Fine-Tuning for Overall Accuracy Improvement

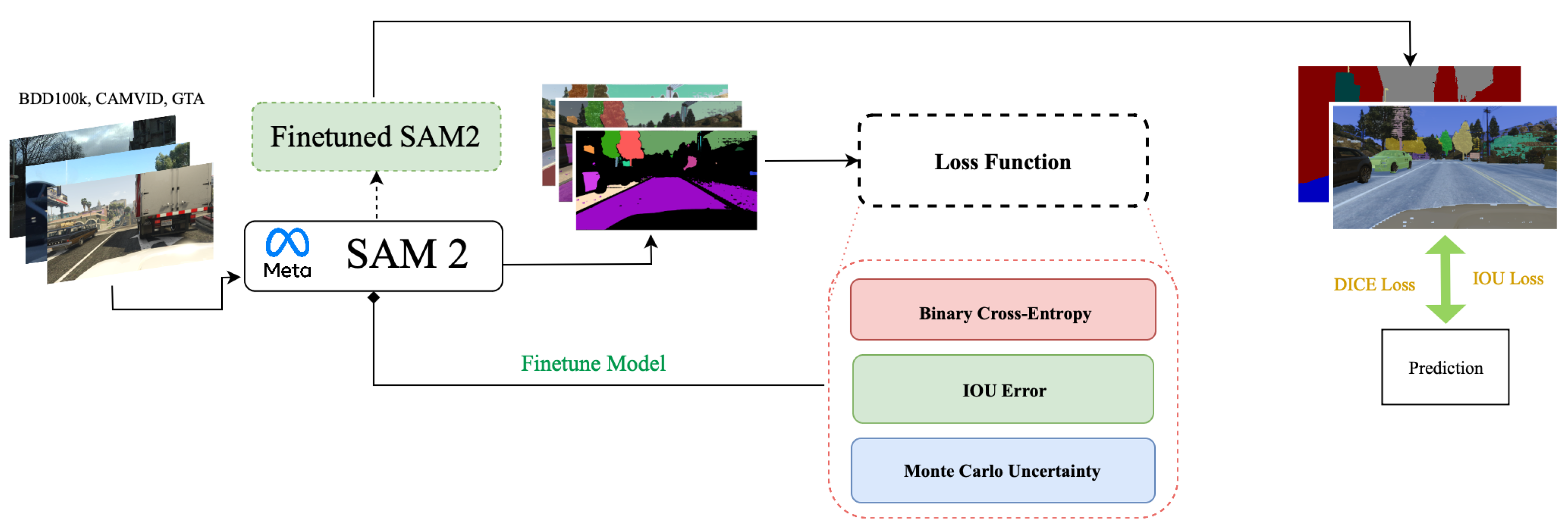

The following outlines our step-by-step approach for fine-tuning SAM2 on driving datasets such as BDD100K using a custom loss function (see Figure 1 for an overview). We aim to improve segmentation accuracy by incorporating multiple loss components, including Binary Cross-Entropy, Intersection-over-Union (IoU) Error, and Monte Carlo Uncertainty Loss.

We first prepare the dataset by pairing each image with its corresponding ground truth segmentation mask. Additionally, the ground truth masks are separated into a list of binary masks based on color, where a pixel has a value of 1 if it belongs to the i-th mask and 0 otherwise.
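The mask-splitting step can be sketched as follows. This is a minimal illustration that assumes the annotation is an H x W grid of RGB tuples. The names `split_by_color`, `ColorMask`, and the toy colors are hypothetical and are not taken from the authors' code.

```python
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]
ColorMask = List[List[Color]]   # H x W grid of RGB tuples (assumed layout)
BinaryMask = List[List[int]]    # H x W grid of 0/1 values


def split_by_color(mask: ColorMask) -> Dict[Color, BinaryMask]:
    """Return one binary mask per distinct color found in `mask`."""
    colors = sorted({pixel for row in mask for pixel in row})
    return {
        # A pixel is 1 if it carries this color and 0 otherwise.
        color: [[1 if pixel == color else 0 for pixel in row] for row in mask]
        for color in colors
    }


# Toy 2 x 3 annotation with two classes (illustrative colors only).
RED, BLUE = (255, 0, 0), (0, 0, 255)
toy_mask = [
    [RED, RED, BLUE],
    [BLUE, RED, BLUE],
]
binary_masks = split_by_color(toy_mask)
```

Each entry of `binary_masks` then plays the role of the i-th ground truth mask described above.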

Then, the preprocessed images are passed through SAM2 to generate n output masks, each with c channels per pixel. These outputs are reduced with the sigmoid function before being fed into the loss function to avoid dimension conflicts.

Once the model has made its predictions, we feed the predictions and ground truth into our custom loss function, which incorporates three key components: Binary Cross-Entropy, IoU error, and a Monte Carlo uncertainty loss.

The only modification from standard fine-tuning is the inclusion of the uncertainty-weighted loss; all data splits, optimizer settings, and network layers remain identical. Therefore, the observed performance improvement stems from the uncertainty modeling mechanism rather than from generic fine-tuning.
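A loss of this kind might be combined as in the sketch below. The text does not give the exact form of the Monte Carlo uncertainty term or the weights, so two things here are assumptions rather than the authors' formulation: uncertainty is taken as the mean per-pixel variance across several stochastic forward passes, and the weights `w_iou` and `w_unc` are hypothetical. Masks are flattened to lists of per-pixel probabilities for brevity.

```python
import math
from typing import List, Sequence

EPS = 1e-7


def bce(probs: Sequence[float], target: Sequence[int]) -> float:
    """Mean binary cross-entropy over pixels."""
    total = 0.0
    for p, y in zip(probs, target):
        p = min(max(p, EPS), 1.0 - EPS)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def iou_error(probs: Sequence[float], target: Sequence[int]) -> float:
    """One minus the soft IoU between predicted probabilities and the mask."""
    inter = sum(p * y for p, y in zip(probs, target))
    union = sum(probs) + sum(target) - inter
    return 1.0 - (inter + EPS) / (union + EPS)


def mc_uncertainty(samples: Sequence[Sequence[float]]) -> float:
    """Mean per-pixel variance across stochastic passes (assumed definition)."""
    n_pixels = len(samples[0])
    total = 0.0
    for i in range(n_pixels):
        values = [s[i] for s in samples]
        mean = sum(values) / len(values)
        total += sum((v - mean) ** 2 for v in values) / len(values)
    return total / n_pixels


def combined_loss(samples: Sequence[Sequence[float]], target: Sequence[int],
                  w_iou: float = 1.0, w_unc: float = 1.0) -> float:
    """BCE and IoU error on the mean prediction, plus the uncertainty term."""
    n = len(samples)
    mean_probs: List[float] = [
        sum(s[i] for s in samples) / n for i in range(len(target))
    ]
    return (bce(mean_probs, target)
            + w_iou * iou_error(mean_probs, target)
            + w_unc * mc_uncertainty(samples))


target = [1, 1, 0, 0]
stable = [[0.9, 0.8, 0.1, 0.2]] * 3           # passes agree
unstable = [[0.9, 0.8, 0.1, 0.2],
            [0.5, 0.9, 0.4, 0.1],
            [0.99, 0.4, 0.05, 0.6]]           # passes disagree
loss_stable = combined_loss(stable, target)
loss_unstable = combined_loss(unstable, target)
```

In this toy case the disagreeing passes receive the larger loss, which is the behavior an uncertainty term is meant to encourage.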

After this loss is calculated, it is backpropagated into SAM2. This updates the model’s weights to improve segmentation accuracy and is essential for aligning predictions with ground truth.

To ensure convergence, we repeat the above process for 6000 steps, progressively refining the model’s ability to accurately segment objects in self-driving scenarios.

The SAM2 model used was derived from the official Facebook Research implementation [4]. The model is fine-tuned using Python 3.10 (https://www.python.org/, accessed on 10 April 2025) and PyTorch 2.3.0 (https://pytorch.org/, accessed on 10 April 2025) and trained on an NVIDIA GeForce RTX 4060 GPU.

4.2. UAT-SAM for Extreme Weather Conditions

Our second complementary approach tackles object instance segmentation in extreme weather scenarios using the UAT-SAM adapter architecture [11]. As referenced in Section 2, the UAT adapter is a novel addition to the original SAM architecture, inspired by methodologies in medical imaging. This adapter is inserted into each transformer block of SAM and acts as a compact set of parameters that incorporates additional information, in this case uncertainty. The UAT adapter utilizes the CMSM (Condition Modifies Sample Module) to incorporate a sampled uncertainty code z, derived from a Conditional Variational Autoencoder (CVAE). This CVAE employs both a Prior Net (P) and a Posterior Net (Q) to encode observed uncertainty information from the input image [11].

4.2.1. Architecture Overview

The UAT-SAM architecture follows a structured pipeline for uncertainty-aware segmentation:

Input → CVAE (Prior/Posterior) → CMSM → Transformer Adapter → SAM Output

The Conditional Variational Autoencoder (CVAE) consists of two networks: a Prior Net (P) that encodes uncertainty from the input image alone, and a Posterior Net (Q) that encodes uncertainty from both the input image and the ground truth segmentation mask. During training, the latent code z is sampled from the posterior distribution; during inference, z is sampled from the prior. z is then processed by the Condition Modifies Sample Module (CMSM), which employs attention-like mechanisms to transform z into meaningful spatial features. These transformed features are injected into each transformer block of SAM’s image encoder through lightweight adapter modules, allowing the model to adaptively incorporate uncertainty information at multiple feature levels without modifying the frozen SAM backbone weights.
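The train/inference switch for z can be illustrated with a small sketch. It assumes both networks output a diagonal Gaussian as a (mean, log-variance) pair, and it includes a KL term because that is the usual way a CVAE pulls the prior toward the posterior. The text does not state the regularizer, so the KL term and all function names are assumptions.

```python
import math
import random
from typing import List, Tuple

# (mean, log-variance) per latent dimension; an assumed parameterization.
Gaussian = Tuple[List[float], List[float]]


def sample_latent(dist: Gaussian, rng: random.Random) -> List[float]:
    """Reparameterized sample: z = mean + std * eps, with eps ~ N(0, 1)."""
    mean, log_var = dist
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]


def choose_z(prior: Gaussian, posterior: Gaussian, training: bool,
             rng: random.Random) -> List[float]:
    """Sample from the posterior during training and the prior at inference."""
    return sample_latent(posterior if training else prior, rng)


def kl_divergence(q: Gaussian, p: Gaussian) -> float:
    """KL(q || p) between two diagonal Gaussians."""
    (mq, lq), (mp, lp) = q, p
    return 0.5 * sum(
        lp_i - lq_i + (math.exp(lq_i) + (mq_i - mp_i) ** 2) / math.exp(lp_i) - 1.0
        for mq_i, lq_i, mp_i, lp_i in zip(mq, lq, mp, lp)
    )


rng = random.Random(0)
prior = ([0.0, 0.0], [0.0, 0.0])          # from the image alone
posterior = ([0.5, -0.3], [-1.0, -1.0])   # from image plus ground truth mask
z_train = choose_z(prior, posterior, training=True, rng=rng)
z_infer = choose_z(prior, posterior, training=False, rng=rng)
kl = kl_divergence(posterior, prior)
```

Repeated calls with `training=False` yield different z values, which is what allows several segmentation hypotheses to be drawn for one image.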

UAT-SAM keeps all SAM backbone parameters frozen; only the adapters and CVAE modules are trainable. We therefore distinguish “fine-tuning,” which updates the entire backbone, from “adapter training,” which updates only the lightweight adapter components.

Unlike previous approaches that directly concatenate the sampled code z with the main features, the UAT adapter integrates position vectors (p) and employs learnable attention-like mechanisms to transform z into meaningful features. These features are then combined with the main features in a layer-specific manner, allowing for nuanced modifications. This design ensures that the uncertainty sample from the CVAE is effectively captured and utilized, leading to more robust segmentation outputs.

4.2.2. Model Efficiency Analysis

Because SAM is frozen and only lightweight uncertainty-aware modules are added (approximately 8.9 M trainable parameters, about 10% of SAM-ViT-B’s 86 M parameters), the computational overhead remains minimal. Inference benchmarking on identical hardware shows that the original SAM model runs at 330.71 ms per image, while UAT-SAM (pre-trained backbone + trained adapters + CVAE) runs at 347.38 ms, an overhead of 16.67 ms (5.04%). This indicates that UAT-SAM adds little latency relative to SAM while introducing meaningful uncertainty modeling, which matters for deployment in autonomous driving perception pipelines where latency and reliability are both critical.

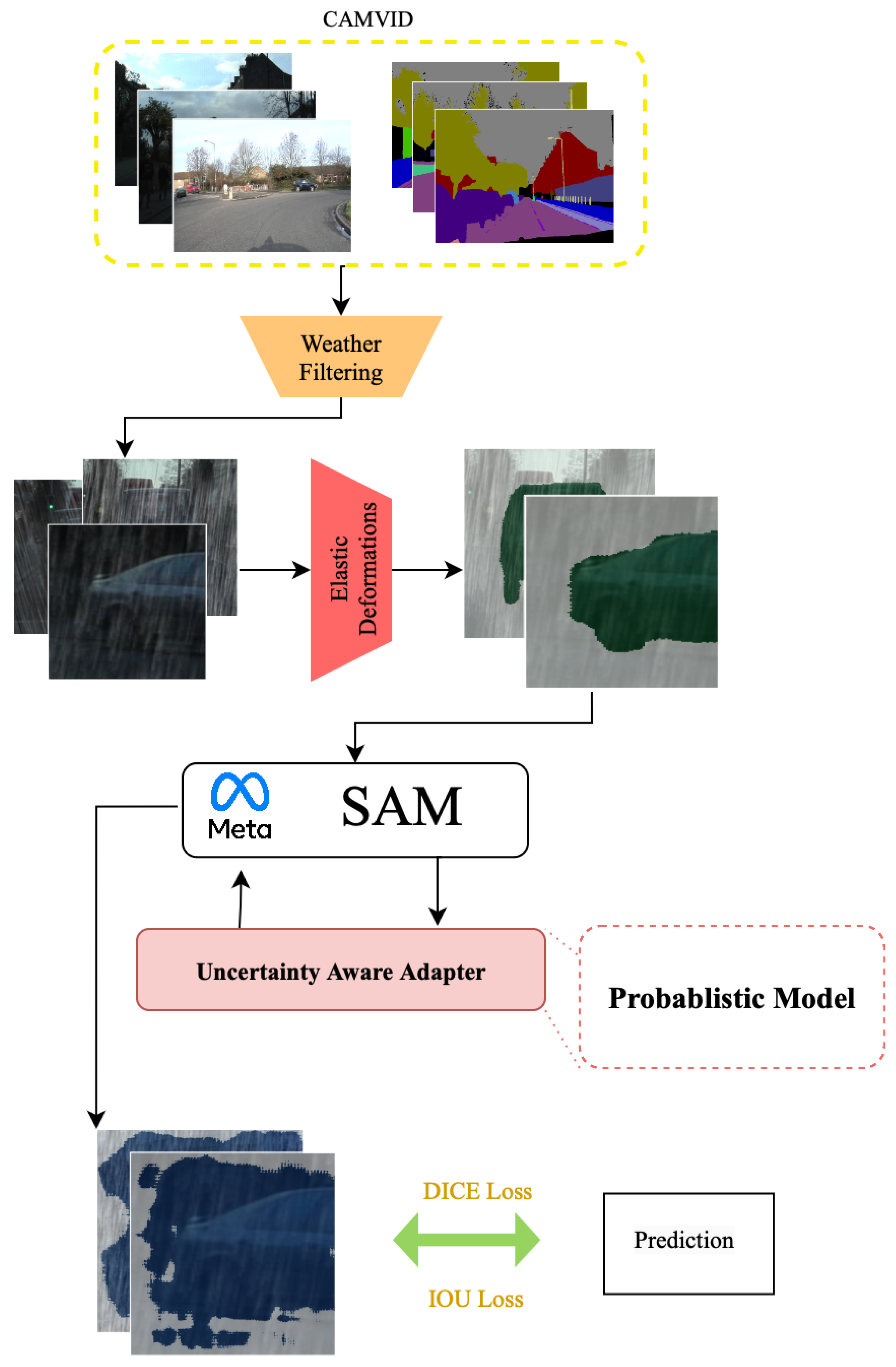

Prior to training, the CamVid dataset required extensive preprocessing (see Figure 2 for the complete pipeline). Each CamVid image was originally paired with a single human-annotated segmentation mask collected under clear-weather conditions. To simulate adverse weather scenarios, synthetic fog, rain, and snow filters were applied to the images only, while the corresponding masks were automatically augmented using elastic deformation. This process generated multiple plausible ground truth variants from the original annotation, enabling the UAT adapter to learn from diverse label interpretations without requiring additional manual labeling. We applied random weather filters (fog, rain, or snow) with random strengths ranging from 0 to 1 (0 = clear image, 1 = completely obscured image) to the original images to increase difficulty during training and testing under extreme weather.
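A strength-controlled filter of this kind can be sketched for the fog case. The authors' actual filters are not described, so this example makes an assumption: fog is modeled as a linear blend toward a uniform bright value on a grayscale image. Rain and snow would need different models (streaks, particles), and the function names are hypothetical.

```python
import random
from typing import List, Tuple

Image = List[List[int]]  # grayscale pixels in 0..255 (assumed layout)


def apply_fog(image: Image, strength: float, fog_value: int = 255) -> Image:
    """Blend each pixel toward `fog_value`; 0 leaves the image clear, 1 hides it."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    return [
        [round((1.0 - strength) * px + strength * fog_value) for px in row]
        for row in image
    ]


def random_fog(image: Image, rng: random.Random) -> Tuple[Image, float]:
    """Apply fog with a strength drawn uniformly from [0, 1]."""
    strength = rng.uniform(0.0, 1.0)
    return apply_fog(image, strength), strength


scene = [[0, 100, 200], [50, 150, 250]]
foggy, used_strength = random_fog(scene, random.Random(42))
```

Only the image is filtered; the paired masks are left to the separate elastic deformation step.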

Due to the module’s architecture and its medical imaging origins, it required multiple ground truth segmentations for each image. However, most publicly available driving datasets, including CamVid, provide only one ground truth mask. To simulate ambiguous segmentation requirements, we applied elastic deformations to all 1419 human-segmented ground truth masks from CamVid. Each segmentation was deformed by randomly shifting pixel locations in both x and y directions, with the shifts smoothed by a Gaussian filter. The magnitude and smoothness of these shifts were controlled by two parameters, set per weather type as follows:

Fog-like deformations: smoother, blurred boundaries,

Rain-like deformations: sharper, localized changes,

Snow-like deformations: medium smoothness, stronger distortion.

This approach generated three additional annotations from a single ground truth, alongside the original annotation. Each image in the dataset therefore had four segmentation masks, capturing a range of plausible interpretations. These were matched to the weather-filtered images for training (Table 1 and Table 2).
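The elastic deformation used to produce the extra masks can be sketched in plain Python. The parameter names `alpha` (shift magnitude) and `sigma` (Gaussian smoothness) follow common usage for this technique, and the preset values are purely illustrative; neither is the authors' setting. Nearest-neighbor sampling is also an assumption, chosen because it keeps the mask binary.

```python
import math
import random
from typing import List

Mask = List[List[int]]
Field = List[List[float]]


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1-D Gaussian kernel truncated at about three sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(field: Field, sigma: float) -> Field:
    """Separable Gaussian blur with edge clamping."""
    kernel = gaussian_kernel(sigma)
    r = len(kernel) // 2
    h, w = len(field), len(field[0])
    rows = [[sum(kernel[k + r] * row[min(max(x + k, 0), w - 1)]
                 for k in range(-r, r + 1)) for x in range(w)] for row in field]
    return [[sum(kernel[k + r] * rows[min(max(y + k, 0), h - 1)][x]
                 for k in range(-r, r + 1)) for x in range(w)] for y in range(h)]


def elastic_deform(mask: Mask, alpha: float, sigma: float,
                   rng: random.Random) -> Mask:
    """Shift each pixel by a smoothed random displacement scaled by `alpha`."""
    h, w = len(mask), len(mask[0])
    dx = smooth([[rng.uniform(-1.0, 1.0) for _ in range(w)] for _ in range(h)], sigma)
    dy = smooth([[rng.uniform(-1.0, 1.0) for _ in range(w)] for _ in range(h)], sigma)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Nearest-neighbor lookup, clamped to the image borders.
            sx = min(max(round(x + alpha * dx[y][x]), 0), w - 1)
            sy = min(max(round(y + alpha * dy[y][x]), 0), h - 1)
            row.append(mask[sy][sx])
        out.append(row)
    return out


# Hypothetical (alpha, sigma) presets; not the values used in the paper.
PRESETS = {"fog": (4.0, 3.0), "rain": (2.0, 1.0), "snow": (6.0, 2.0)}

base = [[1 if 3 <= y < 9 and 3 <= x < 9 else 0 for x in range(12)]
        for y in range(12)]
rng = random.Random(0)
variants = [base] + [elastic_deform(base, a, s, rng) for a, s in PRESETS.values()]
```

`variants` holds four masks per image, the original plus one per weather type, matching the four-annotation setup described above.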

We also utilized instance cropping on the data to specifically focus on car segmentations during training, leveraging uncertainty modeling to prioritize regions with high variability. This adaptation allows the model to generate accurate outputs even with noisy or ambiguous data.

The training methodology incorporates a tailored loss function, primarily the Dice Coefficient Loss, to handle segmentation. This loss function improves boundary detection, crucial for imbalanced datasets and difficult scenarios.
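For reference, a soft Dice loss can be written as below. This is the standard formulation on flattened probability masks, not necessarily the exact variant used here, and the smoothing constant `eps` is an assumed value. The toy example shows why it suits imbalanced masks: predicting only background on a mask with one foreground pixel in a hundred is 99% pixel-accurate yet receives a loss close to 1.

```python
from typing import Sequence


def dice_loss(probs: Sequence[float], target: Sequence[int],
              eps: float = 1e-6) -> float:
    """Soft Dice loss: 1 - 2|P.T| / (|P| + |T|), smoothed by `eps`."""
    inter = sum(p * y for p, y in zip(probs, target))
    denom = sum(probs) + sum(target)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)


# One foreground pixel among 100; the prediction is all background.
sparse_target = [1] + [0] * 99
all_background = [0.0] * 100
loss_missed = dice_loss(all_background, sparse_target)

# A perfect prediction drives the loss to zero.
loss_perfect = dice_loss([1.0] + [0.0] * 99, sparse_target)
```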

The training pipeline follows a multi-stage process, starting with a pre-trained Segment Anything Model (SAM) and selective parameter freezing to retain SAM’s pre-trained capabilities. The trainable modules are then gradually adapted to the target domain. Key metrics such as the Dice score and Intersection-over-Union (IoU) are monitored on the validation set, with early stopping to prevent overfitting. Training progress is visualized in TensorBoard.
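Early stopping on a monitored validation metric can be sketched as follows. The patience and tolerance values are not given in the text, so `patience=3` and `min_delta=0.0` are assumptions, as are the class name and the toy validation scores.

```python
class EarlyStopping:
    """Stop when a higher-is-better score has not improved for `patience` checks."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, score: float) -> bool:
        """Record a validation score; return True when training should stop."""
        if score > self.best + self.min_delta:
            self.best = score
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical per-epoch validation Dice scores.
val_dice = [0.50, 0.60, 0.61, 0.60, 0.59, 0.58, 0.70]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, score in enumerate(val_dice):
    if stopper.step(score):
        stopped_at = epoch
        break
```

In practice the weights from the best-scoring epoch would be restored after stopping. That step is omitted here.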

We tested the fine-tuned model and zero-shot SAM by running inference on 177 heavy-weather-filtered CamVid car instance segmentations with the original ground truth segmentation paired, and compared IoU and Dice across both.

5. Results and Discussion

5.1. SAM2 Multistep Fine-Tuning

5.1.1. Overall Accuracy Improvements

To assess the performance of fine-tuned SAM2, we evaluated it against base SAM2 using Intersection-over-Union (IoU) and the Dice coefficient. Specifically, we computed the average IoU for commonly seen road objects (e.g., cars, people, bicycles, traffic lights), as well as overall segmentation across the BDD100K and CamVid datasets. Table 3 and Table 4 report the results.
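The two metrics can be computed for a single pair of binary masks as in this sketch, with the masks flattened to lists of 0/1 values. Scoring two empty masks as 1.0 is an assumption, since conventions vary. For one mask pair the metrics are tied by Dice = 2 IoU / (1 + IoU), so they always rank predictions identically; averages over many images need not obey that identity.

```python
from typing import Sequence, Tuple


def iou_and_dice(pred: Sequence[int], truth: Sequence[int]) -> Tuple[float, float]:
    """Return (IoU, Dice) for two flattened binary masks."""
    inter = sum(p & t for p, t in zip(pred, truth))
    pred_area, truth_area = sum(pred), sum(truth)
    union = pred_area + truth_area - inter
    # Two empty masks are treated as a perfect match (assumed convention).
    iou = inter / union if union else 1.0
    dice = 2 * inter / (pred_area + truth_area) if pred_area + truth_area else 1.0
    return iou, dice


pred = [1, 1, 1, 0, 0, 0]
truth = [0, 1, 1, 1, 0, 0]
iou, dice = iou_and_dice(pred, truth)   # overlap of 2 pixels, union of 4
dice_from_iou = 2 * iou / (1 + iou)
```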

Our fine-tuned SAM2 outperformed zero-shot SAM2 in most classes based on IoU and Dice scores, except for stop signs and fire hydrants. This may have been the result of a class imbalance in the BDD100K dataset, which likely contains more examples of common road objects such as cars, people, and motorcycles than less common objects such as stop signs and fire hydrants. Additionally, the smaller size of stop signs and fire hydrants may have contributed to the reduced segmentation performance, especially when attempting to segment them at a distance. On average, our fine-tuned SAM2 model improved IoU by 36.13% over zero-shot SAM2, with the highest gain being in car segmentation (+79.13%) and the smallest nonzero gain in person segmentation (+22.34%). For Dice scores, our model improved by 48.79% on average, with cars showing the highest increase (+134.51%) and people showing the smallest nonzero increase (+24.07%).

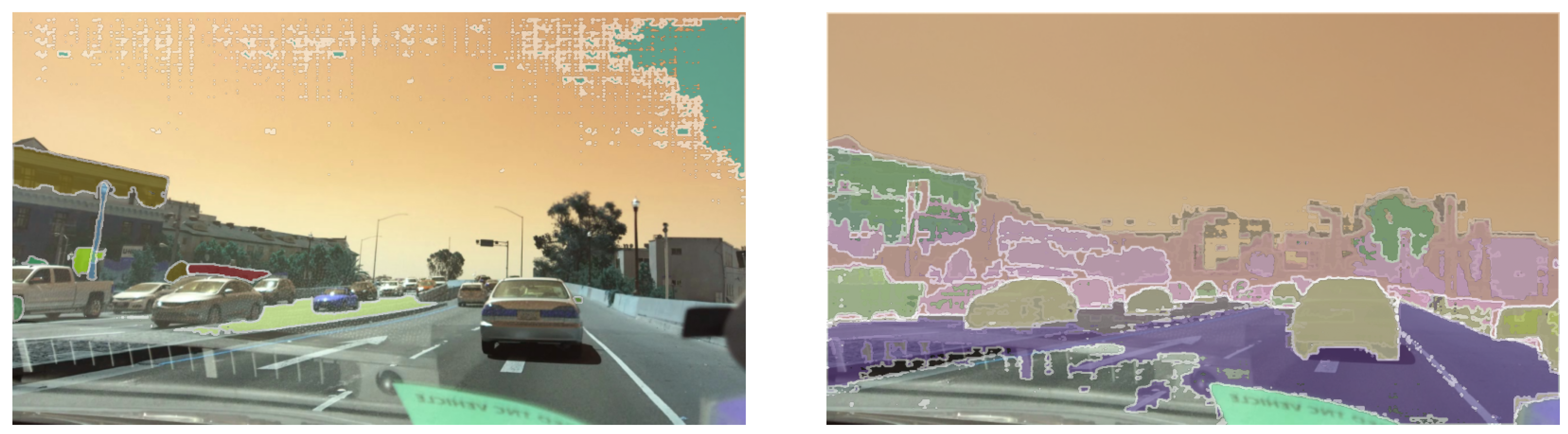

5.1.2. Uncertainty-Aware Fine-Tuning Benefits

Incorporating uncertainty into our fine-tuning process improved segmentation in ambiguous regions, particularly for multi-component objects like vehicles. Zero-shot SAM2 often produced inconsistent masks for vehicles, segmenting individual components (such as wheels or windows) or omitting the vehicle entirely (Figure 3). After applying uncertainty-aware fine-tuning, SAM2 consistently assigned a single mask per vehicle (Figure 3), enhancing segmentation accuracy and reducing fragmented outputs.

Additionally, our fine-tuned SAM2 model generalized well across diverse driving scenarios. We evaluated its performance on datasets from different environments: BDD100K (recorded in New York, San Francisco, and other regions) and CamVid (recorded in Cambridge). Across both datasets, our model consistently segmented key classes such as cars, trucks, roads, and pedestrians, highlighting its robustness across real-world driving conditions.

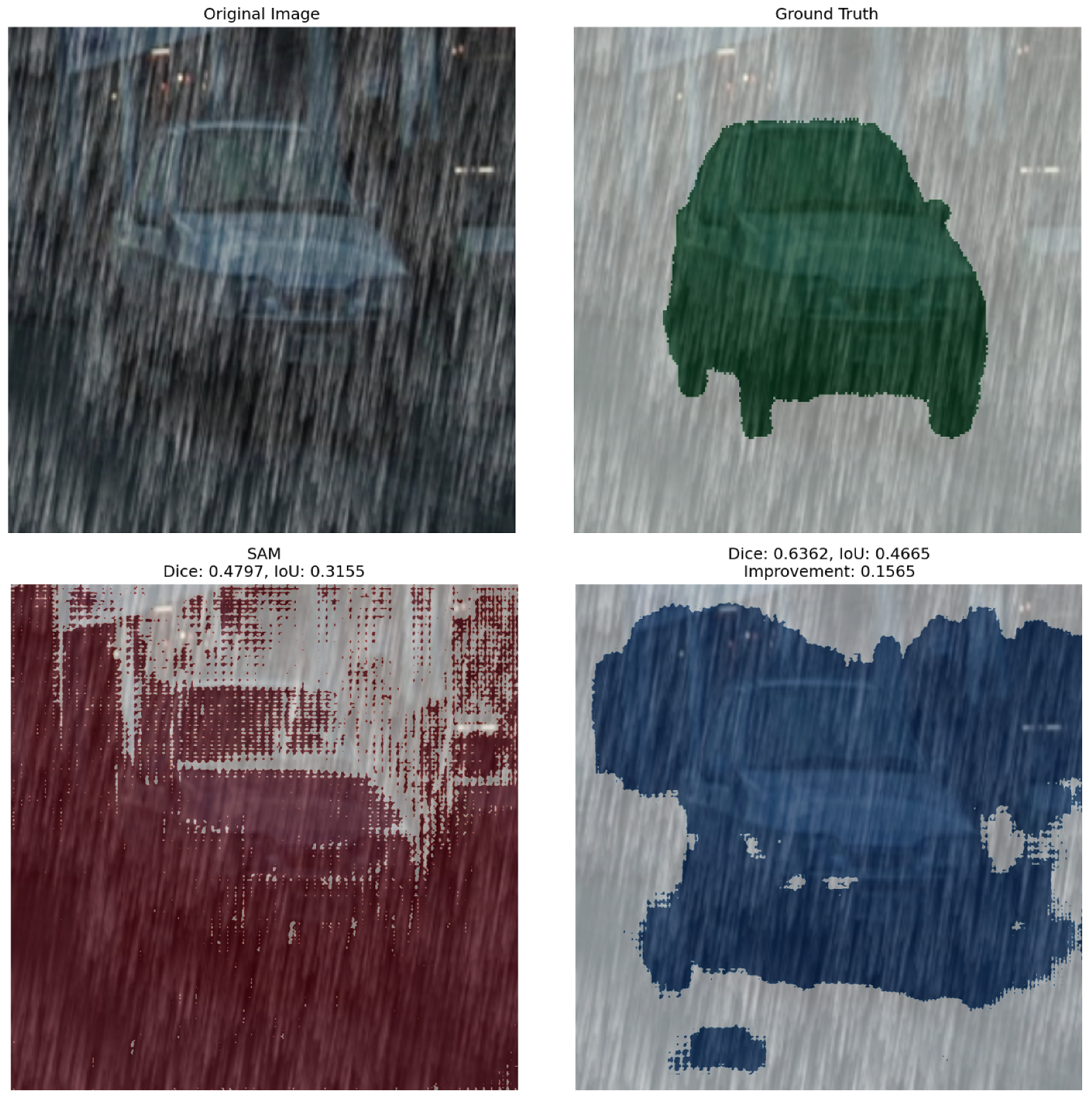

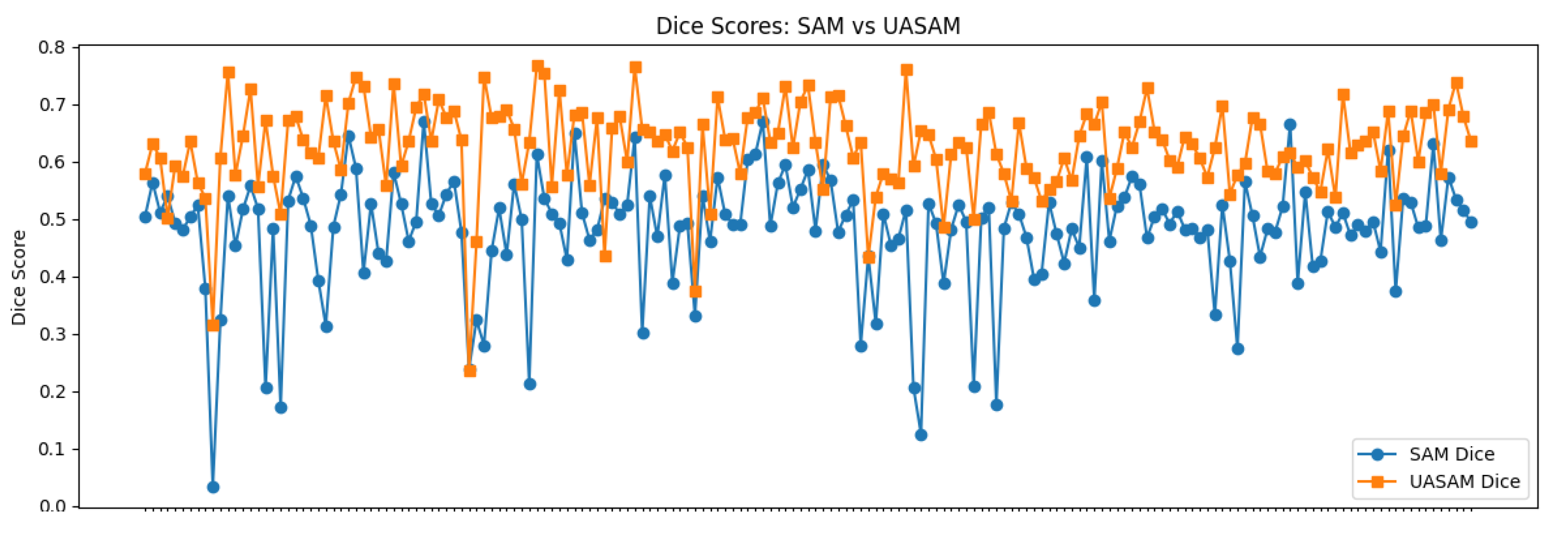

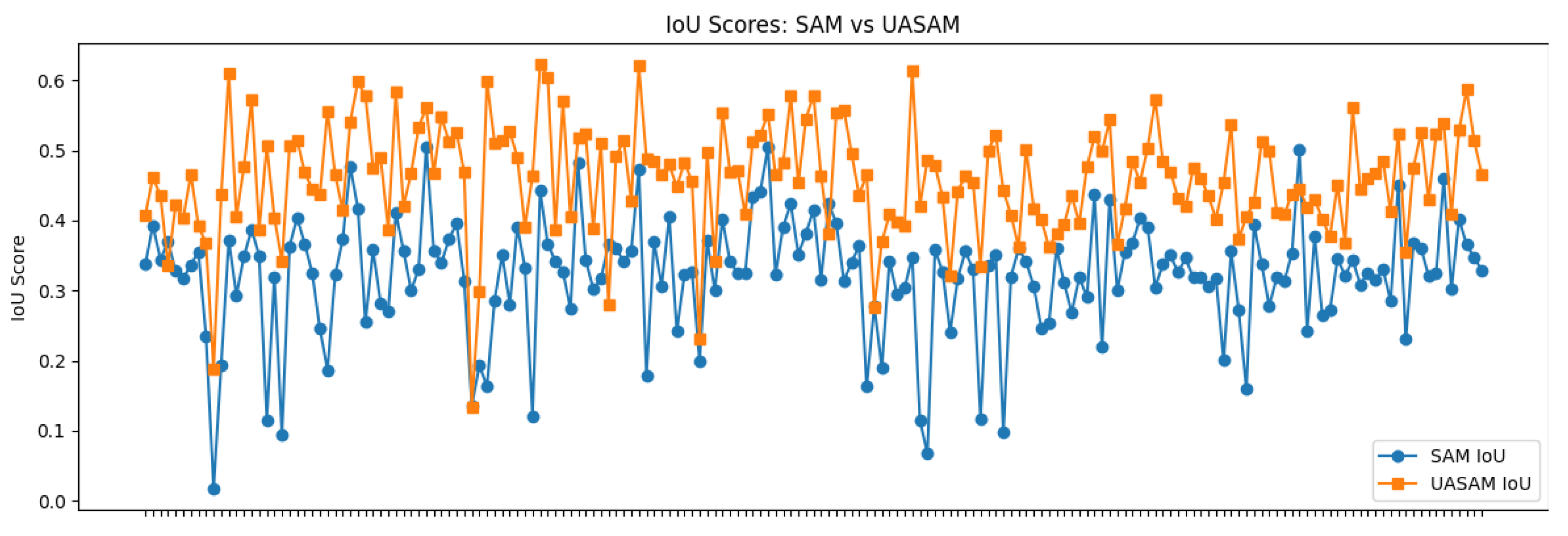

5.2. UAT-SAM

The fine-tuned UAT-SAM was tested on 177 heavy-weather-filtered CamVid car instance images with the original human segmentations serving as the ground truth. SAM served as the baseline, and the evaluation metrics were IoU and the Dice coefficient (Table 5). Under heavy obscuration, baseline SAM often failed to segment or contour any object within the image, especially in rain and snow scenarios.

UAT-SAM improved IoU by 42.7% and Dice by 30% compared to zero-shot SAM. UAT-SAM consistently outperformed zero-shot SAM by focusing on regions with high variability, improving segmentation accuracy in challenging environments where visibility is compromised.

Figure 4 illustrates a representative example of this improvement in a rain scenario.

The UAT-SAM experiment focuses on car instance segmentation in heavy-weather CamVid subsets using the original SAM backbone. By contrast, the SAM2 fine-tuning experiment (Section 5) targets full-scene segmentation on BDD100K and CamVid datasets. As the training objectives and domains differ, direct numerical comparison between SAM2-FT and UAT-SAM is not appropriate. Instead, the two methods are complementary: SAM2-FT enhances overall segmentation accuracy across diverse weather conditions, while UAT-SAM improves robustness in safety-critical scenarios with severe visual degradation.

Although Figure 5 and Figure 6 show instances where both UAT-SAM and zero-shot SAM fail to segment effectively, UAT-SAM generally exhibits better robustness and handles more challenging cases. These failures are less frequent and often occur in particularly ambiguous or noisy regions, emphasizing the model’s strength in typical conditions. Following [11], addressing ambiguity in adverse driving conditions by focusing the model on uncertain regions shows promise.

While the current experiments compare UAT-SAM and SAM2 primarily against zero-shot SAM/SAM2 baselines, future work will benchmark against established segmentation frameworks such as Mask2Former, SegFormer, and DeepLabv3+ to position uncertainty-aware methods within standard driving-scene segmentation baselines.

6. Conclusions

This research demonstrates the effectiveness of two complementary uncertainty-aware approaches for improving semantic segmentation in self-driving applications, particularly under challenging weather conditions. The UAT adapter integrated with SAM enhanced segmentation capabilities in severe weather scenarios by leveraging uncertainty estimates to identify and focus on critical regions where visibility is compromised. Experiments on weather-filtered CamVid car instances showed that this approach improved vehicle segmentation, raising IoU by 42.7% and Dice by 30% over zero-shot SAM. These gains were obtained under synthetic fog, rain, and snow, where zero-shot SAM often failed to segment any object.

In contrast, the uncertainty-incorporated multistep fine-tuning approach with SAM2 proved effective at improving overall scene segmentation quality across varying weather conditions. This method delivered clearer contours and better distinction between foreground and background elements, resulting in more precise boundary delineation and improved class separation. The uncertainty-guided loss function enabled the model to adaptively focus on ambiguous regions during training, leading to more reliable segmentations with well-calibrated confidence estimates.

These approaches address different but complementary aspects of the inclement weather challenge in autonomous driving perception. The UAT adapter provides a targeted solution for severe conditions where safety-critical decisions must be made despite limited visibility, while the uncertainty-fine-tuned SAM2 offers broader improvements in segmentation quality that enhance overall system performance.

Our contributions advance robust segmentation under challenging conditions and demonstrate the value of incorporating uncertainty awareness into modern foundation models like SAM and SAM2. The methods presented here have potential applications beyond autonomous driving, particularly in other safety-critical domains where perception systems must operate reliably despite environmental challenges.

7. Future Work

Future work will extend the UAT-SAM framework beyond vehicle segmentation to additional object classes, including pedestrians, cyclists, and traffic signs, enabling a more comprehensive perception system for autonomous driving. We will also perform weather-specific adapter training using the ACDC and Boreas datasets, which capture diverse conditions such as fog, rain, snow, and nighttime scenes. This will support a systematic evaluation of uncertainty-aware adapters under varied real-world weather domains. Because UAT-SAM employs parameter-efficient adapter training rather than full fine-tuning, these experiments will further test its scalability and transferability across new object categories and environmental variations.

Author Contributions

Conceptualization, Z.C. and D.R.; Methodology, Z.C. and D.R.; Software, Z.C. and K.W.; Validation, Z.C., K.W. and J.W.; Formal analysis, Z.C., K.W. and J.W.; Data curation, Z.C., K.W. and S.A.; Writing—original draft, Z.C., K.W. and D.R.; Writing—review & editing, Z.C., K.W., S.A., J.W. and D.R.; Visualization, Z.C., K.W. and S.A.; Supervision, Z.C. and D.R.; Project administration, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Acknowledgments

We would like to express our sincere gratitude to the authors of Uncertainty-Aware Adapter: Adapting Segment Anything Model (SAM) for Ambiguous Medical Image Segmentation [11] for their invaluable contributions to the field. Their research and application of uncertainty-aware adapters provided critical insights that significantly influenced our work. We also deeply appreciate the open-source efforts and datasets made available by the research community, which played a crucial role in facilitating our experimentation and validation. The collaborative spirit of the academic and engineering communities has been instrumental in shaping our study.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

2. Jiang, S.; Guo, Z.; Zhao, S.; Wang, H.; Jing, W. Ce-gan: A camera image enhancement generative adversarial network for autonomous driving. In Proceedings of the 2022 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Shenzhen, China, 13–16 October 2022; pp. 1–6.

3. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment anything. arXiv 2023, arXiv:2304.02643.

4. Ravi, N.; Gabeur, V.; Hu, Y.-T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. Sam 2: Segment anything in images and videos. arXiv 2024, arXiv:2408.00714.

5. Yang, C.-Y.; Huang, H.-W.; Chai, W.; Jiang, Z.; Hwang, J.-N. Samurai: Adapting segment anything model for zero-shot visual tracking with motion-aware memory. arXiv 2024, arXiv:2411.11922.

6. Chen, J.; Yang, Z.; Zhang, L. Semantic Segment Anything. 2023. Available online: https://github.com/fudan-zvg/Semantic-Segment-Anything (accessed on 15 March 2025).

7. Dutta, S.; Wei, H.; van der Laan, L.; Alaa, A.M. Estimating uncertainty in multimodal foundation models using public internet data. arXiv 2023, arXiv:2310.09926.

8. Kendall, A.; Gal, Y. What uncertainties do we need in bayesian deep learning for computer vision? Adv. Neural Inf. Process. Syst. 2017, 30.

9. Dawood, T.; Chan, E.; Razavi, R.; King, A.P.; Puyol-Anton, E. Addressing deep learning model calibration using evidential neural networks and uncertainty-aware training. arXiv 2023, arXiv:2301.13296.

10. Cheng, J.; Ye, J.; Deng, Z.; Chen, J.; Li, T.; Wang, H.; Su, Y.; Huang, Z.; Chen, J.; Jiang, L.; et al. Sam-med2d. arXiv 2023, arXiv:2308.16184.

11. Jiang, M.; Zhou, J.; Wu, J.; Wang, T.; Jin, Y.; Xu, M. Uncertainty-aware adapter: Adapting segment anything model (sam) for ambiguous medical image segmentation. arXiv 2024, arXiv:2403.10931.

12. Kohl, S.A.A.; Romera-Paredes, B.; Meyer, C.; Fauw, J.D.; Ledsam, J.R.; Maier-Hein, K.H.; Eslami, S.M.A.; Rezende, D.J.; Ronneberger, O. A probabilistic u-net for segmentation of ambiguous images. arXiv 2019, arXiv:1806.05034.

13. Burnett, K.; Yoon, D.J.; Wu, Y.; Li, A.Z.; Zhang, H.; Lu, S.; Qian, J.; Tseng, W.-K.; Lambert, A.; Leung, K.Y.K.; et al. Boreas: A multi-season autonomous driving dataset. arXiv 2023, arXiv:2203.10168.

14. Brostow, G.J.; Fauqueur, J.; Cipolla, R. Semantic object classes in video: A high-definition ground truth database. Pattern Recognit. Lett. 2009, 30, 88–97.

15. Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. arXiv 2020, arXiv:1805.04687.

16. Modas, A.; Sanchez-Matilla, R.; Frossard, P.; Cavallaro, A. Toward robust sensing for autonomous vehicles: An adversarial perspective. IEEE Signal Process. Mag. 2020, 37, 14–23.

17. Wang, Z.; Wu, Y.; Niu, Q. Multi-sensor fusion in automated driving: A survey. IEEE Access 2020, 8, 2847–2868.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).