1. Introduction

Network protocols serve as the foundational rules, standards, and conventions governing information exchange between peer entities in computer networks, with protocol programs acting as their practical implementations. However, the inherent complexity of protocol specifications and inevitable oversights during implementation expose these programs to vulnerability risks, which pose severe threats to the stability and security of information systems. Notable examples include the critical “Heartbleed” vulnerability [1] in OpenSSL, which enabled attackers to steal encryption data from billions of users; the OpenNDS gateway vulnerability [2], which allows attackers to bypass access authentication and gain unauthorized intranet access; and the Wing FTP Server vulnerability [3], which facilitates full server compromise. Thus, efficient and effective vulnerability detection in network protocol programs remains a critical imperative.

Fuzzing, a proven efficient vulnerability detection technique, is widely employed in protocol program testing [4,5,6,7,8]. It identifies potential security flaws by transmitting large volumes of malformed messages to target protocol programs and monitoring real-time exceptions [9,10,11]. Technically, protocol fuzzing is categorized into black-box and gray-box approaches based on reliance on the target program’s internal information [12,13,14,15]. Gray-box fuzzing enhances efficiency by leveraging instrumentation to obtain internal data (e.g., code coverage and key path triggering) for process optimization, but its dependence on source code and customized instrumentation tools limits applicability to closed-source scenarios. In contrast, black-box fuzzing requires no access to the tested program’s source code, making it more widely adopted in real-world settings. From a test case construction perspective, fuzzing methods are further divided into mutation-based and generation-based types [16,17,18,19]. Mutation-based methods modify real interaction messages (seeds) via strategies like random perturbation or boundary replacement, but their lack of protocol specification guidance hinders the production of high-quality test cases. Generation-based methods, by contrast, directly construct test cases compliant with protocol format and logic, resulting in superior quality.
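The difference between the two construction styles can be illustrated with a minimal sketch. It is not taken from any of the cited tools: the FTP `USER` message, the single bit-flip strategy, and the function names `mutate` and `generate` are assumptions chosen only for illustration.

```python
import random


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Mutation-based: flip one random bit of a captured message (the seed)."""
    data = bytearray(seed)
    pos = rng.randrange(len(data))
    data[pos] ^= 1 << rng.randrange(8)
    return bytes(data)


def generate(command: str, argument: str) -> bytes:
    """Generation-based: build the message from a format rule, so framing stays valid."""
    return f"{command} {argument}\r\n".encode("latin-1")


rng = random.Random(0)
seed = b"USER anonymous\r\n"

# The mutated message may break the command keyword or the line terminator.
mutated = mutate(seed, rng)

# Generated messages keep "USER <arg>\r\n" intact and vary only the argument.
generated = [generate("USER", arg) for arg in ("", "A" * 1024, "%n%n%n")]
```

The mutated message can be rejected early by the parser if the flipped bit lands in the keyword or terminator, whereas every generated message still reaches the argument-handling code.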

Given these attributes, generation-based black-box fuzzing has emerged as a widely used technique for network protocol vulnerability detection due to its strong environmental adaptability. Typically, its workflow involves three steps: extracting protocol information through real traffic analysis, writing generation scripts based on the extracted information, and executing fuzzing. Nevertheless, current generation-based black-box protocol fuzzing faces three key challenges to achieving effective and efficient vulnerability detection:

Challenge 1. Traffic-based protocol analysis is highly labor-intensive and error-prone, as testers must manually decode field meanings, interaction logic, and state transitions frame by frame. This tedious process not only lacks efficiency but also introduces subjective interpretations of protocol specifications, ultimately reducing the accuracy of automatically generated test scripts.

Challenge 2. The current process of writing generation scripts is overly manual and inefficient. Testers are required to master framework-specific syntax while designing complex fuzzing strategies, a laborious approach that is time-consuming, prone to human error, and ultimately compromises the overall effectiveness of fuzzing campaigns.

Challenge 3. The fuzzing process lacks a dynamic optimization mechanism. Black-box methods generally cannot adjust test case generation rules in real time, and structural deviations in initial script design often lead to the production of numerous invalid test cases, resulting in low efficiency.

Recent years have witnessed breakthrough progress in Large Language Model (LLM) technology, which has provided a fresh technical approach to addressing the aforementioned challenges. First, with their strong semantic understanding and text analysis capabilities, LLMs can greatly assist testers in parsing protocol formats, thereby improving the efficiency and accuracy of protocol analysis. Second, leveraging LLMs’ excellent code generation capabilities enables the rapid construction of scripts, effectively reducing development costs while improving script quality. Moreover, combining LLM-based agent technology can realize dynamic perception of the testing process and strategy optimization, breaking through the static limitations of general methods.

However, LLMs have inherent limitations such as uncertainty and instability in generated content, which may lead to incomplete protocol information analysis and unstable, non-executable script generation. Their content generation requires well-designed prompt engineering and appropriate inspection and repair—especially critical in generation-based black-box protocol fuzzing scenarios that demand high accuracy.

In response to the aforementioned challenges, we introduce LLM-Boofuzz—a generation-based black-box protocol fuzzing framework driven by LLMs. For Challenge 1, we convert traffic packets into LLM-interpretable structured text, utilize prompt engineering to guide LLMs in extracting comprehensive protocol-related information, and determine optimal configuration parameters—including structured text format, LLM parameter scale, and input message size—through comparative experimental analyses. For Challenge 2, we leverage LLMs’ code generation ability and combine it with the protocol information extracted earlier to automatically generate fuzzing scripts in batches. These scripts include differentiated fuzzing strategies guided by prompt engineering. Additionally, the framework adds an LLM-driven script repair mechanism to improve the quality and executability of the generated scripts. For Challenge 3, we integrate an LLM-based agent to support multi-script iterative fuzzing, enable real-time status monitoring of the target program, and achieve dynamic script optimization via LLM invocation.

The contributions of this paper are summarized as follows:

Method. We present a generation-based, LLM-driven black-box protocol fuzzing method, which leverages LLMs to extract protocol-related information from real network traffic, generate fuzzing scripts, and enable multi-script iterative fuzzing via a dedicated agent. To our knowledge, this is the first work to use LLMs to construct protocol fuzzing scripts.

Approach. We formulate a unified LLM-powered workflow encompassing three key modules: (1) LLM-based real traffic protocol information extraction, (2) LLM-based script generation and repair, and (3) multi-script iterative fuzzing.

Prototype and Evaluation. We develop the LLM-Boofuzz prototype system and conduct comprehensive experiments across HTTP, FTP, SSH, and SMTP protocol scenarios, benchmarking it against mainstream fuzzing tools (Boofuzz [20], Snipuzz [21], and AFLNet [22]). Experimental results demonstrate that LLM-Boofuzz exhibits significantly superior performance in vulnerability triggering and code coverage compared to the baseline tools. Moreover, it demonstrates strong adaptability to stateful protocol fuzzing, with all core modules functioning robustly.

2. Background and Motivation

In this section, we introduce the background and motivation of our paper. We first introduce Boofuzz, currently the most widely used generation-based black-box fuzzing tool, along with its usage limitations. Then, we introduce the application of LLMs in protocol fuzzing.

2.1. Boofuzz Tool

Boofuzz, a mainstream generation-based black-box protocol fuzzing tool, inherits Sulley’s [23] Python scripting mechanism. Using Python 3.11+ syntax, Boofuzz can directly define protocol field types (e.g., strings, integers, and checksums), set various mutation rules (e.g., boundary values, dictionary enumeration, string concatenation, and byte flipping), and construct protocol state machines to adapt to stateful protocol interaction scenarios. Meanwhile, Boofuzz’s built-in network session management function can automatically maintain target connections, its real-time monitoring function can automatically capture exceptions such as program crashes and timeouts, and its rich callback function interfaces support in-depth expansion of script functions. With these features, Boofuzz enables rapid, targeted, and customized construction of black-box protocol generation scripts, making it widely used in practical vulnerability detection scenarios.

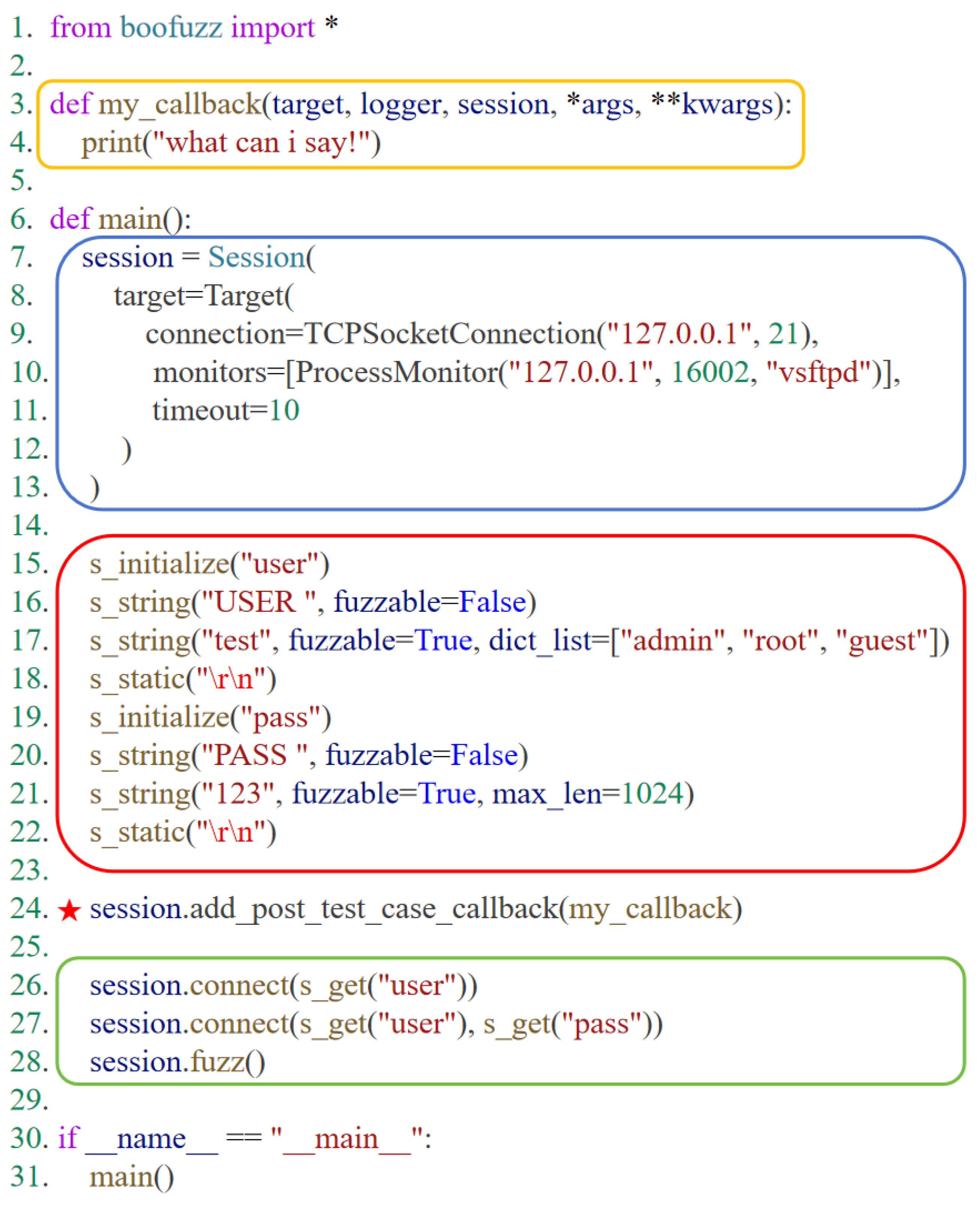

Figure 1 presents an example of a Boofuzz generation script, which is designed to test an FTP protocol program.

The blue square represents the session construction, which configures the connection method to the target protocol program, process monitoring, and timeout duration.

The red square represents field definition and mutation rules, which define the message structure and mutation strategies.

The yellow square and line 24 represent the callback function configuration, which specifies that Boofuzz will automatically execute the custom my_callback function after sending test cases to support function expansion.

The green square represents the protocol state machine construction, which adapts to the FTP protocol log-in process by setting the sending order of field groups.
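For readers without access to the figure, the four parts described above can be sketched as follows. This is not the script in Figure 1; the chosen FTP commands, default values, the `FTP_LOGIN_EDGES` table, and the body of `my_callback` are assumptions for illustration, and process monitoring is omitted. Boofuzz is imported inside `build_session` so that the sketch can be loaded on a machine where the library is not installed.

```python
# Edges of the FTP login state machine: (previous request, next request).
# None marks a request that can be sent right after connecting.
FTP_LOGIN_EDGES = [(None, "user"), ("user", "pass"), ("pass", "stor")]


def my_callback(target, fuzz_data_logger, session, *args, **kwargs):
    """Callback configuration: runs after each test case has been sent."""
    fuzz_data_logger.log_info("test case sent")


def build_session(host: str, port: int):
    """Assemble session, field definitions and state machine for an FTP target."""
    from boofuzz import (Session, Target, TCPSocketConnection,
                         s_initialize, s_string, s_delim, s_static, s_get)

    # Session construction: how to reach the target, plus the callback.
    session = Session(
        target=Target(connection=TCPSocketConnection(host, port)),
        post_test_case_callbacks=[my_callback],
    )

    # Field definitions and mutation rules: one request block per command.
    for name, command, default in (("user", "USER", "anonymous"),
                                   ("pass", "PASS", "guest"),
                                   ("stor", "STOR", "file.txt")):
        s_initialize(name)
        s_string(command, fuzzable=False)  # keep the command keyword fixed
        s_delim(" ")                       # delimiter, mutated by Boofuzz
        s_string(default)                  # argument, mutated by Boofuzz
        s_static("\r\n")                   # line terminator, never mutated

    # Protocol state machine: connect the blocks in login order.
    for src, dst in FTP_LOGIN_EDGES:
        if src is None:
            session.connect(s_get(dst))
        else:
            session.connect(s_get(src), s_get(dst))
    return session
```

Under these assumptions, calling `build_session("127.0.0.1", 21).fuzz()` would start a campaign against a local FTP server.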

It is recognized that the Boofuzz tool boasts comprehensive functionality, featuring flexible script writing and strong extensibility. However, manual development of Boofuzz scripts still presents certain challenges. This process requires not only proficiency in Boofuzz syntax but also a deep understanding of protocol formats—a constraint that has hindered the tool’s full and in-depth application. Moreover, the Boofuzz application process is constrained by numerous factors.

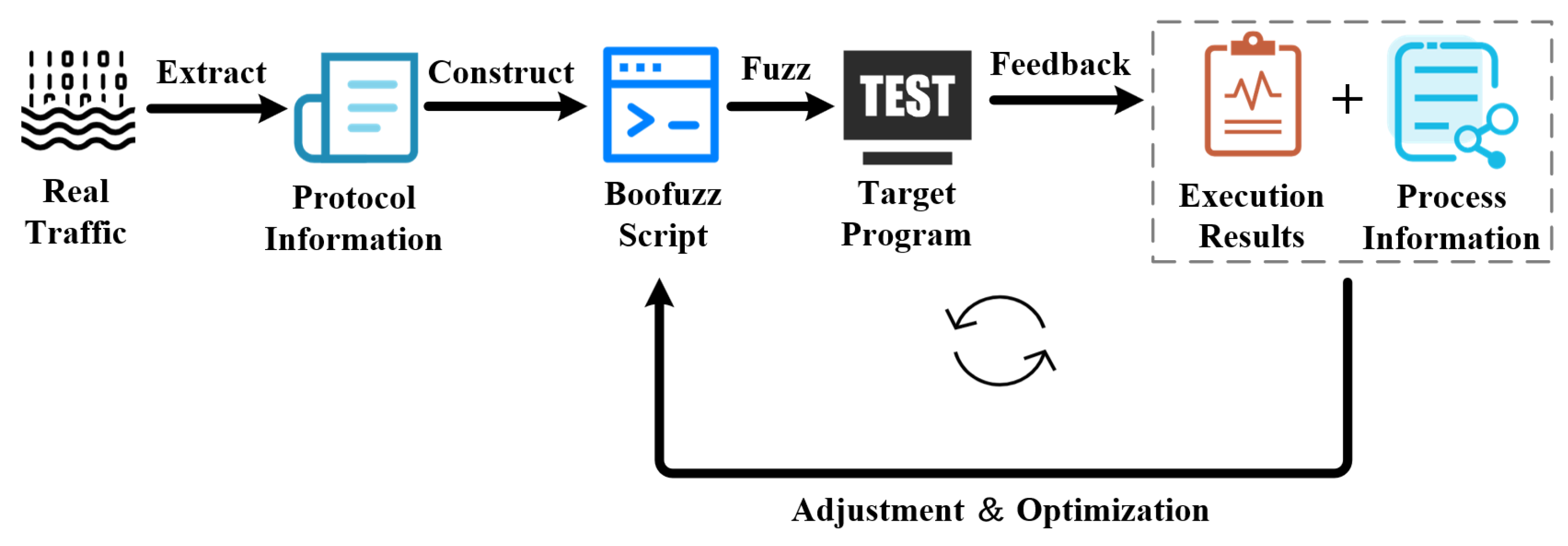

Figure 2 shows the general protocol fuzzing workflow of Boofuzz: Testers first need to obtain the real interaction traffic of the target protocol programs, parse the traffic data based on professional experience, and extract protocol information such as protocol field structure, interaction rules, and state transition logic from it. Secondly, based on the extracted protocol information and in accordance with the syntax specifications of the Boofuzz tool, testers manually write scripts. Then, testers launch the scripts to execute fuzzing. Finally, testers need to combine the script execution results and process information to manually analyze the shortcomings of the current script in aspects like field coverage and mutation strategies, adjust and optimize the script, and then re-launch it for new fuzzing, forming a cyclic process until the preset fuzzing target is achieved. However, this workflow has significant efficiency and accuracy flaws as follows.

Heavy manual dependence. The three core steps—protocol information extraction, script construction, and script adjustment—all depend on manual operations. Not only does this lead to high labor and time costs, but it also tends to introduce errors (e.g., deviations in protocol information parsing, flaws in script writing, and inadequate optimization accuracy). These issues stem from variations in human domain expertise and operational oversights, ultimately undermining the effectiveness of fuzzing.

Single-threaded fuzzing. Boofuzz uses a single-threaded mode, executing only one set of script logic at a time. It cannot explore the protocol’s multidimensional syntax space and state space in parallel, resulting in limited test coverage and low overall efficiency.

2.2. Application of LLMs in Protocol Fuzzing

In recent years, technologies related to LLMs have developed rapidly. Leveraging their exceptional capabilities in information extraction, logical reasoning, and code generation, the application of LLMs in protocol fuzzing has gained rapid traction. ChatAFL [24] uses pre-trained LLMs to extract machine-readable protocol information and assist in generating high-quality seeds, effectively improving fuzzing efficiency. However, it struggles to handle unknown protocol scenarios. ArtifactMiner [25] leverages GPT-3.5 to learn from a small number of samples and generate Modbus protocol description specifications, with field integrity close to that designed by human experts. Nevertheless, its generation performance highly depends on the quality of initial samples. mGPTfuzz [26] uses LLMs to extract Matter IoT protocol specifications and successfully constructs a large number of valid test cases. However, it lacks refined guidance in fuzzing strategies, leading to random test case generation. ChatHTTPFuzz [27] uses LLMs to parse HTTP protocol standard documents and automatically extract HTTP protocol structures through specific prompt templates, improving the automation and accuracy of protocol fuzzing. However, its specific prompt templates are only applicable to HTTP, limiting its universality. Maklad et al. [28] proposed an agent-based LLM architecture integrating Retrieval-Augmented Generation and Chain-of-Thought prompting, which improves the efficiency of fuzzing seed generation and protocol state space coverage. However, this architecture is complex, and the retrieval module highly depends on the completeness and timeliness of the protocol knowledge base. LLMIF [29] employs enhanced LLMs to analyze protocol specifications, mitigating issues in IoT protocol fuzzing such as ambiguous message formats, unclear dependencies, and inadequate test case evaluation. However, it is only applicable to IoT device scenarios and requires hardware support for implementation. CHATFuMe [30] leverages LLMs to automatically construct protocol state models and generate executable programs for state sequence message generation, thereby enabling LLM-powered stateful protocol fuzzing. However, this method relies on protocol standard documents and cannot handle scenarios based on real traffic. Furthermore, despite numerous existing explorations in this field, we find that LLMs still present the following issues in their application to protocol fuzzing:

Existing studies neglect extracting protocol information via LLM-based real traffic parsing. In practical vulnerability detection, numerous unknown or private protocols lack public documentation, necessitating real traffic analysis to infer protocol details (e.g., field structures and interaction rules). Even for public protocols like HTTP, implementation-specific message designs (e.g., omitted Cookie fields and custom headers) are prevalent. Yet existing works primarily extract protocol information from standard documents or technical texts, failing to address such scenarios.

Existing studies overlook the construction of generation-based black-box protocol fuzzing scripts via LLMs. As a critical step in generation-based black-box protocol fuzzing, script construction remains underexplored in leveraging LLMs’ potential. Compounded by LLMs’ inherent generation uncertainties, generating executable scripts with effective fuzzing strategies remains an unaddressed challenge.

Existing studies are deficient in research on LLM-assisted dynamic adjustment and optimization of black-box protocol fuzzing. Traditional black-box protocol fuzzing relies heavily on manual intervention for test strategy iteration, suffering from inherent inefficiencies, poor real-time performance, and human oversight. While LLM-based agent technologies offer potential for dynamic optimization—via real-time collection of test results, LLM-driven script adjustments, and adaptive fuzzing iteration—this avenue remains unexplored in the existing literature.

Drawing on the above analysis, we propose LLM-Boofuzz. Built upon the Boofuzz tool and designed to tackle the limitations of conventional methods, LLM-Boofuzz incorporates three key parts: LLM-based real traffic protocol information extraction, LLM-based script generation and repair, and multi-script iterative fuzzing—thereby enabling LLM-powered enhancement of the entire workflow for generation-based black-box protocol fuzzing.

3. Design of LLM-Boofuzz

3.1. Overview

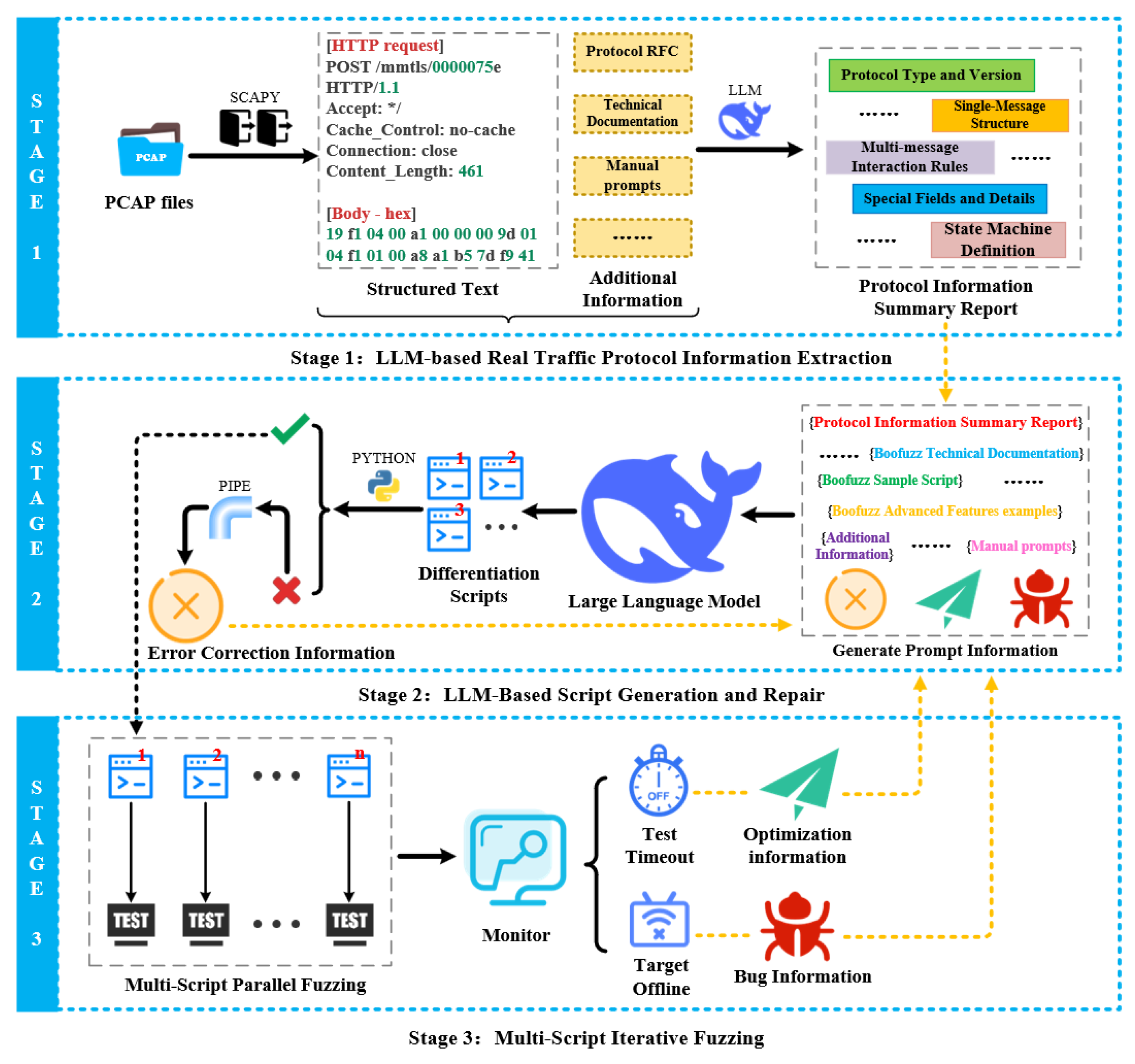

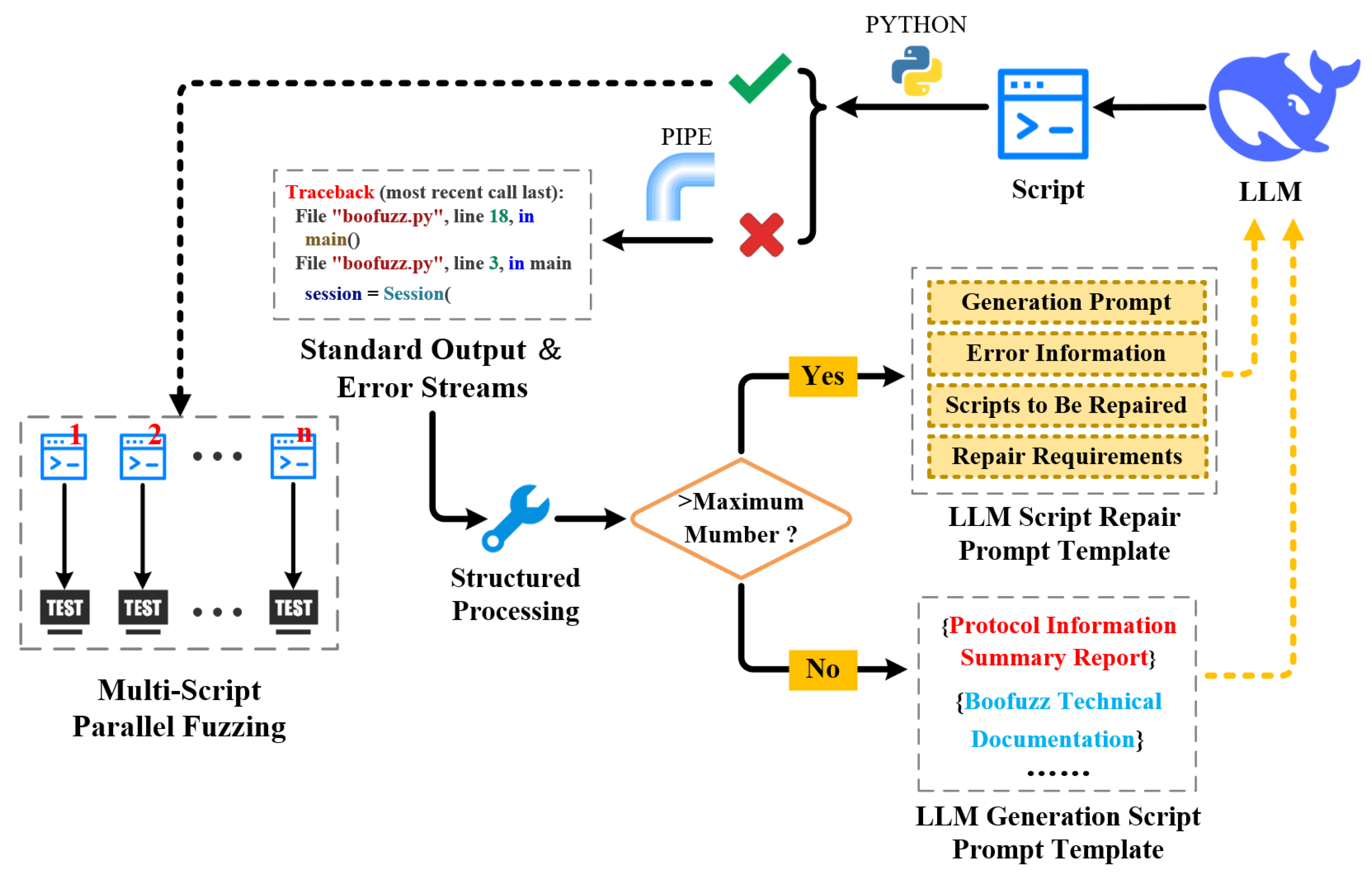

As shown in Figure 3, LLM-Boofuzz takes real traffic as input and leverages LLMs’ text analysis and code generation capabilities and the Boofuzz tool to construct a fuzzing workflow consisting of three stages: LLM-based real traffic protocol information extraction, LLM-based script generation and repair, and multi-script iterative fuzzing.

Stage 1: LLM-Based Real Traffic Protocol Information Extraction. First, LLM-Boofuzz uses Scapy [31] scripts to convert real communication messages of the target protocol program into structured text containing key information (e.g., field structures and interaction sequences), providing LLMs with parsable input for analysis. Prompts are then designed to guide LLMs through an in-depth analysis of the structured text, extracting protocol information (e.g., message structures, field constraints, and protocol state machines) to support subsequent script generation.

Stage 2: LLM-Based Script Generation and Repair. Then, based on the extracted protocol information, LLM-Boofuzz integrates Boofuzz technical documentation, sample scripts, examples of advanced Boofuzz functions, and manual prompts to guide LLMs in generating multiple scripts that comply with Boofuzz syntax specifications and feature differentiated fuzzing strategies. These generated scripts undergo a trial run: for those that fail to execute, error output is captured via pipes and combined with Boofuzz technical documentation to form error correction information, which then guides LLMs in repairing the scripts—ultimately ensuring their executability.
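The trial-run step of Stage 2 can be sketched with the standard library alone. The sketch below is an assumption about how such a step might look, not the LLM-Boofuzz implementation: the function names, the prompt wording, and the choice to treat a script that is still running at the timeout as executable are all hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def trial_run(script_path: str, timeout: float = 10.0) -> tuple[bool, str]:
    """Run a script in a child process and capture its stderr through a pipe."""
    try:
        proc = subprocess.run(
            [sys.executable, script_path],
            capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # Assumption: a script still running at the timeout started without error.
        return True, ""
    return proc.returncode == 0, proc.stderr


def build_repair_prompt(script: str, error: str, docs_excerpt: str) -> str:
    """Combine the failing script, its error output and documentation text."""
    return (
        "The following Boofuzz script failed to run.\n"
        f"--- script ---\n{script}\n"
        f"--- error output ---\n{error}\n"
        f"--- documentation ---\n{docs_excerpt}\n"
        "Return a corrected script only."
    )


with tempfile.TemporaryDirectory() as tmp:
    broken = Path(tmp) / "broken.py"
    # s_initialize is never imported, so the trial run ends with a NameError.
    broken.write_text("s_initialize('user')\n")
    ok, err = trial_run(str(broken))
    prompt = build_repair_prompt(broken.read_text(), err, "(documentation excerpt)")
```

The resulting `prompt` would then be sent to the LLM, and the returned script would go through `trial_run` again until it executes or a retry limit is reached.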

Stage 3: Multi-Script Iterative Fuzzing. Finally, LLM-Boofuzz launches one copy of the target protocol program per script, all running simultaneously, and uses a process isolation mechanism to ensure that each script executes its testing tasks independently. Throughout the process, the monitoring module tracks the fuzzing status in real time via two types of non-intrusive monitoring logic:

If a script fails to trigger any exceptions for a duration exceeding the preset threshold, it is determined to have insufficient testing capability and marked as an object to be optimized.

Through network connection polling and background process monitoring, exceptions such as program crashes and hangs are identified, and information, including the script that triggered the exception, the test case, and the execution timestamp, is recorded.

The two types of monitoring data are integrated into structured feedback information, which is inputted into LLMs in real time to support the dynamic adjustment of fuzzing strategies. For scripts that have timed out without triggering any exceptions, LLMs adjust and optimize the scripts based on protocol information, the original script’s fuzzing strategy, and other relevant data. For scripts that have triggered exceptions, LLMs conduct in-depth analysis of the scripts by combining information such as the original script’s fuzzing strategy, the test case that triggered the exception, and the exception triggering time so as to explore more associated Proof-of-Concept (PoC) instances. Through this closed-loop iterative mechanism, LLM-Boofuzz not only ensures the continuity of the testing process but also improves the efficiency and comprehensiveness of vulnerability discovery.
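The two monitoring rules can be sketched as follows. This is a simplified illustration under stated assumptions: `target_alive`, `ScriptStatus`, and `classify` are hypothetical names, only TCP connection polling is shown (background process monitoring is omitted), and a refused connection is taken as the sign of a crash.

```python
import socket
import time
from dataclasses import dataclass, field


def target_alive(host: str, port: int, timeout: float = 1.0) -> bool:
    """Connection polling: the target counts as alive if it accepts a TCP connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


@dataclass
class ScriptStatus:
    name: str
    started: float = field(default_factory=time.monotonic)
    exceptions: list = field(default_factory=list)


def classify(status: ScriptStatus, alive: bool, last_case: bytes,
             idle_limit: float, now: float) -> str:
    """Return 'exception', 'optimize' or 'running' for one script."""
    if not alive:
        # Record which script, which test case and when, as feedback for the LLM.
        status.exceptions.append(
            {"script": status.name, "test_case": last_case, "time": now})
        return "exception"
    if not status.exceptions and now - status.started > idle_limit:
        return "optimize"  # no exception within the preset threshold
    return "running"


# Demonstration against a local listener that is then shut down.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]
up = target_alive("127.0.0.1", port)
listener.close()
down = target_alive("127.0.0.1", port)
```

A hung target may still accept connections at the kernel level, so detecting hangs would need an additional protocol-level probe or the process monitoring mentioned above.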

The following sections detail the implementation of LLM-Boofuzz from three aspects: LLM-based real traffic protocol information extraction, LLM-based script generation and repair, and multi-script iterative fuzzing.

3.2. LLM-Based Real Traffic Protocol Information Extraction

To address the challenges of low efficiency and high error rate in manually parsing real traffic to extract protocol information in general black-box protocol fuzzing, LLM-Boofuzz establishes an LLM-based real traffic protocol information extraction method. This method first converts real traffic into structured text that LLMs can analyze and then guides LLMs to parse the structured text for extracting protocol information. Sections 3.2.1 and 3.2.2 describe these two steps in detail.

3.2.1. Real Traffic Conversion Method

This subsubsection details the method for converting real traffic into structured text: the use of Scapy scripts to process .pcap packets, the key information to retain during conversion, the organization of the text, and the optimal conversion strategy determined through experiments. Because current LLMs cannot parse .pcap packets directly, the converted structured text is what is input to the LLMs for protocol information extraction and analysis. We find that the text format directly affects LLMs’ parsing performance:

Finding 1. The text format must have structured expression capabilities to ensure complete transmission of information such as protocol fields and message order.

Finding 2. The text format should be lightweight, because excessive redundant information degrades the LLM analysis.

Therefore, we compare and screen common text formats to explore the optimal method for LLM-based protocol information extraction.

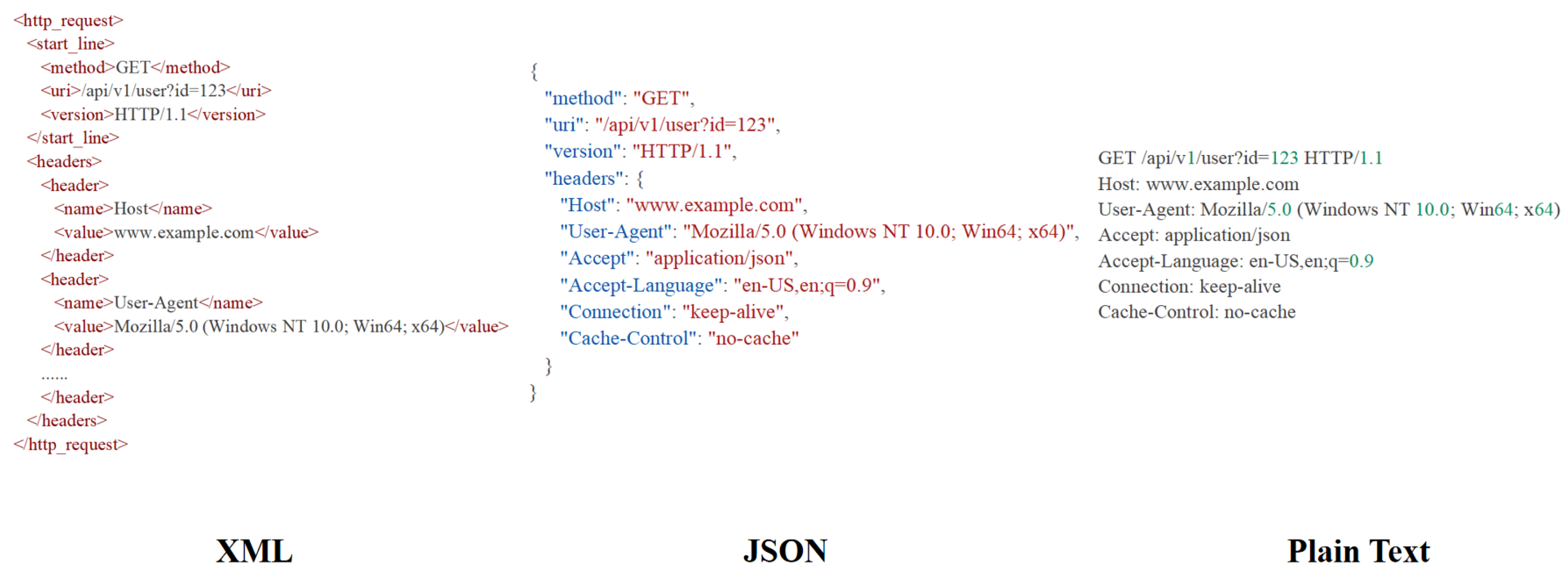

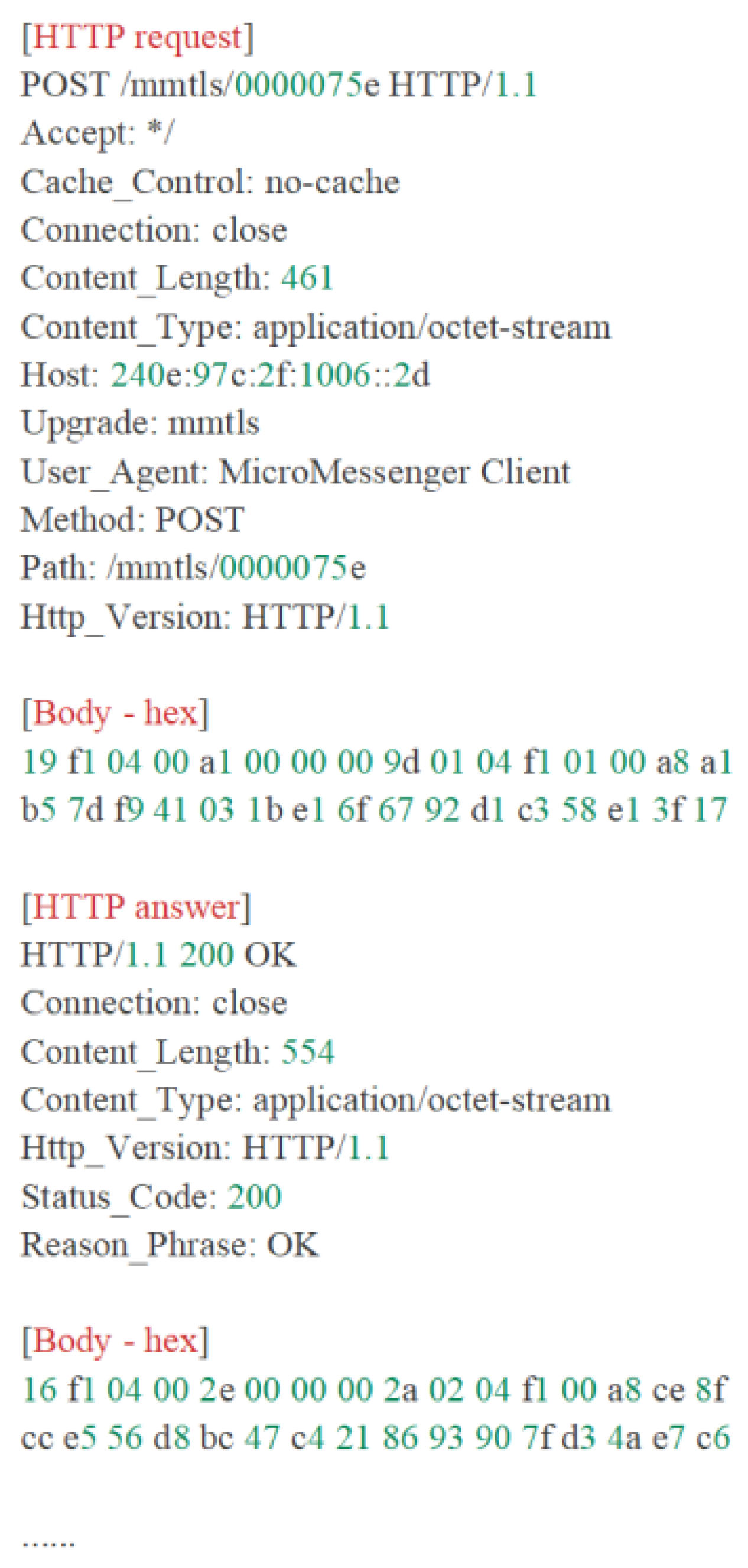

As shown in Figure 4, the mainstream structured data exchange formats include the following:

XML. Although it has a strict tag-nested structure, its cumbersome closing tags (e.g., <field name="src_ip">192.168.1.1</field>) introduce a large number of redundant characters, significantly increasing text length [32].

JSON. With key–value pairs (e.g., “src_ip”: “192.168.1.1”) and array structures, it can clearly express the hierarchical relationship of messages while being more concise than XML [33].

Plain Text. This is the most lightweight format, and it can achieve more structured descriptions through custom separators (e.g., using \n to separate fields, to distinguish message types).

Next, it is necessary to consider how to handle binary content in messages during conversion to structured text. As shown in Table 1, many protocols contain binary fields. When messages are converted into readable text, retaining all untranslatable binary content would add redundant, useless information and increase analysis complexity.

Based on the above research, we design and compare three structured text conversion methods:

JSON Format Conversion. Organize traffic packet information (e.g., timestamp, source/destination address, port, protocol type, and field information) into structured text in JSON format. Based on the observation that key information of binary content is mostly concentrated in the header area and hexadecimal bytes are shorter and widely used in message storage, binary content in messages is converted to hexadecimal bytes, with 30–50 bytes retained.

Full Readable Text Conversion. Fully translate parts of the message that can be parsed into readable text, convert binary content to hexadecimal bytes, and retain all of them.

Concise Readable Text Conversion. Fully retain parts of the message that can be parsed into readable text, convert binary content to hexadecimal bytes, and retain 30–50 bytes.

Based on Finding 2, in order to quantitatively evaluate the resource consumption differences of the three traffic conversion methods, we design a comparative experiment: 100 real HTTP messages (stateless protocol) and 100 FTP messages (stateful protocol) are processed using the three conversion methods. The number of lines of the converted structured text and the token consumption for LLM analysis are counted. The results are shown in Table 2.

Experimental results show that Method 3 (Concise Readable Text Conversion) performs best in resource consumption control: in terms of the number of text lines, for HTTP, Method 3’s line count is only 41.7% of Method 1’s and 58.3% of Method 2’s, while for FTP, it is 48.7% of Method 1’s and 59.4% of Method 2’s—significantly reducing the size of structured text. As for token consumption, for HTTP, Method 3 reduces token usage by 58.0% compared to Method 1 and 43.4% compared to Method 2, and for FTP, it reduces token usage by 50.7% compared to Method 1 and 40.0% compared to Method 2—with a particularly significant gap compared to Method 1.

Interestingly, although Method 1 retains only part of the binary content, its generated text lines and token consumption are higher than those of Method 2 (which retains all binary content). We analyze the reasons as follows:

JSON format uses a large number of { } and line breaks, increasing the number of lines of structured text. Additionally, the frequent repetition of key–value content (e.g., “methods”, “version”, and “http.payload”) in JSON significantly increases token consumption.

Most of the 100 captured messages are regular request messages, with only a small number involving binary file transfer—leading to higher resource consumption in Method 1 than in Method 2. However, this does not affect the conclusion that “Method 3 performs best in resource consumption control”.
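The first reason can be reproduced on a toy input. The two HTTP request lines below are made up for illustration and are not the captured traffic used in the experiment; character and line counts serve only as a rough proxy for token consumption, which depends on the tokenizer of each model.

```python
import json

# Two hypothetical HTTP requests, described by the same three keys.
messages = [
    {"method": "GET", "uri": "/index.html", "version": "HTTP/1.1"},
    {"method": "POST", "uri": "/login", "version": "HTTP/1.1"},
]

# JSON rendering: braces, quotes and key names are repeated for every message.
as_json = json.dumps(messages, indent=2)

# Plain-text rendering: one line per message with a direction separator.
as_text = "\n".join(
    f"[Request] {m['method']} {m['uri']} {m['version']}" for m in messages
)

json_lines = as_json.count("\n") + 1
text_lines = as_text.count("\n") + 1
print(f"JSON: {json_lines} lines, {len(as_json)} characters")
print(f"Text: {text_lines} lines, {len(as_text)} characters")
```

Each additional message adds another copy of every key name to the JSON text, while the plain-text form grows by a single line.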

To explore the impact of conversion methods on LLMs’ protocol parsing performance, a control experiment was designed: 100 HTTP and 100 FTP messages were processed via the three conversion methods and tested on four representative first-tier LLMs: DeepSeek-R1-72B, DeepSeek-R1-256B, Qwen3-256B, and GPT-5. These models are recognized for their strong performance on mainstream benchmarks and excel in semantic understanding, code generation, and logical reasoning, particularly in complex tasks like protocol traffic parsing. With a fixed temperature of 0.3 and consistent prompts, each model parsed messages to generate protocol information summary reports, with 50 repetitions per group. Reports were independently evaluated by two professional researchers. A report was judged as “good” only if its protocol information (e.g., structure and field constraints) aligned with RFC specifications or actual traffic and both researchers concurred. The number of “good” reports (Effectiveness Score) is shown in Table 3.

Experimental results show that across both HTTP and FTP protocols, all four advanced first-tier LLMs (DeepSeek-R1-72B, DeepSeek-R1-256B, Qwen3-256B, and GPT-5) successfully completed the protocol parsing task, with Method 3 (Concise Readable Text Conversion) consistently yielding more “good” reports than Method 1 (JSON Format Conversion) and Method 2 (Full Readable Text Conversion). This advantage was most notable for FTP (a more complex protocol) when paired with DeepSeek-R1-256B. Additionally, larger-parameter models outperformed smaller ones: DeepSeek-R1-256B generated more “good” reports than DeepSeek-R1-72B under all methods, suggesting that increased parameters may enhance protocol analysis capabilities. The consistent effectiveness of Method 3 across these top-performing LLMs confirms that our approach is not dependent on specific models but universally applicable, thereby validating the reliability and effectiveness of our research method.

As shown in Figure 5, based on the above research, the structured text conversion method finally determined in this paper is as follows:

Fully retain parts of the message that can be parsed into readable text.

Convert binary content to hexadecimal bytes and retain 30–50 bytes to control token consumption.

Organize sent and received messages in chronological order and distinguish message boundaries using separators (e.g., [Request], [Response], and line breaks) to help LLMs parse the text.
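The three rules above can be sketched without Scapy by assuming that each packet has already been reduced to a `(timestamp, direction, payload)` tuple. The function names, the 32-byte limit (one value within the 30–50 byte range), and the decision to treat a payload as entirely text or entirely binary are simplifying assumptions, not the conversion script used by LLM-Boofuzz.

```python
import string

# Byte values considered readable text (letters, digits, punctuation, whitespace).
PRINTABLE = set(string.printable.encode("ascii"))


def render_payload(payload: bytes, max_binary: int = 32) -> str:
    """Keep printable payloads as text; hex-encode and truncate binary ones."""
    if payload and all(b in PRINTABLE for b in payload):
        return payload.decode("ascii").strip()
    kept = payload[:max_binary]
    dropped = len(payload) - len(kept)
    suffix = f" ... ({dropped} more bytes)" if dropped else ""
    return kept.hex(" ") + suffix


def to_structured_text(messages: list[tuple[float, str, bytes]]) -> str:
    """Render (timestamp, direction, payload) tuples in chronological order."""
    lines = []
    for _, direction, payload in sorted(messages, key=lambda m: m[0]):
        lines.append(f"[{direction}]")      # separator marking the message boundary
        lines.append(render_payload(payload))
        lines.append("")                    # blank line between messages
    return "\n".join(lines)


capture = [
    (2.0, "Response", b"230 Login successful.\r\n"),
    (1.0, "Request", b"PASS guest\r\n"),
    (3.0, "Response", bytes(range(256))),   # stands in for a binary transfer
]
text = to_structured_text(capture)
print(text)
```

A fuller version would split mixed messages, such as an HTTP response with text headers and a binary body, and apply the truncation to the binary part only.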

3.2.2. LLM Parsing to Generate Protocol Information Summary Report

After converting real traffic into LLM-parsable structured text, the core task is to use LLMs for in-depth analysis to extract protocol information and generate a comprehensive, standardized protocol information summary report. This report serves as the foundation for guiding LLMs in generating high-quality scripts. This process requires well-designed prompts to guide LLMs in exerting their semantic understanding and knowledge integration capabilities, clearly defining the key content to be included in the report and the redundant information to be avoided, and determining the appropriate LLM parameter size and message input scale.

Guided by the authoritative prompt engineering literature [

34,

35,

36,

37,

38,

39,

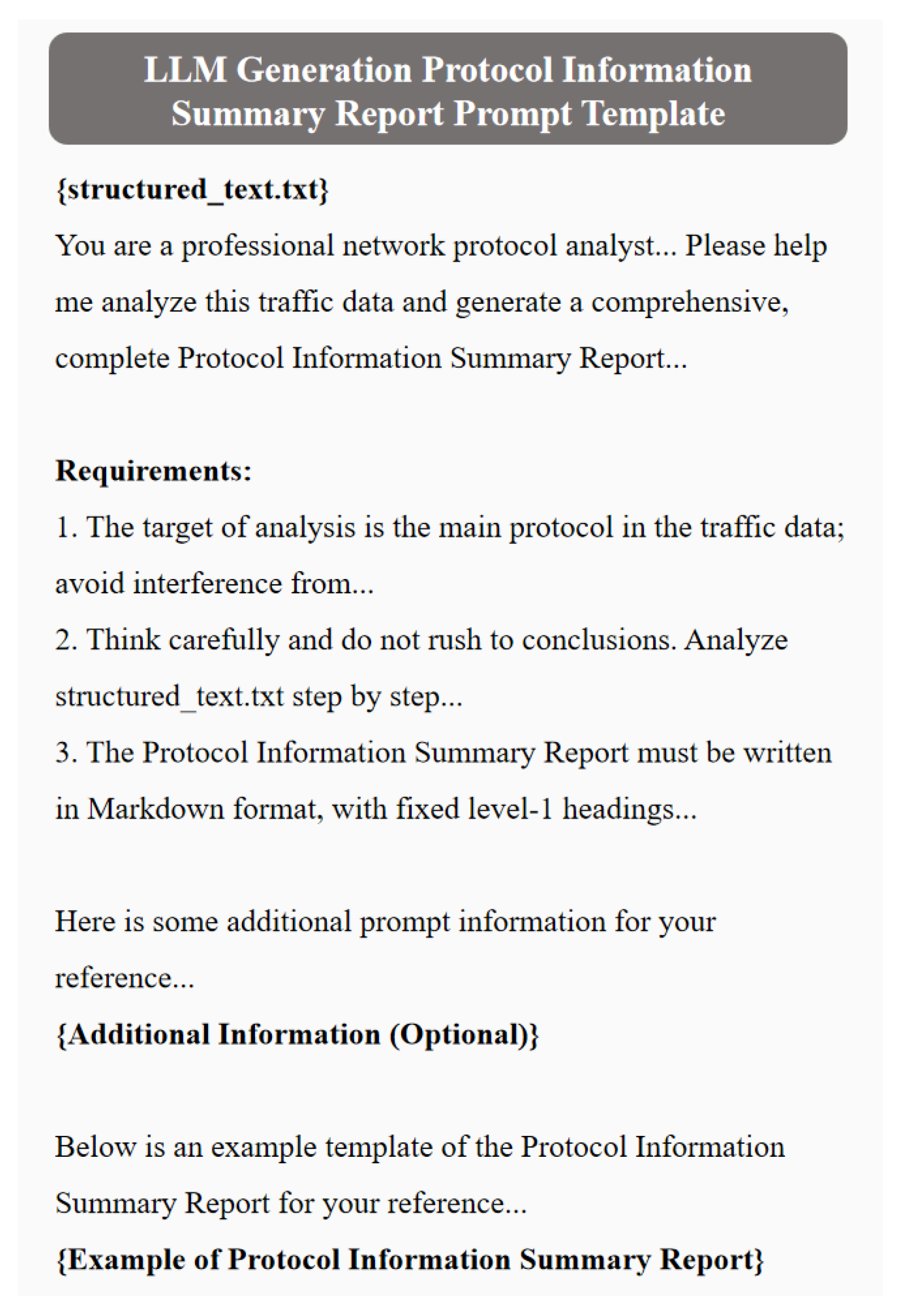

40], the prompt design for protocol information extraction follows the following logic:

Clarify Objectives. Clearly define the target of analysis as the main protocol in the structured text, avoiding interference from underlying details and sub-protocols irrelevant to the protocol logic.

Provide References. Offer template examples of the protocol information summary report, specifying the typical content and format of each part.

Guide Step-by-Step Reasoning. Require LLMs to perform analysis in three steps—“first parse single-message fields, then sort out multi-message interaction sequences, and finally summarize state machine rules.” This structured approach reduces analysis complexity.

Standardize Input and Output. Mandate that the protocol information summary report uses Markdown format, with five fixed level-1 headings:

Protocol Type and Version;

Single-Message Structure (presented as a table, including field name, data type, length range, default value, and constraints);

Multi-Message Interaction Rules;

State Machine Definition (describe state nodes and transition conditions in text, e.g., “State S1 (Unauthenticated) → Receive USER command → State S2 (Authenticating)”);

Special Fields and Details.

Role Setting. Explicitly instruct LLMs to “act as a professional network protocol analyst with in-depth knowledge of TCP/IP and application-layer protocols, capable of accurately identifying field structures, interaction rules, and state transition logic from traffic text.” This professional role setting prompts the model to output results that comply with domain specifications.

Meanwhile, when generating the protocol information summary report, LLMs are reminded to avoid three types of redundant information:

Underlying Protocol Details. Examples include preamble codes of Ethernet frames and CRC check values—details at the link layer irrelevant to the target protocol logic—to avoid interfering with the analysis of application-layer protocols.

Redundant Descriptions of Non-Core Fields. For fixed padding fields in the protocol (e.g., blank line separators in HTTP messages), only their positions and formats need to be noted, without repeated explanations of their functions.

Ambiguous Speculative Content. If LLMs cannot clearly infer the constraints of a field from the traffic, they should mark the field as “to be verified” and avoid redundant descriptions—instead of generating uncertain assumptions—thereby improving report accuracy.

In summary, this paper constructs the LLM protocol information summary report generation prompt template shown in Figure 6.
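The design rules above can be sketched as a prompt builder plus a check on the returned report. This is not the template in Figure 6: the prompt wording is a shortened paraphrase of the rules, and `build_prompt` and `missing_headings` are hypothetical helpers.

```python
import re

# The five fixed level-1 headings required in the summary report.
REQUIRED_HEADINGS = [
    "Protocol Type and Version",
    "Single-Message Structure",
    "Multi-Message Interaction Rules",
    "State Machine Definition",
    "Special Fields and Details",
]


def build_prompt(structured_text: str) -> str:
    """Assemble role, objective, reasoning steps and output format into one prompt."""
    headings = "\n".join(f"# {h}" for h in REQUIRED_HEADINGS)
    return (
        "Act as a professional network protocol analyst.\n"
        "Analyze only the main protocol in the traffic below.\n"
        "First parse single-message fields, then sort out multi-message "
        "interaction sequences, and finally summarize state machine rules.\n"
        'Mark any constraint you cannot infer as "to be verified".\n'
        f"Answer in Markdown with exactly these level-1 headings:\n{headings}\n"
        f"--- traffic ---\n{structured_text}"
    )


def missing_headings(report: str) -> list[str]:
    """Return the required level-1 headings that the report lacks."""
    found = {m.strip() for m in re.findall(r"^# (.+)$", report, flags=re.MULTILINE)}
    return [h for h in REQUIRED_HEADINGS if h not in found]


# An incomplete report: three of the five sections are absent.
report = "# Protocol Type and Version\nFTP\n# State Machine Definition\nS1 -> S2\n"
gaps = missing_headings(report)
```

A check of this kind corresponds to the completeness criterion used in the evaluation that follows, although the paper relies on human reviewers rather than an automated test.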

To determine the optimal analysis configuration, we design an experiment to compare the quality of protocol information summary reports generated under different conditions. The experimental variables include LLMs’ parameter sizes (8B, 72B, and 256B) and the number of input traffic messages (25, 50, 100, and 200), while the evaluation metrics are completeness (whether the report covers the five core content categories listed above) and accuracy (whether field constraints and state machine rules comply with RFC specifications or actual traffic).

Each group of experiments is repeated 50 times. Two professional protocol security researchers jointly evaluate whether the protocol information summary report is “good” in terms of completeness and accuracy. The number of “good” evaluations for each group is counted.

Table 4 shows that larger-parameter LLMs generally produce more “complete” and “accurate” reports. Report completeness is largely independent of the input message count (e.g., the 72B model already achieves high “complete” counts with 25 messages). Report accuracy, by contrast, rises with LLM parameter size and also shows a “rise-then-stabilize” trend as the message count increases: with 25 messages, LLMs miss low-frequency methods and fields, accuracy improves as more messages are supplied, and quality differs little beyond 100 messages. We attribute this to two effects. Completeness depends on the LLM’s task understanding and output control, which are tied to parameter size rather than message count, whereas accuracy requires both sufficient parameters and an adequate number of messages, because too few messages cause the model to over-focus on local samples and miss the broader picture. The configuration adopted in this study is therefore an LLM with ≥256B parameters combined with ≥100 real traffic messages as input.

Moreover, for state machine extraction in stateful protocols (e.g., FTP and SMTP), LLM-Boofuzz avoids Bleem-like approaches [41] (which dynamically generate/refine state machines via real-time traffic analysis during fuzzing) for three reasons: first, Boofuzz’s generation-based fuzzing (vs. mutation-based) hinders independent exploration of new protocol states from real-time interactions; second, black-box scenarios only allow inferring state machine info from a few returned messages, limiting dynamic state perception; and third, such dynamic mechanisms need protocol-specific customization (high engineering effort), conflicting with LLM-Boofuzz’s goal of lightweight, universal automated analysis.

Based on the above, LLM-Boofuzz addresses state machine extraction for stateful protocols in two ways: first, it filters irrelevant packets during real traffic test casing, retaining more than 100 target protocol packets covering multiple types to provide LLMs with comprehensive basic information for state machine analysis and ensure construction integrity; second, it guides LLMs via prompt design in the script iterative optimization stage to proactively infer target protocol state machine composition and generate corresponding test scripts, endowing Boofuzz with LLM-driven state machine inference and mutation capabilities. Experimental verification shows that in FTP testing with 100 complete session packets (including login, upload, download, and logout), state machine rules generated by DeepSeek-R1-256B, Qwen3-256B, and GPT-5 all align with RFC 959 specifications (accurately describing transitions like “PORT command triggering data connection establishment”). This consistency across multiple advanced LLMs further validates the reliability of our method in enabling stateful protocol analysis.

Through the above design, LLMs can efficiently extract protocol information from structured text and generate standardized, comprehensive protocol information summary reports—providing support for subsequent script construction.
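As an illustration of the textual state machine format requested in the report (e.g., “State S1 (Unauthenticated) → Receive USER command → State S2 (Authenticating)”), the following minimal sketch encodes such rules as a transition table. Only the S1 → S2 transition on USER is taken from the text; the other states, the PASS and QUIT transitions, and all function names are illustrative assumptions, not the representation used by LLM-Boofuzz.

```python
from typing import Dict, List, Tuple

# (current state, received command) -> next state.
# Only the USER transition appears in the paper; PASS and QUIT are
# assumed here to make the example walkable.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("S1_Unauthenticated", "USER"): "S2_Authenticating",
    ("S2_Authenticating", "PASS"): "S3_Authenticated",
    ("S3_Authenticated", "QUIT"): "S1_Unauthenticated",
}


def next_state(state: str, command: str) -> str:
    """Return the state reached after `command`; stay put if no rule matches."""
    return TRANSITIONS.get((state, command.upper()), state)


def walk(commands: List[str], start: str = "S1_Unauthenticated") -> List[str]:
    """Replay a command sequence and return every state visited, in order."""
    states = [start]
    for command in commands:
        states.append(next_state(states[-1], command))
    return states
```

A table of this kind makes it easy to check which state a given command sequence should reach before choosing the state node to fuzz.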

3.3. LLM-Based Script Generation and Repair

To address the issues in general generation-based black-box protocol fuzzing, where script writing is highly dependent on manual experience and LLMs tend to generate scripts with poor executability and insufficient advanced feature coverage, LLM-Boofuzz establishes an LLM-based script generation and repair method. This method guides LLMs to generate high-quality scripts with differentiated fuzzing strategies through prompt engineering and ensures script executability via an error capture and repair process. This subsection elaborates on the specific implementation of this method in Section 3.3.1 and Section 3.3.2.

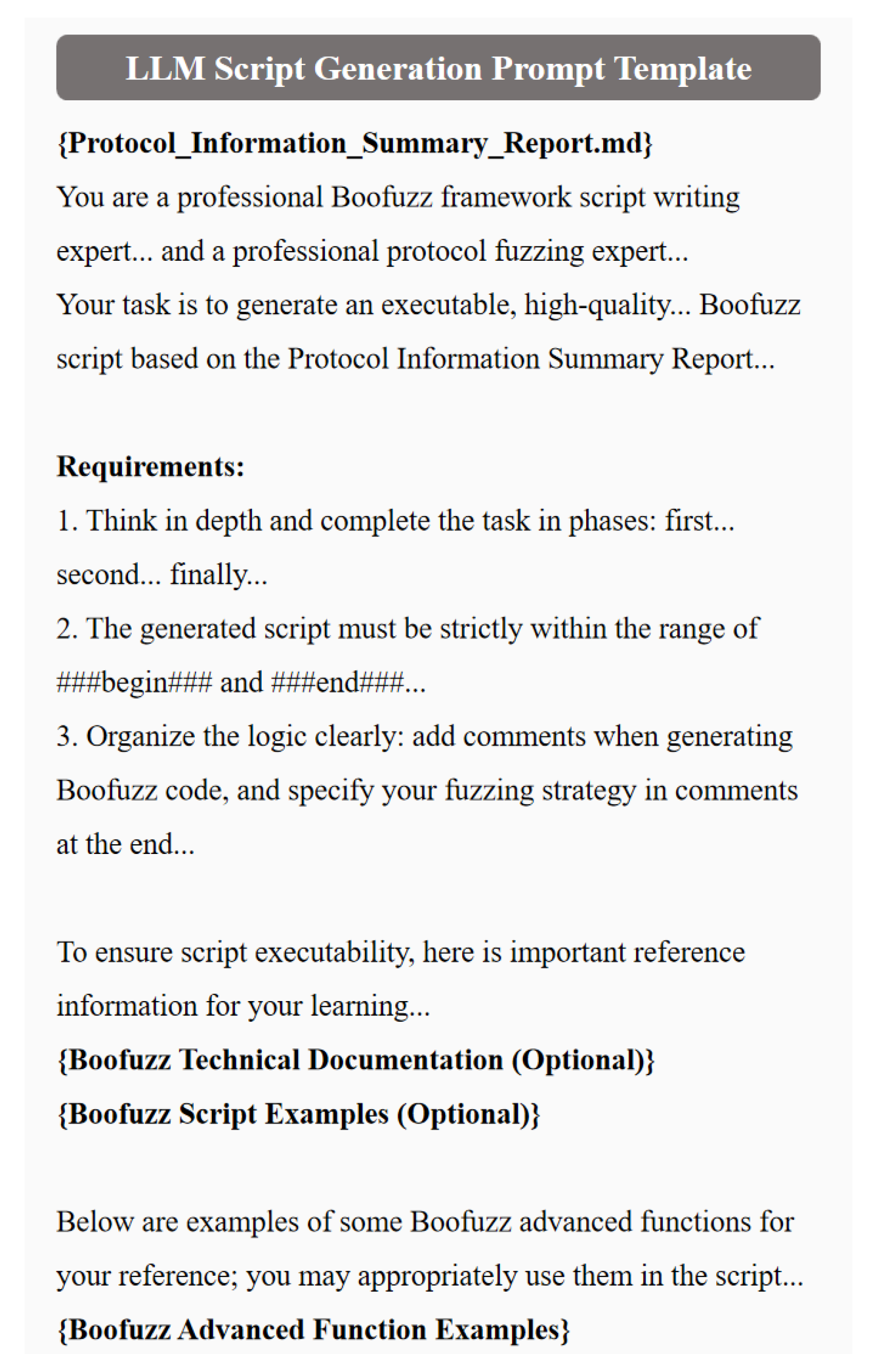

3.3.1. LLM-Based Script Generation

During practical exploration of using LLMs to assist in script generation, we identified three issues that restrict the effectiveness of fuzzing. First, scripts generated by LLMs often have executability defects, such as calling deprecated Boofuzz functions, containing syntax errors, or misusing the Application Programming Interfaces (APIs) of the Boofuzz tool; these defects directly prevent scripts from starting the fuzzing process. Second, for advanced Boofuzz features such as callback function registration, process monitoring, and network monitoring, the quality of LLM-generated code decreases significantly and usually cannot meet the needs of in-depth fuzzing. Third, LLMs exhibit a “simplification tendency” during script generation: they default to scripts with simpler structures and functions, while the complex fuzzing strategies required in real vulnerability detection scenarios are difficult for LLMs to produce unaided. We believe that these issues stem from two factors. First, limited public Boofuzz technical materials and sample scripts online leave LLMs inadequately trained on its syntax, features, and high-quality examples. Second, generating high-quality, differentiated scripts requires systematic prompt engineering, but current fragmented prompting lacks standardization, hindering consistent quality. To tackle these, this subsection focuses on prompt engineering, using standardized prompt templates to resolve issues with LLM-generated scripts in executability, feature completeness, and complexity.

The prompt template design for script generation in LLM-Boofuzz is as follows:

Clarify Objectives. The prompt clearly defines the core goal of script generation: “generate executable, high-quality scripts with differentiated fuzzing strategies” based on input such as the protocol information summary report. It explicitly requires strict compliance with the syntax rules of the latest Boofuzz version (0.4.2), coverage of key protocol fields, and obvious differences in fuzzing strategies (e.g., field mutation methods and test case sequences) between generated scripts, which provides support for subsequent multi-script fuzzing.

Provide References. To address poor script executability and missing advanced features, three types of reference resources are embedded in the prompt: First, the latest Boofuzz technical documentation, which specifies function parameter definitions, version change records, and a list of non-deprecated APIs to ensure LLMs master Boofuzz syntax specifications; second, excellent script examples, which cover standard implementations of core modules (e.g., protocol field definition, state machine construction, and test case sequence design) and include adaptation cases for typical protocols (e.g., HTTP and FTP) to provide structural references for LLMs; and third, advanced feature demonstration code, which details the calling process and parameter configuration of advanced Boofuzz functions (e.g., callback function registration, process monitoring, and proxy support) to assist LLMs in generating scripts with in-depth fuzzing capabilities.

Split Complex Tasks. When LLMs generate multiple high-quality, differentiated scripts in a single run, the high task complexity often degrades script quality. To resolve this, a “single script per run + multi-round generation” strategy is adopted: the prompt is designed with a loop call logic to guide LLMs to focus on generating one script at a time, and multi-round repetition meets the demand for multiple scripts.

Guide Step-by-Step Reasoning. The LLMs are set to use an in-depth reasoning mode, splitting the script generation process into three steps: “Protocol Parsing” → “Strategy Design” → “Code Generation”. The prompt includes guiding logic: “Please strictly follow the three steps of ’Protocol Parsing’, ’Strategy Design’, and ’Code Generation’ to advance step by step, refining the logic in each phase and avoiding hasty conclusions”—improving the logical rigor of the script. Additionally, LLMs are required to embed comments in the script code and supplement fuzzing strategy explanations at the end of the script. This not only helps LLMs organize reasoning logic and enables researchers to understand fuzzing ideas but also lays the foundation for subsequent dynamic script iterative optimization.

Standardize Input and Output. Structured processing of input and output is achieved through separators: for the input layer, the protocol information summary report is wrapped in <PROTOCOL_INFO> tags, and reference scripts are marked with <EXAMPLE_SCRIPT> tags—assisting LLMs in accurately identifying task boundaries and improving information understanding accuracy; for the output layer, LLMs are required to strictly limit the generated script within the tags ###begin### and ###end###, enabling quick extraction of script code through simple string matching.

Role Setting. The LLMs are assigned dual expert roles—“Boofuzz tool Script Writing Expert” and “Protocol Fuzzing Expert”—with clear definitions of the capabilities required of these experts. This guides LLMs to output scripts that meet fuzzing requirements from a professional perspective.

Based on the above, this paper constructs the LLM script generation prompt template shown in Figure 7.
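The “Standardize Input and Output” step can be sketched as follows. This is a minimal illustration, assuming closing tags of the form `</PROTOCOL_INFO>` and `</EXAMPLE_SCRIPT>` and a short placeholder instruction; the function names `build_prompt` and `extract_script` are hypothetical and the actual template is the one in Figure 7.

```python
import re
from typing import Optional


def build_prompt(protocol_report: str, example_script: str) -> str:
    """Wrap the inputs in delimiter tags so task boundaries are explicit."""
    return (
        "Generate one executable Boofuzz script.\n"  # placeholder instruction
        f"<PROTOCOL_INFO>\n{protocol_report}\n</PROTOCOL_INFO>\n"
        f"<EXAMPLE_SCRIPT>\n{example_script}\n</EXAMPLE_SCRIPT>\n"
        "Place the script between ###begin### and ###end###."
    )


# Non-greedy match so only the first delimited script is captured.
_SCRIPT_RE = re.compile(r"###begin###(.*?)###end###", re.DOTALL)


def extract_script(llm_output: str) -> Optional[str]:
    """Return the code between the output markers, or None if they are absent."""
    match = _SCRIPT_RE.search(llm_output)
    return match.group(1).strip() if match else None
```

Returning `None` when the markers are missing lets the caller treat a malformed response as a failed generation round instead of executing partial output.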

To verify the effectiveness of the aforementioned design, this study conducted a comparative experiment on two typical application-layer protocols (HTTP and FTP), using DeepSeek-R1-256B as the fixed LLM. The unoptimized group employed simple prompts containing only the instruction “Generate a Boofuzz script”, and the optimized group used the prompt template designed in this study. Each group generated 50 scripts from the protocol information summary report of each protocol.

The experiment established evaluation metrics in three aspects:

Executable Rate. This is measured by the criterion of “being able to start the Boofuzz testing process normally without syntax errors or API call errors”;

Advanced Feature Coverage. This is determined by “whether the script includes at least one of the advanced features such as callback functions, process monitoring, and proxy support”;

Human-rated “Good” Samples. Two professional protocol security researchers jointly assessed script quality as “good” or “bad” based on three dimensions: protocol field coverage, rationality of fuzzing strategies, and code standardization.

Table 5 shows the experimental results. The unoptimized group had a script executability rate of only 38%, with no samples implementing advanced features and only 12 scripts rated “good” in manual evaluation, while the optimized group saw its executability rate rise to 92%, with 85% of samples containing at least one advanced feature and 41 scripts rated “good” in manual evaluation—fully demonstrating that the prompt engineering in our study enhances the quality of scripts generated by LLMs.

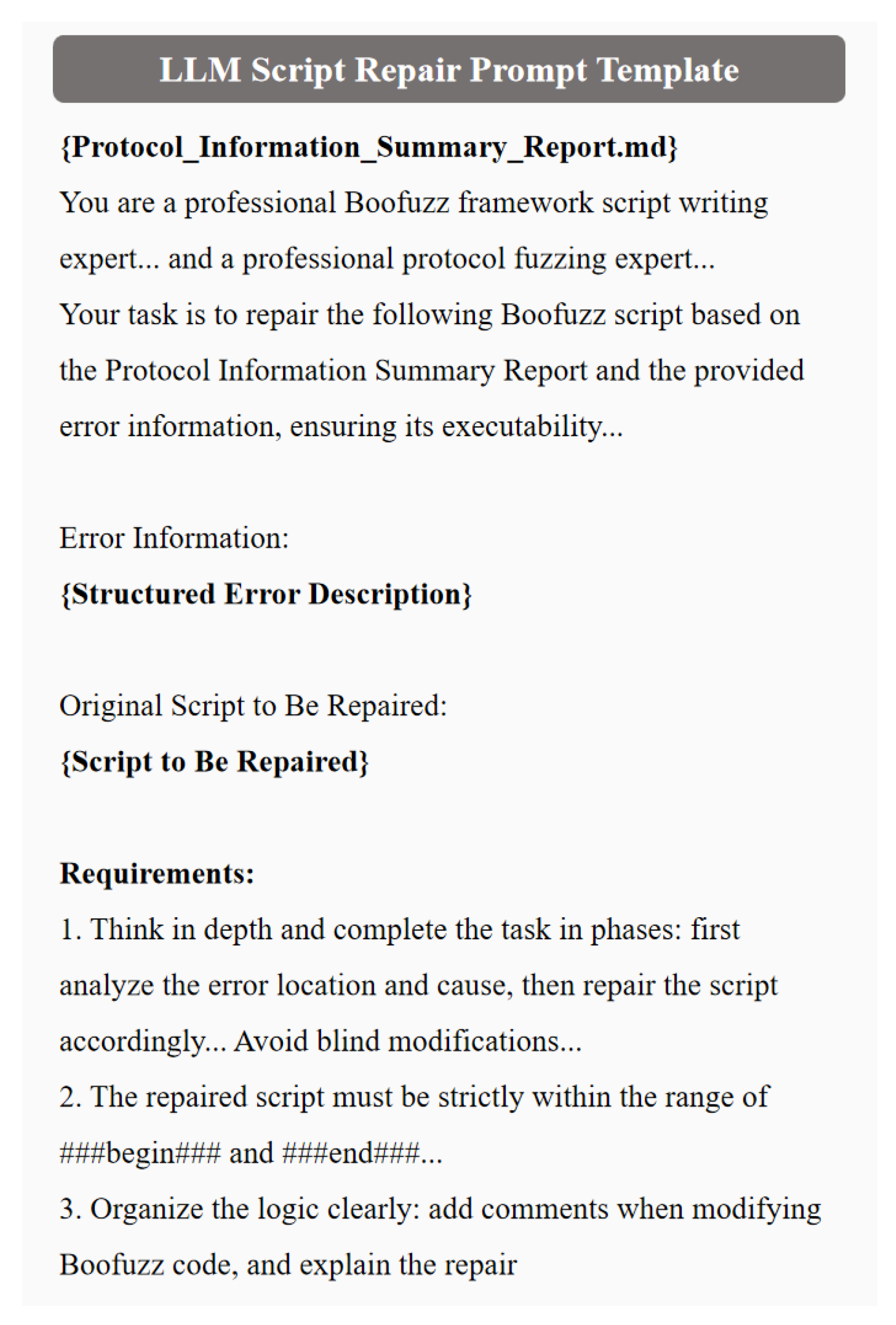

3.3.2. LLM-Based Script Repair

Although the prompt engineering design in Section 3.3.1 significantly improves script quality, approximately 8% of generated scripts still experience execution exceptions. To address this, we design an LLM-based script repair process to ultimately ensure script executability.

As shown in Figure 8, when an LLM generates a script, the process attempts to execute it. If the script runs successfully, it is added to the multi-script iterative testing queue. If execution fails, the process retrieves the script’s standard output and error streams in real time through a pipe (the script is launched in an independent subprocess), extracts and structures the execution exception descriptions from these streams, integrates them into a specially designed script repair prompt template, and submits the resulting prompt to the LLM to regenerate the script. To avoid invalid repair loops and resource waste, the process sets a maximum number of repair attempts; if the script still fails to execute after this threshold is exceeded, it invokes the script generation prompts from Section 3.3.1 to guide LLMs in generating an entirely new script, which is then tested again. This cycle continues until a normally executable script is produced.
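The execute-repair-regenerate cycle described above might look like the following sketch. The callables `generate` and `repair` stand in for the LLM calls and are hypothetical, as are the default limits; `max_rounds` is an added assumption so that the sketch always terminates, and a script still running at the timeout is assumed to have started normally.

```python
import os
import subprocess
import sys
import tempfile
from typing import Callable, Tuple


def run_script(source: str, timeout: float = 10.0) -> Tuple[bool, str]:
    """Run `source` in a separate Python subprocess; return (ok, captured output)."""
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as handle:
        handle.write(source)
        path = handle.name
    try:
        proc = subprocess.run(
            [sys.executable, path], capture_output=True, text=True, timeout=timeout
        )
        return proc.returncode == 0, proc.stdout + proc.stderr
    except subprocess.TimeoutExpired:
        # Assumption: no error within the timeout means the script started normally.
        return True, ""
    finally:
        os.unlink(path)


def obtain_executable_script(
    generate: Callable[[], str],
    repair: Callable[[str, str], str],
    max_repairs: int = 3,
    max_rounds: int = 5,
) -> str:
    """Generate a script, repair it up to `max_repairs` times, then start over."""
    for _ in range(max_rounds):
        script = generate()
        for attempt in range(max_repairs + 1):
            ok, output = run_script(script)
            if ok:
                return script
            if attempt < max_repairs:
                # Feed the captured stdout/stderr back to the repair step.
                script = repair(script, output)
    raise RuntimeError("no executable script produced within the round limit")
```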

In the design of the script repair prompt template, as shown in Figure 9, a structure consisting of “Generation Prompts + Error Information + Script to Be Repaired + Repair Requirements” is adopted. In accordance with the principle of guiding the model to think step by step, LLMs are explicitly required to first analyze the location and cause of the error and then repair the script in a targeted manner, which prevents LLMs from modifying the script content blindly.

Through this process, the executability rate of scripts generated by LLMs increased from 92% to 100%, providing a reliable guarantee for subsequent multi-script fuzzing.

3.4. Multi-Script Iterative Fuzzing

To address the challenges of general black-box protocol fuzzing (low efficiency of single-threaded execution, reliance on manual script adjustment, and lack of a dynamic adjustment and optimization mechanism), LLM-Boofuzz implements a multi-script iterative fuzzing method. This method leverages LLM-based agents to enable multi-script fuzzing, real-time status monitoring, and dynamic script iterative optimization. This subsection elaborates on the specific implementation of this method in Section 3.4.1, Section 3.4.2, and Section 3.4.3, respectively.

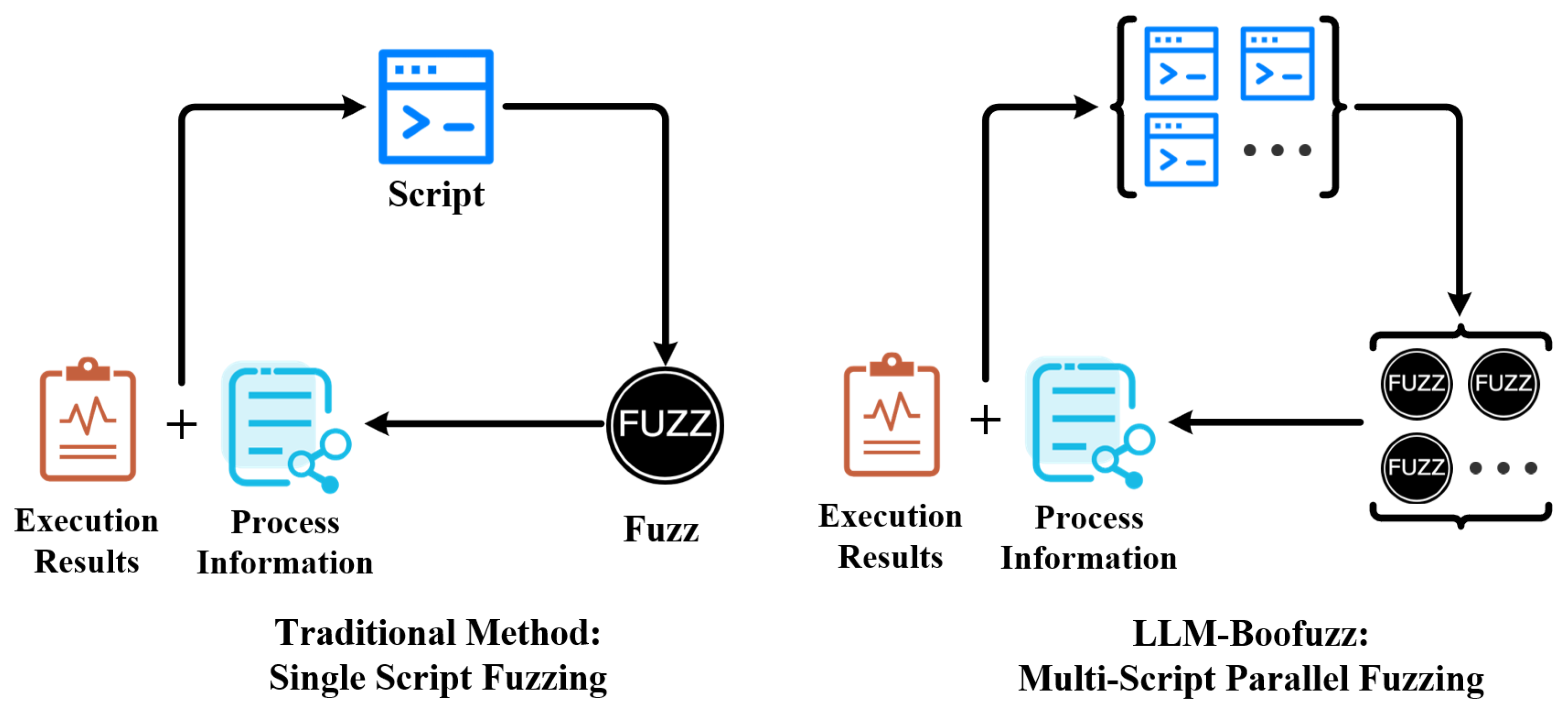

3.4.1. Multi-Script Fuzzing

This subsubsection focuses on the design and implementation of the multi-script fuzzing mechanism.

As shown in Figure 10, in the general Boofuzz-based vulnerability detection workflow, fuzzing typically uses a single-script serial execution mode: only one target protocol program instance is launched, and a single script is loaded for fuzzing. After fuzzing ends, testers must manually adjust the script’s fuzzing strategy based on execution results and process information before restarting the fuzzing process. This mode has significant efficiency limitations: the single fuzz cycle is lengthy, and only the local syntax and state space of the protocol can be explored serially, making it difficult to cover multidimensional fuzzing scenarios. To address this, we design a multi-script fuzzing mechanism that improves fuzzing efficiency through multi-script execution and automated scheduling.

This mechanism designs different fuzzing modes for two black-box scenarios in actual vulnerability discovery: “accessible binary executable programs” and “complete black-boxes providing only network service ports”. For the “accessible binary executable program” scenario, a “multi-target multi-script” mode is adopted. First, the command parsing module receives the startup command of the target protocol program input by the user and extracts information such as the path of the target protocol program and configuration parameters. Second, it modifies the startup command to launch multiple target protocol program instances simultaneously and allocates independent ports to each instance based on a preset port pool. Next, each target protocol program instance is matched with an independent script featuring differentiated fuzzing strategies, with a one-to-one correspondence established between scripts and instances through port mapping. Finally, the multi-process scheduling module uniformly manages all target protocol program instances and script processes, supporting batch termination of processes via Process Identifiers (PIDs) to quickly clean up the fuzz environment. It can also query resource usage such as CPU usage and memory peaks of each instance based on PID association and dynamically adjust the number of parallel processes to avoid resource contention. For the “complete black-box providing only network service ports” scenario, a “single-target multi-script” mode is adopted, where the proxy forwarding module allocates independent source IP addresses to each script to achieve multi-script fuzzing.

Through the above design, the multi-script fuzzing mechanism ensures fuzz isolation while effectively increasing the number of test cases generated per unit time and the coverage of the protocol’s syntax and state space, which helps improve fuzzing efficiency and comprehensiveness.
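The port mapping in the “multi-target multi-script” mode can be illustrated with a small planning function. This is a sketch under stated assumptions: the startup command is assumed to contain a `{port}` placeholder, and the names `plan_instances`, `target_cmd`, and the example paths are hypothetical. It only computes the one-to-one pairing; launching the processes and tracking their PIDs is left out.

```python
from typing import Dict, List, Union

PlanEntry = Dict[str, Union[int, str]]


def plan_instances(
    command_template: str, scripts: List[str], port_pool: List[int]
) -> List[PlanEntry]:
    """Pair each script with its own target instance and port from the pool."""
    if len(scripts) > len(port_pool):
        raise ValueError("port pool is smaller than the number of scripts")
    plan: List[PlanEntry] = []
    for script, port in zip(scripts, port_pool):
        plan.append(
            {
                "port": port,
                # Rewrite the user-supplied startup command for this instance.
                "target_cmd": command_template.format(port=port),
                "script": script,
            }
        )
    return plan
```

For example, `plan_instances("./server -p {port}", ["s1.py", "s2.py"], [9001, 9002, 9003])` yields two entries, on ports 9001 and 9002, and leaves the unused port in the pool.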

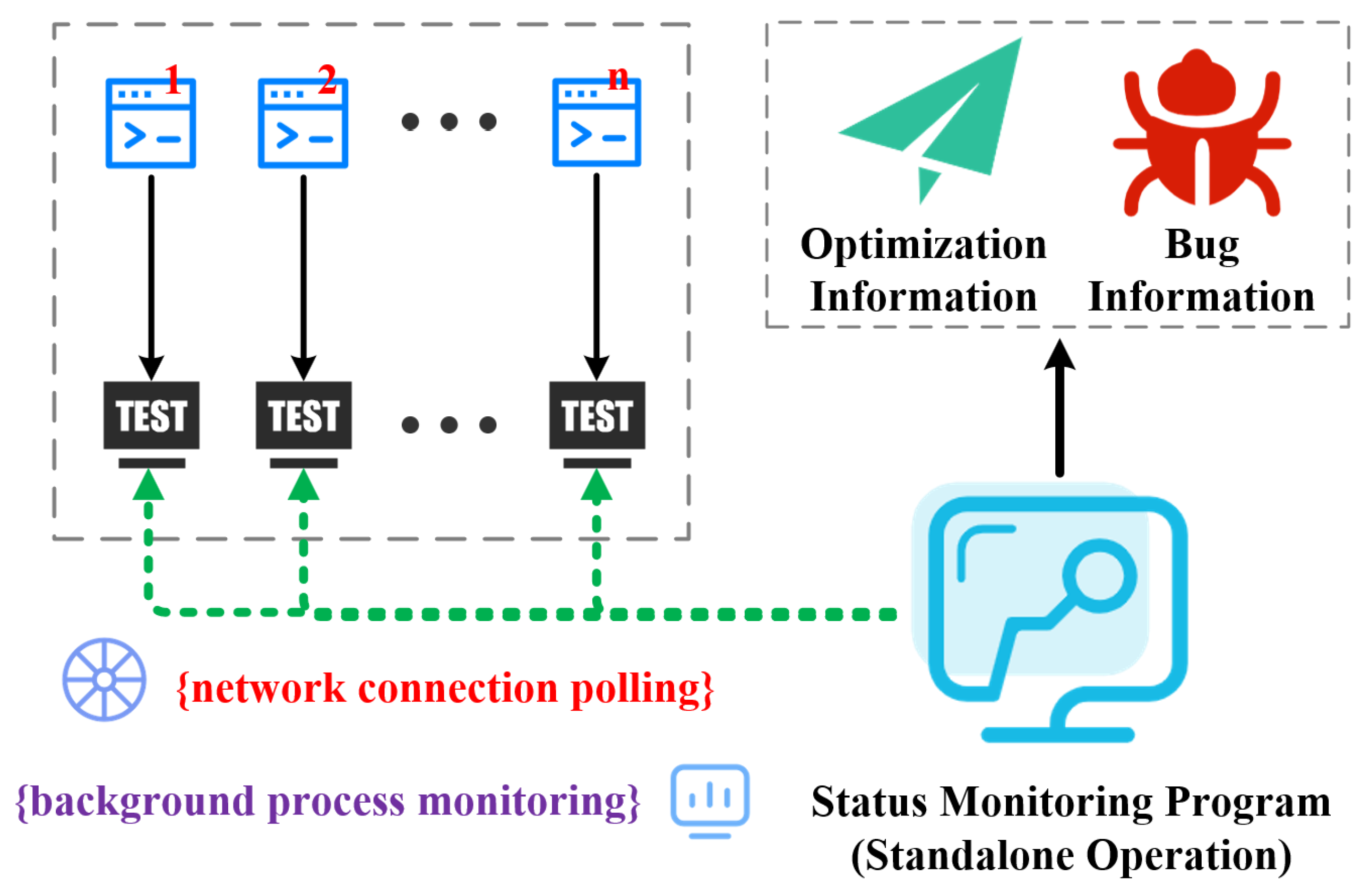

3.4.2. Real-Time Status Monitoring

This subsubsection elaborates on the design and implementation of the real-time status monitoring mechanism.

In vulnerability detection practice, we identify several issues with general Boofuzz monitoring methods: On one hand, Boofuzz’s built-in monitoring functions are difficult to use—its development is still evolving and its interface design is complex, resulting in an extremely low proportion of publicly available scripts online that utilize these monitoring functions, which makes it hard to provide high-quality sample support for script generation. On the other hand, when requesting LLMs to generate scripts with process monitoring functionality, the generated relevant code often has frequent errors, poor stability, and suboptimal functional implementation, failing to reliably perform status monitoring of the target protocol program. Based on the above issues, we decide to independently develop a status monitoring program to conduct real-time monitoring of the multiple launched target protocol programs and fuzz scripts separately, eliminating reliance on LLMs to construct process monitoring logic within scripts to ensure the stability and reliability of the monitoring process. In fact, the built-in process monitoring functionality of the Boofuzz tool essentially also monitors target processes through external monitoring programs, which aligns with the principle of the method we propose.

As shown in Figure 11, for the specific implementation, the real-time status monitoring mechanism consists of “network connection polling” and “background process monitoring”. Serving as the primary monitoring method, “network connection polling” periodically initiates TCP handshakes with each target protocol program to determine whether the target program is online and records the duration of their online and offline statuses. If the online duration of a target program exceeds the preset threshold, the system will determine that the testing effect of the corresponding script is suboptimal and requires further optimization; when a target program changes from online to offline and its offline duration exceeds the set threshold, it is considered that the target program may have crashed or hung. “Background process monitoring”, as an auxiliary monitoring mechanism, utilizes the process PID uniformly managed in the multi-script fuzzing mechanism described in Section 3.4.1. It checks whether the scripts and target protocol programs are in normal operation by querying the process manager, thereby assisting in judging the actual status and improving the accuracy of monitoring.

Through this real-time status monitoring mechanism, the agent can grasp the operating status of target protocol programs and scripts in real time and accurately, providing information support for dynamic script iterative optimization.
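The “network connection polling” logic can be sketched as a TCP probe plus a small record of how long the target has been in its current state. The class name `TargetStatus`, the verdict strings, and the threshold parameters are hypothetical; the thresholds themselves are the preset values mentioned above, whose concrete settings are not given in the text.

```python
import socket
import time
from dataclasses import dataclass, field


def is_online(host: str, port: int, timeout: float = 1.0) -> bool:
    """Attempt a TCP handshake; True if the target accepts the connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


@dataclass
class TargetStatus:
    online: bool = True
    since: float = field(default_factory=time.monotonic)  # start of current state

    def update(self, online_now: bool, now: float) -> None:
        """Restart the duration clock only when the state actually changes."""
        if online_now != self.online:
            self.online = online_now
            self.since = now

    def verdict(self, now: float, online_limit: float, offline_limit: float) -> str:
        elapsed = now - self.since
        if self.online and elapsed > online_limit:
            return "optimize_script"  # long uptime: script judged suboptimal
        if not self.online and elapsed > offline_limit:
            return "possible_crash"  # long downtime: target may have crashed or hung
        return "ok"
```

A polling loop would call `is_online` at a fixed interval, pass the result to `update`, and act on `verdict`; the PID-based check serves as a second opinion before a crash is reported.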

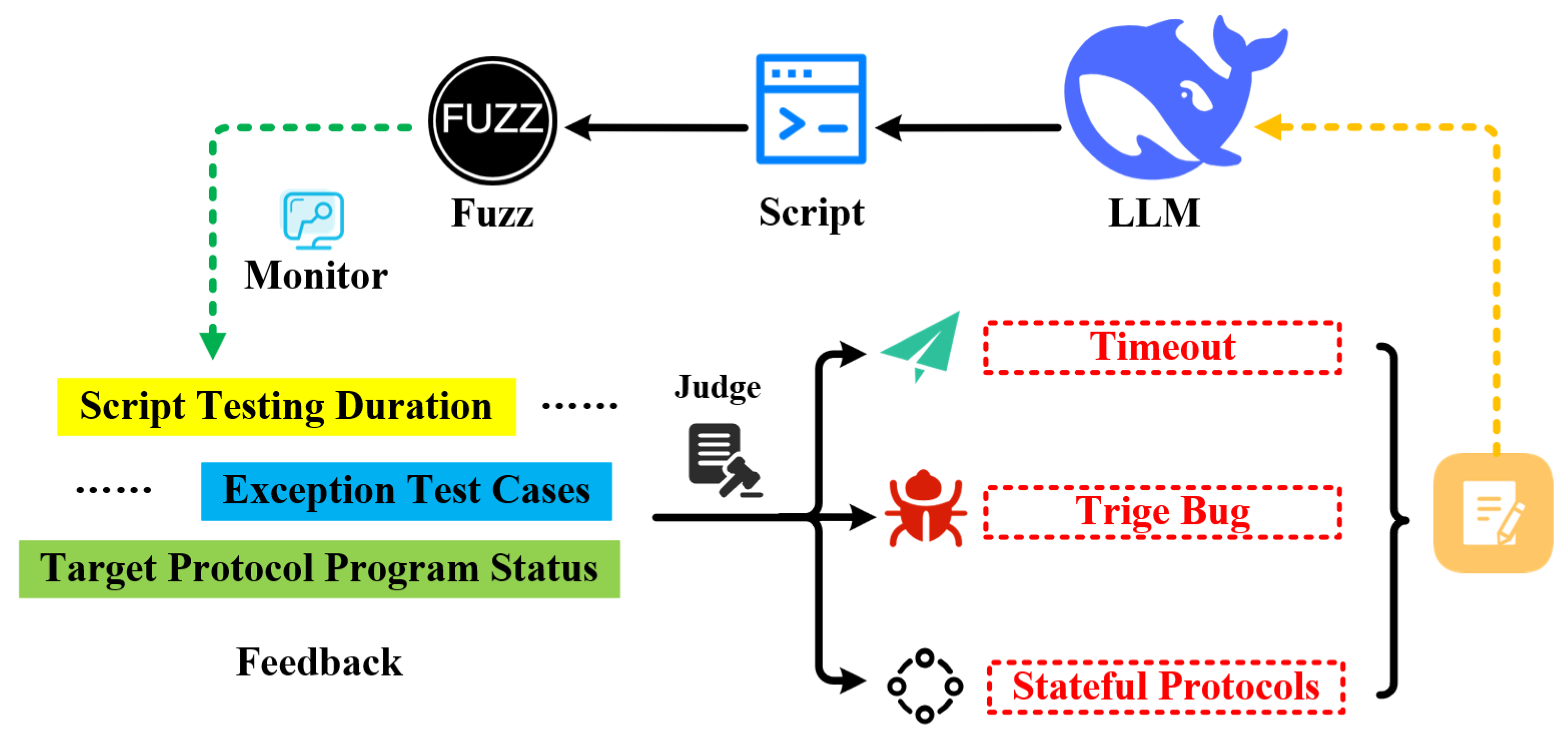

3.4.3. Dynamic Script Iterative Optimization

Traditional black-box protocol fuzzing lacks dynamic adjustment and optimization capabilities, making it difficult to optimize fuzzing strategies in real time based on target system feedback. In addition, manual intervention for script adjustment incurs high costs and fails to ensure fuzzing continuity and stability. To address this, we design an LLM-based dynamic script iterative optimization mechanism, enabling dynamic adjustment of fuzzing strategies through an agent.

As shown in Figure 12, throughout the multi-script fuzzing process, the agent first collects in real time multiple types of feedback information available in black-box scenarios (including each script’s fuzzing duration, the status of target protocol programs, and test cases that may trigger exceptions) and then organizes this information in a structured manner. Next, based on this feedback, the agent classifies and identifies the fuzzing status of each script to determine whether the current script belongs to one of the following scenarios: “timeout without triggering vulnerabilities”, “successful vulnerability triggering”, or “fuzzing stateful protocols”. Finally, for each scenario, the agent invokes customized prompts to guide LLMs in generating optimization strategies; once the scripts are updated, they are reintroduced into the multi-script fuzzing process, forming a closed loop for dynamic adjustment and optimization. The specific iterative optimization strategies are as follows:

Timeout Without Triggering Vulnerabilities. When the agent detects that a script has run continuously beyond the preset threshold without triggering any exceptions, it compiles relevant information—including the script’s source code, its fuzzing strategy (which is written as comments at the end of the script during generation), and its testing duration—and uses a dedicated prompt to guide LLMs in optimizing and adjusting the script. Following the principle of guiding the model to reason step by step, LLMs are first instructed to analyze the script’s source code and fuzzing strategy, identify potential fuzzing blind spots or inefficient tactics, then adjust the protocol fields or state nodes being targeted for fuzzing, and make appropriate adjustments to test case mutation methods. This adjustment is intended to break through fuzzing bottlenecks and increase the likelihood of vulnerability triggering.

Successful Vulnerability Triggering. When the agent determines that a script may have triggered a vulnerability, it first parses the .db format test case packets generated by the Boofuzz tool and extracts the test cases that may have caused exceptions. It then combines these with the original script’s source code, fuzzing strategies, and runtime duration to form dedicated prompts. These prompts guide LLMs to analyze potential causes of the vulnerability triggering, identify vulnerable fields or state nodes, and generate multiple differentiated scripts that target the triggering condition to unearth more related proofs of concept (PoCs). Meanwhile, to avoid wasting fuzzing resources, if the agent detects that the framework has triggered vulnerabilities continuously over a short period, it stops in-depth exploration of the current script and instead regenerates scripts with significantly different fuzzing strategies, thereby enhancing the comprehensiveness of fuzzing.

Fuzzing Stateful Protocols. To optimize scripts used for fuzzing stateful protocols, we draw on LLMs’ extensive knowledge and strong logical reasoning capabilities. We integrate protocol information summary reports, script fuzzing strategies, manual prompts, and protocol standard documents into dedicated prompts that direct LLMs to actively infer and mutate the protocol state machine and to generate scripts targeting different state nodes or state transition logic, thereby enhancing the fuzzing capability for stateful protocols.
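The agent’s classification step can be pictured as a small decision function over the structured feedback. The sketch below is illustrative only: the `Feedback` fields, the extra `CONTINUE` outcome, and the precedence between scenarios (a suspected crash first, then the timeout check, with stateful protocols routed to their own prompt) are assumptions, since the text does not specify how the agent resolves overlapping conditions.

```python
from dataclasses import dataclass
from enum import Enum


class Scenario(Enum):
    TIMEOUT = "timeout without triggering vulnerabilities"
    TRIGGERED = "successful vulnerability triggering"
    STATEFUL = "fuzzing stateful protocols"
    CONTINUE = "keep running"  # assumed extra outcome: no optimization needed yet


@dataclass
class Feedback:
    runtime_s: float         # how long the script has been fuzzing
    target_crashed: bool     # monitoring reports a possible crash or hang
    stateful_protocol: bool  # target protocol is stateful (e.g., FTP, SMTP)


def classify(feedback: Feedback, timeout_s: float) -> Scenario:
    """Map structured feedback to the scenario whose prompt should be invoked."""
    if feedback.target_crashed:
        return Scenario.TRIGGERED
    if feedback.runtime_s > timeout_s:
        # Assumed precedence: stateful targets get the state-machine prompt.
        return Scenario.STATEFUL if feedback.stateful_protocol else Scenario.TIMEOUT
    return Scenario.CONTINUE
```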

4. Experiments

To comprehensively evaluate the performance of the LLM-Boofuzz tool in the field of protocol fuzzing, this section conducts verification from the dimensions of vulnerability triggering efficiency, code coverage, and effectiveness of core modules by designing multiple sets of comparative experiments so as to systematically demonstrate the technical advantages and innovative value of LLM-Boofuzz.

4.1. Experimental Setup

To fully verify the effectiveness of LLM-Boofuzz, a series of experiments are conducted to answer the following questions:

RQ 1. How effective is LLM-Boofuzz in triggering vulnerabilities in real scenarios?

RQ 2. What is LLM-Boofuzz’s ability to improve code coverage of the target protocol program?

RQ 3. Do key modules of LLM-Boofuzz (such as “LLM-based protocol information extraction from real traffic” and “multi-script iterative fuzzing”) play an effective role in vulnerability mining?

All experiments are executed in a unified hardware and software environment:

Hardware. The experiments use an Intel (R) Core (TM) i7-14650HX processor (2.20 GHz main frequency) with 32 GB memory.

Software. The LLM-Boofuzz system is deployed on a Windows 11 host, while the target protocol programs run on Ubuntu 22.04 LTS virtual machines or Windows 10 virtual machines (configured with an 8-core CPU and 16 GB memory).

The prototype tool LLM-Boofuzz is developed in Python 3.11 with a modular design, featuring strong stability and scalability. It can flexibly adapt to fuzzing requirements for multiple network protocols such as HTTP, FTP, SSH, SSL, COAP, and SMTP.

To comprehensively evaluate the performance of our proposed method, we select three representative protocol fuzzing tools for comparative experiments and explain the reasons for not selecting LLM-enabled protocol fuzzing tools, as detailed below:

Boofuzz. This is a classic generation-based black-box protocol fuzzing tool, and the prototype tool on which LLM-Boofuzz is based. Though first proposed in 2015, it has been continuously updated and remains one of the most up-to-date and user-friendly tools in the field of protocol fuzzing. In this study, we adopt its latest version, updated in September 2025, at commit d9b0934b4e23c6bbc33ed27673ed2d3e7a6fba1c.

Snipuzz. This is a state-of-the-art tool in the field of black-box protocol fuzzing, which excels in stateless protocol fuzzing through protocol snippet recombination technology. Introduced in 2021, it has also undergone consistent updates to adapt to evolving application scenarios. We use its latest version, updated in September 2024, at commit edb246a6c20dd3e701367a5c96d24571fcafd521.

AFLNet. This is a representative gray-box protocol fuzzing tool that combines the mutation strategy of American Fuzzy Lop (AFL) [42] with network protocol state machine modeling, achieving high vulnerability discovery efficiency in protocol fuzzing. Since this paper focuses on black-box tools, the main comparisons are conducted with other black-box tools, while gray-box tools serve as auxiliary references. AFLNet is selected because it is the most widely used tool for comparative experiments in the gray-box field and has kept up with regular updates. We adopt its latest version, updated in May 2025, at commit 96032f86d0005dfeeb41ea7b31103f1d1ff8f168.

Regarding the reason for not selecting LLM-enabled protocol fuzzing tools, we have the following practical constraints:

Most tools are not open-source, hindering fair and reproducible comparisons. For example, CHATFuMe (LLM-assisted stateful protocol fuzzing) remains closed-source, with no access to its core implementation for consistent benchmarking.

Many tools are tailored to specific targets, conflicting with our general-purpose scenario. Cases include LLMIF (IoT device-focused, needs hardware support), ChatHTTPFuzz (HTTP-only), mGPTfuzz (Matter IoT protocol-specific), and ArtifactMiner (optimized for Modbus protocol).

A large portion are gray-box-based, lacking comparative significance for black-box evaluation. For instance, ChatAFL uses LLMs for seed generation but relies on gray-box logic (needs internal program info), which is incompatible with our black-box focus.

4.2. Comparison of Vulnerability Triggering Capability

To answer RQ 1, we first evaluate LLM-Boofuzz’s vulnerability triggering capability by comparing the average Time To Exposure (TTE) of LLM-Boofuzz and mainstream tools when triggering the same vulnerabilities in the same environment. In the experimental design, 12 known vulnerabilities covering HTTP, FTP, SSH, and SMTP protocols (including both stateless and stateful protocol scenarios) are selected, each tested repeatedly for 10 rounds in the same test environment with a maximum single-round duration of 3 h; the average time each tool takes to trigger the vulnerabilities is recorded. For the Boofuzz tool specifically, its scripts are manually analyzed and written by professional protocol security researchers with LLM assistance, aiming to conduct comprehensive fuzzing on the target protocol program.

As shown in Table 6, LLM-Boofuzz demonstrates superior vulnerability triggering efficiency in all test scenarios. For the stateless protocol (HTTP) scenario, its average TTE across six vulnerabilities was 20.66 min, significantly lower than that of the other three tools. For instance, CVE-2018-5767 is caused by an unrestricted, overly long Cookie value in messages; LLM-Boofuzz focused on this Cookie field in its initially generated differentiated scripts and achieved an average TTE of only 1 min. For CVE-2017-17663, LLM-Boofuzz (31 min) was slightly slower than Snipuzz (27 min) but showed stronger stability and outperformed Snipuzz in the other HTTP vulnerability tests. In contrast, AFLNet timed out without triggering vulnerabilities in two HTTP tests and failed to test CVE-2018-5767 due to inability to adapt to the embedded device environment, whereas LLM-Boofuzz successfully triggered all HTTP vulnerabilities within 1 h. The coefficient of variation (CV) is defined as the ratio of the sample standard deviation to the sample mean (smaller values indicate less fluctuation in experimental data), and the CV values of all valid test groups in the experiment are generally small, confirming the stability and reliability of the experimental results.
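The coefficient of variation defined above can be computed directly from the per-round TTE values. The sketch below follows the stated definition (sample standard deviation divided by sample mean); the function name is hypothetical, and no measured values from Table 6 are reproduced here.

```python
from statistics import mean, stdev
from typing import Sequence


def coefficient_of_variation(samples: Sequence[float]) -> float:
    """Ratio of the sample standard deviation to the sample mean."""
    average = mean(samples)
    if average == 0:
        raise ValueError("CV is undefined when the mean is zero")
    # statistics.stdev uses the n - 1 (sample) denominator.
    return stdev(samples) / average
```

Because the CV is dimensionless, it allows the spread of TTE values to be compared across vulnerabilities whose average triggering times differ by an order of magnitude.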

For the stateful protocol (FTP, SSH, and SMTP) scenario, LLM-Boofuzz’s efficiency advantage was more prominent: in the fuzzing of six vulnerabilities, it achieved an average TTE of 11.8 min and successfully triggered all of them. Meanwhile, Boofuzz timed out in four stateful protocol vulnerability tests due to inability to effectively focus on key states—though it could trigger simpler FTP vulnerabilities (CVE-2025-5667 and CVE-2025-5666) quickly—reflecting that general methods, even with LLMs’ assistance, still suffer from instability and low efficiency in fuzzing complex stateful protocols; Snipuzz exhibited poor stateful protocol fuzzing capability, with four vulnerabilities timing out and longer average durations for the two it triggered; and AFLNet failed to test five stateful protocol vulnerabilities due to inability to adapt to embedded environments or Windows systems and even failed to trigger the only testable one (CVE-2023-42115)—fully demonstrating the poor environmental adaptability of gray-box protocol fuzzing. The experimental results verify the good vulnerability triggering ability of LLM-Boofuzz in stateful protocol fuzzing.

In addition, we select 15 vulnerable network protocol programs spanning HTTP, FTP, SSH, TLS, SMTP, and COAP and compare whether LLM-Boofuzz and the mainstream tools can trigger their vulnerabilities. Each protocol program is subjected to three repeated test rounds in the same test environment, with each round capped at 12 h, and we record whether each tool successfully triggers vulnerabilities. As presented in Table 7, LLM-Boofuzz triggered vulnerabilities in all 15 network protocol programs, while Boofuzz, Snipuzz, and AFLNet triggered 8, 7, and 7 vulnerabilities, respectively, giving LLM-Boofuzz a clear advantage over the comparison tools.

Based on the aforementioned research, LLM-Boofuzz exhibits strong vulnerability triggering capability, which we attribute to the synergistic effect of multiple factors. First, thanks to its deep parsing of protocol information from real traffic, the test cases generated by LLM-Boofuzz have a high degree of compatibility with protocol syntax. Second, the scripts generated by LLM-Boofuzz are of high quality and come with targeted fuzzing strategies. Furthermore, LLM-Boofuzz can achieve more efficient exploration of protocol syntax spaces and state spaces through its multi-script iterative fuzzing method.

4.3. Comparison of Coverage Improvement Capabilities

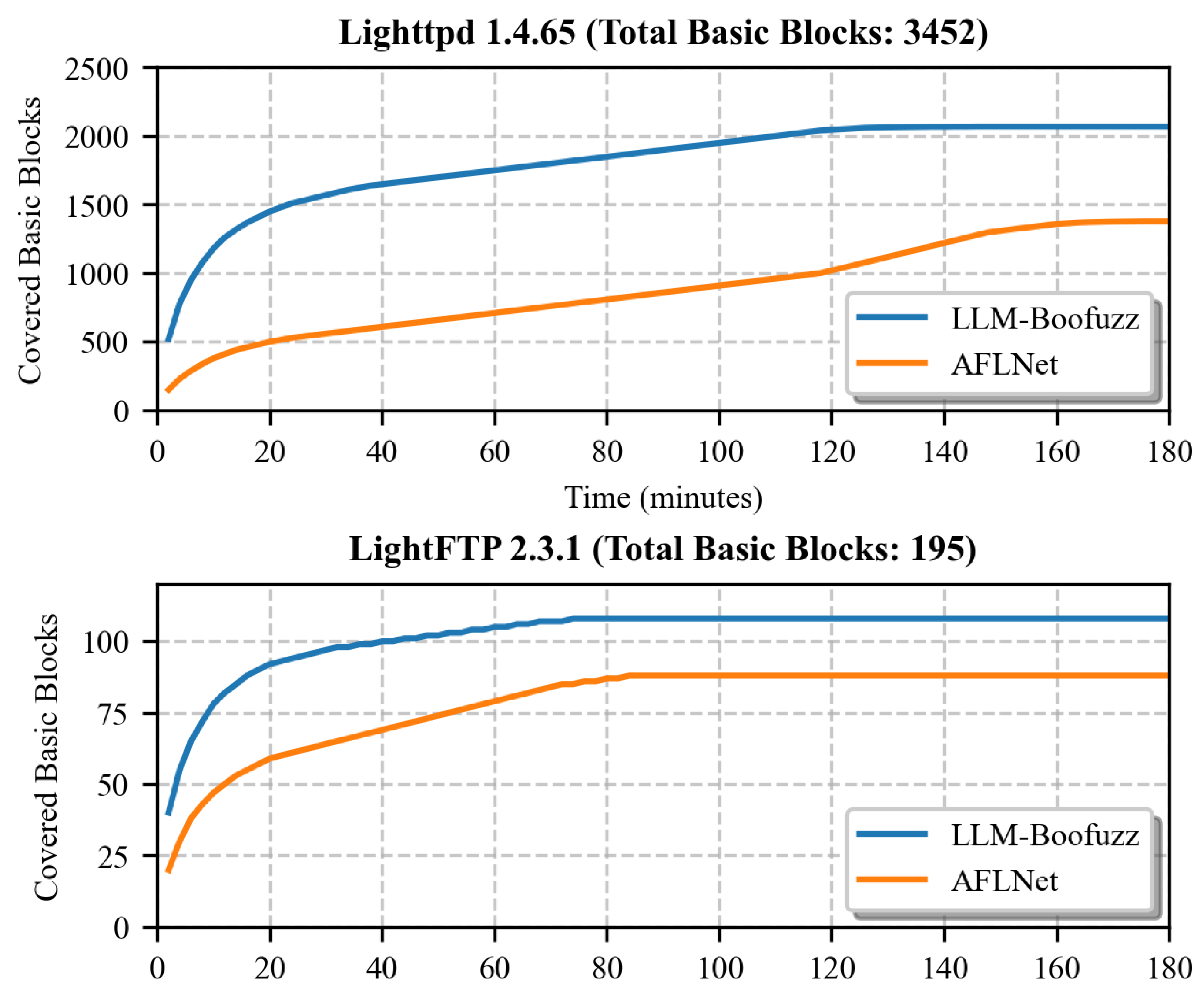

To answer RQ 2, we systematically evaluate LLM-Boofuzz’s ability to improve code coverage of target protocol programs by comparing its line coverage, function coverage, and basic block-level code coverage growth rate with those of mainstream tools. In the experimental design, Lighttpd 1.4.65 (HTTP protocol, patched) and LightFTP 2.3.1 (FTP protocol, patched) are selected as target protocol programs. Gcov [43] and LLVM [44] are used to collect code coverage data of different test tools within a 3-h test duration. Each group of experiments is repeated 10 times, and the average value of multiple tests is used as the final result.

As shown in Table 8, in the fuzzing of Lighttpd 1.4.65 and LightFTP 2.3.1, LLM-Boofuzz outperforms mainstream tools in both average line coverage and average function coverage. Further analysis of the relative improvement margins reveals that in the stateless HTTP protocol scenario, LLM-Boofuzz achieves a coverage improvement of 21.8–66.5%, while in the stateful FTP protocol scenario, the improvement reaches 34.7–166.9%, which demonstrates LLM-Boofuzz’s good adaptability to stateful protocols.

Figure 13 presents the results of dynamic coverage monitoring experiments conducted via LLVM basic block-level instrumentation. For the Lighttpd 1.4.65 program—which includes a total of 3452 basic blocks—LLM-Boofuzz showed a notable initial advantage in coverage. After 2 min of fuzzing, it covered 520 basic blocks, which is 3.47 times the coverage of AFLNet (150 basic blocks). After 10 min, its coverage increased to 1180 basic blocks, representing an 800-block lead over AFLNet (380 basic blocks). At this stage, its coverage already accounted for 57% of the final count. By the conclusion of the experiment, LLM-Boofuzz achieved coverage of 2070 basic blocks, 690 more than AFLNet’s 1380 basic blocks. This trend was equally apparent in the LightFTP 2.3.1 program, which contains 195 basic blocks in total. The experimental data confirm that LLM-Boofuzz exhibited a steeper coverage growth curve for both protocol-specific programs. Notably, it demonstrated a more rapid ability to expand basic block coverage during the early stages of fuzzing.
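The derived figures quoted for Lighttpd 1.4.65 can be re-checked from the raw basic block counts. The following is a minimal sketch that uses only the counts stated in the text; the helper names (`lead`, `ratio`, `share`) are illustrative and not part of any tool discussed here. The final two printed lines (share of all 3452 basic blocks) are derived from the stated totals rather than quoted from the paper.

```python
# Basic block counts for Lighttpd 1.4.65, taken from the paragraph above.
TOTAL_BLOCKS = 3452
LLM_BOOFUZZ = {"2 min": 520, "10 min": 1180, "end": 2070}
AFLNET = {"2 min": 150, "10 min": 380, "end": 1380}


def lead(ours: int, theirs: int) -> int:
    """Absolute lead in covered basic blocks."""
    return ours - theirs


def ratio(ours: int, theirs: int) -> float:
    """How many times larger `ours` is than `theirs`."""
    return ours / theirs


def share(part: int, whole: int) -> float:
    """`part` as a percentage of `whole`."""
    return 100.0 * part / whole


if __name__ == "__main__":
    print(f"ratio after 2 min:  {ratio(LLM_BOOFUZZ['2 min'], AFLNET['2 min']):.2f}x")
    print(f"lead after 10 min:  {lead(LLM_BOOFUZZ['10 min'], AFLNET['10 min'])} blocks")
    print(f"10 min vs. final:   {share(LLM_BOOFUZZ['10 min'], LLM_BOOFUZZ['end']):.0f}%")
    print(f"lead at the end:    {lead(LLM_BOOFUZZ['end'], AFLNET['end'])} blocks")
    # Derived here, not quoted in the text: share of all basic blocks covered.
    print(f"LLM-Boofuzz final:  {share(LLM_BOOFUZZ['end'], TOTAL_BLOCKS):.1f}% of all blocks")
    print(f"AFLNet final:       {share(AFLNET['end'], TOTAL_BLOCKS):.1f}% of all blocks")
```

Under these counts, the 3.47x ratio, the 800-block and 690-block leads, and the 57% figure are all consistent with one another.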

In summary, we attribute the coverage advantages of LLM-Boofuzz to the following factors. Under the guidance of the protocol information summary report, LLM-Boofuzz can enumerate message types more comprehensively, and its test case generation is more semantically targeted, enabling it to hit many key nodes in protocol interactions. Additionally, the multi-script fuzzing mechanism accelerates the exploration of code paths, allowing coverage of more potential paths within the same timeframe. Furthermore, the iterative optimization mechanism can dynamically adjust fuzzing strategies and continuously initiate exploration toward uncovered code paths, thereby more effectively reaching the deep-seated code logic of the target program. The experimental results also confirm that, in specific scenarios, generation-based black-box protocol fuzzing methods possess unique advantages in terms of coverage improvement capability.

4.4. Ablation Experiments

To answer RQ 3, we design ablation experiments to verify the contribution of each key module to tool performance by removing critical functional modules of LLM-Boofuzz. In the experimental design, given that “LLM-based script generation and repair” is the core component enabling the automatic operation of LLM-Boofuzz, it is indispensable and thus no ablation experiment is conducted for this module. Consequently, we construct four test scenarios.

Original Mode. Full LLM-Boofuzz system.

-A Mode. Remove the “LLM-based real traffic protocol information extraction” module; LLM-Boofuzz generates scripts directly from the structured text of real traffic.

-B Mode. Remove the “multi-script iterative fuzzing” module; only one script is executed for iterative fuzzing.

-A-B Mode. Remove both the “LLM-based real traffic protocol information extraction” and “multi-script iterative fuzzing” modules.
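The four scenarios above amount to toggling two modules independently. As a rough sketch, they can be written down as a small configuration table; the class and field names below are hypothetical and are not taken from the LLM-Boofuzz implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationMode:
    """One ablation scenario; field names are illustrative assumptions."""
    name: str
    traffic_info_extraction: bool  # "LLM-based real traffic protocol information extraction"
    multi_script_iteration: bool   # "multi-script iterative fuzzing"


MODES = [
    AblationMode("Original", True, True),
    AblationMode("-A", False, True),
    AblationMode("-B", True, False),
    AblationMode("-A-B", False, False),
]


def describe(mode: AblationMode) -> str:
    """Summarize what a mode changes relative to the full system."""
    source = ("protocol information summary report"
              if mode.traffic_info_extraction
              else "structured text of real traffic")
    scripts = "multiple scripts" if mode.multi_script_iteration else "a single script"
    return f"{mode.name}: scripts generated from the {source}; {scripts} fuzzed iteratively"


if __name__ == "__main__":
    for mode in MODES:
        print(describe(mode))
```

The script generation and repair module has no switch in this table because, as stated above, it is never ablated.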

In addition, five typical vulnerabilities covering stateless protocols (HTTP) and stateful protocols (FTP, SSH, and SMTP) are selected as test objects. Each vulnerability is tested repeatedly for 10 rounds in the four modes, with a maximum single-round test duration of 3 h. The average TTE and time ratio (average TTE of ablation mode/average TTE of original mode) are recorded.

Table 9 shows that the vulnerability triggering efficiency of LLM-Boofuzz decreased substantially after its key modules were removed. Mode -A was still able to trigger vulnerabilities, but much more slowly, with an average time ratio of 5.23. Mode -B failed to trigger two of the target vulnerabilities, and its average time ratio reached 7.31. Most notably, Mode -A-B failed to trigger any vulnerability within the 3-h limit in all tests. The coefficient of variation (CV) values of the valid test groups are relatively small, indicating that the experimental data are stable and supporting the reliability of the conclusions.

Furthermore, we find that for stateful protocols (FTP, SSH, and SMTP), the efficiency decline is more pronounced after removing Module B. Specifically, neither CVE-2025-47812 nor CVE-2023-42115 could be triggered within 3 h under Mode -B, whereas the original mode required an average of only 25 and 65 min, respectively, to trigger them. For CVE-2024-46483, the average triggering time under Mode -B increased from 33 min to 158 min (a time ratio of 4.79). We attribute this to the fact that adjusting the testing strategy for stateful protocols involves not only field mutation but also modifications to state transition logic, which makes script construction more complex. Multi-script iterative fuzzing (i.e., Module B) can explore different state paths simultaneously, preventing a single-threaded process from falling into inefficient loops during complex state transitions. Thus, stateful protocol fuzzing relies more heavily on Module B.
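The intuition that several scripts reach more protocol states than one can be illustrated with a toy model. The sketch below is not the LLM-Boofuzz scheduler: the FTP-like transition table, the state names, and the three command sequences are all hypothetical, and the "scripts" are replayed against an in-memory table rather than a real server.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical FTP-like state machine: (state, command) -> next state.
TRANSITIONS = {
    ("INIT", "USER"): "USER_OK",
    ("USER_OK", "PASS"): "AUTHED",
    ("AUTHED", "CWD"): "AUTHED",
    ("AUTHED", "PASV"): "PASSIVE",
    ("PASSIVE", "LIST"): "TRANSFER",
    ("AUTHED", "RNFR"): "RENAMING",
    ("RENAMING", "RNTO"): "AUTHED",
}


def run_script(commands):
    """Replay one command sequence and return the set of states it reached."""
    state = "INIT"
    visited = {state}
    for command in commands:
        next_state = TRANSITIONS.get((state, command))
        if next_state is None:
            continue  # command not accepted in this state; stay where we are
        state = next_state
        visited.add(state)
    return visited


# Three hypothetical scripts, each following a different state path.
SCRIPTS = [
    ["USER", "PASS", "CWD"],
    ["USER", "PASS", "PASV", "LIST"],
    ["USER", "PASS", "RNFR", "RNTO"],
]


def explore(scripts, workers=3):
    """Run the scripts concurrently and merge the states they reached."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(run_script, scripts))
    return set().union(*results)


if __name__ == "__main__":
    print("single script:", sorted(explore(SCRIPTS[:1])))
    print("three scripts:", sorted(explore(SCRIPTS)))
```

In this toy setting the single script reaches three states, while the three scripts together reach six, because each one commits to a different branch after authentication.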

Notably, Module A plays a particularly prominent role in the testing of CVE-2022-36446. In the context of this vulnerability, the target protocol program has a custom field defined within HTTP messages. By parsing and analyzing real traffic, Module A enables LLMs to more accurately construct scripts that align with the requirements of this custom field. Following the removal of Module A, the average trigger time for the vulnerability increased from 17 min to 140 min; this corresponds to a time ratio of 8.24. This finding indicates that the protocol information summary report generated by LLMs can effectively guide improvements in the accuracy of test cases and reduce ineffective exploratory efforts.
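The time ratios reported in this subsection follow directly from the definition given earlier (average TTE of the ablation mode divided by average TTE of the original mode). The sketch below recomputes the two ratios quoted in the text and shows one way the coefficient of variation could be obtained. The function names are illustrative, the per-round values in `HYPOTHETICAL_ROUNDS` are invented purely for demonstration and are not measurements from the paper, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev


def time_ratio(ablation_tte_min: float, original_tte_min: float) -> float:
    """Average TTE of the ablation mode divided by that of the original mode."""
    return ablation_tte_min / original_tte_min


def coefficient_of_variation(samples) -> float:
    """Sample standard deviation divided by the mean (assumed estimator)."""
    return stdev(samples) / mean(samples)


# Hypothetical TTE values (minutes) for ten rounds; NOT data from the paper.
HYPOTHETICAL_ROUNDS = [15, 18, 16, 19, 17, 17, 16, 18, 17, 17]

if __name__ == "__main__":
    # Averages quoted in the text: CVE-2022-36446 under Mode -A (17 -> 140 min)
    # and CVE-2024-46483 under Mode -B (33 -> 158 min).
    print(f"CVE-2022-36446, Mode -A: {time_ratio(140, 17):.2f}")  # 8.24
    print(f"CVE-2024-46483, Mode -B: {time_ratio(158, 33):.2f}")  # 4.79
    print(f"CV of hypothetical rounds: {coefficient_of_variation(HYPOTHETICAL_ROUNDS):.3f}")
```

A ratio is only defined when the ablation mode actually triggers the vulnerability; rounds that hit the 3-h cap have no TTE and would need to be handled separately.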

4.5. Experimental Summary

Comprehensive experimental results show that LLM-Boofuzz outperforms mainstream tools in vulnerability triggering efficiency and code coverage improvement. Additionally, both of its core modules, “LLM-based real traffic protocol information extraction” and “multi-script iterative fuzzing”, prove to be effective.

5. Discussion

LLM-Boofuzz constitutes an initial exploration of the deep integration of LLMs into generation-based black-box protocol fuzzing, yet it exhibits several notable limitations.

First, regarding protocol information extraction via real traffic analysis, LLM-Boofuzz is currently more applicable to plain text and most text-based protocols, while its efficacy for binary or encrypted protocols is limited. This is because current LLMs excel at processing language- and text-based inputs (a trait observable in common usage scenarios) but struggle with tasks requiring extensive mathematical calculations and rigorous logical reasoning—such as parsing pure binary data, where precise interpretation of byte-level structures and bitwise relationships exceeds their current capabilities. Encrypted protocols present an even more complex challenge: their data not only exists in binary form but also embeds encryption logic and features. Notably, most existing research in protocol analysis only studies message structures after decrypting encrypted protocols, as direct analysis of encrypted data remains a bottleneck in the field. Thus, leveraging LLMs for encrypted protocol processing is still immature and unfeasible at present. However, this limitation underscores the need for deeper exploration in protocol reverse engineering, and the real traffic-based protocol information extraction approach proposed in this paper still holds referential value.

Second, LLM-Boofuzz lacks the capability for dynamic exploration of protocol state machines. Currently, it relies on pre-extracted state machine rules from static real traffic to guide fuzzing, which fails to adapt to complex stateful protocols.

Third, the agent’s functions in LLM-Boofuzz remain relatively simplistic. Its current design only supports basic status monitoring (e.g., process crash detection) and script optimization based on timeout or exception feedback, lacking advanced capabilities such as real-time packet capture analysis, automated vulnerability verification, and adaptive adjustment of parallel fuzzing threads.

Based on the above discussion, our future research will focus on the following aspects.

Enhancing real traffic parsing. We will further investigate methods to improve the ability of LLMs to parse real traffic, thereby enhancing the adaptability of LLM-Boofuzz to private and unknown scenarios.

Dynamic protocol state machine construction. We aim to implement a mechanism for constructing dynamic protocol state machines based on real-time traffic. This will enhance LLM-Boofuzz’s adaptability to complex stateful protocols.

Strengthening agent capabilities. We will continue to enhance the practical capabilities of the agent by integrating functions such as real-time packet capture analysis and automated vulnerability verification into LLM-Boofuzz, thereby supporting the expansion of fuzzing functionalities.

6. Related Work

6.1. Black-Box Protocol Fuzzing

Black-box protocol fuzzing can be conducted solely through network communication interactions, without being restricted by the target’s operating environment or specific architecture, which gives it good scenario adaptability. Additionally, it does not require testers to make intrusive modifications to the source code, reducing fuzzing costs. Thus, it has received widespread attention in industry and academia. Based on test case construction methods, black-box protocol fuzzing can be divided into mutation-based and generation-based modes. Generation-based fuzzing has a long development history and has evolved through multiple generations of tools, forming a mature technical framework. In 1999, PROTOS [45] became the first systematic black-box protocol fuzzing framework. It tests protocol programs through preset malformed data templates, but its reliance on fixed templates makes it difficult to adapt to dynamic changes in protocol formats. In 2002, SPIKE [46] introduced a scripting mechanism to black-box protocol fuzzing for the first time, allowing testers to customize protocol field structures via scripts. However, its scripting syntax is cumbersome, requiring high technical expertise from testers, and it lacks support for protocol state machines. Subsequently, in 2005, the Peach [47] framework described protocol formats using XML, further enhancing the flexibility of generation script construction. However, XML itself has high redundancy and low parsing efficiency; script maintenance costs increase significantly with protocol complexity. In 2008, Sulley combined Python scripting with protocol fuzzing for the first time. Leveraging Python’s simplicity, ease of use, and strong extensibility, it significantly lowered the barrier to writing generation scripts and expanded the capability boundary of black-box protocol fuzzing. However, its single-threaded fuzzing strategy severely limits fuzzing efficiency, and its field mutation strategies are relatively simple. Through long-term development, generation-based black-box protocol fuzzing has formed a technical system centered on generation scripts and is widely used in various protocol fuzzing scenarios.

By contrast, mutation-based methods have developed relatively slowly, primarily because of limitations in black-box environments, such as the scarcity of feedback information, the difficulty in acquiring high-quality seed samples, and the challenges in designing mutation strategies. In recent years, these methods have nonetheless achieved some advancements: for example, Bleem distinguishes and mutates fields based on message field types while supporting sequence-level mutation operations, and Snipuzz attempts to implement intelligent mutation based on message fragments. On the whole, however, mutation-based methods exhibit significant randomness and blindness in test case construction, making it difficult to reach the deep logic and boundary scenarios of protocol programs. Furthermore, a large number of test cases are filtered out directly due to non-compliance with protocol syntax specifications, which results in low fuzzing efficiency. To date, mutation-based methods remain unable to replace generation-based methods in the practical field of vulnerability detection.
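The point about syntax non-compliance can be illustrated with a small experiment. The sketch below contrasts naive random byte mutation of one seed with template-based generation and measures how many test cases pass a simplified well-formedness check for FTP command lines. Everything in it is an assumption made for illustration: the regular expression is a stand-in for a real parser rather than the RFC 959 grammar, the command list and argument lengths are arbitrary, and the mutator is deliberately naive, so it does not represent the field-aware strategies of Bleem or Snipuzz.

```python
import random
import re

# Simplified check: 3-4 uppercase letters, an optional printable argument,
# and a CRLF terminator. A stand-in for a real FTP parser.
FTP_LINE = re.compile(rb"[A-Z]{3,4}( [\x20-\x7e]+)?\r\n")


def is_well_formed(message: bytes) -> bool:
    """Return True if the whole message matches the simplified syntax."""
    return FTP_LINE.fullmatch(message) is not None


def mutate(seed: bytes, rng: random.Random, flips: int = 3) -> bytes:
    """Mutation-based: overwrite a few random positions with random bytes."""
    data = bytearray(seed)
    for _ in range(flips):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def generate(rng: random.Random) -> bytes:
    """Generation-based: fill a format template and stress only the argument."""
    verb = rng.choice(["USER", "PASS", "CWD", "RETR"])
    argument = "A" * rng.choice([1, 64, 1024, 4096])
    return f"{verb} {argument}\r\n".encode("ascii")


def well_formed_rate(cases) -> float:
    """Fraction of test cases that pass the simplified syntax check."""
    return sum(is_well_formed(case) for case in cases) / len(cases)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    seed_message = b"USER anonymous\r\n"
    mutated = [mutate(seed_message, rng) for _ in range(1000)]
    generated = [generate(rng) for _ in range(1000)]
    print(f"mutation-based well-formed rate:   {well_formed_rate(mutated):.1%}")
    print(f"generation-based well-formed rate: {well_formed_rate(generated):.1%}")
```

By construction, every generated case is well formed while still carrying oversized arguments, whereas the naive mutator frequently damages the verb or the line terminator and would be rejected before reaching deeper logic.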

6.2. Gray-Box Protocol Fuzzing

In recent years, gray-box protocol fuzzing tools have received extensive attention in academia. Tools such as AFLNet and StateAFL [48] improve vulnerability mining efficiency and comprehensiveness through code coverage-guided mutation strategies but struggle to cover deep logical paths. Tools like PS-Fuzz [49], DSFuzz [50], and SGMFuzz [51] identify protocol state nodes by capturing long-lifetime variables in memory snapshots, enhancing fuzzing capabilities for stateful protocols. However, frequent memory snapshot capture may reduce fuzzing efficiency. SNPSFuzzer [