SSF-KW: Keyword-Guided Multi-Task Learning for Robust Extractive Summarization

Abstract

1. Introduction

- We propose SSF-KW, a novel framework that jointly learns keyword extraction and sentence selection to reduce sensitivity to noisy reference summaries.

- We design a keyword-guided fusion mechanism that combines lexical salience and semantic context for more coherent and informative summaries.

- We empirically validate the framework’s robustness and interpretability across multiple datasets and provide ablation studies to assess each component’s contribution.

2. Related Work

3. Methodology

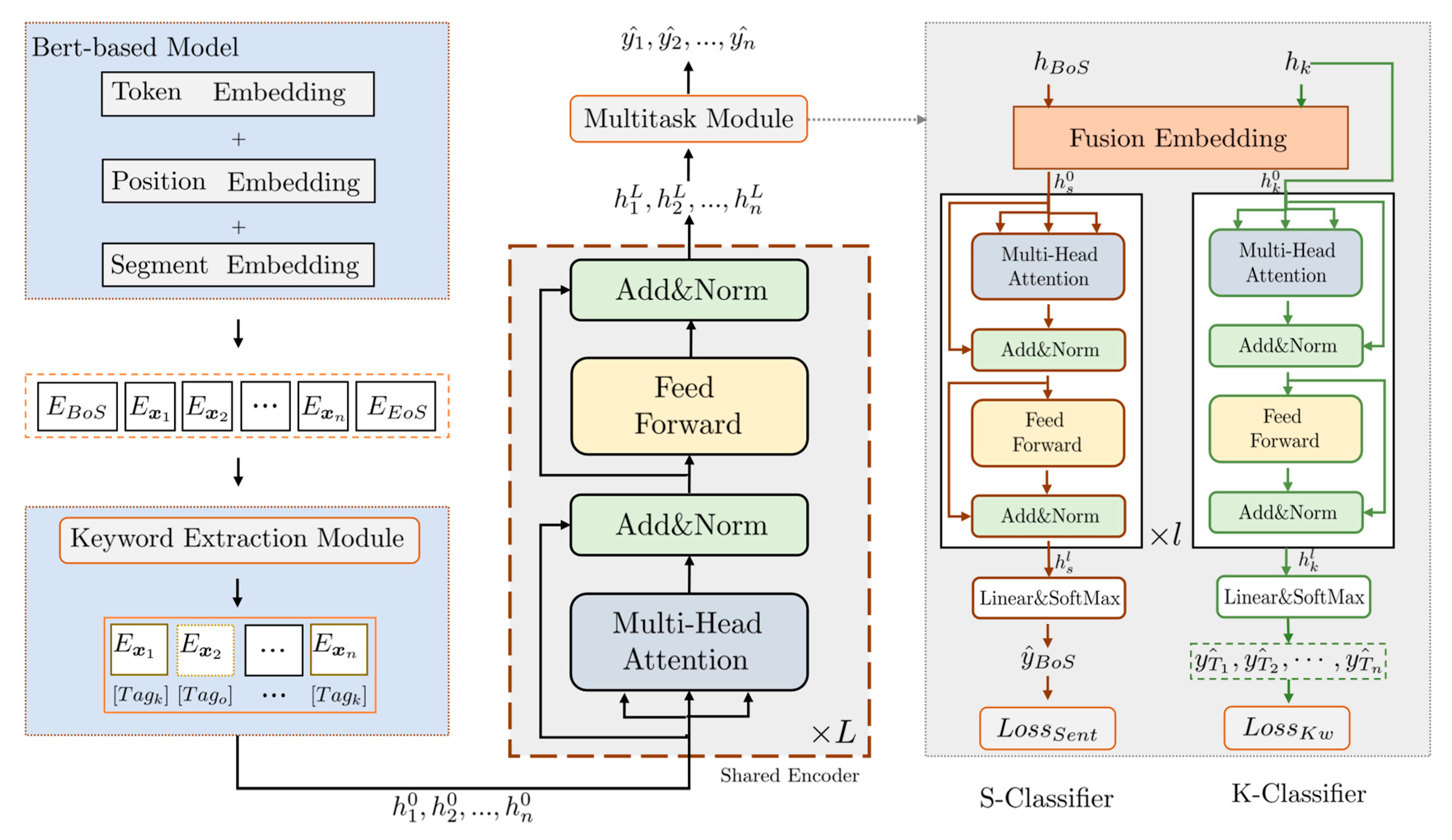

3.1. Overview

- Sentence Embedding Module (Section 3.2.1): Encodes individual sentences using a BERT-based model to generate contextualized representations.

- Keyword Extraction Module (Section 3.2.2): Identifies pivotal lexical units (nouns, verbs, adjectives) via part-of-speech tagging and semantic similarity analysis.

- Fusion and Classification Module (Section 3.2.3): Integrates sentence and keyword embeddings through a fusion strategy; the fused representations are then processed by task-specific classifiers to produce the final summary. The system is optimized with a multi-task objective function (Section 3.4) that jointly addresses sentence selection and keyword identification.
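As a rough illustration of how a weighted fusion and a joint objective of this kind can fit together, the sketch below combines a sentence embedding with a keyword embedding and sums two binary cross-entropy terms. The convex-combination form of `fuse`, the names `alpha` and `lam`, and the use of binary cross-entropy are all assumptions made for illustration; they are not taken from the paper's equations.

```python
import numpy as np

def fuse(sent_emb, kw_emb, alpha):
    """Weighted fusion of a sentence embedding and a keyword embedding.

    Assumed form: a convex combination controlled by alpha in [0, 1].
    """
    return alpha * sent_emb + (1.0 - alpha) * kw_emb

def bce(probs, labels, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(labels * np.log(probs) + (1.0 - labels) * np.log(1.0 - probs)))

def multitask_loss(sent_probs, sent_labels, kw_probs, kw_labels, lam=1.0):
    """Joint objective: sentence-selection loss plus a weighted keyword loss.

    lam is a hypothetical task-balancing weight.
    """
    return bce(sent_probs, sent_labels) + lam * bce(kw_probs, kw_labels)

# Toy values standing in for real encoder and classifier outputs.
fused = fuse(np.array([1.0, 0.0]), np.array([0.0, 1.0]), alpha=0.2)
loss = multitask_loss(
    sent_probs=np.array([0.5, 0.5]), sent_labels=np.array([1.0, 0.0]),
    kw_probs=np.array([0.5]), kw_labels=np.array([1.0]),
)
```

Because both loss terms depend on the same shared encoder, gradients from the keyword task also shape the sentence representations, which is the usual motivation for a joint objective.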

Task Definition

3.2. Model Architecture

3.2.1. Sentence Embedding Module

3.2.2. Keyword Extraction Module

1. Part-of-Speech Tagging: We first tag every token with its part of speech using NLTK. The resulting annotations let us identify candidate keywords among nouns, verbs, and adjectives.

2. Contextual Embedding and Similarity Calculation: For each candidate word in a sentence, we compute its contextualized embedding using the pre-trained BERT model. The cosine similarity between the word embedding and the overall document embedding is then calculated to determine its relevance. The document embedding is computed as the average of all sentence embeddings.

3. Keyword Selection: Within each sentence, the candidate word with the highest similarity to the document embedding is selected as the key semantic word. Tokens are then marked as keywords (Tagk) or non-keywords (Tago).
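The similarity and selection steps above can be sketched as follows. This is a minimal illustration, assuming the candidate embeddings have already been produced (for example by a BERT encoder after part-of-speech filtering); the toy 2-D vectors and the function names `cosine` and `select_keywords` are hypothetical.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two non-zero 1-D vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def select_keywords(sentence_embs, candidate_embs):
    """Pick, for each sentence, the candidate closest to the document embedding.

    sentence_embs: (num_sentences, dim) array of sentence embeddings.
    candidate_embs: one (num_candidates, dim) array per sentence, holding the
        embeddings of that sentence's noun/verb/adjective candidates.
    Returns one candidate index per sentence (None if a sentence has no candidates).
    """
    # Document embedding: average of all sentence embeddings.
    doc_emb = sentence_embs.mean(axis=0)
    selected = []
    for cands in candidate_embs:
        if len(cands) == 0:
            selected.append(None)
            continue
        sims = [cosine(c, doc_emb) for c in cands]
        selected.append(int(np.argmax(sims)))
    return selected

# Toy 2-D embeddings standing in for BERT outputs (hypothetical values).
sentence_embs = np.array([[1.0, 0.0], [1.0, 1.0]])
candidate_embs = [
    np.array([[0.0, 1.0], [2.0, 1.0]]),
    np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 3.0]]),
]
keyword_idx = select_keywords(sentence_embs, candidate_embs)  # [1, 0]
```

Here the document embedding is `[1.0, 0.5]`, so the second candidate of the first sentence (which points in exactly that direction) and the first candidate of the second sentence are selected.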

3.2.3. Fusion and Classification Module

3.3. Classifier Design

3.4. Optimization

4. Experiments and Analysis

4.1. Evaluation Metric

4.2. Setup

4.3. Baselines and Results

4.4. Dataset Description

4.4.1. Sentence-Level Labels (For Extractive Summarization)

4.4.2. Keyword-Level Labels (For Keyword Extraction)

4.4.3. Dataset Scope and Statistics

4.5. Ablation Study

4.5.1. Analysis of Component Contributions

4.5.2. Analysis of Fusion Embedding

4.5.3. Qualitative Illustration of Factual Error Correction

4.6. Computational Efficiency Analysis

- Vs. Generative LLMs (e.g., ChatGPT, T5, BART): Generative models employ an autoregressive decoding process [45], which generates summaries sequentially, token by token. The number of decoding steps therefore scales linearly with the output length, O(n) [46], making inference comparatively slow. In contrast, SSF-KW, as an extractive model, performs a single forward pass through the encoder to score all sentences, followed by a simple selection step [47]. The number of model passes is constant, O(1), relative to the output length, because the model does not generate new tokens but only selects existing sentences. Furthermore, generative LLMs typically have orders of magnitude more parameters (e.g., billions [48]) than our BERT-based encoder (e.g., millions [49]), which substantially increases memory footprint and energy consumption.

- Vs. Other Abstractive Models: While smaller than LLMs, abstractive models still incur the overhead of a decoder network and autoregressive generation [50]. SSF-KW eliminates the entire decoder component, reducing both the number of parameters and the computational graph complexity.

- Vs. Graph-Based Models (e.g., GNN-EXT, RHGNNSUM-EXT): Many extractive models rely on complex graph neural networks to capture document structure [42]. Constructing sentence-entity graphs and running multi-step message passing in GNNs introduce non-trivial computational overhead [51]. SSF-KW achieves competitive performance through a conceptually simpler joint encoding and multi-task learning framework that avoids explicit graph construction and propagation.

- Internal Design Choices: Our multi-task design promotes efficiency by learning a more focused and disentangled representation within a single shared encoder. This architecture aligns with the concept of parameter sharing in multi-task learning [52], leading to faster convergence during training and a more compact model.
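The contrast between autoregressive decoding and extractive selection can be made concrete with a small sketch. It is a simplified illustration under stated assumptions: the sentence scores are taken as given, `extractive_select` and `count_model_calls` are hypothetical helper names, and the call count ignores the per-step cost of attention and any chunking needed for documents longer than the encoder's input window.

```python
import numpy as np

def extractive_select(scores, k):
    """Return the indices of the k highest-scoring sentences in document order."""
    top = np.argsort(scores)[::-1][:k]
    return sorted(int(i) for i in top)

def count_model_calls(num_output_tokens):
    """Model passes needed for one summary under the two regimes (simplified)."""
    return {
        # One decoder step per generated token: grows with the summary length.
        "autoregressive_decoder_steps": num_output_tokens,
        # One encoder pass scores every sentence: independent of summary length.
        "extractive_encoder_passes": 1,
    }

scores = np.array([0.1, 0.9, 0.3, 0.8])  # toy per-sentence scores
chosen = extractive_select(scores, k=2)  # [1, 3]
calls = count_model_calls(num_output_tokens=50)
```

Selecting the top-k scores is a sort over the number of sentences, which is negligible next to a transformer forward pass, so the encoder pass dominates the extractive inference cost.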

4.7. Quantitative Analysis of Computational Efficiency

4.7.1. Parameter Scale

4.7.2. Computational Complexity

4.7.3. Training Efficiency

4.7.4. Comparison with Prior Solutions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhang, H.; Yu, P.S.; Zhang, J. A systematic survey of text summarization: From statistical methods to large language models. ACM Comput. Surv. 2025, 57, 1–41.

2. Shakil, H.; Farooq, A.; Kalita, J. Abstractive text summarization: State of the art, challenges, and improvements. Neurocomputing 2024, 603, 128255.

3. Giarelis, N.; Mastrokostas, C.; Karacapilidis, N. Abstractive vs. extractive summarization: An experimental review. Appl. Sci. 2023, 13, 7620.

4. Maynez, J.; Narayan, S.; Bohnet, B.; McDonald, R. On Faithfulness and Factuality in Abstractive Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1906–1919.

5. Wang, Y.; Zhang, J.; Yang, Z.; Wang, B.; Jin, J.; Liu, Y. Improving Extractive Summarization with Semantic Enhancement Through Topic-Injection Based BERT Model. Inf. Process. Manag. 2024, 61, 103677.

6. Landes, P.; Chaise, A.; Patel, K.; Huang, S.; Di Eugenio, B. Hospital Discharge Summarization Data Provenance. In Proceedings of the 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks, Toronto, Canada, 13 July 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 439–448.

7. Zhong, M.; Liu, P.; Chen, Y.; Wang, D.; Qiu, X.; Huang, X.-J. Extractive Summarization as Text Matching. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6197–6208.

8. Zhang, S.; Wan, D.; Bansal, M. Extractive Is Not Faithful: An Investigation of Broad Unfaithfulness Problems in Extractive Summarization. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 2153–2174.

9. Zhang, J.; Lu, L.; Zhang, L.; Chen, Y.; Liu, W. DCDSum: An Interpretable Extractive Summarization Framework Based on Contrastive Learning Method. Eng. Appl. Artif. Intell. 2024, 133, 108148.

10. AbdelAziz, N.M.; Ali, A.A.; Naguib, S.M.; Fayed, L.S. Clustering-Based Topic Modeling for Biomedical Documents Extractive Text Summarization. J. Supercomput. 2025, 81, 171.

11. Debnath, D.; Das, R.; Pakray, P. Extractive Single Document Summarization Using Multi-Objective Modified Cat Swarm Optimization Approach: ESDS-MCSO. Neural Comput. Appl. 2025, 37, 519–534.

12. Dong, X.; Li, W.; Le, Y.; Jiang, Z.; Zhong, J.; Wang, Z. TermDiffuSum: A Term-Guided Diffusion Model for Extractive Summarization of Legal Documents. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, UAE, 19–24 January 2025; pp. 3222–3235.

13. Wang, R.; Lan, T.; Wu, Z.; Liu, L. Unsupervised Extractive Opinion Summarization Based on Text Simplification and Sentiment Guidance. Expert Syst. Appl. 2025, 272, 126760.

14. Rodrigues, C.; Ortega, M.; Bossard, A.; Mellouli, N. REDIRE: Extreme REduction DImension for extRactivE Summarization. Data Knowl. Eng. 2025, 157, 102407.

15. Chan, H.P.; Zeng, Q.; Ji, H. Interpretable Automatic Fine-Grained Inconsistency Detection in Text Summarization. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 6433–6444.

16. She, S.; Geng, X.; Huang, S.; Chen, J. Cop: Factual Inconsistency Detection by Controlling the Preference. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 13556–13563.

17. Chen, L.; Chang, K. An Entropy-Based Corpus Method for Improving Keyword Extraction: An Example of Sustainability Corpus. Eng. Appl. Artif. Intell. 2024, 133, 108049.

18. Li, J.; Zhang, X.; Wang, J.; Cao, S.; Zhou, X. Hierarchical Differential Amplifier Contrastive Learning for Semi-supervised Extractive Summarization. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), 30 June–5 July 2024; IEEE: New York, NY, USA, 2024; pp. 1–8.

19. Rong, H.; Chen, G.; Ma, T.; Sheng, V.S.; Bertino, E. FuFaction: Fuzzy Factual Inconsistency Correction on Crowdsourced Documents with Hybrid-Mask at the Hidden-State Level. IEEE Trans. Knowl. Data Eng. 2024, 36, 167–183.

20. Liu, Y.; Deb, B.; Teruel, M.; Halfaker, A.; Radev, D.; Hassan, A. On Improving Summarization Factual Consistency from Natural Language Feedback. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 15144–15161.

21. Tang, L.; Goyal, T.; Fabbri, A.; Laban, P.; Xu, J.; Yavuz, S.; Kryściński, W.; Rousseau, J.; Durrett, G. Understanding Factual Errors in Summarization: Errors, Summarizers, Datasets, Error Detectors. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 11626–11644.

22. Koupaee, M.; Wang, W.Y. WikiHow: A Large Scale Text Summarization Dataset. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Vienna, Austria; pp. 1–6.

23. Narayan, S.; Cohen, S.B.; Lapata, M. Don’t give me the details, just the summary! Topic-aware convolutional neural networks for extreme summarization. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Vienna, Austria; pp. 1797–1807.

24. Zhou, Q.; Yang, N.; Wei, F.; Huang, S.; Zhou, M.; Zhao, T. Neural Document Summarization by Jointly Learning to Score and Select Sentences. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1, pp. 654–663.

25. Galeshchuk, S. Abstractive Summarization for the Ukrainian Language: Multi-Task Learning with Hromadske.ua News Dataset. In Proceedings of the Second Ukrainian Natural Language Processing Workshop (UNLP), Dubrovnik, Croatia, 2–6 May 2023; pp. 49–53.

26. Li, L.; Xu, S.; Liu, Y.; Gao, Y.; Cai, X.; Wu, J.; Song, W.; Liu, Z. LiSum: Open Source Software License Summarization with Multi-Task Learning. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Luxembourg, 11–15 September 2023; IEEE Computer Society: Washington, DC, USA, 2023; pp. 787–799.

27. Chen, L.; Leng, L.; Yang, Z.; Teoh, A.B.J. Enhanced Multitask Learning for Hash Code Generation of Palmprint Biometrics. Int. J. Neural Syst. 2024, 34, 2450020.

28. Tian, Y.; Lin, Y.; Ye, Q.; Wang, J.; Peng, X.; Lv, J. UNITE: Multitask Learning with Sufficient Feature for Dense Prediction. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 5012–5024.

29. Qin, Y.; Pu, N.; Wu, H.; Sebe, N. Margin-aware Noise-robust Contrastive Learning for Partially View-aligned Problem. ACM Trans. Knowl. Discov. Data 2025, 19, 1–20.

30. Zhang, Q.; Zhu, Y.; Yang, M.; Jin, G.; Zhu, Y.; Chen, Q. Cross-to-merge training with class balance strategy for learning with noisy labels. Expert Syst. Appl. 2024, 249, 123846.

31. Lin, C. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004; pp. 74–81.

32. Zhao, W.; Peyrard, M.; Liu, F.; Gao, Y.; Meyer, C.M.; Eger, S. MoverScore: Text Generation Evaluating with Contextualized Embeddings and Earth Mover Distance. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing & 9th Int’l Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 563–578.

33. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. In Proceedings of the 8th Int’l Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020.

34. Li, H.; Chowdhury, S.B.R.; Chaturvedi, S. Aspect-Aware Unsupervised Extractive Opinion Summarization. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 12662–12678.

35. Liang, X.; Li, J.; Wu, S.; Li, M.; Li, Z. Improving Unsupervised Extractive Summarization by Jointly Modeling Facet and Redundancy. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 30, 1546–1557.

36. Wang, Y.; Mao, Q.; Liu, J.; Jiang, W.; Zhu, H.; Li, J. Noise-Injected Consistency Training and Entropy-Constrained Pseudo Labeling for Semi-Supervised Extractive Summarization. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 6447–6456.

37. Zhu, T.; Hua, W.; Qu, J.; Hosseini, S.; Zhou, X. Auto-Regressive Extractive Summarization with Replacement. World Wide Web 2023, 26, 2003–2026.

38. Mendes, A.; Narayan, S.; Miranda, S.; Marinho, Z.; Martins, A.F.; Cohen, S.B. Jointly Extracting and Compressing Documents with Summary State Representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 3955–3966.

39. Zhang, H.; Liu, X.; Zhang, J. Extractive Summarization via ChatGPT for Faithful Summary Generation. In Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 3270–3278.

40. Jie, R.; Meng, X.; Jiang, X.; Liu, Q. Unsupervised Extractive Summarization with Learnable Length Control Strategies. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 18372–18380.

41. Sun, S.; Yuan, R.; Li, W.; Li, S. Improving Sentence Similarity Estimation for Unsupervised Extractive Summarization. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

42. Chen, J. An Entity-Guided Text Summarization Framework with Relational Heterogeneous Graph Neural Network. Neural Comput. Appl. 2024, 36, 3613–3630.

43. Su, W.; Jiang, J.; Huang, K. Multi-Granularity Adaptive Extractive Document Summarization with Heterogeneous Graph Neural Networks. PeerJ Comput. Sci. 2023, 9, e1737.

44. Hermann, K.M.; Kocisky, T.; Grefenstette, E.; Espeholt, L.; Kay, W.; Suleyman, M.; Blunsom, P. Teaching machines to read and comprehend. In Proceedings of the 28th Conference on Neural Information Processing Systems (NeurIPS 2015), Montreal, QC, Canada, 7–12 December 2015; pp. 1693–1701.

45. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

46. Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-Training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

47. Liu, Y.; Lapata, M. Text Summarization with Pretrained Encoders. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3730–3740.

48. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. In Proceedings of the 34th Conference on Neural Information Processing Systems, Online, 6–12 December 2020; pp. 1877–1901.

49. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

50. See, A.; Liu, P.J.; Manning, C.D. Get to the Point: Summarization with Pointer-Generator Networks. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; Volume 1, pp. 1073–1083.

51. Jiang, B.; Zhang, Z.; Lin, D.; Tang, J.; Luo, B. Semi-Supervised Learning with Graph Learning-Convolutional Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11313–11320.

52. Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE Trans. Knowl. Data Eng. 2021, 34, 5586–5609.

| Type | Example Text |
|---|---|
| Artificial Summary (with factual errors) | Dr. Doom is seen for the first time in the trailer for the “Fantastic Four” reboot, Chris Pratt takes the lead in the new trailer for “Jurassic World”. |
| Extracted Summary based on Erroneous Summary | Not to be outdone, the new trailer for “Jurassic World” came out Monday morning. It features even more stars, Chris Pratt. |
| Corrected Artificial Summary | Dr. Doom is seen for the first time in the trailer for the “Fantastic Four” reboot. The new “Jurassic World” trailer features more of Chris Pratt’s character. |
| Extracted Summary based on Corrected Summary | Not to be outdone, the new trailer for “Jurassic World” came out Monday morning. It features even more of star Chris Pratt. Pratt’s scientist character knows dinosaurs better than anyone. |

| Notation | Description |
|---|---|
| | Hidden state of the sentence encoder |
| | The reference summary |
| | An original sentence from the document |
| | Weight parameter for fusion |
| | Batch size |
| | -th word of a sentence |
| | Word-level embedding for keyword extraction |
| | Sentence-level embedding for summarization |
| | Begin/End of Sentence tokens |
| Tagk/Tago | Tag indicating a keyword/non-keyword |

| Model | Brief Description |
|---|---|
| FAR [35] | Addresses facet bias in extractive summarization by explicitly modeling multiple informational facets within a document |
| RFAR [35] | Extends FAR with a redundancy-minimization strategy to ensure more diverse sentence selection |
| CPSUM [36] | A semi-supervised framework that generates pseudo-labels to improve extractive summarization without heavy reliance on annotations |
| AES-REP [37] | Introduces an auto-regressive extractive model with a replacement mechanism for iterative refinement of selected sentences |
| EXCONSUMM [38] | Proposes a dynamic-length controller that adapts output summary length to the quality of reference summaries seen during training |
| CHATGPT-EXT [39] | Employs ChatGPT (gpt-3.5-turbo) for zero-shot extractive summarization, evaluated via ROUGE overlap with human references |
| LCS-EXT [40] | Uses a Siamese network with a bidirectional prediction objective, removing positional assumptions from sentence ranking |
| SIMBERT [41] | Enhances sentence-similarity estimation and ranking through mutual learning and auxiliary signal boosters for key-sentence detection |
| RHGNNSUM-EXT [42] | Leverages a heterogeneous GNN and knowledge-graph information by constructing a sentence–entity graph for node-level reasoning |
| GNN-EXT [43] | Utilizes heterogeneous graph neural networks with edge features to model multi-granular semantic connections between sentences and entities |

| Model | CNN/DailyMail R-1 | CNN/DailyMail R-2 | CNN/DailyMail R-L | WikiHow R-1 | WikiHow R-2 | WikiHow R-L | XSum R-1 | XSum R-2 | XSum R-L |
|---|---|---|---|---|---|---|---|---|---|
| Oracle | 52.59 | 31.24 | 48.87 | 39.80 | 14.85 | 36.90 | 25.62 | 7.62 | 18.72 |
| SIMBERT | 35.41 | 13.18 | 31.75 | - | - | - | - | - | - |
| RFAR | 40.64 | 17.49 | 36.01 | 27.38 | 6.02 | 25.37 | - | - | - |
| FAR | 40.83 | 17.85 | 36.91 | 27.54 | 6.17 | 25.46 | - | - | - |
| LCS-EXT | 40.92 | 17.88 | 37.27 | - | - | - | - | - | - |
| CPSUM (Soft) | 40.93 | 18.01 | 37.04 | - | - | - | 17.22 | 2.17 | 12.71 |
| CPSUM (Hard) | 41.02 | 18.08 | 37.10 | - | - | - | 17.29 | 2.18 | 12.73 |
| EXCONSUMM | 41.70 | 18.60 | 37.80 | - | - | - | - | - | - |
| CHATGPT-EXT | 42.26 | 17.02 | 27.42 | - | - | - | 20.37 | 4.78 | 14.21 |
| RHGNNSUM-EXT | 42.39 | 19.45 | 38.85 | - | - | - | - | - | - |
| GNN-EXT | 43.14 | 19.94 | 39.43 | - | - | - | - | - | - |
| AES-REP | 43.21 | 19.90 | 39.38 | 29.46 | 7.75 | 27.23 | - | - | - |
| SSF-KW (ours) | 43.27 | 20.39 | 39.70 | 30.03 | 8.42 | 27.89 | 25.43 | 5.29 | 21.34 |
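For readers unfamiliar with the R-1 and R-2 columns, the following sketch shows how an n-gram overlap score of this kind is computed. It is a simplified illustration, not the official ROUGE package: whitespace tokenization, lowercasing, and reporting the F1 variant are assumptions made here, and the function names are hypothetical.

```python
from collections import Counter

def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n_f1(candidate, reference, n=1):
    """F1 over clipped n-gram overlap between a candidate and a reference summary."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

candidate = "the cat sat on the mat"
reference = "the cat lay on the mat"
r1 = rouge_n_f1(candidate, reference, n=1)  # 5 of 6 unigrams overlap
r2 = rouge_n_f1(candidate, reference, n=2)  # 3 of 5 bigrams overlap
```

In this toy pair, five of six unigrams and three of five bigrams match, giving scores of about 0.83 and 0.60 on a 0 to 1 scale.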

| Model | CNN/DailyMail R-1 | CNN/DailyMail R-2 | CNN/DailyMail R-L | XSum R-1 | XSum R-2 | XSum R-L | WikiHow R-1 | WikiHow R-2 | WikiHow R-L |
|---|---|---|---|---|---|---|---|---|---|
| Full Model | 43.27 | 20.39 | 39.71 | 25.43 | 5.29 | 21.34 | 30.04 | 8.42 | 27.89 |
| | 43.20 | 20.35 | 39.62 | 25.29 | 5.26 | 21.17 | 29.80 | 8.30 | 27.64 |
| | 43.21 | 20.36 | 39.64 | 25.22 | 5.29 | 21.12 | 29.84 | 8.38 | 27.70 |
| | 43.13 | 20.31 | 39.58 | 25.16 | 5.21 | 21.06 | 29.48 | 8.15 | 27.40 |
| | 43.23 | 20.38 | 39.68 | 25.32 | 5.31 | 21.24 | 29.91 | 8.41 | 27.78 |
| | 43.22 | 20.34 | 39.66 | 25.19 | 5.19 | 21.07 | 30.21 | 8.51 | 27.99 |

| Value | CNN/DailyMail R-1 | CNN/DailyMail R-2 | CNN/DailyMail R-L | XSum R-1 | XSum R-2 | XSum R-L | WikiHow R-1 | WikiHow R-2 | WikiHow R-L |
|---|---|---|---|---|---|---|---|---|---|
| 0.0 | 43.25 | 20.42 | 39.70 | 25.34 | 5.30 | 21.23 | 29.94 | 8.40 | 27.79 |
| 0.1 | 43.22 | 20.36 | 39.65 | 25.37 | 5.32 | 21.24 | 29.97 | 8.41 | 27.82 |
| 0.2 | 43.27 | 20.39 | 39.71 | 25.38 | 5.28 | 21.27 | 29.96 | 8.39 | 27.83 |
| 0.3 | 43.22 | 20.40 | 39.67 | 25.36 | 5.31 | 21.20 | 30.00 | 8.37 | 27.84 |
| 0.4 | 43.23 | 20.38 | 39.67 | 25.36 | 5.26 | 21.26 | 29.99 | 8.38 | 27.84 |
| 0.5 | 43.20 | 20.36 | 39.64 | 25.37 | 5.26 | 21.27 | 30.03 | 8.42 | 27.89 |
| 0.6 | 43.19 | 20.34 | 39.63 | 25.32 | 5.26 | 21.21 | 30.02 | 8.39 | 27.86 |
| 0.7 | 43.21 | 20.40 | 39.66 | 25.37 | 5.28 | 21.27 | 30.01 | 8.40 | 27.85 |
| 0.8 | 43.23 | 20.39 | 39.68 | 25.43 | 5.29 | 21.34 | 29.98 | 8.39 | 27.84 |
| 0.9 | 43.23 | 20.38 | 39.68 | 25.40 | 5.30 | 21.30 | 29.93 | 8.36 | 27.78 |
| 1.0 | 43.21 | 20.36 | 39.65 | 25.39 | 5.31 | 21.29 | 29.92 | 8.33 | 27.74 |

| Type | Example Text |
|---|---|
| Noisy Reference Summary | “Dr. Doom is seen for the first time in the trailer for the Fantastic Four reboot, Chris Pratt takes the lead in the new trailer for Jurassic World.” |
| Baseline Extractive Output | “Not to be outdone, the new trailer for Jurassic World came out Monday morning. It features even more stars, Chris Pratt.” (selects sentence influenced by erroneous association) |
| SSF-KW Output | “Not to be outdone, the new trailer for Jurassic World came out Monday morning. It features even more of star Chris Pratt’s scientist character who knows dinosaurs better than anyone.” (selects factually correct sentence reflecting true context) |
| Observation | The reference-level factual error (“Chris Pratt takes the lead in Fantastic Four”) changes the extractive preference of standard models, which prioritize sentences lexically overlapping with the noisy supervision. SSF-KW, by incorporating semantic and keyword cues, refocuses the selection process on the document’s actual meaning rather than the flawed reference, thereby improving factual robustness and interpretability. |

| Model | Core Encoder | Extra Module | Parameters | Complexity Contributors |
|---|---|---|---|---|
| FAR [35] | BERT-base | Facet graph encoder | 110 M | Encoder + facet-graph propagation |
| RFAR [35] | BERT-base | Graph + redundancy gating | 112 M | Encoder + graph message passing |
| CPSUM [36] | BERT-base | Semi-supervised pseudo-labeling (no decoder/GNN) | 110 M | Encoder |
| AES-REP [37] | BERT-base | Auto-regressive sentence replacement | 118 M | Encoder + sentence-level sequential step |
| EXCONSUMM [38] | BERT-base | Dynamic length controller | 113 M | Encoder + controller |
| LCS-EXT [40] | BERT-base (dual tower) | Siamese encoder | 2 × 110 M | Two encoders |
| SIMBERT [41] | BERT-base | Similarity enhancement | 110 M | Encoder |
| RHGNNSUM-EXT [42] | BERT-base | Heterogeneous GNN | 125 M | Encoder + heterogeneous graph |
| GNN-EXT [43] | BERT-base | Graph neural network | 122 M | Encoder + graph |
| SSF-KW (Ours) | BERT-base | Multi-task fusion (no decoder/GNN) | 114 M (+ fusion < 4 M) | Encoder + fusion |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zhang, J. SSF-KW: Keyword-Guided Multi-Task Learning for Robust Extractive Summarization. Electronics 2025, 14, 4551. https://doi.org/10.3390/electronics14234551
