1. Introduction

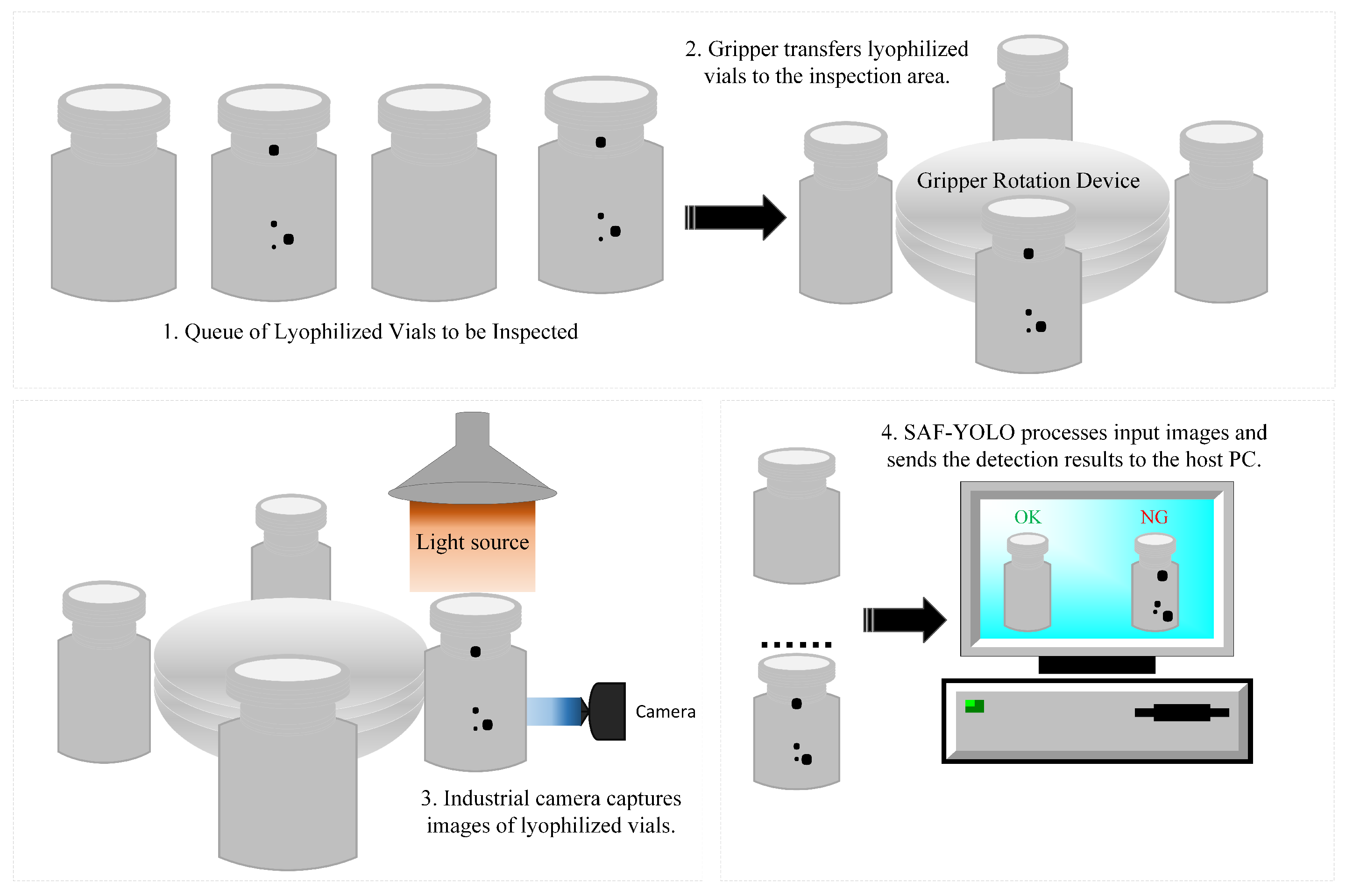

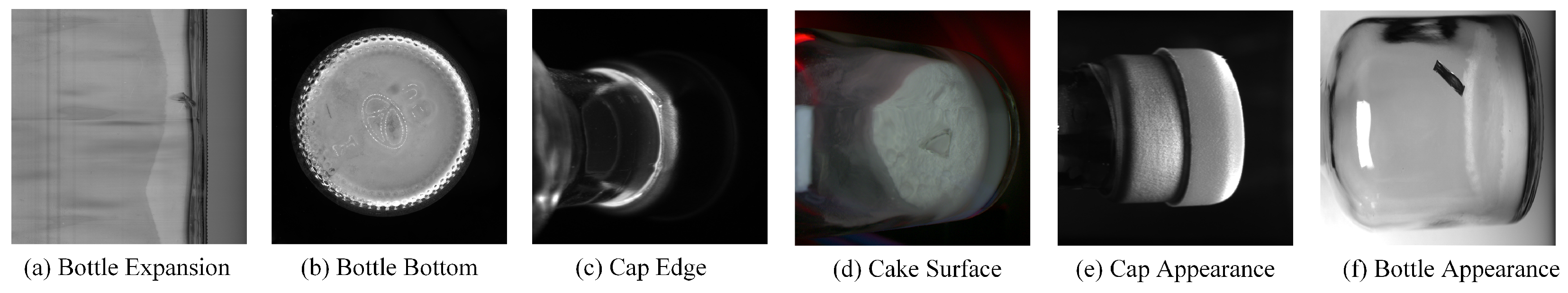

Injectable formulations are widely used in clinical practice owing to their distinct advantages, including rapid onset of action and high bioavailability. Among the various packaging formats, the Lyophilized Vial is one of the primary containers for injectables and is particularly suitable for powdered antibiotics (e.g., penicillin), which can be conveniently reconstituted with saline for injection. Compared with liquid injectables, Lyophilized Vials also offer several advantages, such as lighter weight, longer storage stability, and ease of transport. Structurally, a Lyophilized Vial is a sealed system composed of multiple components, including the base, body, neck, lyophilized drug powder, rubber stopper, and aluminum cap. Its manufacturing process typically involves sequential steps such as washing the empty vial, drying, filling with drug powder, inserting the rubber stopper, and crimping the aluminum cap. Each of these steps can introduce distinct types of defects. For instance, incomplete washing may leave residual contaminants; improper temperature control during drying can lead to cracks in the glass body; and inappropriate torque during capping may damage the aluminum seal. These defects vary widely in form and may occur in different parts of the vial. Since the reconstituted drug solution is administered directly into the human body, any such defect may contaminate the drug or compromise the container’s integrity, posing serious risks. Consequently, in accordance with the requirements of the National Medical Products Administration (NMPA), each Lyophilized Vial must undergo rigorous visual defect inspection before release from the factory [1].

Traditional manual visual inspection suffers from low efficiency and high labor intensity, whereas machine vision, owing to its automation capability, has become an essential component of intelligent manufacturing and is gradually replacing manual inspection [2]. As the core of machine vision systems, visual inspection algorithms are generally categorized into traditional image processing methods and deep learning-based approaches. For Lyophilized Vial defect detection, traditional image processing techniques, such as the deformable template matching proposed by Gong et al. [3] and the ROI-based brightness statistical analysis developed by De et al. [4], can handle certain geometric distortions or illumination variations. However, these methods rely heavily on handcrafted feature design and parameter tuning, which limits their detection performance under complex backgrounds or when defect features are subtle.

Lyophilized Vial defect detection presents several intrinsic challenges. First, the vial’s transparent and highly reflective glass surface produces strong specular highlights and low-contrast defect regions, which obscure visual cues. Second, the defects themselves are often extremely small, irregularly shaped, and distributed across multiple structural areas such as the neck, shoulder, and body, making feature extraction difficult. Third, the Lyophilized Vial’s multi-component structure introduces large intra-class variation and uneven lighting across views. Finally, the scarcity and imbalance of real defect samples hinder model generalization. Collectively, these challenges lead to weak feature responses, high false detection rates, and unstable performance when conventional detection frameworks such as YOLOv11 are applied directly.

With the remarkable success of deep learning in the field of computer vision, deep learning-based visual inspection algorithms have been extensively investigated. These methods formulate visual defect detection as an object detection task and introduce application-specific enhancements on the basis of general deep learning object detection frameworks. Among these, the YOLO series [

5,

6,

7,

8,

9] has emerged as one of the most influential and widely applied algorithms in object detection. YOLO achieves an effective balance between detection speed and accuracy, while also benefiting from being fully open source. For example, in road defect detection, Li et al. [

10] proposed MGD-YOLO, which incorporates a multi-scale dilated attention module, depthwise separable convolutions, and a visual global attention upsampling module to enhance cross-scale semantic representation and improve localization of low-level features. For drilling rig visual inspection, Zhao et al. [

11] introduced FSS-YOLO, which integrates Faster Blocks, the SimAM attention mechanism, and shared convolutional structures in the detection head to reduce parameter complexity and strengthen multi-scale generalization. In the context of liquid crystal display defect detection, Luo et al. [

12] developed YOLO-DEI, combining DCNv2, CE modules, and IGF modules to significantly improve both precision and recall for small targets and large-scale defects. For small-object detection in remote sensing imagery, Zhang et al. [

13] proposed SuperYOLO, which leverages multimodal fusion modules and a super-resolution branch to improve accuracy under low-resolution inputs. In hot-rolled steel surface defect detection, Huang et al. [

14] presented SSA-YOLO, incorporating channel attention convolutional squeeze-excitation modules and Swin Transformer components to enhance feature extraction for small defects and enable multi-scale defect detection. For weld defect detection, Wang et al. [

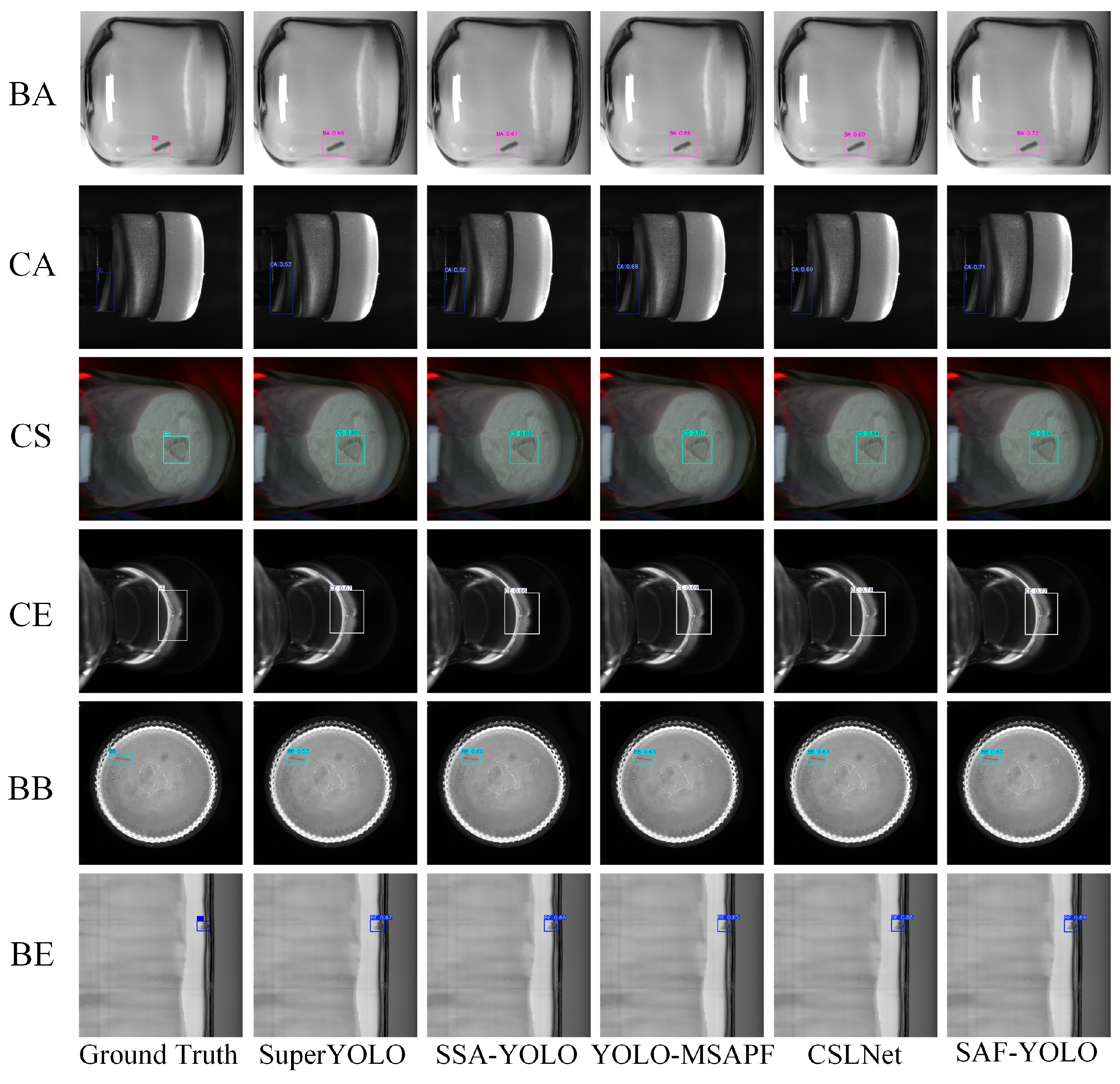

15] proposed YOLO-MSAPF, combining multi-scale alignment fusion with a Parallel Feature Filtering (PFF) module to suppress irrelevant information while strengthening essential features. By filtering fused features across spatial and channel dimensions, their approach achieved improved detection performance. Although these algorithms have demonstrated excellent results on their respective datasets, most are tailored to general-purpose object detection or domain-specific defect detection tasks. When applied to the unique industrial scenario of Lyophilized Vial inspection—characterized by complex structural features and diverse defect types—their generalization capacity and detection performance remain uncertain.

In the field of pharmaceutical packaging and Lyophilized Vial defect detection, various improved algorithms based on the YOLO architecture have been proposed. For defect detection in lyophilized powder and its packaging vials, Xu et al. [16] developed CSLNet, which integrates a C2f_Star module and a lightweight fusion module to achieve adaptive multi-scale feature aggregation. By combining a multi-view imaging system with coarse region proposal networks and attention-based localization, their method improved both detection performance and efficiency. Vijayakumar et al. [17] proposed CBS-YOLOv8, a real-time pharmaceutical packaging defect detection method that introduces cross-stage feature interaction and lightweight improvements to significantly enhance the recognition of cracks, foreign objects, and printing defects in bottles and packaging. Chen et al. [18] designed a multi-scale surface defect detection approach that incorporates variable receptive-field convolutions and feature aggregation structures, thereby strengthening the representation of surface defects at different scales and effectively improving the detection of small and complex flaws. Pei et al. [19] introduced BiD-YOLO, which employs a dual-branch pathway to capture fine-grained texture features and global contextual information separately and then fuses them to enhance detection accuracy. Their model balances real-time performance and high precision, making it suitable for detecting subtle surface defects in transparent plastic bottles. Although these methods have demonstrated promising results, they primarily target specific categories of pharmaceutical defects or focus on localized regions of the container. Consequently, they do not provide comprehensive coverage of defects across the entire Lyophilized Vial body, which limits their applicability and industrial value.

Existing YOLO-based improvements predominantly focus on enhancing spatial-domain features, incorporating local attention mechanisms, or refining network structures. For example, MGD-YOLO employs multi-scale dilated attention and depthwise separable convolutions to strengthen cross-scale feature representation; FSS-YOLO integrates Faster Blocks with SimAM attention to improve multi-scale generalization while reducing model complexity; and SSA-YOLO combines Swin Transformer blocks and channel attention to enhance small-target detection. Although these approaches achieve notable performance in their respective applications, they are largely confined to spatial-domain representations and local feature refinement, which limits their ability to capture subtle frequency-domain cues or preserve global semantic coherence, particularly on transparent, reflective surfaces such as those of Lyophilized Vials. In contrast, SAF-YOLO adopts a distinct strategy by integrating frequency-domain analysis with multi-scale attention fusion. The framework uses spectral decomposition to separate low-frequency structural information from high-frequency defect details, incorporates global-context fusion to maintain holistic spatial semantics, and applies multi-scale attention to adaptively emphasize critical features across the entire vial. By combining spectral and spatial cues in this way, SAF-YOLO achieves more reliable defect detection under variable illumination and reflective conditions, offering robustness and generalization beyond those of existing YOLO-based improvements.

To address the aforementioned issues, this paper analyzes the limitations of YOLOv11 in Lyophilized Vial defect detection and proposes SAF-YOLO, an enhanced algorithm that integrates spectral perception and attention fusion mechanisms to achieve comprehensive detection of various typical Lyophilized Vial defects. Specifically, the main contributions of this work are as follows:

WTC3K2 module: Introduced into the backbone network, this module employs frequency-domain decomposition and reconstruction to jointly model low-frequency structural information and high-frequency detail information, thereby enhancing the network’s sensitivity to subtle defects.

GCFR module: Incorporated between the backbone and neck, this module performs global semantic modeling through a combination of spatial attention and dual-path fusion, improving contextual feature correlation.

MSAFM module: Integrated into the neck network, this module enhances multi-scale feature representation by combining channel and spatial attention, thereby improving detection performance for defects of various sizes.

Dataset: A Lyophilized Vial dataset comprising 12,000 images captured from six different viewing angles is constructed to provide reliable support for model training and performance evaluation.

The remainder of this paper is organized as follows.

Section 1 introduces the characteristics of Lyophilized Vial defect types and reviews the limitations of existing defect detection methods.

Section 2 presents the proposed detection framework for Lyophilized Vial defects, which introduces a frequency-aware representation and attention-fusion strategy to enhance feature perception and localization. Based on the analysis of YOLOv11’s limitations, the framework incorporates three modules—WTC3K2, GCFR, and MSAFM—to achieve multi-scale and context-aware feature enhancement.

Section 3 reports the experimental validation, where comparative studies demonstrate that the proposed approach significantly outperforms existing mainstream methods in detecting multiple types of Lyophilized Vial defects.

Section 4 concludes the paper by summarizing the contributions of SAF-YOLO and outlining directions for future work.

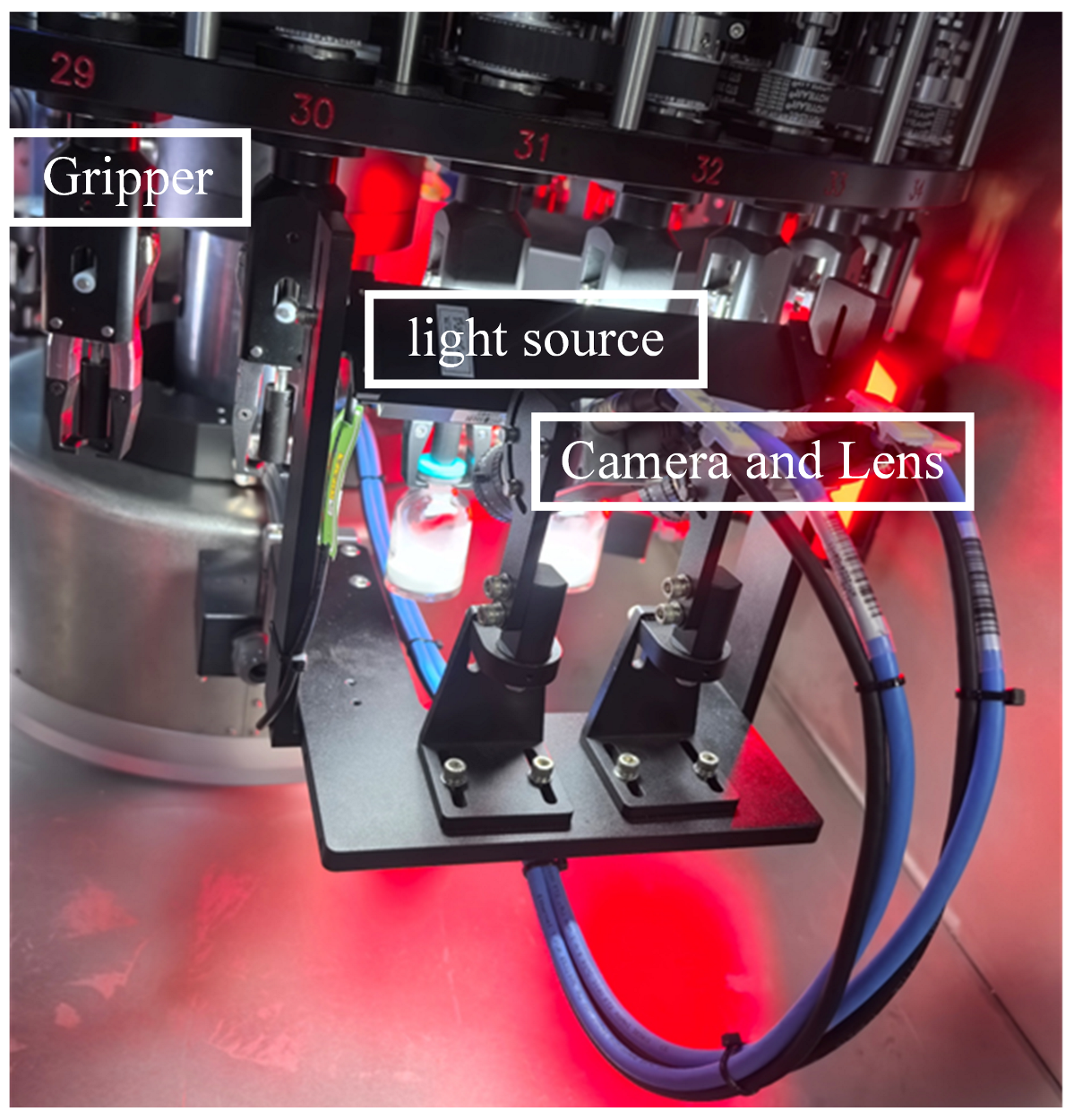

2. SAF-YOLO Detection Method

2.1. Network Architecture

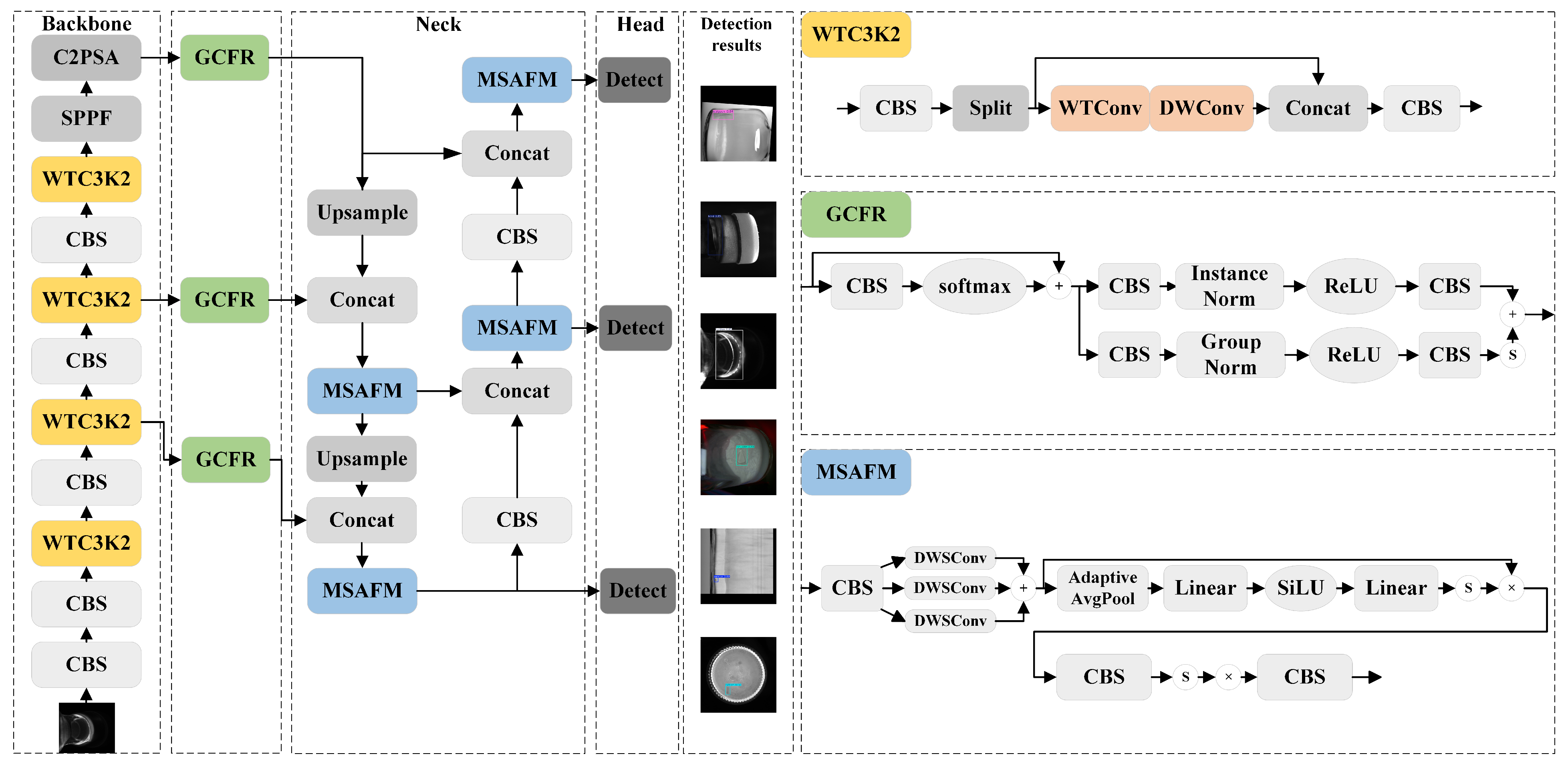

The overall architecture of the proposed SAF-YOLO network for Lyophilized Vial defect detection is illustrated in

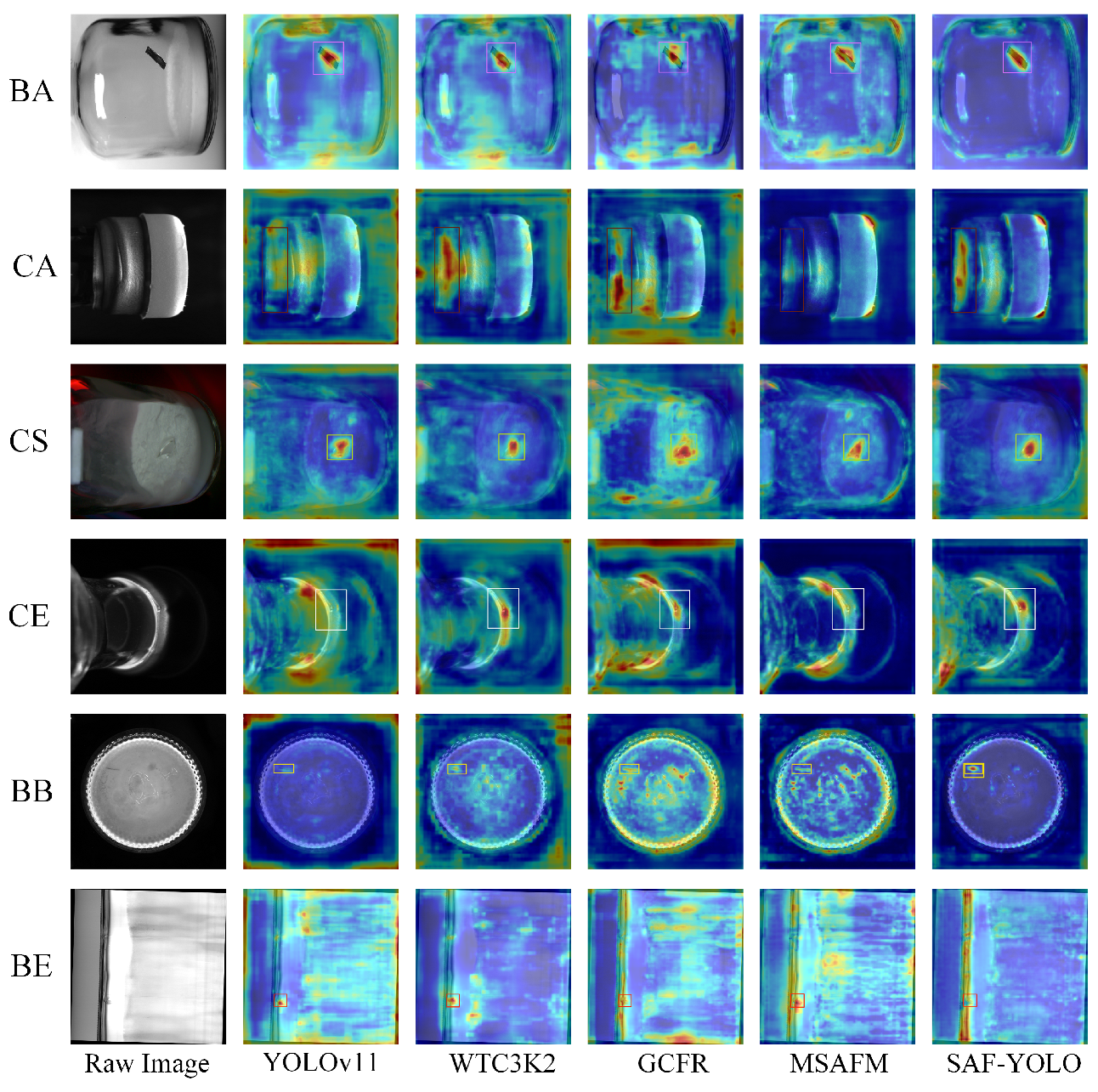

Figure 1. Its structure remains consistent with YOLOv11, comprising a backbone network for feature extraction, a neck network for feature fusion, and a head network for output prediction. In YOLOv11, the C3K2 modules in the backbone employ stacked convolutions to extract features. However, due to the curved edges of Lyophilized Vial, imaging distortions occur, and standard convolutions—with their fixed receptive fields and frequency response—struggle to capture high-frequency texture details effectively, which may result in missed detections of small defects. Although the YOLOv11 backbone generates multi-scale feature maps at the P3, P4, and P5 layers, these outputs are largely independent and only integrated in the neck network, leaving limited cross-layer and cross-region semantic interaction. Consequently, for defects with large spatial extent, such as longitudinal scratches on the Lyophilized Vial body, the network lacks sufficient contextual awareness. The neck network of YOLOv11 employs fixed C3K2 modules for multi-scale feature fusion, which can aggregate some contextual information but are constrained by a uniform receptive field and thus have limited responsiveness across varying object scales. Given the substantial variation in defect sizes on Vial—ranging from small speck-like contaminants to elongated scratches—this limitation hinders detection performance. To overcome these shortcomings of YOLOv11 in Lyophilized Vial defect detection, this paper introduces several improved modules:

WTC3K2 Module in the Backbone Network: We propose an improved residual module, WTC3K2, which replaces the standard convolutions in the conventional C3K2 block with wavelet transform–based convolutions (WTConv). By leveraging frequency-domain decomposition and reconstruction, this design effectively models both low-frequency structural information and high-frequency details, thereby enhancing the network’s sensitivity to subtle texture defects.

GCFR Module between the Backbone and Neck Networks: A Global Context Feature Refine (GCFR) module is introduced between the backbone and neck. By generating spatial attention masks and guiding a dual-path fusion process (addition and multiplication), this lightweight design achieves long-range semantic modeling and strengthens contextual awareness.

MSAFM Module in the Neck Network: In the neck, we construct a Multi-Scale Attention Fusion Module (MSAFM), which employs a three-branch parallel convolutional structure. By integrating both channel attention and spatial attention, the module enhances multi-scale feature representation and improves recognition performance across targets of varying sizes.

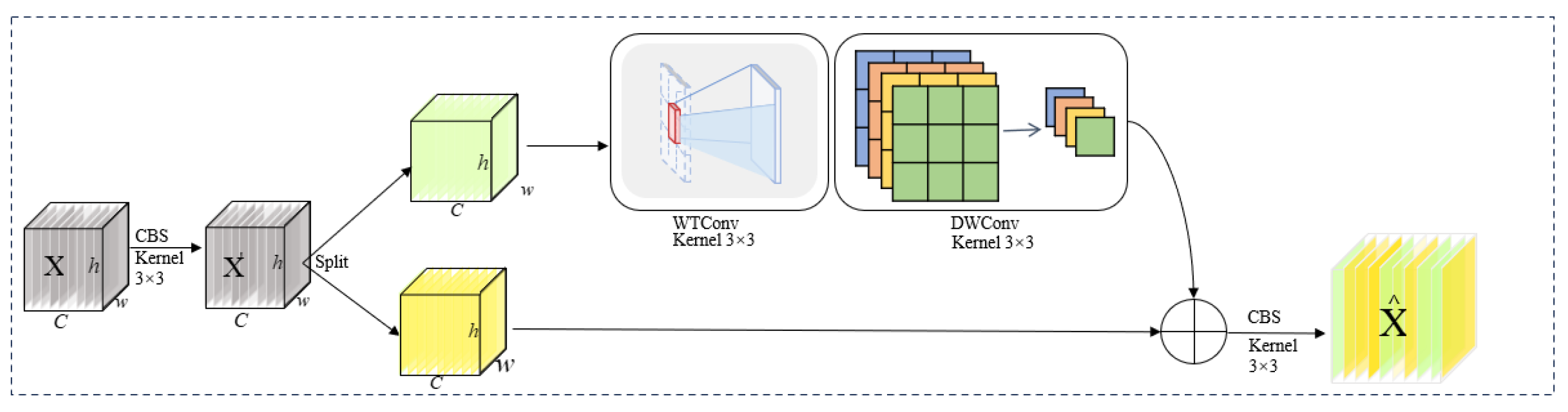

2.2. WTC3K2 Module

The backbone of YOLOv11 employs multiple C3K2 modules for feature extraction. In these modules, conventional convolutions generally use kernels of fixed size, so the receptive field during feature extraction is determined by the kernel size: if the kernel is too small, the ability to detect large-scale defects is diminished; conversely, if the kernel is too large, the resolution of small-scale local defects is reduced while the parameter count increases substantially. This fixed kernel size limits the detection field of view and makes it difficult to accommodate the wide variation in defect scales observed on Lyophilized Vials. In contrast, the wavelet transform–based WTConv [20] performs low-/high-frequency decomposition and multi-level reconstruction, thereby providing receptive fields at multiple scales. This enables the extraction of defect features across different sizes while simultaneously enhancing the resolution of texture details and edges, without a significant increase in parameters or computational cost. To address the limitations of the original backbone, we propose a novel Wavelet-C3K2 (WTC3K2) module to replace the standard C3K2 module, as illustrated in Figure 2. In this design, WTConv replaces traditional convolutions to improve defect detection across scales, and depthwise convolutions are incorporated to further reduce the parameter count.
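As a rough illustration of why multi-level wavelet convolution enlarges the receptive field without a large parameter increase, the sketch below counts kernel weights for a WTConv-style layer against a single depthwise convolution whose kernel spans a comparable receptive field. It assumes (this layout is an assumption, not taken from the paper's code) one depthwise k × k kernel per sub-band, four sub-bands per decomposition level, and a depthwise k × k base convolution on the input; the receptive field is approximated as 2^ℓ · k for ℓ levels, biases and scaling factors are ignored, and the channel width is a hypothetical value.

```python
def wtconv_params(channels: int, k: int, levels: int) -> int:
    """Kernel weights of a WTConv-style layer (assumed: depthwise k x k per sub-band)."""
    base = channels * k * k           # depthwise k x k convolution applied to X itself
    per_level = 4 * channels * k * k  # LL, LH, HL, HH sub-bands at one level
    return base + levels * per_level  # grows linearly with the number of levels


def approx_receptive_field(k: int, levels: int) -> int:
    """A k x k kernel on a map downsampled 2**levels times covers about 2**levels * k pixels."""
    return (2 ** levels) * k


def depthwise_params(channels: int, kernel: int) -> int:
    """Weights of a single depthwise kernel x kernel convolution."""
    return channels * kernel * kernel


C, K = 64, 3  # hypothetical channel width and base kernel size
for levels in (1, 2, 3):
    rf = approx_receptive_field(K, levels)
    print(levels, rf, wtconv_params(C, K, levels), depthwise_params(C, rf))
```

Under these assumptions the WTConv-style count grows linearly with the number of levels (2880, 5184, 7488 weights), whereas a single large depthwise kernel covering the same receptive field grows quadratically (2304, 9216, 36864 weights), so the advantage appears from two levels onward.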

However, the above explanation alone is insufficient to fully justify the use of wavelet convolutions. To address this, we further clarify how wavelet kernels overcome the limitations of fixed conventional convolutions. Traditional convolutions compute within a fixed spatial receptive field, with weights applied uniformly across all input frequencies, which constrains the model’s ability to represent features of varying scales and textures. When the kernel is small, the model struggles to capture large-scale structural information; when the kernel is large, local details may be overlooked. In contrast, wavelet convolutions enable joint analysis in both spatial and frequency domains. Through multi-resolution decomposition, the input features are divided into subbands of different scales and orientations, each focusing on local variations within specific frequency ranges. This mechanism allows the convolution operation to adaptively adjust its receptive field according to the frequency components of the input features, rather than being limited to a fixed window. Based on this principle, the proposed WTC3K2 module effectively mitigates the rigidity of conventional convolutional structures. First, the low-frequency subband emphasizes the overall vial shape and illumination consistency, enhancing model stability under curved surfaces and varying lighting conditions. Second, the high-frequency subbands highlight defect edges and fine-grained textures, improving the model’s sensitivity to local anomalies such as micro-cracks and scratches. By fusing features across different frequency levels via inverse wavelet transform, WTC3K2 achieves multi-scale, frequency-adaptive feature representation, enriching feature expressiveness and robustness without substantially increasing the number of parameters.

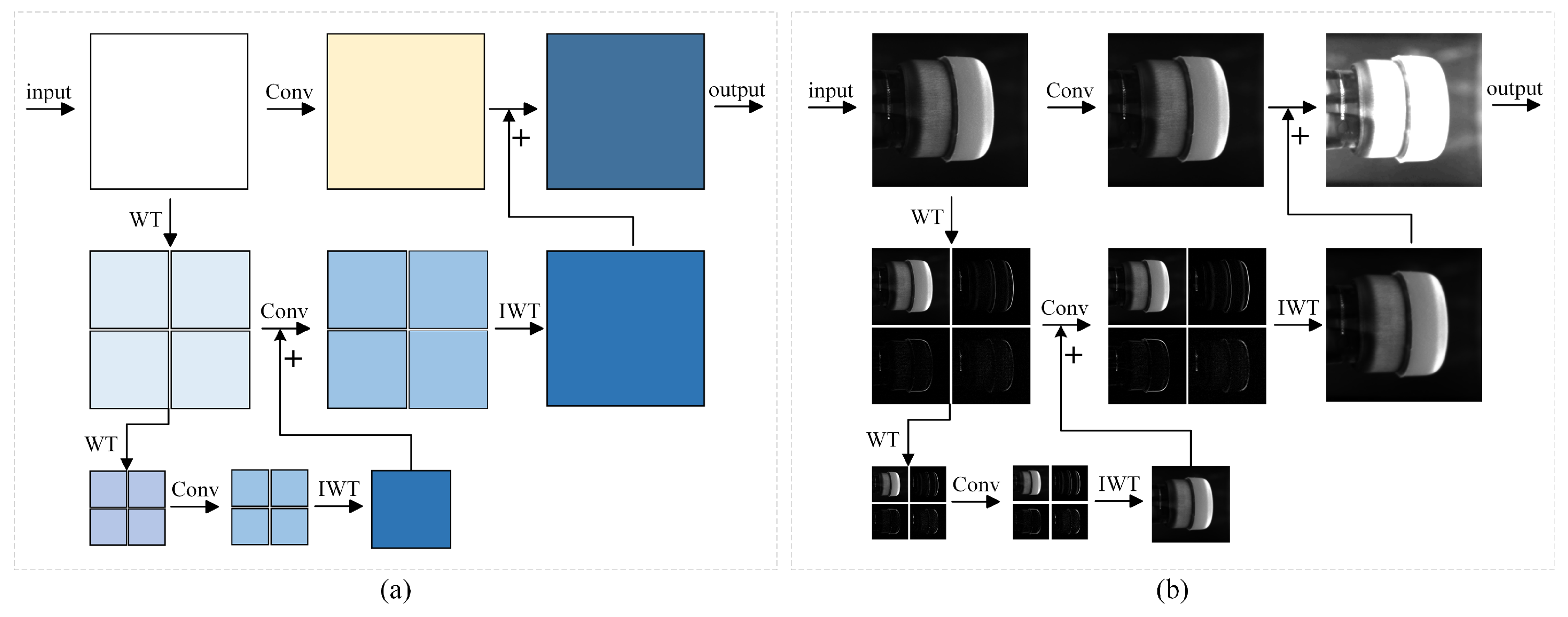

Taking a two-level wavelet decomposition as an example, the spatial-domain convolution of WTConv can be decomposed into the following sequence: 2D wavelet transform (WT) → convolution on the frequency components (Conv) → 2D inverse wavelet transform (IWT) → residual addition, as illustrated in Figure 3.

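First, the input feature map $X$ is subjected to a two-dimensional wavelet transform, yielding four distinct frequency sub-bands, as given in Equation (1). The subscripts L and H denote the low- and high-frequency components along the corresponding dimensions, while the superscript (1) indicates the first wavelet convolution operation applied to the input $X$:

$$X_{LL}^{(1)},\ X_{LH}^{(1)},\ X_{HL}^{(1)},\ X_{HH}^{(1)} = \mathrm{WT}(X) \tag{1}$$

Then, a convolution with a 3 × 3 kernel is applied to each frequency sub-band, as given in Equation (2):

$$Y_{LL}^{(1)},\ Y_{LH}^{(1)},\ Y_{HL}^{(1)},\ Y_{HH}^{(1)} = \mathrm{Conv}\left(W^{(1)},\ \left(X_{LL}^{(1)}, X_{LH}^{(1)}, X_{HL}^{(1)}, X_{HH}^{(1)}\right)\right) \tag{2}$$

To further enlarge the receptive field, the component that is low-frequency along both the row and column dimensions, $X_{LL}^{(1)}$, is taken as the input for a second wavelet transform, producing $X_{LL}^{(2)}, X_{LH}^{(2)}, X_{HL}^{(2)}, X_{HH}^{(2)}$, which are convolved in the same manner to give $Y_{LL}^{(2)}, Y_{LH}^{(2)}, Y_{HL}^{(2)}, Y_{HH}^{(2)}$. An inverse wavelet transform (IWT) is then applied to yield $Z^{(2)} = \mathrm{IWT}\left(Y_{LL}^{(2)}, Y_{LH}^{(2)}, Y_{HL}^{(2)}, Y_{HH}^{(2)}\right)$. Leveraging the linearity of IWT, the aggregation can be performed recursively in a bottom-up manner, as shown in Equation (3):

$$Z^{(1)} = \mathrm{IWT}\left(Y_{LL}^{(1)} + Z^{(2)},\ Y_{LH}^{(1)},\ Y_{HL}^{(1)},\ Y_{HH}^{(1)}\right) \tag{3}$$

The component $Z^{(1)}$ is treated as a residual term and added to the output of a standard convolution on the feature map $X$, yielding the final output of WTConv in Equation (4):

$$Y = \mathrm{Conv}\left(W^{(0)}, X\right) + Z^{(1)} \tag{4}$$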
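The decomposition and bottom-up aggregation described by Equations (1) to (4) can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes the orthonormal Haar wavelet, works on a single-channel 2D list instead of a batched tensor, and takes the 3 × 3 sub-band convolutions and the base convolution as caller-supplied functions (`band_conv`, `base_conv`, and all other names here are hypothetical). In the actual module these would be learnable depthwise kernels applied per channel.

```python
from typing import Callable, List, Tuple

Matrix = List[List[float]]
Bands = Tuple[Matrix, Matrix, Matrix, Matrix]  # (LL, LH, HL, HH)


def haar_wt(x: Matrix) -> Bands:
    """One-level orthonormal 2D Haar transform; height and width must be even."""
    assert len(x) % 2 == 0 and len(x[0]) % 2 == 0, "even height and width required"
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, len(x), 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, len(x[0]), 2):
            a, b = x[i][j], x[i][j + 1]          # top-left, top-right
            c, d = x[i + 1][j], x[i + 1][j + 1]  # bottom-left, bottom-right
            r_ll.append((a + b + c + d) / 2)     # low along height and width
            r_lh.append((a - b + c - d) / 2)     # low along height, high along width
            r_hl.append((a + b - c - d) / 2)     # high along height, low along width
            r_hh.append((a - b - c + d) / 2)     # high along both axes
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def haar_iwt(ll: Matrix, lh: Matrix, hl: Matrix, hh: Matrix) -> Matrix:
    """Exact inverse of haar_wt (the transform is orthonormal)."""
    h, w = len(ll), len(ll[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, t, u, v = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            out[2 * i][2 * j] = (s + t + u + v) / 2
            out[2 * i][2 * j + 1] = (s - t + u - v) / 2
            out[2 * i + 1][2 * j] = (s + t - u - v) / 2
            out[2 * i + 1][2 * j + 1] = (s - t - u + v) / 2
    return out


def add_maps(p: Matrix, q: Matrix) -> Matrix:
    """Element-wise sum of two equally sized maps."""
    return [[a + b for a, b in zip(rp, rq)] for rp, rq in zip(p, q)]


def wtconv_two_level(x: Matrix,
                     band_conv: Callable[[Matrix, int], Matrix],
                     base_conv: Callable[[Matrix], Matrix]) -> Matrix:
    """Wiring of Eqs. (1)-(4); band_conv(band, level) stands in for the 3 x 3 sub-band conv."""
    x1 = haar_wt(x)                                          # Eq. (1)
    y1 = [band_conv(band, 1) for band in x1]                 # Eq. (2)
    x2 = haar_wt(x1[0])                                      # second WT on X_LL^(1)
    y2 = [band_conv(band, 2) for band in x2]                 # level-2 sub-band convs
    z2 = haar_iwt(*y2)                                       # Z^(2)
    z1 = haar_iwt(add_maps(y1[0], z2), y1[1], y1[2], y1[3])  # Eq. (3)
    return add_maps(base_conv(x), z1)                        # Eq. (4)


def zeros_like(m: Matrix) -> Matrix:
    return [[0.0] * len(row) for row in m]


x = [[float(4 * i + j) for j in range(4)] for i in range(4)]  # toy 4 x 4 map
assert haar_iwt(*haar_wt(x)) == x  # perfect reconstruction of the Haar pair
# Identity filters at level 1, zeroed filters at level 2, zero base conv
out = wtconv_two_level(x, lambda band, lvl: band if lvl == 1 else zeros_like(band), zeros_like)
assert out == x
```

With identity filters at level 1, zeroed filters at level 2, and a zero base convolution, $Z^{(2)}$ vanishes and Equation (3) reduces to IWT(WT(X)), so the output equals the input; this only checks that the wiring and the Haar pair are consistent. Training replaces these stand-ins with learned kernels, so the second level then contributes the enlarged receptive field.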

The overall workflow of WTC3K2 is summarized in Algorithm 1. It primarily involves standard convolution followed by channel splitting, 2D wavelet processing with WTConv, and depthwise convolution (DWConv), after which residual connections and standard convolution are applied to produce the final output.

| Algorithm 1 The Proposed Wavelet-C3K2 (WTC3K2) |
| --- |
| Input: Feature map X |
| Output: Optimized feature map |
| 1: Apply a convolution to the input X and perform channel splitting: |
| 2: |
| 3: |
| 4: for do |
| 5: |
| 6: |
| 7: end for |
| 8: |
| 9: for do |
| 10: |
| 11: end for |
| 12: |
| 13: return |

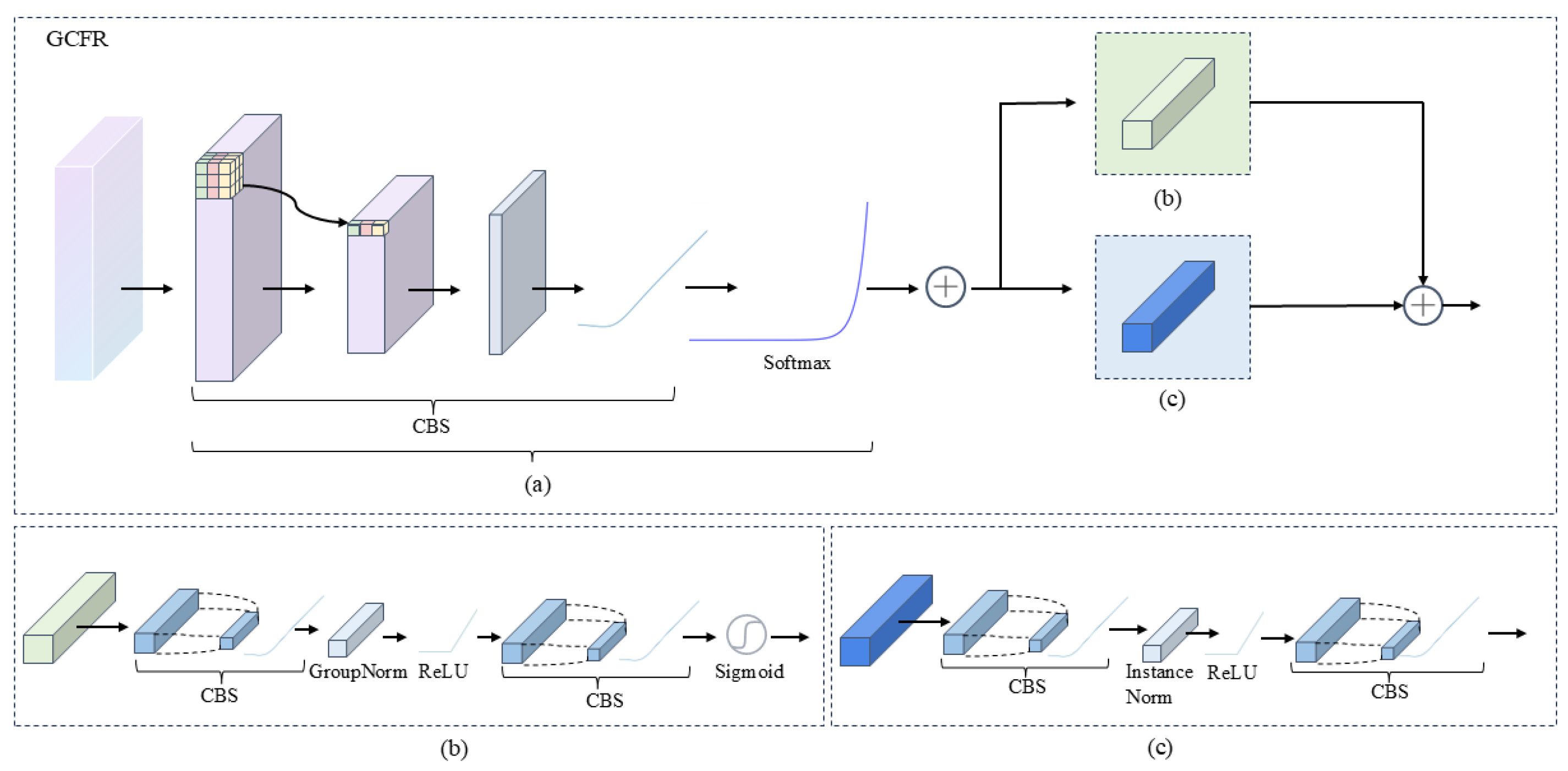

2.3. GCFR Module

The neck network enhances detection performance through multi-scale feature fusion and serves as a bridge between the backbone and head networks. However, when applied to Lyophilized Vial defect detection, the existing neck structure has two main limitations. First, insufficient contextual information: the transmitted feature maps are dominated by local features and lack global contextual information, which makes it difficult to capture long-range dependencies [21]. Second, limited multi-scale feature fusion: before entering the neck, the feature maps do not undergo sufficient multi-scale semantic enhancement, resulting in suboptimal performance in complex scenarios. To address these issues, we introduce a novel Global Context Feature Refine (GCFR) module between the backbone and neck networks, as illustrated in Figure 4. This module integrates a spatial adaptive pooling structure with a channel attention mechanism, aiming to enrich contextual information and improve multi-scale feature fusion within the network. The pseudocode workflow of the GCFR module is summarized in Algorithm 2.

| Algorithm 2 The Proposed Global Context Feature Refine (GCFR) Module |
| --- |
| Input: Feature map X |
| Output: Optimized feature map |
| Initialization: |
| Define channel_add_model: Conv1×1 → InstanceNorm → ReLU → Conv1×1 |
| Define channel_mul_model: Conv1×1 → GroupNorm → ReLU → Conv1×1 |
| Set fixed parameters: , |
| 1: Compute |
| 2: Flatten , apply softmax along the spatial dimension |
| 3: Compute |
| 4: |
| 5: Compute |
| 6: |
| 7: return |

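The spatial adaptive pooling structure is designed to address the problem of insufficient contextual information. Inspired by Reference [22], we incorporate this structure into the GCFR module. As shown in Figure 4a, it generates adaptive weight distributions over the feature map, thereby introducing global contextual information along the channel dimension. Specifically, a 1 × 1 convolution is first applied to generate the attention weights of the input feature map $X$. These weights are then normalized with a softmax function over all spatial positions to produce a weight map, which is multiplied with the original feature map to obtain the contextual features $F_{\mathrm{ctx}}$ in Equation (5):

$$F_{\mathrm{ctx}} = \sum_{j=1}^{N_p} \frac{\exp\left(W_k x_j\right)}{\sum_{m=1}^{N_p} \exp\left(W_k x_m\right)}\, x_j \tag{5}$$

where $N_p = H \times W$ is the number of spatial positions, $x_j$ is the feature vector of $X$ at position $j$, and $W_k$ denotes the weights of the 1 × 1 convolution.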
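The context-modeling step described above (1 × 1 convolution, softmax over spatial positions, weighted sum) can be sketched in plain Python. This is a minimal illustration rather than the authors' code: it assumes a single feature map already flattened to C × N values (N = H × W positions), represents the 1 × 1 convolution by a hypothetical weight list `w_k` with one output channel, and omits the convolution bias, since adding the same constant to every logit leaves the softmax unchanged.

```python
import math
from typing import List


def global_context(x: List[List[float]], w_k: List[float]) -> List[float]:
    """Softmax-weighted spatial pooling of a C x N feature map (N = H*W flattened positions)."""
    channels, positions = len(x), len(x[0])
    # 1 x 1 convolution with one output channel: one attention logit per position
    logits = [sum(w_k[c] * x[c][j] for c in range(channels)) for j in range(positions)]
    # Softmax over the spatial dimension (maximum subtracted for numerical stability)
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum over positions: one global context value per channel (C x 1 x 1)
    return [sum(weights[j] * x[c][j] for j in range(positions)) for c in range(channels)]


# Toy example: 2 channels, 4 positions (a flattened 2 x 2 map)
x = [[1.0, 2.0, 3.0, 4.0],
     [0.0, 0.0, 4.0, 4.0]]
print(global_context(x, [0.0, 0.0]))  # uniform weights: per-channel mean
print(global_context(x, [5.0, 0.0]))  # attention concentrates on the last position
```

With zero convolution weights the softmax is uniform and the context reduces to global average pooling; non-zero weights let the pooling emphasize positions with strong responses, which is what distinguishes this adaptive pooling from plain averaging.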

The channel attention mechanism is introduced to address the limitation of insufficient multi-scale feature fusion. To better enhance the contrast between target and background regions in feature maps and to enrich the semantic information of target features, the GCFR module incorporates two mechanisms: additive channel attention (Figure 4b) and multiplicative channel attention (Figure 4c). The multiplicative channel attention passes the contextual features through a series of 1 × 1 convolutions, normalization, and nonlinear activation, and then produces a channel-wise scaling factor via a sigmoid function. This factor is applied multiplicatively to the original features, amplifying or suppressing individual channels. In contrast, the additive channel attention reshapes the contextual information into a low-dimensional representation, which is then processed through a set of convolutions and normalization layers to recover a bias term matching the number of input channels. This bias is added to the features to compensate for or correct channel responses. The multiplicative branch thus adjusts the relative importance of channels (i.e., it acts as a gating mechanism), while the additive branch injects a global semantic bias signal, enriching the feature representation. The fusion coefficients of the two branches are learnable weights that allow the network to automatically balance the contributions of the additive and multiplicative modifications.

By integrating the spatial adaptive pooling structure with the two channel attention mechanisms, the GCFR module is constructed. Before the feature maps are passed into the neck network, the GCFR module enhances them with contextual information, thereby improving the representation of multi-scale features. During the multi-scale fusion process, the introduced global contextual information also compensates for the deficiencies of local features, ultimately enhancing defect detection performance.

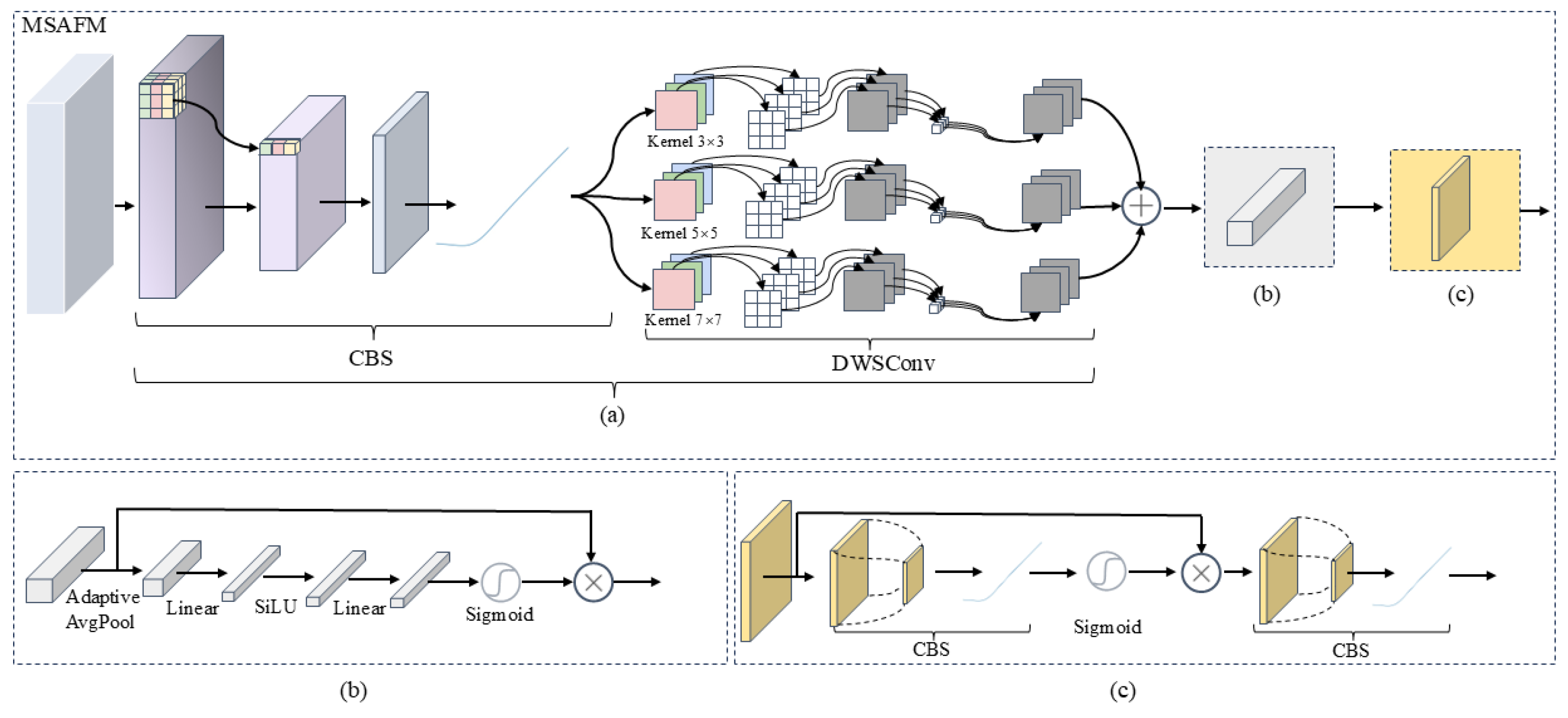

2.4. MSAFM Module

In the neck network of YOLOv11, the C3K2 module remains the primary convolutional block. However, as discussed in Section 2.2, C3K2 relies on fixed-size convolutional kernels, which constrain its receptive field. This limitation reduces performance in Lyophilized Vial defect detection scenarios, where backgrounds are complex and defect scales vary significantly. Hence, the C3K2 module in the neck network also needs to be improved. Unlike the backbone network, which primarily focuses on feature extraction, the neck network emphasizes feature fusion. To this end, we propose the Multi-Scale Attention Fusion Module (MSAFM) as a replacement for the C3K2 module in the neck. As illustrated in Figure 5, MSAFM integrates (a) multi-branch convolutions, (b) the SENet module based on channel attention, and (c) the SAM module based on spatial attention. Together, these components enhance feature representation and fusion capabilities. The pseudocode workflow of the MSAFM structure is presented in Algorithm 3.

Multi-branch convolution module: The input feature map $X$ is first processed by three parallel depthwise separable convolution (DWSConv) branches with kernel sizes of 3 × 3, 5 × 5, and 7 × 7, respectively. The outputs of these three branches can be expressed as Equation (6):

$$F_1 = \mathrm{DWSConv}_{3\times3}(X),\quad F_2 = \mathrm{DWSConv}_{5\times5}(X),\quad F_3 = \mathrm{DWSConv}_{7\times7}(X) \tag{6}$$

Subsequently, to ensure that small, medium, and large receptive-field features are all present, thereby accommodating the detection requirements of defects at different scales, the feature maps from the three branches are combined through element-wise addition to obtain the fused feature map $F$:

$$F = F_1 + F_2 + F_3$$
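To give a sense of why the three branches use depthwise separable rather than standard convolutions, the following sketch counts weights for both options. The channel width is a hypothetical value, input and output widths are assumed equal, each branch is assumed to have its own 1 × 1 pointwise convolution, and biases and normalization layers are ignored; the actual MSAFM configuration may differ.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def dws_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


C = 256  # hypothetical channel width, used for both input and output
kernels = (3, 5, 7)
dws_total = sum(dws_conv_params(k, C, C) for k in kernels)
std_total = sum(standard_conv_params(k, C, C) for k in kernels)
print(f"three DWSConv branches: {dws_total:,} weights")
print(f"three standard conv branches: {std_total:,} weights")
```

For C = 256 this gives 217,856 weights for the three DWSConv branches versus 5,439,488 for standard convolutions, roughly a 25-fold reduction. Most of the DWSConv weights sit in the pointwise layers, so enlarging the depthwise kernel from 3 × 3 to 7 × 7 adds comparatively little.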

| Algorithm 3 Multi-Scale Attention Fusion Module (MSAFM) |
| --- |
| Input: Feature map X |
| Output: Optimized feature map |
| Initialization: |
| Define three depthwise separable convolutions with kernel sizes 3 × 3, 5 × 5, and 7 × 7 |
| Define channel attention module (SENet) |
| Define spatial attention module (SAM) |
| 1: |
| 2: |
| 3: |
| 4: |
| 5: |
| 6: |
| 7: |
| 8: return Shortcut |

To further enhance the discriminative capability of the fused features, an attention mechanism is introduced to dynamically adjust feature weights along both the channel and spatial dimensions. First, SENet [23] is employed to perform channel-wise enhancement on the fused features, strengthening key feature channels while suppressing redundant information. The corresponding computation is expressed as Equation (7):

$$F_c = F \otimes \sigma\left(W_2\, \delta\left(W_1\, \mathrm{GAP}(F)\right)\right) \tag{7}$$

where $F$ is the fused feature map, $W_1$ and $W_2$ denote the weight matrices, $\mathrm{GAP}(\cdot)$ represents global average pooling, $\sigma$ is the sigmoid activation function, $\delta$ is the intermediate activation function, and $\otimes$ denotes channel-wise multiplication. Subsequently, the SAM module is employed to highlight defect regions and suppress background noise, enabling the network to focus more effectively on spatial locations that are critical for defect detection. The corresponding computation is formulated as Equation (8):

$$F_s = \mathrm{SAM}(F_c) \tag{8}$$
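The channel-wise enhancement of Equation (7) can be sketched in plain Python as a squeeze-and-excitation step. This is an illustrative sketch, not the authors' code: it assumes a single feature map flattened to C × N values, takes the intermediate activation δ to be ReLU (as in the original SENet), omits biases, and uses hypothetical toy weights; `se_channel_attention` is a hypothetical name.

```python
import math
from typing import List

Matrix = List[List[float]]


def se_channel_attention(f: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Squeeze-and-excitation on a C x N feature map (N = H*W flattened positions).

    w1: (C/r) x C reduction weights; w2: C x (C/r) expansion weights (biases omitted).
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(row) / len(row) for row in f]
    # Excitation, first layer: W1 followed by delta (assumed ReLU)
    hidden = [max(0.0, sum(w * v for w, v in zip(r, z))) for r in w1]
    # Second layer: W2 followed by the sigmoid, giving one gate in (0, 1) per channel
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(r, hidden)))) for r in w2]
    # Rescale every position of each channel by that channel's gate
    return [[g * v for v in row] for g, row in zip(gates, f)]


f = [[2.0, 4.0], [1.0, 3.0]]  # C = 2 channels, N = 2 positions (toy values)
w1 = [[1.0, 1.0]]             # reduction ratio r = 2, so one hidden unit
w2 = [[10.0], [-10.0]]        # strongly open channel 0, close channel 1
out = se_channel_attention(f, w1, w2)
print(out)
```

Because each gate multiplies an entire channel, the step reweights channels globally but cannot say where in the image a defect lies; that spatial selection is the role the text assigns to the subsequent SAM module.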

By integrating multi-scale convolutional structures with dual attention mechanisms (SENet and SAM), the MSAFM module is capable of simultaneously capturing multi-scale information, strengthening the representation of key features, and focusing on defect regions. As a result, it significantly improves detection accuracy in complex Lyophilized Vial defect detection scenarios.

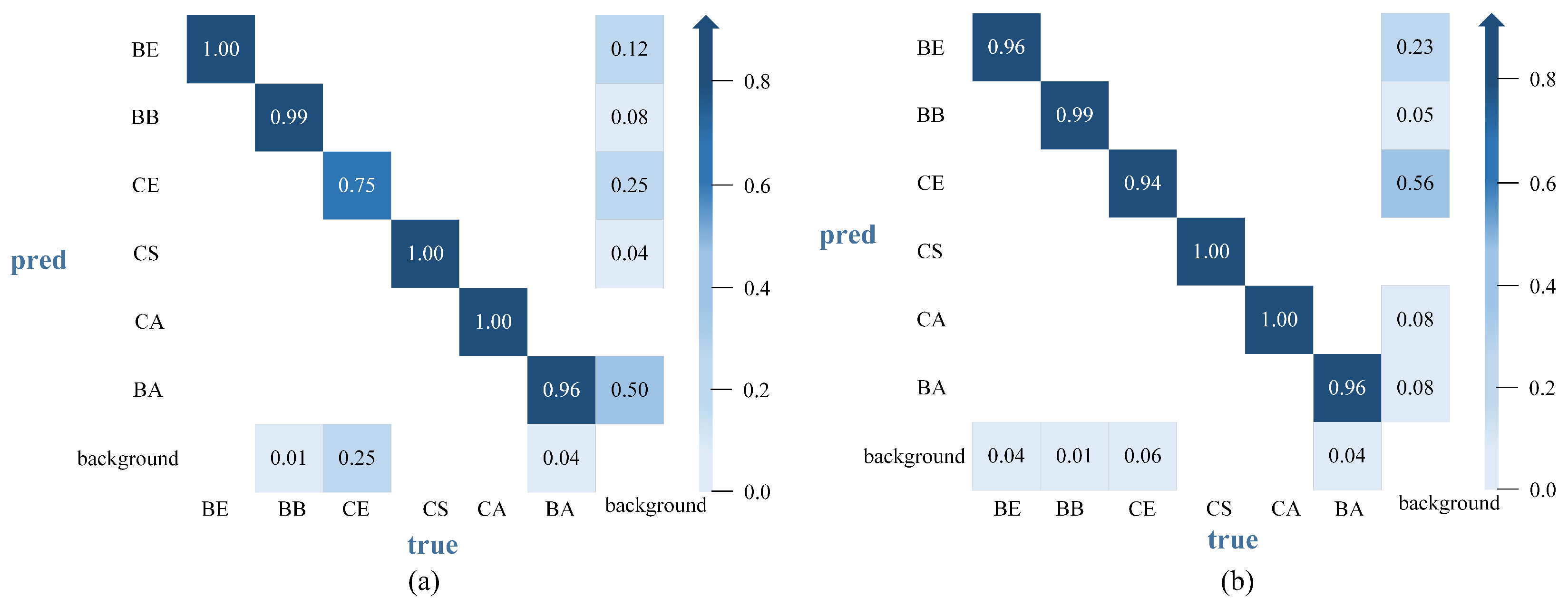

4. Discussion

This section traces the experimental results back to the model design to explain the reasons for performance improvements of each module across different defect types. First, the WTC3K2 module divides the input features into multiple frequency bands through wavelet decomposition, allowing the network to independently enhance responses to fine defects such as scratches and small particles in the high-frequency subbands. Experimental results demonstrate that this module significantly improves the recall rate for various defects, validating the effectiveness of wavelet-based frequency feature extraction in enhancing defect signal-to-noise ratios. Second, the GCFR module incorporates spatially adaptive pooling along with channel-wise additive and multiplicative fusion mechanisms, enabling the capture of global semantic information across regions. This improves detection performance for morphologically diverse defects and effectively enhances spatial consistency and robustness through the integration of global context. Finally, the MSAFM module employs multi-scale attention fusion to adaptively assign saliency weights across features of different scales, thereby suppressing background interference and strengthening the model’s unified response to defects of varying sizes, reducing the miss rate. In summary, these three modules are designed to address high-frequency microstructure modeling, long-range context perception, and scale-invariant feature representation, respectively, complementing the limitations of traditional spatial convolutions in feature representation from multiple perspectives.

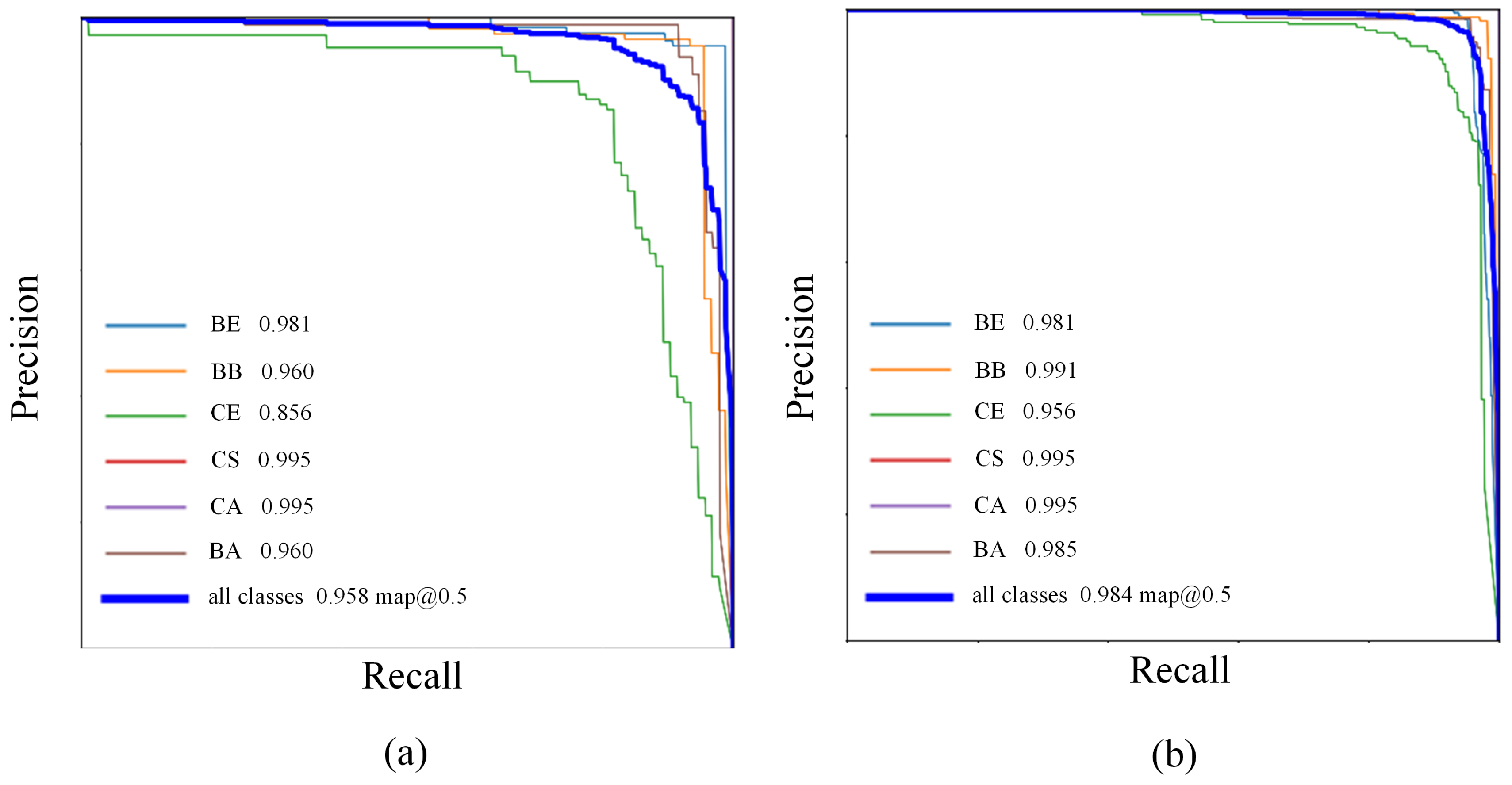

From the perspective of pharmaceutical quality inspection, key performance indicators for detection models include Precision, Recall, and mAP@50. Under the dataset and testing conditions of this study, SAF-YOLO achieved a 3.6% increase in Recall for crack-type defects, indicating that the model can effectively reduce the misclassification of Lyophilized Vials with cracks or potential contamination risks as acceptable products, thereby significantly lowering the miss rate for defective vials. This improvement is crucial for ensuring sterility and packaging integrity in pharmaceutical production. Furthermore, considering the real-time detection requirements of industrial production lines, Section 3.4.1 (Table 5) provides a quantitative analysis of the model’s parameters, GFLOPs, and inference latency. The results show that SAF-YOLO substantially improves detection sensitivity while maintaining a low computational load and stable real-time frame rates. Its lightweight design enables deployment on high-speed production lines without compromising throughput. In summary, SAF-YOLO not only improves detection accuracy but also demonstrates efficiency and deployability, highlighting its industrial applicability and offering a feasible and effective solution for automated quality control in Lyophilized Vial production lines.
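The link between Recall and the miss rate mentioned above can be made concrete with a small sketch. It computes Precision, Recall, and miss rate (1 − Recall) from detection counts, together with the IoU test that decides whether a prediction counts as a match at the mAP@50 threshold (IoU ≥ 0.5). The counts and boxes are hypothetical and are not results from this study; a full mAP@50 computation additionally ranks detections by confidence and averages precision over recall levels and classes, which is omitted here.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and miss rate (1 - recall) from detection counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return precision, recall, 1.0 - recall


# At mAP@50 a prediction is a true positive only if IoU with a ground-truth box >= 0.5
print(iou((0, 0, 2, 2), (1, 0, 3, 2)) >= 0.5)  # overlap of 1/3, so not a match
print(precision_recall(tp=90, fp=10, fn=10))   # hypothetical counts
```

Since the miss rate is simply 1 − Recall, any gain in Recall for a defect class translates directly into fewer defective vials passed as acceptable, which is why Recall is the most safety-relevant of the three indicators in this setting.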

Future research could focus on the following directions:

Employing lightweight architectures and model compression strategies to improve real-time performance and deployment efficiency in industrial settings.

Utilizing self-supervised or few-shot learning techniques to address challenges associated with high data acquisition costs and limited annotation availability.

During the model training phase, a federated learning or distributed training framework can be adopted, allowing each production line or inspection station to retain local data while only sharing model updates (such as gradients or weights) instead of raw images. This approach enables localized data processing and protects sensitive inspection data [28].