Enhanced Knowledge Graph Completion Based on Structure-Aware and Semantic Fusion Driven by Large Language Models

Abstract

1. Introduction

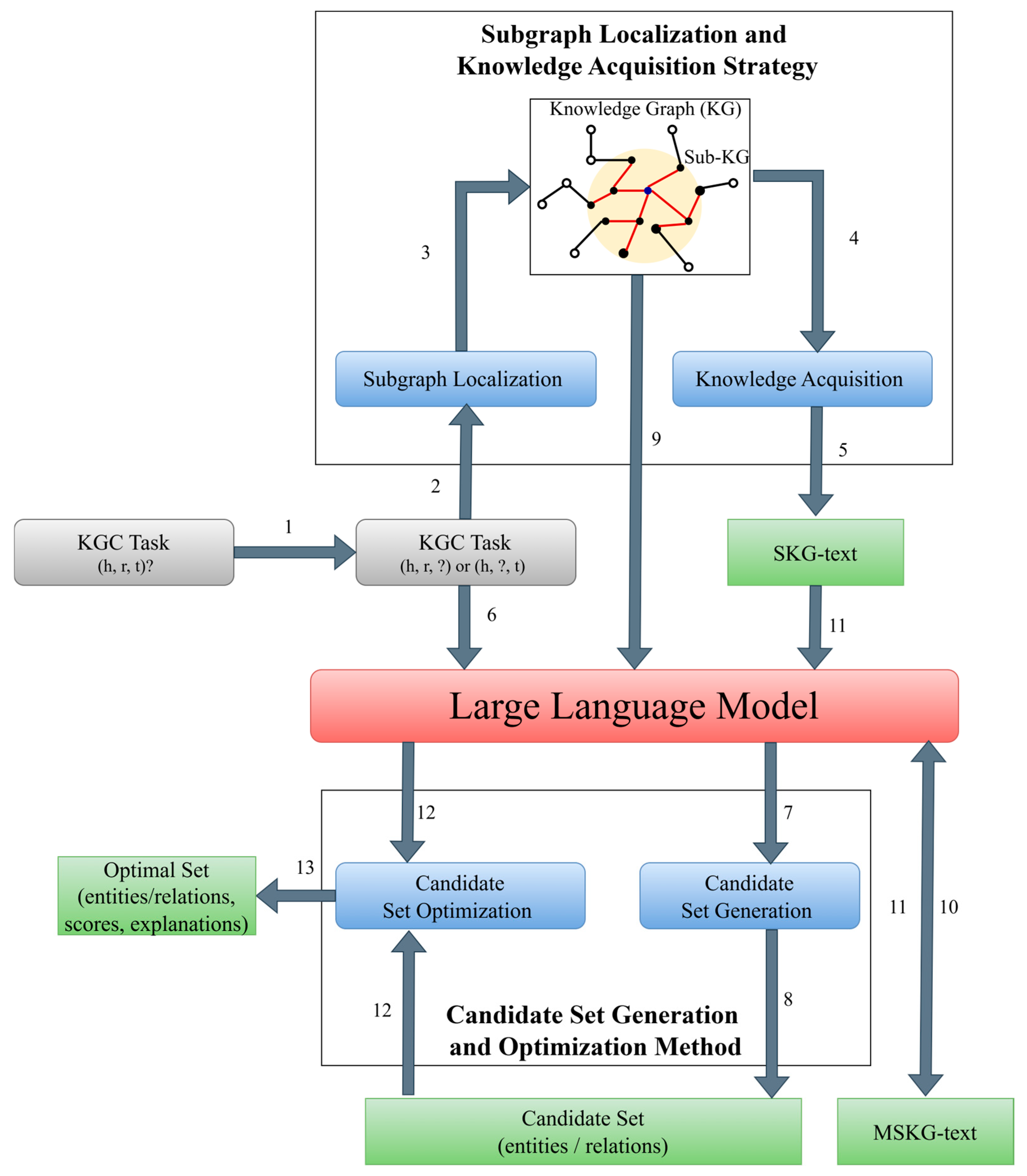

- To integrate the structural knowledge of KGs with the knowledge generated by LLMs, we propose E-KGC, an LLM-driven KGC framework based on structure-aware and semantic fusion, which can improve both the interpretability and the accuracy of inference.

- To make full use of the complex structural and textual information in KGs, we propose a subgraph localization and knowledge acquisition strategy that narrows the KG search space and reduces LLM “hallucinations”, improving the efficiency of the LLM’s semantic understanding and content generation.

- To supply the incomplete triple with additional semantic knowledge, we propose an LLM-driven candidate set generation and optimization method that obtains high-quality candidate entities or relations with broader coverage.

2. Related Work

2.1. Knowledge Graph Completion

2.2. LLMs-Based KGC

3. Methodology

3.1. Problem Definition

3.2. Framework

| Algorithm 1. The E-KGC framework process |
|---|
| Input: KGC task (h, r, t)? or (h, r, ?) or (h, ?, t); KG; LLM M |
| Output: Optimal entity set or optimal relation set |
| 1: if the KGC task is (h, r, ?) or (h, ?, t) then |
| 2: Construct Sub-KG from the KG according to the target entity |
| 3: Obtain SKG-text from Sub-KG |
| 4: Get the candidate entity set (from h and r) or the candidate relation set (from h and t) using M |
| 5: Generate MSKG-text from all entities and relations of Sub-KG using M |
| 6: Obtain the optimal entity set or optimal relation set from SKG-text and MSKG-text using M |
| 7: if the KGC task is (h, r, t)? then |
| 8: Convert (h, r, t)? to (h, r, ?) |
| 9: Repeat lines 2–6 |
| 10: if t is in the optimal entity set then |
| 11: (h, r, t)? ← “Yes” |
| 12: else |
| 13: (h, r, t)? ← “No” |
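The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: `run_e_kgc`, `toy_complete`, and the toy answers are hypothetical, a missing slot is encoded as `None`, and the `complete` callable stands in for lines 2–6 (subgraph construction plus LLM reasoning).

```python
from typing import Callable, List, Optional, Tuple, Union

# A query triple; None marks the slot to predict, e.g. ("Paris", "capital_of", None).
Query = Tuple[Optional[str], Optional[str], Optional[str]]
# Hypothetical stand-in for lines 2-6: returns the optimal (ranked) set for a query.
Completer = Callable[[Query], List[str]]


def run_e_kgc(task: Query, complete: Completer) -> Union[List[str], str]:
    """Dispatch a KGC task following the control flow of Algorithm 1."""
    h, r, t = task
    if t is None or r is None:
        # (h, r, ?) or (h, ?, t): return the optimal entity or relation set.
        return complete(task)
    # (h, r, t)?: convert to (h, r, ?), rerun lines 2-6, then test membership.
    optimal = complete((h, r, None))
    return "Yes" if t in optimal else "No"


# Toy completer backed by a lookup table instead of a real LLM (illustrative only).
TOY_ANSWERS = {
    ("Paris", "capital_of", None): ["France", "Belgium"],
    ("Paris", None, "France"): ["capital_of", "located_in"],
}


def toy_complete(query: Query) -> List[str]:
    return TOY_ANSWERS.get(query, [])


print(run_e_kgc(("Paris", "capital_of", None), toy_complete))
print(run_e_kgc(("Paris", "capital_of", "France"), toy_complete))
print(run_e_kgc(("Paris", "capital_of", "Germany"), toy_complete))
```

Because the classification branch only checks membership in the optimal set, its answer depends on the size K of that set: a larger K admits more candidates and therefore more “Yes” answers.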

3.3. Subgraph Localization and Knowledge Acquisition Strategy

| Algorithm 2. Subgraph localization and knowledge acquisition |
|---|
| Input: Target entity e; KG; maximum hop distance max_hops |
| Output: Text of the entities and relations in the subgraph, SKG-text |
| 1: E_visited ← E_visited ∪ {e} |
| 2: E_sub ← E_sub ∪ {e} |
| 3: Q.enqueue((e, 0)) // Add the target entity and its hop distance to queue Q |
| 4: while Q is not empty do |
| 5: (e_curr, h_curr) ← Q.dequeue() // Take an entity and its hop distance from the queue |
| 6: if h_curr < max_hops then |
| 7: for each triple (e_curr, r, e_tail) ∈ KG do // Outgoing relations |
| 8: if e_tail ∉ E_visited then |
| 9: E_visited ← E_visited ∪ {e_tail} |
| 10: E_sub ← E_sub ∪ {e_tail} |
| 11: Q.enqueue((e_tail, h_curr + 1)) |
| 12: end if |
| 13: end for |
| 14: for each triple (e_head, r, e_curr) ∈ KG do // Incoming relations |
| 15: if e_head ∉ E_visited then |
| 16: E_visited ← E_visited ∪ {e_head} |
| 17: E_sub ← E_sub ∪ {e_head} |
| 18: Q.enqueue((e_head, h_curr + 1)) |
| 19: end if |
| 20: end for |
| 21: end if |
| 22: end while |
| 23: for each entity e′ ∈ E_sub do |
| 24: for each triple (e_h, r, e_t) ∈ KG where e_h = e′ or e_t = e′ do |
| 25: if e_h ∈ E_sub and e_t ∈ E_sub then |
| 26: T_sub ← T_sub ∪ {(e_h, r, e_t)} |
| 27: end if |
| 28: end for |
| 29: end for |
| 30: Sub-KG = (E_sub, T_sub) |
| 31: for each triple (h′, r′, t′) ∈ T_sub do |
| 32: h′_text ← D(h′) |
| 33: r′_text ← D(r′) |
| 34: t′_text ← D(t′) |
| 35: D′ ← D′ ∪ {(h′_text, r′_text, t′_text, h′_text ⊕ r′_text ⊕ t′_text)} // ⊕ denotes text concatenation |
| 36: end for |
| 37: for each entity e″ ∈ E_sub do |
| 38: base_text ← Connection(e″, A) // Connect multiple attributes |
| 39: d ← e″.getAttribute(“description”) |
| 40: D′(e″) ← D′(e″) ∪ {base_text ⊕ d} |
| 41: end for |
| 42: SKG-text ← D′ ⊕ D′(e″) |
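Algorithm 2 is essentially a breadth-first search that ignores edge direction, followed by taking the induced subgraph and verbalizing it. A minimal Python sketch under simplifying assumptions: `E_visited` and `E_sub` are merged into one set (the algorithm updates them identically), the description mapping D is replaced by a hypothetical `names` dictionary, attribute connection is reduced to a single description string, and the toy KG is illustrative only.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


def localize_subgraph(target: str, triples: List[Triple],
                      max_hops: int) -> Tuple[Set[str], List[Triple]]:
    """Lines 1-30: BFS over both edge directions, then the induced triples."""
    # Index neighbours once so each BFS step does not rescan the whole KG.
    outgoing: Dict[str, List[str]] = {}
    incoming: Dict[str, List[str]] = {}
    for h, _, t in triples:
        outgoing.setdefault(h, []).append(t)
        incoming.setdefault(t, []).append(h)

    visited: Set[str] = {target}  # plays the role of both E_visited and E_sub
    queue = deque([(target, 0)])
    while queue:
        entity, hops = queue.popleft()
        if hops >= max_hops:
            continue  # do not expand entities at the hop limit
        # Outgoing neighbours (lines 7-13), then incoming ones (lines 14-20).
        for neighbour in outgoing.get(entity, []) + incoming.get(entity, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append((neighbour, hops + 1))

    # Lines 23-29: keep every triple whose head and tail were both reached.
    sub_triples = [tr for tr in triples if tr[0] in visited and tr[2] in visited]
    return visited, sub_triples


def build_skg_text(entities: Set[str], sub_triples: List[Triple],
                   names: Dict[str, str], descriptions: Dict[str, str]) -> str:
    """Lines 31-42, simplified: one sentence per triple plus entity descriptions."""
    # `names` is a hypothetical stand-in for the mapping D from IDs to text.
    triple_text = [f"{names.get(h, h)} {names.get(r, r)} {names.get(t, t)}."
                   for h, r, t in sub_triples]
    entity_text = [f"{names.get(e, e)}: {descriptions[e]}"
                   for e in sorted(entities) if e in descriptions]
    return "\n".join(triple_text + entity_text)


kg = [
    ("paris", "capital_of", "france"),
    ("france", "member_of", "eu"),
    ("eu", "headquartered_in", "brussels"),
    ("lyon", "located_in", "france"),
]
names = {"paris": "Paris", "france": "France", "eu": "the European Union",
         "lyon": "Lyon", "capital_of": "is the capital of",
         "member_of": "is a member of", "located_in": "is located in"}
entities, sub = localize_subgraph("paris", kg, max_hops=2)
print(build_skg_text(entities, sub, names, {"paris": "city in northern France"}))
```

With `max_hops=2`, Brussels is excluded: the EU is reached exactly at the hop limit and is therefore not expanded, which is how the hop bound keeps the subgraph, and hence the LLM prompt, small.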

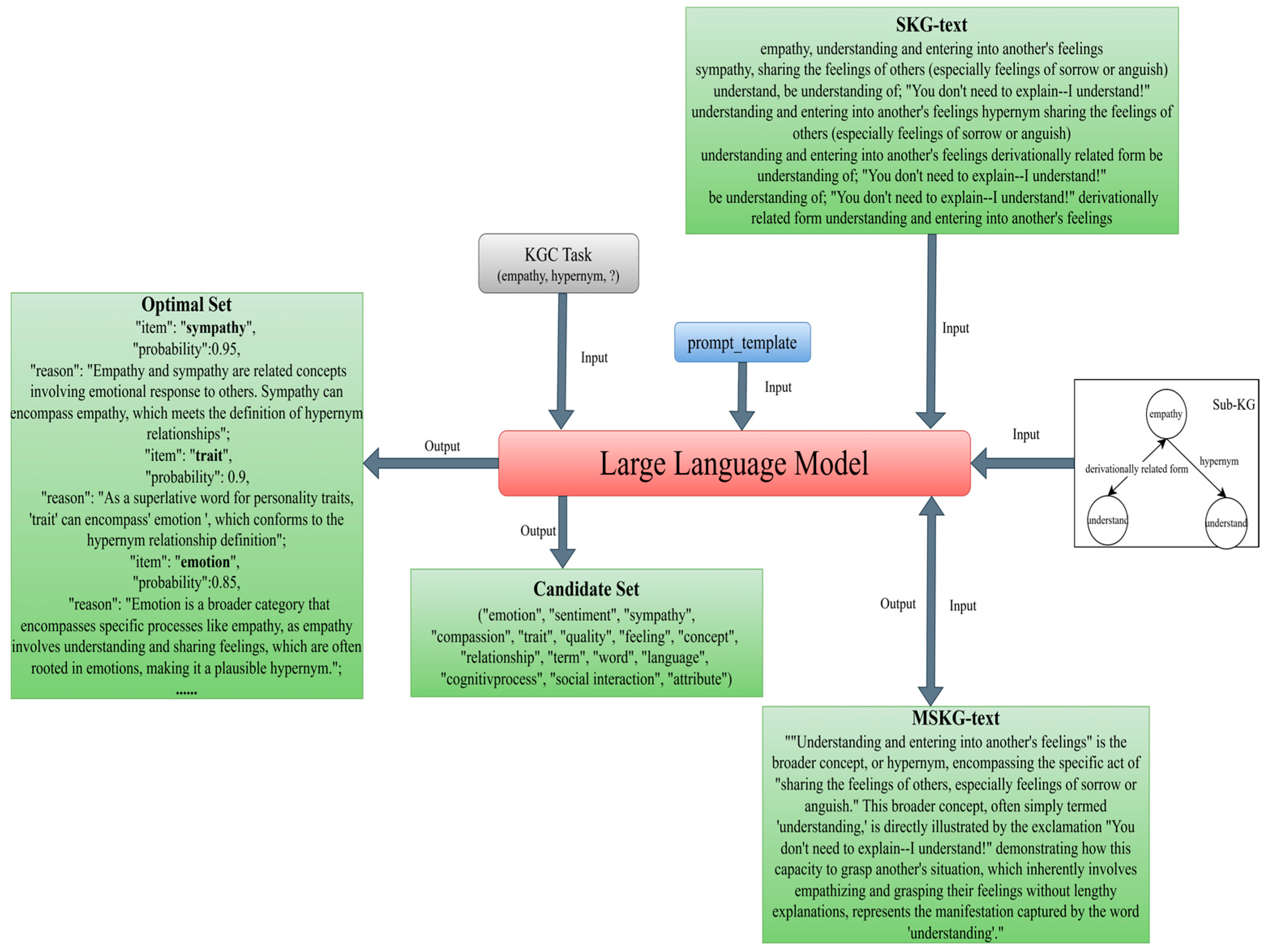

3.4. LLM-Guided Candidate Set Generation and Optimization Method

| Algorithm 3. LLM-guided candidate set generation and optimization |
|---|
| Input: Text of the entities and relations in the subgraph, SKG-text; target entity e_t and relation r_t; LLM M; Sub-KG; maximum number of selected items K; number of candidate items N |
| Output: Optimal entity set or optimal relation set |
| 1: prompt_template_1 ← “Based on items {e_t} and {r_t} predict the candidate item set for another item in the triple. The requirements are: (a) the number of candidate items is {N}, (b) the candidate items are sorted in descending order of prediction probability, (c) output in set format.” |
| 2: Candidate entity set or candidate relation set ← M(prompt_template_1) |
| 3: for each triple (h, r, t) ∈ T_sub do |
| 4: prompt_template_2 ← “Please generate a coherent and natural text description based on {(h, r, t)}.” |
| 5: Sentence ← Sentence ∪ {M(prompt_template_2)} |
| 6: end for |
| 7: prompt_template_3 ← “Please connect each item in {Sentence} and optimize it into natural language text according to the following requirements. Requirement: (a) maintain semantic coherence, (b) eliminate redundant expressions, (c) ensure entity referential consistency.” |
| 8: MSKG-text ← M(prompt_template_3) |
| 9: for each item i in the candidate set do |
| 10: prompt_template_4 ← “Based on {SKG-text} and {MSKG-text} analyze the possibility of {i} as a predicted item and display the reasons for the prediction. Requirement: output format (item, prediction probability, reason).” |
| 11: Set ← Set ∪ {M(prompt_template_4)} |
| 12: end for |
| 13: Optimal entity set or optimal relation set ← sort Set in descending order of probability and select the top K items |
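Lines 9–13 of Algorithm 3 (scoring each candidate, then keeping the top K) can be sketched as below. This is a hedged illustration, not the authors' code: it assumes candidate generation (line 2) and MSKG-text (lines 3–8) are already done, treats the LLM as a hypothetical `prompt -> reply` callable, and assumes replies follow the requested `(item, probability, reason)` format. Real LLM replies may deviate from that format and would need validation.

```python
from typing import Callable, List, Tuple

LLM = Callable[[str], str]  # hypothetical interface: prompt text in, reply text out
Scored = Tuple[str, float, str]  # (item, prediction probability, reason)


def scoring_prompt(skg_text: str, mskg_text: str, item: str) -> str:
    # Wording follows prompt_template_4 of Algorithm 3.
    return (f"Based on {skg_text} and {mskg_text} analyze the possibility of "
            f"{item} as a predicted item and display the reasons for the "
            f"prediction. Requirement: output format (item, prediction "
            f"probability, reason).")


def parse_scored(reply: str) -> Scored:
    """Parse a reply assumed to look like "(item, 0.83, free-text reason)"."""
    reply = reply.strip()
    if reply.startswith("(") and reply.endswith(")"):
        reply = reply[1:-1]  # drop exactly one pair of outer parentheses
    item, prob, reason = reply.split(",", 2)  # the reason may itself contain commas
    return item.strip(), float(prob), reason.strip()


def select_top_k(candidates: List[str], skg_text: str, mskg_text: str,
                 llm: LLM, k: int) -> List[Scored]:
    """Lines 9-13: score every candidate with the LLM, sort, keep the top K."""
    scored = [parse_scored(llm(scoring_prompt(skg_text, mskg_text, c)))
              for c in candidates]
    scored.sort(key=lambda s: s[1], reverse=True)
    return scored[:k]


# Toy LLM that returns fixed probabilities (illustrative only).
TOY_PROBS = {"France": 0.9, "Belgium": 0.2, "Germany": 0.1}


def toy_llm(prompt: str) -> str:
    for item, p in TOY_PROBS.items():
        if f"possibility of {item} as" in prompt:
            return f"({item}, {p}, toy reason)"
    return "(unknown, 0.0, no match)"


print(select_top_k(["Germany", "France", "Belgium"],
                   "Paris is the capital of France.",
                   "Paris is the capital city of France.", toy_llm, k=2))
```

Scoring each candidate in a separate call, as line 10 does, keeps every prompt short and yields one reason per item, at the cost of N LLM calls per query.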

4. Experiment

4.1. Experiment Settings

4.1.1. Datasets

4.1.2. Baselines

4.1.3. Metrics
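The result tables report Hits@k (H@1, H@3, H@10) and mean reciprocal rank (MRR). Both are computed from the 1-based rank of the correct answer for each test query. The sketch below uses the standard definitions; whether the ranks are filtered (other known true answers removed before ranking) is not stated in this section and is left as an assumption of the caller.

```python
from typing import Dict, Iterable, List


def ranking_metrics(ranks: List[int], ks: Iterable[int] = (1, 3, 10)) -> Dict[str, float]:
    """MRR and Hits@k (H@k) from the 1-based rank of the correct answer per query."""
    if not ranks or min(ranks) < 1:
        raise ValueError("ranks must be a non-empty list of positive integers")
    n = len(ranks)
    metrics = {"MRR": sum(1.0 / r for r in ranks) / n}
    for k in ks:
        # Fraction of queries whose correct answer is ranked within the top k.
        metrics[f"H@{k}"] = sum(1 for r in ranks if r <= k) / n
    return metrics


print(ranking_metrics([1, 2, 4, 20]))
```

For the four ranks above, MRR = (1 + 1/2 + 1/4 + 1/20)/4 = 0.45, while H@1 = 0.25, H@3 = 0.5 and H@10 = 0.75. MRR rewards every improvement in rank, whereas H@k only counts whether the answer falls inside the cutoff.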

4.1.4. Experiment Details

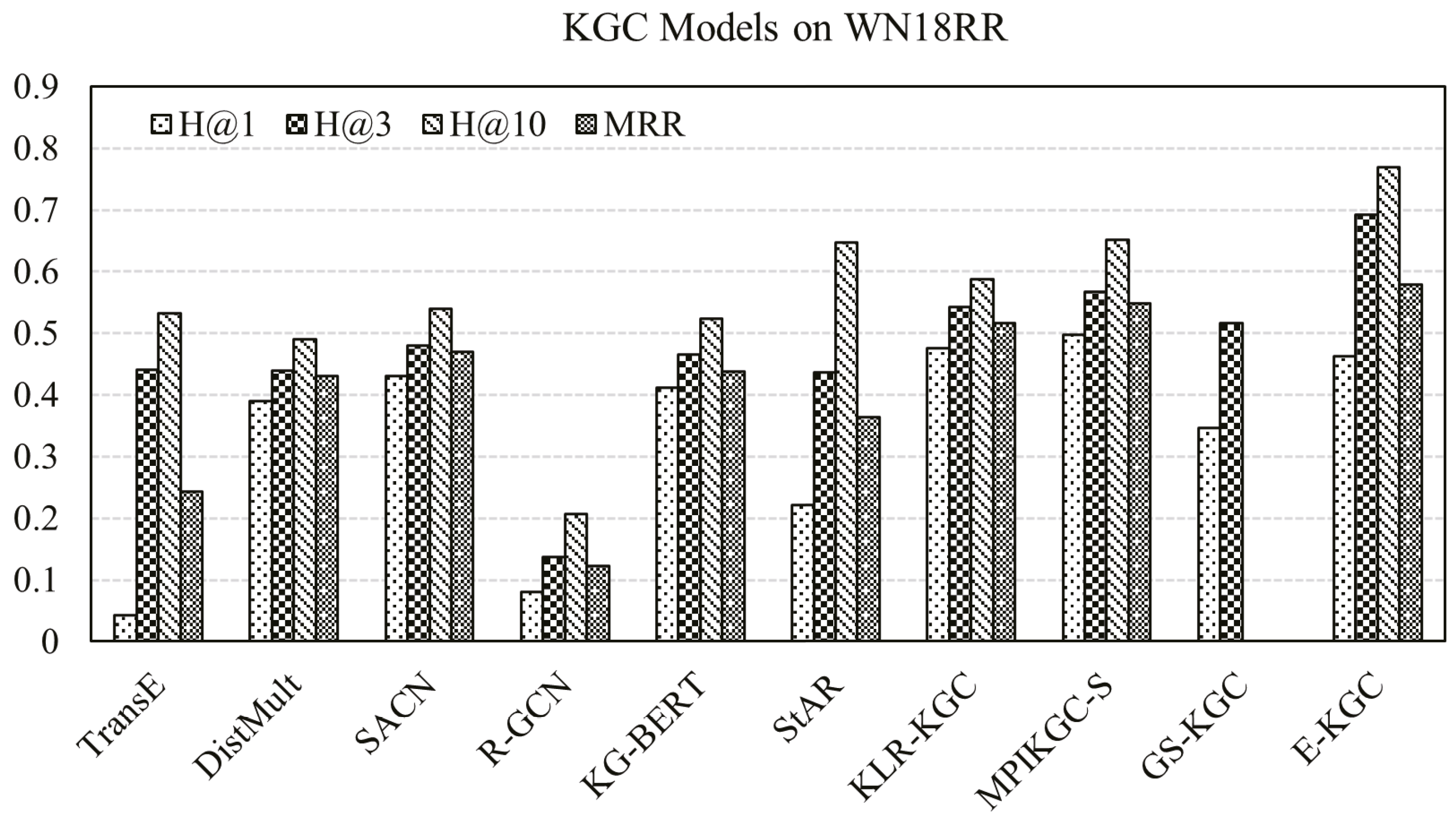

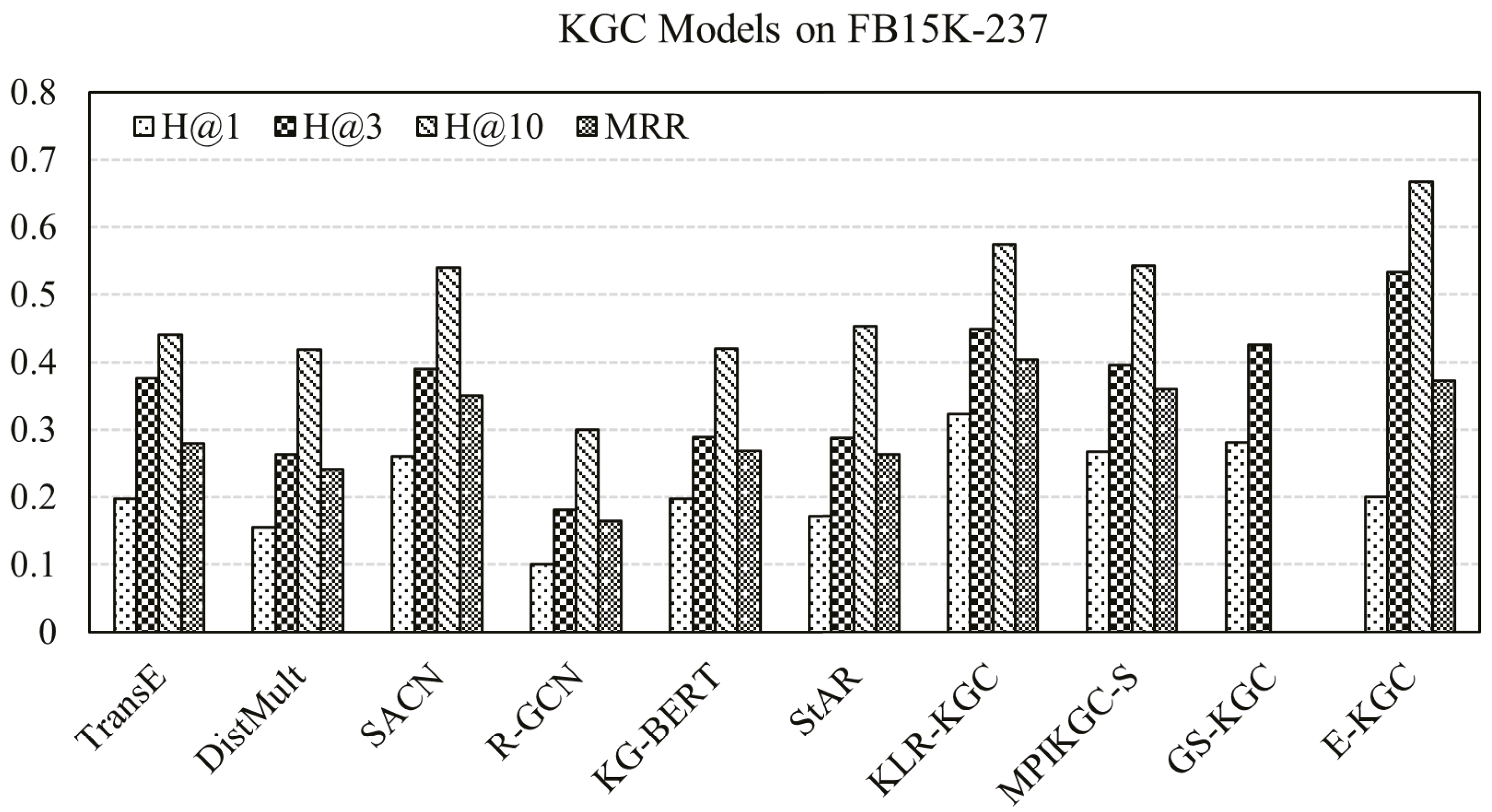

4.2. Experiment Results

4.2.1. E-KGC Performance

4.2.2. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kong, Y.; Liu, X.; Zhao, Z.; Zhang, D.; Duan, J. Bolt defect classification algorithm based on knowledge graph and feature fusion. Energy Rep. 2022, 8, 856–863.

2. West, R.; Gabrilovich, E.; Murphy, K.; Sun, S.; Gupta, R.; Lin, D. Knowledge base completion via search-based question answering. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Republic of Korea, 7–11 April 2014; pp. 515–526.

3. Guan, S.-P.; Jin, X.-L. Knowledge Reasoning Over Knowledge Graph: A Survey. J. Softw. 2018, 29, 2966–2994.

4. Lao, N.; Cohen, W.W. Relational retrieval using a combination of path-constrained random walks. Mach. Learn. 2010, 81, 53–67.

5. Wei, Y.; Luo, J.; Xie, H. KGRL: An OWL2 RL Reasoning System for Large Scale Knowledge Graph. In Proceedings of the 12th International Conference on Semantics, Knowledge and Grids (SKG), Beijing, China, 15–17 August 2016; pp. 83–89.

6. Kou, F.; Du, J.; Yang, C.; Shi, Y.; Liang, M.; Xue, Z.; Li, H. A multi-feature probabilistic graphical model for social network semantic search. Neurocomputing 2019, 336, 67–78.

7. Zhao, F.; Yan, C.; Jin, H.; He, L. BayesKGR: Bayesian Few-Shot Learning for Knowledge Graph Reasoning. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–21.

8. Yao, L.; Mao, C.; Luo, Y. KG-BERT: BERT for Knowledge Graph Completion. arXiv 2019, arXiv:1909.03193.

9. Wang, L.; Zhao, W.; Wei, Z.; Liu, J. SimKGC: Simple Contrastive Knowledge Graph Completion with Pre-trained Language Models. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, ACL, Dublin, Ireland, 22–27 May 2022; Volume 1, pp. 4281–4294.

10. Ji, S.; Liu, L.; Xi, J.; Zhang, X.; Li, X. KLR-KGC: Knowledge-Guided LLM Reasoning for Knowledge Graph Completion. Electronics 2024, 13, 5037.

11. Zhang, L.; Wang, W.; Jiang, Z.; Wang, Z. Exploring Knowledge from Knowledge Graphs and Large Language Models for Link Prediction. In Proceedings of the 2024 IEEE International Conference on Knowledge Graph (ICKG), Abu Dhabi, United Arab Emirates, 11–12 December 2024; pp. 485–491.

12. Zhang, Y.; Chen, Z.; Guo, L.; Xu, Y.; Zhang, W.; Chen, H. Making Large Language Models Perform Better in Knowledge Graph Completion. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 233–242.

13. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.

14. Bordes, A.; Usunier, N.; García-Durán, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Proceedings of the Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 2787–2795.

15. Yang, B.; Yih, W.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015.

16. Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex Embeddings for Simple Link Prediction. In Proceedings of the 33rd International Conference on Machine Learning, ICML, New York, NY, USA, 19–24 June 2016; JMLR Workshop and Conference Proceedings, Volume 48, pp. 2071–2080.

17. Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D Knowledge Graph Embeddings. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI-18), New Orleans, LA, USA, 2–7 February 2018; pp. 1462–2061.

18. Shang, C.; Tang, Y.; Huang, J.; Bi, J.; He, X.; Zhou, B. End-to-End Structure-Aware Convolutional Networks for Knowledge Base Completion. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019; pp. 3060–3067.

19. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

20. Kim, B.; Hong, T.; Ko, Y.; Seo, J. Multi-Task Learning for Knowledge Graph Completion with Pre-trained Language Models. In Proceedings of the 28th International Conference on Computational Linguistics, COLING 2020, Barcelona, Spain, 8–13 December 2020; pp. 1737–1743.

21. Zhang, J.; Shen, B.; Zhang, Y. Learning hierarchy-aware complex knowledge graph embeddings for link prediction. Neural Comput. Appl. 2024, 36, 13155–13169.

22. Petroni, F.; Rocktäschel, T.; Lewis, P.; Bakhtin, A.; Wu, Y.; Miller, A.H.; Riedel, S. Language models as knowledge bases? arXiv 2019, arXiv:1909.01066.

23. Li, W.; Ge, J.; Feng, W.; Zhang, L.; Li, L.; Wu, B. SIKGC: Structural Information Prompt Based Knowledge Graph Completion with Large Language Models. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 1116–1123.

24. Guan, X.; Liu, Y.; Lin, H.; Lu, Y.; He, B.; Han, X.; Sun, L. Mitigating large language model hallucinations via autonomous knowledge graph-based retrofitting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 24 March 2024; Volume 38, pp. 18126–18134.

25. Yao, L.; Peng, J.Z.; Mao, C.S.; Luo, Y. Exploring Large Language Models for Knowledge Graph Completion. In Proceedings of the 2025 IEEE International Conference on Acoustics, Speech, and Signal Processing, Hyderabad, India, 6–11 April 2025.

26. Yang, R.; Zhu, J.; Man, J.; Liu, H.; Fang, L.; Zhou, Y. GS-KGC: A generative subgraph-based framework for knowledge graph completion with large language models. Inf. Fusion 2025, 117, 102868.

27. Toutanova, K.; Chen, D.; Pantel, P.; Poon, H.; Choudhury, P.; Gamon, M. Representing Text for Joint Embedding of Text and Knowledge Bases. In Proceedings of the Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1499–1509.

28. Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; van den Berg, R.; Titov, I.; Welling, M. Modeling Relational Data with Graph Convolutional Networks. Semant. Web 2018, 10843, 593–607.

29. Xu, D.; Zhang, Z.; Lin, Z.; Wu, X.; Zhu, Z.; Xu, T.; Zhao, X.; Zheng, Y.; Chen, E. Multi-perspective Improvement of Knowledge Graph Completion with Large Language Models. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 11956–11968.

30. Akrami, F.; Saeef, M.S.; Zhang, Q.; Hu, W.; Li, C. Realistic Re-evaluation of Knowledge Graph Completion Methods: An Experimental Study. In Proceedings of the 2020 ACM SIGMOD International Conference on Management of Data, New York, NY, USA, 14–19 June 2020; pp. 1995–2010.

| Dataset | Entities | Relations | Train | Valid | Test |
|---|---|---|---|---|---|
| WN18RR | 40,943 | 11 | 86,835 | 3034 | 3134 |
| FB15k-237 | 14,541 | 237 | 272,115 | 17,535 | 20,466 |

| Baseline | Type | Description |
|---|---|---|
| TransE [14] | KGE-based | Represents each relation as a translation from the head entity to the tail entity in vector space. |
| DistMult [15] | KGE-based | Models the interaction between entities and relations with a bilinear model. |
| SACN [18] | GNN-based | Aggregates, for each node, local information from its neighboring nodes in the graph. |
| R-GCN [28] | GNN-based | Considers the neighborhood of each entity uniformly through layer-wise propagation rules, which makes it well suited to directed graphs. |
| KG-BERT [8,20] | PLM-based | Shows that PLMs can be fine-tuned for triple classification by encoding entities and relations as text. |
| StAR [21] | PLM-based | Integrates structural constraints into PLMs via entity markers, knowledge-aware attention, or auxiliary graph encoders to bridge the gap between semantics and structure. |
| KLR-KGC [10] | LLM-based | Directs the LLM to filter and prioritize candidate entities, constraining its output to reduce omissions and erroneous answers. |
| MPIKGC-S [29] | LLM-based | Compensates for missing contextualized knowledge and improves KGC by querying LLMs from multiple perspectives. |
| GS-KGC [26] | LLM-based | Leverages subgraph information for contextual reasoning and adopts a question-answering (QA) strategy to perform KGC tasks. |
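The two KGE-based baselines differ mainly in their scoring functions. The sketch below writes them out in their commonly stated forms: TransE [14] scores a triple by how close h + r lands to t, and DistMult [15] uses a bilinear form with a diagonal relation matrix. The vectors are hand-picked toy values, not trained embeddings, and the choice of the L1 norm as default is an assumption.

```python
from typing import Sequence


def transe_score(h: Sequence[float], r: Sequence[float], t: Sequence[float],
                 p: int = 1) -> float:
    """TransE: negative L_p distance between h + r and t (higher = more plausible)."""
    return -sum(abs(hi + ri - ti) ** p for hi, ri, ti in zip(h, r, t)) ** (1.0 / p)


def distmult_score(h: Sequence[float], r: Sequence[float], t: Sequence[float]) -> float:
    """DistMult: bilinear score h^T diag(r) t = sum_i h_i * r_i * t_i."""
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))


h, r = [1.0, 0.0], [0.0, 1.0]
print(transe_score(h, r, [1.0, 1.0]))  # h + r equals t exactly, the best possible score
print(transe_score(h, r, [0.0, 0.0]))  # L1 distance of 2 between h + r and t
print(distmult_score([1.0, 2.0], [0.5, 1.0], [2.0, 1.0]))
```

Because the DistMult product is unchanged when h and t are swapped, it assigns (h, r, t) and (t, r, h) the same score and so cannot model asymmetric relations; the translation in TransE is direction-sensitive.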

| Type | Model | WN18RR H@1 | WN18RR H@3 | WN18RR H@10 | WN18RR MRR | FB15k-237 H@1 | FB15k-237 H@3 | FB15k-237 H@10 | FB15k-237 MRR |
|---|---|---|---|---|---|---|---|---|---|
| KGE-based | TransE | 0.043 | 0.441 | 0.532 | 0.243 | 0.198 | 0.376 | 0.441 | 0.279 |
| | DistMult | 0.390 | 0.440 | 0.490 | 0.430 | 0.155 | 0.263 | 0.419 | 0.241 |
| GNN-based | SACN | 0.430 | 0.480 | 0.540 | 0.470 | 0.260 | 0.390 | 0.540 | 0.350 |
| | R-GCN | 0.080 | 0.137 | 0.207 | 0.123 | 0.100 | 0.181 | 0.300 | 0.164 |
| PLM-based | KG-BERT | 0.412 | 0.465 | 0.524 | 0.438 | 0.197 | 0.289 | 0.420 | 0.268 |
| | StAR | 0.222 | 0.436 | 0.647 | 0.364 | 0.171 | 0.287 | 0.452 | 0.263 |
| LLM-based | KLR-KGC | 0.476 | 0.542 | 0.587 | 0.516 | 0.323 | 0.449 | 0.574 | 0.404 |
| | MPIKGC-S | 0.497 | 0.568 | 0.652 | 0.549 | 0.267 | 0.395 | 0.543 | 0.360 |
| | GS-KGC | 0.346 | 0.516 | - | - | 0.280 | 0.426 | - | - |
| | E-KGC | 0.462 | 0.692 | 0.769 | 0.579 | 0.200 | 0.533 | 0.667 | 0.372 |

| Model | WN18RR H@1 | WN18RR H@3 | WN18RR H@10 | WN18RR MRR | FB15k-237 H@1 | FB15k-237 H@3 | FB15k-237 H@10 | FB15k-237 MRR |
|---|---|---|---|---|---|---|---|---|
| E-KGC | 0.462 | 0.692 | 0.769 | 0.579 | 0.200 | 0.533 | 0.667 | 0.372 |
| w/o SKG | 0.325 | 0.452 | 0.673 | 0.525 | 0.154 | 0.493 | 0.572 | 0.314 |
| w/o LLM | 0.203 | 0.416 | 0.476 | 0.478 | 0.123 | 0.487 | 0.523 | 0.325 |
| w/o prompt | 0.109 | 0.352 | 0.426 | 0.464 | 0.087 | 0.411 | 0.443 | 0.265 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, J.; Asmuni, H.; Wang, K.; Li, Y. Enhanced Knowledge Graph Completion Based on Structure-Aware and Semantic Fusion Driven by Large Language Models. Electronics 2025, 14, 4521. https://doi.org/10.3390/electronics14224521
