LCB-Net: Long-Range Context and Box Distribution Network for Small Object Detection

Abstract

1. Introduction

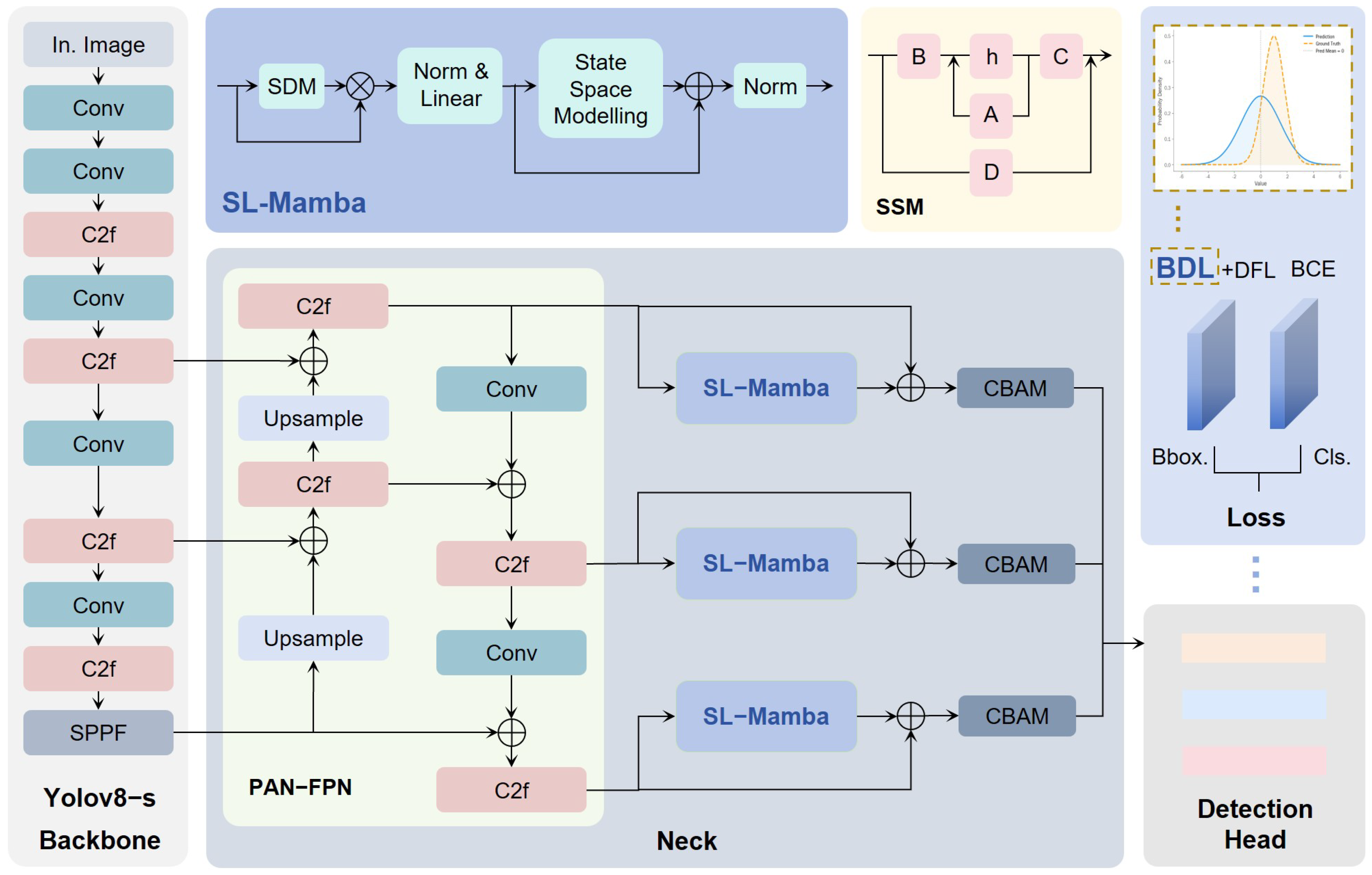

- To address the limited ability of small object detection (SOD) models to capture long-range contextual relationships, we propose a novel SOD framework that integrates long-range context modeling with bounding box distribution learning.

- We introduce the Saliency-Guided Long-Range Mamba (SL-Mamba) module, which efficiently establishes long-range dependencies between pixels and thereby overcomes the limited receptive fields of traditional CNNs and local attention mechanisms. In addition, a residual fusion mechanism combines local and global information so that the two complement each other.

- We model bounding boxes as multivariate Gaussian distributions and construct a corresponding bounding box distribution loss (BDL). This approach mitigates localization ambiguity and uncertainty, improving detection accuracy.

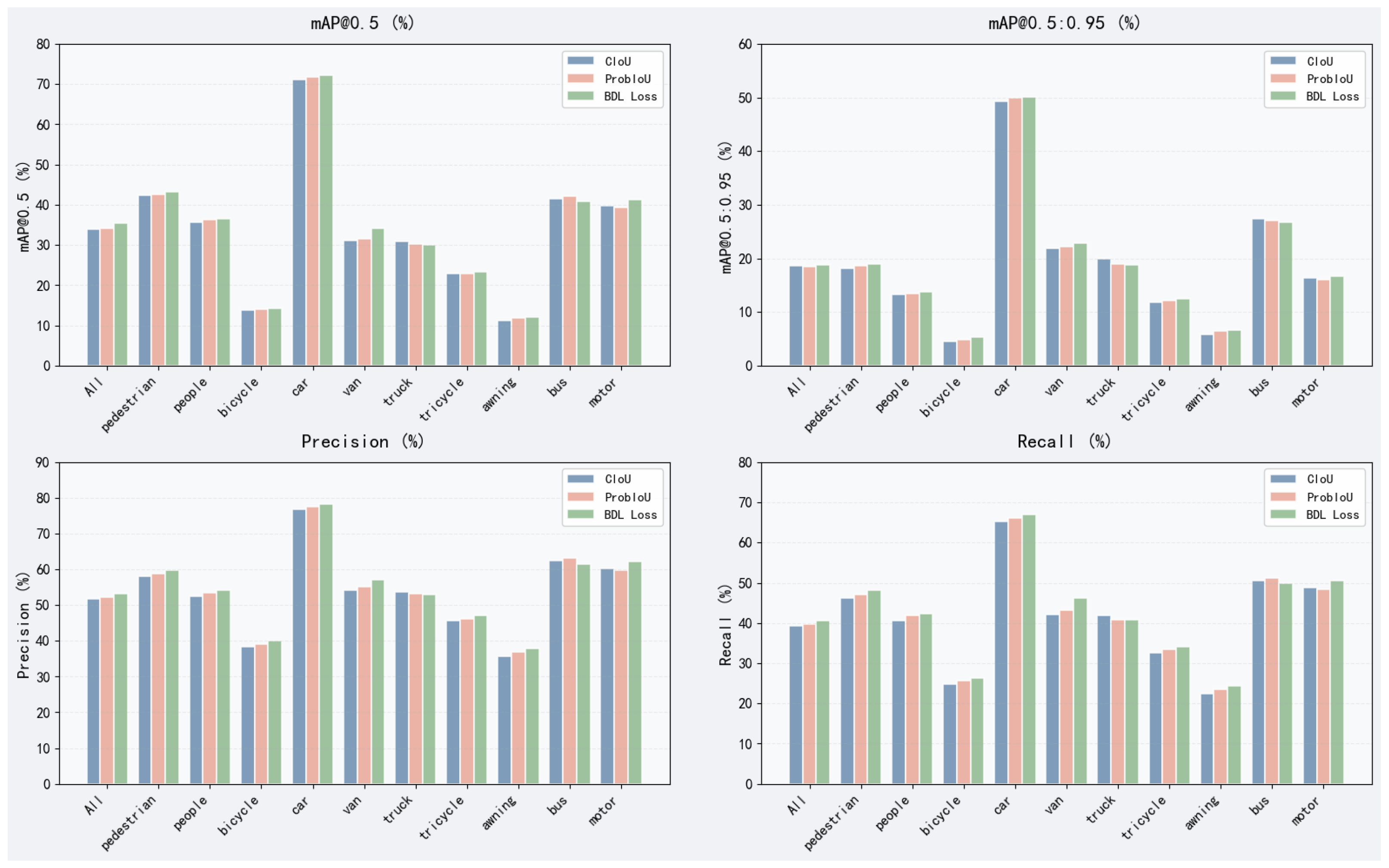

- Extensive experiments on small object detection datasets validate the effectiveness of SL-Mamba. On the VisDrone dataset, our method improves mAP@0.5:0.95 by 4.3 percentage points over the YOLOv8s baseline (from 23.0 to 27.3). Furthermore, the proposed BDL achieves better localization performance than both CIoU and ProbIoU on small object and general object detection datasets.
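The SL-Mamba contribution relies on a state space model (SSM) that relates every pixel to all preceding pixels in a single linear-time pass. The NumPy sketch below illustrates this property with a Mamba-style selective scan [29] over a row-major flattened feature map. It is an illustration under stated assumptions, not the SL-Mamba module: saliency guidance, multi-directional scanning and the residual fusion branch are omitted, and the projection matrices `W_delta`, `W_B`, `W_C` and the diagonal state matrix `A` are hypothetical random or hand-set values.

```python
import numpy as np


def selective_scan(x, A, W_delta, W_B, W_C):
    """Sequential reference version of a Mamba-style selective scan.

    x:       (L, D) input sequence, e.g. an H x W feature map flattened row by row
    A:       (D, N) diagonal state matrix per channel (negative entries => decay)
    W_delta: (D, D) hypothetical projection giving the input-dependent step size
    W_B:     (D, N) hypothetical projection giving the input-dependent B_t
    W_C:     (D, N) hypothetical projection giving the input-dependent C_t
    Returns an (L, D) output sequence.
    """
    L, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))                         # one N-dim hidden state per channel
    y = np.empty_like(x)
    for t in range(L):
        xt = x[t]                                # (D,)
        delta = np.log1p(np.exp(xt @ W_delta))   # softplus keeps the step size positive
        B = xt @ W_B                             # (N,) input-dependent ("selective") B_t
        C = xt @ W_C                             # (N,) input-dependent C_t
        A_bar = np.exp(delta[:, None] * A)       # zero-order-hold discretization of A
        B_bar = delta[:, None] * B[None, :]      # simplified (Euler) discretization of B
        h = A_bar * h + B_bar * xt[:, None]      # h_t = A_bar h_{t-1} + B_bar x_t
        y[t] = h @ C                             # y_t = C_t h_t
    return y


rng = np.random.default_rng(0)
H, W, D, N = 16, 16, 8, 4
feat = rng.standard_normal((H * W, D))           # flattened H x W feature map
A = -0.01 * np.ones((D, N))                      # hand-set slow decay (hypothetical)
W_delta = 0.1 * rng.standard_normal((D, D))
W_B = 0.1 * rng.standard_normal((D, N))
W_C = 0.1 * rng.standard_normal((D, N))

y = selective_scan(feat, A, W_delta, W_B, W_C)

feat_first = feat.copy()
feat_first[0] += 1.0                             # perturb only the top-left pixel
y_first = selective_scan(feat_first, A, W_delta, W_B, W_C)
print("change at bottom-right output:", np.abs(y_first[-1] - y[-1]).max())
```

Perturbing only the first (top-left) pixel changes the output at the last (bottom-right) position, whereas a stack of 3 × 3 convolutions would need many layers to reach that far. The loop costs O(L·D·N) for L pixels; Mamba evaluates the same recurrence with a hardware-aware parallel scan rather than a Python loop.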

2. Related Work

2.1. Feature Fusion-Based Small Object Detection

2.2. Context-Aware Small Object Detection

2.3. Image Enhancement-Based Small Object Detection

2.4. Region Proposal-Based Small Object Detection

3. Method

3.1. Overview

3.2. Preliminaries

3.2.1. YOLOv8 Architecture

3.2.2. State Space Models and Mamba

3.2.3. Label Distribution Learning

3.3. Saliency-Guided Long-Range Mamba

3.4. Bounding Box Distribution Loss Function

4. Results

4.1. Datasets and Evaluation Metrics

4.2. Implementation Details

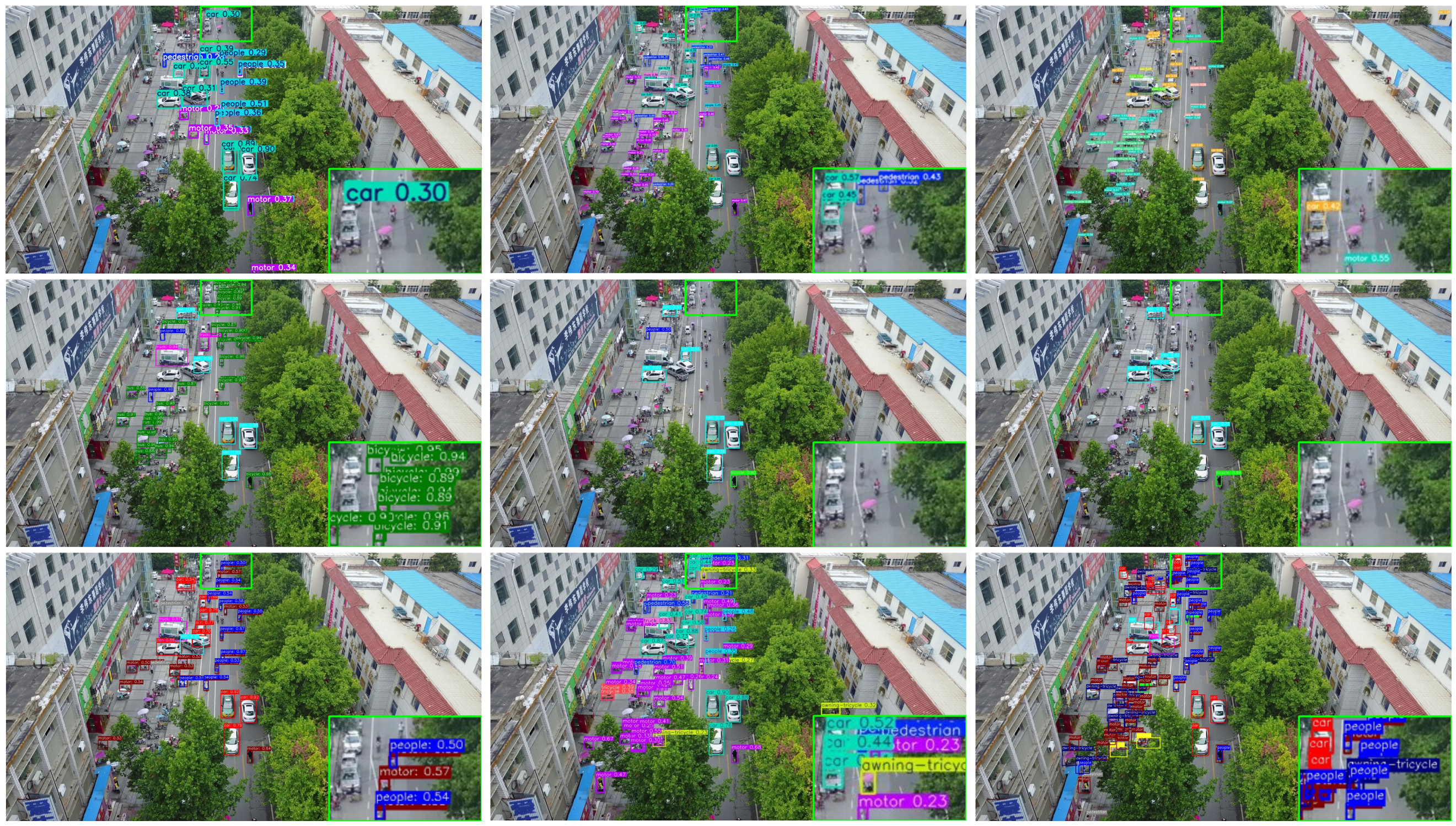

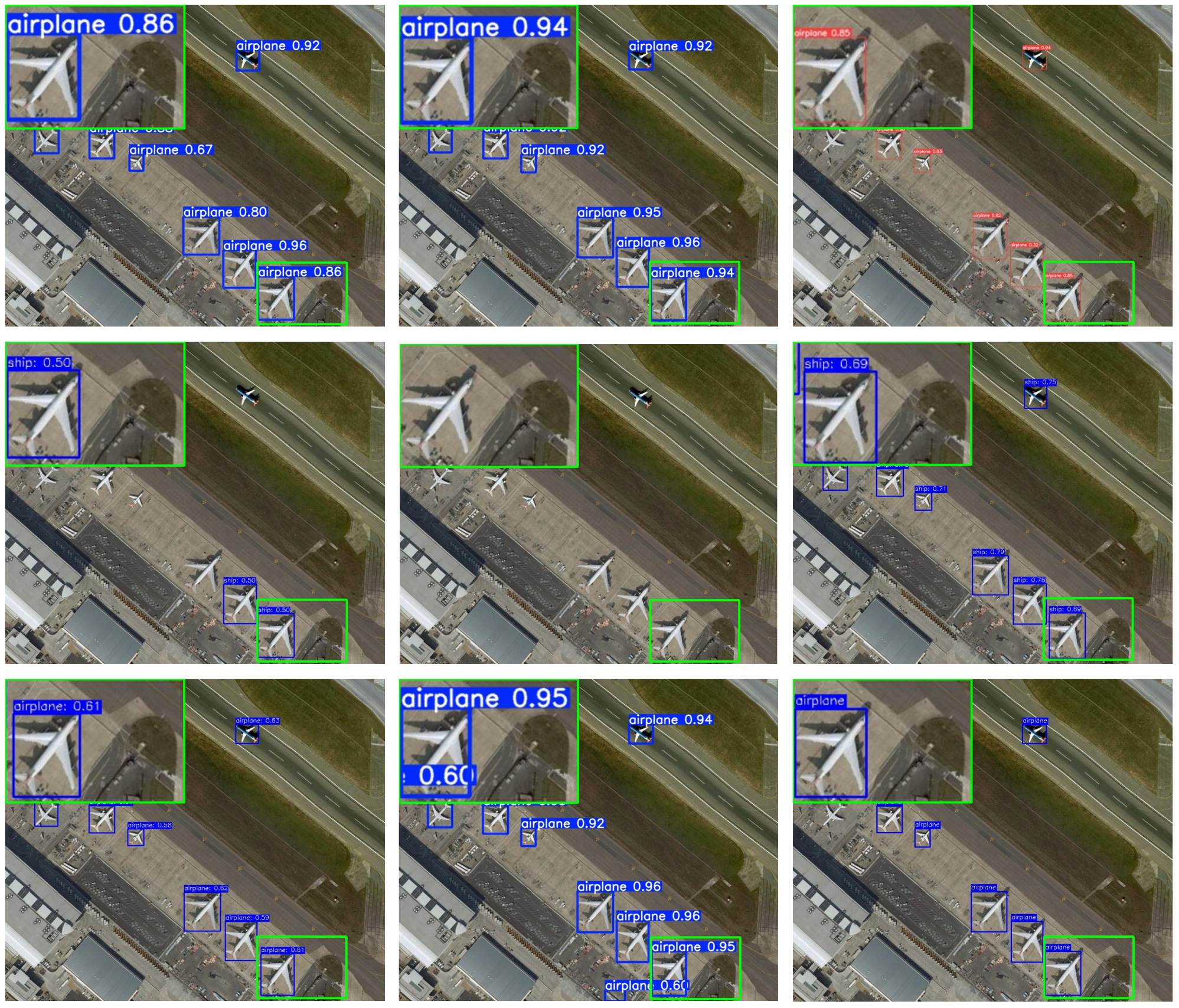

4.3. Comparison with State-of-the-Art Methods

4.4. Ablation Study

- Addressing vanishing gradients at IoU = 0. The standard IoU loss has a fundamental limitation when handling non-overlapping bounding boxes: once the IoU reaches zero, the loss saturates at a constant value and no longer reflects how far apart the two boxes are. This failure mode causes vanishing gradients and stalls optimization. In contrast, BDL circumvents this issue through distributional modeling. Because it measures the Mahalanobis distance between the predicted and ground-truth distributions, BDL remains sensitive to box displacement even when the boxes do not overlap. The covariance matrix scales each coordinate error by its estimated uncertainty, so the loss varies smoothly with displacement and gradients keep flowing regardless of the overlap.

- Mitigating oversensitivity to small object annotations. Small object detection is particularly susceptible to annotation noise and variance because small objects cover few pixels and are inherently ambiguous to localize. Conventional regression losses weight errors in all box dimensions equally, which often forces the model to overfit to annotation inaccuracies. BDL introduces an uncertainty-aware weighting mechanism through the inverse covariance matrix: when uncertainty is high in certain dimensions (e.g., the height and width of tiny objects), the corresponding entries of the inverse covariance matrix shrink, automatically reducing the contribution of those dimensions to the total loss. This design redirects the model’s focus toward more reliable dimensions during optimization, suppressing overfitting to noisy annotations and improving generalization.

- Probabilistic representation for enhanced robustness. By modeling bounding boxes as multivariate Gaussian distributions, BDL enhances the robustness of small object detection. The probabilistic representation naturally accommodates the inherent ambiguity of small object localization, turning the learning objective from deterministic fitting into distributional alignment. This not only resolves the vanishing-gradient issue but also provides a principled mechanism for handling annotation uncertainty, leading to more accurate detection of challenging small objects.
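The properties listed above can be illustrated with a small NumPy sketch. The exact BDL formulation is given in Section 3.4; the sketch instead assumes a simple stand-in: the detector predicts a mean box μ and per-coordinate log-variances for (cx, cy, w, h), the covariance Σ is diagonal, and the loss is the Gaussian negative log-likelihood of the ground-truth box, i.e. half the squared Mahalanobis distance to μ plus ½ log det Σ, in the spirit of Nix and Weigend [52]. The function names `box_iou`, `iou_loss` and `gaussian_box_nll` are hypothetical.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (cx, cy, w, h)."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


def iou_loss(pred, gt):
    """Plain IoU loss: constant 1.0 for every non-overlapping pair."""
    return 1.0 - box_iou(pred, gt)


def gaussian_box_nll(mu, log_var, gt):
    """Hypothetical BDL stand-in: NLL of gt under N(mu, diag(exp(log_var))).

    loss = 0.5 * (gt - mu)^T Sigma^{-1} (gt - mu) + 0.5 * log det Sigma
    (additive constants dropped). Returns (loss, d loss / d mu).
    """
    inv_var = np.exp(-log_var)            # diagonal of the inverse covariance Sigma^{-1}
    r = gt - mu
    loss = 0.5 * np.sum(inv_var * r**2) + 0.5 * np.sum(log_var)
    grad_mu = -inv_var * r                # log-det term does not depend on mu
    return loss, grad_mu


gt = np.array([50.0, 50.0, 8.0, 8.0])     # a small 8 x 8 ground-truth box
unit = np.zeros(4)                        # log-variance 0 => identity covariance

# 1) Non-overlapping predictions: the IoU loss is flat, the Mahalanobis term is not.
for dx in (20.0, 40.0):
    pred = gt + np.array([dx, 0.0, 0.0, 0.0])
    nll, grad = gaussian_box_nll(pred, unit, gt)
    print(f"dx={dx}: IoU loss={iou_loss(pred, gt):.1f}, NLL={nll:.1f}, dL/dcx={grad[0]:.1f}")

# 2) Uncertainty weighting: larger w/h variance shrinks Sigma^{-1} and the w/h gradient.
mu = gt + np.array([0.0, 0.0, 2.0, 2.0])  # width and height both off by 2 px
_, g_confident = gaussian_box_nll(mu, unit, gt)
_, g_uncertain = gaussian_box_nll(mu, np.array([0.0, 0.0, 2.0, 2.0]), gt)
print("dL/dw confident:", g_confident[2], "uncertain:", g_uncertain[2])
```

With unit variance the stand-in reduces to a squared-error loss. In a trained model the log-variances would be predicted per box, and the ½ log det Σ term keeps the network from inflating the variance merely to suppress the Mahalanobis term.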

4.5. Analysis of Challenging Conditions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wang, H.; Gao, P. Survey of Small Object Detection Methods Based on Deep Learning. In Proceedings of the 2024 9th International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 21–23 November 2024; Volume 9, pp. 221–224.

- Zheng, X.; Bi, J.; Li, K.; Zhang, G.; Jiang, P. SMN-YOLO: Lightweight YOLOv8-Based Model for Small Object Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2025, 22, 8001305.

- Wang, G.; Liu, X.; Wang, Y.; Li, X.; Zhang, H.; Jiang, M.; Song, S. Deep learning for pulmonary nodule detection: A comparative study of 2D and 3D convolutional neural networks. Med. Image Anal. 2021, 71, 102052.

- Zhang, M.; Wang, Y.; Lin, J.; Zhao, Y. Transformer-based small object detection in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2023, 197, 309–322.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Cui, L.; Jiang, R.; Li, Z. MDSSD: Multi-scale deconvolutional single shot detector for small objects. IEEE Trans. Image Process. 2019, 28, 829–840.

- Liu, Z.; Li, D.; Ge, S.S.; Tian, F. Small traffic sign detection from large image. Appl. Intell. 2019, 49, 2001–2013.

- Li, Y.; Huang, Q.; Pei, X.; Chen, Y.; Jiao, L.; Shang, R. Cross-Layer Attention Network for Small Object Detection in Remote Sensing Imagery. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2021, 14, 2148–2161.

- Lee, G.; Hong, S.; Cho, D. Self-Supervised Feature Enhancement Networks for Small Object Detection in Noisy Images. IEEE Signal Process. Lett. 2021, 28, 1026–1030.

- Wang, X.; Zhang, S.; Yu, Z.; Feng, L.; Zhang, W. Scale-Equalizing Pyramid Convolution for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 13356–13365.

- Wang, Y.; Li, H.; Bai, X. MR-CNN: Multi-scale region-based CNN for small object recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Palo Alto, CA, USA, 2019; pp. 9213–9220.

- Bell, S.; Zitnick, C.L.; Bala, K.; Girshick, R. Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2874–2883.

- Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-based R-CNNs for fine-grained category detection. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8689.

- Müller, J.; Dietmayer, K. Traffic light detection using deep learning with spatial pyramid pooling. In Proceedings of the IEEE Intelligent Vehicles Symposium, Changshu, China, 26–30 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 680–685.

- Chan, S.; Yu, M.; Chen, Z.; Mao, J.; Bai, C. Regional Contextual Information Modeling for Small Object Detection on Highways. IEEE Trans. Instrum. Meas. 2023, 72, 2531613.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual generative adversarial networks for small object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1222–1230.

- Bai, Y.; Zhang, Y.; Ding, M.; Ghanem, B. SOD-MTGAN: Small object detection via multi-task generative adversarial network. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11217.

- Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. HRFormer: High-resolution transformer for dense prediction. arXiv 2021, arXiv:2110.09408.

- Nazeri, K.; Thasarathan, H.; Ebrahimi, M. Edge-informed single image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3008–3017.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Sun, Y.; Huang, X.; Zhou, H.; Zhang, Q. SRPN: Similarity-based region proposal networks for nuclei and cells detection in histology images. Med. Image Anal. 2021, 72, 102142.

- Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-End Object Detection with Learnable Proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 21–26 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 14449–14458.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 9992–10002.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO (Version 8.0.0) [Computer Software]; Ultralytics: Ballenger Creek, MD, USA, 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 12 November 2025).

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. arXiv 2024, arXiv:2312.00752.

- Gu, A.; Goel, K.; Ré, C. Efficiently Modeling Long Sequences with Structured State Spaces. arXiv 2022, arXiv:2111.00396.

- Geng, X. Label distribution learning. IEEE Trans. Knowl. Data Eng. 2016, 28, 1734–1748.

- Geng, X.; Yin, C.; Zhou, Z.H. Facial age estimation by learning from label distributions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3422–3429.

- Xu, N.; Liu, Y.P.; Geng, X. Label Enhancement for Label Distribution Learning. IEEE Trans. Knowl. Data Eng. 2021, 33, 1632–1643.

- Xu, N.; Hu, Y.; Qiao, C.; Geng, X. Aligned Objective for Soft-Pseudo-Label Generation in Supervised Learning. In Proceedings of the Forty-First International Conference on Machine Learning, ICML 2024, Vienna, Austria, 21–27 July 2024.

- Xu, N.; Qiao, C.; Zhao, Y.; Geng, X.; Zhang, M.L. Variational Label Enhancement for Instance-Dependent Partial Label Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 11298–11313.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 16000–16009.

- Dosovitskiy, A.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Palo Alto, CA, USA, 2020; pp. 12993–13000.

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 21002–21012.

- Murrugarra-Llerena, J.; Kirsten, L.N.; Zeni, L.F.; Jung, C.R. Probabilistic Intersection-Over-Union for Training and Evaluation of Oriented Object Detectors. IEEE Trans. Image Process. 2024, 33, 671–681.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Zhang, S.; Xie, Y.; Wan, J.; Xia, H.; Li, S.Z.; Guo, G. WiderPerson: A Diverse Dataset for Dense Pedestrian Detection in the Wild. IEEE Trans. Multimed. 2019, 22, 380–393.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; Michael, K.; Xie, T.; Fang, J.; Imphxy; et al. Ultralytics, YOLOv5 [Computer Software]; Ultralytics: Ballenger Creek, MD, USA, 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 12 November 2025).

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021.

- Roh, B.; Shin, J.; Shin, W.; Kim, S. Sparse DETR: Efficient End-to-End Object Detection with Learnable Sparsity. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2023, arXiv:2304.08069.

- Nix, D.; Weigend, A. Estimating the mean and variance of the target probability distribution. In Proceedings of the 1994 IEEE International Conference on Neural Networks (ICNN’94), Orlando, FL, USA, 27 June–2 July 1994; Volume 1, pp. 55–60.

- Lakshminarayanan, B.; Pritzel, A.; Blundell, C. Simple and Scalable Predictive Uncertainty Estimation using Deep Ensembles. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Chen, H.; Zendehdel, N.; Leu, M.C.; Yin, Z. Fine-grained activity classification in assembly based on multi-visual modalities. J. Intell. Manuf. 2023, 35, 2215–2233.

| Methods | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| VisDrone | | | | |
| YOLOv5s [46] | 48.3 | 35.2 | 34.9 | 19.5 |
| YOLOv8s [26] | 51.7 | 39.2 | 40.2 | 23.0 |
| YOLOv10s [47] | 54.6 | 42.1 | 44.1 | 26.7 |
| DETR [48] | – | – | 39.3 | 23.1 |
| Deformable DETR [49] | – | – | 43.7 | 26.8 |
| Sparse DETR [50] | – | – | 44.0 | 27.0 |
| RT-DETR-R18 [51] | – | – | 44.6 | 26.7 |
| LCB-Net (Ours) | 56.2 | 42.3 | 44.5 | 27.3 |
| WiderPerson | | | | |
| YOLOv5s [46] | 81.1 | 66.2 | 78.7 | 59.3 |
| YOLOv8s [26] | 87.3 | 76.5 | 84.9 | 66.1 |
| YOLOv10s [47] | 89.2 | 77.2 | 86.3 | 66.6 |
| DETR [48] | – | – | 69.5 | 43.2 |
| Deformable DETR [49] | – | – | 76.5 | 48.6 |
| Sparse DETR [50] | – | – | 78.5 | 51.7 |
| RT-DETR-R18 [51] | – | – | 80.2 | 52.9 |
| LCB-Net (Ours) | 90.1 | 77.4 | 86.7 | 66.9 |
| NWPU-VHR-10 | | | | |
| YOLOv5s [46] | 90.6 | 80.9 | 90.1 | 58.9 |
| YOLOv8s [26] | 92.1 | 81.1 | 91.3 | 61.8 |
| YOLOv10s [47] | 93.5 | 83.7 | 92.1 | 62.5 |
| DETR [48] | – | – | 89.7 | 45.9 |
| Deformable DETR [49] | – | – | 91.2 | 58.6 |
| Sparse DETR [50] | – | – | 91.6 | 59.2 |
| RT-DETR-R18 [51] | – | – | 92.5 | 59.9 |
| LCB-Net (Ours) | 94.1 | 84.6 | 92.4 | 62.7 |

| Methods | Params (M) | FLOPs (G) | FPS (RTX 4090) |
|---|---|---|---|
| YOLOv5s [46] | 7.2 | 16.5 | 244 |
| YOLOv8s [26] | 11.1 | 28.6 | 302 |
| YOLOv10s [47] | 8.3 | 23.8 | 395 |
| DETR [48] | 41.3 | 86.2 | 28 |
| Deformable DETR [49] | 39.8 | 173.0 | 19 |
| Sparse DETR [50] | 38.2 | 142.0 | 42 |
| RT-DETR-R18 [51] | 32.0 | 58.0 | 108 |
| LCB-Net (Ours) | 15.2 | 42.5 | 125 |

| Module Configuration | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| VisDrone | | | | |
| Baseline | 51.7 | 39.2 | 40.2 | 23.0 |
| + SL-Mamba * | 54.4 | 40.8 | 43.1 | 25.0 |
| + SL-Mamba † | 55.6 | 41.5 | 44.3 | 25.9 |
| + SL-Mamba †, CBAM | 56.2 | 42.3 | 44.5 | 27.3 |
| + SL-Mamba †, CBAM, BDL | 56.7 | 42.5 | 44.9 | 27.9 |
| WiderPerson | | | | |
| Baseline | 87.3 | 76.5 | 84.9 | 66.1 |
| + SL-Mamba * | 88.5 | 77.0 | 85.6 | 66.5 |
| + SL-Mamba † | 89.2 | 77.3 | 86.1 | 66.7 |
| + SL-Mamba †, CBAM | 89.7 | 77.4 | 86.4 | 66.7 |
| + SL-Mamba †, CBAM, BDL | 90.1 | 77.4 | 86.7 | 66.9 |
| NWPU-VHR-10 | | | | |
| Baseline | 92.1 | 81.1 | 91.3 | 61.8 |
| + SL-Mamba * | 92.8 | 82.0 | 91.8 | 62.1 |
| + SL-Mamba † | 93.4 | 83.5 | 92.2 | 62.4 |
| + SL-Mamba †, CBAM | 93.8 | 84.2 | 92.3 | 62.6 |
| + SL-Mamba †, CBAM, BDL | 94.1 | 84.6 | 92.4 | 62.7 |

| Dataset | Loss Function | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|
| COCO128 | CIoU | 94.6 | 84.9 | 95.1 | 82.5 |
| | ProbIoU | 93.1 | 97.1 | 97.6 | 90.0 |
| | BDL (Ours) | 96.4 | 96.3 | 97.7 | 91.1 |
| COCO1000 | CIoU | 97.8 | 96.9 | 97.7 | 89.3 |
| | ProbIoU | 97.3 | 96.8 | 98.2 | 90.2 |
| | BDL (Ours) | 97.9 | 97.2 | 98.0 | 90.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiao, Y.; Liang, Y.; Liu, M. LCB-Net: Long-Range Context and Box Distribution Network for Small Object Detection. Electronics 2025, 14, 4487. https://doi.org/10.3390/electronics14224487
