Stereo-GS: Online 3D Gaussian Splatting Mapping Using Stereo Depth Estimation

Abstract

1. Introduction

- We propose a framework for online, high-fidelity 3DGS reconstruction driven by stereo depth estimation. Accurate, dense Gaussian primitives are initialized directly from stereo-derived depth maps.

- We introduce a stereo-based depth estimation pipeline with a two-stage filtering mechanism. The pipeline estimates depth sequentially from incoming frames, integrates the estimates across frames for temporal consistency, and removes outliers.
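As a rough illustration of how a stereo-derived depth map can seed dense 3D primitives, the sketch below converts a rectified-stereo disparity map to metric depth (Z = f·B/d) and back-projects the valid pixels to 3D points in the camera frame. It shows only the standard pinhole geometry and is not the authors' implementation; the function names `disparity_to_depth` and `backproject`, the `min_disp` threshold, and the convention that a depth of 0 marks an invalid pixel are all assumptions made for this example.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m, min_disp=1e-6):
    """Convert a rectified-stereo disparity map (pixels) to depth: Z = f * B / d.

    Hypothetical helper for illustration. Pixels whose disparity is not
    above min_disp are treated as invalid and get depth 0.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.zeros_like(disparity)
    valid = disparity > min_disp
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def backproject(depth, fx, fy, cx, cy):
    """Lift every valid (depth > 0) pixel to a 3D point in the camera frame.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Returns an (N, 3) array; such points could serve as initial centers
    for Gaussian primitives (an assumption, not the paper's exact procedure).
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


if __name__ == "__main__":
    # Toy 2x2 disparity map; the zero entry is an invalid match.
    disp = np.array([[10.0, 0.0], [20.0, 5.0]])
    depth = disparity_to_depth(disp, focal_px=100.0, baseline_m=0.1)
    points = backproject(depth, fx=100.0, fy=100.0, cx=0.0, cy=0.0)
    print(depth)
    print(points.shape)
```

In a full system, the points would additionally be transformed into the world frame with the estimated camera pose, and outlier depths would be filtered before any primitives are created.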

2. Related Work

2.1. Stereo SLAM

2.2. Differentiable Rendering SLAM

3. Proposed Method

3.1. System Overview

3.2. Stereo Depth Estimation

3.3. Depth Outlier Filtering

3.4. Online 3DGS Mapping

3.5. 3DGS Optimization

4. Experimental Results

4.1. Experimental Setup

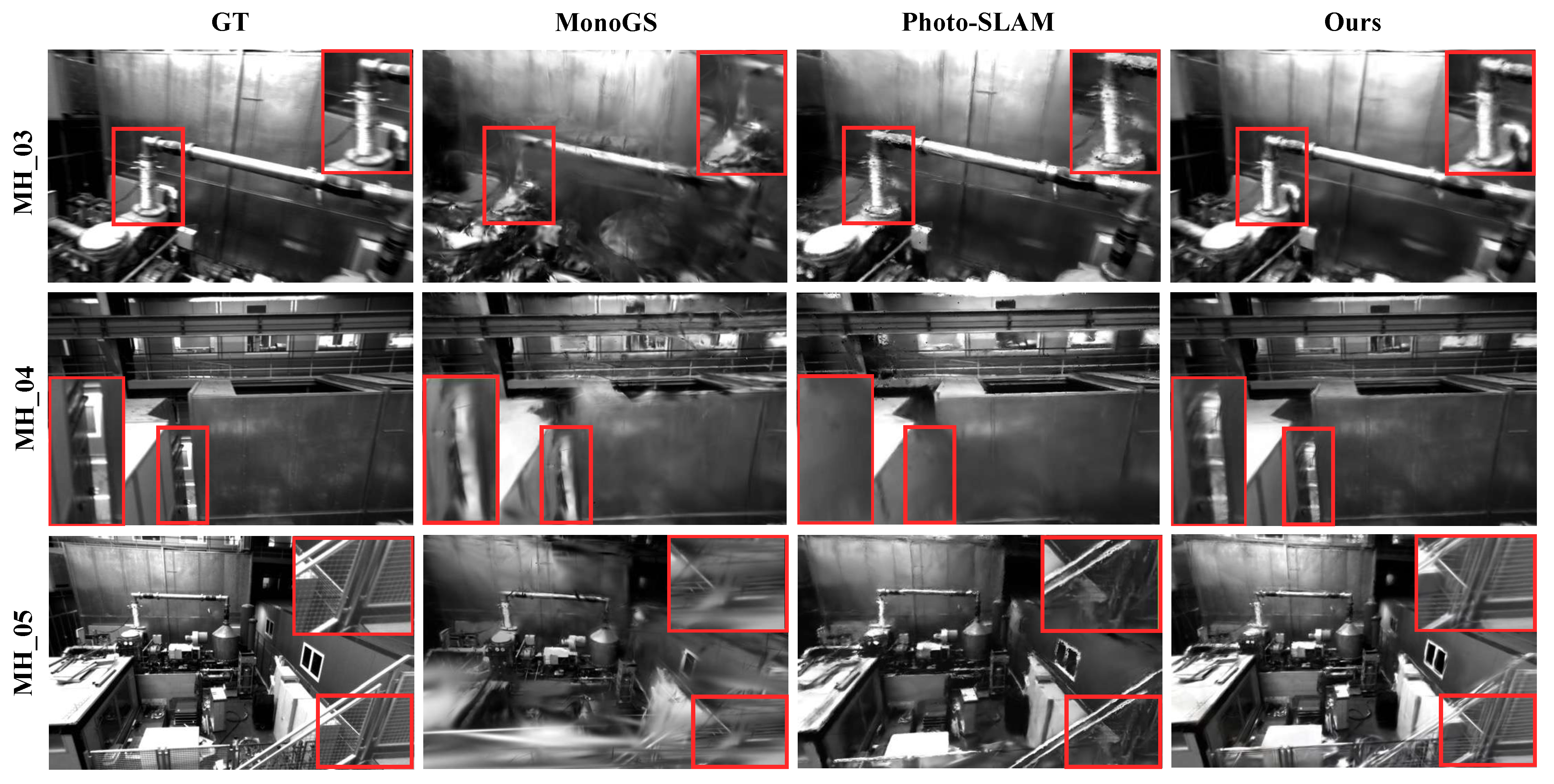

4.2. Evaluation on EuRoC

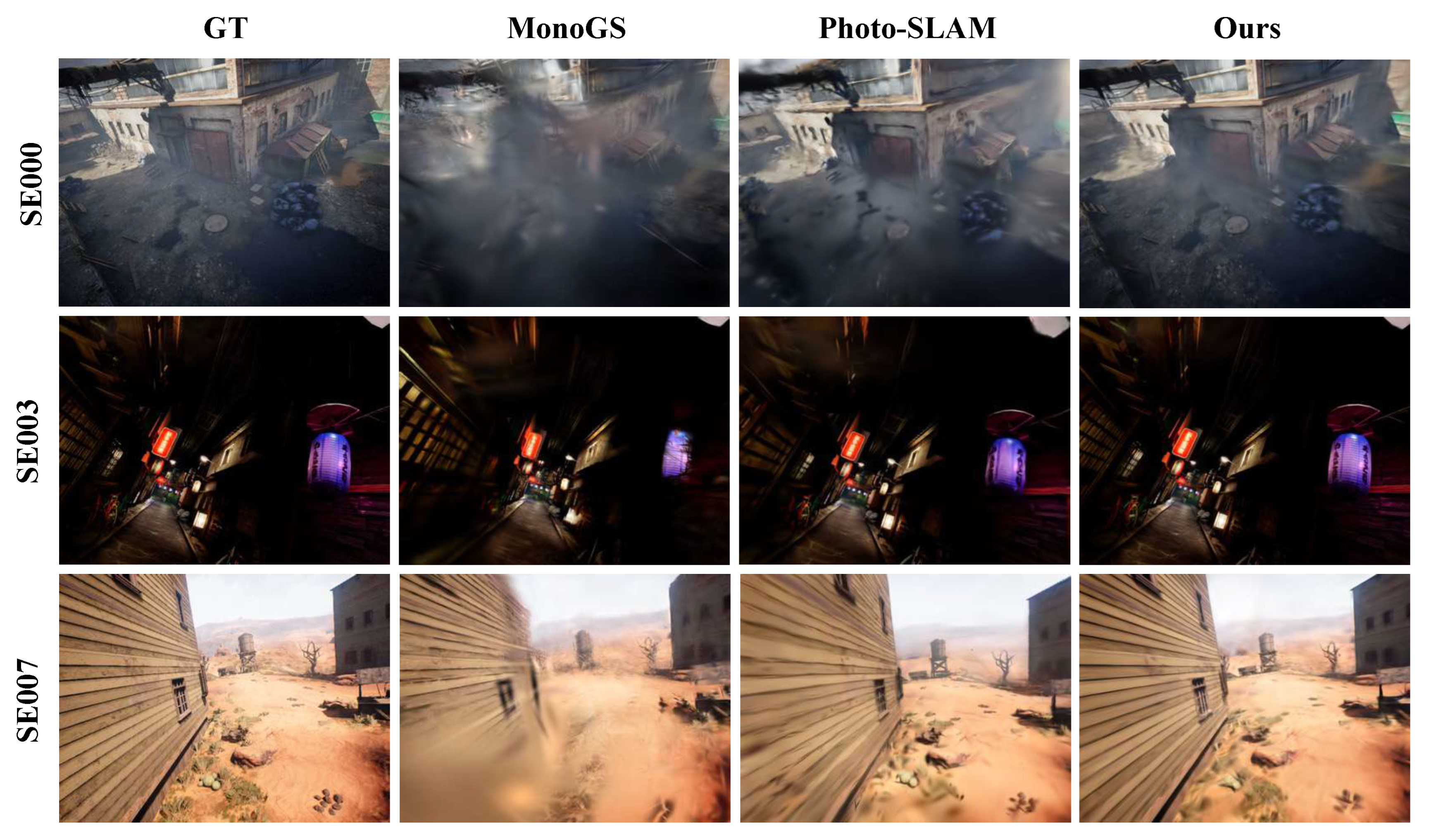

4.3. Evaluation on TartanAir

4.4. Ablation Study

5. Limitations and Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Method | Metric | MH01 | MH02 | MH03 | MH04 | MH05 | V1_01 | V2_01 | Avg. |
|---|---|---|---|---|---|---|---|---|---|
| MonoGS | PSNR↑ | 25.88 | 17.26 | 19.59 | 25.23 | 24.67 | 28.07 | 23.65 | 23.48 |
| | SSIM↑ | 0.85 | 0.68 | 0.71 | 0.85 | 0.84 | 0.90 | 0.83 | 0.81 |
| | LPIPS↓ | 0.17 | 0.43 | 0.38 | 0.24 | 0.26 | 0.19 | 0.28 | 0.26 |
| PhotoSLAM | PSNR↑ | 21.23 | 22.10 | 20.92 | 20.22 | 19.73 | 23.13 | 21.95 | 21.23 |
| | SSIM↑ | 0.70 | 0.73 | 0.70 | 0.74 | 0.72 | 0.78 | 0.78 | 0.74 |
| | LPIPS↓ | 0.30 | 0.29 | 0.34 | 0.34 | 0.38 | 0.28 | 0.30 | 0.32 |
| Ours | PSNR↑ | 22.63 | 23.34 | 23.83 | 23.34 | 23.12 | 25.01 | 24.12 | 23.70 |
| | SSIM↑ | 0.77 | 0.79 | 0.81 | 0.88 | 0.86 | 0.86 | 0.85 | 0.83 |
| | LPIPS↓ | 0.27 | 0.26 | 0.27 | 0.19 | 0.22 | 0.22 | 0.23 | 0.24 |

| Method | Metric | SE000 | SE001 | SE002 | SE003 | SE004 | SE005 | SE006 | SE007 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|
| MonoGS | PSNR↑ | 20.95 | 17.76 | 18.81 | 17.59 | 27.92 | 17.26 | 16.45 | 23.38 | 20.02 |
| | SSIM↑ | 0.59 | 0.35 | 0.48 | 0.65 | 0.78 | 0.43 | 0.28 | 0.64 | 0.53 |
| | LPIPS↓ | 0.60 | 0.69 | 0.58 | 0.51 | 0.55 | 0.66 | 0.76 | 0.56 | 0.61 |
| PhotoSLAM | PSNR↑ | 20.70 | 16.41 | 16.95 | 23.16 | 25.98 | 16.28 | 17.73 | 24.07 | 19.67 |
| | SSIM↑ | 0.58 | 0.30 | 0.39 | 0.72 | 0.81 | 0.40 | 0.33 | 0.75 | 0.50 |
| | LPIPS↓ | 0.55 | 0.68 | 0.73 | 0.38 | 0.56 | 0.64 | 0.66 | 0.33 | 0.61 |
| Ours | PSNR↑ | 23.49 | 20.32 | 20.64 | 23.50 | 29.28 | 20.00 | 17.39 | 25.12 | 22.47 |
| | SSIM↑ | 0.67 | 0.58 | 0.46 | 0.82 | 0.93 | 0.53 | 0.34 | 0.68 | 0.61 |
| | LPIPS↓ | 0.48 | 0.45 | 0.69 | 0.32 | 0.59 | 0.56 | 0.65 | 0.49 | 0.53 |

| Model | Stereo Depth | GBA | Mask Update | PSNR↑ | SSIM↑ | LPIPS↓ | #Gaussians↓ | FPS↑ |
|---|---|---|---|---|---|---|---|---|
| A | X | X | X | 12.21 | 0.44 | 0.95 | 182 | 6.91 |
| B | O | X | X | 21.77 | 0.61 | 0.52 | 430 | 5.33 |
| C | O | O | X | 23.62 | 0.67 | 0.45 | 402 | 3.48 |
| D | O | O | O | 23.49 | 0.67 | 0.48 | 331 | 4.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, J.; Lee, B.; Lee, S.; Song, S. Stereo-GS: Online 3D Gaussian Splatting Mapping Using Stereo Depth Estimation. Electronics 2025, 14, 4436. https://doi.org/10.3390/electronics14224436
