Performance Comparison of Adversarial Example Attacks Against CNN-Based Image Steganalysis Models

Abstract

1. Introduction

- To the best of our knowledge, this is the first study to extensively compare the performance of state-of-the-art adversarial example attack methods against CNN-based image steganalysis machine learning (ML) models (hereafter referred to as CNN models). To this end, we implemented three representative CNN models (XuNet [13], YeNet [14], and SRNet [15]) and trained them on two well-known datasets, BOSSBase [17] and BOWS2 [18], which are among the most widely used benchmark datasets in image steganography and steganalysis [19,20,21]. We then compared the nine representative adversarial example attack methods introduced in Section 2.2 using the IBM Adversarial Robustness Toolbox (ART) v1.17 [22].

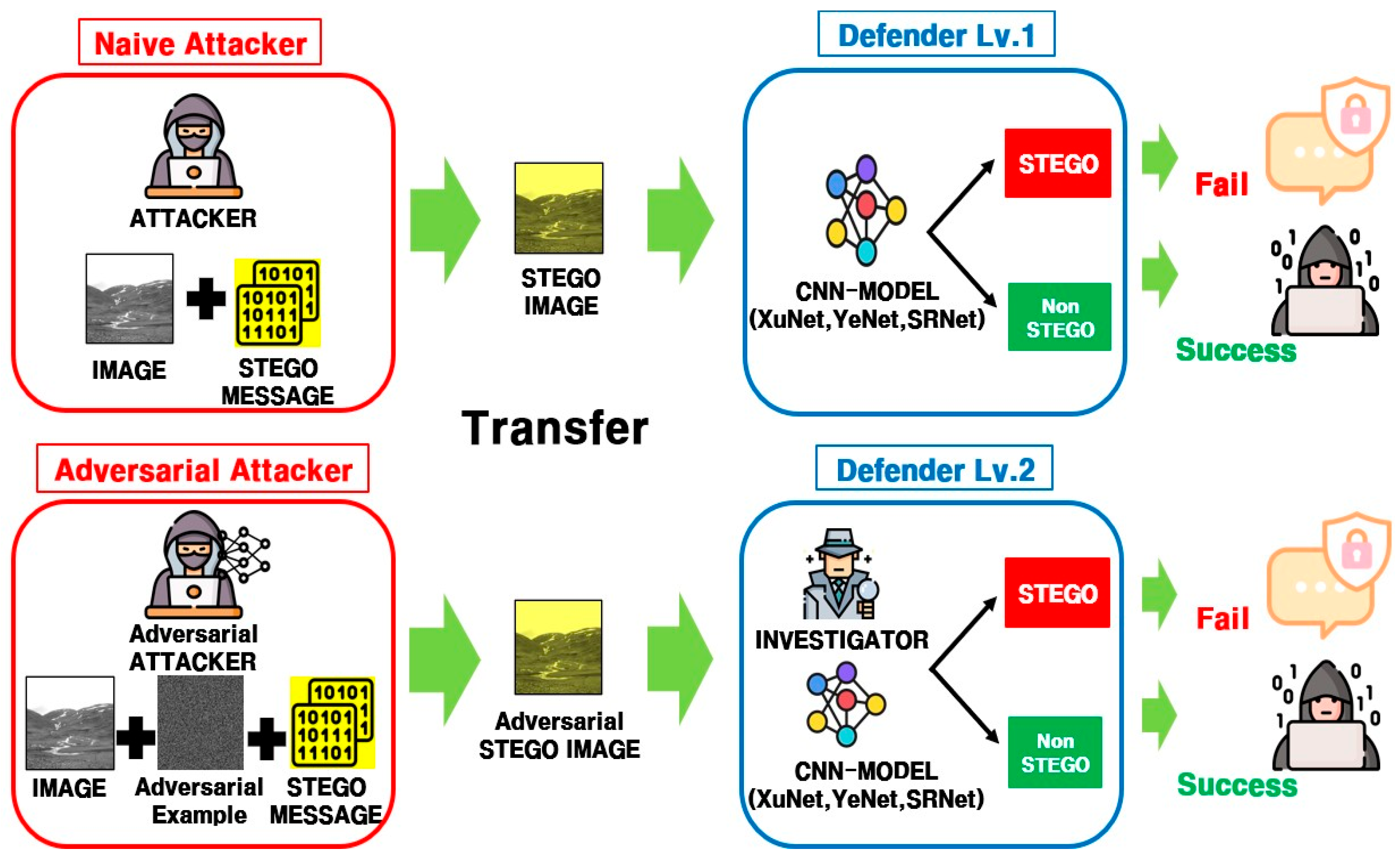

- To better understand the security problem between an attacker and a defender in steganalysis research, we formally describe a system model consisting of three participating entities: a naïve attacker (a basic steganography system), a defender (two types of CNN-based image steganalysis systems, namely Defender Lv. 1 and Defender Lv. 2), and an adversarial attacker (an adversarial example attack system). In particular, whereas Defender Lv. 1 represents a basic CNN-based image steganalysis system, we introduce a new, more advanced defender type, Defender Lv. 2, which is additionally equipped with a human visual inspection capability and therefore poses a more challenging security problem for the attacker.

- Our experimental results show that existing performance metrics, such as classification accuracy (CA) and missed detection rate (MDR), do not adequately reflect how effectively adversarial example attackers can evade defenders. The reason is that several adversarial attack methods significantly degrade the visual quality of stego images: even when such images evade the CNN model, they look suspicious and are easily detected by Defender Lv. 2.

- To address the limitations of existing metrics in evaluating attack performance against Defender Lv. 2, we propose a new metric, the Attack Success Index (ASI). The ASI measures the degree to which adversarial examples are successfully generated and delivered to the receiver while evading an advanced defense system that combines CNN-based steganalysis with human-like visual inspection (Defender Lv. 2), as described in Section 2.
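The ASI criteria can be made concrete with a short Python sketch. It assumes, as our own reading of the description above and of Step 4 in Section 3.1 rather than a published formula, that ASI is the fraction of all adversarial stego images that (i) evade the CNN model, (ii) still allow the hidden message to be extracted, and (iii) exceed the PSNR threshold (30 or 40 dB). The `AdvResult` record and both function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AdvResult:
    """Hypothetical per-image record for one adversarial stego image."""
    detected: bool   # the CNN steganalyzer labeled the image as stego
    extracted: bool  # the receiver recovered the hidden message intact
    psnr_db: float   # PSNR (dB) of the adversarial stego image against its cover


def miss_detection_rate(results: list[AdvResult]) -> float:
    """Fraction of adversarial stego images that the CNN model fails to flag."""
    return sum(not r.detected for r in results) / len(results)


def attack_success_index(results: list[AdvResult], psnr_threshold_db: float = 30.0) -> float:
    """Assumed ASI: fraction of ALL adversarial stego images that evade the CNN,
    still deliver their message, and exceed the PSNR threshold."""
    successes = sum(
        (not r.detected) and r.extracted and r.psnr_db > psnr_threshold_db
        for r in results
    )
    return successes / len(results)


results = [
    AdvResult(detected=True, extracted=True, psnr_db=45.0),    # caught by the CNN
    AdvResult(detected=False, extracted=True, psnr_db=42.0),   # full success
    AdvResult(detected=False, extracted=True, psnr_db=33.0),   # succeeds at 30 dB only
    AdvResult(detected=False, extracted=False, psnr_db=50.0),  # message lost
]
print(miss_detection_rate(results))          # 0.75
print(attack_success_index(results, 30.0))   # 0.5
print(attack_success_index(results, 40.0))   # 0.25
```

Under this reading, ASI can never exceed MDR: MDR counts every undetected image, whereas ASI additionally discards undetected images whose message cannot be extracted or whose distortion is likely to be visible, which is exactly the gap that CA and MDR cannot capture for Defender Lv. 2.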

2. Background and Related Works

2.1. System Model

2.1.1. Naïve Attacker: Image Steganography System

2.1.2. Defender: ML-Based Image Steganalysis System

2.1.3. Adversarial Attacker: Adversarial Example Attack System

2.2. Adversarial Example Attacker

2.2.1. Fast Gradient Sign Method (FGSM) [26]

2.2.2. Basic Iterative Method (BIM) [27]

2.2.3. Projected Gradient Descent (PGD) [28]

2.2.4. Carlini and Wagner (C&W) [29]

2.2.5. ElasticNet (EAD) [30]

2.2.6. DeepFool [31]

2.2.7. Jacobian-Based Saliency Map Attack (JSMA) [32]

2.2.8. NewtonFool [33]

2.2.9. Wasserstein Attack [34]

2.3. Existing Studies

3. Performance Evaluation

3.1. Experimental Purpose and Procedures

- Step 1 (Preparing the image dataset): To train the CNN models, we prepared a total of 40,000 images with a resolution of 256 × 256 pixels. Of these, 20,000 cover (non-stego) images were collected from the BOSSBase v1.01 [17] and BOWS2 [18] datasets, which are widely used in steganalysis research [19,20,21]. The remaining 20,000 stego images were generated from these cover images using the WOW algorithm [24].

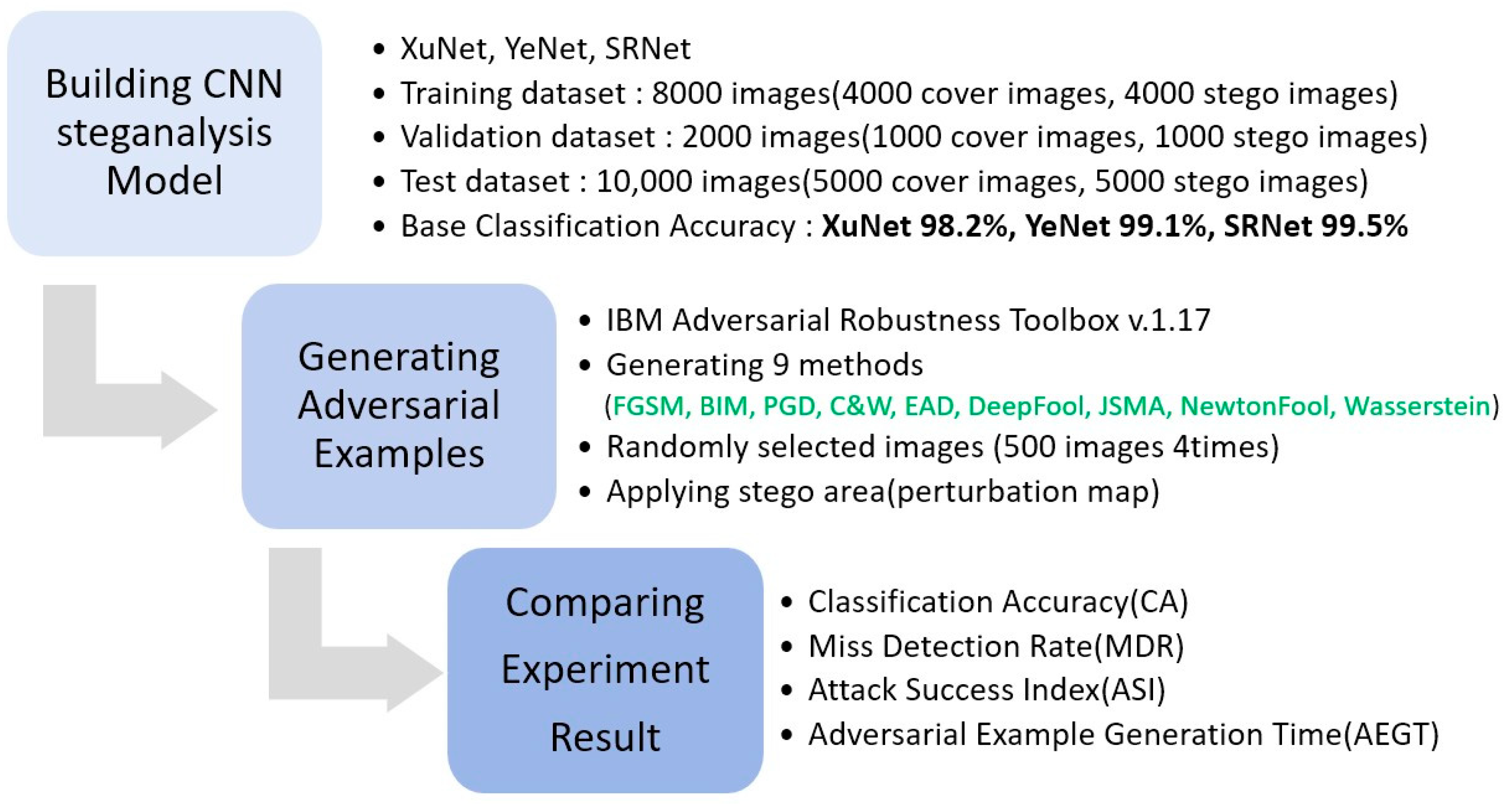

- Step 2 (Building CNN steganalysis models): We trained three CNN-based steganalysis models (XuNet [13], YeNet [14], and SRNet [15]) on the prepared image dataset, using 8000 images (4000 cover and 4000 stego) for training and 2000 images (1000 cover and 1000 stego) for validation. We then measured the base classification accuracy (BCA) of each model on a test set of 10,000 images (5000 cover and 5000 stego). To ensure a fair comparison, all models were trained until convergence. The resulting BCAs were 98.2% for XuNet, 99.1% for YeNet, and 99.5% for SRNet. All three models use a softmax activation function at the output: XuNet consists of 5 layers with 14,906 parameters, YeNet of 9 layers with 107,698 parameters, and SRNet of 26 layers with 4,776,962 parameters.

- Step 3 (Generating adversarial images and reapplying stego): We evaluated nine adversarial example methods (FGSM [26], BIM [27], PGD [28], C&W [29], EAD [30], DeepFool [31], JSMA [32], NewtonFool [33], and Wasserstein [34]). For each attack method, we randomly selected 2000 (500 × 4) stego images from the dataset and converted them into adversarial stego images using IBM ART v1.17 [22], with the parameters listed in Table 1. Next, we generated the final images by reapplying the perturbation map previously obtained from the WOW algorithm.

- Step 4 (Testing and analyzing experimental results): To measure the classification accuracy (CA) and missed detection rate (MDR) under each adversarial example method, we prepared a test dataset of 2000 (500 × 4) non-stego images and 2000 (500 × 4) adversarial stego images, evaluated each CNN-based steganalysis model on it, and recorded the corresponding metrics. In addition, to calculate the Attack Success Index (ASI), we checked whether the hidden messages embedded in undetected adversarial examples could be successfully extracted and whether their PSNR values exceeded 30 or 40 dB. PSNR (Peak Signal-to-Noise Ratio) is commonly used to quantify the reconstruction quality of an image affected by lossy compression or distortion. Several studies have reported that the difference between two images becomes difficult for the human visual system to perceive when the PSNR exceeds 30 dB [25,38,39]; we therefore use 30 dB as the base threshold. Given a reference image $I$ and a test image $K$, both of size $m \times n$, the PSNR between $I$ and $K$ is defined as follows:

  $$\mathrm{MSE} = \frac{1}{mn}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1}\bigl[I(i,j) - K(i,j)\bigr]^2, \qquad \mathrm{PSNR} = 10\log_{10}\!\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$

  where $\mathrm{MAX}_I$ is the maximum possible pixel value of the image (255 for 8-bit images).
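The PSNR check used in Step 4 can be sketched in a few lines of NumPy. This is a minimal illustration assuming 8-bit images (peak value 255); the function names `psnr` and `passes_visual_check` are hypothetical and not taken from the authors' code.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-size images."""
    if reference.shape != test.shape:
        raise ValueError("images must have the same size")
    diff = reference.astype(np.float64) - test.astype(np.float64)  # avoid uint8 wrap-around
    mse = float(np.mean(diff ** 2))  # mean squared error over all m x n pixels
    if mse == 0.0:
        return float("inf")          # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def passes_visual_check(reference: np.ndarray, test: np.ndarray, threshold_db: float = 30.0) -> bool:
    """True if the PSNR exceeds the threshold (30 dB base, 40 dB stricter)."""
    return psnr(reference, test) > threshold_db


cover = np.full((256, 256), 128, dtype=np.uint8)
distorted = cover.copy()
distorted[::2, ::2] += 4             # change one pixel in four by +4 gray levels (MSE = 4)
print(psnr(cover, distorted))        # about 42.1 dB
print(passes_visual_check(cover, distorted, 30.0), passes_visual_check(cover, distorted, 40.0))  # True True
```

Converting to `float64` before subtracting matters: with raw `uint8` arrays, negative differences would wrap around and inflate the MSE.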

3.2. Experimental Results and Analysis

3.2.1. Classification Accuracy (CA) and Missed Detection Rate (MDR)

3.2.2. Attack Success Index (ASI)

3.2.3. Adversarial Example Generation Time (AEGT)

4. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Johnson, N.F.; Jajodia, S. Exploring Steganography: Seeing the Unseen. Computer 1998, 31, 26–34.

- Fridrich, J.; Goljan, M.; Du, R. Reliable Detection of LSB Steganography in Color and Grayscale Images. In Proceedings of the ACM Workshop on Multimedia and Security, Ottawa, ON, Canada, 5 October 2001; pp. 27–30.

- Kodovský, J.; Fridrich, J. Steganalysis of JPEG Images Using Rich Models. In Proceedings of SPIE, the International Society for Optical Engineering, Brussels, Belgium, 16–18 April 2012; Volume 8303, p. 83030A.

- Pevný, T.; Filler, T.; Bas, P. Using High-Dimensional Image Models to Perform Steganalysis. In Proceedings of SPIE, the International Society for Optical Engineering; SPIE: Bellingham, WA, USA, 2010; Volume 7541, p. 754105.

- Qian, Y.; Dong, J.; Wang, W. Deep Learning for Steganalysis via Convolutional Neural Networks. In Proceedings of the SPIE Media Watermarking, Security and Forensics, San Francisco, CA, USA, 9–11 February 2015; Volume 9409, p. 94090J.

- Ker, A.D.; Pevný, T.; Bas, P.; Filler, T. Moving Steganography and Steganalysis from the Laboratory into the Real World. In Proceedings of the 1st ACM Workshop on Information Hiding and Multimedia Security (IH&MMSec), New York, NY, USA, 17–19 June 2013; pp. 1–10.

- Qian, Y.; Dong, J.; Wang, W.; Tan, T. Learning and Transferring Representations for Image Steganalysis Using CNNs. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016; pp. 134–139.

- Akhtar, N.; Mian, A. Threat of Adversarial Attacks on Deep Learning in Computer Vision: A Survey. IEEE Access 2018, 6, 14410–14430.

- Zhang, J.; Zhang, W.; Cao, X.; Yu, N. Adversarial Examples in Deep Learning for Steganography and Steganalysis. In Proceedings of the ACM Workshop on Information Hiding and Multimedia Security (IH&MMSec), Paris, France, 3–5 July 2019; pp. 5–10.

- Tang, W.; Tan, S.; Li, B.; Huang, J. Automatic Steganographic Distortion Learning Using Adversarial Networks. IEEE Signal Process. Lett. 2020, 27, 1660–1664.

- Liu, F.; Tan, S.; Li, B.; Huang, J. Adversarial Embedding for Image Steganography. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2142–2155.

- Chen, M.; Qian, Z.; Luo, W. Adversarial Embedding Networks for Image Steganography. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2670–2674.

- Xu, G.; Wu, H.-Z.; Shi, Y.-Q. Structural Design of Convolutional Neural Networks for Steganalysis. IEEE Signal Process. Lett. 2016, 23, 708–712.

- Ye, J.; Ni, J.; Yi, Y. Deep Learning Hierarchical Representations for Image Steganalysis. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2545–2557.

- Boroumand, M.; Chen, M.; Fridrich, J. Deep Residual Network for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1181–1193.

- Rah, Y.; Cho, Y. Reliable Backdoor Attack Detection for Various Size of Backdoor Triggers. Int. J. Artif. Intell. 2025, 14, 650–657.

- Bas, P.; Filler, T.; Pevný, T. Break Our Steganographic System: The Ins and Outs of Organizing BOSS. In Proceedings of the Information Hiding Conference (IH), Prague, Czech Republic, 18–20 May 2011; pp. 59–70.

- Bas, P.; Furon, T. BOWS-2. Available online: https://data.mendeley.com/datasets/kb3ngxfmjw/1 (accessed on 10 November 2025).

- Cogranne, R.; Bas, P.; Fridrich, J. The ALASKA Steganalysis Challenge: A Step Toward Steganalysis at Scale. In Proceedings of the ACM Workshop on Information Hiding and Multimedia Security (IH&MMSec), Paris, France, 3–5 July 2019; pp. 1–10.

- Cogranne, R.; Bas, P.; Fridrich, J. Quantitative Steganalysis: Estimating the Payload and Detecting the Embedding Algorithm. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1045–1058.

- Holub, V.; Fridrich, J. Universal Distortion Function for Steganography in an Arbitrary Domain. EURASIP J. Inf. Secur. 2015, 2015, 1–13.

- Nicolae, M.-I.; Sinn, M.; Tran, M.N.; Buesser, B.; Rawat, A.; Wistuba, M.; Zantedeschi, V.; Baracaldo, N.; Chen, B.; Ludwig, H.; et al. Adversarial Robustness Toolbox v1.0.1; IBM Research Technical Report; IBM Research: New York, NY, USA, 2019.

- Pevný, T.; Filler, T.; Bas, P. Using High-Dimensional Image Models to Perform Highly Undetectable Steganography (HUGO). In Proceedings of the Information Hiding Conference (IH), Calgary, AB, Canada, 28–30 June 2010; pp. 99–114.

- Holub, V.; Fridrich, J. Designing Steganographic Distortion Using Directional Filters (WOW). In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Tenerife, Spain, 2–5 December 2012; pp. 234–239.

- Huang, C.-T.; Shongwe, N.S.; Weng, C.-Y. Enhanced Embedding Capacity for Data Hiding Approach Based on Pixel Value Differencing and Pixel Shifting Technology. Electronics 2023, 12, 1200.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial Examples in the Physical World. arXiv 2017, arXiv:1607.02533.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the IEEE Symposium on Security and Privacy (S&P), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

- Chen, P.-Y.; Sharma, Y.; Zhang, H.; Yi, J.; Hsieh, C.-J. EAD: Elastic-Net Attacks to Deep Neural Networks via Adversarial Examples. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018; pp. 10–17.

- Moosavi-Dezfooli, S.M.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The Limitations of Deep Learning in Adversarial Settings. In Proceedings of the IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrücken, Germany, 21–24 March 2016; pp. 372–387.

- Jang, U.; Wu, X.; Chen, S. Objective Metrics and Gradient Descent Algorithms for Adversarial Examples in Machine Learning. In Proceedings of the Annual Computer Security Applications Conference (ACSAC), Orlando, FL, USA, 4–8 December 2017; pp. 277–289.

- Wong, E.; Schmidt, F.; Metzen, J.H.; Kolter, J.Z. Wasserstein Adversarial Examples via Projected Sinkhorn Iterations. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 6808–6817.

- Shang, Y.; Jiang, S.; Ye, D.; Huang, J. Enhancing the Security of Deep Learning Steganography via Adversarial Examples. Mathematics 2020, 8, 1446.

- Din, S.U.; Akhtar, N.; Younis, S.; Shafait, F.; Mansoor, A.; Shafique, M. Steganographic Universal Adversarial Perturbations. Pattern Recognit. Lett. 2020, 135, 146–152.

- Li, L.; Li, X.; Hu, X.; Zhang, Y. Image Steganography and Style Transformation Based on Generative Adversarial Network. Mathematics 2024, 12, 615.

- Hsiao, T.-C.; Liu, D.-X.; Chen, T.-L.; Chen, C.-C. Research on Image Steganography Based on Sudoku Matrix. Symmetry 2021, 13, 387.

- Weng, C.-Y.; Weng, H.-Y.; Huang, C.-T. Expansion High Payload Imperceptible Steganography Using Parameterized Multilayer EMD with Clock-Adjustment Model. EURASIP J. Image Video Process. 2024, 2024, 37.

- Gowda, S.N.; Yuan, C. StegColNet: Steganalysis Based on an Ensemble Colorspace Approach. In Proceedings of the Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR), Padua, Italy, 21–22 January 2021; pp. 319–328.

- Kombrink, M.H.; Geradts, Z.J.M.H.; Worring, M. Image Steganography Approaches and Their Detection Strategies: A Survey. ACM Comput. Surv. 2024, 57, 1–40.

- Luo, W.; Liao, X.; Cai, S.; Hu, K. A Comprehensive Survey of Digital Image Steganography and Steganalysis. APSIPA Trans. Signal Inf. Process. 2024, 13, e30.

- Song, B.; Hu, K.; Zhang, Z. A Survey on Deep-Learning-Based Image Steganography. Expert Syst. Appl. 2024, 254, 124390.

Table 1. Parameters used for experiments in IBM ART (v1.17).

| Attack Method | Epsilon (ε) / Parameter | Iteration Count | Step Size (eps_step / step) | Norm Type |
|---|---|---|---|---|
| FGSM | eps = 0.3 | 1 | Same as ε (single step) | L∞ |
| BIM | eps = 0.3 | 100 | eps_step = 0.1 | L∞ |
| PGD | eps = 0.3 | 100 | eps_step = 0.1 | L∞ |
| C&W (L2) | confidence = 0.0, initial_const = 0.01 | 10 | learning_rate = 0.01 | L2 |
| EAD | confidence = 0.0, beta = 0.01 | 10 | learning_rate = 0.01 | Elastic-net (L1 + L2) |
| DeepFool | epsilon = 1 × 10⁻⁶ | 100 | Internal step | L2 |
| JSMA | theta = 1.0 | 100 | Pixel change = θ | L0 |
| NewtonFool | eta = 0.1 | 100 | Internal optimization step | L2 |
| Wasserstein Attack | transport budget = 0.1 | 200 | learning_rate = 0.01 | Wasserstein |
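To illustrate how ε, the step size, and the iteration count interact for the three L∞ gradient attacks (FGSM, BIM, PGD), here is a from-scratch NumPy sketch on a toy logistic-regression "steganalyzer". It is not ART's implementation (ART provides these attacks as `FastGradientMethod`, `BasicIterativeMethod`, and `ProjectedGradientDescent` in `art.attacks.evasion`); the model, data, and function names are hypothetical, and pixel values are assumed to be scaled to [0, 1].

```python
import numpy as np


def sigmoid(z):
    """Logistic function; the toy model's output is P(stego | x)."""
    return 1.0 / (1.0 + np.exp(-z))


def input_gradient(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. each input pixel for a logistic model."""
    p = sigmoid(x @ w + b)                 # shape (batch,)
    return (p - y)[:, None] * w[None, :]   # shape (batch, pixels)


def fgsm(x, y, w, b, eps):
    """FGSM: one signed-gradient step of size eps, clipped to the [0, 1] pixel range."""
    return np.clip(x + eps * np.sign(input_gradient(x, y, w, b)), 0.0, 1.0)


def iterative_linf(x, y, w, b, eps, eps_step, max_iter, random_init=False, seed=0):
    """BIM (random_init=False) or PGD (random_init=True) under an L-inf budget eps."""
    x_adv = x.copy()
    if random_init:  # PGD starts from a random point inside the eps-ball
        rng = np.random.default_rng(seed)
        x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    for _ in range(max_iter):
        x_adv = x_adv + eps_step * np.sign(input_gradient(x_adv, y, w, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)  # project back onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep valid pixel values
    return x_adv


# Toy usage with the Table 1 settings (eps = 0.3, eps_step = 0.1, 100 iterations).
rng = np.random.default_rng(42)
w, b = rng.normal(size=64), 0.0             # hypothetical 64-pixel linear "steganalyzer"
x = rng.uniform(0.0, 1.0, size=(8, 64))     # 8 toy stego inputs
y = np.ones(8)                              # true label 1 = "stego"
attacks = {
    "FGSM": fgsm(x, y, w, b, eps=0.3),
    "BIM": iterative_linf(x, y, w, b, eps=0.3, eps_step=0.1, max_iter=100),
    "PGD": iterative_linf(x, y, w, b, eps=0.3, eps_step=0.1, max_iter=100, random_init=True),
}
print("clean mean P(stego):", sigmoid(x @ w + b).mean())
for name, x_adv in attacks.items():
    print(name, "max |delta| =", np.abs(x_adv - x).max(), "mean P(stego) =", sigmoid(x_adv @ w + b).mean())
```

On a linear model the sign of the input gradient never changes, so BIM and PGD end on the same corner of the ε-ball that FGSM reaches in a single step. The methods diverge only on nonlinear models such as CNNs, where re-evaluating the gradient at every iterate changes the search direction.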

| Actual \ Predicted | Stego | Non-Stego |
|---|---|---|
| Stego | True Positive (TP) | False Negative (FN) |
| Non-Stego | False Positive (FP) | True Negative (TN) |
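With stego as the positive class, CA and MDR follow directly from the four cells of the confusion matrix. The sketch below assumes the standard definitions CA = (TP + TN)/(TP + TN + FP + FN) and MDR = FN/(TP + FN), which this excerpt does not spell out; the function name and the counts in the example are hypothetical.

```python
def classification_metrics(tp: int, fn: int, fp: int, tn: int) -> dict[str, float]:
    """CA and MDR from a confusion matrix in which "stego" is the positive class."""
    total = tp + fn + fp + tn
    return {
        "CA": (tp + tn) / total,   # share of all images classified correctly
        "MDR": fn / (tp + fn),     # share of stego images classified as non-stego
    }


# Hypothetical counts for a balanced test set of 1000 stego and 1000 cover images.
print(classification_metrics(tp=900, fn=100, fp=50, tn=950))  # {'CA': 0.925, 'MDR': 0.1}
```

Because the test sets in Step 4 are balanced between cover and adversarial stego images, an attack that makes every stego image pass as cover drives MDR to 100% while CA stays near 50%, which is the pattern reported for SRNet under FGSM (CA 49.9%, MDR 100%).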

(Figure: clean image and example adversarial images generated by each attack method [26,27,28,29,30,31,32,33,34] against XuNet, YeNet, and SRNet.)

| Attack Method | XuNet CA (%) | YeNet CA (%) | SRNet CA (%) | XuNet p-Value, Holm-adj. (vs. BIM) | YeNet p-Value, Holm-adj. (vs. PGD) | SRNet p-Value, Holm-adj. (vs. FGSM) |
|---|---|---|---|---|---|---|
| None | 98.2 | 99.1 | 99.5 | - | - | - |
| FGSM [26] | 72.5 | 98.4 | 49.9 | 3.4 × 10⁻⁶ (***) | 6.06 × 10⁻⁶ (***) | - |
| BIM [27] | 54.0 | 48.7 | 49.9 | - | 1 | 1 |
| PGD [28] | 54.3 | 48.7 | 49.9 | 1 | - | 1 |
| C&W [29] | 80.1 | 95.4 | 76.8 | 3.3 × 10⁻⁶ (***) | 6.32 × 10⁻⁶ (***) | 1.93 × 10⁻⁷ (***) |
| EAD [30] | 93.6 | 53.9 | 53.0 | 2.2 × 10⁻⁶ (***) | 2.5 × 10⁻⁴ (***) | 1.08 × 10⁻³ (**) |
| DeepFool [31] | 95.5 | 98.3 | 92.7 | 2.2 × 10⁻⁶ (***) | 6.64 × 10⁻⁶ (***) | 9.82 × 10⁻⁷ (***) |
| JSMA [32] | 86.6 | 87.3 | 73.4 | 4.4 × 10⁻⁶ (***) | 2.44 × 10⁻⁵ (***) | 8.13 × 10⁻⁶ (***) |
| NewtonFool [33] | 95.5 | 98.3 | 92.9 | 2.5 × 10⁻⁶ (***) | 7.12 × 10⁻⁶ (***) | 9.82 × 10⁻⁷ (***) |
| Wasserstein [34] | 93.7 | 64.2 | 53.0 | 6.8 × 10⁻⁶ (***) | 2.5 × 10⁻⁴ (***) | 1.08 × 10⁻³ (**) |
| Average | 80.6 | 77.0 | 65.7 | - | - | - |
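The p-value columns report Holm-adjusted values. The underlying pairwise test is not specified in this excerpt, so the sketch below shows only the Holm–Bonferroni step-down adjustment itself; `holm_adjust` is a hypothetical helper, and `statsmodels.stats.multitest.multipletests(..., method="holm")` provides the same correction in an existing library.

```python
def holm_adjust(p_values: list[float]) -> list[float]:
    """Holm-Bonferroni step-down adjusted p-values, returned in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])   # indices by ascending raw p
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):                    # rank = 0 for the smallest p
        scaled = min(1.0, (m - rank) * p_values[idx])     # multiply by #hypotheses remaining
        running_max = max(running_max, scaled)            # adjusted p-values never decrease
        adjusted[idx] = running_max
    return adjusted


print(holm_adjust([0.01, 0.04, 0.03]))  # approximately [0.03, 0.06, 0.06]
```

The running maximum enforces monotonicity: a hypothesis with a larger raw p-value can never receive a smaller adjusted p-value than one ranked before it, and values are capped at 1, which is why several entries in the tables read exactly 1.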

| Attack Method | XuNet MDR (%) | YeNet MDR (%) | SRNet MDR (%) | XuNet p-Value, Holm-adj. (vs. BIM) | YeNet p-Value, Holm-adj. (vs. PGD) | SRNet p-Value, Holm-adj. (vs. FGSM) |
|---|---|---|---|---|---|---|
| None | 3.4 | 1.2 | 0.2 | - | - | - |
| FGSM [26] | 54.6 | 2.6 | 100 | 3.41 × 10⁻⁶ (***) | 8.36 × 10⁻⁷ (***) | - |
| BIM [27] | 91.6 | 99.4 | 100 | - | 7.27 × 10⁻¹ | - |
| PGD [28] | 91.0 | 99.4 | 100 | 1.21 × 10⁻¹ | - | - |
| C&W [29] | 39.4 | 6.0 | 46.2 | 2.64 × 10⁻⁸ (***) | 2.69 × 10⁻⁶ (***) | 4.83 × 10⁻⁶ (***) |
| EAD [30] | 12.4 | 89.0 | 93.8 | 7.55 × 10⁻⁷ (***) | 6.65 × 10⁻⁵ (***) | 2.41 × 10⁻³ (**) |
| DeepFool [31] | 8.6 | 2.8 | 14.4 | 7.87 × 10⁻⁷ (***) | 5.22 × 10⁻⁶ (***) | 2.03 × 10⁻⁶ (***) |
| JSMA [32] | 26.8 | 22.2 | 53.0 | 1.43 × 10⁻⁸ (***) | 1.10 × 10⁻⁵ (***) | 4.68 × 10⁻⁶ (***) |
| NewtonFool [33] | 8.6 | 2.8 | 14.0 | 7.54 × 10⁻⁷ (***) | 4.93 × 10⁻⁶ (***) | 2.18 × 10⁻⁶ (***) |
| Wasserstein [34] | 12.2 | 68.4 | 93.8 | 7.87 × 10⁻⁷ (***) | 5.3 × 10⁻⁵ (***) | 1.45 × 10⁻³ (**) |

| Attack Method | XuNet (30 dB) | XuNet (40 dB) | YeNet (30 dB) | YeNet (40 dB) | SRNet (30 dB) | SRNet (40 dB) |
|---|---|---|---|---|---|---|
| FGSM [26] | 0.2184 | 0 | 0 | 0 | 0.2000 | 0 |
| BIM [27] | 0 | 0 | 0.3976 | 0 | 0 | 0 |
| PGD [28] | 0 | 0 | 0 | 0 | 0 | 0 |
| C&W [29] | 0.3152 | 0.2679 | 0.0120 | 0 | 0.2772 | 0.1709 |
| EAD [30] | 0.0992 | 0 | 0.1780 | 0.0356 | 0.1876 | 0 |
| DeepFool [31] | 0.0516 | 0 | 0.0224 | 0 | 0.1152 | 0 |
| JSMA [32] | 0.2144 | 0.2144 | 0.0888 | 0 | 0.2120 | 0 |
| NewtonFool [33] | 0.0344 | 0.0249 | 0.0168 | 0.0095 | 0 | 0 |
| Wasserstein [34] | 0 | 0 | 0.2736 | 0 | 0.1876 | 0 |

| Attack Method | XuNet (30 dB) | XuNet (40 dB) | YeNet (30 dB) | YeNet (40 dB) | SRNet (30 dB) | SRNet (40 dB) |
|---|---|---|---|---|---|---|
| FGSM [26] | 0.78 | 0 | 0 | 0 | 0.20 | 0 |
| BIM [27] | 0 | 0 | 0.40 | 0 | 0 | 0 |
| PGD [28] | 0 | 0 | 0 | 0 | 0 | 0 |
| C&W [29] | 0.80 | 0.68 | 0.20 | 0 | 0.60 | 0.37 |
| EAD [30] | 0.80 | 0.7 | 0.20 | 0.04 | 0.20 | 0 |
| DeepFool [31] | 0.60 | 0 | 0.80 | 0 | 0.80 | 0 |
| JSMA [32] | 0.80 | 0.8 | 0.40 | 0 | 0.40 | 0 |
| NewtonFool [33] | 0.40 | 0.29 | 0.60 | 0.34 | 0 | 0 |
| Wasserstein [34] | 0 | 0 | 0.10 | 0 | 0.20 | 0 |

| Attack Method | XuNet | YeNet | SRNet |
|---|---|---|---|
| FGSM [26] | 0.002 s | 0.017 s | 0.027 s |
| BIM [27] | 0.108 s | 0.924 s | 0.960 s |
| PGD [28] | 0.058 s | 0.925 s | 1.010 s |
| C&W [29] | 5.168 s | 8.647 s | 7.164 s |
| EAD [30] | 10.35 s | 4.507 s | 54.951 s |
| DeepFool [31] | 0.776 s | 0.370 s | 4.005 s |
| JSMA [32] | 5.376 s | 11.432 s | 24.170 s |
| NewtonFool [33] | 0.590 s | 2.544 s | 2.462 s |
| Wasserstein [34] | 1.212 s | 1.276 s | 3.051 s |
| Average | 2.627 s | 3.405 s | 10.867 s |
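Assuming AEGT is the mean wall-clock time needed to generate one adversarial example (this excerpt does not state the hardware or batch size used), it could be measured with a helper like the hypothetical `mean_generation_time` below. The final lines check that the Average row is the arithmetic mean of the nine per-method times, using the XuNet column.

```python
import time
from statistics import mean
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def mean_generation_time(attack: Callable[[T], object], inputs: Sequence[T]) -> float:
    """Hypothetical AEGT helper: mean wall-clock seconds per adversarial example."""
    durations = []
    for x in inputs:
        start = time.perf_counter()
        attack(x)  # generate one adversarial example
        durations.append(time.perf_counter() - start)
    return mean(durations)


# The Average row is the arithmetic mean of the nine per-method times (XuNet column).
xunet_times = [0.002, 0.108, 0.058, 5.168, 10.35, 0.776, 5.376, 0.590, 1.212]
print(round(mean(xunet_times), 3))  # 2.627
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short intervals, unlike `time.time`, which can jump when the system clock is adjusted.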

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.; Park, H.; Cho, Y. Performance Comparison of Adversarial Example Attacks Against CNN-Based Image Steganalysis Models. Electronics 2025, 14, 4422. https://doi.org/10.3390/electronics14224422

Kim H, Park H, Cho Y. Performance Comparison of Adversarial Example Attacks Against CNN-Based Image Steganalysis Models. Electronics. 2025; 14(22):4422. https://doi.org/10.3390/electronics14224422

Chicago/Turabian Style: Kim, Hyeonseong, Hweerang Park, and Youngho Cho. 2025. "Performance Comparison of Adversarial Example Attacks Against CNN-Based Image Steganalysis Models" Electronics 14, no. 22: 4422. https://doi.org/10.3390/electronics14224422

APA Style: Kim, H., Park, H., & Cho, Y. (2025). Performance Comparison of Adversarial Example Attacks Against CNN-Based Image Steganalysis Models. Electronics, 14(22), 4422. https://doi.org/10.3390/electronics14224422