An Analysis of Partitioned Convolutional Model for Vehicle Re-Identification

Abstract

1. Introduction

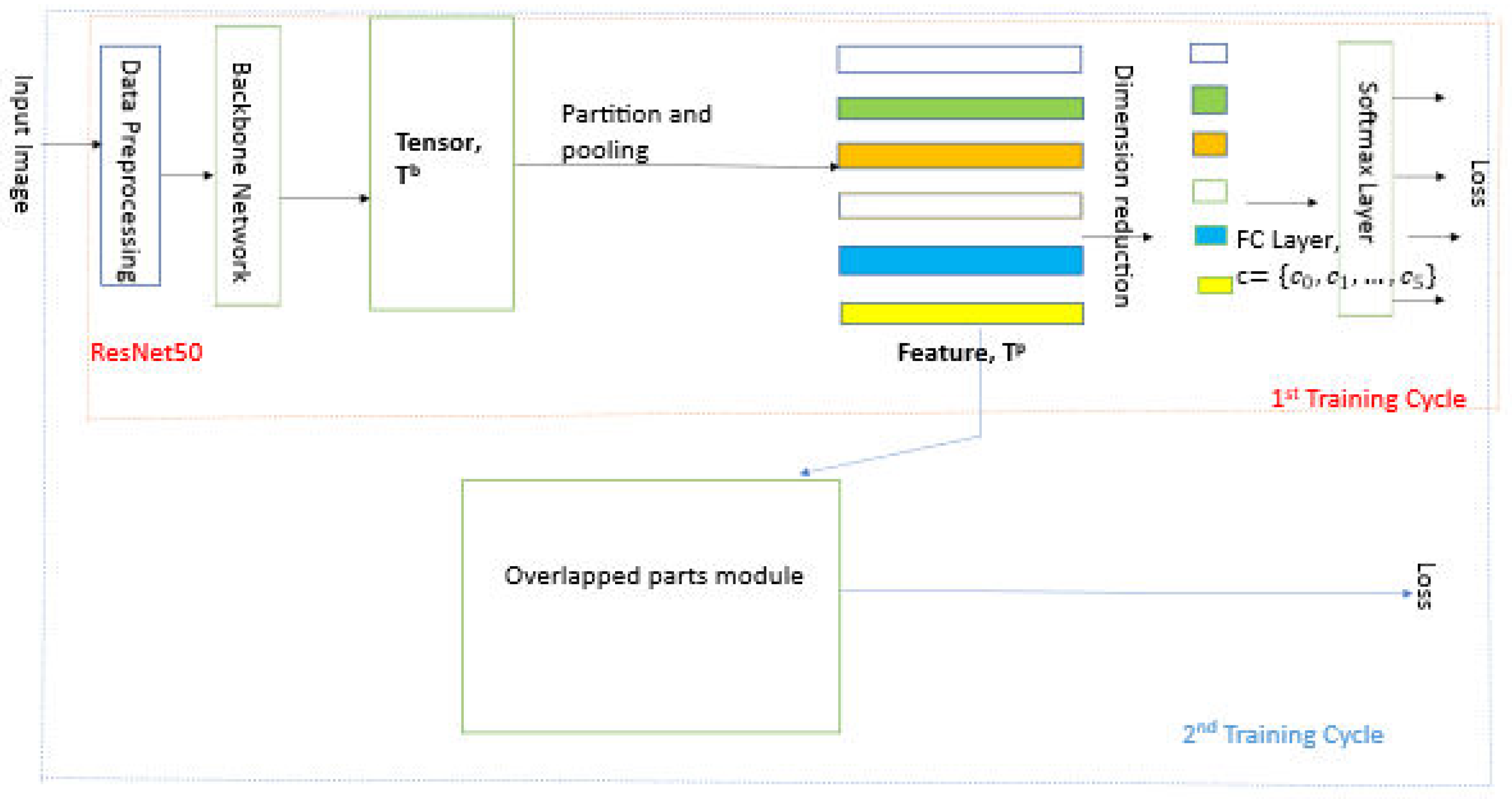

- Our work is an exhaustive extension of Sun et al., who use PCB with refined part pooling (PCB-RPP) on person re-identification (PReid) datasets [4]. To the best of our knowledge, the application of this method to vehicle re-identification (VReid) has not been reported until now. Our analysis revealed that RPP behaves differently when applied to the vehicle re-identification problem: it improves performance on the VeRi dataset but degrades performance on the VehicleId dataset. The results of Sun et al. on three PReid datasets, however, showed consistent performance across all of them. Our investigation traced this to RPP failing to resolve within-part inconsistencies across neighboring parts for images in the VehicleId dataset. This motivated further investigation into improving the PCB-RPP architecture.

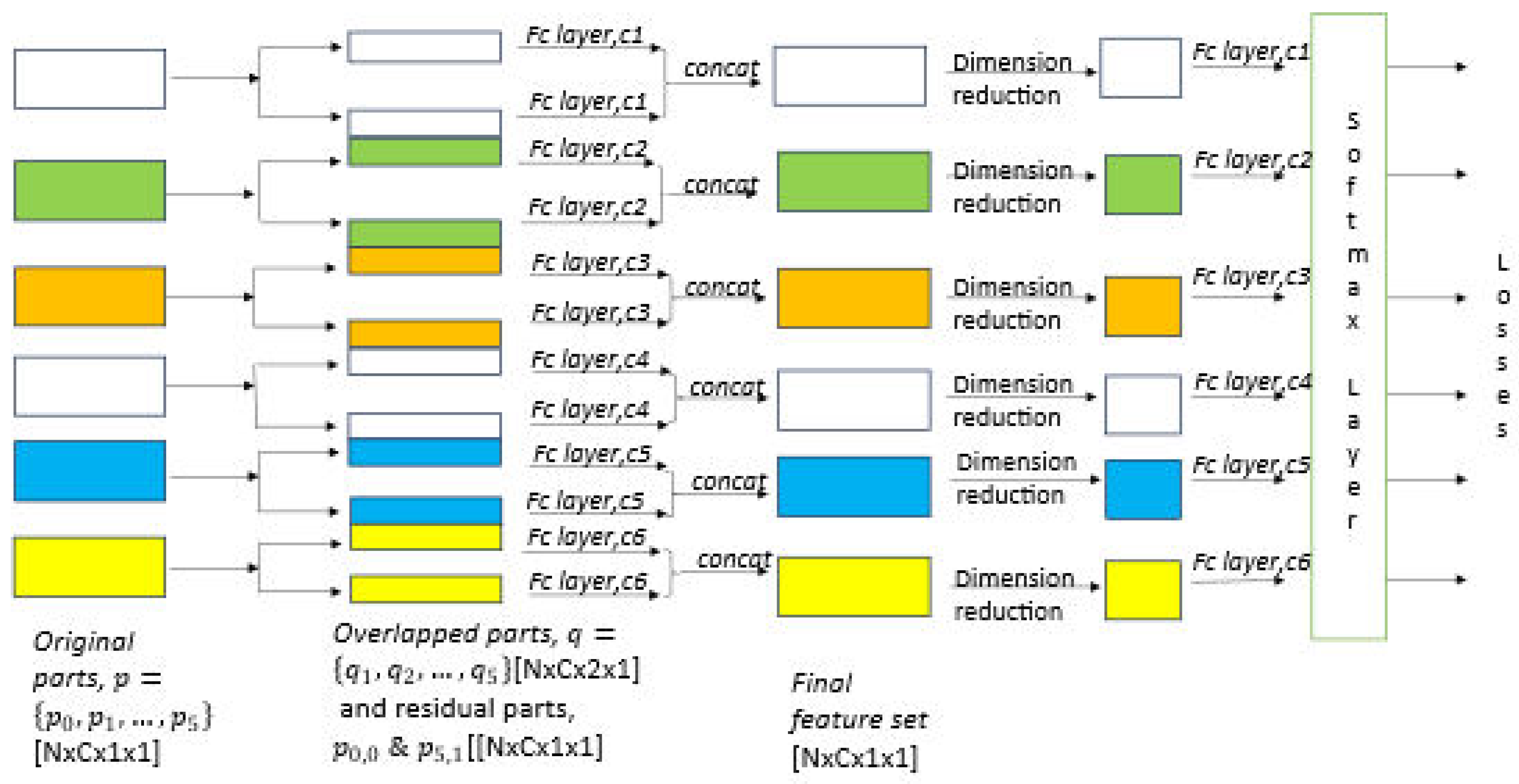

- We propose a novel Overlapped Part-based Convolutional Baseline (OPCB) method that addresses within-part inconsistencies. OPCB overlaps portions of two adjacent parts to generate new parts. The new parts are then trained using the classifiers corresponding to the original parts, and the results are concatenated to generate the feature set. This method works consistently well across both the VeRi and VehicleId datasets and even outperforms the refined part pooling approach.

- CNN-based models reported in the literature are usually tested over multiple architectures to identify and compare any performance variations. Our work therefore investigates three residual networks as backbones, viz. ResNet50, ResNet101, and ResNeXt50. They differ mainly in depth and cardinality: ResNet101 has more layers than ResNet50, while ResNeXt50 has a higher cardinality. It was found that increasing the number of layers or the cardinality does not necessarily increase accuracy on the vehicle re-identification problem. Ensembling different architectures or models is commonly reported in the CNN literature; however, there are very few such references in the VReid application domain. In this work, we present a model ensembled over the three architectures, which significantly improves re-identification results compared to the state of the art.

- The widely used re-ranking scheme based on k-reciprocal nearest neighbors has been improved and incorporated in our work. This process uses a mix of Euclidean and Jaccard distances. We have added an algorithm that uses a score to obtain the best mix. This can be used as a generalized tool to fine-tune re-ranking for different datasets. Our results demonstrate that this significantly improves re-identification accuracy.
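As a rough illustration of the distance mix mentioned in the last point, the sketch below blends a Euclidean and a Jaccard distance matrix with a single weight. The convention that a weight of 1 keeps only the Euclidean distance follows the k-reciprocal re-ranking formulation of Zhong et al.; the function name `mix_distances` and the toy matrices are illustrative assumptions, not the authors' code.

```python
def mix_distances(euclidean, jaccard, mix):
    """Blend two distance matrices (lists of rows) element-wise.

    mix = 1.0 keeps only the Euclidean distance, mix = 0.0 only the Jaccard one.
    """
    if not 0.0 <= mix <= 1.0:
        raise ValueError("mix must lie in [0, 1]")
    return [
        [mix * e + (1.0 - mix) * j for e, j in zip(e_row, j_row)]
        for e_row, j_row in zip(euclidean, jaccard)
    ]


# Toy example: 2 queries x 3 gallery images (made-up numbers).
euclidean = [[0.2, 0.9, 0.5], [0.7, 0.1, 0.4]]
jaccard = [[0.1, 1.0, 0.8], [0.9, 0.3, 0.2]]

final = mix_distances(euclidean, jaccard, mix=0.3)

# Gallery indices sorted by increasing final distance, one list per query.
ranking = [sorted(range(len(row)), key=row.__getitem__) for row in final]
print(ranking)
```

Smaller distances rank first, so changing `mix` can reorder the gallery whenever the two distances disagree.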

2. Related Work and Motivation

2.1. Vehicle Re-Id Datasets

- VeRi Dataset: The dataset consists of 49,357 images of 776 vehicles captured by 20 cameras distributed across an area of 1 square kilometer. The images are sourced from unconstrained real-world surveillance footage, and each vehicle is photographed by multiple cameras. The images vary in viewpoint, resolution, lighting conditions, and occlusion. The training set contains 575 unique vehicle identities, while the remainder are found in the test set. The test set includes both a query set and a gallery set. In the query set, there is only one image per vehicle identity, which is used to locate the corresponding identity in the gallery set.

- VehicleId Dataset: The collection consists of 221,763 images of 26,267 vehicles. Each vehicle is depicted from two distinct angles: front and back. The training set includes 110,178 images representing 13,134 different vehicle identities. The test set is divided into three parts of 800, 1,600, and 2,400 vehicles, containing 7,332, 12,995, and 20,038 images, respectively. For each of the three parts, the query set consists of a single image per identity, while the remaining images form the gallery set, in which matches need to be identified.

2.2. Vehicle Re-Id Methods

3. Methodology

3.1. Data Preprocessing

- First, the images are resized to a fixed size (384,192). This size is chosen with the pre-trained backbone model and the input it expects in mind.

- Random Horizontal Flip: This is an image augmentation technique in which an input image is flipped along the horizontal axis with a specified probability.

- Random Erasing: This method randomly selects a region of an image and erases its pixels, which helps the trained model become more robust to occlusion and missing detail.

- Finally, the images are transformed into tensors and standardized to the same mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225) that are applied to typical ImageNet images. This standardization is essential for attaining the best outcomes when utilizing backbone architectures that have been pre-trained on ImageNet.
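The preprocessing steps above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' pipeline: it assumes (384,192) means height by width, uses nearest-neighbour resizing for simplicity, and the erase probability and maximum erased fraction are hypothetical values. Deep learning libraries ship equivalent, more complete transforms.

```python
import numpy as np

IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(img, size=(384, 192)):
    """Nearest-neighbour resize of an H x W x C array to (height, width)."""
    h, w = img.shape[:2]
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return img[rows][:, cols]


def random_horizontal_flip(img, rng, p=0.5):
    """Mirror the image left-right with probability p."""
    return img[:, ::-1] if rng.random() < p else img


def random_erase(img, rng, p=0.5, max_frac=0.3):
    """With probability p, zero out one random rectangle (assumed size limits)."""
    if rng.random() >= p:
        return img
    h, w = img.shape[:2]
    eh = int(rng.integers(1, max(2, int(h * max_frac))))
    ew = int(rng.integers(1, max(2, int(w * max_frac))))
    top = int(rng.integers(0, h - eh + 1))
    left = int(rng.integers(0, w - ew + 1))
    out = img.copy()
    out[top:top + eh, left:left + ew] = 0
    return out


def preprocess(img_uint8, rng, train=True):
    """Resize, augment (training only), scale to [0, 1], normalise, return C x H x W."""
    img = resize_nearest(img_uint8)
    if train:
        img = random_horizontal_flip(img, rng)
        img = random_erase(img, rng)
    x = img.astype(np.float32) / 255.0
    x = (x - IMAGENET_MEAN) / IMAGENET_STD
    return np.transpose(x, (2, 0, 1))


rng = np.random.default_rng(0)
dummy = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)  # stand-in image
tensor = preprocess(dummy, rng)
print(tensor.shape)  # (3, 384, 192)
```

The augmentations are applied only when `train=True`, so query and gallery images at evaluation time go through resizing and normalisation alone.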

3.2. Backbone Model

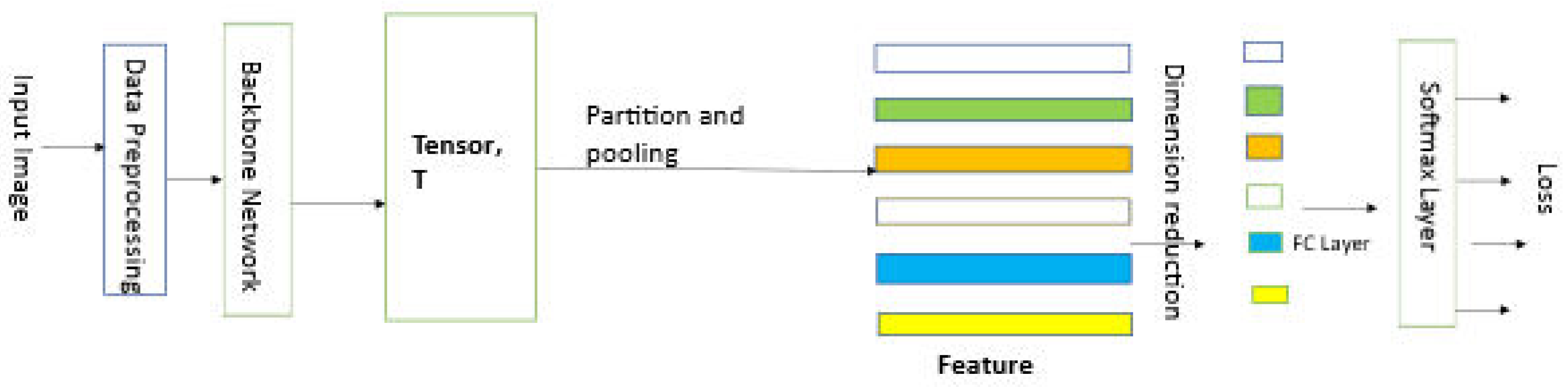

3.3. Part-Based Convolutional Baseline (PCB) Model

3.4. Refined Part Pooling

3.5. Proposed Overlapped Part-Based Convolutional Baseline (OPCB)

- Step 1: Train the PCB model.

- Step 2: Generate overlapped and residual parts.

- Step 3: Train overlapped and residual parts through original classifiers.

- Step 4: Concatenate the output pairwise to generate final features.
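One possible reading of Step 2 is sketched below, purely as an illustration. It assumes horizontal stripes over a feature map with 24 rows split into 6 parts (a common PCB configuration, not stated in this text) and an overlap of half a stripe on each side of a boundary. The function names are hypothetical, and the residual parts are not modelled because the text above does not define them.

```python
def stripe_bounds(height, num_parts):
    """Row ranges (start, stop) of equal horizontal stripes, PCB-style."""
    if height % num_parts:
        raise ValueError("height must be divisible by num_parts in this sketch")
    step = height // num_parts
    return [(i * step, (i + 1) * step) for i in range(num_parts)]


def overlapped_bounds(height, num_parts):
    """Row ranges straddling each boundary between two adjacent stripes.

    Assumption: each overlapped part takes the lower half of one stripe and
    the upper half of the next; the overlap ratio is not given in the text.
    """
    half = (height // num_parts) // 2
    original = stripe_bounds(height, num_parts)
    return [(start + half, stop + half) for start, stop in original[:-1]]


def average_pool(feature_map, start, stop):
    """Average rows start..stop-1 of a feature map given as rows of channel vectors."""
    rows = feature_map[start:stop]
    return [sum(col) / len(rows) for col in zip(*rows)]


# Toy feature map: 24 rows, 2 channels, already averaged over the width.
feature_map = [[float(r), 2.0 * r] for r in range(24)]

original = stripe_bounds(24, 6)
overlapped = overlapped_bounds(24, 6)
pooled = [average_pool(feature_map, s, e) for s, e in original + overlapped]

print(original)
print(overlapped)
print(len(pooled))
```

Under these assumptions, six original parts yield five overlapped parts, each covering the boundary region that a hard partition would split.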

3.6. Generalized Training and Evaluation Procedure

3.7. Re-Ranking Using k-Reciprocal Nearest Neighbours

3.8. Proposed Algorithm for Best Distance Mix Calculation

Algorithm 1. Algorithm for distance mix calculation for a dataset.

4. Experimental Results and Analysis

4.1. Distance Calculation for Re-Ranking

4.2. Impact of Backbone and Ensembled Model

4.3. Performance of OPCB

- OPCB consistently improves on PCB across the VeRi and VehicleId datasets. This is in contrast with RPP, which improves on PCB only for the VeRi dataset and fails to do so for the VehicleId dataset.

- OPCB performs on par with RPP on the VeRi dataset and improves substantially over both RPP and PCB on the VehicleId dataset.

4.4. Comparison with State of the Art

- In the case of the VeRi dataset, our proposed ensembled part model with horizontal partitioning and refined part pooling achieves the highest mAP among all compared methods. Its R-1 and R-5 performance is as good as that of most reported works. In terms of mAP, the proposed OPCB and ensembled models also outperform transformer-based models such as Swin Transformer and TransReid.

- In the case of the VehicleId dataset, most works have not reported their mean average precision, and hence these values could not be compared. In terms of Rank-1 and Rank-5 accuracy, however, our ensembled model performs on par with most of the reported results and outperforms transformer-based models such as TransReid.
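For readers less familiar with the metrics compared above, the following sketch shows how Rank-k accuracy and mean average precision are commonly computed from ranked gallery lists. It is a simplified illustration with made-up identities; real re-identification protocols usually add dataset-specific filtering (for example, of same-camera gallery images), which is omitted here.

```python
def rank_k_hit(ranked_ids, query_id, k):
    """1 if any of the top-k gallery identities matches the query, else 0."""
    return int(query_id in ranked_ids[:k])


def average_precision(ranked_ids, query_id):
    """Average precision of one ranked gallery list for a single query."""
    hits, precisions = 0, []
    for position, gallery_id in enumerate(ranked_ids, start=1):
        if gallery_id == query_id:
            hits += 1
            precisions.append(hits / position)
    return sum(precisions) / hits if hits else 0.0


def evaluate(rankings, ks=(1, 5)):
    """Mean AP and Rank-k accuracy (both in %) over (query_id, ranked_ids) pairs."""
    n = len(rankings)
    mean_ap = 100.0 * sum(average_precision(r, q) for q, r in rankings) / n
    rank_acc = {
        k: 100.0 * sum(rank_k_hit(r, q, k) for q, r in rankings) / n for k in ks
    }
    return mean_ap, rank_acc


rankings = [
    ("car_a", ["car_a", "car_b", "car_a", "car_c"]),  # AP = (1/1 + 2/3) / 2
    ("car_b", ["car_c", "car_b", "car_a", "car_b"]),  # AP = (1/2 + 2/4) / 2
]
mean_ap, rank_acc = evaluate(rankings)
print(round(mean_ap, 2), rank_acc)
```

Rank-k only asks whether a correct match appears in the top k, whereas mAP rewards placing all correct matches early, which is why a method can lead on one metric and trail on the other.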

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Amiri, A.; Kaya, A.; Keceli, A.S. A Comprehensive Survey on Deep-Learning-based Vehicle Re-Identification: Models, Data Sets and Challenges. arXiv 2024, arXiv:2401.10643.

- Wang, H.; Hou, J.; Chen, N. A Survey of Vehicle Re-Identification Based on Deep Learning. IEEE Access 2019, 7, 172443–172469.

- Wang, G.; Yuan, Y.; Chen, X.; Li, J.; Zhou, X. Learning Discriminative Features with Multiple Granularities for Person Re-Identification. arXiv 2018, arXiv:1804.01438.

- Sun, Y.; Zheng, L.; Li, Y.; Yang, Y.; Tian, Q.; Wang, S. Learning Part-based Convolutional Features for Person Re-Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 902–917.

- Liu, X.; Liu, W.; Ma, H.; Fu, H. Large-scale vehicle re-identification in urban surveillance videos. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016.

- Liu, H.; Tian, Y.; Wang, Y.; Pang, L.; Huang, T. Deep Relative Distance Learning: Tell the Difference between Similar Vehicles. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2167–2175.

- Kanacı, A.; Zhu, X.; Gong, S. Vehicle Re-identification in Context. In Pattern Recognition; Brox, T., Bruhn, A., Fritz, M., Eds.; Springer: Cham, Switzerland, 2019; pp. 377–390.

- Lou, Y.; Bai, Y.; Liu, J.; Wang, S.; Duan, L. VERI-Wild: A Large Dataset and a New Method for Vehicle Re-Identification in the Wild. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019; pp. 3230–3238.

- Tang, Z.; Naphade, M.; Liu, M.; Yang, X.; Birchfield, S.; Wang, S.; Kumar, R.; Anastasiu, D.; Hwang, J. CityFlow: A City-Scale Benchmark for Multi-Target Multi-Camera Vehicle Tracking and Re-Identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 15–19 June 2019; pp. 8789–8798.

- Yan, K.; Tian, Y.; Wang, Y.; Zeng, W.; Huang, T. Exploiting Multi-grain Ranking Constraints for Precisely Searching Visually-similar Vehicles. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 562–570.

- Zhou, Y.; Liu, L.; Shao, L. Vehicle Re-Identification by Deep Hidden Multi-View Inference. IEEE Trans. Image Process. 2018, 27, 3275–3287.

- He, B.; Li, J.; Zhao, Y.; Tian, Y. Part-Regularized Near-Duplicate Vehicle Re-Identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019; pp. 3992–4000.

- Wang, H.; Peng, J.; Chen, D.; Jiang, G.; Zhao, T.; Fu, X. Attribute-Guided Feature Learning Network for Vehicle Reidentification. IEEE MultiMedia 2020, 27, 112–121.

- Zhao, Y.; Shen, C.; Wang, H.; Chen, S. Structural Analysis of Attributes for Vehicle Re-Identification and Retrieval. IEEE Trans. Intell. Transp. Syst. 2020, 21, 723–734.

- Wang, Z.; Tang, L.; Liu, X.; Yao, Z.; Yi, S.; Shao, J.; Yan, J.; Wang, S.; Li, H.; Wang, X. Orientation Invariant Feature Embedding and Spatial Temporal Regularization for Vehicle Re-identification. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 379–387.

- Zhu, J.; Du, Y.; Hu, Y.; Zheng, L.; Cai, C. VRSDNet: Vehicle re-identification with a shortly and densely connected convolutional neural network. Multimed. Tools Appl. 2019, 78, 29043–29057.

- Zhu, J.; Zeng, H.; Lei, Z.; Liao, S.; Zheng, L.; Cai, C. A Shortly and Densely Connected Convolutional Neural Network for Vehicle Re-identification. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3285–3290.

- Zhu, J.; Huang, J.; Zeng, H.; Ye, X.; Li, B.; Lei, Z.; Zheng, L. Object Reidentification via Joint Quadruple Decorrelation Directional Deep Networks in Smart Transportation. IEEE Internet Things J. 2020, 7, 2944–2954.

- Wang, H.; Peng, J.; Jiang, G.; Xu, F.; Fu, X. Discriminative feature and dictionary learning with part-aware model for vehicle re-identification. Neurocomputing 2021, 438, 55–62.

- Liu, X.; Liu, W.; Mei, T.; Ma, H. PROVID: Progressive and Multimodal Vehicle Reidentification for Large-Scale Urban Surveillance. IEEE Trans. Multimed. 2018, 20, 645–658.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

- Liu, S.; Deng, W. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 730–734.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 11–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, H.; Lagadec, B.; Bremond, F. Partition and Reunion: A Two-Branch Neural Network for Vehicle Re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Qian, J.; Jiang, W.; Luo, H.; Yu, H. Stripe-based and attribute-aware network: A two-branch deep model for vehicle re-identification. Meas. Sci. Technol. 2020, 31, 095401.

- Yan, L.; Li, K.; Gao, R.; Wang, C.; Xiong, N. An Intelligent Weighted Object Detector for Feature Extraction to Enrich Global Image Information. Appl. Sci. 2022, 12, 7825.

- Liu, X.; Zhang, S.; Huang, Q.; Gao, W. RAM: A Region-Aware Deep Model for Vehicle Re-Identification. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), Los Alamitos, CA, USA, 23–27 July 2018; pp. 1–6.

- Peng, J.; Wang, H.; Zhao, T.; Fu, X. Learning multi-region features for vehicle re-identification with context-based ranking method. Neurocomputing 2019, 359, 427–437.

- Zhou, Y.; Li, J.; Chen, H.; Wu, Y.; Wu, J.; Chen, L. A spatiotemporal attention mechanism-based model for multi-step citywide passenger demand prediction. Inf. Sci. 2020, 513, 372–385.

- Kim, H.G.; Na, Y.; Joe, H.W.; Moon, Y.H.; Cho, Y.J. Vehicle Re-identification with Spatio-temporal Information. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 11–14 October 2023; pp. 1825–1827.

- Chen, Y.; Ma, B.; Chang, H. Part alignment network for vehicle re-identification. Neurocomputing 2020, 418, 114–125.

- Li, Z.; Lv, J.; Chen, Y.; Yuan, J. Person re-identification with part prediction alignment. Comput. Vis. Image Underst. 2021, 205, 103172.

- Pang, X.; Tian, X.; Nie, X.; Yin, Y.; Jiang, G. Vehicle re-identification based on grouping aggregation attention and cross-part interaction. J. Vis. Commun. Image Represent. 2023, 97, 103937.

- Bäckström, T.; Räsänen, O.; Zewoudie, A.; Zarazaga, P.P.; Koivusalo, L.; Das, S.; Mellado, E.G.; Mansali, M.B.; Ramos, D.; Kadiri, S.; et al. Introduction to Speech Processing, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2022.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Zhong, Z.; Zheng, L.; Cao, D.; Li, S. Re-ranking Person Re-identification with k-Reciprocal Encoding. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3652–3661.

- Kumar, R.; Weill, E.; Aghdasi, F.; Sriram, P. Vehicle Re-identification: An Efficient Baseline Using Triplet Embedding. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–9.

- Xu, Y.; Jiang, N.; Zhang, L.; Zhou, Z.; Wu, W. Multi-scale Vehicle Re-identification Using Self-adapting Label Smoothing Regularization. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2117–2121.

- Lin, W.; Li, Y.; Yang, X.; Peng, P.; Xing, J. Multi-View Learning for Vehicle Re-Identification. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 832–837.

- Chu, R.; Sun, Y.; Li, Y.; Liu, Z.; Zhang, C.; Wei, Y. Vehicle Re-Identification With Viewpoint-Aware Metric Learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8281–8290.

- Zhang, X.; Zhang, R.; Cao, J.; Gong, D.; You, M.; Shen, C. Part-Guided Attention Learning for Vehicle Instance Retrieval. IEEE Trans. Intell. Transp. Syst. 2022, 23, 3048–3060.

- Li, J.; Yu, C.; Shi, J.; Zhang, C.; Ke, T. Vehicle Re-identification method based on Swin-Transformer network. Array 2022, 16, 100255.

- Qian, J.; Pan, M.; Tong, W.; Law, R.; Wu, E.Q. URRNet: A Unified Relational Reasoning Network for Vehicle Re-Identification. IEEE Trans. Veh. Technol. 2023, 72, 11156–11168.

- He, S.; Luo, H.; Wang, P.; Wang, F.; Li, H.; Jiang, W. TransReID: Transformer-Based Object Re-Identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15013–15022.

- Wu, F.; Yan, S.; Smith, J.S.; Zhang, B. Vehicle re-identification in still images: Application of semi-supervised learning and re-ranking. Signal Process. Image Commun. 2019, 76, 261–271.

- Huang, F.; Lv, X.; Zhang, L. Coarse-to-fine sparse self-attention for vehicle re-identification. Knowl.-Based Syst. 2023, 270, 110526.

- Quispe, R.; Lan, C.; Zeng, W.; Pedrini, H. AttributeNet: Attribute enhanced vehicle re-identification. Neurocomputing 2021, 465, 84–92.

- Pang, X.; Zheng, Y.; Nie, X.; Yin, Y.; Li, X. Multi-axis interactive multidimensional attention network for vehicle re-identification. Image Vis. Comput. 2024, 144, 104972.

- Taufique, A.M.N.; Savakis, A. LABNet: Local graph aggregation network with class balanced loss for vehicle re-identification. Neurocomputing 2021, 463, 122–132.

- Sun, K.; Pang, X.; Zheng, M.; Nie, X.; Li, X.; Zhou, H.; Yin, Y. Heterogeneous context interaction network for vehicle re-identification. Neural Netw. 2024, 169, 293–306.

- Li, B.; Liu, P.; Fu, L.; Li, J.; Fang, J.; Xu, Z.; Yu, H. VehicleGAN: Pair-flexible Pose Guided Image Synthesis for Vehicle Re-identification. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 447–453.

- Bai, L.; Rong, L. Vehicle re-identification with multiple discriminative features based on non-local-attention block. Sci. Rep. 2024, 14, 31386.

| Dataset | Distance mix | mAP | Rank-1 | Rank-5 | Rank-10 | Score, S |
|---|---|---|---|---|---|---|
| VeRi | 0 | 60.00 | 95.41 | 96.96 | 98.03 | 5.84 |
| | 0.1 | 80.92 | 95.65 | 97.20 | 98.45 | 0.39 |
| | 0.3 | 80.50 | 95.77 | 97.44 | 98.57 | 0.37 |
| | 0.5 | 80.00 | 95.77 | 97.56 | 98.81 | 0.41 |
| | 0.7 | 79.30 | 95.71 | 97.62 | 98.75 | 0.60 |
| | 0.9 | 77.99 | 95.95 | 97.91 | 98.87 | 0.76 |
| | 1 | 76.24 | 95.05 | 97.79 | 98.99 | 1.43 |
| VehicleId (Small) | 0 | 82.88 | 80.45 | 91.64 | 92.64 | 5.12 |
| | 0.1 | 85.16 | 82.09 | 96.71 | 98.99 | 1.29 |
| | 0.3 | 85.81 | 82.82 | 97.40 | 97.91 | 1.04 |
| | 0.5 | 86.44 | 83.54 | 97.63 | 98.95 | 0.39 |
| | 0.7 | 86.80 | 83.89 | 97.75 | 98.93 | 0.18 |
| | 0.9 | 87.11 | 84.25 | 97.73 | 98.82 | 0.05 |
| | 1 | 86.59 | 83.61 | 97.61 | 98.79 | 0.38 |
| VehicleId (Medium) | 0 | 79.24 | 76.69 | 88.40 | 90.26 | 5.64 |
| | 0.1 | 81.15 | 77.88 | 93.32 | 96.66 | 2.04 |
| | 0.3 | 81.81 | 78.55 | 94.20 | 97.20 | 1.35 |
| | 0.5 | 82.48 | 79.22 | 94.54 | 97.35 | 0.89 |
| | 0.7 | 83.22 | 80.09 | 94.54 | 97.39 | 0.48 |
| | 0.9 | 84.05 | 81.17 | 94.45 | 97.27 | 0.05 |
| | 1 | 83.84 | 80.98 | 94.20 | 97.18 | 0.24 |
| VehicleId (Large) | 0 | 77.74 | 75.20 | 87.09 | 89.81 | 4.85 |
| | 0.1 | 79.48 | 76.48 | 90.44 | 95.00 | 1.96 |
| | 0.3 | 80.01 | 76.99 | 91.23 | 95.72 | 1.33 |
| | 0.5 | 80.77 | 77.82 | 91.73 | 95.96 | 0.74 |
| | 0.7 | 81.48 | 78.64 | 92.14 | 96.09 | 0.23 |
| | 0.9 | 81.82 | 79.01 | 92.33 | 96.07 | 0.01 |
| | 1 | 81.26 | 78.32 | 92.18 | 95.95 | 0.39 |
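The Score, S column above is numerically consistent with one simple rule: for each mix value, average the gaps between the best value of each metric (over all mix values) and the value obtained at that mix, then pick the mix with the smallest score. The sketch below checks this on the VeRi rows. The rule is inferred from the tabulated numbers and is an assumption, not a restatement of Algorithm 1; the function name `mix_scores` is hypothetical.

```python
# VeRi rows from the table: mix -> (mAP, Rank-1, Rank-5, Rank-10)
veri_rows = {
    0.0: (60.00, 95.41, 96.96, 98.03),
    0.1: (80.92, 95.65, 97.20, 98.45),
    0.3: (80.50, 95.77, 97.44, 98.57),
    0.5: (80.00, 95.77, 97.56, 98.81),
    0.7: (79.30, 95.71, 97.62, 98.75),
    0.9: (77.99, 95.95, 97.91, 98.87),
    1.0: (76.24, 95.05, 97.79, 98.99),
}


def mix_scores(rows):
    """Mean gap to the per-metric best for every mix value (lower is better)."""
    best = [max(column) for column in zip(*rows.values())]
    return {
        mix: sum(b - v for b, v in zip(best, values)) / len(best)
        for mix, values in rows.items()
    }


scores = mix_scores(veri_rows)
best_mix = min(scores, key=scores.get)

for mix, score in scores.items():
    print(f"mix={mix:<4} S={score:.4f}")
print("selected mix:", best_mix)
```

Under this reading, the selected mix for VeRi is 0.3, which matches the row with the smallest tabulated score.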

| Model | mAP (R50) | mAP (R101) | mAP (ResNeXt50) | R1 (R50) | R1 (R101) | R1 (ResNeXt50) | R5 (R50) | R5 (R101) | R5 (ResNeXt50) |
|---|---|---|---|---|---|---|---|---|---|
| PCB | 76.24 | 76.34 | 75.82 | 95.05 | 94.99 | 94.10 | 97.79 | 97.44 | 97.32 |
| PCB-RR | 80.50 | 80.04 | 79.97 | 95.76 | 95.71 | 96.07 | 97.43 | 96.96 | 97.20 |
| RPP | 77.08 | 76.15 | 76.47 | 94.99 | 94.46 | 93.74 | 97.97 | 97.38 | 97.38 |
| RPP-RR | 81.42 | 79.96 | 80.69 | 96.24 | 94.93 | 95.59 | 97.50 | 96.78 | 96.78 |

| Dataset Variant | Model | mAP (R50) | mAP (R101) | mAP (ResNeXt50) | R1 (R50) | R1 (R101) | R1 (ResNeXt50) | R5 (R50) | R5 (R101) | R5 (ResNeXt50) |
|---|---|---|---|---|---|---|---|---|---|---|
| Small | PCB | 86.59 | 87.38 | 86.57 | 83.61 | 84.70 | 83.63 | 97.61 | 97.14 | 96.99 |
| | PCB-RR | 87.11 | 87.87 | 87.20 | 84.25 | 85.25 | 84.38 | 97.73 | 97.24 | 97.22 |
| | RPP | 83.61 | 84.91 | 85.95 | 80.43 | 81.98 | 83.04 | 95.33 | 95.31 | 96.11 |
| | RPP-RR | 84.18 | 85.74 | 86.60 | 81.05 | 82.93 | 83.89 | 95.70 | 95.73 | 96.34 |
| Medium | PCB | 83.82 | 83.56 | 82.19 | 80.97 | 80.74 | 79.12 | 94.17 | 94.19 | 93.74 |
| | PCB-RR | 84.05 | 84.03 | 82.31 | 81.17 | 81.27 | 79.17 | 94.45 | 94.45 | 94.08 |
| | RPP | 81.45 | 81.67 | 81.57 | 78.62 | 78.90 | 78.52 | 91.70 | 91.83 | 92.90 |
| | RPP-RR | 81.76 | 82.38 | 81.96 | 78.90 | 79.67 | 78.94 | 92.13 | 92.25 | 93.21 |
| Large | PCB | 81.26 | 81.24 | 80.87 | 78.32 | 78.27 | 77.86 | 92.18 | 91.86 | 92.13 |
| | PCB-RR | 81.82 | 81.61 | 81.23 | 79.01 | 78.71 | 78.25 | 92.33 | 92.09 | 92.29 |
| | RPP | 79.09 | 79.67 | 80.39 | 76.23 | 76.96 | 77.42 | 89.39 | 89.21 | 91.31 |
| | RPP-RR | 79.72 | 80.33 | 80.85 | 76.92 | 77.69 | 77.96 | 89.74 | 89.60 | 91.56 |

| Model | mAP (R50) | R1 (R50) | R5 (R50) | R10 (R50) |
|---|---|---|---|---|
| Ensembled (PCB) | 79.83 | 95.29 | 98.09 | 98.92 |
| Ensembled (PCB)-RR | 83.13 | 97.02 | 97.61 | 98.56 |
| Ensembled (RPP) | 80.37 | 95.95 | 98.09 | 99.04 |
| Ensembled (RPP)-RR | 83.63 | 96.60 | 97.61 | 98.45 |

| Variant | Model | mAP | R1 | R5 | R10 |
|---|---|---|---|---|---|
| Small | Ensembled (PCB) | 88.24 | 85.52 | 98.21 | 99.07 |
| | Ensembled (PCB)-RR | 88.51 | 85.80 | 98.19 | 99.17 |
| | Ensembled (RPP) | 87.34 | 84.49 | 97.41 | 98.87 |
| | Ensembled (RPP)-RR | 87.81 | 85.01 | 97.72 | 99.02 |
| Medium | Ensembled (PCB) | 84.63 | 81.82 | 95.08 | 97.77 |
| | Ensembled (PCB)-RR | 84.59 | 81.73 | 95.32 | 97.82 |
| | Ensembled (RPP) | 84.24 | 81.49 | 94.71 | 97.12 |
| | Ensembled (RPP)-RR | 84.53 | 81.80 | 95.00 | 97.20 |
| Large | Ensembled (PCB) | 82.33 | 79.32 | 93.65 | 96.90 |
| | Ensembled (PCB)-RR | 82.81 | 79.92 | 93.73 | 96.88 |
| | Ensembled (RPP) | 82.15 | 79.27 | 92.88 | 96.26 |
| | Ensembled (RPP)-RR | 82.59 | 79.83 | 92.86 | 96.35 |

| Model | mAP (R50) | R1 (R50) | R5 (R50) | R10 (R50) |
|---|---|---|---|---|
| PCB (H) | 76.24 | 95.05 | 97.79 | 98.98 |
| PCB (H)-RR | 80.50 | 95.76 | 97.43 | 98.56 |
| RPP | 77.08 | 94.99 | 97.97 | 98.75 |
| RPP-RR | 81.42 | 96.24 | 97.50 | 98.51 |
| OPCB | 76.74 | 95.23 | 97.91 | 98.98 |
| OPCB-RR | 80.70 | 95.59 | 96.96 | 98.27 |

| Variant | Model | mAP (R50) | R1 (R50) | R5 (R50) | R10 (R50) |
|---|---|---|---|---|---|
| Small | PCB | 86.59 | 83.61 | 97.61 | 98.75 |
| | PCB-RR | 87.11 | 84.25 | 97.73 | 98.82 |
| | RPP | 83.61 | 80.43 | 95.33 | 98.33 |
| | RPP-RR | 84.18 | 81.05 | 95.70 | 97.61 |
| | OPCB | 87.49 | 84.71 | 97.55 | 98.87 |
| | OPCB-RR | 87.94 | 85.20 | 97.87 | 98.98 |
| Medium | PCB | 83.82 | 80.97 | 94.17 | 97.15 |
| | PCB-RR | 84.05 | 81.17 | 94.45 | 97.27 |
| | RPP | 81.45 | 78.62 | 91.70 | 95.18 |
| | RPP-RR | 81.76 | 78.90 | 92.13 | 95.39 |
| | OPCB | 84.03 | 81.2 | 94.42 | 97.4 |
| | OPCB-RR | 84.35 | 81.49 | 94.82 | 97.52 |
| Large | PCB | 81.26 | 78.32 | 92.18 | 95.95 |
| | PCB-RR | 81.82 | 79.01 | 92.33 | 96.07 |
| | RPP | 79.09 | 76.23 | 89.39 | 93.48 |
| | RPP-RR | 79.72 | 76.92 | 89.74 | 93.68 |
| | OPCB | 81.81 | 78.9 | 92.5 | 96.17 |
| | OPCB-RR | 82.59 | 79.84 | 92.84 | 96.23 |

| Method and Reference | mAP (%) | R-1 (%) | R-5 (%) |
|---|---|---|---|
| Batch sample [39] | 67.55 | 90.23 | 96.42 |
| SLSR [40] | 65.13 | 91.24 | NR |
| MRL + Softmax Loss [41] | 78.50 | 94.30 | 98.70 |
| VANet [42] | 66.34 | 89.78 | 95.99 |
| MRM [14] | 68.55 | 91.77 | 95.82 |
| Part-regularized near duplicate [12] | 74.30 | 94.30 | 98.70 |
| PGAN [43] | 79.30 | 96.50 | 98.30 |
| SAN [14] | 72.5 | 93.3 | 97.1 |
| TCPM [23] | 74.59 | 93.98 | 97.13 |
| Swin Transformer [44] | 78.6 | 97.3 | NR |
| URRNet [45] | 72.2 | 93.1 | 97.1 |
| TransReid [46] | 78.2 | 96.5 | NR |
| SSL + re-ranking [47] | 69.90 | 89.69 | 95.41 |
| CFSA [48] | 79.89 | 94.99 | 98.81 |
| AttributeNet [49] | 80.1 | 97.1 | 98.6 |
| MIMANet [50] | 79.89 | 94.99 | 98.81 |
| OPCB + RR (ours) | 80.77 | 95.59 | 96.96 |
| PCB + RPP + RR (Ensembled) (ours) | 83.63 | 97.02 | 98.45 |

| Method | Rank-1, Small (%) | Rank-1, Medium (%) | Rank-1, Large (%) | Rank-5, Small (%) | Rank-5, Medium (%) | Rank-5, Large (%) |
|---|---|---|---|---|---|---|
| MRM [14] | 76.64 | 74.20 | 70.86 | 92.34 | 88.54 | 84.82 |
| SLSR [40] | 75.10 | 71.80 | 68.70 | 89.70 | 86.10 | 83.10 |
| Part-regularized near duplicate [12] | 78.40 | 75.00 | 74.20 | 92.30 | 88.30 | 86.40 |
| Batch sample [39] | 78.80 | 73.41 | 69.33 | 96.17 | 92.57 | 89.45 |
| SAN [14] | 79.7 | 78.4 | 75.6 | 94.3 | 91.3 | 88.3 |
| MRL + Softmax Loss [41] | 84.80 | 80.90 | 78.40 | 96.90 | 94.10 | 92.10 |
| VANet [42] | 88.12 | 83.17 | 80.35 | 97.29 | 95.14 | 92.97 |
| PGAN [43] | NR | NR | 77.8 | NR | NR | 92.1 |
| LABNet [51] | 84.02 | 80.18 | 77.2 | NR | NR | NR |
| TransReid [46] | 83.6 | NR | NR | 97.1 | NR | NR |
| HCI-Net [52] | 83.8 | 79.4 | 76.4 | 96.5 | 92.7 | 91.2 |
| VehicleGAN [53] | 83.5 | 78.2 | 75.7 | 96.5 | 93.2 | 90.6 |
| AttributeNet [49] | 86.0 | 81.9 | 79.6 | 97.4 | 95.1 | 92.7 |
| MIMANet [50] | 83.28 | 80.14 | 77.72 | 96.31 | 93.71 | 91.29 |
| URRNet [45] | 76.5 | 73.7 | 68.2 | 96.5 | 92.0 | 89.6 |
| MDFENet [54] | 83.66 | 80.78 | 77.88 | NR | NR | NR |
| OPCB + RR (ours) | 85.20 | 81.49 | 79.84 | 97.87 | 94.82 | 92.84 |
| PCB + RR (Ensemble) (ours) | 85.25 | 81.72 | 79.92 | 97.24 | 95.32 | 93.73 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nath, R.K.; Mitra, D. An Analysis of Partitioned Convolutional Model for Vehicle Re-Identification. Electronics 2025, 14, 3634. https://doi.org/10.3390/electronics14183634
