1. Introduction

In the era of digital commerce, online customer reviews have emerged as a crucial source of customer feedback, preferences, and latent unmet needs [1,2]. Beyond mere sentiment labels or star ratings, these reviews capture nuanced user experiences spanning functional, emotional, and contextual dimensions, and they provide valuable insights for product improvement and marketing strategy formulation [3]. Bridging such rich, unstructured feedback with actionable product innovation remains a key challenge for both academic researchers and practitioners [4]. A seminal dataset in large-scale user review analysis is the Amazon Reviews dataset curated by McAuley's research group. Its 2023 version spans over 571 million reviews across 33 categories, extending earlier collections in both scale and variety [5]. Earlier versions have already facilitated extensive research in recommendation systems, sentiment analysis, and user behavior modeling [6,7,8]. The availability of such massive public review corpora has enabled scholars to analyze consumer feedback at previously unattainable scales, but translating raw textual data into innovation-relevant insight remains nontrivial [9].

Traditionally, review analysis has focused on techniques such as bag-of-words [10], topic modeling [11], and sentiment analysis [12], sometimes augmented with aspect-based sentiment analysis [13]. These methods help identify frequent themes or polarity distributions but often fall short of capturing the contextual nuance, consumer motivations, or unarticulated needs embedded in narrative text. In their survey, Malik and Bilal (2024) noted that many existing NLP-based review analysis methods rely heavily on keyword co-occurrence, topic modeling, or shallow sentiment classification, which limits their ability to capture deeper contextual nuance and latent user motivations [14]. Such limitations hinder the extraction of in-depth insights for product enhancement and data-driven strategic decisions. Consequently, organizations face delays in capturing timely feedback, leading to missed opportunities for practical product improvements. Moreover, the contextual and narrative characteristics of review data are often insufficiently reflected, resulting in analyses overly dependent on surface-level statistics.

Recent advances in large language models (LLMs) have opened new possibilities for overcoming these challenges. Because LLMs are pretrained on massive corpora and are capable of decoding contextual meaning through human-like summarization and classification, they can be used to infer implicit user goals rather than relying purely on keyword co-occurrence. Some recent studies have begun to explore this capacity for extracting customer needs (CNs) from product reviews and user interviews. For instance, Timoshenko et al. (2025) demonstrated that, under appropriate prompt-engineering and fine-tuning conditions, an LLM can match or even outperform expert human analysts in formulating well-structured CNs [15]. Similarly, Wei et al. (2025) proposed an LLM-based framework to extract user priorities and attribute-level insights from user-generated content, demonstrating that prompt-driven models can identify nuanced consumer expectations and latent service attributes with greater interpretability than conventional topic modeling [16]. Another recent model, InsightNet, proposes an end-to-end architecture to extract structured insights from customer feedback by constructing multi-level taxonomies and performing topic-level extraction via a fine-tuned LLM pipeline [17].

Nevertheless, few studies have connected such extraction to formal frameworks of product strategy or value creation, which makes it harder for product managers or R&D teams to operationalize the output. Other approaches require extensive labeled data or human-in-the-loop correction, limiting adaptability to new products or domains [18]. Bridging this gap requires integrating the analytical capabilities of LLMs with established theories of value creation, a direction that holds both academic significance and managerial relevance by connecting consumer insight generation with actionable product development and innovation strategy.

Therefore, this study proposes an integrative method that leverages large-scale online customer reviews through LLM-based analysis and maps the extracted insights onto the Value Proposition Canvas (VPC), a widely adopted framework distinguishing Customer Jobs, Pains, and Gains [19]. In this framework, “Jobs” refer to the functional, social, and emotional tasks customers aim to accomplish; “Pains” represent the constraints, inconveniences, and risks encountered during task performance; and “Gains” denote the benefits, outcomes, and values expected from success. The approach automates this process by designing tailored prompts and extraction routines that identify these elements in review text, embed them into a vector space, cluster them into higher-order themes, and link the results to product–market fit evaluation.

This study distinguishes itself from prior research on customer review analysis through three primary contributions. First, it advances beyond conventional sentiment scoring or topic extraction by generating structured, actionable insights (specifically, the Jobs, Pains, and Gains elements) that are directly applicable to product strategy and innovation planning. Second, by leveraging LLMs, the proposed framework captures complex categorical structures without requiring extensive labeled data, thereby supporting scalability and adaptability across diverse product domains. Third, it integrates unstructured review information with the VPC, thereby constructing a quantitative–qualitative bridge that advances data-driven customer value modeling and provides a practical decision-support instrument for product managers and R&D teams. Collectively, this integrative approach bridges LLM-based text analytics with customer value theory, contributing to both the theoretical advancement of AI-enabled consumer insight research and the practical domain of evidence-based product innovation.

The remainder of this paper is organized as follows:

Section 2 summarizes prior research on customer need identification.

Section 3 outlines the proposed methodology and analytical procedures.

Section 4 presents empirical validations and case studies.

Section 5 discusses the implications of the research findings.

Section 6 concludes with a summary of key findings and addresses research limitations and directions for future work.

2. Related Works

Properly understanding the voice of the customer (VOC) has long been a critical foundation for innovation and product strategy, as it translates customer experiences and perceptions into actionable knowledge for design and development. However, extracting structured insights from vast, narrative-rich customer feedback has remained challenging due to its unstructured and context-dependent nature [20]. Early research on VOC analysis relied mainly on qualitative methods, such as manual coding and small-sample content analysis, to identify recurring needs and user frustrations [21]. With the rise of large-scale online review platforms and publicly available datasets such as the Amazon Reviews corpus, the field gradually transitioned toward quantitative, data-driven NLP methods capable of handling massive volumes of user-generated text [22].

This quantitative wave introduced techniques such as bag-of-words (BOW) modeling, topic modeling, and sentiment or aspect-based sentiment analysis, enabling scalable identification of frequent themes and polarity patterns. For example, Zhang et al. (2011) and Fang and Zhan (2015) demonstrated how BOW and TF–IDF features could classify review polarity, laying a foundation for automated VOC mining [23,24]. Along similar lines, Aguwa et al. (2012) quantified the voice of the customer through a customer-satisfaction ratio model that translated textual VOC indicators into measurable performance metrics, representing one of the earliest attempts to link review analytics to managerial decision frameworks [25]. Subsequent studies, such as Schouten and Frasincar (2016) and Qiu et al. (2018), demonstrated how aspect-level sentiment analysis can predict or reconstruct product ratings by aggregating aspect-specific polarity [7,26]. These approaches improved scalability but still relied on surface co-occurrence patterns, offering only limited capacity to capture contextual nuance and latent user motivations [6,8]. In parallel, Yazıcı and Ozansoy Çadırcı (2024) and Barravecchia et al. (2022) compared topic modeling algorithms for extracting actionable marketing insights from large review corpora [11,27]. Building on this work, subsequent research has sought to embed review analysis within theory-linked requirement frameworks. For example, automated Kano classification models have been developed to categorize product attributes based on customer reviews, while Quality Function Deployment pipelines translate review-level requirements into engineering or design characteristics [28,29].

To address these limitations, Lee et al. (2023) introduced a context-aware customer needs identification framework that leverages linguistic pattern mining to extract functional and contextual signals from online product reviews, improving interpretability over generic topic or sentiment pipelines [4]. These studies represent an important methodological step toward bridging textual analytics and product design. However, even such context-enriched NLP approaches have yet to be fully integrated with strategic decision frameworks that explicitly link customer expressions to value creation constructs, and they largely remain confined to attribute-level mappings that do not capture the broader structure of customer value creation.

Despite the growing sophistication of NLP techniques, no established framework yet exists for automatically inferring VPC signals (Customer Jobs, Pains, and Gains) from large-scale online reviews. With the rapid progress of deep representation learning and LLMs, VOC analysis has entered a new methodological phase. Timoshenko et al. (2025) demonstrated that LLMs can match expert analysts in generating structured CN statements when appropriately prompted [15], while Wei et al. (2025) and Praveen et al. (2024) proposed end-to-end pipelines that automatically build multi-level taxonomies of user needs and service attributes [16,30]. Nonetheless, most prior LLM-based studies end at the extraction or taxonomy construction stage and rarely connect their outputs with formal value creation frameworks or quantitative fit metrics relevant to product decision-making. The present study therefore aims to operationalize VPC-consistent signals (Customer Jobs, Pains, and Gains) from unstructured customer reviews and link them to Product–Market Fit diagnostics, providing an end-to-end, theory-grounded, and data-driven bridge from unstructured feedback to actionable strategic insight.

3. Data and Methods

3.1. Data

The dataset used in this study was constructed from publicly available customer reviews posted on Walmart.com, one of the largest retail e-commerce platforms in the United States. The data consist of user-generated textual feedback and associated star ratings for Samsung Galaxy Watch products, collected to examine variations in customer experience and perceived value across product generations. Specifically, three models (Galaxy Watch 5, Galaxy Watch 6, and Galaxy Watch 7) were selected as representative devices in Samsung’s smartwatch lineup, reflecting incremental advances in design and functionality. Data collection was conducted in August 2025.

For each model, approximately 2000 customer reviews covering the period from September 2022 to August 2025 were sampled and downloaded from Walmart’s review repository, ensuring comparability across product generations. Each record contained the following fields: (1) model name (e.g., “Galaxy Watch 5”), (2) review date, (3) customer identifier (anonymized), (4) star rating on a five-point ordinal scale, (5) review title, and (6) customer review text. In total, 1995 customer reviews were collected for Watch 5, 2000 for Watch 6, and 2005 for Watch 7. On average, each review contained approximately 293 characters, corresponding to a mean of 54.9 words per entry.

Prior to analysis, all reviews were subjected to a data cleaning process designed to preserve the linguistic and contextual integrity of the original user expressions. Entries containing missing values or incomplete text fields were excluded. The “Review title” and “Customer review text” fields were then concatenated into a single composite field that served as the unified textual input for subsequent analysis. Because LLMs rely heavily on contextual cues for semantic interpretation, no lexical-level preprocessing, such as stop word removal, stemming, or lemmatization, was performed. Only minimal adjustments were applied: unnecessary whitespace, line breaks, and formatting artifacts were removed where present. In addition, review entries consisting solely of promotional disclaimers (e.g., [This review was collected as part of a promotion.]) were excluded. This approach ensured that textual nuances, sentiment markers, and stylistic features, which are often critical for inferring consumer intent, remained intact for the LLM-based analysis. The resulting dataset comprised 6000 cleaned reviews, with an average length of approximately 293 characters (55 words) per entry.
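A minimal sketch of the cleaning steps described above follows. It is written in plain Python rather than the pandas stack the study used. The field names (`review_title`, `review_text`), the single-space separator used for concatenation, and the exact disclaimer pattern are illustrative assumptions, not the study's code.

```python
import re

# Illustrative pattern for a review body that is only the promotional disclaimer.
PROMO_ONLY = re.compile(
    r"^\[?This review was collected as part of a promotion\.?\]?$", re.IGNORECASE
)


def normalize_ws(text: str) -> str:
    """Collapse line breaks, tabs, and repeated spaces into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def clean_reviews(records: list) -> list:
    """Drop incomplete or promotion-only rows and build one composite text field."""
    cleaned = []
    for rec in records:
        # Hypothetical field names; missing values become empty strings.
        title = normalize_ws(rec.get("review_title") or "")
        body = normalize_ws(rec.get("review_text") or "")
        if not title or not body:
            continue  # missing or incomplete text fields are excluded
        if PROMO_ONLY.match(body):
            continue  # entry consists solely of a promotional disclaimer
        # Title and text are concatenated as-is: no stop-word removal or stemming.
        cleaned.append({**rec, "input_text": f"{title} {body}"})
    return cleaned
```

Only whitespace is touched, so punctuation, casing, and emoji that may carry sentiment reach the LLM unchanged.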

3.2. Analysis Framework

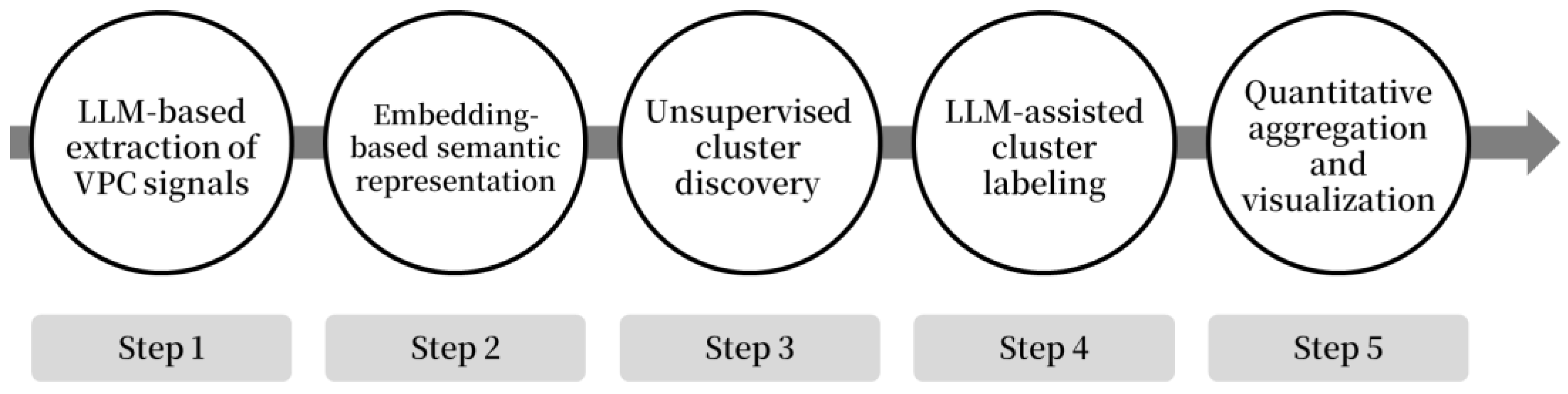

This study develops an end-to-end analysis pipeline that converts raw customer reviews into strategy-ready customer value signals aligned with the VPC. The framework integrates LLM-based information extraction, embedding-based semantic clustering, and quantitative synthesis to derive interpretable insights from customer feedback. The overall workflow, summarized in Figure 1, consists of five sequential analytical steps.

First, customer reviews are analyzed through an LLM-driven extraction module that identifies six key dimensions (Customer Jobs, Customer Pains, Customer Gains, Feature Gaps, Emotions, and Usage Context) in accordance with the VPC structure. Second, each extracted construct is converted into a fixed-length semantic representation using a transformer-based embedding model, enabling the measurement of similarity among concepts. Third, unsupervised cluster discovery is performed for each field to group semantically related phrases, and optimal cluster numbers are determined through joint inspection of inertia and silhouette coefficients. Fourth, the resulting clusters are assigned interpretable category names generated by an LLM, yielding a conceptual taxonomy that summarizes consumer perceptions and unmet needs. Finally, quantitative aggregation and visualization produce frequency distributions, gap density matrices, and product–market fit indicators that reveal priority areas for product improvement. All procedures were implemented in Python 3.12.7 with fixed random seeds, explicit prompts, and periodic checkpoints to ensure reproducibility. To further enhance methodological transparency, an additional graphical workflow diagram is provided in Appendix A (Figure A4). It traces the transformation from raw reviews to structured VPC signals and, subsequently, to quantitative PMF metrics through the sequential stages of extraction, embedding, clustering, synthesis, and reporting.

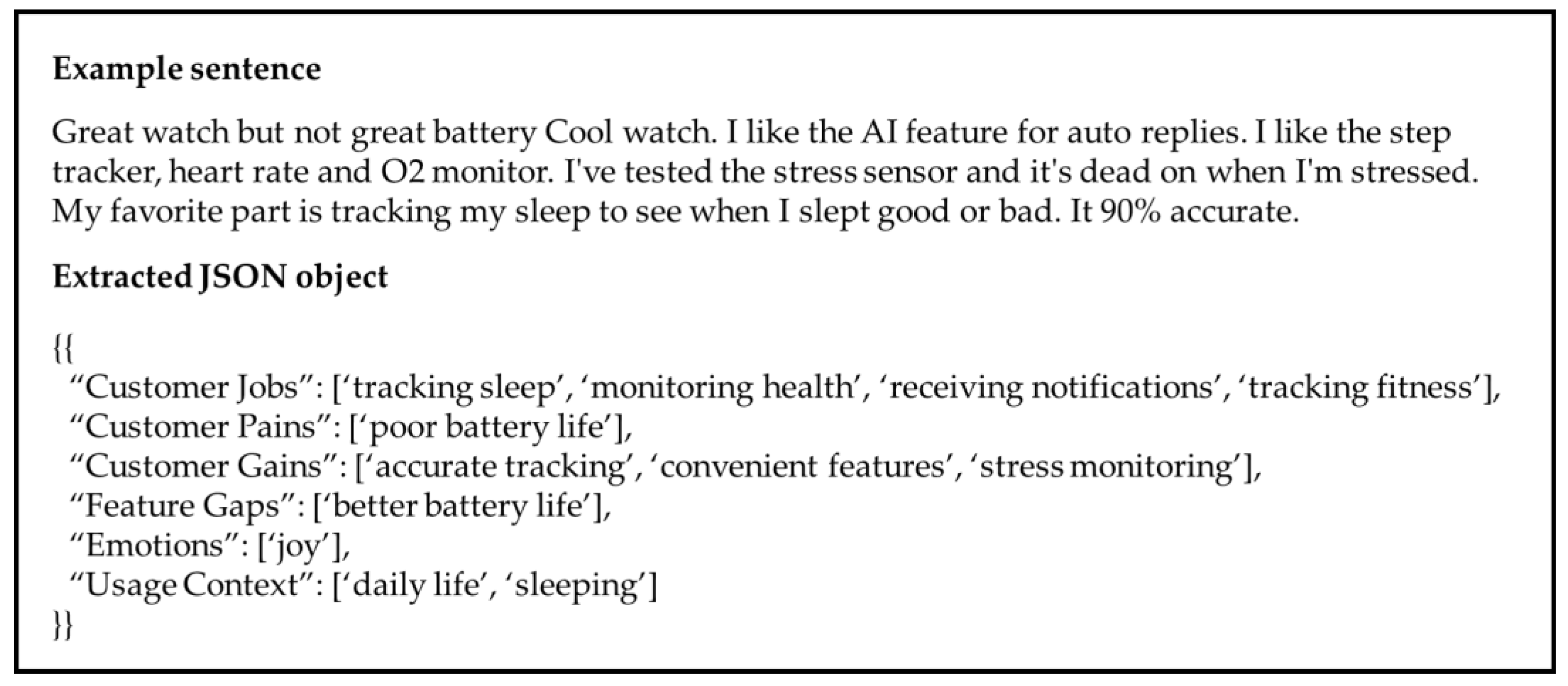

3.3. LLM-Based Extraction of VPC Signals

Each customer review is analyzed by an instruction-tuned or inference-optimized LLM that returns a structured JSON object with six defined fields: Customer Jobs, Customer Pains, Customer Gains, Feature Gaps, Emotions, and Usage Context (Note: the extracted JSON object can be found in Appendix A). In this study, Meta’s Llama 3.3-70B-Instruct-Turbo model was employed as the inference engine due to its strong contextual understanding. The model is an instruction-tuned, text-only LLM optimized for multilingual dialogue tasks and has been reported to outperform many existing open-source and proprietary chat models on common industry benchmarks. Its stability in structured text generation makes it particularly suitable for extracting semantically organized information from customer reviews [31]. Two distinct prompts, a system prompt and a user prompt, were designed to capture both explicit statements and implied cues and to return empty lists when evidence is absent. Inference was conducted through a hosted API (Together AI, https://www.together.ai/ (accessed on 17 October 2025)) for Llama-3.3-70B-Instruct-Turbo; hence, no local accelerator configuration was required. We used conservative decoding (temperature ≈ 0.0) with schema-constrained prompting and strict JSON validation to ensure stability and recoverability. Missing keys were replaced with empty lists, and a checkpointing mechanism recorded intermediate results at fixed intervals to ensure safe recovery and reproducibility across large corpora. Downstream components were implemented in Python 3.12.7 with fixed random seeds. The core library stack comprised scikit-learn 1.6.1, numpy 2.0.2, together 1.5.30, and pandas 2.2.2.

These six analytical dimensions jointly capture both the functional and experiential aspects of user perception embedded in product reviews. “Customer Jobs” refer to the functional, emotional, or social objectives that users seek to achieve through product use, while “Customer Pains” represent the difficulties or frustrations encountered in accomplishing those objectives. “Customer Gains” denote the benefits or positive outcomes realized or desired by users. “Feature Gaps” identify the discrepancies between expected and actual product performance. “Emotions” reflect affective responses expressed or implied in the reviews, categorized into ten classes: love, joy, trust, surprise, frustration, disgust, anger, fear, sadness, and neutral. This taxonomy extends Parrott’s (2001) framework of six primary human emotions (love, joy, surprise, anger, sadness, fear) to incorporate domain-relevant affective states such as trust and frustration [32]. Finally, “Usage Context” captures situational clues regarding when, where, or how the product is used (e.g., “during workouts,” “while traveling,” or “at night”), providing essential information for contextualizing customer experiences.
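The schema handling described in this section can be sketched as a post-processing step on each raw model response. That handling covers strict JSON parsing, replacing missing keys with empty lists, and restricting emotions to the ten listed classes. The key names and the `validate_extraction` helper are hypothetical. Returning `None` on a parse failure, so that the caller can retry the request, is an assumption about how recoverability might be handled.

```python
import json
from typing import Optional

# Hypothetical key names for the six extracted fields.
FIELDS = ("customer_jobs", "customer_pains", "customer_gains",
          "feature_gaps", "emotions", "usage_context")
# The ten emotion classes listed in this section.
EMOTION_CLASSES = {"love", "joy", "trust", "surprise", "frustration",
                   "disgust", "anger", "fear", "sadness", "neutral"}


def validate_extraction(raw: str) -> Optional[dict]:
    """Parse one LLM response; return None if it is not a JSON object."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError:
        return None  # signal the caller to retry this review
    if not isinstance(obj, dict):
        return None
    result = {}
    for key in FIELDS:
        value = obj.get(key, [])  # missing keys become empty lists
        if isinstance(value, str):
            value = [value]
        if not isinstance(value, list):
            value = []
        result[key] = [v.strip() for v in value if isinstance(v, str) and v.strip()]
    # Keep only labels from the ten-class emotion taxonomy (case-insensitive).
    result["emotions"] = [e.lower() for e in result["emotions"]
                          if e.lower() in EMOTION_CLASSES]
    return result
```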

Table 1 presents an example of extracted VPC signals, showing how unstructured review text can be systematically parsed into the six analytical dimensions. The example captures both the functional and emotional aspects of user experience embedded in the narrative. The “Customer Pains”, “Feature Gaps”, and “Usage Context” fields are left empty because the original text contains no explicit evidence for them.

To assess the reliability of the LLM-based extraction, a random subset of 1000 cases was independently reviewed by domain experts. Expert–model agreement exceeded 90% across all six analytical dimensions, indicating strong consistency between human judgment and model inference.
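The agreement figure can be illustrated as a per-dimension rate. This sketch assumes that each expert check is recorded as a yes/no judgment of whether the model output for that dimension is acceptable. The study does not specify its recording format, so this is an assumption. A raw agreement rate also does not correct for chance agreement, as Cohen's kappa would.

```python
def agreement_by_dimension(judgments: list) -> dict:
    """Share of reviewed cases in which the expert accepted the model output.

    Each element of `judgments` maps a dimension name to True (accepted) or
    False (rejected) for one review; this format is a hypothetical choice.
    """
    totals: dict = {}
    agreed: dict = {}
    for case in judgments:
        for dim, accepted in case.items():
            totals[dim] = totals.get(dim, 0) + 1
            agreed[dim] = agreed.get(dim, 0) + int(accepted)
    return {dim: agreed[dim] / totals[dim] for dim in totals}
```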

3.4. Embedding Generation

Following the extraction process, each textual element within the six analytical dimensions was converted into a continuous vector representation to standardize the extracted outputs and enable semantic comparison and clustering, since conceptually similar VPC signals often appear in varied linguistic forms. Embedding generation was performed using a transformer-based retrieval/encoding model that encodes each phrase into a fixed-length vector (e.g., 1024 dimensions). This representation preserves contextual and syntactic nuances, allowing conceptually similar expressions (e.g., “battery lasts long” and “strong battery life”) to occupy neighboring regions in the embedding space.

The embedding model “BAAI/bge-m3”, developed by the Beijing Academy of Artificial Intelligence (BAAI), was employed to obtain dense embedding vectors. It is reported to provide state-of-the-art representational quality across multiple retrieval settings and document granularities. Unlike conventional embedding models optimized for a single task or short English texts, BGE-M3 unifies dense, sparse, and multi-vector retrieval under a single architecture, enabling robust performance on both sentence-level and long-document inputs (up to 8192 tokens). Its self-knowledge distillation framework jointly optimizes these retrieval modes. Its authors report that the resulting embeddings are more discriminative and semantically coherent than those of prior models such as E5, Contriever, or OpenAI’s text-embedding series [33]. These features make BGE-M3 particularly well-suited for analyzing heterogeneous, user-generated review data that vary in linguistic style, length, and expression.

All embeddings were computed independently for the five major dimensions—Customer Jobs, Customer Pains, Customer Gains, Feature Gaps, and Usage Context—to maintain field-specific semantic structures. The “Emotions” dimension was excluded from embedding analysis because it was already represented by discrete categorical labels. The resulting embedding matrices served as the basis for unsupervised clustering and subsequent quantification. To ensure methodological robustness, embeddings were generated under consistent model parameters, with batch processing applied to large text sets, and intermediate checkpoints and fixed random seeds used to maintain traceability and reproducibility. The resulting high-dimensional embeddings provide a scalable foundation for discovering latent semantic patterns within large volumes of customer feedback.
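The field-wise embedding step can be sketched as follows. `embed` stands in for the BGE-M3 dense encoder. It is a hypothetical callable that maps a batch of phrases to one vector per phrase, and the field names are illustrative. Vectors are L2-normalized so that a dot product equals cosine similarity, which matches the normalized embedding space used for clustering in Section 3.5.

```python
import math
from typing import Callable, Sequence


def l2_normalize(vec: Sequence[float]) -> list:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of the unit-length vectors, i.e., cos of the angle between them."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def embed_by_field(phrases_by_field: dict, embed: Callable, batch_size: int = 64) -> dict:
    """Embed each field separately, skipping the categorical 'emotions' field.

    `embed` is hypothetical: it takes a list of phrases and returns vectors.
    """
    vectors_by_field = {}
    for field, phrases in phrases_by_field.items():
        if field == "emotions":
            continue  # already discrete labels, so not embedded
        vectors: list = []
        for start in range(0, len(phrases), batch_size):
            batch = phrases[start:start + batch_size]  # batch processing
            vectors.extend(l2_normalize(v) for v in embed(batch))
        vectors_by_field[field] = vectors
    return vectors_by_field
```

Keeping one matrix per field means that, for example, a Pain phrase and a Gain phrase about the battery are never clustered together.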

3.5. Clustering of Extracted Concepts

The generated embeddings were subjected to unsupervised clustering to identify semantically coherent groups of customer concepts within each analytical dimension. K-means clustering was employed to partition the embedding space into discrete clusters, enabling the discovery of latent structures without the need for manual supervision [

34,

35]. To determine the optimal number of clusters, the inertia (elbow) criterion and the silhouette coefficient were jointly examined across a predefined range of k values. This dual-metric approach ensures a balance between compactness within clusters and separability across clusters, thereby enhancing interpretability [

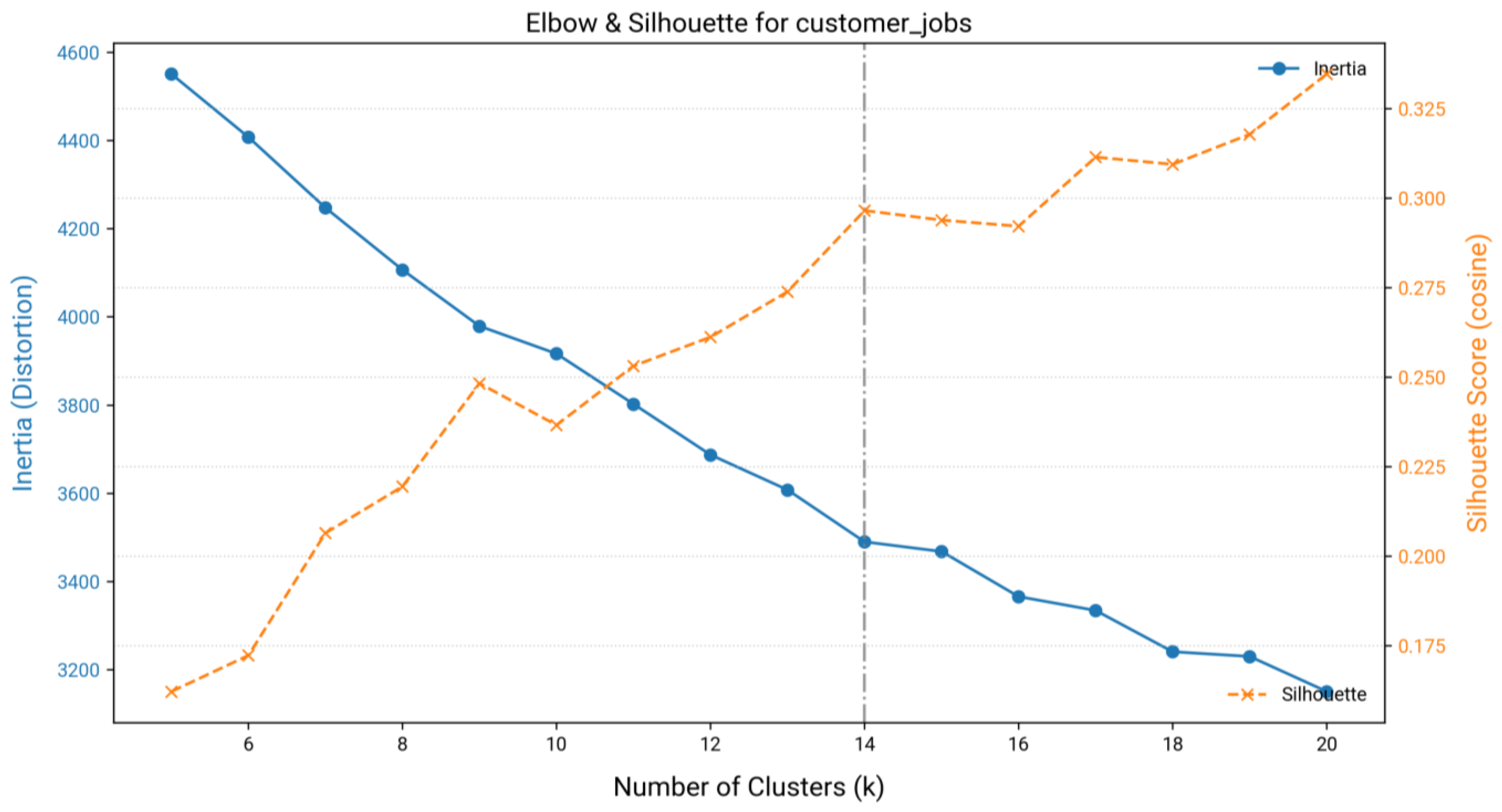

36].
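To make the clustering step concrete, the sketch below implements Lloyd's K-means in plain Python with several restarts. It keeps the run with the lowest inertia, i.e., the sum of squared distances to the assigned centroid. This is an illustration, not the study's implementation. scikit-learn's `KMeans(n_clusters=k, n_init=10, random_state=seed)` performs the equivalent restarts but seeds centroids with k-means++ by default, whereas this sketch samples initial centroids uniformly from the data.

```python
import random


def _sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _kmeans_once(points, k, rng, max_iter=100):
    """One run of Lloyd's algorithm from centroids sampled from the data."""
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [min(range(k), key=lambda c: _sq_dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    inertia = sum(_sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, centroids, inertia


def kmeans(points, k, n_init=10, seed=42):
    """Run n_init restarts and keep the solution with the lowest inertia."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    best = None
    for _ in range(n_init):
        run = _kmeans_once(points, k, rng)
        if best is None or run[2] < best[2]:
            best = run
    return best
```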

To maintain methodological consistency and avoid distortions introduced by non-linear transformations, clustering was performed directly in the normalized embedding space without any prior dimensionality reduction. All K-means clustering procedures were executed with fixed random seeds and multiple initializations to ensure stability and reproducibility. The initialization parameter (n_init) was set to 10; it defines the number of centroid initializations run before the solution with the lowest within-cluster variance is selected. The number of clusters was explored over k = 5 to 20 in increments of 1 to cover an interpretable range of clustering granularity. The candidate k values from the Kneedle-based elbow method and from the silhouette coefficient were compared, and the smaller of the two was selected to enhance interpretability. The Kneedle algorithm locates the “knee” of the inertia curve as the point that deviates most from a linear reference line connecting the curve’s endpoints. Beyond this point, further increases in k yield markedly smaller gains in intra-cluster compactness. Formally, with f(k) denoting the normalized inertia curve and L(k) the line connecting its endpoints, the knee is $k^{*} = \arg\max_{k} \lvert f(k) - L(k) \rvert$ [37]. This method offers a mathematically grounded and noise-robust approach for automatic elbow detection, making it well-suited for large-scale, high-dimensional embedding analyses. The value of k selected for each VPC signal is presented in Table 2.
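The k-selection rule can be sketched as below, assuming that inertia and silhouette values have already been computed for each candidate k. `kneedle_elbow` is a simplified version of Kneedle. It normalizes both axes and returns the k with the largest distance from the endpoint chord. It omits the sensitivity threshold of the full algorithm, which is available, for example, as `KneeLocator` in the `kneed` package. The numbers in the usage lines are made up for illustration.

```python
def kneedle_elbow(ks, inertias):
    """Simplified Kneedle: k where the normalized curve lies farthest from its chord."""
    k_lo, k_hi = ks[0], ks[-1]
    v_lo, v_hi = min(inertias), max(inertias)
    xs = [(k - k_lo) / (k_hi - k_lo) for k in ks]
    ys = [(v - v_lo) / (v_hi - v_lo) for v in inertias]
    # Straight reference line L(k) joining the first and last normalized points.
    chord = [ys[0] + (ys[-1] - ys[0]) * x for x in xs]
    gaps = [abs(y - line) for y, line in zip(ys, chord)]
    return ks[gaps.index(max(gaps))]


def choose_k(ks, inertias, silhouettes):
    """Take the smaller of the elbow k and the silhouette-maximizing k."""
    k_elbow = kneedle_elbow(ks, inertias)
    k_silhouette = ks[silhouettes.index(max(silhouettes))]
    return min(k_elbow, k_silhouette)


# Made-up values for illustration only.
ks = [5, 6, 7, 8, 9]
inertias = [100.0, 40.0, 20.0, 15.0, 12.0]
silhouettes = [0.10, 0.12, 0.15, 0.11, 0.09]
print(kneedle_elbow(ks, inertias), choose_k(ks, inertias, silhouettes))  # 6 6
```

Here the elbow falls at k = 6 and the silhouette peaks at k = 7, so the rule keeps the coarser k = 6.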

Figure 2 illustrates the evaluation of cluster compactness and separation across varying k values, used to determine the optimal number of clusters for the “Customer Jobs” dimension. The elbow point, indicated by the gray line, represents the value of k beyond which additional clusters yield marginal improvements in cohesion while increasing model complexity.

3.6. LLM-Based Labeling of Clusters

For each cluster obtained from the embedding-based K-means procedure, a concise, human-interpretable category label was generated using an LLM under a structured instruction prompt. For this purpose, the same LLM described in Section 3.3 (Llama 3.3-70B-Instruct-Turbo) was employed to generate high-level semantic abstractions and produce coherent category names. To ensure both semantic representativeness and computational efficiency, the LLM input was restricted to the 50 phrases with the highest cosine similarity to the cluster centroid rather than a random or arbitrary sample. This centroid-based selection prioritizes phrases that lie closest to the semantic core of each cluster, improving the fidelity of the LLM’s summarization while keeping token usage low. The prompt constrained the output to a single 3–5-word noun phrase without punctuation, encouraging consistent and semantically meaningful labels across clusters. The temperature was set to a low value of 0.3 to limit variance in labeling outcomes.
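Centroid-based selection of representative phrases can be sketched as follows. The sketch computes the cluster centroid, ranks phrases by cosine similarity to it, and keeps the top n (50 in the study) for the labeling prompt. The prompt wording in `build_label_prompt` is hypothetical. It only mirrors the constraints stated above: a single 3–5-word noun phrase without punctuation.

```python
import math


def _cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb) if na and nb else 0.0


def top_representatives(phrases, vectors, n=50):
    """Return the n phrases whose embeddings are most similar to the cluster centroid."""
    dim = len(vectors[0])
    centroid = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    ranked = sorted(zip(phrases, vectors),
                    key=lambda pv: _cosine(pv[1], centroid), reverse=True)
    return [phrase for phrase, _ in ranked[:n]]


def build_label_prompt(field, representatives):
    """Hypothetical labeling prompt reflecting the constraints in the text."""
    listing = "\n".join(f"- {p}" for p in representatives)
    return (f"The phrases below are {field} extracted from smartwatch reviews.\n"
            f"{listing}\n"
            "Reply with a single 3-5 word noun phrase naming their shared theme, "
            "with no punctuation.")
```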

The resulting LLM-generated labels collectively form a hierarchical conceptual taxonomy that merges heterogeneous expressions into coherent, higher-order categories aligned with customer value constructs. Within each dimension (Customer Jobs, Pains, Gains, Feature Gaps, and Usage Context), this taxonomy defines a mapping function that projects raw phrases onto standardized conceptual labels. The mapping in turn supports the subsequent quantitative analyses, while preserving interpretability for managerial decision-making. These analyses include frequency profiling, co-occurrence structures, job–gain/gap heatmaps, and product–market-fit scoring.

3.7. Quantitative Analysis and Decision Metrics

Building upon the generated category labels, quantitative analyses were conducted to evaluate the relative prominence of customer needs, the degree of unmet demand, and the overall alignment between product performance and user expectations. These analyses aim to establish an interpretive bridge between qualitative insights derived from LLM-based extraction and measurable indicators of product–market fit.

First, a frequency-based analysis was performed across the standardized dimensions of the VPC signals—namely Customer Jobs, Pains, Gains, Feature Gaps, Usage Context, and Emotions. Each label within these dimensions was aggregated and counted to capture the distributional structure of customer feedback expressions, thereby quantifying how frequently specific needs or perceptions were articulated in the review corpus. This frequency profiling serves as a descriptive foundation for subsequent analyses by highlighting the most relevant functional, emotional, and contextual attributes observed across user segments.
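Frequency profiling reduces to counting standardized labels per dimension. The sketch assumes that each review record stores its mapped cluster labels under hypothetical keys such as `customer_jobs`. The text does not say whether a label repeated within one review counts once or several times, so both options are exposed through `per_review`.

```python
from collections import Counter


def label_frequencies(records, field, per_review=False):
    """Count standardized labels in one dimension, most frequent first.

    per_review=True counts each label at most once per review.
    """
    counts = Counter()
    for rec in records:
        labels = rec.get(field, [])  # hypothetical key, e.g. "customer_jobs"
        counts.update(set(labels) if per_review else labels)
    return counts.most_common()
```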

Second, a gap density analysis was performed to assess the extent to which each customer job was accompanied by corresponding gain or gap signals. For every review instance, the occurrence of gains and gaps was cross-referenced with the associated job categories to capture alignment patterns between customer expectations and realized outcomes. The proportion of “gap-only” cases, where a Customer Job was mentioned without a corresponding gain but with an identified gap, served as an indicator of latent dissatisfaction and unmet functional needs. This ratio-based measure quantifies the intensity of performance shortfalls across job categories, highlighting areas in which targeted product refinement or feature enhancement could generate the greatest incremental value.
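The gap-only ratio can be sketched as below. The sketch assumes that jobs, gains, and gaps are linked when they co-occur in the same review, because the text does not state the linking unit. The record keys are hypothetical.

```python
from collections import Counter


def gap_only_ratio(records):
    """Per job: share of reviews mentioning it that contain a gap but no gain."""
    totals, gap_only = Counter(), Counter()
    for rec in records:
        has_gain = bool(rec.get("customer_gains"))  # hypothetical keys
        has_gap = bool(rec.get("feature_gaps"))
        for job in set(rec.get("customer_jobs", [])):
            totals[job] += 1
            if has_gap and not has_gain:
                gap_only[job] += 1
    return {job: gap_only[job] / totals[job] for job in totals}
```

A job whose ratio approaches 1 is one that users mention mainly to report a shortfall, which is the latent-dissatisfaction signal described above.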

Third, a context–gap matrix analysis was performed to examine how unmet needs are distributed across usage scenarios. A two-dimensional matrix was constructed to quantify the co-occurrence intensity between usage contexts and unmet needs (high gaps). The resulting heatmap illustrates where performance deficiencies are most concentrated, providing actionable guidance for prioritizing product improvements.
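The context–gap matrix is a co-occurrence count between usage-context labels and feature-gap labels. The sketch below assumes review-level co-occurrence and uses hypothetical record keys. It returns row labels, column labels, and a dense grid that could be passed to any heatmap routine. Contexts that never co-occur with a gap simply do not appear as rows.

```python
from collections import defaultdict


def context_gap_matrix(records):
    """Co-occurrence counts of usage contexts (rows) and feature gaps (columns)."""
    counts = defaultdict(lambda: defaultdict(int))
    for rec in records:
        for ctx in set(rec.get("usage_context", [])):  # hypothetical keys
            for gap in set(rec.get("feature_gaps", [])):
                counts[ctx][gap] += 1
    contexts = sorted(counts)
    gaps = sorted({gap for row in counts.values() for gap in row})
    grid = [[counts[ctx].get(gap, 0) for gap in gaps] for ctx in contexts]
    return contexts, gaps, grid
```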

Finally, a Product–Market Fit (PMF) assessment was implemented to evaluate the overall balance between perceived benefits and performance deficiencies across different customer jobs. “Product–Market Fit” refers to the degree to which a product satisfies latent customer demand by delivering value that matches or exceeds expectations, such that customers adopt, use, and advocate the product [38]. This concept aligns with value proposition design methods that emphasize matching product offers to customer segments [19]. In essence, achieving PMF implies a state in which a product’s value proposition is aligned with the market’s needs and enables sustainable growth. For each job j, a composite PMF score was computed as follows:

$$\mathrm{PMF}_j = \frac{G_j - F_j}{N_j}$$

Here, $G_j$ and $F_j$ represent the number of gain- and gap-related signals associated with job j, and $N_j$ indicates the total number of occurrences of that job within the corpus. A higher PMF score indicates stronger alignment between customer expectations and delivered value, whereas a lower score reveals latent dissatisfaction or structural weaknesses in product–market alignment. This composite measure provides a quantitative yet interpretable framework for evaluating how effectively the product fulfills core customer needs across heterogeneous usage conditions. PMF scores were computed for the full dataset and separately for each product generation.
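The following sketches the per-job PMF computation. It assumes that the score is the gain count minus the gap count for job j, divided by the job's total number of occurrences N_j. Several further choices are assumptions made for illustration: every gain and gap in a review is attributed to each job mentioned in that review, the record keys are hypothetical, and so is the `model` field used for per-generation scores.

```python
from collections import Counter


def pmf_scores(records, model=None):
    """Assumed PMF_j = (gain signals - gap signals) / occurrences of job j."""
    gains, gaps, totals = Counter(), Counter(), Counter()
    for rec in records:
        if model is not None and rec.get("model") != model:
            continue  # restrict to one product generation
        n_gain = len(rec.get("customer_gains", []))  # hypothetical keys
        n_gap = len(rec.get("feature_gaps", []))
        for job in set(rec.get("customer_jobs", [])):
            totals[job] += 1
            gains[job] += n_gain  # assumption: every signal in a review counts
            gaps[job] += n_gap    # toward each job mentioned in that review
    return {job: (gains[job] - gaps[job]) / totals[job] for job in totals}
```

Calling `pmf_scores(records)` gives corpus-wide scores, and passing a model name such as `"Galaxy Watch 5"` restricts the computation to one generation.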

4. Results

4.1. Distributional Patterns of Customer Value Signals

A frequency-based analysis was conducted to examine the distributional patterns of standardized customer value signals extracted across the six analytical dimensions of the VPC. The resulting frequency distributions summarize how often each extracted customer value category (Customer Jobs, Pains, Gains, etc.) appeared in the review corpus. In the case of “Customer Jobs”, 14 different category labels were identified, reflecting the diverse functional purposes associated with smartwatch use. The three most frequently observed jobs were productivity management (n = 2370), device usage experience (n = 1923), and social connection needs (n = 1498). These results indicate that customers primarily engage with the smartwatch to enhance personal efficiency, manage device-related interactions, and maintain social connectivity, highlighting its dual role as both a productivity facilitator and a lifestyle integration tool. (See Appendix B, Tables A1–A5, for the corresponding tabular distributions.)

Within the “Customer Gains” dimension, 11 distinct category labels were identified, reflecting various forms of added value and user satisfaction derived from the smartwatch. The top 3 most prevalent gain categories were information accessibility (n = 2452), product attributes (n = 2012), and product convenience (n = 1756). These findings indicate that customers derive the greatest value from the smartwatch’s ability to provide instant information access and deliver reliable, appealing performance, underscoring its role as an integrated tool that enhances both functional efficiency and lifestyle optimization.

Analysis of the “Customer Pains” data revealed 10 distinct category labels capturing the primary sources of dissatisfaction in product usage. The top 3 most frequently mentioned pain categories were battery life problems (n = 984), user experience issues (n = 752), and device usability problems (n = 629). These results indicate that customer dissatisfaction is primarily driven by concerns over limited battery endurance, inconsistent system performance, and functional malfunctions in specific device features, highlighting the need for technical refinements that enhance reliability, efficiency, and overall user experience.

Analysis of the “Feature Gaps” data identified 14 distinct category labels representing areas where customers perceived functional or design-related shortcomings. The top 3 most frequently mentioned gap categories were battery life concerns (n = 638), user experience features (n = 370), and functionality issues (n = 265). These findings suggest that customer-perceived gaps are primarily associated with insufficient battery performance, shortcomings in user experience and interface design, and limited or unstable feature functionality, emphasizing the need for targeted improvements in performance stability and user-centered feature enhancement.

Regarding the “Usage Context” dimension, 11 distinct category labels were identified, describing the diverse situations and routines in which the smartwatch is utilized. The three most frequently mentioned contexts were daily life concerns (n = 1029), personal device usage (n = 940), and fitness activities (n = 928). These findings suggest that smartwatch use is deeply embedded in users’ everyday habits, personal device interactions, and fitness routines, indicating that the product functions as a seamlessly integrated companion supporting both daily convenience and fitness-oriented lifestyles.

For “Emotions”, the distribution revealed a predominantly positive emotional profile dominated by joy and love. As shown in Table 3, joy was the most frequently observed emotion (n = 4265), followed by love (n = 2399), frustration (n = 1298), and trust (n = 148). Anger (n = 114), surprise (n = 83), sadness (n = 39), disgust (n = 27), and fear (n = 4) appeared far less frequently, while neutral expressions accounted for a minor share (n = 106). This distribution indicates that overall customer sentiment toward the product was strongly positive; frustration and the less frequent negative emotions reflect localized dissatisfaction rather than widespread discontent. The dominance of joy and love further implies a highly favorable user experience, whereas the presence of frustration points to specific usability or performance gaps that merit closer examination in subsequent analyses. This overall positivity is consistent with the average star rating of 4.54 out of 5 across the review corpus, suggesting that affective tone and quantitative satisfaction metrics converge on a favorable customer perception of the product.
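Converting the Table 3 counts into shares makes the “predominantly positive” reading concrete: across the 8,483 emotion labels reported, joy and love together account for about 78.6%, while frustration accounts for about 15.3%. The short Python sketch below reproduces that arithmetic from the counts given in the text; grouping joy and love as the positive core is an illustrative choice, not a classification taken from the study.

```python
# Emotion counts as reported in the text for Table 3.
emotion_counts = {
    "joy": 4265, "love": 2399, "frustration": 1298, "trust": 148,
    "anger": 114, "neutral": 106, "surprise": 83, "sadness": 39,
    "disgust": 27, "fear": 4,
}

total = sum(emotion_counts.values())
shares = {label: count / total for label, count in emotion_counts.items()}

# Illustrative grouping: joy and love treated as the positive core.
positive_share = shares["joy"] + shares["love"]

for label, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{label:<12} {share:6.1%}")
print(f"{'joy + love':<12} {positive_share:6.1%}")
```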

4.2. Gap Density Analysis

Following the gap density procedure, a gap density ratio was calculated for each “Customer Jobs” category. Figure 3 visualizes, for every job category, the proportion of “gap-only” instances, in which a customer job was mentioned without a corresponding gain signal. Product usage experience and device usage experience exhibit the highest gap densities, indicating that customers most frequently reported unmet needs related to operational performance and device interaction quality. Moderate gap densities were also observed for categories such as time management tools and notification management, suggesting persistent limitations in functionality integration and responsiveness. In contrast, health tracking and sleep tracking features showed relatively low gap ratios, implying that core wellness-related functions generally meet user expectations. Overall, perceived deficiencies are concentrated in interaction- and usability-oriented dimensions rather than in fundamental monitoring capabilities. Although the gap density ratios were relatively low overall, their consistent presence across multiple interaction-related job categories points to latent opportunities for performance and usability enhancement.
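A gap density ratio of this kind can be computed per job as the share of its mentions that are gap-only. The sketch below assumes review-level binary gain/gap flags (the conclusions note a binary review-level treatment) and reads “gap-only” as a mention carrying a gap signal and no gain signal; the record layout and toy data are hypothetical, and the study's exact rule may differ.

```python
from collections import defaultdict

# Hypothetical review-level records: job labels plus binary gain/gap flags (toy data).
records = [
    {"jobs": ["device usage experience"], "gain": False, "gap": True},
    {"jobs": ["device usage experience", "health tracking"], "gain": True, "gap": False},
    {"jobs": ["health tracking"], "gain": True, "gap": True},
    {"jobs": ["device usage experience"], "gain": False, "gap": True},
]


def gap_density(rows):
    """Share of each job's mentions that carry a gap signal but no gain signal."""
    mentions = defaultdict(int)
    gap_only = defaultdict(int)
    for row in rows:
        is_gap_only = row["gap"] and not row["gain"]
        for job in set(row["jobs"]):  # count each job once per review
            mentions[job] += 1
            if is_gap_only:
                gap_only[job] += 1
    return {job: gap_only[job] / mentions[job] for job in mentions}


print(gap_density(records))
```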

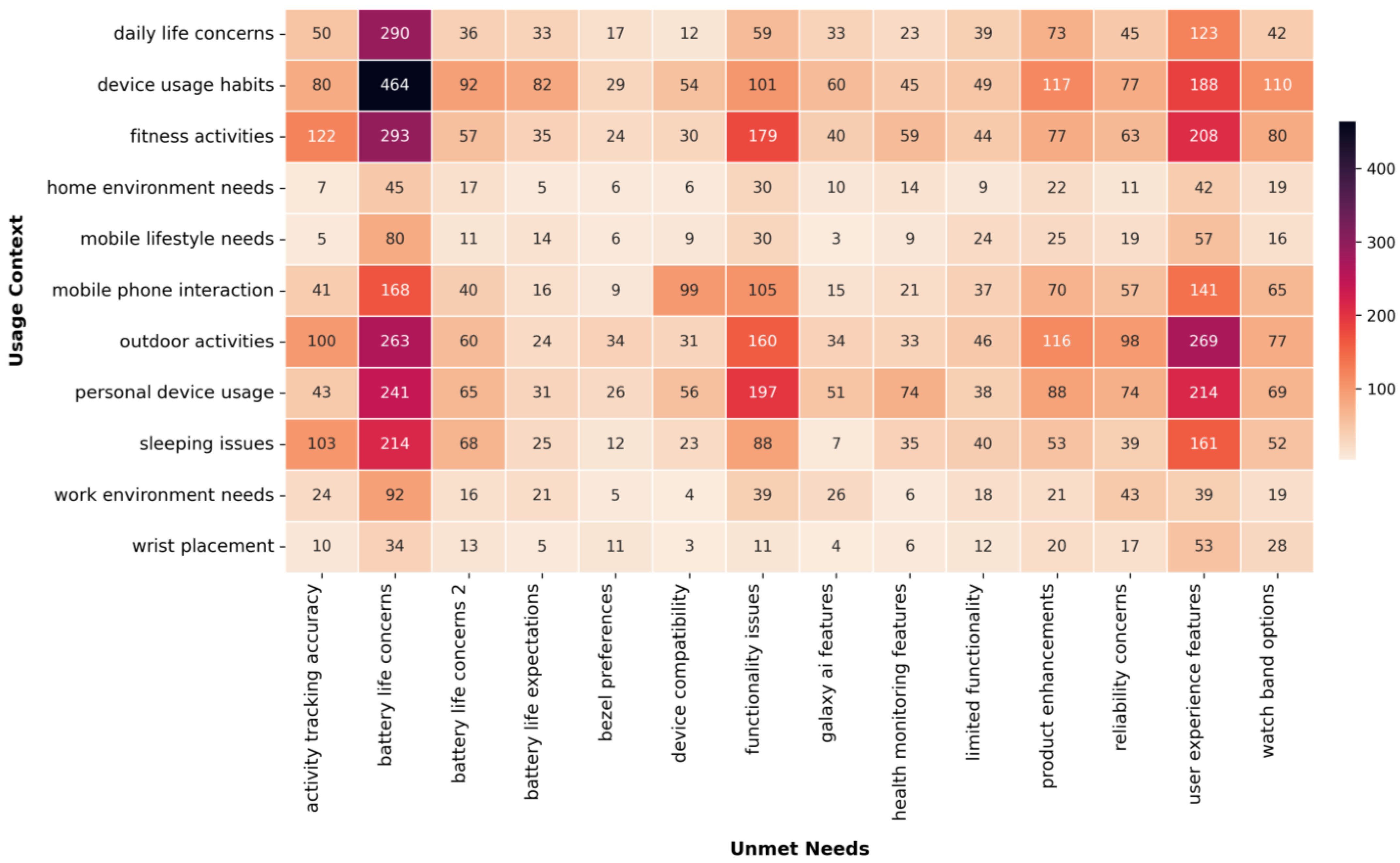

4.3. Context–Gap Matrix Analysis

As shown in Figure 4, the visualization highlights specific contexts in which functional shortcomings are repeatedly mentioned, providing insights into which usage situations require targeted product refinement. The color scale indicates the frequency of co-occurrence between each usage context and unmet need, with deeper color intensity representing higher concentrations of reported deficiencies. Notably, the highest gap intensities are observed in “device usage habits”, “fitness activities” and “daily life concerns”, primarily driven by persistent issues related to battery life and feature reliability. This pattern suggests that customers most frequently experience dissatisfaction during prolonged or performance-intensive use, where power consumption and functional consistency become critical factors. Conversely, contexts such as “home environment needs” and “mobile lifestyle needs” exhibit relatively low gap frequencies, indicating that environment-dependent interactions contribute less to overall dissatisfaction. Overall, the distribution underscores that perceived deficiencies are predominantly associated with usage contexts involving sustained device operation and active engagement, emphasizing the importance of optimizing power efficiency and performance stability.
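The heatmap cells correspond to counts of (usage context, feature gap) pairs that co-occur in the same review. A minimal Python sketch of that cross-tabulation follows; the per-review fields and toy data are hypothetical, and counting each pair once per review is an assumption.

```python
from collections import Counter

# Hypothetical per-review extraction: usage contexts and feature-gap labels (toy data).
reviews = [
    {"contexts": ["fitness activities"], "gaps": ["battery life concerns"]},
    {"contexts": ["fitness activities", "daily life concerns"],
     "gaps": ["battery life concerns", "functionality issues"]},
    {"contexts": ["home environment needs"], "gaps": []},
]


def context_gap_matrix(rows):
    """Count co-occurrences of (usage context, feature gap) within the same review."""
    matrix = Counter()
    for row in rows:
        for context in set(row["contexts"]):
            for gap in set(row["gaps"]):
                matrix[(context, gap)] += 1
    return matrix


def to_grid(matrix):
    """Arrange pair counts as a dense grid (rows = contexts, columns = gaps) for plotting."""
    contexts = sorted({context for context, _ in matrix})
    gaps = sorted({gap for _, gap in matrix})
    grid = [[matrix[(context, gap)] for gap in gaps] for context in contexts]
    return contexts, gaps, grid


print(to_grid(context_gap_matrix(reviews)))
```

Contexts that never co-occur with a gap (here, the home-environment review) drop out of the grid entirely, which is one reason low-gap contexts appear pale or absent in such a heatmap.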

4.4. Product–Market Fit Analysis

As shown in Figure 5, the visualization presents the comparative distribution of gain and gap signals across customer jobs, illustrating the balance between perceived benefits and performance deficiencies. “Productivity management” and “device usage experience” exhibit the highest overall gain counts, suggesting that customers consistently recognize these areas as primary sources of value and satisfaction. However, these same categories also display relatively high gap counts, implying that while they contribute strongly to perceived utility, they are also the domains where functional or performance-related issues are most frequently encountered. By contrast, jobs such as “music experience”, “personal style preferences”, and “phone call management” show lower gain and gap frequencies, suggesting a more limited role in overall user satisfaction. Collectively, this pattern suggests that the smartwatch’s product–market fit is strongest in productivity- and interaction-oriented contexts, yet sustained improvements in reliability and responsiveness remain critical for reinforcing perceived value in high-engagement use cases.

Next, the PMF score was calculated following Equation (1); the results are shown in Table 4. The resulting PMF values vary discernibly across customer jobs, reflecting differing levels of alignment between user expectations and perceived performance. Customer Jobs such as “productivity management”, “social connection needs”, and “health monitoring tools” achieved the highest PMF scores, indicating strong alignment between functional value and user satisfaction. Conversely, lower PMF values were observed for categories such as “watch usage experience” and “time management tools”, suggesting that these domains contribute less to overall perceived fit or occupy a secondary position in users’ value hierarchy. Collectively, the PMF score analysis indicates that the smartwatch achieves its strongest product–market alignment in use cases emphasizing productivity enhancement, continuous interaction, and health-related functionality, while further improvements in peripheral features could broaden its market appeal. In particular, within the health monitoring family of jobs, “heart rate monitoring” exhibits a comparatively modest PMF (0.302), noticeably below “health monitoring tools” (0.329) and “health tracking features” (0.326), which may reflect residual concerns about sensing reliability and measurement stability. Overall, the PMF scores span a relatively narrow band (0.201–0.393), yet the dispersion is sufficient to set priorities at the lower end, especially “watch usage experience” (0.201) and “time management tools” (0.271). Targeted improvements in power efficiency, interaction latency, and the consistency of core sensing functions are therefore expected to yield the largest marginal gains in perceived product–market fit.
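Equation (1) is not reproduced in this section, so the sketch below is only an illustrative linear composite of the three terms the text names (gain rate, gap rate, and job coverage). The rate definitions, the sign convention (gains and coverage rewarded, gaps penalized), and the default weights (0.5, 0.3, 0.2) are all assumptions and should not be read as the study's Equation (1); the job names and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobStats:
    mentions: int   # reviews mentioning the job
    with_gain: int  # of those, reviews carrying a gain signal
    with_gap: int   # of those, reviews carrying a gap signal


def pmf_score(stats, total_reviews, w_gain=0.5, w_gap=0.3, w_cov=0.2):
    """Illustrative linear composite; functional form and weights are assumptions."""
    if stats.mentions == 0:
        raise ValueError("job has no mentions")
    gain_rate = stats.with_gain / stats.mentions
    gap_rate = stats.with_gap / stats.mentions
    coverage = stats.mentions / total_reviews
    return w_gain * gain_rate - w_gap * gap_rate + w_cov * coverage


def rank_jobs(job_stats, total_reviews, **weights):
    """Score every job and return (jobs ordered high to low, score map)."""
    scores = {job: pmf_score(s, total_reviews, **weights) for job, s in job_stats.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


# Hypothetical inputs for two jobs out of 1,000 reviews.
jobs = {
    "job A": JobStats(mentions=400, with_gain=300, with_gap=40),
    "job B": JobStats(mentions=150, with_gain=70, with_gap=45),
}
ranked, scores = rank_jobs(jobs, total_reviews=1000)
print(ranked, scores)
```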

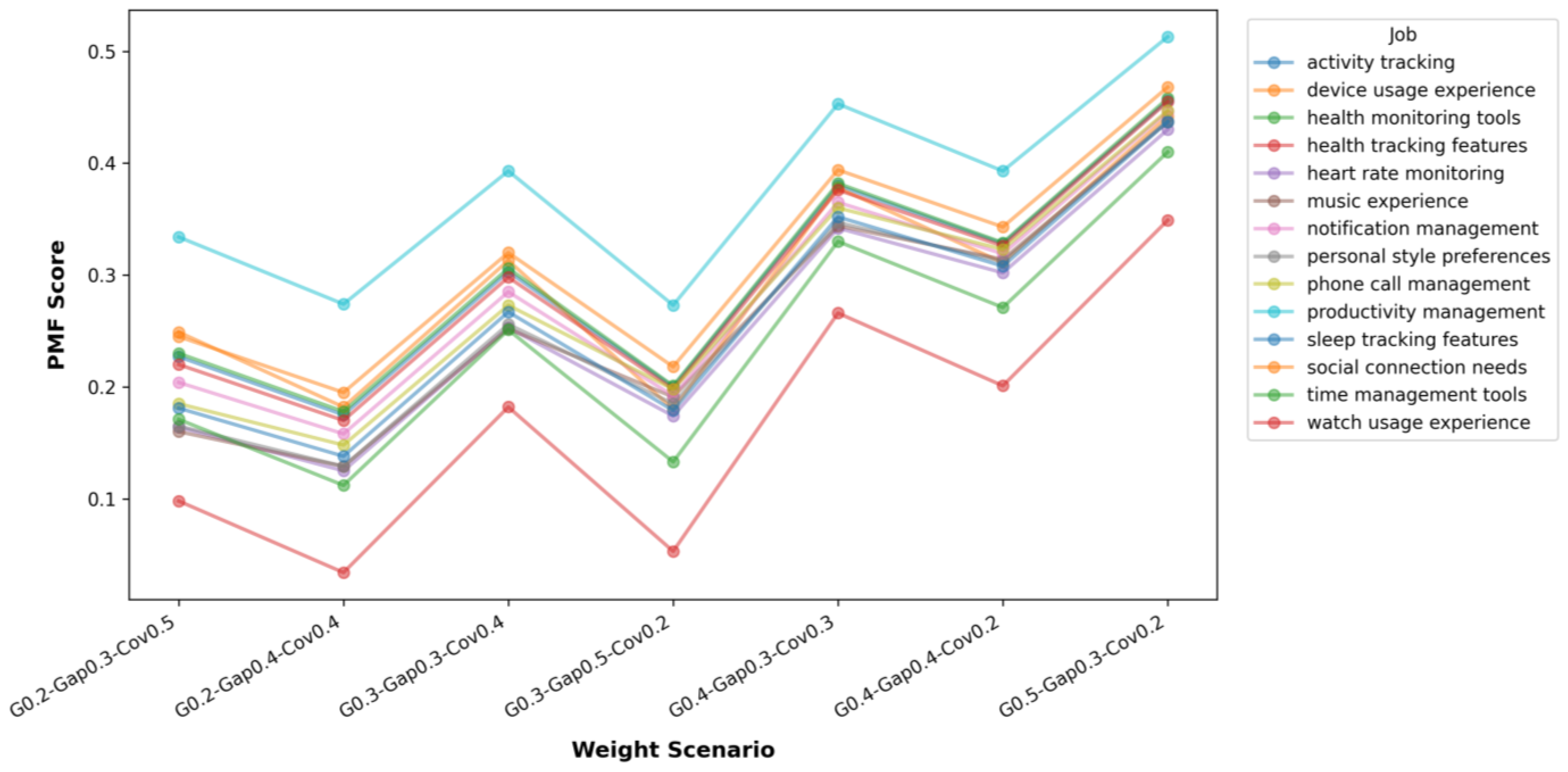

Because the baseline PMF score employed fixed weights, we conducted a sensitivity analysis using alternative weight combinations for the gain rate, gap rate, and job coverage terms. As shown in Figure 6, varying the weights shifts absolute PMF levels but does not materially alter the relative ordering of customer jobs across the tested scenarios. High-ranked categories (e.g., productivity management, social connection needs) remain consistently above the rest, whereas low-ranked categories (e.g., watch usage experience, time management tools) persist at the lower end. This pattern indicates that the main conclusions are robust to reasonable changes in weighting and that the inferred product–market fit hierarchy is not an artifact of a particular weight specification.
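A sensitivity check of this kind recomputes the composite under each weight scenario and compares the resulting orderings. The Python sketch below assumes the same illustrative linear form (gains and coverage rewarded, gaps penalized); the scenario grid and the per-job terms are hypothetical toy values, not the study's.

```python
# Hypothetical per-job terms (gain_rate, gap_rate, coverage); toy values only.
JOB_TERMS = {
    "job A": (0.75, 0.10, 0.40),
    "job B": (0.60, 0.15, 0.25),
    "job C": (0.47, 0.30, 0.15),
}

# Alternative (w_gain, w_gap, w_cov) scenarios, each summing to 1 (assumption).
SCENARIOS = [
    (0.5, 0.3, 0.2),  # baseline
    (0.4, 0.4, 0.2),  # gap emphasis
    (0.6, 0.2, 0.2),  # gain emphasis
    (0.4, 0.3, 0.3),  # coverage emphasis
]


def score(terms, weights):
    """Assumed linear composite: reward gains and coverage, penalize gaps."""
    gain_rate, gap_rate, coverage = terms
    w_gain, w_gap, w_cov = weights
    return w_gain * gain_rate - w_gap * gap_rate + w_cov * coverage


def ranking(weights):
    """Jobs ordered from highest to lowest score under one weight scenario."""
    return sorted(JOB_TERMS, key=lambda job: score(JOB_TERMS[job], weights), reverse=True)


rankings = [ranking(weights) for weights in SCENARIOS]
order_is_stable = all(r == rankings[0] for r in rankings)

# Absolute level of the gap-heavy job under the baseline vs. the gap-emphasis scenario.
baseline_c = score(JOB_TERMS["job C"], SCENARIOS[0])
gap_heavy_c = score(JOB_TERMS["job C"], SCENARIOS[1])
print(order_is_stable, round(baseline_c, 3), round(gap_heavy_c, 3))
```

In this toy example each job dominates the next on all three terms, so no nonnegative weighting can reorder them; with real data crossovers are possible, which is exactly what the sensitivity analysis guards against.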

Additionally, to evaluate whether the relative ordering of customer jobs is stable across the alternative weighting schemes, the ranks from each weight scenario were aggregated and assessed using Kendall’s coefficient of concordance (W). Kendall’s W quantifies agreement among multiple ranked lists (0 = no agreement; 1 = complete agreement). Across all tested scenarios, the analysis returned W = 0.921 (p < 0.001), indicating very strong and statistically significant concordance of the rankings across scenarios.
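For m ranked lists over n items, Kendall's W is 12S / (m²(n³ − n)), where S is the sum of squared deviations of each item's rank sum from the mean rank sum m(n + 1)/2; the statistic m(n − 1)W is approximately chi-square with n − 1 degrees of freedom. A stdlib-only Python sketch follows, treating weight scenarios as the "raters" and customer jobs as the ranked items; it omits the correction for tied ranks, and the example ranks are hypothetical.

```python
def kendalls_w(rank_lists):
    """Kendall's coefficient of concordance W, without a correction for ties.

    rank_lists: m lists (one per weight scenario), each giving ranks 1..n for
    the same n customer jobs listed in the same order.
    """
    m = len(rank_lists)
    n = len(rank_lists[0])
    if any(len(ranks) != n for ranks in rank_lists):
        raise ValueError("every scenario must rank the same jobs")
    rank_sums = [sum(ranks[i] for ranks in rank_lists) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((total - mean_rank_sum) ** 2 for total in rank_sums)
    w = 12 * s / (m ** 2 * (n ** 3 - n))
    # Large-sample approximation: chi_sq is ~ chi-square with n - 1 degrees of freedom.
    chi_sq = m * (n - 1) * w
    return w, chi_sq


# Hypothetical: three scenarios ranking four jobs, with one adjacent swap.
w, chi_sq = kendalls_w([[1, 2, 3, 4], [1, 2, 3, 4], [1, 3, 2, 4]])
print(round(w, 3), round(chi_sq, 2))
```

If SciPy is available, a p-value can then be obtained with `scipy.stats.chi2.sf(chi_sq, n - 1)`.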

Moreover, although the relative ordering is largely preserved across weight scenarios, emphasizing the gap rate lowers the absolute PMF levels of gap-heavy jobs and widens their separation from higher-performing categories. In practice, a higher gap weight can push a gap-heavy job closer to, or below, an intervention threshold and thus trigger earlier remediation, even if the rank order is unchanged. Accordingly, the relative priorities can be maintained with confidence in future product planning, while shifts in absolute PMF scores under alternative weights may still inform threshold-based resourcing and timing decisions.
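Why absolute levels can move while ranks hold is easiest to see under an assumed linear composite; this form is illustrative and not necessarily the study's Equation (1). Let G_j, D_j, and C_j denote job j's gain rate, gap rate, and coverage, and let τ be a hypothetical intervention threshold. Raising the gap weight by δ > 0 with the other weights fixed gives:

```latex
\begin{aligned}
\mathrm{PMF}_j(\mathbf{w}) &= w_{\mathrm{gain}}\,G_j - w_{\mathrm{gap}}\,D_j + w_{\mathrm{cov}}\,C_j
  && \text{(assumed form)}\\
\frac{\partial\,\mathrm{PMF}_j}{\partial w_{\mathrm{gap}}} &= -D_j
  && \text{(other weights fixed)}\\
(\mathrm{PMF}_j - \mathrm{PMF}_k)\big|_{w_{\mathrm{gap}}+\delta} &= (\mathrm{PMF}_j - \mathrm{PMF}_k)\big|_{w_{\mathrm{gap}}} - \delta\,(D_j - D_k)\\
\mathrm{PMF}_j - \delta\,D_j \le \tau &\iff \delta \ge \frac{\mathrm{PMF}_j - \tau}{D_j}
  && (D_j > 0)
\end{aligned}
```

Every job drops by δD_j, so gap-heavy jobs fall furthest and can cross τ quickly, whereas a rank reversal between a job j and a lower-gap job k ranked just below it requires δ to exceed (PMF_j − PMF_k)/(D_j − D_k), which may never occur within a plausible weight range.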

4.5. Variations Across Product Generations

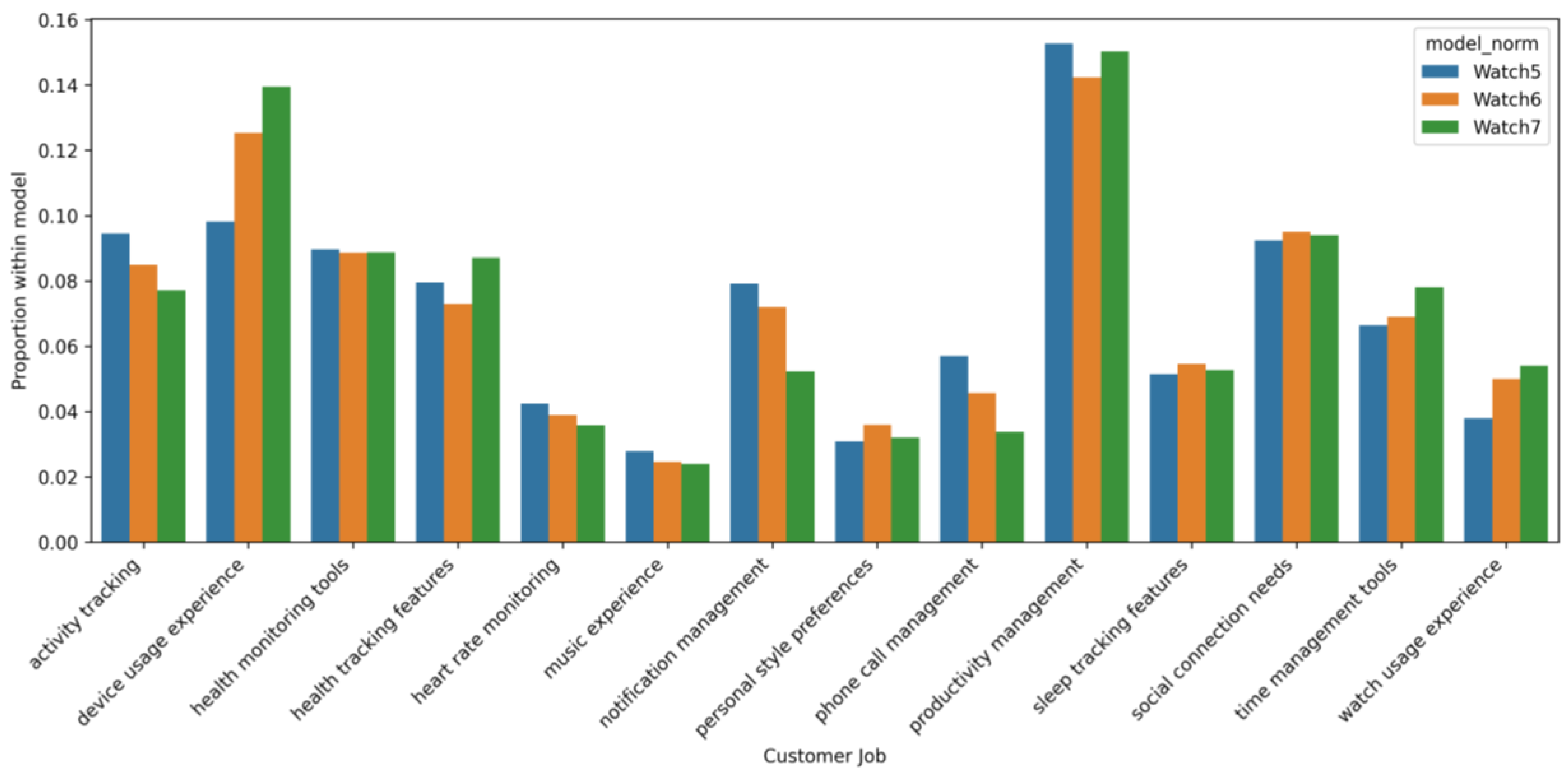

Lastly, as the analyzed data span three product generations (Watch 5–7), we examined cross-generation shifts in the prominence of customer jobs and the associated PMF scores. A chi-square test of independence indicated a significant association between product generation and job distributions (χ²(26) = 147.37, p < 0.001), implying that the mix of jobs discussed by customers changes across releases.
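The test compares observed job-by-generation counts with the counts expected under independence (row total × column total / grand total). With 14 job categories and 3 generations, the degrees of freedom are (14 − 1)(3 − 1) = 26, consistent with the reported χ²(26). The stdlib-only Python sketch below computes the Pearson statistic without a continuity correction; the input layout is an assumption, and the example tables are hypothetical.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    table[i][j] = number of mentions of job i in product generation j.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("drop empty rows/columns before testing")
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


stat, dof = chi_square_independence([[10, 20], [20, 10]])
print(round(stat, 3), dof)
```

A p-value follows from `scipy.stats.chi2.sf(stat, dof)`; `scipy.stats.chi2_contingency` performs the whole test but applies Yates' correction by default when there is exactly one degree of freedom.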

Figure 7 depicts proportional shifts across job category labels. “Device usage experience”, “watch usage experience”, and “time management tools” increase monotonically from Watch 5 to Watch 7, whereas “activity tracking”, “phone call management” and “notification management” exhibit a downward trend. “Productivity management” remains the single most prominent job across all generations, with only minor fluctuations, and “sleep tracking features” is comparatively stable.
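Proportional shifts of this kind normalize job counts within each generation and then inspect each job's trajectory from Watch 5 to Watch 7. The Python sketch below illustrates one way to label a trajectory as increasing, decreasing, or mixed; the counts and job names are hypothetical, and treating a job absent from a generation as a zero share is an assumption.

```python
GENERATIONS = ["Watch 5", "Watch 6", "Watch 7"]

# Hypothetical job-mention counts per generation (toy data).
counts = {
    "Watch 5": {"job A": 10, "job B": 30},
    "Watch 6": {"job A": 20, "job B": 30},
    "Watch 7": {"job A": 30, "job B": 20},
}


def generation_shares(job_counts):
    """Convert per-generation job counts into within-generation proportions."""
    shares = {}
    for gen in GENERATIONS:
        total = sum(job_counts[gen].values())
        shares[gen] = {job: n / total for job, n in job_counts[gen].items()}
    return shares


def trend(shares, job):
    """Label a job's share trajectory across generations."""
    series = [shares[gen].get(job, 0.0) for gen in GENERATIONS]
    steps = list(zip(series, series[1:]))
    if all(a < b for a, b in steps):
        return "increasing"
    if all(a > b for a, b in steps):
        return "decreasing"
    return "mixed"


shares = generation_shares(counts)
print(trend(shares, "job A"), trend(shares, "job B"))
```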

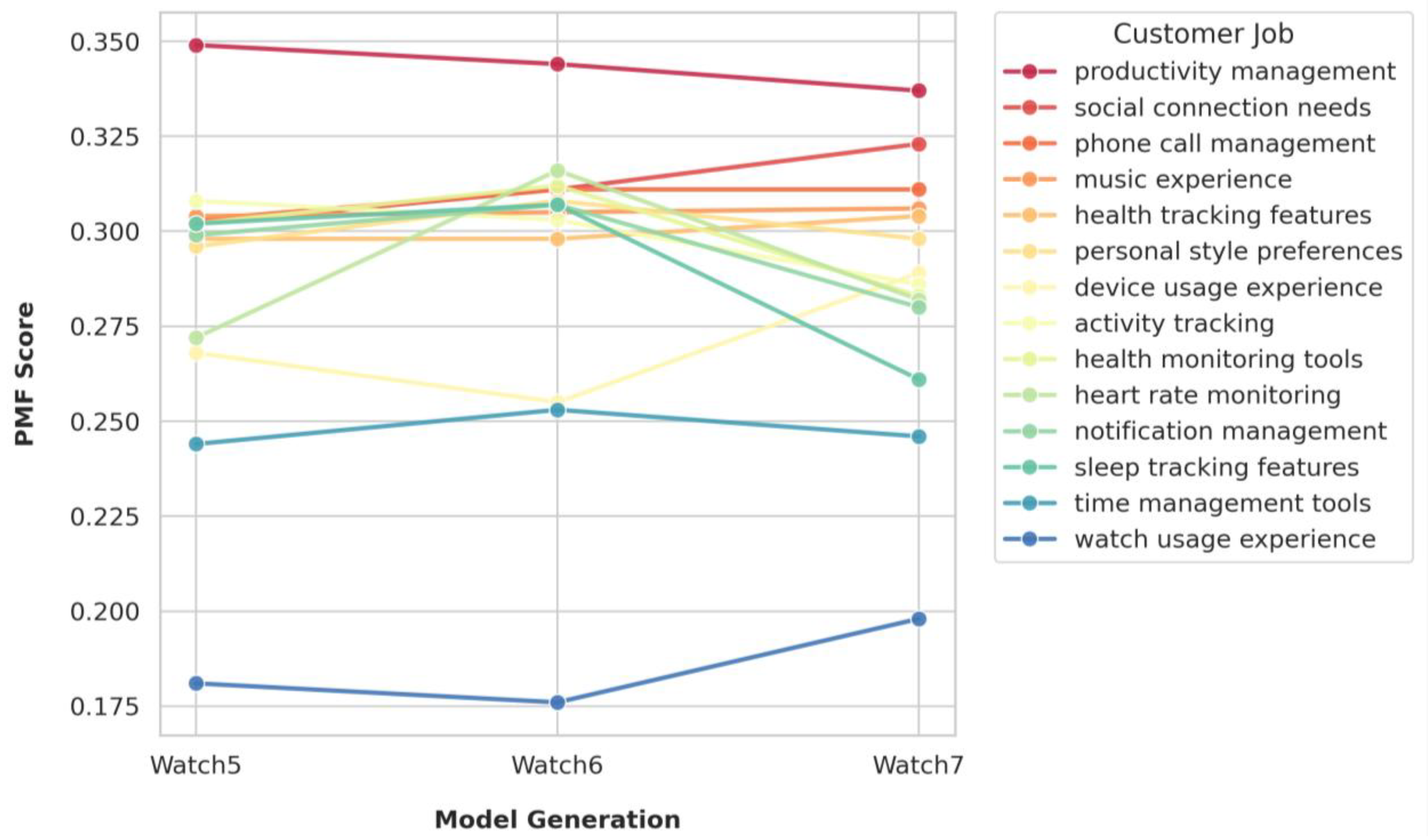

Table 5 summarizes the PMF scores for each customer job across product generations. “Productivity management” remains the top-ranked job in all models, while “device usage experience” and “social connection needs” show positive Δ (7–5) values, indicating improved alignment in later generations. In contrast, “sleep tracking features” and “activity tracking” exhibit a modest decline in PMF by Watch 7, suggesting that monitoring-centric functionality has not uniformly benefited from generational updates.
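The Δ (7–5) column is the difference between a job's Watch 7 and Watch 5 PMF scores; sorting by it separates jobs whose alignment improved from those that weakened. The sketch below illustrates this with hypothetical scores (not the Table 5 values) and skips jobs lacking a score in either endpoint generation.

```python
def generation_deltas(pmf_by_job, first="Watch 5", last="Watch 7"):
    """Return (job, PMF[last] - PMF[first]) pairs, from largest gain to largest drop."""
    deltas = {
        job: scores[last] - scores[first]
        for job, scores in pmf_by_job.items()
        if first in scores and last in scores
    }
    return sorted(deltas.items(), key=lambda item: item[1], reverse=True)


# Hypothetical PMF scores per generation (illustrative only).
pmf_by_job = {
    "job A": {"Watch 5": 0.30, "Watch 6": 0.33, "Watch 7": 0.35},
    "job B": {"Watch 5": 0.32, "Watch 6": 0.31, "Watch 7": 0.29},
    "job C": {"Watch 6": 0.25, "Watch 7": 0.27},
}
for job, delta in generation_deltas(pmf_by_job):
    print(f"{job}: {delta:+.3f}")
```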

Figure 8 visualizes the changes in PMF scores across product generations to facilitate comparison of trends and generational differences. In addition, the derived metrics (e.g., gap density and PMF score) offer practical implications for data-driven product and UX management. These indicators can help decision-makers identify feature areas with high improvement potential and quantify how effectively current products address user needs. For instance, a high gap density in a specific functional domain may signal a priority for feature enhancement, while PMF trends across generations can inform product roadmap adjustments. Moreover, these measures can serve as quantitative inputs for setting key performance indicators (KPIs) related to user satisfaction or innovation outcomes. By integrating such metrics into the product development workflow, organizations can more systematically align design and investment decisions with empirically grounded customer insights. Accordingly, the framework offers both theoretical grounding and practical relevance for strategic decision-making.

5. Discussion

Building on the empirical results, this section synthesizes the study’s contributions and practical takeaways. Together, the analyses characterize how customers experience and evaluate the smartwatch: a predominantly positive affect, unmet needs concentrated around battery life and interaction quality, and a PMF ranking that persists under alternative weighting schemes. Meanwhile, the cross-generation PMF table functions as a compact diagnostic, with row-wise comparisons showing generation-specific alignment and Δ columns revealing directional change, thereby translating the empirical patterns into actionable priorities for product refinement.

In terms of theoretical implications, this study advances a computational bridge between unstructured review text and formal value proposition theory. By operationalizing “Jobs–Pains–Gains” as extractable signals via LLMs and mapping them to clusterable semantic spaces, the framework moves beyond sentiment or topic prevalence to recover actionable value constructs at scale. The integration of gap density diagnostics and a composite PMF index (balancing gain rate, gap rate, and coverage) yields a quantitative–qualitative coupling that ties narrative evidence to strategy-ready metrics across product generations. Methodologically, the sensitivity analysis of PMF weights and the concordance testing of rankings indicated that the inferred value hierarchy is robust rather than weight-dependent, strengthening confidence in the internal consistency of the findings. The cross-generation distributional tests further corroborate that the framework can detect structurally meaningful shifts over successive releases. Collectively, these elements contribute a generalizable pipeline for theory-driven text analytics that could be applied across product domains without extensive labeled data.

In terms of managerial implications, the results indicate where and how product and UX teams should intervene. First, high-engagement jobs (notably productivity management and health monitoring) deliver the greatest perceived value (high PMF) yet still exhibit salient gaps around battery endurance and interaction stability in everyday and fitness contexts. Hence, targeted work on power efficiency, interaction latency, and core sensing reliability is most likely to yield outsized gains. Second, the context–gap matrix pinpoints usage situations (e.g., device usage habits, daily life, fitness activities) in which deficiencies concentrate, guiding scenario-specific improvements and test plans rather than generic feature tweaks. Third, the generation-wise PMF table enables roadmap sequencing by highlighting which jobs strengthened or weakened in alignment, thereby supporting cohort- and release-specific prioritization. Fourth, jobs with consistently low PMF (e.g., watch usage experience, time management tools) should be considered for scope reduction, redesign, or bundling with higher-value flows to avoid diluting perceived fit. Finally, because the PMF ranking remains stable under plausible weighting schemes, cross-functional product and UX teams, together with data science and engineering, can rely on these priorities to set KPIs (e.g., gap-only ratio targets) and to design experiments (e.g., A/B tests tied to job-level gains and gaps), thereby increasing confidence that decisions are robust to modeling choices.

However, the practical utility of the proposed framework is constrained by several methodological and contextual dependencies. Because the analysis relies on customer reviews collected from a single e-commerce platform, representativeness may be affected by demographic or platform-specific biases. Future efforts should incorporate review data from multiple sources (e.g., Amazon) to mitigate sampling bias and enhance generalizability. In addition, the framework’s reliance on a specific LLM introduces inherent variability related to model configuration, training corpus composition, and prompt sensitivity. To mitigate this variability, the present study adopted deterministic temperature settings, schema validation, and checkpointing mechanisms to improve output stability. Nonetheless, future research could employ ensemble-based approaches that integrate multiple LLMs to enhance robustness and reduce single-model bias. For instance, outputs derived from different LLM architectures (e.g., Llama or GPT) could be aggregated using majority voting or agreement-based filtering, so that only consistently inferred insights are retained. Such cross-model validation would allow the framework to identify stable semantic patterns across architectures, thereby improving confidence in extracted customer signals and mitigating idiosyncratic model effects.
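The agreement-based filtering proposed above could be as simple as retaining a label only when enough models extract it for the same review. The Python sketch below is a hypothetical illustration of that future-work idea, not part of the present study's pipeline; the three model outputs are invented, and the default strict-majority threshold is an assumption.

```python
from collections import Counter


def agreement_filter(model_outputs, min_votes=None):
    """Keep labels extracted by at least `min_votes` models (default: strict majority).

    model_outputs: one collection of labels per model, all for the same review.
    """
    n_models = len(model_outputs)
    if min_votes is None:
        min_votes = n_models // 2 + 1
    votes = Counter()
    for labels in model_outputs:
        votes.update(set(labels))  # each model votes at most once per label
    return {label for label, count in votes.items() if count >= min_votes}


# Hypothetical pain labels from three different LLMs for one review.
outputs = [
    {"battery life problems", "user experience issues"},
    {"battery life problems"},
    {"battery life problems", "device usability problems"},
]
print(agreement_filter(outputs))
```

Raising `min_votes` to the number of models turns majority voting into unanimous agreement, trading recall for precision in the retained signals.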

In sum, the study demonstrates how LLM-enabled value extraction, coupled with clustering and PMF-based synthesis, can translate raw customer voice into defensible product strategy. It can advance theory on value modeling while providing managers with precise, context-aware levers for improvement. By incorporating cross-generation analyses, the framework not only diagnoses current pain points and value drivers but also tracks how alignment evolves across releases, thereby informing evidence-based product planning.

6. Conclusions

Using LLMs, this study extracted and classified information related to jobs, pains, and gains from real consumer reviews, thereby introducing a new quantitative approach for interpreting large-scale VOC data. Practically, the derived outputs (e.g., frequency profiles, context–gap matrices, and generation-wise PMF scores) serve as compact diagnostics that help prioritize where and how to intervene, supporting KPI design and experiment planning in a data-driven manner. The proposed approach is model-agnostic and scalable, requiring no extensive task-specific labels, which facilitates transfer to adjacent product domains.

Despite its contributions, this study comes with certain limitations. First, the dataset is drawn from a single retailer platform and a limited time window, which may introduce selection and platform-specific biases. Second, extraction and labeling remain sensitive to prompt design, LLM configuration, and embedding choices. Although the analysis and experimental procedures are documented in detail, alternative prompt designs and model configurations should be systematically evaluated. Third, several operational choices (e.g., binary treatment of gain/gap at the review level, heuristic PMF weights) impose simplifying assumptions that may compress nuance in multi-signal reviews. Fourth, while expert–model agreement provided an initial validation of reliability, the study did not include comparative baselines, external validation on additional datasets, or quantitative performance metrics due to the absence of standardized benchmarks for VPC-aligned extraction. Building upon this research, future studies could establish comparative validation frameworks using larger and more diverse review corpora, enabling systematic evaluation across alternative model architectures and dataset conditions to further substantiate the robustness and generalizability of the proposed approach. Additionally, cross-model benchmarking lies beyond the present scope but is acknowledged as a limitation and designated as a priority for future research.

Accordingly, future research should test a broader range of LLMs and link the proposed metrics to downstream outcomes, such as A/B tests, churn/return rates, service logs, or telemetry, to support causal claims and to close the loop between insight generation and measurable product impact.