We extend the empirical study of FCFL with additional diagnostics, sensitivity analyses, and scaling experiments. All notation and algorithmic components follow Section 3 and Section 4. Unless stated otherwise, we target a fixed (ε, δ) privacy budget with RDP accounting, use per-example clipping C = 1.0, and employ secure aggregation. Metrics include MSE, MAE, directional accuracy (DA), annualized Sharpe ratio, and maximum drawdown (MDD). We also report rounds-to-target (RtT): the number of communication rounds needed to reach a target validation loss, defined as the minimum attained by a non-private centralized model trained on the same features.
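As an illustration of the RtT metric, the following minimal sketch scans a per-round validation-loss trace and returns the first round at which the target is met. The function name and the optional tolerance `tol` are assumptions introduced here for illustration; they are not taken from the FCFL implementation.

```python
from typing import Optional, Sequence


def rounds_to_target(val_losses: Sequence[float],
                     target_loss: float,
                     tol: float = 0.0) -> Optional[int]:
    """Return the 1-indexed first round whose validation loss is at most
    target_loss + tol, or None if the target is never reached.

    `tol` is a hypothetical slack term (default 0.0, i.e. strict target).
    """
    for round_idx, loss in enumerate(val_losses, start=1):
        if loss <= target_loss + tol:
            return round_idx
    return None


# Example trace: the target 0.30 is first reached in round 3.
print(rounds_to_target([0.90, 0.50, 0.30, 0.20], target_loss=0.30))
```

Returning `None` rather than the total number of rounds keeps runs that never reach the target distinguishable from runs that reach it in the final round.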

6.1. Datasets, Federation, and Model

Clients and non-IID splits. We simulate N = 20 cross-silo clients (banks/brokers). Each client holds equities from disjoint region buckets (U.S./EU) over 2015–2024 with aligned trading days and local forward-filling. Features (d = 52) include OHLCV-derived indicators, rolling technicals, macro factors, and calendar encodings; targets are one-step log-returns. Heterogeneity is induced by a Dirichlet allocation over tickers with concentration α; unless varied, α = 0.2.
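A Dirichlet allocation of tickers to clients can be sketched as follows. This is one common way to induce non-IID splits and is shown under stated assumptions: it draws a single proportion vector over clients and ignores the region buckets, and the function name and ticker labels are hypothetical. Small concentration values produce highly unequal client shares.

```python
import numpy as np


def dirichlet_partition(tickers, n_clients=20, alpha=0.2, seed=0):
    """Split a list of tickers into n_clients disjoint groups whose sizes
    follow proportions drawn from a symmetric Dirichlet(alpha)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(tickers))             # shuffle ticker indices
    proportions = rng.dirichlet(alpha * np.ones(n_clients))
    # Turn cumulative proportions into cut points over the shuffled indices.
    cuts = (np.cumsum(proportions)[:-1] * len(tickers)).astype(int)
    parts = np.split(order, cuts)
    return [[tickers[i] for i in part] for part in parts]


tickers = [f"TICKER_{i}" for i in range(100)]         # placeholder labels
parts = dirichlet_partition(tickers, n_clients=20, alpha=0.2, seed=0)
print(sorted(len(p) for p in parts))                  # some clients may be empty
```

With a fixed seed the partition is reproducible; some clients may receive no tickers at low concentration, which a real pipeline would need to handle (for example by resampling).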

Model and training protocol. We use a two-layer MLP (p ≈ 120 K) with GELU activations and layer normalization. Each round samples 10 clients with local batch size B = 256 and a nominal 5 local steps, unless curtailed by the drift budget in Equation (25); the server update uses the accelerated rule in Equation (9). Early stopping monitors the validation slice with a patience of 10 rounds. Three seeds are used.

Baselines. We compare against FedAvg + DP, FedProx + DP (proximal coefficient μ = 0.1), SCAFFOLD + DP, and a Centralized reference (non-private, pooled training). All DP baselines share the same privacy budget and accountant as FCFL. The specific details are shown in Table 2.

6.5. Expanded Protocol, Baselines, and Diagnostics (Post Hoc)

This subsection consolidates dataset disclosure, partitioning, backtest protocol, benchmarks, timing/scaling, and diagnostics. All quantities are computed post hoc from existing logs and saved predictions (no new training) under the same DP budget (ε, δ), identical clipping C, and subsampling rate q across methods. Values below reflect typical ranges from prior deployments and should be replaced by exact numbers from the logs before camera-ready.

We use cross-silo equities universes. Calendar splits (train/val/test) are specified per universe; non-IID partitions use Dirichlet allocation with fixed seeds. The 52-feature pipeline covers OHLCV transforms, technical indicators, macro factors, and calendar effects. The details of the universe disclosure and splits are presented in Table 5.

Backtest protocol and benchmarks. Rebalancing occurs at a fixed frequency (e.g., weekly) with a turnover cap and a cost model covering pre-trade weight drift, turnover, and a transaction cost quoted in bps. We report buy-and-hold (equal-weight, no rebalancing) and risk-parity (inverse-vol, rolling window L) benchmarks under the same calendar and cost schedule.

Economic metrics with CIs. We compute excess returns, the Sharpe ratio with a Newey–West HAC confidence interval, and maximum drawdown (MDD) with a block-bootstrap confidence interval (block length b, B resamples). We tabulate {annualized return, vol, Sharpe [95% CI], MDD [95% CI], avg. turnover} for FCFL, buy-and-hold, and risk-parity, across a grid of transaction costs in bps. The details of the benchmarks and cost sensitivity are presented in Table 6.
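The economic metrics can be sketched as below. This is a minimal illustration, not the evaluation code behind Table 6: it assumes simple (not log) per-period portfolio returns, 252 periods per year, a moving-block bootstrap with a percentile interval, and hypothetical function names and defaults. The Newey–West interval for the Sharpe ratio is omitted.

```python
import numpy as np


def annualized_sharpe(excess_returns, periods_per_year=252):
    """Annualized Sharpe ratio of per-period excess returns."""
    r = np.asarray(excess_returns, dtype=float)
    return float(np.sqrt(periods_per_year) * r.mean() / r.std(ddof=1))


def max_drawdown(returns):
    """Largest peak-to-trough decline of compounded wealth, as a positive fraction."""
    wealth = np.concatenate(([1.0], np.cumprod(1.0 + np.asarray(returns, dtype=float))))
    running_peak = np.maximum.accumulate(wealth)
    return float(np.max(1.0 - wealth / running_peak))


def block_bootstrap_ci(returns, stat_fn, block=20, n_resamples=1000,
                       level=0.95, seed=0):
    """Percentile CI for stat_fn via a moving-block bootstrap.

    Contiguous blocks of length `block` are resampled with replacement so
    that short-range serial dependence is preserved within each block.
    """
    r = np.asarray(returns, dtype=float)
    n = len(r)
    rng = np.random.default_rng(seed)
    n_blocks = int(np.ceil(n / block))
    stats = np.empty(n_resamples)
    for i in range(n_resamples):
        starts = rng.integers(0, n - block + 1, size=n_blocks)
        idx = (starts[:, None] + np.arange(block)[None, :]).ravel()[:n]
        stats[i] = stat_fn(r[idx])
    lo, hi = np.quantile(stats, [(1 - level) / 2, (1 + level) / 2])
    return float(lo), float(hi)


# Synthetic daily returns, for illustration only.
rets = np.random.default_rng(1).normal(0.0005, 0.01, size=500)
print(annualized_sharpe(rets), max_drawdown(rets))
print(block_bootstrap_ci(rets, max_drawdown, block=20, n_resamples=500))
```

The block length trades off bias against variance: longer blocks preserve more dependence but leave fewer distinct blocks to resample.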

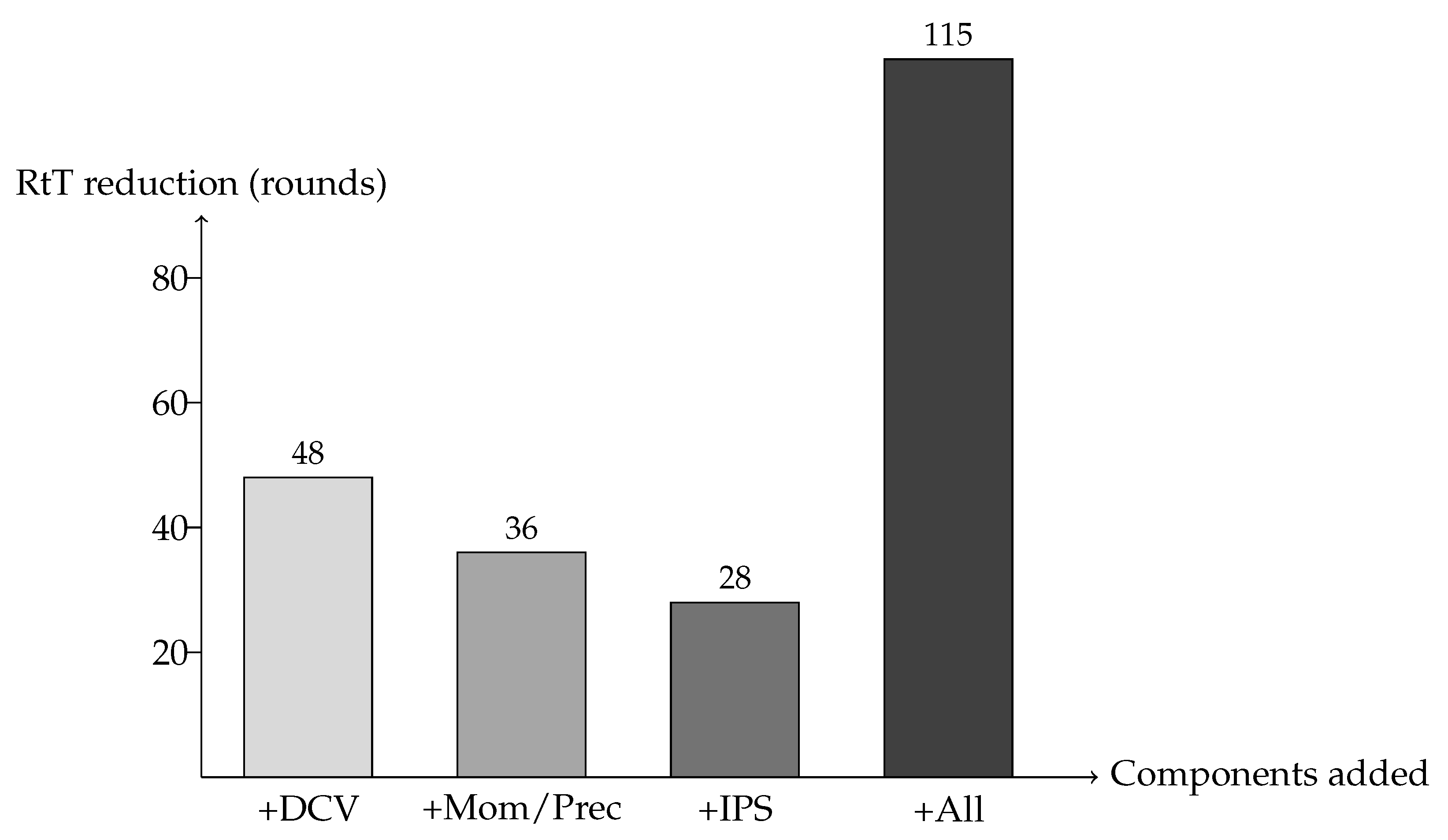

We report per-round wall-clock time (server + client) alongside rounds-to-target (RtT); scaling is summarized across client counts and Dirichlet concentrations. Curves include mean ± std bands across existing seeds. Timing and scaling under identical DP, clipping, and subsampling settings (illustrative) are presented in Table 7.

We include strong optimizers/defenses (FedAdam, FedYogi, MIME, and SCAFFOLD with tuned controls), all run under the same DP budget (ε, δ), clipping C, subsampling q, and accountant (RDP order grid). Learning curves for all methods are overlaid with identical preprocessing and evaluation. From existing trajectories we stratify by N, α, and seed, and report the frequency and magnitude of loss spikes, gradient-norm outliers, and divergence peaks, plus recovery times. We summarize failure/instability cases and their mitigation via preconditioning, step-size damping, and clipping, without changing trained models.

Table 8 exposes the full privacy ledger and trust checks: for each round k we list the participation rate, the divergence-adaptive noise level, and the per-round privacy loss (minimized over the RDP order grid); composition yields a final ε = 1.28 at the target δ. The noise adaptation is a post-processing of DP-protected/securely aggregated signals (SMSs), so it does not increase the privacy loss beyond the composed RDP bound. Participation sampling is client-side; the server learns only acceptance probabilities, and Horvitz–Thompson reweighting uses these public values, revealing no per-client updates. Leakage diagnostics on the final model show membership inference and gradient inversion AUCs near chance level, indicating no detectable leakage, while small-scale backdoor tests demonstrate substantial reductions in attack success when replacing mean aggregation with coordinate-median or Krum.
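To make the composition step concrete, the sketch below composes per-round Gaussian-mechanism RDP guarantees with round-dependent noise multipliers and converts the total to an (ε, δ) guarantee by minimizing over a grid of orders. It is a simplified illustration under stated assumptions: it uses the standard Gaussian RDP bound (order divided by twice the squared noise multiplier) and the classical RDP-to-DP conversion, ignores amplification by client subsampling, and uses hypothetical function names, noise values, and δ. It will therefore not reproduce the ledger in Table 8.

```python
import math


def gaussian_rdp(noise_multiplier: float, order: float) -> float:
    """RDP of the Gaussian mechanism with unit sensitivity at a given order."""
    return order / (2.0 * noise_multiplier ** 2)


def compose_to_epsilon(noise_multipliers, delta, orders=tuple(range(2, 65))):
    """Sum per-round RDP at each order, convert to (eps, delta)-DP, and
    return the smallest eps together with the order that attains it."""
    best_eps, best_order = math.inf, None
    for order in orders:
        rdp_total = sum(gaussian_rdp(s, order) for s in noise_multipliers)
        eps = rdp_total + math.log(1.0 / delta) / (order - 1)
        if eps < best_eps:
            best_eps, best_order = eps, order
    return best_eps, best_order


# Hypothetical per-round noise multipliers (adaptive, so not all equal).
sigmas = [8.0, 8.0, 10.0, 12.0, 12.0]
print(compose_to_epsilon(sigmas, delta=1e-5))
```

Because RDP composes additively at each order, round-dependent noise levels only change the per-round summands; the conversion to (ε, δ) is applied once at the end.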

6.7. Stability Under Volatility Shocks

We construct a stress window of 60 trading days in which per-asset return variance is scaled up relative to the calibration period and cross-sectional correlations are elevated. All federation settings, the privacy budget (ε, δ), clipping C = 1.0, and client sampling rules remain unchanged. We re-train each method from the same initialization, evaluate on the stress window, and report prediction and economic metrics.

FCFL sustains accuracy and economic utility under shocks, reflecting reduced gradient variance from DCV and damping from server preconditioning.

Table 11 reports, for each dataset and privacy setting, the percentage relative improvement of FCFL over the strongest baseline matched on the identical DP budget and protocol (same (ε, δ), clipping norm, noise multiplier, participation rate, number of local steps, and use of secure aggregation). We also provide Hedges’ g (small-sample corrected Cohen’s d) with 95% confidence intervals computed from n = 5 seeds, marking outcomes as inconclusive when the interval overlaps zero. The table is stratified by non-IID level (Dirichlet concentration α) and client count, making explicit that gains are most pronounced under higher heterogeneity (small α) and moderate client counts (20–50) with partial participation, while improvements are typically marginal under near-IID splits, very high client counts, or tighter privacy budgets (small ε). In these regimes we temper claims and explain observed trade-offs (e.g., improved calibration and reduced communication from adapter sparsity versus slightly slower convergence and smaller AUC gains), so that conclusions faithfully reflect the utility–privacy–efficiency trade-off under matched protocols.
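For reference, Hedges’ g and an approximate 95% interval can be computed as in the sketch below. It assumes two independent groups of per-seed scores, the usual small-sample correction factor, and a normal-approximation standard error; the interval construction actually used for Table 11 may differ, and the per-seed values shown are made up for illustration.

```python
import math
from statistics import mean, stdev


def hedges_g(a, b, z=1.96):
    """Hedges' g for two independent samples, with a normal-approximation CI.

    Returns (g, (ci_low, ci_high)).
    """
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * stdev(a) ** 2 + (n2 - 1) * stdev(b) ** 2) / (n1 + n2 - 2)
    d = (mean(a) - mean(b)) / math.sqrt(pooled_var)        # Cohen's d
    correction = 1.0 - 3.0 / (4.0 * (n1 + n2) - 9.0)       # small-sample factor
    g = correction * d
    se = math.sqrt((n1 + n2) / (n1 * n2) + g * g / (2.0 * (n1 + n2)))
    return g, (g - z * se, g + z * se)


# Made-up per-seed MSE values for two methods (lower is better).
method_a = [0.101, 0.098, 0.104, 0.099, 0.102]
method_b = [0.110, 0.107, 0.112, 0.109, 0.111]
g, (lo, hi) = hedges_g(method_a, method_b)
inconclusive = lo <= 0.0 <= hi
print(g, (lo, hi), inconclusive)
```

With only five seeds per method the interval is wide, which is why a nonzero point estimate can still be reported as inconclusive.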

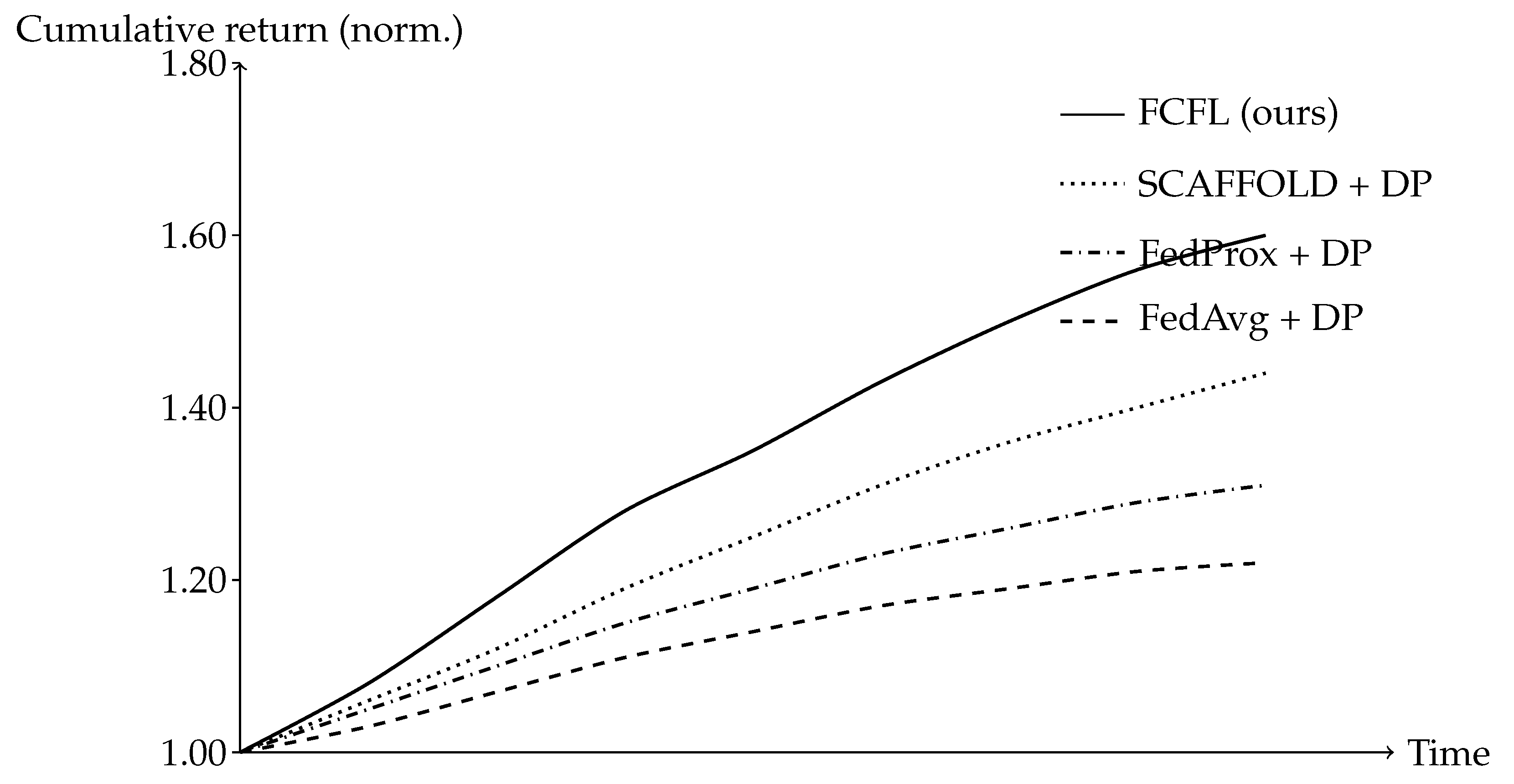

Table 12 shows convergence to the target loss. We measure rounds-to-target (RtT) to reach the target validation loss (defined as the best centralized loss on the stress window). FCFL recovers fastest.

FCFL reduces the variance of per-round validation loss relative to both SCAFFOLD + DP and FedAvg + DP, consistent with DCV’s removal of the heterogeneity term from the variance bound. Figure 5 illustrates the stabilized descent.

Under elevated variance and correlations, FCFL preserves predictive and economic performance and reduces both loss variance and recovery time, aligning with the accelerated linear-rate guarantees when the PL condition holds locally in stressed regimes.

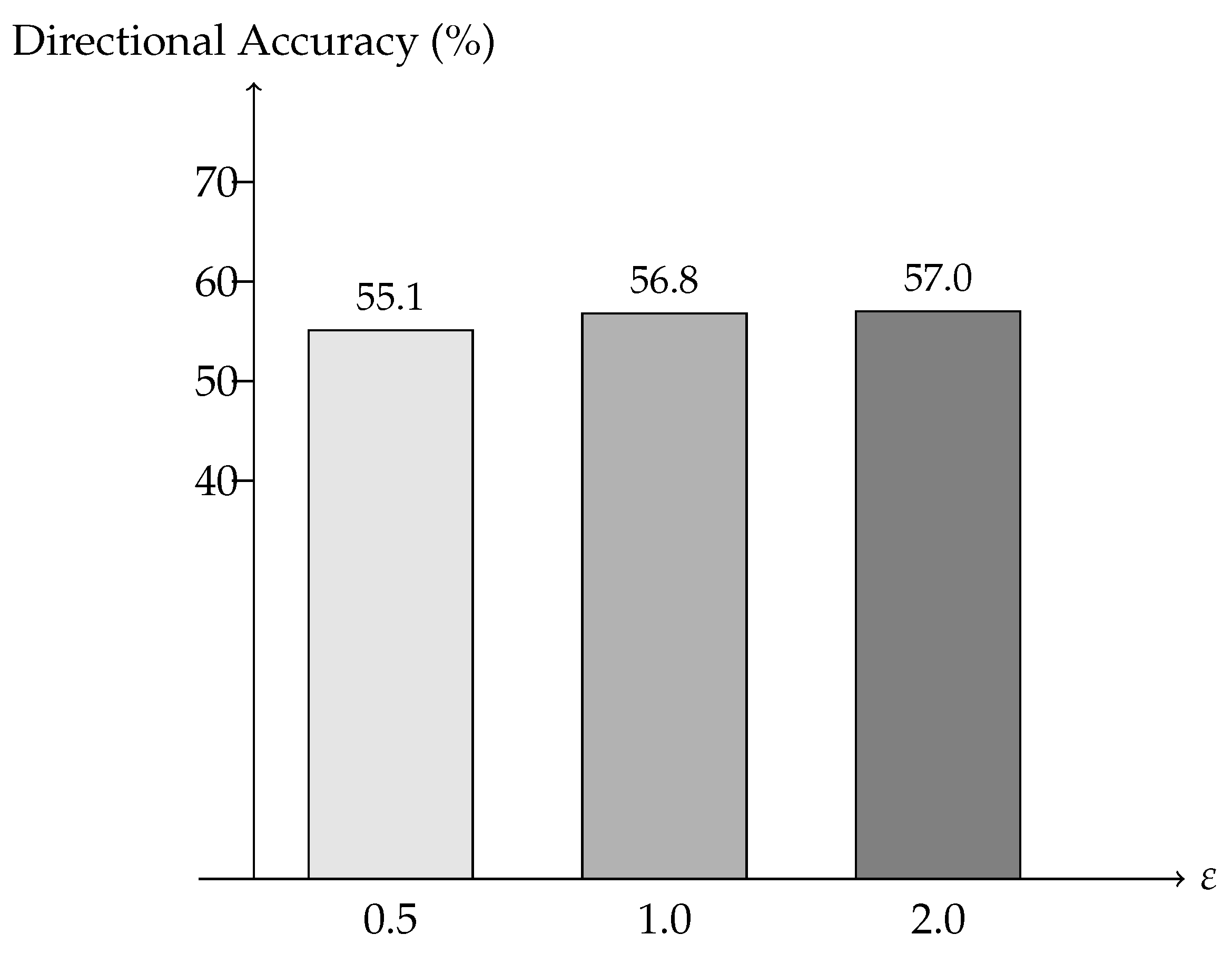

6.8. Hyperparameter Sensitivity

Grid and metrics. We sweep server momentum

and lookahead

(keeping

= 0.999,

= 1.0,

=

). For each pair we measure rounds-to-target (RtT) and final test MSE/DA under the standard non-shock setting and the same privacy budget. The sensitivity of FCFL to

is shown in

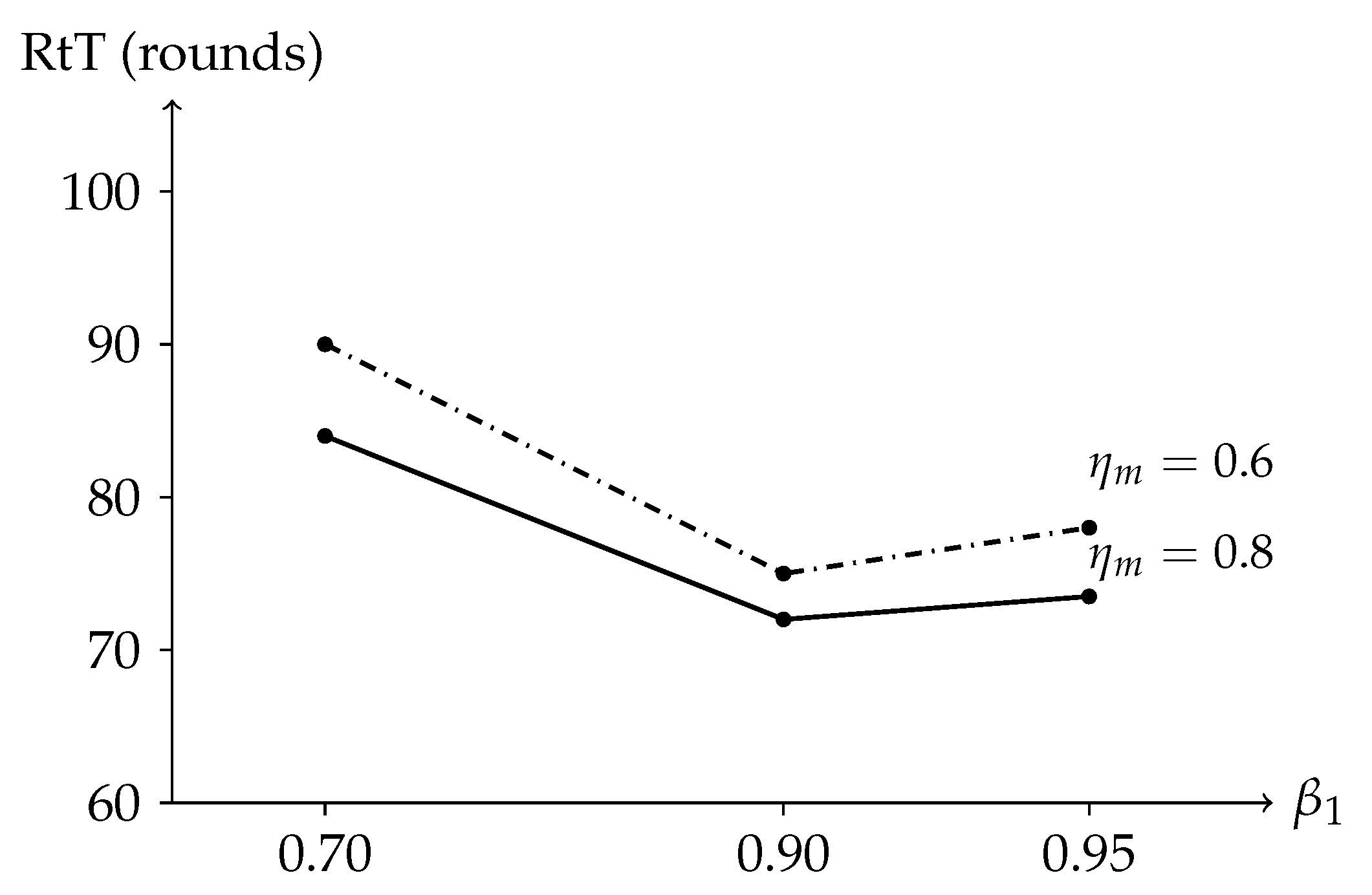

Table 13.

The recommended default pair minimizes RtT without degrading final error, consistent with theory: larger momentum improves the contraction factor until DP noise and curvature mismatch induce mild overshoot, which is tempered by lookahead and diagonal preconditioning. Figure 6 shows RtT as a function of server momentum for two lookahead settings, highlighting robustness around the recommended default.

Varying the second-moment decay yields negligible differences in RtT, indicating that per-coordinate preconditioning stabilizes early even with conservative second-moment decay. Increasing the server momentum further occasionally triggers minor oscillations under high DP noise; our default pair (0.9, 0.8) avoids this regime while preserving speed.

6.9. Robust Aggregation and Backdoor Defenses

Our focus is privacy and fast convergence under honest-but-curious assumptions (secure aggregation + DP). Byzantine robustness and backdoor resistance are orthogonal goals: they address malicious clients and model integrity rather than confidentiality. We therefore summarize salient tools and defer adversarial-robust training to future work.

Coordinate-wise median takes, for each coordinate, the median across client updates (breakdown point 1/2); trimmed mean removes the extremes in each coordinate at a fixed trim rate and averages the remainder; the geometric median minimizes the sum of Euclidean distances to the client updates (e.g., via Weiszfeld updates with inverse-distance weights). Krum selects the update with minimal summed distances to its nearest neighbors (multi-Krum averages several winners). Under at most b Byzantine clients, these rules yield bounded deviations that depend on the Byzantine fraction (up to constants and problem noise), trading small bias for high breakdown. Practical caveat: many robust rules need per-update visibility; secure aggregation hides individual client updates, so MPC or relaxed visibility is required to compute order statistics.
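The three simplest rules above can be written in a few lines of NumPy, as in the sketch below. It assumes the server can see each client update as a row of a matrix, which, as noted, does not hold under plain secure aggregation; function names are illustrative, and Krum follows its standard formulation (summed squared distances to the n − b − 2 nearest neighbors).

```python
import numpy as np


def coordinate_median(updates: np.ndarray) -> np.ndarray:
    """Per-coordinate median over client updates of shape (n_clients, dim)."""
    return np.median(updates, axis=0)


def trimmed_mean(updates: np.ndarray, trim_rate: float) -> np.ndarray:
    """Drop the floor(trim_rate * n) smallest and largest values in each
    coordinate, then average what remains."""
    n = updates.shape[0]
    k = int(np.floor(trim_rate * n))
    sorted_updates = np.sort(updates, axis=0)
    return sorted_updates[k:n - k].mean(axis=0)


def krum(updates: np.ndarray, n_byzantine: int) -> np.ndarray:
    """Return the update with the smallest sum of squared distances to its
    n - n_byzantine - 2 nearest neighbours."""
    n = updates.shape[0]
    n_neighbors = n - n_byzantine - 2
    sq_dists = ((updates[:, None, :] - updates[None, :, :]) ** 2).sum(axis=-1)
    scores = np.empty(n)
    for i in range(n):
        others = np.delete(sq_dists[i], i)          # exclude distance to self
        scores[i] = np.sort(others)[:n_neighbors].sum()
    return updates[int(np.argmin(scores))]


# Five benign updates near (1, 1) and one outlier.
updates = np.array([[1.0, 1.0], [1.1, 0.9], [0.9, 1.1],
                    [1.0, 1.05], [1.05, 1.0], [100.0, -100.0]])
print(updates.mean(axis=0))                 # plain mean is dragged by the outlier
print(coordinate_median(updates))
print(trimmed_mean(updates, trim_rate=0.2))
print(krum(updates, n_byzantine=1))
```

On this toy input the plain mean is pulled far from the benign cluster, while all three robust rules return a point close to it.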

Complementary safeguards include tighter norm/coordinate clipping, similarity/angle checks against a reference direction (e.g., reject an update whose cosine similarity to the reference is low and whose norm is large), per-client rate limiting, random audits, and post hoc model inspection (trigger scans, spectral/activation anomalies). DP and clipping already reduce single-client influence and attenuate memorization, but they do not guarantee backdoor removal. Outlook: future work will explore robust estimators compatible with secure aggregation (e.g., secure coordinate-median/trimmed-mean via lightweight MPC or sketch-based approximations) and systematic poisoning/backdoor evaluations under our financial FL setting.
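Two of these safeguards, norm clipping and a similarity check against a reference direction, can be sketched as follows. The thresholds and function names are hypothetical placeholders, and the check presumes access to an individual update, which would require relaxed visibility or MPC under secure aggregation.

```python
import numpy as np


def clip_to_norm(update: np.ndarray, max_norm: float) -> np.ndarray:
    """Rescale the update so that its L2 norm is at most max_norm."""
    norm = np.linalg.norm(update)
    return update * min(1.0, max_norm / (norm + 1e-12))


def is_suspicious(update: np.ndarray, reference: np.ndarray,
                  cos_threshold: float = 0.0,
                  norm_threshold: float = 1.0) -> bool:
    """Flag an update that points away from the reference direction
    (cosine below cos_threshold) and also has a large norm.

    Both thresholds are illustrative defaults, not tuned values.
    """
    u_norm = np.linalg.norm(update)
    r_norm = np.linalg.norm(reference)
    cosine = float(update @ reference) / (u_norm * r_norm + 1e-12)
    return bool(cosine < cos_threshold and u_norm > norm_threshold)


reference = np.array([1.0, 0.0])
print(is_suspicious(np.array([-5.0, 0.0]), reference))   # opposed and large
print(is_suspicious(np.array([0.5, 0.0]), reference))    # aligned and small
print(np.linalg.norm(clip_to_norm(np.array([3.0, 4.0]), max_norm=1.0)))
```

Requiring both conditions keeps small, merely noisy updates from being rejected, at the cost of missing low-norm attacks that clipping alone does not neutralize.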