Advancements and Challenges in Deep Learning-Based Person Re-Identification: A Review

Abstract

1. Introduction

- Limited understanding of global research community structures and collaboration patterns;

- Narrow focus on conventional benchmarks, neglecting the broader spectrum of unimodal and multimodal datasets;

- Absence of unified taxonomies distinguishing unimodal and multimodal methodologies.

- Leveraging network science methodologies to analyze global collaboration networks, elucidating structural dynamics and knowledge diffusion patterns within person Re-ID academic ecosystems;

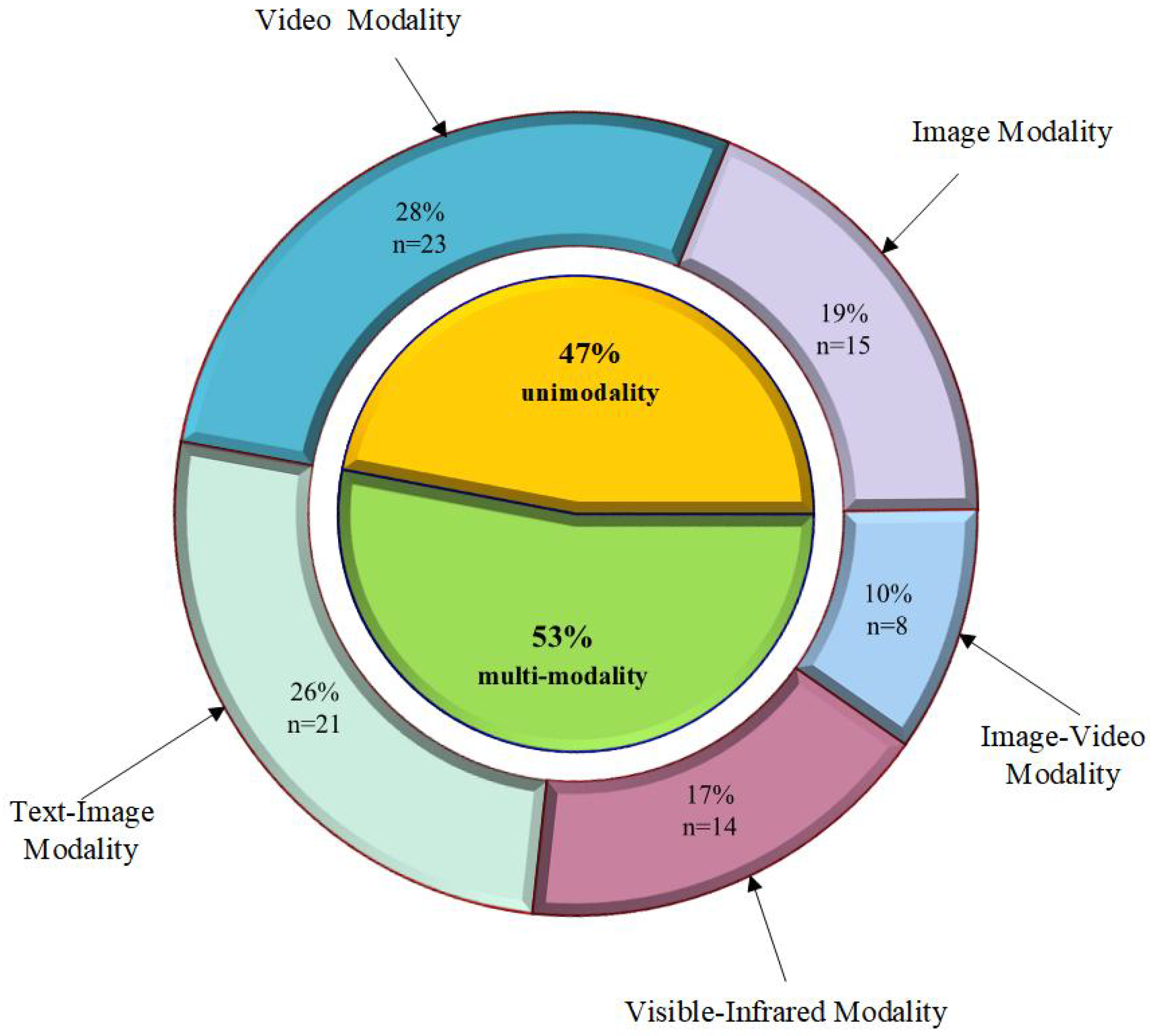

- Developing a comprehensive taxonomy for 20 benchmark datasets spanning six modality categories (RGB, IR, video, text-image, visible-infrared, and cross-temporal sequences), establishing practical guidelines for dataset selection in person Re-ID research;

- Proposing an innovative hierarchical framework that systematically categorizes 82 state-of-the-art methods into unimodal and multimodal paradigms, with further division into 15 methodologically distinct technical subclasses.

2. Bibliometric Analysis of the Person Re-ID Landscape

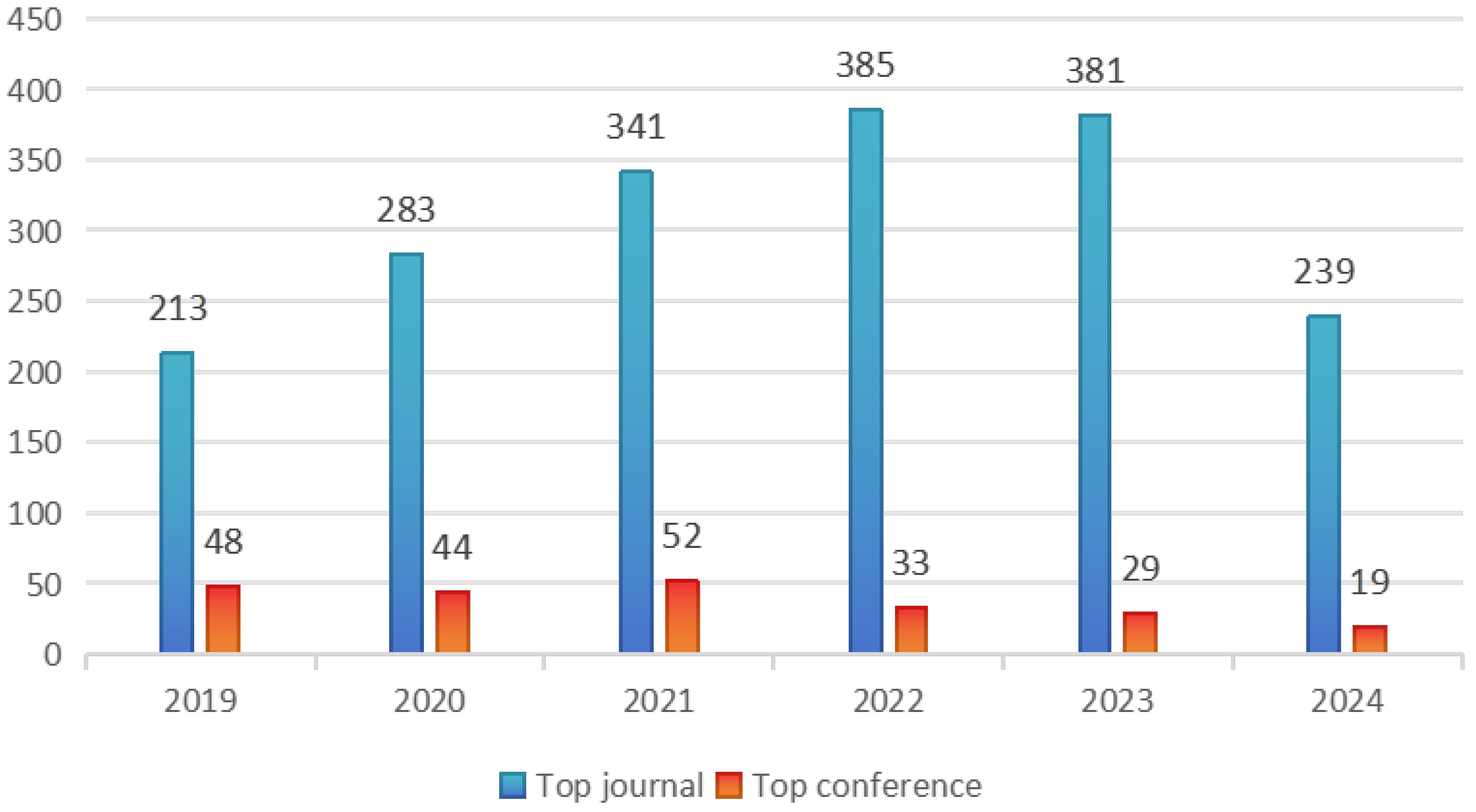

2.1. Publication Trends Analysis

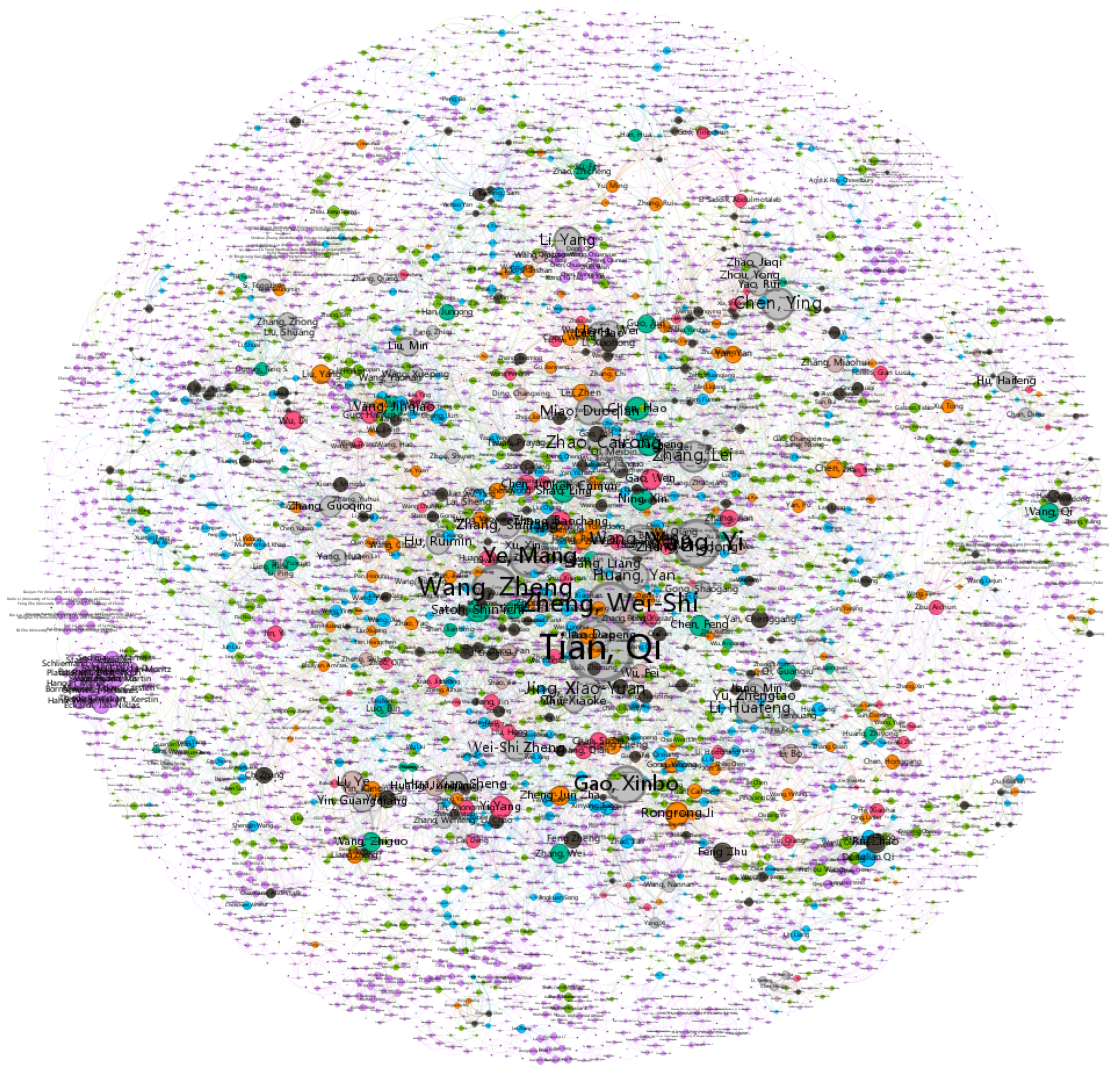

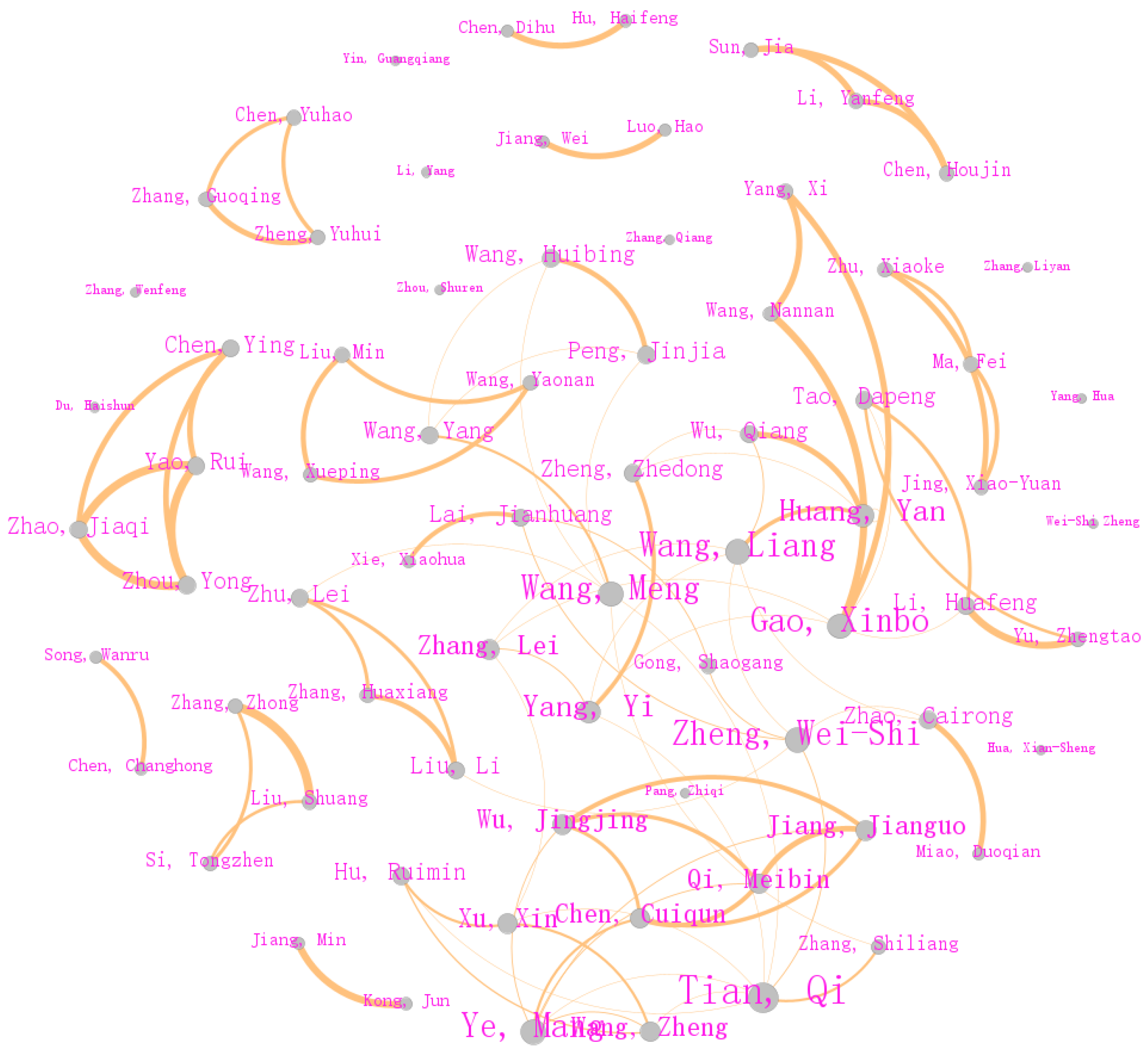

2.2. Collaboration Network Analysis

- Huawei Noah’s Ark Lab (Qi Tian team): Pioneering cross-domain adaptive frameworks

- Sun Yat-sen University (Weishi Zheng group): Leading innovations in person Re-ID with poor annotation

- MPI Informatics (B. Schiele’s group): Multispectral person retrieval under varying illumination

- Wuhan University (Zheng Wang team): Advancing transformer-based feature learning

- University of Maryland, College Park (L. S. Davis’s lab): Groundbreaking multiview human analysis methodologies

- Chongqing Key Lab of Image Cognition: Developing domain-specific architectures

2.3. Technical Landscape Mapping

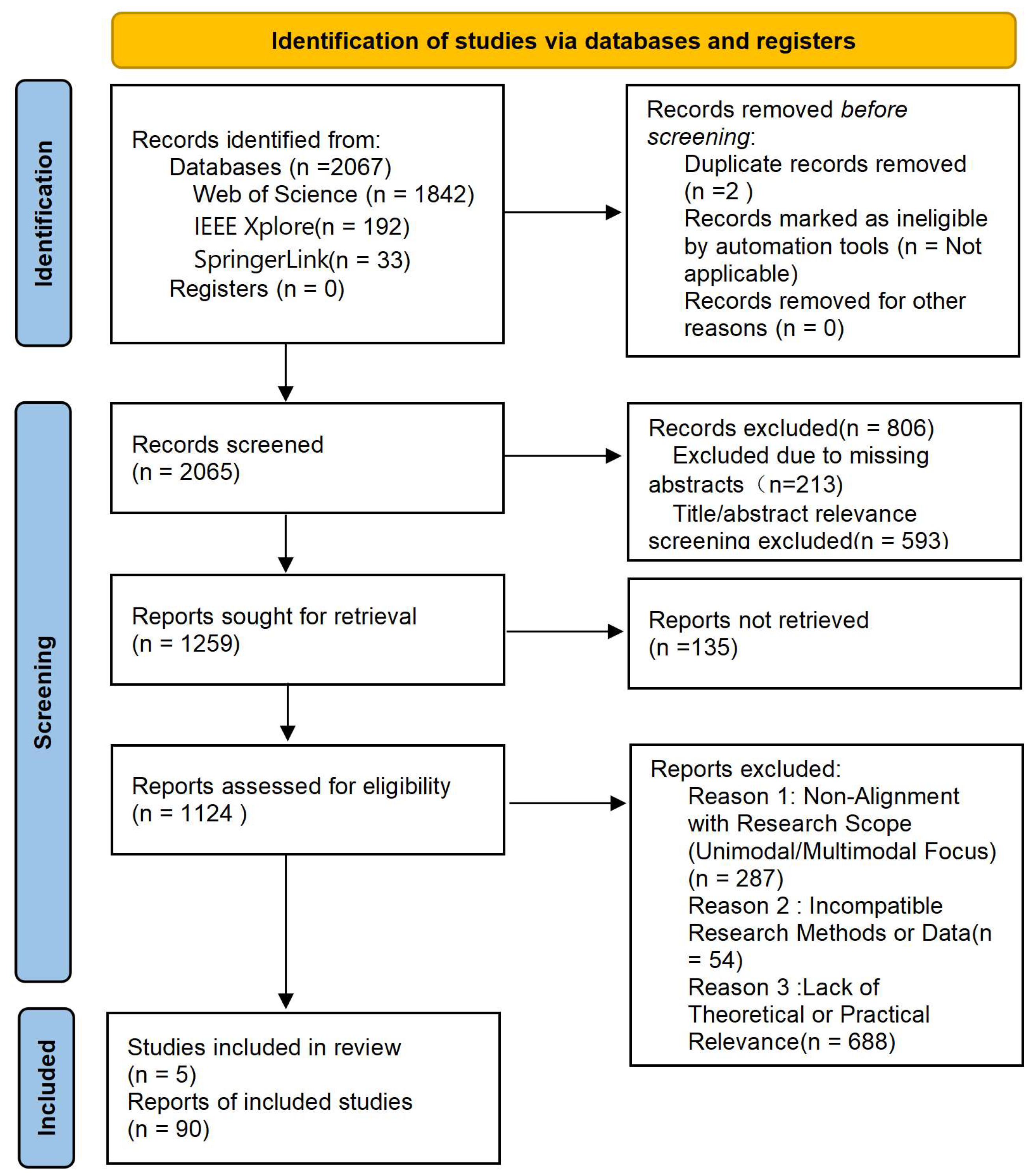

2.4. Literature Screening Protocol

- 287 reports were excluded for non-alignment with the review’s unimodal/multimodal research scope

- 54 reports were excluded due to incompatible research methods or data presentation

- 688 reports were excluded for lack of theoretical or practical relevance

3. Datasets and Evaluation Metrics

3.1. Benchmark Datasets

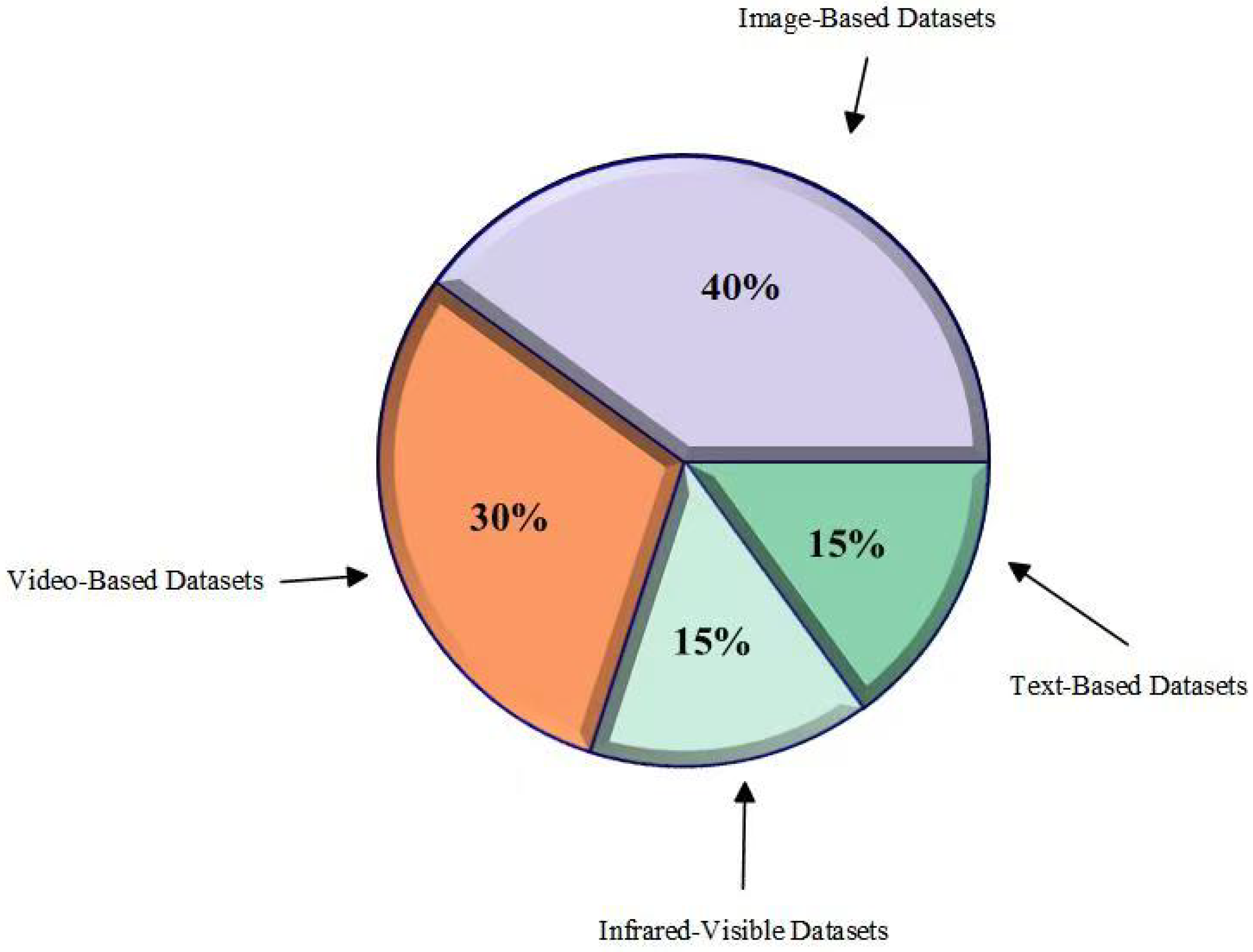

- Image-based datasets (40%) remain dominant because of their established benchmarks and algorithmic compatibility.

- Video-based datasets (30%) are growing rapidly, with an emphasis on spatio-temporal feature learning.

- Cross-modal datasets (30%) are emerging to address real-world challenges, with infrared-visible datasets (15%) and text-based datasets (15%) leading this trend.
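To make the proportions above concrete, the following minimal Python sketch converts the stated shares into dataset counts. It assumes that the percentages refer to the 20 benchmark datasets mentioned in the Introduction; the names `TOTAL_DATASETS`, `SHARES_PERCENT`, and `shares_to_counts` are illustrative and not part of the review.

```python
# Assumption: the percentages refer to the 20 benchmark datasets covered by the review.
TOTAL_DATASETS = 20

# Shares as stated in the list above, in whole percent.
# The cross-modal share (30%) is split into its two stated parts.
SHARES_PERCENT = {"image": 40, "video": 30, "infrared-visible": 15, "text": 15}


def shares_to_counts(shares_percent, total):
    """Convert whole-percent shares into dataset counts, checking consistency."""
    if sum(shares_percent.values()) != 100:
        raise ValueError("shares must sum to 100%")
    counts = {}
    for name, percent in shares_percent.items():
        count, remainder = divmod(percent * total, 100)
        if remainder:
            raise ValueError(f"{percent}% of {total} is not a whole number")
        counts[name] = count
    return counts


counts = shares_to_counts(SHARES_PERCENT, TOTAL_DATASETS)
for name, count in counts.items():
    print(f"{name}: {count} datasets")
```

Under this assumption, the shares correspond to whole numbers of datasets, which is a quick consistency check on the reported percentages.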

3.1.1. Image-Based Person Re-ID Datasets

- Real-world detection artifacts (misalignments, occlusions)

- Multi-query evaluation protocol

- Standard 64 × 128 resolution

- Training/test split: 751/750 identities

- Mixed resolution (60 × 60 average)

- Cross-domain evaluation (surveillance vs. cinematic data)

- Large-scale test set with 2900 identities

- Multi-season/time-of-day variations (morning, noon, afternoon)

- Indoor/outdoor camera transitions

- Complex lighting variations

- Standard splits: 1041 training vs. 3060 test identities

3.1.2. Video-Based Person Re-ID Datasets

- Multi-view coverage of ticket halls, platforms, and escalators

- 250 identity-matched tracklet pairs (average 10 frames per tracklet)

- Additional 775 distractor tracklets with no identity matches

- 320 × 320 pixel resolution with significant occlusions and low-resolution challenges

- 1261 identities with 20,715 tracklets (average 58 frames/tracklet)

- Real-world tracklet generation through automatic detection and GMMCP tracking

- Careful manual annotation for detection/tracking error correction

- Standardized 128 × 256 pixel resolution

- Realistic evaluation protocol with 625 training and 636 test identities

- High-frame-rate sampling (12 FPS) capturing detailed motion patterns

- 702 training identities (2196 tracklets) and 702 test identities (2636 tracklets)

- Additional 408 distractor identities for realistic evaluation

- Variable resolutions (1080p to 480p) across 8 cameras

- Active annotation framework ensuring tracklet quality

- 2731 identities across 11 cameras in three crowded scenarios

- Average tracklet length of 77 frames (590,000 total frames)

- 1975 training and 756 test identities with strict cross-camera splits

- Variable resolutions reflecting real-world surveillance constraints

- Significant spatial-temporal distribution differences between scenarios

3.1.3. Text-Based Person Re-ID Datasets

3.1.4. Infrared-Visible Cross-Modal Person Re-ID Datasets

3.2. Evaluation Metrics

3.3. Critical Synthesis on Data and Metrics

- Data Ecology Bottleneck: The primary trend is toward multimodality, yet dataset construction remains biased. The persistent dominance of established image and video datasets (e.g., Market-1501, DukeMTMC-ReID) traps research in static, single-scene evaluation settings. There is a severe deficit in ecologically valid datasets that capture the complexities of real-world operation, such as extreme weather, unstructured occlusion, and dynamic data streams. This bottleneck indicates that dataset evolution is lagging behind practical deployment requirements.

- Justification for Metric Dominance: The standardization of evaluation metrics is rooted in the intrinsic nature of the Re-ID task and in industrial needs. Rank-k (specifically Rank-1) is the primary metric because it directly simulates the target discovery efficiency of a human operator in a surveillance system, that is, how quickly the correct subject can be found. In contrast, mAP (mean Average Precision) plays a more critical role in assessing overall system robustness at scale. It measures retrieval quality over all correct matches when handling long-tail distributions and massive query volumes, making it the most informative indicator of a model’s utility in large-scale monitoring scenarios.

- Limitations of Alternative Metrics: Other metrics (e.g., AUC or F1-score) are used less often because Re-ID is fundamentally a multi-class, imbalanced ranking and retrieval problem. Rank-k and mAP are preferred because they account for the order of the retrieved results, providing a more accurate reflection of a model’s practical value in a real-world ranking environment.
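As an illustration of how the two metrics differ, the following minimal Python sketch computes Rank-k and mAP from a query-by-gallery distance matrix. The function name `rank_k_and_map`, the toy distances, and the identity labels are illustrative assumptions and do not come from any benchmark. The sketch also omits the same-camera filtering that standard Re-ID evaluation protocols commonly apply.

```python
import numpy as np


def rank_k_and_map(dist, query_ids, gallery_ids, ks=(1, 5)):
    """Return ({k: Rank-k accuracy}, mAP) for a query-by-gallery distance matrix."""
    dist = np.asarray(dist, dtype=float)
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)

    hits = {k: 0 for k in ks}
    average_precisions = []

    for q in range(dist.shape[0]):
        # Sort gallery items from closest to farthest for this query.
        order = np.argsort(dist[q], kind="stable")
        matches = gallery_ids[order] == query_ids[q]
        if not matches.any():
            continue  # a query without any correct gallery match is skipped

        # Rank-k: is the first correct match within the top k results?
        first_hit = int(np.argmax(matches))  # 0-based position of first match
        for k in ks:
            hits[k] += int(first_hit < k)

        # Average precision: mean of the precision at each correct match.
        cumulative_hits = np.cumsum(matches)
        positions = np.flatnonzero(matches) + 1  # 1-based ranks of matches
        average_precisions.append(float(np.mean(cumulative_hits[matches] / positions)))

    if not average_precisions:
        raise ValueError("no query has a correct match in the gallery")

    n_valid = len(average_precisions)
    rank_acc = {k: hits[k] / n_valid for k in ks}
    return rank_acc, float(np.mean(average_precisions))


# Toy example (hypothetical values): 2 queries, 4 gallery images.
toy_dist = [[0.9, 0.1, 0.5, 0.3],
            [0.4, 0.8, 0.2, 0.6]]
toy_rank_acc, toy_map = rank_k_and_map(toy_dist, [1, 2], [2, 1, 1, 3], ks=(1, 2))
print(toy_rank_acc, round(toy_map, 4))
```

In this toy case the second query finds its match only at rank 2, so Rank-1 drops while Rank-2 does not. The mAP is additionally lowered by the first query's second correct match appearing at rank 3, which Rank-k ignores.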

4. Critical Analysis of Methodological Evolution

4.1. Unimodal Person Re-ID: Image vs. Video Paradigms

4.1.1. Image-Based Methodologies: Beyond Superficial Representations

4.1.2. Video-Based Person Re-ID: From Motion Understanding to Structured Learning

4.2. Person Re-ID in Multi-Modality Scenarios: Progress and Fundamental Limitations

4.2.1. Text-Image Modality Fusion: Beyond Semantic Surface Alignment

4.2.2. Visible-Infrared Cross-Modal Re-ID: Progress and Limitations

4.2.3. Person Re-ID in Image-Video Modality: Bridging Spatiotemporal Heterogeneity

5. Critical Challenges and Emerging Paradigms

5.1. The Synthetic-Real Chasm: Beyond Data Scarcity to Domain Adaptation Failure

5.2. Multimodal Semantic Disintegration: When Alignment Becomes Illusion

5.3. The Accuracy-Efficiency Paradox: Beyond Model Compression to Dynamic Computation

5.4. Interpretability Illusion: The Epistemic Crisis in Re-ID Decisions

5.5. The Generalization Mirage: Unmasking Domain Adaptation’s False Promises

5.6. Ethical and Societal Challenges and Mitigation

6. Conclusions

- Overcoming Architectural Fragility and Theoretical Superficiality. The high architectural fragility (58% performance degradation under topology attacks) and theoretical superficiality (89% lack formal generalization bounds) of current deep Re-ID models reveal a fundamental lack of robustness and predictability. To address this systemic risk, we propose Causal Representation Learning. Specifically, using Structural Causal Models to disentangle confounding factors in cross-camera matching (such as occlusion, pose, or illumination) allows the true identity feature to be isolated, which offers stronger theoretical guarantees and improves the model’s robustness against unpredictable real-world variations.

- Bridging Semantic Gaps and Mitigating Ethical Myopia. The alarming semantic gap in text-to-image retrieval (32.6% Rank-1 accuracy on compositional queries) and the ethical myopia of the field (only 12% address privacy) demonstrate a failure to handle complex, high-level attributes and to deploy responsibly. To address this, we propose Neuro-Symbolic Integration. Hybrid architectures that combine metric learning with first-order logic constraints offer a pathway to inject structured knowledge and explicit ethical rules (e.g., privacy filters or fairness constraints) into the system. This integration ensures that decisions are not only statistically sound but also semantically grounded and ethically compliant.

- Escaping Benchmark Traps and Deficits in Ecological Validity. The field remains trapped in benchmark-driven progress (78% optimizing for constrained scenarios), often ignoring the ecological validity deficit and the practical necessity for continuous adaptation (e.g., handling new identities or camera streams). To address this operational chasm, we propose Self-Evolving Systems. Continual Learning Frameworks with dynamic architecture expansion capabilities directly tackle the limitations of static models. Such systems can continuously integrate new data and adapt to changes in the environment and identity distribution over time, thereby ensuring temporal continuity and maintaining high performance in true operational deployment.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893. [Google Scholar] [CrossRef]

- Ming, Z.; Zhu, M.; Wang, X.; Zhu, J.; Cheng, J.; Gao, C.; Yang, Y.; Wei, X. Deep learning-based person re-identification methods: A survey and outlook of recent works. Image Vis. Comput. 2022, 119, 104394. [Google Scholar] [CrossRef]

- Zheng, H.; Zhong, X.; Huang, W.; Jiang, K.; Liu, W.; Wang, Z. Visible-infrared person re-identification: A comprehensive survey and a new setting. Electronics 2022, 11, 454. [Google Scholar] [CrossRef]

- Huang, N.; Liu, J.; Miao, Y.; Zhang, Q.; Han, J. Deep learning for visible-infrared cross-modality person re-identification: A comprehensive review. Inf. Fusion 2023, 91, 396–411. [Google Scholar] [CrossRef]

- Zahra, A.; Perwaiz, N.; Shahzad, M.; Fraz, M.M. Person re-identification: A retrospective on domain specific open challenges and future trends. Pattern Recognit. 2023, 142, 109669. [Google Scholar] [CrossRef]

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160. [Google Scholar] [CrossRef] [PubMed]

- Li, W.; Zhao, R.; Wang, X. Human reidentification with transferred metric learning. In Proceedings of the Computer Vision—ACCV 2012: 11th Asian Conference on Computer Vision, Daejeon, Republic of Korea, 5–9 November 2012; pp. 31–44. [Google Scholar]

- Li, W.; Wang, X. Locally aligned feature transforms across views. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3594–3601. [Google Scholar]

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. Deepreid: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014; pp. 152–159. [Google Scholar]

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2015, Santiago, Chile, 7–13 December 2015; pp. 1116–1124. [Google Scholar]

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the Computer Vision, ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Proceedings, Part II; of Lecture Notes in Computer Science. Volume 9914, pp. 17–35. [Google Scholar]

- Zhuo, J.; Chen, Z.; Lai, J.; Wang, G. Occluded person re-identification. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6. [Google Scholar]

- Xiao, T.; Li, S.; Wang, B.; Lin, L.; Wang, X. Joint Detection and Identification Feature Learning for Person Search. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 3376–3385. [Google Scholar]

- Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer gan to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 79–88. [Google Scholar]

- Hirzer, M.; Beleznai, C.; Roth, P.M.; Bischof, H. Person re-identification by descriptive and discriminative classification. In Proceedings of the Image Analysis: 17th Scandinavian Conference, SCIA 2011, Ystad, Sweden, 23–27 May 2011; pp. 91–102. [Google Scholar]

- Liu, C.; Gong, S.; Loy, C.C.; Lin, X. Person re-identification: What features are important? In Proceedings of the Computer Vision—ECCV 2012: Workshops and Demonstrations, Florence, Italy, 7–13 October 2012; pp. 391–401. [Google Scholar]

- Wang, T.; Gong, S.; Zhu, X.; Wang, S. Person re-identification by video ranking. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 688–703. [Google Scholar]

- Zheng, L.; Bie, Z.; Sun, Y.; Wang, J.; Su, C.; Wang, S.; Tian, Q. Mars: A video benchmark for large-scale person re-identification. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 868–884. [Google Scholar]

- Wu, Y.; Lin, Y.; Dong, X.; Yan, Y.; Ouyang, W.; Yang, Y. Exploit the unknown gradually: One-shot video-based person re-identification by stepwise learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5177–5186. [Google Scholar]

- Song, G.; Leng, B.; Liu, Y.; Hetang, C.; Cai, S. Region-based quality estimation network for large-scale person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar]

- Li, S.; Xiao, T.; Li, H.; Zhou, B.; Yue, D.; Wang, X. Person search with natural language description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1970–1979. [Google Scholar]

- Zhu, A.; Wang, Z.; Li, Y.; Wan, X.; Jin, J.; Wang, T.; Hu, F.; Hua, G. Dssl: Deep surroundings-person separation learning for text-based person retrieval. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 209–217. [Google Scholar]

- Ding, Z.; Ding, C.; Shao, Z.; Tao, D. Semantically self-aligned network for text-to-image part-aware person re-identification. arXiv 2021, arXiv:2107.12666. [Google Scholar]

- Wu, A.; Zheng, W.S.; Yu, H.X.; Gong, S.; Lai, J. RGB-infrared cross-modality person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; pp. 5380–5389. [Google Scholar]

- Nguyen, D.T.; Hong, H.G.; Kim, K.W.; Park, K.R. Person recognition system based on a combination of body images from visible light and thermal cameras. Sensors 2017, 17, 605. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Wang, H. Diverse embedding expansion network and low-light cross-modality benchmark for visible-infrared person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 2153–2162. [Google Scholar]

- Ma, F.; Zhu, X.; Liu, Q.; Song, C.; Jing, X.Y.; Ye, D. Multi-view coupled dictionary learning for person re-identification. Neurocomputing 2019, 348, 16–26. [Google Scholar] [CrossRef]

- Xu, Y.; Jiang, Z.; Men, A.; Wang, H.; Luo, H. Multi-view feature fusion for person re-identification. Knowl.-Based Syst. 2021, 229, 107344. [Google Scholar] [CrossRef]

- Dong, N.; Yan, S.; Tang, H.; Tang, J.; Zhang, L. Multi-view information integration and propagation for occluded person re-identification. Inf. Fusion 2024, 104, 102201. [Google Scholar] [CrossRef]

- Xin, X.; Wang, J.; Xie, R.; Zhou, S.; Huang, W.; Zheng, N. Semi-supervised person re-identification using multi-view clustering. Pattern Recognit. 2019, 88, 285–297. [Google Scholar] [CrossRef]

- Yu, Z.; Li, L.; Xie, J.; Wang, C.; Li, W.; Ning, X. Pedestrian 3d shape understanding for person re-identification via multi-view learning. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 5589–5602. [Google Scholar] [CrossRef]

- Chen, Z.; Wang, W.; Zhao, Z.; Su, F.; Men, A.; Dong, Y. Cluster-instance normalization: A statistical relation-aware normalization for generalizable person re-identification. IEEE Trans. Multimed. 2023, 26, 3554–3566. [Google Scholar] [CrossRef]

- Qi, L.; Wang, L.; Shi, Y.; Geng, X. A novel mix-normalization method for generalizable multi-source person re-identification. IEEE Trans. Multimed. 2022, 25, 4856–4867. [Google Scholar] [CrossRef]

- Qi, L.; Liu, J.; Wang, L.; Shi, Y.; Geng, X. Unsupervised generalizable multi-source person re-identification: A domain-specific adaptive framework. Pattern Recognit. 2023, 140, 109546. [Google Scholar] [CrossRef]

- Liu, J.; Huang, Z.; Li, L.; Zheng, K.; Zha, Z.J. Debiased batch normalization via gaussian process for generalizable person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, 22 February–1 March 2022; Volume 36, pp. 1729–1737. [Google Scholar]

- Jiao, B.; Liu, L.; Gao, L.; Lin, G.; Yang, L.; Zhang, S.; Wang, P.; Zhang, Y. Dynamically transformed instance normalization network for generalizable person re-identification. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 285–301. [Google Scholar]

- Zhong, Y.; Wang, Y.; Zhang, S. Progressive feature enhancement for person re-identification. IEEE Trans. Image Process. 2021, 30, 8384–8395. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Liu, K.; Guo, J.; Zhao, P.; Quan, Y.; Miao, Q. Pose-Guided Attention Learning for Cloth-Changing Person Re-Identification. IEEE Trans. Multimed. 2024, 26, 5490–5498. [Google Scholar] [CrossRef]

- Chen, B.; Deng, W.; Hu, J. Mixed high-order attention network for person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 371–381. [Google Scholar]

- Yan, Y.; Ni, B.; Liu, J.; Yang, X. Multi-level attention model for person re-identification. Pattern Recognit. Lett. 2019, 127, 156–164. [Google Scholar] [CrossRef]

- Zhong, W.; Jiang, L.; Zhang, T.; Ji, J.; Xiong, H. A part-based attention network for person re-identification. Multimed. Tools Appl. 2020, 79, 22525–22549. [Google Scholar] [CrossRef]

- Wu, Y.; Bourahla, O.E.F.; Li, X.; Wu, F.; Tian, Q.; Zhou, X. Adaptive graph representation learning for video person re-identification. IEEE Trans. Image Process. 2020, 29, 8821–8830. [Google Scholar] [CrossRef]

- Liu, X.; Yu, C.; Zhang, P.; Lu, H. Deeply coupled convolution–transformer with spatial–temporal complementary learning for video-based person re-identification. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 13753–13763. [Google Scholar] [CrossRef]

- Ansar, W.; Fraz, M.M.; Shahzad, M.; Gohar, I.; Javed, S.; Jung, S.K. Two stream deep CNN-RNN attentive pooling architecture for video-based person re-identification. In Proceedings of the Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications: 23rd Iberoamerican Congress, CIARP 2018, Madrid, Spain, 19–22 November 2018; pp. 654–661. [Google Scholar]

- Sun, D.; Huang, J.; Hu, L.; Tang, J.; Ding, Z. Multitask multigranularity aggregation with global-guided attention for video person re-identification. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7758–7771. [Google Scholar] [CrossRef]

- Zhang, W.; He, X.; Lu, W.; Qiao, H.; Li, Y. Feature aggregation with reinforcement learning for video-based person re-identification. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 3847–3852. [Google Scholar] [CrossRef]

- Bai, S.; Chang, H.; Ma, B. Incorporating texture and silhouette for video-based person re-identification. Pattern Recognit. 2024, 156, 110759. [Google Scholar] [CrossRef]

- Gu, X.; Chang, H.; Ma, B.; Shan, S. Motion feature aggregation for video-based person re-identification. IEEE Trans. Image Process. 2022, 31, 3908–3919. [Google Scholar] [CrossRef]

- Pan, H.; Liu, Q.; Chen, Y.; He, Y.; Zheng, Y.; Zheng, F.; He, Z. Pose-aided video-based person re-identification via recurrent graph convolutional network. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7183–7196. [Google Scholar] [CrossRef]

- Zhang, T.; Wei, L.; Xie, L.; Zhuang, Z.; Zhang, Y.; Li, B.; Tian, Q. Spatiotemporal transformer for video-based person re-identification. arXiv 2021, arXiv:2103.16469. [Google Scholar] [CrossRef]

- Hou, R.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Temporal complementary learning for video person re-identification. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 388–405. [Google Scholar]

- Liu, J.; Zha, Z.J.; Chen, X.; Wang, Z.; Zhang, Y. Dense 3D-convolutional neural network for person re-identification in videos. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–19. [Google Scholar] [CrossRef]

- Guo, W.; Wang, H. Key Parts Spatio-Temporal Learning for Video Person Re-identification. In Proceedings of the 5th ACM International Conference on Multimedia in Asia, MMAsia 2023, Tainan, Taiwan, 6–8 December 2023; pp. 1–6. [Google Scholar]

- Pei, S.; Fan, X. Multi-Level Fusion Temporal–Spatial Co-Attention for Video-Based Person Re-Identification. Entropy 2021, 23, 1686. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Zhang, S.; Huang, T. Multi-scale temporal cues learning for video person re-identification. IEEE Trans. Image Process. 2020, 29, 4461–4473. [Google Scholar] [CrossRef] [PubMed]

- Zhang, W.; He, X.; Yu, X.; Lu, W.; Zha, Z.; Tian, Q. A multi-scale spatial-temporal attention model for person re-identification in videos. IEEE Trans. Image Process. 2019, 29, 3365–3373. [Google Scholar] [CrossRef]

- Ran, Z.; Wei, X.; Liu, W.; Lu, X. MultiScale Aligned Spatial-Temporal Interaction for Video-Based Person Re-Identification. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 8536–8546. [Google Scholar] [CrossRef]

- Wei, D.; Hu, X.; Wang, Z.; Shen, J.; Ren, H. Pose-guided multi-scale structural relationship learning for video-based pedestrian re-identification. IEEE Access 2021, 9, 34845–34858. [Google Scholar] [CrossRef]

- Wu, L.; Zhang, C.; Li, Z.; Hu, L. Multi-scale Context Aggregation for Video-Based Person Re-Identification. In Proceedings of the International Conference on Neural Information Processing, ICONIP 2023, Changsha, China, 20–23 November 2023; pp. 98–109. [Google Scholar]

- Yang, Y.; Li, L.; Dong, H.; Liu, G.; Sun, X.; Liu, Z. Progressive unsupervised video person re-identification with accumulative motion and tracklet spatial–temporal correlation. Future Gener. Comput. Syst. 2023, 142, 90–100. [Google Scholar] [CrossRef]

- Ye, M.; Li, J.; Ma, A.J.; Zheng, L.; Yuen, P.C. Dynamic graph co-matching for unsupervised video-based person re-identification. IEEE Trans. Image Process. 2019, 28, 2976–2990. [Google Scholar] [CrossRef]

- Zeng, S.; Wang, X.; Liu, M.; Liu, Q.; Wang, Y. Anchor association learning for unsupervised video person re-identification. IEEE Trans. Neural Networks Learn. Syst. 2022, 35, 1013–1024. [Google Scholar] [CrossRef]

- Xie, P.; Xu, X.; Wang, Z.; Yamasaki, T. Sampling and re-weighting: Towards diverse frame aware unsupervised video person re-identification. IEEE Trans. Multimed. 2022, 24, 4250–4261. [Google Scholar] [CrossRef]

- Wang, X.; Panda, R.; Liu, M.; Wang, Y.; Roy-Chowdhury, A.K. Exploiting global camera network constraints for unsupervised video person re-identification. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 4020–4030. [Google Scholar] [CrossRef]

- Wang, Z.; Zhu, A.; Xue, J.; Jiang, D.; Liu, C.; Li, Y.; Hu, F. SUM: Serialized Updating and Matching for text-based person retrieval. Knowl.-Based Syst. 2022, 248, 108891. [Google Scholar] [CrossRef]

- Liu, C.; Xue, J.; Wang, Z.; Zhu, A. PMG—Pyramidal Multi-Granular Matching for Text-Based Person Re-Identification. Appl. Sci. 2023, 13, 11876. [Google Scholar] [CrossRef]

- Bao, L.; Wei, L.; Zhou, W.; Liu, L.; Xie, L.; Li, H.; Tian, Q. Multi-Granularity Matching Transformer for Text-Based Person Search. IEEE Trans. Multimed. 2024, 26, 4281–4293. [Google Scholar] [CrossRef]

- Wu, X.; Ma, W.; Guo, D.; Zhou, T.; Zhao, S.; Cai, Z. Text-based Occluded Person Re-identification via Multi-Granularity Contrastive Consistency Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 6162–6170. [Google Scholar]

- Jing, Y.; Si, C.; Wang, J.; Wang, W.; Wang, L.; Tan, T. Pose-guided multi-granularity attention network for text-based person search. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11189–11196. [Google Scholar]

- Niu, K.; Huang, Y.; Ouyang, W.; Wang, L. Improving description-based person re-identification by multi-granularity image-text alignments. IEEE Trans. Image Process. 2020, 29, 5542–5556. [Google Scholar] [CrossRef]

- Yan, S.; Dong, N.; Zhang, L.; Tang, J. Clip-driven fine-grained text-image person re-identification. IEEE Trans. Image Process. 2023, 32, 6032–6046. [Google Scholar] [CrossRef] [PubMed]

- Zha, Z.J.; Liu, J.; Chen, D.; Wu, F. Adversarial attribute-text embedding for person search with natural language query. IEEE Trans. Multimed. 2020, 22, 1836–1846. [Google Scholar] [CrossRef]

- Wang, Z.; Xue, J.; Zhu, A.; Li, Y.; Zhang, M.; Zhong, C. Amen: Adversarial multi-space embedding network for text-based person re-identification. In Proceedings of the Pattern Recognition and Computer Vision: 4th Chinese Conference, PRCV 2021, Beijing, China, 29 October–1 November 2021; pp. 462–473. [Google Scholar]

- Ke, X.; Liu, H.; Xu, P.; Lin, X.; Guo, W. Text-based person search via cross-modal alignment learning. Pattern Recognit. 2024, 152, 110481. [Google Scholar] [CrossRef]

- Li, Z.; Xie, Y. BCRA: Bidirectional cross-modal implicit relation reasoning and aligning for text-to-image person retrieval. Multimed. Syst. 2024, 30, 177. [Google Scholar] [CrossRef]

- Wu, T.; Zhang, S.; Chen, D.; Hu, H. Multi-level cross-modality learning framework for text-based person re-identification. Electron. Lett. 2023, 59, e12975. [Google Scholar] [CrossRef]

- Wang, W.; An, G.; Ruan, Q. A dual-modal graph attention interaction network for person Re-identification. IET Comput. Vis. 2023, 17, 687–699. [Google Scholar] [CrossRef]

- Zhao, Z.; Liu, B.; Lu, Y.; Chu, Q.; Yu, N. Unifying Multi-Modal Uncertainty Modeling and Semantic Alignment for Text-to-Image Person Re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 7534–7542. [Google Scholar]

- Gong, T.; Wang, J.; Zhang, L. Cross-modal semantic aligning and neighbor-aware completing for robust text–image person retrieval. Inf. Fusion 2024, 112, 102544. [Google Scholar] [CrossRef]

- Gan, W.; Liu, J.; Zhu, Y.; Wu, Y.; Zhao, G.; Zha, Z.J. Cross-Modal Semantic Alignment Learning for Text-Based Person Search. In Proceedings of the International Conference on Multimedia Modeling, Amsterdam, The Netherlands, 29 January–2 February 2024; pp. 201–215. [Google Scholar]

- Liu, Q.; He, X.; Teng, Q.; Qing, L.; Chen, H. BDNet: A BERT-based dual-path network for text-to-image cross-modal person re-identification. Pattern Recognit. 2023, 141, 109636. [Google Scholar] [CrossRef]

- Qi, B.; Chen, Y.; Liu, Q.; He, X.; Qing, L.; Sheriff, R.E.; Chen, H. An image–text dual-channel union network for person re-identification. IEEE Trans. Instrum. Meas. 2023, 72, 1–16. [Google Scholar] [CrossRef]

- Yan, S.; Liu, J.; Dong, N.; Zhang, L.; Tang, J. Prototypical Prompting for Text-to-image Person Re-identification. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 2331–2340. [Google Scholar]

- Huang, B.; Qi, X.; Chen, B. Cross-modal feature learning and alignment network for text–image person re-identification. J. Vis. Commun. Image Represent. 2024, 103, 104219. [Google Scholar] [CrossRef]

- Liu, J.; Wang, J.; Huang, N.; Zhang, Q.; Han, J. Revisiting modality-specific feature compensation for visible-infrared person re-identification. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7226–7240. [Google Scholar] [CrossRef]

- Cheng, Y.; Xiao, G.; Tang, X.; Ma, W.; Gou, X. Two-phase feature fusion network for visible-infrared person re-identification. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 1149–1153. [Google Scholar]

- Xu, B.; Ye, H.; Wu, W. MGFNet: A Multi-granularity Feature Fusion and Mining Network for Visible-Infrared Person Re-identification. In Proceedings of the International Conference on Neural Information Processing, Changsha, China, 20–23 November 2023; pp. 15–28. [Google Scholar]

- Wang, X.; Chen, C.; Zhu, Y.; Chen, S. Feature fusion and center aggregation for visible-infrared person re-identification. IEEE Access 2022, 10, 30949–30958. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, X.; Chai, Y.; Xu, K.; Jiang, Y.; Liu, B. Visible-infrared person re-identification with complementary feature fusion and identity consistency learning. Int. J. Mach. Learn. Cybern. 2024, 16, 703–719. [Google Scholar] [CrossRef]

- Sarker, P.K.; Zhao, Q. Enhanced visible–infrared person re-identification based on cross-attention multiscale residual vision transformer. Pattern Recognit. 2024, 149, 110288. [Google Scholar] [CrossRef]

- Feng, Y.; Yu, J.; Chen, F.; Ji, Y.; Wu, F.; Liu, S.; Jing, X.Y. Visible-infrared person re-identification via cross-modality interaction transformer. IEEE Trans. Multimed. 2022, 25, 7647–7659. [Google Scholar] [CrossRef]

- Qi, M.; Wang, S.; Huang, G.; Jiang, J.; Wu, J.; Chen, C. Mask-guided dual attention-aware network for visible-infrared person re-identification. Multimed. Tools Appl. 2021, 80, 17645–17666. [Google Scholar] [CrossRef]

- Park, H.; Lee, S.; Lee, J.; Ham, B. Learning by aligning: Visible-infrared person re-identification using cross-modal correspondences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12046–12055. [Google Scholar]

- Liu, Q.; Teng, Q.; Chen, H.; Li, B.; Qing, L. Dual adaptive alignment and partitioning network for visible and infrared cross-modality person re-identification. Appl. Intell. 2022, 52, 547–563. [Google Scholar] [CrossRef]

- Cheng, X.; Deng, S.; Yu, H.; Zhao, G. DMANet: Dual-modality alignment network for visible–infrared person re-identification. Pattern Recognit. 2025, 157, 110859. [Google Scholar] [CrossRef]

- Wu, J.; Liu, H.; Su, Y.; Shi, W.; Tang, H. Learning concordant attention via target-aware alignment for visible-infrared person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 11122–11131. [Google Scholar]

- Jiang, K.; Zhang, T.; Liu, X.; Qian, B.; Zhang, Y.; Wu, F. Cross-modality transformer for visible-infrared person re-identification. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 480–496. [Google Scholar]

- Zhang, G.; Zhang, Y.; Zhang, H.; Chen, Y.; Zheng, Y. Learning dual attention enhancement feature for visible–infrared person re-identification. J. Vis. Commun. Image Represent. 2024, 99, 104076. [Google Scholar] [CrossRef]

- Shi, W.; Liu, H.; Liu, M. Image-to-video person re-identification using three-dimensional semantic appearance alignment and cross-modal interactive learning. Pattern Recognit. 2022, 122, 108314. [Google Scholar] [CrossRef]

- Shim, M.; Ho, H.I.; Kim, J.; Wee, D. Read: Reciprocal attention discriminator for image-to-video re-identification. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 335–350. [Google Scholar]

- Wu, W.; Liu, J.; Zheng, K.; Sun, Q.; Zha, Z.J. Temporal complementarity-guided reinforcement learning for image-to-video person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7319–7328. [Google Scholar]

- Gu, X.; Ma, B.; Chang, H.; Shan, S.; Chen, X. Temporal knowledge propagation for image-to-video person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9647–9656. [Google Scholar]

- Zhang, X.; Li, S.; Jing, X.Y.; Ma, F.; Zhu, C. Unsupervised domain adaption for image-to-video person re-identification. Multimed. Tools Appl. 2020, 79, 33793–33810. [Google Scholar] [CrossRef]

- Zhu, X.; Ye, P.; Jing, X.Y.; Zhang, X.; Cui, X.; Chen, X.; Zhang, F. Heterogeneous distance learning based on kernel analysis-synthesis dictionary for semi-supervised image to video person re-identification. IEEE Access 2020, 8, 169663–169675. [Google Scholar] [CrossRef]

- Wang, Z.; Zhang, J.; Zheng, L.; Liu, Y.; Sun, Y.; Li, Y.; Wang, S. Cycas: Self-supervised cycle association for learning re-identifiable descriptions. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 72–88. [Google Scholar]

- Yu, B.; Xu, N.; Zhou, J. Cross-media body-part attention network for image-to-video person re-identification. IEEE Access 2019, 7, 94966–94976. [Google Scholar] [CrossRef]

- Zhang, X.; Feng, W.; Han, R.; Wang, L.; Song, L.; Hou, J. From Synthetic to Real: Unveiling the Power of Synthetic Data for Video Person Re-ID. arXiv 2024, arXiv:2402.02108. [Google Scholar] [CrossRef]

- Defonte, A.D. Synthetic-to-Real Domain Transfer with Joint Image Translation and Discriminative Learning for Pedestrian Re-Identification. Ph.D. Thesis, Politecnico di Torino, Turin, Italy, 2022. [Google Scholar]

- Zhang, T.; Xie, L.; Wei, L.; Zhuang, Z.; Zhang, Y.; Li, B.; Tian, Q. Unrealperson: An adaptive pipeline towards costless person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 11506–11515. [Google Scholar]

- Yuan, D.; Chang, X.; Huang, P.Y.; Liu, Q.; He, Z. Self-supervised deep correlation tracking. IEEE Trans. Image Process. 2020, 30, 976–985. [Google Scholar] [CrossRef]

- Yang, S.; Zhang, Y. MLLMReID: Multimodal Large Language Model-based Person Re-identification. arXiv 2024, arXiv:2401.13201. [Google Scholar]

- Wang, Q.; Li, B.; Xue, X. When Large Vision-Language Models Meet Person Re-Identification. arXiv 2024, arXiv:2411.18111. [Google Scholar] [CrossRef]

- Jin, W.; Yanbin, D.; Haiming, C. Lightweight Person Re-identification for Edge Computing. IEEE Access 2024, 12, 75899–75906. [Google Scholar] [CrossRef]

- Yuan, C.; Liu, X.; Guo, L.; Chen, L.; Chen, C.P. Lightweight Attention Network Based on Fuzzy Logic for Person Re-Identification. In Proceedings of the 2024 International Conference on Fuzzy Theory and Its Applications (iFUZZY), Kagawa, Japan, 10–13 August 2024; pp. 1–6. [Google Scholar]

- Yuan, D.; Chang, X.; Li, Z.; He, Z. Learning adaptive spatial-temporal context-aware correlation filters for UAV tracking. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022, 18, 1–18. [Google Scholar] [CrossRef]

- RichardWebster, B.; Hu, B.; Fieldhouse, K.; Hoogs, A. Doppelganger saliency: Towards more ethical person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2847–2857. [Google Scholar]

- Behera, N.K.S.; Sa, P.K.; Bakshi, S.; Bilotti, U. Explainable graph-attention based person re-identification in outdoor conditions. Multimed. Tools Appl. 2025, 84, 34781–34793. [Google Scholar] [CrossRef]

- Shu, X.; Yuan, D.; Liu, Q.; Liu, J. Adaptive weight part-based convolutional network for person re-identification. Multimed. Tools Appl. 2020, 79, 23617–23632. [Google Scholar] [CrossRef]

| Manuscript | Year | Major Innovative Aspect |
|---|---|---|
| Ye et al. [1] | 2021 | • Five-stage evolutionary analysis of person Re-ID research progress |
| | | • Comparative study of image/video models with detailed architectural analysis |
| | | • Identification of fundamental challenges across processing stages |
| Ming et al. [2] | 2022 | • Systematic categorization of deep learning approaches into four methodological classes |
| | | • Comprehensive analysis of image/video benchmark datasets |
| Zheng et al. [3] | 2022 | • Framework for visible-infrared cross-modal Re-ID challenges |
| | | • Systematic analysis of inter-modal and intra-modal variation mitigation |
| Huang et al. [4] | 2023 | • Task-oriented methodology comparison across image modalities |
| | | • PRISMA*-guided systematic literature selection methodology |
| Zahra et al. [5] | 2023 | • Historical analysis through research paradigm shifts |
| | | • Multimodal performance visualization and comparative evaluation |

| Metric | Value | Description |
|---|---|---|
| Average clustering coefficient | 0.81 | Indicating strong community structure with tightly interconnected node clusters |
| Modularization index | 0.67 | Showing moderate specialization of functional modules |
| Network diameter | 14 | Reflecting efficient information dissemination |
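
As a rough illustration of what the three network metrics in the table above measure, the sketch below computes them on a tiny, hypothetical co-authorship graph in plain Python. The toy edge list, the function names, and the reading of "modularization index" as Newman modularity for a given partition are assumptions made for illustration only; they do not reproduce the review's actual analysis pipeline or its reported values.

```python
from collections import deque


def average_clustering(adj):
    """Mean local clustering coefficient of an undirected graph."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        nbr_list = list(nbrs)
        # Count edges that exist between pairs of neighbours.
        links = sum(
            1
            for i in range(k)
            for j in range(i + 1, k)
            if nbr_list[j] in adj[nbr_list[i]]
        )
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)


def diameter(adj):
    """Longest shortest-path length (in hops) of a connected graph."""
    def eccentricity(src):
        dist = {src: 0}
        queue = deque([src])
        while queue:  # breadth-first search from src
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        return max(dist.values())
    return max(eccentricity(node) for node in adj)


def modularity(adj, communities):
    """Newman modularity Q of a given partition (assumed definition)."""
    m = sum(len(nbrs) for nbrs in adj.values()) / 2  # number of edges
    q = 0.0
    for comm in communities:
        internal = sum(1 for u in comm for v in adj[u] if v in comm) / 2
        degree = sum(len(adj[u]) for u in comm)
        q += internal / m - (degree / (2 * m)) ** 2
    return q


# Hypothetical toy graph: two triangles of authors joined by one bridge edge.
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d"),
         ("d", "e"), ("e", "f"), ("d", "f")]
adj = {}
for u, v in edges:
    adj.setdefault(u, set()).add(v)
    adj.setdefault(v, set()).add(u)

avg_cc = average_clustering(adj)
diam = diameter(adj)
q = modularity(adj, [{"a", "b", "c"}, {"d", "e", "f"}])
print(round(avg_cc, 3), diam, round(q, 3))
```

On real bibliometric data the partition would come from a community-detection step rather than being supplied by hand, and the diameter is usually computed on the largest connected component.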

| Category | Keywords/Concept |
|---|---|
| Methodological Foundations | convolutional neural networks and metric learning |
| Technological Innovations | generative adversarial networks and graph neural networks |
| Application Orientations | video surveillance and smart cities |

| Topic/Strategy | Percentage |
|---|---|
| Novel architecture designs | 42% |
| Cross-domain adaptation strategies | 31% |
| Real-world deployment frameworks | 27% |

| Dataset | Year | IDs | Images | Cams. | Train IDs | Train Images | Test IDs | Test Images | Metrics |
|---|---|---|---|---|---|---|---|---|---|
| CUHK-01 [7] | 2012 | 971 | 3884 | 2 | – | – | – | – | CMC |
| CUHK-02 [8] | 2013 | 1816 | 7264 | 10 | – | – | – | – | CMC |
| CUHK-03 [9] | 2014 | 1467 | 12,696 | 10 | 767 | 7368 | 700 | 5328 | CMC |
| Market-1501 [10] | 2015 | 1501 | 32,668 | 6 | 751 | 12,936 | 750 | 19,732 | CMC, mAP |
| DukeMTMC-reID [11] | 2017 | 1812 | 36,411 | 8 | 702 | 16,522 | 702 | 17,661 | CMC, mAP |
| P-DukeMTMC-reID [12] | 2017 | 1299 | 15,090 | 8 | 665 | 12,927 | 634 | 2163 | CMC, mAP |
| CUHK-SYSU [13] | 2017 | 8432 | 18,184 | – | 5532 | 11,206 | 2900 | 6978 | mAP, Rank-k |
| MSMT17 [14] | 2018 | 4101 | 126,441 | 15 | 1041 | 32,621 | 3060 | 93,820 | CMC, mAP |
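
The CMC (Rank-k) and mAP metrics listed in the table above can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions: each query arrives as a list of match flags already sorted by ascending distance, and the same-camera filtering and junk-image handling used by real benchmark protocols are omitted. The function name and toy data are hypothetical.

```python
def rank_k_and_map(ranked_matches, ks=(1, 5)):
    """Compute Rank-k accuracy and mean average precision.

    ranked_matches: one list of booleans per query; the gallery is assumed
    to be sorted by ascending distance, and True marks a gallery item that
    shares the query's identity.
    """
    hits = {k: 0 for k in ks}
    ap_sum = 0.0
    for matches in ranked_matches:
        # Rank-k: is there at least one correct match in the top k?
        for k in ks:
            if any(matches[:k]):
                hits[k] += 1
        # Average precision: mean of precision values at each correct match.
        found, precisions = 0, []
        for pos, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precisions.append(found / pos)
        ap_sum += sum(precisions) / len(precisions) if precisions else 0.0
    n = len(ranked_matches)
    return {k: hits[k] / n for k in ks}, ap_sum / n


# Hypothetical toy ranking results for three queries over a 4-image gallery.
toy_rankings = [
    [True, False, True, False],   # first hit at rank 1
    [False, True, False, False],  # first hit at rank 2
    [False, False, False, True],  # first hit at rank 4
]
rank_scores, mean_ap = rank_k_and_map(toy_rankings, ks=(1, 2))
print(rank_scores, round(mean_ap, 4))
```

Rank-k only looks at the first correct match, whereas mAP rewards retrieving all correct gallery images early, which is why the larger multi-camera datasets report both.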

| Dataset | Year | IDs | Tracklets | Cams. | Train IDs | Train Tracklets | Test IDs | Test Tracklets | Metrics |
|---|---|---|---|---|---|---|---|---|---|
| PRID2011 [15] | 2011 | 200 | 400 | 2 | – | – | – | – | CMC |
| GRID [16] | 2012 | 250 | 500 | 17 | – | – | – | – | CMC |
| iLIDS-VID [17] | 2014 | 300 | 600 | 2 | – | – | – | – | CMC |
| MARS [18] | 2016 | 1261 | 20,715 | 6 | 625 | 8298 | 636 | 12,180 | CMC, mAP |
| DukeMTMC-Video [19] | 2018 | 1812 | 4832 | 8 | 702 | 2196 | 702 | 2636 | CMC, mAP |
| LPW [20] | 2018 | 2731 | 7694 | 11 | 1975 | 5938 | 756 | 1756 | CMC, mAP |

| Dataset | Year | IDs | Images | Text Desc. | Train IDs | Train Imgs. | Test IDs | Test Imgs. | Avg. Text Len. | Metric |
|---|---|---|---|---|---|---|---|---|---|---|
| CUHK-PEDES [21] | 2017 | 13,003 | 40,206 | 80,412 | 11,003 | 34,054 | 1000 | 3074 | 23.5 | Rank-1/5/10, mAP |
| RSTPReid [22] | 2021 | 4101 | 20,505 | 39,010 | 3701 | 18,505 | 200 | 1000 | 24.8 | Rank-1/5/10, CMC |
| ICFG-PEDES [23] | 2021 | 4102 | 54,522 | 54,522 | 3102 | 34,674 | 1000 | 19,848 | 37.2 | Rank-1/5/10, mAP |

| Dataset | Year | IDs | Vis. Cams. | Vis. Imgs. | IR Cams. | IR Imgs. | Train IDs | Train Vis. | Train IR | Test IDs | Test Vis. | Test IR | Metrics |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SYSU-MM01 [24] | 2017 | 491 | 4 | 30,071 | 2 | 15,792 | 395 | 22,258 | 11,909 | 96 | 7813 | 3883 | Rank-1/10, mAP |
| RegDB [25] | 2021 | 412 | 1 | 4120 | 1 | 4120 | 206 | 2060 | 2060 | 206 | 2060 | 2060 | Rank-1/20, mAP |
| LLCM [26] | 2023 | 1064 | 5 | 25,626 | 4 | 21,141 | 713 | 16,946 | 13,975 | 351 | 8680 | 7166 | Rank-1/5/10, mAP |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| MVCDL [27] | PRID 2011 | 28.8 | 55.6 | - |
| | iLIDS-VID | 18.7 | 46.2 | - |
| MVMP [28] | Market-1501 | 95.3 | 98.4 | 88.9 |
| | DukeMTMC-reID | 87.4 | 94.5 | 79.7 |
| MVI2P [29] | Market-1501 | 95.3 | - | 87.9 |
| | P-DukeMTMC-reID | 91.9 | 94.4 | 80.9 |
| MVDC [30] | Market-1501 | 75.2 | - | 52.6 |
| | DukeMTMC-reID | 57.6 | - | 37.8 |
| Yu et al. [31] | Market-1501 | 95.7 | - | 90.2 |
| | DukeMTMC-reID | 91.4 | - | 84.1 |

| Method | Dataset | mAP (%) | Rank-1 (%) |
|---|---|---|---|
| CINorm [32] | Market-1501 | 57.8 | 82.3 |
| | MSMT17 | 21.1 | 49.7 |
| | DukeMTMC-reID | 52.4 | 71.3 |
| MixNorm [33] | PRID | 74.3 | 65.2 |
| | VIPeR | 66.6 | 56.4 |
| UDG-ReID [34] | Market-1501 | 79.7 | 53.2 |
| | MSMT17 | 42.4 | 16.8 |
| GDNorm [35] | VIPeR | 74.1 | 66.1 |
| | PRID | 79.9 | 72.6 |
| DTIN [36] | VIPeR | 70.7 | 62.9 |
| | PRID | 79.7 | 71.0 |

| Method | Dataset | mAP (%) | Rank-1 (%) |
|---|---|---|---|
| PFE [37] | Market-1501 | 86.2 | 95.1 |
| | DukeMTMC-reID | 75.9 | 88.2 |
| | CUHK03 | 68.6 | 71.6 |
| PGAL [38] | PRCC | 58.7 | 59.5 |
| | LTCC | 27.7 | 62.5 |
| HOA [39] | Market-1501 | 95.1 | 85.0 |
| | DukeMTMC-reID | 89.1 | 77.2 |
| | CUHK03 | 77.2 | 65.4 |
| Yan et al. [40] | Market-1501 | 94.5 | 82.5 |
| | DukeMTMC-reID | 72.0 | 85.6 |
| PAM [41] | CUHK03 | 64.1 | 60.8 |
| | DukeMTMC-reID | 84.7 | 69.4 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| Wu et al. [42] | PRID 2011 | 94.6 | 99.1 | - |
| | iLIDS-VID | 84.5 | 96.7 | - |
| | MARS | 89.8 | 96.1 | 81.1 |
| DCCT [43] | PRID 2011 | 96.8 | 99.7 | - |
| | iLIDS-VID | 91.7 | 98.6 | - |
| | MARS | 91.5 | 97.4 | 86.3 |
| Ansar et al. [44] | PRID 2011 | 84.0 | 97.6 | - |
| | iLIDS-VID | 76.6 | 90.8 | - |
| | MARS | 56.0 | 67.0 | - |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| MMA-GGA [45] | DukeMTMC-VideoReID | 97.3 | 99.6 | 96.2 |
| | PRID 2011 | 95.5 | 100 | - |
| | iLIDS-VID | 98.7 | 98.7 | - |
| Zhang et al. [46] | PRID 2011 | 91.2 | 98.9 | - |
| | iLIDS-VID | 68.4 | 87.2 | - |
| Bai et al. [47] | MARS | 91.5 | - | 87.4 |
| | iLIDS-VID | 90.6 | - | 84.2 |
| MFA [48] | MARS | 90.4 | - | 85.0 |
| | iLIDS-VID | 88.2 | - | 78.9 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| Pan et al. [49] | iLIDS-VID | 90.2 | 98.5 | - |
| | DukeMTMC-VideoReID | 97.1 | 98.8 | 96.5 |
| | MARS | 91.1 | 97.2 | 86.5 |
| Zhang et al. [50] | iLIDS-VID | 87.5 | 95.0 | 78.0 |
| | DukeMTMC-VideoReID | 97.6 | - | 97.4 |
| | MARS | 88.7 | - | 86.3 |
| Hou et al. [51] | iLIDS-VID | 86.6 | - | - |
| | DukeMTMC-VideoReID | 96.9 | - | 96.2 |
| | MARS | 89.8 | - | 85.1 |
| D3DNet [52] | iLIDS-VID | 65.4 | 87.9 | - |
| | MARS | 76.0 | 87.2 | 71.4 |
| KSTL [53] | iLIDS-VID | 93.4 | - | - |
| | PRID 2011 | 96.7 | - | - |
| | MARS | 91.5 | - | 86.3 |
| MLTS [54] | iLIDS-VID | 94.0 | 98.67 | - |
| | PRID 2011 | 96.63 | 97.75 | - |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| PM3D [55] | DukeMTMC-VideoReID | 95.49 | 99.3 | 93.67 |
| | iLIDS-VID | 86.67 | 98.00 | - |
| | MARS | 88.87 | 96.64 | 85.36 |
| MSTA [56] | iLIDS-VID | 70.1 | 88.67 | - |
| | PRID-2011 | 91.2 | 98.72 | - |
| | MARS | 84.08 | 93.52 | 79.67 |
| MS-STI [57] | DukeMTMC-VideoReID | 97.4 | 99.7 | 97.1 |
| | MARS | 92.7 | 97.5 | 87.2 |
| Wei et al. [58] | iLIDS-VID | 85.5 | 91.4 | - |
| | PRID-2011 | 94.7 | 99.2 | - |
| | MARS | 90.2 | 96.6 | 83.2 |
| MSCA [59] | iLIDS-VID | 84.7 | 94.7 | - |
| | PRID-2011 | 96.6 | 100 | - |
| | MARS | 91.8 | 96.5 | 83.2 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| TASTC [60] | iLIDS-VID | 52.5 | 70.2 | - |
| | DukeMTMC-VideoReID | 76.8 | - | 68.2 |
| DGM [61] | iLIDS-VID | 42.6 | 67.7 | - |
| | PRID-2011 | 83.3 | 96.7 | - |
| | MARS | 24.3 | 40.4 | 11.9 |
| UAAL [62] | DukeMTMC-VideoReID | 89.7 | 97.0 | 87.0 |
| | MARS | 73.2 | 86.3 | 60.1 |
| SRC [63] | DukeMTMC-VideoReID | 83.0 | 83.3 | 76.5 |
| | MARS | 62.7 | 76.1 | 40.5 |
| | PRID-2011 | 72.0 | 87.7 | - |
| CCM [64] | DukeMTMC-VideoReID | 76.5 | 89.6 | 68.7 |
| | MARS | 65.3 | 77.8 | 41.2 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | Rank-10 (%) |
|---|---|---|---|---|
| SUM [65] | CUHK-PEDES | 59.22 ± 0.02 | 80.35 ± 0.02 | 87.6 ± 0.03 |
| PMG [66] | CUHK-PEDES | 64.59 | 83.19 | 89.12 |
| | RSTPReid | 48.85 | 72.65 | 81.30 |
| Bao et al. [67] | CUHK-PEDES | 68.23 | 86.37 | 91.65 |
| | RSTPReid | 56.05 | 78.65 | 86.75 |
| MGCC [68] | Occluded-RSTPReid | 49.85 | 74.95 | 83.45 |
| PMA [69] | CUHK-PEDES | 53.81 | 73.54 | 81.23 |
| MIA [70] | CUHK-PEDES | 53.10 | 75.00 | 82.90 |
| CFine [71] | ICFG-PEDES | 60.83 | 76.55 | 82.42 |
| | CUHK-PEDES | 69.57 | 85.93 | 91.15 |
| | RSTPReid | 50.55 | 72.50 | 81.60 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | Rank-10 (%) | mAP (%) |
|---|---|---|---|---|---|
| AATE [72] | CUHK-PEDES | 52.42 | 74.98 | 82.74 | - |
| AMEN [73] | CUHK-PEDES | 57.16 | 78.64 | 86.22 | - |
| MAPS [74] | ICFG-PEDES | 57.22 | - | 82.70 | - |
| | CUHK-PEDES | 65.24 | - | 90.10 | - |
| BCRA [75] | ICFG-PEDES | 64.77 | 80.83 | 86.31 | 39.48 |
| | CUHK-PEDES | 75.03 | 89.93 | 93.89 | 51.80 |
| | RSTPReid | 62.29 | 82.16 | 89.03 | 48.43 |
| MCL [76] | ICFG-PEDES | 53.43 | 76.45 | 84.28 | 0.46 |
| | CUHK-PEDES | 61.21 | 81.52 | 88.22 | 0.52 |
| Dual-GAIN [77] | ICFG-PEDES | 53.43 | 76.45 | 84.28 | 0.46 |
| | CUHK-PEDES | 61.21 | 81.52 | 88.22 | 0.52 |
| Zhao et al. [78] | CUHK-PEDES | 63.4 | 83.3 | 90.3 | 49.28 |
| | ICFG-PEDES | 65.62 | 80.54 | 85.83 | 38.78 |
| CANC [79] | CUHK-PEDES | 54.95 | 77.39 | 84.24 | - |
| | ICFG-PEDES | 44.22 | 64.93 | 72.86 | - |
| | RSTPReid | 45.78 | 71.28 | 81.86 | - |
| SAL [80] | CUHK-PEDES | 69.14 | 85.90 | 90.80 | - |
| | ICFG-PEDES | 62.77 | 78.64 | 84.21 | - |
| SSAN [23] | CUHK-PEDES | 64.13 | 82.62 | 88.4 | - |
| | Flickr30K | 50.74 | 77.92 | 85.46 | - |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| Liu et al. [81] | CUHK-PEDES | 66.27 | 85.07 | - |
| | ICFG-PEDES | 57.31 | 76.15 | - |
| Qi et al. [82] | Market-1501 | 96.5 | - | 94.9 |
| | CUHK03 | 86.9 | - | 88.5 |
| Yan et al. [83] | CUHK-PEDES | 74.89 | 89.90 | 67.12 |
| | ICFG-PEDES | 65.12 | 81.57 | 42.93 |
| | RSTPReid | 61.87 | 83.63 | 47.82 |
| CFLAA [84] | CUHK-PEDES | 78.4 | 92.6 | 66.9 |
| | ICFG-PEDES | 69.4 | 80.7 | - |
| | RSTPReid | 64.2 | 84.1 | - |

| Method | Dataset | Classification | Rank-1 (%) | Rank-10 (%) | mAP (%) |
|---|---|---|---|---|---|
| TSME [85] | SYSU-MM01 | ALL-Search (Single-shot) | 64.23 | 95.19 | 61.21 |
| | | Indoor-Search (Single-shot) | 64.80 | 96.92 | 99.31 |
| | RegDB | Visible-to-Infrared | 87.35 | 97.10 | 76.94 |
| | | Infrared-to-Visible | 86.41 | 96.39 | 75.70 |
| TFFN [86] | SYSU-MM01 | - | 58.37 | 91.30 | 56.02 |
| | RegDB | - | 81.17 | 93.69 | 77.16 |
| MGFNet [87] | SYSU-MM01 | ALL-Search (Single-shot) | 72.63 | - | 69.64 |
| | | Indoor-Search (Single-shot) | 77.90 | - | 82.28 |
| | RegDB | Visible-to-Infrared | 91.14 | - | 82.53 |
| | | Infrared-to-Visible | 89.06 | - | 80.5 |
| F2CALNet [88] | SYSU-MM01 | ALL-Search (Single-shot) | 62.86 | - | 59.38 |
| | | Indoor-Search (Single-shot) | 66.83 | - | 71.94 |
| | RegDB | Visible-to-Infrared | 86.88 | - | 76.33 |
| | | Infrared-to-Visible | 85.68 | - | 74.87 |
| CFF-ICL [89] | SYSU-MM01 | ALL-Search (Single-shot) | 66.37 | - | 63.56 |
| | | Indoor-Search (Single-shot) | 68.76 | - | 72.47 |
| | RegDB | Visible-to-Infrared | 90.63 | - | 82.77 |
| | | Infrared-to-Visible | 88.65 | - | 81.97 |
| WF-CAMReViT [90] | SYSU-MM01 | ALL-Search | 68.05 | 97.12 | 65.17 |
| | | Indoor-Search | 72.43 | 97.16 | 77.58 |
| | RegDB | Visible-to-Infrared | 91.66 | 95.97 | 85.96 |
| | | Infrared-to-Visible | 92.97 | 95.19 | 86.08 |
| CMIT [91] | SYSU-MM01 | ALL-Search | 70.94 | 94.93 | 65.51 |
| | | Indoor-Search | 73.28 | 95.20 | 77.18 |
| | RegDB | Visible-to-Infrared | 88.78 | 94.76 | 88.49 |
| | | Infrared-to-Visible | 84.55 | 93.72 | 83.64 |
| MDAN [92] | SYSU-MM01 | ALL-Search | 39.07 | 84.85 | 40.52 |
| | | Indoor-Search | 39.13 | 86.37 | 48.88 |
| | RegDB | - | 43.25 | 68.79 | 41.59 |

| Method | Dataset | Classification | Rank-1 (%) | Rank-10 (%) | mAP (%) |
|---|---|---|---|---|---|
| Park et al. [93] | SYSU-MM01 | ALL-Search (Single-shot) | 55.41 ± 0.18 | - | 54.14 ± 0.33 |
| | | Indoor-Search (Single-shot) | 58.46 ± 0.67 | - | 66.33 ± 1.27 |
| | RegDB | Visible-to-Infrared | 74.17 ± 0.04 | - | 67.64 ± 0.08 |
| | | Infrared-to-Visible | 72.43 ± 0.42 | - | 65.46 ± 0.18 |
| Liu et al. [94] | SYSU-MM01 | - | 52.99 | 91.98 | 60.73 |
| | RegDB | - | 52.14 | 75.44 | 49.92 |
| DMANet [95] | SYSU-MM01 | ALL-Search (Single-shot) | 76.33 | 97.58 | 69.38 |
| | | Indoor-Search (Single-shot) | 81.48 | 98.96 | 83.76 |
| | RegDB | Visible-to-Infrared | 94.51 | - | 88.46 |
| | | Infrared-to-Visible | 93.25 | - | 87.18 |
| CAL [96] | SYSU-MM01 | ALL-Search (Single-shot) | 74.66 | 96.47 | 71.73 |
| | | Indoor-Search (Single-shot) | 79.69 | 98.93 | 86.97 |
| | RegDB | Visible-to-Infrared | 94.51 | 99.70 | 88.67 |
| | | Infrared-to-Visible | 93.64 | 99.46 | 97.61 |
| CMT [97] | SYSU-MM01 | ALL-Search (Single-shot) | 71.88 | 96.45 | 68.57 |
| | | Indoor-Search (Single-shot) | 76.90 | 97.68 | 79.91 |
| | RegDB | Visible-to-Infrared | 95.17 | 98.82 | 87.30 |
| | | Infrared-to-Visible | 91.97 | 97.92 | 86.46 |
| Zhang et al. [98] | SYSU-MM01 | ALL-Search | 66.61 | - | 62.86 |
| | | Indoor-Search | 70.90 | - | 75.78 |
| | RegDB | Visible-to-Infrared | 90.76 | - | 87.30 |
| | | Infrared-to-Visible | 88.79 | - | 85.44 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | mAP (%) |
|---|---|---|---|---|
| Shi et al. [99] | DukeMTMC-VideoReID | 82.8 | 92.0 | 81.0 |
| | MARS | 79.1 | 89.4 | 69.0 |
| READ [100] | DukeMTMC-VideoReID | 86.3 | 94.4 | 83.4 |
| | MARS | 91.5 | 92.1 | 70.4 |
| TCRL [101] | iLIDS-VID | 77.3 | 94.7 | - |
| | MARS | 86.0 | 92.5 | 80.1 |
| TKP [102] | iLIDS-VID | 54.6 | 79.4 | - |
| | MARS | 75.6 | 87.6 | 65.1 |

| Method | Dataset | Rank-1 (%) | Rank-5 (%) | Rank-10 (%) |
|---|---|---|---|---|
| CMGTN [103] | iLIDS-VID | 38.4 | 66.2 | 74.8 |
| | MARS | 41.2 | 70.1 | 76.5 |
| | PRID-2011 | 42.7 | 66.8 | 81.3 |
| KADDL [104] | iLIDS-VID | 56.3 | 81.9 | 88.7 |
| | MARS | 74.3 | 87.8 | 91.5 |
| | PRID-2011 | 80.4 | 95.1 | 97.2 |
| CycAs [105] | iLIDS-VID | 73.3 | - | - |
| | MARS | 72.8 | - | - |
| | PRID-2011 | 86.5 | - | - |
| CBAN [106] | iLIDS-VID | 43.2 | 71.0 | 80.1 |
| | MARS | 68.2 | 85.3 | 88.9 |
| | PRID-2011 | 74.6 | 90.6 | 95.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, L.; Han, Y.; Chen, Z. Advancements and Challenges in Deep Learning-Based Person Re-Identification: A Review. Electronics 2025, 14, 4398. https://doi.org/10.3390/electronics14224398
