A Lightweight Traffic Signal Video Stream Detection Model Based on Depth-Wise Separable Convolution

Abstract

1. Introduction

1.1. Background

1.2. Related Works

1.3. Contribution

1.4. Organization

2. Proposed Methods

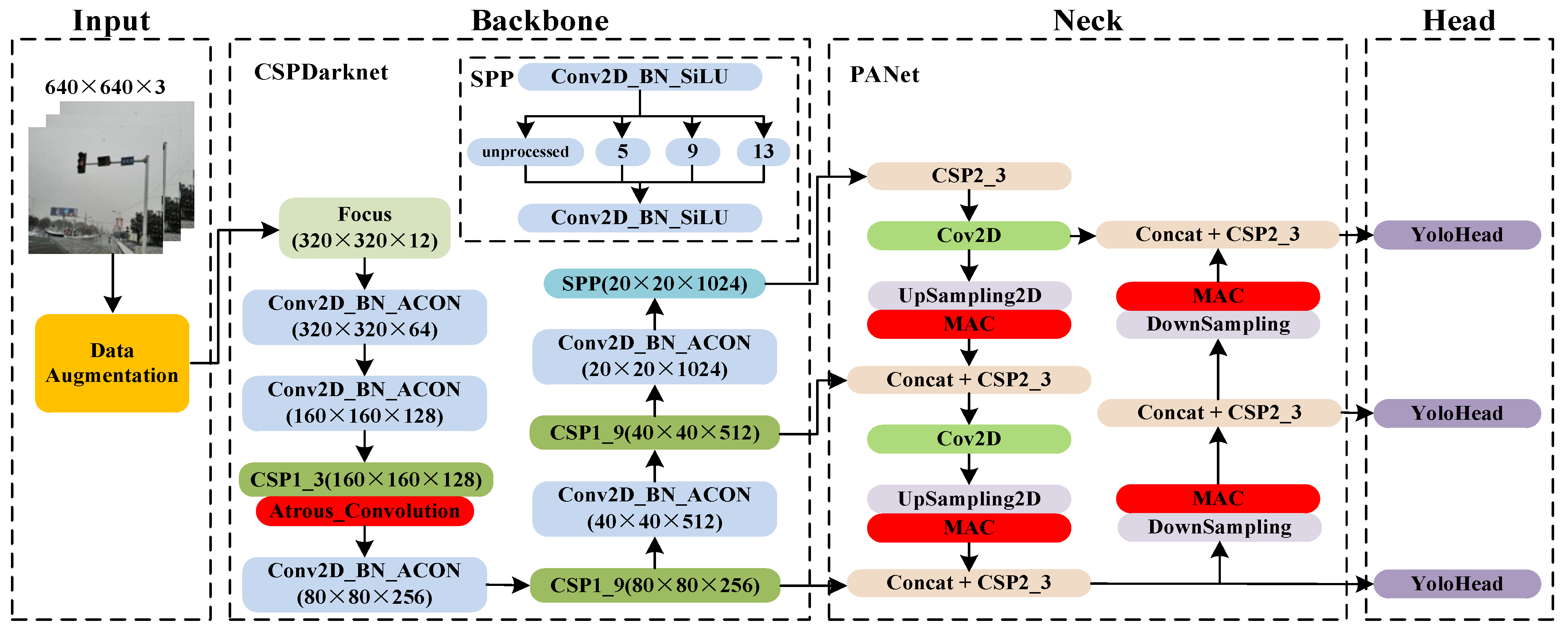

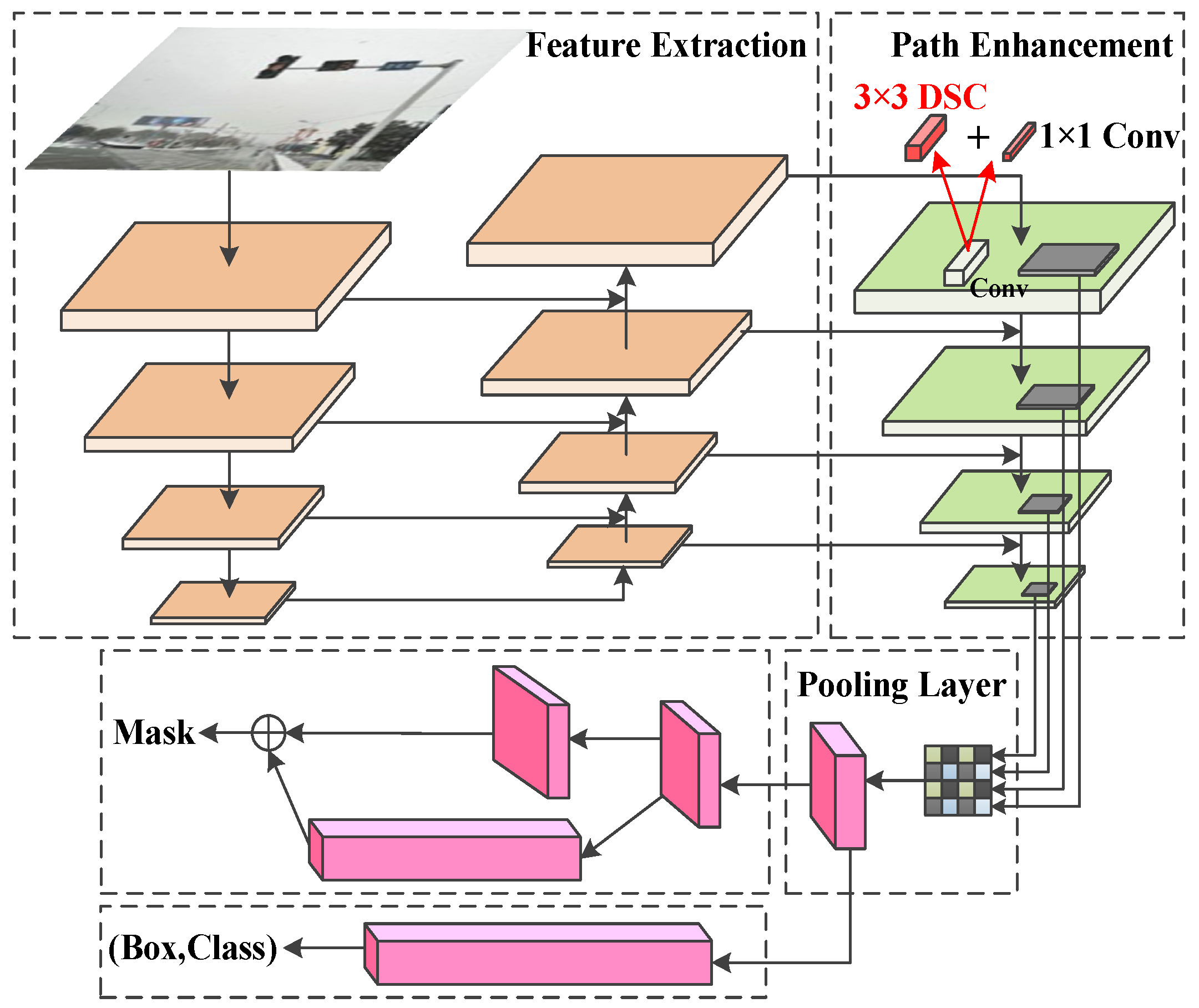

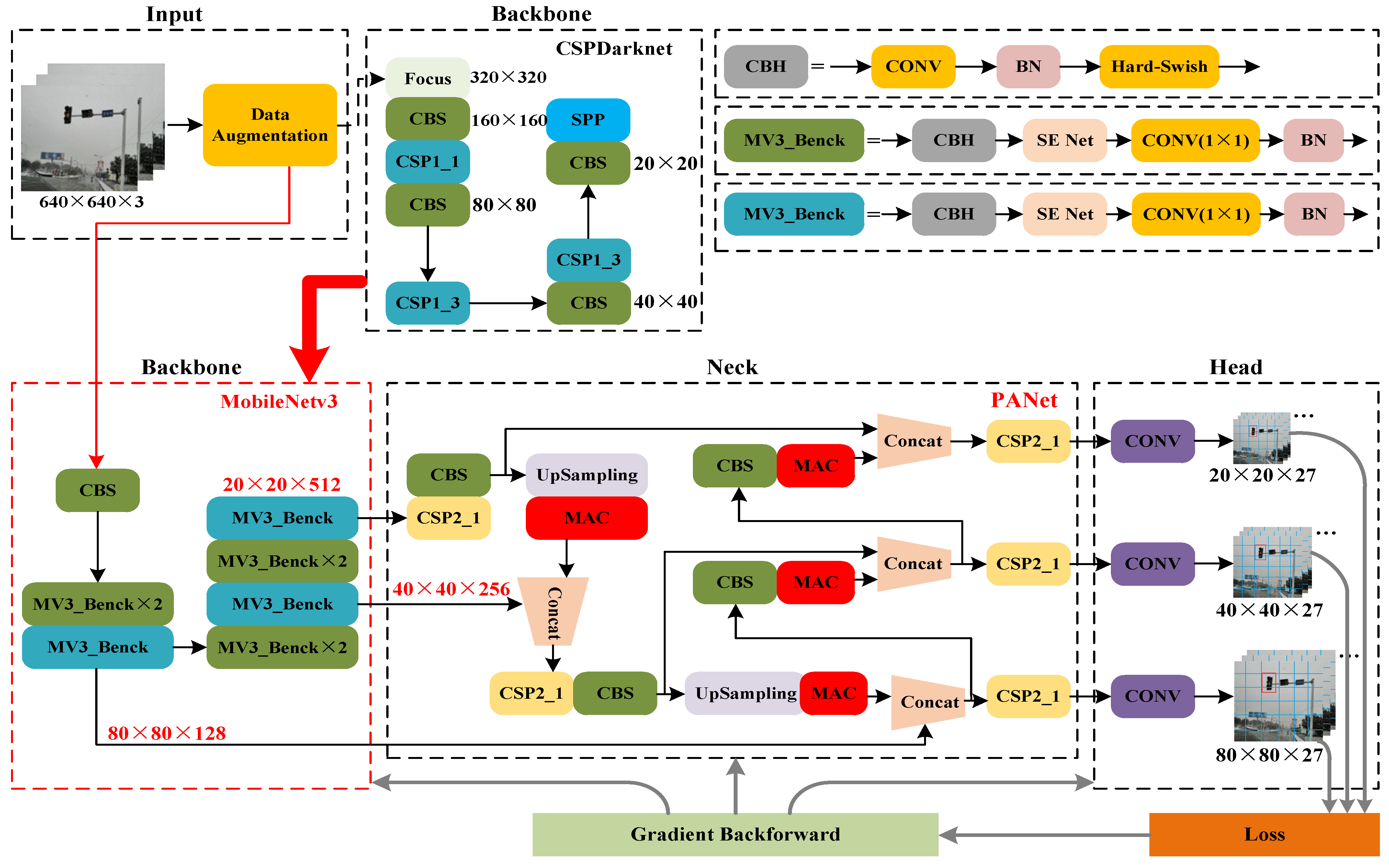

2.1. MCA-YOLOv5-ACON Model

2.2. MobileNetv3

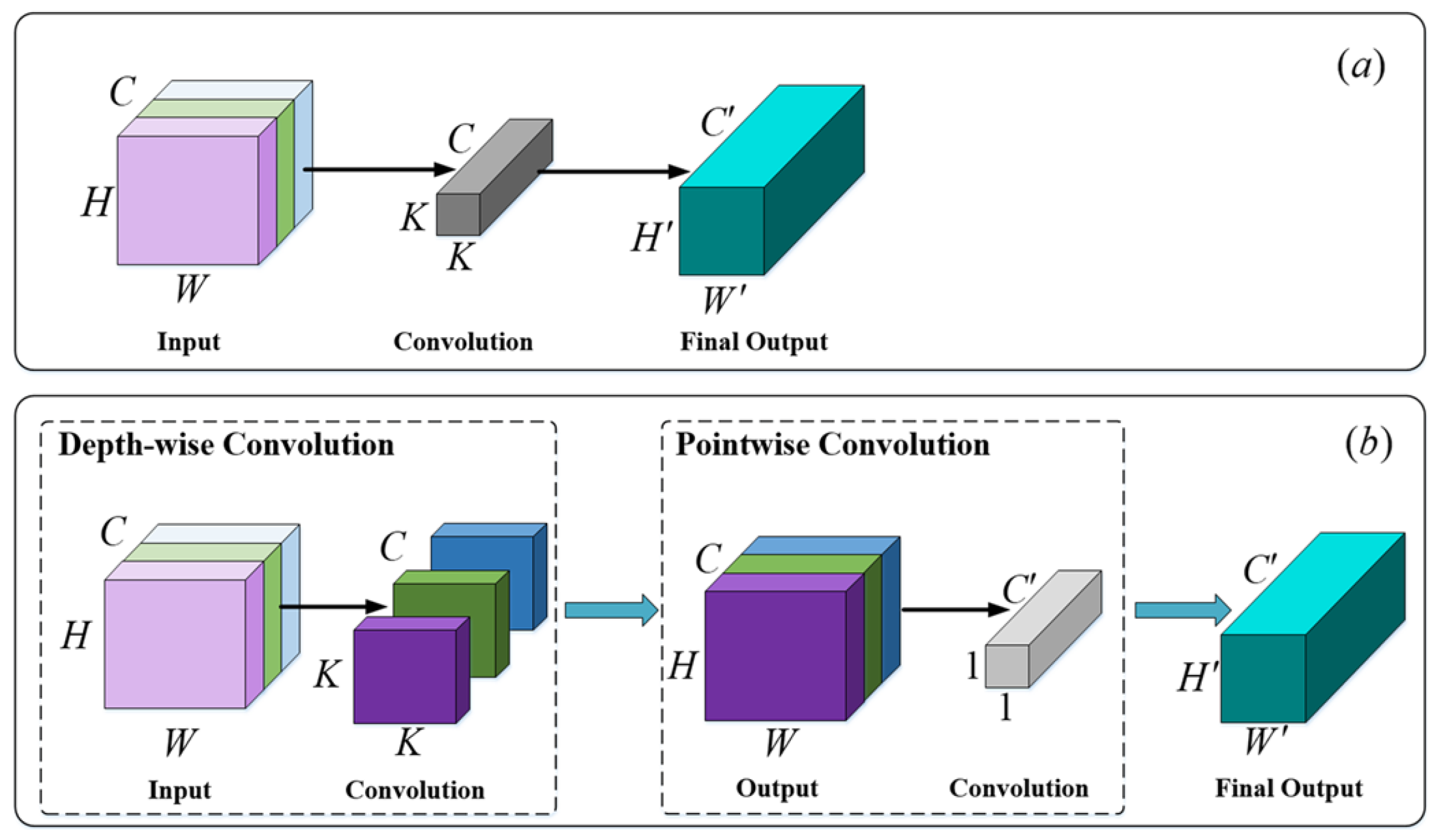

2.3. Depth-Wise Separable Convolution

2.4. Network Structure

2.5. Signal Light Fault Determination Logic

3. Experiment and Analysis

3.1. Dataset Production and Pre-Processing

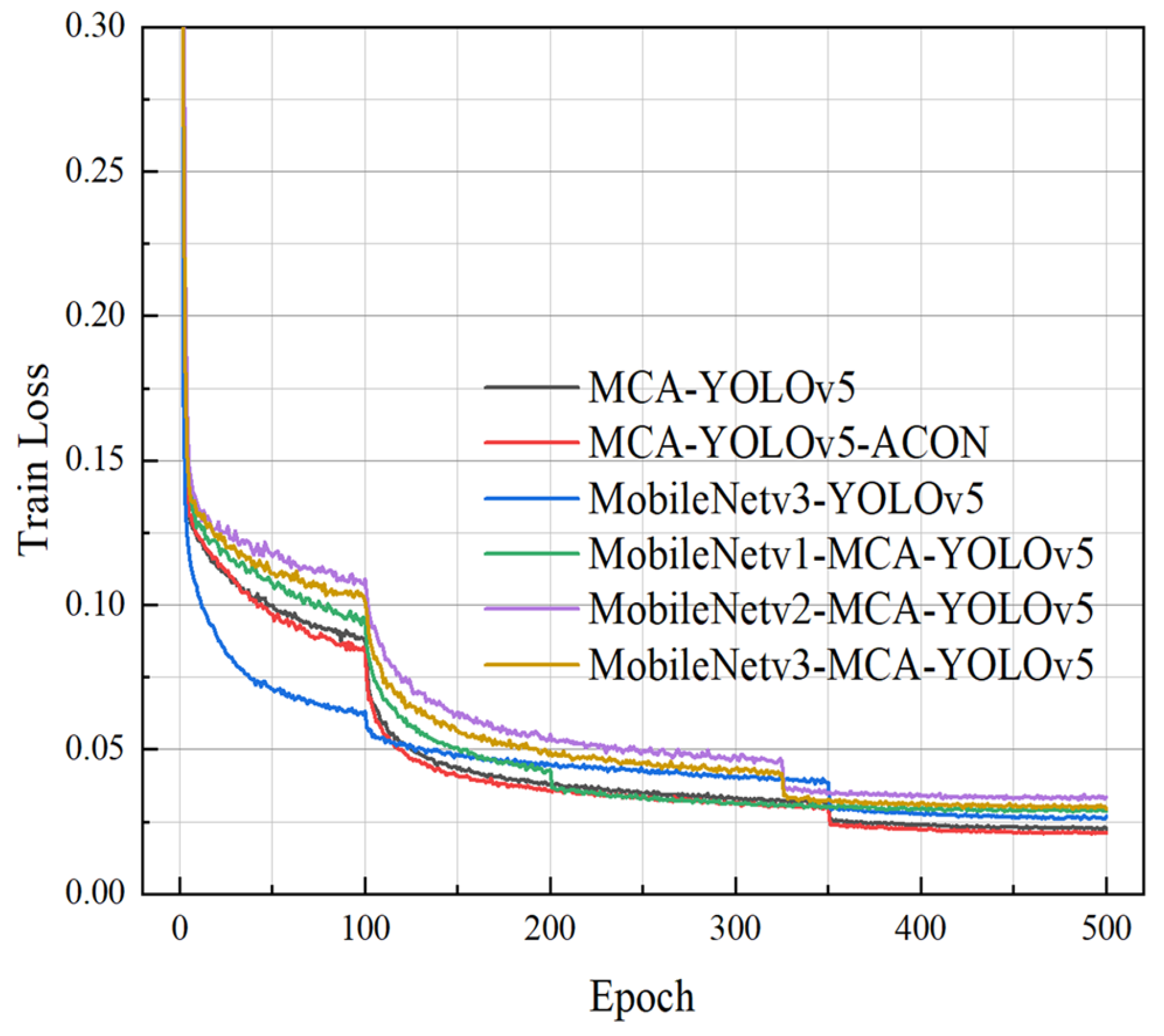

3.2. Model Training and Training Settings

3.3. Evaluation Indicators

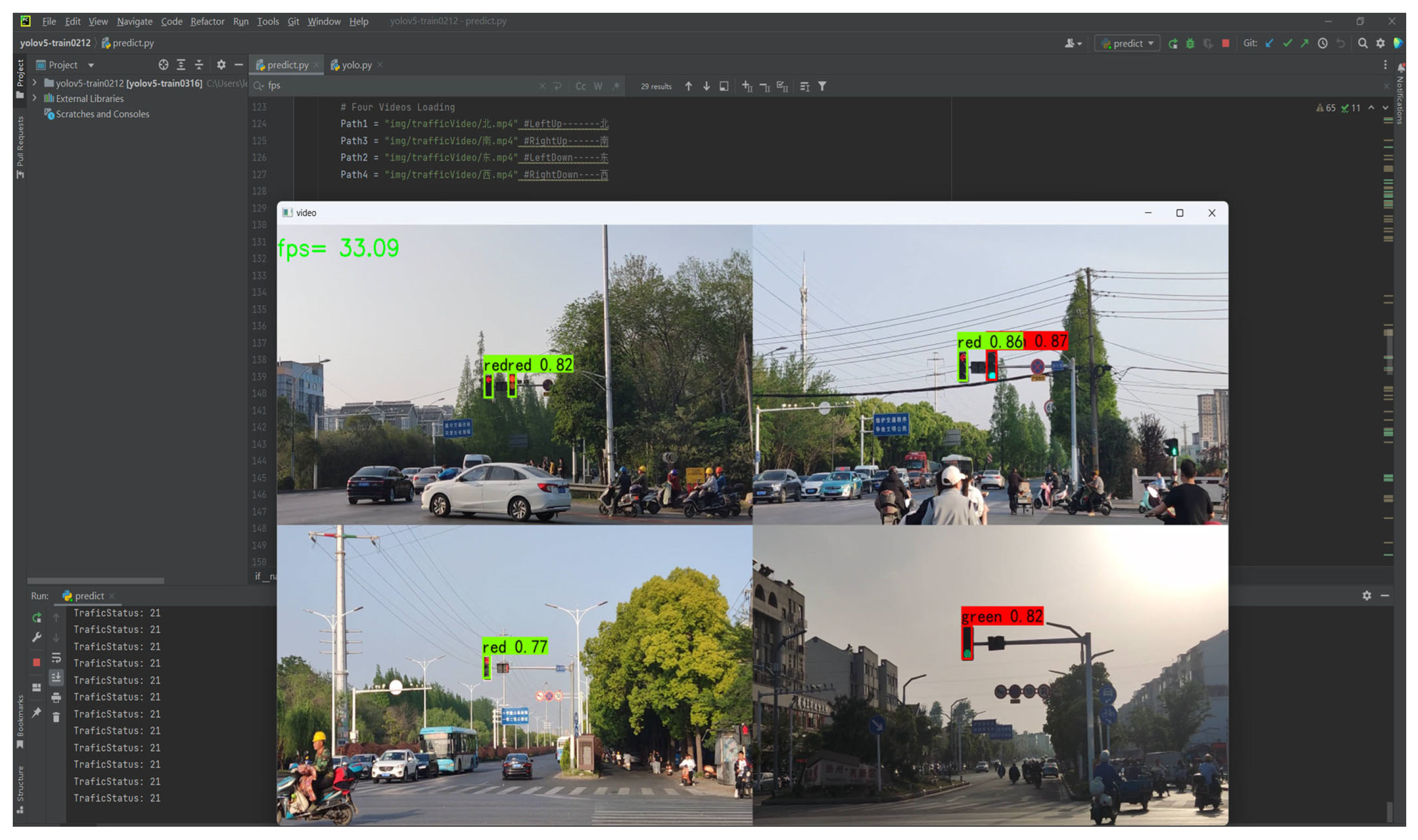

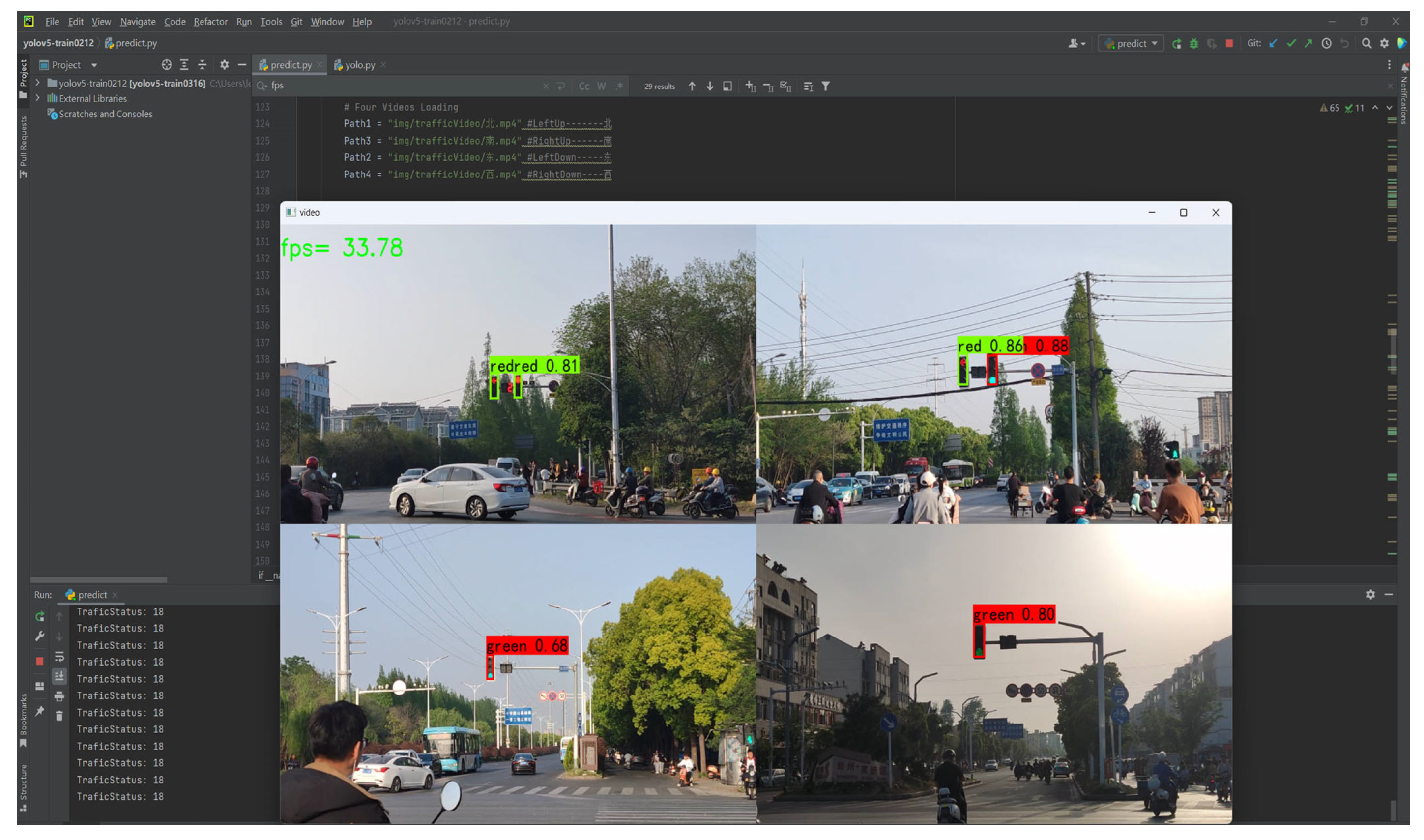

3.4. Experimental Results and Analysis

3.5. Signal Light Fault Detection

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Model | Parameters |
|---|---|
| MCA-YOLOv5-ACON | 48,812,541 |
| MobileNetv1-MCA-YOLOv5 | 10,235,163 |
| MobileNetv2-MCA-YOLOv5 | 8,708,883 |
| MobileNetv3-MCA-YOLOv5 | 9,455,421 |
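
To make the scale of the reduction concrete, the following minimal sketch computes the share of parameters removed relative to MCA-YOLOv5-ACON, using only the counts in the table. It also includes the standard weight-count formulas for an ordinary convolution and a depth-wise separable one; the 3 x 3, 256-channel layer shape in the demo is hypothetical and is not taken from the paper's network.

```python
# Parameter counts copied from the table above.
PARAMS = {
    "MCA-YOLOv5-ACON": 48_812_541,
    "MobileNetv1-MCA-YOLOv5": 10_235_163,
    "MobileNetv2-MCA-YOLOv5": 8_708_883,
    "MobileNetv3-MCA-YOLOv5": 9_455_421,
}


def reduction_percent(model: str, baseline: str = "MCA-YOLOv5-ACON") -> float:
    """Percentage of parameters removed relative to the baseline model."""
    return 100.0 * (1.0 - PARAMS[model] / PARAMS[baseline])


def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k depth-wise conv plus a 1 x 1 point-wise conv (bias ignored)."""
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    for name in PARAMS:
        print(f"{name}: {reduction_percent(name):.1f}% fewer parameters")
    # Hypothetical layer shape, chosen only for illustration.
    print(standard_conv_params(3, 256, 256), separable_conv_params(3, 256, 256))
```

By this calculation the MobileNetv3 variant keeps roughly one fifth of the baseline's parameters.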

| No. | Fault Type | Fault Description (T: Threshold Time) | Fault Name |
|---|---|---|---|
| 1 | Black fault | No red light during the signal cycle | red black |
| 2 | Black fault | No green light during the signal cycle | green black |
| 3 | Black fault | No yellow light during the signal cycle | yellow black |
| 4 | Black fault | No red/green/yellow lights during the signal cycle | all black |
| 5 | Conflict fault | Red lights on at the same time during the signal cycle | red conflict |
| 6 | Conflict fault | Green lights on at the same time during the signal cycle | green conflict |
| 7 | Conflict fault | Yellow lights on at the same time during the signal cycle | yellow conflict |
| 8 | Conflict fault | Red and yellow lights on at the same time during the signal cycle | red-yellow conflict |
| 9 | Conflict fault | Red and green lights on at the same time during the signal cycle | red-green conflict |
| 10 | Conflict fault | Yellow and green lights on at the same time during the signal cycle | yellow-green conflict |
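
One possible reading of the conflict rows can be sketched as follows. The fault names come from the table; everything else is an assumption made for illustration: that each frame yields a list of lit colors for one signal group, that a single-color conflict means the same color is detected more than once, and that the threshold time T is expressed as a minimum number of consecutive frames (`min_frames`, a hypothetical parameter).

```python
from collections import Counter
from itertools import groupby
from typing import Iterable, List, Optional, Sequence

# Two-color conflict names copied from the fault table.
PAIR_CONFLICTS = {
    frozenset({"red", "yellow"}): "red-yellow conflict",
    frozenset({"red", "green"}): "red-green conflict",
    frozenset({"yellow", "green"}): "yellow-green conflict",
}


def conflict_fault(lit: Iterable[str]) -> Optional[str]:
    """Classify one frame's lit colors; None means no conflict listed in the table."""
    counts = Counter(lit)
    colors = frozenset(counts)
    if len(colors) == 1:
        (color,) = colors
        # Assumption: the same color detected twice is a same-color conflict.
        return f"{color} conflict" if counts[color] > 1 else None
    return PAIR_CONFLICTS.get(colors)


def persistent_faults(per_frame: Sequence[Optional[str]], min_frames: int) -> List[str]:
    """Fault names that hold for at least min_frames consecutive frames."""
    found: List[str] = []
    for name, run in groupby(per_frame):
        if name is not None and sum(1 for _ in run) >= min_frames and name not in found:
            found.append(name)
    return found


if __name__ == "__main__":
    frames = [["red"], ["red", "green"], ["red", "green"], ["red", "green"], ["green"]]
    print(persistent_faults([conflict_fault(f) for f in frames], min_frames=3))
```

Requiring a run of consecutive frames is one simple way to keep a single misdetected frame from raising a fault; the paper's actual thresholding may differ.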

| No. | Array Value | Fault Description |
|---|---|---|
| 1 | (G = 1, R = 0, Y = 1) | red black |
| 2 | (G = 0, R = 1, Y = 1) | green black |
| 3 | (G = 1, R = 1, Y = 0) | yellow black |
| 4 | (G = 0, R = 0, Y = 1) | red-green black |
| 5 | (G = 0, R = 1, Y = 0) | yellow-green black |
| 6 | (G = 1, R = 0, Y = 0) | red-yellow black |
| 7 | (G = 0, R = 0, Y = 0) | all black |
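
The array table reads naturally as a lookup from a (G, R, Y) triple to a fault name. The sketch below assumes that a flag of 1 means the color was detected at least once during the signal cycle, and that detections arrive as lowercase label strings; the helper names `cycle_flags` and `black_fault` are hypothetical.

```python
from typing import Iterable, Optional, Tuple

# (G, R, Y) -> fault name, copied from the array table.
BLACK_FAULTS = {
    (1, 0, 1): "red black",
    (0, 1, 1): "green black",
    (1, 1, 0): "yellow black",
    (0, 0, 1): "red-green black",
    (0, 1, 0): "yellow-green black",
    (1, 0, 0): "red-yellow black",
    (0, 0, 0): "all black",
}


def cycle_flags(labels: Iterable[str]) -> Tuple[int, int, int]:
    """Collapse one cycle's detected labels into (G, R, Y) presence flags."""
    seen = set(labels)
    return (int("green" in seen), int("red" in seen), int("yellow" in seen))


def black_fault(flags: Tuple[int, int, int]) -> Optional[str]:
    """Return the black-fault name, or None when all three colors were seen."""
    return BLACK_FAULTS.get(flags)


if __name__ == "__main__":
    # Hypothetical detections for one cycle: red never appears.
    print(black_fault(cycle_flags(["green", "yellow", "green", "black"])))
```

The only triple absent from the table, (1, 1, 1), is treated here as a normal cycle.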

| Signal Color | Number |
|---|---|
| Green | 1829 |
| Red | 2051 |
| Yellow | 1852 |
| Black | 1067 |

| Parameter | Set Value |
|---|---|
| Mosaic | True |
| Mosaic_prob | 0.45 |
| Mixup | True |
| Mixup_prob | 0.50 |
| Train Size | 2487 |
| Val Size | 276 |
| Test Size | 307 |
| Freeze Batch Size | 8 |
| Unfreeze Batch Size | 4 |
| Freeze Epoch | 100 |
| Unfreeze Epoch | 500 |
| Max Learning Rate | 0.012 |
| Min Learning Rate | 0.00012 |
| Momentum | 0.955 |
| Weight Decay | 0.0005 |
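
For reference, the settings above can be gathered into a single configuration object. This is only a sketch: the class and field names are hypothetical, the values are copied from the table, and the helper simply derives the dataset split fractions implied by the three size entries.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainingSettings:
    """Values copied from the settings table; field names are illustrative."""
    mosaic: bool = True
    mosaic_prob: float = 0.45
    mixup: bool = True
    mixup_prob: float = 0.50
    train_size: int = 2487
    val_size: int = 276
    test_size: int = 307
    freeze_batch_size: int = 8
    unfreeze_batch_size: int = 4
    freeze_epoch: int = 100
    unfreeze_epoch: int = 500
    max_lr: float = 0.012
    min_lr: float = 0.00012
    momentum: float = 0.955
    weight_decay: float = 0.0005

    @property
    def total_images(self) -> int:
        return self.train_size + self.val_size + self.test_size

    def split_fractions(self) -> Tuple[float, float, float]:
        """Train, validation, and test shares of the whole dataset."""
        n = self.total_images
        return (self.train_size / n, self.val_size / n, self.test_size / n)


if __name__ == "__main__":
    s = TrainingSettings()
    print(s.total_images, [round(f, 3) for f in s.split_fractions()])
```

With these values the three subsets total 3070 images, split roughly 81% / 9% / 10%, and the minimum learning rate is 1% of the maximum.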

| Model | mAP (%) | Precision (%) | Recall (%) | (%) | Size (MB) |
|---|---|---|---|---|---|
| MCA-YOLOv5 | 96.52 | 98.96 | 94.19 | 96.00 | 179.54 |
| MCA-YOLOv5-ACON | 96.97 | 98.15 | 94.44 | 96.25 | 186.19 |
| MobileNetv3-YOLOv5 | 90.04 | 98.68 | 81.12 | 89.25 | 35.12 |
| MobileNetv1-MCA-YOLOv5 | 93.25 | 97.12 | 87.25 | 92.00 | 39.04 |
| MobileNetv2-MCA-YOLOv5 | 90.03 | 97.88 | 83.50 | 90.00 | 33.23 |
| MobileNetv3-MCA-YOLOv5 | 93.57 | 98.53 | 86.86 | 92.25 | 36.06 |
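
The fourth percentage column in the results table carries no header in the available text. As a plausibility check only, the sketch below computes the harmonic mean of each row's precision and recall (the usual F1 definition) and compares it with that column. That the column is an F1-type score is an assumption, not something the table states.

```python
from typing import Dict, Tuple

# (precision %, recall %, unlabeled fourth column %) copied from the results table.
ROWS: Dict[str, Tuple[float, float, float]] = {
    "MCA-YOLOv5": (98.96, 94.19, 96.00),
    "MCA-YOLOv5-ACON": (98.15, 94.44, 96.25),
    "MobileNetv3-YOLOv5": (98.68, 81.12, 89.25),
    "MobileNetv1-MCA-YOLOv5": (97.12, 87.25, 92.00),
    "MobileNetv2-MCA-YOLOv5": (97.88, 83.50, 90.00),
    "MobileNetv3-MCA-YOLOv5": (98.53, 86.86, 92.25),
}


def f1_percent(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    return 2.0 * precision * recall / (precision + recall)


def max_gap() -> float:
    """Largest absolute difference between computed F1 and the listed column."""
    return max(abs(f1_percent(p, r) - listed) for p, r, listed in ROWS.values())


if __name__ == "__main__":
    for name, (p, r, listed) in ROWS.items():
        print(f"{name}: computed {f1_percent(p, r):.2f}, listed {listed:.2f}")
    print(f"largest gap: {max_gap():.2f} points")
```

The computed values stay within about half a percentage point of the listed ones. That is consistent with an F1-type score, for instance one averaged per class, but it does not confirm the column's identity.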

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, P.; Zhang, Z. A Lightweight Traffic Signal Video Stream Detection Model Based on Depth-Wise Separable Convolution. Electronics 2025, 14, 4396. https://doi.org/10.3390/electronics14224396
