Abstract

Three-dimensional (3D) object detection constitutes a fundamental task in the field of environmental perception. While LiDAR provides high-precision 3D geometric data, its performance significantly degrades under adverse weather conditions like dense fog and heavy snow, where point cloud quality deteriorates. To address this challenge, WCGNet is proposed as a robust 3D detection framework that enhances feature representation against weather corruption. The framework introduces two key components: a Weather Codebook module and a Weather-Aware Gating Fusion module. The Weather Codebook, trained on paired clear and adverse weather scenes, learns to store clear-scene reference features, providing structural guidance for foggy scenarios. The Weather-Aware Gating Fusion module then integrates the degraded features with the codebook’s reference features through a spatial attention mechanism, a multi-head attention network, a gating mechanism, and a fusion module to dynamically recalibrate and combine features, thereby effectively restoring weather-robust representations. Additionally, a foggy point cloud dataset, nuScenes-fog, is constructed based on the nuScenes dataset. Systematic evaluations are conducted on nuScenes, nuScenes-fog, and the STF multi-weather dataset. Experimental results indicate that the proposed framework significantly enhances detection performance and generalization capability under challenging weather conditions, demonstrating strong adaptability across different weather scenarios.

1. Introduction

3D object detection, as a core task in computer vision and intelligent perception, aims to accurately identify and localize objects from three-dimensional spatial data. It is widely applied in various domains, including autonomous driving [1], robotic navigation [2,3], and unmanned aerial vehicle (UAV) perception [4]. This task plays a crucial role in enhancing a system’s understanding of its environment and its spatial perception capabilities. Among the available sensing modalities, LiDAR (Light Detection and Ranging) has become one of the most commonly used sensors in 3D object detection due to its advantages in high precision, high resolution, and robustness to lighting variations.

However, LiDAR is highly sensitive to adverse weather. Atmospheric particles such as rain, fog, and snow cause reflection, scattering, and absorption of laser signals, leading to weakened intensity, false points, and degraded point cloud structure [5]. In particular, fog induces severe degradation due to numerous tiny droplets that trigger Mie scattering and energy absorption, resulting in false echoes, shortened detection range, and distorted object geometry [6].

To address the aforementioned weather-induced degradation of LiDAR perception, several studies [7,8,9,10,11] have conducted real LiDAR tests to improve the quality of point clouds collected by sensors, while others [12,13,14,15] focus on denoising and enhancement of adverse-weather scenes in existing datasets to strengthen feature representation.

In this paper, we focus on enhancing the neural network’s feature learning capability rather than modifying the physical sensing process. Specifically, we adopt the fog simulation method from [15] to construct foggy scenes corresponding to clear scenes, which are then fed into our proposed framework, WCGNet. Since the requirement for feature enhancement differs under various weather conditions—moderate in clear scenes but substantial in foggy ones—this work addresses two core challenges: (1) how to guide the network to reconstruct clear-scene reference features under foggy conditions, and (2) how to adaptively regulate the degree of feature enhancement to meet perception demands across diverse weather scenarios.

To address the varying requirements of feature enhancement under different weather conditions, the Weather Codebook module (WCM) is designed to generate clear-scene reference features. Intuitively, the Weather Codebook can be thought of as a “memory bank” that stores clear-scene reference features, which the network can refer to when processing degraded or foggy inputs. During training, the network is fed with paired clear and foggy scenes. In addition to standard detection losses [16,17], a commitment loss [18] is introduced to prevent codebook collapse. Furthermore, we propose the clear-reference alignment loss and the weather-invariant guiding loss to facilitate the learning and memorization of clear-scene reference features. During inference, the parameters of this module are frozen, allowing it to generate corresponding weather reference features regardless of whether the input is from a clear or foggy scene.

To further enhance the model’s adaptability across diverse weather conditions, we propose the Weather-Aware Gating Fusion (WAG) module, which enables adaptive regulation of feature enhancement intensity. Specifically, the WAG module comprises three submodules: the spatial attention module (SPA) [19], which captures spatial feature dependencies and provides more reliable comparisons for the subsequent gating process; the gating mechanism (Gate), which dynamically suppresses unreliable channel features under adverse weather conditions; and the feature fusion operation (Fusion), which integrates the original BEV features with the clear-scene reference features generated by the Weather Codebook. During the training phase, the paired reference features reconstructed from the WCM are fused via a multi-head cross-attention (MCA) mechanism [20]. Simultaneously, the clear features are processed through SPA and Gate to adaptively emphasize the most informative components, producing features with clarity-aware weights. Finally, the Fusion module integrates these representations to generate feature representations that are resilient to weather-induced degradation. Overall, the WCM stores clear-scene reference features, and the WAG module adaptively recalibrates and fuses features, which together improve the model’s resilience across diverse and challenging weather conditions.

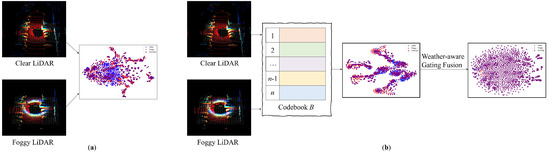

For the purpose of simulating the impact of adverse weather on LiDAR point cloud, a foggy dataset named nuScenes-fog is constructed based on clear scenes from the nuScenes [21] dataset, using established fog simulation methods [15]. As shown in Figure 1, we visualize the BEV features under clear and foggy conditions using t-distributed Stochastic Neighbor Embedding (t-SNE), which maps high-dimensional features into a two-dimensional space while preserving local structure and similarity. In the visualization, (a) corresponds to features obtained by the baseline method, whereas (b) shows features after incorporating the Weather Codebook module and the Weather-Aware Gating Fusion module. It is evident that, in (b), features from clear and foggy scenes exhibit a higher degree of overlap, demonstrating that the proposed modules can effectively align foggy-scene features with clear-scene reference features, enhance feature representations under foggy conditions, and mitigate the impact of fog on the scene. Experimental results on the nuScenes [21] and nuScenes-fog datasets demonstrate that the proposed method achieves competitive performance. Although primarily designed for foggy weather, evaluations on the STF [22] multi-weather dataset further confirm its applicability to a broader range of adverse weather conditions.

Figure 1.

t-SNE visualization results (red: Clear, blue: Foggy, purple: regions where Clear and Foggy features are close in the embedding space). (a) Visualization of features obtained by the baseline method. (b) Visualization of features after incorporating the Weather Codebook module and the Weather-Aware Gating Fusion module.

The main contributions of this work are summarized as follows:

- A robust 3D object detection network based on the Weather Codebook module and the Weather-Aware Gating Fusion module is proposed. Without relying on additional sensors, the method effectively mitigates the degradation of LiDAR point cloud quality under foggy conditions, significantly enhancing the stability and generalization capability of the detection system in adverse weather.

- A Weather Codebook module is designed to jointly train on paired clear and foggy scenes, enabling the network to learn, store, and generalize clear-scene reference features across diverse weather conditions. This mechanism facilitates the recall and reconstruction of clear-scene representations and enhances the network’s robustness and representational capacity when processing foggy inputs.

- A Weather-Aware Gating Fusion module is introduced, which adaptively regulates the degree of feature enhancement based on features with clarity-aware weights predicted by a spatial attention module and a gating module. Additionally, a multi-head cross-attention mechanism is incorporated to effectively fuse fog-aware reference features with clear-scene information, producing feature representations that are robust to weather-induced variations.

- A synthetic foggy point cloud dataset, nuScenes-fog, is constructed based on the nuScenes dataset. Extensive experiments are conducted on both nuScenes and nuScenes-fog, demonstrating the proposed method’s superior robustness and detection performance under foggy conditions. Furthermore, evaluation on the STF multi-weather dataset confirms the model’s strong adaptability across diverse weather scenarios.

2. Related Works

2.1. Real LiDAR Tests Under Adverse Weather

To obtain higher-quality point clouds in real LiDAR tests, several studies have explored sensor- and physical-level improvements. Kutila et al. [7] employed a 1550-nm pre-prototype LiDAR to mitigate visibility reduction in adverse weather. Wichmann et al. [8] investigated atmospheric extinction modeling to better characterize the impact of fog and other particles on laser propagation. Vriesman et al. [9] designed signal processing strategies to alleviate multi-sensor degradation, while Cassanelli et al. [10] proposed a fog chamber to simulate fog-induced degradation, thereby reducing experimental cost. Sezgin et al. [11] developed a degradation detection method for adaptive correction. In this paper, we train and evaluate the proposed network using the nuScenes [21] and STF [22] datasets, and a foggy dataset named nuScenes-fog is constructed based on clear scenes from the nuScenes dataset, using established fog simulation methods [15].

2.2. LiDAR-Based 3D Object Detection

LiDAR-based 3D object detection methods can be broadly categorized into two types: point-level methods and voxel-level methods. Point-level methods directly process raw point clouds, preserving geometric precision, and extract features using point-based networks or graph networks. For example, PointRCNN [23] proposes a two-stage framework, STD [24] introduces a sparse-to-dense strategy, and 3DSSD [25] further develops feature-based keypoint sampling combined with a single-stage detection approach. Voxel-level methods, on the other hand, partition the point cloud into regular grids and leverage CNN or Transformer architectures to extract features and regress bounding boxes. To reduce computational complexity, SECOND [26] employs sparse convolution, PointPillars [27] introduces a pillar-based representation to enable efficient 2D convolution, and CenterPoint [16] further simplifies the pipeline by performing anchor-free detection based on BEV (Bird’s Eye View) features. Building upon these advances, this work adopts CenterPoint [16] and TransFusion-L [17] as baseline frameworks to further investigate robustness in 3D object detection.

2.3. Point Cloud Processing Methods Under Adverse Weather

Under adverse weather, point cloud processing methods mainly fall into two categories: denoising and feature enhancement. Distance- and intensity-based denoising [12,13,28,29] improve quality with trade-offs between generality and efficiency, while fusion- and learning-based approaches [30,31,32,33] enhance robustness but often demand complex design and abundant data. For feature enhancement, several works [14,34,35,36] simulate adverse weather to generate augmented features. Building upon these advances, this work proposes an adaptive enhancement framework based on the Weather Codebook Module (WCM) and Weather-Aware Gating Fusion (WAG). By introducing a weather-aware mechanism to regulate enhancement intensity, our method improves robustness under foggy conditions while maintaining accuracy and generalization in clear scenes.

2.4. Feature Quantization

Feature quantization is a modeling paradigm that maps continuous feature representations into a discrete space, aiming to reduce redundancy, enhance generalization, and facilitate the learning of more discriminative semantic representations. Early methods such as the Variational Autoencoder (VAE) [37] reconstruct input data by learning the distribution of latent variables, yet their reliance on continuous latent spaces limits semantic controllability. To address this, the Vector Quantized VAE (VQ-VAE) [18] introduces a discrete codebook mechanism, significantly improving the structural and semantic clarity of learned features, and demonstrating superior performance in image and speech generation tasks. Subsequent variants, including VQ-VAE-2 [38] and DVAE [39], further enhance representational capacity through hierarchical structures, dynamic codebook updates, and attention mechanisms. LiDAR-PTQ [40] combines sparsity-aware initialization, task-guided loss, and adaptive rounding to reduce quantization errors, achieving INT8 performance close to FP32 on models like CenterPoint [16]. Inspired by these advancements, this work proposes the Weather Codebook module, which learns, stores, and generalizes clear-scene reference features across diverse weather conditions. Unlike conventional VQ-VAE, our approach specifically targets BEV features extracted from point clouds and introduces the clear-reference alignment loss and the weather-invariant guiding loss to ensure that each codebook slot accurately encodes clear-scene reference features while producing consistent reference features under adverse conditions. This design enables the model to generate robust reference features and supports resilient 3D perception in challenging environmental scenarios.

3. Method

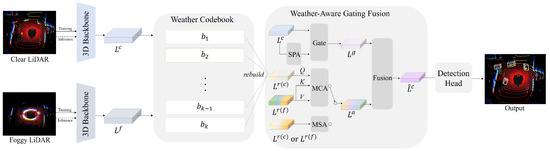

Figure 2 illustrates the overview of the WCGNet training and inference pipeline. In the training stage, the network takes paired inputs consisting of clear and foggy LiDAR point cloud scenes. These are processed by a shared 3D backbone to extract the corresponding clear-scene and foggy-scene features. Meanwhile, the Weather Codebook learns to generate the corresponding clear and foggy reference features from this feature pair. These reference features are also fed into the WAG module. Within WAG, the clear features are processed through a spatial attention (SPA) module and a gating module to adaptively emphasize the most informative components, generating features with clarity-aware weights. Simultaneously, the clear and foggy reference features are aligned and guided via a multi-head cross-attention mechanism (MCA) to capture the correspondence between clear and foggy scene features, resulting in an aligned feature. Finally, the gated feature and the aligned feature are fused in the Fusion Module to generate a BEV representation that is robust to weather variations. This final feature is subsequently fed into the detection head to perform the 3D object detection task. In the inference stage, WCGNet can process inputs from arbitrary weather conditions, where the Weather Codebook generates the corresponding clear-scene reference feature to facilitate subsequent feature fusion. During inference, the MCA in the WAG block is replaced with a multi-head self-attention mechanism (MSA) to accommodate the single-input setting, while the remaining components remain identical to those in the training process.

Figure 2.

The architecture of WCGNet, which consists of a feature extractor, the Weather Codebook module (WCM), the weather-aware gating (WAG) module, and the detection head. During training, the network takes paired clear and foggy point clouds as input. The extracted features are processed by the WAG module with multi-head cross-attention (MCA) and subsequently passed to the detection head to generate predictions. In the inference phase, the input can originate from any weather condition, and the WAG module, equipped with multi-head self-attention (MSA), produces the final feature representation.

This work addresses two key issues: (1) how to guide the network to reconstruct clear-scene features under foggy conditions, and (2) how to adaptively regulate the degree of feature enhancement to meet the varying perception demands of different weather scenarios. Details are given in the following subsections.

3.1. The Weather Codebook Module

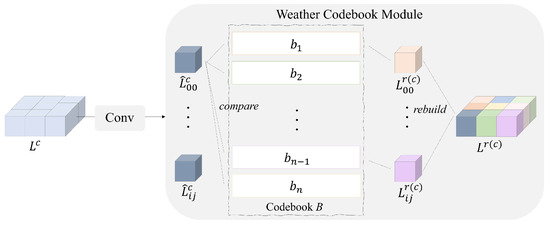

Figure 3 illustrates the process of recording and reconstructing clear-scene reference features using the codebook. Codebook B consists of N learnable slots, each a vector of dimension c, where c represents the feature dimension of each slot. The clear feature is first processed by a convolutional layer to generate the BEV feature map of the clear scene, of size h × w × c, where h and w denote the height and width of the BEV feature map, respectively. In the BEV view, this map can be flattened into a set of h × w spatial feature vectors. Then, through an element-wise quantization process, each spatial feature is replaced by its most similar slot in B, and the clear-scene reference feature, which denotes the feature map composed of the selected codebook vectors, is obtained.

Figure 3.

The processing pipeline of the Weather Codebook. Taking the clear feature as an example, the input BEV features of the point cloud are divided into local blocks, each of which is compared with the learnable slots in Codebook B. The most similar slot is selected to reconstruct the corresponding block. Through this reconstruction process, the original BEV features are transformed into weather-aware reference features.
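The slot lookup described above can be illustrated with a small sketch. It assumes that "most similar" means smallest squared Euclidean distance, as in VQ-VAE [18]; the similarity measure actually used in WCGNet is not stated in this text, and the function name `quantize` and the toy values are hypothetical.

```python
def quantize(features, codebook):
    """Replace each feature vector with its nearest codebook slot.

    features: list of h*w vectors (each a list of c floats), the flattened BEV map.
    codebook: list of N slots (each a list of c floats).
    Returns (indices, reference), where reference[i] is a copy of the selected slot.
    Assumption: similarity is measured by squared Euclidean distance.
    """
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))

    indices = [min(range(len(codebook)), key=lambda n: sq_dist(z, codebook[n]))
               for z in features]
    reference = [list(codebook[n]) for n in indices]
    return indices, reference


# Toy example: N = 3 slots of dimension c = 2, and a 2 x 2 BEV map flattened to 4 vectors.
codebook = [[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]]
features = [[0.1, -0.2], [0.9, 1.2], [3.5, 0.4], [0.6, 0.6]]
indices, reference = quantize(features, codebook)  # indices == [0, 1, 2, 1]
```

Reshaping `reference` back to h × w gives the reference feature map composed of the selected codebook vectors.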

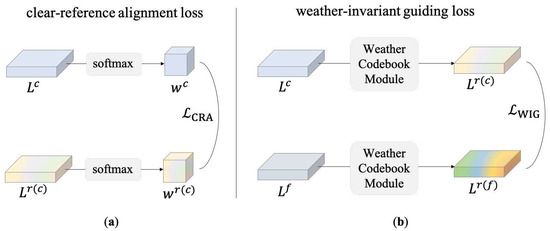

Figure 4 illustrates the design of two loss functions in the proposed module. To ensure that each slot in the Weather Codebook is trained to accurately encode and represent clear-scene reference features, and to ensure that each element in the feature vector corresponds to a probability distribution over channel-wise saliency, we introduce the Kullback–Leibler (KL) divergence as a loss function to compare the probability distributions of the average-pooled clear features and clear reference features. This loss is termed the clear-reference alignment loss.

Figure 4.

(a) The Clear-Reference Alignment Loss, which minimizes the discrepancy between the weight distribution of the clear features and their corresponding weather reference features, thereby enhancing alignment. (b) The Weather-Invariant Guiding Loss, which guides the Weather Codebook to reconstruct the clear features corresponding to the foggy input, aiming to improve the model’s generalization under adverse weather conditions.
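As an illustration of the clear-reference alignment loss, the sketch below average-pools each feature map over its spatial dimensions, converts the pooled channel vector into a probability distribution, and compares the two distributions with the KL divergence. The use of a softmax for normalization and the direction KL(clear ‖ reference) are assumptions, as are all function names; the exact formula is not reproduced in this text.

```python
import math

def channel_distribution(feature_map):
    """Average-pool a (c, h, w) nested list over h and w, then softmax over channels."""
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    peak = max(pooled)  # subtract the max for numerical stability
    exps = [math.exp(p - peak) for p in pooled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def alignment_loss(clear_feature, reference_feature):
    """KL divergence between channel distributions (assumed direction: clear || reference)."""
    p = channel_distribution(clear_feature)
    q = channel_distribution(reference_feature)
    return kl_divergence(p, q)

# Toy (c=2, h=2, w=2) feature maps with made-up values.
clear = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
other = [[[0.0, 0.0], [0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]]
loss_same = alignment_loss(clear, clear)   # identical inputs give zero loss
loss_diff = alignment_loss(clear, other)   # mismatched channel saliency gives a positive loss
```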

In addition, to ensure the production of consistent clear-scene reference features for the same scene under adverse conditions and to guide the Weather Codebook to ignore weather-induced interference while preserving the clarity and integrity of the learned representations, we introduce the weather-invariant guiding loss. This loss encourages the reconstruction of clear-scene reference features from foggy inputs through the codebook.

To ensure stable training of the Weather Codebook and prevent codebook collapse, we introduce a commitment loss following VQ-VAE [18]. In this loss, b denotes the selected codebook vectors, and a stop-gradient operator is applied as in VQ-VAE [18].
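For reference, the vector-quantization objective in the VQ-VAE paper [18] combines a codebook term and a commitment term. Written with z for an encoder feature (a symbol assumed here), b for its selected codebook vector, sg[·] for the stop-gradient operator, and β for the commitment weight, it reads as below. The exact form and weighting used in WCGNet are not given in this text and may differ.

```latex
\mathcal{L}_{\mathrm{VQ}}
  = \left\lVert \mathrm{sg}[z] - b \right\rVert_2^2
  + \beta \left\lVert z - \mathrm{sg}[b] \right\rVert_2^2
```

The first term moves the selected slot toward the encoder feature, and the second keeps the encoder feature close to the slot it selected.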

The final loss is composed of the detection loss [16,17] together with the clear-reference alignment loss, the weather-invariant guiding loss, and the commitment loss.

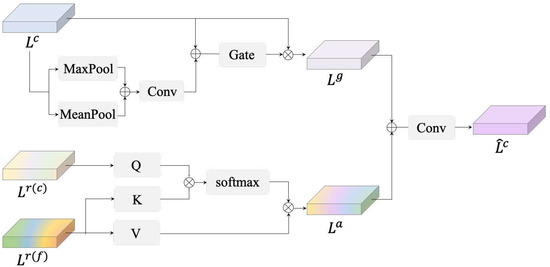

3.2. Weather-Aware Gating Fusion

To enable adaptive modulation of feature enhancement levels and meet perception demands under varying weather conditions, the proposed module takes the clear features, clear reference features, and foggy reference features as input and adopts a dual-branch structure, as illustrated in Figure 5. Specifically, the clear feature is processed through both max pooling and average pooling operations. The resulting feature maps are concatenated and passed through a convolutional layer to generate the clarity-weighted representation.

Figure 5.

The training pipeline of the Weather-Aware Gating Fusion module.

Subsequently, the clear feature and the clarity-weighted feature are concatenated and passed through a gate network. The gating factor can be viewed as an approximate indicator of the current weather clarity, adaptively controlling the contribution of the clear features based on scene conditions. The gate output is then applied by element-wise multiplication to produce the clarity-adaptive gated feature.
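The two steps above (spatial attention, then gating) can be sketched in a simplified form. This is only an illustration under several assumptions: the pooling is taken across channels as in CBAM-style spatial attention, the convolution is reduced to a 1 × 1 mix with two scalar weights, the gate is a sigmoid of a linear map, and the gate is multiplied with the original feature. None of these details, nor the function names, are confirmed by the text.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def spatial_attention(feature, w_max=1.0, w_avg=1.0, bias=0.0):
    """CBAM-style spatial attention on a (c, h, w) nested list.

    Channel-wise max and average pooling give two (h, w) maps; a 1x1
    convolution (two scalar weights, a simplification) mixes them, and a
    sigmoid turns the result into per-location weights.
    """
    c, h, w = len(feature), len(feature[0]), len(feature[0][0])
    weights = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            column = [feature[k][i][j] for k in range(c)]
            pooled_max = max(column)
            pooled_avg = sum(column) / c
            weights[i][j] = sigmoid(w_max * pooled_max + w_avg * pooled_avg + bias)
    # Clarity-weighted feature: every channel scaled by the spatial weights.
    return [[[feature[k][i][j] * weights[i][j] for j in range(w)]
             for i in range(h)] for k in range(c)]

def gated_feature(feature, weighted, w_f=1.0, w_s=1.0, bias=0.0):
    """Element-wise gate computed from the [feature, weighted] pair.

    Assumptions: the gate is a sigmoid of a linear map of the two inputs,
    and it multiplies the original feature.
    """
    c, h, w = len(feature), len(feature[0]), len(feature[0][0])
    return [[[sigmoid(w_f * feature[k][i][j] + w_s * weighted[k][i][j] + bias)
              * feature[k][i][j]
              for j in range(w)] for i in range(h)] for k in range(c)]

# Toy (c=2, h=1, w=2) feature with made-up values.
feature = [[[1.0, 0.0]], [[3.0, 0.0]]]
weighted = spatial_attention(feature)
gated = gated_feature(feature, weighted)
```

Because the gate lies in (0, 1), it can only attenuate a feature, which matches the description of the gate as a suppressor of unreliable responses.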

Meanwhile, during the training stage, the clear reference feature, which exhibits low noise and high semantic fidelity, is used as the query to more effectively guide the attention mechanism, while the foggy reference feature serves as both the key and value. During the inference stage, the query, key, and value are all derived from the reference features generated for the input scene. These are jointly processed through a multi-head attention mechanism, which retrieves the most relevant information from the foggy reference guided by the clear feature. This facilitates the enhancement of foggy perception using clear scene guidance.

In this attention, Q, K, and V are linearly mapped by three projection layers to calculate the attention matrix Attn, and the refined BEV feature is then obtained from the output layer.
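The switch between cross-attention in training and self-attention in inference can be illustrated with a minimal sketch. For brevity it uses a single head of scaled dot-product attention and omits the learned projection and output layers, so it is not the multi-head module itself; the function names and toy values are hypothetical.

```python
import math

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, key, value):
    """Single-head scaled dot-product attention over lists of token vectors."""
    d = len(query[0])
    out = []
    for q in query:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in key]
        weights = softmax(scores)
        out.append([sum(wt * v[i] for wt, v in zip(weights, value))
                    for i in range(len(value[0]))])
    return out

def align(clear_ref, foggy_ref=None):
    """Cross-attention when a foggy reference is given (training), else self-attention."""
    source = foggy_ref if foggy_ref is not None else clear_ref
    return attention(clear_ref, source, source)

# Two BEV locations with 2-dimensional features (made-up values).
clear_ref = [[1.0, 0.0], [0.0, 1.0]]
foggy_ref = [[2.0, 0.0], [0.0, 2.0]]
aligned_train = align(clear_ref, foggy_ref)  # query: clear reference; key/value: foggy reference
aligned_infer = align(clear_ref)             # query, key, and value from the same reference
```

Each output row is a convex combination of the value rows, so the query decides which locations of the key/value feature contribute most.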

Then, the aligned BEV feature is concatenated with the clarity-adaptive gated feature, and the combined representation is passed through a convolutional layer to produce the final weather-robust feature representation.

4. Experiments

In this section, we first compare our method with existing approaches on the nuScenes [21] and STF [22] datasets. We then evaluate detection performance under various weather conditions across both datasets. Subsequently, we conduct extensive ablation studies to validate the contribution of each key component in WCGNet. Finally, we provide qualitative visualizations of detection results under different weather scenarios, comparing our method with baseline approaches.

4.1. Dataset

nuScenes Dataset. The nuScenes [21] dataset is a large-scale autonomous driving benchmark designed for 3D object detection tasks. It is collected from real-world driving scenes and comprises 1000 driving scenes, partitioned into 700 for training, 150 for validation, and 150 for testing. Each scene contains approximately 40 annotated keyframes, captured at a frequency of 2 frames per second (FPS). The nuScenes dataset employs evaluation metrics such as mean Average Precision (mAP) and the nuScenes Detection Score (NDS), which integrates multiple true positive-related metrics to comprehensively assess detection performance across various object categories and environmental conditions.
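To make the NDS definition concrete, the sketch below follows the nuScenes detection benchmark formula, in which mAP is weighted by 5 and each of the five mean true-positive errors (mATE, mASE, mAOE, mAVE, mAAE) is clipped at 1 and converted into a score. The numbers in the example are illustrative only and are not results from this paper.

```python
def nds(m_ap, tp_errors):
    """nuScenes Detection Score from mAP and the five mean true-positive errors.

    m_ap: mean Average Precision in [0, 1].
    tp_errors: dict with keys mATE, mASE, mAOE, mAVE, mAAE (lower is better).
    """
    keys = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")
    # Each error is clipped at 1 so that its score stays in [0, 1].
    tp_scores = [1.0 - min(1.0, tp_errors[k]) for k in keys]
    return (5.0 * m_ap + sum(tp_scores)) / 10.0

# Illustrative values only (not taken from any table in this paper).
example = nds(0.6, {"mATE": 0.3, "mASE": 0.3, "mAOE": 1.2, "mAVE": 0.3, "mAAE": 0.3})
```

Half of the score comes from mAP and half from the quality of the matched boxes, so two detectors with equal mAP can still differ in NDS.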

In addition, to meet the requirement of paired scenes under varying weather conditions for training our proposed network, we construct the nuScenes-fog dataset by simulating corresponding foggy scenarios based on established LiDAR fog simulation techniques. Following the settings in [22], we uniformly sample a fog density parameter from the set [0, 0.005, 0.01, 0.02, 0.03, 0.06] for each training example, which approximately corresponds to a meteorological optical range (MOR) of [∞, 600, 300, 150, 100, 50] meters, respectively.
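The correspondence between the fog density values and MOR can be checked with a short calculation. The sketch assumes the fog density parameter is the attenuation coefficient α in m⁻¹ and uses the common definition MOR = ln(20)/α (the distance at which transmittance falls to 5%); the function name is hypothetical.

```python
import math

def mor_from_alpha(alpha):
    """Meteorological optical range (m) for attenuation coefficient alpha (1/m).

    Uses MOR = ln(20) / alpha, i.e. the distance at which transmittance
    drops to 5%. alpha = 0 means no fog, so the range is unbounded.
    """
    if alpha == 0:
        return math.inf
    return math.log(20.0) / alpha

alphas = [0, 0.005, 0.01, 0.02, 0.03, 0.06]
mors = [mor_from_alpha(a) for a in alphas]
# Rounded to the nearest 10 m: inf, 600, 300, 150, 100, 50
```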

STF Dataset. Furthermore, since this work primarily focuses on perception under adverse weather conditions, we also conduct experiments on the STF [22] dataset to evaluate performance across diverse climatic scenarios such as rain, snow, and fog. The STF dataset, collected over 10,000 km of driving in Northern Europe, includes not only data captured under mild weather conditions but also extensive data recorded during heavy snow, rain, and dense fog. Specifically, the STF dataset contains 3469 frames captured under clear conditions for training. In addition, the dataset provides 964 test scenes under light fog, 786 under dense fog, 2512 under light snowfall, 1404 under heavy snowfall, and 1847 under clear weather conditions [15,22,34]. Therefore, the results on this dataset enable us to comprehensively evaluate the effectiveness of our proposed network in real-world adverse weather scenarios.

4.2. Implementation Details

Training. We implement our proposed network using the open-source MMDetection3D (version 1.0.0rc6) framework based on PyTorch (version 1.11.0). For the nuScenes dataset, we adopt VoxelNet as the 3D backbone and employ TransFusion-L as the detection head. Regarding nuScenes-fog, we simulate fog conditions of equivalent severity for all 1000 scenes, and during training, we utilize 750 paired samples of clear and foggy scenes as inputs. For the STF dataset, we use several representative baselines, including SECOND [26], PointPillars [27], and CenterPoint [16].

Testing. Our network is designed to generalize to test scenes under arbitrary weather conditions. The Weather Codebook recalls weather-invariant reference features corresponding to the current scene, while the Weather-Aware Gating Fusion dynamically modulates the degree of feature enhancement. We compare the proposed network with several state-of-the-art methods on the nuScenes and nuScenes-fog datasets. Additionally, for the STF dataset, we conduct comparisons against existing weather simulation and deweathering methods across various weather conditions, including clear, light fog, dense fog, light snowfall, and heavy snowfall.

4.3. Quantitative Comparisons

nuScenes Results. WCGNet is evaluated against recent methods on the nuScenes test set. As shown in Table 1, our method exhibits strong overall competitiveness across a wide range of object categories. Notably, WCGNet achieves particularly promising results in detecting large-scale objects, including cars, trucks, and buses.

Table 1.

Comparison of recent works on the nuScenes test set. ‘C.V.’, ‘Ped.’, ‘Mot.’, ‘Byc.’, ‘T.C.’, and ‘Bar.’ are short for construction vehicle, pedestrian, motorcycle, bicycle, traffic_cone, and barrier, respectively. All values under these categories represent the detection accuracy for each class in %. Bold: the best performance, underline: the second best performance.

More importantly, as presented in Table 2, WCGNet exhibits higher robustness on the nuScenes-fog test set. In foggy conditions, most existing methods suffer from notable performance degradation, which limits their applicability in real-world scenarios. In contrast, WCGNet maintains stable 3D detection performance under both clear and foggy weather conditions. This robustness can be attributed to the proposed Weather Codebook module and Weather-Aware Gating Fusion module, which adaptively enhance feature representations across diverse environmental scenarios, making our approach more resilient to adverse weather than existing baselines.

Table 2.

A comparison of recent methods on the nuScenes and nuScenes-fog datasets in terms of mean Average Precision (mAP) and nuScenes Detection Score (NDS). For results on the nuScenes-fog dataset, the values in parentheses indicate the performance drop compared to the corresponding clear scenes. Bold: the best performance, underline: the second best performance.

STF Results. We compare WCGNet with several representative approaches, including the physical denoising method DROR [12], fog simulation methods [15], snow simulation methods [34], and the LiDAR Light Scattering Augmentation technique [14]. As shown in Table 3, we report the detection performance under different weather conditions using various baselines. Our method achieves competitive accuracy in clear, light fog, and dense fog scenarios, demonstrating its effectiveness in both normal and degraded visual conditions. Notably, WCGNet demonstrates relatively strong generalization and adaptability to unseen weather conditions, as reflected by its consistent performance under light and heavy snowfall. This can be attributed to the fact that the Weather Codebook learns largely weather-invariant features, which in turn allows it to provide clear-scene reference features even under challenging conditions. Consequently, this indicates the weather-transferable feature modeling capability of our framework, making it reasonably suitable for deployment in diverse and moderately challenging environmental conditions.

Table 3.

Comparison of Car 3D AP@0.7IoU (%) performance across different baseline methods on all STF [22] test splits, including clear, light fog, dense fog, light snowfall, and heavy snowfall conditions. Bold indicates the best result in each split.

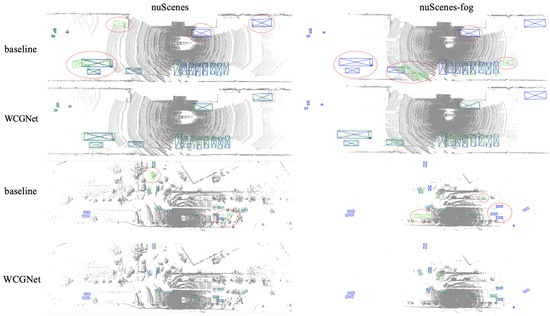

4.4. Visualization Results

As shown in Figure 6, we present a visual comparison between our proposed method and the baseline, TransFusion-L [17], on the nuScenes and nuScenes-fog datasets. It can be observed that the proposed Weather Codebook Module and Weather-Aware Gating Fusion Module effectively preserve detection accuracy under clear-scene conditions while significantly improving performance under foggy scenarios.

Figure 6.

Comparison of visualization results on the nuScenes and nuScenes-fog datasets. Ground truth bounding boxes are shown in blue, and predicted bounding boxes are shown in green. Particular attention should be paid to the circled regions, where the differences between methods under varying weather conditions are most evident.

4.5. Ablation Studies

To evaluate the effectiveness of each component in WCGNet, we conduct ablation experiments by progressively adding key modules to the baseline TransFusion-L. Specifically, we divide our network into four components: WCM, Gate, Multi-Head Attention (MA), which includes both MCA and MSA, and Fusion, where the Weather-Aware Gating Fusion module is composed of Gate, MA, and Fusion. The results, including mAP and mAP*, are presented in Table 4. (a) denotes the baseline without any weather adaptation modules; (b) introduces WCM, which slightly reduces performance in clear scenes but significantly improves detection under foggy conditions by recalling clear-scene reference features; (c) adds the Gate and Fusion modules, which achieve the highest accuracy under clear conditions by dynamically adjusting features; (d) combines WCM with the MA module to enhance cross-condition feature representation; (e) combines WCM with the MA module and Fusion and achieves higher performance under foggy conditions than (d), which demonstrates that the proposed Fusion module effectively facilitates feature alignment and fusion; (f) includes WCM, Gate, and MA and achieves higher accuracy than (d) in both clear and foggy scenes, which demonstrates that the adaptive gating network can effectively adjust feature strengths, thereby facilitating subsequent feature fusion; and (g) represents the full WCGNet, demonstrating strong and balanced performance in both clear and foggy weather and confirming the effectiveness of our complete design. In addition, to verify the rationality of the N setting in the Weather Codebook, we conducted experiments with different values of N, and the results are shown in Table 5.

Table 4.

Comparison of mAP and mAP* (performance under nuScenes-fog) across different key modules on the nuScenes dataset. Codebook refers to the Weather Codebook module; MA denotes the multi-head attention module, which includes both MCA and MSA. ‘✓’ indicates that the module is included. Bold indicates the best performance, while underline denotes the second-best result.

Table 5.

Results of different N values on the nuScenes and nuScenes-Fog datasets, reported in terms of mAP and mAP*. Bold numbers indicate the best performance for each setting, and values in parentheses show the difference from the best result.
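
The parenthesized differences described in the caption can be derived mechanically from the raw scores. The sketch below shows one way to do this; the function name `annotate_against_best` is hypothetical, and the N values and scores are placeholders chosen for illustration only, not results reported in this paper.

```python
def annotate_against_best(scores):
    """Map each N to 'value (best)' or 'value (diff to best)'."""
    best = max(scores.values())
    rows = {}
    for n, value in scores.items():
        if value == best:
            rows[n] = f"{value:.2f} (best)"
        else:
            # Signed gap to the best score, e.g. -1.50
            rows[n] = f"{value:.2f} ({value - best:+.2f})"
    return rows


# Placeholder numbers for illustration only; NOT results from the paper.
placeholder_map = {64: 60.0, 128: 61.5, 256: 61.0}

for n, cell in annotate_against_best(placeholder_map).items():
    print(f"N={n}: {cell}")
```

The same function would be applied once to the mAP column and once to the mAP* column, since the best N may differ between the clear and foggy settings.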

5. Conclusions

3D object detection plays a vital role in environmental perception; however, the performance of LiDAR-based methods deteriorates significantly under adverse weather conditions because point cloud quality degrades. To mitigate this issue, we propose WCGNet, a robust 3D object detection framework that incorporates a Weather Codebook module to recall clear-scene features and a Weather-Aware Gating Fusion module that adaptively adjusts the feature enhancement strategy according to the weather conditions. Extensive evaluations on the nuScenes, nuScenes-fog, and STF datasets demonstrate that the proposed method improves detection accuracy and enhances robustness across diverse weather scenarios. The study has two main limitations. First, the simulated foggy data used for evaluation rests on the simplifying assumption of a uniform fog distribution. Second, the experiments cover only two types of LiDAR sensors and do not include a broader range of commercial LiDARs. As a result, the evaluation does not fully reflect real-world conditions. Future work will focus on further improving all-weather detection performance, comparing the simulated data with the findings of existing studies on real LiDAR sensors, and evaluating the method on additional commercial LiDAR sensors to enable a more realistic and comprehensive assessment.

Author Contributions

Writing—original draft, W.C. and F.Y.; Writing—review & editing, N.W. and J.H.; Software, W.C. and F.Y.; Visualization, J.H.; Conceptualization, Y.W.; Supervision, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Independent Innovative Project of Changjiang Survey Planning Design and Research Co., Ltd. with Grant Number #CX2022Z10-1.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Authors Wenfeng Chen, Fei Yan and Ning Wang were employed by the company Changjiang Survey Planning Design and Research Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).