Enhancing the Performance of Computer Vision Systems in Industry: A Comparative Evaluation Between Data-Centric and Model-Centric Artificial Intelligence

Abstract

1. Introduction

- How does model performance change when data quality and quantity are additionally varied, compared with model-centric optimization alone?

- What role do synthetically generated data play in improving the detection of rare defect classes in industrial image data sets?

- How can data-centric AI be applied in optical quality control?

2. Foundations and Related Work

2.1. Synthetic Data Generation

2.2. Deep Learning for Optical Quality Inspection

2.3. DCAI in Optical Quality Assurance

2.4. MCAI in Optical Quality Assurance

3. Methodology

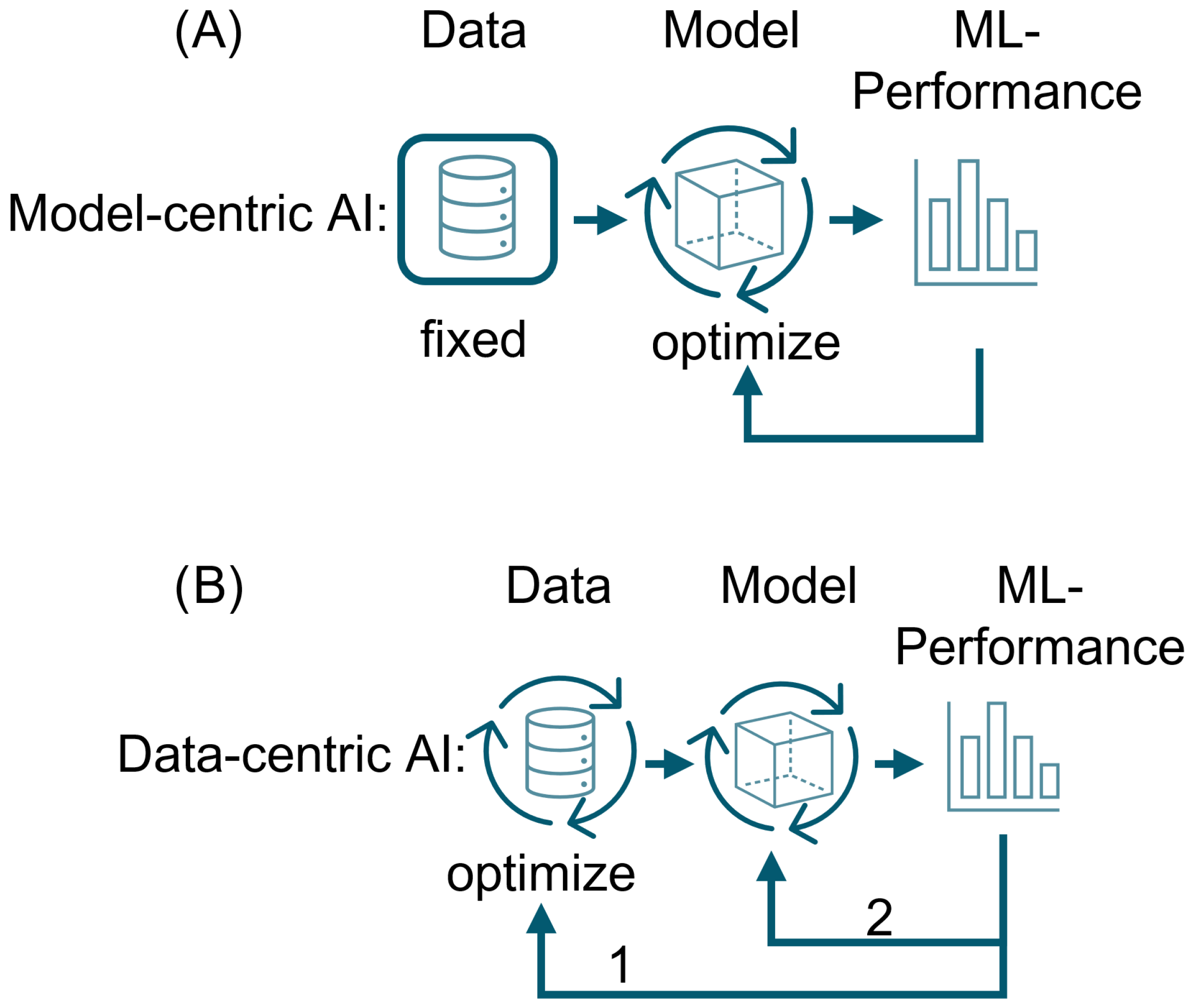

3.1. Model-Centric Artificial Intelligence

- TP: True Positives;

- TN: True Negatives;

- FP: False Positives;

- FN: False Negatives.
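These four counts combine into the evaluation metrics reported in the results tables. Assuming the paper follows the conventional definitions (the formulas are not reproduced in this section), they read as follows. For the per-class values, each class in turn is treated as the positive class:

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\text{Precision} &= \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}.
\end{aligned}
```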

3.2. Data-Centric Artificial Intelligence

3.3. Data Sets

3.4. Model Design and Configuration

3.4.1. Convolutional Neural Network

3.4.2. Diffusion Model
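The data-enrichment experiments use defect images generated by a diffusion model, identified in the findings as a DDPM (Ho et al.). The network architecture and training settings are not given in this section, so the following is only a minimal NumPy sketch of the DDPM forward (noising) process and the simplified training objective from Ho et al., assuming their linear variance schedule (T = 1000, β rising from 1e-4 to 0.02). The noise-prediction network itself, referred to here as a hypothetical `eps_model`, is omitted.

```python
import numpy as np


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Variance schedule beta_1..beta_T, linearly spaced as in Ho et al. (2020)."""
    return np.linspace(beta_start, beta_end, T)


def q_sample(x0: np.ndarray, t: int, alpha_bars: np.ndarray, rng: np.random.Generator):
    """Draw x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x_0, (1 - abar_t) * I) in closed form.

    Returns the noisy image and the Gaussian noise that was added; that noise is
    the regression target for the (hypothetical) noise-prediction network.
    """
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, noise


def simple_loss(noise: np.ndarray, predicted_noise: np.ndarray) -> float:
    """Simplified DDPM objective: mean squared error between true and predicted noise."""
    return float(np.mean((noise - predicted_noise) ** 2))


betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)  # abar_t = product over s <= t of (1 - beta_s)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(64, 64))  # stand-in for a grayscale image scaled to [-1, 1]
t = int(rng.integers(0, len(betas)))        # timestep sampled uniformly, as during training
x_t, noise = q_sample(x0, t, alpha_bars, rng)
# Training would fit eps_model so that simple_loss(noise, eps_model(x_t, t)) is minimised;
# sampling new defect images then runs the learned reverse process from pure noise.
```

Because the noisy sample at any timestep is available in closed form, training never has to simulate the full noising chain; this is one reason the approach can be trained on comparatively small sets of real defect images, although some real data is still required.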

4. Evaluation

4.1. Metrics

4.2. Model-Centric Approach

4.3. Data-Centric Approach

Casting Data Set:

Leather Data Set:

5. Discussion

5.1. Casting Data Set

5.2. Leather Data Set

5.3. Research Questions

6. Conclusions and Future Research Work

6.1. Conclusions

6.2. Future Research Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Govaerts, R.; De Bock, S.; Stas, L.; El Makrini, I.; Habay, J.; Van Cutsem, J.; Roelands, B.; Vanderborght, B.; Meeusen, R.; De Pauw, K. Work performance in industry: The impact of mental fatigue and a passive back exoskeleton on work efficiency. Appl. Ergon. 2023, 110, 104026.

- Nascimento, R.; Martins, I.; Dutra, T.A.; Moreira, L. Computer Vision Based Quality Control for Additive Manufacturing Parts. Int. J. Adv. Manuf. Technol. 2023, 124, 3241–3256.

- Villalba-Diez, J.; Schmidt, D.; Gevers, R.; Ordieres-Meré, J.; Buchwitz, M.; Wellbrock, W. Deep Learning for Industrial Computer Vision Quality Control in the Printing Industry 4.0. Sensors 2019, 19, 3987.

- Neumann, W.P.; Kolus, A.; Wells, R.W. Human Factors in Production System Design and Quality Performance – A Systematic Review. IFAC-PapersOnLine 2016, 49, 1721–1724.

- Hachem, C.E.; Perrot, G.; Painvin, L.; Couturier, R. Automation of Quality Control in the Automotive Industry Using Deep Learning Algorithms. In Proceedings of the 2021 International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 8–10 January 2021; pp. 123–127.

- Msakni, M.K.; Risan, A.; Schütz, P. Using machine learning prediction models for quality control: A case study from the automotive industry. Comput. Manag. Sci. 2023, 20, 14.

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1.

- Munappy, A.R.; Bosch, J.; Olsson, H.H.; Arpteg, A.; Brinne, B. Data management for production quality deep learning models: Challenges and solutions. J. Syst. Softw. 2022, 191, 111359.

- Hamid, O.H. From Model-Centric to Data-Centric AI: A Paradigm Shift or Rather a Complementary Approach? In Proceedings of the 2022 8th International Conference on Information Technology Trends (ITT), Dubai, United Arab Emirates, 25–26 May 2022; pp. 196–199.

- Priestley, M.; O’Donnell, F.; Simperl, E. A Survey of Data Quality Requirements That Matter in ML Development Pipelines. J. Data Inf. Qual. 2023, 15, 11.

- Jarrahi, M.H.; Memariani, A.; Guha, S. The Principles of Data-Centric AI. Commun. ACM 2023, 66, 84–92.

- Zha, D.; Bhat, Z.P.; Lai, K.H.; Yang, F.; Jiang, Z.; Zhong, S.; Hu, X. Data-centric Artificial Intelligence: A Survey. arXiv 2023.

- Whang, S.E.; Roh, Y.; Song, H.; Lee, J.G. Data collection and quality challenges in deep learning: A data-centric AI perspective. VLDB J. 2023, 32, 791–813.

- Patel, H.; Guttula, S.; Gupta, N.; Hans, S.; Mittal, R.S.; N, L. A Data-centric AI Framework for Automating Exploratory Data Analysis and Data Quality Tasks. J. Data Inf. Qual. 2023, 15, 44.

- Bauer, J.C.; Trattnig, S.; Vieltorf, F.; Daub, R. Handling data drift in deep learning-based quality monitoring: Evaluating calibration methods using the example of friction stir welding. J. Intell. Manuf. 2025.

- Kumar, S.; Datta, S.; Singh, V.; Singh, S.K.; Sharma, R. Opportunities and Challenges in Data-Centric AI. IEEE Access 2024, 12, 33173–33189.

- Mazumder, M.; Banbury, C.; Yao, X.; Karlaš, B.; Gaviria Rojas, W.; Diamos, S.; Diamos, G.; He, L.; Parrish, A.; Kirk, H.R.; et al. DataPerf: Benchmarks for Data-Centric AI Development. Adv. Neural Inf. Process. Syst. 2023, 36, 5320–5347.

- Zha, D.; Lai, K.H.; Yang, F.; Zou, N.; Gao, H.; Hu, X. Data-centric AI: Techniques and Future Perspectives. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 5839–5840.

- Chamberland, O.; Reckzin, M.; Hashim, H.A. An Autoencoder with Convolutional Neural Network for Surface Defect Detection on Cast Components. J. Fail. Anal. Prev. 2023, 23, 1633–1644.

- Zipfel, J.; Verworner, F.; Fischer, M.; Wieland, U.; Kraus, M.; Zschech, P. Anomaly detection for industrial quality assurance: A comparative evaluation of unsupervised deep learning models. Comput. Ind. Eng. 2023, 177, 109045.

- Gaspar, F.; Carreira, D.; Rodrigues, N.; Miragaia, R.; Ribeiro, J.; Costa, P.; Pereira, A. Synthetic image generation for effective deep learning model training for ceramic industry applications. Eng. Appl. Artif. Intell. 2025, 143, 110019.

- Rajendran, M.; Tan, C.T.; Atmosukarto, I.; Ng, A.B.; See, S. Review on synergizing the Metaverse and AI-driven synthetic data: Enhancing virtual realms and activity recognition in computer vision. Vis. Intell. 2024, 2, 27.

- Man, K.; Chahl, J. A Review of Synthetic Image Data and Its Use in Computer Vision. J. Imaging 2022, 8, 310.

- Dahmen, T.; Trampert, P.; Boughorbel, F.; Sprenger, J.; Klusch, M.; Fischer, K.; Kübel, C.; Slusallek, P. Digital reality: A model-based approach to supervised learning from synthetic data. AI Perspect. 2019, 1, 2.

- Figueira, A.; Vaz, B. Survey on Synthetic Data Generation, Evaluation Methods and GANs. Mathematics 2022, 10, 2733.

- Kim, K.; Myung, H. Autoencoder-Combined Generative Adversarial Networks for Synthetic Image Data Generation and Detection of Jellyfish Swarm. IEEE Access 2018, 6, 54207–54214.

- Zhou, H.A.; Wolfschläger, D.; Florides, C.; Werheid, J.; Behnen, H.; Woltersmann, J.H.; Pinto, T.C.; Kemmerling, M.; Abdelrazeq, A.; Schmitt, R.H. Generative AI in industrial machine vision: A review. J. Intell. Manuf. 2025.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. arXiv 2020.

- Raisul Islam, M.; Zakir Hossain Zamil, M.; Eshmam Rayed, M.; Mohsin Kabir, M.; Mridha, M.F.; Nishimura, S.; Shin, J. Deep Learning and Computer Vision Techniques for Enhanced Quality Control in Manufacturing Processes. IEEE Access 2024, 12, 121449–121479.

- Tercan, H.; Meisen, T. Machine learning and deep learning based predictive quality in manufacturing: A systematic review. J. Intell. Manuf. 2022, 33, 1879–1905.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Xia, Z. An Overview of Deep Learning. In Deep Learning in Object Detection and Recognition; Jiang, X., Hadid, A., Pang, Y., Granger, E., Feng, X., Eds.; Springer: Singapore, 2019; pp. 1–18.

- Moosavian, A.; Bagheri, E.; Yazdanijoo, A.; Barshooi, A.H. An Improved U-Net Image Segmentation Network for Crankshaft Surface Defect Detection. In Proceedings of the 2024 13th Iranian/3rd International Machine Vision and Image Processing Conference (MVIP), Tehran, Iran, 6–7 March 2024; pp. 1–6.

- Zeiser, A.; Özcan, B.; Van Stein, B.; Bäck, T. Evaluation of deep unsupervised anomaly detection methods with a data-centric approach for on-line inspection. Comput. Ind. 2023, 146, 103852.

- Kofler, C.; Dohr, C.A.; Dohr, J.; Zernig, A. Data-Centric Model Development to Improve the CNN Classification of Defect Density SEM Images. In Proceedings of the IECON 2022—48th Annual Conference of the IEEE Industrial Electronics Society, Brussels, Belgium, 17–20 October 2022; pp. 1–6.

- Weiher, K.; Rieck, S.; Pankrath, H.; Beuss, F.; Geist, M.; Sender, J.; Fluegge, W. Automated visual inspection of manufactured parts using deep convolutional neural networks and transfer learning. 56th CIRP Int. Conf. Manuf. Syst. 2023, 120, 858–863.

- Ghansiyal, S.; Yi, L.; Simon, P.M.; Klar, M.; Müller, M.M.; Glatt, M.; Aurich, J.C. Anomaly detection towards zero defect manufacturing using generative adversarial networks. Procedia CIRP 2023, 120, 1457–1462.

- Hridoy, M.W.; Rahman, M.M.; Sakib, S. A Framework for Industrial Inspection System using Deep Learning. Ann. Data Sci. 2024, 11, 445–478.

- Malerba, D.; Pasquadibisceglie, V. Data-Centric AI. J. Intell. Inf. Syst. 2024, 62, 1493–1502.

- Wang, Q.; Ma, Y.; Zhao, K.; Tian, Y. A Comprehensive Survey of Loss Functions in Machine Learning. Ann. Data Sci. 2022, 9, 187–212.

- Dalianis, H. Evaluation Metrics and Evaluation. In Clinical Text Mining; Springer International Publishing: Cham, Switzerland, 2018; pp. 45–53.

- Nieberl, M.; Zeiser, A.; Timinger, H. A Review of Data-Centric Artificial Intelligence (DCAI) and its Impact on manufacturing Industry: Challenges, Limitations, and Future Directions. In Proceedings of the 2024 IEEE Conference on Artificial Intelligence (CAI), Singapore, 25–27 June 2024; pp. 44–51.

- Dabhi, R. Casting Product Image Data for Quality Inspection. 2020. Available online: https://www.kaggle.com/datasets/ravirajsinh45/real-life-industrial-dataset-of-casting-product (accessed on 3 November 2025).

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD—A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9584–9592.

- Bergmann, P.; Batzner, K.; Fauser, M.; Sattlegger, D.; Steger, C. The MVTec Anomaly Detection Dataset: A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. Int. J. Comput. Vis. 2021, 129, 1038–1059.

- Sharma, S.; Guleria, K. A systematic literature review on deep learning approaches for pneumonia detection using chest X-ray images. Multimed. Tools Appl. 2023, 83, 24101–24151.

- Alzhrani, K.M. From Sigmoid to SoftProb: A novel output activation function for multi-label learning. Alex. Eng. J. 2025, 129, 472–482.

- Vogel-Heuser, B.; Neumann, E.M.; Fischer, J. MICOSE4aPS: Industrially Applicable Maturity Metric to Improve Systematic Reuse of Control Software. ACM Trans. Softw. Eng. Methodol. 2021, 31, 5.

- Bosser, J.D.; Sorstadius, E.; Chehreghani, M.H. Model-Centric and Data-Centric Aspects of Active Learning for Deep Neural Networks. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; pp. 5053–5062.

- Huang, Y.; Zhang, H.; Li, Y.; Lau, C.T.; You, Y. Active-Learning-as-a-Service: An Automatic and Efficient MLOps System for Data-Centric AI. arXiv 2022.

| Experiment | Data Set | OK Count | NOK Count | Data Source/Method | Accuracy |
|---|---|---|---|---|---|
| Baseline (MCAI) | Casting data set | 468 | 709 | Original data (no improvement) | 83% |
| Label Improvement (DCAI) | Casting data set | 468 | 670 | Manual relabeling by experts | 86% |
| Data Augmentation (DCAI) | Casting data set | 849 | 828 | Mirroring and resizing original data | 89% |
| Data Enrichment (DCAI) | Casting data set | 849 | 887 | Generated by diffusion model | 93% |
| Baseline (MCAI) | Leather data set | 196 | 51 | Original data (no improvement) | 53% |
| Data Augmentation (DCAI) | Leather data set | 301 | 192 | Mirroring and resizing original data | 27% |
| Data Enrichment (DCAI) | Leather data set | 344 | 334 | Generated by diffusion model | 62% |
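The table above describes the augmentation step only as "mirroring and resizing original data". The exact operations (flip axes, target sizes, interpolation) are not specified, so the NumPy sketch below assumes horizontal and vertical flips plus a centre crop that is resized back to the original size with nearest-neighbour interpolation. The names `resize_nearest`, `center_zoom`, `augment` and `zoom_factor` are illustrative, not the authors' code.

```python
import numpy as np


def resize_nearest(image: np.ndarray, new_h: int, new_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array."""
    h, w = image.shape[:2]
    rows = np.arange(new_h) * h // new_h  # source row index for every target row
    cols = np.arange(new_w) * w // new_w  # source column index for every target column
    return image[rows[:, None], cols[None, :]]


def center_zoom(image: np.ndarray, zoom_factor: float = 0.8) -> np.ndarray:
    """Crop the central zoom_factor fraction of the image and resize it back to full size."""
    h, w = image.shape[:2]
    ch, cw = max(1, int(h * zoom_factor)), max(1, int(w * zoom_factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    return resize_nearest(image[top:top + ch, left:left + cw], h, w)


def augment(images, zoom_factor: float = 0.8):
    """Return each original image followed by three augmented copies."""
    augmented = []
    for image in images:
        augmented.extend([
            image,
            np.fliplr(image),                 # horizontal mirror
            np.flipud(image),                 # vertical mirror
            center_zoom(image, zoom_factor),  # resize-based variant
        ])
    return augmented
```

This sketch multiplies every image by four, whereas the counts in the table (for example 468 to 849 OK casting images) suggest the augmentation was applied more selectively, possibly per class to reduce imbalance.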

| No. | Conv_Filters | Conv_Filters_2 | Dense_Units | Dropout_Rate | Learning_Rate | Batch_Size | Loss_Function |
|---|---|---|---|---|---|---|---|
| 1 | 64 | 256 | 256 | 0.30 | 0.001 | 16 | binary_crossentropy |
| 2 | 64 | 256 | 256 | 0.20 | 0.001 | 48 | binary_crossentropy |
| 3 | 32 | 256 | 64 | 0.40 | 0.010 | 64 | binary_crossentropy |
| 4 | 32 | 192 | 192 | 0.30 | 0.010 | 48 | binary_crossentropy |
| 5 | 32 | 64 | 256 | 0.30 | 0.0001 | 32 | hinge |
| 6 | 96 | 192 | 192 | 0.40 | 0.010 | 64 | hinge |
| 7 | 64 | 256 | 256 | 0.40 | 0.0001 | 16 | hinge |
| 8 | 64 | 128 | 192 | 0.40 | 0.001 | 64 | hinge |
| 9 | 96 | 256 | 192 | 0.20 | 0.0001 | 16 | hinge |
| 10 | 64 | 128 | 128 | 0.40 | 0.010 | 16 | hinge |
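The ten configurations above read like trials of an automated hyperparameter search, which is the core of the model-centric approach. The tuner, its search ranges and its scoring are not stated in this section, so the following is a minimal random-search sketch that assumes the candidate values are exactly those appearing in the table. `SEARCH_SPACE`, `sample_configurations`, `random_search` and the `evaluate` callback are hypothetical names; in practice `evaluate` would train the CNN with the given settings and return its validation accuracy.

```python
import random
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical search space: candidate values copied from the table above.
SEARCH_SPACE: Dict[str, List[Any]] = {
    "Conv_Filters": [32, 64, 96],
    "Conv_Filters_2": [64, 128, 192, 256],
    "Dense_Units": [64, 128, 192, 256],
    "Dropout_Rate": [0.2, 0.3, 0.4],
    "Learning_Rate": [1e-4, 1e-3, 1e-2],
    "Batch_Size": [16, 32, 48, 64],
    "Loss_Function": ["binary_crossentropy", "hinge"],
}


def sample_configurations(n_trials: int, seed: int = 0) -> List[Dict[str, Any]]:
    """Draw n_trials configurations, each value chosen uniformly and independently."""
    rng = random.Random(seed)  # seeded so that the trials are reproducible
    return [
        {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}
        for _ in range(n_trials)
    ]


def random_search(
    evaluate: Callable[[Dict[str, Any]], float], n_trials: int = 10, seed: int = 0
) -> Tuple[Optional[Dict[str, Any]], float]:
    """Score every sampled configuration and keep the best (higher score is better)."""
    best_config, best_score = None, float("-inf")
    for config in sample_configurations(n_trials, seed):
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score
```

Random search is used here only because it is the simplest tuner to show; Bayesian optimization or Hyperband would explore the same space more efficiently, and which strategy the authors used is not stated in this section.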

| ID | Experiment | Technique | Category | Precision (P) | Recall (R) | F1 Score | Weighted P/R |
|---|---|---|---|---|---|---|---|
| a | Casting data set MCAI | MCAI | OK | 0.714 | 0.981 | 0.827 | 0.870/0.829 |
| | | | Defect | 0.980 | 0.722 | 0.832 | |
| b | Casting Label Improvement | Label Improvement | OK | 0.750 | 1.000 | 0.857 | 0.896/0.862 |
| | | | Defect | 1.000 | 0.764 | 0.866 | |
| c | Casting Augmentation | Data Augmentation | OK | 0.797 | 1.000 | 0.887 | 0.916/0.894 |
| | | | Defect | 1.000 | 0.819 | 0.901 | |
| d | Casting Enrichment | Data Enrichment | OK | 0.877 | 0.980 | 0.926 | 0.940/0.935 |
| | | | Defect | 0.985 | 0.903 | 0.942 | |
| e | Leather data set MCAI | MCAI | OK | 0.533 | 1.000 | 0.696 | 0.284/0.533 |
| | | | Defect | 0.000 | 0.000 | 0.000 | |
| f | Leather Augmentation | Data Augmentation | OK | 0.167 | 0.094 | 0.120 | 0.203/0.267 |
| | | | Defect | 0.310 | 0.464 | 0.371 | |
| g | Leather Enrichment | Data Enrichment | OK | 0.636 | 0.656 | 0.646 | 0.616/0.617 |
| | | | Defect | 0.593 | 0.571 | 0.582 | |
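The per-class figures above follow the usual one-vs-rest convention, and the weighted columns appear to be support-weighted averages of the per-class precision and recall (support-weighted recall is then identical to accuracy). The sketch below computes both from a confusion matrix. The example counts (32 OK and 28 defect test images, of which 21 and 16 are classified correctly) are not reported in the paper; they are a hypothetical reconstruction chosen because they reproduce row g to three decimal places. A class that is never predicted receives a precision of 0, which matches the 0.000 reported for the defect class in row e.

```python
from typing import Dict, List


def per_class_report(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Per-class precision, recall, F1 and support from a square confusion matrix.

    cm[i][j] counts samples whose true class is labels[i] and whose predicted
    class is labels[j]. Undefined ratios (division by zero) are reported as 0.0.
    """
    n = len(labels)
    report = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # true class k, predicted otherwise
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, true class otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}
    return report


def weighted_average(report: Dict[str, Dict[str, float]], metric: str) -> float:
    """Average a per-class metric, weighting each class by its number of true samples."""
    total = sum(r["support"] for r in report.values())
    return sum(r[metric] * r["support"] for r in report.values()) / total


# Hypothetical counts, consistent with row g but not taken from the paper.
cm_leather_g = [[21, 11],   # true OK:     21 predicted OK, 11 predicted Defect
                [12, 16]]   # true Defect: 12 predicted OK, 16 predicted Defect
report = per_class_report(cm_leather_g, ["OK", "Defect"])
for label, r in report.items():
    print(label, round(r["precision"], 3), round(r["recall"], 3), round(r["f1"], 3))
print("weighted P/R:",
      round(weighted_average(report, "precision"), 3),
      round(weighted_average(report, "recall"), 3))
```

Reporting the per-class values alongside the weighted averages matters for imbalanced inspection data: in row e the weighted recall of 0.533 hides the fact that not a single defect was detected.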

| No. | Research Question | Key Findings |
|---|---|---|
| 1 | How does model performance change when data quality and quantity are additionally varied, compared with model-centric optimization alone? | The quality of the data is more important than its quantity; neither a large amount of data nor hyperparameter optimization can compensate for a poor-quality data set. We demonstrated that targeted enhancement of the data foundation using different methods can further improve performance, and this result was confirmed for both data sets. Data-related issues in the training process can be addressed through iterative data improvement. |
| 2 | What role does synthetically generated data play in improving the detection of rare defect classes in industrial image data sets? | Using synthetic data generated by the DDPM model had a positive impact on model performance by balancing the class distribution of the data sets, reducing bias, and improving metrics such as recall, precision, accuracy, and F1 score, although creating this synthetic data still requires a certain amount of real data. |
| 3 | How can data-centric AI be applied in optical quality control? | Our experiments show that data-centric AI methods such as data augmentation, improved data quality, and precise labeling are valuable tools for increasing AI system performance, although how these methods are best applied depends on the use case, production maturity, and current system performance. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nieberl, M.; Zeiser, A.; Timinger, H.; Friedrich, B. Enhancing the Performance of Computer Vision Systems in Industry: A Comparative Evaluation Between Data-Centric and Model-Centric Artificial Intelligence. Electronics 2025, 14, 4366. https://doi.org/10.3390/electronics14224366

Nieberl M, Zeiser A, Timinger H, Friedrich B. Enhancing the Performance of Computer Vision Systems in Industry: A Comparative Evaluation Between Data-Centric and Model-Centric Artificial Intelligence. Electronics. 2025; 14(22):4366. https://doi.org/10.3390/electronics14224366

Chicago/Turabian Style: Nieberl, Michael, Alexander Zeiser, Holger Timinger, and Bastian Friedrich. 2025. "Enhancing the Performance of Computer Vision Systems in Industry: A Comparative Evaluation Between Data-Centric and Model-Centric Artificial Intelligence" Electronics 14, no. 22: 4366. https://doi.org/10.3390/electronics14224366

APA Style: Nieberl, M., Zeiser, A., Timinger, H., & Friedrich, B. (2025). Enhancing the Performance of Computer Vision Systems in Industry: A Comparative Evaluation Between Data-Centric and Model-Centric Artificial Intelligence. Electronics, 14(22), 4366. https://doi.org/10.3390/electronics14224366