1. Introduction

The landscape of cybersecurity is a theater of relentless innovation, where adversaries continuously devise new methods to circumvent detection. A prime battleground in this conflict is the use of malicious Uniform Resource Locators (URLs) for phishing, malware distribution, and command-and-control (C2) infrastructure. For years, the primary defense against such threats was syntactic analysis: maintaining vast blocklists of known malicious domains and applying regular expression-based rules to identify suspicious patterns in URL strings. This paradigm, however, has proven increasingly fragile.

Modern attackers no longer rely on a small set of static, easily identifiable indicators. Instead, they deploy sophisticated, high-volume campaigns involving thousands or even millions of unique, short-lived URLs. These are often produced by Domain Generation Algorithms (DGAs), which can create a constant stream of new domains, rendering manual blocklist curation futile. Furthermore, attackers employ subtle variations to evade pattern-matching filters, such as typosquatting (e.g., microsooft.com), homoglyph attacks (e.g., using the Cyrillic ‘a’ instead of the Latin ‘a’), or the use of seemingly random subdomains on compromised but otherwise legitimate websites. The sheer volume and polymorphism of these threats mean that identifying them requires a deeper, semantic understanding of a URL’s intent rather than a superficial analysis of its string representation.

The breakthrough for overcoming these challenges has come from deep learning. Modern natural language processing models can be trained to transform semi-structured data like URLs into dense, high-dimensional vector embeddings. In this high-dimensional space, geometric proximity corresponds to semantic similarity. URLs with a similar malicious purpose (for instance, different variations of a PayPal phishing attempt like login-paypal.com.security-update.xyz and paypal-auth.com.user-verification.info) will be mapped to vectors that are close to each other, even if their string representations are quite different. This transformation enables the use of Approximate Nearest Neighbor (ANN) search as a powerful tool for threat intelligence. Given an embedding of a newly discovered malicious URL, an ANN search can instantly retrieve a set of semantically similar URLs from a massive historical database. This capability is transformative for security analysts, allowing them to attribute new indicators to known campaigns, discover novel attack variants, and enrich threat context in real time.
Despite its immense promise, deploying a large-scale semantic URL search system in a real-world, operational security environment presents a formidable set of technical challenges that are not adequately addressed by general-purpose, in-memory vector search libraries.

The Memory Wall at Billion-Scale: The first and most significant barrier is scale. A comprehensive threat intelligence platform must contend with a historical database containing billions of URLs. Storing the corresponding high-dimensional vectors in their full-precision form requires a vast amount of memory. For example, a dataset of one billion 128-dimensional single-precision (4-byte) vectors requires $10^9 \times 128 \times 4\ \text{bytes} = 512$ GB of storage. This, along with the overhead of the index structure itself, far exceeds the main memory (DRAM) capacity of a single commodity server. This “memory wall” makes purely in-memory solutions prohibitively expensive to deploy and scale. A viable solution must therefore be “disk-aware,” capable of leveraging fast secondary storage such as Solid-State Drives (SSDs), which offer terabytes of capacity at a fraction of the cost of DRAM, without incurring unacceptable query latency. The DiskANN system [1] provides a foundational blueprint for achieving this.
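The storage arithmetic above can be reproduced in a few lines. The sketch below also estimates the size of Product Quantization codes (discussed in Section 2.3), assuming 32 one-byte subspaces of 4 dimensions each for 128-dimensional vectors; that configuration is purely illustrative and is not taken from PhishGraph.

```python
# Back-of-the-envelope memory estimate for a billion-point vector index.
NUM_VECTORS = 1_000_000_000
DIM = 128
BYTES_PER_FLOAT32 = 4


def full_precision_bytes(n: int, dim: int) -> int:
    """Raw storage for n float32 vectors of the given dimensionality."""
    return n * dim * BYTES_PER_FLOAT32


def pq_code_bytes(n: int, num_subspaces: int) -> int:
    """PQ code storage with 256 centroids per subspace, i.e. 1 byte per subspace.
    The subspace count is an illustrative assumption."""
    return n * num_subspaces


print(f"raw vectors: {full_precision_bytes(NUM_VECTORS, DIM) / 1e9:.0f} GB")  # 512 GB
print(f"PQ codes (32 subspaces): {pq_code_bytes(NUM_VECTORS, 32) / 1e9:.0f} GB")  # 32 GB
```

Codes of this size fit comfortably in the DRAM of a single server, which is the observation disk-aware designs such as DiskANN exploit.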

The Velocity of Streaming Data: The threat landscape is in constant flux. New malicious URLs are discovered at a rate of thousands per minute, while old, inactive ones must be pruned. The index must support a high throughput of real-time updates (both insertions and deletions) to ensure that query results are “fresh” and reflect the current state of threats. Stale data is not just unhelpful; it is dangerous, as it can lead to missed detections. Static indexes that require periodic, full rebuilds are operationally infeasible. The process of re-indexing a billion-point dataset can take many hours or even days, during which the system is either offline or serving outdated results. This necessitates an index designed for dynamic, streaming data, drawing inspiration from systems like FreshDiskANN and IP-DiskANN [2,3].

The Skew of Adversarial Workloads: Malicious activity is not uniformly distributed. A small number of large-scale phishing or malware campaigns can be responsible for a vast majority of newly generated URLs at any given time. This “bursty” nature creates highly skewed data distributions. Similarly, analyst queries are not random; they often concentrate on these active, high-impact threats, creating access pattern skew. Static data structures and query plans perform poorly under such conditions, as they are designed with an implicit assumption of uniformity. This leads to contention, imbalanced load, and increased query latency in the “hot” regions of the index. An effective system must be adaptive, capable of recognizing and mitigating these skews, a concept explored by systems like Quake for partitioned indexes [4].

The Complexity of Multi-Faceted Queries: Threat analysis is a multi-faceted process. An analyst rarely queries on vector similarity alone. More often, they need to perform complex, constrained searches, filtering results based on a rich set of metadata. A typical query might be: “Find URLs semantically similar to this banking trojan C2 server, but only those seen in the last 24 h, targeting the financial sector, and hosted on an ASN in Eastern Europe.” Standard ANN indexes are incapable of efficiently handling such attribute constraints. The common workaround of performing a vector search for the top-k neighbors and then applying the attribute filters to that small result set (post-filtering) is highly inefficient and can severely degrade accuracy. If the filter is highly selective, it is possible that none of the top-k vector neighbors match the criteria, leading to an empty result set even when valid matches exist deeper in the database. A truly effective system must handle hybrid queries natively, as proposed by the NHQ framework [5].
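The failure mode of post-filtering is easy to reproduce on synthetic data. The NumPy sketch below uses brute-force search as a stand-in for an ANN index, and the helper names are hypothetical; it compares post-filtering the global top-k against searching only among items that satisfy a highly selective filter (0.1% of the data).

```python
import numpy as np


def post_filter_search(vectors, labels, query, k, wanted_label):
    """Take the global top-k by L2 distance, then drop non-matching labels."""
    dists = np.linalg.norm(vectors - query, axis=1)
    top_k = np.argsort(dists)[:k]
    return [int(i) for i in top_k if labels[i] == wanted_label]


def pre_filter_search(vectors, labels, query, k, wanted_label):
    """Reference answer: restrict to matching labels first, then take top-k."""
    idx = np.flatnonzero(labels == wanted_label)
    dists = np.linalg.norm(vectors[idx] - query, axis=1)
    return [int(i) for i in idx[np.argsort(dists)[:k]]]


rng = np.random.default_rng(0)
vectors = rng.normal(size=(10_000, 16)).astype(np.float32)
labels = np.zeros(10_000, dtype=np.int64)
labels[rng.choice(10_000, size=10, replace=False)] = 1  # only 10 matching items
query = rng.normal(size=16).astype(np.float32)

print(post_filter_search(vectors, labels, query, k=10, wanted_label=1))  # usually empty
print(pre_filter_search(vectors, labels, query, k=10, wanted_label=1))   # 10 matches
```

Pre-filtering by brute force is exact but does not scale; native hybrid indexes aim to get its recall without scanning every matching item.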

This paper introduces PhishGraph, a holistic system designed from the ground up to address these specific challenges. PhishGraph builds upon the foundational principles of the DiskANN system and extends them with features tailored for the cybersecurity domain. Its primary contributions are a disk-resident, attribute-aware proximity graph, a dual-mode update mechanism for streaming data, and an adaptive query and indexing engine to handle skewed workloads. The remainder of this paper is structured as follows.

Section 2 provides a comprehensive survey of related work, covering the evolution of malicious URL detection and the landscape of Approximate Nearest Neighbor search, from foundational in-memory graphs to advanced disk-aware and adaptive systems.

Section 3 details the architecture of PhishGraph, including an in-depth analysis of the Hybrid Fusion Distance metric and a breakdown of its core components: the URL Ingestion Pipeline, the Dynamic Index Management module, and the Query Processing Engine.

Section 3.3 then presents a concrete end-to-end use case for phishing campaign attribution.

Section 4 presents our comprehensive experimental evaluation, detailing the setup, baselines, workloads, and a thorough analysis of the results, including an ablation study of PhishGraph’s components. Finally, Section 5 concludes the paper by summarizing our findings and outlining promising directions for future work.

2. Related Work

The research supporting our work spans two primary domains: the specific task of malicious URL detection, which defines our problem space, and the broader field of Approximate Nearest Neighbor (ANN) search, which provides the technological foundation for our solution.

2.1. Malicious URL Detection Techniques

The detection of malicious URLs has evolved significantly, moving from simple, reactive methods to sophisticated, proactive learning-based systems. The earliest line of defense against malicious web content was blocklisting (or blacklisting). This involves maintaining a list of known malicious domains or full URL paths and blocking any request that matches an entry. While effective against known threats, this approach is purely reactive and fails against new or short-lived malicious sites, such as those generated by DGAs.

To provide more proactive detection, heuristic- and rule-based systems were developed. These systems analyze the syntactic and lexical properties of a URL string using regular expressions and statistical checks to identify suspicious patterns. Common heuristics include checking for excessive URL length, a high number of subdomains, the presence of IP addresses in the domain name, or the use of keywords commonly associated with phishing (e.g., login, verify, account). While more flexible than blocklisting, these handcrafted rules are brittle and require constant maintenance by domain experts to keep pace with evolving attacker tactics.

The next generation of detection systems applied classical machine learning models (e.g., Support Vector Machines, Random Forests, Logistic Regression) to automate the detection process. These models operate on a feature vector extracted from the URL and its context. These features are typically grouped into several categories:

Lexical Features: Extracted directly from the URL string, such as its length, number of special characters (‘-’, ‘_’, ‘.’), character entropy, and the presence of suspicious TLDs (e.g., ‘.xyz’, ‘.top’).

Host-based Features: Derived from network lookups and metadata associated with the URL’s domain. This includes WHOIS information (e.g., domain age, registrar), DNS records, and IP address properties (e.g., geolocation, hosting provider ASN). A recently registered domain is widely regarded as a strong signal of malicious intent.

Content-based Features: Obtained by fetching and analyzing the HTML content of the page the URL points to. This can include the number of external links, the presence of password fields in forms, or the use of JavaScript obfuscation techniques.
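The lexical category is the simplest to compute because it needs only the URL string. The sketch below derives a few of the lexical features listed above using only the Python standard library; the suspicious-TLD set and the naive subdomain count (which ignores multi-label suffixes such as co.uk) are illustrative assumptions.

```python
import math
from collections import Counter
from urllib.parse import urlsplit

SUSPICIOUS_TLDS = {"xyz", "top"}  # illustrative only
SPECIAL_CHARS = "-_."


def char_entropy(s: str) -> float:
    """Shannon entropy (bits per character) of the string's character distribution."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def lexical_features(url: str) -> dict:
    # urlsplit only fills in .hostname when a scheme is present; assume http if missing.
    parsed = urlsplit(url if "://" in url else "http://" + url)
    host = parsed.hostname or ""
    tld = host.rsplit(".", 1)[-1] if "." in host else ""
    return {
        "length": len(url),
        "num_special": sum(url.count(ch) for ch in SPECIAL_CHARS),
        "entropy": round(char_entropy(url), 3),
        # Labels left of "domain.tld", assuming a single-label TLD.
        "num_subdomains": max(host.count(".") - 1, 0),
        "suspicious_tld": tld in SUSPICIOUS_TLDS,
    }


print(lexical_features("login-paypal.com.security-update.xyz"))
```

Host-based and content-based features require network lookups or page fetches, which is one reason they are usually computed asynchronously.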

While these systems achieve higher accuracy than rule-based methods, they remain fundamentally limited by their reliance on handcrafted feature engineering, which may fail to capture the complex, subtle patterns of modern threats. To overcome the limitations of manual feature engineering, recent research has focused on using deep learning to learn representations directly from the raw URL string. Early works in this area used Character-level Convolutional Neural Networks (Char-CNNs) or Recurrent Neural Networks (RNNs) to treat the URL as a sequence and learn morphological patterns indicative of maliciousness. The state-of-the-art approach, and the one that directly enables the work in this paper, uses large, pre-trained transformer-based language models such as BERT. When fine-tuned on massive datasets of benign and malicious URLs, these models learn a deep semantic representation of URL structure and intent. More importantly for our work, they can serve as powerful feature extractors that convert any URL into a dense vector embedding. This process, as demonstrated by models like Sentence-BERT [6], maps semantically similar URLs to nearby points in a high-dimensional space, providing the foundation for the large-scale similarity search that PhishGraph is designed to perform.

2.2. Foundations of Graph-Based ANN Search

The field of Approximate Nearest Neighbor (ANN) search has evolved rapidly, producing a wide array of techniques to tackle the curse of dimensionality. Our work builds upon several key areas of innovation, from foundational in-memory graph algorithms to advanced disk-aware, adaptive, and hybrid query systems. Modern ANN search is dominated by graph-based methods, which model the dataset as a proximity graph where vertices are data points and edges connect “close” neighbors. The search process then becomes a greedy traversal of this graph. The preeminent in-memory algorithm in this category is the Hierarchical Navigable Small World (HNSW) graph [7]. HNSW constructs a multi-layer graph in which each successive layer is a sparser subgraph of the one below. This hierarchy allows for a coarse-to-fine search that starts in the sparsest top layer to quickly find an approximate location and then greedily descends to denser layers for refinement. This approach exhibits empirically logarithmic search complexity and has become a standard performance benchmark for in-memory search due to its speed and accuracy. However, its entire structure must reside in RAM, making it prohibitively expensive for billion-scale datasets.
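The greedy traversal that graph-based methods rely on can be sketched in a few lines. This is a single-layer illustration under simplifying assumptions (adjacency lists in a dict, L2 distance, a single current node rather than the candidate list HNSW keeps with its `ef` parameter); the function name is hypothetical.

```python
import numpy as np


def greedy_search(graph, vectors, query, entry, max_steps=1000):
    """Greedy routing on a proximity graph: repeatedly move to the neighbor that is
    closest to the query, stopping at a local minimum (no neighbor is closer)."""
    current = entry
    current_dist = float(np.linalg.norm(vectors[current] - query))
    for _ in range(max_steps):
        best, best_dist = current, current_dist
        for nb in graph[current]:
            d = float(np.linalg.norm(vectors[nb] - query))
            if d < best_dist:
                best, best_dist = nb, d
        if best == current:  # local minimum reached
            break
        current, current_dist = best, best_dist
    return current, current_dist


# Toy usage: 10 points on a line, each connected to its immediate neighbors.
pos = np.arange(10, dtype=float).reshape(-1, 1)
graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 10] for i in range(10)}
print(greedy_search(graph, pos, np.array([7.2]), entry=0))  # node 7, distance ~0.2
```

In HNSW, the node returned at one layer becomes the entry point for the next, denser layer.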

2.3. The Vamana Graph and DiskANN

The primary challenge in scaling ANN search is overcoming the “memory wall.” This has led to the development of disk-aware indices that leverage high-speed SSDs. The seminal work in this area is DiskANN [1], which demonstrated that, with careful design, a graph-based index could achieve excellent performance while residing primarily on disk. Instead of HNSW’s multi-layer structure, DiskANN uses a single, large Vamana graph. Its key insight is that for a disk-resident index, the primary performance bottleneck is the number of random I/O operations. The Vamana graph is constructed using a pruning procedure called ‘RobustPrune’, which produces a graph with a small diameter and high connectivity, minimizing the number of “hops” (and thus disk reads) required during a search. To further optimize performance, DiskANN stores the full-precision vectors and graph adjacencies on the SSD but keeps a compressed representation of all vectors in RAM using Product Quantization (PQ) [8]. This allows the search traversal to be guided by fast, in-memory distance calculations, with expensive disk I/O and full-precision computations performed only on a small candidate set during a final re-ranking phase. PQ compression itself can be broken down into three main stages:

Decomposition (Splitting): The original high-dimensional vector is partitioned into several smaller, contiguous sub-vectors. For instance, a 384-dimensional vector can be split into 48 sub-vectors, each of 8 dimensions.

Codebook Generation: For each of the 48 sub-vector positions, we run a clustering algorithm (typically k-means) on the set of all 8-dimensional sub-vectors from that position across the entire dataset. A common choice is to find k = 256 centroids for each subspace. The collection of these 256 centroids for a given subspace is called its “codebook.” Since we have 48 subspaces, we generate 48 distinct codebooks. This is a one-time, offline training step.

Encoding (Quantization): To compress a specific vector, we take each of its 48 sub-vectors and find the closest centroid within the corresponding codebook. The final, compressed representation of the original vector is not the centroids themselves, but simply the sequence of their IDs (an integer from 0 to 255). Since an ID from 0–255 can be stored in a single byte, our original 384-dimensional floating-point vector (which takes 384 × 4 = 1536 bytes) is compressed into just 48 bytes.
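The three stages above can be sketched with NumPy alone. In this minimal illustration, a plain Lloyd's k-means stands in for whatever clustering a production system would use (e.g., a tuned library implementation), and the function names are hypothetical.

```python
import numpy as np


def kmeans(x, k, iters=10, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of centroids. Needs len(x) >= k."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        assign = d2.argmin(axis=1)  # nearest centroid per point
        for c in range(k):
            members = x[assign == c]
            if len(members):  # keep the old centroid if its cluster empties
                centroids[c] = members.mean(axis=0)
    return centroids


def train_pq(data, m, k=256, iters=10):
    """Stages 1-2: split vectors into m contiguous sub-vectors, learn one codebook each."""
    n, d = data.shape
    assert d % m == 0, "dimension must be divisible by the number of subspaces"
    sub = d // m
    return np.stack([kmeans(data[:, j * sub:(j + 1) * sub], k, iters) for j in range(m)])


def encode_pq(data, codebooks):
    """Stage 3: replace each sub-vector with the uint8 ID of its nearest centroid."""
    m, k, sub = codebooks.shape
    codes = np.empty((len(data), m), dtype=np.uint8)
    for j in range(m):
        chunk = data[:, j * sub:(j + 1) * sub]
        d2 = ((chunk[:, None, :] - codebooks[j][None, :, :]) ** 2).sum(axis=2)
        codes[:, j] = d2.argmin(axis=1)
    return codes


rng = np.random.default_rng(0)
data = rng.normal(size=(1000, 384)).astype(np.float32)
codebooks = train_pq(data, m=48)  # shape (48, 256, 8)
codes = encode_pq(data, codebooks)
print(data[0].nbytes, "->", codes[0].nbytes, "bytes")  # 1536 -> 48 bytes
```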

During a query, the system uses a method called Asymmetric Distance Computation (ADC). Instead of decompressing the database vectors, the full-precision query vector is compared directly against the compressed codes. The distances from the query’s sub-vectors to all 256 centroids in each of the 48 codebooks are pre-computed once per query and stored in small lookup tables. The approximate distance between the query and any database vector can then be computed very cheaply by summing 48 values from these tables, one per subspace. This allows millions of distance comparisons per second, entirely in RAM.
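A minimal sketch of ADC for squared L2 distance follows; for inner-product or cosine search, the tables would hold sub-vector dot products instead. Random codebooks stand in for trained ones, and the helper names are hypothetical. The check at the end illustrates what ADC actually approximates: the summed table lookups equal the exact distance from the query to each vector’s PQ reconstruction, not to the original vector.

```python
import numpy as np


def adc_tables(query, codebooks):
    """Per-query lookup tables of shape (m, k): squared L2 distance from each
    query sub-vector to every centroid of the matching codebook."""
    m, k, sub = codebooks.shape
    q = query.reshape(m, sub)
    return ((codebooks - q[:, None, :]) ** 2).sum(axis=2)


def adc_distances(tables, codes):
    """Approximate squared distances to all encoded vectors: m lookups summed per row."""
    m = tables.shape[0]
    return tables[np.arange(m), codes].sum(axis=1)  # codes: (n, m) uint8


def pq_decode(codes, codebooks):
    """Rebuild vectors from their centroids (used here only to verify ADC)."""
    m = codebooks.shape[0]
    return codebooks[np.arange(m), codes].reshape(len(codes), -1)


rng = np.random.default_rng(0)
codebooks = rng.normal(size=(48, 256, 8))  # stand-in for trained codebooks
codes = rng.integers(0, 256, size=(1000, 48)).astype(np.uint8)
query = rng.normal(size=384)
approx = adc_distances(adc_tables(query, codebooks), codes)
exact_to_reconstruction = ((pq_decode(codes, codebooks) - query) ** 2).sum(axis=1)
print(np.allclose(approx, exact_to_reconstruction))  # True
```

Building the tables costs one pass over the 48 × 256 centroids per query; every subsequent distance costs only 48 lookups and additions.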

2.4. Alternative Hybrid Architectures

Other systems have explored different memory-disk trade-offs. SPANN [9], for instance, uses an inverted file (IVF) approach. It clusters the dataset and stores only the cluster centroids in memory. During a query, it first identifies the closest centroids in RAM and then retrieves the full vectors from the corresponding posting lists on disk. This can be very efficient but contrasts with the graph-based approach of guided traversal.
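The IVF search pattern can be sketched as follows. This is a simplified illustration, not SPANN itself: posting lists are in-memory arrays rather than SSD-resident blocks, centroids are sampled data points rather than k-means output, and SPANN’s duplication of boundary vectors across several lists is omitted. The function names are hypothetical.

```python
import numpy as np


def build_ivf(vectors, centroids):
    """Assign each vector to its nearest centroid; one posting list of IDs per centroid."""
    d = np.linalg.norm(vectors[:, None, :] - centroids[None, :, :], axis=2)
    assign = d.argmin(axis=1)
    return [np.flatnonzero(assign == c) for c in range(len(centroids))]


def ivf_search(query, centroids, posting_lists, vectors, nprobe, k):
    """Rank centroids in memory, then scan only the nprobe closest posting lists."""
    probe = np.argsort(np.linalg.norm(centroids - query, axis=1))[:nprobe]
    cand = np.concatenate([posting_lists[c] for c in probe])
    d = np.linalg.norm(vectors[cand] - query, axis=1)
    order = np.argsort(d)[:k]
    return cand[order], d[order]


rng = np.random.default_rng(0)
vectors = rng.normal(size=(2000, 16))
centroids = vectors[rng.choice(len(vectors), size=20, replace=False)]
lists = build_ivf(vectors, centroids)
ids, dists = ivf_search(rng.normal(size=16), centroids, lists, vectors, nprobe=3, k=5)
print(ids, dists)
```

The `nprobe` parameter trades recall for I/O: probing every list degenerates to an exact scan, while probing few lists reads little data but can miss neighbors near cluster boundaries.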

2.5. Handling Dynamic Data and Streaming Updates

Static indices that require periodic, full rebuilds are impractical in dynamic environments like cybersecurity. This has spurred research into indices that can handle streaming insertions and deletions. FreshDiskANN [2] was an early solution, proposing a hybrid system that buffers new updates in a small, in-memory graph and periodically merges them into the main on-disk index in a batch. While effective, this approach relies on periodic, resource-intensive merge operations to consolidate the buffered updates into the on-disk index. A more advanced solution is presented by IP-DiskANN [3], which enables true, low-latency updates. It handles deletions by “patching” the graph around a removed node and processes insertions by directly modifying the graph structure, thus avoiding the need for periodic batch consolidation and ensuring index freshness.
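The idea of “patching” the graph around a deleted node can be illustrated with a simplified local-repair sketch. This is not IP-DiskANN’s actual procedure, which differs in its details (for example, in how in-neighbors are located and how edges are pruned); here in-neighbors are found by scanning every node, and plain truncation to the closest candidates stands in for a RobustPrune-style rule. The function name is hypothetical.

```python
def patch_delete(graph, dist, p, max_degree):
    """Remove node p from a directed proximity graph and repair its in-neighbors.

    graph: dict mapping node -> set of out-neighbors; dist(a, b) -> float.
    Every in-neighbor u of p loses the edge u->p; p's out-neighbors become
    replacement candidates, and u keeps its max_degree closest candidates.
    """
    out_p = graph.pop(p)
    for u, nbrs in list(graph.items()):
        if p not in nbrs:
            continue
        candidates = (nbrs - {p}) | (out_p - {u, p})
        graph[u] = set(sorted(candidates, key=lambda v: dist(u, v))[:max_degree])
    return graph


# Toy usage: five points on a line connected as a chain; delete the middle node.
g = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
patch_delete(g, lambda a, b: abs(a - b), p=2, max_degree=2)
print(g)  # nodes 1 and 3 are now linked directly, bridging the gap
```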

2.6. Quantization and Scoring Functions

Beyond the index structure itself, significant gains can be achieved by optimizing query execution. While Product Quantization is the standard for vector compression, systems like ScaNN [10] have shown that the quantization process can be optimized for the search task itself. ScaNN introduces anisotropic vector quantization, which recognizes that for maximum inner-product search, the component of the quantization error (the residual) that is parallel to the original data point is more harmful than the orthogonal component. By penalizing this parallel error more heavily, ScaNN achieves higher accuracy for the same compression ratio. This highlights the importance of tailoring the distance approximation to the final search objective.
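The effect of the anisotropic loss can be seen with a two-dimensional example. In this sketch, the ratio `eta` between the parallel and orthogonal weights is a free parameter (an assumption; ScaNN derives its weights from an inner-product threshold). Two quantized candidates with identical reconstruction error are scored differently once parallel error is penalized more heavily.

```python
import numpy as np


def anisotropic_loss(x, x_quantized, eta):
    """Quantization loss that weights the residual component parallel to the data
    point x eta times more heavily than the orthogonal component.
    With eta = 1 this reduces to ordinary squared reconstruction error."""
    r = x - x_quantized
    r_par = (r @ x) / (x @ x) * x  # projection of the residual onto x
    r_perp = r - r_par
    return eta * float(r_par @ r_par) + float(r_perp @ r_perp)


x = np.array([1.0, 0.0])
a = np.array([0.9, 0.0])  # error of 0.1 along x (parallel)
b = np.array([1.0, 0.1])  # error of 0.1 across x (orthogonal)
print(anisotropic_loss(x, a, 1.0), anisotropic_loss(x, b, 1.0))  # equal, ~0.01 each
print(anisotropic_loss(x, a, 4.0), anisotropic_loss(x, b, 4.0))  # ~0.04 vs ~0.01
```

Under the anisotropic loss, a quantizer would prefer candidate `b`, whose error leaves the inner product with queries aligned to `x` nearly unchanged.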

2.7. Learned Query Optimization

A one-size-fits-all search strategy is often suboptimal. The work on LAET (Learned Adaptive Early Termination) [11] demonstrated that the difficulty of finding the true nearest neighbors varies significantly from query to query. By training a lightweight model to predict the optimal point to terminate the search based on features of the query and its intermediate results, LAET can dramatically reduce the average query latency by avoiding unnecessary exploration for “easy” queries, while still allocating enough resources for “hard” ones.
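The core idea of learned early termination (predicting a per-query search budget from features observed early in the search) can be sketched with a simple regressor. Everything here is an assumption for illustration: a least-squares fit on log(steps) stands in for whatever model LAET uses, and the single synthetic “difficulty” feature and training data are hypothetical.

```python
import math

import numpy as np


def fit_budget_model(features, steps_needed):
    """Least-squares fit of log(steps needed) on checkpoint features plus a bias term."""
    X = np.hstack([features, np.ones((len(features), 1))])
    coef, *_ = np.linalg.lstsq(X, np.log(steps_needed), rcond=None)
    return coef


def predict_budget(coef, feature_row, multiplier=1.0, min_steps=1):
    """Predicted total search steps for one query; multiplier > 1 trades latency for recall."""
    x = np.append(feature_row, 1.0)
    return max(min_steps, math.ceil(multiplier * math.exp(float(x @ coef))))


# Synthetic training data: "harder" queries (larger feature) need more steps.
rng = np.random.default_rng(0)
feats = rng.uniform(0.0, 1.0, size=(200, 1))
steps = np.exp(2.0 * feats[:, 0] + 1.0)
coef = fit_budget_model(feats, steps)
print(predict_budget(coef, np.array([0.1])), predict_budget(coef, np.array([0.9])))  # 4 17
```

At query time, the search would run to a fixed checkpoint, compute the features, and stop once the predicted budget is exhausted.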

2.8. Hybrid Queries with Attribute Filtering

The final frontier of ANN research addresses the complex, real-world requirements of production systems, moving beyond simple vector similarity. Threat analysis requires filtering by metadata, and the common approach of post-filtering a set of vector search results is inefficient and often inaccurate. The NHQ framework [5] addresses this by advocating for a “native” hybrid query approach. It proposes a unified distance metric that combines the vector distance with a penalty for attribute mismatches. By integrating this hybrid metric directly into the graph construction and search algorithms, NHQ performs a “joint pruning” of both vector and attribute spaces simultaneously, leading to substantial improvements in efficiency and recall for constrained queries.

Real-world workloads are also rarely uniform; they are often skewed and “bursty.” Quake [4] introduces an adaptive indexing system designed for such environments. It observes that static data structures perform poorly when access patterns concentrate on “hot” regions of the index. Quake uses a multi-level partitioning scheme and a cost model to monitor access frequencies and dynamically reconfigure the index partitions to mitigate hotspots and maintain low latency. This principle of workload-aware adaptivity is critical for operational systems.

Finally, systems deployed in production, like Auncel [12], must often meet strict Service Level Objectives (SLOs) for latency and accuracy. Auncel introduces a query engine that provides bounded query errors, moving beyond the “best-effort” nature of many ANN algorithms to offer statistical guarantees on performance, a crucial feature for mission-critical applications.

3. PhishGraph: A Disk-Aware Architecture for Threat Intelligence

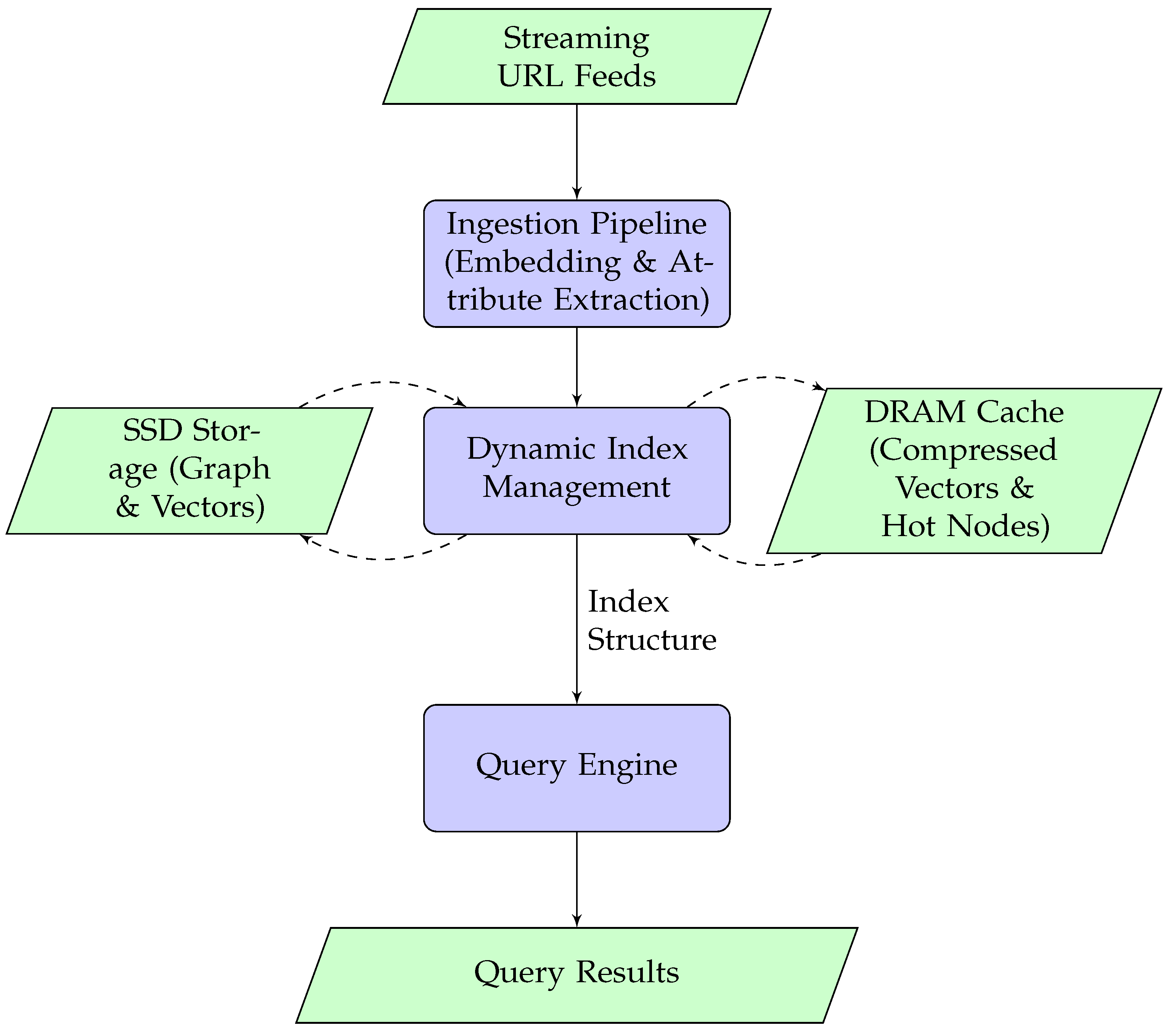

PhishGraph is an end-to-end system for the semantic analysis of malicious URLs. Its architecture, shown in Figure 1, integrates three primary modules:

The URL Ingestion Pipeline;

The Dynamic Index Management module;

The Query Processing Engine.

Figure 1.

High-level architecture of the PhishGraph system.

The foundational premise of PhishGraph is the move from syntactic pattern-matching to a deep, semantic understanding of a URL’s intent. The term “semantically similar” in this context goes beyond simple string comparison; it refers to URLs that share a common purpose or belong to the same malicious campaign, even if their lexical structures are entirely different. For example, login-paypal.com.security-update.xyz and paypal-auth.com.user-verification.info are syntactically distinct, but their semantic goal (impersonating PayPal for phishing) is identical. The key step lies in leveraging Large Language Models (LLMs), specifically transformer-based architectures like Sentence-BERT [6], to distill the complex, often obfuscated, string of a URL into a dense numerical vector, or embedding. During this process, the model captures not just the tokens present but also the subtle relationships, structural patterns, and contextual cues that define the URL’s purpose. In the resulting high-dimensional vector space, geometric proximity corresponds to semantic similarity; the two aforementioned phishing URLs would be mapped to points that are very close to one another.

However, semantic similarity alone is insufficient for the nuanced demands of threat intelligence. A pure vector representation might correctly identify that two URLs are both about “PayPal,” but it cannot inherently distinguish a malicious phishing page from a legitimate security blog post written by PayPal’s own team, as both are topically related. This is where the explicit storage of structured attributes becomes critical. For each URL, alongside its dense embedding, we extract and store a set of domain-specific metadata, such as threat_type (‘phishing’, ‘malware’), target_brand (‘PayPal’, ‘Microsoft’), or host-based features like domain_age.

3.1. Hybrid Fusion Distance

The Hybrid Fusion Distance, inspired by NHQ [

5], acts as the unifying mechanism, calculating a holistic dissimilarity score that is a weighted combination of the continuous semantic distance (from the vectors) and a discrete penalty for mismatches in these categorical attributes. This is paramount for catching critical domain-specific similarities that a purely semantic search would miss. It allows an analyst to query not just for “URLs that look like this phishing attempt” but for “URLs that function like this phishing attempt,” ensuring that the retrieved neighbors share not only the same general topic but also the same malicious classification, making the results immediately actionable for threat hunting and campaign analysis. The fusion distance

between two URL objects

u and

v is formally defined as:

where

is a dissimilarity metric derived from the vector embeddings. In our implementation, which uses cosine similarity

, the dissimilarity is defined as the

cosine distance:

. This ensures a smaller value corresponds to a closer match. Term

is a weighted Hamming distance over their structured set of attributes (e.g.,

threat_type,

TopLevelDomain), symbolized by

A. The general form is

, where

is 0 for a match and 1 for a mismatch on attribute

i, and

is a per-attribute weight. The

in Equation (

1) is a global scaling factor for this summed penalty. For this study, all attributes were treated as discrete categorical values with equal weight (

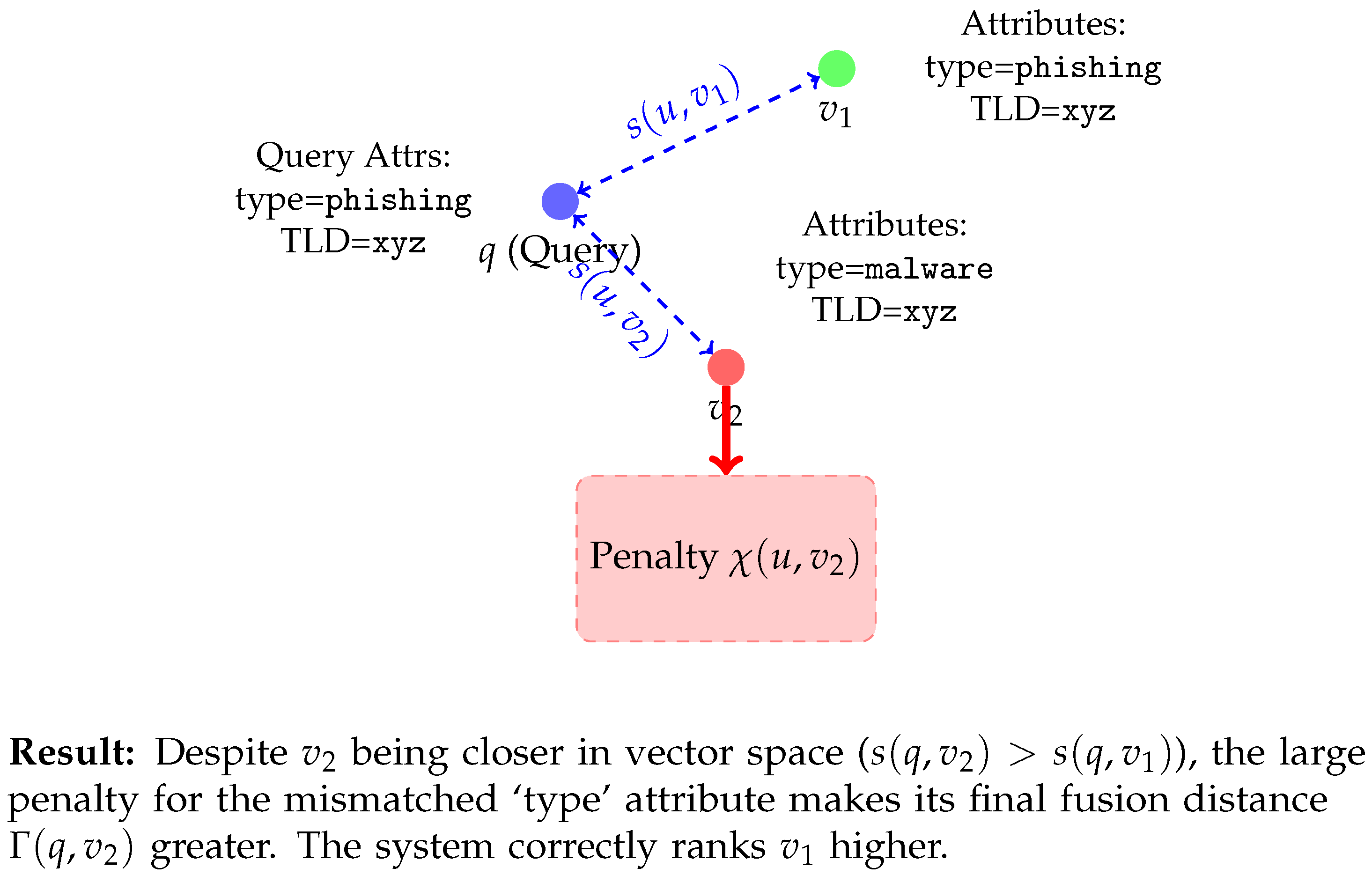

), and our dataset did not contain missing values. We acknowledge that handling high-cardinality or numerical attributes would require more complex, normalized penalty functions. This concept is visualized in

Figure 2. We observe that despite

being closer in vector space with the query vector

q, the large penalty for the mismatched ‘type’ attribute makes its final fusion distance

greater. The system correctly ranks

higher.
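Equation (1) translates almost directly into code. In the minimal sketch below, the dictionary layout of a URL object (`vec`, `attrs`) and all function names are hypothetical, the global weight $\lambda$ is passed as `lam`, and per-attribute weights default to 1 as in this study. The two candidates mirror the Table 1 example: cosine similarities of 0.85 (phishing) and 0.95 (malware) to a phishing query.

```python
import numpy as np


def cosine_distance(a, b):
    """delta_V: 1 - cosine similarity, so smaller means more similar."""
    return 1.0 - float(np.dot(a, b)) / (np.linalg.norm(a) * np.linalg.norm(b))


def attribute_penalty(attrs_u, attrs_v, weights=None):
    """delta_A: weighted Hamming distance over categorical attributes.
    Assumes both objects carry the same attribute keys (no missing values)."""
    weights = weights or {}
    return sum(weights.get(name, 1.0) for name in attrs_u if attrs_u[name] != attrs_v[name])


def fusion_distance(u, v, lam, weights=None):
    """D_F(u, v) = delta_V(u, v) + lam * delta_A(u, v)."""
    return cosine_distance(u["vec"], v["vec"]) + lam * attribute_penalty(u["attrs"], v["attrs"], weights)


def rank(query, candidates, lam):
    """Order candidate names by increasing fusion distance to the query."""
    return sorted(candidates, key=lambda name: fusion_distance(query, candidates[name], lam))


# Unit vectors with cosine similarity 0.85 (v1) and 0.95 (v2) to q.
q = {"vec": np.array([1.0, 0.0]), "attrs": {"threat_type": "phishing"}}
cands = {
    "v1": {"vec": np.array([0.85, np.sqrt(1 - 0.85**2)]), "attrs": {"threat_type": "phishing"}},
    "v2": {"vec": np.array([0.95, np.sqrt(1 - 0.95**2)]), "attrs": {"threat_type": "malware"}},
}
print(rank(q, cands, lam=0.05))  # ['v2', 'v1']
print(rank(q, cands, lam=0.5))   # ['v1', 'v2']
```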

The global attribute weight, $\lambda$, is the most critical hyperparameter in the Hybrid Fusion Distance formula. It serves as a tuning knob that dictates the balance between semantic similarity (the vector distance) and attribute conformity (the metadata match), and its value fundamentally changes the behavior of the search process. Because $\lambda$ directly scales the penalty incurred from attribute mismatches, it creates a spectrum of search behaviors:

Low $\lambda$ (e.g., approaching 0): The attribute penalty has a negligible effect. The system behaves almost identically to a pure vector search index, prioritizing the absolute closest semantic neighbors, regardless of their metadata. This is useful for exploratory searches to discover related items across categorical boundaries.

High $\lambda$ (e.g., a large positive number): A single attribute mismatch can result in a massive penalty, overwhelming the semantic distance. The system behaves like a “hard filter,” strongly enforcing attribute constraints. This is ideal for highly specific queries where the analyst is certain about the target attributes.

Moderate $\lambda$ (tuned value): This is the typical and most powerful use case. A well-tuned $\lambda$ allows the system to act as a “soft filter.” It primarily searches for semantically similar items but uses the attribute penalty to gracefully down-rank candidates that do not match the desired metadata.

The practical impact of the attribute weight $\lambda$ is best illustrated through a concrete example, as detailed in Table 1. Here, we simulate a search for a ‘phishing’ URL by comparing a query q against two candidates: $v_1$, which is also a ‘phishing’ URL but is only moderately similar in semantic space (similarity score of 0.85), and $v_2$, a ‘malware’ URL that is semantically much closer to the query (similarity score of 0.95). In the first scenario, a low attribute weight ($\lambda = 0.05$) is chosen, signaling that semantic similarity is the dominant factor. The small penalty applied to $v_2$ for its mismatched threat_type is insufficient to overcome its superior semantic score, so $v_2$ is ranked higher with a lower final fusion distance (0.10 vs. 0.15). In the second scenario, a high attribute weight ($\lambda = 0.5$) is used, indicating that attribute correctness is paramount. Here, the substantial penalty applied to $v_2$ for the attribute mismatch increases its fusion distance to 0.55, making it appear much “further” from the query than $v_1$. Consequently, $v_1$ is correctly ranked higher. This demonstrates how the $\lambda$ parameter provides a powerful mechanism to tune search behavior, allowing the system to pivot between a purely semantic search and a strictly attribute-constrained one based on the specific requirements of the query.
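The two scenarios follow directly from Equation (1) with unit attribute weights. Writing $v_1$ for the phishing candidate (similarity 0.85, matching threat_type), $v_2$ for the malware candidate (similarity 0.95, one mismatch), and $\lambda$ for the global attribute weight, the worked arithmetic also gives the value at which the ranking flips:

```latex
\begin{aligned}
D_F(q, v_1) &= (1 - 0.85) + \lambda \cdot 0 = 0.15,\\
D_F(q, v_2) &= (1 - 0.95) + \lambda \cdot 1 = 0.05 + \lambda,\\
D_F(q, v_2) > D_F(q, v_1) &\iff 0.05 + \lambda > 0.15 \iff \lambda > 0.10.
\end{aligned}
```

With $\lambda = 0.05$ this gives 0.10 for $v_2$ versus 0.15 for $v_1$; with $\lambda = 0.5$ it gives 0.55 versus 0.15. In this example, any $\lambda$ above 0.10 is enough for the matching phishing candidate to win.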

By using this composite metric to score and re-rank the search results, PhishGraph ensures that the results are not only semantically similar but also tailored to business rules specifically crafted for the URL filtering domain.

3.2. Detailed Component Analysis of PhishGraph

PhishGraph is an end-to-end system composed of three primary modules that work in concert: the URL Ingestion Pipeline, the Dynamic Index Management module, and the Query Processing Engine. At the heart of PhishGraph is a disk-resident proximity graph, based on the Vamana algorithm introduced in the DiskANN system [1]. This choice is fundamental to overcoming the memory wall. The complete graph structure and a quantized representation of the vectors are stored on an SSD. To avoid constant, slow disk access during a search, PhishGraph caches a compressed representation of sampled vectors in an in-memory HNSW index using Product Quantization.

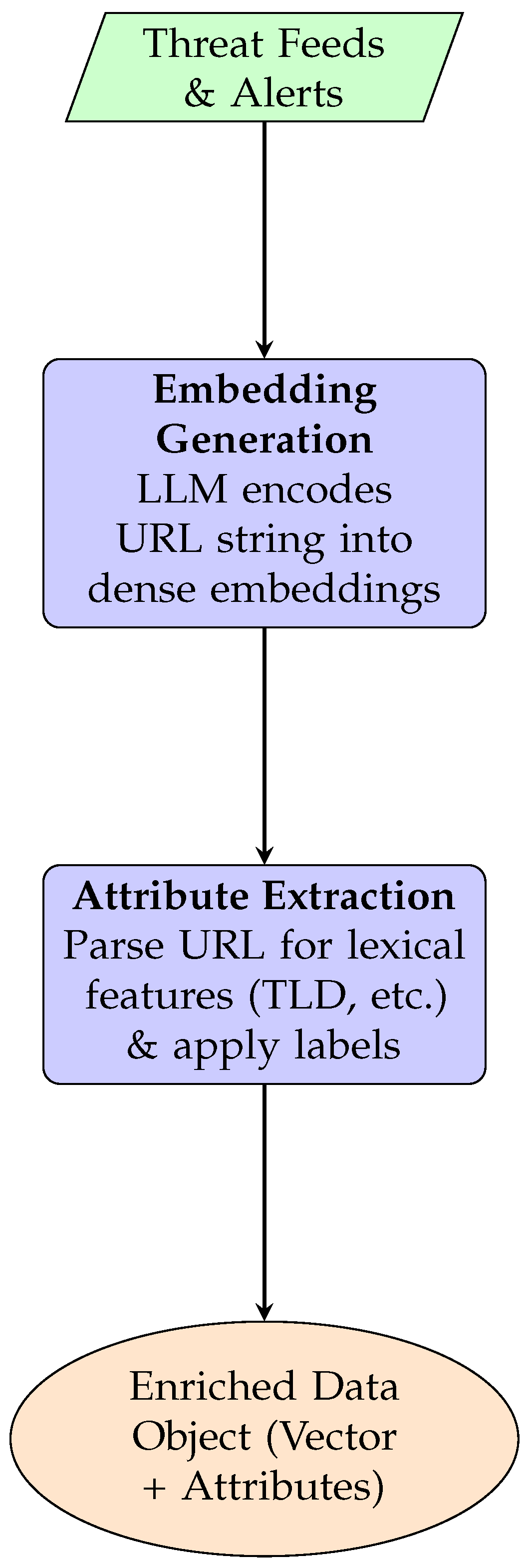

3.2.1. URL Ingestion Pipeline

This module is responsible for transforming raw, unstructured URL strings from various real-time feeds into enriched, indexable data objects. It is a multi-stage data processing workflow designed for high throughput, shown in Figure 3.

Input & Buffering: The pipeline receives raw URL strings from multiple sources. These are placed into a high-performance message queue to handle bursts of incoming data.

Embedding Generation: A pool of worker processes consumes URLs from the queue. Each URL string is fed into a pre-trained LLM, which acts as an encoder and outputs a dense vector embedding.

Attribute Extraction: In parallel, each URL undergoes a feature engineering process to extract a rich set of structured attributes. This includes lexical analysis (parsing the string for TLD, keywords), threat classification (using labels from the dataset), and optional contextual enrichment (WHOIS, ASN lookups).

Output: The final output is a single, structured data object containing both the vector embedding and a key-value map of its attributes. This object is passed to the Dynamic Index Management module.
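The buffering, embedding, and attribute-extraction stages can be sketched as a producer/consumer loop. This sketch makes several loudly stated assumptions: `embed_stub` is a hypothetical stand-in for the pre-trained LLM encoder (it hashes character trigrams and is not a semantic model), threads replace the worker processes described above for simplicity (CPU-bound encoders would normally use processes or a GPU server), and both stages run inside the same worker rather than in parallel.

```python
import hashlib
import queue
import threading
from urllib.parse import urlsplit

import numpy as np

DIM = 64  # placeholder dimensionality for the stub encoder
PHISHING_KEYWORDS = ("login", "verify", "account")


def embed_stub(url: str) -> np.ndarray:
    """Hypothetical stand-in for the LLM encoder: hashed character trigrams, L2-normalized."""
    v = np.zeros(DIM, dtype=np.float32)
    for i in range(len(url) - 2):
        h = int(hashlib.md5(url[i:i + 3].encode()).hexdigest(), 16)
        v[h % DIM] += 1.0
    n = np.linalg.norm(v)
    return v / n if n else v


def extract_attributes(url: str) -> dict:
    host = urlsplit(url if "://" in url else "http://" + url).hostname or ""
    return {"tld": host.rsplit(".", 1)[-1],
            "keywords": [k for k in PHISHING_KEYWORDS if k in url.lower()]}


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    while True:
        url = inbox.get()
        if url is None:  # sentinel: no more work for this worker
            break
        outbox.put({"url": url, "vec": embed_stub(url), "attrs": extract_attributes(url)})


def run_pipeline(urls, num_workers=4):
    inbox, outbox = queue.Queue(maxsize=1024), queue.Queue()  # bounded buffer absorbs bursts
    threads = [threading.Thread(target=worker, args=(inbox, outbox)) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for u in urls:
        inbox.put(u)
    for _ in threads:
        inbox.put(None)  # one sentinel per worker, queued after all URLs
    for t in threads:
        t.join()
    return [outbox.get() for _ in range(outbox.qsize())]


for obj in run_pipeline(["secure-login-microsft.xyz", "example.org/account"], num_workers=2):
    print(obj["url"], obj["attrs"])
```

Each output object pairs an embedding with a key-value attribute map, which is the shape of record the Dynamic Index Management module consumes.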

3.2.2. Dynamic Index Management Module

This module is the core of the system’s storage layer, responsible for maintaining both the on-disk and the in-memory indexes.

Adaptive Maintenance for Skewed Workloads: Malicious campaigns are often “bursty,” creating hotspots. Inspired by Quake, this module actively monitors query patterns to detect such skews.

Hotspot Detection: The system logs traversal paths during queries to identify regions of the graph accessed with unusually high frequency.

Graph Reconfiguration: When a hotspot is detected, a sample of the corresponding vectors is transferred into the in-memory HNSW graph for quick access. This creates more efficient routing paths and alleviates the resource contention that would otherwise degrade a disk-based index.
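Hotspot detection from logged traversal paths can be sketched as a sliding-window counter. The class below is hypothetical: it tracks individual nodes rather than graph regions (regions could be obtained by aggregating node IDs, e.g., by partition), and the window size and threshold are illustrative. The nodes it flags would be the candidates for promotion into the in-memory HNSW graph.

```python
from collections import Counter, deque


class HotspotDetector:
    """Flags graph nodes visited by at least `threshold` (a fraction) of the
    last `window` queries, based on each query's logged traversal path."""

    def __init__(self, window: int, threshold: float):
        self.window = window
        self.threshold = threshold
        self.paths = deque()      # visited-node sets of the most recent queries
        self.counts = Counter()   # node -> number of recent queries that visited it

    def record(self, path):
        visited = set(path)
        self.paths.append(visited)
        self.counts.update(visited)
        if len(self.paths) > self.window:
            self.counts.subtract(self.paths.popleft())  # slide the window forward

    def hot_nodes(self):
        n = len(self.paths)
        if n == 0:
            return set()
        return {node for node, c in self.counts.items() if c / n >= self.threshold}


detector = HotspotDetector(window=4, threshold=0.75)
for path in ([1, 2, 3], [1, 2], [1, 4], [1, 2, 5]):
    detector.record(path)
print(sorted(detector.hot_nodes()))  # [1, 2]
```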

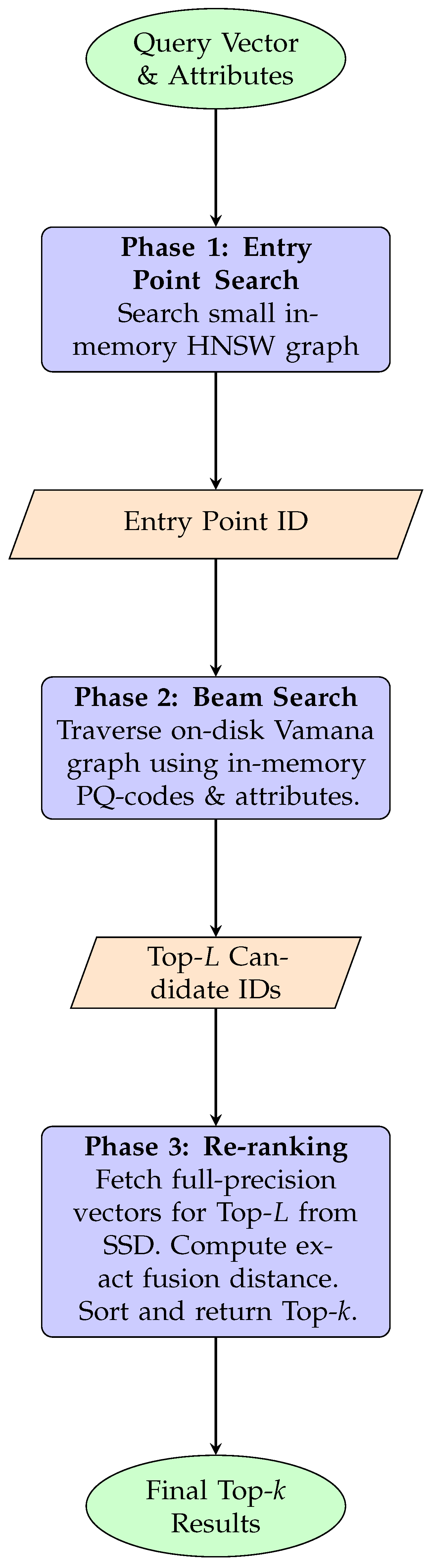

3.2.3. Query Processing Engine

This module executes a user’s hybrid query with maximum efficiency, using a three-phase search pipeline that intelligently minimizes expensive disk I/O.

Phase 1: In-Memory Entry Point Search: To avoid starting a search from a random, distant node, PhishGraph maintains a small, HNSW-like multi-layer graph in memory containing a sparse sample of the full dataset. A fast search on this small graph quickly identifies a high-quality entry point into the massive disk-resident Vamana graph.

Phase 2: Disk-Resident Beam Search: Starting from the entry point, a beam search commences on the main Vamana graph. The traversal is guided by in-memory calculations using cached Product Quantization codes and attributes to compute the approximate Hybrid Fusion Distance. Furthermore, the engine uses a lightweight model to predict the optimal point to stop the search for each individual query, avoiding unnecessary work.

Phase 3: High-Fidelity Re-ranking: The beam search produces a candidate list of the top-L most promising neighbors. To get the final, accurate result, the engine performs a small number of batched reads from the SSD to retrieve the quantized on-disk vectors for only these L candidates. It then calculates the exact Hybrid Fusion Distance for each, sorts them, and returns the final top-k to the user. This ensures high accuracy by concentrating expensive computations on a tiny, highly relevant subset of the data.

The search algorithm for a query object $q$ leverages the full architecture in a three-phase pipeline, as detailed in Figure 4.
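Phases 2 and 3 can be illustrated with a toy in-memory version: a best-first beam search over a proximity graph guided by a cheap approximate distance, followed by re-ranking the top-L candidates with a more accurate distance. This is a sketch under stated assumptions. The graph is a plain adjacency dict, `approx_dist` stands in for the PQ-based Hybrid Fusion Distance, `exact_dist` stands in for the re-ranking distance on the de-quantized vectors, the entry point is assumed to come from Phase 1, and the learned early-termination model is omitted.

```python
import heapq


def beam_search(graph, entry, query, approx_dist, beam_width):
    """Best-first beam search over a proximity graph (Phase 2 stand-in).

    graph: dict mapping node id -> list of neighbor ids
    approx_dist: cheap distance, standing in for the PQ-based Hybrid Fusion Distance
    Returns the ids of the best `beam_width` nodes found (unordered).
    """
    visited = {entry}
    d0 = approx_dist(query, entry)
    frontier = [(d0, entry)]  # min-heap of nodes still to expand
    beam = [(-d0, entry)]     # max-heap (negated distances) of the current best nodes
    while frontier:
        d, node = heapq.heappop(frontier)
        # Stop once the closest unexpanded node is worse than the beam's worst member.
        if len(beam) >= beam_width and d > -beam[0][0]:
            break
        for nb in graph.get(node, []):
            if nb in visited:
                continue
            visited.add(nb)
            dn = approx_dist(query, nb)
            if len(beam) < beam_width or dn < -beam[0][0]:
                heapq.heappush(frontier, (dn, nb))
                heapq.heappush(beam, (-dn, nb))
                if len(beam) > beam_width:
                    heapq.heappop(beam)  # drop the current worst member
    return [node for _, node in beam]


def rerank(candidates, query, exact_dist, k):
    """Phase 3 stand-in: sort the candidates by a more accurate distance, keep top-k."""
    return sorted(candidates, key=lambda c: exact_dist(query, c))[:k]


# Toy usage: points on a line, with rounding playing the role of lossy compression.
points = {i: float(i) for i in range(10)}
graph = {i: [j for j in (i - 1, i + 1, i + 3) if 0 <= j < 10] for i in range(10)}


def approx(q, n):
    return round(abs(q - points[n]))


def exact(q, n):
    return abs(q - points[n])


cands = beam_search(graph, entry=0, query=6.4, approx_dist=approx, beam_width=4)
print(rerank(cands, 6.4, exact, k=2))  # [6, 7]
```

The coarse distance cannot separate nodes 5 and 7 (both round to 1), but the beam keeps both, and the accurate re-ranking step resolves the order. This mirrors the rationale for spending exact computation only on the short candidate list.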

3.3. End-to-End Use Case: Phishing Campaign Attribution

To bridge the gap between PhishGraph’s technical components and its real-world value, this section details a step-by-step operational scenario for a threat analyst.

Detection: An analyst in a Security Operations Center (SOC) receives an alert for a newly detected, unverified URL: secure-login-microsft.xyz.

Ingestion & Query: The URL is fed into the URL Ingestion Pipeline. The system generates its 384-dimensional vector embedding and extracts initial attributes (e.g., TLD=xyz, length=25). The analyst, suspecting phishing, initiates a hybrid query, searching for semantically similar URLs with a hard filter for threat_type=‘phishing’.

Hybrid Search: The Query Processing Engine uses the Hybrid Fusion Distance with a high attribute weight. This is critical: the query will now prioritize other known ‘phishing’ URLs and push down any benign URLs about “Microsoft security” that might be semantically close in the pure vector space.

Actionable Intelligence: The query returns a cluster of 15 URLs. The analyst observes a clear pattern: 12 of these 15 URLs share the same hosting ASN, and 10 have a target_brand=‘Microsoft’ attribute.

Outcome: The analyst can now perform several actions:

Attribute: Instantly attribute the new, unknown URL to a known “Microsoft Phishing Campaign.”

Discover: Identify 5 other malicious URLs from the result set that were not yet in their blocklists, effectively discovering new attack variants.

Enrich & Proact: Block the entire malicious ASN, proactively defending against future URLs from the same attack infrastructure.

This concrete example demonstrates PhishGraph’s practical utility for threat intelligence, moving beyond a simple URL search to a campaign attribution and discovery tool.
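The attribute extraction and the result-set pattern analysis in this walkthrough are simple enough to sketch directly. The helper names, the `asn` field, and the toy result set below are hypothetical (the ASNs come from the range reserved for documentation). The TLD is taken naively as the last DNS label; a production pipeline would more likely consult the Public Suffix List.

```python
from collections import Counter
from urllib.parse import urlsplit


def extract_attributes(url):
    """Naive extraction: TLD = last DNS label, length = characters in the URL string."""
    # urlsplit only recognizes a hostname when a scheme is present, so add one if missing.
    host = urlsplit(url if "://" in url else "http://" + url).hostname or ""
    return {"tld": host.rsplit(".", 1)[-1], "length": len(url)}


def summarize(results, field):
    """Most common value of a metadata field among the results, with its count."""
    top = Counter(r[field] for r in results if field in r).most_common(1)
    return top[0] if top else None


print(extract_attributes("secure-login-microsft.xyz"))  # {'tld': 'xyz', 'length': 25}

# Toy result set: 12 of 15 neighbors share one hosting ASN.
results = [{"asn": "AS64500"}] * 12 + [{"asn": "AS64501"}] * 3
print(summarize(results, "asn"))  # ('AS64500', 12)
```

Summaries of this kind are what turn a raw neighbor list into the campaign-level signal (shared ASN, shared target brand) that the analyst acts on.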

4. Experimental Evaluation

To rigorously validate the design of PhishGraph and quantify the benefits of its adaptive, hybrid architecture, we conducted a series of comprehensive experiments. Our evaluation is designed to answer three key questions. First, can a disk-aware index built on commodity hardware achieve the scale necessary for a billion-point dataset while remaining competitive with purely in-memory solutions on smaller subsets? Second, how does the native handling of attribute constraints via the Hybrid Fusion Distance compare to the standard post-filtering approach? Finally, and most critically, how does PhishGraph’s performance hold up under the realistic, skewed access patterns that characterize real-world cybersecurity workloads, and which of its adaptive components are most responsible for its resilience?

4.1. Experimental Setup

All experiments were conducted on a single machine equipped with an Intel Xeon CPU (16 cores), 40 GB of DDR4 RAM, and a 2 TB NVMe SSD. We used the “Malicious URLs Dataset” from Kaggle (https://www.kaggle.com/datasets/sid321axn/malicious-urls-dataset, accessed on 15 September 2025), which contains approximately 650,000 URLs. Its URL ‘type’ attribute takes four values: ‘phishing’, ‘malware’, ‘defacement’, and ‘benign’.

To evaluate the billion-scale capabilities of our system, we synthesized a 1-billion-point dataset that preserves the distribution of URL types and semantic clusters found in the original Kaggle dataset. This synthesis was performed using a cluster-based oversampling with noise injection method. First, we applied k-means clustering (k = …) to the original ≈650,000 URL embeddings to identify semantic centroids. To synthesize the 1 B points, we sampled from these clusters according to their original class distribution (e.g., ‘phishing’, ‘malware’). For each new point, we took the embedding of a random real point from the chosen cluster and added small-magnitude Gaussian noise (σ = …) to its vector. This method preserves the local topological structures and cluster densities found in the original Kaggle data while scaling the dataset size. The attributes of each synthesized point were inherited from its parent cluster. Each URL was embedded into a 384-dimensional vector using the ‘all-MiniLM-L6-v2’ model [13].
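The oversampling procedure can be sketched as follows. This is an illustration, not the exact pipeline used for the 1 B-point set. It assumes the k-means labels are already computed, picks a cluster with probability proportional to its size (which preserves the cluster and class mix when each cluster carries one attribute set), and uses a hypothetical noise scale `sigma` because the value used in the experiments is not recoverable here.

```python
import random


def synthesize(embeddings, labels, cluster_attrs, n_new, sigma=0.01, seed=0):
    """Cluster-based oversampling with Gaussian noise injection (illustrative sketch).

    embeddings: list of vectors (lists of floats) for the real URLs
    labels: k-means cluster id of each real URL
    cluster_attrs: cluster id -> attribute dict inherited by synthetic points
    sigma: hypothetical standard deviation of the injected noise
    """
    rng = random.Random(seed)
    members = {}
    for idx, c in enumerate(labels):
        members.setdefault(c, []).append(idx)
    clusters = list(members)
    weights = [len(members[c]) for c in clusters]  # sample clusters by original size
    out = []
    for _ in range(n_new):
        c = rng.choices(clusters, weights=weights)[0]
        parent = embeddings[rng.choice(members[c])]       # random real point in cluster
        vec = [x + rng.gauss(0.0, sigma) for x in parent]  # small Gaussian perturbation
        out.append((vec, dict(cluster_attrs[c])))          # attributes from the cluster
    return out


# Toy usage: cluster 1 holds one of the three real points.
emb = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]]
labels = [0, 0, 1]
attrs = {0: {"type": "benign"}, 1: {"type": "phishing"}}
synth = synthesize(emb, labels, attrs, n_new=3000)
share = sum(a["type"] == "phishing" for _, a in synth) / len(synth)
print(share)  # expected to be close to 1/3
```

Because every synthetic point is a perturbed copy of a real one, the approach cannot create genuinely new semantic regions; it scales density, not diversity.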

Table 2 summarizes the resource footprint of each system.

4.1.1. On-Disk Storage Format

We must clarify a critical detail regarding the on-disk footprint in Table 2. The reported size of 498 GB is incompatible with storing 1 billion 384-dimensional float32 vectors, which would require ≈1.54 TB. The vectors are therefore not stored at full precision on disk: to achieve the 498 GB footprint, they were quantized to 8-bit integers (int8) via scalar quantization before being stored on the SSD. This 4× compression reduces the vector footprint to ≈384 GB. The remaining ≈114 GB is consumed by the Vamana graph adjacency lists. The Phase 3 High-Fidelity Re-ranking step therefore reads these int8 vectors, de-quantizes them in memory to float32, and then computes the Hybrid Fusion Distance on the de-quantized vectors.
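The storage arithmetic and the int8 round trip can be checked with a short sketch. The quantizer below is one common form of scalar quantization (a single global value range mapped onto 256 levels); the exact scheme used for the index is not specified here, so the range and the helper names are assumptions.

```python
# Storage arithmetic for 1 B vectors of 384 dimensions.
N, DIM = 1_000_000_000, 384
float32_bytes = N * DIM * 4        # 4 bytes per float32 component
int8_bytes = N * DIM * 1           # 1 byte per int8 component
graph_bytes = 498e9 - int8_bytes   # remainder of the reported 498 GB footprint
print(float32_bytes / 1e12, int8_bytes / 1e9, graph_bytes / 1e9)  # 1.536 384.0 114.0


def quantize(vec, lo, hi):
    """Map floats in [lo, hi] onto the 256 int8 codes -128..127."""
    scale = (hi - lo) / 255.0
    return [max(-128, min(127, round((x - lo) / scale) - 128)) for x in vec]


def dequantize(codes, lo, hi):
    """Recover approximate float values from int8 codes (done in memory before re-ranking)."""
    scale = (hi - lo) / 255.0
    return [(c + 128) * scale + lo for c in codes]


lo, hi = -0.5, 0.5  # assumed global value range of the embedding components
v = [-0.5, -0.1, 0.0, 0.33, 0.5]
codes = quantize(v, lo, hi)
recon = dequantize(codes, lo, hi)
# Reconstruction error is bounded by half a quantization step.
print(max(abs(a - b) for a, b in zip(v, recon)) <= (hi - lo) / 255 / 2 + 1e-9)  # True
```

The half-step error bound is why distances computed on de-quantized int8 vectors remain far closer to the float32 values than the coarser PQ codes used during traversal.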

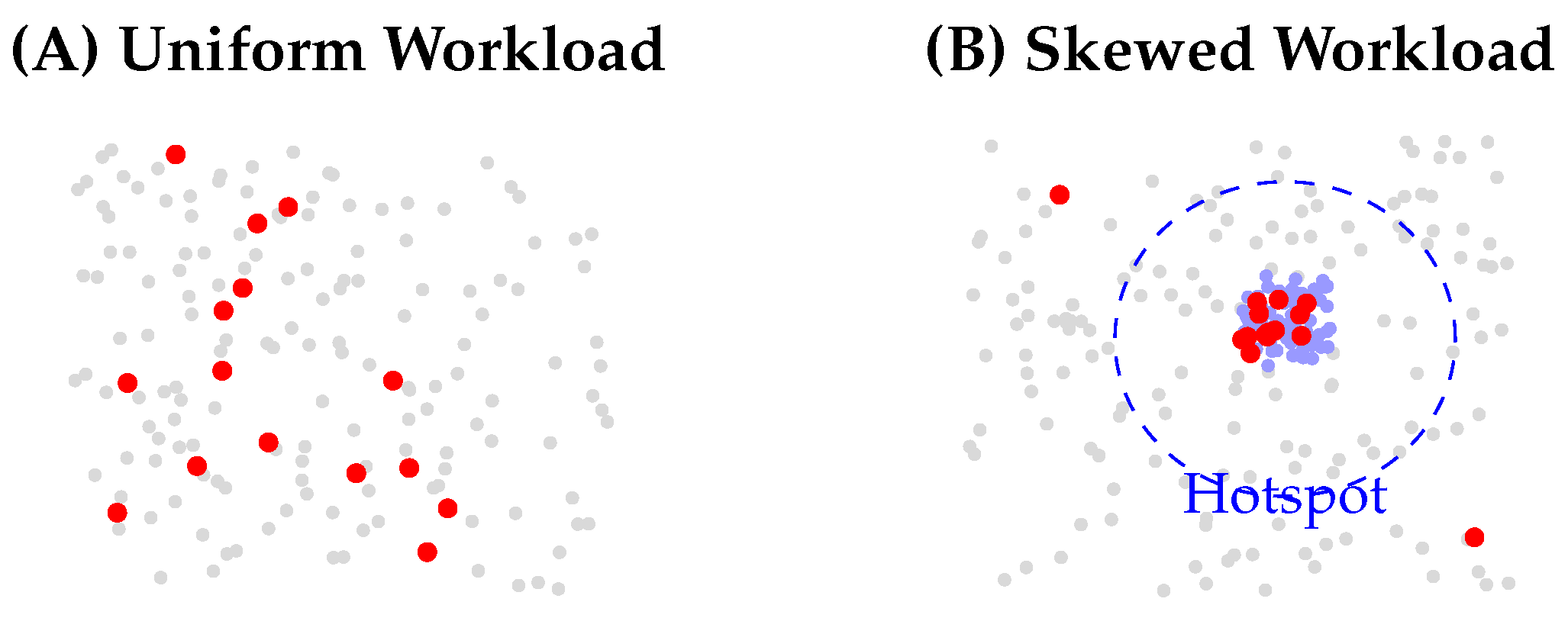

We created two 10,000-query workloads, visualized in Figure 5. All queries were hybrid, requiring both semantic similarity and a filter on the URL ‘type’ attribute.

Uniform Workload: Query URLs were sampled uniformly at random from the entire dataset.

Skewed Workload: 90% of query URLs were sampled from the ‘phishing’ and ‘malware’ categories, which constitute only 20% of the total dataset. This mimics analysts investigating active attack campaigns.
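The two workloads can be generated with a simple two-level sampler. In this sketch, `make_workload` and its arguments are hypothetical. The text does not say whether the remaining 10% of skewed queries is drawn from the other categories only or from the whole dataset; the sketch assumes the former.

```python
import random


def make_workload(ids_by_type, n_queries, skewed, hot_types=("phishing", "malware"),
                  hot_share=0.9, seed=0):
    """Sample query ids uniformly, or with hot_share of them from the hot categories."""
    rng = random.Random(seed)
    all_ids = [i for ids in ids_by_type.values() for i in ids]
    if not skewed:
        return [rng.choice(all_ids) for _ in range(n_queries)]  # uniform over the dataset
    hot = [i for t in hot_types for i in ids_by_type.get(t, [])]
    cold = [i for t, ids in ids_by_type.items() if t not in hot_types for i in ids]
    return [rng.choice(hot) if rng.random() < hot_share else rng.choice(cold)
            for _ in range(n_queries)]


# Toy dataset in which 'phishing' and 'malware' make up 20% of the ids.
ids_by_type = {"phishing": range(0, 10), "malware": range(10, 20),
               "benign": range(20, 80), "defacement": range(80, 100)}
hot_ids = set(range(20))
skewed = make_workload(ids_by_type, 10_000, skewed=True)
uniform = make_workload(ids_by_type, 10_000, skewed=False)
print(sum(q in hot_ids for q in skewed) / len(skewed))    # close to 0.9
print(sum(q in hot_ids for q in uniform) / len(uniform))  # close to 0.2
```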

4.1.2. Ground Truth Establishment

It is critical to define how the ground truth for recall was established, as an exact k-NN search on 1 B points is computationally prohibitive. Our “ground truth” for the 10,000 test queries was therefore not derived from an exact search. Instead, we established a high-recall ‘best-known’ set by running a very slow, deep, high-accuracy ANN search on the final 1 B-point index (e.g., using a beam width of 512 and 10× the standard I/O budget). This static set of high-quality neighbors served as the ground truth against which all faster, production-level query configurations were evaluated. The ‘Target Recall@10 of 0.95’ reported in Table 3 is the operational target to which we tuned our fast search configuration, measured against this pre-computed ground-truth set.

4.1.3. Performance Measurement Methodology

All performance benchmarks were run after a 10-minute warm-up period, during which the system served a random query load to populate all in-memory caches (e.g., PQ codes) and reach a steady state. QPS and P99 latency were measured using a client-side benchmarking tool. We simulated an online serving scenario by using a concurrency of 128 parallel clients, with each client sending queries one at a time (a batch size of 1). This measures the true single-query round-trip latency under high load. Tests were run on a ‘quiet’ system with no other significant I/O or CPU load, and no specific thread pinning or NUMA-aware policies were used. The global attribute weight was selected via a grid search on a 10% held-out validation query set. The value was tuned to find the optimal balance that achieved our target Recall@10 (0.95) with the lowest possible P99 latency.
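The weight-selection rule described above (meet the Recall@10 target, then minimize P99 latency) amounts to a constrained grid search. In this sketch, `evaluate` is a hypothetical callback that would run the validation queries for one candidate weight and return `(recall_at_10, p99_ms)`. The toy evaluator in the usage example is an invented analytic function used only to exercise the code; it is not measured data.

```python
def select_weight(grid, evaluate, target_recall=0.95):
    """Return the weight with the lowest P99 among those meeting the recall target."""
    feasible = []
    for w in grid:
        recall, p99_ms = evaluate(w)
        if recall >= target_recall:
            feasible.append((p99_ms, w))
    if not feasible:
        return None  # no weight reaches the target; widen the grid or the search budget
    return min(feasible)[1]


def toy_evaluate(w):
    """Invented stand-in: recall grows with w, latency is lowest near w = 1.5."""
    return 0.90 + 0.04 * w, 9.0 + abs(w - 1.5)


grid = [0.5, 1.0, 1.5, 2.0, 2.5]
print(select_weight(grid, toy_evaluate))  # 1.5
```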

4.2. Baselines

We compare PhishGraph against two strong baselines:

DiskANN+PF: A standard Vamana graph index [1]. For our hybrid queries, this baseline performs a vector search and then post-filters the results.

HNSW (In-Memory): An implementation of the Hierarchical Navigable Small World graph algorithm [7]. As a purely in-memory method, HNSW serves as a performance ceiling. We evaluate it on a 50 M-point subset, the largest that fits our 40 GB RAM limit.

4.3. Performance Metrics

When evaluating a high-performance search system like PhishGraph, it is crucial to measure its performance from multiple angles. The metrics below (QPS, P99 latency, Recall@10, Precision@10, and NDCG@10) each capture a different but important aspect of system behavior:

Throughput (Queries Per Second, QPS): This metric measures the capacity or efficiency of the system. It answers the question: “How many queries can the system handle in one second?” A higher QPS is better, indicating that the system can serve more users or process more data in the same amount of time. It is a measure of the system’s overall processing power.

P99 Latency (ms): This metric measures the user experience and predictability of the system. P99 latency is the “99th percentile latency,” which means that 99% of all queries completed in this time or less, while only 1% took longer. We use P99 instead of average latency because averages can hide serious problems. A system might have a low average latency but still have a few queries that take an unacceptably long time, leading to a poor user experience for those unlucky users. A low P99 latency is crucial for real-time applications because it provides a strong guarantee that almost all users will receive a fast response.

Recall@10: This metric measures the accuracy or quality of the search results. In Approximate Nearest Neighbor (ANN) search, we trade some accuracy for a massive gain in speed. Recall@10 answers the question: “What fraction of the true top-10 nearest neighbors appear among the 10 results returned by the system, averaged over all queries?” A Recall@10 of 0.95 means that, on average, the system found 9.5 of the 10 ground-truth best matches. A higher recall is better, indicating that the approximate search results are very close to what an exact, but much slower, search would have found.

Precision@10: This metric complements recall by measuring accuracy. It answers the question: “Of the 10 results returned by the system, what fraction were actually relevant (i.e., part of the true top-10 ground-truth set)?” A high precision is important for not overwhelming an analyst with false positives.

NDCG@10 (Normalized Discounted Cumulative Gain): This metric evaluates the quality of the ranking. It not only checks if the correct items are returned (like recall) but also gives a higher score if the most relevant items are placed at the top of the list (positions 1, 2, 3…) rather than at the bottom (positions 8, 9, 10). It is crucial for user-facing applications where the top-most results are the most visible.
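The per-query metrics can be computed as follows (in practice they are averaged over all queries), together with a nearest-rank P99. This sketch assumes binary relevance against the best-known ground-truth set for NDCG@10; graded relevance is also common. Nearest-rank is one of several percentile conventions, and benchmarking tools may interpolate instead.

```python
import math


def recall_at_k(returned, truth, k=10):
    """Share of the k ground-truth neighbors found among the top-k returned ids."""
    return len(set(returned[:k]) & set(truth[:k])) / k


def precision_at_k(returned, truth, k=10):
    """Share of the returned top-k ids that belong to the ground-truth top-k."""
    top = returned[:k]
    return len(set(top) & set(truth[:k])) / len(top) if top else 0.0


def ndcg_at_k(returned, truth, k=10):
    """Binary-relevance NDCG: hits near the top of the list count more."""
    rel = set(truth[:k])
    dcg = sum(1.0 / math.log2(i + 2) for i, r in enumerate(returned[:k]) if r in rel)
    ideal = sum(1.0 / math.log2(i + 2) for i in range(min(k, len(rel))))
    return dcg / ideal if ideal else 0.0


def p99(latencies_ms):
    """Nearest-rank 99th percentile latency."""
    ordered = sorted(latencies_ms)
    return ordered[math.ceil(0.99 * len(ordered)) - 1]


truth = list(range(10))
returned = list(range(9)) + [42]  # one miss, placed last
swapped = [42] + list(range(9))   # same hits, but the miss ranked first
print(recall_at_k(returned, truth), precision_at_k(returned, truth))  # 0.9 0.9
print(ndcg_at_k(swapped, truth) < ndcg_at_k(returned, truth))         # True
print(p99(range(1, 101)))                                              # 99
```

When a system returns exactly k results, Precision@k and Recall@k coincide under these definitions; they diverge only when fewer than k results come back, for example after aggressive post-filtering. NDCG@10, by contrast, separates the two orderings above even though their hit sets are identical.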

4.4. Query Performance Analysis

We measured the throughput (Queries Per Second, QPS) and P99 latency of all systems, targeting a Recall@10 of 0.95. The core results, presented in Table 3, demonstrate the performance and resilience of the PhishGraph architecture, particularly under challenging, real-world conditions. On the Uniform Workload, which simulates a broad, exploratory search pattern, PhishGraph outperforms the DiskANN+PF system with a 15% increase in QPS and a corresponding reduction in P99 latency. We attribute this gain to the efficiency of the hybrid fusion distance metric: by integrating the ‘type’ attribute check directly into the graph traversal, PhishGraph can prune irrelevant candidates earlier and more effectively than the baseline’s post-filtering approach, resulting in a more streamlined search path with fewer disk I/Os.
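The difference between post-filtering and attribute-aware ranking can be seen on a toy candidate list with a fixed candidate budget, which mimics a bounded search. The fused distance here (vector distance plus `weight` times an attribute-mismatch indicator) is an assumed simplified form for illustration only; the Hybrid Fusion Distance used by PhishGraph may be defined differently. All names are hypothetical.

```python
def post_filter(cands, target, k, budget):
    """Post-filtering: keep the `budget` nearest by vector distance, then filter."""
    nearest = sorted(cands, key=lambda c: c["dist"])[:budget]
    return [c["id"] for c in nearest if c["type"] == target][:k]


def fused_search(cands, target, k, budget, weight):
    """Rank by an assumed fused distance so attribute mismatches are penalized."""
    def fused(c):
        # Assumed form: vector distance + weight * [attribute mismatch].
        return c["dist"] + weight * (c["type"] != target)
    nearest = sorted(cands, key=fused)[:budget]
    return [c["id"] for c in nearest][:k]


# Toy candidates: four benign URLs are closer in vector space than four phishing URLs.
cands = [{"id": i, "dist": i / 10, "type": "benign" if i <= 4 else "phishing"}
         for i in range(1, 9)]
print(post_filter(cands, "phishing", k=3, budget=4))               # []
print(fused_search(cands, "phishing", k=3, budget=4, weight=1.0))  # [5, 6, 7]
```

With the same budget, post-filtering spends every slot on semantically close but non-matching URLs and returns nothing, whereas the fused ranking spends the budget on matching candidates. In a graph index the same effect shows up as fewer wasted expansions and disk reads.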

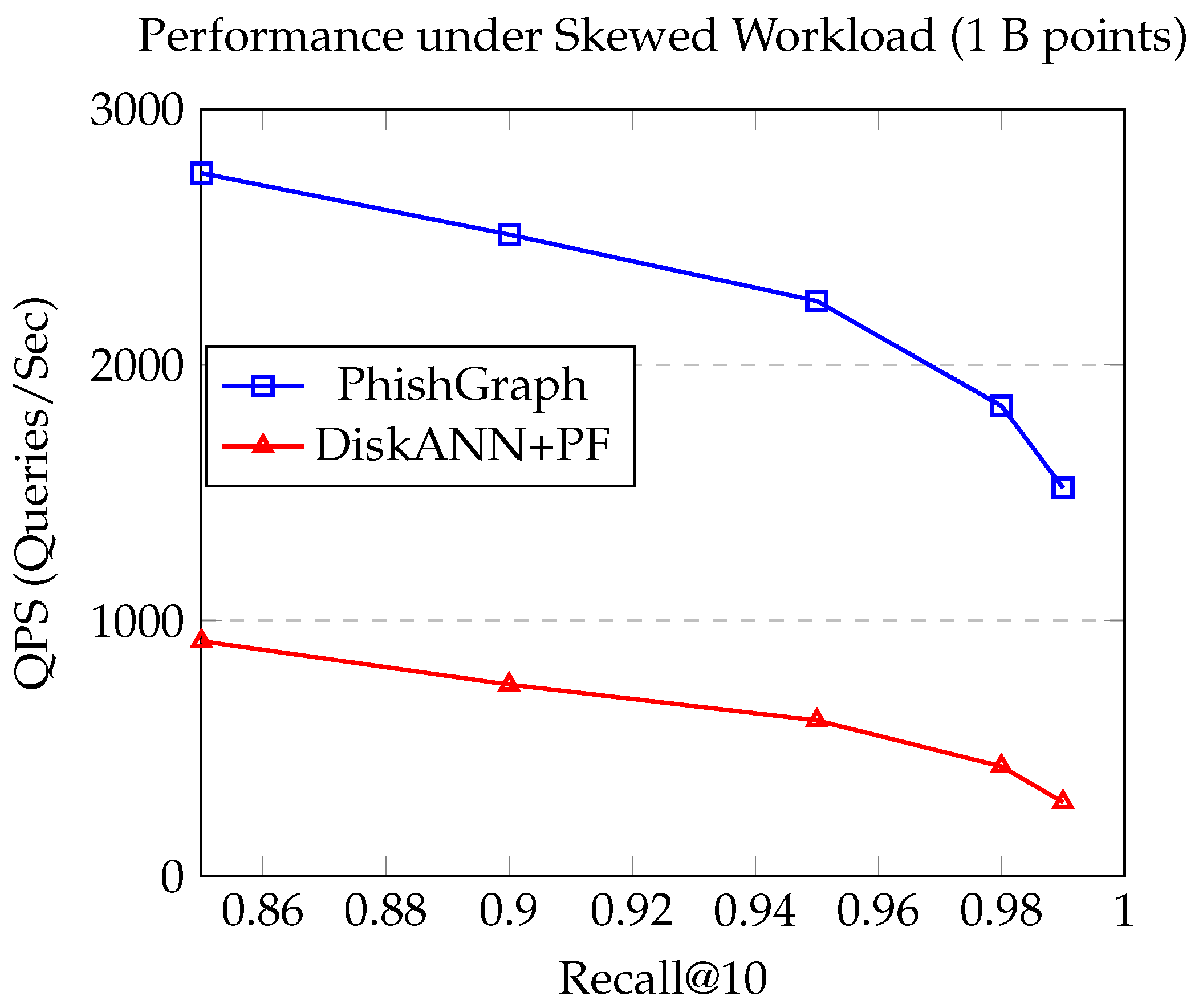

Figure 6 illustrates the performance trade-off under the challenging skewed workload. This test, which mimics an analyst’s focused investigation into an active threat campaign, causes a catastrophic performance collapse for the static baseline system. The DiskANN+PF architecture, unable to adapt to the concentrated query pressure on a small subset of the index, suffers a massive 72% drop in throughput as its P99 latency more than triples. This is a classic symptom of resource contention in a non-adaptive system. In stark contrast, PhishGraph’s performance remains remarkably stable and robust, with its QPS dropping by less than 10%. This resilience is not accidental; it is the direct result of the synergy between its adaptive components.

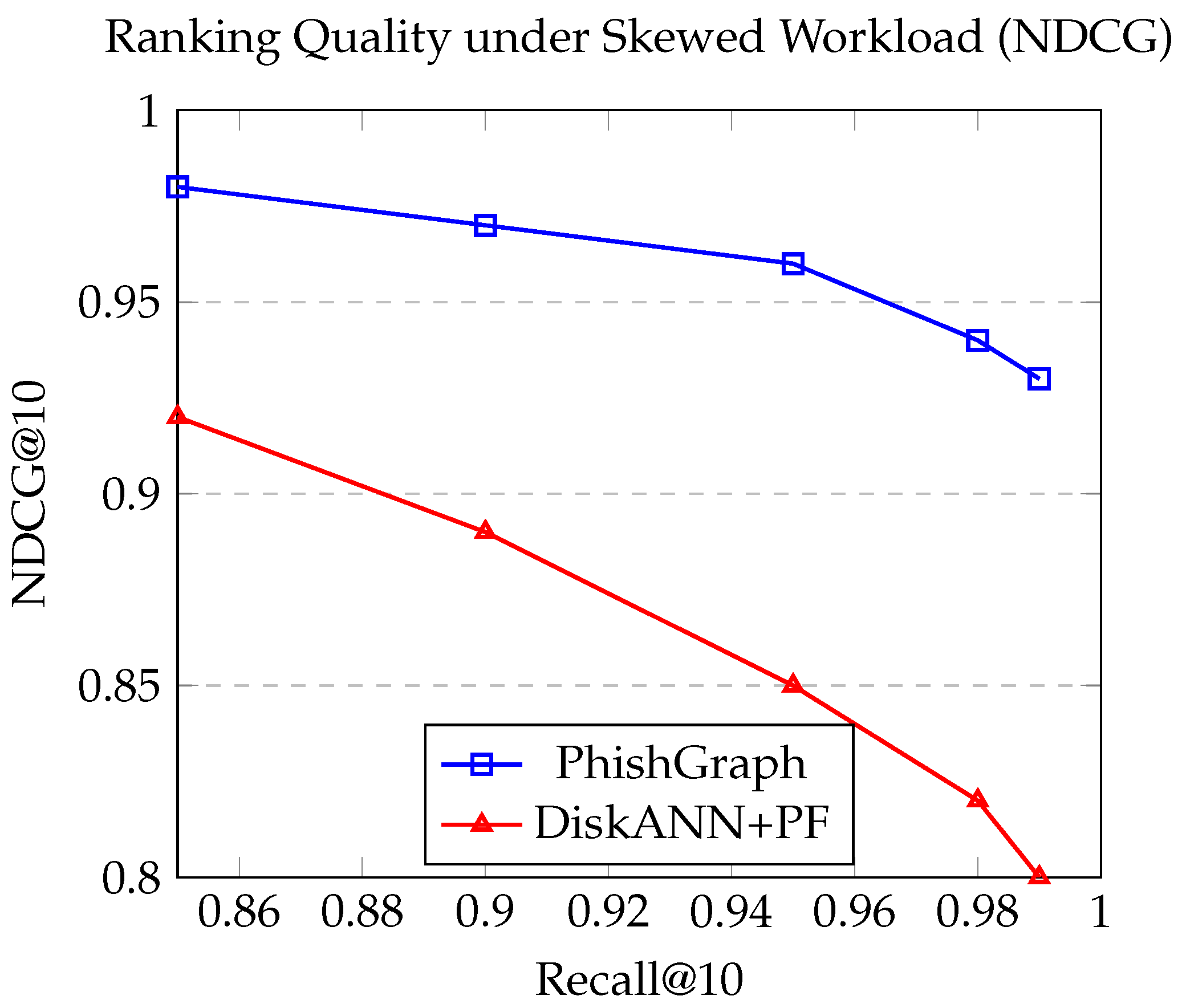

To provide a more comprehensive assessment of retrieval quality, we also evaluated the Normalized Discounted Cumulative Gain (NDCG@10). This metric is critical as it measures the quality of the ranking, not just the presence of correct items. As shown in Figure 7, PhishGraph not only maintains higher throughput but also delivers a superior ranking quality, with its NDCG@10 score remaining stable under the skewed workload. In contrast, the DiskANN+PF baseline’s ranking quality degrades significantly, as the post-filtering approach fails to effectively rank the correct items at the top when the initial vector search results are polluted with irrelevant (but semantically close) neighbors.

4.5. Streaming Update Performance

A key claim of PhishGraph is its ability to handle the high velocity of streaming data, a feature inspired by systems like FreshDiskANN and IP-DiskANN [2,3]. To quantify this, we conducted an additional experiment to measure update performance. We subjected the 1 B-point PhishGraph index to a stable query load (1000 QPS) while simultaneously ingesting a stream of new URL embeddings. The results are summarized in Table 4.

The results demonstrate the effectiveness of the dual-mode update mechanism. The system can ingest over 8500 new URLs per second with minimal impact on read query latency. The P99 insertion latency of ~15 ms confirms that new threat data becomes queryable in near real time, validating our system’s feasibility for high-velocity cybersecurity environments.
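One general pattern by which new embeddings can become queryable before they reach the disk-resident index is a two-tier design in the style of FreshDiskANN: inserts land in a small in-memory buffer that is searched alongside the main index and periodically merged into it. This sketch illustrates that general pattern under our own assumptions and is not PhishGraph's dual-mode mechanism. The class name and `merge_threshold` are hypothetical, and brute-force search stands in for both tiers.

```python
import heapq
import math


class TwoTierIndex:
    """Hypothetical fresh-buffer + main-index design (brute force for illustration)."""

    def __init__(self, merge_threshold=1000):
        self.main = {}   # id -> vector (stand-in for the disk-resident graph)
        self.fresh = {}  # id -> vector (recent inserts, searchable immediately)
        self.merge_threshold = merge_threshold

    def insert(self, vid, vec):
        self.fresh[vid] = vec
        if len(self.fresh) >= self.merge_threshold:
            self.merge()

    def merge(self):
        # A real system would run this as a background batch merge into the on-disk index.
        self.main.update(self.fresh)
        self.fresh.clear()

    def search(self, query, k):
        # Query both tiers and combine the results by Euclidean distance.
        items = list(self.main.items()) + list(self.fresh.items())
        best = heapq.nsmallest(k, items, key=lambda kv: math.dist(query, kv[1]))
        return [vid for vid, _ in best]


idx = TwoTierIndex(merge_threshold=3)
idx.insert("a", (0.0, 0.0))
idx.insert("b", (1.0, 1.0))
print(idx.search((0.9, 0.9), k=1))   # ['b'], queryable before any merge
print(len(idx.main), len(idx.fresh))  # 0 2
idx.insert("c", (5.0, 5.0))           # reaching the threshold triggers a merge
print(len(idx.main), len(idx.fresh))  # 3 0
```

The design keeps insert latency bounded by the cost of an in-memory write, while the expensive disk-side restructuring is amortized over batches.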

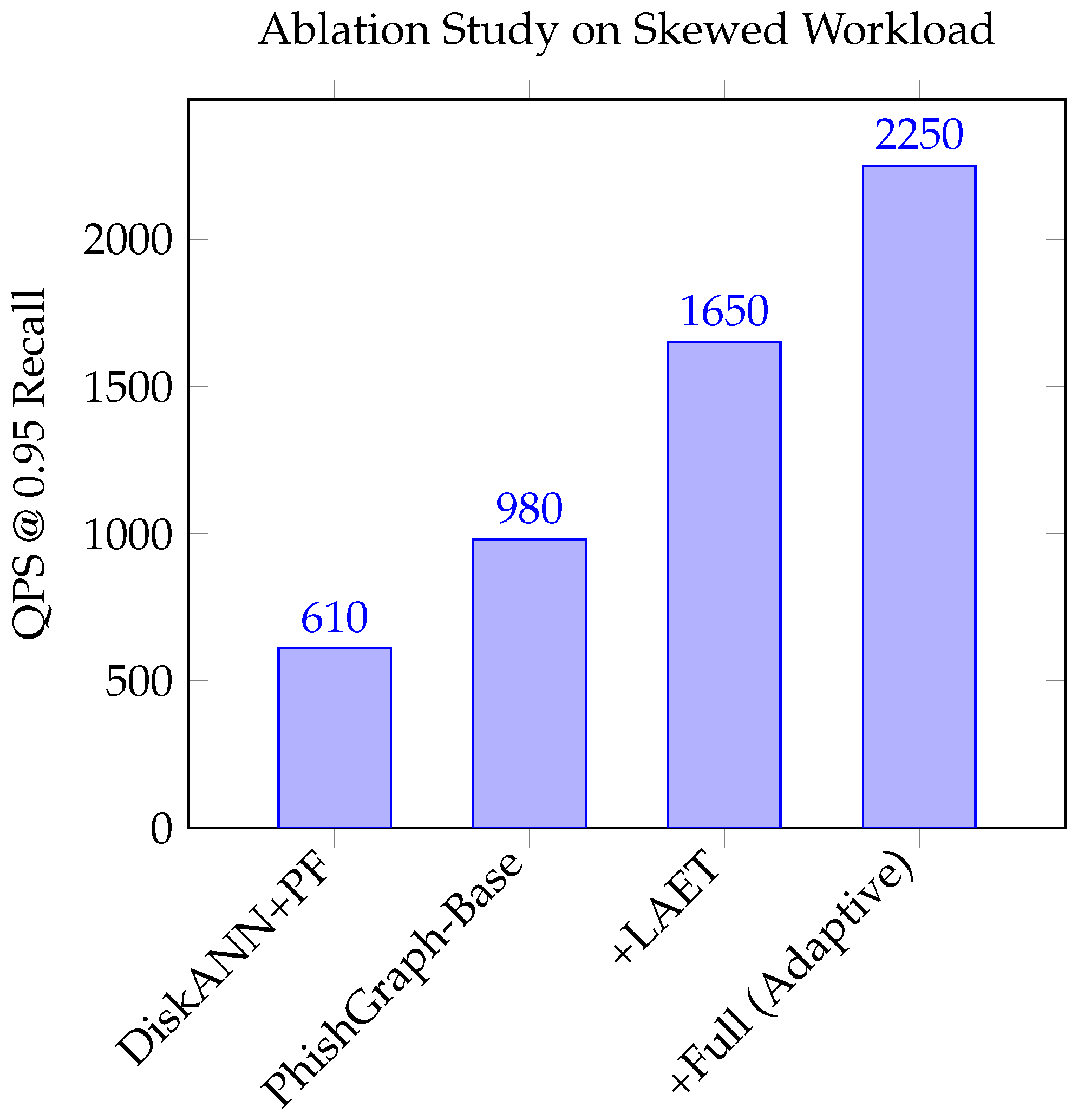

4.6. Ablation Study

To isolate the contribution of PhishGraph’s specific optimizations, we conducted an ablation study on the skewed workload. We tested three configurations, with results presented in Figure 8. The learned early termination provides a significant boost by preventing “easy” queries within the hotspot from performing unnecessary work. Crucially, the adaptive maintenance policy adds a further, critical benefit by physically reconfiguring the graph structure in the contended “hotspot,” creating more efficient search paths and alleviating the I/O bottleneck that cripples the static baseline. The performance trade-off visualized in Figure 6 further reinforces this conclusion. Across all recall targets, from 0.85 to 0.99, PhishGraph maintains a significant and widening performance gap over the baseline, indicating that its architecture is not only faster but also better suited for the dynamic, adversarial nature of cybersecurity workloads.

4.7. Discussion of the Results

Our experiments validate the architectural choices of PhishGraph. The HNSW baseline confirms that in-memory graphs are extremely fast, but as shown in Table 2, they are infeasible for the billion-point scale on commodity hardware, necessitating a disk-aware approach. On the Uniform Workload, PhishGraph achieves a 15% QPS improvement over the strong DiskANN+PF baseline. The ablation study (Figure 8) shows that the base version of PhishGraph, using only the hybrid metric, is already faster than the baseline, confirming that joint pruning is more efficient than post-filtering.

The performance difference becomes dramatic on the Skewed Workload. The baseline’s QPS plummets by over 70%, a collapse also visualized in Figure 6. In contrast, PhishGraph’s performance remains remarkably stable. The ablation study pinpoints the reasons for this resilience. First, adding query-aware termination (LAET) provides a 1.7× performance boost by avoiding unnecessary work on easier queries within the hotspot, consistent with findings in [11]. The final and most critical improvement comes from the adaptive maintenance policy, which delivers another 1.36× boost. By detecting the query hotspot and dynamically increasing graph connectivity in that region, the system physically alleviates the resource contention that cripples the static baseline, demonstrating the principles of adaptive indexing [4] in a graph-based context.
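Assuming each ablation gain is measured relative to the preceding configuration, as the phrase “another 1.36× boost” suggests, the two factors compound multiplicatively. Writing $S$ for a QPS speedup factor, the full system's gain over the hybrid-metric-only configuration on the skewed workload is then:

$$
S_{\text{full}} = S_{\text{LAET}} \times S_{\text{adaptive}} = 1.7 \times 1.36 = 2.312 \approx 2.31
$$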

We hypothesize that the baseline’s performance collapse of over 70% is a classic symptom of I/O-bound resource contention. To verify this, we conducted an additional experiment to measure system resource utilization during the skewed workload benchmark. The results, presented in Table 5, support this hypothesis. Under the skewed load, the DiskANN+PF baseline’s CPUs were idle for 68.2% of the time, waiting for I/O (I/O Wait), with active processing (User+Sys) at only 22.5%. This is a strong indication of an I/O bottleneck: the concentration of queries in a ‘hotspot’ causes all concurrent threads to compete for the same few disk blocks, saturating the NVMe drive and leaving the CPUs starved for data.

In stark contrast, the full PhishGraph system shows an I/O Wait time of only 3.5%, with active CPU processing at 89.1%. This demonstrates that PhishGraph’s adaptive maintenance policy successfully mitigates the I/O bottleneck by caching the most contended-for graph nodes in the in-memory HNSW sample. The bottleneck is effectively shifted from the slow disk to the fast CPU, allowing the system to maintain high throughput. While a deeper, future profiling study using hardware counters to measure cache misses would be required to fully confirm this, this resource utilization data provides strong evidence for the architectural benefits of our adaptive design.

4.8. Discussion on Generalizability and Limitations

While the experimental results are promising, we acknowledge the potential for distribution bias from a single public Kaggle dataset. We argue, however, that the PhishGraph architecture is largely agnostic to the specific data source. Its effectiveness is contingent on two factors:

Embedding Quality: The system’s ability to find “semantically similar” threats relies on the quality of the pre-trained language-model encoder. We expect its performance to transfer to proprietary SOC feeds, provided the same encoder is used.

Metadata Richness: The power of the Hybrid Fusion Distance depends directly on the quality of the extracted attributes.

Furthermore, the core architecture is highly generalizable to other security domains beyond URL analysis. For example:

Malware Analysis: One could search for similar malware samples based on vectors from their function call graphs, using attributes like malware_family, obfuscator_type, or target_os.

Network Threat Hunting: An analyst could search for similar network flow vectors (representing user or device behavior) with attributes like protocol, port, ASN, or geo_location.

The central contribution is the design of a scalable, disk-aware, and adaptive hybrid ANN index, which is applicable to any domain characterized by large-scale vector data, rich metadata, and skewed query patterns.

4.9. Discussion on Operational and Societal Impact

Deploying an ANN system in a live security environment carries significant operational implications.

Cost of Errors: In this domain, the cost of errors is asymmetric. A false positive (a benign URL incorrectly flagged) is an inconvenience, often leading to analyst overhead. A false negative (a malicious URL missed) is a critical security breach. Our system, with its focus on tuning for high recall, is explicitly designed to minimize these high-cost false negatives.

Dataset Bias: The system’s effectiveness is tied to the data it indexes. Our index, built from the Kaggle dataset, is likely to be better at detecting attacks against common, global brands (e.g., ‘PayPal’, ‘Microsoft’) than against less-common regional targets. A production deployment would require continuous updates with an organization’s specific, local threat data to avoid this bias.

Operational SLOs: Security Operations Centers (SOCs) operate under strict Service Level Objectives (SLOs). For a real-time, at-the-wire filtering application, a decision must often be made in milliseconds. Our measured P99 latency of ≈10.5 ms under a high-load, skewed workload (Table 3) suggests that PhishGraph can meet the stringent requirements of such mission-critical, low-latency applications.

5. Conclusions and Future Work

This paper introduced PhishGraph, a holistic, disk-aware vector search system specifically engineered for the unique demands of billion-scale malicious URL analysis. We began by identifying the four primary challenges that render generic ANN solutions inadequate in the cybersecurity domain: the immense scale of historical data, the high velocity of new threats, the complexity of multi-faceted queries, and the skewed, adversarial nature of workloads. PhishGraph addresses these challenges by extending the robust foundation of the DiskANN system with a suite of synergistic, domain-specific optimizations. Through a detailed experimental evaluation on a synthetic 1-billion-point dataset, we demonstrated the significant, compounding benefits of our approach. The use of a Hybrid Fusion Distance metric for native attribute handling provided a 15% performance uplift over standard post-filtering on uniform workloads. More critically, the addition of learned query termination and adaptive graph maintenance policies allowed PhishGraph to maintain remarkably stable, low-latency performance under a realistic skewed workload, while the baseline system’s performance collapsed by over 70%. Our results show that by integrating adaptivity and native hybrid query capabilities into a disk-aware architecture, it is possible to build a highly performant and cost-effective system for the next generation of scalable threat intelligence platforms.

While PhishGraph provides a robust solution, several promising avenues for future research remain:

Advanced Attribute Types and Dynamic Weighting: Our current implementation of the Hybrid Fusion Distance primarily handles categorical attributes. Future work could extend this to more complex types, such as temporal or geographical data, by incorporating more nuanced distance functions. Moreover, the attribute weight is currently a static hyperparameter; a more advanced system could learn to adjust it dynamically based on the query context or user feedback, allowing the system to shift fluidly between semantic exploration and strict filtering. Finally, our evaluation focused on a single attribute filter; future work should test performance under highly selective, multi-constraint queries.

Vector Compression for Hybrid Data: PhishGraph uses Product Quantization for the vector component, but the attributes are currently stored uncompressed in memory. Developing a joint compression scheme that can efficiently encode both the vector and its associated attributes into a single, compact representation could further reduce the system’s memory footprint, enabling it to scale to even larger datasets on the same hardware.

Distributed and Federated Deployments: While PhishGraph is designed for a single powerful node, many real-world security operations are distributed. Future research could explore extending PhishGraph into a distributed system, investigating strategies for intelligent data partitioning and federated query execution that are aware of the hybrid data and skewed workloads. This could also open up possibilities for privacy-preserving federated learning, where multiple organizations could collaboratively build a powerful threat intelligence model without sharing their raw, sensitive URL data.