From Claims to Stance: Zero-Shot Detection with Pragmatic-Aware Multi-Agent Reasoning

Abstract

1. Introduction

- We propose PAMR, a pragmatic-aware multi-agent framework for zero-shot stance detection that factors inference into claim normalization, probabilistic NLI, and robustness probing, enabling interpretable and modular reasoning.

- We introduce a counterfactual and view-switching probe that quantifies the stability of a stance prediction under meaning-preserving edits and perspective shifts. This signal directly informs a stability-aware fusion rule that curbs over-confident polarity errors and disentangles stance from sentiment.

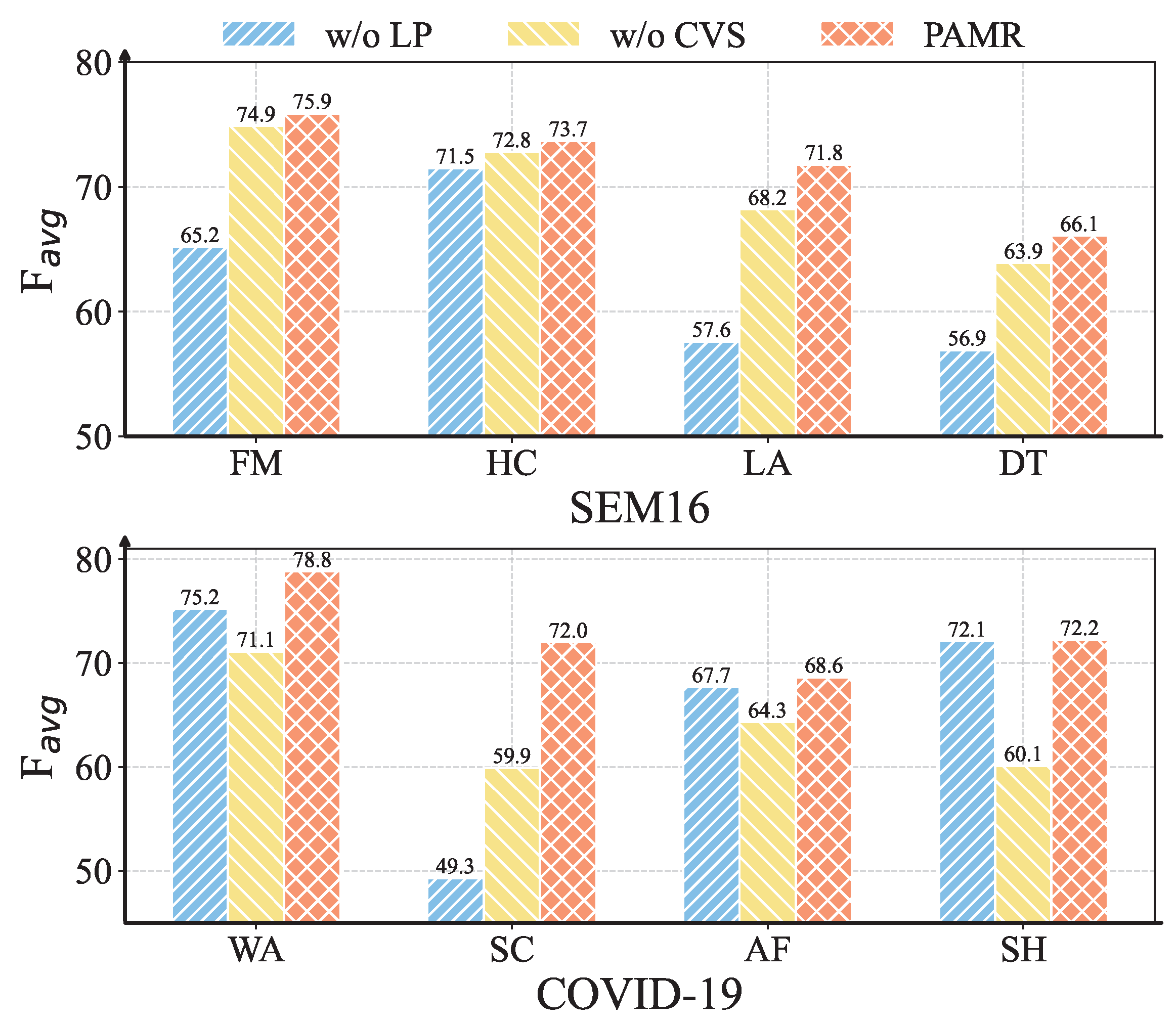

- We conduct experiments on two benchmark datasets, SEM16 and COVID-19-Stance, showing that PAMR matches or surpasses strong zero-shot baselines. Ablations quantify the contributions of pragmatic cues and robustness probing, and further analyses show that PAMR's intermediate artifacts (canonical claims, pragmatic tags, NLI scores, and stability scores) support fine-grained audits.

2. Related Works

2.1. Traditional Stance Detection Methods

2.2. Zero-Shot Stance Detection

2.3. LLM-Based Stance Detection Methods

2.4. Summary and Research Gap

3. Methods

3.1. Task Definition

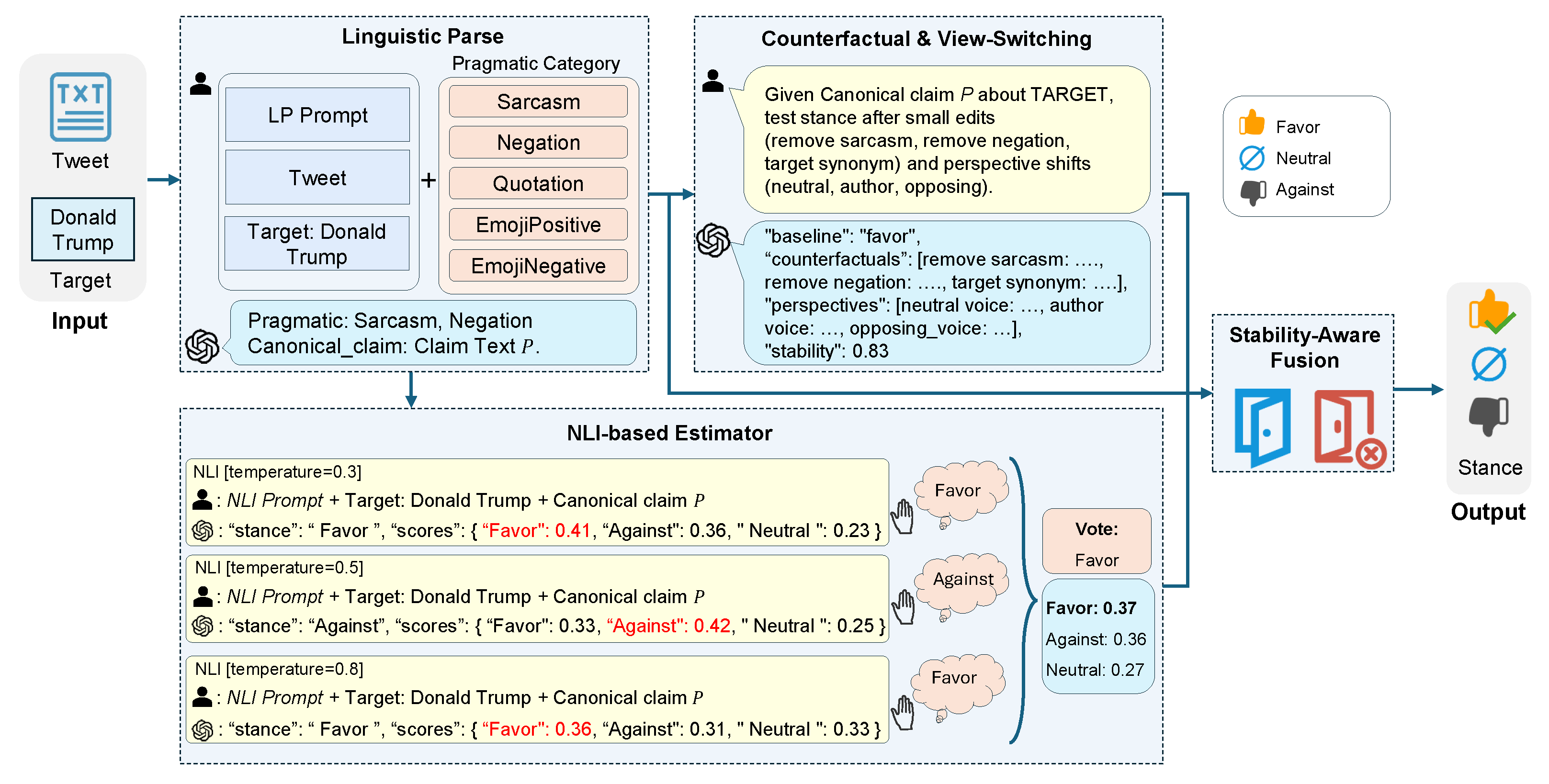
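
For reference, the task can be stated compactly as follows. This is a sketch that reuses the post x and target t of Section 3.2 and the three-way label set of Section 4.1; the symbols f, 𝒴 and 𝒟_t are introduced here for illustration and may differ from the notation used elsewhere in the paper.

```latex
\hat{y} = f(x, t), \qquad
\hat{y} \in \mathcal{Y} = \{\textit{favor},\ \textit{against},\ \textit{neutral}\}, \qquad
\mathcal{D}_t = \varnothing
```

Here 𝒟_t denotes the set of labeled examples available for target t; the zero-shot setting requires this set to be empty, so f must infer the stance toward t without any target-specific supervision.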

3.2. PAMR Overview

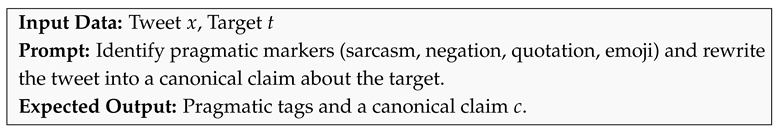

- (1) Linguistic Parse Agent: Takes the raw social media post x and target t as input, and outputs a target-linked canonical claim together with pragmatic markers such as sarcasm, hedging, or negation. This grounds subsequent reasoning in normalized propositions rather than noisy surface forms.

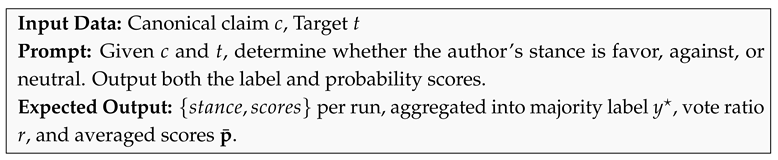

- (2) NLI Estimation Agent: Reformulates stance classification as a natural language inference (NLI) task. Given the canonical claim and target, it runs multiple inference passes with diverse prompts and produces both a probability distribution over stance labels and a consensus vote count, which mitigates the randomness of any single pass.

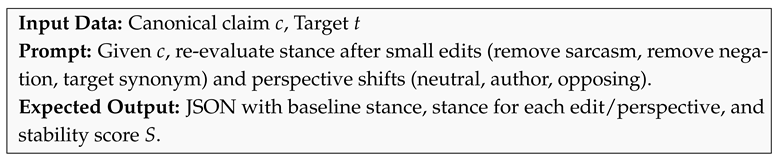

- (3) Counterfactual and View-Switching Agent: Evaluates the stability of the predicted stance by applying minimal, meaning-preserving perturbations (e.g., changes of tone or perspective). It outputs a scalar stability score reflecting how robust the original decision is under such linguistic variation.

- (4) Stability-Aware Fusion Agent: Receives all upstream signals (canonical claim, pragmatic tags, NLI probabilities and consensus count, and stability score) and integrates them under a conservative policy: it falls back to “neutral” when confidence is low or predictions are unstable, which guards against over-confident polarity errors.

3.3. Linguistic Parse
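
A minimal sketch of the Linguistic Parse Agent's output contract is given below, assuming the agent is prompted to reply in JSON with a `claim` field and a `markers` list. The JSON schema, the `ParseResult` type and the `parse_agent_output` helper are hypothetical; the marker vocabulary combines the examples named in Section 3.2 with the Quotation tag seen in the case study.

```python
import json
from dataclasses import dataclass, field

# Hypothetical marker vocabulary: the examples named for the parse agent
# plus the "Quotation" tag that appears in the case study.
ALLOWED_MARKERS = {"sarcasm", "hedging", "negation", "quotation"}


@dataclass
class ParseResult:
    """Hypothetical container for the Linguistic Parse Agent's output."""

    target: str
    canonical_claim: str
    pragmatic_markers: list[str] = field(default_factory=list)


def parse_agent_output(raw: str, target: str) -> ParseResult:
    """Validate a JSON reply of the assumed form {"claim": ..., "markers": [...]}.

    Raises ValueError if the claim is missing or empty, so downstream agents
    never reason over an empty proposition.
    """
    data = json.loads(raw)
    claim = str(data.get("claim", "")).strip()
    if not claim:
        raise ValueError("parse agent returned an empty claim")
    # Lower-case markers, drop unknown ones, and de-duplicate while keeping order.
    markers = [str(m).lower() for m in data.get("markers", [])]
    kept = list(dict.fromkeys(m for m in markers if m in ALLOWED_MARKERS))
    return ParseResult(target=target, canonical_claim=claim, pragmatic_markers=kept)
```

Rejecting empty claims early is one way to keep a malformed LLM reply from silently flowing into the NLI stage.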

3.4. NLI-Based Estimator
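
The NLI Estimation Agent (Section 3.2) runs several prompted inference passes and reports a label distribution plus a consensus count. The Python sketch below shows one plausible aggregation: it averages the per-pass distributions and counts how many passes pick each argmax label. The function name `aggregate_passes`, the averaging rule and the first-seen tie-breaking are assumptions for illustration; how each pass's distribution is elicited from the LLM is not shown.

```python
from collections import Counter

LABELS = ("favor", "against", "neutral")


def aggregate_passes(pass_probs: list[dict[str, float]]) -> tuple[dict[str, float], str, int]:
    """Combine several NLI passes (one per prompt variant) into one estimate.

    Returns the mean distribution, the majority-vote label, and how many
    passes voted for that label (the consensus count).
    """
    if not pass_probs:
        raise ValueError("need at least one inference pass")
    n = len(pass_probs)
    # Mean probability per label across passes.
    mean = {lab: sum(p.get(lab, 0.0) for p in pass_probs) / n for lab in LABELS}
    # Each pass casts one vote for its own argmax label.
    votes = Counter(max(LABELS, key=lambda lab: p.get(lab, 0.0)) for p in pass_probs)
    # most_common breaks ties by first occurrence among the passes.
    label, count = votes.most_common(1)[0]
    return mean, label, count
```

Keeping both the averaged distribution and the vote count lets a later stage distinguish a confident but split ensemble from a unanimous one.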

3.5. Counterfactual and View-Switching
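
Under one simple assumption, the stability score of the Counterfactual and View-Switching Agent can be read as the fraction of perturbed variants whose predicted label matches the prediction on the original claim. The Python sketch below implements that reading; `stability_score`, the `Classifier` callable and the agreement-rate definition are hypothetical, and generating the variants themselves (tone changes, perspective switches) is left to the caller.

```python
from typing import Callable, Sequence

# Hypothetical interface: classify(text, target) -> stance label.
Classifier = Callable[[str, str], str]


def stability_score(classify: Classifier, claim: str, target: str,
                    variants: Sequence[str]) -> float:
    """Share of meaning-preserving variants that keep the original label.

    `variants` are rewrites of `claim` produced elsewhere; this sketch only
    measures agreement with the unperturbed prediction.
    """
    if not variants:
        raise ValueError("at least one perturbed variant is required")
    original = classify(claim, target)
    agreeing = sum(classify(v, target) == original for v in variants)
    return agreeing / len(variants)
```

An agreement rate is bounded in [0, 1] and easy to threshold in the fusion step; a probability-weighted agreement measure would be an equally plausible alternative.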

3.6. Stability-Aware Fusion

Filtering Policy

Algorithm 1. Stability-Aware Fusion.

Require: canonical claim, pragmatic tags, NLI probabilities and consensus count, stability score; thresholds; penalty
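
The Python sketch below illustrates one way to write a conservative, stability-aware fusion rule of the kind described for the Stability-Aware Fusion Agent. It is not Algorithm 1 itself: the threshold names (`tau_conf`, `tau_stab`, `min_votes`), the linear instability penalty and all default values are hypothetical, and pragmatic tags are omitted because their role in the rule is not specified here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FusionConfig:
    """Illustrative thresholds; the values are placeholders, not the paper's."""

    tau_conf: float = 0.5   # minimum penalized confidence for a polar label
    tau_stab: float = 0.6   # minimum stability score
    min_votes: int = 2      # minimum consensus count for the top label
    penalty: float = 0.2    # weight of the instability penalty


def fuse(probs: dict[str, float], votes: int, stability: float,
         cfg: FusionConfig = FusionConfig()) -> str:
    """Return a polar label only when the decision is confident and stable."""
    label = max(probs, key=probs.__getitem__)
    if label == "neutral":
        return "neutral"
    # Penalize confidence in proportion to instability (1 - stability).
    confidence = probs[label] - cfg.penalty * (1.0 - stability)
    if confidence < cfg.tau_conf or stability < cfg.tau_stab or votes < cfg.min_votes:
        return "neutral"  # abstain: low confidence, unstable, or weak consensus
    return label
```

Because every failure path returns "neutral", the rule trades some recall on polar labels for fewer over-confident favor/against errors; the frozen config keeps the shared default argument immutable.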

4. Experiments

4.1. Experimental Data

- SemEval-2016 (SEM16) [2]: SEM16 contains 4870 tweets, each annotated with one of three stance labels (“favor”, “against”, or “neutral”) toward one of six targets: Donald Trump (DT), Hillary Clinton (HC), Feminist Movement (FM), Legalization of Abortion (LA), Atheism (AT), and Climate Change (CC). Following [25] for zero-shot evaluation, we exclude AT and CC and use only the official test split, for fair comparison with prior zero-shot settings. Per-target class counts for the four retained targets are reported in Table 1.

- COVID-19-Stance (COVID-19) [31]: We also use the COVID-19 stance dataset, which assesses public attitudes toward pandemic-related policies and figures across four targets: Wearing a Face Mask (WA), Keeping Schools Closed (SC), Anthony S. Fauci, M.D. (AF), and Stay at Home Orders (SH). Each tweet is labeled with Favor/Against/Neutral. As with SEM16, we report results using the test split only; class distributions are shown in Table 1.

4.2. Evaluation Metrics

4.3. Baseline Methods

- (1) Traditional DNNs.

- BiLSTM and Bicond [32]: These two baselines use separate BiLSTM encoders, one capturing sentence-level semantics and the other encoding the given target, so that stance-related information from the text and the target is represented jointly.

- CrossNet [33]: This model leverages BiLSTM architectures to encode both the input text and its corresponding target, while introducing a target-specific attention mechanism before classification, which enhances the model’s ability to generalize across unseen targets.

- TPDG [34]: This method automatically identifies stance-bearing words and distinguishes target-dependent from target-independent terms, adjusting them adaptively to better capture the relationship between text and target.

- TOAD [35]: To improve generalization in zero-shot scenarios, TOAD adopts an adversarial learning strategy that allows the model to resist overfitting to specific targets while transferring stance knowledge.

- (2) Fine-tuning methods.

- TGA-Net [36]: This approach establishes associations between training and evaluation topics in an unsupervised way, using BERT as the encoder and fully connected layers for classification, thereby linking topic domains without annotated supervision.

- Bert-Joint [37]: This baseline encodes the text and target jointly with BERT (Bidirectional Encoder Representations from Transformers), which is pre-trained on large-scale unlabeled corpora and produces dense contextual embeddings for both tokens and whole sentences.

- Bert-GCN [38]: This method enhances stance detection with commonsense knowledge by integrating both structural and semantic graph relations, which makes it more effective at generalizing to zero- and few-shot target scenarios.

- JointCL [24]: It unifies stance-oriented contrastive learning with target-aware prototypical graph contrastive learning, allowing the model to transfer stance-relevant features learned from seen topics to unseen targets.

- TarBK [39]: By leveraging Wikipedia-derived background knowledge, TarBK reduces the semantic gap between training and evaluation targets, thereby improving the reasoning capability of stance classifiers.

- PT-HCL [7]: This contrastive learning approach utilizes both semantic and sentiment features to improve cross-domain stance transferability, enabling robust generalization beyond source data. Its combination of semantics and sentiment proves critical for disambiguating stance from emotion.

- KEPrompt [40]: This method proposes an automatic verbalizer to generate label words dynamically, while simultaneously injecting external background knowledge to guide stance recognition. It reduces reliance on manually designed verbalizers and improves flexibility.

- (3) LLM-based methods.

- COLA [14]: This approach employs a three-stage framework where different LLM roles are orchestrated for multidimensional text understanding and reasoning, resulting in state-of-the-art zero-shot stance performance. It demonstrates the effectiveness of task decomposition within LLM pipelines.

- Ts-CoT [41]: It introduces a chain-of-thought (CoT) prompting mechanism for stance detection with LLMs and uses GPT-3.5 as the base model to benefit from its stronger reasoning capacity. The CoT design elicits step-by-step reasoning rather than direct label prediction.

- EDDA [27]: This method exploits LLMs to automatically generate rationales and substitute stance-bearing expressions, thereby increasing semantic relevance and expression diversity for stance detection. By focusing on rationales, EDDA improves both performance and interpretability.

- FOLAR [42]: A reasoning framework that augments stance detection with factual knowledge and chain-of-thought logical reasoning, aiming to improve interpretability and robustness in zero-shot settings.

- LogiMDF [25]: A logic-augmented multi-decision fusion framework that extracts first-order logic rules from multiple LLMs, constructs a logical fusion schema, and employs a multi-view hypergraph neural network to integrate diverse reasoning processes for consistent and accurate stance detection.

4.4. Implementation Details

5. Overall Performance

5.1. Analysis of Main Results

5.2. Ablation Study

5.3. Case Study

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Küçük, D.; Can, F. Stance detection: A survey. ACM Comput. Surv. (CSUR) 2020, 53, 12.

2. Mohammad, S.; Kiritchenko, S.; Sobhani, P.; Zhu, X.; Cherry, C. Semeval-2016 task 6: Detecting stance in tweets. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 31–41.

3. Addawood, A.; Schneider, J.; Bashir, M. Stance classification of twitter debates: The encryption debate as a use case. In Proceedings of the 8th International Conference on Social Media & Society, Toronto, ON, Canada, 28–30 July 2017; pp. 1–10.

4. Sun, Q.; Wang, Z.; Zhu, Q.; Zhou, G. Stance detection with hierarchical attention network. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 21–24 August 2018; pp. 2399–2409.

5. Li, Y.; Caragea, C. Target-Aware Data Augmentation for Stance Detection. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 1850–1860.

6. Xu, C.; Paris, C.; Nepal, S.; Sparks, R. Cross-target stance classification with self-attention networks. arXiv 2018, arXiv:1805.06593.

7. Liang, B.; Chen, Z.; Gui, L.; He, Y.; Yang, M.; Xu, R. Zero-Shot Stance Detection via Contrastive Learning. In Proceedings of the ACM Web Conference 2022, Virtual, 25–29 April 2022; pp. 2738–2747.

8. Hong, G.N.S.Y.; Gauch, S. Sarcasm Detection as a Catalyst: Improving Stance Detection with Cross-Target Capabilities. arXiv 2025, arXiv:2503.03787.

9. Maynard, D.; Greenwood, M. Who cares about Sarcastic Tweets? Investigating the Impact of Sarcasm on Sentiment Analysis. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14); Calzolari, N., Choukri, K., Declerck, T., Loftsson, H., Maegaard, B., Mariani, J., Moreno, A., Odijk, J., Piperidis, S., Eds.; European Language Resources Association (ELRA): Reykjavik, Iceland, 2014; pp. 4238–4243.

10. Du, J.; Xu, R.; He, Y.; Gui, L. Stance classification with target-specific neural attention networks. In Proceedings of the International Joint Conferences on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017.

11. Wei, P.; Lin, J.; Mao, W. Multi-target stance detection via a dynamic memory-augmented network. In Proceedings of The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 1229–1232.

12. Li, Y.; Sosea, T.; Sawant, A.; Nair, A.J.; Inkpen, D.; Caragea, C. P-stance: A large dataset for stance detection in political domain. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online Event, 1–6 August 2021; pp. 2355–2365.

13. Conforti, C.; Berndt, J.; Pilehvar, M.T.; Giannitsarou, C.; Toxvaerd, F.; Collier, N. Synthetic Examples Improve Cross-Target Generalization: A Study on Stance Detection on a Twitter corpus. In Proceedings of the Eleventh Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, WASSA@EACL 2021, Online, 19 April 2021; pp. 181–187.

14. Lan, X.; Gao, C.; Jin, D.; Li, Y. Stance detection with collaborative role-infused llm-based agents. In Proceedings of the International AAAI Conference on Web and Social Media, Buffalo, NY, USA, 3–6 June 2024; Volume 18, pp. 891–903.

15. Guo, T.; Chen, X.; Wang, Y.; Chang, R.; Pei, S.; Chawla, N.V.; Wiest, O.; Zhang, X. Large Language Model based Multi-Agents: A Survey of Progress and Challenges. arXiv 2024, arXiv:2402.01680.

16. Tran, K.T.; Dao, D.; Nguyen, M.D.; Pham, Q.V.; O’Sullivan, B.; Nguyen, H.D. Multi-Agent Collaboration Mechanisms: A Survey of LLMs. arXiv 2025, arXiv:2501.06322.

17. Zarrella, G.; Marsh, A. MITRE at SemEval-2016 Task 6: Transfer Learning for Stance Detection. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 458–463.

18. Sobhani, P.; Inkpen, D.; Zhu, X. A dataset for multi-target stance detection. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers, Valencia, Spain, 3–7 April 2017; pp. 551–557.

19. He, Z.; Mokhberian, N.; Lerman, K. Infusing Wikipedia Knowledge to Enhance Stance Detection. arXiv 2022, arXiv:2204.03839.

20. Wang, S.; Pan, L. Target-Adaptive Consistency Enhanced Prompt-Tuning for Multi-Domain Stance Detection. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italia, 20–25 May 2024; pp. 15585–15594.

21. Nguyen, D.Q.; Vu, T.; Nguyen, A.T. BERTweet: A Pre-trained Language Model for English Tweets. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 9–14.

22. Müller, M.; Salathé, M.; Kummervold, P.E. COVID-Twitter-BERT: A Natural Language Processing Model to Analyse COVID-19 Content on Twitter. arXiv 2020, arXiv:2005.07503.

23. Liang, B.; Li, A.; Zhao, J.; Gui, L.; Yang, M.; Yu, Y.; Wong, K.F.; Xu, R. Multi-modal Stance Detection: New Datasets and Model. arXiv 2024, arXiv:2402.14298.

24. Liang, B.; Zhu, Q.; Li, X.; Yang, M.; Gui, L.; He, Y.; Xu, R. Jointcl: A joint contrastive learning framework for zero-shot stance detection. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; Volume 1, pp. 81–91.

25. Zhang, B.; Ma, J.; Fu, X.; Dai, G. Logic Augmented Multi-Decision Fusion Framework for Stance Detection on Social Media. Inf. Fusion 2025, 122, 103214.

26. Li, A.; Liang, B.; Zhao, J.; Zhang, B.; Yang, M.; Xu, R. Stance Detection on Social Media with Background Knowledge. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 15703–15717.

27. Ding, D.; Dong, L.; Huang, Z.; Xu, G.; Huang, X.; Liu, B.; Jing, L.; Zhang, B. EDDA: An Encoder-Decoder Data Augmentation Framework for Zero-Shot Stance Detection. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italia, 20–25 May 2024; pp. 5484–5494.

28. Fei, H.; Li, B.; Liu, Q.; Bing, L.; Li, F.; Chua, T.S. Reasoning Implicit Sentiment with Chain-of-Thought Prompting. arXiv 2023, arXiv:2305.11255.

29. Ling, Z.; Fang, Y.; Li, X.; Huang, Z.; Lee, M.; Memisevic, R.; Su, H. Deductive Verification of Chain-of-Thought Reasoning. arXiv 2023, arXiv:2306.03872.

30. Cai, Z.; Chang, B.; Han, W. Human-in-the-Loop through Chain-of-Thought. arXiv 2023, arXiv:2306.07932.

31. Glandt, K.; Khanal, S.; Li, Y.; Caragea, D.; Caragea, C. Stance detection in COVID-19 tweets. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Long Papers), Online, 1–6 August 2021; Volume 1.

32. Augenstein, I.; Rocktaeschel, T.; Vlachos, A.; Bontcheva, K. Stance Detection with Bidirectional Conditional Encoding. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016.

33. Du, J.; Xu, R.; He, Y.; Gui, L. Stance Classification with Target-specific Neural Attention. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, IJCAI-17, Melbourne, Australia, 19–25 August 2017; pp. 3988–3994.

34. Liang, B.; Fu, Y.; Gui, L.; Yang, M.; Du, J.; He, Y.; Xu, R. Target-Adaptive Graph for Cross-Target Stance Detection. In Proceedings of the WWW ’21: The Web Conference 2021, Virtual Event/Ljubljana, Slovenia, 19–23 April 2021; pp. 3453–3464.

35. Allaway, E.; Srikanth, M.; Mckeown, K. Adversarial Learning for Zero-Shot Stance Detection on Social Media. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 4756–4767.

36. Allaway, E.; Mckeown, K. Zero-Shot Stance Detection: A Dataset and Model using Generalized Topic Representations. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 8913–8931.

37. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

38. Liu, R.; Lin, Z.; Tan, Y.; Wang, W. Enhancing zero-shot and few-shot stance detection with commonsense knowledge graph. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online Event, 1–6 August 2021; pp. 3152–3157.

39. Zhu, Q.; Liang, B.; Sun, J.; Du, J.; Zhou, L.; Xu, R. Enhancing Zero-Shot Stance Detection via Targeted Background Knowledge. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 2070–2075.

40. Huang, H.; Zhang, B.; Li, Y.; Zhang, B.; Sun, Y.; Luo, C.; Peng, C. Knowledge-Enhanced Prompt-Tuning for Stance Detection. ACM Trans. Asian-Low-Resour. Lang. Inf. Process. 2023, 22, 159.

41. Zhang, B.; Fu, X.; Ding, D.; Huang, H.; Li, Y.; Jing, L. Investigating Chain-of-thought with ChatGPT for Stance Detection on Social Media. arXiv 2023, arXiv:2304.03087.

42. Dai, G.; Liao, J.; Zhao, S.; Fu, X.; Peng, X.; Huang, H.; Zhang, B. Large Language Model Enhanced Logic Tensor Network for Stance Detection. Neural Netw. 2025, 183, 106956.

Table 1. Per-target class distribution of the SEM16 and COVID-19-Stance datasets.

| Dataset | Target | Favor | Against | Neutral | Total |
|---|---|---|---|---|---|
| SEM16 | DT | 148 | 299 | 260 | 707 |
| | HC | 163 | 565 | 251 | 979 |
| | FM | 268 | 511 | 170 | 949 |
| | LA | 167 | 544 | 222 | 933 |
| COVID-19 | AF | 492 | 610 | 762 | 1864 |
| | SH | 615 | 250 | 325 | 1190 |
| | WA | 693 | 190 | 668 | 1551 |
| | SC | 400 | 782 | 346 | 1528 |
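
Table 1 also makes the label imbalance easy to quantify. The short Python check below uses only the counts in the table: it verifies that each row's classes sum to its total and reports each target's majority class and its share (e.g., Against accounts for about 58% of LA tweets). The `summarize` helper and the three-decimal rounding are illustrative choices.

```python
# Per-target counts copied from Table 1: (favor, against, neutral, total).
TABLE1 = {
    "SEM16": {"DT": (148, 299, 260, 707), "HC": (163, 565, 251, 979),
              "FM": (268, 511, 170, 949), "LA": (167, 544, 222, 933)},
    "COVID-19": {"AF": (492, 610, 762, 1864), "SH": (615, 250, 325, 1190),
                 "WA": (693, 190, 668, 1551), "SC": (400, 782, 346, 1528)},
}


def summarize(table):
    """Check row totals and return each target's majority class and its share."""
    out = {}
    for dataset, rows in table.items():
        for target, (fav, ag, neu, total) in rows.items():
            if fav + ag + neu != total:
                raise ValueError(f"{dataset}/{target}: counts do not sum to total")
            counts = {"favor": fav, "against": ag, "neutral": neu}
            majority = max(counts, key=counts.__getitem__)
            out[(dataset, target)] = (majority, round(counts[majority] / total, 3))
    return out


for key, value in summarize(TABLE1).items():
    print(key, value)
```

No target is dominated by a single class beyond roughly 58%, but the majority class varies by target (Against for the SEM16 targets, mixed for COVID-19), which is one reason per-target scores are reported alongside averages.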

Zero-shot results on SEM16.

| Method | HC | FM | LA | DT | Avg |
|---|---|---|---|---|---|
| BiLSTM | 31.6 | 40.3 | 33.6 | 30.8 | 34.1 |
| Bicond | 32.7 | 40.6 | 34.4 | 30.5 | 34.6 |
| CrossNet | 38.3 | 41.7 | 38.5 | 35.6 | 38.5 |
| TPDG | 50.9 | 53.6 | 46.5 | 47.3 | 49.6 |
| TOAD | 51.2 | 54.1 | 46.2 | 49.5 | 50.3 |
| TGA-Net | 49.3 | 46.6 | 45.2 | 40.7 | 45.5 |
| Bert-Joint | 50.1 | 42.1 | 44.8 | 41.0 | 44.5 |
| Bert-GCN | 50.0 | 44.3 | 44.2 | 42.3 | 45.2 |
| JointCL | 54.4 | 54.0 | 50.0 | 50.5 | 52.2 |
| TarBK | 55.1 | 53.8 | 48.7 | 50.8 | 52.1 |
| PT-HCL | 54.5 | 54.6 | 50.9 | 50.1 | 52.5 |
| KEPrompt | 57.0 | 53.6 | 53.0 | 41.8 | 51.3 |
| NPS4SD | 60.1 | 56.7 | 51.0 | 51.4 | 54.8 |
| COLA | 81.7 | 63.4 | 71.0 | 68.5 | 71.2 |
| Ts-CoT (GPT) | 78.9 | 68.3 | 62.3 | 68.6 | 69.5 |
| EDDA | 77.4 | 69.7 | 62.7 | 69.8 | 69.9 |
| FOLAR | 81.9 | 71.2 | 69.9 | – | – |
| LogiMDF | 75.1 | 67.9 | 68.0 | 67.6 | 69.7 |
| PAMR (Ours) | 73.7 | 75.9 ‡ | 71.8 ‡ | 66.1 | 71.9 |

Zero-shot results on COVID-19-Stance.

| Method | AF | SC | SH | WA | Avg |
|---|---|---|---|---|---|
| CrossNet | 41.3 | 40.0 | 40.4 | 38.2 | 40.0 |
| BERT | 47.3 | 45.0 | 39.9 | 44.3 | 44.1 |
| TPDG | 46.0 | 51.5 | 37.2 | 48.0 | 45.7 |
| TOAD | 53.0 | 68.3 | 62.9 | 41.1 | 56.3 |
| JointCL | 57.6 | 49.3 | 43.5 | 63.1 | 53.4 |
| Ts-CoT | 69.2 | 43.5 | 66.5 | 57.8 | 59.3 |
| COLA | 65.7 | 46.6 | 53.5 | 73.9 | 59.9 |
| FOLAR | 69.5 | 67.2 | 65.4 | 73.1 | 68.8 |
| LogiMDF | 70.4 | 68.8 | 64.9 | 75.4 | 69.9 |
| PAMR (Ours) | 68.6 | 72.0 ‡ | 72.2 ‡ | 78.8 ‡ | 72.9 |
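
The Avg columns in the two result tables appear to be the unweighted mean of the four per-target scores; under that assumption, recomputing the baseline rows reproduces their averages to within rounding. The Python sketch below applies the same computation to PAMR's per-target scores, which gives 71.9 on SEM16 and 72.9 on COVID-19-Stance. The `macro_avg` helper is illustrative.

```python
from statistics import fmean

# Per-target scores for PAMR copied from the two result tables.
PAMR = {
    "SEM16": {"HC": 73.7, "FM": 75.9, "LA": 71.8, "DT": 66.1},
    "COVID-19": {"AF": 68.6, "SC": 72.0, "SH": 72.2, "WA": 78.8},
}


def macro_avg(scores: dict[str, float]) -> float:
    """Unweighted mean over targets, rounded to one decimal as in the tables."""
    return round(fmean(scores.values()), 1)


for dataset, scores in PAMR.items():
    print(dataset, macro_avg(scores))
```

`statistics.fmean` (Python 3.8+) sums with extended precision, which avoids spurious rounding flips at the first decimal.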

| Tweet | Claim | Pragmatic Tags | NLI Scores | Stability | Prediction |
|---|---|---|---|---|---|
| People are saying that kids will not have a safe place to go to if the schools are closed. Large gatherings are not safe with coronavirus. The coronavirus is not safe. | Keeping schools closed may leave kids without a safe place to go, but large gatherings are unsafe due to coronavirus. | [Quotation] | {favor: 0.65, against: 0.15, none: 0.20} | 0.833 | Favor |
| “2 years ago Hillary never answered whether she used private email. Liberal media passed on reporting.” #equality | Questioning Hillary’s email while excusing others reflects the sexist double standards women in politics face. | [Sarcasm, Quotation] | {favor: 0.50, against: 0.46, none: 0.04} | 0.666 | Against |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, Z.; Niu, F.; Dai, G.; Zhang, B. From Claims to Stance: Zero-Shot Detection with Pragmatic-Aware Multi-Agent Reasoning. Electronics 2025, 14, 4298. https://doi.org/10.3390/electronics14214298
