RAP-RAG: A Retrieval-Augmented Generation Framework with Adaptive Retrieval Task Planning

Abstract

1. Introduction

- Query complexity. A small model may be unable to handle very complex queries. Can a query method be constructed that matches the capabilities of the small model? Furthermore, for problems of varying complexity, can the complexity be analyzed and the most appropriate method selected to search the relevant databases, so that the small model performs as well as it can?

- Validation of the reasoning path. For complex problems that involve multi-step reasoning, the reasoning path must be valid if the most relevant information for the query is to be retrieved. A small model struggles to construct a reasonable path using its own capabilities alone, so a method for validating the reasoning path is needed to assist it.

- Heterogeneous Weighted Graph Index. The construction of the knowledge base determines the retrieval quality and speed of a RAG system. To overcome the limited semantic capabilities of small language models (SLMs), we construct a heterogeneous weighted graph index that provides a unified structure for semantic clustering and global relationship reasoning. The index distinguishes and models two types of relationships: similarity and dissimilarity. Similarity relationships use score indicators to form local communities that capture how semantically related nodes cluster. Dissimilarity relationships use weight indicators to describe the association strength between entities, which preserves the overall topology and global connectivity. In this way, the graph index alleviates the problem of isolated semantic retrieval and strengthens the model’s ability to perform multi-hop reasoning and integrate global context, which in turn supports the adaptive retrieval that follows.

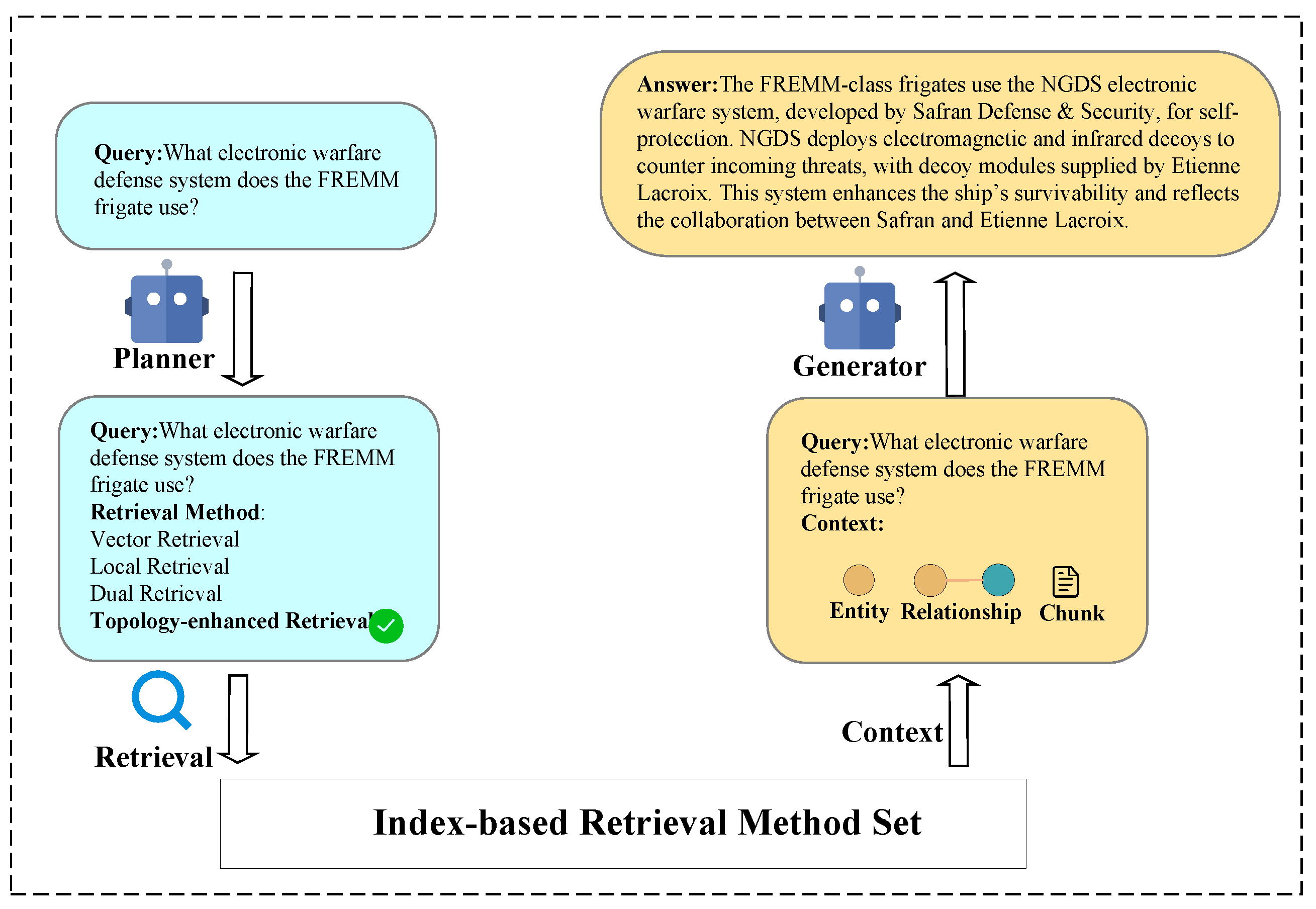

- Index-based Retrieval Method Set. To retrieve effectively from the knowledge base, we systematically construct and adapt a series of retrieval methods on top of the heterogeneous weighted graph index: vector retrieval, improved local retrieval, dual retrieval, and our proposed topology-enhanced retrieval. These methods exploit the semantic aggregation of local communities and combine it with graph structure information to perform complex relational reasoning, achieving a better balance across different types of tasks.

- Adaptive Retrieval Task Planning. A single retrieval method does not yield the best results for every query. Letting the system adaptively select the most appropriate retrieval method addresses this, so we propose a self-planning method for retrieval tasks. It automatically selects or combines retrieval strategies based on the query requirements and question characteristics. For example, it prioritizes local retrieval in short-text question answering and topology-enhanced retrieval in multi-hop reasoning scenarios. This mechanism improves the system’s task generalization and its usability in small-scale environments.

2. Previous Search

3. Materials and Methods

3.1. Heterogeneous Weighted Graph Index

- (1) Candidate Generation

- (2) Entity Aggregation

- (3) Textual and Attribute Filtering

- (4) Final Merge
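The Introduction describes the index as a graph with two kinds of edges: similarity edges that carry a score and form local communities, and association edges that carry a weight and preserve global connectivity. The following is a minimal sketch of such a structure, not the authors' implementation. The class name `HeteroWeightedGraph`, its methods, the threshold-based community rule, and the example scores are all assumptions made for illustration.

```python
from collections import defaultdict


class HeteroWeightedGraph:
    """Toy index with two edge kinds: similarity (score) and association (weight)."""

    def __init__(self):
        self.similar = defaultdict(dict)  # node -> {neighbour: similarity score}
        self.related = defaultdict(dict)  # node -> {neighbour: association weight}

    def add_similarity(self, a, b, score):
        self.similar[a][b] = score
        self.similar[b][a] = score

    def add_relation(self, a, b, weight):
        self.related[a][b] = weight
        self.related[b][a] = weight

    def communities(self, min_score):
        """Group nodes connected by similarity edges scoring at least min_score."""
        nodes = sorted(set(self.similar) | set(self.related))
        seen, groups = set(), []
        for start in nodes:
            if start in seen:
                continue
            group, stack = set(), [start]
            while stack:
                node = stack.pop()
                if node in seen:
                    continue
                seen.add(node)
                group.add(node)
                for neighbour, score in self.similar.get(node, {}).items():
                    if score >= min_score and neighbour not in seen:
                        stack.append(neighbour)
            groups.append(group)
        return groups

    def neighbours(self, node, min_weight=0.0):
        """Association neighbours of a node, strongest first."""
        edges = self.related.get(node, {})
        return sorted((n for n, w in edges.items() if w >= min_weight),
                      key=lambda n: -edges[n])


graph = HeteroWeightedGraph()
graph.add_similarity("John Cabot", "Giovanni Caboto", 0.9)  # illustrative score
graph.add_relation("John Cabot", "Sebastian Cabot", 0.8)    # illustrative weight
local_groups = graph.communities(min_score=0.5)
```

Keeping the two edge maps separate means a community lookup never follows an association edge, while a multi-hop step can use `neighbours` without being confined to one community.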

3.2. Index-Based Retrieval Method Set

- (1)

- Vector Retrieval

- (2)

- Local Retrieval

- (3)

- Dual Retrieval

- (4)

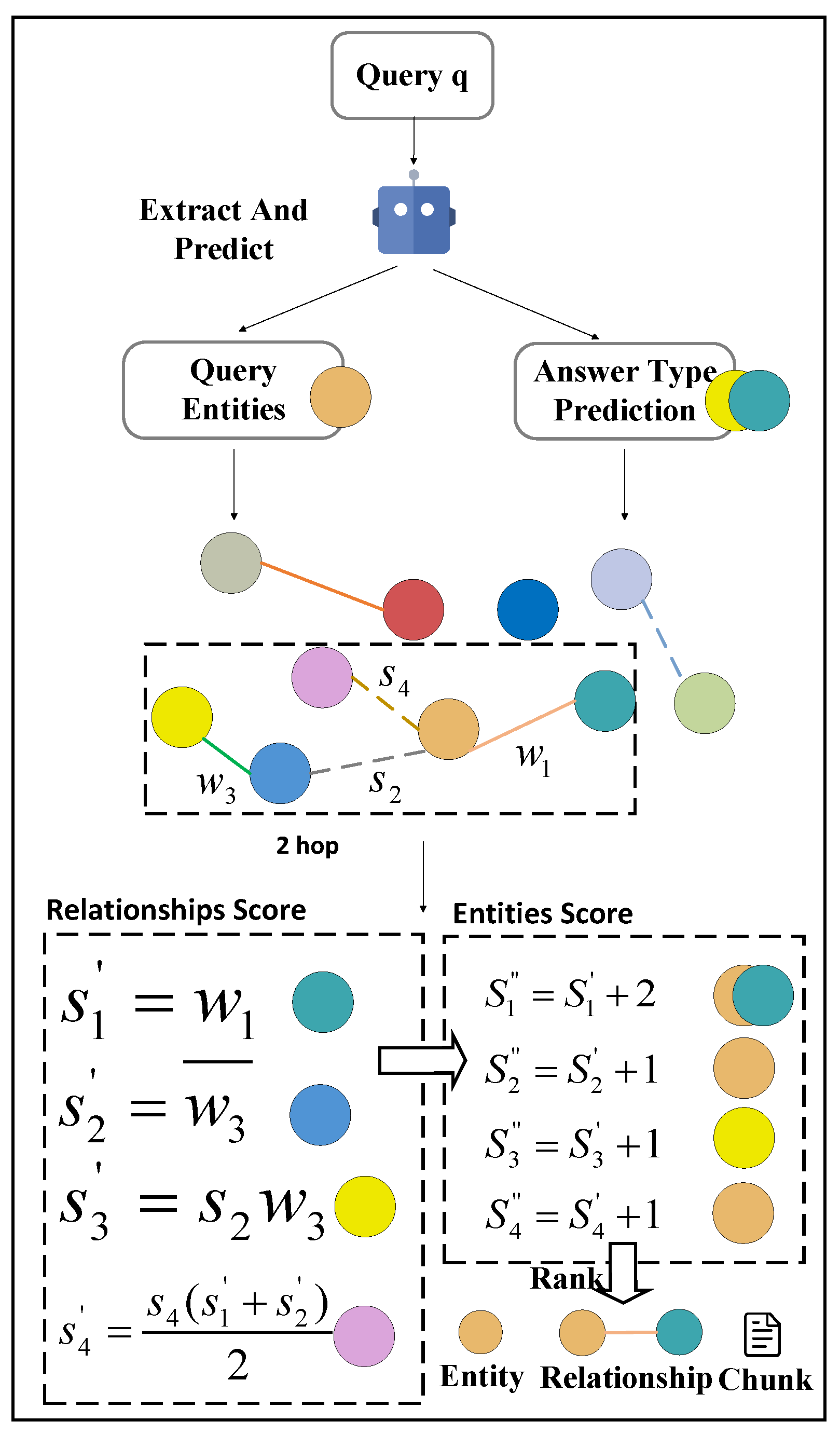

- Topology-enhanced Retrieval
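To make the difference between a purely semantic method and a graph-aware one concrete, the sketch below pairs a plain cosine-similarity vector retrieval with a generic one-hop expansion along weighted edges. The details of the paper's topology-enhanced retrieval are not given in this text, so `topology_expand` is only a stand-in for the general idea. All function names, the toy embeddings, and the edge weights are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def vector_retrieval(query_vec, embeddings, k=1):
    """Return the k node names whose embeddings are closest to the query."""
    ranked = sorted(embeddings,
                    key=lambda name: cosine(query_vec, embeddings[name]),
                    reverse=True)
    return ranked[:k]


def topology_expand(seeds, edges, min_weight, hops=1):
    """Follow association edges of at least min_weight outward from the seeds."""
    found = list(seeds)
    frontier = list(seeds)
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for neighbour, weight in sorted(edges.get(node, {}).items()):
                if weight >= min_weight and neighbour not in found:
                    found.append(neighbour)
                    next_frontier.append(neighbour)
        frontier = next_frontier
    return found


# Toy data, invented for the example.
embeddings = {"John Cabot": [1.0, 0.0], "Guadalajara": [0.0, 1.0]}
edges = {"John Cabot": {"Sebastian Cabot": 0.9, "England": 0.2}}

seeds = vector_retrieval([0.9, 0.1], embeddings, k=1)
expanded = topology_expand(seeds, edges, min_weight=0.5)
```

Vector retrieval alone stops at the seed entity, while the expansion step also reaches an entity that is connected to the seed but not semantically close to the query.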

3.3. Adaptive Retrieval Task Planning

- (1) Prompt Construction

- (2) Planner Fine-tuning Strategy
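The Introduction states that the planner selects or combines retrieval strategies per query. One way to wire this up is sketched below, assuming the planner returns a list of method names and that results from several methods are merged with duplicates dropped. The function `plan_and_retrieve`, the method names, the fallback rule, and the stub planner are hypothetical. In the paper the planner is a fine-tuned model, which is replaced here by a fixed callable.

```python
def plan_and_retrieve(query, planner, retrievers, default="vector"):
    """Run the retrievers the planner names; fall back to `default` if none is known."""
    names = [name for name in planner(query) if name in retrievers] or [default]
    merged = []
    for name in names:
        for passage in retrievers[name](query):
            if passage not in merged:  # keep first occurrence, preserve order
                merged.append(passage)
    return names, merged


# Stub retrievers returning fixed passage ids (illustrative only).
retrievers = {
    "vector": lambda q: ["p1"],
    "local": lambda q: ["p1", "p2"],
    "topology": lambda q: ["p2", "p3"],
}


def stub_planner(query):
    """Stand-in for the fine-tuned planner: always combines two methods."""
    return ["local", "topology"]


chosen, passages = plan_and_retrieve("example query", stub_planner, retrievers)
fallback, fallback_passages = plan_and_retrieve(
    "example query", lambda q: ["unknown"], retrievers)
```

The fallback keeps the pipeline answering even when the planner emits a name that is not registered.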

4. Experiment

4.1. Datasets

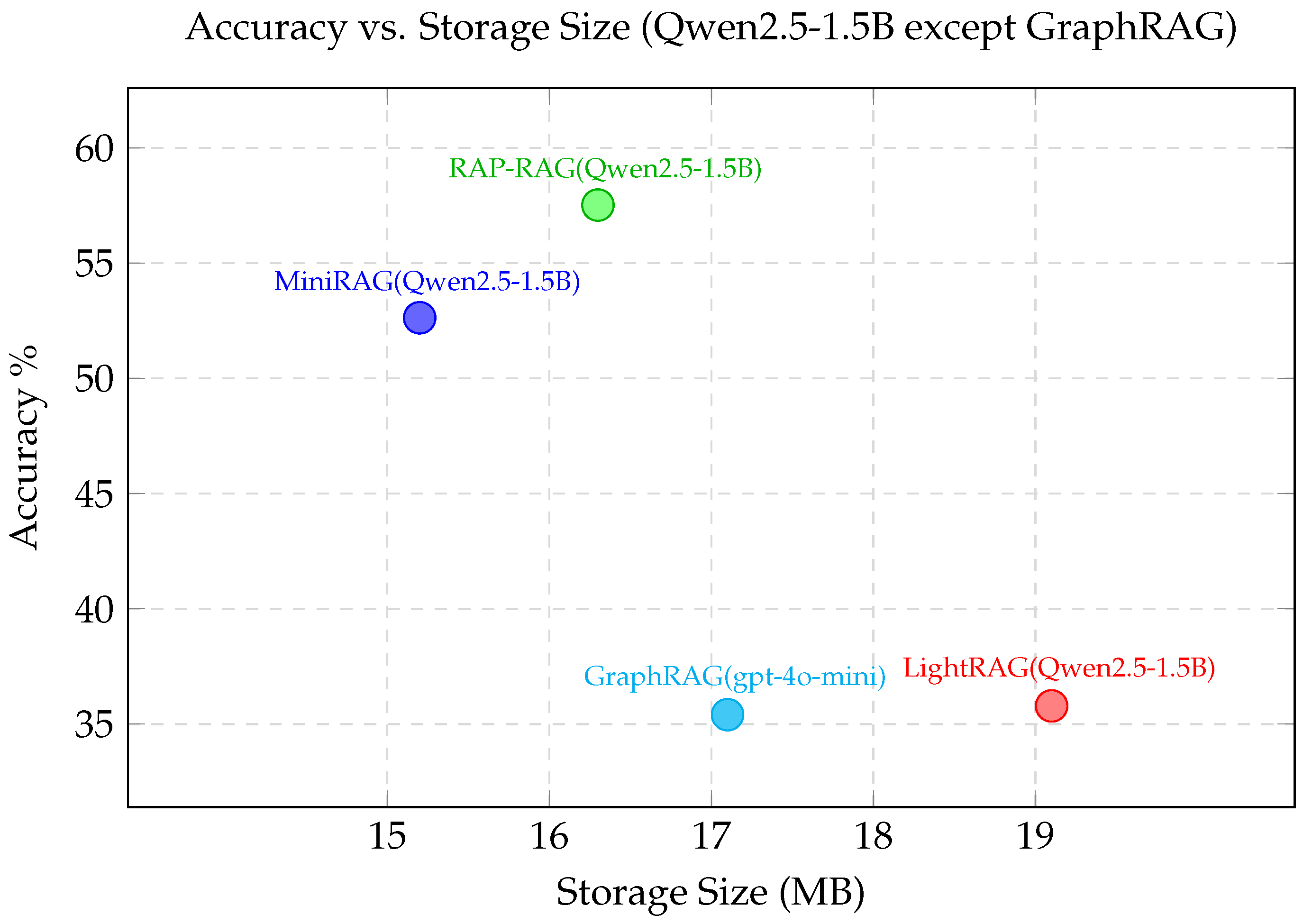

4.2. Baselines

4.3. Models

4.4. Performance Comparison

4.5. Component Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| You are a search strategy planner. Given a user query, your task is to determine which search method is most appropriate. Available methods include:

The answer contains only one method option: A, B, C, D, or E. The user’s query is as follows: {query} |
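The prompt above has a single `{query}` slot and asks for exactly one letter from A to E. The sketch below shows one way to fill the slot and validate the reply. The template is abridged: the list of available methods is not reproduced in this text, so it is omitted rather than guessed. `build_prompt` and `parse_label` are hypothetical helper names, and the strict single-letter rule is an assumption.

```python
import re

# Abridged template. The method list that follows "Available methods include:"
# is missing from the source text and is deliberately left out here.
PLANNER_TEMPLATE = (
    "You are a search strategy planner. Given a user query, your task is to "
    "determine which search method is most appropriate.\n"
    "The answer contains only one method option: A, B, C, D, or E. "
    "The user's query is as follows: {query}"
)


def build_prompt(query):
    """Insert the user query into the template's {query} slot."""
    return PLANNER_TEMPLATE.replace("{query}", query)


def parse_label(reply):
    """Return the letter A-E if the reply is just that letter, else None."""
    match = re.fullmatch(r"\s*([A-E])[\s.)]*", reply, flags=re.IGNORECASE)
    return match.group(1).upper() if match else None


prompt = build_prompt("Who was John Cabot's son?")
```

Rejecting anything other than a bare letter avoids misreading a reply such as "I think E", where a looser pattern could pick up the wrong character.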

Appendix B

| Question: Who was the child of César Gaytan, the Italian navigator who explored the continent’s eastern coast? Was he born to an English parent? |

| Direct Generation: Cesare Gaitán was born on the North American continent. The Italian navigator who explored the east coast of North America for the British was Giovanni Caboto, also known as John Cabot. So the answer is: Giovanni Caboto/John Cabot. |

| Answer: Wrong |

| ...... |

| Topology-enhanced Search: César Gaitán was born in Guadalajara, Jalisco, Mexico. The Italian navigator who explored the east coast of the continent for the British was John Cabot. John Cabot’s son was Sebastian Cabot. So the answer is: Sebastian Cabot. |

| Answer: Right |

| Label: E |
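The sample above pairs each method's answer with a Right or Wrong verdict and ends with a single label. One plausible way to derive such a label is sketched below: walk the options in a fixed order and take the first one whose answer was judged right. This selection rule, the function name `label_sample`, and the letter assigned to Direct Generation are assumptions. The source confirms only that the sample is labelled E, and the verdicts elided by "......" are left out rather than filled in.

```python
def label_sample(verdicts, order="ABCDE"):
    """Return the first option in `order` whose verdict is True, else None."""
    for letter in order:
        if verdicts.get(letter):
            return letter
    return None


# Verdicts visible in the sample. Mapping Direct Generation to "A" is a guess;
# the options hidden behind "......" are omitted, not assumed wrong.
sample_verdicts = {"A": False, "E": True}
label = label_sample(sample_verdicts)
```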


| Dataset | Model | NaiveRAG acc. (%) | GraphRAG acc. (%) | LightRAG acc. (%) | MiniRAG acc. (%) | RAP-RAG acc. (%) |
|---|---|---|---|---|---|---|
| LiHuaWorld | Llama-3.2-3B | 41.37 | / | 39.86 | 53.41 | 58.29 |
| LiHuaWorld | Qwen2.5-1.5B | 42.83 | / | 35.79 | 52.63 | 57.52 |
| LiHuaWorld | MobiLlama-1B | 43.68 | / | 39.25 | 48.83 | 53.71 |
| LiHuaWorld | gpt-4o-mini | 46.62 | 35.41 | 56.97 | 54.23 | 61.18 |
| MultiHop-RAG | Llama-3.2-3B | 42.65 | / | 27.19 | 49.87 | 54.71 |
| MultiHop-RAG | Qwen2.5-1.5B | 44.37 | / | / | 51.36 | 56.14 |
| MultiHop-RAG | MobiLlama-1B | 39.52 | / | 21.95 | 48.63 | 53.41 |
| MultiHop-RAG | gpt-4o-mini | 53.74 | 60.88 | 64.95 | 68.37 | 71.29 |
| Hybrid-SQuAD | Llama-3.2-3B | 35.19 | / | 30.27 | 42.73 | 46.61 |
| Hybrid-SQuAD | Qwen2.5-1.5B | 34.46 | / | 29.57 | 41.44 | 47.38 |
| Hybrid-SQuAD | MobiLlama-1B | 33.25 | / | 28.16 | 39.75 | 44.63 |
| Hybrid-SQuAD | gpt-4o-mini | 45.63 | 48.24 | 51.39 | 54.18 | 59.13 |
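As a reading aid for the table above, the short calculation below computes how many accuracy points RAP-RAG gains over MiniRAG on LiHuaWorld for each model, and the mean of those gains. The numbers are copied from the MiniRAG and RAP-RAG columns. The variable names are illustrative.

```python
# Accuracy (%) on LiHuaWorld as (MiniRAG, RAP-RAG), copied from the table.
lihua_world = {
    "Llama-3.2-3B": (53.41, 58.29),
    "Qwen2.5-1.5B": (52.63, 57.52),
    "MobiLlama-1B": (48.83, 53.71),
    "gpt-4o-mini": (54.23, 61.18),
}

# Gain in percentage points per model, rounded to the table's precision.
gains = {model: round(rap - mini, 2) for model, (mini, rap) in lihua_world.items()}
mean_gain = round(sum(gains.values()) / len(gains), 2)

for model, gain in gains.items():
    print(f"{model}: +{gain:.2f} points")
print(f"mean: +{mean_gain:.2f} points")
```

The same pattern applies to the other two datasets by swapping in their rows.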

| Dataset | Model | Base acc. (%) | -Weights acc. (%) |
|---|---|---|---|
| LiHuaWorld | Llama-3.2-3B | 58.29 | 52.11 |
| LiHuaWorld | Qwen2.5-1.5B | 57.52 | 51.82 |
| LiHuaWorld | MobiLlama-1B | 53.71 | 48.96 |
| LiHuaWorld | gpt-4o-mini | 61.18 | 58.33 |
| MultiHop-RAG | Llama-3.2-3B | 54.71 | 49.01 |
| MultiHop-RAG | Qwen2.5-1.5B | 56.14 | 50.31 |
| MultiHop-RAG | MobiLlama-1B | 53.41 | 47.69 |
| MultiHop-RAG | gpt-4o-mini | 71.29 | 65.17 |
| Hybrid-SQuAD | Llama-3.2-3B | 46.61 | 41.22 |
| Hybrid-SQuAD | Qwen2.5-1.5B | 47.38 | 42.17 |
| Hybrid-SQuAD | MobiLlama-1B | 44.63 | 39.79 |
| Hybrid-SQuAD | gpt-4o-mini | 59.13 | 54.03 |

| Dataset | Model | Base, acc. (%) / latency (s) | Vector, acc. (%) / latency (s) | Local, acc. (%) / latency (s) | Dual, acc. (%) / latency (s) | Topology, acc. (%) / latency (s) |
|---|---|---|---|---|---|---|
| LiHuaWorld | Llama-3.2-3B | 58.3/1.42 | 55.1/1.08 | 52.4/0.95 | 53.8/1.21 | 57.8/1.15 |
| LiHuaWorld | Qwen2.5-1.5B | 57.5/1.45 | 54.2/1.05 | 51.8/0.93 | 52.7/1.18 | 56.9/1.12 |
| LiHuaWorld | MobiLlama-1B | 53.7/1.31 | 50.6/0.97 | 48.3/0.89 | 49.5/1.09 | 52.8/1.03 |
| LiHuaWorld | gpt-4o-mini | 61.2/1.56 | 58.1/1.21 | 55.6/1.05 | 56.8/1.34 | 60.7/1.27 |
| MultiHop-RAG | Llama-3.2-3B | 54.7/1.65 | 51.3/1.19 | 48.6/1.03 | 49.8/1.42 | 56.1/1.36 |
| MultiHop-RAG | Qwen2.5-1.5B | 56.1/1.69 | 52.6/1.16 | 49.7/1.01 | 51.0/1.39 | 59.3/1.32 |
| MultiHop-RAG | MobiLlama-1B | 53.4/1.58 | 49.8/1.09 | 47.1/0.98 | 48.3/1.29 | 56.2/1.24 |
| MultiHop-RAG | gpt-4o-mini | 71.3/1.84 | 67.9/1.34 | 64.5/1.17 | 65.8/1.58 | 72.1/1.49 |
| Hybrid-SQuAD | Llama-3.2-3B | 46.6/1.22 | 43.5/0.92 | 40.8/0.81 | 41.9/1.05 | 46.0/0.99 |
| Hybrid-SQuAD | Qwen2.5-1.5B | 47.4/1.24 | 44.1/0.90 | 41.3/0.79 | 42.5/1.03 | 46.8/0.97 |
| Hybrid-SQuAD | MobiLlama-1B | 44.6/1.15 | 41.0/0.85 | 38.2/0.76 | 39.3/0.98 | 43.7/0.92 |
| Hybrid-SQuAD | gpt-4o-mini | 59.1/1.36 | 55.2/1.01 | 52.3/0.89 | 53.6/1.17 | 58.5/1.12 |
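Each cell in the table above packs accuracy and latency into one `accuracy/latency` string, which makes trade-offs hard to compare by eye. The sketch below splits the cells and lists the single-method columns that match or beat Base on both measures for a given row. The two rows are copied from the table. The helper names are hypothetical, and reading "Base" as the full system with the other columns as fixed single methods is an assumption.

```python
def parse_cell(cell):
    """Split an 'accuracy/latency' cell into (accuracy %, latency s)."""
    accuracy, latency = cell.split("/")
    return float(accuracy), float(latency)


def dominating(row):
    """Columns that are at least as accurate AND at least as fast as Base."""
    base_accuracy, base_latency = parse_cell(row["Base"])
    winners = []
    for name, cell in row.items():
        if name == "Base":
            continue
        accuracy, latency = parse_cell(cell)
        if accuracy >= base_accuracy and latency <= base_latency:
            winners.append(name)
    return winners


# Llama-3.2-3B rows, copied from the table.
lihua_llama = {"Base": "58.3/1.42", "Vector": "55.1/1.08", "Local": "52.4/0.95",
               "Dual": "53.8/1.21", "Topology": "57.8/1.15"}
multihop_llama = {"Base": "54.7/1.65", "Vector": "51.3/1.19", "Local": "48.6/1.03",
                  "Dual": "49.8/1.42", "Topology": "56.1/1.36"}

lihua_winners = dominating(lihua_llama)
multihop_winners = dominating(multihop_llama)
```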

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ji, X.; Xu, L.; Gu, L.; Ma, J.; Zhang, Z.; Jiang, W. RAP-RAG: A Retrieval-Augmented Generation Framework with Adaptive Retrieval Task Planning. Electronics 2025, 14, 4269. https://doi.org/10.3390/electronics14214269
