Enhanced Research on YOLOv12 Detection of Apple Defects by Integrating Filter Imaging and Color Space Reconstruction

Abstract

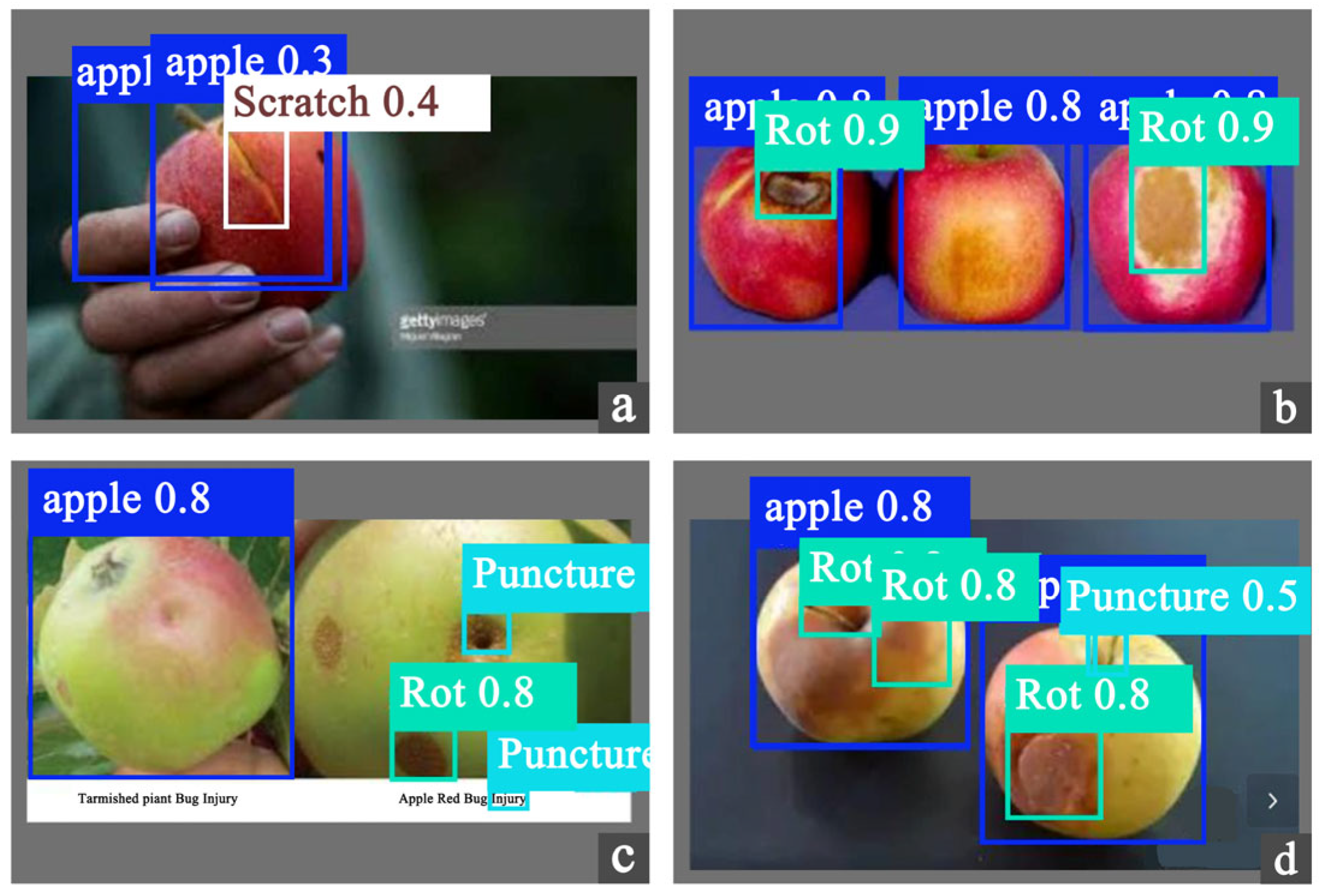

1. Introduction

1.1. Background and Related Work

1.2. Research Motivation and Contributions

- (1) A high-quality multi-filter, multi-illumination image dataset of “Red Fuji” apples (1600 images covering four sample types under four filter conditions) was established, providing a reproducible and reliable data foundation for this research.

- (2) The combination of RGB, HSI, and LAB color spaces with filtered imaging was systematically compared in terms of detection accuracy, inference speed, and stability, with particular attention to how performance varies under complex illumination conditions.

- (3) Experimental results show that combining green filtering with RGB reconstruction (G-RGB) achieves the best balance between accuracy and computational efficiency, with the highest mAP50-95 (83.1%) and the highest frame rate (15.15 FPS) among the tested combinations. External validation further indicates that this configuration maintains high performance in open scenarios and outperforms the other color space (HSI, LAB) and filter combinations evaluated in this study for apple defect detection.

2. Materials and Methods

2.1. Sample Selection and Experimental Setup

2.1.1. Sample Selection

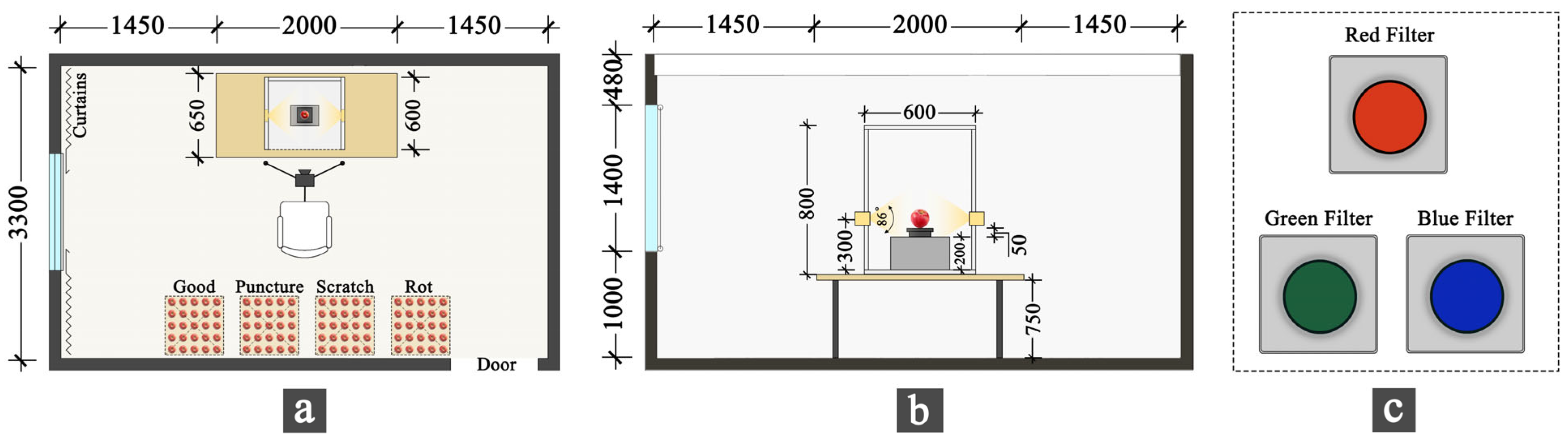

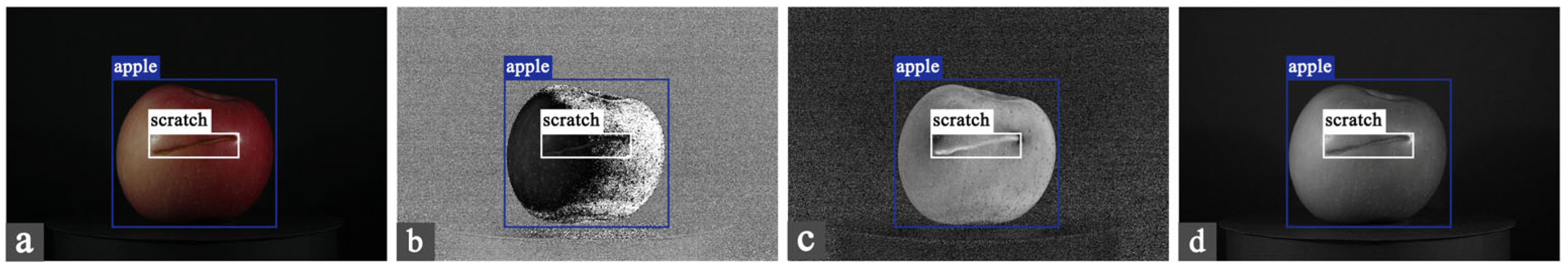

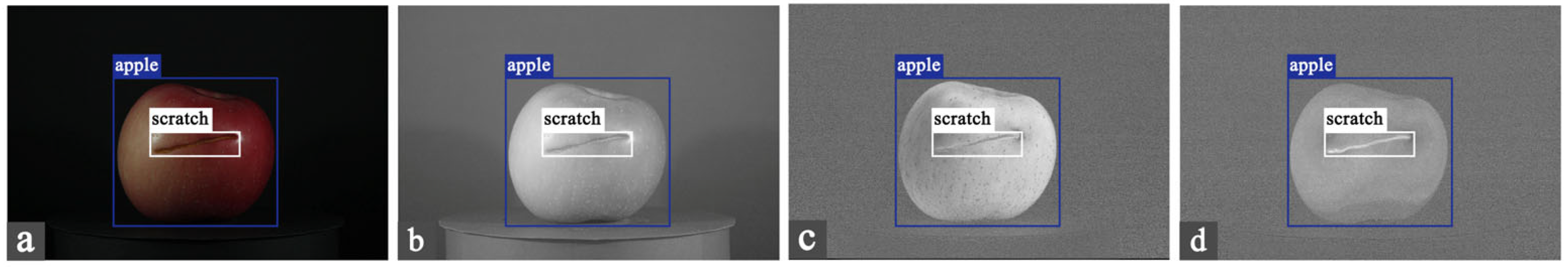

2.1.2. Experimental Setup

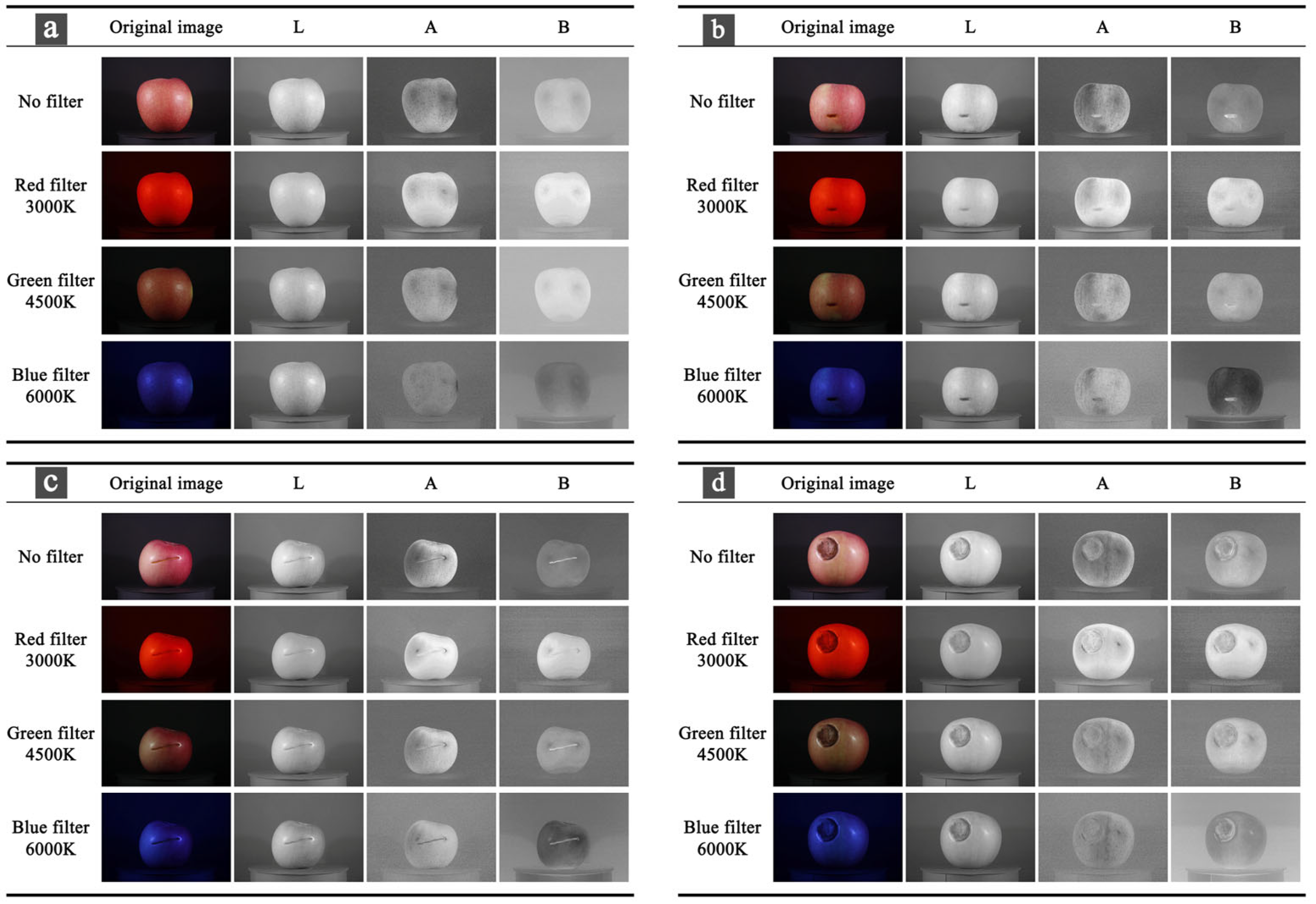

2.2. Pre-Experiment and Dataset

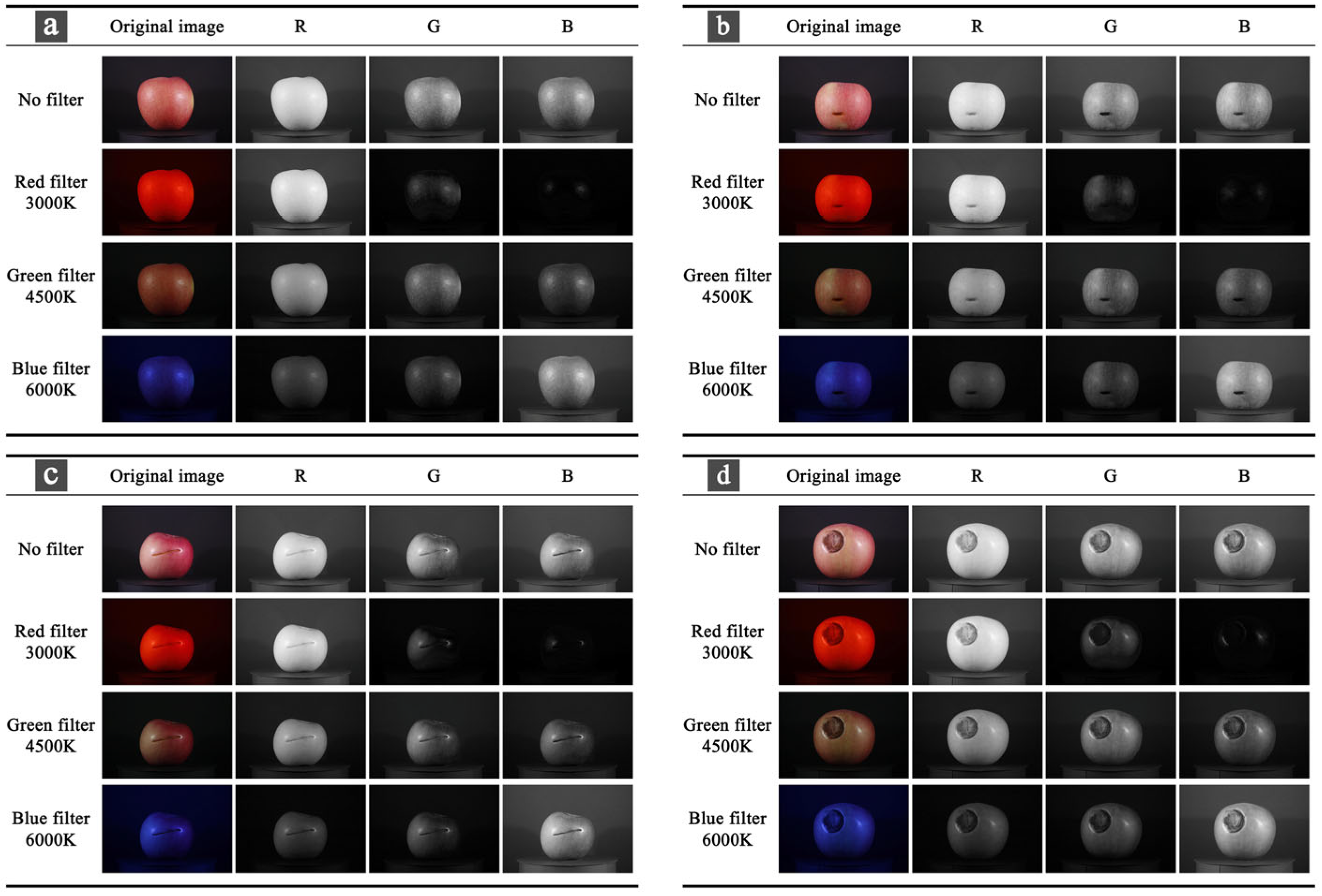

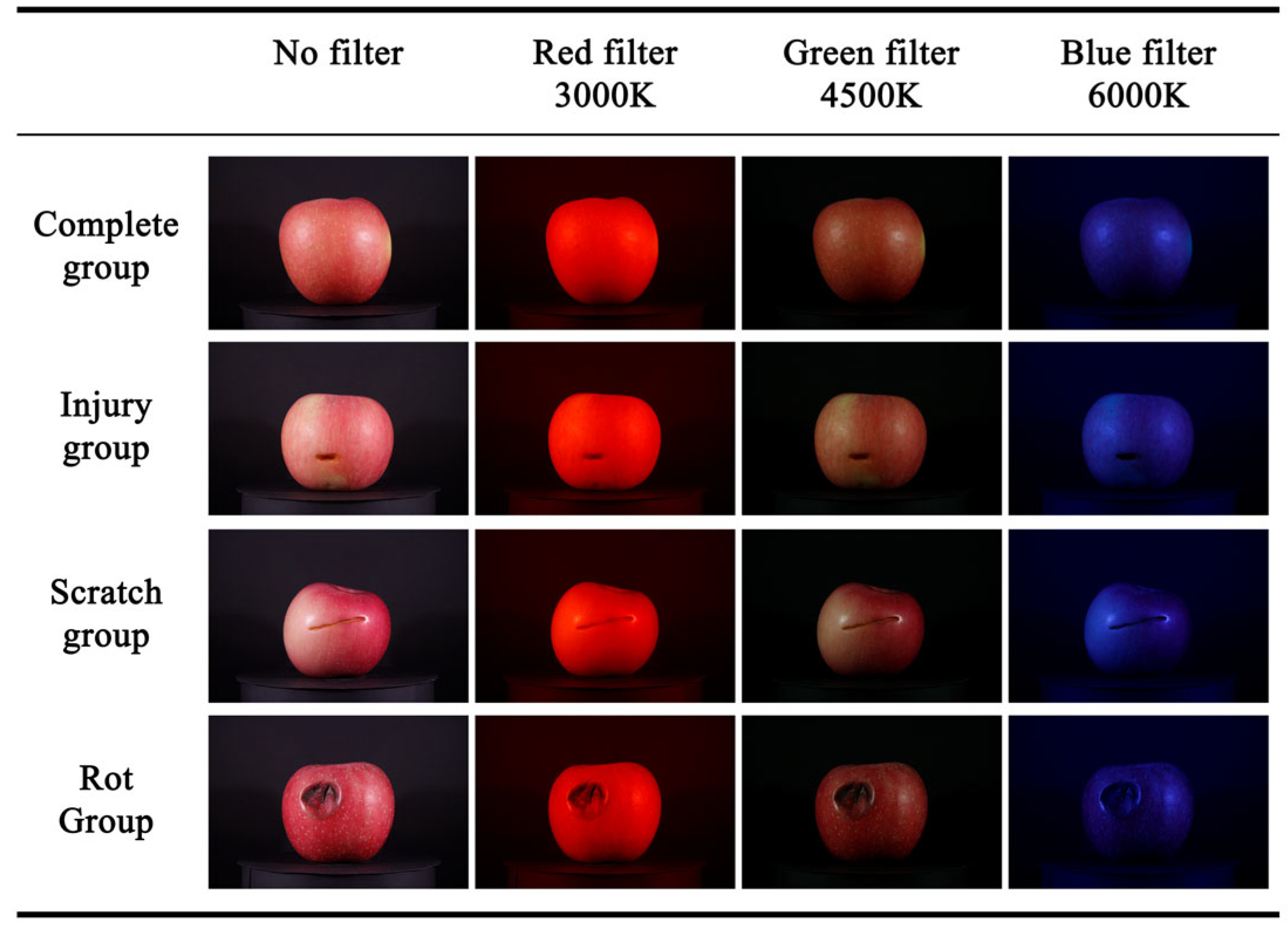

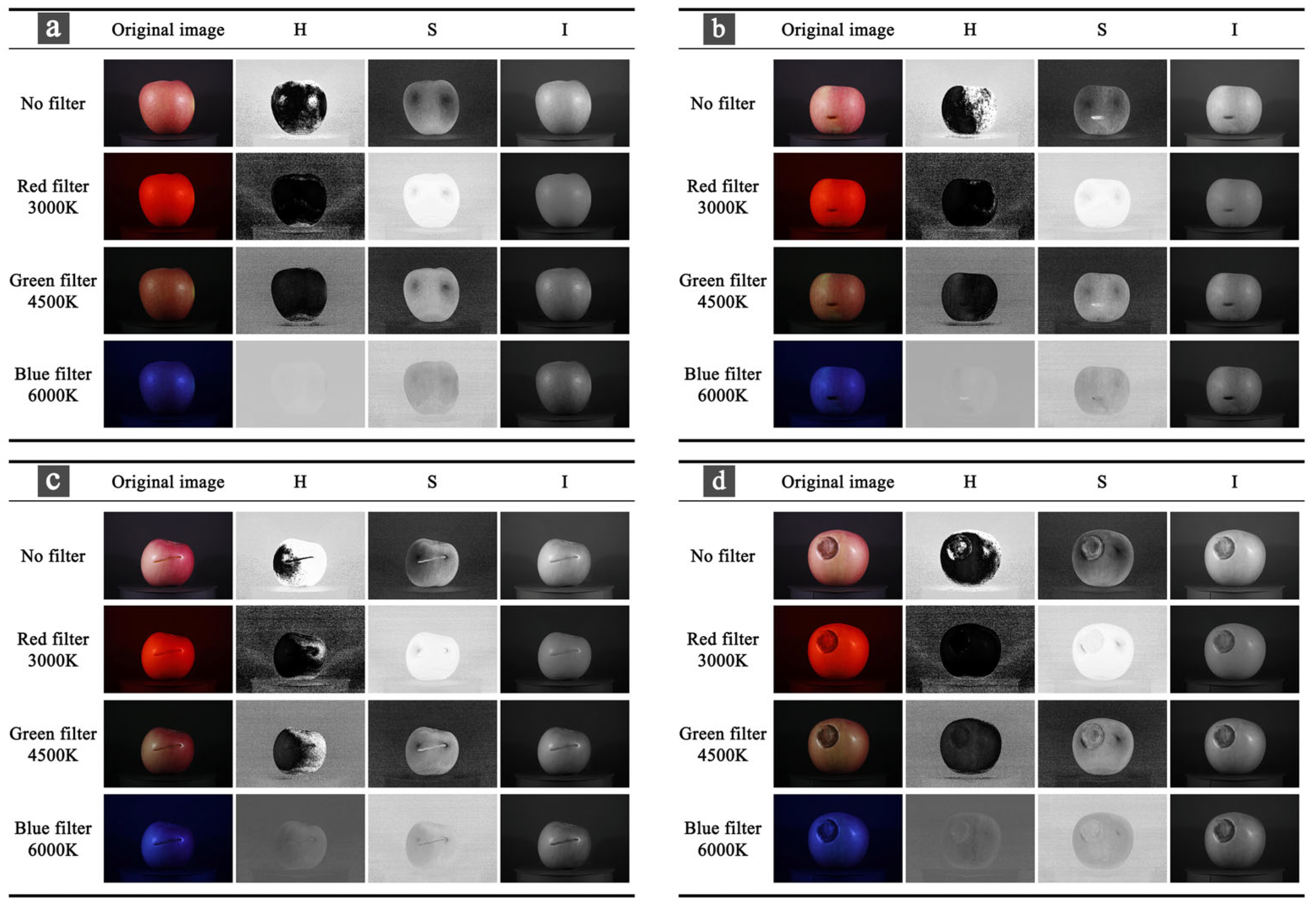

2.2.1. Pre-Experiment

2.2.2. Dataset Construction

2.3. Color Space Conversion

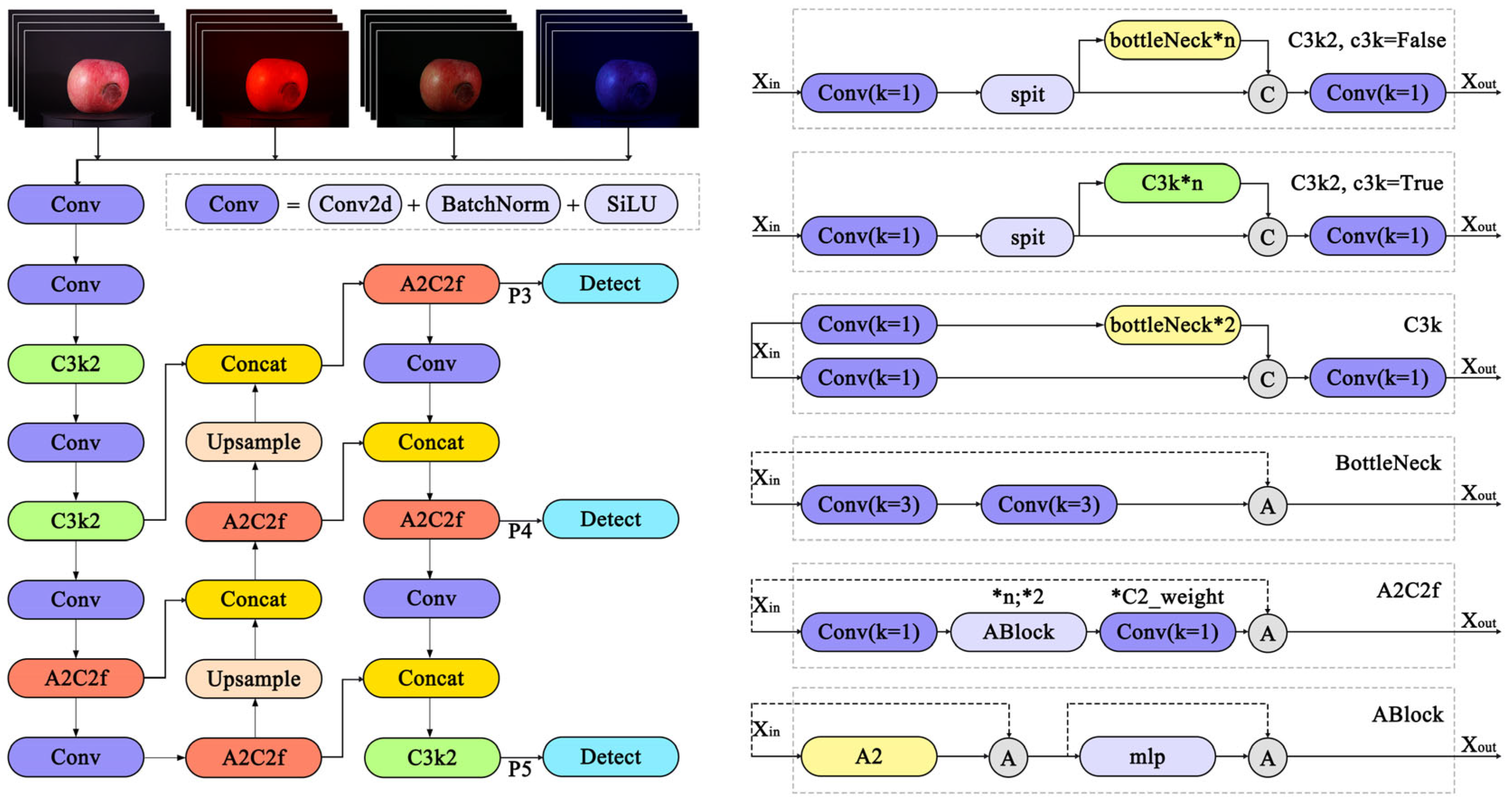
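The conversion formulas used in the study are not reproduced in this text. As a minimal sketch under stated assumptions, the NumPy code below converts an 8-bit sRGB image to HSI using the common geometric hue definition, and to CIELAB via linear sRGB and CIE XYZ with a D65 reference white. The function names, the 8-bit sRGB input, and the output ranges (H in degrees, S and I in [0, 1], L in [0, 100]) are assumptions for illustration; the study may have used a library routine or a different scaling. OpenCV offers `cv2.COLOR_RGB2Lab` but no HSI conversion, and its 8-bit Lab output rescales L to 0–255 and offsets a and b by 128.

```python
import numpy as np

def rgb_to_hsi(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 RGB image to HSI (assumed ranges:
    H in degrees [0, 360), S and I in [0, 1])."""
    x = rgb.astype(np.float64) / 255.0
    r, g, b = x[..., 0], x[..., 1], x[..., 2]

    i = x.mean(axis=-1)                      # intensity = (R + G + B) / 3
    mn = x.min(axis=-1)
    ratio = np.divide(mn, i, out=np.ones_like(i), where=i > 0)
    s = 1.0 - ratio                          # black pixels (I = 0) get S = 0

    num = 0.5 * ((r - g) + (r - b))
    den = np.sqrt(np.maximum((r - g) ** 2 + (r - b) * (g - b), 0.0))
    cos_t = np.divide(num, den, out=np.zeros_like(num), where=den > 0)
    theta = np.degrees(np.arccos(np.clip(cos_t, -1.0, 1.0)))
    h = np.where(b <= g, theta, 360.0 - theta)
    h = np.where(den > 0, h, 0.0)            # hue undefined for gray pixels; set to 0
    return np.stack([h, s, i], axis=-1)

# sRGB (D65) to CIE XYZ matrix and D65 reference white.
_M_RGB_TO_XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                          [0.2126729, 0.7151522, 0.0721750],
                          [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])

def rgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 sRGB image to CIELAB (L in [0, 100])."""
    x = rgb.astype(np.float64) / 255.0
    # Undo the sRGB transfer curve to get linear RGB.
    lin = np.where(x <= 0.04045, x / 12.92, ((x + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M_RGB_TO_XYZ.T / _WHITE_D65  # normalized X/Xn, Y/Yn, Z/Zn
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    l_star = 116.0 * f[..., 1] - 16.0
    a_star = 500.0 * (f[..., 0] - f[..., 1])
    b_star = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([l_star, a_star, b_star], axis=-1)
```

Both functions keep the H x W x 3 layout, so each converted image can still be fed to a three-channel detector, although its channels would normally be rescaled to a common range first; how the reconstructed images were scaled in this study is not stated here.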

2.4. Apple Damage Detection Methods

2.5. Evaluation Methods
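The results tables report precision, recall, mAP50, mAP50-95, and FPS. The study's evaluation code is not given here, so the following is a minimal Python sketch of the standard definitions, assuming axis-aligned boxes in (x1, y1, x2, y2) form, greedy highest-score-first matching per class, and all-point interpolated AP. mAP50 is AP at an IoU threshold of 0.5 averaged over classes; mAP50-95 averages AP over the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. Detection toolkits differ in interpolation details (the COCO evaluation, for instance, samples 101 recall points), so values from this sketch may differ slightly from those reported by a training framework. All helper names are hypothetical.

```python
import numpy as np

def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    return tp / max(tp + fp, 1), tp / max(tp + fn, 1)

def match(pred_boxes, pred_scores, gt_boxes, thr):
    """Greedily match predictions (highest score first) to unused GT boxes."""
    order = np.argsort(-np.asarray(pred_scores, dtype=float), kind="stable")
    used, is_tp = set(), np.zeros(len(pred_boxes), dtype=bool)
    for k in order:
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gt_boxes):
            v = iou(pred_boxes[k], gt)
            if j not in used and v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            used.add(best_j)
            is_tp[k] = True
    return is_tp

def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for one class at one IoU threshold (n_gt > 0)."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / n_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1e-12)
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]  # monotone precision envelope
    idx = np.where(mrec[1:] != mrec[:-1])[0]        # points where recall changes
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))

def ap_50_95(pred_boxes, pred_scores, gt_boxes):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95 (one class, one image)."""
    thresholds = np.linspace(0.50, 0.95, 10)
    return float(np.mean([
        average_precision(pred_scores, match(pred_boxes, pred_scores, gt_boxes, t), len(gt_boxes))
        for t in thresholds]))

def fps(n_images, seconds):
    """Frames per second = images processed / wall-clock inference time."""
    return n_images / seconds
```

For a full dataset, `match` would be run per image and the scores and TP flags concatenated per class before calling `average_precision` with the total ground-truth count; the per-class APs are then averaged over the four classes (intact, scratch, puncture, rot) to give mAP.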

3. Results

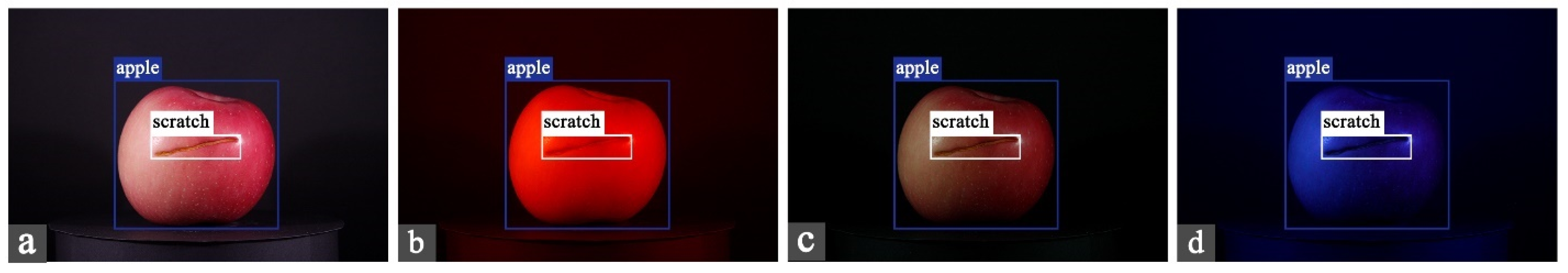

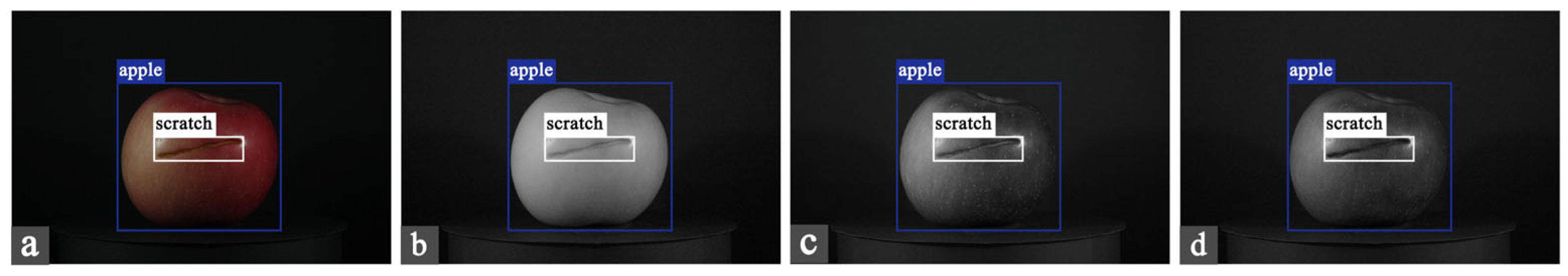

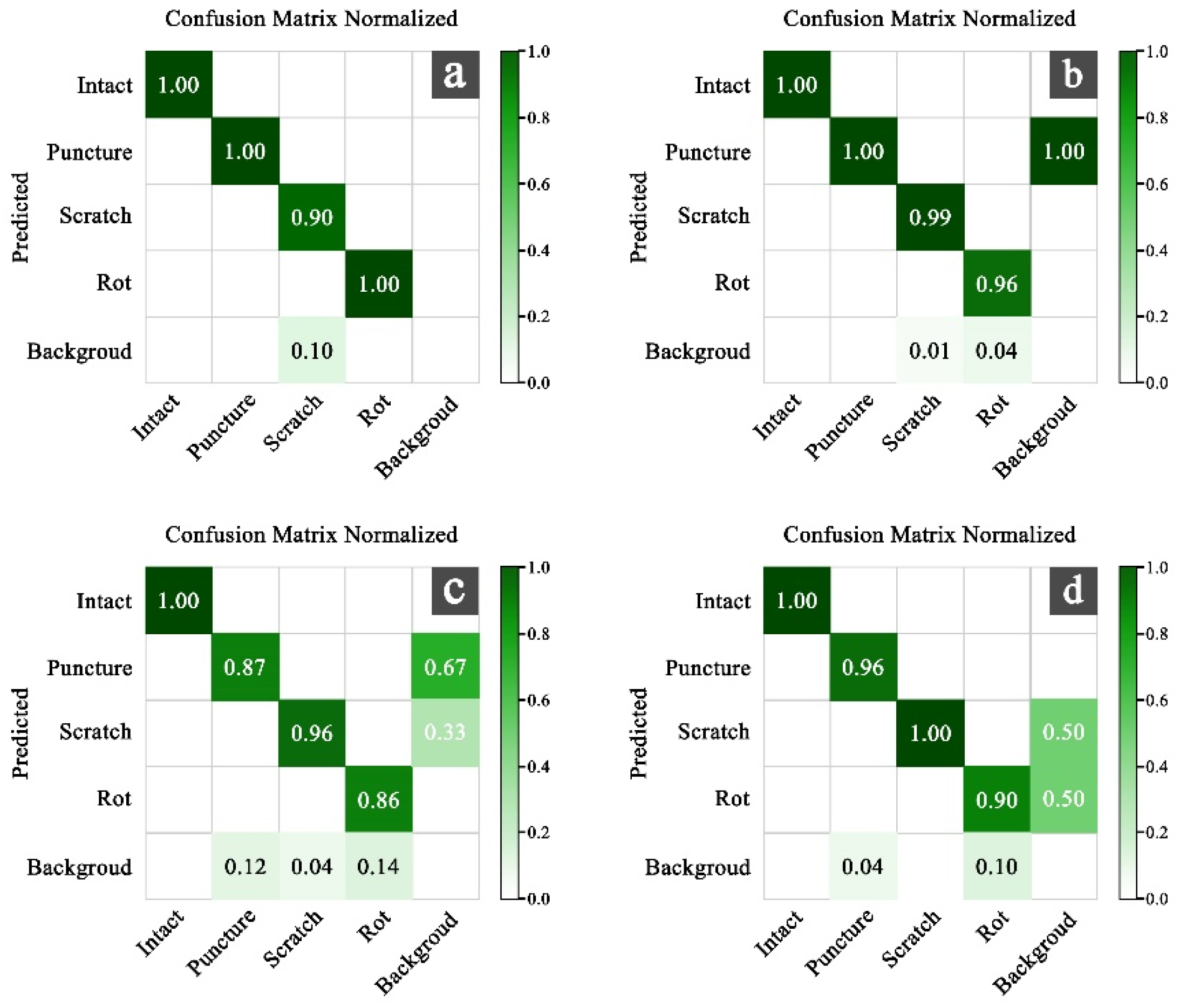

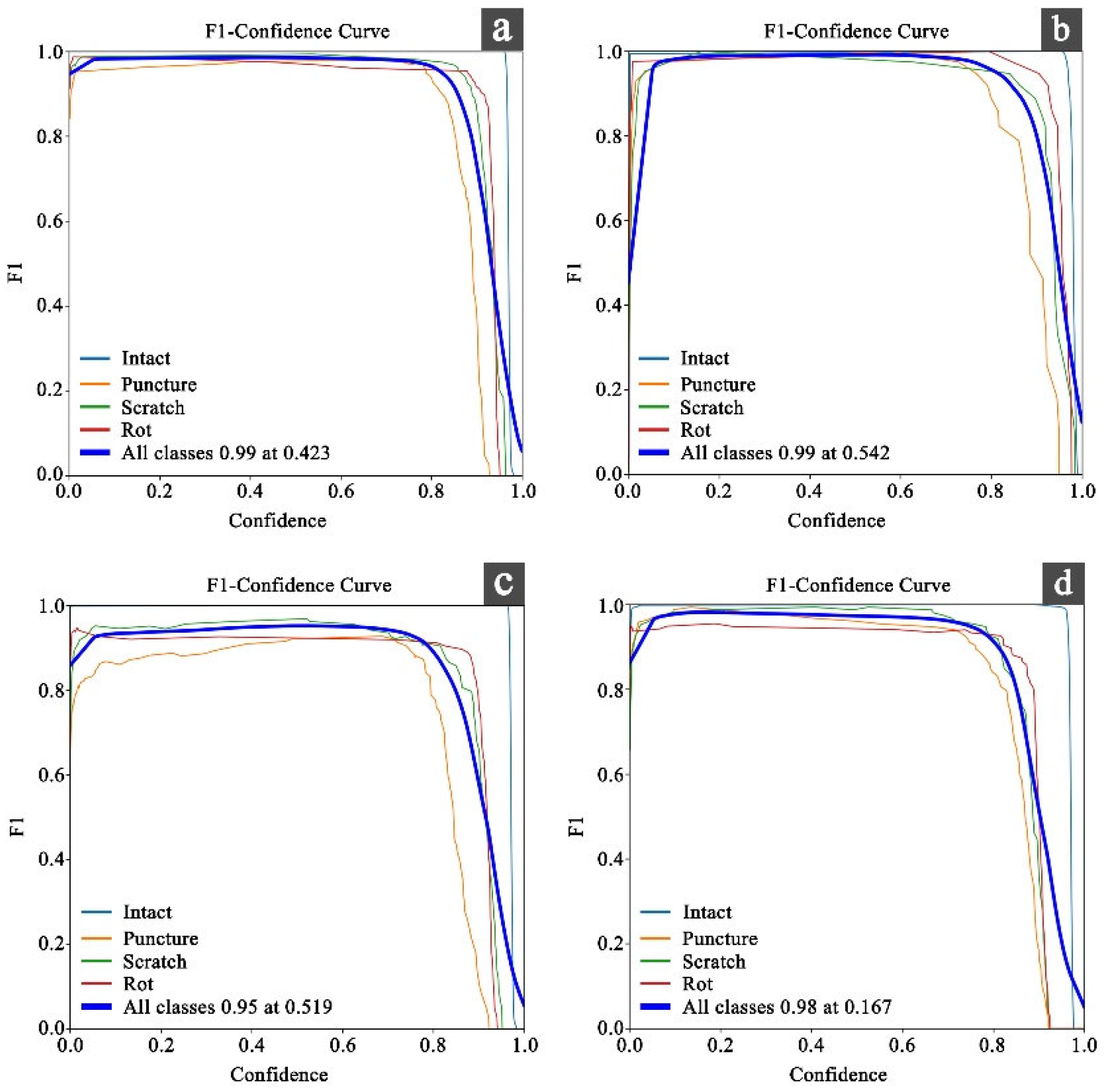

3.1. Comparison of Model Results Under Four Filtered Datasets

3.2. Comparison of Model Results of RGB Channels Under Four Filtering Conditions

3.3. Comparison of Model Results of HSI Channels Under Four Filtering Conditions

3.4. Comparison of Model Results of LAB Channels Under Four Filtering Conditions

3.5. Comparison of Model Results of Green Filters in Four Color Spaces

4. Discussion

4.1. Optimal Filter and Color Space Combination and Its Mechanism of Action

4.2. Comparison with Previous Studies

4.3. Limitations

4.4. Practical Significance and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Type | Number | Treatment |
|---|---|---|
| Intact | 25 | None (left undamaged) |
| Scratch | 25 | Pricked with a screwdriver: depth 5 mm, length 1 cm, width 5 mm. |
| Puncture | 25 | Cut with a knife: depth 2 mm, length 1.5 cm, width 2 mm. |
| Rot | 25 | Struck five times at one fixed spot with the handle end of a screwdriver, then stored indoors at constant temperature for 7 days. |

| Type | No Filter | Red Filter (3000 K) | Green Filter (4500 K) | Blue Filter (6000 K) | Total (images) |
|---|---|---|---|---|---|
| All apples | 400 | 400 | 400 | 400 | 1600 |
| Intact | 100 | 100 | 100 | 100 | 400 |
| Scratch | 100 | 100 | 100 | 100 | 400 |
| Puncture | 100 | 100 | 100 | 100 | 400 |
| Rot | 100 | 100 | 100 | 100 | 400 |

| Model | Class | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|---|
| YOLOv9s | Intact | 95.3% | 92.1% | 93.2% | 85.7% | |
| | Scratch | 88.6% | 75.2% | 82.3% | 60.1% | |
| | Puncture | 92.5% | 88.3% | 90.4% | 55.2% | |
| | Rot | 90.8% | 86.7% | 88.7% | 52.4% | |
| | All | 91.8% | 85.6% | 88.6% | 63.3% | 9.25 |
| YOLOv10s | Intact | 96.1% | 93.5% | 94.8% | 87.2% | |
| | Scratch | 90.2% | 78.5% | 84.6% | 63.5% | |
| | Puncture | 93.4% | 90.1% | 91.7% | 58.3% | |
| | Rot | 91.7% | 88.9% | 90.3% | 55.7% | |
| | All | 92.8% | 87.8% | 90.4% | 66.2% | 8.83 |
| YOLOv11s | Intact | 97.2% | 95.3% | 96.3% | 89.5% | |
| | Scratch | 92.5% | 82.3% | 87.4% | 68.2% | |
| | Puncture | 95.1% | 92.4% | 93.7% | 62.5% | |
| | Rot | 93.6% | 90.8% | 92.2% | 60.3% | |
| | All | 94.6% | 90.2% | 92.4% | 70.1% | 10.12 |
| YOLOv12s | Intact | 99.7% | 100% | 99.5% | 98.8% | |
| | Scratch | 99.2% | 90.0% | 95.8% | 78.0% | |
| | Puncture | 99.7% | 100% | 99.5% | 66.5% | |
| | Rot | 99.0% | 100% | 99.5% | 64.6% | |
| | All | 99.4% | 97.5% | 98.6% | 79.6% | 10.52 |

| Filter | Class | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|---|
| Original | Intact | 99.7% | 100% | 99.5% | 98.8% | |
| | Scratch | 99.2% | 90.0% | 95.8% | 78.0% | |
| | Puncture | 99.7% | 100% | 99.5% | 66.5% | |
| | Rot | 99.0% | 100% | 99.5% | 64.6% | |
| | All | 99.4% | 97.5% | 98.6% | 79.6% | 10.52 |
| R | Intact | 98.5% | 97.0% | 98.0% | 92.0% | |
| | Scratch | 98.5% | 93.0% | 97.0% | 76.0% | |
| | Puncture | 98.0% | 99.0% | 98.5% | 64.0% | |
| | Rot | 97.0% | 96.0% | 96.5% | 75.0% | |
| | All | 98.0% | 96.3% | 97.8% | 76.8% | 8.26 |
| G | Intact | 99.5% | 100% | 99.5% | 99.4% | |
| | Scratch | 100% | 95.5% | 99.5% | 88.2% | |
| | Puncture | 94.5% | 100% | 99.0% | 69.4% | |
| | Rot | 98.3% | 100% | 99.5% | 80.6% | |
| | All | 98.1% | 98.9% | 99.4% | 82.7% | 10.78 |
| B | Intact | 98.5% | 98.0% | 98.5% | 92.0% | |
| | Scratch | 98.0% | 95.0% | 97.0% | 78.0% | |
| | Puncture | 99.0% | 100% | 99.0% | 62.0% | |
| | Rot | 97.5% | 98.0% | 98.0% | 79.0% | |
| | All | 98.1% | 97.0% | 98.1% | 77.8% | 6.74 |

| Configuration | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|
| O-RGB | 98.9% | 99.6% | 99.4% | 79.8% | 13.33 |
| R-RGB | 99.3% | 96.9% | 99.0% | 77.6% | 12.20 |
| G-RGB | 98.8% | 98.5% | 98.9% | 83.1% | 15.15 |
| B-RGB | 98.5% | 99.5% | 99.4% | 80.8% | 11.83 |

| Configuration | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|
| O-HSI | 97.5% | 95.3% | 97.8% | 76.6% | 6.87 |
| R-HSI | 98.7% | 90.3% | 96.9% | 73.3% | 10.78 |
| G-HSI | 98.7% | 92.3% | 97.1% | 79.1% | 7.30 |
| B-HSI | 98.4% | 97.3% | 98.6% | 78.5% | 6.70 |

| Configuration | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|
| O-LAB | 99.0% | 94.0% | 98.0% | 77.2% | 6.70 |
| R-LAB | 97.0% | 98.1% | 99.3% | 78.5% | 10.60 |
| G-LAB | 98.6% | 98.0% | 99.0% | 81.8% | 12.11 |
| B-LAB | 99.4% | 97.7% | 98.5% | 78.8% | 7.69 |

| Configuration | Precision | Recall | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|
| G | 98.1% | 98.9% | 99.4% | 82.7% | 10.78 |
| G-RGB | 98.8% | 98.5% | 98.9% | 83.1% | 15.15 |
| G-HSI | 98.7% | 92.3% | 97.1% | 79.1% | 7.30 |
| G-LAB | 98.6% | 98.0% | 99.0% | 81.8% | 12.11 |
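Contribution (3) states that G-RGB offers the best balance between accuracy and computational efficiency. One simple way to check this against the tabulated results is a Pareto-dominance test on mAP50-95 and FPS: a configuration is discarded if another one is at least as good on both metrics and strictly better on at least one. The sketch below uses the values from the RGB, HSI, LAB, and green-filter tables above; treating these two metrics as the trade-off axes is an assumption made for illustration, not the study's stated selection procedure, and the names `RESULTS`, `dominates`, and `pareto_front` are hypothetical.

```python
# (mAP50-95 in %, FPS) per configuration, copied from the result tables.
RESULTS = {
    "O-RGB": (79.8, 13.33), "R-RGB": (77.6, 12.20), "G-RGB": (83.1, 15.15), "B-RGB": (80.8, 11.83),
    "O-HSI": (76.6, 6.87), "R-HSI": (73.3, 10.78), "G-HSI": (79.1, 7.30), "B-HSI": (78.5, 6.70),
    "O-LAB": (77.2, 6.70), "R-LAB": (78.5, 10.60), "G-LAB": (81.8, 12.11), "B-LAB": (78.8, 7.69),
    "G": (82.7, 10.78),
}

def dominates(a, b):
    """True if a is no worse than b on every metric and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(results):
    """Names of configurations not dominated by any other configuration."""
    return sorted(
        name for name, metrics in results.items()
        if not any(dominates(other, metrics) for o, other in results.items() if o != name)
    )

print(pareto_front(RESULTS))  # ['G-RGB']
```

Under these two metrics G-RGB is the only non-dominated configuration, which is consistent with contribution (3). The result depends on the accuracy metric chosen: by mAP50 alone, O-RGB, B-RGB, and G (99.4%) and R-LAB (99.3%) score higher than G-RGB (98.9%).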

| Class | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|
| Intact | 100% | 100% | 99.5% | 99.5% |
| Scratch | 99.1% | 98.8% | 99.4% | 86.3% |
| Puncture | 95.4% | 100% | 98.2% | 64.4% |
| Rot | 100% | 95.3% | 98.5% | 82.3% |
| All | 98.8% | 98.5% | 98.9% | 83.1% |

| Class | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|
| Intact | 99.7% | 100% | 99.5% | 98.6% |
| Scratch | 99.2% | 90.0% | 95.8% | 78.0% |
| Puncture | 99.7% | 100% | 99.5% | 66.5% |
| Rot | 99.0% | 100% | 99.5% | 64.6% |
| All | 99.4% | 97.5% | 98.6% | 76.9% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Wang, Z.; Zhao, X.; Lu, J.; Cao, Y.; Li, R.; Zhang, T. Enhanced Research on YOLOv12 Detection of Apple Defects by Integrating Filter Imaging and Color Space Reconstruction. Electronics 2025, 14, 4259. https://doi.org/10.3390/electronics14214259
