Unlocking Few-Shot Encrypted Traffic Classification: A Contrastive-Driven Meta-Learning Approach

Abstract

1. Introduction

1.1. The Challenge of Traffic Classification in the Encryption Era

1.2. The Emergence of the Few-Shot Dilemma

1.3. Limitations of Existing Few-Shot Learning Paradigms

- Paradigm A: Standard Meta-Learning. Meta-learning methods like Prototypical Networks [12] and MAML [13] are designed to “learn to learn,” aiming to quickly adapt to new tasks by training on a large number of simulated few-shot tasks. However, these methods typically have to learn a metric-friendly embedding space from scratch (tabula rasa). The feature space of encrypted traffic is notoriously high-dimensional, heterogeneous, and noisy, and the differences between classes can be very subtle (e.g., distinguishing two different VoIP applications). Without strong prior knowledge, requiring a meta-learning model to directly optimize a well-structured embedding space from raw features is an extremely challenging task, often leading to sub-optimal solutions.

- Paradigm B: Pre-Training and Fine-Tuning. This paradigm first pre-trains a general-purpose feature encoder on a large dataset via self-supervised learning (e.g., contrastive learning [14]), and then fine-tunes it on downstream tasks. This approach can learn robust feature representations. However, the standard fine-tuning strategy (e.g., training a linear classifier on a few samples) is not optimized for the specific goal of “fast adaptation from few samples.” It is a generic adaptation method that may not fully unlock the potential of the pre-trained model in scenarios of extreme sample scarcity (K = 1 or K = 5).

1.4. Problem Statement and Key Challenges

- High-Dimensional, Noisy Feature Space: Encrypted traffic features are inherently high-dimensional and contain significant noise, making it difficult to learn a discriminative embedding space, especially with limited data.

- The “Learn to Adapt” Dilemma: The model must not only learn robust features but also learn the meta-skill of adapting to new classes quickly and efficiently, a task for which standard fine-tuning is ill-suited.

- Decoupling Representation and Adaptation: A single-stage training process often forces the model to learn feature representation and few-shot adaptation simultaneously, leading to sub-optimal performance. A clear decoupling strategy is needed.

1.5. Our Work and Contributions

- 1.

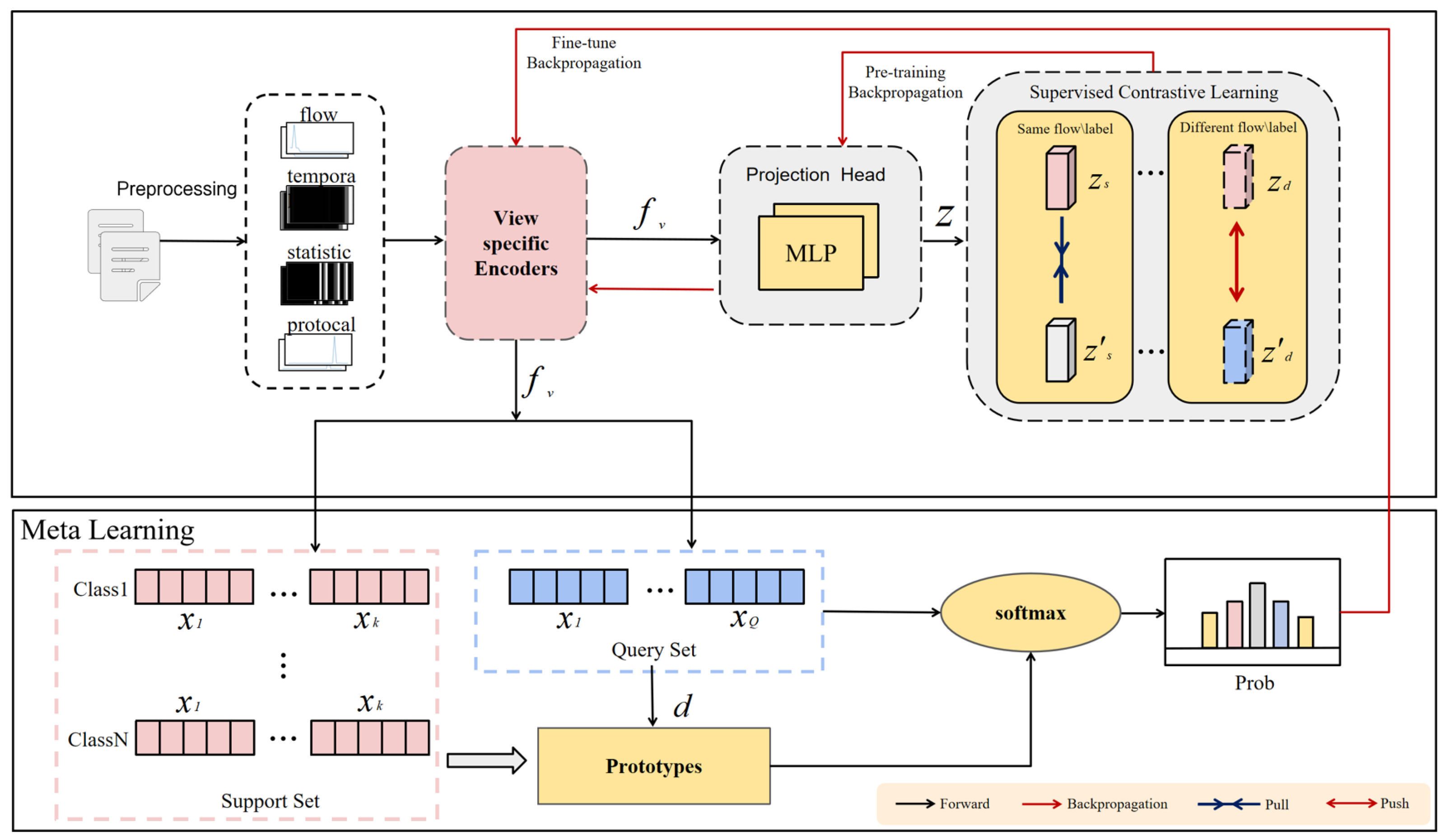

- A Domain-Adapted Framework for Few-Shot Learning: We propose CL-MetaFlow, a novel two-stage framework that synergizes supervised contrastive learning with meta-learning. This design is specifically tailored to the domain of encrypted traffic, effectively decoupling the complex processes of representation learning and few-shot adaptation.

- 2.

- Systematic Multi-View Feature Analysis: We provide a detailed methodology and analysis of the multi-view feature fusion strategy. Our work clarifies how diverse, non-payload-based traffic features are processed and integrated to form a comprehensive and discriminative representation, a critical aspect often overlooked in the related literature.

- 3.

- Rigorous Empirical Validation and Benchmarking: We conduct extensive experiments on a challenging, real-world benchmark. The results rigorously validate that CL-MetaFlow consistently and significantly outperforms a variety of established baselines, setting a new performance benchmark for this specific problem domain.

- 4.

- In-Depth Ablation and Insight: Through a deeper analysis of our ablation studies, we not only quantify the contribution of each system component but also provide critical insights into the hierarchical importance of different feature views in encrypted traffic, revealing the underlying mechanisms of our framework’s success.

1.6. Paper Structure

2. Related Work

2.1. Machine Learning-Based Encrypted Traffic Classification

2.2. Few-Shot Learning in Cybersecurity

2.3. Contrastive Learning in Network Traffic Analysis

3. Preliminaries

3.1. Problem Definition: Encrypted Traffic Session

- Flow View: represents the behavioral “shape” of the communication, capturing features derived from the sequence of packet sizes and directions.

- Temporal View: represents the “rhythm” of the communication, including not only inter-arrival times (IATs) but also features related to traffic bursts and periodicity.

- Statistical View: a comprehensive feature vector containing over 20 aggregated statistical values, such as flow duration, total packets, packet size distribution, and payload entropy.

- Protocol View: refers to observable parameters from the transport and handshake layers, such as TCP flag sequences and direction change patterns.
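As a rough illustration of how such views might be derived from a captured session, the sketch below builds a flow vector, a temporal vector, and one protocol-style feature from packet metadata only. The `Packet` fields, the function names, and the zero-padding scheme are assumptions made for this example and are not taken from the paper; the default length of 32 simply mirrors the 32-dimensional view vectors mentioned in the implementation details.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    """Hypothetical per-packet metadata; no payload bytes are used."""
    timestamp: float  # seconds since the start of the capture
    size: int         # packet length in bytes
    outbound: bool    # True if sent by the client


def flow_view(packets: List[Packet], length: int = 32) -> List[float]:
    """Signed packet sizes (+ outbound, - inbound), zero-padded to `length`."""
    signed = [float(p.size if p.outbound else -p.size) for p in packets[:length]]
    return signed + [0.0] * (length - len(signed))


def temporal_view(packets: List[Packet], length: int = 32) -> List[float]:
    """Inter-arrival times between consecutive packets, zero-padded to `length`."""
    iats = [b.timestamp - a.timestamp for a, b in zip(packets, packets[1:])]
    iats = iats[:length]
    return iats + [0.0] * (length - len(iats))


def direction_changes(packets: List[Packet]) -> int:
    """Number of times the sender flips between client and server."""
    return sum(1 for a, b in zip(packets, packets[1:]) if a.outbound != b.outbound)


session = [
    Packet(0.0, 100, True),
    Packet(0.5, 1500, False),
    Packet(0.75, 60, True),
]
flow = flow_view(session, length=4)
temporal = temporal_view(session, length=4)
changes = direction_changes(session)
```

Burst, periodicity, and aggregated statistical features would be computed in the same spirit from packet sizes and timestamps.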

3.2. Few-Shot Encrypted Traffic Classification Task (N-Way K-Shot)

- A Support Set, which contains N classes sampled from the available class set, with K labeled samples for each class.

- A Query Set, which contains unlabeled samples from the same N classes, used for evaluation.
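The construction of a single N-way K-shot task can be sketched as follows. This is a minimal illustration, not the authors' sampling code: `sample_episode`, `data_by_class`, and the toy class names are hypothetical, and the query labels are kept only so that predictions can be scored afterwards.

```python
import random
from typing import Dict, List, Tuple

Sample = Tuple[int, int]  # (sample id, episode-local label)


def sample_episode(
    data_by_class: Dict[str, List[int]],
    n_way: int,
    k_shot: int,
    n_query: int,
    rng: random.Random,
) -> Tuple[List[Sample], List[Sample]]:
    """Draw one episode: N classes, K support and n_query query samples each."""
    classes = rng.sample(sorted(data_by_class), n_way)
    support: List[Sample] = []
    query: List[Sample] = []
    for label, cls in enumerate(classes):
        # Sample without replacement so support and query never overlap.
        picked = rng.sample(data_by_class[cls], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query


# Toy pool: 7 classes with 30 session ids each.
pool = {f"app{i}": list(range(i * 100, i * 100 + 30)) for i in range(7)}
support_set, query_set = sample_episode(
    pool, n_way=5, k_shot=1, n_query=20, rng=random.Random(0)
)
```

With `n_way=5`, `k_shot=1`, and `n_query=20`, the episode holds 5 support samples and 100 query samples.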

4. The CL-MetaFlow Framework

4.1. Design Rationale: Why a Two-Stage Approach?

- Challenge for Meta-Learning: Applying meta-learning directly to raw, multi-view encrypted traffic data forces the model to perform two difficult tasks simultaneously: (1) learn a meaningful feature representation from high-dimensional, noisy, and heterogeneous inputs, and (2) learn how to perform few-shot classification within that representation space. This makes the optimization process extremely difficult, often preventing the model from converging to an embedding space where classes are naturally separated.

- Inadequacy of Pre-training: While the traditional “pre-train and fine-tune” paradigm can provide good features through self-supervised learning, its fine-tuning process (e.g., training a linear classifier) is geared towards fitting large amounts of data and is not optimized for the core meta-learning problem of “how to most efficiently extract information from K samples.” This limits its fast adaptation capabilities when samples are extremely scarce.

4.2. Stage 1: Robust Representation Pre-Training via Supervised Contrastive Learning

4.3. Stage 2: Fast Meta-Learning Adaptation

4.3.1. Justification and Mechanism for Multi-View Feature Fusion

4.3.2. Justification and Adaptation with Prototypical Networks

1. Prototype Calculation: We feed all samples from the support set into the pre-trained encoder and fusion layer to obtain their fused embeddings $z_i$. For each class $n$, we compute its prototype $c_n$ by averaging the embeddings of its K samples:

$$c_n = \frac{1}{K} \sum_{i:\, y_i = n} z_i$$

2. Query Classification: For any query sample $x_q$ with fused embedding $z_q$, we compute the probability distribution over classes by applying a Softmax function to the negative squared Euclidean distance between its embedding and all N class prototypes:

$$p(y = n \mid x_q) = \frac{\exp\left(-d(z_q, c_n)\right)}{\sum_{n'=1}^{N} \exp\left(-d(z_q, c_{n'})\right)}$$

where $d(z_q, c_n) = \lVert z_q - c_n \rVert_2^2$ is the squared Euclidean distance.

3. Loss and Optimization: The loss is the standard cross-entropy loss on the query set. During the meta-training phase, we perform this process on tasks sampled from the meta-training classes and fine-tune the parameters of the encoder. During the meta-testing phase, we use tasks sampled from the meta-test classes to evaluate the final performance.
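The three steps above can be sketched in plain Python. This is an illustrative sketch under simplifying assumptions: embeddings are plain lists of floats standing in for the output of the encoder and fusion layer, the function names are hypothetical, and no gradient step is shown.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Embedding = List[float]


def prototypes(support: List[Tuple[Embedding, int]]) -> Dict[int, Embedding]:
    """Step 1: average the support embeddings of each class."""
    groups: Dict[int, List[Embedding]] = defaultdict(list)
    for z, y in support:
        groups[y].append(z)
    return {y: [sum(col) / len(zs) for col in zip(*zs)] for y, zs in groups.items()}


def sq_dist(a: Embedding, b: Embedding) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def class_probs(z_query: Embedding, protos: Dict[int, Embedding]) -> Dict[int, float]:
    """Step 2: softmax over negative squared distances to every prototype."""
    logits = {y: -sq_dist(z_query, c) for y, c in protos.items()}
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {y: math.exp(v - m) for y, v in logits.items()}
    total = sum(exps.values())
    return {y: e / total for y, e in exps.items()}


def query_loss(query: List[Tuple[Embedding, int]], protos: Dict[int, Embedding]) -> float:
    """Step 3: mean cross-entropy of the true class over the query set."""
    return -sum(math.log(class_probs(z, protos)[y]) for z, y in query) / len(query)


support = [([0.0, 0.0], 0), ([2.0, 0.0], 0), ([10.0, 10.0], 1), ([12.0, 10.0], 1)]
protos = prototypes(support)
probs = class_probs([1.0, 1.0], protos)
loss = query_loss([([1.0, 1.0], 0)], protos)
```

Because the classifier has no parameters of its own beyond the encoder, adapting to a new task only requires recomputing the class means from the K support samples.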

4.4. Overall Algorithm

Algorithm 1: The CL-MetaFlow Algorithm

5. Experiments

5.1. Experimental Setup

- Dataset: We use the publicly available ISCX-VPN-2016 [34] and ISCX-Tor-2017 [35] datasets. After preprocessing and cleaning, our combined dataset contains approximately 25,000 traffic sessions distributed across 17 application classes. The class distribution is naturally imbalanced, reflecting real-world scenarios where some applications are far more common than others. This imbalance makes the classification task more challenging and realistic. We randomly select 12 classes as the meta-training set and the remaining 5 as the meta-test set, ensuring no class overlap.

- Evaluation Protocol: We evaluate the models on 5-way K-shot classification tasks, where K is set to 1, 5, 10, and 15. This setting is a standard and widely adopted benchmark in the few-shot learning literature, representing a challenging scenario where the model must distinguish between 5 novel classes with very limited support data. For each task, we sample 20 query instances per class for evaluation. All reported results are averaged over 1000 independently generated test episodes to reduce the variance of the estimates. We choose Macro F1-Score as the primary metric. The F1-Score is the harmonic mean of precision and recall, providing a more balanced measure than accuracy alone. Specifically, the Macro F1-Score computes the F1-Score for each class independently and then averages them, giving equal weight to each class. This is crucial for our imbalanced dataset, as it prevents the model’s performance on dominant classes from masking poor performance on rare classes. To further ensure a comprehensive evaluation on the imbalanced data, we also report Balanced Accuracy in our detailed logs, which confirms the trends observed with the Macro F1-Score.

- Baseline Methods: To provide a comprehensive evaluation, we compare our model against a wide range of representative baselines spanning different learning paradigms.

  - ProtoNet [12]: A classic metric-based meta-learning method that learns an embedding space from scratch.

  - MAML [13]: A classic optimization-based meta-learning method that learns a good model initialization for fast adaptation.

  - AE + ProtoNet: A baseline that first pre-trains an Autoencoder with a reconstruction loss, then uses the encoder as the backbone for a Prototypical Network. This tests whether simple reconstructive pre-training is sufficient.

  - SimCLR + ProtoNet: A strong baseline using a powerful self-supervised method, SimCLR [14], for pre-training. This helps quantify the benefit of using supervised contrastive learning over unsupervised contrastive learning.

  - Meta-Baseline [36]: A powerful pre-training baseline that uses a standard classifier on pre-trained features and then performs nearest-neighbor classification on the support set.

  - CL(Fine-tune): This baseline uses our SupCon pre-trained encoder but follows a standard fine-tuning procedure (training a new linear classifier on K-shot samples). This is crucial for isolating the contribution of our meta-adaptation stage.

  - T-Sanitation (re-impl.) [21]: Our re-implementation of a recent SOTA method that uses a Masked Autoencoder (MAE) for contrastive pre-training, followed by few-shot adaptation. This provides a direct comparison with a state-of-the-art external approach.

- Implementation Details: Our framework is implemented in PyTorch (version 2.1.2). For each view, we use a separate two-layer MLP as the encoder. This MLP takes the 32-dimensional view vector as input, passes it through a 512-dimensional hidden layer with ReLU activation, and outputs a 256-dimensional feature embedding. The projection head is also a two-layer MLP, mapping the 256-dim embedding to the final 128-dim projection space. The pre-training stage (Stage 1) uses the Adam optimizer with a learning rate of 1 for 200 epochs. The meta-learning stage (Stage 2) also uses Adam with a learning rate of 1 for 100 epochs. The contrastive loss temperature is a learnable parameter initialized to 0.07.
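To make the choice of metric in the evaluation protocol concrete, the following sketch computes the Macro F1-Score next to plain accuracy on a small imbalanced example. The function names and the toy labels are illustrative assumptions, not the paper's evaluation code; a class with no true positives is given an F1 of zero.

```python
from typing import List, Sequence


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores: List[float] = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class is never hit.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


# A degenerate classifier that always predicts the dominant class 0.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0]
f1 = macro_f1(y_true, y_pred)
acc = accuracy(y_true, y_pred)
```

In this toy case accuracy is about 0.67 while the Macro F1-Score is 0.4, because the missed rare class contributes an F1 of zero with the same weight as the dominant class.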

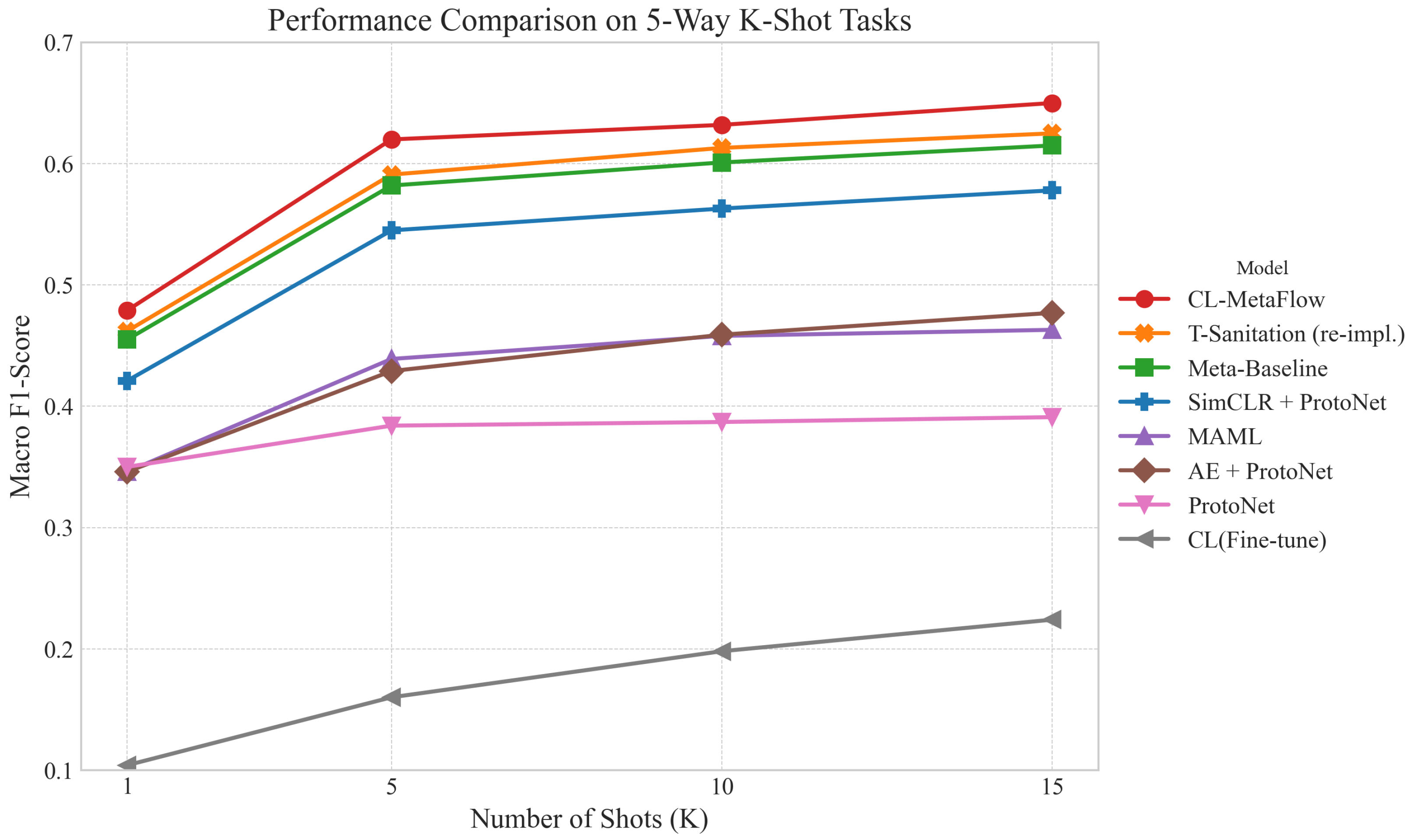

5.2. Overall Performance Comparison

1. The Necessity of Pre-training: CL-MetaFlow vastly outperforms from-scratch methods like ProtoNet and MAML (e.g., an absolute Macro F1-Score improvement of 0.236, i.e., 23.6 percentage points, over ProtoNet in the five-shot case). This indicates that learning from scratch is ineffective for complex traffic data; a strong feature prior is essential.

2. The Superiority of Supervised Contrastive Pre-training: Our method clearly outperforms both reconstructive pre-training (AE + ProtoNet) and unsupervised contrastive pre-training (SimCLR + ProtoNet). The gap between CL-MetaFlow (0.620) and SimCLR + ProtoNet (0.545) in the five-shot setting highlights that leveraging base class labels during pre-training via SupCon is critical for shaping a more discriminative feature space tailored for classification.

3. The Necessity of Meta-Learning Adaptation: The most thought-provoking comparison is with CL(Fine-tune). Despite using the same pre-trained encoder, the traditional fine-tuning strategy leads to a catastrophic performance collapse (0.160 in the five-shot setting) due to severe overfitting. In contrast, non-parametric adaptation methods like Meta-Baseline (0.582) and our Prototypical Network-based approach (0.620) are far more robust and effective. This supports the view that a meta-learning adaptation strategy is better suited to unlocking the potential of the pre-trained model.

4. Excellent Data Efficiency: A remarkable finding is the data efficiency of our framework. CL-MetaFlow, when given only a single support sample per class (K = 1, F1-Score ≈ 0.48), already outperforms a standard ProtoNet given fifteen samples per class (K = 15, F1-Score ≈ 0.39). This demonstrates a powerful generalization capability, indicating that the structured feature space allows the model to extract significantly more discriminative information from each scarce sample.

Comparison with State-of-the-Art Approaches

5.3. Ablation Study and Deeper Analysis

5.3.1. Analysis of Feature View Contribution

- All Views Provide Value: The removal of any single view results in a performance degradation, which confirms that our multi-view representation is effective and its components are complementary rather than redundant.

- Primacy of Behavioral Patterns: The Flow view is the most critical feature, with its removal causing the largest performance drop: 0.0951 in absolute Macro F1-Score (9.51 percentage points). This strongly indicates that the sequence of packet sizes and directions contains the most discriminative information.

- Hierarchy of Importance: The performance drops reveal a clear hierarchy of feature importance: Flow > Temporal > Protocol > Statistical. This deep analysis validates our multi-view design and provides valuable insights for future feature engineering in encrypted traffic analysis.
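The hierarchy can be reproduced directly from the reported ablation scores. The short sketch below recomputes each absolute drop and also expresses it relative to the all-views baseline; the variable names are illustrative, and the numbers are the Macro F1-Scores reported in the view-ablation table.

```python
# Macro F1-Scores from the view-ablation table (five-shot setting).
baseline_f1 = 0.6197
f1_without_view = {
    "Flow": 0.5246,
    "Temporal": 0.5727,
    "Protocol": 0.5848,
    "Statistical": 0.6088,
}

# Absolute drop in Macro F1 when a single view is removed.
absolute_drop = {
    view: round(baseline_f1 - f1, 4) for view, f1 in f1_without_view.items()
}

# The same drop expressed as a fraction of the all-views baseline.
relative_drop = {
    view: drop / baseline_f1 for view, drop in absolute_drop.items()
}

# Views ordered from most to least important.
ranking = sorted(absolute_drop, key=absolute_drop.get, reverse=True)
```

The resulting order is Flow, Temporal, Protocol, Statistical. Note that the Flow drop of 0.0951 is an absolute difference; relative to the baseline of 0.6197 it corresponds to roughly 15%.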

5.3.2. Analysis of Feature Fusion Method

5.3.3. Analysis of Non-Linear Adapter

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Rescorla, E. The Transport Layer Security (TLS) Protocol Version 1.3; Technical Report, RFC 8446; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2018.

2. Iyengar, J.; Thomson, M. QUIC: A UDP-Based Multiplexed and Secure Transport; Technical Report, RFC 9000; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2021.

3. Aboaoja, F.A.; Zainal, A.; Ghaleb, F.A.; Al-Rimy, B.A.S.; Eisa, T.A.E.; Elnour, A.A.H. Malware detection issues, challenges, and future directions: A survey. Appl. Sci. 2022, 12, 8482.

4. Song, Y.; Zhang, D.; Wang, J.; Wang, Y.; Wang, Y.; Ding, P. Application of deep learning in malware detection: A review. J. Big Data 2025, 12, 99.

5. Zhang, D.; Song, Y.; Xiang, Q.; Wang, Y. IMCMK-CNN: A lightweight convolutional neural network with Multi-scale Kernels for Image-based Malware Classification. Alex. Eng. J. 2025, 111, 203–220.

6. Wang, K.; Song, Y.; Xu, Y.; Quan, W.; Ni, P.; Wng, P.; Li, C.; Zhi, X. A novel automated neural network architecture search method of air target intent recognition. Chin. J. Aeronaut. 2025, 38, 103295.

7. Li, C.; Wang, K.; Song, Y.; Wang, P.; Li, L. Air target intent recognition method combining graphing time series and diffusion models. Chin. J. Aeronaut. 2025, 38, 103177.

8. Li, S.; Wang, J.; Song, Y.; Wang, S.; Wang, Y. A Lightweight Model for Malicious Code Classification Based on Structural Reparameterisation and Large Convolutional Kernels. Int. J. Comput. Intell. Syst. 2024, 17, 30.

9. Lotfollahi, M.; Jafari Siavoshani, M.; Zade Shirazi, R.S.; Saberian, M. Deep packet: A novel approach for encrypted traffic classification using deep learning. Soft Comput. 2020, 24, 1999–2012.

10. Liu, C.; He, L.; Xiong, G.; Cao, Z. Fs-net: A flow sequence network for encrypted traffic classification. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019.

11. Rachit Bhatt, S.; Ragiri, P.R. Security trends in Internet of Things: A survey. SN Appl. Sci. 2021, 3, 121.

12. Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

13. Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

14. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

15. Lin, X.; Xiong, G.; Gou, G.; Li, Z.; Shi, J.; Yu, J. Et-bert: A contextualized datagram representation with pre-training transformers for encrypted traffic classification. In Proceedings of the ACM Web Conference 2022, Virtual, 25–29 April 2022; pp. 633–642.

16. Jin, Z.; Liang, Z.; He, M.; Peng, Y.; Xue, H.; Wang, Y. A federated semi-supervised learning approach for network traffic classification. Int. J. Netw. Manag. 2023, 33, e2222.

17. Joarder, Y.A.; Fung, C. Exploring quic security and privacy: A comprehensive survey on quic security and privacy vulnerabilities, threats, attacks and future research directions. IEEE Trans. Netw. Serv. Manag. 2024, 21, 6953–6973.

18. Macas, M.; Wu, C.; Fuertes, W. Adversarial examples: A survey of attacks and defenses in deep learning-enabled cybersecurity systems. Expert Syst. Appl. 2024, 238, 122223.

19. Hu, X.; Gao, Y.; Cheng, G.; Wu, H.; Li, R. An Adversarial Learning-based Tor Malware Traffic Detection Model. In Proceedings of the GLOBECOM 2022—2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 74–79.

20. Peng, S.; Wang, L.; Shuai, W.; Song, H.; Zhou, J.; Yu, S.; Xuan, Q. Hierarchical Local-Global Feature Learning for Few-shot Malicious Traffic Detection. arXiv 2025, arXiv:2504.03742.

21. Sun, J.; Zhang, B.; Li, H.; Yuan, L.; Chang, H. T-Sanitation: Contrastive masked auto-encoder-based few-shot learning for malicious traffic detection. J. Supercomput. 2025, 81, 727.

22. Chen, J.; Mi, R.; Wang, H.; Wu, H.; Mo, J.; Guo, J.; Lai, Z.; Zhang, L.; Leung, V.C.M. A review of few-shot and zero-shot learning for node classification in social networks. IEEE Trans. Comput. Soc. Syst. 2024, 12, 1927–1941.

23. Wang, H.Q.; Li, J.; Huang, D.H.; Tao, Y.D. Meta-IDS: Meta-Learning Automotive Intrusion Detection Systems with Adaptive and Learnable. Peer-Netw. Appl. 2025, 18, 152.

24. Yang, J.; Li, H.; Shao, S.; Zou, F.; Wu, Y. FS-IDS: A framework for intrusion detection based on few-shot learning. Comput. Secur. 2022, 122, 102899.

25. Xu, C.; Shen, J.; Du, X. A Method of Few-Shot Network Intrusion Detection Based on Meta-Learning Framework. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3540–3552.

26. Lu, C.; Wang, X.; Yang, A.; Liu, Y.; Dong, Z. A few-shot-based model-agnostic meta-learning for intrusion detection in security of internet of things. IEEE Internet Things J. 2023, 10, 21309–21321.

27. Li, G.; Wang, M. A Meta-learning Approach for Few-shot Network Intrusion Detection Using Depthwise Separable Convolution. J. ICT Stand. 2024, 12, 443–470.

28. Zhou, K.; Lin, X.; Wu, J.; Bashir, A.K.; Li, J.; Imran, M. Metric Learning-based Few-Shot Malicious Node Detection for IoT Backhaul/Fronthaul Networks. In Proceedings of the GLOBECOM 2022—2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 5777–5782.

29. Bovenzi, G.; Di Monda, D.; Montieri, A.; Pescapè, A. Classifying attack traffic in IoT environments via few-shot learning. J. Inf. Secur. Appl. 2024, 83, 103762.

30. Li, H.; Bai, Y.; Zhao, Y.; Xu, Y. MetaCL: A semi-supervised meta learning architecture via contrastive learning. Int. J. Mach. Learn. Cybern. 2024, 15, 227–236.

31. Liu, H.; Feng, J.; Kong, L.; Tao, D.; Chen, Y.; Zhang, M. Graph Contrastive Learning Meets Graph Meta Learning: A Unified Method for Few-shot Node Tasks. In Proceedings of the ACM Web Conference 2024, Virtual, 13–17 May 2024.

32. Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

33. Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised contrastive learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 18661–18673.

34. Gil, G.D.; Lashkari, A.H.; Mamun, M.; Ghorbani, A.A. Characterization of encrypted and VPN traffic using time-related features. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy (ICISSP 2016), Rome, Italy, 19–21 February 2016; pp. 407–414.

35. Lashkari, A.H.; Gil, G.D.; Mamun, M.S.; Ghorbani, A.A. Characterization of tor traffic using time based features. In Proceedings of the International Conference on Information Systems Security and Privacy, Porto, Portugal, 19–21 February 2017; Volume 2, pp. 253–262.

36. Chen, Y.; Liu, Z.; Xu, H.; Darrell, T.; Wang, X. Meta-baseline: Exploring simple meta-learning for few-shot learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 9062–9071.

| Work | Method | Focus | Limitation |
|---|---|---|---|
| Deep Packet [9] | CNN+SAE | Application Identification | Requires large labeled dataset |
| FS-Net [10] | Flow Sequence Network | Application Identification | Requires large labeled dataset |
| ET-BERT [15] | Transformer-based | General Representation | Not optimized for few-shot |
| T-Sanitation [21] | MAE+FSL | Malicious Traffic Detection | Self-supervised, no label use |
| HLGF [20] | Hierarchical GNN | Malicious Traffic Detection | Complex, specialized architecture |
| CL-MetaFlow (Ours) | SupCon+ProtoNet | Few-shot App. ID | - |

| Model Framework | K = 1 Shot | K = 5 Shots | K = 10 Shots | K = 15 Shots |
|---|---|---|---|---|
| From Scratch (no pre-training) | | | | |
| ProtoNet | 0.350 | 0.384 | 0.387 | 0.391 |
| MAML | 0.346 | 0.439 | 0.458 | 0.463 |
| Pre-trained and Adapted Paradigms | | | | |
| AE + ProtoNet | 0.346 | 0.429 | 0.459 | 0.477 |
| SimCLR + ProtoNet | 0.421 | 0.545 | 0.563 | 0.578 |
| Meta-Baseline | 0.455 | 0.582 | 0.601 | 0.615 |
| CL(Fine-tune) | 0.104 | 0.160 | 0.198 | 0.224 |
| T-Sanitation (re-impl.) | 0.462 | 0.591 | 0.613 | 0.625 |
| CL-MetaFlow (Ours) | 0.479 | 0.620 | 0.632 | 0.650 |

| Experiment Setting | Macro F1-Score | Drop (ΔF1) |
|---|---|---|
| Baseline (All views) | 0.6197 | - |
| Removing Flow View | 0.5246 | −0.0951 |
| Removing Temporal View | 0.5727 | −0.0470 |
| Removing Protocol View | 0.5848 | −0.0349 |
| Removing Statistical View | 0.6088 | −0.0109 |

| Fusion Method | Macro F1-Score |
|---|---|
| Concatenation (Ours) | 0.6197 |
| Attention-based Fusion | 0.6152 |

| Adapter Method | Macro F1-Score |
|---|---|
| Prototype Distance (Ours) | 0.6197 |
| Non-linear MLP Adapter | 0.5985 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Z.; Wang, J.; Song, Y.-F.; Yue, S.-H. Unlocking Few-Shot Encrypted Traffic Classification: A Contrastive-Driven Meta-Learning Approach. Electronics 2025, 14, 4245. https://doi.org/10.3390/electronics14214245
