Progressive Prompt Generative Graph Convolutional Network for Aspect-Based Sentiment Quadruple Prediction

Abstract

1. Introduction

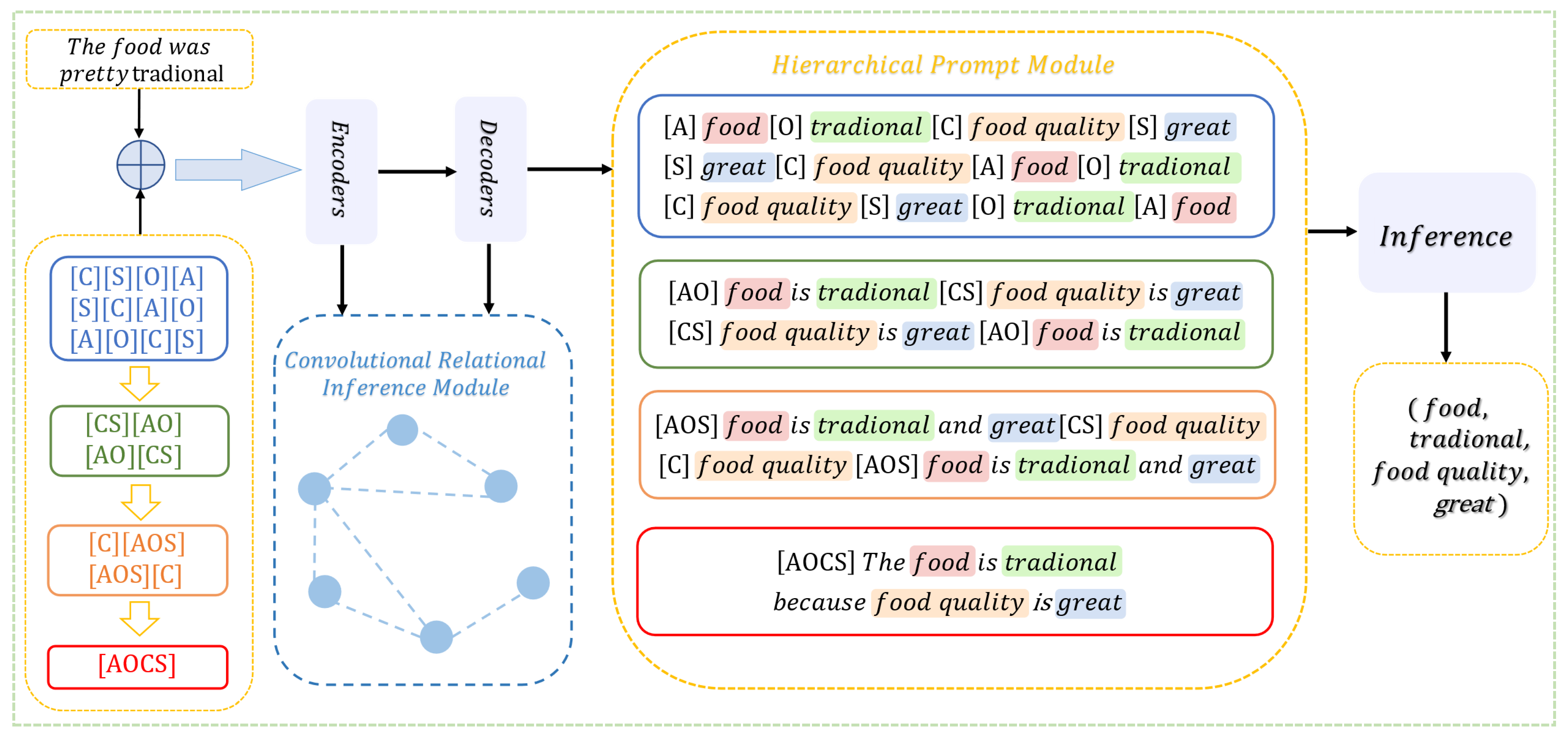

- ProPGCN uses progressive prompt templates to generate paradigm expressions of the corresponding orders and introduces third-order element prompt templates that associate high-order semantics within a sentence, providing a bridge for modeling its global semantics.

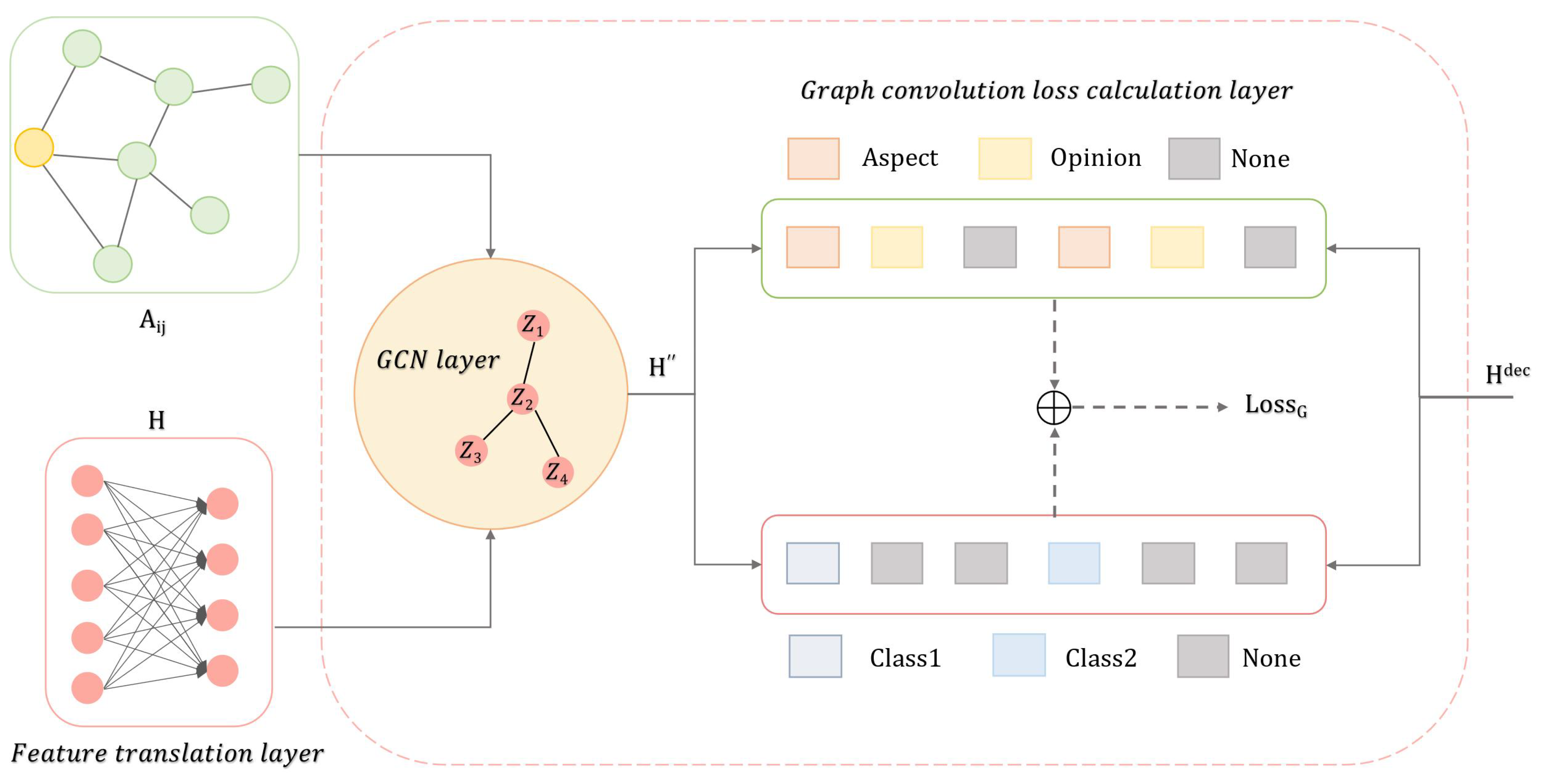

- A graph convolutional relational inference (GRI) module is designed. It exploits the dependency information of the context to improve the recognition of implicit aspects and implicit opinions.

- A graph convolution aggregation module is constructed. It uses a graph convolutional network to aggregate information from adjacent nodes and to correct conflicting implicit logical relationships. A weighted balancing loss function adjusts the influence of the multi-order prompting tasks on the model, and constrained decoding is used to generate the final quadruples.
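The idea of constrained decoding can be illustrated with a minimal sketch. It assumes greedy, word-level decoding with one word per slot and the slot order (aspect, category, sentiment, opinion) used in the case study; the function names, the rule set, and the toy scores are hypothetical and do not reproduce ProPGCN's actual decoder. At each slot, candidates outside an allowed set are discarded: aspect and opinion terms must be copied from the input sentence or be NULL, the category must come from a closed inventory, and the sentiment must be POS, NEU, or NEG.

```python
from typing import Dict, Iterable, List, Set, Tuple

SENTIMENTS = {"POS", "NEU", "NEG"}
SLOTS = ("aspect", "category", "sentiment", "opinion")


def allowed_tokens(slot: str, sentence: List[str], categories: Iterable[str]) -> Set[str]:
    """Vocabulary the decoder may emit for one quadruple slot (hypothetical rule set)."""
    if slot in ("aspect", "opinion"):
        return set(sentence) | {"NULL"}  # copy from the input, or NULL for an implicit element
    if slot == "category":
        return set(categories)  # closed category inventory
    if slot == "sentiment":
        return set(SENTIMENTS)
    raise ValueError(f"unknown slot: {slot}")


def constrained_pick(scores: Dict[str, float], allowed: Set[str]) -> str:
    """Greedy step: highest-scoring candidate among the allowed tokens only."""
    candidates = {tok: s for tok, s in scores.items() if tok in allowed}
    if not candidates:
        raise ValueError("no allowed candidate was scored")
    return max(candidates, key=candidates.get)


def decode_quadruple(step_scores: List[Dict[str, float]], sentence: List[str],
                     categories: Iterable[str]) -> Tuple[str, ...]:
    """Decode (aspect, category, sentiment, opinion), one constrained slot at a time."""
    cats = list(categories)
    return tuple(constrained_pick(scores, allowed_tokens(slot, sentence, cats))
                 for slot, scores in zip(SLOTS, step_scores))


sentence = "the screen looked great".split()
categories = ["display general", "laptop general"]
step_scores = [
    {"monitor": 2.0, "screen": 1.5},           # "monitor" does not occur in the sentence
    {"battery": 1.2, "display general": 0.9},  # "battery" is not in the category list
    {"POS": 3.0, "great": 1.0},
    {"awesome": 2.5, "great": 2.2},
]
print(decode_quadruple(step_scores, sentence, categories))
# ('screen', 'display general', 'POS', 'great')
```

Without the constraint, greedy decoding would have produced "monitor", "battery", and "awesome" for three of the four slots, none of which is a valid choice for this sentence. A real implementation would apply the same restriction to subword tokens and multiword spans.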

2. Related Work

2.1. BERT-Based Methods

2.2. Data Augmentation-Based Methods

2.3. Generative Model-Based Methods

3. Proposed Model

3.1. Definition

3.2. Model Architecture

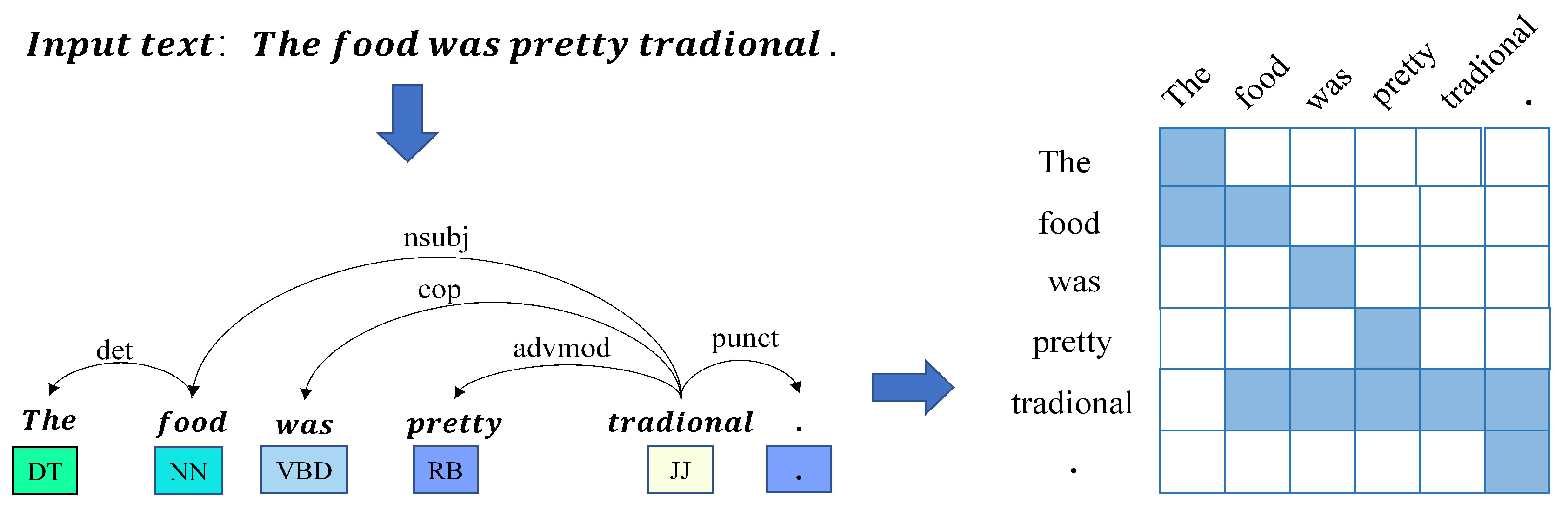

3.3. Data Preprocessing

3.4. Progressive Prompt Enhancement Module

3.5. Graph Convolutional Relational Inference (GRI) Module
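The GRI module is described in the contributions as exploiting contextual dependency information, and the aggregation module as using a graph convolutional network to aggregate information from adjacent nodes. The sketch below shows one common, generic form of graph convolution over a dependency graph, ReLU(Â H W) with Â = D^(-1/2)(A + I)D^(-1/2). It is an assumed layer written in pure Python for illustration, not the module's actual formulation, and the dependency edges in the example are hand-written rather than produced by a parser.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def adjacency_with_self_loops(n: int, edges: Sequence[Tuple[int, int]]) -> Matrix:
    """Undirected dependency adjacency A + I for a sentence of n tokens."""
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for head, dependent in edges:
        a[head][dependent] = 1.0
        a[dependent][head] = 1.0
    return a


def normalize(a: Matrix) -> Matrix:
    """Symmetric normalization D^-1/2 (A + I) D^-1/2, where D holds the node degrees."""
    deg = [sum(row) for row in a]
    n = len(a)
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(a_hat: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One layer: each token aggregates its neighbours' features, then ReLU(A_hat H W)."""
    return [[max(0.0, v) for v in row] for row in matmul(matmul(a_hat, h), w)]


tokens = ["the", "screen", "looked", "great"]
# Hand-written (head, dependent) pairs: looked->screen, screen->the, looked->great.
edges = [(2, 1), (1, 0), (2, 3)]
a_hat = normalize(adjacency_with_self_loops(len(tokens), edges))
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
h1 = gcn_layer(a_hat, identity, identity)
h2 = gcn_layer(a_hat, h1, identity)
h3 = gcn_layer(a_hat, h2, identity)
print(h1[0][3], h2[0][3], h3[0][3] > 0)  # 0.0 0.0 True
```

With identity features and weights, one layer returns the normalized adjacency itself, which makes the receptive field visible: "the" receives information from its head "screen" after one layer, but information from "great", three dependency hops away, only reaches it after three stacked layers.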

3.6. Inference Layer

3.7. Model Training Loss

4. Experiments and Result Analysis

4.1. Dataset and Parameter Settings

4.2. Baseline Methods

- Extract-Classify-ACOS [10]: A two-stage method. The first stage extracts aspect–opinion pairs from the sentence. The second stage classifies aspect categories and sentiments on the basis of the resulting context-aware token representations, determines whether implicit aspects or implicit opinions are present, and then predicts the final quadruples.

- One-ASQP [19]: Divides the ASQP task into two subtasks, triplet extraction and aspect category detection, which are performed simultaneously over a shared encoder; a one-step decoding method then produces the final quadruples.

- CACA [21]: A bidirectional cross-attention mechanism models explicit and implicit quadruple representations to strengthen the alignment between aspect words and opinion words. Contrastive learning and self-attention are also introduced to capture contextual associations among spans, and the final predictions are inferred from confidence scores.

- OTPT [22]: Explores the role of graph attention networks in the ASQP task and proposes an opinion-tree-aware prompt tuning method. By modeling sentiment elements as a tree structure, it captures the “one-to-many” dependencies between elements. A dynamic virtual template and a soft prompt module are designed, and dedicated tags identify implicit elements.

- UGTS [38]: Proposes a unified grid tagging scheme that represents implicit terms, together with an adaptive graph diffusion convolutional network that links explicit and implicit sentiments using dependency trees and abstract semantic representations. A Triaffine mechanism then integrates heterogeneous word-pair relations to capture high-order interactions.

- MRCCLRI [39]: An end-to-end, non-generative model for ASQP that decomposes the task into multiple subtasks within a machine reading comprehension (MRC) framework.

- Paraphrase [40]: Treats ASQP as a semantic generation problem and introduces two restaurant datasets for the task. Quadruple extraction is cast as paraphrase generation, and a Seq2Seq model predicts the quadruples.

- DLO [24]: A data augmentation method. A pre-trained language model is used to compute the entropy of candidate template orders, the orders with minimum entropy are selected as the most appropriate output templates, and multiple such templates are combined for data augmentation.

- UAUL [30]: The first work to study ASQP from the perspective of “what not to generate”. It uses negative samples to give the generative model additional information, thereby reducing the errors inherent in generative models.

- E2TP [41]: A two-stage prompting framework that proceeds step by step from individual elements to full tuples, imitating human step-by-step reasoning. Diverse output paradigms are used to strengthen knowledge transfer from the source domain to the target domain and to improve robustness.

- SIT [35]: Explores the guiding role of chain-of-thought reasoning in quadruple generation. It introduces step-by-step reasoning into the ASQP task for the first time and uses prefix prompts and text masking to deepen the model's understanding of textual semantics and to reduce overfitting on small datasets.

- STAR [34]: A framework of stepwise task augmentation and relation learning that imitates the human divide-and-conquer reasoning strategy, strengthening the model's ability to capture complex relations and improving its performance on implicit sentiment expressions and in cross-domain scenarios.

- BvSP [36]: Constructs the first dataset designed specifically for few-shot ASQP and proposes a broad-view soft prompting method in which multiple templates collaborate. The Jensen–Shannon divergence is used to quantify the correlation between templates and to select the best ones.

- GDP [37]: Explores diffusion models for ASQP and proposes a diffusion fuzzy learning strategy that simulates the noising and denoising processes to reduce the distribution noise of sentiment elements.

- DuSR2 [42]: A dual-system reasoning framework that combines intuitive reactions with analytical reasoning to infer implicit aspect-based sentiment quadruples, offering a simple and effective strategy-level approach.
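Several of the generative baselines above, starting with Paraphrase [40], express quadruples as natural-language target text. The sketch below follows the Paraphrase template as it is commonly described, "category is sentiment-word because aspect is opinion", mapping POS, NEU, and NEG to great, ok, and bad and rendering an implicit aspect as "it". The [SSEP] separator between quadruples, the literal NULL for an implicit opinion, and the function names are assumptions of this sketch. The inverse function recovers quadruples from generated text and skips malformed segments.

```python
from typing import List, Tuple

Quad = Tuple[str, str, str, str]  # (aspect, category, sentiment, opinion)

POLARITY_TO_WORD = {"POS": "great", "NEU": "ok", "NEG": "bad"}
WORD_TO_POLARITY = {word: pol for pol, word in POLARITY_TO_WORD.items()}
SEP = " [SSEP] "  # assumed separator between quadruple sentences


def quads_to_target(quads: List[Quad]) -> str:
    """Linearize quadruples as 'category is sentiment-word because aspect is opinion'."""
    parts = []
    for aspect, category, sentiment, opinion in quads:
        aspect_text = "it" if aspect == "NULL" else aspect  # implicit aspect -> "it"
        parts.append(f"{category} is {POLARITY_TO_WORD[sentiment]} because {aspect_text} is {opinion}")
    return SEP.join(parts)


def target_to_quads(text: str) -> List[Quad]:
    """Parse generated text back into quadruples, skipping malformed segments."""
    quads: List[Quad] = []
    for part in text.split(SEP):
        left, found, right = part.partition(" because ")
        category, is_left, word = left.rpartition(" is ")
        aspect, is_right, opinion = right.partition(" is ")
        if not (found and is_left and is_right) or word not in WORD_TO_POLARITY:
            continue  # malformed segment: skip it rather than guess
        quads.append(("NULL" if aspect == "it" else aspect, category,
                      WORD_TO_POLARITY[word], opinion))
    return quads


# Two of the ground-truth quadruples of case-study sentence 4.
quads = [("table", "ambience general", "POS", "private"),
         ("NULL", "service general", "POS", "NULL")]
target = quads_to_target(quads)
print(target)
# ambience general is great because table is private [SSEP] service general is great because it is NULL
print(target_to_quads(target) == quads)  # True
```

Because an implicit aspect is rendered as "it", an explicit aspect that is literally the word "it" cannot be distinguished after parsing; this ambiguity is inherent to the template.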

4.3. Experimental Results

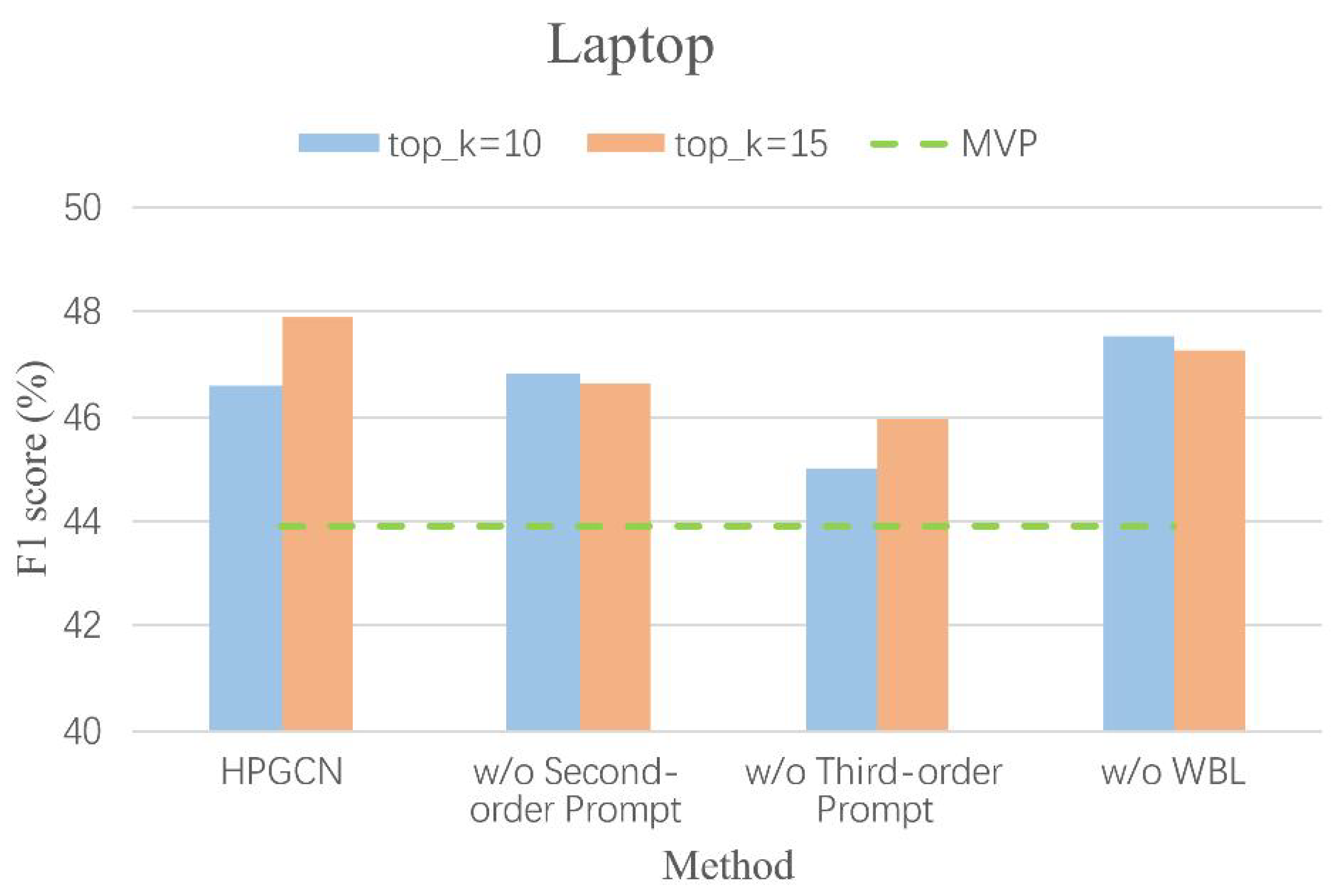

4.4. Ablation Analysis

4.5. Performance Analysis

4.6. Case Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wang, P.; Tao, L.; Tang, M.; Wang, L.; Xu, Y.; Zhao, M. Incorporating syntax and semantics with dual graph neural networks for aspect-level sentiment analysis. Eng. Appl. Artif. Intell. 2024, 133, 108101.

- Zheng, Y.; Tang, M.; Yang, Z.; Hu, J.; Zhao, M. Semantic-enhanced relation modeling for fine-grained aspect-based sentiment analysis. Int. J. Mach. Learn. Cybern. 2025.

- Xu, Y.; Tian, J.; Tang, M.; Tao, L.; Wang, L. Document-level relation extraction with entity mentions deep attention. Comput. Speech Lang. 2024, 84, 101574.

- Wu, Z.; Ying, C.; Zhao, F.; Fan, Z.; Dai, X.; Xia, R. Grid Tagging Scheme for Aspect-oriented Fine-grained Opinion Extraction. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 2576–2585.

- Zhang, W.; Deng, Y.; Li, X.; Bing, L.; Lam, W. Aspect-based sentiment analysis in question answering forums. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 4582–4591.

- Yang, K.; Zong, L.; Tang, M.; Hu, J.; Zheng, Y.; Chen, Y.; Zhao, M. MPGM: Multi-prompt generation model with self-supervised contrastive learning for aspect sentiment triplet extraction. Neural Netw. 2025, 192, 107894.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Xu, H.; Tang, M.; Cai, T.; Hu, J.; Zhao, M. Dual-enhanced generative model with graph attention network and contrastive learning for aspect sentiment triplet extraction. Knowl.-Based Syst. 2024, 301, 112342.

- Li, S.; Lin, N.; Wu, P.; Zhou, D.; Yang, A. Enhancing Aspect Sentiment Quad Prediction through Dual-Sequence Data Augmentation and Contrastive Learning. In Proceedings of the 16th Asian Conference on Machine Learning (Conference Track), Hanoi, Vietnam, 5–8 December 2024.

- Cai, H.; Xia, R.; Yu, J. Aspect-category-opinion-sentiment quadruple extraction with implicit aspects and opinions. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL), Online, 1–6 August 2021; pp. 340–350.

- Wan, H.; Yang, Y.; Du, J.; Liu, Y.; Qi, K.; Pan, J.Z. Target-aspect-sentiment joint detection for aspect-based sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 9122–9129.

- Yang, K.; Zong, L.; Tang, M.; Zheng, Y.; Chen, Y.; Zhao, M.; Jiang, Z. MPBE: Multi-perspective boundary enhancement network for aspect sentiment triplet extraction. Appl. Intell. 2025, 55, 301.

- Zhu, L.; Bao, Y.; Xu, M.; Li, J.; Zhu, Z.; Kong, X. Aspect sentiment quadruple extraction based on the sentence-guided grid tagging scheme. World Wide Web 2023, 26, 3303–3320.

- Li, S.; Zhang, Y.; Lan, Y.; Zhao, H.; Zhao, G. From implicit to explicit: A simple generative method for aspect-category-opinion-sentiment quadruple extraction. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, Australia, 18–23 June 2023; pp. 1–8.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), Online, 5–10 July 2020; pp. 7871–7880.

- Li, B.; Li, Y.; Jia, S.; Ma, B.; Ding, Y.; Qi, Z.; Tan, X.; Guo, M.; Liu, S. Triple GNNs: Introducing Syntactic and Semantic Information for Conversational Aspect-Based Quadruple Sentiment Analysis. In Proceedings of the 2024 27th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Tianjin, China, 8–10 May 2024; pp. 998–1003.

- Wang, J.; Yang, A.; Zhou, D.; Lin, N.; Wang, Z.; Huang, W.; Chen, B. Simplifying aspect-sentiment quadruple prediction with cartesian product operation. In Proceedings of the International Conference on Intelligent Computing, Zhengzhou, China, 10–13 August 2023; pp. 707–719.

- Tang, M.; Tang, W.; Gui, Q.; Hu, J.; Zhao, M. A vulnerability detection algorithm based on residual graph attention networks for source code imbalance (RGAN). Expert Syst. Appl. 2024, 238, 122216.

- Zhou, J.; Yang, H.; He, Y.; Mou, H.; Yang, J. A Unified One-Step Solution for Aspect Sentiment Quad Prediction. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; pp. 12249–12265.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Chen, B.; Xu, H.; Luo, Y.; Xu, B.; Cai, R.; Hao, Z. CACA: Context-Aware Cross-Attention Network for Extractive Aspect Sentiment Quad Prediction. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 9472–9484.

- Zhang, Z.; Yang, Z.; Li, Z. Opinion-Tree-aware Prompt Tuning for Aspect Sentiment Quadruple Prediction. arXiv 2024.

- Mao, Y.; Shen, Y.; Yang, J.; Zhu, X.; Cai, L. Seq2Path: Generating Sentiment Tuples as Paths of a Tree. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 2215–2225.

- Hu, M.; Wu, Y.; Gao, H.; Bai, Y.; Zhao, S. Improving Aspect Sentiment Quad Prediction via Template-Order Data Augmentation. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP), Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 7889–7900.

- Zhang, W.; Zhang, X.; Cui, S.; Huang, K.; Wang, X.; Liu, T. Adaptive data augmentation for aspect sentiment quad prediction. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 11176–11180.

- Wang, A.; Jiang, J.; Ma, Y.; Liu, A.; Okazaki, N. Generative data augmentation for aspect sentiment quad prediction. J. Nat. Lang. Process. 2024, 31, 1523–1544.

- Nie, Y.; Fu, J.; Zhang, Y.; Li, C. Modeling implicit variable and latent structure for aspect-based sentiment quadruple extraction. Neurocomputing 2024, 586, 127642.

- Peper, J.; Wang, L. Generative Aspect-Based Sentiment Analysis with Contrastive Learning and Expressive Structure. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 6089–6095.

- Gao, C.; Zhang, W.; Lam, W.; Bing, L. Easy-to-Hard Learning for Information Extraction. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; pp. 11913–11930.

- Hu, M.; Bai, Y.; Wu, Y.; Zhang, Z.; Zhang, L.; Gao, H.; Zhao, S.; Huang, M. Uncertainty-Aware Unlikelihood Learning Improves Generative Aspect Sentiment Quad Prediction. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; pp. 13481–13494.

- Lil, Z.; Yang, Z.; Li, X.; Li, Y. Two-stage aspect sentiment quadruple prediction based on MRC and text generation. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Maui, HI, USA, 1–4 October 2023; pp. 2118–2125.

- Xiong, H.; Yan, Z.; Wu, C.; Lu, G.; Pang, S.; Xue, Y.; Cai, Q. BART-based contrastive and retrospective network for aspect-category-opinion-sentiment quadruple extraction. Int. J. Mach. Learn. Cybern. 2023, 14, 3243–3255.

- Wang, P.; Tao, L.; Tang, M.; Zhao, M.; Wang, L.; Xu, Y.; Tian, J.; Meng, K. A novel adaptive marker segmentation graph convolutional network for aspect-level sentiment analysis. Knowl.-Based Syst. 2023, 270, 110559.

- Lai, W.; Xie, H.; Xu, G.; Li, Q. STAR: Stepwise Task Augmentation and Relation Learning for Aspect Sentiment Quad Prediction. arXiv 2025, arXiv:2501.16093.

- Qin, Y.; Lv, S. Generative Aspect Sentiment Quad Prediction with Self-Inference Template. Appl. Sci. 2024, 14, 6017.

- Bai, Y.; Xie, Y.; Liu, X.; Zhao, Y.; Han, Z.; Hu, M.; Gao, H.; Cheng, R. BvSP: Broad-view Soft Prompting for Few-Shot Aspect Sentiment Quad Prediction. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL), Bangkok, Thailand, 11–16 August 2024; pp. 8465–8482.

- Zhu, L.; Chen, X.; Guo, X.; Zhang, C.; Zhu, Z.; Zhou, Z.; Kong, X. Pinpointing Diffusion Grid Noise to Enhance Aspect Sentiment Quad Prediction. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 3717–3726.

- Su, G.; Zhang, Y.; Wang, T.; Wu, M.; Sha, Y. Unified Grid Tagging Scheme for Aspect Sentiment Quad Prediction. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 3997–4010.

- Zhang, H.; Song, X.; Jia, X.; Yang, C.; Chen, Z.; Chen, B.; Jiang, B.; Wang, Y.; Feng, R. Query-induced multi-task decomposition and enhanced learning for aspect-based sentiment quadruple prediction. Eng. Appl. Artif. Intell. 2024, 133, 108609.

- Zhang, W.; Deng, Y.; Li, X.; Yuan, Y.; Bing, L.; Lam, W. Aspect Sentiment Quad Prediction as Paraphrase Generation. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Virtual, 7–11 November 2021; pp. 9209–9219.

- Mohammadkhani, M.G.; Ranjbar, N.; Momtazi, S. E2TP: Element to Tuple Prompting Improves Aspect Sentiment Tuple Prediction. arXiv 2024, arXiv:2405.06454.

- Bai, Z.; Sun, Y.; Min, C.; Lu, J.; Zhu, H.; Yang, L.; Lin, H. Intuition meets analytics: Reasoning implicit aspect-based sentiment quadruplets with a dual-system framework. Knowl.-Based Syst. 2025, 320, 113534.

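| Split | Restaurant S | Restaurant Q | Restaurant N = 1 | Restaurant N ≥ 2 | Laptop S | Laptop Q | Laptop N = 1 | Laptop N ≥ 2 |
|---|---|---|---|---|---|---|---|---|
| Train | 2934 | 4172 | 2100 | 834 | 1530 | 2484 | 920 | 610 |
| Dev | 326 | 440 | 236 | 90 | 171 | 261 | 106 | 65 |
| Test | 816 | 1161 | 580 | 236 | 583 | 916 | 370 | 213 |

S: number of sentences; Q: number of quadruples; N = 1 and N ≥ 2: number of sentences containing one and at least two quadruples, respectively.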

| Split | Rest15 S | Rest15 Q | Rest15 N = 1 | Rest15 N ≥ 2 | Rest16 S | Rest16 Q | Rest16 N = 1 | Rest16 N ≥ 2 |
|---|---|---|---|---|---|---|---|---|
| Train | 834 | 1354 | 499 | 335 | 1264 | 1989 | 784 | 480 |
| Dev | 209 | 347 | 122 | 87 | 316 | 507 | 195 | 121 |
| Test | 537 | 795 | 358 | 179 | 544 | 799 | 377 | 167 |
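In both statistics tables, the N = 1 and N ≥ 2 columns partition the sentences of each split (for example, 2100 + 834 = 2934 for the Restaurant training set). The sketch below, whose helper names are hypothetical, computes these statistics from a list of (sentence, quadruples) pairs and checks two properties against the twelve table rows: the partition of S, and the lower bound Q ≥ N1 + 2·N2 implied by sentences with several quadruples. Reading N as the number of quadruples per sentence is an inference from these sums rather than a stated definition.

```python
from typing import Dict, List, Sequence, Tuple

Quad = Tuple[str, str, str, str]  # (aspect, category, sentiment, opinion)


def split_statistics(data: Sequence[Tuple[str, List[Quad]]]) -> Dict[str, int]:
    """Count sentences (S), quadruples (Q) and sentences with one or several quadruples."""
    sizes = [len(quads) for _, quads in data]
    return {
        "S": len(sizes),
        "Q": sum(sizes),
        "N=1": sum(1 for n in sizes if n == 1),
        "N>=2": sum(1 for n in sizes if n >= 2),
    }


def row_is_consistent(s: int, q: int, n1: int, n2: int) -> bool:
    """N=1 and N>=2 partition S, and every N>=2 sentence adds at least two quadruples."""
    return n1 + n2 == s and q >= n1 + 2 * n2


# (S, Q, N=1, N>=2) copied from the two tables above, Train/Dev/Test per dataset block.
TABLE_ROWS = [
    (2934, 4172, 2100, 834), (326, 440, 236, 90), (816, 1161, 580, 236),
    (1530, 2484, 920, 610), (171, 261, 106, 65), (583, 916, 370, 213),
    (834, 1354, 499, 335), (209, 347, 122, 87), (537, 795, 358, 179),
    (1264, 1989, 784, 480), (316, 507, 195, 121), (544, 799, 377, 167),
]

# Toy split built from case-study sentences 1 and 4.
toy = [
    ("The screen looked great", [("screen", "display general", "POS", "great")]),
    ("We were seated right away, the table was private and nice.", [
        ("table", "ambience general", "POS", "private"),
        ("table", "ambience general", "POS", "nice"),
        ("NULL", "service general", "POS", "NULL"),
    ]),
]
print(split_statistics(toy))  # {'S': 2, 'Q': 4, 'N=1': 1, 'N>=2': 1}
print(all(row_is_consistent(*row) for row in TABLE_ROWS))  # True
```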

| Method | Comparison Model | Restaurant Pre | Restaurant Rec | Restaurant F1 | Laptop Pre | Laptop Rec | Laptop F1 |
|---|---|---|---|---|---|---|---|
| BERT | Extract-Classify-ACOS [10] | 59.81 | 28.94 | 39.01 | 44.52 | 16.25 | 23.81 |
| | One-ASQP [19] | 62.60 | 57.21 | 59.78 | 42.83 | 40.00 | 41.37 |
| | CACA [21] | 66.31 | 61.24 | 63.16 | 45.26 | 41.37 | 43.22 |
| | UGTS [38] | 65.94 | 63.47 | 64.68 | 48.21 | 46.39 | 47.28 |
| | MRCCLRI [39] | 61.04 | 64.30 | 62.63 | 44.93 | 45.30 | 45.11 |
| Generative | Paraphrase [40] | 58.98 | 59.11 | 59.04 | 41.77 | 42.56 | 42.56 |
| | DLO [24] | 60.02 | 59.84 | 59.93 | 43.40 | 43.80 | 43.60 |
| | UAUL [30] | 61.03 | 60.55 | 60.78 | 43.78 | 43.53 | 43.65 |
| | E2TP [41] | – | – | 61.89 | – | – | 45.00 |
| | SIT [35] | 63.13 | 63.49 | 63.31 | 44.38 | 44.61 | 44.49 |
| | STAR [34] | 61.79 | 60.37 | 61.07 | 45.53 | 44.78 | 45.15 |
| | DuSR2 [42] | 61.86 | – | 61.20 | 46.11 | – | 45.75 |
| | GDP [37] | 64.71 | 63.71 | 64.21 | 46.84 | 44.20 | 45.48 |
| | ProPGCN | 66.52 | 63.15 | 65.04 | 48.65 | 45.73 | 47.89 |
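The Pre, Rec, and F1 columns are assumed here to follow the usual ASQP protocol, in which a predicted quadruple counts as correct only if all four elements exactly match a gold quadruple, and counts are micro-averaged over the test set. A minimal sketch of that computation, with hypothetical function names and a hypothetical prediction for case-study sentence 5:

```python
from typing import List, Set, Tuple

Quad = Tuple[str, str, str, str]  # (aspect, category, sentiment, opinion)


def quad_prf(gold: List[Set[Quad]], pred: List[Set[Quad]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 over exact-match quadruples."""
    correct = sum(len(g & p) for g, p in zip(gold, pred))  # exact matches per sentence
    n_pred = sum(len(p) for p in pred)
    n_gold = sum(len(g) for g in gold)
    precision = correct / n_pred if n_pred else 0.0
    recall = correct / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [{("server", "service general", "POS", "attentive"),
         ("ray's", "restaurant miscellaneous", "NEU", "NULL")}]
# Hypothetical prediction: the second quadruple has the wrong sentiment.
pred = [{("server", "service general", "POS", "attentive"),
         ("ray's", "restaurant miscellaneous", "NEG", "NULL")}]
print(quad_prf(gold, pred))  # (0.5, 0.5, 0.5)
```

Because the match is exact, one wrong element, here the sentiment, invalidates the entire quadruple.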

| Method | Comparison Model | Rest15 Pre | Rest15 Rec | Rest15 F1 | Rest16 Pre | Rest16 Rec | Rest16 F1 |
|---|---|---|---|---|---|---|---|
| BERT | Extract-Classify-ACOS [10] | 35.64 | 37.25 | 36.42 | 38.40 | 50.93 | 43.77 |
| | OTPT [22] | 51.01 | 52.26 | 51.63 | 59.30 | 62.02 | 60.63 |
| | UGTS [38] | 52.76 | 52.43 | 52.59 | 65.72 | 64.50 | 65.10 |
| | MRCCLRI [39] | 53.83 | 52.36 | 53.08 | 60.09 | 65.85 | 62.84 |
| Generative | Paraphrase [40] | 46.16 | 47.72 | 46.93 | 56.63 | 59.30 | 57.93 |
| | DLO [24] | 47.08 | 49.33 | 48.18 | 57.92 | 61.80 | 59.79 |
| | UAUL [30] | 48.03 | 50.54 | 49.26 | 59.02 | 62.05 | 60.50 |
| | E2TP [41] | – | – | 51.70 | – | – | 62.90 |
| | SIT [35] | 47.89 | 50.13 | 48.98 | 58.98 | 61.60 | 60.26 |
| | STAR [34] | 50.80 | 51.95 | 51.37 | 60.54 | 62.90 | 61.70 |
| | BvSP [36] | 60.96 | 47.53 | 53.17 | 68.16 | 59.42 | 63.49 |
| | DuSR2 [42] | 50.12 | – | 50.90 | 59.71 | – | 60.99 |
| | GDP [37] | 49.20 | 50.31 | 49.75 | 61.16 | 62.08 | 61.61 |
| | ProPGCN | 56.73 | 52.68 | 53.81 | 63.49 | 64.86 | 63.74 |

| Model | Restaurant | Laptop | Rest15 | Rest16 |
|---|---|---|---|---|
| w/o Second-Order Prompt | 63.87 | 46.64 | 54.28 | 63.91 |
| w/o Third-Order Prompt | 63.72 | 45.95 | 53.89 | 63.80 |
| w/o WBL | 63.84 | 47.28 | 54.05 | 64.42 |
| w/o GRI | 64.51 | 47.09 | 54.12 | 63.98 |
| w/o Inference | 64.09 | 47.42 | 53.97 | 63.83 |
| ProPGCN | 65.04 | 47.89 | 54.70 | 64.74 |

| Method | Restaurant-ACOS EA & EO | Restaurant-ACOS EA & IO | Restaurant-ACOS IA & EO | Restaurant-ACOS IA & IO | Laptop-ACOS EA & EO | Laptop-ACOS EA & IO | Laptop-ACOS IA & EO | Laptop-ACOS IA & IO |
|---|---|---|---|---|---|---|---|---|
| Extract-Classify | 45.0 | 23.9 | 34.7 | 33.7 | 35.4 | 16.8 | 39.0 | 18.3 |
| Paraphrase | 65.4 | 45.6 | 53.3 | 49.2 | 45.7 | 33.0 | 51.0 | 39.6 |
| GEN-SCL-NAT | 66.5 | 45.2 | 56.5 | 50.7 | 45.8 | 34.3 | 54.0 | 39.6 |
| UGTS | 67.81 | 47.52 | 60.13 | 52.65 | 50.71 | 36.82 | 57.29 | 43.50 |
| ProPGCN (ours) | 68.23 | 48.60 | 60.94 | 54.06 | 51.37 | 38.04 | 58.01 | 44.79 |
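The column groups above split the test quadruples by whether the aspect (EA/IA) and the opinion (EO/IO) are explicit or implicit, with an implicit element written as NULL, as in the case study. One plausible way to obtain such per-group scores, assumed here rather than taken from the paper, is to assign every gold and predicted quadruple to its group and compute exact-match F1 within each group. The function names and the prediction in the example are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Quad = Tuple[str, str, str, str]  # (aspect, category, sentiment, opinion)


def implicit_type(quad: Quad) -> str:
    """EA/IA: explicit/implicit aspect; EO/IO: explicit/implicit opinion (NULL = implicit)."""
    aspect, _, _, opinion = quad
    return ("IA" if aspect == "NULL" else "EA") + "&" + ("IO" if opinion == "NULL" else "EO")


def f1_by_type(gold: List[Set[Quad]], pred: List[Set[Quad]]) -> Dict[str, float]:
    """Exact-match F1 computed separately for each explicit/implicit combination."""
    counts = defaultdict(lambda: [0, 0, 0])  # type -> [correct, predicted, gold]
    for g, p in zip(gold, pred):
        for q in p:
            counts[implicit_type(q)][1] += 1
            if q in g:
                counts[implicit_type(q)][0] += 1
        for q in g:
            counts[implicit_type(q)][2] += 1
    # F1 = 2PR / (P + R) simplifies to 2 * correct / (predicted + gold).
    return {t: 2 * c / (n_p + n_g) if n_p + n_g else 0.0
            for t, (c, n_p, n_g) in counts.items()}


# Case-study sentence 4; the hypothetical prediction misses the implicit quadruple.
gold = [{("table", "ambience general", "POS", "private"),
         ("table", "ambience general", "POS", "nice"),
         ("NULL", "service general", "POS", "NULL")}]
pred = [{("table", "ambience general", "POS", "private"),
         ("table", "ambience general", "POS", "nice")}]
print(f1_by_type(gold, pred))  # {'EA&EO': 1.0, 'IA&IO': 0.0}
```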

| # | Sentence | Ground Truth | UGTS | ProPGCN |
|---|---|---|---|---|
| 1 | The screen looked great | (screen, display general, POS, great) | (, , , ) | (, , , ) |
| 2 | Everything was fine and I went out for an hour. | (NULL, laptop general, POS, fine) | (A×, , , ) | (, , , ) |
| 3 | The display stopped working within 2 months. | (display, display general, NEG, NULL) | (, , , ) | (, , , ) |
| 4 | We were seated right away, the table was private and nice. | (table, ambience general, POS, private) (table, ambience general, POS, nice) (NULL, service general, POS, NULL) | (, , , ) (, , , ) (–, –, –, –) | (, , , ) (, , , ) (, , , ) |
| 5 | Our server continued to be attentive throughout the night, but I did remain puzzled by one issue: who thinks that Ray’s is an appropriate place to take young children for dinner? | (server, service general, POS, attentive) (ray’s, restaurant miscellaneous, NEU, NULL) | (, , , ) (, O×, , NEG×) | (, , , ) (, , , ) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, Y.; Tang, M. Progressive Prompt Generative Graph Convolutional Network for Aspect-Based Sentiment Quadruple Prediction. Electronics 2025, 14, 4229. https://doi.org/10.3390/electronics14214229

Feng Y, Tang M. Progressive Prompt Generative Graph Convolutional Network for Aspect-Based Sentiment Quadruple Prediction. Electronics. 2025; 14(21):4229. https://doi.org/10.3390/electronics14214229
