1. Introduction and Background

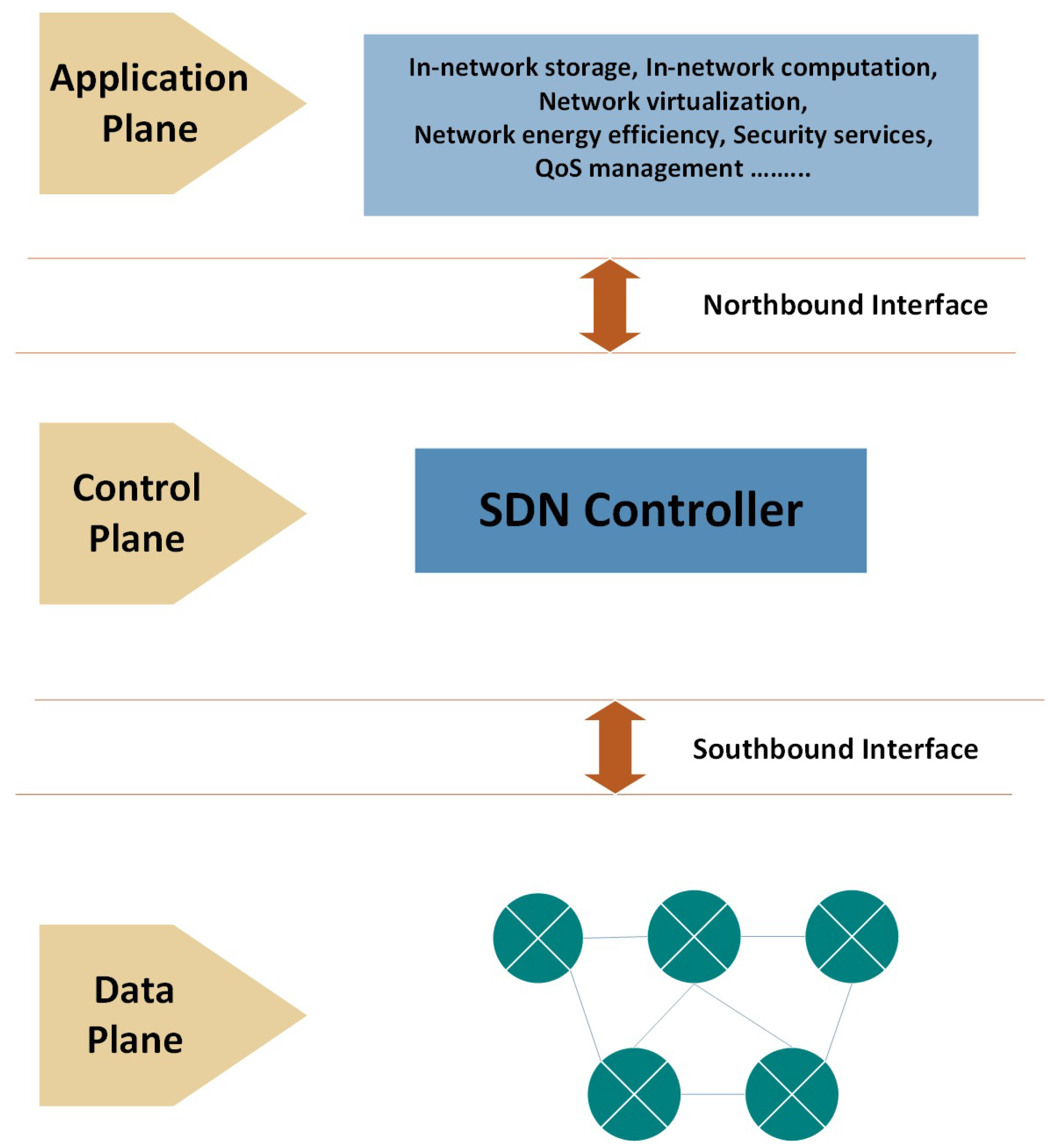

Traditional networks are static and based on the 7-layer OSI model, in which the Data Link and Network layers provide the core networking functions. These networks comprise three planes: the management plane for monitoring, the control plane for device coordination, and the data plane for handling traffic. Network changes must be made manually, which makes administration labor-intensive. In contrast, software-defined networking (SDN) introduces a modern architecture that separates the control plane from the data plane. A centralized controller manages network devices and directs traffic, making the network programmable and easier to manage [1,2]. This shift allows administrators to control resources more efficiently and to automate network operations.

Software-Defined Networking is a revolutionary network architecture that overcomes the shortcomings of traditional networks by decoupling the control logic from the hardware [3]. Rather than controlling every network device separately, software-defined networking provides centralized control through software that is independent of the hardware devices. Software-defined networking is built on three chief layers [4,5]. (1) The Application Plane allows rapid development and instantiation of custom network services, in which developers can build applications that enhance performance, security, and resource management [6,7]. (2) The Control Plane is the centralized software-defined networking controller, which oversees traffic and policies [8]. It makes forwarding decisions for the core forwarding devices based on global network information [9]. (3) The Data Plane includes the physical infrastructure for network communication, as shown in Figure 1 [10]. Packet forwarding in the data plane is governed by rules defined by the control plane [11,12].

Communication between these layers is performed through APIs. Southbound APIs link the software-defined networking controller to network devices such as routers and switches [6], whereas Northbound APIs enable communication between the software-defined networking controller and applications [13,14]. This programmable, layered design provides greater flexibility, automation, and scalability for advanced network management.

Recent studies have shown that communication disruptions and partial observation losses in cyber–physical systems can weaken their resilience and compromise the effectiveness of attack detection mechanisms [15]. Distributed Denial of Service (DDoS) attacks flood applications and services, causing them to deviate from normal operation. These attacks use a botnet, a network of compromised "zombie" systems, to launch coordinated attacks on a specific server or network resource [16]. By exploiting various vulnerabilities, they consume critical system resources such as memory, CPU, and bandwidth, effectively preventing legitimate traffic from reaching the destination [17,18].

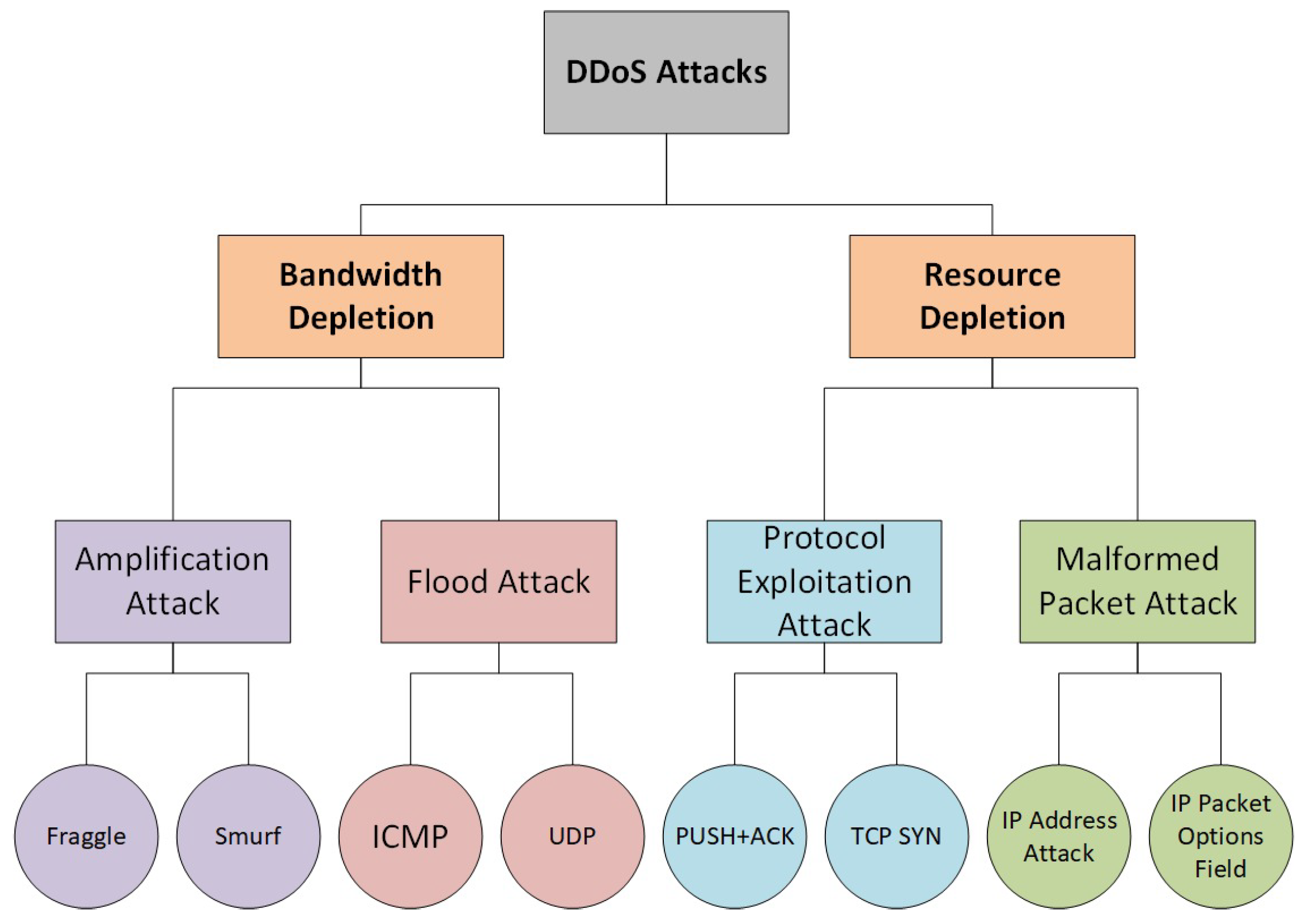

Figure 2 presents a taxonomy of DDoS attacks, which are generally classified into two primary categories. (1) Bandwidth depletion attacks flood the target network with malicious traffic, thereby blocking legitimate users from accessing network services. Their subtypes are Amplification Attacks and Flood Attacks. An Amplification Attack sends requests, with the victim’s address spoofed as the source, to broadcast addresses or servers that respond with messages larger than the original request, amplifying the traffic volume directed at the victim, e.g., Fraggle (sending UDP packets to broadcast addresses) and Smurf (sending ICMP packets to broadcast addresses). In Flood Attacks, attackers use compromised devices (zombies) to send massive volumes of traffic to a target system, e.g., ICMP floods and UDP floods [19,20]. (2) Resource depletion attacks exhaust the victim system’s computational, memory, or protocol-handling resources, leaving it unable to process legitimate user requests. Their subtypes are Protocol Exploitation Attacks and Malformed Packet Attacks. In a Protocol Exploitation Attack, the attacker identifies and exploits flaws or weaknesses in a network protocol, e.g., TCP SYN Flood and PUSH + ACK Attack [21,22]. In a Malformed Packet Attack, the attacker sends corrupted or incorrectly structured packets to confuse or crash the target, e.g., IP Address Attack (IP spoofing) and IP Packet Options Field Attack (placing unusual values in the IPv4 options field).

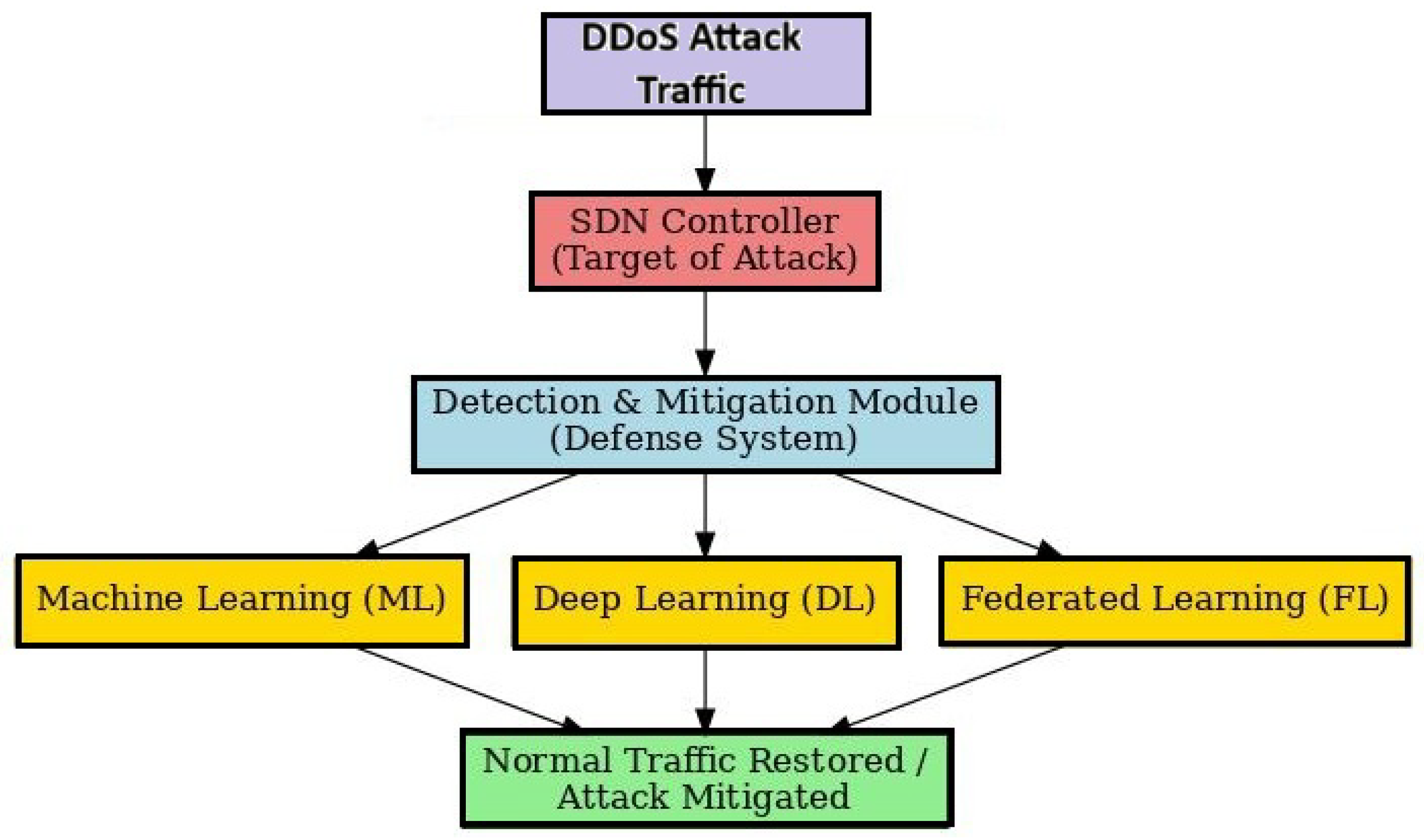

Machine learning (ML), deep learning (DL), and federated learning (FL) are the main families of methods used to detect DDoS attacks in SDN networks.

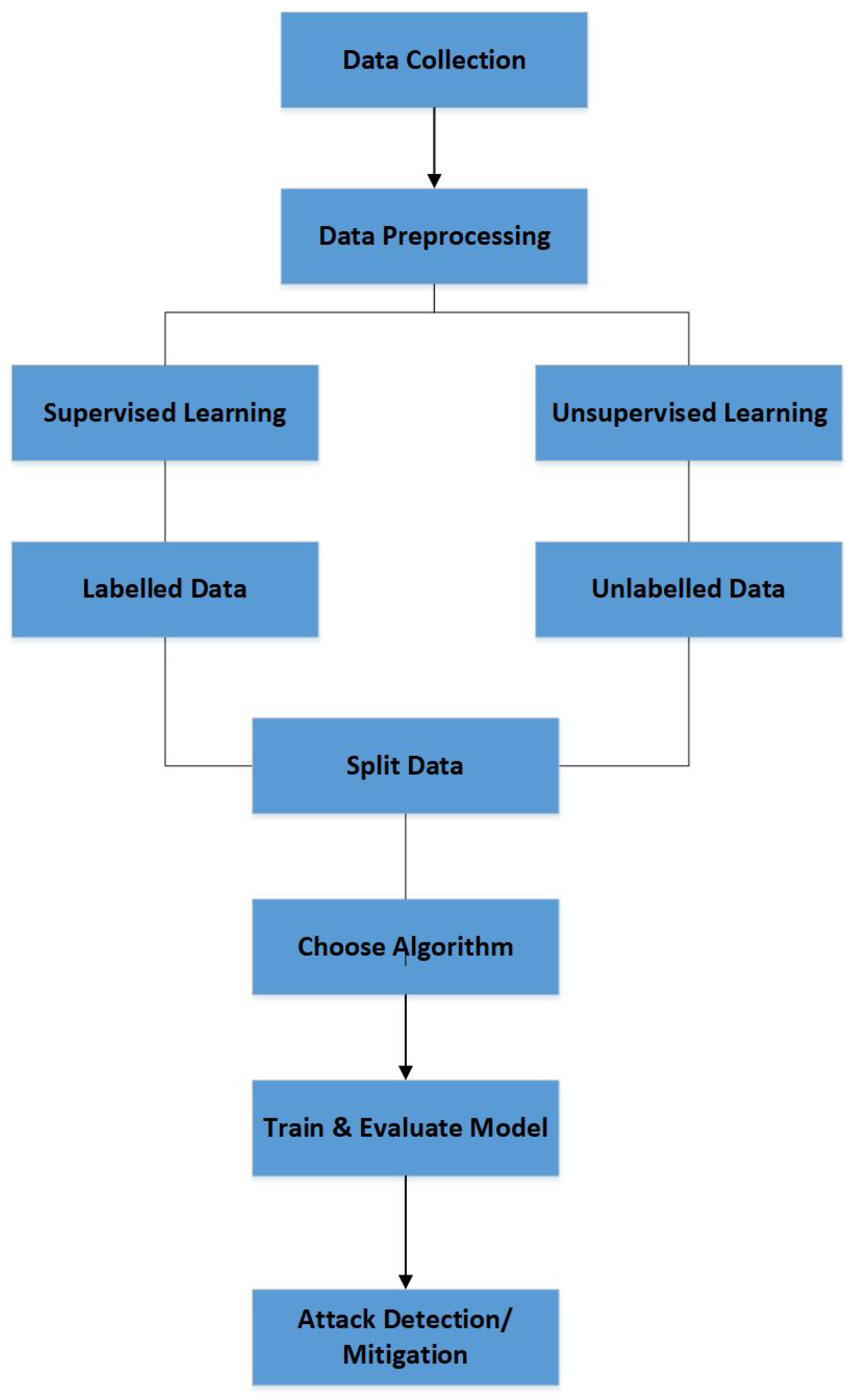

Machine learning enables computers to learn automatically and improve from experience without being explicitly programmed [23]. It is a highly effective tool in cybersecurity: it can analyze huge datasets and identify patterns that reveal a cyber-attack. By learning from past data, machine learning algorithms can identify suspicious activity and anomalies in real time, enabling organizations to take proactive steps against cyber-attacks [24,25,26]. Examples of machine learning algorithms include K-Nearest Neighbors (KNN), which classifies attacks according to traffic similarity; Support Vector Machine (SVM), which separates benign and malicious traffic with hyperplanes; Decision Trees (DT), which classify attacks through feature-based splits, and Random Forest (RF), an ensemble of decision trees; Naïve Bayes (NB), a probabilistic approach for rapid anomaly detection; and Logistic Regression (LGR), which performs binary classification of attack versus normal traffic [1,27].
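To make the similarity-based idea behind KNN concrete, the following minimal sketch labels a flow by majority vote among its k nearest training flows under Euclidean distance. The three features (`packet_rate`, `mean_packet_size`, `dst_ip_entropy`), their already-normalized values, and the helper names are hypothetical illustrations, not taken from any of the surveyed works.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training flows."""
    ranked = sorted(zip(train_X, train_y), key=lambda pair: euclidean(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical, already min-max-normalized flow features:
# [packet_rate, mean_packet_size, dst_ip_entropy]
train_X = [
    [0.10, 0.60, 0.80],
    [0.15, 0.55, 0.75],
    [0.20, 0.65, 0.85],
    [0.90, 0.10, 0.10],  # floods: high rate, small packets,
    [0.95, 0.12, 0.05],  # traffic concentrated on few destinations
    [0.85, 0.08, 0.15],
]
train_y = ["normal", "normal", "normal", "attack", "attack", "attack"]

print(knn_predict(train_X, train_y, [0.92, 0.11, 0.12]))  # attack
print(knn_predict(train_X, train_y, [0.12, 0.58, 0.78]))  # normal
```

Because KNN stores the training flows and compares every query against them, its prediction cost grows with the training set size, which is one reason feature scaling and dimensionality reduction matter before applying it.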

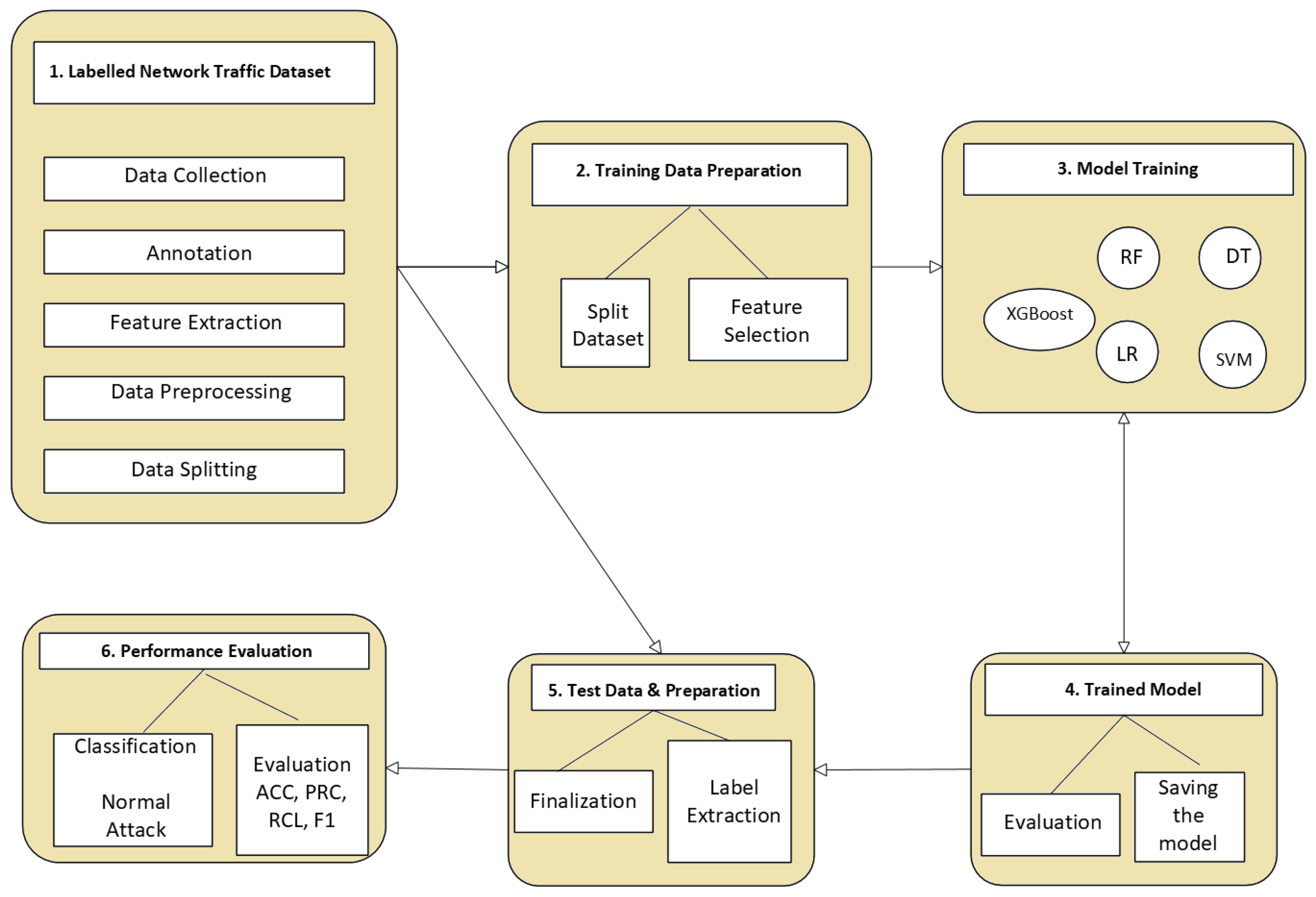

Figure 3 shows the machine learning workflow, which begins with data collection. The next phase is preprocessing, which ensures that the dataset is clean and suitable for analysis. This phase often includes steps such as normalization, encoding, and, when necessary, data cleaning, which involves removing duplicate records and handling or discarding entries with missing values. The next step is the choice between supervised and unsupervised learning: supervised learning handles labeled data, while unsupervised learning handles unlabeled data. The process then splits the data into training and testing sets, selects an appropriate algorithm, trains the model, and evaluates its performance on unseen data. For DDoS detection tasks, this typically involves classifying network traffic as either normal or attack. Suitable actions such as alerts, blocking, or mitigation strategies can then be executed to safeguard the software-defined networking environment against identified attacks. For DDoS-related datasets (e.g., CICDDoS2019, NSL-KDD, CAIDA), preprocessing usually entails flow reassembly to convert raw packet captures into bidirectional flow records; feature scaling (min–max or z-score normalization) to cope with the skewed traffic volumes caused by attack floods; encoding categorical features such as protocol type, service, or flag values into numerical form; and removing artifacts such as incomplete flows, duplicated SYN packets, or truncated attack bursts that occur during capture. These steps ensure that attack-induced anomalies (such as short packet inter-arrival times or abnormal byte counts) are kept intact while redundant data is minimized.
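The preprocessing and splitting steps above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions: flow records are hypothetical dictionaries with `protocol`, `pkts`, `bytes`, and `label` keys, a missing value is represented as `None`, and the helper names are illustrative rather than taken from any dataset tool or surveyed paper.

```python
import math
import random

def clean(records):
    """Drop records with missing values and exact duplicates, keeping the first copy."""
    seen, kept = set(), []
    for rec in records:
        if any(value is None for value in rec.values()):
            continue  # incomplete flow record
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            kept.append(rec)
    return kept

def min_max(column):
    """Rescale a numeric column to [0, 1]; a constant column maps to 0."""
    lo, hi = min(column), max(column)
    return [0.0 if hi == lo else (x - lo) / (hi - lo) for x in column]

def z_score(column):
    """Standardize a numeric column to zero mean and unit (population) variance."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((x - mean) ** 2 for x in column) / len(column))
    return [0.0 if std == 0 else (x - mean) / std for x in column]

def one_hot(value, categories):
    """Encode one categorical value (e.g., protocol) as a 0/1 vector."""
    return [1.0 if value == c else 0.0 for c in categories]

def preprocess(records, numeric_keys, cat_key, categories, scaler=min_max):
    """Clean, scale numeric columns, and append one-hot categorical columns."""
    records = clean(records)
    scaled = {k: scaler([r[k] for r in records]) for k in numeric_keys}
    X = [[scaled[k][i] for k in numeric_keys] + one_hot(r[cat_key], categories)
         for i, r in enumerate(records)]
    y = [r["label"] for r in records]
    return X, y

def train_test_split(X, y, test_ratio=0.25, seed=42):
    """Shuffle indices reproducibly and hold out a test fraction (at least one sample)."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_test = max(1, int(len(idx) * test_ratio))
    test, train = idx[:n_test], idx[n_test:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])

flows = [
    {"protocol": "TCP", "pkts": 10, "bytes": 5000, "label": "normal"},
    {"protocol": "UDP", "pkts": 900, "bytes": 45000, "label": "attack"},
    {"protocol": "UDP", "pkts": 900, "bytes": 45000, "label": "attack"},  # duplicate
    {"protocol": "ICMP", "pkts": None, "bytes": 64, "label": "attack"},    # incomplete
    {"protocol": "TCP", "pkts": 20, "bytes": 9000, "label": "normal"},
]
X, y = preprocess(flows, ["pkts", "bytes"], "protocol", ["TCP", "UDP", "ICMP"])
X_train, y_train, X_test, y_test = train_test_split(X, y)
```

Passing `scaler=z_score` instead of the default switches to z-score normalization. Min–max scaling keeps every feature in [0, 1], but a single extreme flood sample can compress all normal values toward 0, which is one reason z-score scaling is sometimes preferred for heavily skewed traffic.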

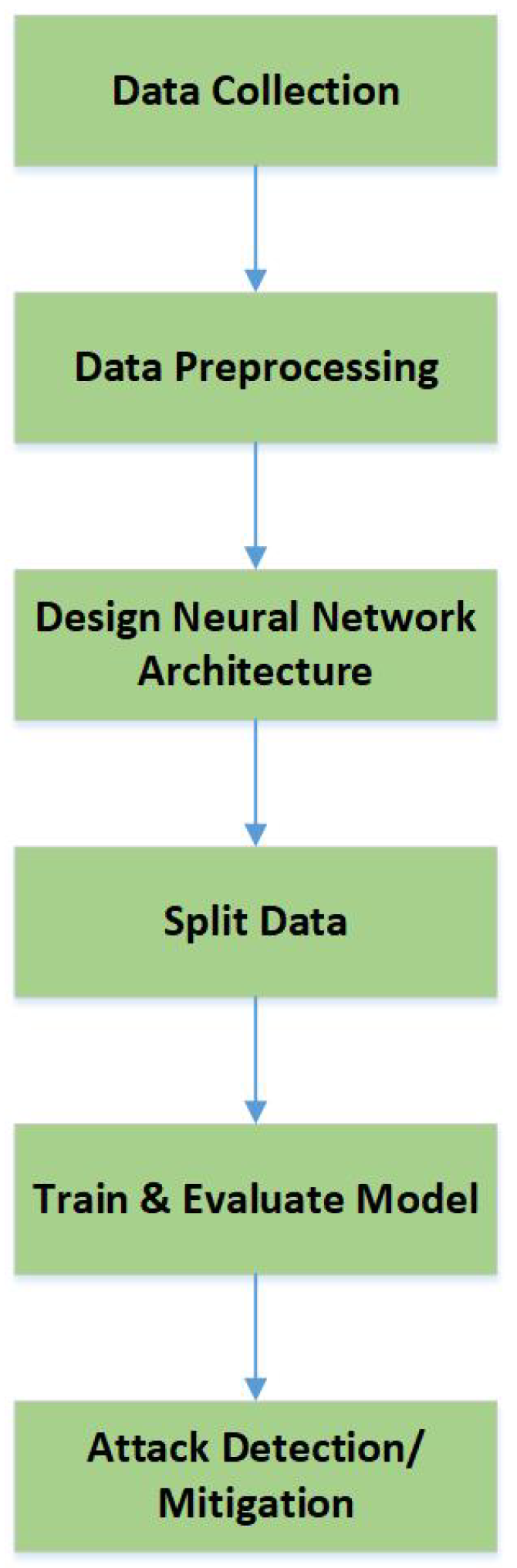

Deep learning models stack multiple learning layers that build increasingly abstract representations of the input [23]. They are widely used for intrusion detection and malicious traffic identification, mainly because of inherent benefits such as self-learning, self-organization, robustness, parallelism, and excellent fault tolerance [28,29]. Examples of deep learning algorithms include Convolutional Neural Networks (CNNs), which evaluate spatial patterns within traffic data (e.g., packet headers, flow matrices); Recurrent Neural Networks (RNNs), which operate on sequential traffic data (e.g., time-series flow statistics); Long Short-Term Memory (LSTM), an advanced form of RNN used to capture long-term dependencies within attack patterns; and Deep Neural Networks (DNNs), generic multi-layer perceptrons (MLPs) that handle high-dimensional feature learning [30,31].

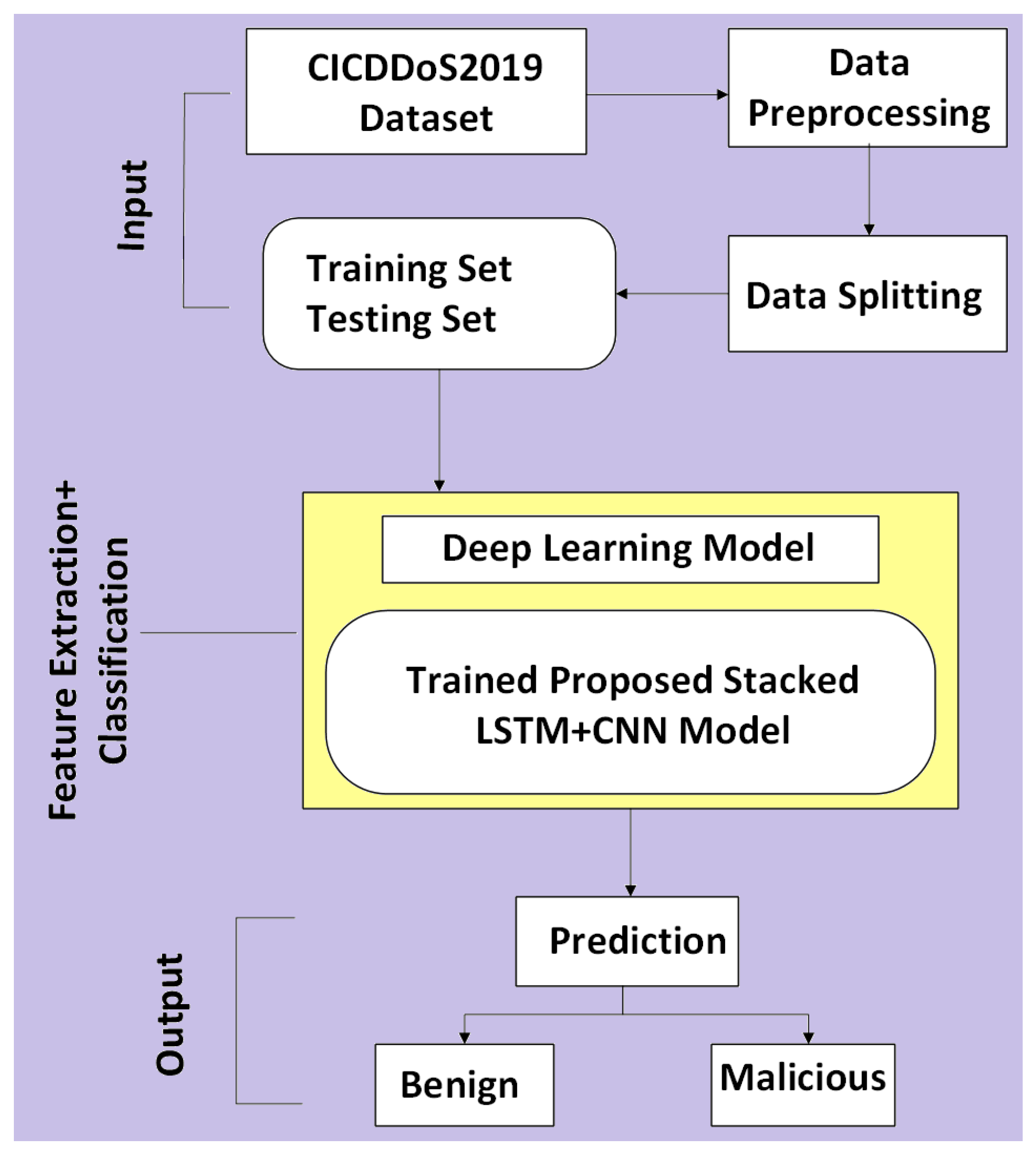

Figure 4 presents the workflow of the deep learning method. The process begins with data collection, after which the dataset is preprocessed to ensure compatibility with the learning algorithm [32,33]. Preprocessing cleans the dataset [34,35]. Unlike conventional machine learning, deep learning requires the design of a neural network architecture, which may include multiple layers such as convolutional, recurrent, or fully connected layers. The data is then split into training, validation, and test sets. During training, the model learns through forward propagation and backpropagation, adjusting its weights. After training, the model is evaluated on validation or test data to assess its performance. For DDoS detection tasks, this typically involves classifying network traffic as benign or malicious and then mitigating the malicious traffic. For DDoS-specific detection, data preparation usually requires transforming raw flow statistics into traffic matrices or time-series windows to preserve the temporal dependencies of attack bursts; applying one-hot encoding to protocol or port numbers for CNNs; handling class imbalance using oversampling (e.g., SMOTE) or undersampling; and selecting features tailored to DDoS traffic, such as packet rate per flow, entropy of source/destination IPs, or SYN-to-ACK ratios. These considerations help deep learning models capture both volumetric attacks such as UDP floods and stealthy low-rate attacks such as slow HTTP attacks.
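Two of the data-preparation steps above, time-series windowing and SMOTE-style oversampling, can be sketched as follows. This is a simplified illustration under stated assumptions: the packets-per-second series and the two-feature minority vectors are hypothetical, and the `smote` helper is a minimal variant that draws random base samples, not a reference implementation of the original SMOTE algorithm.

```python
import random

def sliding_windows(series, width, stride=1):
    """Cut a per-interval feature series into overlapping windows (inputs for RNN/LSTM/1-D CNN)."""
    return [series[i:i + width] for i in range(0, len(series) - width + 1, stride)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def smote(minority, n_new, k=2, seed=0):
    """Minimal SMOTE-style oversampling: each synthetic sample lies on the segment
    between a random minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        others.sort(key=lambda p: squared_distance(base, p))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic

# Hypothetical packets-per-second readings; windows of 3 consecutive seconds.
pps = [120, 130, 125, 9000, 9500, 9800]
windows = sliding_windows(pps, width=3)

# Hypothetical minority-class (low-rate attack) feature vectors.
low_rate = [[0.10, 0.20], [0.12, 0.25], [0.08, 0.22]]
extra = smote(low_rate, n_new=4)
```

Interpolating between neighbours, rather than duplicating existing minority samples, gives the model new but plausible low-rate attack points, which reduces the risk of memorizing a handful of repeated rows.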

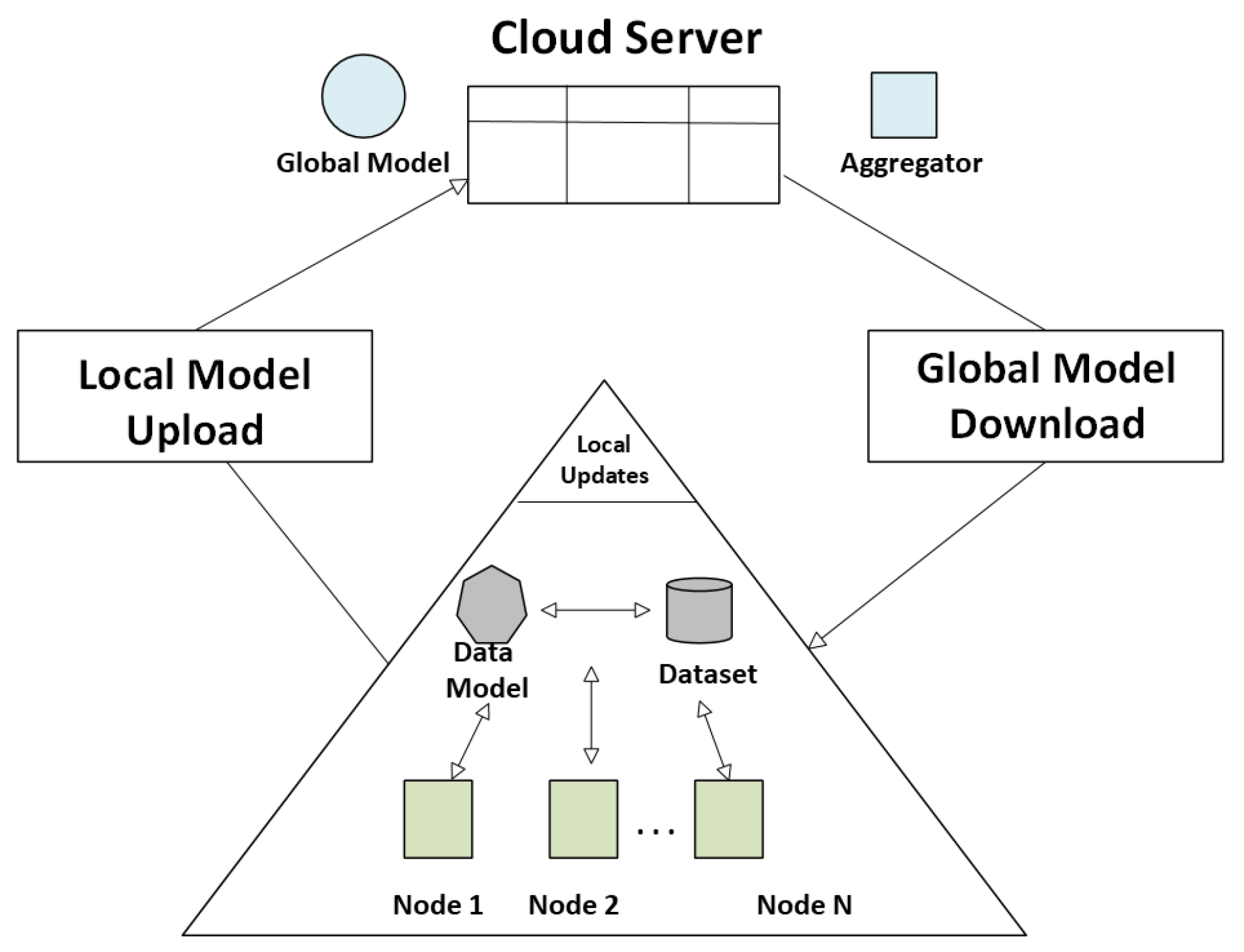

In contrast to traditional machine learning approaches, where data created by various devices must be collected and transported to a common location for training and processing [36], federated learning is distributed and collaborative: it keeps data where it is created and trains models there [17,37,38]. In the federated learning architecture, the Aggregated Global Model resides at a centralized software-defined networking controller (or server) that collects updates from local models to improve the global detection model, while the distributed software-defined networking architecture underscores federated learning’s role in scalable, privacy-conscious threat detection in geo-distributed networks [39].

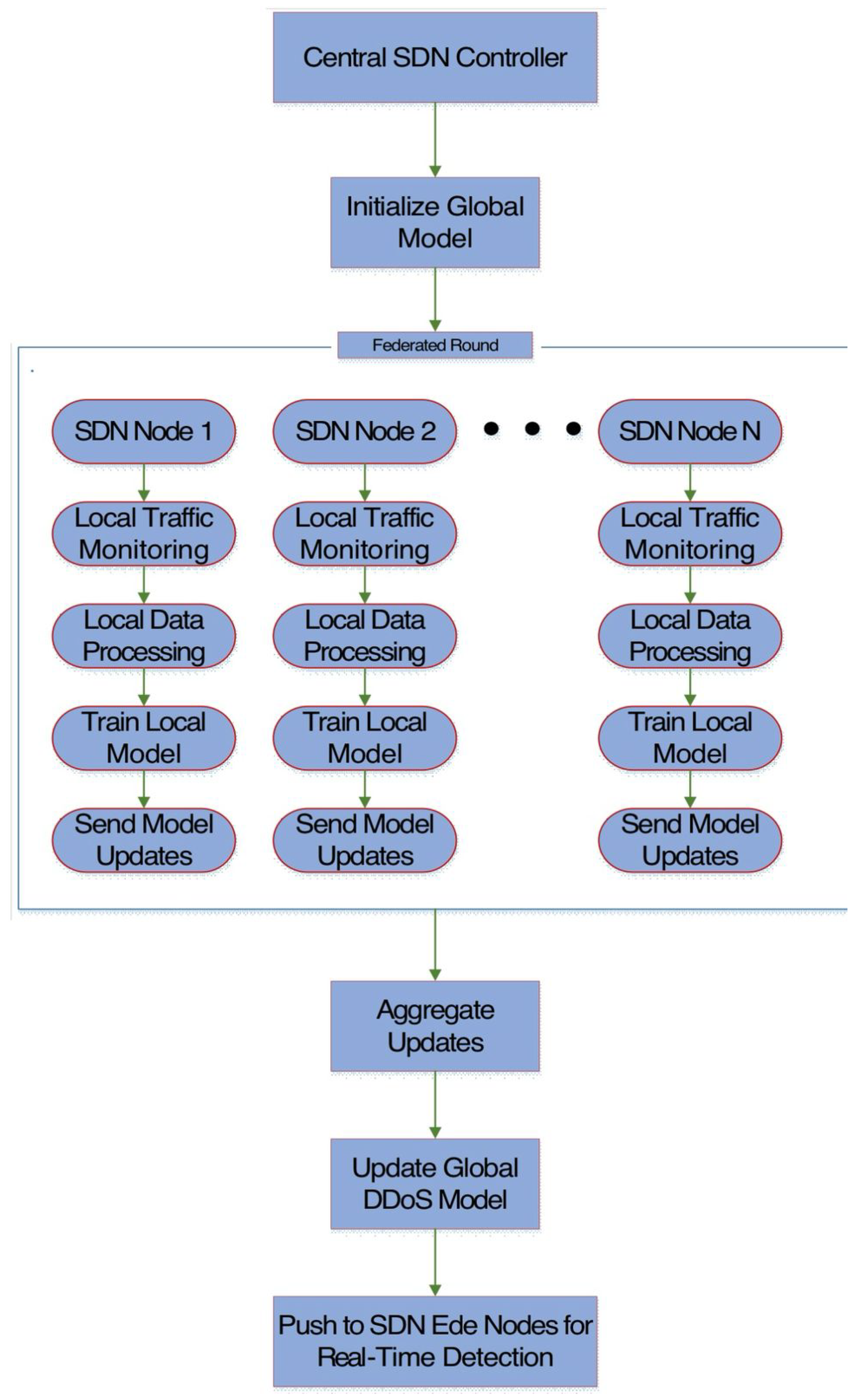

Figure 5 depicts the federated learning workflow, in which each local node (distributed SDN switches/controllers) collects local traffic data (packet headers, flow statistics, etc.) and independently trains a local model on its own data. Instead of sharing raw data, these nodes transmit only model updates to a central server for aggregation. The server combines these updates into an improved global model that reflects the collective insights of all participants, and the updated global model is then sent back to the edge nodes for real-time DDoS detection. This process reduces the risk of data leakage, preserves data privacy, and minimizes bandwidth usage by avoiding the transfer of large datasets [40,41]. In DDoS-specific contexts, local nodes typically preprocess their traffic before training by removing redundant heartbeat packets, normalizing flow-level statistics, and filtering out incomplete TCP handshakes to conserve bandwidth. Aggregation strategies such as FedAvg or weighted averaging are adjusted to account for heterogeneous traffic intensities across software-defined networking domains, where some controllers may experience heavy attack loads while others see only normal traffic. Additionally, feature extraction at the edge often emphasizes DDoS-relevant metrics such as packets per second, average flow duration, and source IP entropy, so that the updates capture meaningful attack behavior. These measures aim to keep federated learning-based detection resilient against poisoning attacks while reducing communication overhead and preserving local privacy.
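The aggregation step can be sketched as FedAvg, which averages each model parameter across clients, weighted by the number of local training samples. In this minimal sketch, models are assumed to be flat lists of floats and the controller names and sample counts are hypothetical. For contrast, it also shows coordinate-wise median aggregation, one commonly used robust alternative; plain FedAvg on its own does not guard against poisoned updates.

```python
from statistics import median

def fed_avg(client_weights, client_sizes):
    """FedAvg: average each parameter across clients, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]

def coordinate_median(client_weights):
    """Robust alternative: per-parameter median, less sensitive to a few outlying updates."""
    n_params = len(client_weights[0])
    return [median(w[j] for w in client_weights) for j in range(n_params)]

# Hypothetical round with two SDN controllers holding flat parameter vectors.
controller_a = [1.0, 2.0]   # trained on 100 local flows
controller_b = [3.0, 6.0]   # trained on 300 local flows (e.g., a domain under attack)
global_model = fed_avg([controller_a, controller_b], [100, 300])
print(global_model)  # [2.5, 5.0]
```

Weighting by sample count lets controllers that observe more traffic pull the global model further, which matches the heterogeneous-load setting described above; the server would then send `global_model` back to every controller for the next round.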

Figure 6 illustrates the overall process of detecting and mitigating DDoS attacks in SDN. Malicious traffic first targets the SDN controller, where a defense module analyzes it using Machine Learning, Deep Learning, and Federated Learning approaches. These methods enable the system to identify and mitigate the attack, restoring normal network traffic.

The research methodology involves identifying search terms, selecting sources, and applying inclusion/exclusion criteria. Search terms were chosen to find literature on DDoS attacks in software-defined networking; the core terms were “DDoS attack”, “SDN”, “Distributed Denial of Service”, “Software Defined Networking”, “DDoS detection in SDN”, and “SDN-based DDoS detection techniques”. Boolean operators were also applied to obtain better results: (“DDoS” OR “Distributed Denial of Service”) AND (“SDN” OR “Software Defined Networking”) AND (“detection” OR “prevention” OR “mitigation”); “DDoS attack” AND “SDN controller”; and “security challenges in SDN” AND “DDoS”. The search sources were Google Scholar, SpringerLink, IEEE Xplore, ScienceDirect, ACM Digital Library, and Scopus. Recent work (2021–2025) was selected to keep pace with evolving software-defined networking technology, with a focus on papers that report performance either in simulations (e.g., Mininet) or in actual implementations. Papers dealing with unrelated attacks or technologies were excluded, e.g., DNS attacks, phishing, ransomware, or attacks in conventional networks/cloud, unless specifically related to software-defined networking. Papers that merely duplicate previous work without significant new results, or whose complete content was not available for analysis, were also excluded.

The novelty of this survey lies in categorizing DDoS detection/mitigation models based on machine learning, deep learning, and federated learning into domain-specific SDN environments and general-purpose SDN environments. Their performance parameters are also discussed. A further novel contribution is the categorization of datasets into Legacy Compatible Datasets and SDN-Contextual Datasets.

This paper is organized as follows.

Section 1, ‘Introduction and Background’, discusses the fundamentals of software-defined networking architecture, DDoS attacks, the machine learning, deep learning, and federated learning architectures used to identify DDoS attacks in software-defined networking, and the research methodology. Section 2 presents a comparative analysis of DDoS detection/mitigation approaches in domain-specific vs. general-purpose software-defined networking environments. Section 3 compares the performance parameters of DDoS detection/mitigation techniques in a software-defined networking environment. Section 4 discusses the categorization of datasets into legacy compatible datasets and SDN-contextual datasets. Finally, Section 5 presents future directions and the conclusion.

2. Comparative Analysis

Table 1 classifies and contrasts the machine learning techniques used for DDoS detection and prevention in general-purpose software-defined networking settings. Each row lists the algorithm(s), dataset(s), strengths, limitations, and simulation tools of a technique.

The authors in Ref. [20] develop a novel SDN-specific dataset using the Mininet emulator and Ryu controller, which captures both benign and malicious traffic. They extract 23 flow- and port-level features and label the traffic data accordingly. The core methodology involves training various machine learning classifiers, with a hybrid model combining RF and SVC achieving the best performance. However, the hybrid model incurs computational overhead and requires tuning for real-world deployment.

The main methodology employed in Ref. [42] to detect DDoS attacks is a supervised machine learning framework integrated with a custom dataset generated in the Mininet emulator. The authors first designed realistic SDN topologies and simulated both benign and attack traffic using tools such as MGEN and hping3. Network traffic was captured, processed into flow-level features, and then preprocessed. Five machine learning classifiers, LR, SVM, RF, KNN, and XGBoost, were trained and tested on the dataset. The proposed framework is shown in Figure 7. Performance evaluation showed that the RF model achieved the highest detection accuracy with a low FAR. However, the dataset was generated from synthetic traffic, which may not fully capture real-world variability, and the computational overhead of hybrid models was not evaluated.

The study by Ref. [43] deploys a real-time, adaptive intrusion detection and prevention framework. The approach builds an ensemble of online machine learning classifiers (Bernoulli Naïve Bayes, Passive-Aggressive, SGD, and MLP) that are trained incrementally on streaming data from live network traffic, collected via a custom-built Traffic Collector module in the Ryu software-defined networking controller. The system continuously analyzes flow statistics and dynamically selects the most suitable features using a refined Chi-squared feature selection technique. Although this architecture handles zero-day and low-rate DDoS attacks effectively, the ensemble and online learning incur higher computational complexity.

Previous research [44] proposes a hybrid approach called ML-Entropy, which combines statistical entropy analysis with machine learning to enhance DDoS detection in a software-defined networking environment. The detection process has three steps: (1) calculate the entropy of selected network traffic attributes to detect anomalies; (2) use these entropy values as input to an SVR model with a Radial Basis Function (RBF) kernel that dynamically learns and adjusts entropy thresholds; and (3) apply the learned thresholds to incoming traffic to identify and flag potential attacks. The evaluation relies on two datasets: the synthetic DARPA2009 dataset and a corporate network dataset (RealCorp). However, the reliance on entropy-based features and the manual selection of attributes could limit adaptability to emerging attack vectors.
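Steps (1) and (3) of an entropy-based detector can be sketched as follows. As a loudly stated simplification, step (2) is replaced here by a simple statistical threshold (mean minus k standard deviations of entropy in attack-free windows) rather than the RBF-kernel SVR used in the surveyed work; the baseline entropy values, window contents, and function names are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, pstdev

def shannon_entropy(values):
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def learn_threshold(normal_entropies, k=3.0):
    """Stand-in for step (2): mean minus k standard deviations of entropy in
    attack-free windows. (The surveyed work learns this threshold with an SVR.)"""
    return mean(normal_entropies) - k * pstdev(normal_entropies)

def flag_window(dst_ips, threshold):
    """Step (3): traffic converging on few destinations lowers entropy below the threshold."""
    return shannon_entropy(dst_ips) < threshold

threshold = learn_threshold([2.9, 3.0, 3.1])  # hypothetical baseline windows
print(flag_window(["10.0.0.5"] * 32, threshold))                      # True
print(flag_window([f"10.0.0.{i}" for i in range(8)] * 4, threshold))  # False
```

A fixed statistical threshold like this cannot adapt as traffic patterns drift, which is precisely the gap that a learned threshold model such as an SVR is meant to close.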

In Ref. [45], the authors introduce K-DDoS-SDN, a distributed methodology for detecting DDoS attacks in software-defined networking environments that leverages Apache Kafka and scalable machine learning. The approach comprises two modules. The NTClassification module trains H2O machine learning algorithms (e.g., Gradient Boosting Machine) on a Hadoop cluster using the CICDDoS2019 dataset, then deploys the optimized model on a Kafka Streams cluster (KC-3) to classify network traffic in real time. Kafka clusters KC-1 and KC-2 preprocess incoming traffic by extracting 21 critical features (e.g., packet length, flow intervals) with CICFlowMeter, normalizing them, and replicating the data to KC-3 for analysis. The NTStorage module persistently stores raw packets, network flows, and features in HDFS for model retraining and historical analysis. Evaluated across multiple attack scenarios, the system achieves high accuracy, but its evaluation is limited to CICDDoS2019 and lacks diverse SDN-specific datasets.

According to Ref. [26], a machine learning-based methodology is used to detect non-periodic Low-rate Denial of Service (LDoS) attacks in software-defined networking. The approach simulates both traditional and non-periodic LDoS attacks in a software-defined networking environment using Mininet and the Ryu controller, where non-periodic attacks use random values for parameters such as attack rate (R), duration (L), and period (T). Network traffic data is collected with tools such as TCPDump, and key features such as TCP/UDP packet counts, standard deviation, skewness, and the UDP-to-TCP ratio (UTR) are extracted. These features are preprocessed and used to train supervised (SVM, LR) and unsupervised (BIRCH) machine learning models. The LR model achieves the highest accuracy and the fastest detection time. However, there is no validation on real-world datasets, and the work focuses only on LDoS attacks rather than all DDoS variants.

The experimental setup in Ref. [46] uses Mininet and the Ryu controller to emulate a software-defined networking environment, where normal and attack traffic data are collected and stored in a CSV file. Machine learning algorithms, including DT, RF, GNB, SVM, and ETC, are trained on this dataset to classify traffic as normal or malicious. When an attack is detected, the Ryu controller dynamically blocks the offending port to mitigate the attack and unblocks it after a specified time. The results show high detection rates, particularly for the Extra Trees Classifier, which achieved high accuracy. However, the work is limited to SYN flood attacks, and emulation may not fully reflect real-world network conditions.
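The block-then-unblock behavior can be sketched as a small bookkeeping class. `PortBlocker`, its methods, and the hold-down periods are hypothetical and are not Ryu APIs; in an OpenFlow controller, a comparable effect is commonly obtained by installing a drop rule with a `hard_timeout`, after which the switch removes the entry on its own.

```python
import time

class PortBlocker:
    """Hypothetical helper that tracks blocked (datapath_id, port) pairs and
    releases each one once its hold-down period has elapsed."""

    def __init__(self, hold_seconds=60.0, clock=time.monotonic):
        self.hold_seconds = hold_seconds
        self.clock = clock            # injectable so the timing can be simulated
        self.deadlines = {}           # (dpid, port) -> time at which to unblock

    def block(self, dpid, port):
        self.deadlines[(dpid, port)] = self.clock() + self.hold_seconds

    def is_blocked(self, dpid, port):
        deadline = self.deadlines.get((dpid, port))
        return deadline is not None and self.clock() < deadline

    def release_expired(self):
        """Remove and return entries whose hold-down period is over."""
        now = self.clock()
        expired = [key for key, deadline in self.deadlines.items() if deadline <= now]
        for key in expired:
            del self.deadlines[key]
        return expired

# Simulated clock so the example runs instantly.
fake_now = [0.0]
blocker = PortBlocker(hold_seconds=30.0, clock=lambda: fake_now[0])
blocker.block(dpid=1, port=3)
print(blocker.is_blocked(1, 3))   # True
fake_now[0] = 31.0
print(blocker.release_expired())  # [(1, 3)]
```

Releasing the port automatically limits collateral damage when a legitimate host shares a port with an attacker, at the cost of letting a persistent attack resume until it is detected again.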

The methodology used in [47] involves four key modules: a Flow Collector that collects traffic data from switches, a Feature Extender that enhances native OpenFlow features, an Anomaly Detection module that uses machine learning classifiers to label flows as normal or malicious, and an Anomaly Mitigation module that blocks attack sources. The system was tested in a Mininet-emulated software-defined networking environment, with Random Forest achieving the highest accuracy. By extending flow features, the model improved detection performance compared to methods relying solely on native OpenFlow counters, enabling precise attack mitigation without disrupting legitimate traffic. However, it may be ineffective against IP spoofing because it relies on Ethernet addresses for mitigation.

The study by Ref. [48] simulates a software-defined networking environment using the Ryu controller and Mininet, where normal and attack traffic flows are generated and labeled. Key flow statistics are collected and stored in a dataset. SVM, NB, and MLP (Multi-Layer Perceptron) classifiers are trained on this dataset to classify traffic, and the MLP achieved the highest accuracy in real-time detection. Upon identifying an attack, the system blocks the malicious flow, leveraging SDN’s programmability for rapid mitigation. Although the results illustrate the effectiveness of MLP in distinguishing and mitigating SYN flood attacks, only TCP SYN flood attacks are evaluated; other attacks are not considered.

The authors in Ref. [49] introduce a three-stage structure to detect and mitigate DDoS attacks in software-defined networking. First, it collects traffic data via OpenFlow from the data plane to the control plane, where key features are quantified using Rényi entropy. In the second stage, it models the expected traffic state using a hybrid metaheuristic clustering algorithm that combines Ant Colony Optimization (ACO) and Particle Swarm Optimization (PSO) to form clusters of normal traffic behavior. Multinomial logistic regression is then used to classify incoming traffic based on deviations from these clusters. Finally, the third stage implements mitigation policies that block, drop, or redirect traffic flows from suspicious IPs or ports. However, it lacks validation on real-world datasets, and the mitigation strategy is limited to static policies.
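Rényi entropy generalizes Shannon entropy with an order parameter $\alpha$: $H_\alpha(X) = \frac{1}{1-\alpha}\log_2 \sum_i p_i^{\alpha}$ for $\alpha \ge 0$, $\alpha \ne 1$, which tends to the Shannon entropy as $\alpha \to 1$. The minimal sketch below computes it over a window of observed values such as destination IPs; the example windows are hypothetical, and the choice of $\alpha$ used in the surveyed work is not assumed.

```python
import math
from collections import Counter

def renyi_entropy(values, alpha=2.0):
    """Rényi entropy of order alpha (bits) of the empirical distribution of `values`.
    alpha == 1 is treated as its limit, the Shannon entropy."""
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    n = len(values)
    probs = [c / n for c in Counter(values).values()]
    if alpha == 1:
        return -sum(p * math.log2(p) for p in probs)
    return math.log2(sum(p ** alpha for p in probs)) / (1.0 - alpha)

uniform = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
skewed = ["10.0.0.1"] * 3 + ["10.0.0.2"]
print(renyi_entropy(uniform, 2.0))  # 2.0
```

Orders above 1 weight the most frequent values more heavily, so a single destination attracting most of the traffic lowers $H_2$ more sharply than it lowers the Shannon entropy; this sensitivity is a common motivation for using Rényi entropy in flooding detection.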

The DenStream clustering algorithm is used in Ref. [50] to identify DDoS and port-scan attacks in real time using entropy-based features extracted from source and destination IP addresses and ports. DenStream dynamically creates micro-clusters from network traffic data and classifies new behaviors as potential anomalies based on deviations in entropy patterns. Attacks are recognized when a new potential micro-cluster (P-MC) forms within a defined “potential area” (PA) that diverges significantly from the core micro-clusters (C-MCs), which represent normal traffic. The system updates continuously without requiring labeled data or prior training, which makes it suitable for evolving, high-speed software-defined networking environments. The method was tested on 48 simulated datasets with various attack intensities.

A hybrid machine learning approach used in [51] combines Self-Organizing Maps (SOM) and k-Nearest Neighbors (k-NN) to detect and mitigate DDoS attacks in a software-defined networking environment. The system comprises four key modules: statistics collection, feature extraction, attack detection, and defense. Network traffic features are collected from flow tables and preprocessed with SOM to reduce dimensionality and enhance pattern recognition. The preprocessed vectors are then classified with k-NN to determine whether a DDoS attack is in progress. Upon detecting an attack flow, the system dynamically installs flow rules to drop malicious traffic and later restores blocked ports based on an adaptive recovery model. The system is evaluated on a regenerated CAIDA DDoS 2007 dataset.

Table 2 summarizes machine learning-based DDoS detection/mitigation policies in domain-specific SDN environments.

The authors in Ref. [52] propose a feature selection mechanism that uses the Chi-square statistical test to identify the most relevant features in network traffic data. They then train a Decision Tree classifier on the selected features for effective attack detection. The system collects flow statistics from the SDN controller and applies the trained model to classify traffic as either benign or malicious, enabling DDoS attack identification. However, the approach is limited to flooding-based DDoS attacks, and performance may vary in larger-scale IoT networks.
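Chi-square feature selection can be sketched in its contingency-table form: for a discretized feature, compare the observed co-occurrence counts of feature values and class labels with the counts expected under independence, then keep the highest-scoring features. The feature names, discretized values, and labels below are hypothetical; the surveyed work's exact scoring and discretization are not assumed.

```python
from collections import Counter

def chi_square(feature_values, labels):
    """Pearson chi-square statistic of independence between a discrete feature and the label."""
    n = len(labels)
    joint = Counter(zip(feature_values, labels))
    f_counts = Counter(feature_values)
    l_counts = Counter(labels)
    stat = 0.0
    for f, fc in f_counts.items():
        for lab, lc in l_counts.items():
            expected = fc * lc / n            # count expected if feature and label were independent
            observed = joint.get((f, lab), 0)
            stat += (observed - expected) ** 2 / expected
    return stat

def select_top_k(features, labels, k):
    """Rank features by chi-square score and keep the k most label-dependent ones."""
    scores = {name: chi_square(values, labels) for name, values in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]

labels = ["attack", "attack", "normal", "normal"]
features = {
    "pkt_rate_level": ["high", "high", "low", "low"],  # tracks the label exactly
    "ttl_parity": ["odd", "even", "odd", "even"],      # unrelated to the label
}
print(select_top_k(features, labels, k=1))  # ['pkt_rate_level']
```

A higher score means the feature's distribution differs more between benign and malicious flows, so discarding low-scoring features shrinks the input to the Decision Tree without removing the attributes that actually separate the classes.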

The paper in Ref. [53] introduces FMDADM, a multi-layer DDoS attack detection/mitigation framework designed for stateful SDN-based IoT networks. The framework comprises four key modules: (1) an early detection module that uses a 32-packet window and entropy-based analysis for quick anomaly identification; (2) a novel Double-Check Mapping Function (DCMF) that detects spoofed IP/MAC addresses at the data plane level; (3) a machine learning-based detection module that employs feature engineering, including five newly computed features, to train and classify traffic with models such as SVM, GNB, kNN, BLR, DT, and RF; and (4) a mitigation module that dynamically installs high-priority flow rules to drop attack packets. Although it improves accuracy, performance may vary in larger-scale networks or with different attack types.

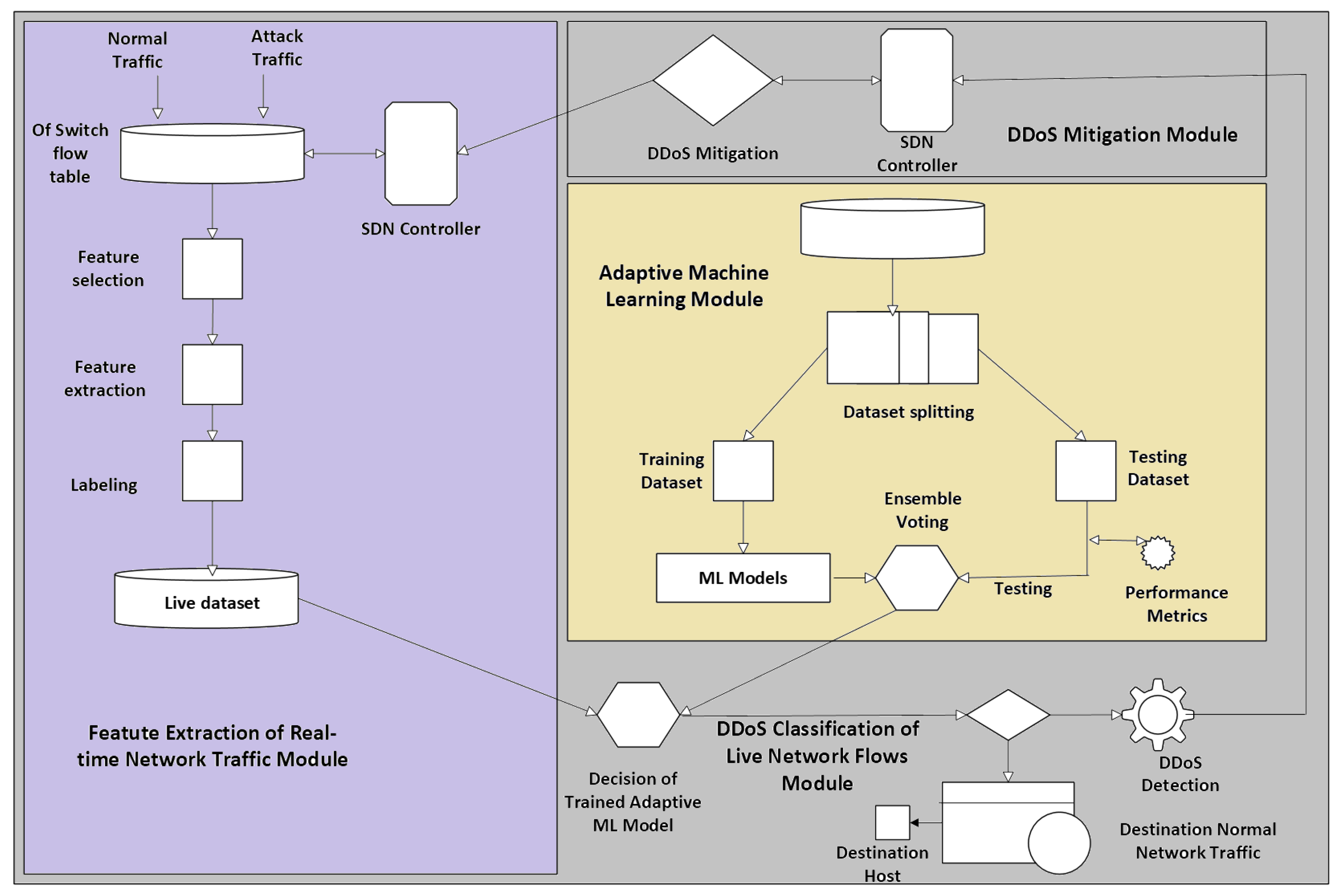

An Adaptive Machine Learning-based SDN-enabled DDoS attack Detection and Mitigation (AMLSDM) framework has been proposed in Ref. [54], as shown in Figure 8. It employs a multilayered feed-forward architecture that begins by training an ensemble of machine learning classifiers, including SVM, NB, RF, kNN, and LR. These models are aggregated through an Ensemble Voting (EV) mechanism to enhance classification accuracy. The trained model analyzes real-time traffic features such as the rate of source IPs, the standard deviation of flow packets, the standard deviation of flow bytes, the rate of flow entries on a switch, and the ratio of pair-flow entries on switches. Detection occurs at the OpenFlow (OF) switches, and upon identification of a DDoS attack, the software-defined networking controller executes mitigation by reconfiguring network paths and flow rules to isolate malicious traffic and maintain legitimate traffic flows. However, it requires environment-specific training data and incurs computational overhead from running multiple classifiers.
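Ensemble voting can be sketched as a hard majority vote over the labels produced by each classifier for one flow. This is a minimal illustration: the per-classifier predictions are hypothetical, and breaking ties toward `"attack"` is an assumption made here to favor detection over missed attacks, not a rule taken from the surveyed framework.

```python
from collections import Counter

def ensemble_vote(predictions, tie_label="attack"):
    """Hard majority vote over per-classifier labels for one flow.
    Ties go to `tie_label` when it is among the tied labels (an assumption)."""
    counts = Counter(predictions.values())
    top = max(counts.values())
    winners = [label for label, count in counts.items() if count == top]
    return tie_label if len(winners) > 1 and tie_label in winners else winners[0]

# Hypothetical outputs of the five base classifiers for a single flow.
votes = {"SVM": "attack", "NB": "normal", "RF": "attack", "kNN": "attack", "LR": "normal"}
print(ensemble_vote(votes))  # attack
```

With an odd number of binary classifiers, as in the five-model ensemble above, ties cannot occur; the tie rule only matters if a classifier is dropped or more classes are added.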

The framework used in Ref. [55] operates in three layers (infrastructure, secure and intelligent software-defined networking, and service layers), leveraging SDN’s centralized control to monitor and manage network traffic. XGBoost is employed for binary and multiclass classification, achieving high accuracy by analyzing features such as connection counts, packet rates, and traffic statistics to distinguish normal from malicious traffic. The model is trained and validated on the BoT-IoT dataset to evaluate its effectiveness in mitigating DDoS attacks in smart city environments. However, it is limited to the BoT-IoT dataset and may not generalize to all DDoS attack types, and XGBoost’s complexity introduces computational overhead.

The main method used in [56] to detect DDoS attacks in SDN-enabled smart home networks combines signature-based detection with machine learning models. The framework employs SNORT, a signature-based intrusion detection system (IDS), to protect the software-defined networking controller by matching traffic against predefined rules for known attacks. For smart devices, the system uses the supervised machine learning algorithms DT, LR, SVM, and KNN, trained on network traffic data, to classify and detect DDoS attacks in real time. Feature selection techniques such as Principal Component Analysis (PCA) and a feature selector class are applied. The framework operates within the SDN controller, leveraging its centralized control to monitor traffic and mitigate attacks dynamically. However, the experiments are limited to TCP SYN flood attacks, and SNORT requires manual rule updates for new attacks.

Table 3 below summarizes deep learning-based DDoS detection/mitigation policies in general-purpose SDN environments.

The study by Ref. [57] proposes a CNN-based deep learning model. The approach first collects traffic statistics from the SDN controller and then extracts flow-based features to construct a dataset. These features are normalized and reshaped into a format compatible with the CNN input layer. The CNN architecture consists of convolutional and pooling layers followed by fully connected layers, and it is trained to classify traffic as normal or DDoS. The model exploits the CNN’s ability to automatically extract hierarchical spatial features from the data. However, the computational cost for large-scale networks has not been fully explored, and reliance on pre-trained models may limit adaptability to new attack patterns.

In Ref. [58], a hybrid CNN-MLP deep learning architecture processes software-defined networking traffic features, automatically extracting spatial characteristics through CNN layers and then classifying the traffic with fully connected MLP layers. The approach uses flow-based traffic statistics collected from the software-defined networking controller, with preprocessing and normalization applied to the dataset. The model is trained and tested on a labeled dataset to identify DDoS attacks and demonstrates improved accuracy and performance compared to the individual models. However, it has high computational complexity and potential scalability issues in large software-defined networking environments.

The detection methodology proposed in [59], shown in Figure 9, is a hybrid deep learning approach that combines a CNN with a Bidirectional Long Short-Term Memory (BiLSTM) model. Network traffic data from the CICDDoS2019 dataset is first collected and preprocessed. The CNN component extracts local spatial features from the input traffic data, while the BiLSTM network captures temporal dependencies and contextual information from the sequence of network events. This combined architecture improves the model’s ability to accurately identify patterns resembling DDoS attacks. However, the approach is limited to detection: it does not explore real-world mitigation deployment and is tested on only one dataset.

The approach proposed in Ref. [39] integrates a DNN model trained on network traffic data into the software-defined networking environment via the Ryu controller. The process begins by capturing real-time network traffic, preprocessing the data, and feeding it into the pre-trained DNN model for classification. The model analyzes traffic patterns to differentiate between normal and malicious traffic, with a threshold-based mechanism to flag potential DDoS attacks. The model’s performance is validated on datasets such as InSDN, CICIDS2018, and Kaggle DDoS. Upon detection, the system mitigates the threat by dynamically blocking malicious traffic. However, the mitigation mechanism is not evaluated in depth beyond detection.

The authors of Ref. [60] proposed a technique based on a Generative Adversarial Network (GAN) framework. The system collects network traffic data from OpenFlow switches in near-real time (every second), extracts features such as bit rates, packet rates, and entropy metrics for IP/port distributions, and feeds these features into a GAN-based anomaly detection model. The GAN consists of a generator that creates adversarial examples and a discriminator trained to distinguish between normal traffic and malicious DDoS traffic, which makes the system robust against adversarial perturbations. Upon detection, a mitigation module blocks malicious traffic using an Event-Condition-Action (ECA) model and a Safe List mechanism. The approach was validated on emulated software-defined networking environments and the CICDDoS2019 dataset. However, it requires adversarial training, which can impose a high computational load.
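Entropy metrics over IP/port distributions, one of the feature families listed above, can be computed for each one-second window roughly as follows. This minimal sketch uses Shannon entropy in bits. The exact normalization and feature set used in Ref. [60] may differ, and the window contents are hypothetical. Intuitively, a flood from many spoofed sources pushes source-IP entropy up, while traffic converging on a single victim pushes destination-IP entropy down.

```python
import math
from collections import Counter
from typing import Iterable


def shannon_entropy(values: Iterable[str]) -> float:
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical one-second window of (src_ip, dst_ip) pairs seen at a switch:
# eight distinct sources all targeting one destination.
window = [(f"10.0.0.{i}", "10.0.0.100") for i in range(1, 9)]
src_entropy = shannon_entropy(src for src, _ in window)
dst_entropy = shannon_entropy(dst for _, dst in window)
print(src_entropy, dst_entropy)
```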

In Ref. [61], a hybrid deep learning approach termed SAE-MLP combines a Stacked Auto-Encoder (SAE) with a Multi-Layer Perceptron (MLP). The SAE performs dimensionality reduction and feature extraction, while the MLP classifies network traffic as normal or malicious. The model is evaluated on the SDN-DDoS dataset, where it achieves high accuracy and outperforms other deep learning models such as LSTM, CNN, and CNN-LSTM. However, SAE-MLP requires a long processing time.

The technique used in Ref. [62] converts raw network traffic into RGB image data by splitting the traffic into 1 MB windows, preprocessing the data, and transforming it into 3D arrays representing pixel values. These arrays are then converted into images using OpenCV2 and resized to a common dimension. A custom CNN-based deep learning model classifies the images as normal or attack traffic. The model consists of multiple 2D-convolution, max-pooling, batch normalization, and dense layers and is optimized with the Adam algorithm. This approach leverages the CNN’s ability to automatically extract features from image-like representations of network data. However, its evaluation cost is high, and it lacks real-world deployment.
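The conversion of raw traffic bytes into image-like arrays can be illustrated with the standard library alone. The sketch below assumes that each window of bytes is zero-padded or truncated to fill a `height x width x 3` array and that consecutive bytes are read as R, G, and B channel values. The padding rule and tiny window size are assumptions for readability. Ref. [62] uses 1 MB windows and then resizes the resulting images with OpenCV2.

```python
from typing import List

Pixel = List[int]          # [R, G, B], each in 0..255
Image = List[List[Pixel]]  # rows of pixels


def bytes_to_rgb(window: bytes, height: int, width: int) -> Image:
    """Map a window of raw traffic bytes to a height x width RGB array.
    Consecutive byte triples become one pixel; short windows are zero-padded
    and long windows are truncated (an assumption, not taken from the source)."""
    needed = height * width * 3
    data = window[:needed].ljust(needed, b"\x00")
    image: Image = []
    for r in range(height):
        row = []
        for c in range(width):
            i = (r * width + c) * 3
            row.append([data[i], data[i + 1], data[i + 2]])
        image.append(row)
    return image


def split_windows(traffic: bytes, window_size: int) -> List[bytes]:
    """Split a capture into fixed-size windows (1 MB in the surveyed work)."""
    return [traffic[i:i + window_size] for i in range(0, len(traffic), window_size)]


traffic = bytes(range(20))  # hypothetical 20-byte capture
images = [bytes_to_rgb(w, height=2, width=2) for w in split_windows(traffic, 12)]
print(len(images), images[1])
```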

The architecture in Ref. [63] includes two main components: an Intrusion Detection System (IDS) based on LSTM neural networks and a Deep Reinforcement Learning (DRL)-based Intrusion Prevention System (IPS). Traffic is monitored at the software-defined networking data plane via edge switch mirroring, processed with CICFlowMeter to generate bidirectional flow statistics, and sampled for lightweight processing. The LSTM-based IDS detects suspicious flows and informs the DRL-based IPS, which uses a deep Q-learning agent per bidirectional connection to make mitigation decisions in real time. However, it focuses only on slow-rate attacks (e.g., application-layer attacks).
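The DRL-based IPS builds on Q-learning. A deep Q-network approximates the action-value function with a neural network, but the underlying update rule is easiest to see in its tabular form, sketched below. The states (`"normal"`, `"suspicious"`), actions (`"allow"`, `"block"`), rewards, and hyperparameters are hypothetical simplifications, not the design of Ref. [63].

```python
from collections import defaultdict
from typing import Dict, Tuple

ACTIONS = ("allow", "block")
QTable = Dict[Tuple[str, str], float]


def q_update(q: QTable, state: str, action: str, reward: float, next_state: str,
             alpha: float = 0.5, gamma: float = 0.9) -> None:
    """One tabular Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])


def greedy_action(q: QTable, state: str) -> str:
    """Pick the action with the highest estimated value in `state`."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


q: QTable = defaultdict(float)
# Hypothetical experience: blocking a suspicious connection is rewarded,
# while allowing it is penalized and leaves the connection suspicious.
for _ in range(20):
    q_update(q, "suspicious", "block", reward=1.0, next_state="normal")
    q_update(q, "suspicious", "allow", reward=-1.0, next_state="suspicious")
print(greedy_action(q, "suspicious"))
```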

The authors of Ref. [64] combined feature selection techniques with a deep learning model based on an LSTM-Autoencoder architecture. The method uses IG and RF to identify the most relevant SDN-specific traffic features from datasets including InSDN, CICIDS2017, and CICIDS2018. After preprocessing and normalization, the selected features are fed into the deep learning model. There, an unsupervised autoencoder extracts hierarchical representations, followed by supervised fine-tuning with LSTM layers to capture temporal traffic behavior. The model is integrated into the software-defined networking controller, and its performance impact is evaluated with the Cbench tool. Although it achieves high accuracy, its generalization across datasets is limited, and it is not deployed in a live software-defined networking environment.
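Information gain (IG) scores a feature by how much knowing its value reduces the entropy of the class label. A minimal sketch for already-discretized features follows. The toy dataset and feature names are hypothetical, and continuous SDN flow features would first need to be binned.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def entropy(labels: Sequence[str]) -> float:
    """Shannon entropy (bits) of a label sequence."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature: Sequence[str], labels: Sequence[str]) -> float:
    """IG(Y; X) = H(Y) - sum_v P(X = v) * H(Y | X = v) for a discrete feature X."""
    n = len(labels)
    conditional = 0.0
    for value, count in Counter(feature).items():
        subset = [y for x, y in zip(feature, labels) if x == value]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional


def rank_features(columns: Dict[str, List[str]], labels: List[str], k: int) -> List[str]:
    """Return the names of the k features with the highest information gain."""
    scores = {name: information_gain(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical discretized flow features and labels.
labels = ["attack", "attack", "normal", "normal"]
columns = {
    "pkt_rate": ["high", "high", "low", "low"],  # perfectly separates the classes
    "proto": ["udp", "tcp", "udp", "tcp"],       # carries no label information
}
print(rank_features(columns, labels, k=1))
```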

The authors of Ref. [65] first generate a custom SDN-specific dataset in Mininet by launching ICMP, UDP, and TCP flooding attacks. A Ryu controller monitors OpenFlow statistics, which are logged and labeled to form the dataset. To address data imbalance, SMOTE is applied at the protocol level, and the model is trained using TensorFlow/Keras. The core model is a stacked 1D-CNN with early stopping to prevent overfitting. NSGA-II, a multi-objective genetic algorithm, tunes seven critical hyperparameters, optimizing for both accuracy and training time. Although the approach achieves high accuracy, its evaluation is limited to a simulated software-defined networking environment.
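SMOTE balances the classes by synthesizing minority samples along the line segment between a minority sample and one of its k nearest minority-class neighbors. The sketch below shows that core interpolation step in pure Python. It is a simplified illustration with several assumptions: Euclidean distance, a fixed random seed, and hypothetical two-feature samples. It is not the protocol-level pipeline of Ref. [65], and production code would typically rely on a library implementation instead.

```python
import math
import random
from typing import List

Vector = List[float]


def k_nearest(sample: Vector, pool: List[Vector], k: int) -> List[Vector]:
    """k nearest neighbors of `sample` in `pool` (excluding itself), by Euclidean distance."""
    others = [p for p in pool if p is not sample]
    return sorted(others, key=lambda p: math.dist(sample, p))[:k]


def smote(minority: List[Vector], n_new: int, k: int = 2, seed: int = 0) -> List[Vector]:
    """Generate n_new synthetic minority samples by SMOTE-style interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbor = rng.choice(k_nearest(base, minority, k))
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (nb - b) for b, nb in zip(base, neighbor)])
    return synthetic


# Hypothetical minority-class (e.g., ICMP-flood) samples with two normalized features.
minority = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.25], [0.3, 0.3]]
new_samples = smote(minority, n_new=5)
print(len(new_samples))
```

Because every synthetic point lies between two real minority samples, the oversampled class stays inside the region already occupied by real attack traffic.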

The paper in [66] proposes a hybrid deep learning model that combines a 1D CNN, a Gated Recurrent Unit (GRU), and a Dense Neural Network (DNN) to detect DDoS attacks in software-defined networking. The architecture is designed to capture both spatial and temporal patterns in network traffic and is effective for identifying both high-rate and low-rate DDoS attacks. The CNN extracts short-term features, the GRU captures long-term dependencies, and the DNN performs classification. The model is trained and evaluated on both the CICDDoS2019 dataset and a custom-generated SDN dataset. However, it is not deployed in real time in a live SDN.

The CNN in Ref. [57] is used for both binary and multi-class classification of DDoS attack types. It employs pre-processing methods, including feature elimination and information gain-based feature selection, to enhance performance. Once deployed on the software-defined networking controller, the model monitors flow statistics from OpenFlow switches in real time, classifies traffic, and applies mitigation strategies if an attack is detected. An integrated monitoring module oversees blocked IPs to reduce false positives and ensure continued access for legitimate traffic. Additionally, a base64-encoded email alert system notifies administrators upon attack detection. However, the approach is evaluated on simulated data and is not validated in production networks. In addition, its IP-based mitigation could block legitimate hosts when source addresses are spoofed.

The approach in Ref. [67] combines a 1D CNN and an LSTM network, with the Siberian Tiger Optimization (STO) algorithm used for both optimal feature selection and hyperparameter tuning. To tackle dataset imbalance, a GAN synthesizes realistic attack samples. The processed and dimensionally reduced features are passed through the CNN-LSTM pipeline for classification. Steganography is then used for secure and efficient sharing of blacklists among distributed software-defined networking controllers. However, simulation and testing are not performed in a real software-defined networking environment, and the steganographic blacklist exchange is not benchmarked for speed or scalability.

An adversarial DDoS detection framework for software-defined networking is presented in Ref. [68]. It uses a hybrid deep learning model that combines a Deep Belief Network (DBN) and an LSTM network. The system incorporates GANs to generate perturbed adversarial samples, which are added to the training set. The DBN module performs dimensionality reduction on 88 flow features extracted with CICFlowMeter, while the LSTM captures temporal dependencies in the flow data. Together, the two models distinguish normal traffic from adversarial DDoS traffic. The detection module is integrated into a software-defined networking application layer with a mitigation system based on Event-Condition-Action (ECA) rules, allowing automated packet drops at the controller level. However, no real software-defined networking testbed is used, and the evaluation is purely offline. The source code is also not publicly available.
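An ECA rule of the kind used in Ref. [68], extended with a safe list as in Ref. [60], can be sketched as a small handler. The event is a detection verdict, the condition checks the verdict against the safe list, and the action installs a drop. The `DetectionEvent` fields and the `install_drop` callback are hypothetical. In an SDN controller, the action would translate into a flow-rule modification on the switch.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class DetectionEvent:  # Event: a classifier verdict for one source
    src_ip: str
    is_attack: bool


def make_eca_handler(safe_list: Set[str],
                     install_drop: Callable[[str], None]) -> Callable[[DetectionEvent], None]:
    """Build an ECA handler. On each event, if the verdict is 'attack' and the
    source is not safe-listed (condition), install a drop rule (action)."""
    def handle(event: DetectionEvent) -> None:
        if event.is_attack and event.src_ip not in safe_list:
            install_drop(event.src_ip)
    return handle


dropped: List[str] = []
handler = make_eca_handler(safe_list={"10.0.0.254"}, install_drop=dropped.append)
for ev in [DetectionEvent("10.0.0.5", True),
           DetectionEvent("10.0.0.254", True),  # safe-listed: never dropped
           DetectionEvent("10.0.0.7", False)]:
    handler(ev)
print(dropped)
```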

Table 4 turns to deep learning DDoS detection/mitigation policies in domain-specific SDN deployments, such as IoT, cloud, edge computing, and vehicular networks, where network requirements and constraints differ considerably.

The paper in [69] presents the Metaheuristic with Multi-Layer Ensemble Deep Reinforcement Learning for DDoS Attack Detection and Mitigation (MMEDRL-ADM) technique. The approach begins by preprocessing network data to clean and transform it, followed by African Buffalo Optimization-based Feature Selection (ABO-FS) to reduce computational complexity and enhance detection accuracy. The core detection mechanism is a Multi-Layer Ensemble Deep Reinforcement Learning (MEDRL) model, which combines deep learning and reinforcement learning to classify attacks. An Improved Grasshopper Optimization Algorithm (IGOA) fine-tunes the hyperparameters of the MEDRL model. The system is tested on an SDN-specific dataset. However, its ensemble and optimization layers add considerable computational complexity.

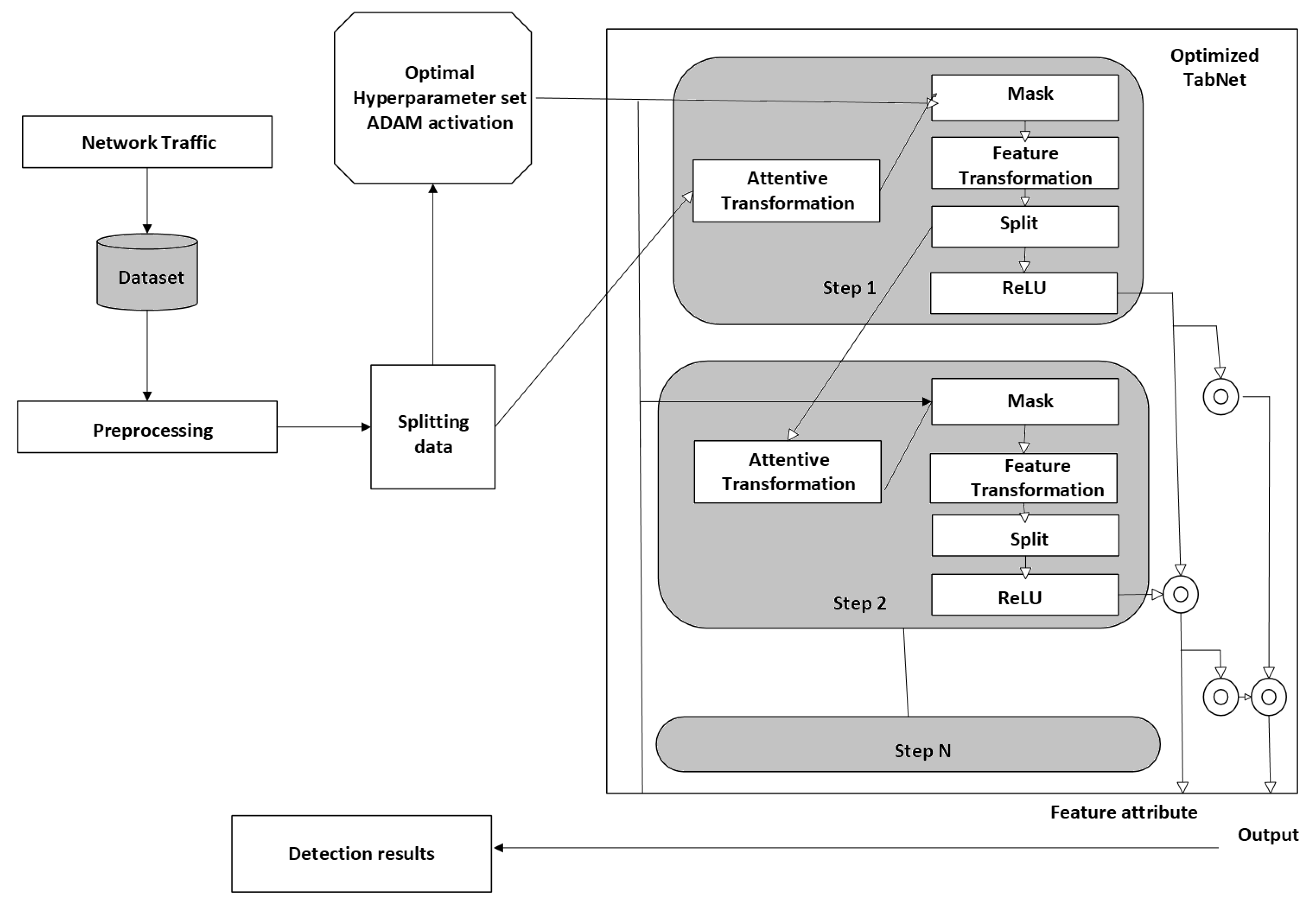

The study in Ref. [70] proposed a technique for detecting DDoS attacks in SDN-based VANETs, shown in Figure 10. It uses an optimized deep learning model called TabNet, trained with the Adam optimizer, with hyperparameters tuned by Grid Search Cross-Validation (GSCV). This approach processes tabular network traffic data and leverages TabNet’s feature selection and sequential decision-making capabilities to identify attack patterns. The model dynamically adjusts learning rates and employs sparse attention mechanisms to focus on the most relevant features, achieving high accuracy. However, the datasets may not fully represent real-world VANET diversity, and adversarial testing is limited.

The work in Ref. [71] introduces an LSTM-based technique for detecting low-rate DDoS (LDDoS) attacks in SDN-enabled IoT networks. The approach collects and preprocesses network traffic data from the Edge-IIoTset dataset, normalizes the features, and trains the LSTM model with the Adam optimizer and dropout regularization to prevent overfitting. The model analyzes time-series traffic patterns to distinguish LDDoS attacks from normal traffic with high accuracy. Key steps include feature extraction from software-defined networking controller logs, dynamic thresholding, and real-time prediction to mitigate malicious flows. In IoT-specific software-defined networking environments, the approach outperforms traditional methods such as SVM and decision trees. However, only one dataset is evaluated, and LSTM training is resource-intensive.
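The dynamic thresholding step can be illustrated independently of the LSTM itself. Suppose the model emits a stream of per-interval anomaly scores, such as its predicted attack probability or prediction error. A threshold that tracks the recent mean plus a multiple of the standard deviation then adapts to changing traffic. The window length, the multiplier `k`, and the scores below are hypothetical, and Ref. [71] does not necessarily use this exact rule.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, List


def dynamic_threshold_alerts(scores: Iterable[float], window: int = 5,
                             k: float = 3.0) -> List[int]:
    """Return indices whose score exceeds mean + k * stdev of the previous
    `window` accepted scores. Flagged scores are not added to the history,
    so an ongoing attack does not inflate the baseline."""
    history: Deque[float] = deque(maxlen=window)
    alerts: List[int] = []
    for i, s in enumerate(scores):
        if len(history) == window:
            recent = list(history)
            threshold = statistics.mean(recent) + k * statistics.pstdev(recent)
            if s > threshold:
                alerts.append(i)
                continue  # keep the attack score out of the baseline
        history.append(s)
    return alerts


# Hypothetical per-interval scores with one low-rate burst at index 6.
scores = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11, 0.95, 0.10]
print(dynamic_threshold_alerts(scores))
```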

The authors of Ref. [72] proposed CoWatch, a collaborative framework for predicting and detecting DDoS attacks in edge computing (EC) using distributed SDN. An optimal threshold model was introduced to balance collaboration efficiency and prediction accuracy, effectively filtering suspicious flows. A collaborative prediction algorithm based on LSTM was designed. Experiments on five datasets covering three attack types showed that CoWatch achieved high precision and accuracy. However, the paper relies on limited attack types and public datasets, which may not fully represent real-world DDoS scenarios.

The paper in [73] proposes an anomaly-based intrusion detection system called FeedForward–Convolutional Neural Network (FFCNN) to detect LR-DoS attacks in IoT-enabled software-defined networking environments. The system uses a hybrid deep learning model that combines a feedforward neural network with 2D convolutional layers to deeply mine traffic flow features. Using the CIC DoS 2017 dataset, the raw packets are first converted into bidirectional flow statistics with CICFlowMeter. The authors perform iterative SVM-based feature selection, reducing the feature set to seven critical attributes to speed up detection. FFCNN is trained on part of the data to establish a threshold for benign flows and is then tested against unseen attack traces. However, it is not deployed in real time in a software-defined networking environment and is evaluated only offline on CIC data.

DDoS attack detection in software-defined networking-based VANETs was carried out in Ref. [74] using SSAE and Softmax classifier deep network models. An edge controller was used to reduce the communication time between the vehicles and the main controller. Four SSAE and Softmax classifier deep network models were designed, with one, two, three, and four layers, and the four-layer model achieved the highest accuracy. However, VANET-based SDN systems require real-time responsiveness, which remains a challenge for this approach.

Table 5 shows federated learning DDoS detection/mitigation policies in general-purpose SDN environments.

The technique used in Ref. [75] is a federated learning-based approach named “FedLAD,” which leverages a decentralized machine learning framework to train models across multiple local controllers without exchanging raw data. Each local controller monitors network traffic, collects local data, and trains a model using the XGBoost algorithm. A root controller then aggregates these local models into a global model. This hierarchical structure reduces controller overhead and improves scalability, achieving high detection accuracy while preserving data privacy. The system was evaluated on the CICDoS2017, CICDDoS2019, and InSDN datasets. However, model accuracy depends on the aggregation technique, and the system is potentially vulnerable to data poisoning.

The federated learning-based approach shown in Figure 10 has been used in Ref. [17]. Three deep learning classifiers, DNN, CNN, and LSTM, are trained locally on distributed SDN controllers using the CICIDS 2019 dataset. Instead of centralizing data, each controller trains its model on local traffic data and shares only the model parameters with a central server. The server aggregates these parameters with the FedAvg algorithm to create a global model. Although the decentralized approach achieves high detection accuracy, the evaluation covers only three DDoS attack types, namely UDP flood, TCP SYN flood, and DNS flood, and relies solely on the CICIDS 2019 dataset.
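FedAvg averages the controllers’ model parameters, weighting each controller by its number of local training samples. The sketch below shows only the server-side aggregation on flattened parameter vectors. The controller names, sample counts, and parameter values are hypothetical, and the local DNN/CNN/LSTM training is omitted.

```python
from typing import Dict, List, Tuple


def fedavg(updates: Dict[str, Tuple[List[float], int]]) -> List[float]:
    """Federated Averaging: w_global = sum_k (n_k / n) * w_k, where n_k is the
    number of local samples at controller k and n = sum_k n_k."""
    total = sum(n for _, n in updates.values())
    dim = len(next(iter(updates.values()))[0])
    global_w = [0.0] * dim
    for weights, n in updates.values():
        if len(weights) != dim:
            raise ValueError("all local models must have the same shape")
        for i, w in enumerate(weights):
            global_w[i] += (n / total) * w
    return global_w


# Hypothetical flattened parameters and local sample counts from three SDN controllers.
updates = {
    "controller_a": ([1.0, 0.0], 100),
    "controller_b": ([0.0, 1.0], 300),
    "controller_c": ([1.0, 1.0], 100),
}
print(fedavg(updates))
```

Only these parameter vectors leave each controller, which is what keeps the raw traffic data local.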

Table 6 lists federated learning DDoS detection/mitigation policies in domain-specific SDN environments.

The research in Ref. [76] presents a Weighted Federated Learning (WFL)-based approach to detect LR-DDoS attacks in SDN-controlled IoT networks. Local Artificial Neural Network (ANN) models are trained on distributed edge devices using three algorithms: Bayesian Regularization (BR), Levenberg–Marquardt (LM), and Scaled Conjugate Gradient (SCG). These locally trained models share their optimized weights with a federated server, which aggregates them by assigning dynamic preferences based on each model’s accuracy. The federated server then forms a global model, which is validated and redistributed to the edge devices for real-time attack detection. However, the reliance on the CAIDA dataset may not generalize to all IoT/SDN scenarios. In addition, the weight preferences are assigned by trial and error rather than through theoretical optimization.
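Weighted aggregation differs from plain FedAvg in how each local model’s contribution is set. The following sketch makes a simplifying assumption: each edge device’s weight preference is proportional to its reported accuracy. Ref. [76] assigns the preferences empirically, so this normalization is an illustrative assumption rather than the paper’s rule. The accuracies and parameter vectors are hypothetical.

```python
from typing import List, Sequence


def accuracy_weighted_average(models: Sequence[Sequence[float]],
                              accuracies: Sequence[float]) -> List[float]:
    """Aggregate local parameter vectors with weights a_k / sum_j a_j."""
    if not models or len(models) != len(accuracies):
        raise ValueError("need one accuracy per model")
    total = sum(accuracies)
    prefs = [a / total for a in accuracies]
    dim = len(models[0])
    return [sum(p * m[i] for p, m in zip(prefs, models)) for i in range(dim)]


# Hypothetical ANN weights from edge devices trained with BR, LM and SCG.
local_models = [[0.2, 0.4], [0.6, 0.0], [0.4, 0.8]]
local_accuracy = [0.98, 0.95, 0.90]
print(accuracy_weighted_average(local_models, local_accuracy))
```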

Federated Learning for Decentralized DDoS Attack Detection (FL-DAD) is introduced in Ref. [41]. It employs a Convolutional Neural Network (CNN) trained in a federated learning framework, where local models are trained on distributed IoT devices without centralizing raw data. The federated learning process in IoT networks is shown in Figure 11. Model updates are aggregated at a central server to create a global model, which preserves data privacy and reduces communication overhead. The system was evaluated on the CICIDS2017 dataset and demonstrated high accuracy. However, aggregation across nodes imposes a high computational load, which creates integration challenges.

Ref. [77] combines a low-complexity CNN-MLP model with a filter-based Pearson Correlation Coefficient (PCC) feature selection technique. The resulting system, FedDDoS, trains local models on distributed software-defined networking controllers (industrial agents) using private traffic data. It then aggregates the model updates on a central server with the Federated Averaging (FedAvg) algorithm to preserve data privacy. The CNN-MLP architecture employs residual connections and factorized convolutions to enhance feature extraction and mitigate vanishing gradients, while the PCC technique reduces feature dimensionality by selecting highly correlated features. Evaluated on the CICDDoS2019 dataset, FedDDoS achieves high accuracy with low computational time in classifying DNS, UDP, and SYN flooding attacks. However, additional verification is required across varied IIoT environments.
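A filter-based PCC selector can rank features by the absolute Pearson correlation between each feature and the binary label. Another common variant instead drops features that are highly correlated with one another. The sketch below implements the first variant in pure Python. The feature names, values, and the 0.5 cut-off are hypothetical, and Ref. [77] may apply the filter differently.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient r(x, y); returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def pcc_select(features: Dict[str, List[float]], labels: List[float],
               min_abs_r: float = 0.5) -> List[str]:
    """Keep features whose |r| with the label is at least min_abs_r, strongest first."""
    scores = {name: abs(pearson(col, labels)) for name, col in features.items()}
    kept = [name for name, r in scores.items() if r >= min_abs_r]
    return sorted(kept, key=lambda name: scores[name], reverse=True)


labels = [1.0, 1.0, 0.0, 0.0]  # 1 = flooding attack, 0 = benign (hypothetical)
features = {
    "syn_ratio": [0.9, 0.8, 0.1, 0.2],             # tracks the label closely
    "pkt_size": [60.0, 1400.0, 60.0, 1400.0],      # uncorrelated with the label
    "flow_count": [500.0, 450.0, 20.0, 35.0],      # also tracks the label
}
print(pcc_select(features, labels))
```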

The surveyed literature indicates that machine learning, deep learning, and federated learning approaches hold significant potential for countering DDoS threats in both general-purpose and domain-specific SDN contexts. Supervised algorithms generally deliver strong detection accuracy with relatively straightforward designs. However, they often depend on artificially generated or narrow datasets, which restrict their ability to generalize to real-world traffic, and they tend to be optimized for a limited range of attacks such as SYN floods or low-rate DoS. Unsupervised strategies lessen the reliance on labeled data and can adapt to dynamic traffic conditions, but they are prone to higher false alarm rates and struggle with subtle or stealthy attacks. Deep learning-based architectures, including CNNs, LSTMs, GANs, and hybrid models, excel at identifying spatial–temporal traffic patterns and generally outperform traditional methods. However, their computational cost is high, and large-scale, real-time deployment in SDN testbeds remains rare. Efforts targeting IoT, VANETs, cloud, and edge networks underscore the versatility of machine learning and deep learning, yet these solutions remain limited by heterogeneous datasets and the resource constraints of such environments. More recently, federated learning-based techniques have been explored for their scalability and privacy preservation, which they achieve by distributing training across SDN controllers. Nevertheless, these methods are still at an early stage and face issues such as vulnerability to poisoning, expensive aggregation, and insufficient validation under diverse attack settings. Overall, the common gaps across current studies include heavy dependence on small or synthetic datasets, the absence of standardized SDN benchmarks, limited evaluation under adversarial or real-time conditions, and a lack of lightweight, adaptive, and deployment-ready solutions suitable for production SDN systems.