STGAN: A Fusion of Infrared and Visible Images

Abstract

1. Introduction

2. Methods

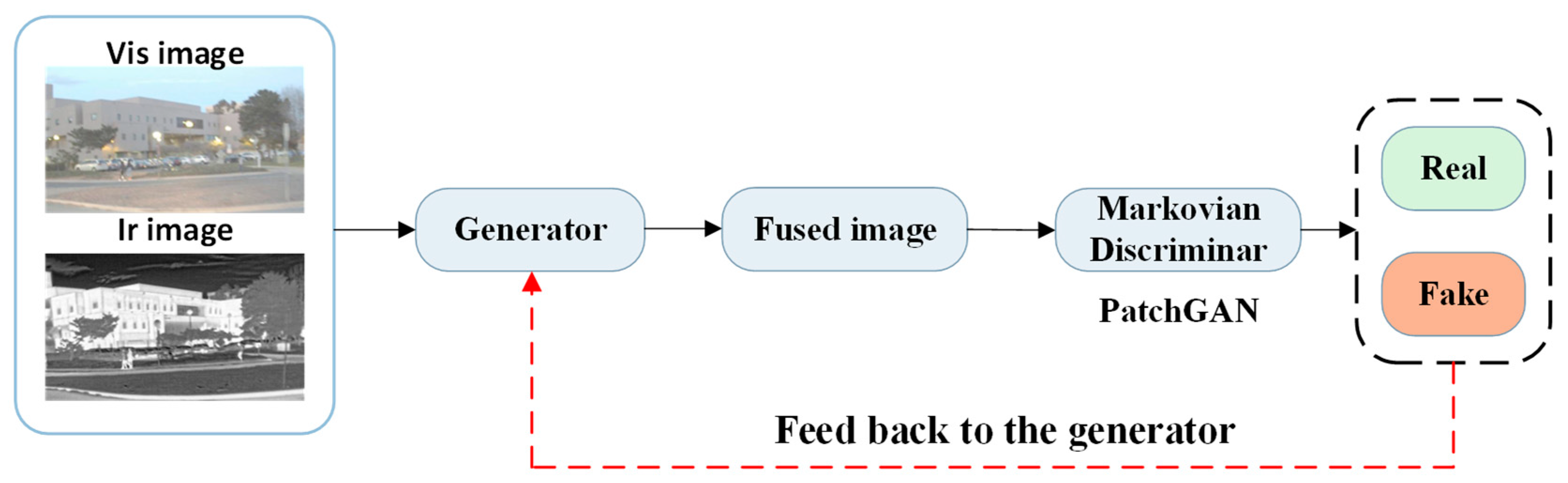

2.1. Overall Structure

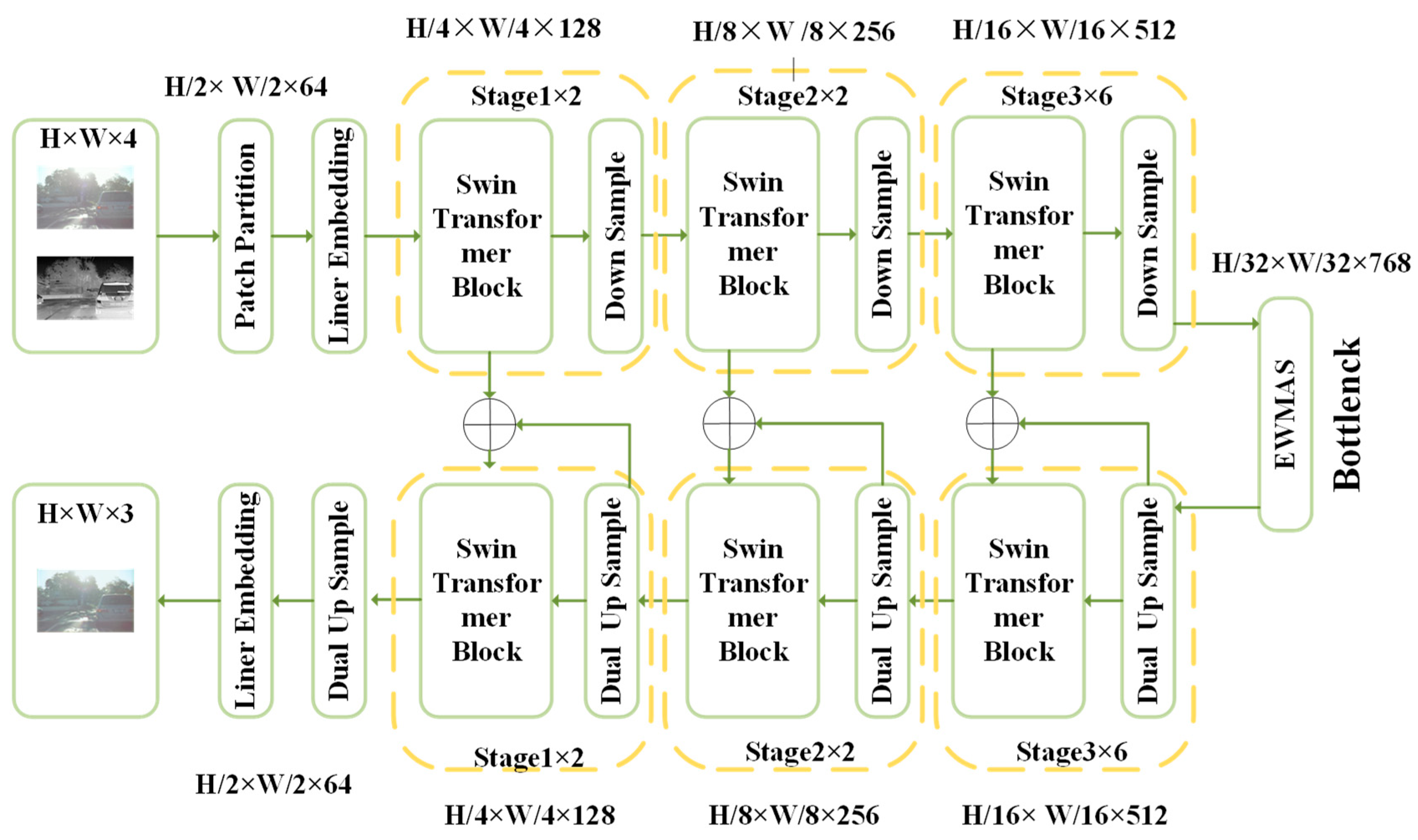

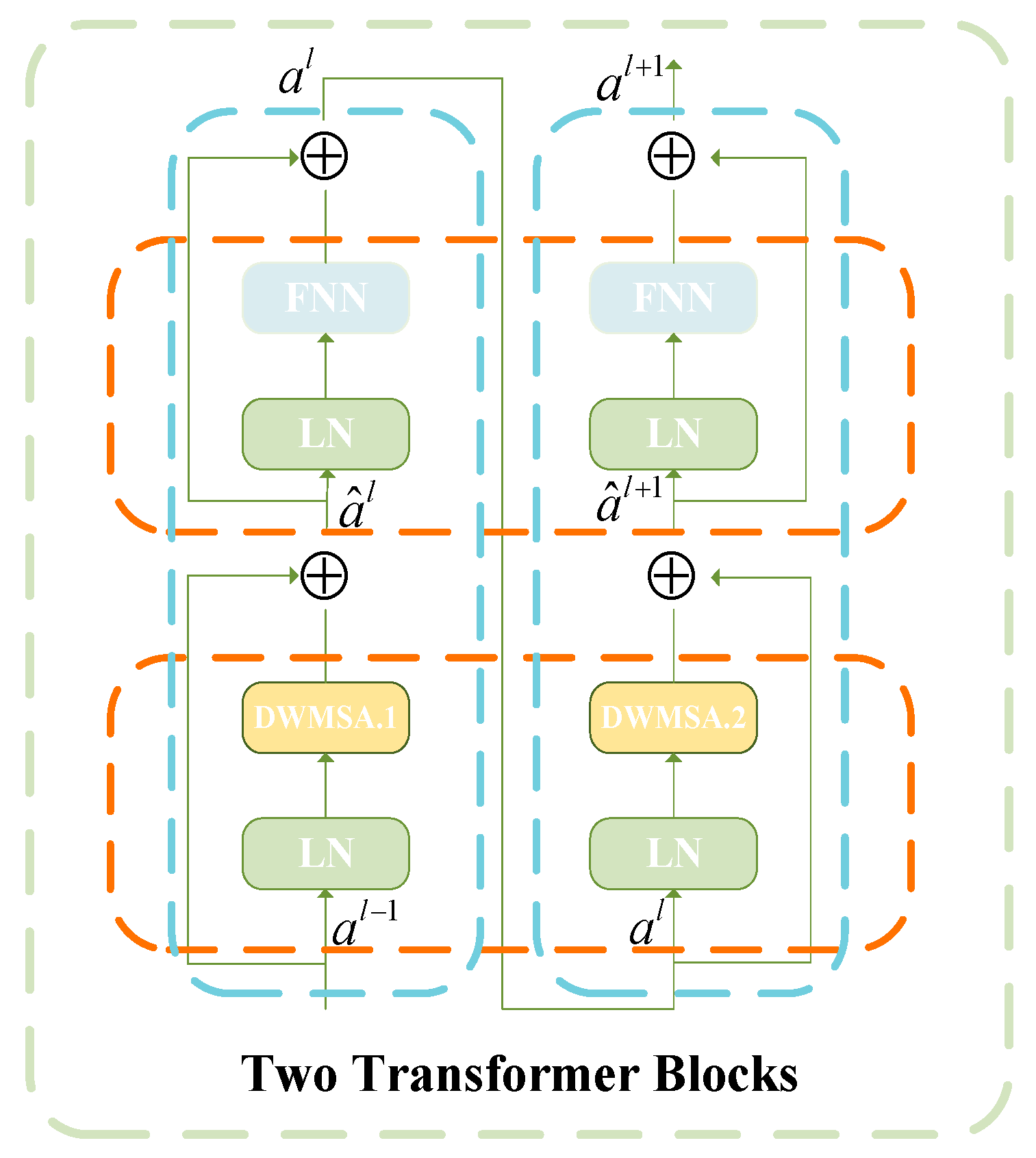

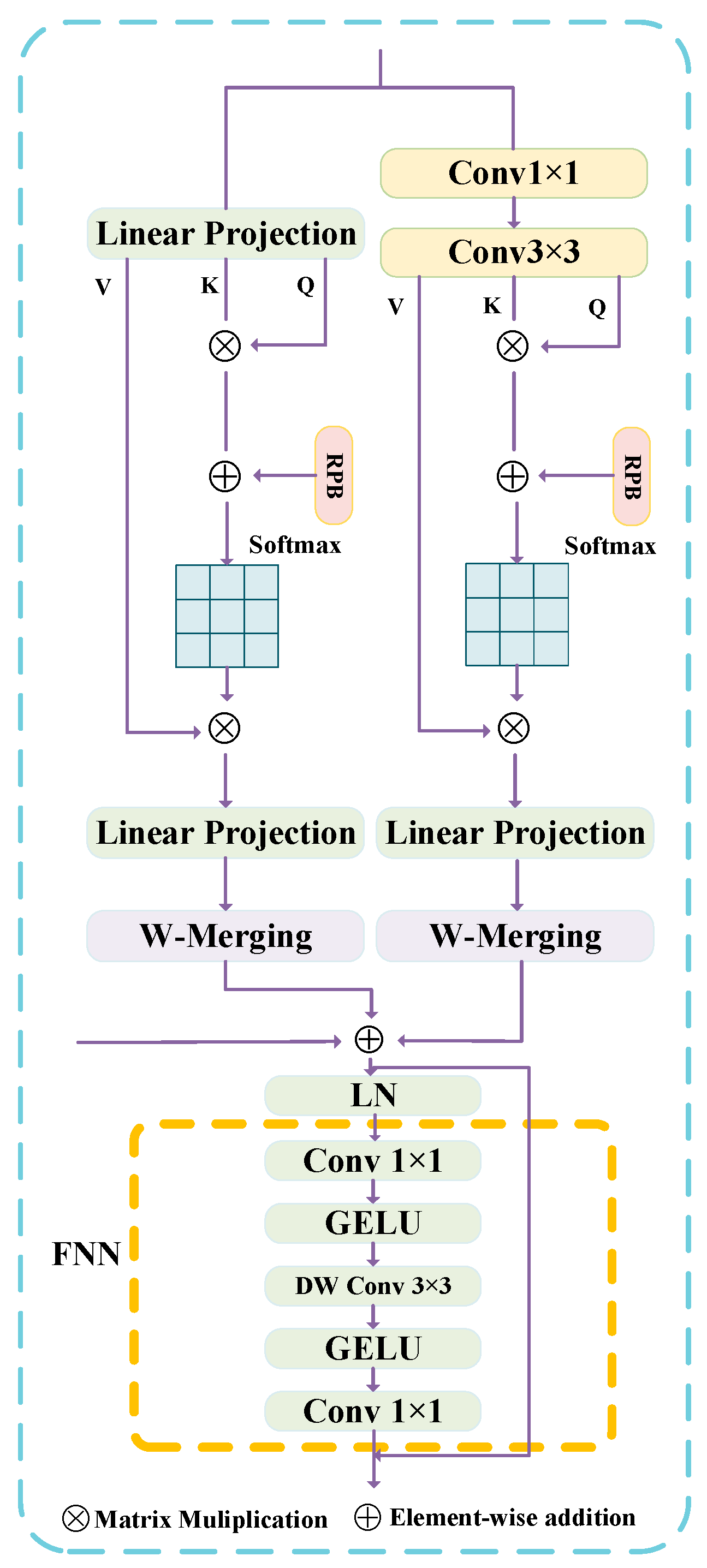

2.1.1. Generator

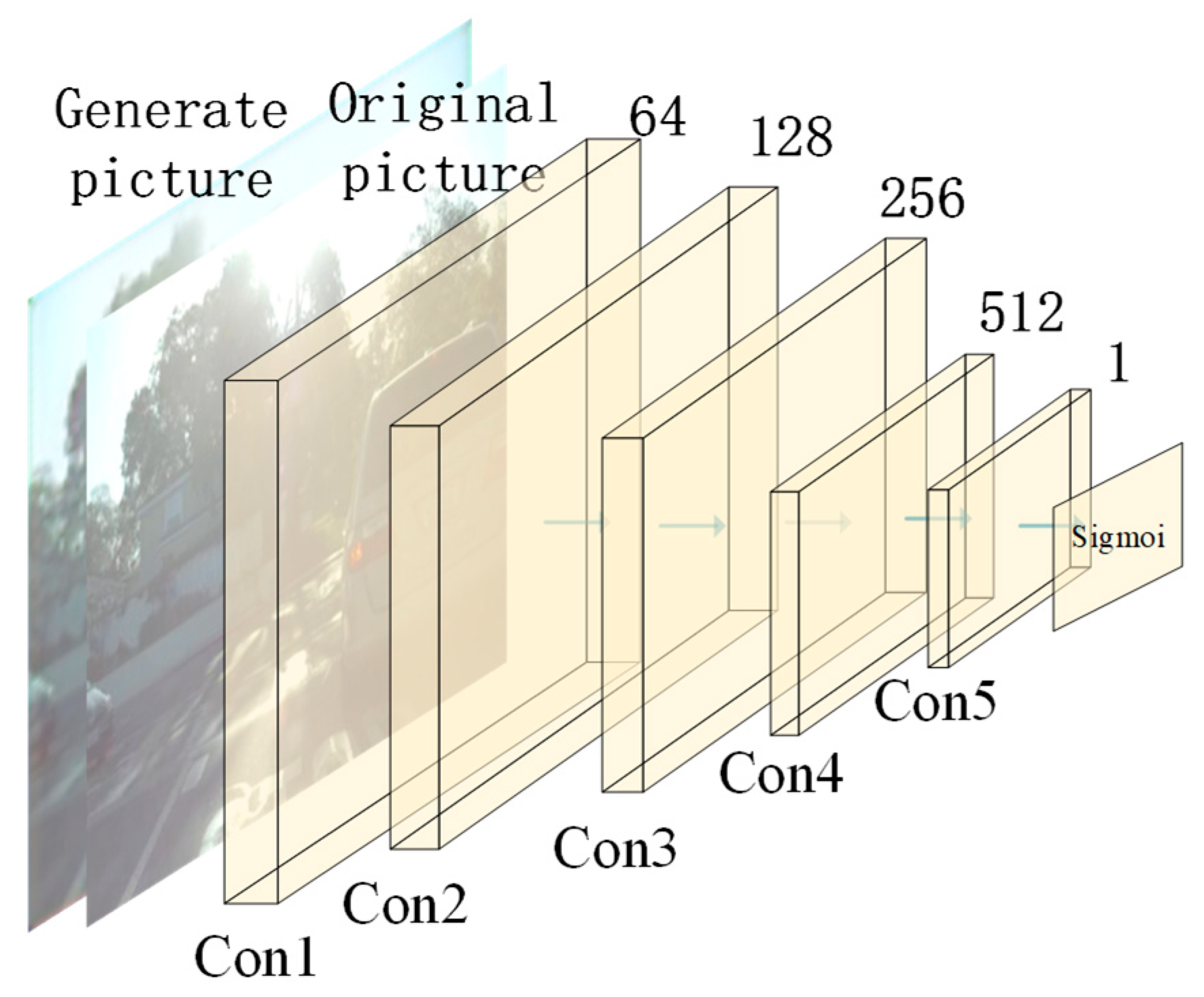

2.1.2. Discriminator

2.2. Loss Function

3. Experimental Results and Analysis

3.1. Experimental Setup

3.2. Datasets

- Weak thermal anomalies (analogous to industrial hot spots);

- Subtle linear and textured structures (analogous to surface scratches and cracks).

- MSRS dataset [25,26,27]: The MSRS dataset contains 1444 pairs of high-quality infrared and visible images that have been physically aligned and grouped, covering both dim nighttime and bright daytime scenes; it served as our training set. It focuses mainly on road and remote sensing scenes, and its infrared images frequently exhibit two defects: blurred thermal targets and background thermal interference. For example, in some images the temperature difference between a pedestrian or vehicle and the environment is small, so the target's outline is unclear in the infrared channel; at the same time, thermally reflective regions that are widespread in the scenes (such as hot ground and building glass) introduce considerable interference noise. These defects make the dataset a suitable benchmark for checking whether our algorithm can enhance weak thermal targets and suppress background thermal noise.

- RoadScene dataset [28]: Used as the validation set, it includes 221 registered infrared and visible image pairs with rich scene content, including pedestrians, vehicles, and roads. Most pairs depict urban streets, and the visible images often show artifacts and blurring caused by uneven illumination, weather changes, and object motion, which are typical defects of visible-light images. With this dataset, we can evaluate whether our fusion method uses infrared information to compensate for these defects while preserving clear visible-light texture, yielding more detailed and clearer fusion results.

- TNO dataset [29]: Used as an independent test set for generalization analysis, it comprises a variety of military and surveillance scenarios, including personnel, vehicles, and equipment in diverse environments. The image pairs in this dataset are characterized by significant spectral differences and challenging conditions, such as low contrast and complex backgrounds. These scenarios are fundamentally distinct from the road-centric views of MSRS and RoadScene, presenting a rigorous benchmark for testing cross-domain robustness. This dataset allows us to verify whether our fusion method maintains its performance advantages when confronted with entirely unfamiliar scene distributions, thereby validating the generalizability of the learned fusion strategy.
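The roles of the three datasets described above can be summarized as a small configuration. This is a minimal sketch only: `DatasetSpec`, `DATASETS`, and `by_role` are hypothetical names introduced for illustration, and the pair count for TNO is left unset because the text does not state it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DatasetSpec:
    """Hypothetical record describing how one dataset is used."""
    name: str
    role: str                 # "train", "validation", or "test"
    num_pairs: Optional[int]  # None where the text gives no count


# Roles and counts as stated in the dataset descriptions above.
DATASETS = (
    DatasetSpec("MSRS", "train", 1444),
    DatasetSpec("RoadScene", "validation", 221),
    DatasetSpec("TNO", "test", None),  # pair count not stated in the text
)


def by_role(role: str) -> List[DatasetSpec]:
    """Return every dataset assigned to the given role."""
    return [d for d in DATASETS if d.role == role]


if __name__ == "__main__":
    for spec in DATASETS:
        print(spec.name, spec.role, spec.num_pairs)
```

Keeping the split in one place makes it explicit that TNO is never seen during training or validation, which is the premise of the generalization analysis.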

3.3. Parameter Validation

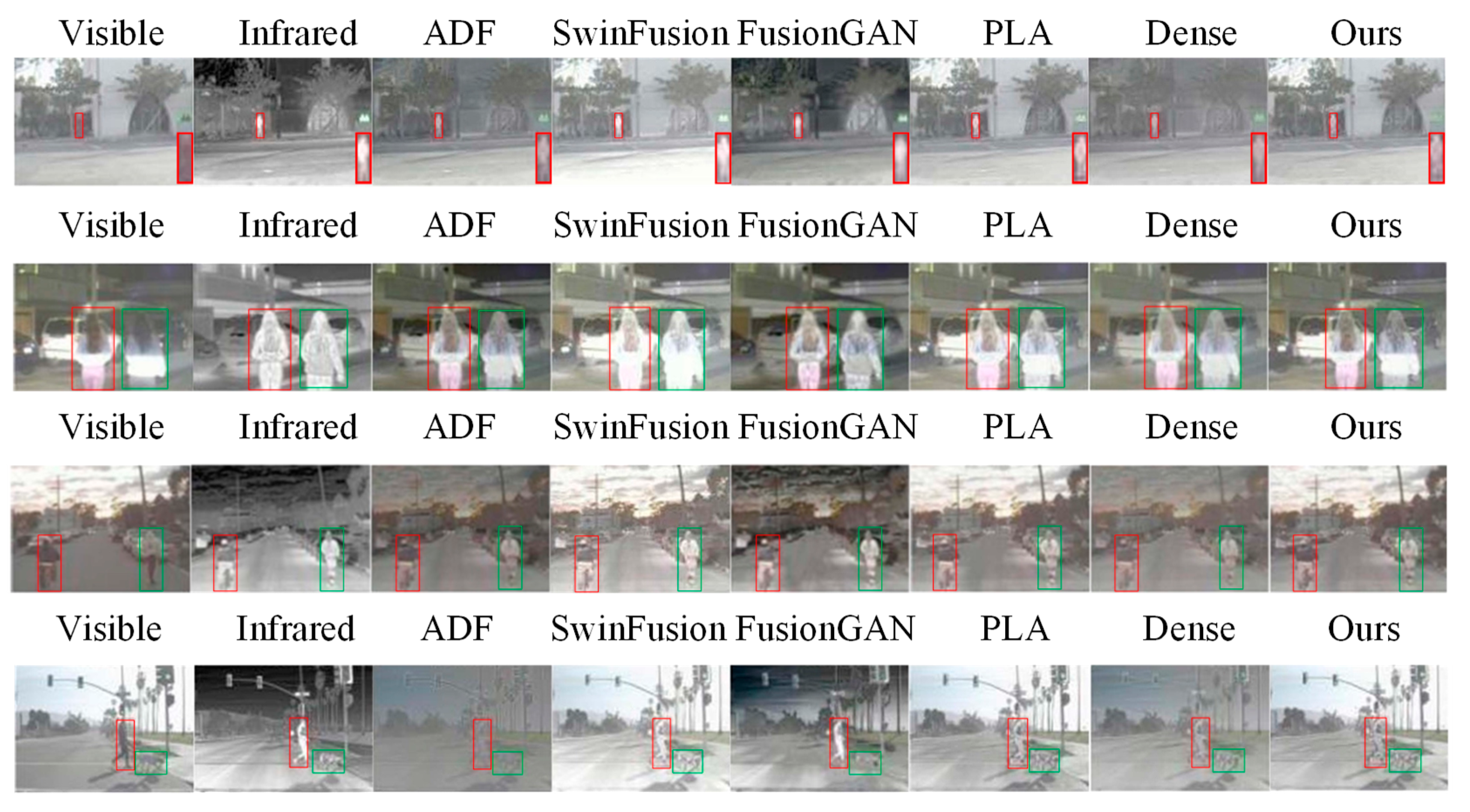

3.4. Comparative Experiment

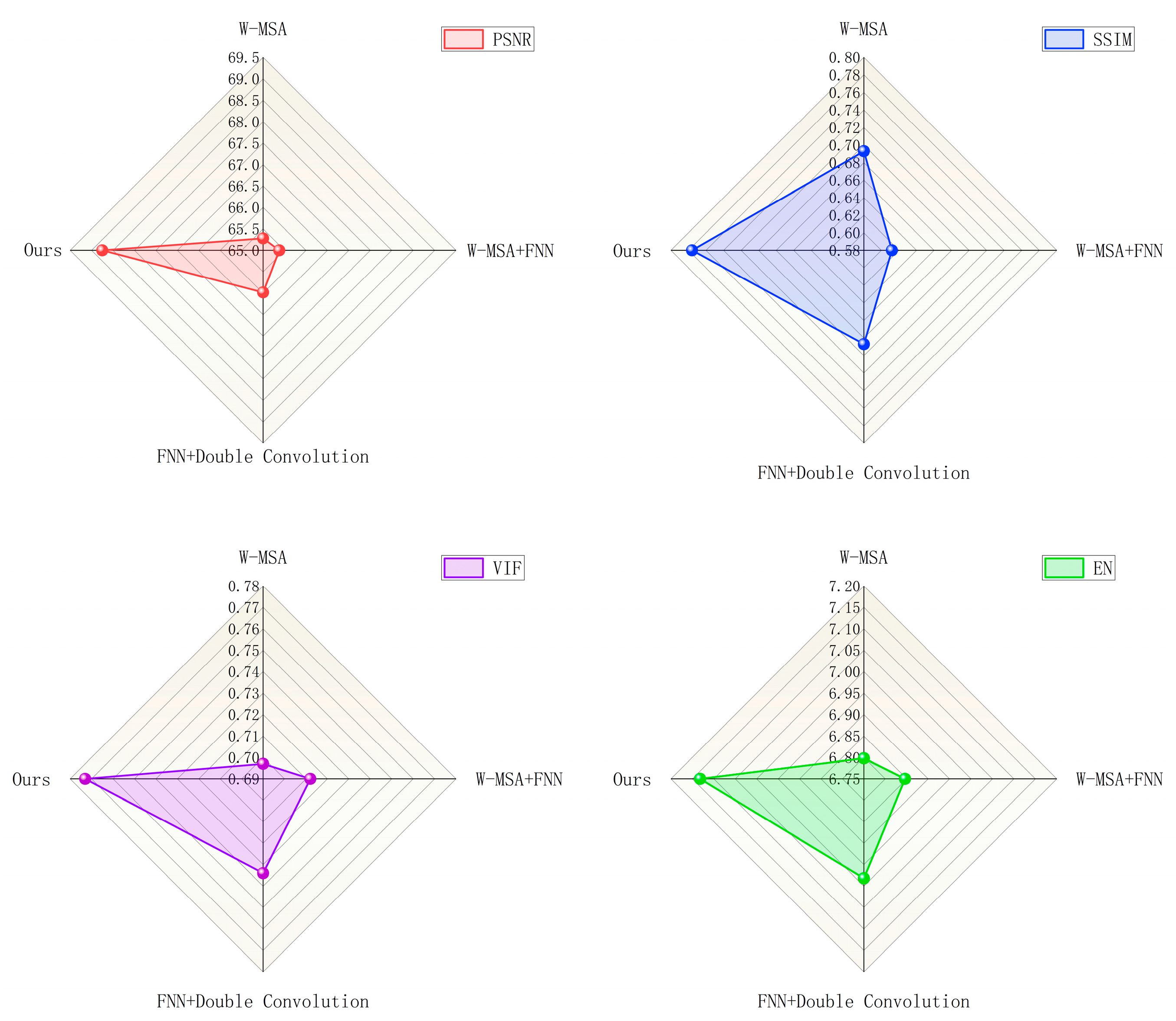

3.5. Ablation Experiments

3.6. Generalization Capability Analysis

- Strong Generalization: The strong performance of STGAN on TNO, whose scenes differ substantially from its training data, indicates that it has learned a generalized fusion strategy that is not over-fitted to the characteristics of the MSRS dataset.

- Consistent Advantage: The model maintains its core advantages (effective preservation of thermal targets and rich texture details) across different data domains. This consistency underlines the robustness of our network architecture and loss design.

4. Discussion

- Cooperate with industrial partners to build such datasets;

- Integrate the fusion algorithm into the complete defect detection pipeline as a preprocessing module to directly evaluate its effect on improving the final detection accuracy.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DWMSA | Dual Window-based Multi-head Self-Attention |
| EN | Entropy |
| FNN | Feedforward Neural Network |
| GAN | Generative Adversarial Network |
| LN | Layer Normalization |
| MLP | Multi-Layer Perceptron |
| MSA | Multi-head Self-Attention |
| PSNR | Peak Signal-to-Noise Ratio |
| ReLU | Rectified Linear Unit |
| SF | Spatial Frequency |
| SSIM | Structural Similarity Index Measurement |
| VIF | Visual Information Fidelity |
| W-MSA | Window-based Multi-head Self-Attention |
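Several of the abbreviations above name image-quality metrics. As a rough illustration, the sketch below implements the common textbook definitions of three of them (EN, SF, PSNR) in plain Python on nested lists. It is not the authors' evaluation code: the function names are hypothetical, `psnr` assumes a single reference image and an 8-bit peak of 255, and the paper's exact normalization and choice of reference may differ. SSIM, MI, and VIF are omitted because they need considerably more machinery.

```python
import math
from collections import Counter


def entropy(img):
    """EN: Shannon entropy (bits) of the gray-level histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2), from row-wise and column-wise first differences."""
    h, w = len(img), len(img[0])
    rf2 = sum((img[i][j] - img[i][j - 1]) ** 2
              for i in range(h) for j in range(1, w)) / (h * w)
    cf2 = sum((img[i][j] - img[i - 1][j]) ** 2
              for i in range(1, h) for j in range(w)) / (h * w)
    return math.sqrt(rf2 + cf2)


def psnr(reference, test, peak=255.0):
    """PSNR in dB against one reference image (assumed 8-bit peak)."""
    h, w = len(reference), len(reference[0])
    mse = sum((reference[i][j] - test[i][j]) ** 2
              for i in range(h) for j in range(w)) / (h * w)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    checker = [[0, 255], [255, 0]]  # tiny two-level test pattern
    print(entropy(checker), spatial_frequency(checker))
```

Higher EN and SF are conventionally read as more information content and sharper detail in the fused image, which is how these columns are interpreted in the comparison tables.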

References

- Song, H.; Wang, R. Underwater Image Enhancement Based on Multi-Scale Fusion and Global Stretching of Dual-Model. Mathematics 2021, 9, 595.

- Rashid, M.; Khan, M.A.; Alhaisoni, M.; Wang, S.-H.; Naqvi, S.R.; Rehman, A.; Saba, T. A Sustainable Deep Learning Framework for Object Recognition Using Multi-Layers Deep Features Fusion and Selection. Sustainability 2020, 12, 5037.

- Liu, Z.; Wu, W. Fusion with Infrared Images for an Improved Performance and Perception. In Pattern Recognition, Machine Intelligence and Biometrics; Wang, P.S.P., Ed.; Springer: Berlin/Heidelberg, Germany, 2011.

- Tang, C.; Ling, Y.; Yang, H.; Yang, X.; Lu, Y. Decision-level fusion detection for infrared and visible spectra based on deep learning. Infrared Laser Eng. 2019, 48, 626001.

- Zhao, Y.; Lai, H.; Gao, G. RMFNet: Redetection Multimodal Fusion Network for RGBT Tracking. Appl. Sci. 2023, 13, 5793.

- Kim, S.; Song, W.-J.; Kim, S.-H. Double Weight-Based SAR and Infrared Sensor Fusion for Automatic Ground Target Recognition with Deep Learning. Remote Sens. 2018, 10, 72.

- Lewis, J.J.; O’Callaghan, R.J.; Nikolov, S.G.; Bull, D.R.; Canagarajah, N. Pixel- and region-based image fusion with complex wavelets. Inf. Fusion 2007, 8, 119–130.

- Liu, C.; Du, L.; Liu, R. Infrared and visible image fusion algorithm based on multi-scale transform. In Proceedings of the 2021 5th International Conference on Electronic Information Technology and Computer Engineering (EITCE '21), Xiamen, China, 22–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2022; pp. 432–438.

- Li, L.; Shi, Y.; Lv, M.; Jia, Z.; Liu, M.; Zhao, X.; Zhang, X.; Ma, H. Infrared and Visible Image Fusion via Sparse Representation and Guided Filtering in Laplacian Pyramid Domain. Remote Sens. 2024, 16, 3804.

- AlRegib, G.; Prabhushankar, M. Explanatory Paradigms in Neural Networks: Towards relevant and contextual explanations. IEEE Signal Process. Mag. 2022, 39, 59–72.

- Ramírez, J.; Vargas, H.; Martinez, J.I.; Arguello, H. Subspace-Based Feature Fusion from Hyperspectral and Multispectral Images for Land Cover Classification. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3003–3006.

- Sun, S.; Bao, W.; Qu, K.; Feng, W.; Ma, X.; Zhang, X. Hyperspectral-multispectral image fusion using subspace decomposition and Elastic Net Regularization. Int. J. Remote Sens. 2024, 45, 3962–3991.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Sulthana, N.T.N.; Joseph, S. Infrared and visible image: Enhancement and fusion using adversarial network. AIP Conf. Proc. 2024, 3037, 020030.

- Yue, J.; Fang, L.; Xia, S.; Deng, Y.; Ma, J. Dif-Fusion: Toward High Color Fidelity in Infrared and Visible Image Fusion With Diffusion Models. IEEE Trans. Image Process. 2023, 32, 5705–5720.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Yin, L.; Zhao, W. Graph attention-based U-net conditional generative adversarial networks for the identification of synchronous generation unit parameters. Eng. Appl. Artif. Intell. 2023, 126, 106896.

- Li, K.; Wang, Y.; Zhang, J.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. Unifying Convolution and Self-Attention for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12581–12600.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

- Xiao, Z.; Zhang, D.; Chen, X.; Li, D. SCAGAN: Wireless Capsule Endoscopy Lesion Image Generation Model Based on GAN. Electronics 2025, 14, 428.

- Lv, J.; Wang, C.; Yang, G. PIC-GAN: A parallel imaging coupled generative adversarial network for accelerated multi-channel MRI reconstruction. Diagnostics 2021, 11, 61.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

- Mei, Z. Minimizing the Average Packet Access Time of the Application Layer for Buffered Instantly Decodable Network Coding. IEEE Trans. Parallel Distrib. Syst. 2023, 34, 1035–1046.

- Tang, L.F.; Li, C.Y.; Ma, J.Y. Mask-DiFuser: A masked diffusion model for unified unsupervised image fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2025; early access.

- Tang, L.F.; Yan, Q.L.; Xiang, X.Y.; Fang, L.Y.; Ma, J.Y. C2RF: Bridging multi-modal image registration and fusion via commonality mining and contrastive learning. Int. J. Comput. Vis. 2025, 133, 5262–5280.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via Swin Transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Xu, H.; Ma, J.; Le, Z.; Jiang, J.; Guo, X. FusionDN: A Unified Densely Connected Network for Image Fusion. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; pp. 12484–12491.

- Toet, A. The TNO Multiband Image Data Collection. Data Brief 2017, 15, 249–251.

| Category | Component | Specification/Version |
|---|---|---|
| Hardware | CPU | Intel Core i9-13900K |
| Hardware | GPU | 1 × NVIDIA GeForce RTX 3090 (24 GB) & 1 × NVIDIA GeForce RTX 4090 |
| Hardware | RAM | 128 GB DDR5 |
| Hardware | Storage | 1 TB SSD |
| Software | Operating System (OS) | Microsoft Windows 11 |
| Software | CUDA | CUDA 11.8 |
| Software | cuDNN | cuDNN 8.2.1 |
| Software | Programming Language | Python 3.11 |
| Software | Deep Learning Framework | PyTorch 1.13.1 |
| Software | IDE | PyCharm 2023.1.4 |

| Parameter Setting | λ1 | λ2 | λ3 | λ4 | PSNR/dB | SSIM | MI | VIF |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.1 | 0.2 | 0.1 | 0.6 | 69.92 | 0.851 | 2.859 | 0.586 |
| 2 | 0.1 | 0.2 | 0.2 | 0.5 | 69.89 | 0.855 | 2.796 | 0.586 |
| 3 | 0.1 | 0.2 | 0.3 | 0.4 | 62.02 | 0.849 | 2.841 | 0.582 |
| 4 | 0.1 | 0.2 | 0.4 | 0.3 | 69.90 | 0.859 | 2.903 | 0.583 |
| 5 | 0.1 | 0.2 | 0.5 | 0.2 | 69.92 | 0.856 | 2.844 | 0.585 |
| 6 | 0.1 | 0.2 | 0.6 | 0.1 | 61.02 | 0.858 | 2.843 | 0.583 |
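One way to read the parameter-validation table is to ask which setting leads on each metric. The sketch below transcribes the six rows and reports the leading setting(s) per metric, treating all four metrics as higher-is-better. The names `SETTINGS`, `best_settings`, and `weights_sum_to_one` are illustrative and not from the paper, and the paper's own selection criterion is not restated here.

```python
# Rows transcribed from the table above:
# setting -> (lambda1, lambda2, lambda3, lambda4, PSNR/dB, SSIM, MI, VIF)
SETTINGS = {
    1: (0.1, 0.2, 0.1, 0.6, 69.92, 0.851, 2.859, 0.586),
    2: (0.1, 0.2, 0.2, 0.5, 69.89, 0.855, 2.796, 0.586),
    3: (0.1, 0.2, 0.3, 0.4, 62.02, 0.849, 2.841, 0.582),
    4: (0.1, 0.2, 0.4, 0.3, 69.90, 0.859, 2.903, 0.583),
    5: (0.1, 0.2, 0.5, 0.2, 69.92, 0.856, 2.844, 0.585),
    6: (0.1, 0.2, 0.6, 0.1, 61.02, 0.858, 2.843, 0.583),
}
METRICS = ("PSNR", "SSIM", "MI", "VIF")  # assumed higher-is-better


def best_settings(metric):
    """Return every setting that attains the maximum of the given metric."""
    col = 4 + METRICS.index(metric)  # metric columns follow the four weights
    top = max(row[col] for row in SETTINGS.values())
    return [k for k, row in SETTINGS.items() if row[col] == top]


def weights_sum_to_one(setting):
    """Check that the four loss weights of a setting sum to 1."""
    return abs(sum(SETTINGS[setting][:4]) - 1.0) < 1e-9


if __name__ == "__main__":
    for metric in METRICS:
        print(metric, best_settings(metric))
```

On these numbers, setting 4 leads on SSIM and MI and trails the best PSNR by 0.02 dB, while settings 1 and 5 tie for the highest PSNR. In every row the four weights sum to 1, with λ1 and λ2 held fixed while λ3 and λ4 trade off.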

| Fusion Methods | PSNR/dB | SSIM | SF | FMI | MI | VIF | EN |
|---|---|---|---|---|---|---|---|
| ADF | 67.928 | 0.627 | 4.971 | 0.943 | 2.748 | 0.695 | 7.011 |
| SwinFusion | 68.322 | 0.771 | 5.369 | 0.943 | 3.265 | 0.771 | 7.078 |
| FusionGAN | 67.351 | 0.726 | 3.398 | 0.937 | 2.755 | 0.578 | 7.034 |
| PIA Fusion | 68.496 | 0.796 | 6.167 | 0.945 | 3.214 | 0.762 | 7.125 |
| Dense Fuse | 67.349 | 0.631 | 3.401 | 0.939 | 2.922 | 0.654 | 6.823 |
| STGAN | 68.752 | 0.776 | 5.689 | 0.945 | 3.375 | 0.773 | 7.132 |
| Average | 68.033 | 0.721 | 4.833 | 0.942 | 3.047 | 0.706 | 7.034 |
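To see where each method stands in the comparison table above, the rows can be ranked per metric. The following sketch transcribes the six method rows (the Average row is left out) and assumes that all seven metrics are higher-is-better; `SCORES`, `leaders`, and `rank_of` are hypothetical helper names, and ties share a rank.

```python
METRICS = ("PSNR", "SSIM", "SF", "FMI", "MI", "VIF", "EN")

# Method rows transcribed from the table above (Average row excluded).
SCORES = {
    "ADF":        (67.928, 0.627, 4.971, 0.943, 2.748, 0.695, 7.011),
    "SwinFusion": (68.322, 0.771, 5.369, 0.943, 3.265, 0.771, 7.078),
    "FusionGAN":  (67.351, 0.726, 3.398, 0.937, 2.755, 0.578, 7.034),
    "PIA Fusion": (68.496, 0.796, 6.167, 0.945, 3.214, 0.762, 7.125),
    "Dense Fuse": (67.349, 0.631, 3.401, 0.939, 2.922, 0.654, 6.823),
    "STGAN":      (68.752, 0.776, 5.689, 0.945, 3.375, 0.773, 7.132),
}


def leaders(metric):
    """Methods attaining the highest value of a metric (ties included)."""
    col = METRICS.index(metric)
    top = max(row[col] for row in SCORES.values())
    return [name for name, row in SCORES.items() if row[col] == top]


def rank_of(method, metric):
    """1-based rank of a method on a metric; tied methods share a rank."""
    col = METRICS.index(metric)
    value = SCORES[method][col]
    return 1 + sum(1 for row in SCORES.values() if row[col] > value)


if __name__ == "__main__":
    for metric in METRICS:
        print(metric, leaders(metric), "STGAN rank:", rank_of("STGAN", metric))
```

Read this way, STGAN leads on PSNR, MI, VIF, and EN and ties PIA Fusion on FMI, while PIA Fusion leads on SSIM and SF, with STGAN second on both.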

| Model | Modules enabled (of W-MSA, FNN, Double Convolution) | PSNR/dB | SSIM | VIF | EN |
|---|---|---|---|---|---|
| 1 | 1 of 3 | 65.278 | 0.693 | 0.697 | 6.798 |
| 2 | 2 of 3 | 65.376 | 0.612 | 0.712 | 6.846 |
| 3 | 2 of 3 | 65.984 | 0.687 | 0.734 | 6.982 |
| Ours | 3 of 3 | 68.752 | 0.776 | 0.773 | 7.132 |

| Fusion Methods | PSNR/dB | SSIM | SF | FMI | MI | VIF | EN |
|---|---|---|---|---|---|---|---|
| ADF | 65.632 | 0.725 | 9.451 | 0.843 | 1.528 | 0.315 | 6.321 |
| DLF | 65.987 | 0.738 | 10.124 | 0.851 | 1.678 | 0.335 | 6.458 |
| FusionGAN | 64.895 | 0.696 | 8.923 | 0.829 | 1.486 | 0.299 | 6.194 |
| PIA Fusion | 66.254 | 0.761 | 10.567 | 0.865 | 1.724 | 0.353 | 6.587 |
| Dense Fuse | 65.123 | 0.618 | 7.124 | 0.835 | 1.617 | 0.321 | 6.277 |
| STGAN | 66.147 | 0.752 | 10.589 | 0.865 | 1.813 | 0.369 | 6.629 |
| Average | 65.673 | 0.715 | 9.463 | 0.848 | 1.641 | 0.332 | 6.411 |
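The Average row of the table above can be checked against the six method rows, and STGAN's margin over that average can be expressed as a percentage. This is a sketch for reading the table, not part of the authors' evaluation: `ROWS`, `column_means`, `max_average_deviation`, and `margin_over_average` are hypothetical names.

```python
from statistics import mean

METRICS = ("PSNR", "SSIM", "SF", "FMI", "MI", "VIF", "EN")

# Method rows and the reported Average row, transcribed from the table above.
ROWS = {
    "ADF":        (65.632, 0.725, 9.451, 0.843, 1.528, 0.315, 6.321),
    "DLF":        (65.987, 0.738, 10.124, 0.851, 1.678, 0.335, 6.458),
    "FusionGAN":  (64.895, 0.696, 8.923, 0.829, 1.486, 0.299, 6.194),
    "PIA Fusion": (66.254, 0.761, 10.567, 0.865, 1.724, 0.353, 6.587),
    "Dense Fuse": (65.123, 0.618, 7.124, 0.835, 1.617, 0.321, 6.277),
    "STGAN":      (66.147, 0.752, 10.589, 0.865, 1.813, 0.369, 6.629),
}
REPORTED_AVERAGE = (65.673, 0.715, 9.463, 0.848, 1.641, 0.332, 6.411)


def column_means():
    """Mean of each metric column over the six methods."""
    return tuple(mean(col) for col in zip(*ROWS.values()))


def max_average_deviation():
    """Largest absolute gap between recomputed and reported averages."""
    return max(abs(a - b) for a, b in zip(column_means(), REPORTED_AVERAGE))


def margin_over_average(method):
    """Per-metric margin of a method over the column mean, in percent."""
    means = column_means()
    return {m: 100.0 * (v - avg) / avg
            for m, v, avg in zip(METRICS, ROWS[method], means)}


if __name__ == "__main__":
    print("max deviation from reported averages:", max_average_deviation())
    for metric, pct in margin_over_average("STGAN").items():
        print(f"{metric}: {pct:+.2f}%")
```

The recomputed column means agree with the reported Average row to within rounding, and STGAN sits above the average on all seven metrics, although PIA Fusion scores higher on PSNR and SSIM in this table.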


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gong, L.; Han, Y.; Li, R. STGAN: A Fusion of Infrared and Visible Images. Electronics 2025, 14, 4219. https://doi.org/10.3390/electronics14214219
