A Lightweight, Explainable Spam Detection System with Rüppell’s Fox Optimizer for the Social Media Network X

Abstract

1. Introduction

- An innovative XAI-powered machine learning model that significantly improves classification accuracy in X spam account detection is proposed.

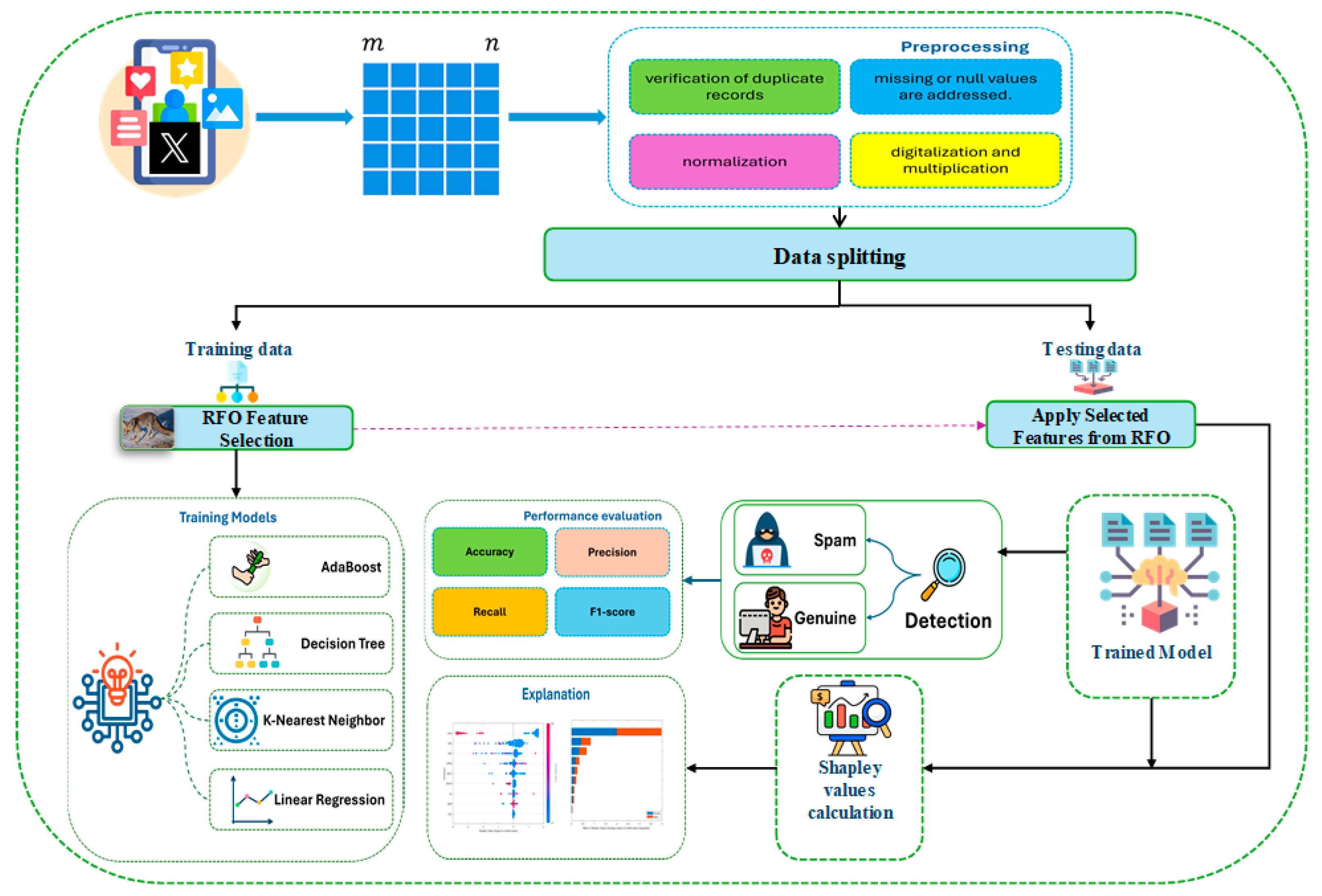

- A feature selection method based on Rüppell’s Fox Optimizer (RFO), a swarm-based, nature-inspired meta-heuristic, is proposed; to the best of our knowledge, this is the first application of RFO to cybersecurity and to lightweight detection systems.

- The proposed solution is experimentally evaluated on a real-world X dataset. The developed model substantially improves precision, recall, accuracy, F1 score, and the area under the curve (AUC), and its behavior is further examined through confusion matrices.

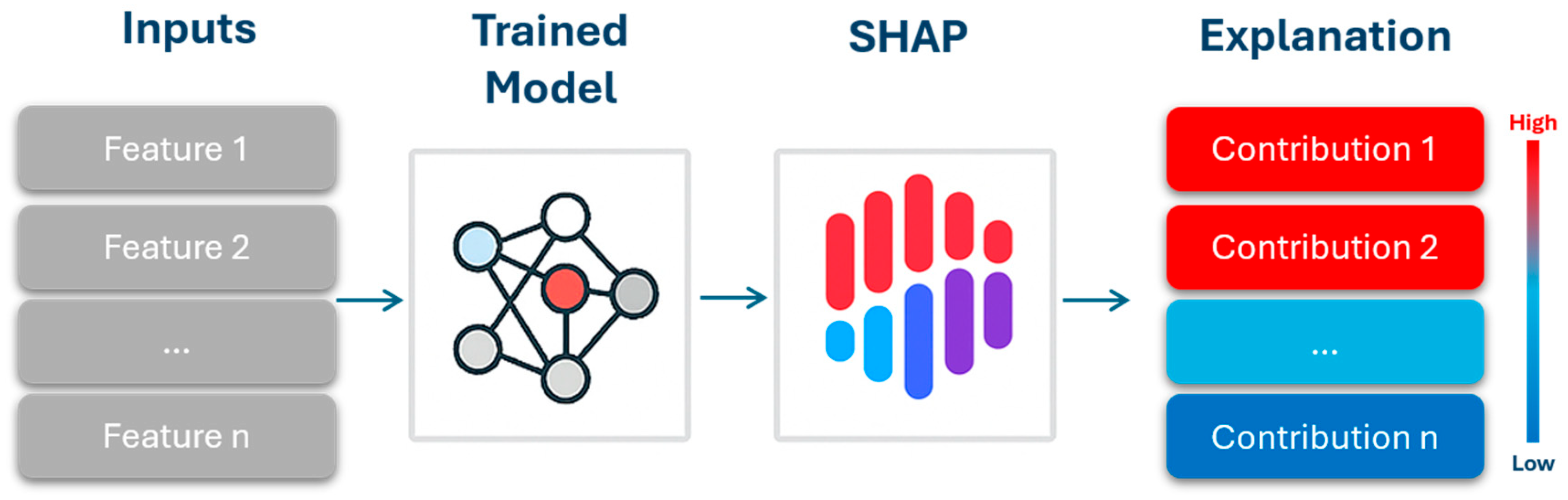

- The predictions of the ML-driven spam detection model are interpreted by computing Shapley values with the SHAP methodology.

2. Background

2.1. Explainable Artificial Intelligence

2.2. Machine Learning Algorithms

3. Literature Review

4. Methodology

4.1. Dataset Description

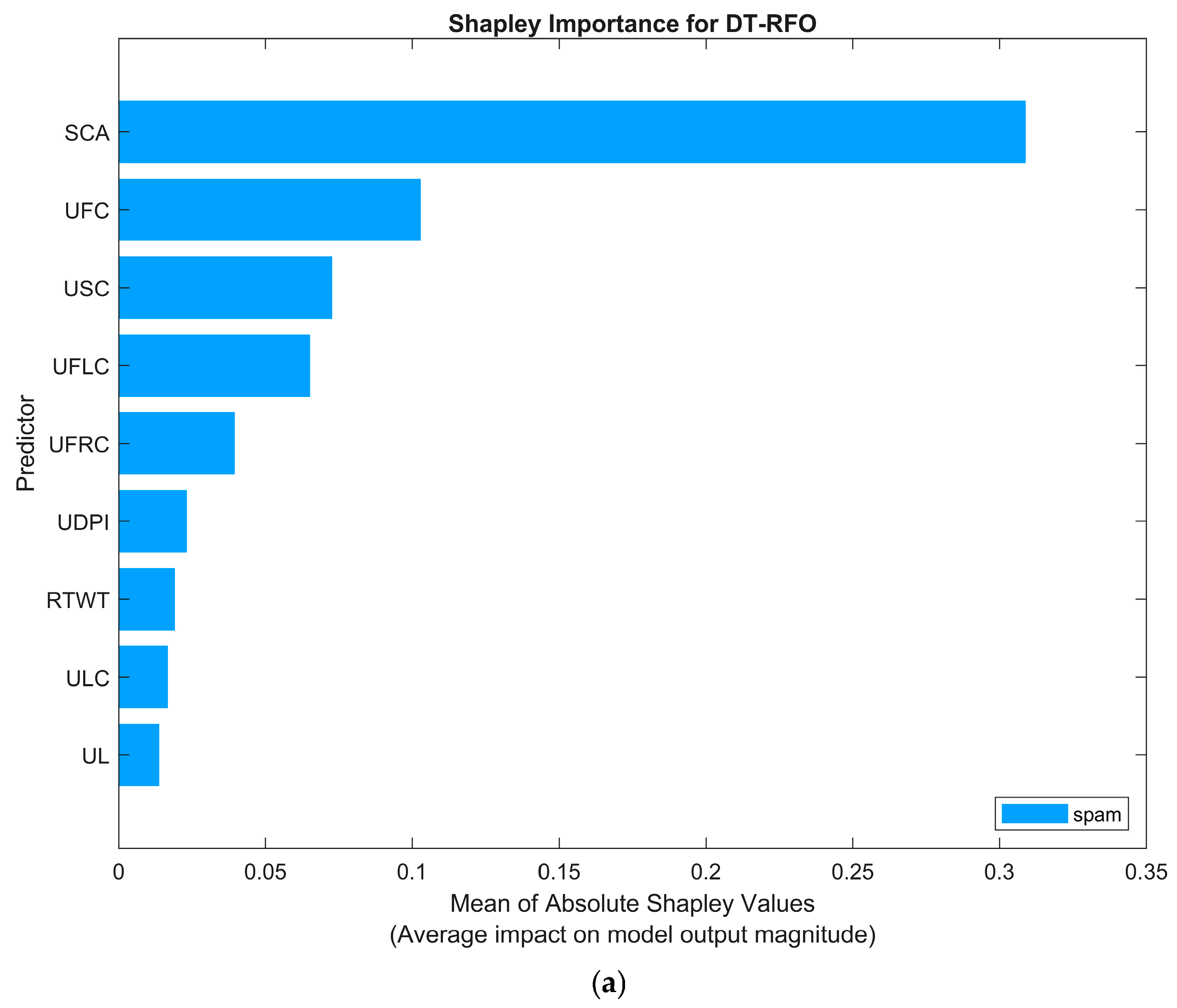

- User Profile Features: Several attributes measure account activity. The user statuses count (USC) is the number of tweets, including retweets, that the user has posted, reflecting the account’s activity on the platform. The user followers count (UFLC) is the number of accounts that follow the user, whereas the user friends count (UFRC) is the number of accounts the user follows (its “followings”). The user favorites count (UFC) is the number of tweets the user has liked (favorited) over the account’s lifetime. Finally, the user listed count (ULC) is the number of public lists of which the user is a member, a measure of the account’s prominence among other users. Together, these indicators capture an account’s engagement and footprint on the platform, which allows such activity patterns to be analyzed within the dataset.

- Tweet and Profile Indicators: The dataset also includes binary (yes/no) attributes that describe tweet characteristics and profile settings. Sensitive Content Alert (SCA) is a Boolean value indicating that the tweet’s content or the user’s textual profile fields contain sensitive material; this may signal spam, as spam tweets often contain such content. Source-in-X (SITW) is derived from the tweet’s source field, an HTML string naming the utility used to publish the tweet, and records whether the tweet was posted through an official X client (YES) or a third-party source (NO). For instance, tweets posted from the X website have the source “web”, whereas spammers may rely on specific applications. User location (UL) is a categorical field indicating whether the user has provided a location in the account’s profile: YES if a location is given and NO if the field is empty. The location text itself is not used because it is free-form and difficult to parse; this binary feature records only the presence of a profile location, which legitimate users commonly provide. User geo-enabled (UGE) indicates whether the user has enabled geotagging (TRUE means that the account allows geographic coordinates to be attached to its tweets). The user default profile image (UDPI) is a Boolean variable that is TRUE when the user still has the default X profile picture, i.e., has not uploaded a profile image. Suspicious accounts typically keep the default profile image and provide minimal personal information [42], so this feature may indicate a potential threat. Finally, the ReTweet (RTWT) Boolean value indicates whether the tweet has been retweeted by the authenticating user.

- Class Attribute: This attribute labels an account as spam if TRUE and as legitimate if FALSE.
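Before training, the mixed integer, Boolean, and Yes/No attributes above have to become numbers. The following is a minimal sketch, assuming each raw record arrives as a dictionary of strings keyed by the feature abbreviations and spelled as in the input-feature table (TRUE/FALSE, Yes/No, T/F, Y/N). The keys, the helper names, and the feature order are illustrative assumptions rather than the paper's documented preprocessing; mapping an empty value to 0 follows the UL description, where an unfilled location counts as NO.

```python
from typing import Dict, List

# Input features in the order of the input-feature table (CLASS is the label).
FEATURE_ORDER: List[str] = [
    "USC", "SCA", "UFC", "ULC", "SITW", "UFRC",
    "UFLC", "UL", "UGE", "UDPI", "RTWT",
]
INTEGER_FEATURES = {"USC", "UFC", "ULC", "UFRC", "UFLC"}

# Accepted spellings for binary fields; an empty string counts as NO.
_TRUTHY = {"TRUE", "T", "YES", "Y"}
_FALSY = {"FALSE", "F", "NO", "N", ""}


def encode_flag(value: str) -> int:
    """Map a Boolean or Yes/No field to 1 (true/yes) or 0 (false/no/empty)."""
    token = value.strip().upper()
    if token in _TRUTHY:
        return 1
    if token in _FALSY:
        return 0
    raise ValueError(f"unrecognised flag value: {value!r}")


def encode_account(record: Dict[str, str]) -> List[int]:
    """Turn one raw account record (hypothetical format) into a numeric vector."""
    vector = []
    for name in FEATURE_ORDER:
        raw = record[name]
        # Count features stay integers; every other feature is a 0/1 flag.
        vector.append(int(raw) if name in INTEGER_FEATURES else encode_flag(raw))
    return vector


def encode_label(record: Dict[str, str]) -> int:
    """CLASS: 1 = spam (TRUE), 0 = legitimate (FALSE)."""
    return encode_flag(record["CLASS"])
```

For example, an account with 1520 statuses, no profile location, the default profile image, and RTWT set to T encodes to a vector whose UL entry is 0 and whose UDPI and RTWT entries are 1.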

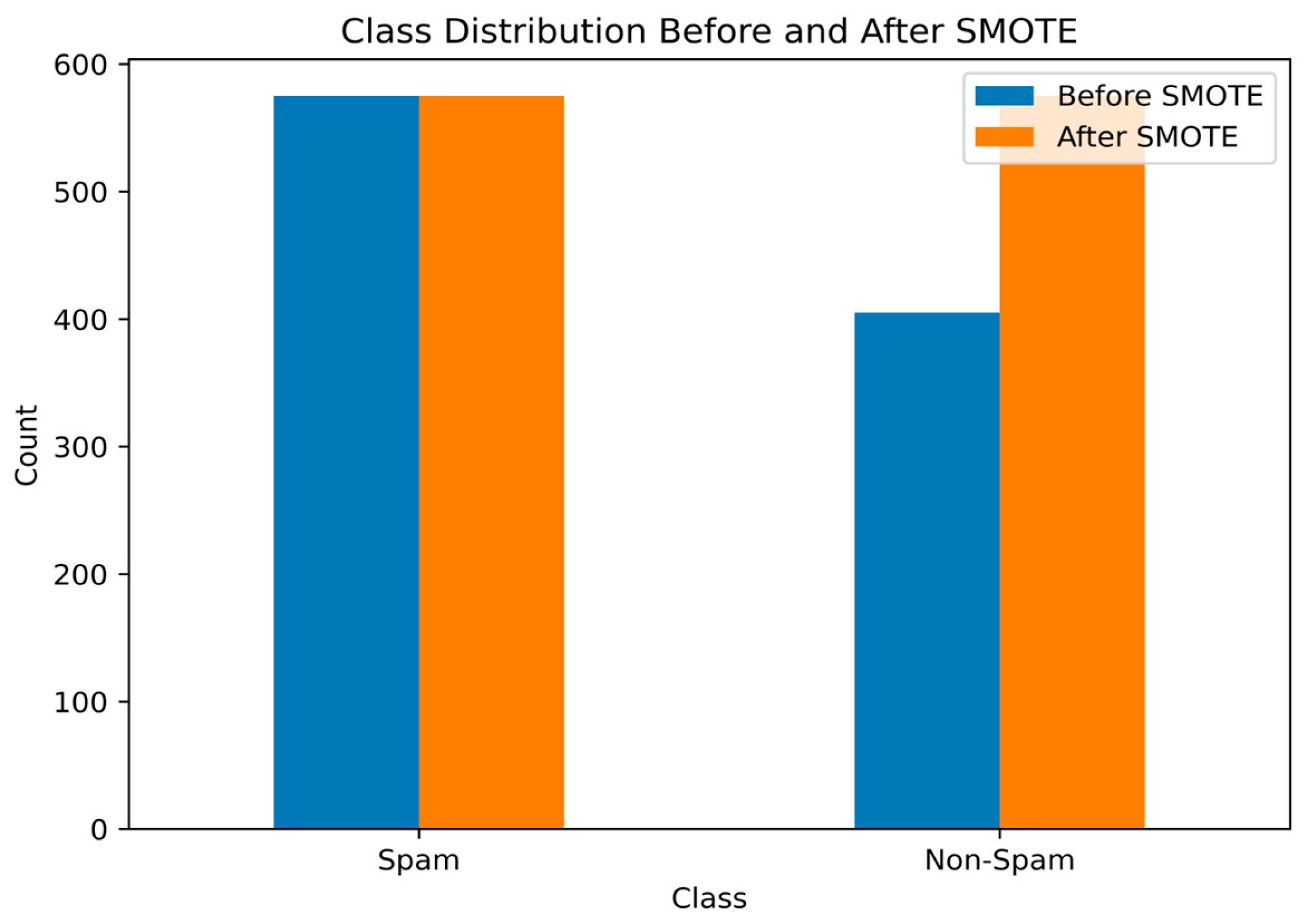

4.2. Data Preprocessing and Balancing

4.3. Feature Selection Approach

4.4. Rüppell’s Fox Optimization

Algorithm 1. Pseudocode of the Rüppell’s Fox Optimizer (RFO).

4.5. Model Training

4.6. Model Evaluation

- True Positive (TP): This represents the number of accounts the model predicted correctly as spam.

- True Negative (TN): This represents the number of accounts the model predicted correctly as non-spam.

- False Positive (FP): This represents the number of non-spam accounts the model predicted as spam.

- False Negative (FN): This represents the number of spam accounts the model predicted as non-spam.

- TPR (Recall): $\mathrm{TPR} = \frac{TP}{TP + FN}$.

- False Positive Rate (FPR): $\mathrm{FPR} = \frac{FP}{FP + TN}$.

- $M_i$: the metric value for class $i$; $C$: the number of classes.

- $TP_i$: true positives for class $i$; $FP_i$: false positives for class $i$.

- $n_i$: the number of samples belonging to class $i$.
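The confusion-matrix definitions above translate directly into code. The following is a minimal sketch, assuming binary labels with spam encoded as 1 (the positive class) and legitimate as 0. The function names are hypothetical, and returning 0.0 for an empty denominator is a convention chosen here.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, TN, FP, FN with spam (1) as the positive class."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == 1 and truth == 1:
            counts["TP"] += 1
        elif pred == 0 and truth == 0:
            counts["TN"] += 1
        elif pred == 1 and truth == 0:
            counts["FP"] += 1      # legitimate account flagged as spam
        else:
            counts["FN"] += 1      # spam account missed
    return counts


def _safe_div(num: float, den: float) -> float:
    # Avoid ZeroDivisionError when a class never occurs or is never predicted.
    return num / den if den else 0.0


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    c = confusion_counts(y_true, y_pred)
    tp, tn, fp, fn = c["TP"], c["TN"], c["FP"], c["FN"]
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)                     # TPR
    return {
        "accuracy": _safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "fpr": _safe_div(fp, fp + tn),
        "f1": _safe_div(2 * precision * recall, precision + recall),
    }
```

With ten accounts where four are spam, three spam accounts caught, one missed, and one legitimate account wrongly flagged, this gives accuracy 0.8, precision and recall 0.75, FPR 1/6, and F1 0.75.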

4.7. Implementation Environment

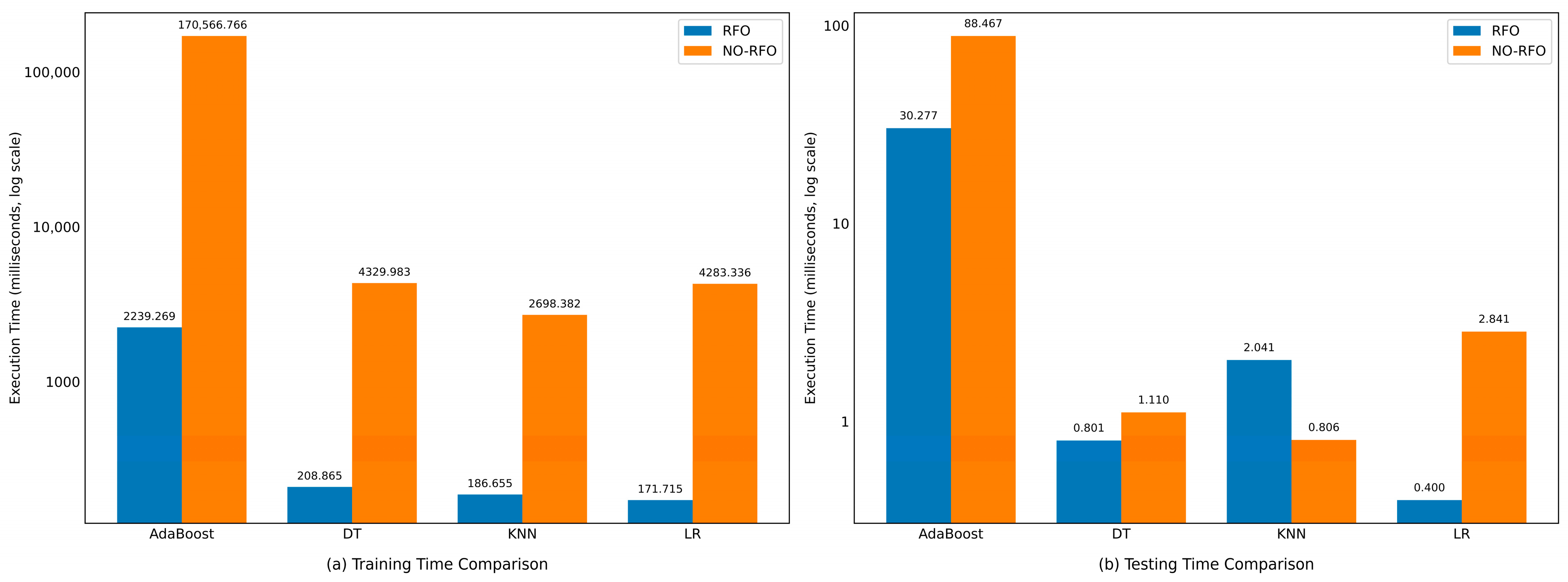

5. Results and Discussion

- KNN: 83.8% accuracy (±0.027), 92.3% recall, 82.7% precision, and an F1 score of 86.9%.

- DT: 83.8% accuracy (±0.021), 96.7% recall, 79.8% precision, and an F1 score of 87.4%.

- LR: 72.9% accuracy (±0.025), 87.8% recall, 72.2% precision, and an F1 score of 79.2%.

- AdaBoost: 72.8% accuracy (±0.030), 84.7% recall, 73.1% precision, and an F1 score of 78.4%.

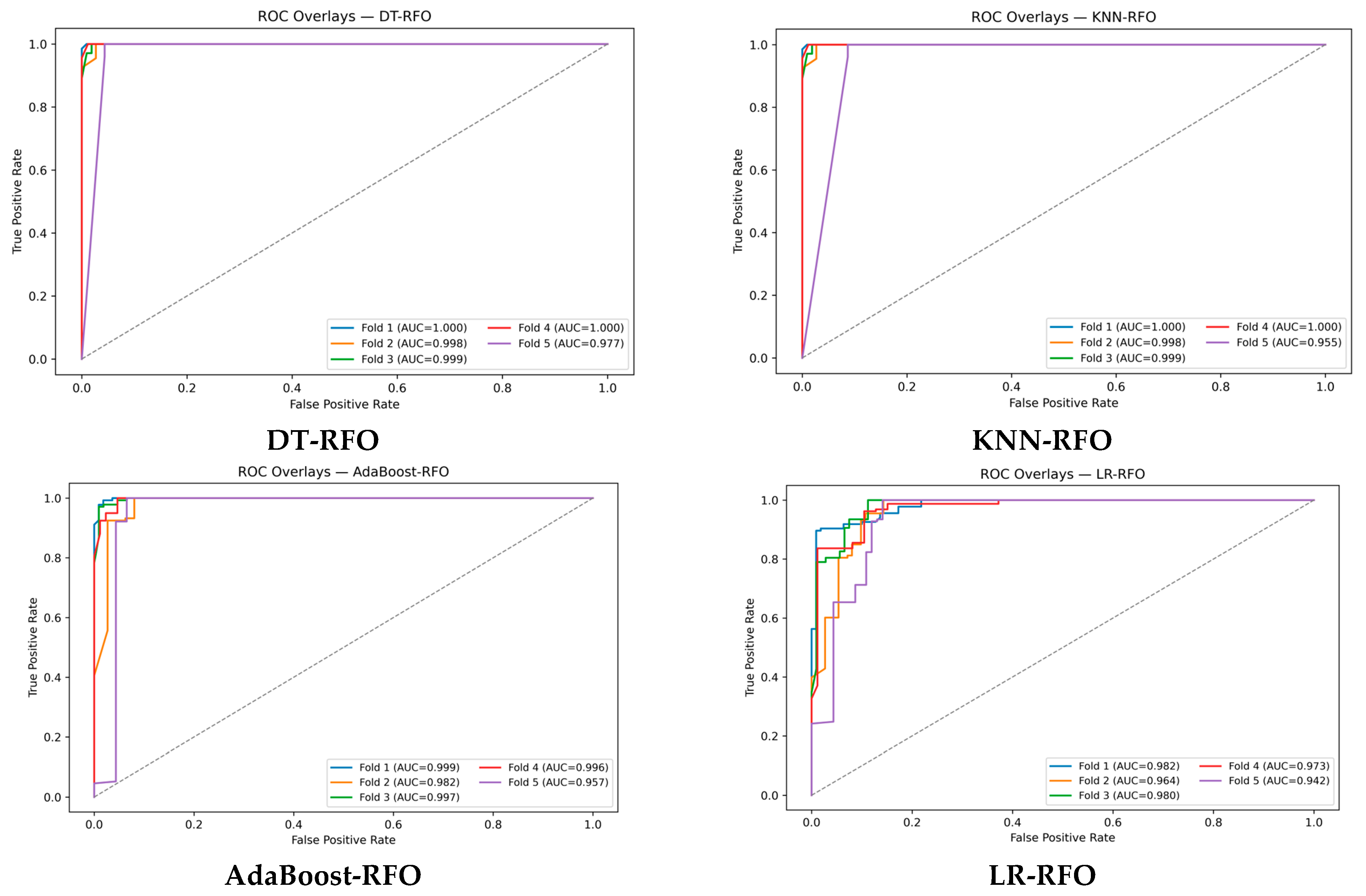

5.1. Evaluation of Performance with the ROC Curve

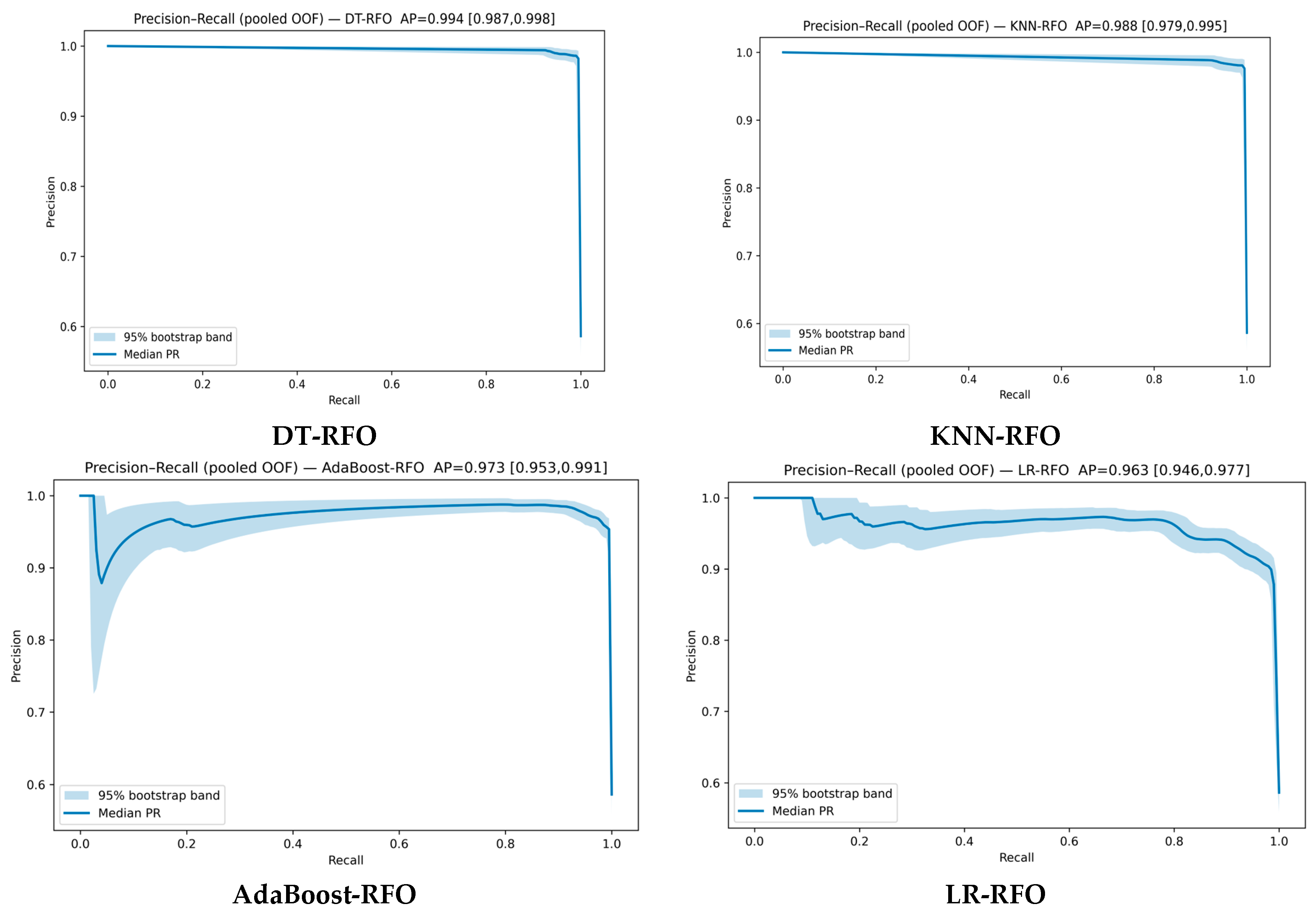
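The AUC values reported for the ROC curves can also be read as a ranking probability: the area under the ROC curve equals the probability that a randomly chosen spam account receives a higher score than a randomly chosen legitimate account, with ties counted as one half (the Mann–Whitney formulation). The following minimal, quadratic-time sketch assumes scores in which larger means more spam-like. The function name is hypothetical, and the sketch is not the paper's evaluation code.

```python
from typing import Sequence


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a spam account > score of a legitimate account)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:               # compare every spam/legitimate pair
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5     # a tie contributes half a win
    return wins / (len(pos) * len(neg))
```

For labels `[1, 1, 0, 0]` and scores `[0.9, 0.4, 0.5, 0.1]`, three of the four spam/legitimate pairs are ordered correctly, so the AUC is 0.75.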

5.2. Evaluation of Performance with Precision–Recall (PR) and Confidence Intervals (CIs)

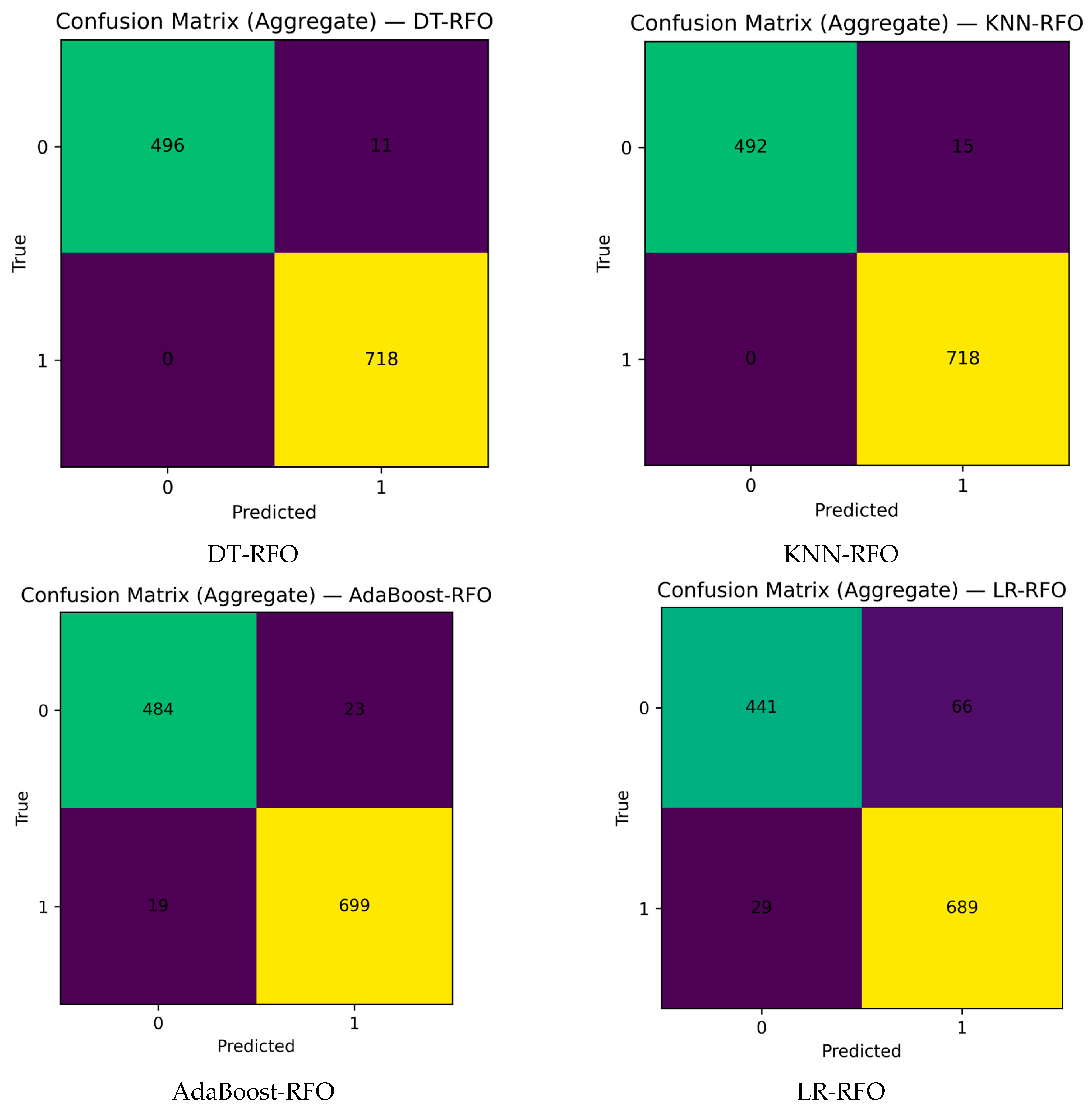

5.3. Performance Evaluation Using a Confusion Matrix

5.4. Explaining Global Model Predictions Using the Shapley Importance Plot

5.5. Explaining Global Model Predictions Using Shapley Summary Plots

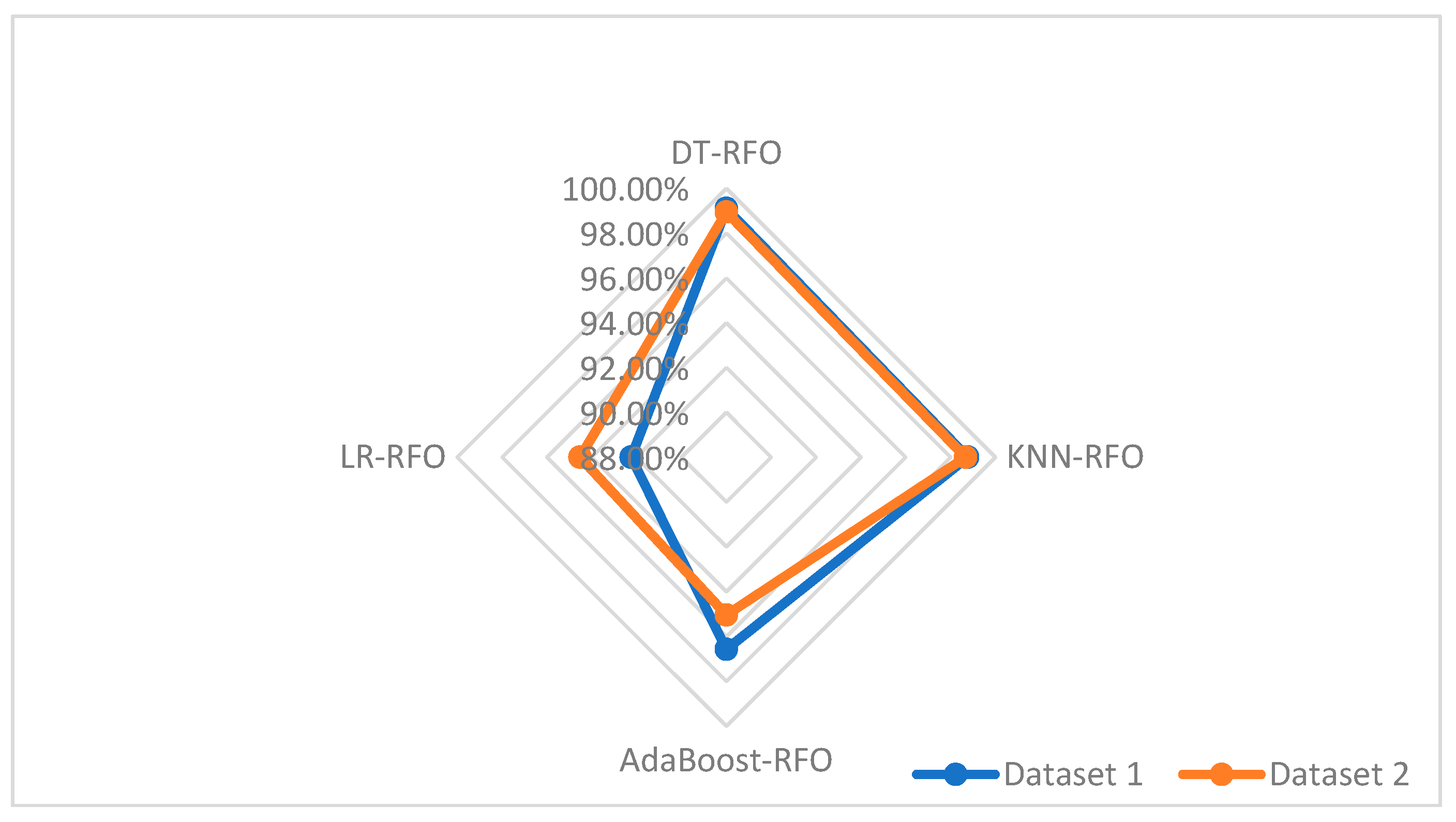
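The Shapley importance and summary plots are built from per-feature Shapley values. For intuition, the following sketch computes exact Shapley values by enumerating every feature coalition. The value of a coalition is defined as the model output when the coalition's features take their values from the explained input and all other features come from a single baseline input. This baseline-replacement value function is an assumption made for illustration. The SHAP library's explainers use background data and much faster algorithms, and this exhaustive loop is only feasible for a handful of features. The function name is hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(
    model: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values of model(x) relative to one baseline input."""
    n = len(x)

    def value(coalition: set) -> float:
        # Features in the coalition come from x, the rest from the baseline.
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi
```

For a linear score $f(x) = b + \sum_i w_i x_i$, each value reduces to $w_i (x_i - \text{baseline}_i)$, and for any model the values sum to $f(x) - f(\text{baseline})$ (the efficiency property that makes the bars of a SHAP plot add up to the prediction shift).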

5.6. Comparison with Another X Spam Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jethava, G.; Rao, U.P. Exploring security and trust mechanisms in online social networks: An extensive review. Comput. Secur. 2024, 140, 103790.

2. Nevado-Catalán, D.; Pastrana, S.; Vallina-Rodriguez, N.; Tapiador, J. An analysis of fake social media engagement services. Comput. Secur. 2023, 124, 103013.

3. de Keulenaar, E.; Magalhães, J.C.; Ganesh, B. Modulating Moderation: A History of Objectionability in Twitter Moderation Practices. J. Commun. 2023, 73, 273–287.

4. Murtfeldt, R.; Alterman, N.; Kahveci, I.; West, J.D. RIP Twitter API: A eulogy to its vast research contributions. arXiv 2024, arXiv:2404.07340.

5. Song, J.; Lee, S.; Kim, J. Spam filtering in Twitter using sender-receiver relationship. In Recent Advances in Intrusion Detection: 14th International Symposium, RAID 2011, Menlo Park, CA, USA, 20–21 September 2011; Lecture Notes in Computer Science, Vol. 6961; Springer: Berlin/Heidelberg, Germany, 2011; pp. 301–317.

6. Delany, S.J.; Buckley, M.; Greene, D. SMS spam filtering: Methods and data. Expert Syst. Appl. 2012, 39, 9899–9908.

7. Iman, Z.; Sanner, S.; Bouadjenek, M.R.; Xie, L. A longitudinal study of topic classification on Twitter. In Proceedings of the Eleventh International AAAI Conference on Web and Social Media (ICWSM 2017), Montréal, QC, Canada, 15–18 May 2017; AAAI Press: Palo Alto, CA, USA, 2017; Volume 11, pp. 552–555.

8. Rashid, H.; Liaqat, H.B.; Sana, M.U.; Kiren, T.; Karamti, H.; Ashraf, I. Framework for detecting phishing crimes on Twitter using selective features and machine learning. Comput. Electr. Eng. 2025, 124, 110363.

9. Ahmad, S.B.S.; Rafie, M.; Ghorabie, S.M. Spam detection on Twitter using a support vector machine and users’ features by identifying their interactions. Multimed. Tools Appl. 2021, 80, 11583–11605.

10. Abkenar, S.B.; Mahdipour, E.; Jameii, S.M.; Kashani, M.H. A hybrid classification method for Twitter spam detection based on differential evolution and random forest. Concurr. Comput. Pract. Exp. 2021, 33, e6381.

11. Galli, A.; La Gatta, V.; Moscato, V.; Postiglione, M.; Sperlì, G. Explainability in AI-based behavioral malware detection systems. Comput. Secur. 2024, 141, 103842.

12. Le, T.-T.-H.; Kim, H.; Kang, H.; Kim, H. Classification and explanation for intrusion detection system based on ensemble trees and SHAP method. Sensors 2022, 22, 1154.

13. Braik, M.; Al-Hiary, H. Rüppell’s fox optimizer: A novel meta-heuristic approach for solving global optimization problems. Clust. Comput. 2025, 28, 292.

14. Kaczmarek-Majer, K.; Casalino, G.; Castellano, G.; Dominiak, M.; Hryniewicz, O.; Kamińska, O.; Vessio, G.; Díaz-Rodríguez, N. PLENARY: Explaining black-box models in natural language through fuzzy linguistic summaries. Inf. Sci. 2022, 614, 374–399.

15. Paic, G.; Serkin, L. The impact of artificial intelligence: From cognitive costs to global inequality. Eur. Phys. J. Spec. Top. 2025, 234, 3045–3050.

16. Abusitta, A.; Li, M.Q.; Fung, B.C. Survey on Explainable AI: Techniques, challenges and open issues. Expert Syst. Appl. 2024, 255, 124710.

17. Borgonovo, E.; Plischke, E.; Rabitti, G. The many Shapley values for explainable artificial intelligence: A sensitivity analysis perspective. Eur. J. Oper. Res. 2024, 318, 911–926.

18. Selvakumar, V.; Reddy, N.K.; Tulasi, R.S.V.; Kumar, K.R. Data-Driven Insights into Social Media Behavior Using Predictive Modeling. Procedia Comput. Sci. 2025, 252, 480–489.

19. Mienye, I.D.; Jere, N. A survey of decision trees: Concepts, algorithms, and applications. IEEE Access 2024, 12, 86716–86727.

20. Mohammed, S.; Al-Aaraji, N.; Al-Saleh, A. Knowledge Rules-Based Decision Tree Classifier Model for Effective Fake Accounts Detection in Social Networks. Int. J. Saf. Secur. Eng. 2024, 14, 1243–1251.

21. Halder, R.K.; Uddin, M.N.; Uddin, M.A.; Aryal, S.; Khraisat, A. Enhancing K-nearest neighbor algorithm: A comprehensive review and performance analysis of modifications. J. Big Data 2024, 11, 113.

22. Teke, M.; Etem, T. Cascading GLCM and T-SNE for detecting tumor on kidney CT images with lightweight machine learning design. Eur. Phys. J. Spec. Top. 2025, 234, 4619–4634.

23. Ouyang, Q.; Tian, J.; Wei, J. E-mail Spam Classification using KNN and Naive Bayes. Highlights Sci. Eng. Technol. 2023, 38, 57–63.

24. Bisong, E. Logistic regression. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 243–250.

25. Sarker, S.K.; Bhattacharjee, R.; Sufian, M.A.; Ahamed, M.S.; Talha, M.A.; Tasnim, F.; Islam, K.M.N.; Adrita, S.T. Email Spam Detection Using Logistic Regression and Explainable AI. In Proceedings of the 2025 International Conference on Electrical, Computer and Communication Engineering (ECCE), Chittagong, Bangladesh, 13–15 February 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6.

26. Bharti, K.K.; Pandey, S. Fake account detection in twitter using logistic regression with particle swarm optimization. Soft Comput. 2021, 25, 11333–11345.

27. Khan, A.A.; Chaudhari, O.; Chandra, R. A review of ensemble learning and data augmentation models for class imbalanced problems: Combination, implementation and evaluation. Expert Syst. Appl. 2024, 244, 122778.

28. Mohammed, A.; Kora, R. A comprehensive review on ensemble deep learning: Opportunities and challenges. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 757–774.

29. Xing, H.-J.; Liu, W.-T.; Wang, X.-Z. Bounded exponential loss function based AdaBoost ensemble of OCSVMs. Pattern Recognit. 2024, 148, 110191.

30. Ferrouhi, E.M.; Bouabdallaoui, I. A comparative study of ensemble learning algorithms for high-frequency trading. Sci. Afr. 2024, 24, e02161.

31. Abkenar, S.B.; Kashani, M.H.; Akbari, M.; Mahdipour, E. Learning textual features for Twitter spam detection: A systematic literature review. Expert Syst. Appl. 2023, 228, 120366.

32. Qazi, A.; Hasan, N.; Mao, R.; Abo, M.E.M.; Dey, S.K.; Hardaker, G. Machine Learning-Based Opinion Spam Detection: A Systematic Literature Review. IEEE Access 2024, 12, 143485–143499.

33. Imam, N.H.; Vassilakis, V.G. A survey of attacks against twitter spam detectors in an adversarial environment. Robotics 2019, 8, 50.

34. Alnagi, E.; Ahmad, A.; Al-Haija, Q.A.; Aref, A. Unmasking Fake Social Network Accounts with Explainable Intelligence. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 1277–1283.

35. Atacak, İ.; Çıtlak, O.; Doğru, İ.A. Application of interval type-2 fuzzy logic and type-1 fuzzy logic-based approaches to social networks for spam detection with combined feature capabilities. PeerJ Comput. Sci. 2023, 9, e1316.

36. Ouni, S.; Fkih, F.; Omri, M.N. BERT- and CNN-based TOBEAT approach for unwelcome tweets detection. Soc. Netw. Anal. Min. 2022, 12, 144.

37. Ayo, F.E.; Folorunso, O.; Ibharalu, F.T.; Osinuga, I.A.; Abayomi-Alli, A. A probabilistic clustering model for hate speech classification in twitter. Expert Syst. Appl. 2021, 173, 114762.

38. Liu, S.; Wang, Y.; Chen, C.; Xiang, Y. An Ensemble Learning Approach for Addressing the Class Imbalance Problem in Twitter Spam Detection. In Information Security and Privacy; Liu, J., Steinfeld, R., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9722, pp. 215–228.

39. Gupta, S.; Khattar, A.; Gogia, A.; Kumaraguru, P.; Chakraborty, T. Collective classification of spam campaigners on Twitter: A hierarchical meta-path based approach. In Proceedings of the 2018 World Wide Web Conference (WWW ’18), Lyon, France, 23–27 April 2018; International World Wide Web Conferences Steering Committee (IW3C2): Geneva, Switzerland, 2018; pp. 529–538.

40. Manasa, P.; Malik, A.; Alqahtani, K.N.; Alomar, M.A.; Basingab, M.S.; Soni, M.; Rizwan, A.; Batra, I. Tweet spam detection using machine learning and swarm optimization techniques. IEEE Trans. Comput. Soc. Syst. 2024, 11, 4870–4877.

41. Krithiga, R.; Ilavarasan, E. Hyperparameter tuning of AdaBoost algorithm for social spammer identification. Int. J. Pervasive Comput. Commun. 2021, 17, 462–482.

42. Ghourabi, A.; Alohaly, M. Enhancing spam message classification and detection using transformer-based embedding and ensemble learning. Sensors 2023, 23, 3861.

43. Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence (IJCAI-95), Montréal, QC, Canada, 20–25 August 1995; Mellish, C.S., Ed.; Morgan Kaufmann: San Mateo, CA, USA, 1995; Volume 2, pp. 1137–1145.

44. Schapire, R.E. Explaining AdaBoost. In Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik; Schölkopf, B., Luo, Z., Vovk, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–52.

45. Djuric, M.; Jovanovic, L.; Zivkovic, M.; Bacanin, N.; Antonijevic, M.; Sarac, M. The AdaBoost Approach Tuned by SNS Metaheuristics for Fraud Detection. In Proceedings of the International Conference on Paradigms of Computing, Communication and Data Sciences (PCCDS 2022), Jaipur, India, 5–7 July 2022; Yadav, R.P., Nanda, S.J., Rana, P.S., Lim, M.-H., Eds.; Algorithms for Intelligent Systems; Springer: Singapore, 2023; pp. 115–128.

46. Jáñez-Martino, F.; Alaiz-Rodríguez, R.; González-Castro, V.; Fidalgo, E.; Alegre, E. A review of spam email detection: Analysis of spammer strategies and the dataset shift problem. Artif. Intell. Rev. 2023, 56, 1145–1173.

47. Meriem, A.B.; Hlaoua, L.; Romdhane, L.B. A fuzzy approach for sarcasm detection in social networks. Procedia Comput. Sci. 2021, 192, 602–611.

48. Liu, S.; Wang, Y.; Zhang, J.; Chen, C.; Xiang, Y. Addressing the class imbalance problem in twitter spam detection using ensemble learning. Comput. Secur. 2017, 69, 35–49.

49. Ameen, A.K.; Kaya, B. Spam detection in online social networks by deep learning. In Proceedings of the 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), Malatya, Turkey, 28–30 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

50. Madisetty, S.; Desarkar, M.S. A neural network-based ensemble approach for spam detection in Twitter. IEEE Trans. Comput. Soc. Syst. 2018, 5, 973–984.

51. Ashour, M.; Salama, C.; El-Kharashi, M.W. Detecting spam tweets using character N-gram features. In Proceedings of the 2018 13th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 18–19 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 190–195.

52. Genuine/Fake User Profile Dataset. Kaggle. Available online: https://www.kaggle.com/datasets/whoseaspects/genuinefake-user-profile-dataset (accessed on 23 September 2025).

| No. | Feature | Type | Value Range |
|---|---|---|---|
| 1 | User Statuses Count (USC) | Integer | 0–99, 100–199, …, 1,000,000–1,999,999 |
| 2 | Sensitive Content Alert (SCA) | Boolean | TRUE (T)/FALSE (F) |
| 3 | User Favorites Count (UFC) | Integer | 0–9, 10–19, 20–29, …, 100,000–1,999,999 |
| 4 | User Listed Count (ULC) | Integer | 0–9, 10–19, 20–29, …, 900–999 |
| 5 | Source in Twitter (SITW) | String | Yes (Y)/No (N) |
| 6 | User Friends Count (UFRC) | Integer | 0–9, 10–19, 20–29, …, 1,000–99,999 |
| 7 | User Followers Count (UFLC) | Integer | 0–9, 10–19, 20–29, …, 100,000–1,999,999 |
| 8 | User Location (UL) | String | Yes (Y)/No (N) |
| 9 | User Geo-Enabled (UGE) | Boolean | TRUE (T)/FALSE (F) |
| 10 | User Default Profile Image (UDPI) | Boolean | TRUE (T)/FALSE (F) |
| 11 | Re-Tweet (RTWT) | Boolean | TRUE (T)/FALSE (F) |
| 12 | Class (CLASS) | Boolean | TRUE (T)/FALSE (F) |

| Mode | Mode Selection Criterion (p) | Condition | Update Strategy |
|---|---|---|---|
| Day-time | p ≥ 0.5 | s ≥ h, rand ≥ 0.25 | Sense of vision (Equation (5)) |
| Day-time | p ≥ 0.5 | s ≥ h, rand < 0.25 | Eye movement (Equation (6)) |
| Day-time | p ≥ 0.5 | s < h, rand ≥ 0.75 | Sense of hearing (Equation (7)) |
| Day-time | p ≥ 0.5 | s < h, rand < 0.75 | Ear movement (Equation (9)) |
| Night-time | p < 0.5 | s < h, rand ≥ 0.25 | Sense of hearing (Equation (8)) |
| Night-time | p < 0.5 | s < h, rand < 0.25 | Ear movement (Equation (9)) |
| Night-time | p < 0.5 | s ≥ h, rand ≥ 0.75 | Sense of vision (Equation (5)) |
| Night-time | p < 0.5 | s ≥ h, rand < 0.75 | Eye movement (Equation (6)) |
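The mode table amounts to a two-level branch. The following sketch reproduces only that branching. The position updates of Equations (5) to (9) and the exact definitions of s and h are not given in this section, so the function simply reports which update would be applied. The sketch treats p, s, h, and the random draw r as scalars already sampled for one fox in one iteration, which is an assumption, and `select_update_rule` is a hypothetical name.

```python
from typing import Tuple


def select_update_rule(p: float, s: float, h: float, r: float) -> Tuple[str, int]:
    """Return (update strategy, equation number) following the RFO mode table.

    p chooses day-time (p >= 0.5) or night-time mode, s is compared with h,
    and r is the uniform random draw compared with the 0.25/0.75 thresholds.
    """
    if p >= 0.5:  # day-time mode
        if s >= h:
            return ("sense of vision", 5) if r >= 0.25 else ("eye movement", 6)
        return ("sense of hearing", 7) if r >= 0.75 else ("ear movement", 9)
    # night-time mode
    if s < h:
        return ("sense of hearing", 8) if r >= 0.25 else ("ear movement", 9)
    return ("sense of vision", 5) if r >= 0.75 else ("eye movement", 6)
```

The table is asymmetric: by day, vision-based updates dominate when s ≥ h (75% of draws), whereas by night hearing-based updates dominate when s < h. Ear movement (Equation (9)) is shared by both modes.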

| Optimal Feature Set | Selected Features |
|---|---|
| Nine optimal features (X Dataset 1) | SCA, UL, UDPI, RTWT, USC, UFC, ULC, UFRC, and UFLC |
| Eleven optimal features (X Dataset 2) | statuses_count, default_profile, profile_banner_url, favourites_count, geo_enabled, location, friends_count, description, followers_count, fav_number, and listed_count |
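The table reports which features RFO retained, but this section does not state how a continuous fox position becomes a feature subset or how candidate subsets are scored. A common wrapper design is assumed here purely for illustration. Each coordinate passes through a sigmoid (S-shaped) transfer function to decide whether its feature is kept, and the optimizer minimizes a weighted sum of classifier error and the fraction of features kept. The helper names and the weight `alpha = 0.99` are hypothetical, and the feature order follows the input-feature table.

```python
import math
import random
from typing import List, Sequence

FEATURES = ["USC", "SCA", "UFC", "ULC", "SITW", "UFRC",
            "UFLC", "UL", "UGE", "UDPI", "RTWT"]


def _sigmoid(x: float) -> float:
    z = max(-50.0, min(50.0, x))  # clip to keep math.exp from overflowing
    return 1.0 / (1.0 + math.exp(-z))


def binarize(position: Sequence[float], rng: random.Random) -> List[int]:
    """S-shaped transfer: keep feature j with probability sigmoid(position[j])."""
    return [1 if rng.random() < _sigmoid(x) else 0 for x in position]


def selected_features(mask: Sequence[int]) -> List[str]:
    """Names of the features whose mask bit is 1."""
    return [name for name, bit in zip(FEATURES, mask) if bit]


def wrapper_fitness(error_rate: float, mask: Sequence[int], alpha: float = 0.99) -> float:
    """Lower is better: alpha * error + (1 - alpha) * fraction of features kept."""
    n_selected = sum(mask)
    if n_selected == 0:
        return float("inf")  # an empty subset cannot train a classifier
    return alpha * error_rate + (1 - alpha) * n_selected / len(mask)
```

For example, the mask `[1, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1]` over the 11 inputs selects exactly the nine features listed in the table, dropping SITW and UGE. Under this assumed fitness, a subset wins over the full feature set only if any loss in accuracy is outweighed by the size penalty.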

| Model Name | Hyperparameter Details |
|---|---|
| DT | Maximum depth explored at 3, 5, and unrestricted; minimum split size evaluated at 2, 5, and 10. All other settings followed conventional defaults: Gini impurity with the best-split strategy, a minimum of one sample per leaf, no limits on features or leaf nodes, a zero impurity-decrease threshold, no cost–complexity pruning, and no class weighting. |
| AdaBoost | Ensemble size tuned over 150, 300, and 500 learners with learning rates of 0.5 and 1.0; depth-one trees (decision stumps) as base learners and the Real AdaBoost variant. |
| Logistic Regression | Optimization with up to 2000 iterations and ridge (L2) regularization; inverse regularization strength examined at 0.1, 1.0, and 10.0; convergence tolerance of 10⁻⁴; intercept term included; automatic multiclass handling; no class weighting. |
| KNN | Neighborhood sizes of 5, 11, and 21 with uniform and distance-based weighting; Minkowski distance with power 2 (i.e., Euclidean distance); search algorithm selected automatically with a leaf size of 30. |
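The searched values in the hyperparameter table define small grids. The following sketch writes them as Python dictionaries and enumerates every combination. The parameter names follow scikit-learn's estimator arguments. This is an assumption, suggested by the fact that the fixed settings listed (a KNN leaf size of 30, Minkowski power 2, a tolerance of 10⁻⁴, Gini impurity) coincide with scikit-learn defaults. `None` stands for the unrestricted tree depth, and `PARAM_GRIDS` and `expand_grid` are hypothetical names.

```python
from itertools import product
from typing import Any, Dict, List

# Searched values only; fixed settings (e.g. max_iter=2000 for LR) belong on the estimator.
PARAM_GRIDS: Dict[str, Dict[str, List[Any]]] = {
    "DT": {"max_depth": [3, 5, None], "min_samples_split": [2, 5, 10]},
    "AdaBoost": {"n_estimators": [150, 300, 500], "learning_rate": [0.5, 1.0]},
    "LR": {"C": [0.1, 1.0, 10.0]},
    "KNN": {"n_neighbors": [5, 11, 21], "weights": ["uniform", "distance"]},
}


def expand_grid(grid: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """List every hyperparameter combination in a grid (Cartesian product)."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]
```

Each dictionary has the dict-of-lists shape that scikit-learn's `GridSearchCV` accepts as `param_grid`. Enumerating the grids yields 9, 6, 3, and 6 candidate configurations for DT, AdaBoost, LR, and KNN, respectively, which keeps the search cheap relative to the feature-selection loop.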

| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | Proportion of correctly classified cases among all cases assessed. |
| F1 score | $\frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | Harmonic mean of precision and recall. |
| Recall | $\frac{TP}{TP + FN}$ | Proportion of actual positive (spam) cases that the trained model identifies correctly. |
| Precision | $\frac{TP}{TP + FP}$ | Proportion of correctly identified positive cases among all cases classified as positive. |
| AUC | $\int_0^1 \mathrm{TPR} \, d(\mathrm{FPR})$ | Area under the ROC curve, which plots the true-positive rate against the false-positive rate across decision thresholds. |
| Macro-average | $\frac{1}{C}\sum_{i=1}^{C} M_i$ | Unweighted mean of the per-class metric values. |
| Micro-average | $\frac{\sum_{i=1}^{C} TP_i}{\sum_{i=1}^{C} (TP_i + FP_i)}$ (for precision) | Metric computed from the counts pooled across all classes. |
| Weighted average | $\frac{\sum_{i=1}^{C} n_i M_i}{\sum_{i=1}^{C} n_i}$ | Mean of the per-class metric values weighted by each class’s number of samples. |
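The three averaging schemes differ only in how per-class counts are combined. The following minimal sketch shows this for precision, assuming integer class labels and taking the classes to be the union of true and predicted labels. The function name is hypothetical. For single-label classification, micro-averaged precision equals accuracy, because every misclassification is a false positive for exactly one class.

```python
from collections import Counter
from typing import Dict, Sequence


def averaged_precision(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Macro-, micro- and weighted-average precision over all observed classes."""
    classes = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)                                  # n_i
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # TP_i
    predicted = Counter(y_pred)                                # TP_i + FP_i

    per_class = {c: (tp[c] / predicted[c] if predicted[c] else 0.0) for c in classes}
    macro = sum(per_class.values()) / len(classes)             # every class counts equally
    total_predicted = sum(predicted[c] for c in classes)
    micro = sum(tp[c] for c in classes) / total_predicted if total_predicted else 0.0
    weighted = sum(support[c] * per_class[c] for c in classes) / len(y_true)
    return {"macro": macro, "micro": micro, "weighted": weighted}
```

With `y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]` and `y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]`, per-class precision is 0.75 for spam and 5/6 for legitimate accounts. The macro average is therefore about 0.792, while the micro and weighted averages are both 0.8.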

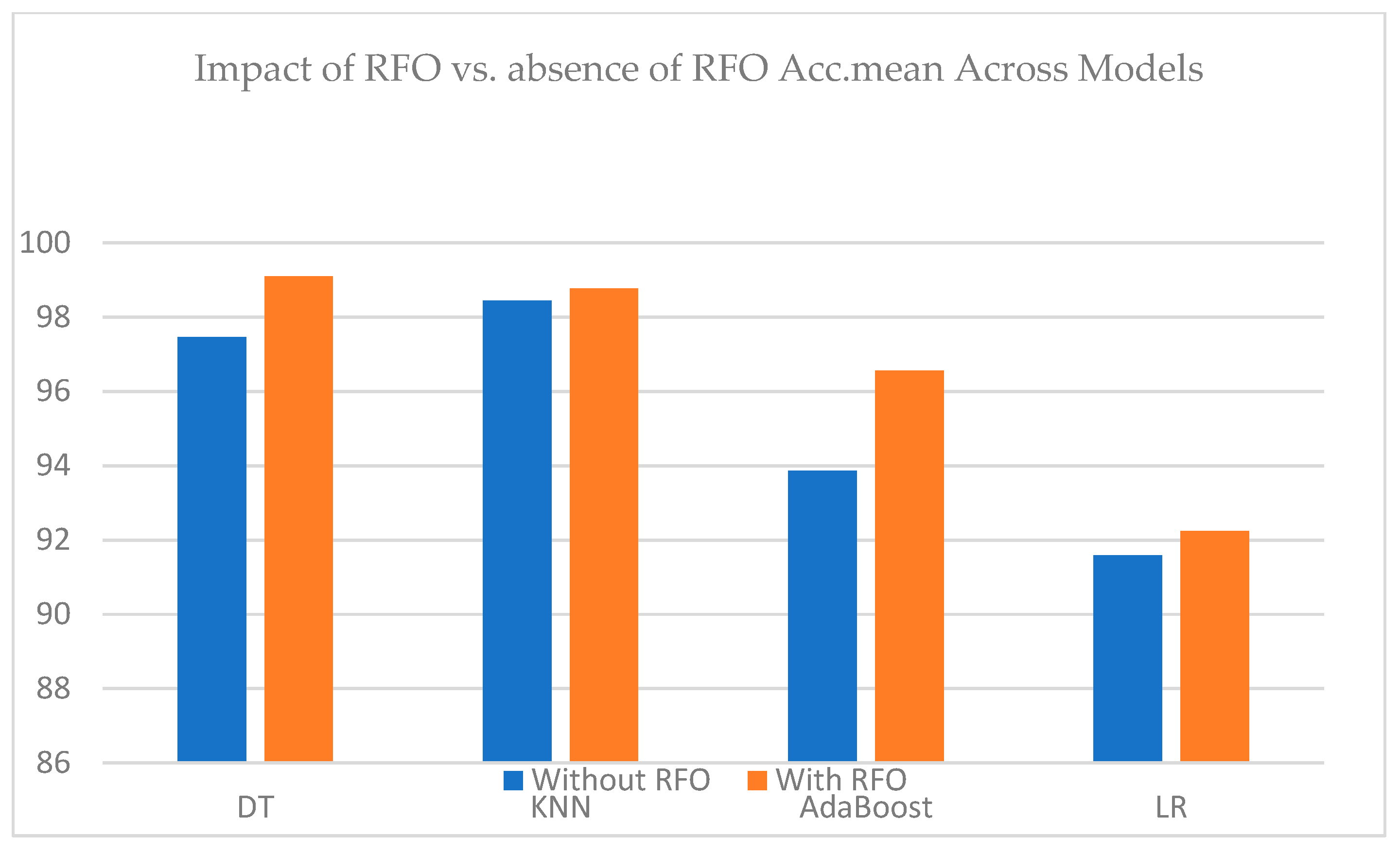

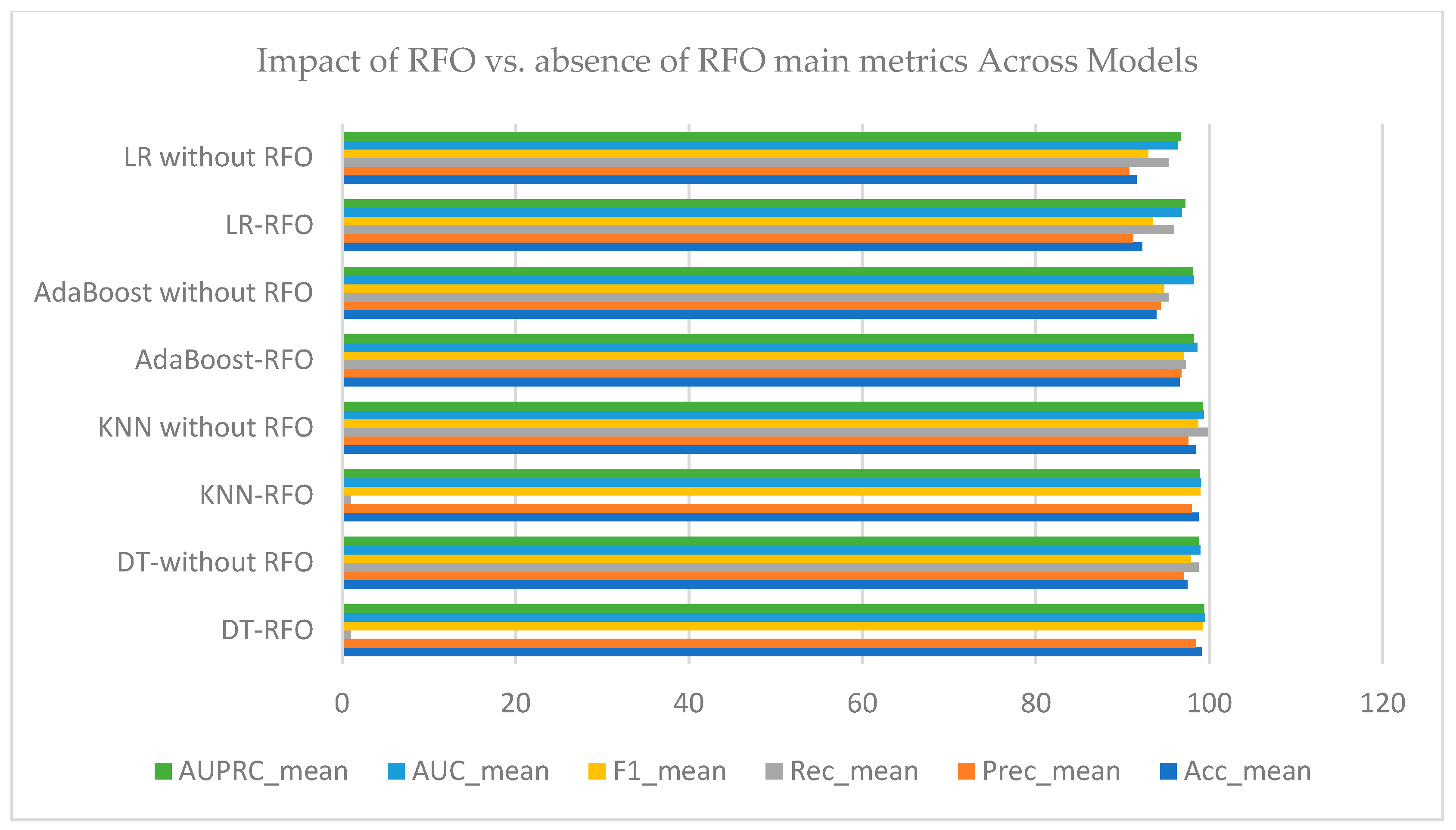

| Model | Mean Accuracy (%) | Mean Precision (%) | Mean Recall (%) | Mean F1 (%) | Mean AUC (%) | Mean AUPRC (%) |
|---|---|---|---|---|---|---|
| DT-RFO | 99.10 | 98.49 | 100.00 | 99.23 | 99.49 | 99.40 |
| KNN-RFO | 98.77 | 98.00 | 100.00 | 98.98 | 99.03 | 98.90 |
| AdaBoost-RFO | 96.57 | 96.79 | 97.27 | 97.02 | 98.63 | 98.19 |
| LR-RFO | 92.24 | 91.22 | 95.90 | 93.47 | 96.83 | 97.25 |

| Metric | DT | KNN | AdaBoost | LR |
|---|---|---|---|---|
| AUC_mean | 0.99 | 0.99 | 0.99 | 0.97 |
| AUC_sd | 0.01 | 0.02 | 0.02 | 0.02 |
| AUC_t95_lo | 0.98 | 0.97 | 0.96 | 0.95 |
| AUC_t95_hi | 1.01 | 1.02 | 1.01 | 0.99 |
| AUPRC_mean | 0.99 | 0.99 | 0.98 | 0.97 |
| AUPRC_sd | 0.01 | 0.02 | 0.03 | 0.02 |
| AUPRC_t95_lo | 0.98 | 0.96 | 0.95 | 0.95 |
| AUPRC_t95_hi | 1.01 | 1.02 | 1.01 | 0.99 |
| MacroF1_mean | 0.99 | 0.99 | 0.96 | 0.92 |
| MacroF1_sd | 0.01 | 0.01 | 0.02 | 0.02 |
| MacroF1_t95_lo | 0.98 | 0.97 | 0.94 | 0.90 |
| MacroF1_t95_hi | 1.00 | 1.00 | 0.99 | 0.94 |
| F1_mean | 0.99 | 0.99 | 0.97 | 0.93 |
| F1_sd | 0.00 | 0.01 | 0.02 | 0.02 |
| F1_t95_lo | 0.99 | 0.98 | 0.94 | 0.92 |
| F1_t95_hi | 1.00 | 1.00 | 1.00 | 0.95 |
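The interval rows (`_t95_lo`, `_t95_hi`) appear to be Student-t intervals over cross-validation folds, mean ± t·sd/√k. The table does not state k, so the following sketch assumes 10 folds (9 degrees of freedom, t ≈ 2.262) as a hypothetical choice; the function name is also hypothetical. Because this interval is symmetric and unbounded, it can exceed 1 for a metric close to its ceiling, which explains upper limits such as 1.01 and 1.02. Clipping to [0, 1] is an optional reporting choice.

```python
import math
import statistics
from typing import Sequence, Tuple

T_975_DF9 = 2.262  # two-sided 95% Student-t critical value for 9 degrees of freedom


def t_confidence_interval(scores: Sequence[float], t_crit: float = T_975_DF9,
                          clip: bool = False) -> Tuple[float, float, float]:
    """Return (mean, lower, upper) of mean ± t * sd / sqrt(k) over k fold scores."""
    k = len(scores)
    mean = statistics.mean(scores)
    half_width = t_crit * statistics.stdev(scores) / math.sqrt(k)  # sample sd (k - 1)
    lo, hi = mean - half_width, mean + half_width
    if clip:  # AUC, AUPRC and F1 cannot leave [0, 1]
        lo, hi = max(lo, 0.0), min(hi, 1.0)
    return mean, lo, hi
```

For per-fold scores of 1.0 in eight folds and 0.95 and 0.97 in the remaining two, the mean is 0.992 and the unclipped upper limit is about 1.005.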

| Author | Dataset | Methodology | Performance Results (%) |
|---|---|---|---|
| [35] | X Dataset (by X API) | IT2-M FIS, IT2-S FIS, IT1-M FIS, IT1-S FIS | Accuracy = 95.5; Precision = 95.7; Recall = 96.7; F1 score = 96.2; AUC = 97.1 |
| [36] | SemCat (2018) | TOBEAT leveraging BERT and CNN | Accuracy = 94.97; Precision = 94.05; Recall = 95.88; F1 score = 94.95 |
| [37] | X Dataset (by hatebase.org) | Clustering framework using probabilistic rules and fuzzy sentiment classification | Accuracy = 94.53; Precision = 92.54; Recall = 91.74; F1 score = 92.56; AUC = 96.45 |
| [47] | X Dataset (SemEval-2014 and Bamman) | Fuzzy logic (FL)-based classification methodology | Accuracy = 90.9; Precision = 95.7; Recall = 82.4; F1 score = 87.4 |
| [48] | X Dataset (by [48]) | Ensemble learning with random oversampling (ROS), random undersampling (RUS), and fuzzy-based oversampling (FOS) | Mean P = 0.76–0.78; Mean F = 0.76–0.55; Mean FP = 0.11; TP = 0.74–0.43 |
| [39] | X Dataset (by X API) | Hierarchical meta-path-based approach (HMPS) with feedback and a default one-class classifier | Precision = 95.0; Recall = 90.0; F1 score = 93.0; AUC = 92.0 |
| [49] | X Dataset (by X API) | Deep learning (DL) methodology using a multilayer perceptron (MLP) | Precision = 92.0; Recall = 88.0; F1 score = 89.0 |
| [34] | X Dataset (by www.unipi.it) | Ensemble-based XGBoost with random forest | Accuracy = 90; Precision = 91.0; Recall = 86.0; F1 score = 89.0 |
| [50] | X Dataset (HSpam14 and 1KS10KN) | Ensemble of convolutional neural network models and a feature-based model | Accuracy = 95.7; Precision = 92.2; Recall = 86.7; F1 score = 89.3 |
| [51] | X Dataset (by [49]) | LR, SVM, and RF using various character N-gram features | Precision = 79.5; Recall = 79.4; F1 score = 79.4 |
| Proposed method | X Dataset 1 (by [35]) | Nature-inspired method with an ensemble learning approach using 9 features | Accuracy: DT-RFO = 99.10; KNN-RFO = 98.77; AdaBoost-RFO = 96.57; LR-RFO = 92.24 |
| Proposed method | X Dataset 2 (by [52]) | Nature-inspired method with an ensemble learning approach using 11 features | Accuracy: DT-RFO = 98.94; KNN-RFO = 98.67; AdaBoost-RFO = 95.04; LR-RFO = 94.52 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

AlZeyadi, H.; Sert, R.; Duran, F. A Lightweight, Explainable Spam Detection System with Rüppell’s Fox Optimizer for the Social Media Network X. Electronics 2025, 14, 4153. https://doi.org/10.3390/electronics14214153
