1. Introduction

The current situation in healthcare is characterized by escalating expenses, aging populations, precision medicine, universal health coverage, and a surge in non-communicable diseases, coupled with the global impact of the COVID-19 pandemic [1]. Meanwhile, the digital transformation of healthcare information, driven by big data and medical imaging, has created new opportunities for data-driven medical AI, and federated learning (FL) has emerged as a promising approach to exploit them. However, data privacy and security concerns remain significant barriers to the widespread sharing and use of medical data. Privacy breaches, exemplified by major incidents such as the 2015 Anthem breach (78.8 million health records) and the 2019 American Medical Collection Agency (AMCA) breach, which affected over 25 million patients, have highlighted the urgent need for robust measures to protect sensitive information. Data protection laws around the world, such as HIPAA, GDPR, APPI, and the Personal Information Protection Law listed in Table 1, have been enacted to address these concerns by safeguarding user privacy and regulating data usage [2].

To enable privacy-preserving collaborative learning, FL [3,4] has emerged as a promising paradigm for training models across decentralized institutions without sharing raw data. However, in medical imaging, FL faces several challenges: limited and noisy annotations due to privacy regulations and labeling costs, non-IID client distributions caused by demographic and device heterogeneity, and reduced transparency that undermines clinical trust [5,6,7]. These issues highlight the need for uncertainty quantification (UQ), which can identify unreliable clients or noisy data, provide calibrated confidence under heterogeneity, and improve interpretability in safety-critical applications [8]. In this context, three major forms of uncertainty are particularly important:

- data uncertainty, arising from measurement errors, sampling variability, or inconsistent labels [9];
- model uncertainty, introduced by local optimization and aggregation variability in decentralized training [10]; and
- distributional uncertainty, driven by inter-site heterogeneity or personalization biases across clients [11,12].

Addressing and quantifying these uncertainties is essential for building reliable, robust, and trustworthy federated medical AI systems.

Various approaches have been proposed to manage uncertainty in FL.

Bayesian methods provide a principled framework for incorporating prior knowledge, estimating posterior distributions, and quantifying parameter uncertainty [

13];

conformal prediction constructs prediction regions with guaranteed error rates [

14];

deterministic evidential methods offer alternative mathematical formulations to capture ambiguity in predictions [

15]; and

ensemble techniques enhance reliability by combining multiple models or predictions [

16]. Leveraging these methods, researchers have begun to adapt UQ to federated healthcare settings, recognizing its critical role in building safe, reliable, and privacy-preserving medical AI systems [

17]. In safety-critical domains, UQ highlights potential failure cases, thereby improving transparency and clinical trust. Recent studies have explored diverse strategies, including Bayesian neural networks (BNNs) and conformal prediction adapted to decentralized training, ensemble learning and distillation for robustness under heterogeneous data, and meta-learning for rapid adaptation in data-scarce domains. Privacy-preserving techniques such as differential privacy (DP), secure aggregation, and homomorphic encryption (HE) have also been combined with UQ [

17], although they may introduce additional uncertainty or degrade utility due to noise injection, overhead, or approximation errors. Overall, existing efforts can be broadly grouped into two main directions,

Bayesian federated learning (BFL) and

federated conformal prediction (FCP), with other approaches—such as evidential models, fuzzy theory, and verification-based techniques—remaining less common. These developments illustrate the growing importance of FL-UQ as a pathway toward reliable, trustworthy, and privacy-conscious medical AI, although current work remains fragmented and lacks systematic synthesis, as summarized in

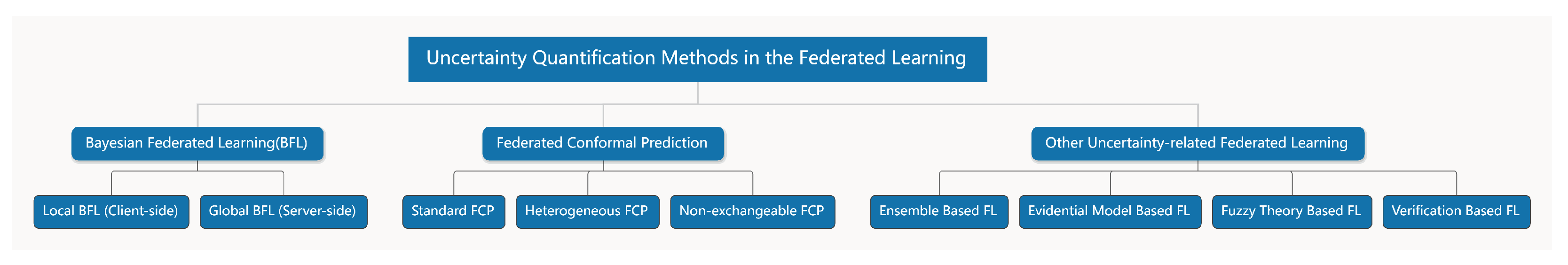

Figure 1.

Table 1. Data privacy protection laws in different countries and regions.

| Ref. | Law | Country/Region | Focus |
|---|---|---|---|
| [18] | Health Insurance Portability and Accountability Act (HIPAA) | United States of America | Health insurance portability and accountability, including the privacy and security of health information |
| [19] | Personal Information Protection and Electronic Documents Act (PIPEDA) | Canada | Governs how private-sector organizations collect, use, and disclose personal information in their commercial activities |
| [20] | Act on the Protection of Personal Information (APPI) | Japan | Regulates the handling of personal information, including measures to prevent its leakage, loss, and damage |
| [21] | General Data Protection Regulation (GDPR) | European Union | Comprehensive, systematically designed legislation with a broad scope covering a wide range of applications |
| [22] | California Consumer Privacy Act (CCPA) | California (United States) | Safeguards consumer privacy rights and regulates the collection, use, and disclosure of personal information by businesses |
| [23] | Personal Information Protection Law (PIPL) | China | Systematic and comprehensive law dedicated to protecting personal data, with a broad scope applicable to various sectors |

This research aims to provide a comprehensive analysis of the integration of FL and UQ in healthcare. We investigate the coexistence and interaction of uncertainties unique to federated learning, distinguishing them from those in centralized deep learning, and discuss strategies to mitigate their impacts on model reliability and robustness. Our survey covers FL variants, UQ methods, and medical applications, including distributed machine learning, with attention to security techniques such as secure multi-party computation, differential privacy, and homomorphic encryption [24]. To this end, we conducted a systematic literature review of publications from 2020 to 2024 indexed by IEEE, Google Scholar, ScienceDirect, ACM, and Nature. Using Boolean combinations of search terms such as "Federated Learning", "Uncertainty Quantification", "Healthcare", "Distributed Machine Learning", and "Medical", we retrieved 2216 articles, from which 147 were selected after rigorous filtering for relevance and quality. These works were analyzed to synthesize key themes, challenges, and methods, enabling us to provide a holistic understanding of FL-UQ for medical imaging and to highlight distinctions from existing surveys, as summarized in Table 2.

This survey provides a comprehensive overview of UQ in explainable medical FL, emphasizing its role in building trustworthy and privacy-preserving AI systems. The key contributions of this work are as follows.

1. Analysis of sources of uncertainty and comprehensive categorization of uncertainty in FL: We analyze how uncertainty arises and interacts in medical FL settings, and we distinguish between data, model, and distributional uncertainties.
2. Review of state-of-the-art UQ methods integrated with FL: We summarize and compare various approaches, including Bayesian methods, conformal prediction, ensemble-based techniques, evidential models, and meta-learning, highlighting their strengths and limitations in addressing privacy, data and model heterogeneity, and annotation scarcity.
3. In-depth analysis of BFL and FCP: We provide an in-depth discussion of both server- and client-side BFL approaches, along with recent advances in applying conformal prediction to heterogeneous and non-exchangeable FL data.
4. Mapping to real-world medical applications: We highlight five representative use cases, namely COVID-19 prognosis, tumor segmentation, breast cancer detection, large-scale radiological networks, and breast density classification. These examples illustrate how UQ enhances clinical reliability and interpretability in medical imaging.
5. Identification of open challenges and future opportunities: We summarize unresolved issues such as robust UQ under non-IID data, computational efficiency, out-of-distribution (OOD) detection, and clinical explainability, and we outline promising directions for future research.

The remainder of this survey is structured as follows. Section 2 provides background on FL and its categories. Section 3 presents an overview of uncertainty and UQ techniques in FL. Section 4 discusses Bayesian federated learning methods, Section 5 covers federated conformal prediction, and Section 6 presents other FL-UQ methods. Section 7 highlights federated medical applications, Section 8 presents uncertainty-related challenges, and, lastly, Section 9 concludes the survey.

2. Federated Learning Background

FL, first introduced by Google in 2016, was designed to overcome the limitations of data silos while protecting data privacy [29]. An early application was the collaborative training of next-word prediction for Google Keyboard on mobile devices [30]. As FL has matured, its reach has expanded across various fields [31], with the medical domain being a notable beneficiary: applying FL to train models on real-world health data collected from different hospitals and institutions has been shown to improve the quality of medical diagnosis. As a specialized form of distributed machine learning, FL enables the development of joint models while preserving privacy, directly addressing many of the privacy issues inherent in conventional distributed learning. In this setting, multiple parties collaboratively update a shared model using only their local datasets, without exposing raw patient information [32].

FL is a decentralized approach that preserves data privacy by training on local devices and aggregating only the locally computed updates into a shared model, which can also improve the user experience of learning-based applications [29]. FL extends the fundamental principles of distributed machine learning, which in turn combines machine learning techniques with distributed architectures. Machine learning has gained significant prominence since the 1980s, with remarkable success in tasks such as classification and prediction, and it has matched or exceeded human-level performance on certain speech, image, and text prediction and classification tasks [33].

As is evident from the above, FL offers the advantage of training models without uploading private data. Furthermore, because only model updates, rather than individual data samples, leave each participant, FL reduces the risk of data leakage. This characteristic makes FL well suited to machine learning applications involving privacy-sensitive electronic medical records [34]. Ref. [35] proposed "SVeriFL", a privacy-preserving, successive verifiable FL approach. The authors introduced a protocol based on BLS signatures and multi-party security techniques that enables verification of the integrity of parameters uploaded by participants and of the correctness of results provided by the server, while also allowing participants to validate the consistency of the aggregation results.

Beyond privacy-preserving protocols, recent research has also explored combining FL with modern model architectures such as Transformers. For example, the Federated Transformer (FeT) framework [36] introduces a multi-party vertical FL scheme designed to address the practical challenges of loosely linked data across institutions. By leveraging Transformer encoders within a vertical FL pipeline, FeT improves representation learning under limited feature overlap while ensuring privacy-preserving aggregation. Although such Transformer-based FL approaches are still at an early stage compared with convolutional or ensemble methods, they highlight the growing interest in adapting advanced neural architectures to the federated setting, particularly in medical domains, where data are heterogeneous and distributed across institutions. In parallel, multimodal FL has gained increasing attention: FedMBridge [37] enables bridgeable learning across diverse modalities, and FedMFS [38] proposes selective modality communication to improve efficiency in multimodal fusion. These studies demonstrate the potential of extending FL to more complex and clinically relevant multimodal data scenarios.

Currently, two prominent frameworks dominate the field of FL: TensorFlow Federated and WeBank's FATE framework [39]. Other frameworks also exist, including Flower [40], which provides features that facilitate large-scale FL experiments. FL has thus become one of the most widely adopted privacy-preserving technologies for next-generation industrial and medical AI applications [41].

2.1. Federated Learning Categories

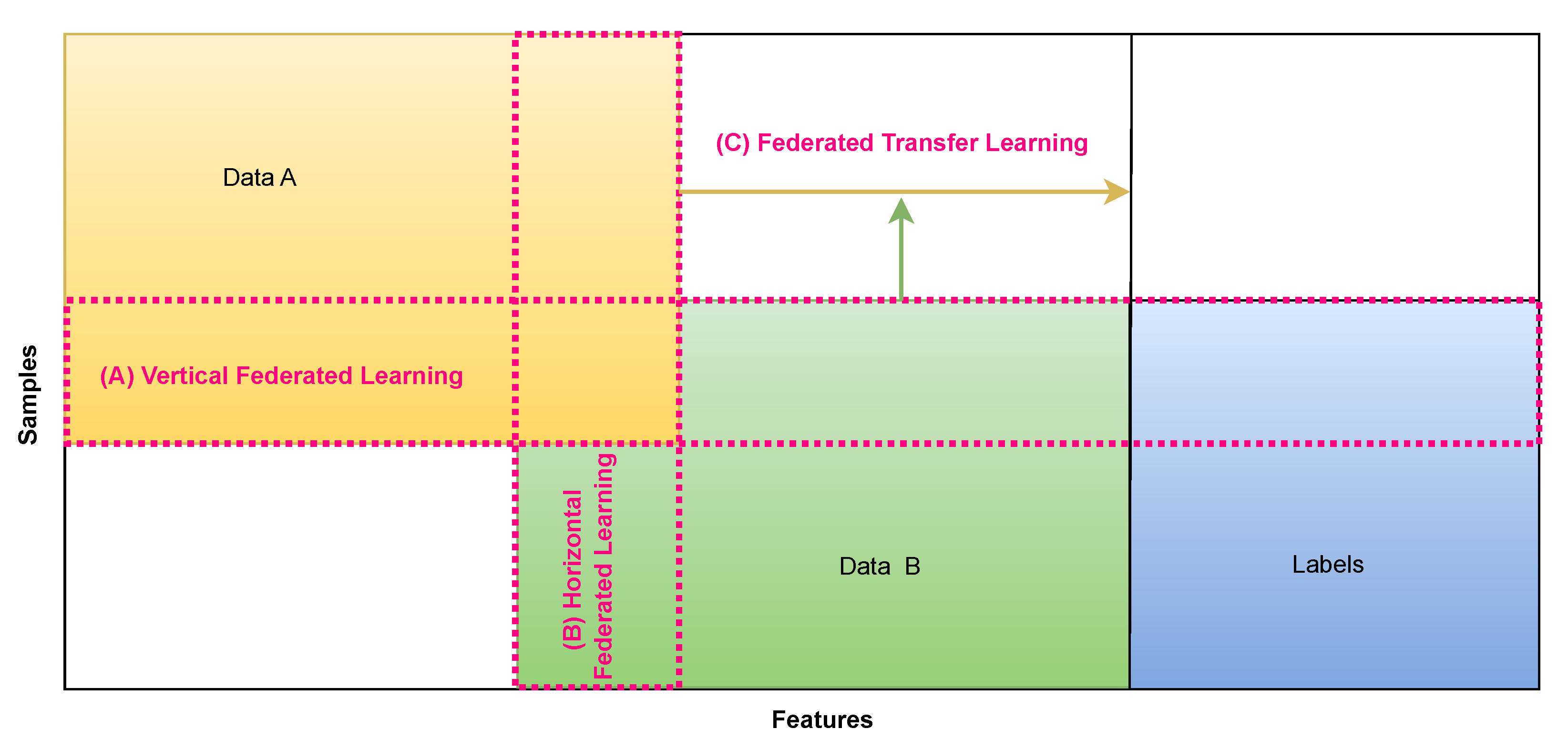

FL can be categorized into three types based on how the data sample space and the data feature space are distributed among participants: horizontal federated learning, vertical federated learning, and federated transfer learning. Ref. [42] has served as the foundation for subsequent enhancements and refinements of federated learning algorithms.

2.1.1. Horizontal Federated Learning

Horizontal federated learning is characterized by substantial overlap in the feature space across data sources but little overlap in the sample space: the parties record the same attributes, such as gender and age, but for largely different individuals [43]. This concept is depicted in Figure 2. For instance, different hospitals may record the same patient characteristics, such as gender, blood type, pulse, and blood pressure, while serving largely different patient groups.

2.1.2. Vertical Federated Learning

Vertical federated learning exhibits substantial overlap in the sample space but relatively little overlap in the feature space among data sources. In other words, the parties hold data about largely the same individuals but record different attributes for them [44]. This concept is illustrated in Figure 2. For instance, hospitals and pharmacies within the same city may serve mostly the same individuals, yet the patient-specific characteristics they record differ substantially.

2.1.3. Federated Transfer Learning

Federated transfer learning differs from both horizontal and vertical FL: the data sources typically overlap little in the feature space and little in the sample space [45]. In other words, the parties share few attributes and few individuals. This concept is illustrated in Figure 2. For instance, hospitals and pharmacies in different cities not only serve distinct individuals but also record largely different patient characteristics.
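Because the three categories differ only in which kind of overlap dominates, they can be told apart by comparing the sample spaces (patient IDs) and feature spaces (recorded attributes) of two parties. The following minimal sketch illustrates this rule of thumb; the function name `fl_category`, the Jaccard overlap measure, the 0.5 threshold, and the toy records are hypothetical choices for illustration, not part of the cited categorization.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap |a & b| / |a | b| between two sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def fl_category(ids_a: set, feats_a: set, ids_b: set, feats_b: set,
                threshold: float = 0.5) -> str:
    """Hypothetical rule of thumb: pick an FL category from two parties'
    sample-space overlap (patient IDs) and feature-space overlap (attributes)."""
    samples_shared = jaccard(ids_a, ids_b) >= threshold
    features_shared = jaccard(feats_a, feats_b) >= threshold
    if features_shared and not samples_shared:
        return "horizontal"   # same attributes, different patients
    if samples_shared and not features_shared:
        return "vertical"     # same patients, different attributes
    if not samples_shared and not features_shared:
        return "transfer"     # little overlap in either space
    return "overlapping"      # both spaces largely shared; none of the three


VITALS = {"gender", "blood_type", "pulse", "blood_pressure"}
PHARMACY = {"prescriptions", "refill_dates"}

# Two hospitals recording the same vitals for different patients.
print(fl_category({"p1", "p2", "p3"}, VITALS, {"p4", "p5", "p6"}, VITALS))  # horizontal
# A hospital and a pharmacy in the same city serving the same patients.
print(fl_category({"p1", "p2", "p3"}, VITALS, {"p1", "p2", "p3"}, PHARMACY))  # vertical
# A hospital and a pharmacy in different cities.
print(fl_category({"p1", "p2", "p3"}, VITALS, {"q1", "q2"}, PHARMACY))  # transfer
```

In practice the boundaries are fuzzier than a single threshold suggests; the sketch only makes the two axes of the categorization explicit.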

2.2. Federated Learning Steps

Figure 3 illustrates the federated learning model training process. In general, FL can be divided into four steps.

Local Optimization: In the context of FL, local optimization refers to the initial step where each participating client trains its local model using its own local data. This localized training allows the model to adapt to the unique characteristics of each client’s data. Local optimization is a crucial phase in FL as it ensures that local models are fine-tuned based on local data while preserving data privacy.

Global Aggregation: Global aggregation is the subsequent step in federated learning. In this phase, participating clients send their local model updates (not the raw data) to a central server. The central server aggregates these updates to update the global model. The global model is the result of collaboration among all clients, merging their localized knowledge to create a comprehensive model. Global aggregation represents the collaborative aspect of federated learning.

Personalization: Because client data and models are heterogeneous, personalization is a common step in federated learning. It adapts the global model to each participant, for example, by fine-tuning it on local data or adding client-specific components, so that the model reflects both shared knowledge and local characteristics. Personalization can improve the model's performance on heterogeneous data and accommodate the specific demands of different clients.

Test: The final phase of federated learning is testing, which evaluates the model's performance and verifies its effectiveness and robustness across various client scenarios. Together, personalization and testing validate the effectiveness of federated learning and help ensure that the global model serves diverse use cases.
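The four steps can be traced in a few lines of code. The following is a minimal sketch, assuming a toy linear-regression task with three synthetic clients, full-batch gradient descent as the local optimizer, FedAvg-style weighting by local sample size for aggregation, and a few extra local gradient steps as the personalization strategy; all data and hyperparameters are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
TRUE_W = np.array([2.0, 1.0])  # synthetic ground truth: y = 2x + 1 + noise


def make_client(n, shift):
    """Synthetic client whose inputs are shifted (a simple covariate shift)."""
    x = rng.normal(loc=shift, size=(n, 1))
    X = np.hstack([x, np.ones((n, 1))])  # feature + bias column
    y = X @ TRUE_W + 0.1 * rng.normal(size=n)
    return X, y


def local_gd(w, X, y, lr=0.05, epochs=20):
    """Local optimization: gradient descent on the client's mean squared error."""
    w = w.copy()
    for _ in range(epochs):
        w -= lr * 2 * X.T @ (X @ w - y) / len(y)
    return w


def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))


clients = [make_client(n, s) for n, s in [(50, -1.0), (200, 0.0), (100, 1.5)]]
sizes = np.array([len(y) for _, y in clients], dtype=float)

w_global = np.zeros(2)
for _ in range(10):  # communication rounds
    local_ws = [local_gd(w_global, X, y) for X, y in clients]   # step 1: local optimization
    w_global = np.average(local_ws, axis=0, weights=sizes)      # step 2: global aggregation

personalized = [local_gd(w_global, X, y, epochs=5) for X, y in clients]  # step 3: personalization
for m, ((X, y), w_p) in enumerate(zip(clients, personalized)):           # step 4: test
    print(f"client {m}: global MSE={mse(w_global, X, y):.4f}, "
          f"personalized MSE={mse(w_p, X, y):.4f}")
```

Because the synthetic clients share the same underlying relationship, personalization changes little here; the sketch is meant to show where each step sits in the loop, not to benchmark personalization.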

3. Uncertainty in Federated Learning

3.1. Uncertainties in Federated Learning Process

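Federated learning is a decentralized machine learning paradigm that enables multiple clients to collaboratively train a shared model without exchanging raw data. Formally, let $\mathcal{D} = \{\mathcal{D}_1, \dots, \mathcal{D}_M\}$ denote the collection of datasets distributed across $M$ clients, where each client $m$ holds local data $\mathcal{D}_m = \{(x_i^m, y_i^m)\}_{i=1}^{n_m}$ with $n_m$ samples. The objective of FL is to optimize a global model $f_w$ parameterized by weights $w$ over the union of all client datasets:

$$\min_{w}\; \mathcal{L}(w) = \sum_{m=1}^{M} \frac{n_m}{N}\, \mathcal{L}_m(w), \qquad \mathcal{L}_m(w) = \frac{1}{n_m} \sum_{i=1}^{n_m} \ell\big(f_w(x_i^m), y_i^m\big),$$

where $\mathcal{L}_m(w)$ denotes the empirical risk on client $m$, $\ell$ is a per-sample loss, and $N = \sum_{m=1}^{M} n_m$ is the total data size.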
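Because each local risk is weighted by $n_m/N$, the federated objective $\mathcal{L}(w) = \sum_m (n_m/N)\,\mathcal{L}_m(w)$ coincides with the empirical risk on the pooled data, even though no client shares its samples. The short numerical check below illustrates this, assuming squared-error loss and a linear model $f_w(x) = w^\top x$ on random synthetic data (illustrative choices only).

```python
import numpy as np

rng = np.random.default_rng(1)
sizes = [30, 120, 50]  # n_m for M = 3 clients
clients = [(rng.normal(size=(n, 3)), rng.normal(size=n)) for n in sizes]
w = rng.normal(size=3)  # an arbitrary parameter vector


def empirical_risk(w, X, y):
    """Mean squared error of the linear model f_w(x) = w^T x on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))


N = sum(sizes)
# Federated objective: size-weighted sum of per-client empirical risks L_m(w).
federated = sum(n / N * empirical_risk(w, X, y) for n, (X, y) in zip(sizes, clients))

# Centralized objective on the pooled data (never formed in real FL).
X_all = np.vstack([X for X, _ in clients])
y_all = np.concatenate([y for _, y in clients])
pooled = empirical_risk(w, X_all, y_all)
print(federated, pooled)  # equal up to floating-point rounding
```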

In practice, training a federated model consists of multiple steps, each associated with different sources of uncertainty:

1. The data acquisition step: the collection and annotation of local datasets at each client institution, often subject to noise, incompleteness, or privacy-driven perturbations.
2. The local optimization step: each client optimizes its model locally on private data using stochastic procedures such as gradient descent.
3. The global aggregation step: the central server aggregates model updates from heterogeneous clients to produce a global model.
4. The personalization step: the global model is adapted to local data distributions to handle heterogeneity across clients.
5. The testing and deployment step: the global or personalized models are evaluated and applied in real-world scenarios.

Each of these steps introduces potential sources of uncertainty that propagate through the entire FL process. We identify five main factors that critically affect uncertainty in federated learning:

- the quality and reliability of data acquisition at distributed clients;
- the randomness and instability of local training;
- the variance and communication noise in global aggregation;
- the distributional shifts introduced by personalization across heterogeneous clients; and
- the robustness of predictions under out-of-distribution or temporally shifted test data.

In the following, we describe each step of the FL pipeline in more detail, highlight the corresponding sources of uncertainty, and explain how these uncertainties are propagated and compounded. Finally, we introduce a taxonomy of uncertainty types in federated learning and discuss their implications for trustworthy medical AI.

3.1.1. Data Acquisition in Federated Medical Learning

In federated medical learning, the data acquisition step corresponds to the collection and annotation of patient information at distributed healthcare institutions. Each client $m$ holds its own dataset $\mathcal{D}_m = \{(x_i^m, y_i^m)\}_{i=1}^{n_m}$, where $x_i^m$ may represent a medical image (e.g., MRI, CT scan, histopathology slide) or electronic health record features, and $y_i^m$ is the corresponding label (e.g., diagnosis, segmentation mask, survival outcome). For a real-world clinical case $\omega \in \Omega$, the observed medical measurement can be expressed as

$$x \mid \omega \sim p_{x \mid \omega},$$

and the associated target annotation is given by

$$y \mid \omega \sim p_{y \mid \omega}.$$

The local dataset $\mathcal{D}_m$ thus consists of realizations sampled from the distribution of patients admitted to institution $m$. When aggregated across multiple hospitals, the global dataset $\mathcal{D}$ represents a highly heterogeneous and potentially noisy collection of medical records.

Two principal sources of uncertainty arise already at this stage. First, the coverage of the patient population space Ω is often incomplete. Individual hospitals may only address specific demographics, disease subtypes, or imaging protocols, leading to non-representative data. This creates distribution shifts across clients and reduces the generalizability of the global model.

Factor I: Variability in Medical Populations and Protocols

Each clinical site acquires data from its own patient cohort under specific imaging devices and acquisition protocols. This leads to distribution shifts in terms of demographics, disease prevalence, and scanner characteristics. When the distribution of a new patient sample differs from that of the training data, the global model is exposed to uncertainty, which can cause degraded prediction accuracy.


Second, medical data acquisition and annotation are prone to errors. Images may contain artifacts from patient movement or machine calibration; electronic health records may have missing values; and labels (e.g., tumor boundaries, pathology grading) may vary across annotators due to inter-observer variability. In addition, privacy regulations (e.g., HIPAA, GDPR) may require the removal or obfuscation of sensitive features, effectively introducing artificial noise.

Factor II: Noise, Missing Data, and Labeling Errors

Measurement errors, annotation inconsistencies, and privacy-driven data suppression introduce uncertainty in the training data. These imperfections reduce the reliability of local updates and propagate to the global model, increasing the predictive variance.


In summary, already at the data acquisition stage, federated medical learning inherits substantial uncertainty due to heterogeneous populations, diverse acquisition protocols, and noisy or incomplete annotations. These factors lay the foundation for non-IID distributions across clients, which propagate through subsequent steps of local training and global aggregation.
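In FL experiments, heterogeneity of this kind is often simulated by splitting a labeled dataset across clients with a Dirichlet distribution over class proportions. The sketch below shows one common variant of this protocol (it is not a method proposed in this survey); the function name, the concentration values β, and the three-class toy labels are illustrative assumptions. Smaller β produces more skewed, non-IID label distributions per client.

```python
import numpy as np


def dirichlet_label_split(labels, num_clients, beta, seed=0):
    """Assign sample indices to clients; for each class, the share going to
    each client is drawn from Dir(beta, ..., beta)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_indices = [[] for _ in range(num_clients)]
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        proportions = rng.dirichlet(np.full(num_clients, beta))
        # Cut points that split this class's (shuffled) indices among clients.
        cuts = (np.cumsum(proportions)[:-1] * len(idx)).astype(int)
        for m, part in enumerate(np.split(idx, cuts)):
            client_indices[m].extend(part.tolist())
    return [np.array(ix, dtype=int) for ix in client_indices]


labels = np.repeat([0, 1, 2], 100)  # e.g., 3 diagnostic classes, 100 cases each
for beta in (100.0, 0.5):
    parts = dirichlet_label_split(labels, num_clients=4, beta=beta)
    counts = [np.bincount(labels[p], minlength=3).tolist() for p in parts]
    print(f"beta={beta}: per-client class counts {counts}")
```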

3.1.2. Local Optimization in Federated Medical Learning

In federated medical learning, each client (e.g., a hospital) trains its model locally on its private dataset $\mathcal{D}_m$. This process can be formalized as learning local parameters $w_m$ by minimizing the empirical risk:

$$w_m = \arg\min_{w}\; \mathcal{L}_m(w \mid s) = \arg\min_{w}\; \frac{1}{n_m} \sum_{i=1}^{n_m} \ell\big(f_w(x_i^m \mid s), y_i^m\big),$$

where $s$ denotes the model configuration (e.g., network architecture, optimizer choice). The training of $w_m$ is stochastic, influenced by random initialization, mini-batch sampling, and optimizer dynamics. Moreover, local datasets in medicine are often small, imbalanced, or noisy, leading to unstable convergence. For instance, a rural hospital may only collect cases from certain demographics, whereas a specialized center may only provide data from severe cases, producing very different local optima $w_m$. This variability across clients propagates uncertainty into the global model.

Factor III: Randomness and Bias in Local Training

Uncertainty arises due to stochastic optimization and the limited, biased, or imbalanced nature of local medical datasets. Even with the same model configuration, two hospitals may produce highly divergent local updates, making the reliability of local models inherently uncertain.

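Factor III can be observed even in a toy setting: training the same configuration $s$ on the same small local dataset with different random seeds (which change the initialization and the mini-batch order) yields different local parameters $w_m$. A minimal sketch, assuming a linear model trained by mini-batch SGD on 20 synthetic samples; all values are illustrative.

```python
import numpy as np


def train_local(X, y, seed, lr=0.05, epochs=5, batch=4):
    """Mini-batch SGD on squared error; `seed` fixes initialization and batch order."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])  # random initialization
    for _ in range(epochs):
        order = rng.permutation(len(y))          # random mini-batch sampling
        for start in range(0, len(y), batch):
            b = order[start:start + batch]
            grad = 2 * X[b].T @ (X[b] @ w - y[b]) / len(b)
            w -= lr * grad
    return w


# One small synthetic local dataset (20 cases), shared by all runs.
data_rng = np.random.default_rng(42)
X = np.hstack([data_rng.normal(size=(20, 1)), np.ones((20, 1))])
y = X @ np.array([2.0, 1.0]) + 0.5 * data_rng.normal(size=20)

# Same data and configuration, ten different seeds.
runs = np.array([train_local(X, y, seed) for seed in range(10)])
print("mean w_m:", runs.mean(axis=0).round(3))
print("std across seeds:", runs.std(axis=0).round(3))
```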

3.1.3. Global Aggregation in Federated Medical Learning

Once the local updates $\{w_m\}_{m=1}^{M}$ are obtained, the server aggregates them to form the global model $w_g$. The most common approach is weighted averaging:

$$w_g = \sum_{m=1}^{M} \frac{n_m}{N}\, w_m,$$

with $N = \sum_{m=1}^{M} n_m$, so that the aggregation weights sum to one. However, aggregation introduces additional uncertainty. First, heterogeneity among local updates leads to variance in the aggregated parameters. Second, communication channels are imperfect: packet loss, quantization, and compression can distort updates during transmission. Third, proxy datasets sometimes used to stabilize aggregation may not reflect the true distribution, further biasing the global model. Consequently, even if each local model is well trained, the global update may be noisy or unstable.

Factor IV: Variance and Noise in Global Aggregation

Uncertainty is introduced when combining heterogeneous and potentially conflicting local updates. This is further amplified by communication noise or the use of proxy datasets, which can distort the aggregation and reduce the reliability of the global model.

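The aggregation rule $w_g = \sum_m (n_m/N)\, w_m$ and two of the uncertainty sources above can be sketched as follows: a weighted spread of the local models around the aggregate (heterogeneity) and a uniform quantizer standing in for a lossy communication channel. The client models, the 4-bit quantizer, and its range are hypothetical choices, not a specific compression protocol.

```python
import numpy as np


def fedavg(local_ws, sizes):
    """Weighted average w_g = sum_m (n_m / N) * w_m."""
    weights = np.asarray(sizes, dtype=float) / np.sum(sizes)
    return weights @ np.asarray(local_ws, dtype=float)


def aggregation_spread(local_ws, sizes):
    """Size-weighted per-parameter variance of local models around w_g."""
    W = np.asarray(local_ws, dtype=float)
    weights = np.asarray(sizes, dtype=float) / np.sum(sizes)
    return weights @ (W - fedavg(W, sizes)) ** 2


def quantize(w, num_bits=4, w_max=4.0):
    """Uniform quantizer with 2**num_bits levels on [-w_max, w_max] (lossy channel)."""
    levels = 2 ** num_bits - 1
    scaled = (np.clip(w, -w_max, w_max) + w_max) / (2 * w_max)  # map to [0, 1]
    return np.round(scaled * levels) / levels * 2 * w_max - w_max


local_ws = np.array([[2.1, 0.9], [1.7, 1.4], [2.6, 0.5]])  # hypothetical client models
sizes = [50, 200, 100]

w_clean = fedavg(local_ws, sizes)
w_noisy = fedavg([quantize(w) for w in local_ws], sizes)
print("w_g:", w_clean)
print("spread of local models:", aggregation_spread(local_ws, sizes))
print("distortion from quantized transmission:", np.abs(w_noisy - w_clean))
```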

3.1.4. Personalization in Federated Medical Learning

In medical FL, the global model $w_g$ obtained after aggregation may not perform optimally on all clients due to data heterogeneity. Personalization aims to adapt $w_g$ to a client's local distribution, yielding $w_m^{p}$. Formally, this can be seen as a fine-tuning process:

$$w_m^{p} = \arg\min_{w}\; \mathcal{L}_m(w; \mathcal{D}_m), \qquad \text{with the optimization initialized at } w = w_g,$$

where $\mathcal{D}_m$ is the client's local dataset. However, personalization itself introduces uncertainty. Different hospitals may adapt $w_g$ in inconsistent ways depending on their data biases, leading to variance among the resulting personalized models. For example, a model fine-tuned on pediatric data may deviate significantly from one adapted to geriatric populations, and both may diverge from the global model. This tension between global consistency and local specialization represents a unique uncertainty source in FL.

Factor V: Uncertainty from Personalized Adaptation

While personalization improves local accuracy, it introduces uncertainty by creating distributional divergence across clients. This may enhance performance in one medical center but undermine generalization across the federation.

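The divergence described above can be reproduced with two synthetic sites whose input-output relationships differ (a stand-in for, e.g., pediatric versus geriatric cohorts). This minimal sketch assumes a linear model, a fixed vector as a stand-in for the aggregated global model $w_g$, and plain gradient-descent fine-tuning as the personalization method; both personalized models move away from $w_g$, and each fits its own site far better than the other site.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_site(slope, n=200):
    """Synthetic site where y = slope * x + noise; the slope differs across sites."""
    x = rng.normal(size=n)
    X = np.column_stack([x, np.ones(n)])
    y = slope * x + 0.1 * rng.normal(size=n)
    return X, y


def fine_tune(w_init, X, y, lr=0.1, steps=50):
    """Personalization: gradient descent on the local MSE, starting from w_g."""
    w = w_init.copy()
    for _ in range(steps):
        w -= lr * 2 * X.T @ (X @ w - y) / len(y)
    return w


def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))


site_a, site_b = make_site(slope=1.0), make_site(slope=3.0)
w_g = np.array([2.0, 0.0])  # stand-in for the aggregated global model
w_a, w_b = fine_tune(w_g, *site_a), fine_tune(w_g, *site_b)

print("distance to w_g:", np.linalg.norm(w_a - w_g), np.linalg.norm(w_b - w_g))
print("site-A model, MSE on A vs. B:", mse(w_a, *site_a), mse(w_a, *site_b))
```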

3.1.5. Testing and Deployment in Federated Medical Learning

After training and personalization, federated models are evaluated and deployed in clinical practice. At this stage, uncertainty arises from several factors. First, models may encounter out-of-distribution (OOD) inputs, such as new disease variants or imaging protocols not seen during training. Second, temporal shifts occur as patient populations and clinical practices evolve over time. Third, the prediction confidence itself may be miscalibrated, with models appearing overconfident in cases where they are unreliable.

Formally, for a new test input $x^*$, the predictive distribution depends on whether the deployed model is the global model or a personalized local model. For the global model, we have

$$p(y^* \mid x^*, w_g),$$

whereas, for a personalized client $m$, the inference is based on

$$p(y^* \mid x^*, w_m^{p}).$$

In both cases, the predictive distribution may deviate strongly if $x^*$ lies outside the training support or if the model has not been calibrated.

In a medical context, these uncertainties directly affect patient safety: a miscalibrated cancer detection model that confidently misclassifies an OOD sample poses critical risks. Therefore, reliable UQ during deployment is essential for clinical trust.

Factor VI: Prediction and Deployment Uncertainty

Uncertainty at deployment arises from out-of-distribution samples, temporal distribution shifts, and miscalibrated confidence estimates. For personalized models, this uncertainty is further affected by the adaptation from $w_g$ to $w_m^{p}$. Without principled UQ, clinicians may misinterpret unreliable predictions, reducing trust in federated models.

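Miscalibration is commonly quantified with the expected calibration error (ECE), the calibration metric also mentioned in Section 3.4. A minimal sketch with equal-width confidence bins, assuming top-class confidences in (0, 1] and 10 bins (a common but arbitrary choice):

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: group predictions into equal-width confidence bins and average
    |accuracy - mean confidence| over bins, weighted by each bin's sample share."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (confidences > lo) & (confidences <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap
    return float(ece)


# Overconfident model: claims 95% confidence but is right only 6 times out of 10.
overconfident = expected_calibration_error([0.95] * 10, [1] * 6 + [0] * 4)
# Calibrated model: claims 70% confidence and is right 7 times out of 10.
calibrated = expected_calibration_error([0.7] * 10, [1] * 7 + [0] * 3)
print(round(overconfident, 3), round(calibrated, 3))  # 0.35 0.0
```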

3.2. Uncertainty Categories in Federated Learning

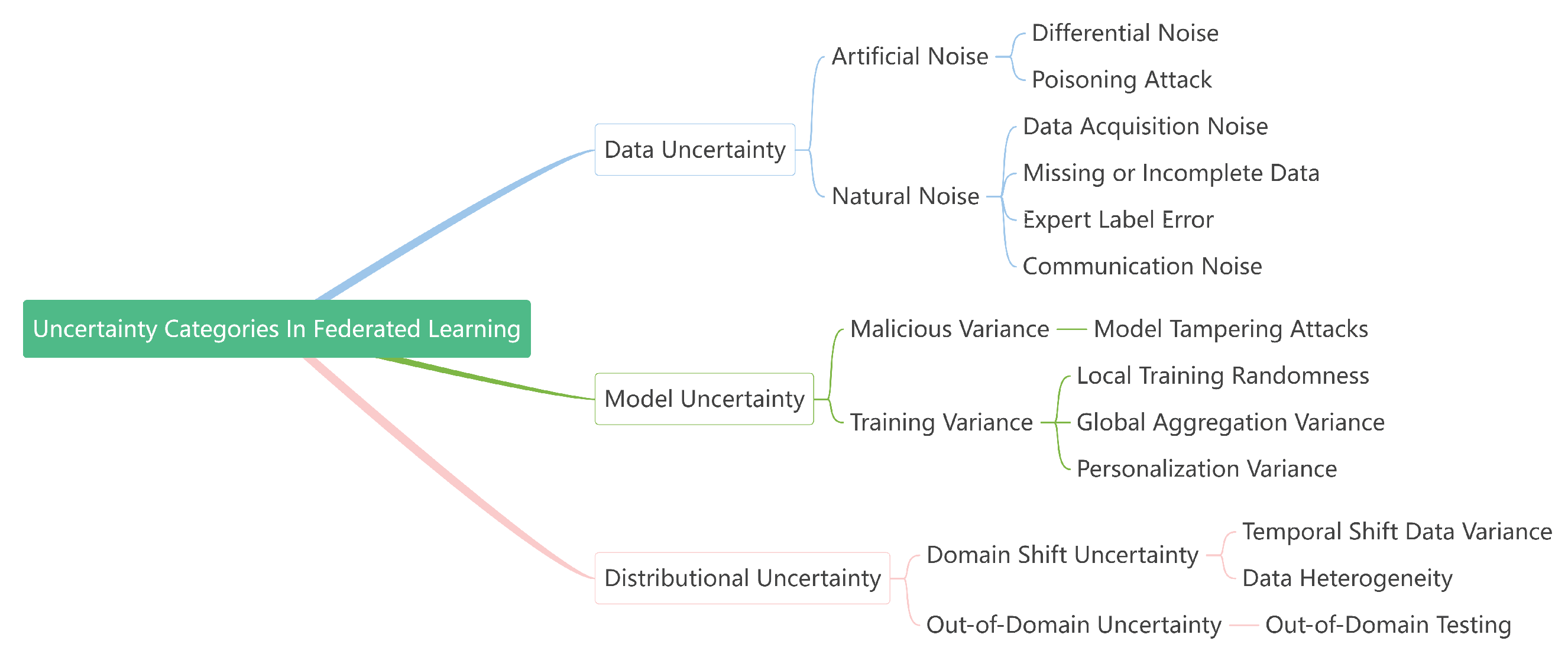

In summary, uncertainty in FL can be categorized into three types: data uncertainty, model uncertainty, and distributional uncertainty, as illustrated in Figure 4.

Data uncertainty. Data uncertainty in FL refers to instability introduced by noisy, incomplete, or diverse data across distributed clients. In the medical domain, this may result from imaging artifacts, annotation errors, or missing patient records. Moreover, privacy-preserving techniques such as differential privacy deliberately inject random noise into local updates, which further increases the predictive variability. In distributed settings, adversarial or malicious clients can also introduce corrupted updates, amplifying uncertainties. Thus, data uncertainty encompasses noise in data acquisition, the incompleteness of annotations, communication noise, and privacy-induced perturbations.

Model uncertainty. Model uncertainty arises from limitations in the construction and training of federated models. It reflects the instability of model parameters $w$ under heterogeneous and noisy data distributions and is particularly relevant in medical FL, where data are often scarce and imbalanced. In addition, model uncertainty can be used to quantify the effects of model tampering or adversarial manipulation, which are realistic threats in decentralized environments. From a generalization perspective, measuring model uncertainty helps detect overfitting and supports reliable performance on unseen clinical data.

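Formally, the Bayesian framework provides a principled way to reason about model and data uncertainty [46]. Model uncertainty is captured as a distribution over parameters $w$, while data uncertainty is captured as a distribution over predictions $y^*$ given parameters $w$:

$$p(y^* \mid x^*, \mathcal{D}) = \int \underbrace{p(y^* \mid x^*, w)}_{\text{data}}\; \underbrace{p(w \mid \mathcal{D})}_{\text{model}}\; dw.$$

Here, $p(w \mid \mathcal{D})$ is the posterior over the parameters and is generally intractable. Ensemble methods approximate this posterior by averaging over multiple models [47], while Bayesian inference applies Bayes' theorem [48]:

$$p(w \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid w)\, p(w)}{p(\mathcal{D})},$$

where $p(w)$ denotes the prior and $p(\mathcal{D} \mid w)$ is the likelihood. Loss functions such as cross-entropy or the mean squared error can be derived from log-likelihood maximization [49]: cross-entropy corresponds to a categorical likelihood, and the mean squared error to a Gaussian likelihood with fixed variance. Although exact inference is infeasible, variational and Monte Carlo approximations make Bayesian reasoning practical in FL.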
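In practice, the integral $p(y^* \mid x^*, \mathcal{D}) = \int p(y^* \mid x^*, w)\, p(w \mid \mathcal{D})\, dw$ is approximated by Monte Carlo: average the predictive distributions of $S$ parameter samples, drawn from an approximate posterior or taken from $S$ ensemble members. The minimal sketch below does this for a $K$-class problem and additionally applies the widely used entropy-based split of total uncertainty into an expected-entropy (data) term and a mutual-information (model) term; this decomposition is a common convention rather than one prescribed by the works cited above.

```python
import numpy as np


def entropy(p, eps=1e-12):
    """Shannon entropy (in nats) along the last axis."""
    return -np.sum(p * np.log(p + eps), axis=-1)


def predictive_uncertainty(member_probs):
    """Monte Carlo predictive distribution and entropy-based decomposition.

    member_probs: shape (S, K); row s holds p(y | x*, w_s) for K classes,
    where w_s are posterior samples or ensemble members.
    """
    p = np.asarray(member_probs, dtype=float)
    p_mean = p.mean(axis=0)                # Monte Carlo estimate of the integral
    total = float(entropy(p_mean))         # total predictive uncertainty
    aleatoric = float(entropy(p).mean())   # expected entropy: data uncertainty
    epistemic = total - aleatoric          # mutual information: model uncertainty
    return p_mean, total, aleatoric, epistemic


# Members agree that the case is ambiguous: mostly data uncertainty.
print(predictive_uncertainty([[0.5, 0.5]] * 3)[1:])
# Members confidently disagree: mostly model uncertainty.
print(predictive_uncertainty([[0.99, 0.01], [0.01, 0.99]])[1:])
```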

Distributional uncertainty. As discussed in Section 3.1, distributional uncertainty refers to the unpredictability arising from differences in underlying data distributions. It becomes evident when test or deployment distributions differ from the training distribution, for example, when they shift over time. Formally, distributional uncertainty can be modeled by introducing an intermediate latent variable $\mu$ describing the predictive categorical distribution:

$$p(y^* \mid x^*, \mathcal{D}) = \iint \underbrace{p(y^* \mid \mu)}_{\text{data}}\; \underbrace{p(\mu \mid x^*, w)}_{\text{distributional}}\; \underbrace{p(w \mid \mathcal{D})}_{\text{model}}\; d\mu\, dw.$$

Here, $p(\mu \mid x^*, w)$ captures uncertainty over the categorical distribution itself (e.g., modeled via a Dirichlet prior on the softmax output [50]). This formulation highlights the hierarchy: model uncertainty affects distributional uncertainty, which in turn affects data uncertainty.
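When $p(\mu \mid x^*, w)$ is a Dirichlet distribution whose concentration parameters $\alpha$ are produced by the network (as in prior networks or evidential models), simple closed-form summaries follow. The sketch below is a minimal illustration under that assumption; the vacuity score $u = K/\alpha_0$ is the one used in evidential deep learning and is only one of several possible distributional-uncertainty measures.

```python
import numpy as np


def dirichlet_summary(alpha):
    """Summaries of a Dirichlet p(mu | x*, w) with concentration alpha (shape K,).

    Returns the expected class probabilities E[mu] = alpha / alpha_0, the total
    concentration alpha_0, and the vacuity u = K / alpha_0 (u = 1 for a flat
    Dirichlet with all alpha_k = 1; u shrinks toward 0 as evidence grows).
    """
    alpha = np.asarray(alpha, dtype=float)
    alpha0 = alpha.sum()
    return alpha / alpha0, alpha0, len(alpha) / alpha0


# Sharp Dirichlet: the kind of output targeted for in-distribution inputs.
print(dirichlet_summary([50.0, 1.0, 1.0]))
# Flat Dirichlet: the kind of output targeted for OOD inputs.
print(dirichlet_summary([1.0, 1.0, 1.0]))
```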

Although distributional uncertainty is relatively underexplored in centralized learning, it becomes a dominant challenge in FL due to the ubiquity of heterogeneity. Ignoring this uncertainty can significantly degrade the testing performance across clients with diverse and evolving data distributions.

3.3. Comparison with Centralized Learning

While the above analysis highlights the sources of uncertainty within the federated learning pipeline, it is also useful to contrast these with those in conventional centralized training. In centralized learning, all data are pooled into a single repository, which reduces heterogeneity but does not eliminate intrinsic data noise or model limitations. By contrast, federated learning introduces additional sources of uncertainty due to client-specific biases, non-IID distributions, aggregation noise, and personalization divergence.

Table 3 summarizes the main uncertainty types, together with commonly used evaluation metrics and the typical performance shifts observed when moving from centralized to federated settings.

3.4. Privacy-Preserving Mechanisms, Uncertainty, and Security Trade-Offs

Privacy-preserving mechanisms are indispensable in federated medical learning, yet they inevitably affect uncertainty estimation and model reliability. Two commonly used techniques are differential privacy (DP) and homomorphic encryption (HE).

Differential Privacy (DP). DP protects patient-level information by adding random noise to model updates before transmission. This improves confidentiality but directly amplifies data uncertainty. For example, in federated medical imaging tasks, strong privacy budgets (i.e., small values of the privacy parameter ε) have been shown to increase calibration errors (higher ECE and NLL) and degrade out-of-distribution (OOD) detection performance, as the injected noise obscures informative gradients. Thus, while DP provides rigorous privacy guarantees, it introduces a quantifiable trade-off between confidentiality and reliable UQ.
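A common way to realize DP in FL, in the style of DP-FedAvg, is to clip each client update to a fixed L2 norm and add Gaussian noise calibrated to that norm. The sketch below is a minimal illustration under stated assumptions: noise is added once to the aggregate by a trusted server, `clip_norm` and `noise_multiplier` are illustrative parameters, and privacy accounting (converting the noise level into ε) is omitted.

```python
import numpy as np

def clip_update(update: np.ndarray, clip_norm: float) -> np.ndarray:
    """Scale an update so that its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(update)
    return update * min(1.0, clip_norm / (norm + 1e-12))

def dp_aggregate(updates, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Average clipped client updates after adding Gaussian noise to their sum.

    One client can change the clipped sum by at most clip_norm (its sensitivity),
    so the noise standard deviation is noise_multiplier * clip_norm.
    """
    rng = np.random.default_rng() if rng is None else rng
    clipped = [clip_update(u, clip_norm) for u in updates]
    total = np.sum(clipped, axis=0)
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=total.shape)
    return (total + noise) / len(updates)
```

Raising `noise_multiplier` strengthens privacy but widens the spread of the aggregated update, which is the mechanism behind the calibration degradation described above.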

Homomorphic Encryption (HE). HE enables secure aggregation over encrypted updates, ensuring that the server never sees individual client updates in plaintext. Unlike DP, HE does not inject stochastic noise, but it can introduce additional model uncertainty through approximation errors in encrypted arithmetic, computational overhead, and increased communication latency. These factors can destabilize global optimization, thereby affecting the consistency of confidence estimates across clients.
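One concrete source of approximation error is encoding: integer-based additively homomorphic schemes such as Paillier operate on integers modulo a large number, so real-valued updates must first be scaled and rounded to fixed-point integers. The sketch below simulates only this encoding step in plaintext (no encryption is performed), with a hypothetical scale factor and modulus, to show that the decoded sum deviates from the true sum by a bounded rounding error.

```python
def encode(x: float, scale: int, modulus: int) -> int:
    """Map a real value to a fixed-point integer in Z_modulus (negatives wrap around)."""
    return round(x * scale) % modulus

def decode(v: int, scale: int, modulus: int) -> float:
    """Invert encode, reading the upper half of Z_modulus as negative numbers."""
    if v > modulus // 2:
        v -= modulus
    return v / scale

def encoded_sum(values, scale=2**16, modulus=2**64):
    """Sum values in the integer domain, as an additive HE scheme would over ciphertexts."""
    acc = 0
    for x in values:
        acc = (acc + encode(x, scale, modulus)) % modulus
    return decode(acc, scale, modulus)
```

Each value contributes a rounding error of at most 1/(2·scale), so the aggregate error grows with the number of summed terms and shrinks with a larger scale, at the cost of larger plaintexts.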

Attacks and UQ Mitigation. Despite DP and HE, adversarial threats such as model inversion attacks remain possible, where an attacker reconstructs private patient information from gradients or model outputs. UQ provides a complementary defense: highly uncertain predictions can signal potential leakages or adversarial manipulation, enabling early detection and mitigation. For example, uncertainty-aware anomaly detection can identify abnormal gradient patterns indicative of inversion or reconstruction attempts.

Implications. Overall, DP and HE strengthen security but introduce new challenges for uncertainty-aware federated learning. Balancing these trade-offs requires adaptive privacy budgets, hybrid cryptographic protocols, and the integration of UQ methods not only for reliability but also as an auxiliary defense mechanism against privacy attacks in medical AI.

3.5. Uncertainty Quantification Objective in Federated Learning

3.5.1. Security

In this context, security refers to protecting federated learning systems from adversarial threats and failures, following the well-established CIA triad: confidentiality, integrity, and availability.

Confidentiality: Ensuring that the privacy of each participant’s raw data and model updates is preserved during the federated learning process. This involves techniques such as secure aggregation, homomorphic encryption, and differential privacy to prevent information leakage or unauthorized access during communication.

Integrity: Ensuring the correctness and trustworthiness of model updates in the presence of potentially malicious or faulty clients. This includes defending against poisoning and backdoor attacks, using Byzantine-resilient aggregation, anomaly detection, and update auditing to maintain model reliability.

Availability: Maintaining the ability of the federated system to operate and serve models even under failures or attacks (e.g., denial-of-service, stragglers, or communication faults). This can be achieved with fault-tolerant orchestration, asynchronous protocols, and robust communication mechanisms.
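Byzantine-resilient aggregation, listed under integrity above, can be illustrated by replacing the mean with a coordinate-wise median or trimmed mean. The sketch below is a minimal NumPy version assuming that client updates are flattened vectors of equal length stacked into one array; `trim_ratio` is an illustrative parameter.

```python
import numpy as np

def coordinate_median(updates: np.ndarray) -> np.ndarray:
    """Coordinate-wise median over clients; updates has shape (n_clients, dim)."""
    return np.median(updates, axis=0)

def trimmed_mean(updates: np.ndarray, trim_ratio: float = 0.1) -> np.ndarray:
    """Per coordinate, drop the k largest and k smallest values, then average the rest."""
    n = updates.shape[0]
    k = int(n * trim_ratio)
    if 2 * k >= n:
        raise ValueError("trim_ratio too large for the number of clients")
    sorted_updates = np.sort(updates, axis=0)
    return sorted_updates[k:n - k].mean(axis=0)
```

A single malicious client sending an extreme update can shift the plain mean arbitrarily, whereas both rules above stay close to the honest clients' values.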

3.5.2. Robustness

Robust Communication: In the distributed setting of federated learning, training typically requires many rounds of communication, each of which may introduce noise and errors. It is crucial to develop mechanisms that handle disconnections or failures of participating parties so that learning can continue without interruption. Such mechanisms typically model communication noise and errors explicitly.

Non-Typical Data: Ensuring that the federated model remains effective and accurate on non-typical data, such as noisy or extreme samples, thereby reducing the risk of incorrect predictions on such inputs.

Missing or Incomplete Data: Because privacy requirements restrict data sharing across institutions, federated models often need to cope with missing or incomplete data.

3.5.3. Generalization

Non-IID Data: The heterogeneity of federated learning environments often violates the i.i.d. assumption on which traditional deep learning relies. Coping with this situation usually involves modeling distributional uncertainty.

Avoiding Overfitting: Because federated learning repeatedly aggregates models trained on distributed, privately held data, the global model must not overfit the data of any single participant; avoiding such overfitting helps it perform well on new data.

3.5.4. Availability

Efficiency: Ensuring that model updates and the communication overhead in federated learning are controlled and efficient.

Performance: Federated learning implicitly increases the overall complexity of the deep learning model, and effectively tuning the additional global aggregation and personalization weights is crucial for performance.

4. Bayesian Federated Learning (BFL)

This section summarizes relevant studies on Bayesian federated learning (BFL), one of the primary categories of uncertainty-aware federated learning. We review different approaches within Bayesian learning (BL) and highlight their respective advantages. BFL incorporates BL principles into FL, leveraging the inherent advantages of BL to address FL challenges.

4.1. BFL Preliminaries

BFL is a promising solution to the challenges encountered in FL. Integrating BL principles into FL frameworks enhances model robustness and can improve performance, especially when data are limited. BFL explicitly addresses uncertainty and process-oriented challenges and offers improved model interpretability. These advances benefit applications such as financial forecasting, disease modeling, and disaster prediction [51].

Although a standardized definition of BFL is currently lacking, BFL can be viewed as combining the mechanisms of FL and BL. Unlike conventional FL, a BFL system seeks to obtain not only point estimates of model parameters but also their full posterior distributions. Specifically, let $\mathcal{D} = \{\mathcal{D}_1, \dots, \mathcal{D}_M\}$ denote all client datasets. BFL aims to learn the global posterior $p(\boldsymbol{\theta} \mid \mathcal{D})$ at the server and the local posterior $p(\boldsymbol{\theta}_m \mid \mathcal{D}_m)$ on each client $m$ based on its local dataset $\mathcal{D}_m$. A shared prior distribution $p(\boldsymbol{\theta})$ can be placed on all model parameters to enable Bayesian inference.

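The posterior of the global model parameters is

$$p(\boldsymbol{\theta} \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \boldsymbol{\theta})\, p(\boldsymbol{\theta})}{p(\mathcal{D})} \propto p(\boldsymbol{\theta}) \prod_{m=1}^{M} p(\mathcal{D}_m \mid \boldsymbol{\theta}),$$

where the factorization assumes that client datasets are conditionally independent given $\boldsymbol{\theta}$. Similarly, the posterior of the local parameters on client $m$ is

$$p(\boldsymbol{\theta}_m \mid \mathcal{D}_m) = \frac{p(\mathcal{D}_m \mid \boldsymbol{\theta}_m)\, p(\boldsymbol{\theta}_m)}{p(\mathcal{D}_m)}.$$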
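In non-personalized BFL, the local parameters $\boldsymbol{\theta}_m$ are regularized to be close to the global parameters $\boldsymbol{\theta}$. A common federated optimization objective is

$$\min_{\boldsymbol{\theta},\, \{\boldsymbol{\theta}_m\}_{m=1}^{M}} \; \sum_{m=1}^{M} \left[ \mathcal{L}_m(\boldsymbol{\theta}_m) + \frac{\lambda}{2} \left\lVert \boldsymbol{\theta}_m - \boldsymbol{\theta} \right\rVert_2^2 \right],$$

where $\mathcal{L}_m(\boldsymbol{\theta}_m)$ is the local training loss and $\lambda > 0$ controls the regularization strength.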
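The effect of the regularization strength can be seen for a single client with a least-squares local loss, for which the regularized local problem has a closed-form solution. The loss, the function name `proximal_local_solution`, and the parameter `lam` (the regularization strength) are illustrative assumptions, not part of any specific BFL method.

```python
import numpy as np

def proximal_local_solution(A, b, theta_global, lam):
    """Minimize 0.5*||A theta - b||^2 + 0.5*lam*||theta - theta_global||^2.

    Setting the gradient to zero gives (A^T A + lam I) theta = A^T b + lam * theta_global.
    """
    d = A.shape[1]
    lhs = A.T @ A + lam * np.eye(d)
    rhs = A.T @ b + lam * theta_global
    return np.linalg.solve(lhs, rhs)
```

With `lam = 0` the client fits its own data; as `lam` grows, the local solution is pulled toward the global parameters.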

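The true posteriors $p(\boldsymbol{\theta} \mid \mathcal{D})$ and $p(\boldsymbol{\theta}_m \mid \mathcal{D}_m)$ are intractable, so BFL typically employs variational inference (VI) to approximate them with tractable variational distributions $q(\boldsymbol{\theta})$ and $q_m(\boldsymbol{\theta}_m)$. This leads to the following evidence lower bound (ELBO) objective:

$$\mathcal{L}_{\mathrm{ELBO}}(q) = \mathbb{E}_{q(\boldsymbol{\theta})}\!\left[\log p(\mathcal{D} \mid \boldsymbol{\theta})\right] - \mathrm{KL}\!\left(q(\boldsymbol{\theta}) \,\|\, p(\boldsymbol{\theta})\right) = \sum_{m=1}^{M} \mathbb{E}_{q(\boldsymbol{\theta})}\!\left[\log p(\mathcal{D}_m \mid \boldsymbol{\theta})\right] - \mathrm{KL}\!\left(q(\boldsymbol{\theta}) \,\|\, p(\boldsymbol{\theta})\right).$$

Because $\log p(\mathcal{D}) = \mathcal{L}_{\mathrm{ELBO}}(q) + \mathrm{KL}\!\left(q(\boldsymbol{\theta}) \,\|\, p(\boldsymbol{\theta} \mid \mathcal{D})\right)$, maximizing the ELBO is equivalent to minimizing the Kullback–Leibler (KL) divergence between the variational and true posteriors, which forms the core objective of BFL. This probabilistic formulation distinguishes BFL from standard FL by enabling uncertainty-aware global aggregation and personalized local adaptation.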
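For a mean-field Gaussian variational family and a standard normal prior (common but not mandatory choices), the KL term of the ELBO has a closed form, and the expected log-likelihood can be estimated by Monte Carlo using the reparameterization θ = μ + σ ⊙ ε. The sketch below assumes a hypothetical `log_lik` callable that returns the log-likelihood of one dataset for a parameter vector.

```python
import numpy as np

def kl_diag_gaussian_to_std_normal(mu, log_sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    sigma2 = np.exp(2.0 * log_sigma)
    return 0.5 * np.sum(sigma2 + mu**2 - 1.0 - 2.0 * log_sigma)

def elbo_estimate(mu, log_sigma, log_lik, n_samples=64, rng=None):
    """Monte Carlo ELBO: E_q[log p(D | theta)] - KL(q || p) with reparameterized samples."""
    rng = np.random.default_rng() if rng is None else rng
    sigma = np.exp(log_sigma)
    eps = rng.standard_normal((n_samples, mu.size))
    thetas = mu + sigma * eps  # theta = mu + sigma * eps, one row per sample
    expected_ll = np.mean([log_lik(t) for t in thetas])
    return expected_ll - kl_diag_gaussian_to_std_normal(mu, log_sigma)
```

Because the log-likelihood of all data is the sum of per-client log-likelihoods, the expected log-likelihood term splits into per-client estimates, which is the structure that federated VI exploits.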

BFL tasks and methods can be classified from both Bayesian and federated perspectives. From the Bayesian side, BFL includes client-side methods such as federated Bayesian privacy (FBP), Bayesian neural networks (BNNs) for local models, Bayesian optimization (BO) for local training, and Bayesian non-parametric (BNP) models for dynamic FL. Server-side BFL methods include Bayesian model ensemble (BME), Bayesian posterior decomposition (BPD), and Bayesian continual learning (BCL) for the aggregation of local updates into a global posterior. From the FL perspective, BFL methods can also be categorized as heterogeneous, hierarchical, dynamic, personalized, or hybrid variants.

4.1.1. Approximation Methods for Federated Bayesian Inference

The core of BFL involves computing the global posterior distribution $p(\boldsymbol{\theta} \mid \mathcal{D})$, where $\mathcal{D} = \bigcup_{m=1}^{M} \mathcal{D}_m$ is the union of all local datasets. This is computationally intractable for complex models, so two main approximation approaches are commonly used, each facing distinct challenges in FL.

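Variational Inference (VI): This method frames inference as an optimization problem. It approximates the true posterior $p(\boldsymbol{\theta} \mid \mathcal{D})$ with a simpler distribution $q_{\boldsymbol{\phi}}(\boldsymbol{\theta})$ by minimizing the KL divergence:

$$\boldsymbol{\phi}^{*} = \arg\min_{\boldsymbol{\phi}} \, \mathrm{KL}\!\left(q_{\boldsymbol{\phi}}(\boldsymbol{\theta}) \,\|\, p(\boldsymbol{\theta} \mid \mathcal{D})\right).$$

In FL, this becomes a distributed optimization problem in which clients collaborate to learn the global variational parameters $\boldsymbol{\phi}$.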

Markov Chain Monte Carlo (MCMC): This method approximates the posterior by generating samples. MCMC methods are asymptotically exact and often highly accurate; however, they are iterative and require access to the full dataset for each update, which poses a significant challenge in FL. For example, a Metropolis–Hastings step requires evaluating the likelihood

$$p(\mathcal{D} \mid \boldsymbol{\theta}') = \prod_{m=1}^{M} p(\mathcal{D}_m \mid \boldsymbol{\theta}')$$

for a proposed parameter $\boldsymbol{\theta}'$, whose factors are distributed across clients.
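The following sketch shows why each Metropolis–Hastings step needs every client: the log-likelihood of a proposal is the sum of per-client log-likelihoods. Clients are simulated as local datasets within one process, and the model (a scalar mean with unit-variance Gaussian observations and a N(0, 10²) prior) and all function names are illustrative assumptions.

```python
import math
import random

def client_log_lik(theta, data):
    """Log-likelihood of one client's data under N(theta, 1), up to a constant."""
    return -0.5 * sum((x - theta) ** 2 for x in data)

def log_prior(theta, prior_sd=10.0):
    """Log density of N(0, prior_sd^2), up to a constant."""
    return -0.5 * (theta / prior_sd) ** 2

def federated_mh(client_data, n_steps=5000, step=0.5, seed=0):
    """Random-walk Metropolis-Hastings in which every step queries all clients."""
    rng = random.Random(seed)
    theta = 0.0
    # Global log-posterior = log prior + sum of client log-likelihoods.
    current = log_prior(theta) + sum(client_log_lik(theta, d) for d in client_data)
    samples = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step)
        # In a deployment, this sum would cost one communication round per step.
        proposed = log_prior(proposal) + sum(client_log_lik(proposal, d) for d in client_data)
        if rng.random() < math.exp(min(0.0, proposed - current)):
            theta, current = proposal, proposed
        samples.append(theta)
    return samples
```

The per-step communication implied by the inner sum is what motivates the local-sampling and consensus schemes discussed next.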

Federated Variational Inference (FVI)

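FVI leverages the additive structure of the ELBO objective. A common strategy is to use a global variational approximation $q_{\boldsymbol{\phi}}(\boldsymbol{\theta})$ parameterized by $\boldsymbol{\phi}$. The server maintains $\boldsymbol{\phi}$ and iteratively refines it by aggregating stochastic gradients from clients:

$$\boldsymbol{\phi} \leftarrow \boldsymbol{\phi} + \eta \left( \sum_{m=1}^{M} \nabla_{\boldsymbol{\phi}} \mathbb{E}_{q_{\boldsymbol{\phi}}}\!\left[\log p(\mathcal{D}_m \mid \boldsymbol{\theta})\right] - \nabla_{\boldsymbol{\phi}} \mathrm{KL}\!\left(q_{\boldsymbol{\phi}}(\boldsymbol{\theta}) \,\|\, p(\boldsymbol{\theta})\right) \right),$$

where $\eta$ is the server learning rate. Algorithm 1 shows the pseudocode of FVI.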

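| Algorithm 1 Federated Variational Inference |

- 1: Server initializes global variational parameters $\boldsymbol{\phi}^{(0)}$
- 2: for round $t = 1$ to $T$ do
- 3: Server sends $\boldsymbol{\phi}^{(t-1)}$ to participating clients $\mathcal{S}_t$
- 4: for each client $i \in \mathcal{S}_t$ in parallel do
- 5: Compute local gradient: $\mathbf{g}_i = \nabla_{\boldsymbol{\phi}} \mathbb{E}_{q_{\boldsymbol{\phi}^{(t-1)}}}\!\left[\log p(\mathcal{D}_i \mid \boldsymbol{\theta})\right]$
- 6: Optionally apply differential privacy noise to $\mathbf{g}_i$.
- 7: end for
- 8: Server aggregates: $\boldsymbol{\phi}^{(t)} = \boldsymbol{\phi}^{(t-1)} + \eta \left( \frac{M}{|\mathcal{S}_t|} \sum_{i \in \mathcal{S}_t} \mathbf{g}_i - \nabla_{\boldsymbol{\phi}} \mathrm{KL}\!\left(q_{\boldsymbol{\phi}^{(t-1)}} \,\|\, p\right) \right)$
- 9: end for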
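To make Algorithm 1 concrete, the sketch below runs FVI on a deliberately simple model: a scalar mean θ with prior N(0, 1), unit-variance Gaussian observations, and a Gaussian variational family q(θ) = N(m, s²) with φ = (m, log s). Clients return reparameterization-gradient estimates of their expected log-likelihood, and the server subtracts the closed-form prior-KL gradient and takes a gradient-ascent step. All clients participate in every round and DP noise is omitted; the model, learning rate, and sample counts are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np

def client_gradient(phi, data, n_samples, rng):
    """Reparameterized gradient of E_q[log p(D_i | theta)] w.r.t. phi = (m, log_s).

    Model: x ~ N(theta, 1), so d/dtheta log p(D_i | theta) = sum(x) - n * theta.
    """
    m, log_s = phi
    s = np.exp(log_s)
    eps = rng.standard_normal(n_samples)
    theta = m + s * eps
    dlogp = data.sum() - data.size * theta  # one value per Monte Carlo sample
    grad_m = dlogp.mean()
    grad_log_s = (dlogp * s * eps).mean()  # chain rule: dtheta/dlog_s = s * eps
    return np.array([grad_m, grad_log_s])

def kl_gradient(phi):
    """Gradient of KL(N(m, s^2) || N(0, 1)) w.r.t. (m, log_s)."""
    m, log_s = phi
    return np.array([m, np.exp(2.0 * log_s) - 1.0])

def federated_vi(client_data, rounds=2000, lr=0.01, n_samples=64, seed=0):
    rng = np.random.default_rng(seed)
    phi = np.array([0.0, 0.0])  # server initializes phi = (m, log s)
    for _ in range(rounds):
        # Clients compute gradients from the broadcast phi (sequentially in this sketch).
        grads = [client_gradient(phi, d, n_samples, rng) for d in client_data]
        # Server: sum client terms, subtract the prior-KL gradient, take an ascent step.
        phi = phi + lr * (np.sum(grads, axis=0) - kl_gradient(phi))
    return phi
```

For this conjugate model the exact posterior is N(Σx/(n+1), 1/(n+1)), so the returned (m, exp(log s)) can be checked directly against it.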

Challenges: FVI can suffer from client drift due to non-IID data, and the choice of the variational family imposes a trade-off between flexibility and computational and/or communication costs.

Federated MCMC Methods

This type of method aims to generate samples from the global posterior without centralizing the data. One prominent approach is to run local MCMC chains on each client and periodically synchronize them at the server. Other approaches include the following.

Likelihood-Weighted MCMC: Clients compute their local likelihoods $p(\mathcal{D}_m \mid \boldsymbol{\theta}')$, which the server multiplies (equivalently, sums in log space) to make the global acceptance decision.

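Local–Global MCMC: This alternates between local sampling and global synchronization:

$$\boldsymbol{\theta}_m^{(l+1)} \sim \mathcal{K}_m\!\left(\cdot \mid \boldsymbol{\theta}_m^{(l)}\right), \quad l = 0, \dots, L-1, \quad \boldsymbol{\theta}_m^{(0)} = \boldsymbol{\theta}^{(t)}; \qquad \boldsymbol{\theta}^{(t+1)} = \mathrm{Aggregate}\!\left(\{\boldsymbol{\theta}_m^{(L)}\}_{m=1}^{M}\right),$$

where $\mathcal{K}_m$ is an MCMC transition kernel targeting client $m$'s local posterior.

In general, federated MCMC methods are more communication-intensive than federated VI. Algorithm 2 shows the pseudocode of a consensus-based variant.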

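| Algorithm 2 Consensus-Based Federated MCMC |

Require: Initial global parameters $\boldsymbol{\theta}^{(0)}$, number of rounds $T$, local steps $L$
- 1: for round $t = 1$ to $T$ do
- 2: Server broadcasts the current global parameter estimate $\boldsymbol{\theta}^{(t-1)}$ to all clients.
- 3: for each client $i = 1$ to $N$ in parallel do
- 4: Client $i$ initializes a local MCMC chain (e.g., Langevin dynamics) from $\boldsymbol{\theta}_i^{(0)} = \boldsymbol{\theta}^{(t-1)}$ targeting its subposterior $p_i(\boldsymbol{\theta}) \propto p(\boldsymbol{\theta})^{1/N} p(\mathcal{D}_i \mid \boldsymbol{\theta})$: $\boldsymbol{\theta}_i^{(l+1)} = \boldsymbol{\theta}_i^{(l)} + \frac{\eta}{2} \nabla \log p_i\big(\boldsymbol{\theta}_i^{(l)}\big) + \sqrt{\eta}\, \boldsymbol{\xi}_l$, with $\boldsymbol{\xi}_l \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, for $l = 0, \dots, L-1$
- 5: Client $i$ sends a summary of its local chain (e.g., the mean $\bar{\boldsymbol{\theta}}_i$ and covariance $\boldsymbol{\Sigma}_i$) to the server.
- 6: end for
- 7: Server aggregates local summaries to form a new global estimate. Under a Gaussian approximation, this can be $\boldsymbol{\theta}^{(t)} = \big(\sum_{i=1}^{N} \boldsymbol{\Sigma}_i^{-1}\big)^{-1} \sum_{i=1}^{N} \boldsymbol{\Sigma}_i^{-1} \bar{\boldsymbol{\theta}}_i$
- 8: end for

Ensure: Set of global samples approximating $p(\boldsymbol{\theta} \mid \mathcal{D})$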
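A minimal sketch of the consensus idea in Algorithm 2, for a toy model with scalar θ, prior N(0, 1), and unit-variance Gaussian observations (all illustrative assumptions): each client runs unadjusted Langevin dynamics on its subposterior p(θ)^{1/N} p(D_i | θ), reports the mean and variance of its chain, and the server combines them with precision weights. The function names, step size, and chain lengths are hypothetical choices. For this Gaussian model the combined estimate should approach the exact posterior, up to a small step-size bias in the variance.

```python
import numpy as np

def local_langevin(theta0, data, n_clients, n_steps, step, rng):
    """Unadjusted Langevin chain on the subposterior p(theta)^(1/N) * p(D_i | theta)."""
    theta = theta0
    noise = rng.standard_normal(n_steps)
    sqrt_step = np.sqrt(step)
    s, n = data.sum(), data.size
    chain = np.empty(n_steps)
    for t in range(n_steps):
        # Gradient of the log subposterior: tempered N(0, 1) prior plus local likelihood.
        grad = -theta / n_clients + s - n * theta
        theta = theta + 0.5 * step * grad + sqrt_step * noise[t]
        chain[t] = theta
    return chain

def consensus_mcmc(client_data, rounds=2, n_steps=20000, burn_in=2000, step=0.05, seed=0):
    rng = np.random.default_rng(seed)
    n_clients = len(client_data)
    global_mean, global_var = 0.0, 1.0
    for _ in range(rounds):
        means, precisions = [], []
        for data in client_data:  # clients would run these chains in parallel
            chain = local_langevin(global_mean, data, n_clients, n_steps, step, rng)[burn_in:]
            means.append(chain.mean())
            precisions.append(1.0 / chain.var())
        # Gaussian product of subposteriors: precision-weighted average.
        precisions = np.array(precisions)
        global_var = 1.0 / precisions.sum()
        global_mean = global_var * np.dot(precisions, means)
    return global_mean, global_var
```

Global samples, as in the Ensure line of Algorithm 2, can then be drawn from N(global_mean, global_var) under the same Gaussian approximation.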

4.2. Global BFL (Server Side)

Server-side Bayesian federated learning (BFL) methods perform the global aggregation or decomposition of updated local models. This review identifies two server-side BFL techniques: (1) Bayesian model ensemble (BME) and (2) Bayesian posterior decomposition (BPD). BFL also applies to other settings, such as continual learning, via Bayesian continual learning (BCL) approaches.

4.2.1. Bayesian Model Ensemble (BME) for Federated Learning Aggregation

Bayesian learning (BL) models quantify posterior distributions over model parameters using stochastic (e.g., MCMC) or deterministic (e.g., VI) techniques [52,53]. Stochastic methods approximate the posterior distribution through random sampling [54], so each posterior sample can serve as a base learner in a Bayesian model ensemble (BME).

Within BME-based federated learning, FedBE [55] constructs a global distribution (e.g., Gaussian or Dirichlet) over model parameters from the client parameters in each aggregation round. An ensemble is then obtained by sampling models from this distribution and is applied to unlabeled data to produce pseudo-labels. The pseudo-labeled set is subsequently used to refine the global model through stochastic weight averaging (SWA). FedPPD follows a related idea and proposes three aggregation mechanisms, one of which mirrors the ensemble strategy of FedBE. The distinction lies in the local training stage: FedBE relies on point estimation to derive client parameters, whereas FedPPD uses a Bayesian neural network (BNN) to model client updates.
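The server-side ensemble step described for FedBE can be sketched under simplifying assumptions: each client model is a linear softmax classifier stored as a weight matrix, the fitted distribution is a diagonal Gaussian, and the ensemble combines the client models, their mean, and Gaussian samples. The function name `fedbe_pseudo_labels` is hypothetical, and the subsequent SWA-based refinement of the global model is omitted.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax with max-subtraction for numerical stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def fedbe_pseudo_labels(client_weights, x_unlabeled, n_samples=10, rng=None):
    """Bayesian model ensemble over linear softmax client models.

    client_weights: array (n_clients, d, k); x_unlabeled: array (n, d).
    Returns ensemble class probabilities (n, k) and hard pseudo-labels (n,).
    """
    rng = np.random.default_rng() if rng is None else rng
    mean = client_weights.mean(axis=0)
    std = client_weights.std(axis=0)  # diagonal Gaussian fitted to client parameters
    # Ensemble members: client models, their mean, and samples from the fitted Gaussian.
    members = list(client_weights) + [mean]
    members += [mean + std * rng.standard_normal(mean.shape) for _ in range(n_samples)]
    probs = np.mean([softmax(x_unlabeled @ w) for w in members], axis=0)
    return probs, probs.argmax(axis=1)
```

The averaged probabilities serve as soft targets; the hard labels from `argmax` are what a pseudo-labeling step would use.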

Using BME for server-side aggregation enables more effective Bayesian inference by leveraging information from all clients, and it mitigates performance degradation, particularly when local models are trained on non-IID data. Compared with other approximation methods, the sampling approach used in BME is simpler and can be more accurate. However, an accurate ensemble requires more samples, which increases computational requirements. Moreover, BME does not directly yield a single global parameter vector, so an additional step (e.g., distillation) is needed to obtain the global parameters that clients use in the next communication round.

4.2.2. Bayesian Posterior Decomposition (BPD) for Federated Learning

In many machine learning applications, decomposing a complex model into a set of simpler sub-models is both useful and challenging [56]. Within Bayesian learning, Bayesian posterior decomposition (BPD) achieves this by partitioning the global model's posterior into multiple local posteriors.

In BPD-based federated learning, FedPA [57] decomposes the global posterior into the product of client-specific posteriors in each communication round by assuming a uniform prior and independence across local datasets. The parameters of the global posterior are estimated using federated least squares. Because direct computation is expensive in both communication and computation, the task is reformulated as an optimization problem and solved by sampling. Since independently approximating local posteriors may not yield an accurate global approximation, FedEP [58] improves upon FedPA by applying expectation propagation to obtain a better global model. Similarly, ref. [59] adopts the same decomposition strategy as FedPA but incorporates quantized Langevin stochastic dynamics (QLSD), transmitting compressed gradients to reduce communication overhead. Unlike these methods, FOLA [62] uses BPD to express the global posterior as a weighted product of local posteriors without imposing restrictive assumptions. VIRTUAL [60] also applies BPD for server-side aggregation: the global posterior in each round is decomposed into the product of the previous global posterior and the ratios of client posteriors from the current round, which helps mitigate catastrophic forgetting.

Most FL methods use naive parameter averaging, such as FedAvg, for model aggregation. However, this approach can degrade performance when local data are statistically heterogeneous. In contrast, by decomposing the global posterior, BPD-based FL models achieve greater stability on heterogeneous data. BPD also improves the interpretability of FL models. Nevertheless, BPD presents challenges in the FL context. First, it may require additional algorithms to support model learning, which can incur substantial computational overhead or be intractable. Second, some decomposition methods impose strong constraints (e.g., uniform priors or independence across datasets) that are often impractical to satisfy in real-world problems.
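The contrast with FedAvg can be made explicit under a Gaussian approximation of each local posterior and a uniform prior (the assumptions described for FedPA above): the product of the local Gaussians is again Gaussian, with precision equal to the sum of local precisions and a precision-weighted mean. The sketch below is an illustrative closed-form computation, not FedPA's sampling-based estimator; the function names are hypothetical.

```python
import numpy as np

def gaussian_product(means, covs):
    """Mean and covariance of the renormalized product of N(mu_i, Sigma_i) densities."""
    precisions = [np.linalg.inv(c) for c in covs]
    global_prec = np.sum(precisions, axis=0)
    global_cov = np.linalg.inv(global_prec)
    weighted = np.sum([p @ m for p, m in zip(precisions, means)], axis=0)
    return global_cov @ weighted, global_cov

def fedavg(means):
    """Plain (unweighted) parameter averaging."""
    return np.mean(means, axis=0)
```

With identical covariances the product mean coincides with FedAvg; when clients are confident about different coordinates, each coordinate of the product mean moves toward the more confident client.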

4.2.3. Bayesian Continual Learning for Continual FL

Continual learning addresses the challenge of training models on datasets that arrive sequentially and may evolve over time [61]. In Bayesian learning, this is achieved by reusing the posterior from a previous task as the prior for the next, forming the basis of Bayesian continual learning (BCL).
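The posterior-as-prior recursion behind BCL is exact for conjugate models. The sketch below uses a Beta–Bernoulli model (an illustrative choice, with hypothetical function names) to show that updating sequentially across rounds yields the same posterior as a single batch update on all data.

```python
def beta_update(alpha, beta, observations):
    """Conjugate Beta-Bernoulli update: add successes to alpha and failures to beta."""
    successes = sum(observations)
    return alpha + successes, beta + len(observations) - successes

def sequential_posterior(alpha, beta, rounds):
    """Reuse each round's posterior as the prior for the next round."""
    for obs in rounds:
        alpha, beta = beta_update(alpha, beta, obs)
    return alpha, beta
```

For non-conjugate models such as neural networks, the posterior must be approximated at every step, so approximation errors can accumulate across rounds.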

Within FL, FOLA [62] applies BCL by constructing the global posterior as the product of local posteriors in each round, rather than relying on a simple mixture. This design improves robustness when handling non-IID client data. Similarly, pFedBayes [51] updates the prior of each local model with the global distribution obtained in the previous round, improving both performance and interpretability. Although they share the same foundation, the two methods differ in two ways. First, pFedBayes derives the global distribution incrementally through local model averaging, whereas FOLA aggregates local posteriors directly. Second, pFedBayes uses BNNs for clients, whereas FOLA relies on conventional neural networks.

Applying BCL to FL lets the model benefit from knowledge accumulated over previous communication rounds, and this online learning approach often improves learning performance. However, a complex prior distribution can introduce significant computational overhead while providing only limited performance gains. Selecting an appropriate prior is therefore a key challenge: it must capture the benefits of BCL without incurring excessive computational cost.

4.3. Local BFL (Client Side)

Different Bayesian techniques cater to the client’s needs and facilitate the learning of local models. Local methods (client side) encompass (1) Bayesian optimization (BO), (2) Bayesian neural networks (BNNs), (3) federated Bayesian privacy (FBP), and (4) Bayesian non-parametrics (BNP). These methods are selected based on individual clients’ unique objectives and requirements.

4.3.1. Bayesian Optimization (BO) for Federated Learning

Bayesian optimization (BO) is commonly used to tune the hyperparameters of deep neural networks (DNNs) sequentially, and it has also been applied in FL. The study [63] proposed a federated Bayesian optimization (FBO) framework called FTS, which uses BO to optimize local models in each communication round. FTS employs a Gaussian process (GP) as a surrogate model of the objective and uses Thompson sampling for acquisition, with random Fourier features improving scalability and enabling information exchange among clients. FTS comes with a convergence guarantee even with non-IID data. In a different study [64], BO is employed for traffic flow prediction (TFP) in FL. Unlike FTS, this FBO approach dynamically adjusts the weights of local models using BO, avoiding performance degradation with heterogeneous data. Its acquisition function is based on expected improvement, and the approach addresses the scalability challenges associated with GPs.

The application of BO in FL demonstrates its effectiveness in achieving robust learning performance, particularly for non-IID local datasets, owing to inherent properties of BO. Compared with traditional optimization algorithms, BO is also simpler and more convenient to implement. However, FBO becomes impractical for models involving many data points, since exact GP inference scales cubically with the number of observations. In addition, the convergence rate of FBO is relatively slow, which calls for further research to improve its efficiency.
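The expected-improvement acquisition function mentioned above has a closed form when the surrogate's prediction at a candidate point is Gaussian. The sketch below computes it for maximization, given a predictive mean `mu`, standard deviation `sigma`, incumbent best value `best`, and an optional exploration margin `xi`; the surrogate itself (e.g., a GP) is assumed and not shown.

```python
import math

def expected_improvement(mu, sigma, best, xi=0.0):
    """EI for maximization: E[max(f - best - xi, 0)] with f ~ N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(mu - best - xi, 0.0)
    z = (mu - best - xi) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal PDF at z
    return (mu - best - xi) * cdf + sigma * pdf
```

At equal predicted means, a larger predictive standard deviation yields a larger EI, which is how the criterion balances exploitation against exploration.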

4.3.2. Bayesian Neural Networks (BNN)

The study [65] introduced Bayesian neural networks (BNNs), which combine Bayesian inference with neural networks. In FL, BNNs are integrated in various ways. Ref. [51] proposed pFedBayes, which uses BNNs to train local models in each communication round; it employs variational inference (VI) to approximate posterior distributions at the client level and aggregates models at the server level. Sadilek et al. [66] extended pFedBayes by updating local parameters again after the BNN updates, addressing non-IID data challenges. FedPPD [67] also uses BNNs for local models but approximates posterior distributions using Markov Chain Monte Carlo (MCMC). FedPPD employs a Bayesian dark knowledge method to distill each client's posterior into a single DNN, which is sent to the server for aggregation.

The integration of Bayesian neural networks (BNNs) in FL offers several advantages, including the ability to quantify local uncertainty and improve robustness by leveraging deep neural networks (DNNs) for task learning. BNNs also enhance learning performance in FL settings with limited data. However, the adoption of BNNs in FL presents its own set of challenges. Firstly, training local models with BNNs incurs significant computational and memory costs, particularly when dealing with large-scale local model parameters. Secondly, selecting appropriate prior distributions for local model parameters becomes challenging, particularly when complex relationships between model outputs and parameters cannot be accurately estimated. These challenges must be addressed to exploit the potential benefits of BNNs in FL.

4.3.3. Federated Bayesian Privacy (FBP)

Privacy-preserving FL research has primarily focused on protecting information during local training, communication, and aggregation, often through advances in differential privacy [68]. Differential privacy methods encompass techniques such as simple statistics, objective perturbation, and output perturbation. The study [69] introduced Bayesian differential privacy (BDP), which accounts for the randomness of local data. BDP provides client privacy, instance privacy, and joint privacy through a privacy loss accounting method. In another study [70], KL divergence is used to quantify Bayesian privacy loss during data restoration. This approach forms the basis of the federated deep learning for private passport (FDL-PP) method, which is designed to mitigate restoration attacks in FL and enhance privacy preservation.

Bayesian differential privacy (BDP) and the federated deep learning for private passport (FDL-PP) method relax the constraints of existing FL differential privacy approaches by incorporating uncertainty. Although both improve privacy preservation, they may still face limitations in complex FL and BL settings and under more stringent privacy requirements. Further research is needed to address these challenges and broaden the applicability of privacy-preserving techniques in FL.

4.3.4. Bayesian Non-Parametric Models for Dynamic Federated Learning

Bayesian non-parametric (BNP) models facilitate dynamic learning [71]. In Bayesian federated learning (BFL), BNP models typically build on Gaussian processes (GPs) or Beta–Bernoulli processes (BBPs). In GP-based federated learning, pFedGP [72] trains a GP classifier as the local model of every client while sharing a common kernel function across all clients. FedLoc [73] also uses GPs to train local models, but for regression tasks; unlike pFedGP, FedLoc does not handle non-IID data [31] in the federated learning setting. In contrast to these approaches, FedCor [74] uses a GP to predict changes in loss and selects the clients to activate in each communication round based on these predicted changes. Note that FedCor is specifically designed for the cross-silo federated learning framework, where learning performance deteriorates significantly under non-IID data.

BNP models offer adaptable model complexity for training local models in FL, overcoming limitations of parametric methods [75]. Because BNP models adjust flexibly to the data, they enable more efficient learning. However, their complexity grows with the amount of data (e.g., exact GP inference scales cubically with the number of training points), which poses computational challenges for clients with large datasets and can limit scalability and practicality in real-world scenarios. Further research and optimization are needed to reduce this computational burden and enhance the applicability of BNP models in FL systems.
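GP-based methods such as pFedGP and FedLoc rely on the GP predictive distribution. The sketch below computes the exact GP regression posterior mean and variance for one client with an RBF kernel whose hyperparameters stand in for the shared kernel; in pFedGP the shared kernel is learned and used for classification, which is not shown. The Cholesky factorization is the O(n³) step behind the scaling issue noted above. Function names and default hyperparameters are illustrative assumptions.

```python
import numpy as np

def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between rows of a (n, d) and b (m, d)."""
    sq = np.sum(a**2, 1)[:, None] + np.sum(b**2, 1)[None, :] - 2.0 * a @ b.T
    return variance * np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)

def gp_predict(x_train, y_train, x_test, noise=0.1, **kernel_args):
    """Exact GP regression: predictive mean and variance of f at x_test."""
    k_xx = rbf_kernel(x_train, x_train, **kernel_args) + noise**2 * np.eye(len(x_train))
    k_xs = rbf_kernel(x_train, x_test, **kernel_args)
    k_ss_diag = np.diag(rbf_kernel(x_test, x_test, **kernel_args))
    chol = np.linalg.cholesky(k_xx)  # O(n^3) in the number of local points
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))  # K^{-1} y
    v = np.linalg.solve(chol, k_xs)
    mean = k_xs.T @ alpha
    var = k_ss_diag - np.sum(v**2, axis=0)
    return mean, var
```

The predictive variance is small near a client's training inputs and returns to the prior variance far from them, which is the local uncertainty signal these methods exploit.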

4.4. Other Bayesian Federated Learning Studies

4.4.1. Calibrated Bayesian Federated Learning

The study [76] presents β-Predictive Bayes, an algorithm for Bayesian federated learning that addresses communication cost, performance under heterogeneous data, and calibration in FL. It operates in a single round of communication and aims to produce well-calibrated models with accurate uncertainty estimates. β-Predictive Bayes was evaluated on various regression and classification datasets and showed better calibration than other baselines, even as data heterogeneity increased. The authors also release the code for the algorithm, enabling further exploration and replication.

The paper also highlights limitations of existing Bayesian FL techniques, such as the Bayesian Committee Machine (BCM), which can suffer from calibration issues and produce overconfident predictions. To address these limitations, β-Predictive Bayes combines the advantages of the BCM and a mixture model over local predictive posteriors. The resulting ensemble model is then distilled into a single model that is sent back to clients. The algorithm offers improved calibration and accurate uncertainty estimates, making it suitable for FL scenarios with limited training data or high variance. Although the paper does not detail the algorithm's scalability and computational efficiency, it demonstrates its effectiveness in addressing key FL challenges.
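The two aggregation rules that β-Predictive Bayes draws on can be contrasted on local predictive class probabilities: a mixture averages them, while a product (BCM-style) multiplies and renormalizes them, which sharpens the result. The sketch below shows only these two endpoints for classification, with hypothetical function names; it does not implement β-Predictive Bayes itself, and the product omits the prior correction used by the full BCM.

```python
import numpy as np

def mixture(local_probs):
    """Equal-weight mixture of local predictive distributions, shape (n_clients, n_classes)."""
    return local_probs.mean(axis=0)

def product(local_probs):
    """Normalized product of local predictive distributions, computed in log space."""
    log_p = np.log(local_probs).sum(axis=0)
    log_p -= log_p.max()
    p = np.exp(log_p)
    return p / p.sum()

def entropy(p):
    """Shannon entropy (in nats) of a probability vector with no zero entries."""
    return -np.sum(p * np.log(p))
```

For local predictions (0.7, 0.3) and (0.6, 0.4), the product gives (7/9, 2/9), more confident than either client, while the mixture gives (0.65, 0.35); by concavity of entropy, the mixture's entropy is never below the average local entropy.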

4.4.2. Bayesian FL Using Meta-Variational Dropout

Ref. [77] proposes a Bayesian meta-learning framework, named Meta-Variational Dropout (MetaVD), for personalized federated learning (PFL). The method tackles the issues of overfitting and divergence among client models that often arise from limited and non-IID data in conventional federated learning. MetaVD employs a shared hypernetwork to infer client-specific dropout rates, which supports effective personalization under data heterogeneity. In addition, it integrates a posterior aggregation scheme that leverages dropout uncertainty across clients, thereby improving convergence on non-IID distributions. Experimental results on multiple FL benchmarks confirm the effectiveness of MetaVD, showing strong classification accuracy and reliable uncertainty calibration, particularly for out-of-distribution clients. The approach also provides model compression, alleviating overfitting and lowering the communication overhead. Overall, MetaVD represents a novel solution to key FL challenges, achieving notable gains in personalization, stability, and efficiency.

Despite its contributions, the paper has certain limitations. First, while MetaVD performs well in FL scenarios, the experiments focus mainly on sparse and non-IID datasets, potentially limiting its generalizability to other data distributions; evaluation in more diverse FL settings would clarify its robustness and effectiveness. Second, although the paper presents the model compression advantages of MetaVD's integration of variational dropout (VD), it does not explore their implications in depth; a more comprehensive analysis of the trade-off between model size reduction and predictive performance would clarify the benefits and limitations of this approach. Lastly, while the authors emphasize the posterior adaptation view of meta-learning and the posterior aggregation view of Bayesian FL, additional insight into the theoretical underpinnings and implications of these perspectives would strengthen the theoretical foundation of MetaVD.

4.4.3. Integrated Multiple Uncertainty Approaches in FL

This study [78] integrates different approaches for UQ into federated deep learning, aiming at trustworthy machine learning while preserving data privacy. The authors investigate prominent methods such as MC dropout, stochastic weight averaging Gaussian (SWAG), and deep ensembles, and demonstrate their effectiveness in the FL framework. Their empirical evaluation confirms that these approaches enable reliable UQ on out-of-distribution data without significant additional communication. Although all methods perform well, deep ensembles and MC dropout better identify out-of-distribution data and misclassified instances based on uncertainty. Overall, this research provides valuable insights into UQ in federated deep learning and serves as a baseline for future developments in the field.
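MC dropout, SWAG, and deep ensembles all yield a set of sampled predictive distributions for each input, from which uncertainty scores are usually computed in the same way. The sketch below assumes an array of S sampled class-probability vectors for one input (the function name is hypothetical) and returns the predictive entropy (total uncertainty) and the mutual information between the prediction and the model, an epistemic score often used for out-of-distribution detection.

```python
import numpy as np

def sample_uncertainty(prob_samples, eps=1e-12):
    """prob_samples: array (S, n_classes) of sampled predictive distributions for one input."""
    mean_p = prob_samples.mean(axis=0)
    total = -np.sum(mean_p * np.log(mean_p + eps))  # entropy of the averaged prediction
    expected = -np.mean(np.sum(prob_samples * np.log(prob_samples + eps), axis=1))
    return total, total - expected  # (predictive entropy, mutual information)
```

Samples that agree on an uncertain prediction give high entropy but near-zero mutual information, whereas confident samples that disagree with each other give high mutual information, the pattern expected on out-of-distribution inputs.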

While the cited paper presents valuable insights, it has a few limitations. First, the empirical evaluation is limited to specific datasets and may not fully capture the generalizability of the evaluated approaches across different domains. Further experiments on diverse datasets would strengthen the validity and reliability of the findings. Second, the paper integrates existing UQ methods into FL without introducing novel techniques. Future research could develop new approaches tailored specifically to UQ in federated deep learning, potentially addressing some of the limitations of current methods.

4.4.4. Minimization of Uncertainty for Personalized FL (Semi-Supervised) Systems

The study [79] proposes a semi-supervised learning paradigm for personalized FL to overcome challenges such as data heterogeneity, a lack of knowledge for personalized global models, and performance fairness. It allows partially labeled or unlabeled clients to seek labeling assistance from data-related clients, leveraging supervised training on pseudo-labels to improve performance. The paper introduces a data relation metric based on uncertainty estimation to select trustworthy helpers and presents a helper selection protocol for efficiency. Experimental results demonstrate the method's superiority, especially in highly heterogeneous settings with partially labeled data.

However, the method assumes the availability of data-related clients for labeling assistance, which may not always be feasible in practical scenarios. The effectiveness of the method relies on the presence of suitable data-related clients. Additionally, while the paper focuses on performance fairness, it does not explicitly address privacy concerns about exchanging labeled and unlabeled data between clients. Future research should explore privacy-preserving mechanisms to ensure data security in the proposed personalized FL paradigm.

4.4.5. Bayesian Method for Personalized FL

The study [80] proposes FedPop, a Bayesian approach to personalized FL. The paper addresses limitations of existing FL approaches by incorporating UQ and handling personalization in both cross-silo and cross-device settings. The FedPop framework combines fixed common population parameters with random effects to model client data heterogeneity. It uses a new class of federated stochastic optimization algorithms based on Markov chain Monte Carlo (MCMC) methods to ensure convergence and enable UQ. The methodology is robust to client drift, practical for inference on new clients, and efficient in computation and memory. The paper provides non-asymptotic convergence guarantees and demonstrates the performance of FedPop on various personalized FL tasks.

While FedPop offers several advantages, there are some limitations to consider. The paper does not extensively discuss the scalability of the approach, particularly in scenarios with a large number of clients or high-dimensional data. The computational requirements of MCMC-based optimization algorithms may become a limitation when dealing with complex models or resource-constrained devices. Additionally, the paper does not provide an extensive comparison with other state-of-the-art personalized FL methods, which could further highlight the advantages and limitations of the FedPop approach. Future research should explore these aspects to assess the scalability and performance of FedPop in real-world FL scenarios.

Table 4 summarizes the Bayesian federated learning (BFL) families discussed above, along with their foci and key ideas. As a predominant uncertainty-aware paradigm in FL, BFL grounds predictions in posterior reasoning and yields calibrated, principled uncertainty estimates. However, practical deployments must contend with computational and communication overheads, prior/model misspecification, and brittleness under non-IID data and privacy noise. Emerging directions include scalable posterior representations, communication-efficient VI/MCMC, continual Bayesian updates, and calibrated personalization via hierarchical/empirical priors. These considerations motivate the complementary perspective of federated conformal prediction, which offers distribution-free coverage guarantees and can be combined with BFL to improve reliability in heterogeneous clinical environments.

5. Federated Conformal Prediction

This section summarizes representative studies on federated conformal prediction (FCP), a principal uncertainty-aware paradigm in FL that provides distribution-free, finite-sample coverage guarantees for prediction sets across clients. Unlike Bayesian approaches that model posteriors over parameters, FCP calibrates a (possibly pre-trained) predictor post hoc and is therefore model- and loss-agnostic, making it attractive for heterogeneous, privacy-sensitive deployments. Recent works extend CP to federated settings by relaxing exchangeability, accounting for client heterogeneity (covariate/label shift), and improving system efficiency and robustness.

5.1. FCP Preliminaries

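Let $\mathcal{D} = \{Z_i\}_{i=1}^{n}$ represent a dataset of size $n$, where each element $Z_i = (X_i, Y_i)$ denotes an input–output pair sampled from a distribution $P$. The input domain is $\mathcal{X}$, the output domain is $\mathcal{Y}$, and together they define the sample space $\mathcal{Z} = \mathcal{X} \times \mathcal{Y}$. For a target coverage level $1-\alpha \in (0,1)$, the task is to construct a prediction set $\hat{C}_\alpha : \mathcal{X} \to 2^{\mathcal{Y}}$ such that, for a fresh draw $Z_{n+1} = (X_{n+1}, Y_{n+1}) \sim P$,

$$\mathbb{P}\big(Y_{n+1} \in \hat{C}_\alpha(X_{n+1})\big) \ge 1-\alpha. \tag{21}$$

We say that $\hat{C}_\alpha(X)$ covers $Y$ when $Y \in \hat{C}_\alpha(X)$. A prediction region is regarded as valid if it satisfies condition (21), which is also referred to as the coverage guarantee or validity property.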

Assessing the uncertainty associated with model predictions is essential in risk-aware decision making. Prediction sets provide probabilistic bounds for the target variable, indicating regions where future outcomes are expected to lie with a specified confidence level. Prediction regions can be built via parametric likelihood/Bayesian modeling or via distribution-free methods that make no distributional assumptions. Conformal prediction (CP) is a simple, distribution-free framework that wraps around any underlying predictive model to produce valid prediction sets; notably, it achieves finite-sample validity under mild assumptions. Originating from the work of Vovk and Shafer on finite random sequences [81], CP is popular due to its simplicity and low computational cost [82,83], with applications across domains [84,85,86]. Current research roughly comprises three branches: (i) algorithmic variants tailored to specific learners, e.g., quantile regression [87,88], k-NN [89], density estimators [90], survival analysis [91,92], and risk control [93]; (ii) relaxing exchangeability to handle distribution shift [94,95,96,97,98]; and (iii) improving efficiency/sharpness [88,99,100,101,102]. The federated methods reviewed in this section fall mainly within the latter two branches.

We now formalize CP and introduce the required notation.

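Given $\mathcal{D}$ and target coverage $1-\alpha$, CP seeks a valid predictor $\hat{C}_\alpha$ such that

$$\mathbb{P}\big(Y_{n+1} \in \hat{C}_\alpha(X_{n+1})\big) \ge 1-\alpha.$$

Validity alone is trivial (e.g., take $\hat{C}_\alpha(x) = \mathcal{Y}$ for every $x$). In practice, we also seek efficiency/sharpness, i.e., regions of small size (length/area/volume), while maintaining validity.

Let $[n] = \{1, \ldots, n\}$. Split (or inductive) CP partitions $[n]$ into a proper training set $\mathcal{I}_{\mathrm{tr}}$ with $|\mathcal{I}_{\mathrm{tr}}| = n - n_{\mathrm{cal}}$ and a calibration set $\mathcal{I}_{\mathrm{cal}}$ of size $n_{\mathrm{cal}}$.

A key component is the non-conformity score $S : \mathcal{Z} \to \mathbb{R}$, which measures how atypical a sample $z$ is relative to the training data $\mathcal{D}_{\mathrm{tr}} = \{Z_i\}_{i \in \mathcal{I}_{\mathrm{tr}}}$. A common regression choice is the absolute residual under a model $\hat{\mu}$ trained on $\mathcal{D}_{\mathrm{tr}}$:

$$S(z; \mathcal{D}_{\mathrm{tr}}) = |y - \hat{\mu}(x)|, \qquad z = (x, y).$$

When unambiguous, we write $S(z)$ for $S(z; \mathcal{D}_{\mathrm{tr}})$.

Train a predictor $\hat{\mu}$ on $\mathcal{D}_{\mathrm{tr}}$; compute calibration scores $V_i = S(Z_i)$ for $i \in \mathcal{I}_{\mathrm{cal}}$. Let

$$\hat{q} = \mathrm{Q}_{p}\big(\{V_i\}_{i \in \mathcal{I}_{\mathrm{cal}}}\big),$$

where $p = \lceil (1-\alpha)(n_{\mathrm{cal}}+1) \rceil / n_{\mathrm{cal}}$ and $\mathrm{Q}_p$ is the empirical $p$-quantile. Then, the $(1-\alpha)$ prediction set at a new $x$ is

$$\hat{C}_\alpha(x) = \big[\hat{\mu}(x) - \hat{q},\; \hat{\mu}(x) + \hat{q}\big].$$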

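Regarding exchangeability, the data points $Z_1, \ldots, Z_n$ and the test point $Z_{n+1}$ are exchangeable:

$$(Z_1, \ldots, Z_{n+1}) \overset{d}{=} (Z_{\pi(1)}, \ldots, Z_{\pi(n+1)})$$

for any permutation $\pi$ of $\{1, \ldots, n+1\}$. Exchangeability is weaker than IID and often reasonable. Later, we review variants that relax this assumption to handle distribution shift.

For scores $v_1, \ldots, v_m$, define the empirical $p$-quantile

$$\mathrm{Q}_p(v_1, \ldots, v_m) = \inf\Big\{ t \in \mathbb{R} : \frac{1}{m} \sum_{i=1}^{m} \mathbb{1}\{v_i \le t\} \ge p \Big\},$$

so that $\mathrm{Q}_p = +\infty$ when $p > 1$, since the infimum of the empty set is $+\infty$.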
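The empirical quantile and the finite-sample rank used by split CP can be computed directly. The sketch below is a minimal Python illustration, assuming plain lists of scores; the function names `empirical_quantile` and `conformal_threshold` are hypothetical, and the small tolerance that guards against floating-point round-off is an implementation choice, not part of the definition.

```python
import math
from typing import Sequence


def empirical_quantile(scores: Sequence[float], p: float) -> float:
    """Q_p(v_1..v_m) = inf{t : (1/m) * #{i : v_i <= t} >= p}, for 0 < p.

    The set is empty when p > 1, so the infimum is +inf (no finite threshold).
    """
    m = len(scores)
    if p > 1:
        return math.inf
    # Smallest rank k with k / m >= p; the tolerance absorbs float round-off in p * m.
    k = max(1, math.ceil(p * m - 1e-9))
    return sorted(scores)[k - 1]


def conformal_threshold(scores: Sequence[float], alpha: float) -> float:
    """Split-CP threshold: Q_p with p = ceil((1 - alpha) * (m + 1)) / m."""
    m = len(scores)
    p = math.ceil((1 - alpha) * (m + 1) - 1e-9) / m
    return empirical_quantile(scores, p)
```

For ten scores $0.1, 0.2, \ldots, 1.0$ and $\alpha = 0.2$, the rank is $\lceil 0.8 \times 11 \rceil = 9$, so $\hat{q} = 0.9$; for $\alpha = 0.05$, the rank $11$ exceeds $10$ and $\hat{q} = +\infty$, i.e., the calibration set is too small for that coverage level.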

The procedure above is split CP, summarized in Algorithm 3. Full CP avoids splitting, but it must refit the model on the augmented dataset $\mathcal{D} \cup \{(x, y)\}$ for every candidate label $y$ (and leave-one-out variants refit it $n$ times), which is often impractical for deep models. Although we focus on regression, CP extends to classification, segmentation, and outlier detection; see Vovk et al. [81] and Angelopoulos and Bates [83].

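| Algorithm 3 Split Conformal Prediction (Regression) |

Require: Dataset $\mathcal{D} = \{(X_i, Y_i)\}_{i=1}^{n}$, learning algorithm $\mathcal{A}$, test input $x$, target confidence $1-\alpha$

Ensure: Prediction region $\hat{C}_\alpha(x)$

1: Randomly split $[n]$ into $\mathcal{I}_{\mathrm{tr}}$ and $\mathcal{I}_{\mathrm{cal}}$ with $|\mathcal{I}_{\mathrm{cal}}| = n_{\mathrm{cal}}$

2: Train $\hat{\mu} = \mathcal{A}\big(\{(X_i, Y_i)\}_{i \in \mathcal{I}_{\mathrm{tr}}}\big)$

3: Compute calibration scores $V_i = |Y_i - \hat{\mu}(X_i)|$ for $i \in \mathcal{I}_{\mathrm{cal}}$

4: Compute $\hat{q} = \mathrm{Q}_{\lceil (1-\alpha)(n_{\mathrm{cal}}+1) \rceil / n_{\mathrm{cal}}}\big(\{V_i\}_{i \in \mathcal{I}_{\mathrm{cal}}}\big)$

5: return $\hat{C}_\alpha(x) = [\hat{\mu}(x) - \hat{q},\ \hat{\mu}(x) + \hat{q}]$

|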
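A minimal Python sketch of Algorithm 3 follows. It assumes NumPy, uses an ordinary least-squares line (`np.polyfit`) as a stand-in for the learning algorithm $\mathcal{A}$, and splits the data in half by default; the function name `split_conformal_regression` and these choices are illustrative assumptions rather than part of the algorithm.

```python
import math

import numpy as np


def split_conformal_regression(x, y, x_test, alpha=0.1, n_cal=None, seed=0):
    """Sketch of Algorithm 3 with absolute-residual scores and a least-squares line."""
    rng = np.random.default_rng(seed)
    n = len(x)
    n_cal = n // 2 if n_cal is None else n_cal
    # Step 1: randomly split indices into proper training and calibration sets.
    perm = rng.permutation(n)
    cal_idx, tr_idx = perm[:n_cal], perm[n_cal:]
    # Step 2: train mu_hat on the proper training set (stand-in learner: OLS line).
    slope, intercept = np.polyfit(x[tr_idx], y[tr_idx], deg=1)

    def mu_hat(t):
        return slope * t + intercept

    # Step 3: calibration scores V_i = |Y_i - mu_hat(X_i)|.
    scores = np.abs(y[cal_idx] - mu_hat(x[cal_idx]))
    # Step 4: q_hat is the k-th smallest score, k = ceil((1 - alpha)(n_cal + 1)).
    k = math.ceil((1 - alpha) * (n_cal + 1) - 1e-9)
    q_hat = np.inf if k > n_cal else np.sort(scores)[k - 1]
    # Step 5: symmetric interval around the point prediction at each test input.
    center = mu_hat(np.asarray(x_test))
    return center - q_hat, center + q_hat
```

With exchangeable data, the empirical coverage on held-out points should be close to $1-\alpha$; swapping in any other regressor only changes step 2, which is what makes the procedure model-agnostic.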

5.2. Federated Conformal Prediction (FCP) Methodology

Conformal prediction (CP) is becoming increasingly popular because it provides a reliable framework for quantifying uncertainty. Its key benefit is simplicity: it can be applied as a post-processing step to pre-trained models, offering guarantees that are independent of the data distribution and require minimal assumptions. This makes it valuable for ensuring the dependability of predictions, since it can address prevalent obstacles such as poorly calibrated model probabilities, which often lead to excessively large prediction sets. Despite these merits, CP faces notable obstacles, particularly in FL settings. One significant constraint is its strong dependence on data exchangeability, which is often violated in real-world scenarios, and current approaches to handling non-exchangeability are too computationally demanding to be practical in more complex scenarios. This is a significant obstacle to the widespread adoption of CP, particularly in critical fields such as healthcare, where patient confidentiality must also be protected.

Effective UQ is critical to FL, where several clients cooperatively train models while keeping their training data decentralized. Despite recent progress in FL, UQ remains only partially resolved. Federated models introduced into clinical practice may suffer from inadequate calibration and limited interpretability, which can lead to the misuse of these technologies in critical decision-making. Conformal prediction's capacity to produce prediction sets that contain the true label with a specified coverage guarantee offers a notable solution to these difficulties.

The study [103] proposes a methodology for improving conformal prediction, a statistical method that generates prediction sets with a predetermined degree of confidence. Its main objective is to enhance the adaptive prediction sets (APS) method, which relies primarily on softmax probabilities. The distribution of these probabilities is frequently long-tailed, with many low-probability classes, which leads to needlessly large prediction sets. Furthermore, ref. [104] examines federated inference over wireless channels, offering insights into handling uncertainty in distributed settings, a crucial consideration for real-time medical monitoring and treatment.
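To illustrate why long-tailed softmax outputs inflate APS sets, the sketch below computes the standard non-randomized APS score (the cumulative softmax mass of all classes ranked at or above the candidate label) and the corresponding prediction set for a threshold $\hat{q}$, which is assumed to come from calibration. It is a generic Python/NumPy illustration, not the improved method of [103]; the function names `aps_score` and `aps_set` are hypothetical.

```python
import numpy as np


def aps_score(probs, label):
    """Non-randomized APS score: total softmax mass of classes ranked at or above `label`."""
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(-probs)  # class indices by decreasing probability
    cum = np.cumsum(probs[order])
    rank = int(np.flatnonzero(order == label)[0])
    return float(cum[rank])


def aps_set(probs, q_hat):
    """Add classes in decreasing probability until the cumulative mass first reaches q_hat.

    The resulting set contains every label whose APS score is <= q_hat.
    """
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(-probs)
    cum = np.cumsum(probs[order])
    size = int(np.searchsorted(cum, q_hat)) + 1  # first index with cum >= q_hat, plus one
    return {int(c) for c in order[:size]}
```

With two dominant classes (probabilities 0.5 and 0.2) followed by thirty classes of probability 0.01 each, a threshold of 0.905 forces 21 tail classes into the set (23 classes in total), which is the kind of inflation that motivates refining APS.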

In 2023, Plassier et al. advanced FCP with two studies, each focusing on a key issue in medical federated learning: data heterogeneity and label shift. The first study [105] examines conformal prediction under heterogeneous data, emphasizing the need for robust predictive models that maintain accuracy across the diverse data distributions commonly found in medical contexts. The second study [106] focuses on efficiency, specifically optimizing conformal prediction to handle label shift, i.e., differences in label distributions across data sources. Together, these works deepen the understanding of FCP and demonstrate its practicality, helping ensure that predictive models account for the complexities of medical data, preserve privacy, remain applicable across settings, and stay reliable. Lastly, ref. [107] presents methods for distribution-free federated learning, underscoring the importance of improving model robustness and reliability through conformal prediction and paving the way for broader adoption of FCP in medical applications.

Based on the existing research, we classify federated conformal prediction (FCP) into two broad categories: methods that address data heterogeneity and methods that relax the exchangeability assumption in federated learning settings. The following subsections summarize research in each category, covering methodologies that address the issues posed by heterogeneous data and the limitations of standard conformal prediction when data are not exchangeable.

Collectively, these efforts to explore and refine conformal prediction and FCP underscore the pivotal role of these methodologies in medical federated learning, particularly in ensuring that predictions are data-driven, context-aware, and clinically reliable.

5.3. FCP Under Data Heterogeneity

Data heterogeneity is a critical challenge in federated learning, particularly in medical settings, where data are sourced from diverse institutions, each with unique patient populations, data collection protocols, and device configurations. This diversity in data can lead to significant inconsistencies and biases in model training and performance.

The study [106] addresses this issue directly, exploring how conformal prediction can be tailored to work effectively with non-exchangeable data distributions. By focusing on calibration for data uncertainty, it offers strategies to improve prediction reliability in federated learning settings characterized by data diversity. Similarly, the study [105] examines the complexities introduced by statistical heterogeneity, specifically label shift across datasets, and presents methods that maintain prediction accuracy and reliability even when the underlying data distribution varies, so that predictive models remain robust and clinically relevant.
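One standard way to account for label shift in conformal prediction, shown here only as an illustration and not necessarily the approach of [105], is weighted split CP with class-ratio weights $w(y) = q(y)/p(y)$, where $p$ and $q$ are the calibration and test label distributions. The Python sketch below assumes these ratios are known (in practice they must be estimated) and that the non-conformity scores come from a model trained on separate data; the names `weighted_threshold` and `label_shift_set` are hypothetical.

```python
import numpy as np


def weighted_threshold(cal_scores, cal_weights, test_weight, alpha):
    """(1 - alpha)-quantile of sum_i p_i * delta(V_i) + p_test * delta(+inf), p proportional to weights."""
    order = np.argsort(cal_scores)
    s = np.asarray(cal_scores, dtype=float)[order]
    w = np.asarray(cal_weights, dtype=float)[order]
    cum = np.cumsum(w) / (w.sum() + test_weight)  # normalized cumulative mass
    idx = int(np.searchsorted(cum, 1 - alpha))    # first index with cum >= 1 - alpha
    return float(s[idx]) if idx < len(s) else np.inf


def label_shift_set(cal_scores, cal_labels, test_scores, class_ratio, alpha):
    """Weighted split-CP prediction set under label shift (illustrative sketch).

    class_ratio[y] = q(y) / p(y), test vs. calibration label frequency (assumed known);
    test_scores[y] = S(x, y), the non-conformity score of candidate label y at test input x.
    """
    class_ratio = np.asarray(class_ratio, dtype=float)
    cal_weights = class_ratio[np.asarray(cal_labels)]
    return {
        y for y in range(len(test_scores))
        # the test point's weight depends on the candidate label y
        if test_scores[y] <= weighted_threshold(cal_scores, cal_weights, class_ratio[y], alpha)
    }
```

When all ratios equal 1, the threshold reduces to the unweighted split-CP quantile; upweighting a class whose calibration scores tend to be large raises the threshold for that candidate label.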

The study [108] introduces federated conformal prediction (FCP) to tackle data heterogeneity in federated learning. The authors recognize that heterogeneity across clients violates the exchangeability assumption required by conformal prediction, and they address this problem by introducing the notion of partial exchangeability. The FCP framework comes with both theoretical guarantees and empirical evaluations under heterogeneous data, demonstrating its efficacy in improving reliability and accuracy in practical settings. The study thus extends conformal prediction to the federated learning context and offers a realistic way to incorporate meaningful uncertainty quantification in distributed, heterogeneous environments. The empirical evaluations include several medical imaging datasets, highlighting the practical implementation of the FCP framework. In summary, it offers a solution for settings where data privacy and heterogeneity are major considerations.
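The sketch below illustrates how a server could compute a pooled split-CP threshold without collecting raw calibration scores: each client answers only "how many of my scores are at most t?", and the server bisects on t. It is a hypothetical protocol for illustration, not the algorithm of [108]. It assumes that the pooled calibration scores are exchangeable with the test point (precisely the assumption that partial exchangeability relaxes) and that all scores lie in a known range, and it offers no formal privacy guarantee beyond not sharing raw scores; the names `client_count` and `federated_threshold` are hypothetical.

```python
import math
from typing import List, Sequence


def client_count(scores: Sequence[float], t: float) -> int:
    """Runs on a client: only this integer leaves the client."""
    return sum(1 for v in scores if v <= t)


def federated_threshold(clients: List[Sequence[float]], alpha: float,
                        lo: float = 0.0, hi: float = 1e6, tol: float = 1e-6) -> float:
    """Server-side bisection for the pooled split-CP threshold (hypothetical protocol).

    Targets the k-th smallest pooled score, k = ceil((1 - alpha)(N + 1)),
    assuming every score lies in [lo, hi]; the result is rounded up by at most tol.
    """
    n_total = sum(len(c) for c in clients)  # each client reports its calibration size
    k = math.ceil((1 - alpha) * (n_total + 1) - 1e-9)
    if k > n_total:
        return math.inf
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if sum(client_count(c, mid) for c in clients) >= k:
            hi = mid  # at least k pooled scores are <= mid
        else:
            lo = mid  # fewer than k pooled scores are <= mid
    return hi
```

Because the returned value never falls below the exact pooled quantile and exceeds it by at most `tol`, the resulting intervals are slightly conservative; the price is one round of integer counts per bisection step.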

Recent advances have further pushed the boundaries of FCP. Li et al. [