EDAT-BBH: An Energy-Modulated Transformer with Dual-Energy Attention Masks for Binary Black Hole Signal Classification

Abstract

1. Introduction

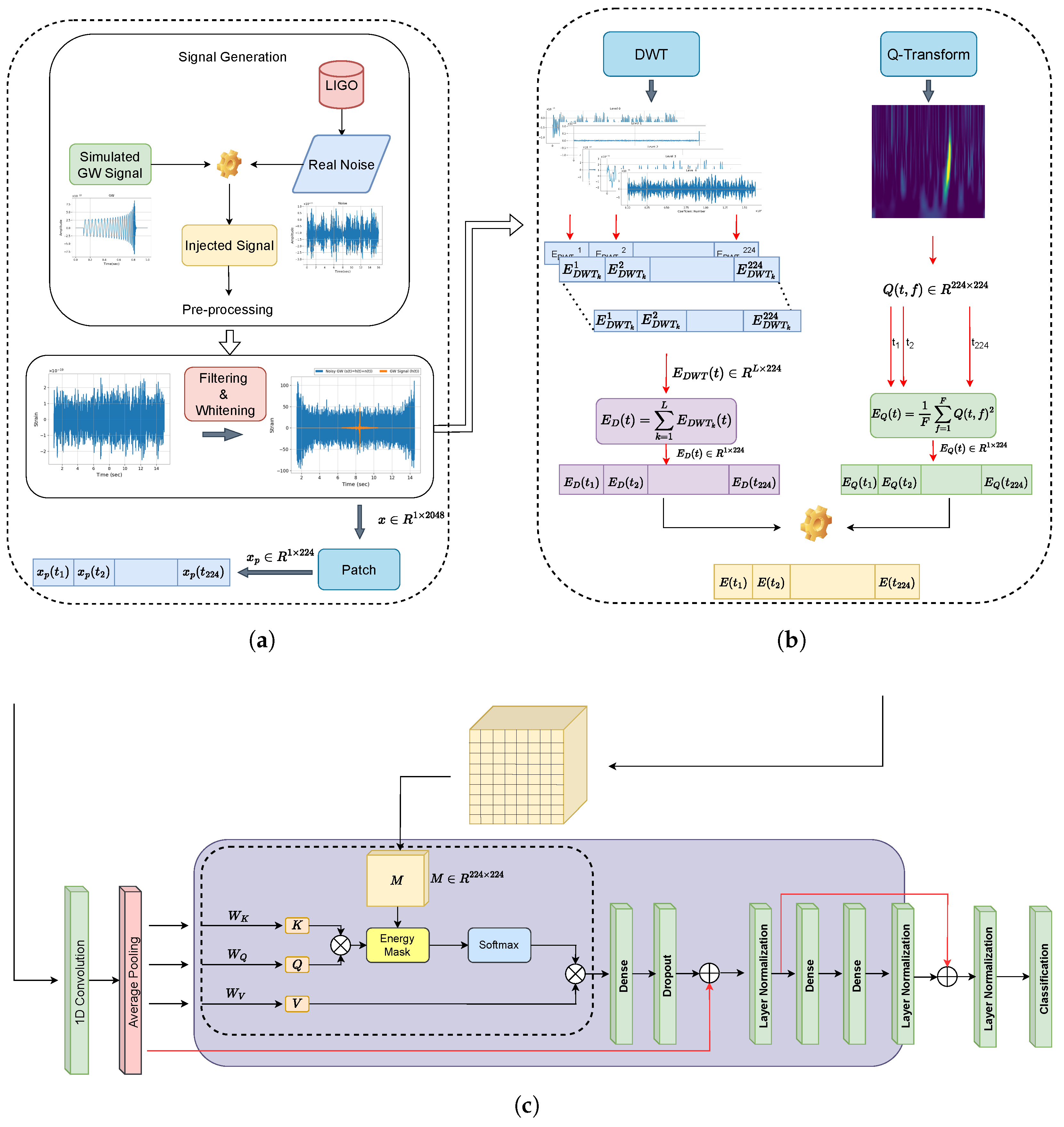

- (1)

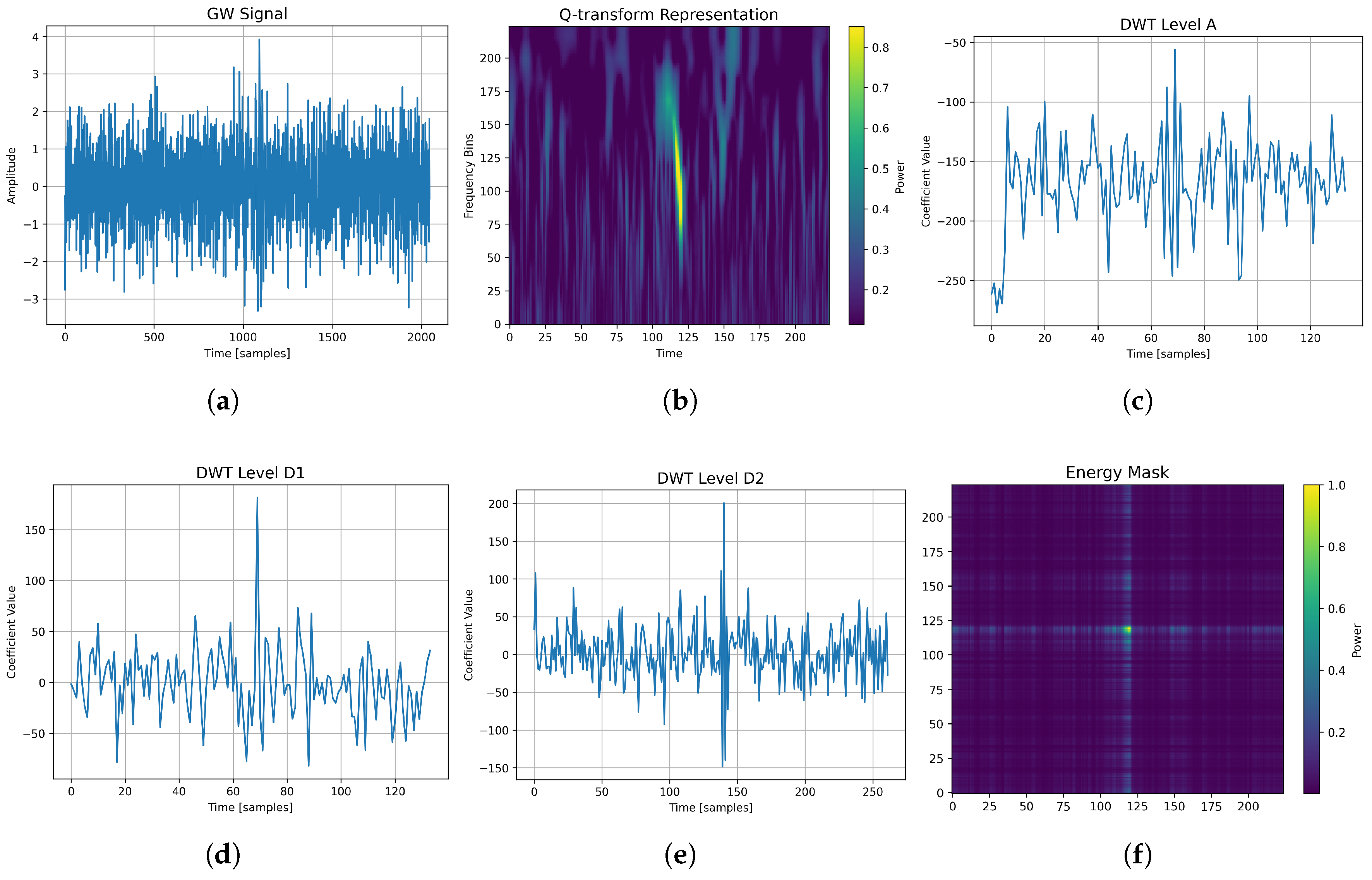

- Development of a Transformer architecture with energy-modulated attention and raw signal patching: This study introduces a modified Transformer framework in which the attention mechanism is guided by physically meaningful energy information extracted from the detector signal. For this purpose, energy vectors obtained from Q-transform and wavelet-based vectors are combined into dual-energy attention masks which dynamically modulate the attention scores in the encoder. This energy-aware attention mechanism allows the model to focus on the regions of the input signal that are rich in discriminative features, enhancing its ability to capture transient and non-Gaussian characteristics typical of gravitational-wave signals. Unlike prior works, we do not treat energy vectors as auxiliary inputs but embed them directly into the Transformer’s attention operation, which results in improved interpretability and enhanced robustness to noise. Additionally, the raw time-series gravitational-wave signal is divided into patches and embedded as the main input sequence in order to allow the model to preserve detailed temporal features.

- (2) Integration of Q-transform and wavelet-based energy representations: By deriving complementary energy representations from both the Q-transform and the wavelet coefficients, the EDAT-BBH model benefits from a combined view of global and local signal structure. This dual-energy strategy yields a representation that is well suited to the complex temporal and spectral patterns found in gravitational-wave data.

- (3)

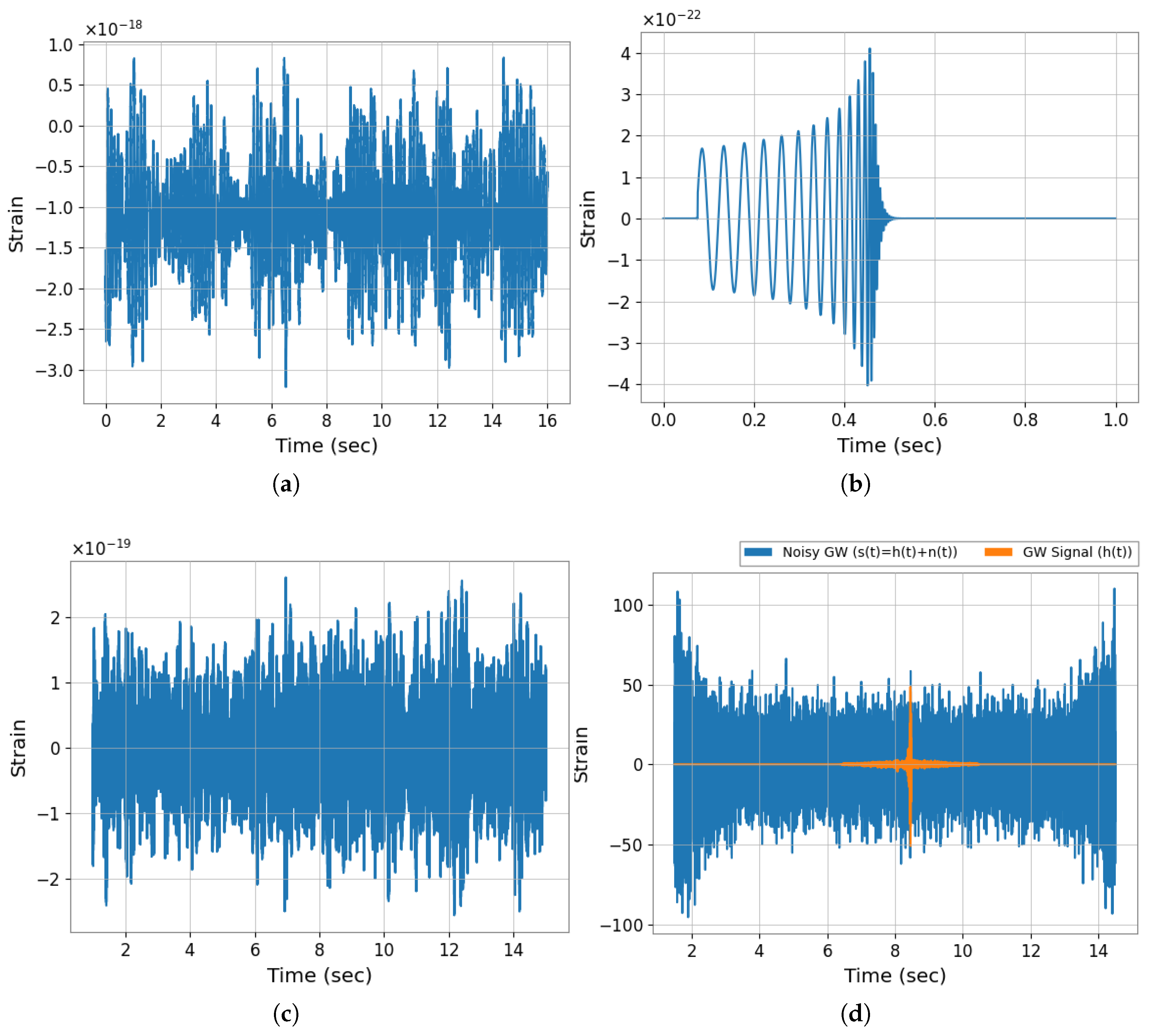

- Training with realistic, non-stationary noise from LIGO detectors: In contrast to many prior studies that rely on simulated noise, our model is trained and validated on data incorporating real non-stationary and non-Gaussian background noise sourced directly from LIGO detectors. This ensures that the model can generalize to real-world detection scenarios.

- (4) Optimization of model architecture and training parameters via Bayesian optimization: Bayesian optimization is used to tune the hyperparameters of the EDAT-BBH model for both classification performance and computational efficiency. Experimental results show that the proposed model outperforms baseline Transformer architectures and conventional classifiers on the reported classification metrics for detecting binary black hole gravitational-wave signals.
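
The energy-modulated attention described in contribution (1) can be illustrated with a small, dependency-free sketch. It is not the paper's implementation: the additive form of the modulation, the min-max normalization, the `strength` parameter, the patch length and stride, and the convention that `alpha` weights the Q-transform energy are all assumptions made for illustration, and the per-patch energies are stand-in values rather than real Q-transform or wavelet outputs.

```python
import math


def make_patches(signal, patch_len, stride):
    """Split a 1-D signal into fixed-length (possibly overlapping) patches."""
    last_start = len(signal) - patch_len
    return [signal[i:i + patch_len] for i in range(0, last_start + 1, stride)]


def min_max(values):
    """Rescale values to [0, 1]; a constant vector maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span > 0 else 0.0 for v in values]


def dual_energy_mask(e_q, e_w, alpha=0.5):
    """Blend two per-patch energy vectors (assumed: alpha weights e_q)."""
    q, w = min_max(e_q), min_max(e_w)
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(q, w)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def modulated_attention(scores, mask, strength=1.0):
    """Add a per-key energy bias to every query row, then normalize.

    Assumption: the mask enters as an additive bias on the raw scores.
    """
    return [
        softmax([s + strength * mask[j] for j, s in enumerate(row)])
        for row in scores
    ]


# Toy example: a 16-sample signal whose third patch holds a short burst.
signal = [0.0] * 8 + [1.0, -1.0, 1.0, -1.0] + [0.0] * 4
patches = make_patches(signal, patch_len=4, stride=4)

# Stand-in energies: sum of squares per patch, plus hypothetical second values.
e_q = [sum(x * x for x in p) for p in patches]
e_w = [0.1, 0.2, 0.9, 0.1]

mask = dual_energy_mask(e_q, e_w, alpha=0.5)
uniform_scores = [[0.0] * len(patches) for _ in patches]
attn = modulated_attention(uniform_scores, mask)
```

With uniform raw scores, every query row ends up placing its largest weight on the high-energy patch, which is the qualitative behavior the contribution describes.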

2. Background and Methods

2.1. Methodology

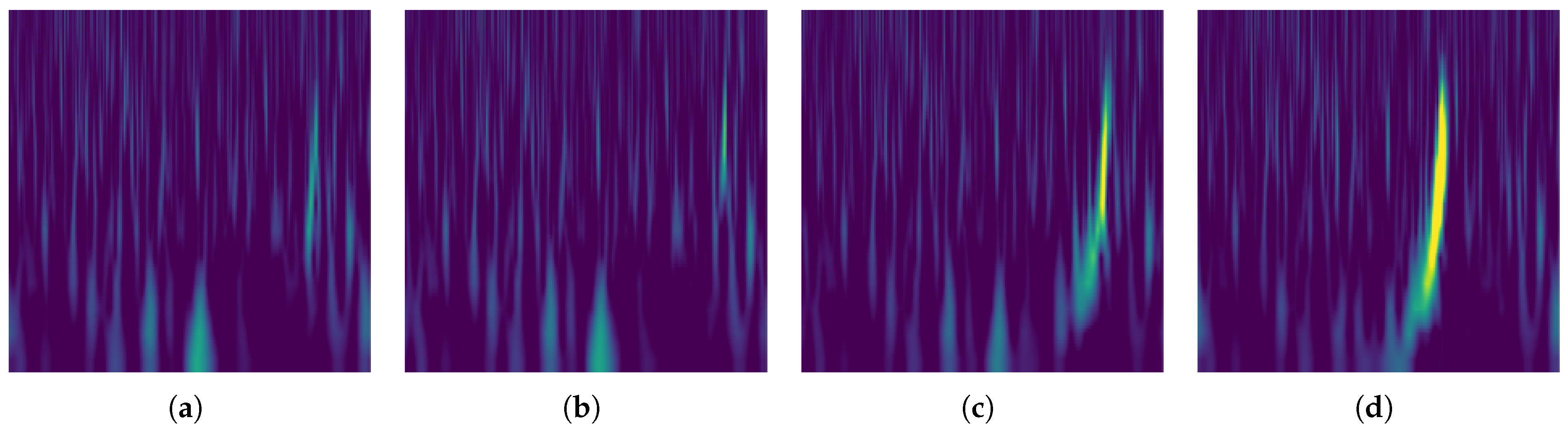

2.2. Q-Transform

2.3. Wavelet Coefficients

2.4. Transformer Architecture and Multi-Head Attention

2.5. Bayesian-Optimized Energy-Modulated Transformer

2.6. Proposed Method

2.7. Signal Patch Embedding and Attention Modulation

Algorithm 1. EDAT-BBH: Energy-Modulated Dual-Attention Transformer for Binary Black Hole Classification

2.8. Classification Output

3. Results

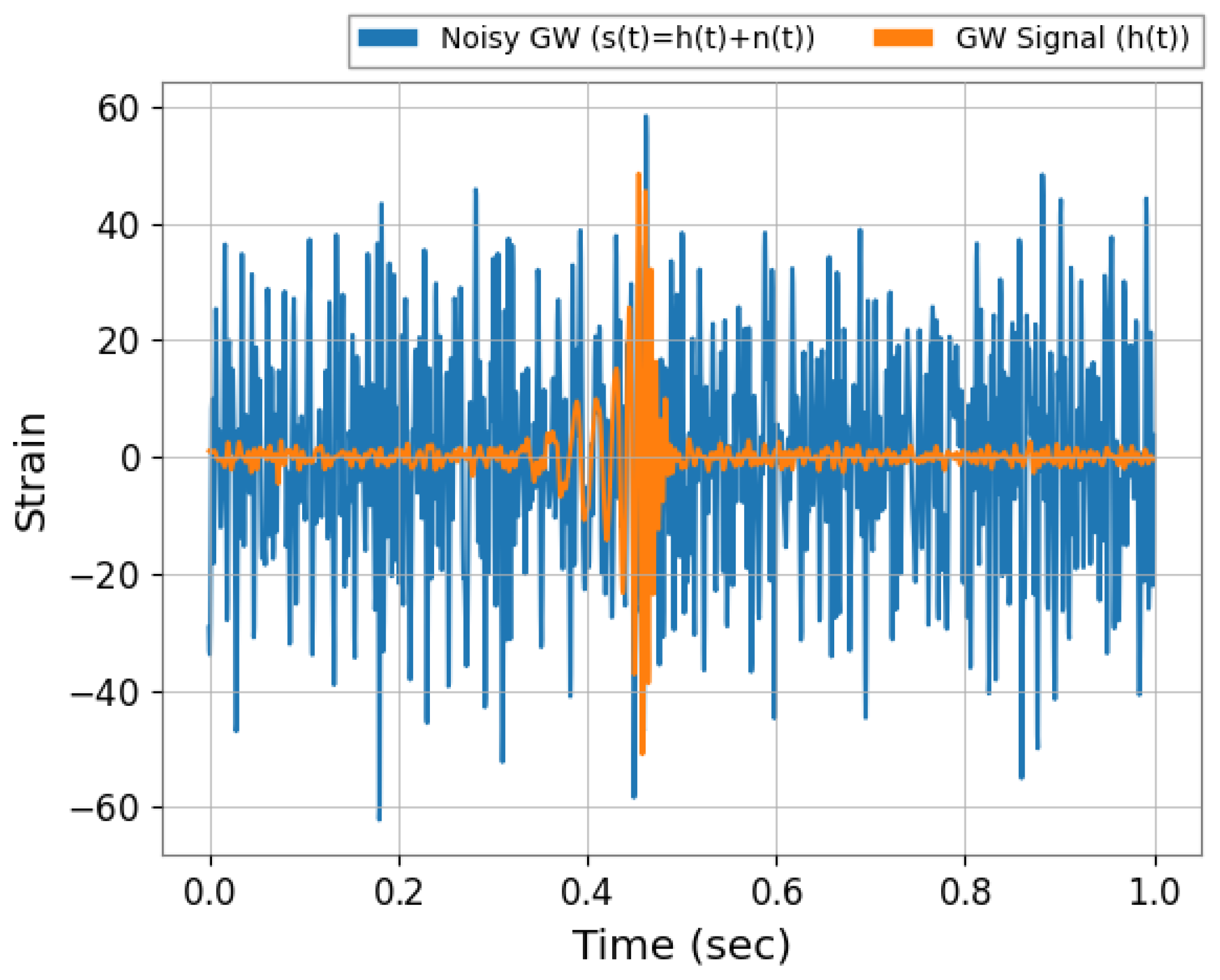

Dataset

4. Discussion

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GW | Gravitational Wave |
| LIGO | Laser Interferometer Gravitational-Wave Observatory |
| BBH | Binary Black Hole |
| GWOSC | Gravitational Wave Open Science Center |

References

- Einstein, A. Approximative Integration of the Field Equations of Gravitation. Sitzungsberichte Königlich Preußischen Akad. Wiss. Berl. (Math. Phys.) 1916, 1916, 688–696.

- Observation of Gravitational Waves from a Binary Black Hole Merger. 2016. Available online: https://journals.aps.org/prl/covers/116/6 (accessed on 23 April 2022).

- Accadia, T.; Acernese, F.; Antonucci, F.; Astone, P.; Ballardin, G.; Barone, F.; Barsuglia, M.; Bauer, T.S.; Beker, M.; Belletoile, A.; et al. Noise from Scattered Light in Virgo’s Second Science Run Data. Class. Quantum Gravity 2010, 27, 194011.

- Abbott, B.P.; Abbott, R.; Abbott, T.; Abernathy, M.; Acernese, F.; Ackley, K.; Adamo, M.; Adams, C.; Adams, T.; Addesso, P.; et al. Characterization of Transient Noise in Advanced LIGO Relevant to Gravitational Wave Signal GW150914. Class. Quantum Gravity 2016, 33, 134001.

- Lopac, N.; Hrzic, F.; Vuksanovic, I.P.; Lerga, J. Detection of Non-Stationary GW Signals in High Noise From Cohen’s Class of Time–Frequency Representations Using Deep Learning. IEEE Access 2022, 10, 2408–2428.

- George, D.; Huerta, E.A. Deep Learning for Real-Time Gravitational Wave Detection and Parameter Estimation: Results with Advanced LIGO Data. Phys. Lett. B 2018, 778, 64–70.

- Benedetto, V.; Gissi, F.; Ciaparrone, G.; Troiano, L. AI in Gravitational Wave Analysis, an Overview. Appl. Sci. 2023, 13, 9886.

- Jiang, L.; Luo, Y. Convolutional Transformer for Fast and Accurate Gravitational Wave Detection. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 46–53.

- Gebhard, T.D.; Kilbertus, N.; Harry, I.; Schölkopf, B. Convolutional Neural Networks: A Magic Bullet for Gravitational-Wave Detection? Phys. Rev. D 2019, 100, 063015.

- Gabbard, H.; Williams, M.; Hayes, F.; Messenger, C. Matching Matched Filtering with Deep Networks for Gravitational-Wave Astronomy. Phys. Rev. Lett. 2018, 120, 141103.

- Kim, K.; Li, T.G.F.; Lo, R.K.L.; Sachdev, S.; Yuen, R.S.H. Ranking Candidate Signals with Machine Learning in Low-Latency Search for Gravitational-Waves from Compact Binary Mergers. Phys. Rev. D 2020, 101, 083006.

- Zevin, M.; Coughlin, S.; Bahaadini, S.; Besler, E.; Rohani, N.; Allen, S.; Cabero, M.; Crowston, K.; Katsaggelos, A.K.; Larson, S.L.; et al. Gravity Spy: Integrating Advanced LIGO Detector Characterization, Machine Learning, and Citizen Science. Class. Quantum Gravity 2017, 34, 064003.

- Powell, J.; Torres-Forné, A.; Lynch, R.; Trifirò, D.; Cuoco, E.; Cavaglià, M.; Heng, I.S.; Font, J.A. Classification Methods for Noise Transients in Advanced Gravitational-Wave Detectors II: Performance Tests on Advanced LIGO Data. Class. Quantum Gravity 2017, 34, 034002.

- George, D.; Shen, H.; Huerta, E. Classification and Unsupervised Clustering of LIGO Data with Deep Transfer Learning. Phys. Rev. D 2018, 97, 101501.

- Cuoco, E.; Razzano, M.; Utina, A. Wavelet-Based Classification of Transient Signals for Gravitational Wave Detectors. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 2648–2652.

- Bişkin, O.T.; Kirbaş, I.; Çelik, A. A Fast and Time-Efficient Glitch Classification Method: A Deep Learning-Based Visual Feature Extractor for Machine Learning Algorithms. Astron. Comput. 2023, 42, 100683.

- Shen, H.; George, D.; Huerta, E.A.; Zhao, Z. Denoising Gravitational Waves with Enhanced Deep Recurrent Denoising Auto-Encoders. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3237–3241.

- Torres-Forné, A.; Marquina, A.; Font, J.A.; Ibáñez, J.M. Denoising of Gravitational Wave Signals via Dictionary Learning Algorithms. Phys. Rev. D 2016, 94, 124040.

- Wei, W.; Huerta, E.A. Gravitational Wave Denoising of Binary Black Hole Mergers with Deep Learning. Phys. Lett. B 2020, 800, 135081.

- Vajente, G.; Huang, Y.; Isi, M.; Driggers, J.C.; Kissel, J.S.; Szczepańczyk, M.J.; Vitale, S. Machine-Learning Nonstationary Noise out of Gravitational-Wave Detectors. Phys. Rev. D 2020, 101, 042003.

- Yan, R.Q.; Liu, W.; Yin, Z.Y.; Ma, R.; Chen, S.Y.; Hu, D.; Wu, D.; Yu, X.C. Gravitational Wave Detection Based on Squeeze-and-Excitation Shrinkage Networks and Multiple Detector Coherent SNR. Res. Astron. Astrophys. 2022, 22, 115008.

- Chaturvedi, P.; Khan, A.; Tian, M.; Huerta, E.; Zheng, H. Inference-Optimized AI and High Performance Computing for Gravitational Wave Detection at Scale. Front. Artif. Intell. 2022, 5, 828672.

- Goyal, S.; Kapadia, S.J.; Ajith, P. Rapid Identification of Strongly Lensed Gravitational-Wave Events with Machine Learning. Phys. Rev. D 2021, 104, 124057.

- Moreno, E.A.; Borzyszkowski, B.; Pierini, M.; Vlimant, J.R.; Spiropulu, M. Source-Agnostic Gravitational-Wave Detection with Recurrent Autoencoders. Mach. Learn. Sci. Technol. 2022, 3, 025001.

- Krastev, P.G. Real-Time Detection of Gravitational Waves from Binary Neutron Stars Using Artificial Neural Networks. Phys. Lett. B 2020, 803, 135330.

- Schäfer, M.B.; Ohme, F.; Nitz, A.H. Detection of Gravitational-Wave Signals from Binary Neutron Star Mergers Using Machine Learning. Phys. Rev. D 2020, 102, 063015.

- Miller, A.L.; Astone, P.; D’Antonio, S.; Frasca, S.; Intini, G.; La Rosa, I.; Leaci, P.; Mastrogiovanni, S.; Muciaccia, F.; Mitidis, A.; et al. How Effective Is Machine Learning to Detect Long Transient Gravitational Waves from Neutron Stars in a Real Search? Phys. Rev. D 2019, 100, 062005.

- Astone, P.; Cerda-Duran, P.; Di Palma, I.; Drago, M.; Muciaccia, F.; Palomba, C.; Ricci, F. A New Method to Observe Gravitational Waves Emitted by Core Collapse Supernovae. Phys. Rev. D 2018, 98, 122002.

- Iess, A.; Cuoco, E.; Morawski, F.; Powell, J. Core-Collapse Supernova Gravitational-Wave Search and Deep Learning Classification. Mach. Learn. Sci. Technol. 2020, 1, 025014.

- Chan, M.L.; Heng, I.S.; Messenger, C. Detection and Classification of Supernova Gravitational Wave Signals: A Deep Learning Approach. Phys. Rev. D 2020, 102, 043022.

- Rebei, A.; Huerta, E.; Wang, S.; Habib, S.; Haas, R.; Johnson, D.; George, D. Fusing Numerical Relativity and Deep Learning to Detect Higher-Order Multipole Waveforms from Eccentric Binary Black Hole Mergers. Phys. Rev. D 2019, 100, 044025.

- Wang, H.; Wu, S.; Cao, Z.; Liu, X.; Zhu, J.Y. Gravitational-Wave Signal Recognition of LIGO Data by Deep Learning. Phys. Rev. D 2020, 101, 104003.

- Bai, S.; Kolter, J.Z.; Koltun, V. An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling. arXiv 2018, arXiv:1803.01271.

- Schörkhuber, C.; Klapuri, A. Constant-Q Transform Toolbox For Music Processing. In Proceedings of the Sound and Music Computing Conference, Barcelona, Spain, 21–24 July 2010; pp. 3–64.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S. GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding. arXiv 2018, arXiv:1804.07461.

- Dong, L.; Xu, S.; Xu, B. Speech-Transformer: A No-Recurrence Sequence-to-Sequence Model for Speech Recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5884–5888.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Zhou, S.; Lashkov, I.; Xu, H.; Zhang, G.; Yang, Y. Optimized Long Short-Term Memory Network for LiDAR-Based Vehicle Trajectory Prediction Through Bayesian Optimization. IEEE Trans. Intell. Transp. Syst. 2024, 26, 2988–3003.

- The O1 Data Release. 2024. Available online: https://gwosc.org/archive/O1/ (accessed on 8 January 2024).

- Abbott, R.; Abbott, T.D.; Abraham, S.; Acernese, F.; Ackley, K.; Adams, C.; Adhikari, R.X.; Adya, V.B.; Affeldt, C.; Agathos, M.; et al. Open Data from the First and Second Observing Runs of Advanced LIGO and Advanced Virgo. SoftwareX 2021, 13, 100658.

- Trovato, A.; Chassande-Mottin, E.; Bejger, M.; Flamary, R.; Courty, N. Neural network time-series classifiers for gravitational-wave searches in single-detector periods. Class. Quantum Gravity 2024, 41, 125003.

- Joshi, M.; Singh, B.K. Deep learning techniques for brain lesion classification using various MRI (from 2010 to 2022): Review and challenges. Medinformatics 2024.

- Adeyanju, S.A.; Ogunjobi, T.T. Machine Learning in Genomics: Applications in Whole Genome Sequencing, Whole Exome Sequencing, Single-Cell Genomics, and Spatial Transcriptomics. Medinformatics 2024, 2, 1–18.

| Parameter | Value |
|---|---|
| Number of attention heads | 4 |
| Number of attention layers | 2 |
| Feedforward network size | 128 |
| Dropout rate | 0.2 |
| Learning rate | |
| Input signal length | 2048 |
| Number of patches | 224 |
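
The hyperparameters above can be collected in a small configuration object, as in the sketch below. The class name `EDATConfig`, its field names, and the helper `patch_count` are hypothetical. The learning rate is left unset because its value is missing from the table. Since 2048 is not an integer multiple of 224, the sketch also shows the usual sliding-window count formula; the patch length and stride used in the check are illustrative only, one of several combinations that give 224 patches, and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EDATConfig:
    """Hypothetical container for the tabulated hyperparameters."""
    num_heads: int = 4
    num_layers: int = 2
    ffn_size: int = 128
    dropout: float = 0.2
    learning_rate: Optional[float] = None  # value not given in the table
    signal_length: int = 2048
    num_patches: int = 224


def patch_count(signal_length: int, patch_len: int, stride: int) -> int:
    """Number of full sliding-window patches when no padding is applied."""
    if patch_len > signal_length:
        return 0
    return (signal_length - patch_len) // stride + 1


cfg = EDATConfig()

# Illustrative values only: a 41-sample window with stride 9 happens to
# produce 224 patches from 2048 samples; the paper's setting may differ.
example = patch_count(cfg.signal_length, patch_len=41, stride=9)
```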

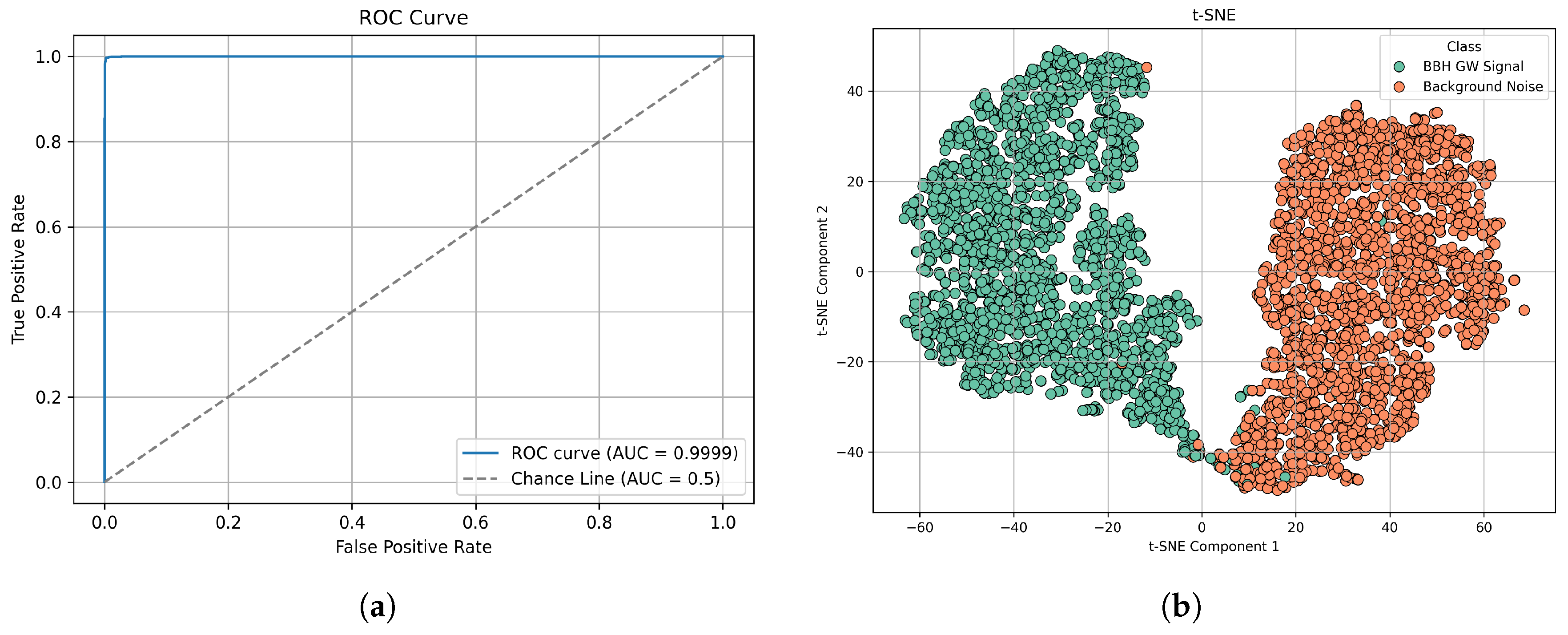

| Model | Accuracy | Precision | Recall | F1-Score | ROC AUC | PR AUC |
|---|---|---|---|---|---|---|
| Base Model [6] | 0.9315 | 0.9720 | 0.8885 | 0.9284 | 0.9679 | 0.9772 |
| TFR–ResNet-101 [5] | 0.9695 | 0.9933 | 0.9553 | 0.9688 | 0.9881 | 0.9916 |
| TFR–Xception [5] | 0.9704 | 0.9951 | 0.9587 | 0.9698 | 0.9881 | 0.9916 |
| TFR–EfficientNet [5] | 0.9710 | 0.9945 | 0.9553 | 0.9703 | 0.9885 | 0.9920 |
| EDAT-BBH | 0.9953 | 0.9956 | 0.9950 | 0.9953 | 0.9999 | 0.9998 |
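
As a reading aid for the table above, the F1-score is the harmonic mean of precision and recall. The short sketch below applies the standard formula to the EDAT-BBH row. Metrics that are averaged over several runs need not satisfy this identity exactly, so it is a sanity check rather than a requirement on every row.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# EDAT-BBH row: precision 0.9956, recall 0.9950
edat_f1 = f1_score(0.9956, 0.9950)
print(round(edat_f1, 4))  # prints 0.9953
```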

| Model | F1-Score | AUC |
|---|---|---|
| CoT-temporal [8] | 0.9140 | 0.9350 |
| CoT-spectral [8] | 0.9160 | 0.9380 |
| EDAT-BBH | 0.9953 | 0.9999 |

| Metric | Stat | Only Q-Transform | (1 − α) = 0.2 | (1 − α) = 0.8 | Only DWT | (1 − α) = 0.5 |
|---|---|---|---|---|---|---|
| Accuracy (%) | Mean | 99.15 | 99.36 | 99.34 | 99.32 | 99.53 |
| | Std | 0.68 | 0.23 | 0.32 | 0.15 | 0.09 |
| | Min | 97.95 | 99.10 | 98.78 | 99.10 | 99.45 |
| | Max | 99.65 | 99.60 | 99.58 | 99.48 | 99.63 |
| | CI Lower | 98.30 | 99.07 | 98.94 | 99.13 | 99.38 |
| | CI Upper | 99.99 | 99.65 | 99.73 | 99.50 | 99.68 |
| Precision | Mean | 0.9882 | 0.9901 | 0.9911 | 0.9934 | 0.9956 |
| | Std | 0.0144 | 0.0057 | 0.0076 | 0.0049 | 0.0022 |
| | Min | 0.9633 | 0.9833 | 0.9775 | 0.9871 | 0.9925 |
| | Max | 0.9980 | 0.9970 | 0.9950 | 1.0000 | 0.9980 |
| | CI Lower | 0.9703 | 0.9830 | 0.9816 | 0.9840 | 0.9920 |
| | CI Upper | 1.0000 | 0.9972 | 1.0000 | 1.0000 | 0.9992 |
| Recall | Mean | 0.9950 | 0.9972 | 0.9957 | 0.9929 | 0.9950 |
| | Std | 0.0040 | 0.0021 | 0.0022 | 0.0063 | 0.0024 |
| | Min | 0.9885 | 0.9945 | 0.9925 | 0.9820 | 0.9915 |
| | Max | 0.9990 | 0.9995 | 0.9985 | 0.9975 | 0.9975 |
| | CI Lower | 0.9901 | 0.9946 | 0.9930 | 0.9851 | 0.9920 |
| | CI Upper | 0.9999 | 0.9998 | 0.9984 | 1.0000 | 0.9988 |
| F1-Score | Mean | 0.9915 | 0.9936 | 0.9934 | 0.9931 | 0.9953 |
| | Std | 0.0066 | 0.0023 | 0.0032 | 0.0015 | 0.0009 |
| | Min | 0.9799 | 0.9911 | 0.9879 | 0.9909 | 0.9945 |
| | Max | 0.9965 | 0.9960 | 0.9958 | 0.9947 | 0.9963 |
| | CI Lower | 0.9833 | 0.9908 | 0.9895 | 0.9912 | 0.9938 |
| | CI Upper | 0.9998 | 0.9965 | 0.9973 | 0.9950 | 0.9965 |
| ROC AUC | Mean | 0.9998 | 0.9998 | 0.9997 | 0.9998 | 0.9999 |
| | Std | 0.0002 | 0.0002 | 0.0002 | 0.0001 | 0.0001 |
| | Min | 0.9995 | 0.9994 | 0.9995 | 0.9997 | 0.9998 |
| | Max | 0.9999 | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| | CI Lower | 0.9995 | 0.9995 | 0.9995 | 0.9997 | 0.9998 |
| | CI Upper | 1.0000 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
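
Summary statistics of the kind tabulated above (mean, standard deviation, minimum, maximum, and a confidence interval) can be computed from per-run scores as sketched below. This section does not state how many runs were used or how the intervals were obtained, so the five runs, the two-sided 95% Student-t interval, the optional clipping at an upper bound, and the run values themselves are assumptions for illustration.

```python
import math
import statistics

# Two-sided 95% Student-t critical value for 4 degrees of freedom (5 runs).
T_CRIT_95_DF4 = 2.776


def summarize(runs, t_crit=T_CRIT_95_DF4, upper_bound=None):
    """Mean, sample std, min, max and a t-based confidence interval.

    t_crit must match len(runs) - 1 degrees of freedom; the default
    is only valid for exactly five runs.
    """
    mean = statistics.mean(runs)
    std = statistics.stdev(runs)  # sample standard deviation (n - 1)
    half_width = t_crit * std / math.sqrt(len(runs))
    ci_upper = mean + half_width
    if upper_bound is not None:
        ci_upper = min(ci_upper, upper_bound)  # e.g. cap accuracy at 100%
    return {
        "mean": mean,
        "std": std,
        "min": min(runs),
        "max": max(runs),
        "ci_lower": mean - half_width,
        "ci_upper": ci_upper,
    }


# Hypothetical per-run accuracies (%), not taken from the paper.
stats = summarize([99.45, 99.50, 99.52, 99.55, 99.63], upper_bound=100.0)
```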

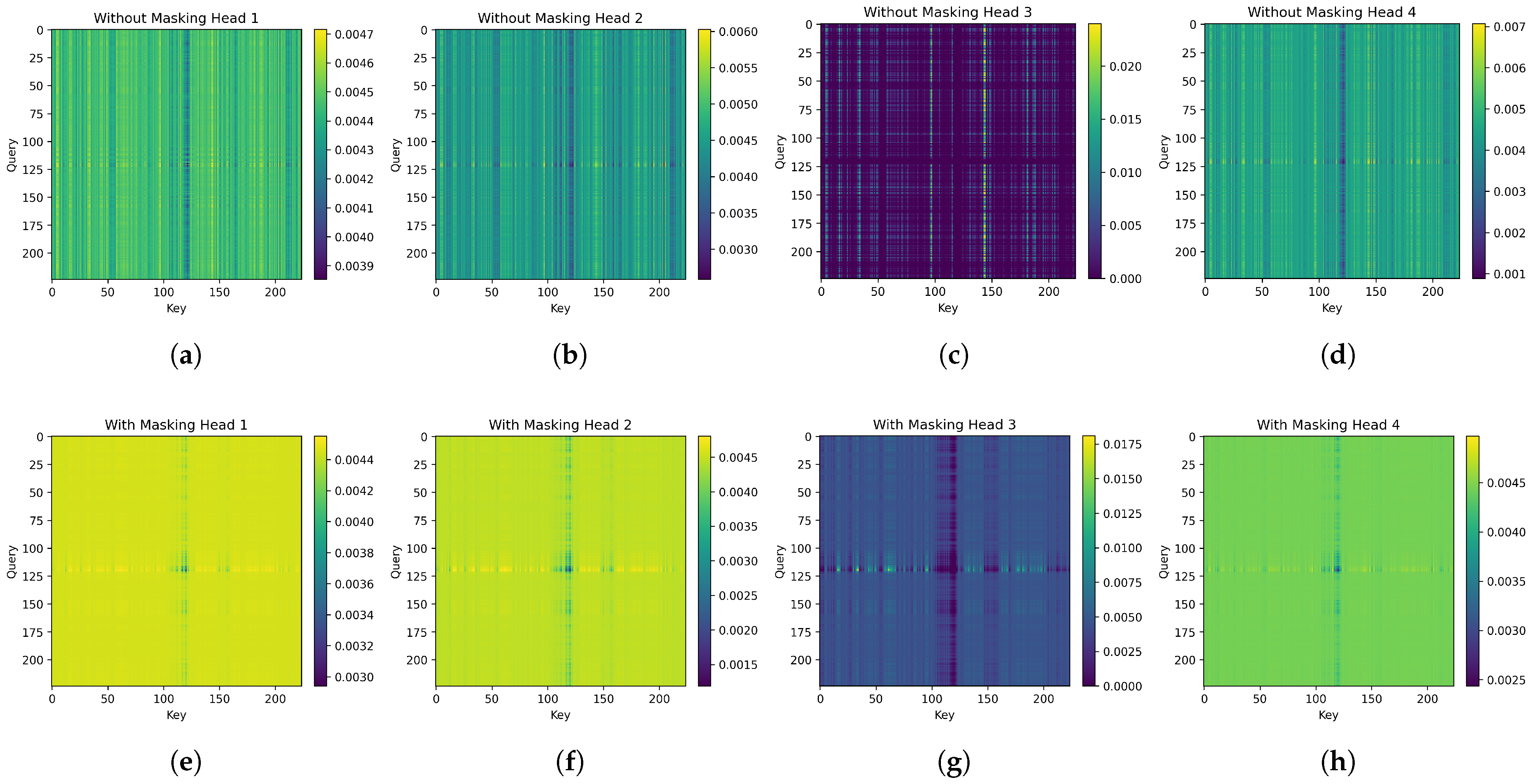

| Metric | No Mask (Mean) | Mask (Mean) | Δ (Mask − No Mask) | p-Value (Wilcoxon) |
|---|---|---|---|---|
| Attention-SNR corr | −0.0778 | 0.3567 | +0.4345 | < |
| IoU | 0.0378 | 0.0519 | +0.0141 | |
| Entropy | 10.8071 | 10.7901 | −0.0169 | < |
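
The three quantities compared above can be computed with textbook definitions, sketched below in plain Python. The exact definitions used in the paper (for example, how attention is thresholded for the IoU or which SNR profile is correlated against) are not given in this section, so the function names and the toy inputs are illustrative assumptions.

```python
import math


def shannon_entropy(weights):
    """Entropy in nats of a non-negative weight vector, after normalization."""
    total = sum(weights)
    probs = [w / total for w in weights if w > 0]
    return -sum(p * math.log(p) for p in probs)


def iou(region_a, region_b):
    """Intersection over union of two equal-length binary masks."""
    inter = sum(1 for a, b in zip(region_a, region_b) if a and b)
    union = sum(1 for a, b in zip(region_a, region_b) if a or b)
    return inter / union if union else 0.0


def pearson(x, y):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


# Toy inputs: attention per patch and a hypothetical per-patch SNR profile.
attention = [0.10, 0.15, 0.60, 0.15]
snr = [0.5, 1.0, 8.0, 1.2]
attended = [w >= 0.25 for w in attention]  # assumed threshold
signal_region = [False, False, True, True]

corr = pearson(attention, snr)
overlap = iou(attended, signal_region)
entropy = shannon_entropy(attention)
```

Lower entropy indicates attention concentrated on fewer positions, while a higher correlation or IoU indicates attention that lines up more closely with the signal-bearing region.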

| Model | Params (M) | FLOPs (G) | Inference (ms) (bs = 1) | Latency Std (ms) (bs = 1) | Throughput (samp/s) (bs = 1) | Inference (ms) (bs = 32) | Latency Std (ms) (bs = 32) | Throughput (samp/s) (bs = 32) |
|---|---|---|---|---|---|---|---|---|
| ResNet101 | 42.660 | 15.195 | 458.012 | 8.76 | 2.2 | 54.505 | 63.32 | 40.7 |
| Xception | 20.864 | 9.134 | 130.520 | 2.77 | 7.6 | 24.247 | 29.29 | 89.9 |
| EfficientNetB0 | 4.051 | 0.800 | 291.348 | 6.25 | 3.4 | 29.459 | 33.42 | 71.1 |
| EDAT (No Mask) | 0.216 | 0.154 | 41.500 | 1.01 | 23.8 | 5.303 | 6.32 | 417.7 |
| EDAT (With Mask) | 0.216 | 0.154 | 42.114 | 1.93 | 23.5 | 3.827 | 4.16 | 512.6 |
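
Latency and throughput figures like those above are typically obtained by timing repeated forward passes after a few warm-up calls. The sketch below shows one generic way to do this with the standard library. The measurement protocol behind the table is not described in this section, so the warm-up count, the number of repeats, the throughput definition, and the dummy workload standing in for a model call are all assumptions.

```python
import statistics
import time


def benchmark(fn, batch_size, warmup=3, repeats=20):
    """Time fn() and report mean latency, its std, and samples per second.

    fn is any zero-argument callable that processes one batch of
    batch_size samples; repeats must be at least 2.
    """
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    times_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times_ms.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.mean(times_ms)
    return {
        "latency_ms": mean_ms,
        "latency_std_ms": statistics.stdev(times_ms),
        # Assumed definition: samples per batch divided by seconds per batch.
        "throughput": batch_size * 1000.0 / mean_ms,
    }


def dummy_forward():
    """Stand-in workload in place of a real model forward pass."""
    return sum(i * i for i in range(10_000))


result = benchmark(dummy_forward, batch_size=32, warmup=2, repeats=10)
```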

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bişkin, O.T. EDAT-BBH: An Energy-Modulated Transformer with Dual-Energy Attention Masks for Binary Black Hole Signal Classification. Electronics 2025, 14, 4098. https://doi.org/10.3390/electronics14204098
