Abstract

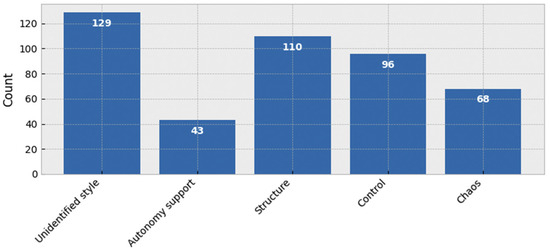

Assessing teaching behavior is essential for improving instructional quality, particularly in Physical Education, where classroom interactions are fast-paced and complex. Traditional evaluation methods such as questionnaires, expert observations, and manual discourse analysis are often limited by subjectivity, high labor costs, and poor scalability. These challenges underscore the need for automated, objective tools to support pedagogical assessment. This study explores and compares the use of Transformer-based language models for the automatic classification of teaching behaviors from real classroom transcriptions. A dataset of over 1300 utterances was compiled and annotated according to the teaching styles proposed in the circumplex approach (Autonomy Support, Structure, Control, and Chaos), along with an additional category for messages in which no style could be identified (Unidentified Style). To address class imbalance and enhance linguistic variability, data augmentation techniques were applied. Eight pretrained BERT-based Transformer architectures were evaluated, covering several pretraining strategies and architectural structures. BETO achieved the highest performance, with an accuracy of 0.78, a macro-averaged F1-score of 0.72, and a weighted F1-score of 0.77. It was particularly strong at identifying challenging utterances labeled as Chaos and Autonomy Support. Other BERT-based models trained purely on Spanish text, such as DistilBERT, also performed competitively, achieving an accuracy above 0.73 and an F1-score of 0.68. These results demonstrate the potential of pretrained Transformer-based language models for objective, scalable classification of teacher behavior and support the feasibility of AI-driven systems for pedagogical feedback.

1. Introduction

One of the most influential factors in the teaching–learning process is the teacher’s behavior in the classroom, as it directly affects students’ motivation and, consequently, their academic performance [1]. Depending on the teacher’s behavior, different teaching styles can be inferred. Studying such behavior makes it possible to examine its effects on students and to propose improvements in how knowledge is transferred.

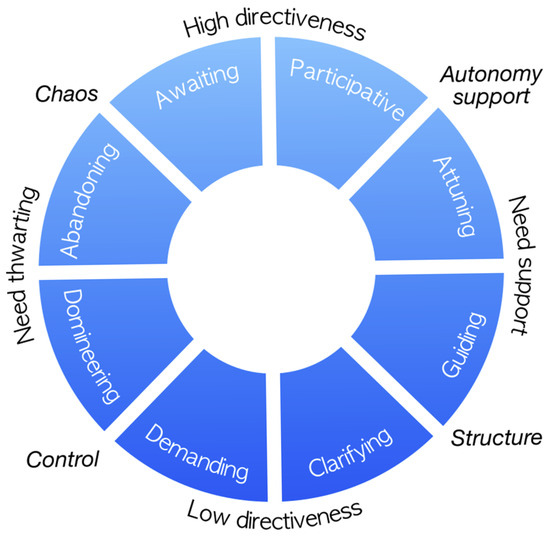

In this context, the present study is grounded in the circumplex model of motivational teaching styles (see Figure 1), which has been proposed within the framework of Self-Determination Theory (SDT) [2]. This circumplex approach posits that teaching styles are not rigid categories but rather fall along a circular continuum defined by two underlying dimensions: (1) the level of directiveness, and (2) the degree of support for, or frustration of, basic psychological needs. These dimensions give rise to four core teaching styles: (i) autonomy support, (ii) structure, (iii) control, and (iv) chaos. This framework allows for the classification of teaching behaviors within a dynamic relational space, offering a more nuanced and realistic alternative to binary categorizations (e.g., motivational vs. demotivational teaching).

Figure 1.

Visual representation of the circumplex model.

These categories are difficult to identify without complete observation of classroom interactions. The automatic acquisition and classification of teacher behavior, although complex, represents a major step toward the continuous improvement of teaching. Within the circumplex model, autonomy-supportive teaching refers to behaviors in which the teacher acknowledges students’ emotions, interests, and perspectives, offers opportunities for meaningful choice, and encourages personal initiative. This style is associated with higher levels of self-determined motivation, active engagement, and psychological well-being. Structured teaching involves clearly guiding the learning process through understandable expectations, step-by-step instructions, and feedback that fosters a sense of competence in the student. Although directive, this style does not impose but rather organizes the environment in a supportive manner. Controlling teaching relies on external pressure, using commands, threats, rewards, or sanctions to enforce specific behaviors. While it may elicit immediate compliance, it is generally linked to lower motivational quality and defensive or disengaged responses. Finally, chaotic teaching is characterized by the absence of clear direction. The teacher provides neither structure nor guidance, often resulting in confusion, anxiety, or disorganization among students. This style is typically observed in contexts where teachers feel overwhelmed, act inconsistently, or display indifference.

This study therefore explores teachers’ speech as a source of data for evaluating teaching styles. This methodological choice is supported by evidence indicating that the verbal messages expressed by teachers are reliable indicators of their classroom behaviors, which in turn are closely linked to student learning outcomes [3]. Analyzing teachers’ speech provides access not only to the instructional content but also to key dimensions of teaching style, such as clarity, emotional tone, types of feedback provided, and motivational orientation, all of which are relevant to understanding the quality of pedagogical interactions.

Traditionally, the evaluation of teaching behaviors has been grounded in expert classroom observations or student self-reports and perceptions [4,5]. While these approaches offer valuable qualitative insights, they suffer from inherent limitations, such as subjectivity, inter-rater variability, and substantial resource demands in terms of time and personnel. To overcome these constraints, the integration of Artificial Intelligence (AI) into the educational field has been gaining momentum in recent years, with applications ranging from personalized learning to the automation of administrative processes [5]. Among the most promising areas of AI applied to education is Natural Language Processing (NLP), which enables the analysis of large bodies of text to extract valuable insights for assessing and improving teaching practices. Nevertheless, despite advances in this domain, the application of such technologies to the analysis of classroom interactions remains a challenging endeavor with considerable potential [6].

Among the most powerful tools in NLP are Transformer-based architectures, which have revolutionized the field by enabling the modeling of long-range dependencies and contextual relationships in textual data. Central to this advancement is the BERT (Bidirectional Encoder Representations from Transformers) model, which introduced a pre-training and fine-tuning paradigm that significantly improved performance across a wide range of downstream tasks. BERT and its derivatives, such as RoBERTa, DistilBERT, and ALBERT, have demonstrated strong results in educational applications, including automatic exam scoring, emotion recognition in instructional narratives, feedback generation, and dialogue classification in virtual learning platforms [7,8].

Despite these successes, the application of BERT-based models to the analysis of live classroom interactions remains relatively underexplored. Modeling real-world classroom discourse presents unique challenges: utterances tend to be informal, fragmented, and context-dependent; linguistic styles vary with student age and subject matter; and motivational teaching behaviors are often subtle and diffuse across sequences of speech. These complexities raise important questions regarding the suitability and adaptability of Transformer-based models for this domain [8]. The present study seeks to address this research gap by systematically evaluating the performance of multiple Transformer architectures in the task of classifying teacher utterances into predefined motivational categories. By comparing different BERT variants and Transformer-based models, this paper aims to identify which architectures offer the best trade-off between accuracy, generalizability, and computational efficiency in classroom settings. Furthermore, the influence of utterance characteristics (such as length, syntactic complexity, and pragmatic markers) on model performance is studied.

Given the data-hungry nature of Transformer models, particular attention is devoted to constructing a high-quality, task-specific dataset [9]. This paper presents the results of several hours of teacher-student interactions, transcribed and expertly labeled to form a unique database. To mitigate potential data scarcity and enhance model robustness, generative AI techniques for data augmentation have been employed, producing synthetically varied utterances that retain pedagogical intent while expanding lexical and structural diversity [10]. While recent literature underscores the growing potential of Transformer-based models in education [6,7,8,9,10], most existing studies are limited to structured, online, or semi-scripted scenarios. Empirical evidence on their deployment in authentic, dynamic classroom environments—particularly for tasks requiring nuanced behavioral inference, such as identifying motivational strategies in teacher discourse—remains scarce. This gap represents a critical limitation in understanding the practical applicability of these models. Therefore, this study provides novel insights into the capabilities and constraints of BERT-based models for educational behavior analysis, offering implications for scalable, objective tools to support teacher professional development and classroom feedback systems. Specifically, this work addresses this gap by investigating the performance of multiple Transformer-based architectures in classifying teacher utterances into motivational categories defined by Self-Determination Theory. The study focuses on the following key research questions:

- (1) How accurately can Transformer models classify teacher behaviors from real classroom transcripts into predefined motivational categories?

- (2) To what extent can data augmentation techniques based on generative language models improve classification performance in the presence of class imbalance?

- (3) How does the length of teacher utterances affect model performance, and what preprocessing strategies might be required to optimize outcomes?

- (4) Which Transformer variants offer the best trade-off between accuracy and computational efficiency for this task?

The contributions of this paper are, first, a novel, annotated dataset of teacher utterances from real Physical Education classes, categorized according to a theoretically grounded motivational framework. Second, it provides a comparative evaluation of multiple Transformer models under varying training conditions and preprocessing strategies. Third, it demonstrates the practical value of synthetic data generation through large language models as a means of enhancing model robustness and generalization in low-resource educational settings. By addressing these challenges, the study contributes to the development of scalable, objective tools for analyzing instructional behavior, opening avenues for future AI-powered feedback systems to support evidence-based teaching practices.

The remainder of this paper is organized as follows. Section 2 reviews related work in the field of Natural Language Processing (NLP), with a particular focus on the application of BERT-based models to educational settings. Section 3 describes the methodology employed, including the construction and annotation of the dataset, the selection of model architectures, and the preprocessing and training procedures. Section 4 presents and analyzes the results obtained across different models and configurations, including the impact of data augmentation and utterance length. Finally, Section 5 summarizes the main findings and discusses future research directions aimed at enhancing the integration of artificial intelligence in classroom-based teaching assessment.

2. Previous Works

Natural Language Processing (NLP) has been increasingly applied in education over the past decade, offering powerful tools to analyze and support teaching and learning. By enabling computers to interpret students’ and teachers’ language data, NLP can automatically extract insights from unstructured text (or speech) that were previously accessible only through labor-intensive human analysis [11]. Educational environments generate vast textual data—from student essays and responses to classroom transcripts—and NLP techniques can help leverage this data to enhance instructional processes [12]. NLP in education holds enormous potential to improve feedback, assessment, and personalized learning by handling language-intensive tasks at scale.

Applications of NLP in education are diverse, ranging from content analysis to interactive learning support. For example, NLP systems can automatically highlight key points in textbooks or lesson materials, helping teachers and students focus on core concepts. They can offer intelligent tutoring functionalities, such as recommending learning resources or guiding students through practice questions based on their queries [13]. Another important application is automated assessment: NLP models are used for grading open-ended assignments like essays or short answers, providing quick and consistent evaluation of student work. Likewise, NLP-driven tools perform grammar and writing error correction, enabling students to receive instant feedback on language use. For instance, recent research introduced a transformer-based writing feedback system that delivers real-time suggestions on grammar, vocabulary, style, and coherence, significantly improving students’ writing quality [14]. NLP has also been employed to analyze free-response answers in science education—for example, to automatically detect misconceptions in students’ explanations, an approach that can mirror human experts in identifying gaps in understanding [15]. By handling tasks such as scoring, error detection, and content generation, NLP technologies save educators time and support more personalized, adaptive learning experiences.

Another emerging area is the use of NLP to analyze classroom discourse and teaching behavior. Researchers have begun leveraging NLP to process transcripts of classroom interactions, aiming to identify instructional strategies and provide feedback to teachers [16]. For instance, automated classifiers can detect specific “talk moves” or dialogic techniques in teacher–student dialogues, such as asking open-ended questions or building on student answers [17]. By analyzing the patterns in teachers’ utterances, these systems can generate feedback dashboards that summarize teaching practices and highlight examples from the teacher’s own dialogue [16]. Such tools can encourage reflective teaching and continuous improvement by objectively measuring aspects of pedagogy that were traditionally assessed through subjective observation. Notably, NLP-based discourse analysis has been applied in varied subject areas (e.g., math, language arts), and even in domains like Physical Education (PE), where classroom interactions are fast-paced and multimodal. In PE settings, conventional observation methods face challenges due to the high activity and complex, real-time interactions. Studies have pointed out that evaluating teaching behaviors in PE has relied heavily on manual coding, which is inefficient and prone to subjectivity [18]. This has motivated the use of AI and NLP techniques to support PE teaching assessment. By transcribing and analyzing verbal interactions in PE classes, NLP can help classify teaching behavior or style in a more scalable and objective manner. In fact, recent work suggests that AI-driven systems can enable real-time data collection, automated annotation, and in-depth analysis of PE teaching behaviors, offering a sustainable approach to improving instructional quality in Physical Education [18].

Ultimately, NLP represents a powerful yet delicate instrument for educational innovation. The aim is not to replace teachers but to complement their practice by offering scalable and objective tools that support pedagogy while respecting the diversity and complexity of real classrooms [19]. The true potential of NLP lies in its alignment with pedagogical goals, ensuring that technological advancement serves to enhance, rather than standardize or constrain, educational processes.

BERT and Transformer Models in Education

The advent of Transformer-based language models like BERT (Bidirectional Encoder Representations from Transformers) has revolutionized NLP, and educational applications are no exception. Since around 2017–2018, Transformers have set new state-of-the-art performance on many language tasks, bringing those benefits into the education domain. A key advantage of Transformers is their ability to be pre-trained on large text corpora and then fine-tuned for specific tasks, which has proven especially powerful for the types of limited-data problems often found in education [20]. Research has demonstrated that pretrained models such as BERT can significantly boost accuracy in educational NLP tasks compared to earlier approaches. For example, in [20], it was shown that a fine-tuned BERT model outperformed both traditional machine learning and other deep learning architectures in classifying segments of teachers’ reflective writings. Notably, BERT began to surpass previous models’ performance even with as little as 20–30% of the training data used, illustrating how transfer learning with Transformers can achieve high-quality results from relatively small educational datasets. This data efficiency is crucial in education, where annotated data (such as labeled student answers or teacher utterances) can be costly to obtain at scale. Overall, the introduction of Transformers has enabled more robust and generalizable models for educational text, reducing the need for hand-crafted features and rule-based analysis.

Transformer-based models have been applied across a growing range of educational use cases with great success. In automated writing evaluation, for instance, BERT and similar models form the backbone of advanced feedback systems. The previously mentioned study on AI writing feedback leveraged a fine-tuned BERT to provide multi-level critiques on student essays (grammar, structure, coherence), something unattainable with older rule-based tools [16]. In the area of classroom discourse analysis, researchers have used BERT-based classifiers to detect nuanced dialogue acts and teaching strategies. It has been demonstrated that pre-trained embeddings combined with classifiers can identify six types of teacher characteristics (e.g., guiding discussions or pressing for reasoning) in classroom transcripts [15]. Follow-up work found that a Transformer-based model fine-tuned on teacher–student dialogue data outperformed feature-engineered baselines in recognizing these pedagogical moves. Even lightweight Transformer variants have shown effectiveness in education: for example, DistilBERT [21] (a compressed BERT model) has been successfully fine-tuned on classroom data to classify teacher utterances and student contributions. The consistent theme is that BERT and its relatives capture linguistic context and pedagogical nuances better than earlier methods, yielding higher accuracy in tasks like categorizing dialogue turns, scoring answers, or predicting student outcomes. Moreover, the capacity of Transformer models to consider longer contexts (thanks to self-attention) is valuable for educational data, where the meaning of a student’s response or a teacher’s question may depend on surrounding context or prior discourse [22].

An important development is the adaptation of Transformer models to multiple languages and specific domains in education. While the original BERT was trained on English, the approach has been extended to many languages, enabling educational NLP research in non-English settings. In the Spanish language, for example, a monolingual Transformer model called BETO was introduced as the Spanish equivalent of BERT. BETO [23] was trained on a large Spanish corpus and outperformed the multilingual BERT on Spanish NLP benchmarks. This indicates that using language-specific Transformer models can yield better results than one-size-fits-all multilingual models, due to more tailored vocabulary and language structure understanding. For educational applications, this is highly relevant—it means that analyses of Spanish classroom text or student writing can leverage BETO to improve accuracy in capturing nuances of Spanish educational discourse. Indeed, in a Physical Education context where our dataset consists of Spanish classroom utterances, using BETO (along with other BERT-based architectures) has proven advantageous for classifying teaching behaviors. More broadly, domain-specific pretraining or fine-tuning is a growing trend: researchers are beginning to adapt Transformers to the unique vocabulary of disciplines or the informal language of student conversations. By combining pretraining on general language with fine-tuning on educational data, these models achieve strong performance with manageable data requirements. The feasibility of Transformer models for education is evident in their results—studies report high accuracy and F1-scores on tasks like classifying teacher feedback styles [24], predicting essay scores, and detecting whether student answers contain misconceptions. As a result, BERT and similar models are quickly becoming standard tools in the educational data mining and learning analytics communities. 
They enable more objective, scalable, and insightful analysis of educational language data, from written texts to spoken classroom interactions, thereby opening the door for AI-driven systems that support teachers and learners in real time. Table 1 displays the summary of the studies cited in this subsection.

Table 1.

Summary of previous studies applying BERT and Transformer Models in Education.

3. Materials and Methods

Based on our research questions, we formulate the following hypotheses to guide the methodological approach: (H1) Transformer models can accurately classify teacher behaviors from real classroom transcripts into predefined motivational categories; (H2) data augmentation using generative language models improves classification performance, particularly for underrepresented classes; (H3) the length of teacher utterances significantly influences model performance, and appropriate preprocessing strategies can optimize outcomes; and (H4) different Transformer variants exhibit varying trade-offs between accuracy and computational efficiency, enabling the identification of models best suited for practical deployment. These hypotheses underpin the design of our experiments, encompassing dataset construction, preprocessing pipelines, augmentation strategies, model selection, and evaluation metrics. In the following sections, we first describe the composition of the dataset and the data augmentation techniques applied, then present the BERT-based methodology, including the selected subvariants for comparison, and finally detail the hyperparameter tuning and model training procedures.

3.1. Dataset

The dataset used in this study consists of transcriptions from 34 real-world Physical Education lessons, collected in Spanish secondary schools and annotated by trained pedagogical experts with experience in analyzing classroom discourse. These recordings were part of a broader research project focused on classroom communication and its relationship to teachers’ motivational styles. Each lesson was recorded in full, from beginning to end, using lapel microphones to ensure high-quality capture of the teacher’s voice, minimize background noise, and improve speech clarity. The resulting audio files were transcribed using Whisper [25], a state-of-the-art Automatic Speech Recognition (ASR) model. Whisper is a multilingual, general-purpose model trained on a large corpus of diverse audio-text pairs and has demonstrated strong performance across multiple languages and recording conditions. The model uses an encoder–decoder Transformer architecture that maps audio input to text. It supports robust transcription even in noisy environments and is particularly effective in low-resource or domain-specific contexts. In this study, Whisper was used to generate an initial transcript of each session, producing time-aligned utterances directly from the audio signals, based on acoustic features such as pauses and prosody [25]. In most cases, this automatic segmentation aligned well with the natural flow of the interaction. However, to ensure consistency and pedagogical relevance for subsequent analysis, the transcripts were reviewed and, when necessary, manually re-segmented into individual utterances or minimal pedagogically meaningful expressions. Modifications were guided by team-agreed criteria, which defined minimal pedagogically meaningful units as those that (a) convey a complete instructional idea or feedback move, (b) align with natural linguistic boundaries (e.g., clauses or sentences), and (c) can be independently interpreted for annotation purposes.

The resulting 22,262 utterances were annotated by a panel of seven experts, under the guidance of two PhDs in Physical Activity and Sport Sciences, both with prior teaching experience in secondary-level Physical Education. Each session was independently annotated by two of the experts. In cases where the two annotators assigned different labels to an utterance, a third expert (one of the two PhD-level supervisors) was consulted to make the final decision. Annotation followed the teaching styles proposed by the circumplex model, grounded in Self-Determination Theory, using the following five-class scheme of motivational orientations:

- Autonomy Support: Messages that promote choice, student initiative, or personal expression.

- Structure: Phrases that provide guidance, clear explanations, expectations, or structured feedback.

- Control: Authoritative interventions involving imposition, threats, or constraints on student autonomy.

- Chaos: Disorganized, ambiguous, or pedagogically irrelevant utterances.

- Unidentified Style: Utterances that do not clearly fit any defined motivational category.

Table 2 presents a set of example utterances from the annotated dataset.

Table 2.

Sample utterances and their corresponding labels.

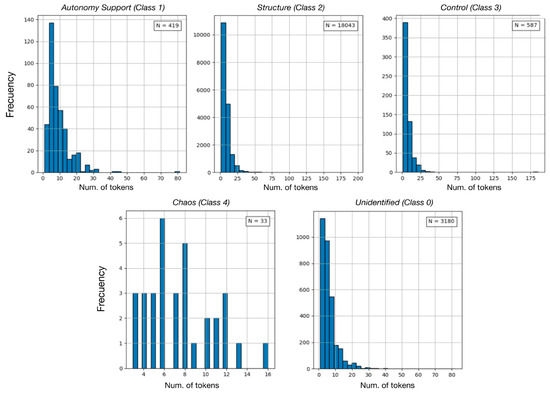

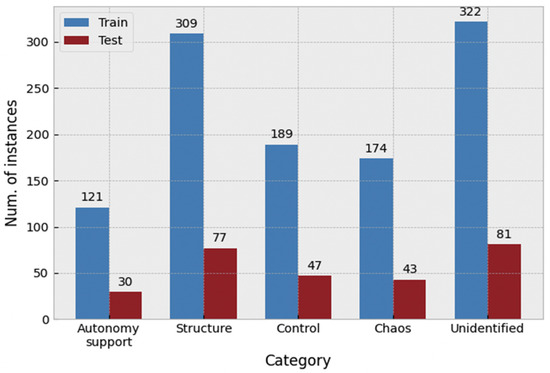

Disagreements between annotators were resolved through discussion. In cases where consensus could not be reached, a third expert adjudicated the final label. This protocol ensured consistent labeling across the dataset and reinforced its reliability for training automatic classification models. As shown in Figure 2, the dataset exhibits a pronounced class imbalance: Structure comprises over 18,000 examples, whereas Chaos contains only a few dozen. This imbalance poses a challenge for model training, as it can bias predictions toward majority classes while degrading performance on underrepresented ones. Furthermore, utterance length, measured in tokens, varies considerably across categories, which may affect classification accuracy. To address these issues, data augmentation and additional preprocessing steps were applied to balance class distributions and improve model generalization. For testing purposes, a single session out of the 34 was held out entirely from the training and validation processes. This reserved session served as the final test set, enabling objective evaluation of model generalization on unseen data.
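The session-level hold-out described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the `session_id` field, the record layout, the toy utterances, and the choice of held-out session are all assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical record layout: {"session_id": int, "text": str, "label": str}
Utterance = Dict[str, object]


def hold_out_session(
    utterances: List[Utterance], test_session: int
) -> Tuple[List[Utterance], List[Utterance]]:
    """Split records so that one entire session forms the test set."""
    train_val = [u for u in utterances if u["session_id"] != test_session]
    test = [u for u in utterances if u["session_id"] == test_session]
    return train_val, test


# Toy records, invented for illustration only.
data: List[Utterance] = [
    {"session_id": 1, "text": "First, we pass the ball.", "label": "Structure"},
    {"session_id": 1, "text": "Choose your own partner.", "label": "Autonomy Support"},
    {"session_id": 2, "text": "Do it now or you sit out.", "label": "Control"},
    {"session_id": 3, "text": "Next, we move to another hoop.", "label": "Structure"},
]

train_val, test = hold_out_session(data, test_session=2)
```

Splitting by session rather than by utterance keeps every utterance from the reserved lesson out of training and validation, so the test score is not inflated by near-duplicate phrasing from the same lesson.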

Figure 2.

Dataset class distribution prior to augmentation.

3.2. Data Augmentation

The quality and quantity of the training dataset are critical factors influencing model performance in supervised learning. Initially, the dataset consisted of 22,262 examples. The four circumplex categories were represented as follows: Structure (18,043 examples), Autonomy Support (410 examples), Control (587 examples), and Chaos (33 examples). Categories with very few examples (Autonomy Support, Control, and Chaos) were considered insufficient for stable model training, as they provided too few instances to capture meaningful patterns. For instance, Chaos contained only 33 utterances, many of which were extremely short or lacked clear motivational content (e.g., “Sit here” or “Wait your turn”), while Control and Autonomy Support examples often consisted of short, directive sentences (“Do this exercise,” “Follow the instructions”), making it difficult for the model to learn robust behavioral patterns. To address class imbalance, we applied a combination of undersampling and generative data augmentation techniques. Overrepresented categories (Structure) were downsampled, while generative AI (ChatGPT-4) was used to create additional examples for underrepresented classes. After balancing, each category contained 600 examples, except for Structure, which retained 700 examples due to its initially high representation, resulting in a total of 3100 examples. The target of 600 examples per category was chosen as a practical balance: it is large enough to cover the linguistic and behavioral diversity within each class and to let the model learn meaningful patterns for underrepresented classes, yet small enough for domain experts to review each generated example carefully. All generated utterances underwent rigorous review by two domain experts, one in physical education and another in the circumplex approach, and only those agreed to plausibly occur in authentic classroom interactions were retained, ensuring both label fidelity and ecological validity. Next, consecutive examples labeled with the same category were merged into single examples to better reflect coherent teacher turns. For instance, a sequence like “I will explain the activity step by step (Structure). First, we pass the ball (Structure). Next, we move to another hoop (Structure)” was merged into one example labeled as Structure. After this consolidation, the final dataset consisted of 1393 examples, which were used for all subsequent model training and evaluation. This process of augmentation, expert validation, and consolidation not only mitigates class imbalance but also preserves the integrity and realism of classroom discourse, producing a robust and high-quality dataset for training Transformer-based models.
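The balancing arithmetic and the consolidation of consecutive same-label examples can be illustrated with a short sketch. It assumes the reported per-class counts and targets apply directly to the full annotated set. The helper names `balancing_plan` and `merge_consecutive` are hypothetical, and the final Autonomy Support utterance in the sample is invented for the example.

```python
from itertools import groupby
from typing import Dict, List, Tuple

# Counts reported in the text for the four circumplex categories.
original_counts: Dict[str, int] = {
    "Structure": 18043,
    "Autonomy Support": 410,
    "Control": 587,
    "Chaos": 33,
}
# Post-balancing targets stated in the text.
targets: Dict[str, int] = {
    "Structure": 700,
    "Autonomy Support": 600,
    "Control": 600,
    "Chaos": 600,
}


def balancing_plan(counts: Dict[str, int], goal: Dict[str, int]) -> Dict[str, int]:
    """Per class: examples to generate (positive) or to drop (negative)."""
    return {name: goal[name] - counts[name] for name in counts}


def merge_consecutive(examples: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Merge runs of adjacent (text, label) pairs that share the same label."""
    merged: List[Tuple[str, str]] = []
    for label, run in groupby(examples, key=lambda pair: pair[1]):
        merged.append((" ".join(text for text, _ in run), label))
    return merged


plan = balancing_plan(original_counts, targets)

turns = [
    ("I will explain the activity step by step.", "Structure"),
    ("First, we pass the ball.", "Structure"),
    ("Next, we move to another hoop.", "Structure"),
    ("Choose who you want to work with.", "Autonomy Support"),  # invented
]
merged_turns = merge_consecutive(turns)
```

Under these assumptions the plan drops 17,343 Structure examples and calls for 567 additional Chaos examples, which shows how heavily the smallest class depends on generated data and why the expert validation step matters.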

3.3. Dataset Refinement and Statistical Analysis

Following the generation of synthetic data, additional preprocessing steps were taken to enhance the contextual quality and internal consistency of the resulting dataset. Specifically, utterances containing fewer than five tokens were merged with the preceding utterance, provided both shared the same annotated label. The five-token threshold was selected empirically after multiple iterations, where this criterion was shown to improve model performance without significantly reducing the number of available samples. This step is particularly important in Transformer-based models such as BERT, which require sufficiently informative input sequences to learn contextual patterns effectively. By merging only utterances with the same label, semantic coherence was preserved, and data quality was enhanced. This process resulted in a more robust dataset, striking a necessary balance between quality and volume.
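A minimal sketch of the short-utterance merging rule follows. It assumes whitespace-delimited tokens, since the tokenizer used for the five-token count is not specified here. The function name and the sample utterances are hypothetical.

```python
from typing import List, Tuple

MIN_TOKENS = 5  # threshold reported in the text


def merge_short_utterances(
    examples: List[Tuple[str, str]], min_tokens: int = MIN_TOKENS
) -> List[Tuple[str, str]]:
    """Append utterances shorter than min_tokens to the preceding one
    when both carry the same label; otherwise keep them unchanged."""
    merged: List[Tuple[str, str]] = []
    for text, label in examples:
        is_short = len(text.split()) < min_tokens  # whitespace tokens (assumption)
        if merged and is_short and merged[-1][1] == label:
            previous_text, _ = merged[-1]
            merged[-1] = (f"{previous_text} {text}", label)
        else:
            merged.append((text, label))
    return merged


# Toy utterances, invented for illustration only.
samples = [
    ("Form two lines behind the cones.", "Structure"),
    ("Then start jogging.", "Structure"),      # short, same label: merged
    ("Sit here.", "Control"),                  # short, different label: kept
    ("Pick the game you prefer today.", "Autonomy Support"),
]
refined = merge_short_utterances(samples)
```

Restricting the merge to matching labels means a short utterance is never folded into a neighbor of a different class, which is how the rule preserves semantic coherence at the cost of leaving some very short examples in place.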

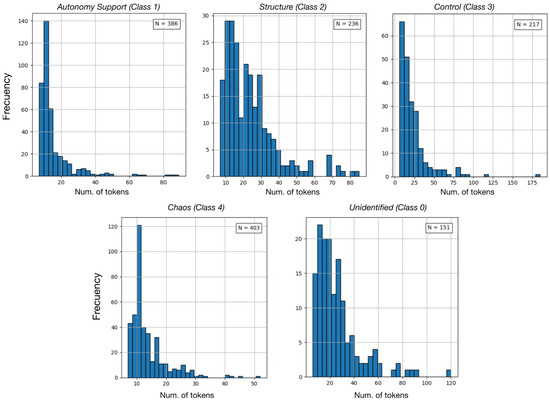

The final dataset comprised 1393 annotated utterances, distributed across five motivational categories. As shown in Table 3, the class Chaos (Label 4) was the most frequent, representing 28.93% of the data, while Unidentified Style (Label 0) was the least common, with 10.84%. The rest of the utterances were distributed across Autonomy Support (386 samples), Structure (236 samples), and Control (217 samples). This design reflects both the original classroom frequency and the intentional balancing measures applied during preprocessing. In addition to class distribution, we analyzed key linguistic features, including average length in characters and word count per utterance. Results revealed that utterances labeled as Chaos and Autonomy Support were significantly shorter on average, while those assigned to Structure and Unidentified Style tended to be longer and more syntactically complex. These differences may influence classification performance due to varying levels of contextual information available in each class. Visual analysis of class distribution is provided in Figure 3, which shows a histogram of utterance frequency per category.

Table 3.

Summary statistics by class, including representative examples.

Figure 3.

Dataset class distribution with augmentation.

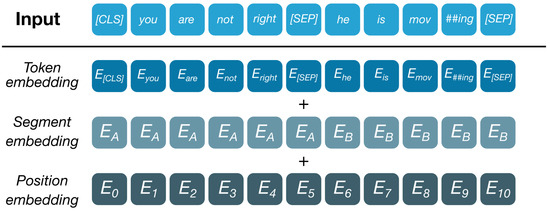

3.4. BERT Tokenization

Prior to model training, input texts were preprocessed using WordPiece tokenization, the sub-word segmentation technique employed by BERT. This method decomposes words into smaller linguistic units based on a pretrained vocabulary, allowing both frequent and out-of-vocabulary terms to be effectively represented. The algorithm segments words into the longest possible sequence of known subwords, reducing reliance on the [UNK] token and improving the model’s generalization capabilities. Each input sequence was structured by adding special tokens required by BERT: [CLS] at the beginning, used for classification output; [SEP] at the end, to mark sentence boundaries; and [PAD] for padding shorter sequences to a uniform length. Sequences exceeding the maximum length were truncated. All tokens were mapped to numerical identifiers using the BERT-base Spanish vocabulary of approximately 30,000 entries [20].
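The greedy longest-match segmentation and the special-token layout described above can be sketched with a toy vocabulary. The vocabulary, the lowercasing step, the `max_len` value, and the function names are illustrative assumptions; this is not the tokenizer shipped with the Spanish BERT model.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into subwords."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no known subword starts at this position
        pieces.append(piece)
        start = end
    return pieces


def encode(text, vocab, max_len=8):
    """Add [CLS]/[SEP], truncate to max_len, then pad with [PAD]."""
    tokens = [p for w in text.lower().split() for p in wordpiece(w, vocab)]
    tokens = ["[CLS]"] + tokens[: max_len - 2] + ["[SEP]"]
    return tokens + ["[PAD]"] * (max_len - len(tokens))


toy_vocab = {"pasa", "##mos", "el", "bal", "##ón"}
print(encode("Pasamos el balón", toy_vocab))
# ['[CLS]', 'pasa', '##mos', 'el', 'bal', '##ón', '[SEP]', '[PAD]']
```

In practice each resulting token would then be mapped to its integer identifier in the pretrained vocabulary.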

Tokens were then embedded into vector space through the sum of three components: token embeddings (semantic identity), position embeddings (sequential order), and segment embeddings (used in multi-sentence inputs). This enriched representation was fed into the BERT architecture, where the final-layer vector corresponding to [CLS] was passed through a classification layer with a softmax activation to generate class probabilities over the five motivational teaching styles.
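As a rough numerical illustration of these two steps, the sketch below sums three small embedding vectors and converts five class scores into probabilities. The vectors and logits are made-up values, and the helper names are hypothetical; in the actual model these quantities are learned and have hundreds of dimensions.

```python
import math


def add_embeddings(token_vec, position_vec, segment_vec):
    """Element-wise sum of the three embedding components."""
    return [t + p + s for t, p, s in zip(token_vec, position_vec, segment_vec)]


def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 2-dimensional embeddings for a single token.
print(add_embeddings([1.0, 2.0], [0.5, 0.5], [0.0, 1.0]))  # [1.5, 3.5]

# Toy scores for the five teaching-style classes (labels 0 to 4).
probs = softmax([2.0, 1.0, 0.1, -1.0, 0.0])
print(max(range(5), key=probs.__getitem__))  # index of the most likely class
```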

3.5. BERT Architecture

BERT is a deep neural network architecture designed for Natural Language Processing tasks [20]. It is based entirely on the Transformer encoder architecture and represents a major shift in how language models are pre-trained and fine-tuned for downstream tasks. What distinguishes BERT from previous models is its bidirectional context modeling. Traditional language models process text either from left to right or right to left, limiting their ability to capture full contextual information. BERT overcomes this by using Masked Language Modeling (MLM): during pretraining, a random subset of input tokens is masked, and the model is trained to predict them based on the surrounding context from both directions. This enables the model to learn deep, contextual representations of language that account for a word’s position and function within the sentence. In addition, BERT is trained with a second task known as Next Sentence Prediction (NSP), which teaches the model to understand the relationship between pairs of sentences. This allows BERT to handle tasks requiring sentence-level reasoning, such as question answering or textual entailment.
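The masking step of MLM can be sketched as below. This is a simplified illustration: the 15% masking rate follows the original BERT recipe rather than anything stated in this study, and the original procedure additionally leaves some selected tokens unchanged or swaps them for random tokens, which is omitted here. The function name `mask_tokens` is hypothetical.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace a random subset of non-special tokens with [MASK].

    Returns the corrupted sequence and the prediction targets
    (the original token where masked, None elsewhere).
    """
    rng = random.Random(seed)
    special = {"[CLS]", "[SEP]", "[PAD]"}
    masked, targets = [], []
    for tok in tokens:
        if tok not in special and rng.random() < mask_prob:
            masked.append("[MASK]")
            targets.append(tok)  # the model must recover this token
        else:
            masked.append(tok)
            targets.append(None)  # position does not contribute to the loss
    return masked, targets


sentence = ["[CLS]", "pasa", "##mos", "el", "bal", "##ón", "[SEP]"]
print(mask_tokens(sentence, mask_prob=0.5))
```

Since the model sees the unmasked tokens on both sides of each `[MASK]`, the prediction is conditioned on left and right context at once.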

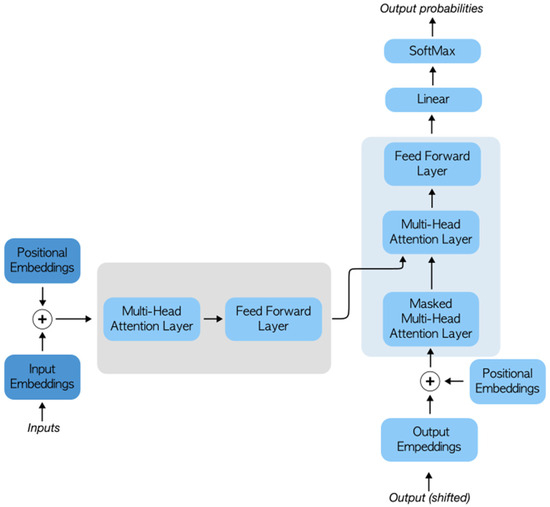

The pretrained BERT model consists of multiple stacked Transformer encoder layers (12 in the base version), each with self-attention mechanisms [26] and feed-forward networks [27] (see Figure 4). These layers progressively build contextualized vector representations for each token in the input.

Figure 4.

BERT input tokenization.

3.6. BERT Variants

In this study, several Transformer-based language models derived from or inspired by the BERT architecture are evaluated. These variants differ in their pretraining strategies, architectural modifications, and efficiency trade-offs, and have been selected to assess their relative performance in classifying motivational teaching behaviors in Spanish language classroom discourse. A summary of the main characteristics of every BERT-based model is presented in Table 4.

Table 4.

Summary of BERT-based models.

3.6.1. BETO

BETO is a monolingual BERT model (see Figure 5) specifically trained for the Spanish language [23]. It retains the original BERT-base architecture—12 layers, 12 attention heads, and approximately 110 million parameters—but its tokenizer and vocabulary were adapted for Spanish. BETO was pretrained using Masked Language Modeling (MLM) and Next Sentence Prediction (NSP) on a large corpus that includes Spanish Wikipedia, news articles, and subtitles. Because it is trained exclusively in Spanish, BETO captures linguistic, grammatical, and syntactic features unique to the language. Prior studies have shown that BETO often outperforms multilingual models like mBERT in Spanish-specific tasks, particularly in domains such as education, where discourse is context-rich and idiomatic. Its architecture and language-specific tuning make it a strong candidate for teacher discourse classification.

Figure 5.

Transformer architecture for BERT models.

3.6.2. DistilBERT

DistilBERT is a distilled version of BERT trained using knowledge distillation, whereby a smaller student model learns to mimic the predictions of a larger teacher model [21]. This Spanish adaptation is trained solely on Spanish corpora and focuses on the MLM objective, omitting the NSP task. It has approximately 40% fewer parameters than BERT-base (66 million vs. 110 million) and uses only 6 Transformer layers. Despite its compactness, DistilBERT retains much of the semantic richness of its predecessor, while offering faster training and inference. Its reduced computational cost makes it suitable for deployment in real-time or resource-constrained educational environments.

3.6.3. RoBERTa

RoBERTa is an optimized variant of BERT developed by Facebook AI [28], with improvements focused on the pretraining phase. It removes the NSP objective and applies dynamic masking, whereby different tokens are masked in each training epoch. Additionally, RoBERTa is trained on significantly more data (~160 GB) than BERT (~16 GB), sourced from Common Crawl News, OpenWebText, and other large-scale datasets. These modifications enhance the model’s language understanding capacity. In the Spanish adaptation used here, RoBERTa inherits its base architecture but leverages a corpus tailored to Spanish texts. Its improved training strategy allows for richer contextualization and better generalization across complex utterances.

3.6.4. ALBERT

ALBERT (A Lite BERT) is a parameter-efficient variant of BERT developed by Google and the Toyota Research Institute [29]. It reduces model size through two key innovations: (1) factorized embedding parameterization, which separates the hidden layer size from the vocabulary embedding size, and (2) cross-layer parameter sharing, which uses the same parameters across all layers. These strategies allow ALBERT to achieve performance comparable to BERT-base with only a fraction of the parameters (~12M). Additionally, it replaces NSP with Sentence Order Prediction (SOP), a task designed to improve inter-sentence coherence understanding. ALBERT is especially valuable in scenarios where memory or computational resources are limited.
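The saving from factorized embedding parameterization can be checked with a short calculation. The sizes used here (a 30,000-entry vocabulary, hidden size 768, embedding size 128) are illustrative assumptions chosen to resemble a base-sized configuration, and the helper name is hypothetical.

```python
def embedding_params(vocab_size, hidden_size, embed_size=None):
    """Count embedding parameters with or without factorization.

    Without factorization: one V x H matrix.
    With factorization: a V x E matrix followed by an E x H projection.
    """
    if embed_size is None:
        return vocab_size * hidden_size
    return vocab_size * embed_size + embed_size * hidden_size


full = embedding_params(30_000, 768)
factorized = embedding_params(30_000, 768, embed_size=128)
print(full, factorized)  # 23040000 3938304
```

Under these assumed sizes the embedding table shrinks by roughly a factor of six, before any savings from cross-layer parameter sharing are counted.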

3.6.5. XLNet

XLNet is a Transformer-based model developed by Google and Carnegie Mellon University as an alternative to BERT [30]. It combines autoregressive pretraining (like GPT) with bidirectional context modeling via Permutation Language Modeling. Instead of masking tokens, XLNet learns to predict each token given all possible permutations of the input sequence. This approach addresses a limitation of BERT’s MLM, which introduces a mismatch between pretraining and fine-tuning, as masked tokens never appear during inference. Additionally, XLNet is built upon the Transformer-XL architecture, incorporating segment-level recurrence and a form of memory that allows it to capture longer contexts beyond BERT’s 512-token limit.

3.6.6. mBERT

Multilingual BERT (mBERT) is a version of BERT trained on Wikipedia articles from 104 different languages, using a shared WordPiece vocabulary and without any explicit language identifiers. While it retains the original BERT-base architecture, its multilingual training results in lower per-language representational depth compared to monolingual models. Despite this limitation, mBERT exhibits robust cross-lingual transfer capabilities, making it useful in low-resource settings or when training data span multiple languages. This study provides a benchmark for evaluating the benefits of language-specific pretraining (e.g., BETO) against multilingual versatility.

3.6.7. ELECTRA

ELECTRA is a BERT-like model developed by Google that replaces MLM with Replaced Token Detection (RTD) during pretraining [31]. In this paradigm, a small generator first corrupts the input by replacing some tokens, and a discriminator learns to detect which tokens have been replaced. Unlike BERT, where only masked tokens contribute to the loss function, ELECTRA learns from all tokens, making training more sample-efficient. ELECTRA-Small is a scaled-down version designed for use in constrained environments or with smaller datasets. Despite having significantly fewer parameters (~14M), it often outperforms similarly sized MLM-based models due to the efficiency of RTD. In the context of this study, ELECTRA-Small is evaluated to determine whether a lightweight architecture can perform well in scenarios with limited training data, such as classroom discourse analysis.

4. Results

This section presents the evaluation outcomes of the BERT-based models applied to the task of teacher behavior classification in Physical Education contexts. The analysis is structured in three main parts: (1) the evaluation metrics used to assess model performance, (2) the hyperparameter optimization strategy adopted, and (3) the results obtained for each model. All computations were carried out on a machine with a 12th Gen Intel(R) Core(TM) i7-12650H CPU, 16 GB of RAM, and an NVIDIA GeForce RTX 3050 Ti Laptop GPU. All code was written in Python 3.13 and is available for reproducibility in a GitHub repository (https://github.com/jhuertacejudo/teaching-behaviour-transformers, accessed on 24 September 2025).

4.1. Evaluation Metrics

To assess model performance on the teacher behavior classification task, we used a set of well-established metrics for multi-class classification. These include Accuracy, Precision, Recall, and F1-Score, both in their macro and weighted forms. These metrics enable a detailed analysis of performance across imbalanced class distributions.

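Accuracy: measures the overall proportion of correct predictions across all classes:

$$\text{Accuracy} = \frac{1}{N}\sum_{i=1}^{C} TP_i$$

where $TP_i$ is the number of true positives for class $i$, $C$ is the number of classes, and $N$ is the total number of samples.

Precision: quantifies the proportion of true positive predictions among all predicted positives for each class:

$$\text{Precision}_i = \frac{TP_i}{TP_i + FP_i}$$

where $FP_i$ represents false positives for class $i$.

Recall: measures the proportion of true positives correctly identified out of all actual positives:

$$\text{Recall}_i = \frac{TP_i}{TP_i + FN_i}$$

where $FN_i$ denotes false negatives for class $i$.

F1-Score: harmonic mean of Precision and Recall:

$$F1_i = \frac{2 \cdot \text{Precision}_i \cdot \text{Recall}_i}{\text{Precision}_i + \text{Recall}_i}$$

Macro F1: treats all classes equally, regardless of their size:

$$\text{Macro-}F1 = \frac{1}{C}\sum_{i=1}^{C} F1_i$$

Weighted F1:

$$\text{Weighted-}F1 = \sum_{i=1}^{C} w_i \, F1_i, \qquad w_i = \frac{n_i}{N}$$

Here, $n_i$ is the number of true instances in class $i$, and $w_i$ is its proportion in the dataset.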
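The metrics defined in this section can be computed directly from lists of true and predicted labels. The sketch below is a plain-Python illustration with made-up labels; the function name is hypothetical, and it follows the common convention of returning 0 when a denominator is zero.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return accuracy, macro F1, weighted F1, and per-class (P, R, F1)."""
    labels = sorted(set(y_true) | set(y_pred))
    n = len(y_true)
    support = Counter(y_true)  # number of true instances per class
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class[c] = (precision, recall, f1)
    accuracy = sum(t == p for t, p in pairs) / n
    macro_f1 = sum(f1 for _, _, f1 in per_class.values()) / len(labels)
    weighted_f1 = sum(support[c] / n * per_class[c][2] for c in labels)
    return accuracy, macro_f1, weighted_f1, per_class


acc, macro, weighted, detail = classification_metrics(
    [0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0]
)
print(round(acc, 3), round(macro, 3), round(weighted, 3))
```

Macro and weighted F1 coincide in this toy example because every class has the same number of true instances; with imbalanced classes they diverge.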

4.2. Hyperparameter Optimization Results

Hyperparameter tuning was performed using Optuna, a state-of-the-art optimization library based on Bayesian search. Specifically, the Tree-structured Parzen Estimator (TPE) algorithm was employed to efficiently explore the hyperparameter space. For each experiment, a stratified 80/20 train–validation split was applied to maintain class distribution (see Figure 6). The optimization process explored the following variables:

Figure 6.

Dataset distribution for training.

- Learning rate (1 × 10⁻⁶ to 1 × 10⁻⁴): Controls the step size in each optimization iteration.

- Weight decay (0.0 to 0.3): Adds regularization to reduce overfitting.

- Batch size (4 to 64): Affects gradient stability and computational efficiency.

- Number of epochs (2 to 50): Determines training duration; higher values can improve learning but also increase risk of overfitting.

- Maximum sequence length (16 to 512): Defines input size; given the complex structure of classroom utterances, this parameter was found to play a critical role in model accuracy.

Each suggested combination was evaluated using multiclass subset accuracy on the validation set as the main selection criterion. A stopping criterion limited the search to 30 trials per model. The optimal configurations obtained for each architecture are summarized in Table 5.
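A search of this kind could be set up with Optuna roughly as follows. This is a sketch under stated assumptions, not the configuration used in the study: the log-scale sampling of the learning rate, the power-of-two batch sizes, the parameter names, and the `train_and_validate` callback (which would fine-tune a model and return validation accuracy) are all hypothetical choices.

```python
def suggest_config(trial):
    """Sample one configuration from the ranges listed above."""
    return {
        # Assumption: learning rate sampled on a log scale.
        "learning_rate": trial.suggest_float("learning_rate", 1e-6, 1e-4, log=True),
        "weight_decay": trial.suggest_float("weight_decay", 0.0, 0.3),
        # Assumption: batch size restricted to powers of two in [4, 64].
        "batch_size": trial.suggest_categorical("batch_size", [4, 8, 16, 32, 64]),
        "num_epochs": trial.suggest_int("num_epochs", 2, 50),
        "max_length": trial.suggest_int("max_length", 16, 512),
    }


def run_search(train_and_validate, n_trials=30, seed=0):
    """Maximize validation accuracy with Optuna's TPE sampler."""
    import optuna  # imported lazily so suggest_config works without it

    def objective(trial):
        # train_and_validate is a user-supplied function: config -> accuracy
        return train_and_validate(suggest_config(trial))

    study = optuna.create_study(
        direction="maximize",
        sampler=optuna.samplers.TPESampler(seed=seed),
    )
    study.optimize(objective, n_trials=n_trials)
    return study.best_params, study.best_value
```

Keeping the search space in its own function makes it easy to reuse the same ranges for every architecture while swapping only the training callback.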

Table 5.

Summary of the optimal parameters found with Optuna.

Several trends emerge from hyperparameter optimization. BETO required a low learning rate and prolonged training (50 epochs), together with a large maximum sequence length (436), highlighting its stability and its ability to model extended discourse—an essential feature when utterances span multiple clauses and contextual cues. DistilBERT, by contrast, converged very quickly (four epochs) with a higher learning rate and shorter input length (62), showing computational efficiency but reduced capacity to exploit long contexts. Similar patterns can be observed in other models: XLNet and mBERT benefited from long sequences (497 and 456 tokens, respectively), while RoBERTa and ALBERT performed adequately with shorter contexts, albeit at the cost of losing subtle discourse information.

These results suggest that longer sequence lengths tend to improve classification accuracy, underscoring the importance of modeling extended context in spontaneous classroom speech, although DistilBERT remained competitive with a much shorter input length. Nevertheless, despite the general improvements achieved through hyperparameter tuning, some categories remain challenging across models. Specifically, Control and Unclassified (the Unidentified Style category) show consistently lower F1-scores. This difficulty is not only technical but also theoretical: the circumplex model assumes proximity and partial overlap between adjacent motivational styles, which naturally increases confusion between them. Furthermore, the Unclassified category is by definition heterogeneous, grouping utterances where no clear style can be identified, making them particularly ambiguous for both annotators and models. These factors explain why performance in these categories lags behind that of more distinct styles such as Chaos or Autonomy Support, and highlight the intrinsic complexity of modeling motivational discourse in real-world educational contexts.

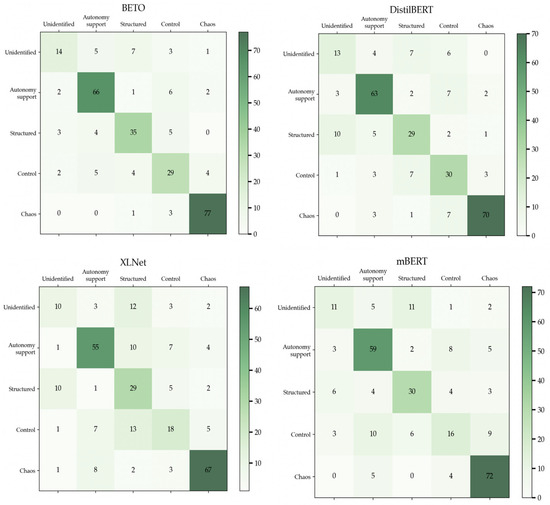

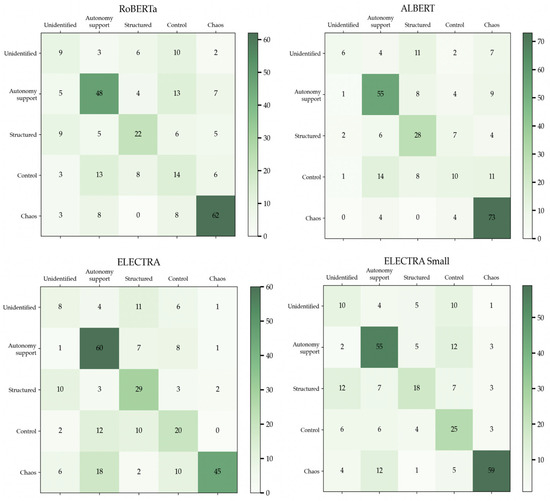

4.3. BERT Model Performance Comparison

This section compares the classification performance of all evaluated Transformer-based models, focusing on their overall effectiveness, class-wise metrics, and architectural implications. Results are analyzed based on accuracy, macro and weighted F1-Scores, and the F1 per class across the five categories: Autonomy Support, Structure, Control, Chaos, and Unclassified. Table 6 presents a synthesized comparison that facilitates the evaluation of their overall behavior in the classification task. The most relevant results are highlighted in bold. The table includes the main evaluation metrics obtained on the test set, highlighting the categories best and worst classified by each model, as well as the total training time.

Table 6.

Performance summary for BERT-based models.

Among all candidates, BETO clearly emerges as the most effective model. Trained exclusively on Spanish corpora, it achieves the highest global accuracy (79%), macro F1-Score (0.74), and weighted F1-Score (0.79). It performs exceptionally in “Chaos” (F1 = 0.99) and “Autonomy Support” (F1 = 0.84), with a well-balanced ability to distinguish between more nuanced categories such as “Structure” (0.74) and “Control” (0.64). Even for the ambiguous “Unclassified” category, BETO maintains an acceptable F1 of 0.55. These results reflect the strength of a monolingual model trained on large Spanish datasets, capable of capturing linguistic subtleties across discourse styles. Its performance supports the theoretical assumption that proximity between motivational styles, as represented in the circular model, often leads to confusion in borderline cases, yet BETO minimizes such confusion better than any other model. DistilBERT follows with a strong performance. Despite having fewer parameters, it reaches 73% accuracy, a macro F1-Score of 0.68, and a weighted F1 of 0.74. Its best results appear in “Chaos” (0.89) and “Autonomy Support” (0.81), while it handles “Structure” and “Control” with reasonable success (both F1 ≈ 0.62). Performance drops in “Unclassified” (F1 = 0.46), as with most models. Given its reduced computational footprint, DistilBERT provides an attractive trade-off between speed and accuracy, particularly for well-differentiated styles.

In contrast, RoBERTa, despite its robust architecture and training corpus, performs notably worse in this task, with only 56% accuracy, a macro F1 of 0.50, and a weighted F1 of 0.56. It achieves decent classification in “Chaos” (0.76) and “Autonomy Support” (0.62), but struggles considerably in “Control” (0.29), “Structure” (0.51), and “Unclassified” (0.31). These results may be explained by its original design focused on English, which may limit its capacity for syntactic and pragmatic nuances in Spanish classroom discourse. Moreover, the smaller dataset size might hinder RoBERTa’s ability to generalize effectively in more ambiguous categories. ALBERT, optimized for parameter efficiency, performs moderately (62% accuracy, macro F1 = 0.52, weighted F1 = 0.59). It reaches solid scores in “Chaos” (0.79) and “Autonomy Support” (0.69) but shows considerable degradation in “Control” (0.28) and “Unclassified” (0.30). The use of parameter sharing and factorized embeddings may limit the model’s representational depth, making it less suited for fine-grained classification tasks with subtle semantic boundaries.

XLNet, with its permutation-based autoregressive training and long-context memory, achieves 64% accuracy, macro F1 of 0.58, and weighted F1 of 0.64. It performs very well in “Chaos” (0.83) and “Autonomy Support” (0.73), validating the model’s strength in identifying strong intra-sentence dependencies. However, it performs less consistently in “Structure” (0.51), “Control” (0.45), and especially “Unclassified” (0.38), where class boundaries are less defined. This may reflect a relative architectural mismatch between XLNet’s pretraining strategy and the complexity of discourse-level differentiation in low-resource educational data. mBERT, trained on Wikipedia in over 100 languages, offers a middle-ground solution with 67% accuracy, macro F1 of 0.61, and weighted F1 of 0.66. While not tailored for Spanish, it performs well in “Chaos” (0.84) and “Autonomy Support” (0.74), and adequately in “Structure” (0.62). However, it struggles in “Control” and “Unclassified” (F1 = 0.42 each), suggesting that multilingual generalization is insufficient when fine-grained understanding of Spanish classroom pragmatics is required. Nonetheless, its performance demonstrates that mBERT can be a practical option for multilingual contexts, especially in bilingual or international classrooms. ELECTRA, despite its pretraining efficiency through Replaced Token Detection, underperforms with 58% accuracy, macro F1 = 0.53, and weighted F1 = 0.58. It classifies “Autonomy Support” and “Chaos” moderately well (F1 ≈ 0.69 each) but exhibits more confusion in “Structure” (0.55), “Control” (0.44), and “Unclassified” (0.28). These limitations highlight challenges in applying ELECTRA to nuanced multiclass classification tasks. Interestingly, ELECTRA-Small slightly outperforms its larger counterpart with 60% accuracy, macro F1 = 0.54, and weighted F1 = 0.60. 
ELECTRA-Small particularly improves in “Chaos” (0.79) and “Control” (0.49), likely due to its lower model capacity, which reduces overfitting risks in small datasets. Its simplified architecture appears better suited to constrained data environments, suggesting that in limited-resource scenarios, smaller models can offer more stable generalization. Figures 7 and 8 show the confusion matrices obtained for the compared models.

Figure 7.

Confusion matrix for the different models (part I).

Figure 8.

Confusion matrix for the different models (part II).

In conclusion, performance varied significantly across models, with BETO clearly outperforming the rest due to its language-specific pretraining. DistilBERT and XLNet also deliver competitive results, while RoBERTa and ALBERT fall short in more ambiguous categories. mBERT shows solid cross-lingual generalization, and ELECTRA-Small stands out as a lightweight but robust alternative. Across all models, “Chaos” and “Autonomy Support” consistently achieved the highest F1 scores, indicating well-defined linguistic patterns. In contrast, “Control” and “Unclassified” remain the most challenging, underscoring the complexity of modeling motivational discourse categories when theoretical overlap exists.

BETO’s superior performance can be explained by several qualitative factors. First, its monolingual pretraining on large-scale Spanish corpora provides extensive vocabulary coverage and a deep understanding of Spanish syntax and pragmatics. This linguistic alignment enables the model to recognize discourse markers, idiomatic constructions, and subtle semantic cues that are frequent in classroom interactions but less well represented in multilingual or English-oriented models. Second, BETO tolerates longer input sequences during fine-tuning, which is critical for capturing the extended and complex utterances often found in Physical Education lessons. This capacity helps differentiate styles that are theoretically close in the circumplex model, such as Structure and Control, where small contextual differences determine classification. Third, BETO benefits from exposure to diverse Spanish textual genres during pretraining, which strengthens its ability to generalize across registers and to identify categories like Chaos and Autonomy Support that rely heavily on emotional tone and interactional cues.

BETO also exhibited variable performance across categories, achieving particularly strong results in Structure but consistently weaker results in Control. The strong performance in Structure can be partly attributed to the larger number of training samples available for this class, both before and after data augmentation. This larger sample size, combined with BERT’s capacity to learn from a more diverse dataset, contributed to its robust classification ability in this category. In contrast, recurrent misclassifications among Unclassified, Autonomy Support, and Control suggest that these categories share overlapping features that the model struggles to distinguish. A qualitative analysis revealed that several linguistic and contextual patterns in these categories are inherently similar, which complicates the task of drawing clear boundaries between them. This underscores the complexity of the classification task and points to the potential need for more sophisticated models or additional techniques to better separate such categories. As illustrated in Table 7, a substantial number of errors occurred when utterances labeled as Control were misclassified as Autonomy Support. In this table, phrases associated with Control (such as “Who is winning?” or “Bad, terrible”) were incorrectly predicted as Autonomy Support. These examples show how categories are confused when short utterances lack situational context.

Table 7.

Examples of misclassified utterances.

4.4. Statistical Result Analysis

In order to complement the comparative evaluation across models, an extended statistical analysis was conducted to quantify the robustness of BETO’s performance and to assess the contribution of the data augmentation strategy. Following standard practice in multi-class classification, stratified bootstrap confidence intervals, approximate randomization tests, and one-vs-rest McNemar tests were applied to the results. The ablation between BETO with and without data augmentation was first examined. Both variants were evaluated on the same held-out test set, which included expert-validated synthetic utterances to minimize evaluation bias. As presented in Table 8, accuracy was found to increase from 0.58 (95% CI: 0.54–0.62) to 0.78 (95% CI: 0.74–0.83) when augmentation was applied. Similarly, Macro-F1 rose from 0.54 (95% CI: 0.51–0.58) to 0.73 (95% CI: 0.68–0.79). Approximate randomization tests with 10,000 permutations confirmed that these improvements were statistically significant (p < 0.001 for both metrics). The most substantial gains were observed in minority classes. In Chaos (class 4), performance improved from an F1 of 0.02 without augmentation to 0.92 with augmentation, while in Autonomy Support (class 1) the F1 increased from 0.67 to 0.85. A confusion matrix comparing BETO with and without augmentation is displayed in Figure 9, illustrating the reduction in systematic misclassifications when synthetic data were incorporated.
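The two resampling procedures mentioned above can be sketched in plain Python. This is an illustration under assumptions rather than the study's analysis code: the bootstrap is stratified by resampling within each true class, a percentile interval is used, accuracy stands in for any metric, and the function names are hypothetical.

```python
import random


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for accuracy, resampling within each class."""
    rng = random.Random(seed)
    by_class = {}
    for i, t in enumerate(y_true):
        by_class.setdefault(t, []).append(i)
    scores = []
    for _ in range(n_boot):
        # Draw, with replacement, as many items per class as the class has.
        idx = [rng.choice(members) for members in by_class.values() for _m in members]
        scores.append(accuracy([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    lo_idx = int(n_boot * alpha / 2)
    return scores[lo_idx], scores[n_boot - lo_idx - 1]


def randomization_test(y_true, pred_a, pred_b, n_perm=10_000, seed=0):
    """Approximate randomization: randomly swap the two systems' outputs."""
    rng = random.Random(seed)
    observed = abs(accuracy(y_true, pred_a) - accuracy(y_true, pred_b))
    at_least = 0
    for _ in range(n_perm):
        a, b = [], []
        for pa, pb in zip(pred_a, pred_b):
            if rng.random() < 0.5:
                pa, pb = pb, pa  # exchange predictions for this item
            a.append(pa)
            b.append(pb)
        if abs(accuracy(y_true, a) - accuracy(y_true, b)) >= observed:
            at_least += 1
    # Add-one smoothing keeps the estimated p-value strictly positive.
    return (at_least + 1) / (n_perm + 1)
```

With 10,000 permutations the smallest attainable p-value under this estimator is about 0.0001, which is consistent with reporting results as p < 0.001.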

Table 8.

Statistical BETO comparison with and without augmentation.

Figure 9.

Confusion matrix of BETO with and without augmentation.

Error disagreement patterns were further investigated through one-vs-rest McNemar tests using the chi-square approximation. The results are shown in Table 9. Statistically significant advantages for BETO were found in Chaos (χ² = 53.77, p < 0.001) and Autonomy Support (χ² = 15.34, p < 0.001). For the remaining categories, differences were not statistically significant. This indicates that BETO’s superiority is particularly concentrated in minority classes, where classification accuracy is most difficult to achieve and most valuable for downstream applications.
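A one-vs-rest McNemar test with the chi-square approximation can be sketched as follows. Whether the study applied a continuity correction is not stated, so it is exposed here as an option; the function name and the toy data are hypothetical. The p-value uses the fact that a chi-square variable with one degree of freedom is the square of a standard normal.

```python
import math


def mcnemar(y_true, pred_a, pred_b, positive, continuity=True):
    """One-vs-rest McNemar test for class `positive` (chi-square, 1 dof).

    b counts items that only system A gets right, c those only B gets right,
    where "right" means agreeing with the truth on positive-vs-rest.
    """
    def correct(pred, truth):
        return (pred == positive) == (truth == positive)

    triples = list(zip(y_true, pred_a, pred_b))
    b = sum(correct(a, t) and not correct(p, t) for t, a, p in triples)
    c = sum(not correct(a, t) and correct(p, t) for t, a, p in triples)
    if b + c == 0:
        return 0.0, 1.0  # the systems never disagree
    diff = abs(b - c) - (1 if continuity else 0)
    chi2 = max(diff, 0) ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(chi2 / 2))  # survival function, 1 dof
    return chi2, p_value


truth = [1] * 10 + [0] * 5
system_a = list(truth)   # always right
system_b = [0] * 15      # misses every positive item
print(mcnemar(truth, system_a, system_b, positive=1))
```

Only the discordant items enter the statistic, so two systems with identical overall accuracy can still differ significantly on a given class.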

Table 9.

One-vs-Rest McNemar test results (with vs. without augmentation).

Subsequently, comparisons were performed between BETO, DistilBERT, and mBERT, all trained with data augmentation to ensure fairness. As reported in Table 10 (the most relevant results are highlighted in bold), BETO achieved superior performance, with an accuracy of 0.78 (95% CI: 0.74–0.83) and Macro-F1 of 0.73 (95% CI: 0.68–0.79). DistilBERT obtained slightly lower results (Accuracy = 0.74, Macro-F1 = 0.69), with approximate randomization tests yielding p-values of approximately 0.05, indicating differences close to the threshold of statistical significance. mBERT performed notably worse (Accuracy = 0.66, Macro-F1 = 0.60), with differences confirmed as highly significant (p < 0.001 for both metrics). These findings suggest that the monolingual pretraining of BETO provides consistent advantages.

Table 10.

Comparison of BETO, DistilBERT, and mBERT (with augmentation).

Overall, the statistical analyses demonstrate that data augmentation leads to substantial and statistically significant improvements in BETO, particularly in classes with low representation. BETO was also shown to consistently outperform DistilBERT and mBERT, with significance levels ranging from marginal (p ≈ 0.05 against DistilBERT) to strong (p < 0.001 against mBERT). The combination of bootstrap intervals, approximate randomization tests with 10,000 permutations, and McNemar chi-square analyses ensures that the findings are robust and not explained by chance variation.

4.5. Generalization to Unseen Classroom Data

To assess the practical applicability of the trained BERT model, it was applied to a previously unseen classroom session, excluded from both the training and validation phases. The aim of this analysis is not to quantify classification accuracy, but rather to explore the model’s behavior when classifying real discourse in a natural instructional context. The predicted distribution of teaching styles is analyzed, and their progression throughout the session and the transitions between categories are discussed. This qualitative assessment offers insight into the model’s potential utility in real-world educational environments and highlights the type of pedagogical feedback that could be derived from its deployment in authentic classroom scenarios.

It is useful to consider the overall distribution of predicted teaching styles across the entire session. Figure 10 presents the aggregated classifications generated by the model. The chart reveals that the model assigned approximately 31% of the phrases to the “Unidentified Style” category, followed by a high proportion labeled as “Structure” (25%) and “Control” (19%). The “Chaos” style accounts for 15% of the predictions, while “Autonomy Support” appears in only 10% of cases. This pattern suggests a session characterized by a strong presence of teacher-led organization and directive behavior, accompanied by a substantial number of utterances the model deemed ambiguous or difficult to classify. In contrast, the manually labeled dataset used for training exhibited a different distribution, with “Chaos” being the most prevalent category, and “Autonomy Support” having a considerably stronger presence. Additionally, the proportions of utterances labeled as “Structure” or “Unidentified” were notably lower in the training data (about 17% and 11%, respectively). These differences may be attributed to two main factors. First, the model may be overgeneralizing ambiguous utterances into the “Unidentified” category. Second, the specific teaching style of the instructor in this session may diverge from the stylistic patterns observed in the broader dataset.

Figure 10.

Distribution of the predicted classes of different utterances in a complete recorded class.

5. Discussion and Future Directions

The experimental results provide clear evidence regarding the proposed hypotheses. Transformer models achieved high accuracy in classifying teacher behaviors into motivational categories, supporting H1. The application of generative data augmentation yielded notable improvements in performance for minority classes, confirming H2. Analysis of utterance length revealed that extremely short or long teacher turns can affect classification outcomes, and preprocessing strategies such as tokenization and truncation enhanced model robustness, addressing H3. Finally, among the evaluated Transformer variants, some offered a favorable balance between accuracy and computational efficiency, validating H4 and providing practical guidance for real-world deployment. Overall, these findings demonstrate that the study’s methodological approach effectively addresses the initial research questions.

This study has shown the superior performance of BETO over the other evaluated models. Its monolingual pretraining on large-scale Spanish corpora equips it with richer lexical coverage and deeper knowledge of Spanish syntax and discourse markers, enabling it to capture subtle pragmatic cues in classroom dialogues. This linguistic alignment, combined with its ability to process longer utterances, proves particularly advantageous for distinguishing between closely related styles in the circumplex model, such as Structure and Control. Nonetheless, other models also show noteworthy strengths: DistilBERT achieves competitive accuracy with far fewer parameters, making it attractive for efficiency-constrained environments; XLNet demonstrates robustness in capturing long-range dependencies, which benefits categories such as Chaos; and mBERT provides solid cross-lingual generalization, highlighting its potential in multilingual educational contexts. Together, these findings underline the importance of both language-specific pretraining, as exemplified by BETO, and the practical trade-offs offered by lighter or multilingual alternatives when adapting automated discourse analysis to diverse classroom settings.

While this work focuses on evaluating model performance, practical deployment and ethical implications are also crucial to consider. In real-world classroom settings, the feasibility of deploying large-scale language models is limited by computational resources and latency constraints. Lightweight models such as DistilBERT and ELECTRA-Small, which offer a favorable trade-off between efficiency and performance, may be better suited for real-time or low-resource educational environments. Their faster inference speeds and smaller memory footprints make them viable candidates for integration into learning platforms or classroom support tools, particularly in schools with limited infrastructure. However, the primary objective of this work is not to develop a real-time evaluation system, but rather to provide a pedagogical resource that can be used retrospectively to support teachers in improving their practices. Beyond efficiency, future work could also explore real-time adaptability, enabling systems to adjust dynamically to evolving classroom interactions and feedback, thus improving robustness and responsiveness.

Likewise, the main focus of this study is not to perform a multilingual comparative evaluation, but rather to address the specific challenge of classifying teaching styles in Spanish classroom discourse. This focus responds to the lack of resources in Spanish and allows us to build a domain-specific dataset aligned with the linguistic and pedagogical context under study. While cross-lingual generalization is an important direction for future research, our contribution lies primarily in advancing automated discourse analysis within Spanish-speaking educational environments, thereby filling a critical gap in the current literature.

An important limitation of this study lies in the size of the dataset, which remains relatively small and partially supplemented with synthetic data. Although the augmentation process was carefully designed and supervised by domain experts to ensure the quality and relevance of the synthetic samples, we acknowledge that relying on such data may still influence generalizability. Expanding the dataset to include additional subjects, institutions, and classroom contexts would therefore be crucial. Future research could also incorporate an ablation study comparing models trained with and without synthetic augmentation, in order to better quantify the specific impact of synthetic samples on performance.
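One possible way to set up the ablation suggested above is sketched below. It is only an illustration under stated assumptions, not a procedure used in this study: the record fields (`text`, `label`, `synthetic`) and the function name are hypothetical, and the split is random rather than stratified by class. The key design choice is that the test set contains only real utterances, so both training conditions are scored on the same non-synthetic data.

```python
import random


def ablation_splits(records, test_fraction=0.2, seed=0):
    """Build one real-only test set and two training sets for an ablation.

    `records` is a list of dicts with the hypothetical keys
    'text', 'label', and 'synthetic' (True for augmented samples).
    """
    real = [r for r in records if not r["synthetic"]]
    synthetic = [r for r in records if r["synthetic"]]

    # Shuffle only the real utterances, then hold out a fraction for testing.
    rng = random.Random(seed)
    rng.shuffle(real)
    n_test = max(1, int(len(real) * test_fraction))
    test, train_real = real[:n_test], real[n_test:]

    return {
        "test": test,                                # real utterances only
        "train_real_only": train_real,               # condition A: no augmentation
        "train_augmented": train_real + synthetic,   # condition B: with augmentation
    }


# Invented example records, for illustration only.
records = [
    {"text": f"real utterance {i}", "label": "Structure", "synthetic": False}
    for i in range(10)
] + [
    {"text": f"synthetic utterance {i}", "label": "Chaos", "synthetic": True}
    for i in range(5)
]

splits = ablation_splits(records)
print({name: len(items) for name, items in splits.items()})
```

Training the same architecture once on `train_real_only` and once on `train_augmented`, and comparing per-class scores on `test`, would isolate the contribution of the synthetic samples. If synthetic utterances were generated from specific real ones, those source utterances would also need to be kept out of the test set to avoid leakage.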

Our system was trained and tested on a self-curated dataset. This choice is justified because the goal of our work is not a generic comparison of models but the classification of teaching styles in Physical Education, a context for which no publicly available benchmark datasets exist. According to the No Free Lunch Theorem [32], no single model is universally best across all tasks; therefore, we focused on identifying the BERT variant that performs best for our specific classification objective. The absence of benchmarks is also a limitation: a direct comparison with established baselines is not currently feasible, which prevents situating our results within a broader benchmarking framework. At the same time, it underscores the novelty of this contribution: to the best of our knowledge, our dataset constitutes the first attempt to systematically capture real teacher behaviors in this domain. By making it available, we provide a foundation for future comparative studies and benchmarking efforts, which will be essential for advancing research in automated discourse analysis in educational contexts.

Beyond the specific setting and subject matter studied here, the proposed methodology has the potential to generalize across different academic disciplines and languages. The use of multilingual pretrained models (e.g., mBERT, XLM-R) provides a foundation for extending this approach to diverse linguistic contexts. Future research could also examine the incorporation of multimodal data—such as audio, video, or interaction logs—to capture a more holistic picture of classroom dynamics.

Finally, the use of automated systems for teacher or student monitoring raises important ethical and privacy considerations. Any deployment of such systems should be guided by principles of transparency, fairness, and respect for autonomy. Data collection and use must comply with privacy regulations and involve the informed consent of all participants. Importantly, these tools should be framed as support mechanisms for pedagogical improvement, rather than instruments for punitive surveillance. Care must also be taken to identify and mitigate potential biases in the models, which may disproportionately affect individuals based on gender, accent, socioeconomic background, or other factors.

6. Conclusions

This study has demonstrated the potential of Transformer-based architectures for the automatic classification of teacher discourse in Physical Education, highlighting both their capabilities and limitations in real-world educational settings. Among the models evaluated, BETO emerged as the top performer, achieving an accuracy of 78% and performing strongly in categories such as Chaos and Autonomy Support. These results reaffirm the value of language-specific models for tasks that demand sensitivity to the linguistic and pragmatic nuances of Spanish. Unlike multilingual architectures such as mBERT, or models with high parameter complexity like XLNet or ELECTRA, BETO can identify more contextual relationships even with a relatively modest dataset, underscoring the importance of linguistic alignment and model scalability.

Beyond performance metrics, this study has offered a deeper understanding of the complexities involved in applying Natural Language Processing to the classification of pedagogical behavior. Identifying teaching styles from speech transcripts is not a trivial or purely technical task; it involves interpreting ambiguity, overlapping constructs, and subtle stylistic variations that emerge dynamically in real classrooms. From this perspective, classification errors should not be viewed as model failures, but as reflections of the multifaceted nature of human interaction in education. Despite the limited size of the dataset, the results are promising. The model has shown the ability to capture salient patterns in real classroom discourse, suggesting that automatic classification of teacher behavior is not only feasible, but a viable component of broader educational analysis systems. While the current model is not yet ready for deployment in classroom settings, it could serve as the basis for an early prototype—ideally integrated with expert interpretation and system-level feedback mechanisms.

Another significant contribution of the work lies in the dataset engineering process. The use of generative data augmentation, particularly through LLMs such as ChatGPT (the GPT-4o version was used in this work), proved beneficial in addressing class imbalance and increasing dataset diversity. However, the process also underscored a critical lesson: synthetic data generation must be guided by domain expertise. Volume alone does not ensure quality; the generated content must remain faithful to the real-world phenomena it aims to represent.

Several research directions emerge from these findings. One of the most pressing is the expansion and diversification of the dataset. While the current corpus was carefully constructed and expert-annotated, it remains limited in scale and scope. Future studies should aim to include data from other subjects beyond Physical Education, where the nature of verbal interaction and instructional practices may differ substantially. Additionally, increasing the number of participating teachers and institutions would help mitigate biases tied to individual teaching styles and enhance the model’s generalizability across diverse classroom contexts.

Finally, this research points to the importance of exploring multimodal approaches to teacher behavior analysis. Verbal discourse is just one layer of classroom interaction. Tone, prosody, gestures, pauses, and nonverbal cues all play crucial roles in shaping pedagogical meaning. Future work could integrate audio and video data into multimodal models capable of capturing these additional dimensions, thereby providing a more holistic understanding of teacher communication and its impact on the learning environment.

Author Contributions

Conceptualization, D.G.-R., E.F.Á., S.Y.-L. and L.M.-H.; Methodology, S.Y.-L. and L.M.-H.; Software, J.H.C.; Validation, J.H.C., E.F.Á. and D.G.-R.; Formal Analysis, S.Y.-L. and L.M.-H.; Investigation, S.Y.-L.; Resources, D.G.-R. and E.F.Á.; Data Curation, J.H.C.; Writing—Original Draft Preparation, S.Y.-L. and L.M.-H.; Writing—Review and Editing, S.Y.-L. and L.M.-H.; Visualization, S.Y.-L.; Supervision, D.G.-R. and E.F.Á.; Project Administration, D.G.-R. and E.F.Á.; Funding Acquisition, D.G.-R. and E.F.Á. All authors have read and agreed to the published version of the manuscript.

Funding

This study has been funded by the Government of Aragon for the development of I+D+i projects in priority and multidisciplinary lines for the period 2024–2026 (project code: PROY S01_24) and by the Junta de Andalucía’s Department of University, Research and Innovation, under the Resolution of 11 June 2024 (BOJA No. 115, 14 June 2024), for the call supporting applied research and experimental development projects (Line 2), project code DGP_PIDI_2024_00987. Evelia Franco was supported by a Ramón y Cajal postdoctoral fellowship (RYC2022-036278-I) funded by the Spanish Ministry of Science and Innovation (MCIN), the State Research Agency (AEI) and the European Social Fund Plus (FSE+).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| ASR | Automatic Speech Recognition |
| BERT | Bidirectional Encoder Representations from Transformers |
| BETO | BERT for Spanish (monolingual adaptation) |
| NLP | Natural Language Processing |
| MLM | Masked Language Modeling |
| NSP | Next Sentence Prediction |
| SOP | Sentence Order Prediction |
| RTD | Replaced Token Detection |
| mBERT | Multilingual BERT |
| SDT | Self-Determination Theory |
| LLM | Large Language Model |
| TPE | Tree-structured Parzen Estimator (used in Optuna for optimization) |
| GPU | Graphics Processing Unit |
| CLS | Classification token (used in BERT input format) |
| SEP | Separator token (used in BERT input format) |
| PAD | Padding token (used in BERT input format) |

References

- Falcon, S.; Admiraal, W.; Leon, J. Teachers’ engaging messages and the relationship with students’ performance and teachers’ enthusiasm. Learn. Instr. 2023, 86, 101750.

- Vermote, B.; Aelterman, N.; Beyers, W.; Aper, L.; Buysschaert, F.; Vansteenkiste, M. The role of teachers’ motivation and mindsets in predicting a (de)motivating teaching style in higher education: A circumplex approach. Motiv. Emot. 2020, 44, 270–294.

- Franco, E.; Coterón, J.; Spray, C.M. Antecedents of Teachers’ Motivational Behaviours in Physical Education: A Scoping Review Utilising Achievement Goal and Self-Determination Theory Perspectives. Int. Rev. Sport Exerc. Psychol. 2024, 1–40.

- González-Peño, A.; Franco, E.; Coterón, J. Do Observed Teaching Behaviors Relate to Students’ Engagement in Physical Education? Int. J. Environ. Res. Public Health 2021, 18, 2234.

- Coterón, J.; González-Peño, A.; Martín-Hoz, L.; Franco, E. Predicting students’ engagement through (de)motivating teaching styles: A multi-perspective pilot approach. J. Educ. Res. 2025, 118, 243–256.

- Billings, K.; Chang, H.-Y.; Lim-Breitbart, J.M.; Linn, M.C. Using Artificial Intelligence to Support Peer-to-Peer Discussions in Science Classrooms. Educ. Sci. 2024, 14, 1411.

- Wang, S.; Wang, F.; Zhu, Z.; Wang, J.; Tran, T.; Du, Z. Artificial intelligence in education: A systematic literature review. Expert Syst. Appl. 2024, 252, 124167.

- Adoma, A.F.; Henry, N.-M.; Chen, W. Comparative Analyses of BERT, RoBERTa, DistilBERT, and XLNet for Text-Based Emotion Recognition. Proc. Int. Comput. Conf. Wavelet Act. Media Technol. Inf. Process. 2020, 17, 117–121.

- Alic, S.; Demszky, D.; Mancenido, Z.; Liu, J.; Hill, H.; Jurafsky, D. Computationally Identifying Funneling and Focusing Questions in Classroom Discourse. arXiv 2022, arXiv:2208.04715.

- Jensen, E.; Pugh, S.L.; D’Mello, S.K. A Deep Transfer Learning Approach to Modeling Teacher Discourse in the Classroom. Proc. Int. Learn. Analytics Knowl. Conf. (LAK) 2021, 11, 302–312.

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Lan, Y.; Li, X.; Du, H.; Lu, X.; Gao, M.; Qian, W.; Zhou, A. Survey of Natural Language Processing for Education: Taxonomy, Systematic Review, and Future Trends. arXiv 2024, arXiv:2401.07518.

- Sajja, R.; Sermet, Y.; Cikmaz, M.; Cwiertny, D.; Demir, I. Artificial Intelligence-Enabled Intelligent Assistant for Personalized and Adaptive Learning in Higher Education. Information 2024, 15, 596.