1. Introduction

Speech synthesis [1], as one of the core technologies in the field of human-computer voice interaction, primarily aims to convert input text into natural and fluent speech. It has found wide-ranging applications in human communication. Speech synthesis technology is inherently interdisciplinary, combining phoneme conversion rules from linguistics, acoustic modeling principles from phonetics, digital encoding techniques from signal processing, and neural network architecture optimization from machine learning. This integration enables more intelligent and user-friendly voice interaction experiences [2].

Mongolian, as one of China’s minority languages, differs significantly from mainstream languages such as Mandarin Chinese. It exhibits unique characteristics in terms of expression style, prosody, and emotional coloration, which pose additional challenges for emotional speech synthesis. Moreover, emotional expression in Mongolian is highly nuanced and polysemous—variations in intonation, stress, and speech mood often convey rich emotional information. In recent years, notable progress has been made in Mongolian speech synthesis research. However, compared to mainstream languages like Mandarin, Mongolian speech synthesis remains a relatively underexplored area, with higher research difficulty and several unresolved technical challenges in emotional synthesis. Key research difficulties include how to effectively express emotions in synthesized Mongolian speech, how to control the speaking rate of emotional speech, and how to differentiate between various emotional styles through speech synthesis.

Therefore, the study of Mongolian emotional speech synthesis remains of significant importance. On the one hand, such technology can play a vital role in education, cultural preservation, and language learning, enabling people in regions where Mongolian is the primary language to better acquire and use their native language. On the other hand, integrating Mongolian emotional speech synthesis into applications such as smart home systems and voice assistants can help address real-world needs in these regions, significantly enhancing quality of life and promoting the advancement of regional intelligent infrastructure.

To address the aforementioned challenges, this paper proposes a Speech Rate-Controllable Mongolian emotional speech synthesis model based on Improved Tacotron2 (SRC-IT2). The main contributions of this work are as follows:

An end-to-end Mongolian speech synthesis model is constructed based on an improved Tacotron2 architecture. It incorporates the unique linguistic characteristics of Mongolian, optimizes the front-end processing module, and utilizes a G2P-Seq2Seq model to convert Mongolian characters into phonemes effectively.

Building upon the end-to-end architecture, a joint emotion analysis module for both text and speech is embedded, allowing the model to learn optimal emotional style features specific to Mongolian.

A style encoder and speech rate control variable are integrated into the acoustic modeling process, further optimizing the Tacotron2-based synthesis system to enable dynamic adjustment of speaking rate in Mongolian emotional speech generation.

2. Related Work

In recent years, with the rapid advancement of artificial intelligence technologies, deep learning has driven transformative progress across various domains. Speech synthesis techniques based on deep learning have increasingly attracted significant attention from both academia and industry.

2.1. Speech Synthesis

Jose Sotelo et al. proposed the Char2Wav model [3], which integrates all stages of speech synthesis into a unified end-to-end neural network framework, thereby avoiding the complex intermediate processes inherent in traditional speech synthesis approaches.

Microsoft introduced the FastSpeech model [4], which constructs a non-autoregressive generation framework based on a feed-forward Transformer architecture. By generating Mel-spectrogram frames in parallel, it speeds up Mel-spectrogram generation by 270 times and end-to-end synthesis by 38 times relative to an autoregressive Transformer TTS baseline. While FastSpeech made notable advancements in synthesis efficiency, it still suffered from Mel-spectrogram information loss and a lengthy training pipeline. To address these limitations, Microsoft Research proposed FastSpeech 2 [5], which improved upon its predecessor by eliminating the teacher-student distillation framework and training the model directly on ground-truth Mel-spectrograms. Additionally, it introduced multiple prosodic features, such as pitch and energy, to enhance the model’s capacity for prosody modeling.

Microsoft Research Asia and the Azure Speech Team released the NaturalSpeech model [6], which enhanced the modeling of text priors while reducing the complexity of speech posteriors, significantly improving both naturalness and clarity of the synthesized speech.

Yao et al. proposed SR-TTS [7], a Transformer-based optimized architecture composed of an encoder, a prosody regulator, and a decoder. This model not only improves the quality and naturalness of synthesized speech but also accelerates the synthesis process and improves inference efficiency, outperforming existing approaches in both speech quality and expressiveness. In the same year, Guo et al. introduced FLY-TTS [8], a fast, lightweight, and high-quality end-to-end speech synthesis model. By replacing the decoder with a ConvNeXt module, the model size was significantly reduced. Furthermore, WavLM was utilized as a pretrained model for adversarial training, which effectively enhanced the quality and naturalness of the generated speech.

2.2. Emotional Speech Synthesis

Currently, mainstream approaches to emotional speech synthesis typically rely on large, emotion-specific datasets for each individual emotion category. Zhou et al. [9], for example, utilized the IEMOCAP dataset to train an emotion recognition model. They then extracted emotional parameters for each category using this model and employed a Wasserstein Generative Adversarial Network (W-GAN) to transform neutral speech into emotionally expressive utterances.

Another straightforward method for emotional speech synthesis involves training synthesis models on manually labeled emotional corpora, using emotion labels as conditional inputs to guide the generation of speech with the corresponding emotional expression [10]. However, this method often lacks the flexibility to adapt emotional expressiveness within the same emotion category based on different textual content. To overcome this limitation, Cai et al. [11] proposed an emotion-aware speech synthesis approach that does not require explicit emotion labels. Their method integrates transfer learning, an emotion recognition model, and a speech synthesis model to generate speech with specific emotional characteristics.

Li et al. [12] introduced DiCLET-TTS, a diffusion-based cross-lingual emotional transfer model that enables the transfer of emotional style from a source speaker to a target speaker, either within the same language or across different languages. Cho et al. [13] proposed EmoSphere-TTS, which controls the emotional style and intensity of synthesized speech using spherical emotion vectors. Without any manual annotations, it uses a Cartesian-to-spherical transformation to model emotional dimensions. They also introduced a dual-conditional adversarial network, which improves the quality of synthesized speech by modeling diverse emotional attributes. Gudmalwar et al. [14] proposed VECL-TTS, a cross-lingual emotional speech synthesis model that supports both speaker identity and emotional style controllability. By extracting reference embeddings from the source language, VECL-TTS applies cross-lingual speech synthesis techniques to transfer both speaker characteristics and emotional expressions to the target language, thereby enhancing synthesis quality.

2.3. Mongolian Emotional Speech Synthesis

With the rapid advancement of intelligent Mongolian language processing technologies, Mongolian speech synthesis has reached a practical level of application, achieving high-quality speech generation. As a result, an increasing number of researchers are focusing on more natural and emotionally expressive Mongolian speech synthesis. However, research in this area is still in its early stages.

Shan Yu [15] proposed a Mongolian emotional speech synthesis model based on an end-to-end synthesis framework incorporating emotion adaptation techniques. This model introduced an emotion embedding layer that transforms emotion labels into vector representations, which are then concatenated with the outputs of the acoustic model, thereby improving training efficiency. Huang Aihong [16] enhanced the emotional controllability of the Tacotron2 model by integrating a reference encoder and a variational autoencoder, further improving the model’s ability to capture and generate emotional variations in speech. Liang et al. [17] proposed a lightweight Mongolian speech synthesis model based on deep convolutional neural networks, which significantly improved the naturalness and quality of synthesized Mongolian speech. The model also reduced training time while maintaining high synthesis accuracy.

3. Methods

3.1. Overview

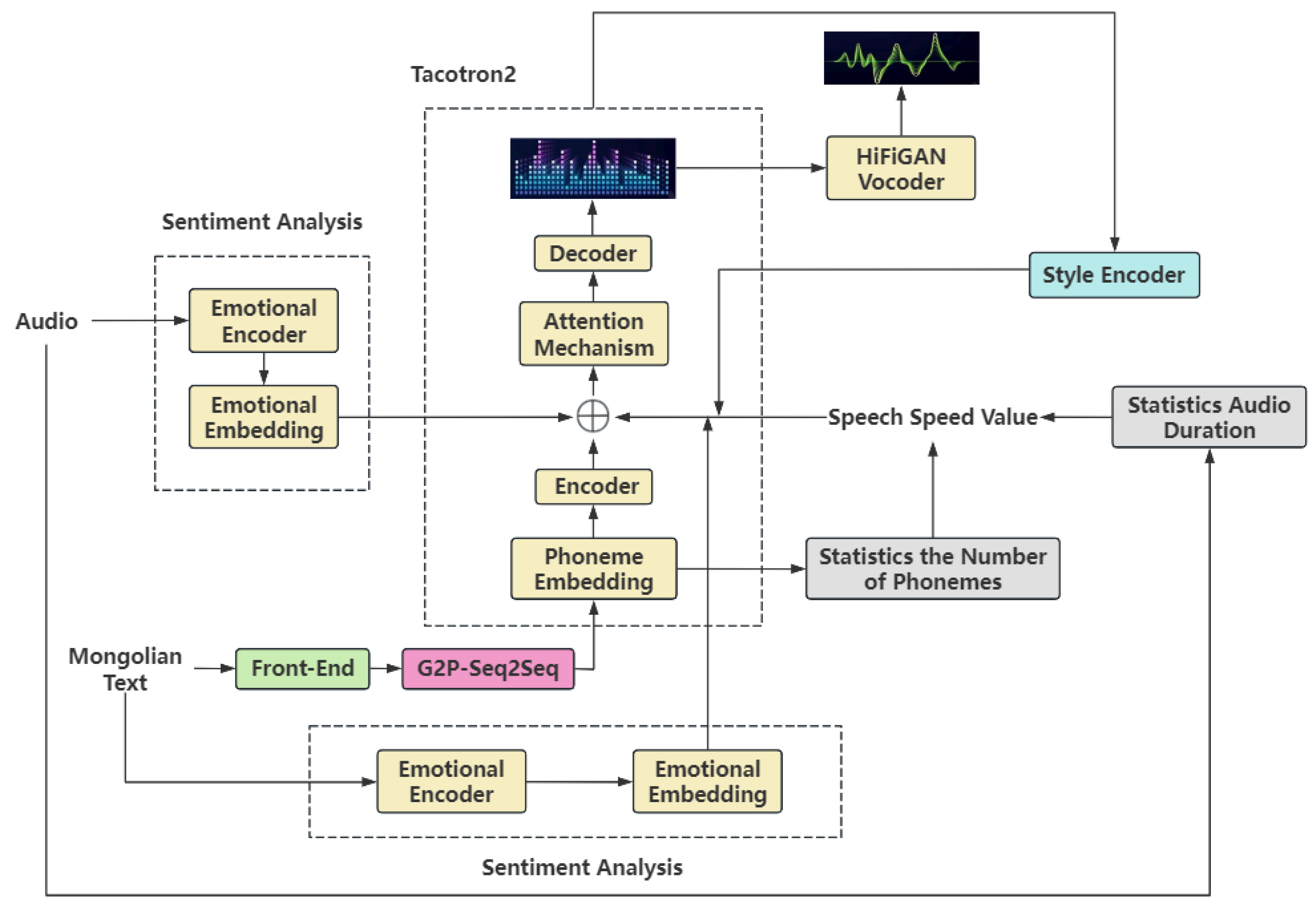

The overall architecture of the proposed SRC-IT2 is illustrated in Figure 1. First, an end-to-end Mongolian speech synthesis module is constructed based on an enhanced Tacotron2 framework. To accommodate the unique linguistic characteristics of the Mongolian language, the front-end processing pipeline is optimized, and a G2P-Seq2Seq model is incorporated to enable accurate grapheme-to-phoneme conversion for Mongolian script. Second, a text-audio emotion analysis module is integrated into the synthesis framework, allowing the model to learn and generate representative emotional style features specific to Mongolian. Finally, a style encoder and a speech rate control variable are introduced to further improve the acoustic modeling capabilities of Tacotron2. This enhancement enables dynamic control over the speaking rate of synthesized Mongolian emotional speech, thereby achieving controllable emotional speech synthesis with respect to speaking rate.

3.2. Mongolian Speech Synthesis Based on Improved Tacotron2

Speech synthesis is a technology that converts input text into corresponding speech output. The synthesis process primarily consists of three critical components: front-end processing, acoustic modeling, and audio synthesis. The front-end processing module is responsible for analyzing the input text and extracting relevant linguistic features. The acoustic model maps these linguistic features to acoustic representations, while the audio synthesis module synthesizes the final speech waveform from the predicted acoustic features.

3.2.1. Front-End Processing

Before converting Mongolian script into phonemes, the original Mongolian characters are first transliterated into their corresponding Latin representations. Subsequently, a G2P-Seq2Seq model is employed to transform the Latin characters into the corresponding phoneme sequences. The G2P-Seq2Seq model is a Transformer-based grapheme-to-phoneme conversion model. Compared with traditional sequence-to-sequence (Seq2Seq) models based on RNN or LSTM, the Transformer architecture leverages self-attention mechanisms to capture dependencies between input and output elements more efficiently and accurately. This enables the model to achieve higher performance in sequence transformation tasks such as grapheme-to-phoneme conversion.

Specifically, the input Latin character sequence $X$ is first passed through an embedding layer to obtain the corresponding character embeddings $E$. The encoder processes $E$ using a bidirectional LSTM, where the forward and backward hidden states are denoted as $\overrightarrow{h}_i$ and $\overleftarrow{h}_i$, respectively. The final encoded representation is computed as:

$$h_i = \overrightarrow{h}_i \oplus \overleftarrow{h}_i$$

where $i = 1, 2, \ldots, n$, $n$ denotes the length of the input Latin character sequence $X$, and $\oplus$ denotes the concatenation operation.

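At time step $t$, the decoder computes the attention weights $\alpha_{t,i}$ between the input sequence and the current decoding state via an attention mechanism:

$$\alpha_{t,i} = \frac{\exp\left(\mathrm{score}(s_{t-1}, h_i)\right)}{\sum_{j=1}^{n} \exp\left(\mathrm{score}(s_{t-1}, h_j)\right)}$$

Here, $\mathrm{score}(\cdot)$ denotes the attention scoring function, where $s_{t-1}$ represents the decoder’s hidden state at the previous time step.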

The context vector $c_t$ is computed as:

$$c_t = \sum_{i=1}^{n} \alpha_{t,i}\, h_i$$
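The attention and context-vector computation above can be sketched in NumPy. The text does not specify the scoring function, so this sketch assumes a plain dot-product score, $\mathrm{score}(s_{t-1}, h_i) = s_{t-1}^\top h_i$; the encoder states are random placeholders standing in for the BiLSTM outputs $h_i$, and `attention_context` is a hypothetical helper name.

```python
import numpy as np

def softmax(z):
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def attention_context(s_prev, H):
    """Return (alpha_t, c_t) for decoder state s_prev and encoder states H.

    s_prev: shape (d,), the previous decoder hidden state s_{t-1}.
    H:      shape (n, d), row i is h_i = forward h_i concatenated with backward h_i.
    Assumption: dot-product scoring, score(s, h_i) = s . h_i.
    """
    scores = H @ s_prev        # (n,) one score per input position
    alpha = softmax(scores)    # attention weights alpha_{t,i}, summing to 1
    context = alpha @ H        # c_t = sum_i alpha_{t,i} h_i, shape (d,)
    return alpha, context

rng = np.random.default_rng(0)
n, d_half = 6, 4
fwd = rng.standard_normal((n, d_half))    # placeholder forward LSTM states
bwd = rng.standard_normal((n, d_half))    # placeholder backward LSTM states
H = np.concatenate([fwd, bwd], axis=1)    # h_i = concat(forward, backward), shape (6, 8)
s_prev = rng.standard_normal(2 * d_half)

alpha, c_t = attention_context(s_prev, H)
print(alpha.round(3), c_t.shape)
```

Swapping in an additive (Bahdanau-style) or location-sensitive score only changes the `scores` line; the softmax normalization and the weighted sum stay the same.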

The G2P-Seq2Seq model adopts a Transformer architecture, which effectively avoids the issues of vanishing and exploding gradients commonly encountered in traditional recurrent neural networks. This significantly enhances both the training efficiency and accuracy of the model, thereby improving the naturalness and precision of speech synthesis.

3.2.2. Acoustic Modeling

In the proposed approach, acoustic modeling is conducted based on an improved Tacotron2 architecture. The spectrogram prediction network adopts an encoder–attention–decoder structure, which primarily aims to convert the input text sequence into a frame-level Mel-spectrogram representation.

Specifically, the input character sequence is first encoded into character embeddings $E$ through an embedding layer. Then, local features are extracted using a three-layer convolutional module $\mathrm{Conv}(\cdot)$, and the resulting features are passed through a bidirectional LSTM to generate the encoded sequence $H$:

$$H = \mathrm{BiLSTM}\left(\mathrm{Conv}(E)\right)$$

The decoder predicts the Mel-spectrogram frames sequentially from the encoded sequence $H$, generating one frame at a time. The previously predicted spectrogram frame $y_{t-1}$ is first fed into a two-layer fully connected pre-net, and the output of the pre-net at time step $t$, denoted as $p_t$, is computed as:

$$p_t = \mathrm{ReLU}\left(W_2\,\mathrm{ReLU}(W_1 y_{t-1} + b_1) + b_2\right)$$

where $W_1$ and $W_2$ are weight matrices, and $b_1$ and $b_2$ are bias terms.

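Subsequently, $p_t$ is concatenated with the attention context vector $c_t$, and the combined representation is passed through a unidirectional LSTM network. The resulting output $d_t$ is then concatenated again with the attention context vector and projected through a linear transformation to obtain the predicted spectrogram frame $y_t$:

$$d_t = \mathrm{LSTM}\left(p_t \oplus c_t\right), \qquad y_t = W_p\left(d_t \oplus c_t\right) + b_p$$

where $\oplus$ denotes concatenation, and $W_p$ and $b_p$ are the weight matrix and bias of the linear projection.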

Finally, the predicted Mel-spectrogram is passed through a convolutional post-net, which estimates a residual that is added to the prediction made before the post-net. This refines the overall spectrogram reconstruction and produces the final predicted Mel-spectrogram.
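One decoder step (pre-net, LSTM, linear projection) followed by the post-net residual can be sketched in NumPy as below. This is a toy forward pass with random weights and small, assumed layer sizes, not the authors’ implementation: the attention context is a random placeholder, dropout is omitted, and the post-net is reduced to a single 5-tap convolution along time purely to show the residual connection.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy sizes (assumed); Tacotron2 itself uses a 256-unit pre-net and 1024-unit decoder LSTMs.
n_mels, prenet_dim, ctx_dim, lstm_dim = 80, 32, 16, 64

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init(*shape):
    return rng.standard_normal(shape) * 0.1

# Pre-net: two fully connected ReLU layers (Tacotron2 also applies dropout here).
W1, b1 = init(prenet_dim, n_mels), np.zeros(prenet_dim)
W2, b2 = init(prenet_dim, prenet_dim), np.zeros(prenet_dim)
# One unidirectional LSTM cell; gate blocks stacked as [input, forget, cell, output].
Wx, Wh, bl = init(4 * lstm_dim, prenet_dim + ctx_dim), init(4 * lstm_dim, lstm_dim), np.zeros(4 * lstm_dim)
# Linear projection from the concatenation [d_t, c_t] to one Mel frame.
Wp, bp = init(n_mels, lstm_dim + ctx_dim), np.zeros(n_mels)
# Placeholder post-net: one 5-tap convolution along time (the real post-net is a deeper CNN).
post_kernel = init(5)

def lstm_cell(x, h, c):
    i, f, g, o = np.split(Wx @ x + Wh @ h + bl, 4)
    c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    return sigmoid(o) * np.tanh(c), c

def decoder_step(y_prev, c_t, h, c):
    p_t = relu(W2 @ relu(W1 @ y_prev + b1) + b2)          # pre-net output p_t
    d_t, c = lstm_cell(np.concatenate([p_t, c_t]), h, c)   # LSTM over concat(p_t, c_t)
    y_t = Wp @ np.concatenate([d_t, c_t]) + bp             # linear projection to frame y_t
    return y_t, d_t, c

def postnet_residual(mel):
    # Convolve each Mel bin along time; mode="same" keeps the frame count.
    return np.stack([np.convolve(mel[:, k], post_kernel, mode="same")
                     for k in range(mel.shape[1])], axis=1)

h, c = np.zeros(lstm_dim), np.zeros(lstm_dim)
y = np.zeros(n_mels)                      # all-zero "go" frame starts decoding
frames = []
for t in range(10):
    c_t = rng.standard_normal(ctx_dim)    # placeholder for the attention context c_t
    y, h, c = decoder_step(y, c_t, h, c)
    frames.append(y)
mel_before = np.stack(frames)             # (10, 80): prediction before the post-net
mel_after = mel_before + postnet_residual(mel_before)
print(mel_before.shape, mel_after.shape)
```

Because the post-net only adds a residual, the pre-post-net prediction already carries the coarse spectral envelope and the post-net refines it.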

3.2.3. Audio Synthesis

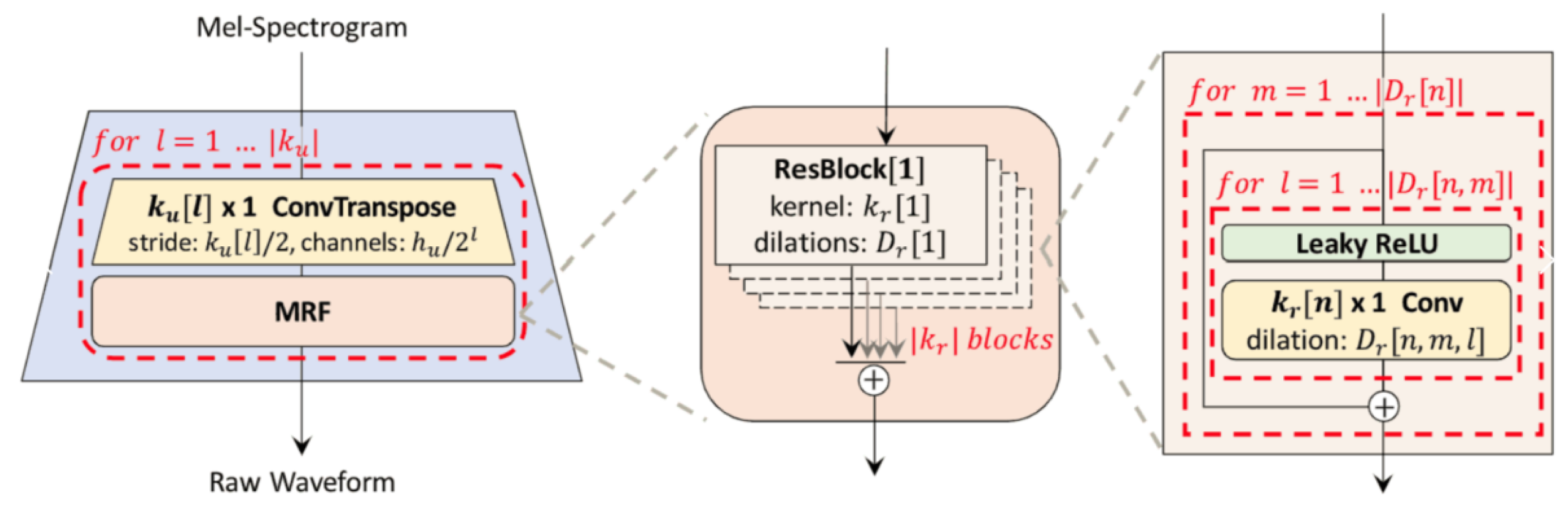

HiFi-GAN is a vocoder based on Generative Adversarial Networks (GANs). Its generator enhances speech quality through an upsampling architecture combined with Multi-Receptive Field (MRF) modules, while the discriminator improves the ability to distinguish between real and synthesized audio using Multi-Scale Discriminators (MSD) and Multi-Period Discriminators (MPD).

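As illustrated in Figure 2, the HiFi-GAN vocoder adopts a modular architecture for its generator, which primarily consists of two components: an upsampling structure and an MRF module. The generator upsamples the Mel-spectrogram through one-dimensional transposed convolution layers, mapping low-temporal-resolution spectral features into high-resolution speech waveforms. Given the input Mel-spectrogram features $\mathbf{m}$, the output speech waveform $\hat{x}$ is obtained after $N$ layers of transposed convolution, each followed by an MRF module. The core mapping relationship can be expressed as:

$$\hat{x} = \left(\mathrm{MRF}_N \circ \mathrm{ConvT}_N \circ \cdots \circ \mathrm{MRF}_1 \circ \mathrm{ConvT}_1\right)(\mathbf{m})$$

where $\mathrm{ConvT}_k$ denotes the $k$-th transposed convolution layer and $\mathrm{MRF}_k$ the MRF module that follows it.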

The MRF module integrates information from multiple receptive fields, facilitating multi-scale feature fusion. This allows the generator to capture hierarchical acoustic patterns more effectively, thus enhancing the quality and naturalness of the synthesized speech.
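The upsampling path can be illustrated by tracking how stacked transposed convolutions stretch the Mel frame rate to the audio sample rate. This single-channel NumPy sketch assumes the HiFi-GAN V1 setting (upsampling rates 8, 8, 2, 2 with kernel sizes 16, 16, 4, 4, i.e. a hop size of 256); the text does not state which configuration SRC-IT2 uses. Weights are random, and the MRF modules are omitted, so it shows only the length bookkeeping, not audio quality. `conv_transpose1d` is a hypothetical helper, not a library API.

```python
import numpy as np

def conv_transpose1d(x, w, stride, padding):
    """Single-channel 1-D transposed convolution with PyTorch-style output length.

    Output length = (len(x) - 1) * stride - 2 * padding + len(w).
    """
    k = len(w)
    full = np.zeros((len(x) - 1) * stride + k)
    for i, xi in enumerate(x):
        # Each input sample adds a scaled copy of the kernel starting at i * stride.
        full[i * stride : i * stride + k] += xi * w
    # Trim `padding` samples from both ends, as PyTorch's `padding` argument does.
    return full[padding : len(full) - padding]

upsample_rates = [8, 8, 2, 2]     # assumed HiFi-GAN V1 setting; product = 256 = hop size
kernel_sizes = [16, 16, 4, 4]     # kernel = 2 * rate, padding = (kernel - rate) // 2

rng = np.random.default_rng(0)
x = rng.standard_normal(50)       # one channel of 50 Mel frames (toy input)
for u, k in zip(upsample_rates, kernel_sizes):
    x = np.where(x > 0, x, 0.1 * x)                                # leaky ReLU
    x = conv_transpose1d(x, rng.standard_normal(k) * 0.1, u, (k - u) // 2)
    # In HiFi-GAN, an MRF module (residual blocks with different kernel sizes
    # and dilations) would follow each upsampling layer here.
print(len(x))                     # 50 frames * 256 = 12800 samples
```

With kernel $2u$ and padding $u/2$, each layer maps a length $L$ to exactly $uL$, so the product of the rates must equal the STFT hop size used to compute the Mel-spectrogram.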

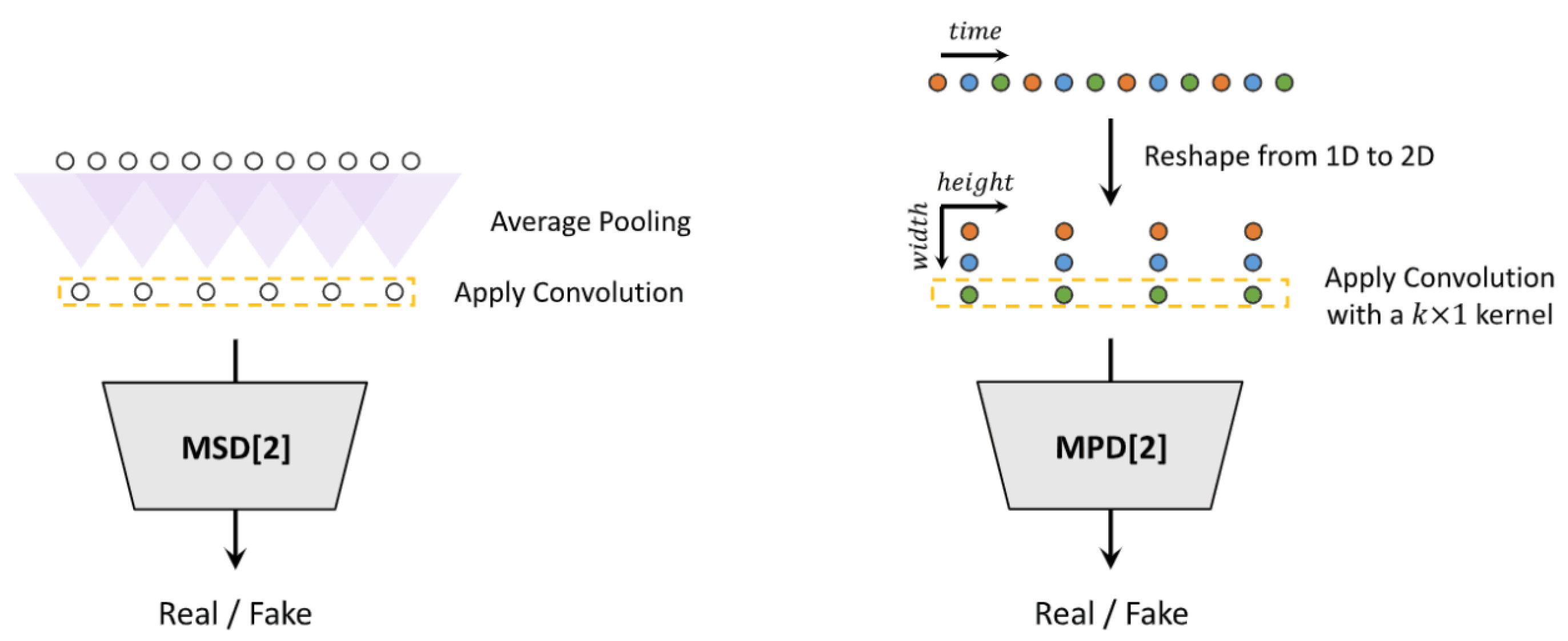

The discriminator of the HiFi-GAN vocoder, as illustrated in Figure 3, is designed to distinguish whether the input audio originates from the real dataset or is synthesized by the generator. It combines two components, the MSD and the MPD. The generator and discriminator are trained adversarially, alternately minimizing the generator loss and the discriminator loss. The generator loss function $\mathcal{L}_G$ consists of the adversarial loss $\mathcal{L}_{adv}$ and the feature matching loss $\mathcal{L}_{FM}$, formulated as follows:

$$\mathcal{L}_G = \mathcal{L}_{adv}(G; D) + \lambda_{fm}\,\mathcal{L}_{FM}(G; D)$$

Here, $\lambda_{fm}$ denotes the weighting coefficient of the feature matching loss, which is employed to balance the stability of adversarial training with the quality of the synthesized speech.
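The loss terms can be made concrete with the least-squares GAN formulation used by the original HiFi-GAN: the generator pushes every sub-discriminator’s output on fake audio toward 1, and the feature matching term is an L1 distance between intermediate discriminator features of real and generated audio. The default $\lambda_{fm} = 2$ follows the HiFi-GAN paper and is an assumption here; HiFi-GAN also adds a Mel-spectrogram L1 term, which the text above does not mention and which is omitted. Inputs are plain arrays standing in for discriminator outputs and feature maps.

```python
import numpy as np

def generator_adv_loss(disc_fake_outputs):
    # Least-squares GAN: push D(G(s)) toward 1 for every sub-discriminator.
    return sum(np.mean((d - 1.0) ** 2) for d in disc_fake_outputs)

def discriminator_loss(disc_real_outputs, disc_fake_outputs):
    # D is trained to output 1 on real audio and 0 on generated audio.
    return sum(np.mean((r - 1.0) ** 2) + np.mean(f ** 2)
               for r, f in zip(disc_real_outputs, disc_fake_outputs))

def feature_matching_loss(real_fmaps, fake_fmaps):
    # L1 distance between intermediate discriminator features, averaged per layer.
    return sum(np.mean(np.abs(r - f)) for r, f in zip(real_fmaps, fake_fmaps))

def generator_loss(disc_fake_outputs, real_fmaps, fake_fmaps, lambda_fm=2.0):
    # L_G = L_adv(G; D) + lambda_fm * L_FM(G; D)
    return (generator_adv_loss(disc_fake_outputs)
            + lambda_fm * feature_matching_loss(real_fmaps, fake_fmaps))

fake = [np.array([0.5, 0.5]), np.array([1.0])]   # two toy sub-discriminator outputs
real = [np.array([1.0, 1.0]), np.array([1.0])]
fm_real, fm_fake = [np.ones((2, 3))], [np.zeros((2, 3))]
print(generator_loss(fake, fm_real, fm_fake))    # 0.25 + 2.0 * 1.0 = 2.25
print(discriminator_loss(real, fake))            # 0.25 + 1.0 = 1.25
```

In training, the two losses are minimized in alternation: one optimizer step on the discriminator with `discriminator_loss`, then one on the generator with `generator_loss`.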

Traditional discriminators evaluate audio at a single fixed scale, whereas the MSD employs a multi-scale discrimination architecture that assesses audio waveforms across multiple temporal resolutions. This enables the capture of subtle variations within the audio, thereby enhancing the discriminator’s ability to differentiate between real and generated speech. The MPD focuses on modeling the periodic characteristics of the audio signal. By performing discrimination at various periodic scales, it helps the discriminator accurately identify the periodic structure inherent in natural speech, improving recognition accuracy and guiding the generator to produce more natural and coherent audio outputs.
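The two discriminators differ mainly in how they view the waveform. Assuming the original HiFi-GAN design, each MPD sub-discriminator reshapes the 1-D signal into a 2-D grid of width $p$ (periods 2, 3, 5, 7, 11), so that samples spaced $p$ apart fall in the same column, while the MSD examines the raw audio and progressively downsampled copies. This NumPy sketch prepares those views only; it uses non-overlapping 2× average pooling as a simplification (HiFi-GAN uses an overlapping pooling layer), and the helper names are hypothetical.

```python
import numpy as np

def mpd_view(x, period):
    """Reshape a 1-D waveform into 2-D (frames, period) for one MPD sub-discriminator."""
    pad = (-len(x)) % period                  # pad so the length is a multiple of `period`
    x = np.pad(x, (0, pad), mode="reflect")
    return x.reshape(-1, period)              # column j holds samples j, j+p, j+2p, ...

def msd_views(x, n_scales=3):
    """Raw audio plus progressively 2x average-pooled copies for the MSD."""
    views = [x]
    for _ in range(n_scales - 1):
        x = x[: len(x) // 2 * 2].reshape(-1, 2).mean(axis=1)   # non-overlapping 2x pooling
        views.append(x)
    return views

x = np.sin(2 * np.pi * 200 * np.arange(1000) / 16000)   # toy 200 Hz tone at 16 kHz
for p in [2, 3, 5, 7, 11]:
    print(p, mpd_view(x, p).shape)
print([len(v) for v in msd_views(x)])   # [1000, 500, 250]
```

Using prime periods keeps the sub-discriminators from observing the same sample spacings, so together they cover a wide range of periodic structure.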

3.3. Mongolian Emotional Speech Synthesis Based on Improved Tacotron2

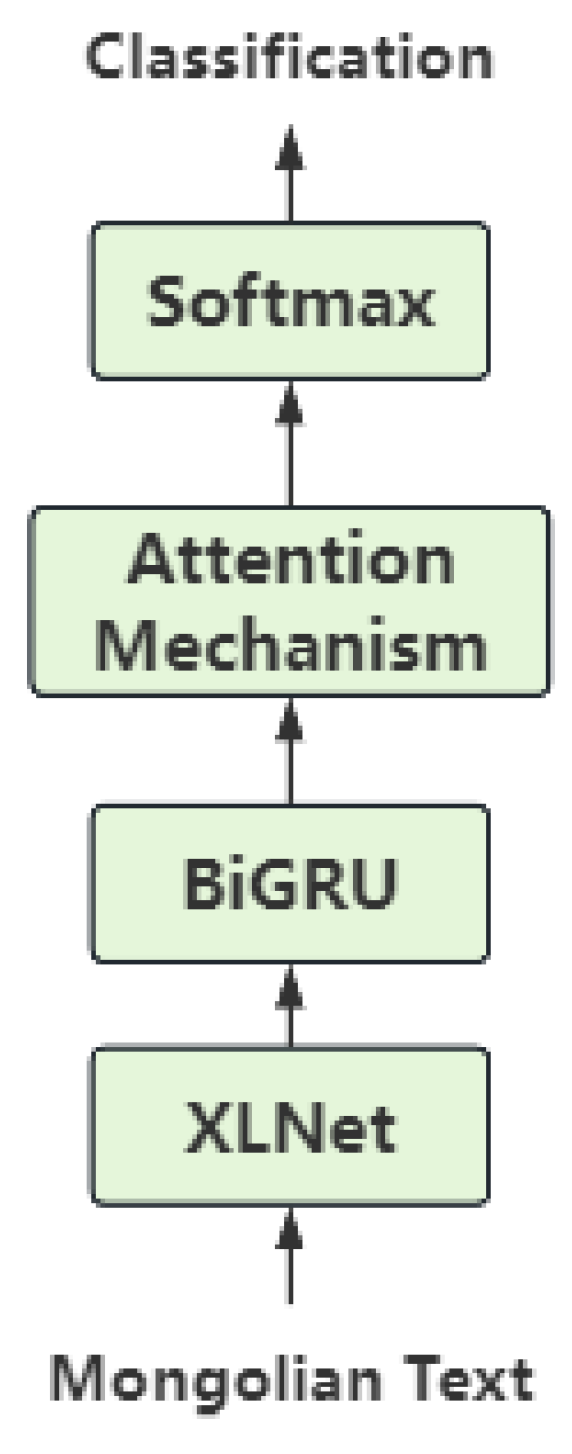

3.3.1. Text Sentiment Analysis

In the textual emotion analysis module, XLNet is first utilized to obtain context-aware word embeddings that capture semantic dependencies within the input text. Subsequently, a Bidirectional Gated Recurrent Unit (BiGRU) is employed to extract global textual features. An attention mechanism is then applied to dynamically adjust the weights of the extracted features—assigning higher weights to features with greater emotional relevance and lower weights to less informative ones. Finally, a softmax classifier is used to classify the refined emotional features, yielding the final sentiment prediction.
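The attention weighting and softmax classification steps can be sketched as follows. The text does not specify the attention form, so this assumes additive attention, $u_i = \tanh(W h_i + b)$ with weights $\mathrm{softmax}(u_i^\top v)$, and it assumes the classifier predicts the seven emotion categories of the dataset. BiGRU outputs and all weights are random placeholders.

```python
import numpy as np

EMOTIONS = ["happy", "angry", "sad", "surprise", "fear", "disgusted", "neutral"]

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def attention_pool(H, W, b, v):
    """Additive attention over BiGRU outputs H (n, d) -> weights (n,), sentence vector (d,)."""
    u = np.tanh(H @ W.T + b)     # (n, a) projected features
    weights = softmax(u @ v)     # (n,) higher weight = more emotion-relevant position
    return weights, weights @ H  # weighted sum of the BiGRU outputs

rng = np.random.default_rng(0)
n, d, a = 12, 16, 8                        # tokens, BiGRU output size, attention size (toy)
H = rng.standard_normal((n, d))            # placeholder for BiGRU outputs
W, b, v = rng.standard_normal((a, d)), np.zeros(a), rng.standard_normal(a)
W_cls, b_cls = rng.standard_normal((len(EMOTIONS), d)), np.zeros(len(EMOTIONS))

weights, sent = attention_pool(H, W, b, v)
probs = softmax(W_cls @ sent + b_cls)      # softmax classifier over the seven emotions
print(EMOTIONS[int(np.argmax(probs))], probs.round(3))
```

The attention weights make the pooled sentence vector dominated by the tokens the model finds most emotionally informative, rather than by a plain average.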

Figure 4 illustrates the architecture of the text emotion analysis module.

The XLNet model combines the advantages of autoregressive and autoencoding approaches, enabling it to capture rich contextual information and long-range dependencies within sequences. It rests on two key ideas. The first is permutation language modeling: the model maximizes the expected log-likelihood over permutations of the factorization order, so each token is predicted from different subsets of the other tokens and learns to use context from both sides. The token order of the input itself is not changed; attention masks determine which tokens each position may attend to. The second is two-stream self-attention, built on the Transformer-XL backbone, which allows the model to learn dependencies among all tokens in the input sequence while remaining aware of the position being predicted. XLNet was reported to outperform BERT on a range of tasks, including text classification and sentiment analysis.
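A toy illustration of the permutation idea (not XLNet itself; the module uses a pretrained XLNet): sample a factorization order and derive the attention masks that implement it, leaving token positions untouched. Position $i$ may attend to position $j$ only if $j$ comes earlier in the sampled order; the content stream additionally sees its own token. `permutation_masks` is a hypothetical helper.

```python
import numpy as np

def permutation_masks(n, rng):
    """Sample a factorization order z and build XLNet-style attention masks.

    mask[i, j] = True means position i may attend to position j.
    Token positions are NOT shuffled; only the prediction order changes.
    """
    z = rng.permutation(n)           # factorization order: z[0] is predicted first
    rank = np.empty(n, dtype=int)
    rank[z] = np.arange(n)           # rank[pos] = step at which pos is predicted
    # Query stream: sees only tokens predicted strictly earlier (not itself).
    query_mask = rank[None, :] < rank[:, None]
    # Content stream: additionally sees its own token.
    content_mask = rank[None, :] <= rank[:, None]
    return z, query_mask, content_mask

z, q, c = permutation_masks(5, np.random.default_rng(0))
print(z)
print(q.astype(int))
```

Averaged over many sampled orders, every token is eventually predicted with context from both its left and its right, which is how XLNet obtains bidirectional context without the [MASK] tokens used by BERT.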

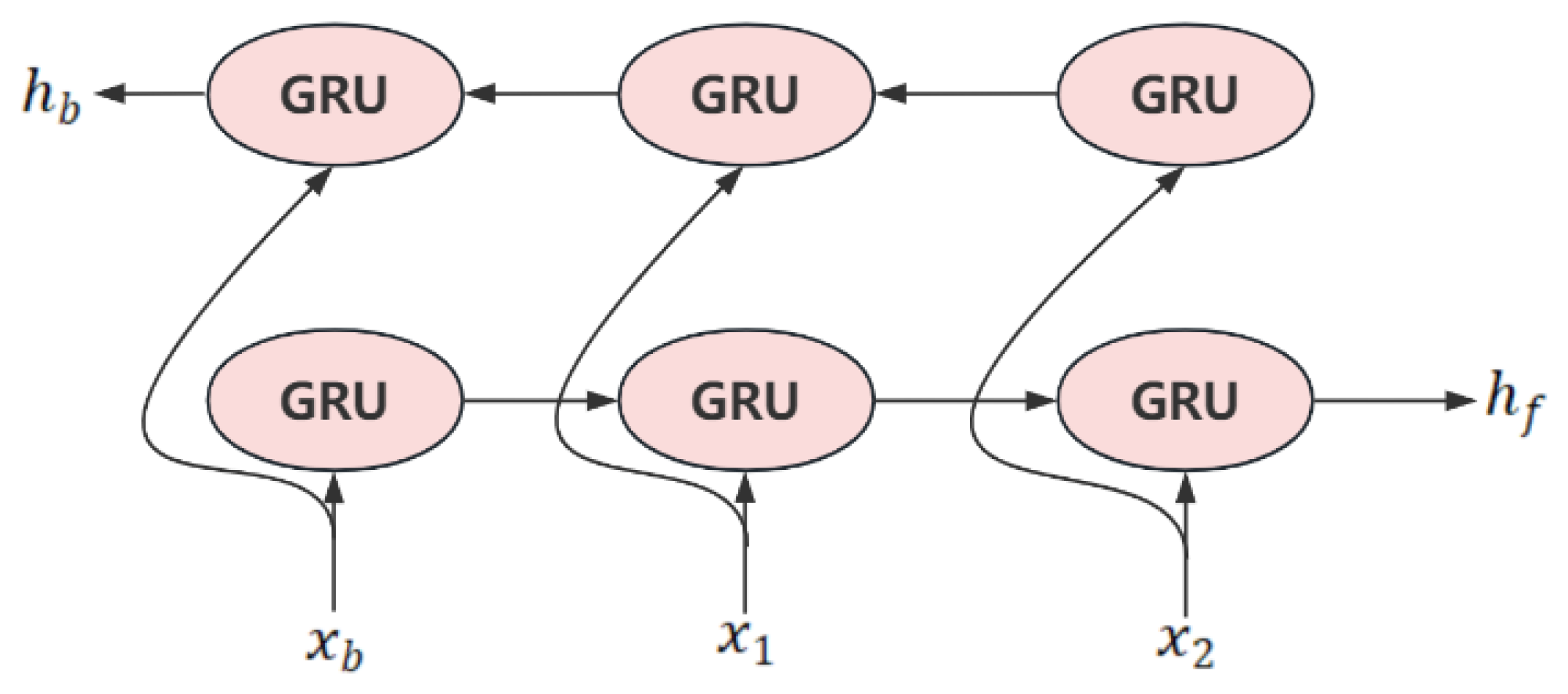

The BiGRU extends the traditional GRU by processing the input sequence in both forward and backward directions. While the standard GRU captures only forward dependencies, BiGRU consists of two independent GRUs, one operating in the forward direction and the other in reverse, thereby leveraging both past and future contextual information for more comprehensive sequence modeling. The architecture of BiGRU is illustrated in Figure 5.

In BiGRU, each GRU unit utilizes an update gate and a reset gate to regulate the flow of information. The update gate determines how much of the current state should be updated, while the reset gate controls the extent to which the previous state should be discarded.

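For a given input sequence $x = (x_1, x_2, \ldots, x_n)$, the computation of the BiGRU is defined as follows:

$$\overrightarrow{h}_i = \mathrm{GRU}\left(x_i, \overrightarrow{h}_{i-1}\right), \qquad \overleftarrow{h}_i = \mathrm{GRU}\left(x_i, \overleftarrow{h}_{i+1}\right)$$

Here, $\overrightarrow{h}_i$ and $\overleftarrow{h}_i$ denote the hidden states at time step $i$ generated by the forward and backward GRU, respectively. The function $\mathrm{GRU}(\cdot)$ represents a nonlinear transformation applied to the input word vector, encoding it into the corresponding GRU hidden state, and $x_i$ is the $i$-th element of the input sequence.

The hidden states from both directions are then concatenated to form the final hidden representation $h_i = \overrightarrow{h}_i \oplus \overleftarrow{h}_i$, where $\oplus$ denotes concatenation. Finally, $h_i$ is passed through a fully connected layer to produce the output $y_i$.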
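The update/reset gating and the bidirectional concatenation can be sketched with a minimal NumPy GRU. Biases are omitted, weights are random, the inputs are placeholder word vectors (in the module they would come from XLNet), and the gate convention $h_i = (1 - z_i) \odot n_i + z_i \odot h_{i-1}$ is the common PyTorch-style form, assumed here because the text does not give the gate equations.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class GRUCell:
    def __init__(self, d_in, d_h, rng, scale=0.1):
        self.d_h = d_h
        self.Wz, self.Uz = rng.standard_normal((d_h, d_in)) * scale, rng.standard_normal((d_h, d_h)) * scale
        self.Wr, self.Ur = rng.standard_normal((d_h, d_in)) * scale, rng.standard_normal((d_h, d_h)) * scale
        self.Wn, self.Un = rng.standard_normal((d_h, d_in)) * scale, rng.standard_normal((d_h, d_h)) * scale

    def step(self, x, h):
        z = sigmoid(self.Wz @ x + self.Uz @ h)          # update gate: how much old state to keep
        r = sigmoid(self.Wr @ x + self.Ur @ h)          # reset gate: how much old state feeds the candidate
        n = np.tanh(self.Wn @ x + self.Un @ (r * h))    # candidate state
        return (1.0 - z) * n + z * h

def run(cell, xs):
    h, out = np.zeros(cell.d_h), []
    for x in xs:
        h = cell.step(x, h)
        out.append(h)
    return np.stack(out)

def bigru(xs, fwd_cell, bwd_cell):
    h_f = run(fwd_cell, xs)                  # forward h_i depends on x_1..x_i
    h_b = run(bwd_cell, xs[::-1])[::-1]      # backward h_i depends on x_i..x_n (re-aligned)
    return np.concatenate([h_f, h_b], axis=1)   # h_i = concat(forward, backward)

rng = np.random.default_rng(0)
n, d_in, d_h, d_out = 7, 10, 6, 4
xs = rng.standard_normal((n, d_in))          # placeholder word vectors
fwd_cell, bwd_cell = GRUCell(d_in, d_h, rng), GRUCell(d_in, d_h, rng)
H = bigru(xs, fwd_cell, bwd_cell)
W_fc, b_fc = rng.standard_normal((d_out, 2 * d_h)), np.zeros(d_out)
Y = H @ W_fc.T + b_fc                        # fully connected layer: y_i = W h_i + b
print(H.shape, Y.shape)                      # (7, 12) (7, 4)
```

A quick check of the bidirectionality: changing only the last word vector leaves every forward state before it unchanged, but alters the backward state at the first position.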

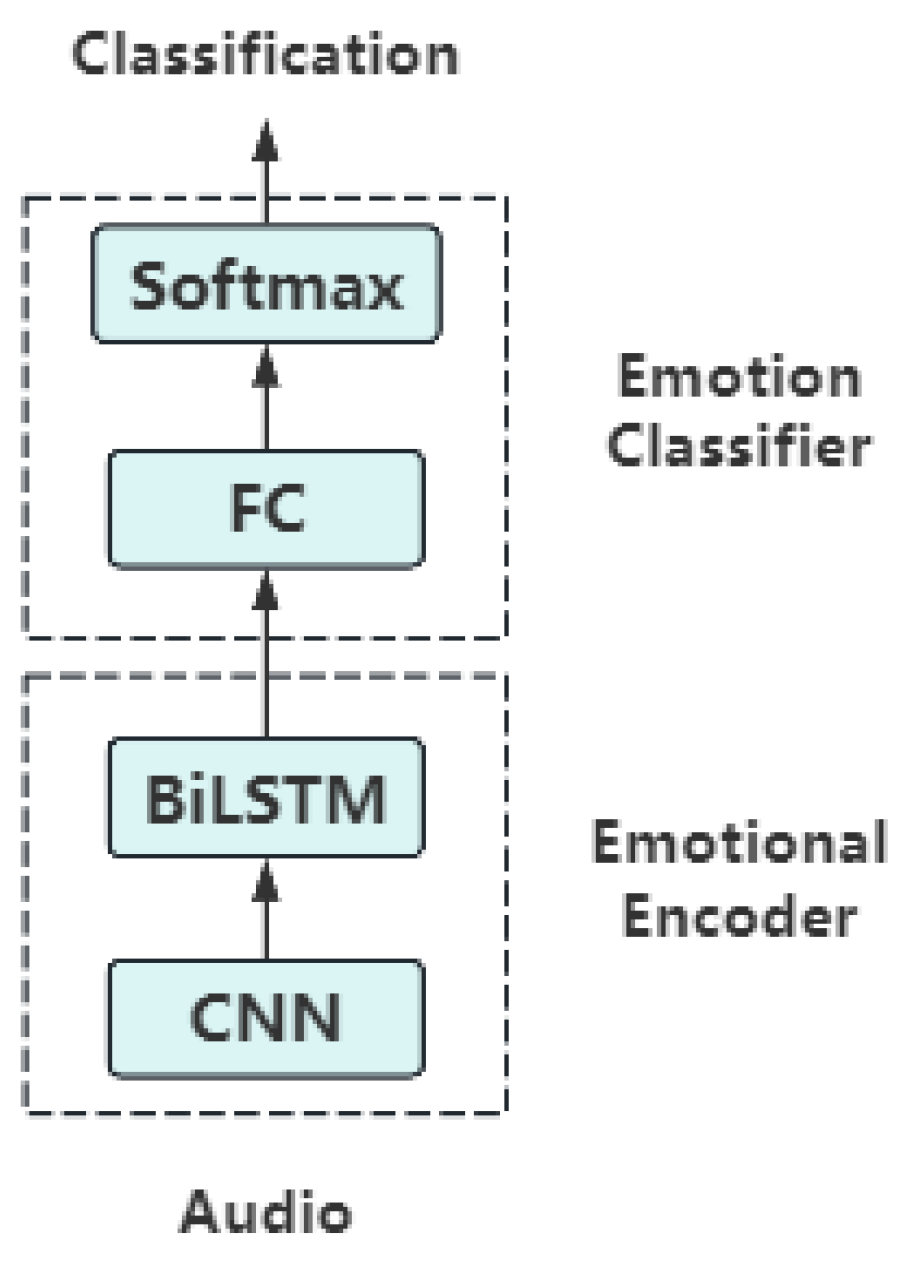

3.3.2. Audio Sentiment Analysis

In audio-based emotion analysis, emotional features are first extracted from the audio signal using an emotion encoder. These features are then passed to an emotion classifier, which performs the final classification to determine the corresponding emotional category.

As shown in Figure 6, the emotion encoder consists of a CNN followed by a BiLSTM layer, which together generate the emotional feature representations. The emotion classifier comprises a fully connected layer and a softmax layer, and the final emotional category is predicted from the output of the softmax layer.

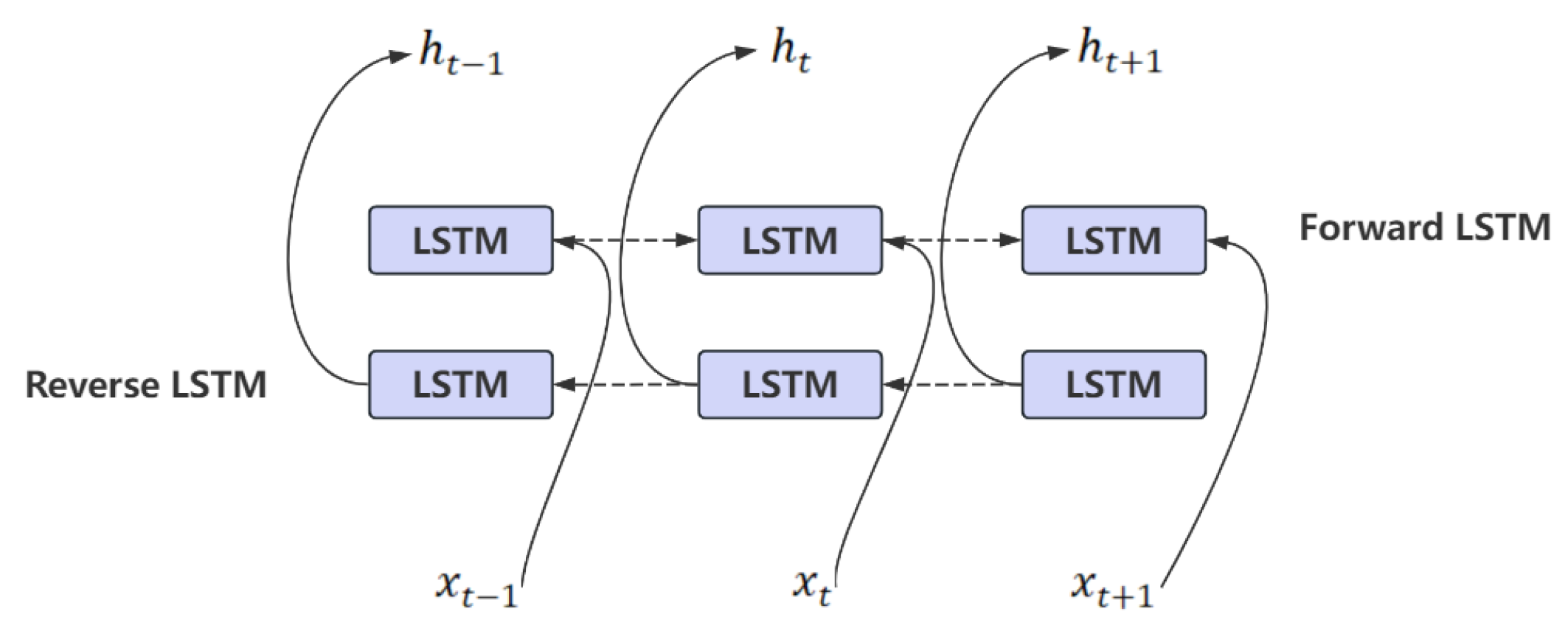

Figure 7 illustrates the structure of the BiLSTM network, which processes sequential data in both directions using forward and backward LSTM layers. This enables the model to capture bidirectional contextual information and learn more complex dependency structures, which is particularly useful for long sequences. In addition, the BiLSTM does not depend on handcrafted features; it can learn rich and informative feature representations directly from the data.
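The audio emotion path (CNN, then BiLSTM, then a fully connected layer with softmax) can be sketched as a NumPy forward pass. Layer sizes, the single 3-tap convolution, and the mean pooling over time that turns frame features into one utterance vector are all assumptions, since the text gives none of them; weights and the log-Mel input are random placeholders, so the predicted label is meaningless.

```python
import numpy as np

EMOTIONS = ["happy", "angry", "sad", "surprise", "fear", "disgusted", "neutral"]
rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def conv1d_relu(x, W, b):
    """x: (T, C_in); W: (C_out, k, C_in); 'same' zero padding along time, then ReLU."""
    k = W.shape[1]
    xp = np.pad(x, ((k // 2, k // 2), (0, 0)))
    windows = np.stack([xp[t:t + k] for t in range(x.shape[0])])   # (T, k, C_in)
    return np.maximum(np.einsum("tkc,okc->to", windows, W) + b, 0.0)

def lstm(xs, Wx, Wh, b):
    """Run one LSTM direction; gate blocks stacked as [input, forget, cell, output]."""
    d_h = Wh.shape[1]
    h, c, out = np.zeros(d_h), np.zeros(d_h), []
    for x in xs:
        i, f, g, o = np.split(Wx @ x + Wh @ h + b, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        out.append(h)
    return np.stack(out)

def lstm_params(d_in, d_h):
    return (rng.standard_normal((4 * d_h, d_in)) * 0.1,
            rng.standard_normal((4 * d_h, d_h)) * 0.1, np.zeros(4 * d_h))

T, n_mels, c_out, d_h = 50, 80, 32, 16            # toy sizes (assumed)
mel = rng.standard_normal((T, n_mels))             # placeholder log-Mel input
W_conv, b_conv = rng.standard_normal((c_out, 3, n_mels)) * 0.05, np.zeros(c_out)
fwd, bwd = lstm_params(c_out, d_h), lstm_params(c_out, d_h)
W_fc, b_fc = rng.standard_normal((len(EMOTIONS), 2 * d_h)), np.zeros(len(EMOTIONS))

feats = conv1d_relu(mel, W_conv, b_conv)                                # CNN: local features
H = np.concatenate([lstm(feats, *fwd), lstm(feats[::-1], *bwd)[::-1]], axis=1)  # BiLSTM
emb = H.mean(axis=0)                 # utterance-level emotion feature (mean pooling assumed)
probs = softmax(W_fc @ emb + b_fc)   # fully connected + softmax classifier
print(EMOTIONS[int(np.argmax(probs))])
```

The convolution captures short-range spectral patterns, and the BiLSTM then relates them across the whole utterance before classification.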

3.4. Speech Rate-Controllable Mongolian Emotional Speech Synthesis Based on Improved Tacotron2

Building upon the improved Tacotron2-based Mongolian emotional speech synthesis model, a style encoder and a speech rate control variable are incorporated to construct a speech-rate-controllable Mongolian emotional speech synthesis model. The style encoder is designed to extract acoustic features, such as pitch, intonation, and emotional attributes, from the Mel-spectrogram of emotional speech. This allows the model to retain other prosodic and acoustic characteristics while the speech rate is adjusted, minimizing undesirable changes to non-temporal attributes. The speech rate variable, on the other hand, explicitly controls the speaking rate of the synthesized speech, enabling the generation of both fast-paced and slow-paced emotional utterances. The enhanced model can therefore adjust the speaking rate dynamically while preserving the other speech attributes as far as possible.

3.4.1. Style Encoder

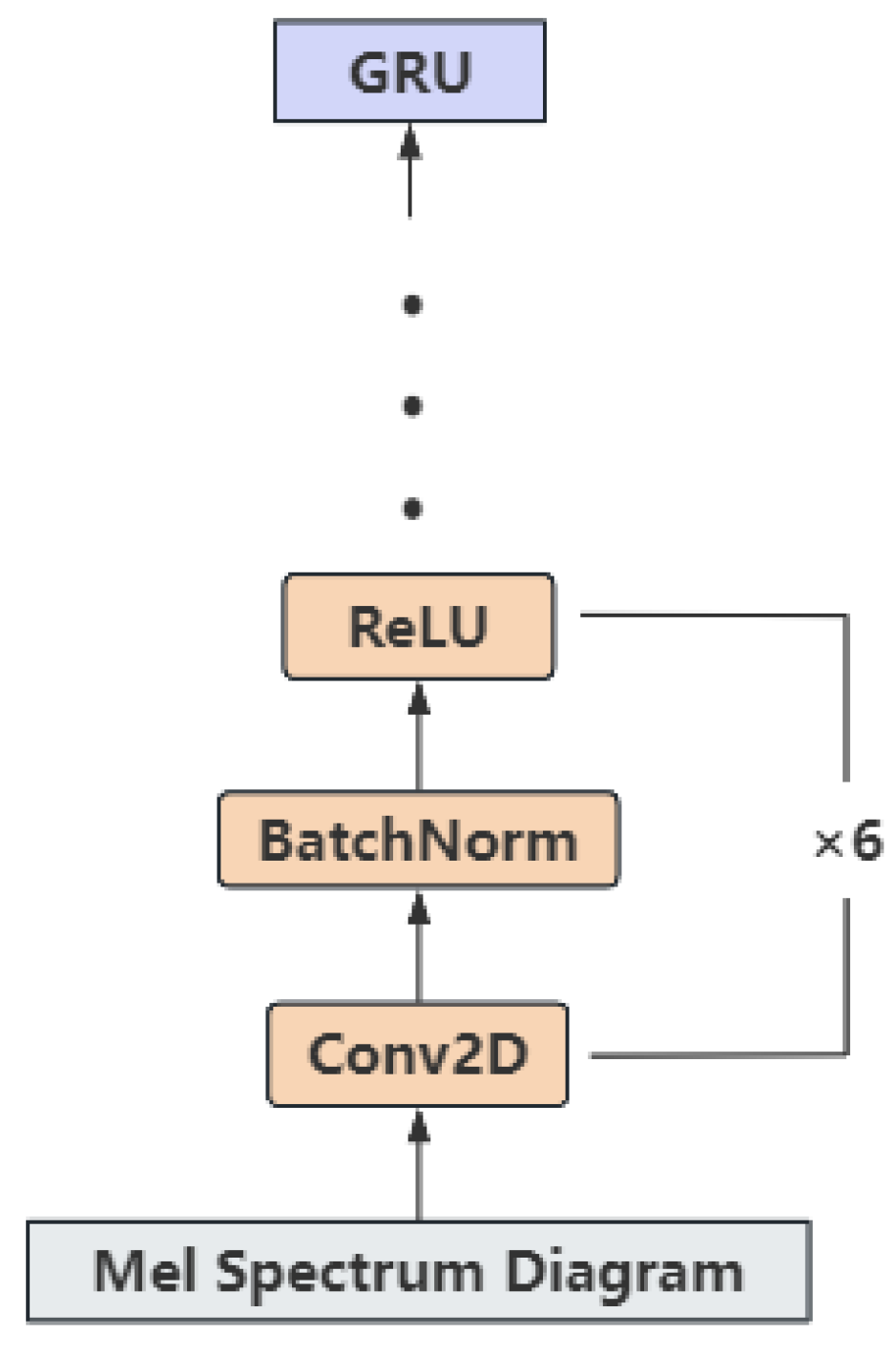

The style encoder consists of six convolutional layers, each followed by batch normalization and a ReLU activation function. A GRU layer is connected after the convolutional layers to further capture temporal dependencies in the encoded features.

As illustrated in Figure 8, the style encoder takes the Mel-spectrogram of emotional speech as input. The input is passed through six convolutional layers to extract local features across different dimensions of the spectrogram. Subsequently, a GRU is employed to capture the temporal dependencies within the spectrogram sequence. The style encoder is capable of learning acoustic features such as pitch, intonation, and emotion types from the Mel-spectrogram. Batch Normalization (BN) is an optimization technique in deep learning that helps mitigate issues such as vanishing or exploding gradients during model training. By normalizing the mini-batch inputs to each layer, BN alleviates internal covariate shift, thereby improving the training stability and convergence speed of the model.
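The text specifies six convolutional layers with BN and ReLU followed by a GRU, but not the kernel sizes, strides, or channel counts. The sketch below traces tensor shapes through such a stack under the assumption that it follows the reference encoder of Global Style Tokens (3×3 kernels, 2×2 stride, "same" padding, channels 32, 32, 64, 64, 128, 128) with 80 Mel bins; it only shows how the GRU input size follows from those choices.

```python
import math

def style_encoder_shapes(n_frames, n_mels=80, channels=(32, 32, 64, 64, 128, 128), stride=2):
    """Trace (channels, time, freq) through six stride-2 'same'-padded conv layers.

    BN and ReLU keep the shape unchanged, so only the convolutions matter here.
    Returns the per-layer shapes and the GRU input size (channels * remaining freq bins).
    """
    t, f = n_frames, n_mels
    shapes = []
    for ch in channels:
        t, f = math.ceil(t / stride), math.ceil(f / stride)   # 'same' padding with stride 2
        shapes.append((ch, t, f))
    gru_input_dim = channels[-1] * f    # channel and freq axes are flattened per time step
    return shapes, gru_input_dim

shapes, gru_in = style_encoder_shapes(400)
for s in shapes:
    print(s)
print("GRU input size:", gru_in)   # 128 * 2 = 256
```

Under these assumptions, a 400-frame Mel-spectrogram reaches the GRU as 7 time steps of 256-dimensional features, and the GRU's final state would serve as the fixed-length style embedding.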

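During the execution of BN, for a given input mini-batch $x = \{x_1, x_2, \ldots, x_m\}$, the mean $\mu_B$ and variance $\sigma_B^2$ of each feature are first computed as follows:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad \sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2$$

Here, $m$ denotes the mini-batch size, and $x_i$ represents the $i$-th input sample.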

Subsequently, the input data are normalized using the computed mean and variance:

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}$$

where $\epsilon$ is a small constant introduced to prevent division by zero.

Finally, a linear transformation is applied:

$$y_i = \gamma\,\hat{x}_i + \beta$$

where $\gamma$ is used to scale the normalized data, and $\beta$ is employed to shift the normalized data.
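The three BN steps (mini-batch statistics, normalization, then scale and shift with learnable $\gamma$ and $\beta$) map directly onto a few NumPy lines. This is a training-mode sketch over a `(batch, features)` array; at inference time, frameworks substitute running averages of the mean and variance, which this sketch does not track.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode BN over a mini-batch x of shape (m, features)."""
    mu = x.mean(axis=0)                        # per-feature mini-batch mean mu_B
    var = ((x - mu) ** 2).mean(axis=0)         # per-feature (biased) variance sigma_B^2
    x_hat = (x - mu) / np.sqrt(var + eps)      # normalize; eps avoids division by zero
    return gamma * x_hat + beta                # learnable scale gamma and shift beta

x = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]])
y = batch_norm_train(x, gamma=np.ones(2), beta=np.zeros(2))
print(y.mean(axis=0).round(6), y.std(axis=0).round(4))
```

With $\gamma = 1$ and $\beta = 0$, each feature of the output has approximately zero mean and unit variance regardless of its original scale; other values of $\gamma$ and $\beta$ let the network undo the normalization where that helps.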

3.4.2. Speech Rate Variable

Common methods for measuring speech rate include Words Per Minute (WPM), Syllables Per Minute (SPM), and Words Per Silence (WPS). Among these, WPM is the most widely adopted metric, calculated by dividing the total number of words by the overall speech duration. In the field of phonetics, however, SPM is more frequently used because syllable counts offer a more fine-grained representation of speech structure. SPM is computed by dividing the total number of syllables by the total duration of the utterance. Subsequent studies have also introduced WPS as a relevant metric, which is calculated by dividing the number of words by the number of pauses. This metric has been shown to correlate strongly with a speaker’s rhythmic patterns and fluency. Nonetheless, all of the above methods have certain limitations when compared to phoneme-based approaches for estimating speech rate, especially in contexts where phonemes serve as the primary modeling unit.

SRC-IT2 takes phonemes as its input, so it is more appropriate to compute the speech rate from phoneme-level information. Inverse Mean Duration (IMD), the reciprocal of the mean phoneme duration, is commonly employed as a measure of phoneme duration characteristics. A higher IMD value indicates a faster speaking rate, while a lower IMD value corresponds to slower speech. Accordingly, this study adopts IMD to quantify the speech rate, formulated as follows:

$$\mathrm{IMD} = \alpha \cdot \frac{S}{T}$$

where $\alpha$ is the scaling factor for the speech rate, $S$ denotes the number of phonemes in the text sequence, and $T$ represents the total duration of the audio.
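The rate measures discussed in this section reduce to simple ratios. The sketch below assumes the scaling factor multiplies $S/T$ (defaulting to 1), as in the formula above, and adds a hypothetical `target_duration` helper that inverts it to find the duration giving a requested rate. The 36-phoneme utterance is hypothetical; the durations are the approximate values reported for the ‘angry’ examples in Section 4.5.2.

```python
def wpm(n_words, duration_s):
    return n_words / (duration_s / 60.0)        # words per minute

def spm(n_syllables, duration_s):
    return n_syllables / (duration_s / 60.0)    # syllables per minute

def wps(n_words, n_pauses):
    return n_words / n_pauses                   # words per silence (pause)

def imd(n_phonemes, duration_s, alpha=1.0):
    """Inverse mean phoneme duration, scaled: alpha * S / T (phonemes per second)."""
    return alpha * n_phonemes / duration_s

def target_duration(n_phonemes, rate, alpha=1.0):
    """Invert the IMD formula: the duration T that yields the requested rate."""
    return alpha * n_phonemes / rate

# Hypothetical 36-phoneme utterance at the three reported durations.
for label, dur in [("fast", 3.6), ("normal", 4.0), ("slow", 4.5)]:
    print(f"{label}: {imd(36, dur):.2f} phonemes/s")   # 10.00, 9.00, 8.00
```

Unlike WPM or WPS, IMD does not depend on how words or pauses are segmented, and it matches the phoneme-level input unit of the model directly.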

4. Experiment

4.1. Dataset

The dataset employed in this study was constructed by the Artificial Intelligence Laboratory of Inner Mongolia University of Technology. It comprises a speech corpus covering seven discrete emotional categories: ‘happy’, ‘angry’, ‘sad’, ‘surprise’, ‘fear’, ‘disgusted’, and ‘neutral’. For each emotional category, 300 utterances were recorded, giving 2100 utterances with a total duration of approximately 2.25 h.

Table 1 presents the duration range of emotional speech samples across different categories.

Prior to model training, a Mongolian text front-end processing method was applied to convert Mongolian script into its corresponding Latin-based sequences. Each processed text sequence was then aligned with its corresponding emotional speech audio file. The resulting dataset was randomly divided into training, testing, and validation sets according to an 8:1:1 ratio, yielding 1680, 210, and 210 samples, respectively, for each subset.
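The 8:1:1 random split can be reproduced with the standard library, as sketched below. The utterance IDs are hypothetical placeholders (300 per emotion, 2100 in total), and the fixed seed is an assumption added for reproducibility; the text does not say whether the split was stratified by emotion, so this sketch shuffles the whole pool.

```python
import random

EMOTIONS = ["happy", "angry", "sad", "surprise", "fear", "disgusted", "neutral"]

def split_8_1_1(items, seed=0):
    """Shuffle and split into train/test/validation with an 8:1:1 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)    # seeded so the split is reproducible
    n_train = len(items) * 8 // 10
    n_test = len(items) // 10
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])

# Hypothetical utterance IDs: 300 per emotion, 2100 in total.
utterances = [f"{emo}_{i:03d}" for emo in EMOTIONS for i in range(300)]
train, test, val = split_8_1_1(utterances)
print(len(train), len(test), len(val))   # 1680 210 210
```

With only 30 test utterances per emotion on average, a per-emotion (stratified) split would guarantee balanced test classes; shuffling the pool as above lets the per-class counts vary slightly.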

4.2. Experimental Environment and Evaluation Index

4.2.1. Experimental Environment

The detailed experimental environment configuration is summarized in Table 2.

The hyperparameters are shown in Table 3.

4.2.2. Evaluation Index

In this study, the Mean Opinion Score (MOS) metric was adopted to evaluate the quality of synthesized speech. Additionally, emotion recognition accuracy was used to assess how well the intended emotions are perceived in speech synthesized at different speech rates.

The MOS is a widely used subjective evaluation method in speech synthesis, and this study employs it to assess the synthesis model. In the MOS evaluation, a group of listeners rates the synthesized speech samples on a five-point scale from 1 (bad) to 5 (excellent). Generally, a MOS below 3 is considered unacceptable in terms of speech quality, while a score above 4 indicates high-quality speech. Assuming $M$ evaluators assess $N$ synthesized speech samples, the MOS is calculated as follows:

$$\mathrm{MOS} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N} s_{ij}$$

where $s_{ij}$ is the score that evaluator $i$ assigns to sample $j$.
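The MOS formula is the grand mean of an $M \times N$ rating matrix. This sketch assumes every evaluator rates every sample on the 1 to 5 scale and rejects other inputs; the ratings shown are hypothetical.

```python
def mos(scores):
    """Mean opinion score for an M x N matrix: scores[i][j] is evaluator i's rating of sample j."""
    m, n = len(scores), len(scores[0])
    if any(len(row) != n for row in scores):
        raise ValueError("every evaluator must rate every sample")
    if any(not 1 <= s <= 5 for row in scores for s in row):
        raise ValueError("ratings must lie on the 1-5 scale")
    return sum(sum(row) for row in scores) / (m * n)

ratings = [[4, 3, 4],   # hypothetical evaluator 1, three samples
           [3, 4, 4]]   # hypothetical evaluator 2
print(round(mos(ratings), 3))   # 22 / 6 -> 3.667
```

When evaluators skip some samples, the usual practice is to average only the valid scores, which this strict version deliberately does not handle.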

4.3. Sentiment Classification Experiment of Speech Speed Perception

As shown in Table 4, the synthesized emotions remain reasonably well recognized at all three speech rates. From the perspective of emotional categories, the recognition accuracies of ‘angry’, ‘surprise’, ‘fear’, and ‘neutral’ at all three speech rates are higher than the average recognition accuracy for the corresponding rate. Specifically, ‘angry’ achieves the highest recognition accuracies at the fast and medium speech rates, reaching 0.87 and 0.82, respectively, while ‘surprise’ achieves the highest recognition accuracy of 0.85 at the slow speech rate. Conversely, ‘disgusted’ yields the lowest recognition accuracies at the medium and slow speech rates, at 0.62 and 0.69, respectively, and ‘happy’ has its lowest recognition accuracy (0.72) at the fast speech rate. From the perspective of speech rate, the average recognition accuracy across all seven emotion categories is lowest at the medium speech rate (0.74), whereas the fast and slow rates yield higher averages of 0.81 and 0.78, respectively. This indicates that emotions in fast and slow speech are more distinguishable and perceptually salient than those at a medium speech rate.

Overall, the experimental results show slight variations in synthesis performance across different speech rates. The ‘angry’, ‘surprise’, ‘fear’, and ‘neutral’ categories demonstrate consistently strong synthesis quality at fast, medium, and slow rates. In contrast, ‘happy’, ‘sad’, and ‘disgusted’ show better synthesis performance at fast and slow rates compared to medium. These findings also suggest that the model exhibits varying learning capabilities across different speech rate conditions.

4.4. Emotional Speech Synthesis Quality Comparison Experiment

To evaluate the quality of emotional speech synthesis, a panel of 30 native Mongolian-speaking listeners was invited to serve as professional evaluators. A blind and independent listening test was conducted, in which participants rated the synthesized speech samples. The MOS was then calculated as the arithmetic mean of all valid scores. The evaluators independently rated audio samples synthesized by three baseline models, FastSpeech2 [5], Deep Voice3 [18], and VAE-Tacotron2 [19], as well as the proposed SRC-IT2 model presented in this study.

The results presented in Table 5 indicate that the proposed SRC-IT2 model achieves an average MOS score of 3.70, slightly outperforming the other three baseline models. The VAE-Tacotron2 and Deep Voice3 models yield comparable MOS scores of 3.62 and 3.65, respectively, while FastSpeech2 demonstrates the lowest performance among the four models with an average MOS score of 3.54. Notably, FastSpeech2 underperforms particularly in the emotional categories of ‘happy’, ‘disgusted’, and ‘sad’.

The SRC-IT2 model achieves the highest MOS in most emotional categories: it outperforms all baseline models in the ‘happy’, ‘angry’, ‘sad’, ‘fear’, ‘disgusted’, and ‘neutral’ categories, and scores slightly lower than Deep Voice3 only in the ‘surprise’ category. Overall, the results suggest that SRC-IT2 performs best among the compared models in emotional speech synthesis, although its margins over the baselines are small.

4.5. SRC-IT2 Model Ablation Experiment

4.5.1. Ablation Experiment of Emotional Module

To evaluate the impact of emotional embeddings on the SRC-IT2 model, ablation experiments were conducted and categorized into two groups: A and B. In Group A, the audio-based emotion analysis module was removed to assess the contribution of the text-based emotion analysis module to the overall model performance. In Group B, the text-based emotion analysis module was excluded to examine the influence of the audio-based emotion analysis module.

As shown in Table 6, the complete model (SRC-IT2) achieved the highest average MOS score of 3.70. The scores for Group A and Group B were slightly lower, at 3.62 and 3.59, respectively. These results indicate that incorporating both text-based and audio-based emotion analysis modules contributes to improved emotional speech synthesis quality, resulting in more natural and expressive synthesized speech.

4.5.2. Ablation Experiments at Different Speech Rates

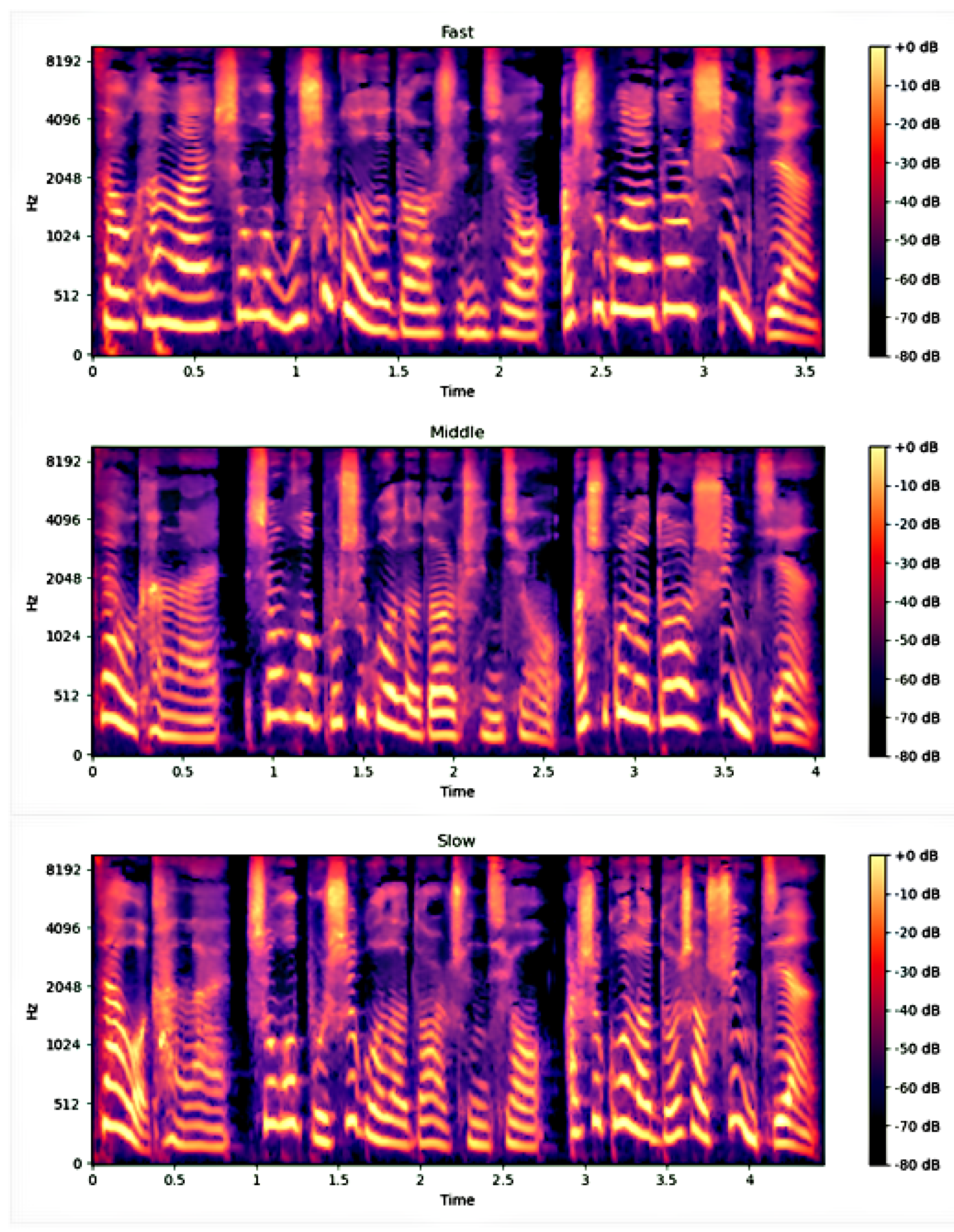

To evaluate the impact of different speech rates on the model’s performance, an ablation study was conducted. One emotional speech category was selected from the seven synthesized emotion types for spectrogram-based analysis at varying speech rates.

Figure 9 presents the Mel-spectrograms of synthesized speech with different speech rates under the ‘angry’ emotion condition.

The Mel-spectrograms show clear differences among the synthesized emotional utterances at the three speaking rates. In terms of duration, the fast utterance lasts approximately 3.6 s, the normal-speed utterance about 4 s, and the slow utterance approximately 4.5 s, which is consistent with natural variation in speaking rate. In terms of spectrogram structure, the fast-speech Mel-spectrogram is more compact, with clearly visible high-frequency components; however, because of the accelerated rate, fine-grained details are less distinct than at the normal and slow speeds. The normal-speed Mel-spectrogram shows a stable, evenly distributed spectral pattern that captures subtle emotional nuances. In contrast, the slow-speed Mel-spectrogram has a more relaxed structure, with a longer temporal span and smoother spectral transitions, which enhances the expressiveness of the emotional content.
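The reported durations imply that the fast and slow settings run at roughly 1.11 and 0.89 times the normal rate (4.0/3.6 and 4.0/4.5). The sketch below makes this explicit and converts each duration into an approximate Mel frame count, which is why the fast spectrogram looks more compact. The 22,050 Hz sampling rate and 256-sample hop length are assumptions (common Tacotron2 settings); the actual SRC-IT2 settings are not given in this section, and the durations themselves are approximate.

```python
# Approximate durations read from the 'angry' Mel-spectrograms (Figure 9).
DURATIONS_S = {"fast": 3.6, "normal": 4.0, "slow": 4.5}

# Assumption: common Tacotron2 audio settings, not confirmed for SRC-IT2.
SAMPLE_RATE = 22050
HOP_LENGTH = 256


def effective_rate(duration_s, reference_s):
    """Speaking-rate factor relative to the reference utterance (>1 means faster)."""
    return reference_s / duration_s


def mel_frames(duration_s, sr=SAMPLE_RATE, hop=HOP_LENGTH):
    """Approximate frame count with centred STFT framing: 1 + samples // hop."""
    return int(duration_s * sr) // hop + 1


for label, d in DURATIONS_S.items():
    rate = effective_rate(d, DURATIONS_S["normal"])
    print(f"{label:6s} {d:.1f} s  rate x{rate:.2f}  ~{mel_frames(d)} frames")
```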

5. Conclusions

To address the challenges of limited Mongolian emotional speech corpora, slow synthesis speed, unstable quality, insufficient emotional expressiveness, and the lack of speech-rate control, this study proposes the SRC-IT2 model. Despite the limited emotional speech corpus, the model applies several optimizations that improve the quality of synthesized Mongolian emotional speech while allowing the speaking rate to be adjusted dynamically. First, an end-to-end Mongolian speech synthesis module is built on an improved Tacotron2 framework; its front-end processing pipeline is adapted to the characteristics of the Mongolian script, and a G2P-Seq2Seq model performs grapheme-to-phoneme conversion. Second, text-based and audio-based emotion analysis modules are integrated into the synthesis framework to learn and represent Mongolian emotional style features. Finally, a style encoder and a speech-rate control variable are introduced into the acoustic modeling pipeline, enabling the model to adjust the speaking rate while preserving the target emotional expression. Experiments show that SRC-IT2 generates high-quality, expressive Mongolian speech from limited training data, with controllable speech rate and robust overall performance.
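This section states that a style encoder and a speech-rate control variable enter the acoustic modeling pipeline but does not describe the mechanism. A common pattern for utterance-level conditioning in Tacotron-style models is to concatenate a global vector to every encoder output before attention; the sketch below applies that pattern to a style embedding and a scalar rate value. It is an illustrative assumption, not the SRC-IT2 implementation, and `condition_encoder_outputs` is a hypothetical name.

```python
from typing import List, Sequence

Vector = List[float]


def condition_encoder_outputs(
    encoder_outputs: Sequence[Sequence[float]],
    style_embedding: Sequence[float],
    speech_rate: float,
) -> List[Vector]:
    """Append a global style embedding and a scalar rate control to every frame.

    Hypothetical scheme: each encoder frame becomes
    [frame features | style embedding | speech_rate], so the attention-based
    decoder sees the same utterance-level style and rate at every input step.
    """
    if speech_rate <= 0:
        raise ValueError("speech_rate must be positive")
    return [list(frame) + list(style_embedding) + [speech_rate]
            for frame in encoder_outputs]


# Toy example: two encoder frames, a 1-D style embedding, and a 1.1x rate.
conditioned = condition_encoder_outputs([[0.1, 0.2], [0.3, 0.4]], [0.7], 1.1)
print(conditioned)  # [[0.1, 0.2, 0.7, 1.1], [0.3, 0.4, 0.7, 1.1]]
```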

In future work, we plan to collect a more diverse Mongolian emotional speech corpus and explore prosody-driven dynamic speaking rate adjustment strategies to further enhance the naturalness of speech rate modulation.

Author Contributions

Conceptualization, Q.R.; methodology, Q.R. and Q.B.; software, Q.B.; validation, Q.B. and C.Z.; formal analysis, Q.B.; investigation, Y.J. and N.W.; resources, Q.R.; data curation, Q.B.; writing—original draft preparation, Q.R. and Q.B.; writing—review and editing, Q.R., Q.B. and C.Z.; visualization, Y.J. and N.W.; supervision, Q.R. and C.Z.; project administration, Q.R. and Q.B.; funding acquisition, Q.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (62466044), the Basic Research Business Fee Project of Universities Directly under the Autonomous Region (JY20240062, ZTY2024072), the ‘Youth Science and Technology Talent Support Program’ Project of Universities in Inner Mongolia Autonomous Region (NJYT23059), the Inner Mongolia Natural Science Foundation General Project (2022MS06013), and the Inner Mongolia Autonomous Region Key R&D and Achievement Transformation Plan Project (2025YFHH0115).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

As Mongolian is a low-resource language, the construction of the dataset by our Artificial Intelligence Laboratory involved significant effort, time, and financial resources. In accordance with the laboratory’s policy, all users of the dataset are required to sign a confidentiality agreement. Therefore, we regret that the original data cannot be publicly released.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Dong, Y.; Fu, Z.; Stankovski, S.; Peng, Y.; Li, X. A call center system based on Expert systems for the acquisition of agricultural knowledge transferred from text-to-speech in China. Comput. J. 2021, 64, 895–908.

2. Taylor, P. Text-To-Speech Synthesis; Cambridge University Press: Cambridge, UK, 2009.

3. Sotelo, J.; Mehri, S.; Kumar, K.; Santos, J.F.; Kastner, K.; Courville, A.; Bengio, Y. Char2Wav: End-to-End Speech Synthesis. 2017. Available online: https://openreview.net/forum?id=B1VWyySKx (accessed on 25 June 2025).

4. Ren, Y.; Ruan, Y.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.Y. FastSpeech: Fast, robust and controllable text to speech. Adv. Neural Inf. Process. Syst. 2019, 32, 236–242.

5. Ren, Y.; Hu, C.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.Y. FastSpeech 2: Fast and high-quality end-to-end text to speech. arXiv 2020, arXiv:2006.04558.

6. Tan, X.; Chen, J.; Liu, H.; Cong, J.; Zhang, C.; Liu, Y.; Wang, X.; Leng, Y.; Yi, Y.; He, L.; et al. NaturalSpeech: End-to-end text-to-speech synthesis with human-level quality. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 4234–4245.

7. Yao, Y.; Liang, T.; Feng, R.; Shi, K.; Yu, J.; Wang, W.; Li, J. SR-TTS: A rhyme-based end-to-end speech synthesis system. Front. Neurorobot. 2024, 18, 1322312.

8. Guo, Y.; Lv, Y.; Dou, J.; Zhang, Y.; Wang, Y. FLY-TTS: Fast, Lightweight and high-quality end-to-end Text-to-Speech Synthesis. arXiv 2024, arXiv:2407.00753.

9. Zhou, K.; Sisman, B.; Liu, R.; Li, H. Seen and unseen emotional style transfer for voice conversion with a new emotional speech dataset. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 920–924.

10. Lorenzo-Trueba, J.; Henter, G.E.; Takaki, S.; Yamagishi, J.; Morino, Y.; Ochiai, Y. Investigating different representations for modeling and controlling multiple emotions in DNN-based speech synthesis. Speech Commun. 2018, 99, 135–143.

11. Cai, X.; Dai, D.; Wu, Z.; Li, X.; Li, J.; Meng, H. Emotion controllable speech synthesis using emotion-unlabeled dataset with the assistance of cross-domain speech emotion recognition. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5734–5738.

12. Li, T.; Hu, C.; Cong, J.; Zhu, X.; Li, J.; Tian, Q.; Wang, Y.; Xie, L. DiCLET-TTS: Diffusion model based cross-lingual emotion transfer for text-to-speech—A study between English and Mandarin. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 3418–3430.

13. Cho, D.H.; Oh, H.S.; Kim, S.B.; Lee, S.H.; Lee, S.W. EmoSphere-TTS: Emotional style and intensity modeling via spherical emotion vector for controllable emotional text-to-speech. arXiv 2024, arXiv:2406.07803.

14. Gudmalwar, A.; Shah, N.; Akarsh, S.; Wasnik, P.; Shah, R.R. VECL-TTS: Voice identity and emotional style controllable cross-lingual text-to-speech. arXiv 2024, arXiv:2406.08076.

15. Shan, Y. Research and Implementation of Mongolian Emotional Speech Synthesis System. Master’s Thesis, Inner Mongolia University, Hohhot, China, 2021.

16. Huang, A. Research and Implementation of Mongolian Emotional Speech Synthesis System Based on Deep Learning. Master’s Thesis, Inner Mongolia University, Hohhot, China, 2022.

17. Liang, Z.; Shi, H.; Wang, J.; Lu, K. EM-TTS: Efficiently Trained Low-Resource Mongolian Lightweight Text-to-Speech. In Proceedings of the 2024 27th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Tianjin, China, 8–10 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1134–1139.

18. Ping, W.; Peng, K.; Gibiansky, A.; Arik, S.O.; Kannan, A.; Narang, S.; Raiman, J.; Miller, J. Deep Voice 3: 2000-speaker neural text-to-speech. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018; Volume 79, pp. 1094–1099.

19. Liu, R. Research on Mongolian Speech Synthesis Based on Deep Learning. Ph.D. Thesis, Inner Mongolia University, Hohhot, China, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).